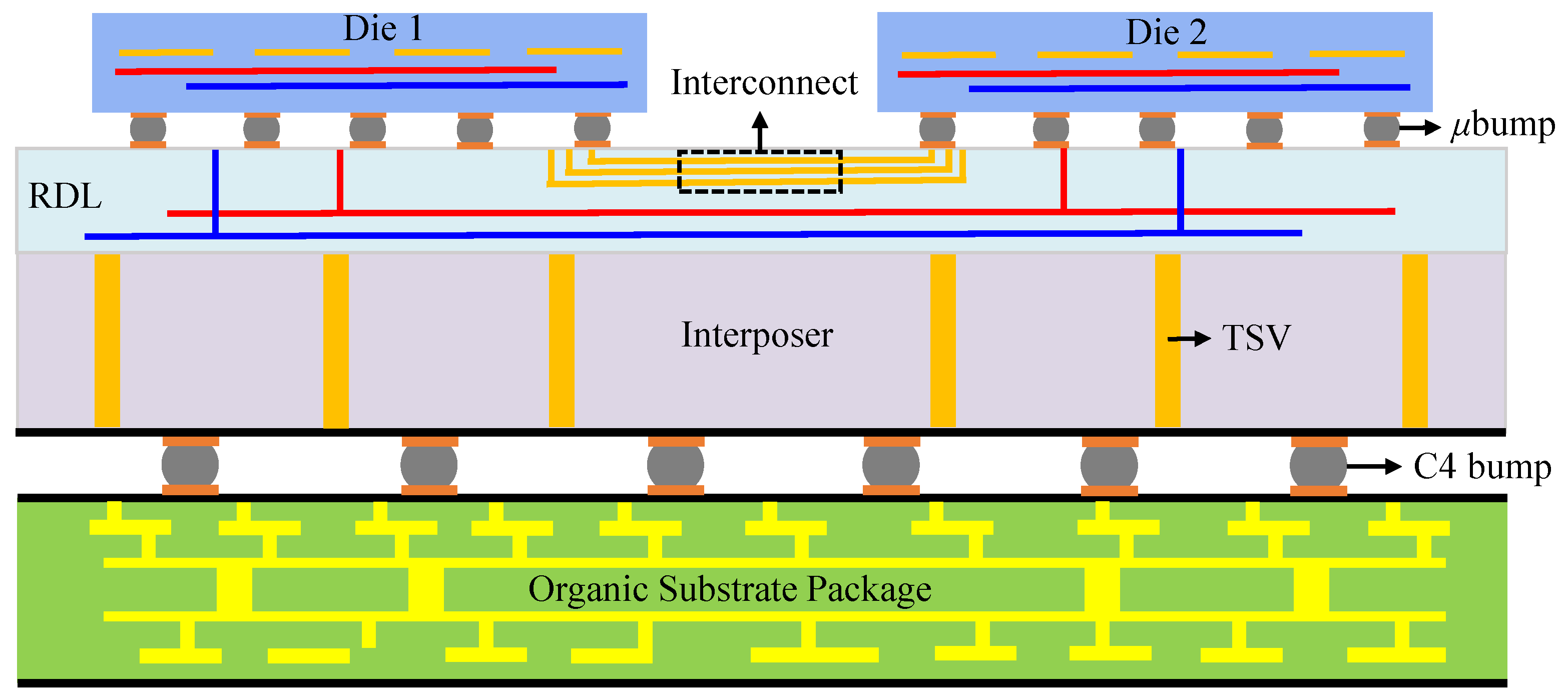

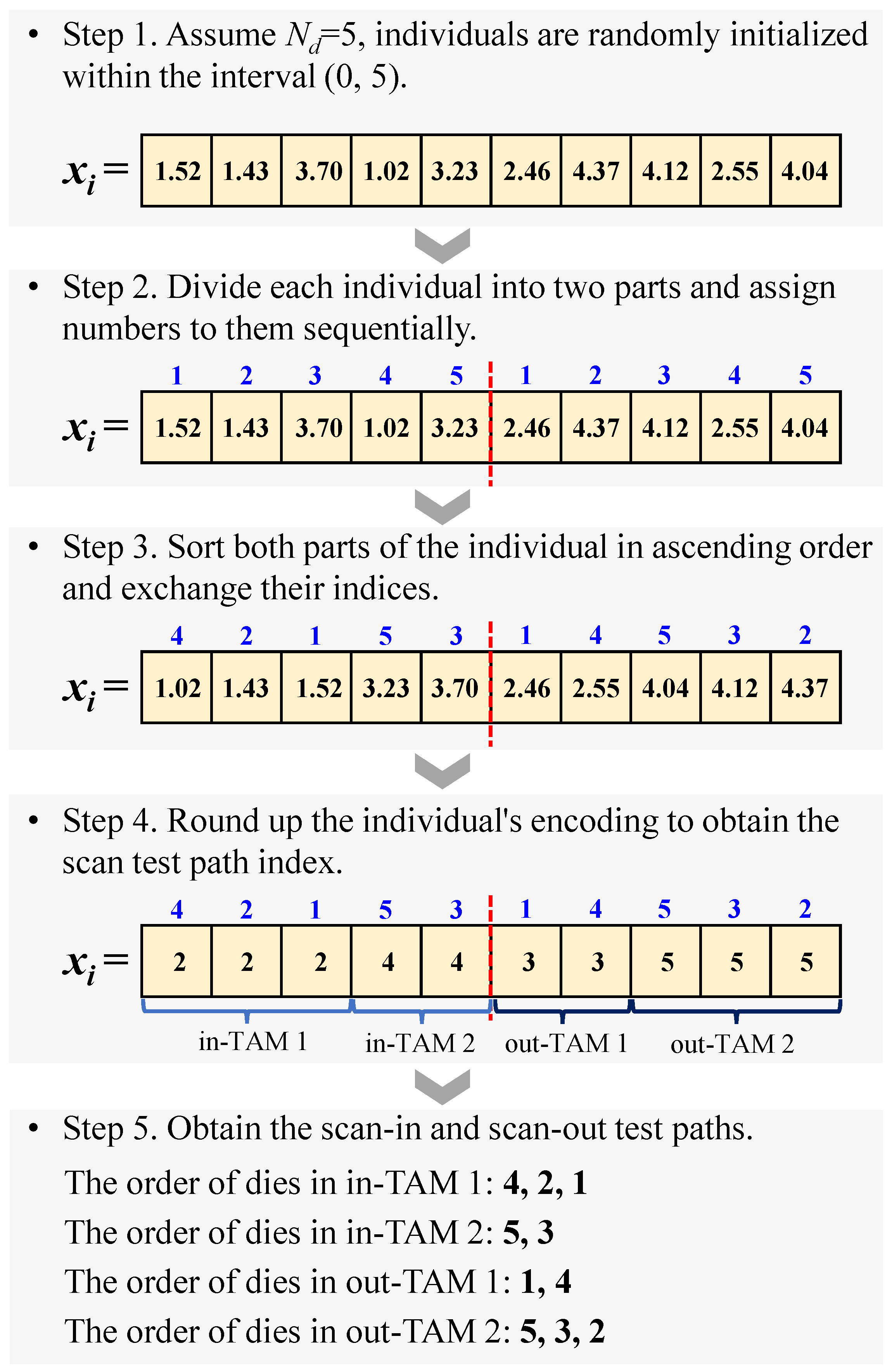

In this section, we present a series of experiments to evaluate the effectiveness of the proposed optimization method for solving the test-path scheduling problem in 2.5D ICs. We begin by describing the experimental setup. Subsequently, small-scale experiments are performed to validate the accuracy of the formulated mathematical model and the effectiveness of the proposed optimization algorithm. Then, large-scale experiments are conducted to assess the performance of OLELS-DE through comprehensive comparative analysis with other state-of-the-art optimization methods. Finally, experiments are performed to analyze the sensitivity of the proposed algorithm to several critical parameters.

4.2. Validation of Model and Algorithm’s Accuracy

In this subsection, a basic case study with small-scale experiments is conducted to validate the accuracy of both the mathematical model and the proposed optimization algorithm. Specifically, ten dies (die 1 to die 10) from Table 2 are selected as candidates to be placed on the interposer for testing. The test wire lengths between different die pairs are listed in Table 3. These values are non-dimensional and represent relative, rather than absolute, distances, which vary depending on the distribution of dies. The values are randomly generated within a reasonable range for the purpose of this study.

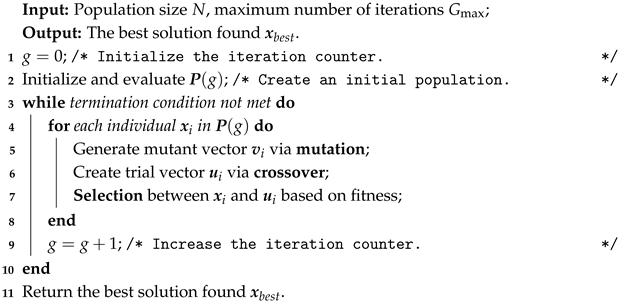

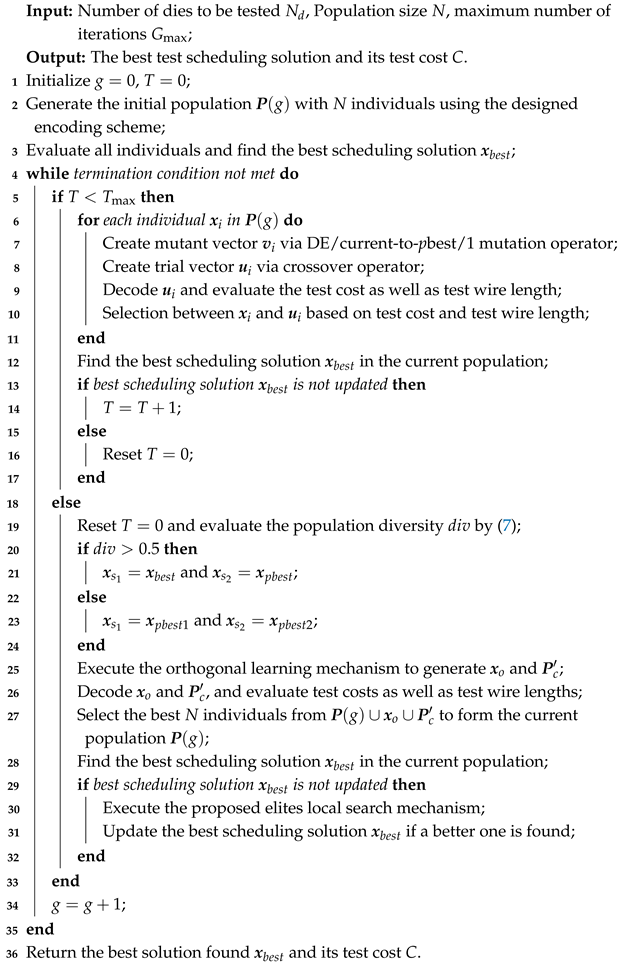

For comparison purposes, we employ the basic DE algorithm using both the DE/rand/1 and DE/best/1 mutation strategies to solve the test-path scheduling problem. We also include the results obtained by the ILP-based method as reported in [8] for reference. Additionally, two baseline methods are considered for validation. The first baseline (BL1) assigns an independent scan-in and scan-out chain to each die, enabling full parallel testing of all dies. The second baseline (BL2) uses a single scan-in and scan-out chain to test all dies sequentially. The scheduling results produced by OLELS-DE and other optimization methods for this basic case are summarized in Table 4.
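The two mutation strategies used by the basic DE baselines can be sketched as follows. This is a minimal, hedged illustration on real-valued vectors: the function names, the binomial crossover, and the toy sphere cost are assumptions for illustration, and the paper's encoding of test-path schedules (including how vectors would be decoded into schedules) is not reproduced here.

```python
import random
from typing import List, Sequence

Vector = List[float]


def de_rand_1(pop: Sequence[Vector], i: int, F: float, rng: random.Random) -> Vector:
    """DE/rand/1: v = x_r1 + F * (x_r2 - x_r3), with r1, r2, r3 distinct and != i."""
    others = [j for j in range(len(pop)) if j != i]
    r1, r2, r3 = rng.sample(others, 3)
    return [a + F * (b - c) for a, b, c in zip(pop[r1], pop[r2], pop[r3])]


def de_best_1(pop: Sequence[Vector], costs: Sequence[float], i: int,
              F: float, rng: random.Random) -> Vector:
    """DE/best/1: v = x_best + F * (x_r1 - x_r2), with r1, r2 distinct and != i."""
    best = min(range(len(pop)), key=lambda j: costs[j])  # lowest cost is best
    others = [j for j in range(len(pop)) if j != i]
    r1, r2 = rng.sample(others, 2)
    return [a + F * (b - c) for a, b, c in zip(pop[best], pop[r1], pop[r2])]


def binomial_crossover(target: Vector, mutant: Vector, CR: float,
                       rng: random.Random) -> Vector:
    """Take each gene from the mutant with probability CR; gene j_rand always comes from it."""
    j_rand = rng.randrange(len(target))
    return [m if (rng.random() < CR or j == j_rand) else t
            for j, (t, m) in enumerate(zip(target, mutant))]


rng = random.Random(42)
pop = [[rng.uniform(-5, 5) for _ in range(4)] for _ in range(10)]
costs = [sum(x * x for x in v) for v in pop]  # toy sphere cost, not the scheduling cost
trial = binomial_crossover(pop[0], de_best_1(pop, costs, 0, 0.5, rng), 0.9, rng)
print(len(trial))
```

DE/rand/1 draws its base vector at random and therefore favors exploration, while DE/best/1 always starts from the current best individual and therefore converges faster but is more prone to stagnation.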

As shown in the results, the proposed OLELS-DE algorithm achieves a test cost of $63.33, which matches the cost obtained by the ILP method [8], demonstrating the accuracy and effectiveness of the optimization approach. In contrast, DE/rand/1 and DE/best/1 yield higher costs of $66.76 and $65.26, respectively, owing to suboptimal scheduling paths. Although DE/rand/1 and DE/best/1 achieve slightly shorter test lengths, they introduce more TAMs (e.g., DE/rand/1 uses 4 in-TAMs and 5 out-TAMs), which increases hardware resource consumption and the associated cost. The test wire length obtained by OLELS-DE (247) is reasonably low, striking a balance between resource usage and routing complexity. Compared with the baseline methods, OLELS-DE significantly outperforms both BL1 and BL2 in terms of cost. BL1, which uses independent scan chains for each die, results in the highest cost ($122.76) due to excessive TAM usage. BL2, although using minimal TAMs, leads to the longest test length (13,658), resulting in a cost of $120.49.
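To make the BL1/BL2 trade-off concrete, the sketch below uses a deliberately simplified model that is an assumption, not the paper's cost model: dies sharing one scan chain are tested back to back, separate chains run in parallel, and each chain counts as one TAM pair. The die test lengths are hypothetical (not taken from Table 2), and wire length and the actual cost weights are ignored.

```python
from typing import Dict, List


def schedule_length(chains: List[List[int]], die_len: Dict[int, int]) -> int:
    """Assumed model: dies on one chain are tested back to back (lengths add),
    while separate chains run in parallel (the longest chain dominates)."""
    return max(sum(die_len[d] for d in chain) for chain in chains)


def chain_count(chains: List[List[int]]) -> int:
    """Each chain needs its own scan-in/scan-out TAM pair in this simplified view."""
    return len(chains)


# Hypothetical per-die test lengths.
die_len = {1: 100, 2: 250, 3: 400, 4: 150}
bl1 = [[1], [2], [3], [4]]   # BL1-style: one chain per die, fully parallel
bl2 = [[1, 2, 3, 4]]         # BL2-style: one chain, fully sequential
mixed = [[3, 1], [2, 4]]     # an intermediate grouping
for name, chains in [("BL1", bl1), ("BL2", bl2), ("mixed", mixed)]:
    print(name, schedule_length(chains, die_len), chain_count(chains))
```

Under these assumptions, the BL1-style grouping gives the shortest length (400) but needs four chains, the BL2-style grouping needs one chain but has the longest length (900), and the mixed grouping sits in between (500 with two chains). Balancing these two extremes is what the optimized schedule has to do.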

These experimental results confirm that OLELS-DE not only achieves optimal or near-optimal cost efficiency comparable to that of the exact ILP method, but also significantly outperforms the basic DE algorithms and the baseline strategies in balancing test time, TAM usage, and wire length.

4.3. Performance Comparison

In this subsection, we rigorously evaluate the effectiveness of the proposed OLELS-DE algorithm through comprehensive comparative experiments against five state-of-the-art metaheuristic algorithms and CPLEX on nine specially designed test instances (labeled P1 to P9). The number of dies to be tested in these instances ranges from 20 to 100 in increments of 10. For comparative analysis, Table 5 presents the best, worst, and average test costs achieved by each algorithm over 20 independent runs, along with the corresponding CPU runtimes and the statistical results of the Wilcoxon signed-rank test. Note that, since CPLEX fails to solve most instances to optimality within a reasonable time limit, we report its results from runs capped at 1800 s (0.5 h) of CPU time for P1–P4 and 3600 s (1 h) for P5–P9. For OLELS-DE and the other metaheuristic algorithms, the reported runtime is the CPU time consumed to complete 2000 iterations.

4.3.1. General Performance

From the experimental results, it is immediately evident that OLELS-DE demonstrates superior or highly competitive performance across the majority of the nine test instances. In most cases, it ranks first in at least two of the three reported metrics (i.e., best, worst, and mean test costs) while maintaining competitive results in the remaining cases. Even when not achieving the lowest value, its mean test cost is consistently close to the best performer, indicating strong competitiveness. For example, in instance P1, the mean test cost of OLELS-DE is $104.26, which is better than those of JADE ($114.89), EFDE ($117.82), MGDE ($118.13), WOA ($111.24), and HHO ($119.34). In larger instances, such as P9 with 100 dies, OLELS-DE reaches a mean test cost of $236.86, notably outperforming all compared methods.

In terms of robustness, measured by the gap between the best and worst results across 20 runs, OLELS-DE again demonstrates remarkable consistency. Taking P6 as an example, the difference between its best and worst test costs is only $9.19, whereas the corresponding ranges for JADE, EFDE, MGDE, WOA, and HHO are $38, $118.43, $44.33, $53.9, and $66.12, respectively. Even on large and complex instances such as P8 and P9, OLELS-DE maintains a relatively narrow spread of results, showcasing its reliability. This robustness is especially important in practical test scheduling scenarios, where predictable performance is often preferred over erratic optimization behavior.

The performance of the five compared metaheuristic algorithms varies noticeably with problem scale. Among them, JADE generally performs better than EFDE and MGDE owing to its adaptive parameter mechanism, yet it still trails OLELS-DE significantly in both quality and stability. The other two algorithms, WOA and HHO, which are based on swarm intelligence heuristics, are more prone to stagnation and suboptimal convergence, particularly on larger instances. This is reflected in their steep increase in test costs from P5 to P9, suggesting weaker scalability. For instance, on P9, the worst test cost of HHO reaches $292.31, while those of EFDE and MGDE go as high as $563.80 and $480.73, respectively, more than double the mean cost of OLELS-DE. For CPLEX, although it can obtain competitive results on instances P1–P4, its applicability diminishes rapidly with problem size. Even with extended CPU time limits, it fails to solve most large-scale instances to optimality, and in some cases it returns solutions inferior to those of OLELS-DE.
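The robustness measure used above, the gap between the best and worst of 20 runs, is simple to compute. The sketch below also reports the sample standard deviation as an alternative spread measure; the helper name and the run costs in the example are hypothetical.

```python
from statistics import mean, stdev
from typing import Dict, Sequence


def summarize_runs(costs: Sequence[float]) -> Dict[str, float]:
    """Best/worst/mean of independent runs plus two spread measures."""
    best, worst = min(costs), max(costs)
    return {
        "best": best,
        "worst": worst,
        "mean": mean(costs),
        "range": worst - best,   # the robustness gap used in the text
        "stdev": stdev(costs),   # sample standard deviation (needs at least 2 runs)
    }


print(summarize_runs([10.0, 12.0, 11.0, 15.0]))  # hypothetical run costs
```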

The consistently superior results of OLELS-DE can be attributed to its layered exploration and exploitation strategy, along with the elite learning mechanism, which together promote diversified search in the early stages and intensified refinement in the later stages. This balance allows the algorithm to avoid local optima while effectively converging toward global solutions. Compared to conventional DE variants that often lack adaptive control of exploration depth or elite knowledge reuse, OLELS-DE integrates these mechanisms in a structured and cooperative manner.

4.3.2. Statistical Analysis

Building upon the performance trends discussed in Section 4.3.1, a statistical analysis is further conducted to rigorously verify whether the observed differences among algorithms are significant. To this end, two widely accepted non-parametric statistical tests are employed: the Wilcoxon signed-rank test and the Friedman test with post hoc multiple comparisons.

The Wilcoxon signed-rank test results presented in Table 5 reveal that OLELS-DE consistently outperforms all five compared metaheuristic algorithms (JADE, EFDE, MGDE, WOA, and HHO) across nearly all test instances. This is evidenced by the predominance of “(−)” symbols, indicating that the competitor is significantly worse than OLELS-DE in terms of test cost. No instance shows a metaheuristic algorithm significantly outperforming OLELS-DE (i.e., there are no “(+)” signs). In contrast, the comparison with the exact solver CPLEX presents a more nuanced picture: CPLEX significantly surpasses OLELS-DE on instances P1 and P5 and performs equivalently on P2 and P3, but is significantly outperformed by OLELS-DE on the remaining larger instances (P4 and P6–P9). These findings align with the expected computational limitations of exact solvers when facing large-scale, complex problems within reasonable time budgets.
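For readers who want to reproduce the pairwise comparison, the following is a minimal sketch of a two-sided Wilcoxon signed-rank test using the normal approximation, plus a helper that maps the outcome to (+)/(−)-style marks. It assumes that the runs of two algorithms are paired by run index, omits the tie-variance correction, writes the marks in ASCII, and uses a hypothetical "(≈)" marker for non-significant cases; a library routine such as `scipy.stats.wilcoxon` would normally be preferred.

```python
import math
from typing import List, Sequence, Tuple


def avg_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values receive the mean of the positions they occupy."""
    return [sum(v < x for v in values) + (sum(v == x for v in values) + 1) / 2
            for x in values]


def wilcoxon_signed_rank(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Paired two-sided test on x - y via the normal approximation (no tie correction)."""
    d = [a - b for a, b in zip(x, y) if a != b]   # zero differences are discarded
    n = len(d)
    if n == 0:
        return 0.0, 1.0
    r = avg_ranks([abs(v) for v in d])
    w_plus = sum(rk for rk, v in zip(r, d) if v > 0)
    mu = n * (n + 1) / 4
    sigma = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w_plus - mu) / sigma
    return z, math.erfc(abs(z) / math.sqrt(2))   # two-sided normal p-value


def mark(competitor: Sequence[float], reference: Sequence[float],
         alpha: float = 0.05) -> str:
    """'(-)': competitor significantly worse (higher cost); '(+)': significantly better."""
    z, p = wilcoxon_signed_rank(competitor, reference)
    if p >= alpha:
        return "(≈)"
    return "(-)" if z > 0 else "(+)"
```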

Complementing the pairwise Wilcoxon analysis, the Friedman test is conducted to provide a global ranking of the algorithms based on their performance across all nine test instances. As summarized in Table 6, OLELS-DE achieves the lowest (best) average rank of 1.3333, closely followed by CPLEX at 1.6667, whereas the remaining algorithms exhibit considerably inferior rankings, with MGDE and EFDE occupying the bottom positions.
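The Friedman average ranks follow from ranking the algorithms on each instance and averaging over instances. The sketch below computes the mean ranks R_j and the Friedman statistic chi2_F = 12N / (k(k+1)) * (sum_j R_j^2 - k(k+1)^2 / 4) for N instances and k algorithms. It assumes one summary value per algorithm and instance (for example, the mean cost), which this section does not state explicitly; the function names and the example matrix are hypothetical.

```python
from typing import List, Sequence, Tuple


def row_ranks(row: Sequence[float]) -> List[float]:
    """Rank algorithms on one instance (lowest cost = rank 1, ties share the mean rank)."""
    return [sum(v < x for v in row) + (sum(v == x for v in row) + 1) / 2 for x in row]


def friedman(costs: Sequence[Sequence[float]]) -> Tuple[List[float], float]:
    """costs[i][j]: cost of algorithm j on instance i. Returns (mean ranks, chi2_F)."""
    n, k = len(costs), len(costs[0])
    ranks = [row_ranks(row) for row in costs]
    mean_ranks = [sum(r[j] for r in ranks) / n for j in range(k)]
    chi2 = 12 * n / (k * (k + 1)) * (sum(R * R for R in mean_ranks)
                                     - k * (k + 1) ** 2 / 4)
    return mean_ranks, chi2


# Hypothetical 3 instances x 3 algorithms.
print(friedman([[1.0, 2.0, 3.0], [1.0, 3.0, 2.0], [2.0, 1.0, 3.0]]))
```

The p-value is obtained by comparing chi2_F with a chi-square distribution with k − 1 degrees of freedom (for example via `scipy.stats.friedmanchisquare`, which takes one sample per algorithm). As a sanity check on the reported values, average ranks of 1.3333 and 1.6667 over nine instances correspond to rank sums of 12 and 15.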

Subsequent post hoc multiple comparison procedures, including Bonferroni–Dunn, Holm, and Hochberg corrections, are applied to ascertain the statistical significance of OLELS-DE’s superiority over each competitor individually.

Table 7 confirms that OLELS-DE significantly outperforms MGDE, EFDE, JADE, and HHO at a highly stringent significance level (adjusted p-values all below 0.01). The difference between OLELS-DE and WOA is also significant, though only at a less strict threshold when judged by its unadjusted p-value, while no statistically significant difference is observed between OLELS-DE and CPLEX, even on the basis of the unadjusted p-value.
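The post hoc step compares each algorithm with the control (OLELS-DE) on its Friedman mean rank using z = (R_i − R_0) / sqrt(k(k+1) / (6N)) and then adjusts the resulting p-values. The sketch below is one standard way to do this, assuming a two-sided normal approximation; Bonferroni–Dunn is represented by the plain Bonferroni adjustment over the k − 1 comparisons, and the function name is hypothetical.

```python
import math
from typing import Dict, Tuple


def posthoc_vs_control(avg_ranks: Dict[str, float], control: str,
                       n_instances: int) -> Tuple[Dict[str, float], ...]:
    """z-tests of each algorithm against the control on Friedman mean ranks,
    followed by Bonferroni, Holm (step-down) and Hochberg (step-up) adjustments."""
    k = len(avg_ranks)
    se = math.sqrt(k * (k + 1) / (6.0 * n_instances))
    others = [a for a in avg_ranks if a != control]
    m = len(others)
    raw = {a: math.erfc(abs(avg_ranks[a] - avg_ranks[control]) / se / math.sqrt(2))
           for a in others}                              # two-sided normal p-values
    order = sorted(others, key=lambda a: raw[a])         # ascending raw p
    bonf = {a: min(1.0, m * raw[a]) for a in others}
    holm, run = {}, 0.0
    for i, a in enumerate(order):                        # step-down: running max
        run = max(run, min(1.0, (m - i) * raw[a]))
        holm[a] = run
    hoch, run = {}, 1.0
    for i in range(m - 1, -1, -1):                       # step-up: running min
        a = order[i]
        run = min(run, (m - i) * raw[a])
        hoch[a] = run
    return raw, bonf, holm, hoch
```

Under this sketch's assumptions, the reported mean ranks of OLELS-DE (1.3333) and CPLEX (1.6667) with k = 7 algorithms and nine instances give z ≈ 0.33 and an unadjusted p ≈ 0.74 for that pair, which is consistent with the absence of a significant difference noted above.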

Collectively, these statistical test results substantiate the consistent and robust superiority of OLELS-DE over state-of-the-art metaheuristic approaches and demonstrate its competitiveness with exact optimization methods, especially on large-scale 2.5D IC test scheduling problems.

4.3.3. Convergence Performance

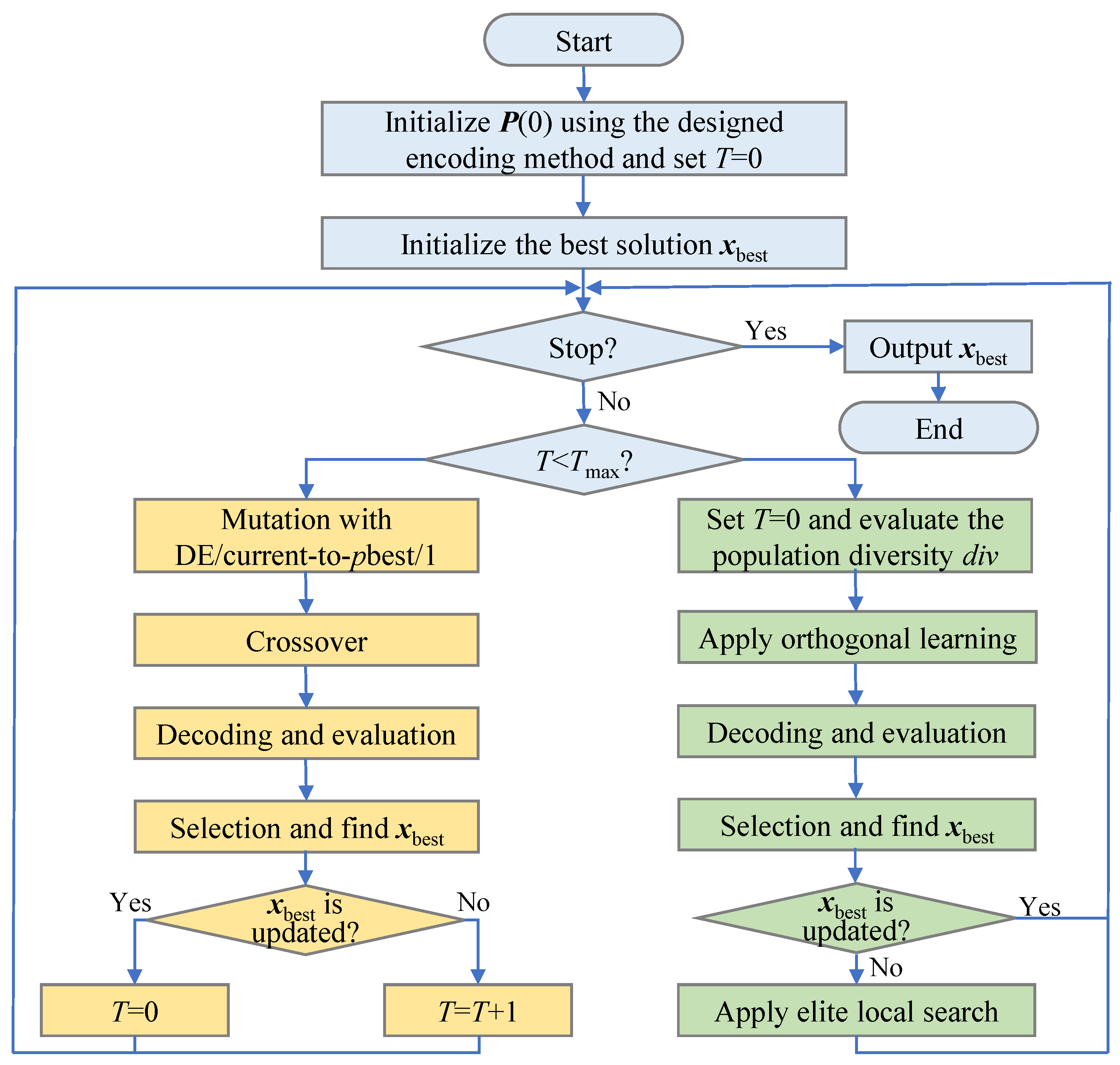

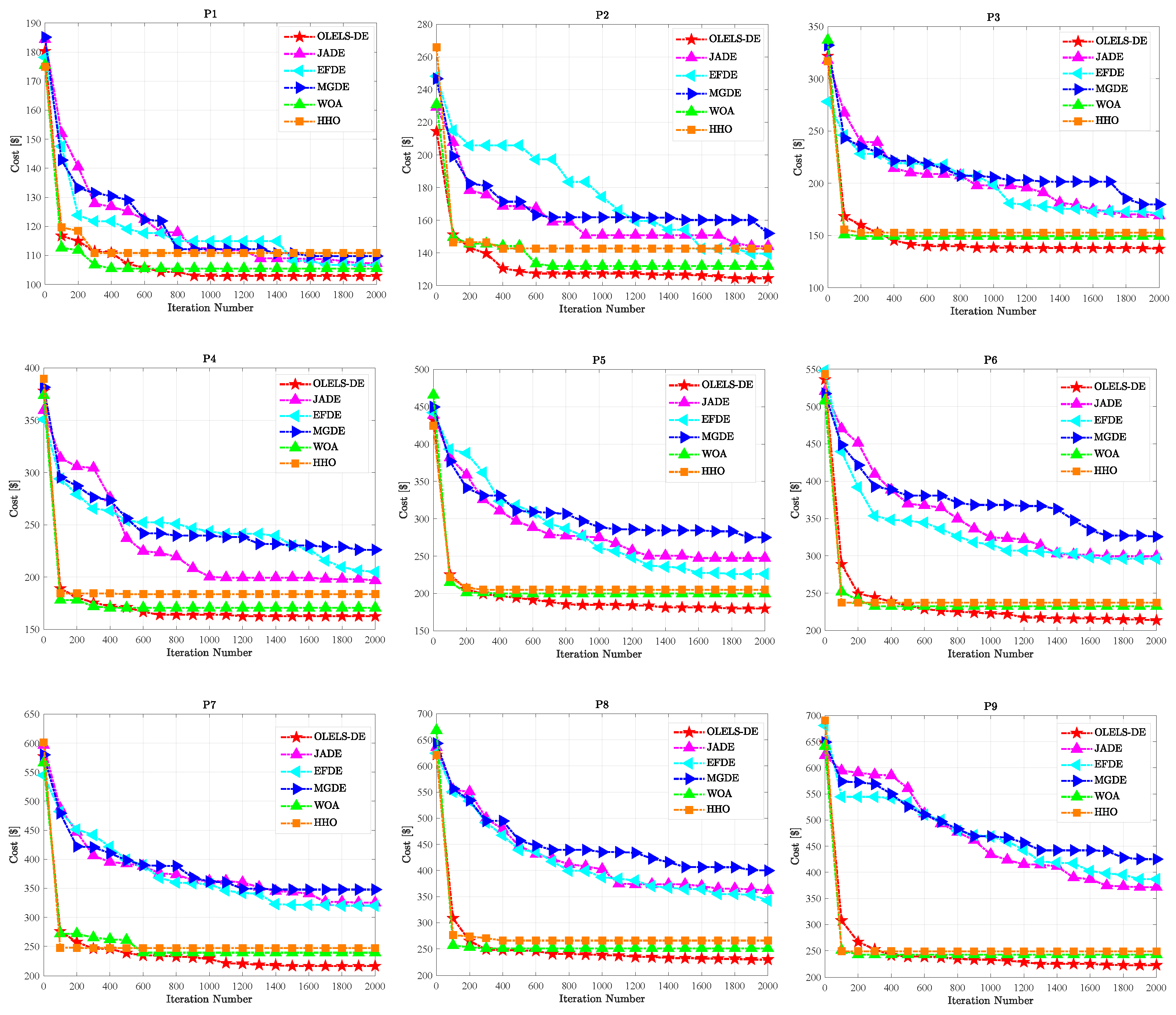

To further assess the optimization dynamics and stability of the compared algorithms, convergence curves are plotted to visualize the evolution of the test cost over iterations.

Figure 4 depicts the convergence behavior of the proposed OLELS-DE algorithm compared with five other metaheuristic algorithms across nine test instances. Each curve corresponds to the run that achieves the best test cost among 20 independent runs, thereby reflecting the most efficient convergence process observed for each algorithm. It is evident that OLELS-DE consistently exhibits the most favorable convergence patterns across all instances, excelling in both convergence speed and final solution quality. In nearly every case, OLELS-DE rapidly reduces the test cost within the first few hundred iterations and then stabilizes at a near-optimal value with minimal oscillation. This behavior underscores the effectiveness of its orthogonal learning-based search mechanism and elite learning-based local search strategy, which together enable fast exploitation while preserving strong global search capability.
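Curves of this kind are usually drawn from the best-so-far cost per iteration. The following is a minimal sketch, assuming each run's per-iteration costs are logged, that builds those curves and keeps the run with the lowest final cost, as described for Figure 4; the helper names and example data are hypothetical.

```python
from itertools import accumulate
from typing import List, Sequence


def best_so_far(costs: Sequence[float]) -> List[float]:
    """Running minimum: the best cost found up to and including each iteration."""
    return list(accumulate(costs, min))


def best_run_curve(runs: Sequence[Sequence[float]]) -> List[float]:
    """Best-so-far curve of the run whose final best cost is lowest."""
    curves = [best_so_far(r) for r in runs]
    return min(curves, key=lambda c: c[-1])


# Hypothetical per-iteration costs from three runs.
runs = [[5.0, 4.0, 4.0], [6.0, 3.0, 2.0], [9.0, 9.0, 8.0]]
print(best_run_curve(runs))
```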

In contrast, the performance of the other compared algorithms is more volatile and often suboptimal. JADE and EFDE, although capable of early cost reduction, frequently stagnate at higher values, indicating premature convergence. MGDE shows slower and less stable descent, especially on larger instances (e.g., P6–P9), suggesting difficulty in escaping local optima and adjusting to increased problem complexity. WOA and HHO, while sometimes achieving rapid early progress, often exhibit oscillating or irregular convergence patterns, reflecting weak solution refinement and susceptibility to randomness in their search dynamics.

Importantly, the advantage of OLELS-DE becomes more obvious as the problem scale increases. On medium-scale instances (e.g., P1–P3), the gap in final test cost among some algorithms is relatively small. However, on larger instances (P6–P9), OLELS-DE maintains its performance with minimal degradation, while the compared methods suffer significant slowdowns or fail to reach competitive solutions even after 2000 iterations. This indicates that OLELS-DE not only achieves strong convergence but also scales effectively with problem complexity, which is an essential property for practical applications involving large-scale 2.5D ICs. Moreover, the convergence curves of OLELS-DE are notably smoother and more stable, revealing its inherent robustness and low variance. This stands in contrast to the jagged, fluctuating paths observed in HHO, MGDE, and EFDE, which are often affected by poor parameter control or excessive randomness. The elite learning mechanism in OLELS-DE ensures that high-quality solutions are retained and exploited efficiently, avoiding regression or instability in the optimization trajectory.

4.3.4. Computational Runtime Comparison

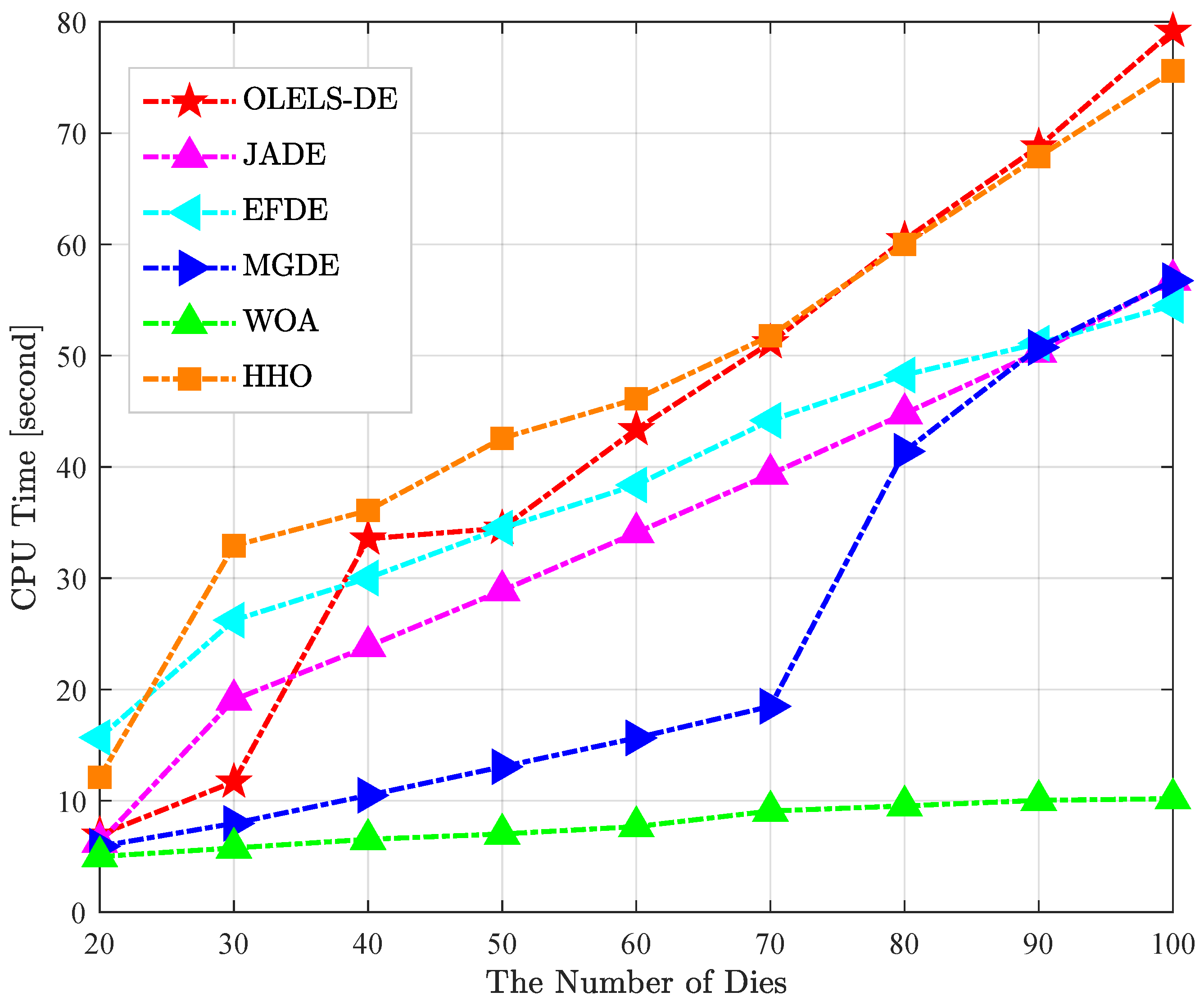

Figure 5 presents the CPU runtime of each algorithm after completing 2000 iterations as the number of dies increases, based on the “time” values reported in Table 5. The curves clearly illustrate how computational cost scales with problem size and reveal distinct runtime characteristics for different algorithms.

WOA consistently records the lowest runtime and the flattest growth trend: its CPU time rises only slightly, from 4.99 s at 20 dies to 10.19 s at 100 dies. This reflects very low per-iteration overhead, making WOA the fastest method; however, as discussed previously, its solution quality is comparatively poor. JADE and EFDE show moderate and stable runtime growth: JADE’s CPU time increases from 6.27 s to 56.80 s, while EFDE’s ranges from 15.69 s to 54.52 s. Both scale steadily and offer a balanced runtime–quality trade-off. OLELS-DE exhibits a predictable upward trend, from 6.95 s at 20 dies to 79.17 s at 100 dies. Although its CPU runtime is higher than that of WOA and somewhat higher than those of JADE and EFDE, it remains within practical limits. Crucially, this additional computational cost is offset by significantly better solution quality, as demonstrated by the earlier cost and statistical analyses. HHO follows a trajectory similar to that of OLELS-DE, but with a slightly higher average runtime and inferior cost performance. MGDE runs quickly on medium instances but shows a sudden runtime surge on larger cases (notably P7–P9), likely due to algorithm-specific overhead that becomes prominent at higher complexity, undermining its scalability.
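A rough way to quantify these growth trends is a two-point log-log slope, assuming runtime grows approximately as t ≈ c·n^b with the number of dies n. The sketch below applies it to the 20-die and 100-die runtimes quoted above; because it ignores the intermediate instances, it is only a coarse indicator, not a fitted complexity. The function name is hypothetical.

```python
import math


def growth_exponent(n0: float, t0: float, n1: float, t1: float) -> float:
    """Two-point estimate of b in t ≈ c * n**b (slope on a log-log scale)."""
    return math.log(t1 / t0) / math.log(n1 / n0)


# CPU seconds at 20 and 100 dies, as quoted in the paragraph above.
runtimes = {
    "WOA": (4.99, 10.19),
    "EFDE": (15.69, 54.52),
    "JADE": (6.27, 56.80),
    "OLELS-DE": (6.95, 79.17),
}
for name, (t20, t100) in runtimes.items():
    print(f"{name}: b = {growth_exponent(20, t20, 100, t100):.2f}")
```

With these endpoints, b is roughly 0.44 for WOA, 0.77 for EFDE, 1.37 for JADE, and 1.51 for OLELS-DE. In other words, over this range OLELS-DE's runtime grows somewhat faster than linearly in the number of dies, whereas WOA's grows sublinearly.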

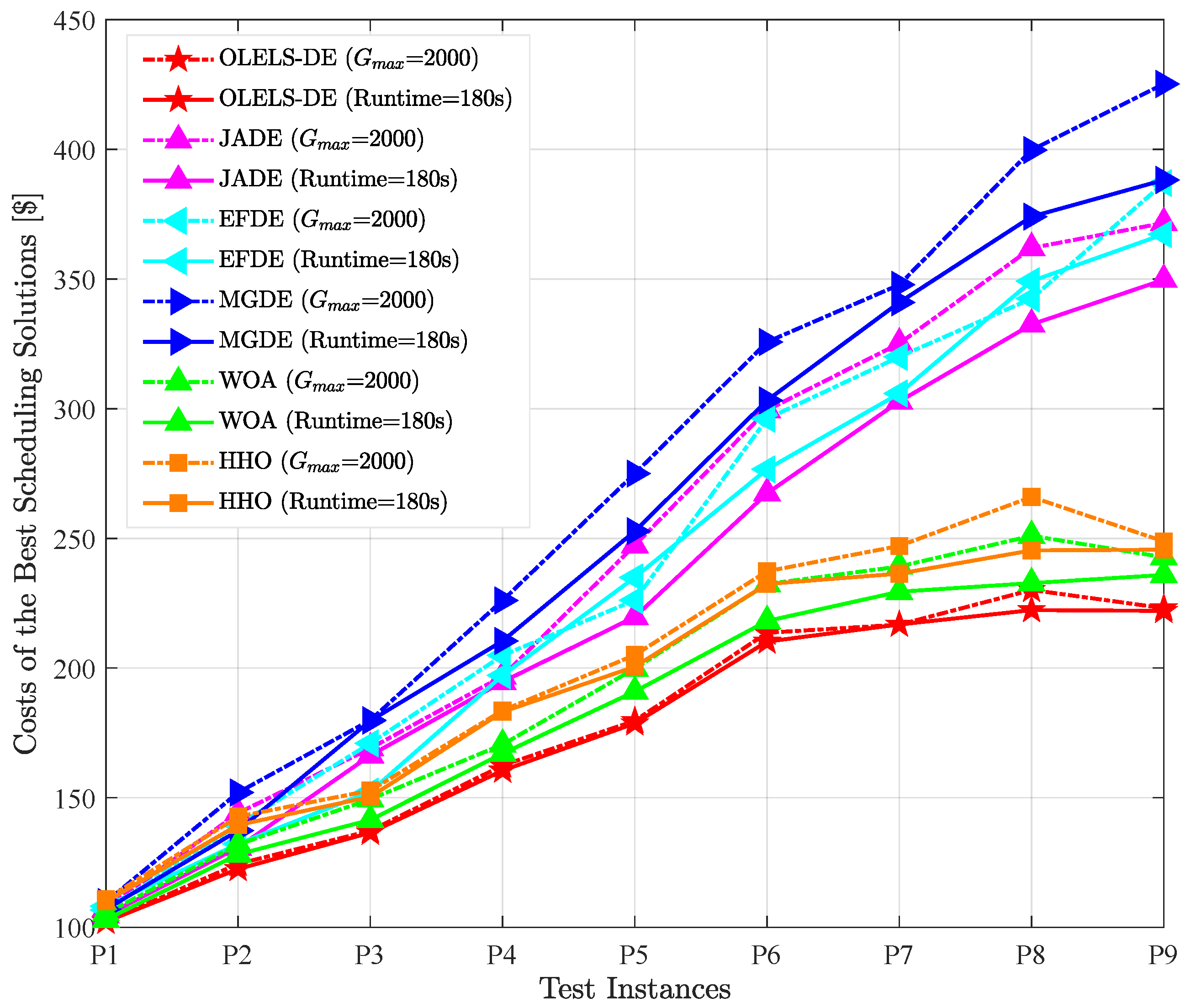

4.3.5. Results Evaluation with Runtime-Based Stopping Criterion

To further evaluate the practical efficiency of the proposed OLELS-DE algorithm under different termination strategies, an additional set of experiments is conducted in which the maximum CPU runtime, rather than a fixed number of iterations, is used as the stopping criterion. Specifically, the metaheuristic algorithms are terminated after a fixed CPU budget of 180 s, and CPLEX is stopped after the same time limits used previously (1800 s for P1–P4 and 3600 s for P5–P9). This setting gives all metaheuristic algorithms an equal computational budget in terms of CPU time, thereby enabling a fair evaluation of their search efficiency and their potential for solution improvement over extended runs. Comprehensive results are presented in Table 8, and Figure 6 visually contrasts the best test cost obtained by each algorithm under iteration-limited (2000 iterations) and time-limited (180 s runtime) conditions. This setup evaluates how efficiently each method uses a constrained computational budget.
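The two termination modes can be captured by one loop that stops on whichever cap is reached first. This is a hedged sketch: `step` is a hypothetical callable that performs one iteration of any optimizer and returns its best cost, and CPU time is measured with Python's `time.process_time`, which counts only the CPU time of the current process (not wall-clock time).

```python
import time
from typing import Callable, Optional, Tuple


def run_until(step: Callable[[], float], max_iters: Optional[int] = None,
              max_cpu_s: Optional[float] = None) -> Tuple[float, int]:
    """Call `step` until the iteration cap or the CPU-time cap is hit,
    whichever comes first. Returns (best cost, iterations executed)."""
    if max_iters is None and max_cpu_s is None:
        raise ValueError("set max_iters and/or max_cpu_s")
    start = time.process_time()          # CPU time of this process
    best, iters = float("inf"), 0
    while True:
        if max_iters is not None and iters >= max_iters:
            break
        if max_cpu_s is not None and time.process_time() - start >= max_cpu_s:
            break
        best = min(best, step())
        iters += 1
    return best, iters
```

A cheap `step` completes many more iterations within the same CPU budget than an expensive one. This is why lightweight methods such as WOA gain more from a time cap than from an iteration cap.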

Compared with the iteration-limited results, OLELS-DE maintains its leading performance under the time cap, consistently achieving the lowest or near-lowest scheduling cost across all problem instances. This robustness indicates that OLELS-DE’s search process is both fast and effective, enabling it to reach even higher-quality solutions when granted additional runtime beyond the fixed-iteration setting.

Notably, several algorithms exhibit improved relative performance under the time-based stopping condition. In particular, JADE, MGDE, and HHO show smaller performance gaps to OLELS-DE than in the iteration-limited case, suggesting that their solution quality benefits substantially from the extended computational time and that they can converge to competitive solutions when allowed to run longer. WOA also benefits moderately from the time limit, as its lightweight search operations allow more iterations to be executed within the extended runtime, partially compensating for its lower per-iteration effectiveness. In contrast, EFDE exhibits a decline in relative performance under the time cap on some test instances (e.g., P5 and P8). This can be attributed to its higher per-iteration computational overhead and slower convergence, which limit its ability to fully exploit the additional runtime and refine solutions effectively.

From a broader perspective, the results demonstrate that algorithms capable of sustaining improvement throughout extended runs can gain a comparative advantage when more runtime is available. OLELS-DE’s combination of effective local search and balanced exploration–exploitation still ensures that it outperforms all other metaheuristics in most instances, while also remaining competitive with CPLEX for larger problems where the latter cannot complete an exhaustive search within its own time budget.

4.4. Sensitivity Analysis

In this subsection, we conduct experiments to analyze the sensitivity of the proposed OLELS-DE algorithm to two critical parameters when solving the test scheduling problem in 2.5D ICs. The first is the population size N, which directly affects the diversity of search directions and the search efficiency. The second is the threshold parameter, which determines the execution frequency of the designed orthogonal learning-based search mechanism and the elite-based local search strategy.
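The orthogonal learning-based search mechanism is not specified in this section, so the sketch below only illustrates the generic idea behind orthogonal learning in metaheuristics. A two-level orthogonal array decides, dimension by dimension, whether each trial takes its value from parent a or parent b. A factor analysis then predicts the best combination without testing all 2^d of them. Everything here (function names, real-valued parents, the toy cost) is an assumption, not OLELS-DE's actual mechanism.

```python
from typing import Callable, List, Sequence


def two_level_oa(n_factors: int) -> List[List[int]]:
    """Two-level orthogonal array with M rows (M = smallest power of two > n_factors).
    Entry (r, c) is the parity of popcount(r & c), for columns c = 1..n_factors."""
    m = 2
    while m <= n_factors:
        m *= 2
    return [[bin(r & c).count("1") % 2 for c in range(1, n_factors + 1)]
            for r in range(m)]


def orthogonal_learning(a: Sequence[float], b: Sequence[float],
                        cost: Callable[[Sequence[float]], float]) -> List[float]:
    """Combine parents a (level 0) and b (level 1) dimension-wise via the OA,
    then use factor analysis to predict a possibly better, untested combination."""
    d = len(a)
    oa = two_level_oa(d)
    trials = [[a[j] if row[j] == 0 else b[j] for j in range(d)] for row in oa]
    trial_costs = [cost(t) for t in trials]
    predicted = []
    for j in range(d):
        sums, counts = [0.0, 0.0], [0, 0]
        for row, c in zip(oa, trial_costs):
            sums[row[j]] += c
            counts[row[j]] += 1
        # pick the level with the lower mean cost for this dimension
        level = 0 if sums[0] / counts[0] <= sums[1] / counts[1] else 1
        predicted.append(a[j] if level == 0 else b[j])
    best_i = min(range(len(trials)), key=lambda i: trial_costs[i])
    return predicted if cost(predicted) <= trial_costs[best_i] else trials[best_i]
```

For three dimensions the array has only four rows, yet on a separable cost such as the sum of squares the factor analysis can recover the best of all eight parent combinations.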

4.4.1. Sensitivity Analysis of Population Size N

The population size N plays a crucial role in determining the search behavior of OLELS-DE. Generally speaking, a small N may lead to insufficient diversity in the population, thereby increasing the risk of premature convergence or stagnation to suboptimal solutions. Conversely, an excessively large N can enhance diversity but often dilutes the selective pressure, potentially slowing down convergence. Moreover, a larger population size inevitably increases the number of fitness evaluations required in each iteration, which results in longer CPU runtime for the same number of iterations. Therefore, it is necessary to determine an appropriate N that balances solution quality and computational efficiency.

In the experiments, we select five candidate values of N. The scheduling results of OLELS-DE with different settings of N are presented in Table 9. The results show that N = 100 achieves competitive or superior mean costs across most instances while keeping the runtime within a reasonable range. In contrast, smaller populations such as N = 50 tend to produce worse solutions, as confirmed by the Wilcoxon signed-rank test, which indicates significant performance degradation in most cases. Larger populations occasionally yield marginally better best-case results, but these gains are inconsistent and come at the expense of substantially increased runtime, which is often more than double that of N = 100. Considering both solution quality and computational efficiency, N = 100 is identified as the most suitable population size for OLELS-DE.

4.4.2. Sensitivity Analysis of the Threshold Parameter

In the OLELS-DE algorithm, the threshold parameter controls how frequently premature convergence and stagnation are detected, thereby determining how often the orthogonal learning-based search mechanism and the elite-based local search strategy are activated. A smaller threshold triggers these strategies more frequently, which can strengthen exploitation and improve solution quality by helping the search escape from local optima. However, it also increases computational overhead due to the additional search operations. Conversely, a larger threshold reduces the activation frequency, lowering the runtime but potentially compromising solution quality because of insufficient exploitation.
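One plausible reading of the threshold is a periodic check: every `threshold` iterations, the best cost is compared with its value at the previous check, and the extra searches fire if it has not improved. This is offered only as an assumption, since the exact rule is defined elsewhere in the paper. The hypothetical helper below counts those activations, illustrating why a larger threshold lowers the runtime.

```python
from typing import List, Sequence


def stagnation_checks(best_costs: Sequence[float], threshold: int) -> List[int]:
    """best_costs[t-1] is the best cost after iteration t (t = 1..G).
    Returns the iterations at which the assumed stagnation check fires."""
    triggers = []
    ref = best_costs[0]
    for t in range(threshold, len(best_costs) + 1, threshold):
        current = best_costs[t - 1]
        if current >= ref:          # no improvement since the previous check
            triggers.append(t)      # here the extra searches would be launched
        ref = current
    return triggers


flat = [1.0] * 2000                 # a fully stagnant 2000-iteration trace
for th in (10, 50, 100):
    print(th, len(stagnation_checks(flat, th)))
```

On this fully stagnant trace, the rule fires 200 times with a threshold of 10, 40 times with 50, and 20 times with 100.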

To examine its impact, experiments are conducted with different threshold values on the P1–P9 instances, and the results are summarized in Table 10. The results show that when the threshold is small (e.g., 10 or 30), the algorithm often achieves competitive or even the best results in terms of mean and best scheduling cost across multiple instances, confirming the benefit of frequently executing the orthogonal learning-based search mechanism and the elite-based local search strategy. However, excessively large threshold values (≥90) generally lead to notable degradation in solution quality, as the algorithm becomes less responsive to premature convergence and stagnation. In contrast, the runtime consistently decreases as the threshold increases, with reductions of over 50% observed in some large-scale instances when moving from a threshold of 10 to a threshold of 100, primarily because fewer additional searches are activated. Statistical analysis further indicates that thresholds of 30 and 50 deliver similar performance without significant differences, while

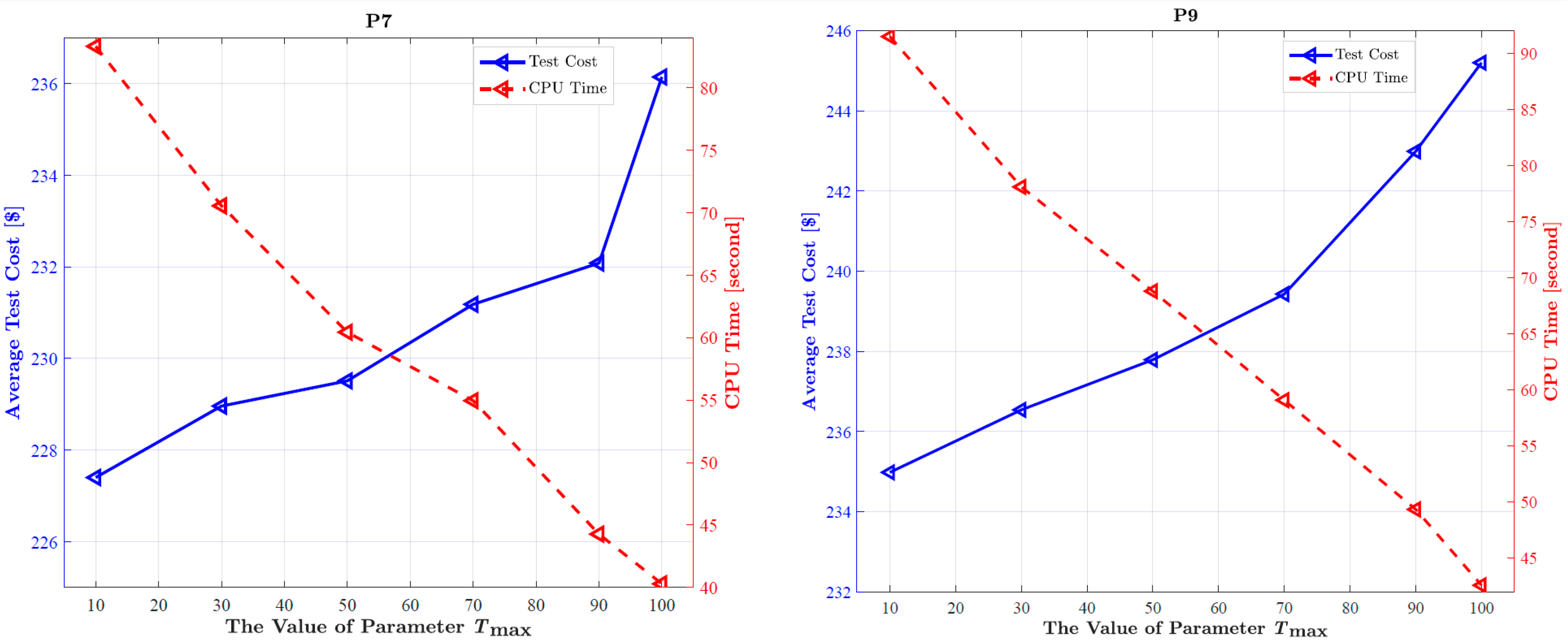

performs significantly worse in most cases. As illustrated in Figure 7, which takes instances P7 and P9 as representative examples, the average test cost (blue curve) remains low for smaller threshold values but increases noticeably when the threshold exceeds 70, while the CPU runtime (red dashed curve) decreases steadily as the threshold increases, a pattern that fully aligns with the above observations.

Based on these observations, a threshold of 10 offers slightly better solution quality in certain cases but at a substantial runtime cost, whereas a threshold of 50 provides a more favorable trade-off between solution quality and computational efficiency. Therefore, a threshold of 50 is adopted as the default parameter setting for OLELS-DE in this study.