Adaptive Gradient Penalty for Wasserstein GANs: Theory and Applications

Abstract

1. Introduction

2. Background

2.1. Evolution of GAN Training Methods

- Architectural Innovations: Studies by Zhang et al. [11] and Brock et al. [12] have shown that self-attention mechanisms and careful architecture design can improve training stability. Karras et al. [13] demonstrated that style-based generators can achieve superior results through architectural improvements.

- Adaptive Regularization: Various adaptive regularization schemes have been proposed that adjust to training dynamics. Zhang et al. [7] introduced total variational regularization as an alternative to gradient penalties, while Terjék [8] explored adversarial Lipschitz regularization. These approaches have been reported to improve convergence across different datasets.

- Control-Theoretic Approaches: Viewing neural network training through the lens of control theory provides valuable insights into stability and convergence properties. Asokan and Seelamantula [14,15] provided comprehensive Euler–Lagrange analyses of WGANs, offering theoretical foundations for understanding discriminator optimization.

2.2. Gradient Penalty Methods

2.3. The Lipschitz Constraint and Gradient Penalty

2.4. Adaptive Methods in Deep Learning

2.5. Motivation for Adaptive Gradient Penalties

1. Static Nature: A fixed penalty coefficient $\lambda$ cannot respond to changing discriminator behavior during training.

2.

3. Manual Tuning: Practitioners must manually tune $\lambda$ for each dataset and architecture.

2.6. Open Challenges and Research Gaps

- Theoretical Understanding: While GP methods are empirically effective, their theoretical properties, particularly in the context of adaptive mechanisms, are not well understood. Work by Santambrogio [31] has begun to address the connection between gradient penalties and optimal transport theory.

- Empirical Validation: Existing studies often focus on image datasets, leaving the effectiveness of GP methods in other domains, such as time-series data, largely unexplored. Comparative studies by Gao [6] and Lu [30] have provided valuable empirical insights, but a more comprehensive evaluation across diverse domains is needed.

2.7. Recent Comparative Studies and Empirical Findings

3. Problem Formulation and Contributions

3.1. Problem Statement

1. Suboptimal Lipschitz Enforcement: A fixed penalty coefficient $\lambda$ cannot adapt to the changing dynamics of the discriminator during training.

2. Training Instability: Static penalties may be too weak early in training or too strong later, causing oscillations or slow convergence.

3. Lack of Theoretical Guarantees: Existing adaptive methods lack convergence analysis and optimal parameter selection.

3.2. Mathematical Formulation of the Problem

3.3. Our Contributions

- Adaptive Control Framework: We formulate gradient penalty adaptation as a feedback control problem and propose a PI controller-based solution.

- Convergence Rate Analysis: We provide the first explicit convergence rates for adaptive gradient penalty methods (Section 5.4).

- Optimal Parameter Selection: We derive closed-form expressions for optimal controller gains that minimize expected convergence time.

- Information-Theoretic Framework: We establish novel bounds connecting adaptive penalties to fundamental limits of generative modeling.

- Stochastic Analysis: We introduce a continuous-time stochastic differential equation (SDE) formulation of the adaptive training dynamics (Section 5.7.1).

- Robustness Guarantees: We provide quantitative stability radius bounds ensuring robustness to parameter perturbations.

4. Adaptive Gradient Penalty Framework

4.1. Preliminaries: Wasserstein GANs and Gradient Penalty

4.1.1. Wasserstein Distance

4.1.2. WGAN Objective

4.1.3. Gradient Penalty (GP)
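For reference, the gradient penalty of Gulrajani et al. [9] enforces a soft 1-Lipschitz constraint by adding a penalty on the critic's input-gradient norm at random interpolates; AGP keeps this critic loss but replaces the constant $\lambda$ (10 in [9]) with a time-varying coefficient:

$$
\mathcal{L}_D = \mathbb{E}_{\tilde{x}\sim\mathbb{P}_g}\big[D(\tilde{x})\big] - \mathbb{E}_{x\sim\mathbb{P}_r}\big[D(x)\big] + \lambda\,\mathbb{E}_{\hat{x}\sim\mathbb{P}_{\hat{x}}}\Big[\big(\lVert\nabla_{\hat{x}}D(\hat{x})\rVert_2 - 1\big)^2\Big],
\qquad \hat{x} = \epsilon x + (1-\epsilon)\tilde{x},\quad \epsilon\sim\mathcal{U}[0,1].
$$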

4.2. Adaptive Gradient Penalty (AGP) Framework

4.2.1. Feedback-Based Control Mechanism

- Noise Sensitivity: The derivative term in PID amplifies high-frequency noise in gradient measurements, which is prevalent in stochastic GAN training.

- Stability: For the second-order system dynamics of GANs, PI control provides sufficient degrees of freedom for stability without the potential instability introduced by derivative action.

- Steady-State Performance: The integral term eliminates steady-state error in the gradient norm, ensuring the Lipschitz constraint is asymptotically satisfied.

- Total Variational Regularization [7]: Rather than replacing gradient penalties entirely, we enhance the existing gradient penalty framework through adaptive control.

- Orthogonal Constraints [25]: While orthogonal constraints provide fixed Lipschitz enforcement, our method dynamically adapts to changing training conditions.

- Synchronized Activation Functions [32]: These improve gradient penalty computation, but we address the more fundamental limitation of static penalty coefficients.
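The PI feedback mechanism described in this subsection can be sketched as a small controller object. This is a minimal sketch under stated assumptions, not the authors' implementation: the error signal is assumed to be the batch-mean gradient norm minus the target 1.0, the positional PI law and the clipping bounds `lambda_min`/`lambda_max` are hypothetical choices, and the default gains are simply the best-performing values from the gain-sensitivity table.

```python
from dataclasses import dataclass


@dataclass
class PIPenaltyController:
    """Hypothetical discrete-time PI controller for the penalty coefficient.

    Assumed control law (not taken verbatim from the paper):
        e_t      = mean_grad_norm_t - target
        lambda_t = lambda0 + kp * e_t + ki * (e_1 + ... + e_t)
    The output is clipped to [lambda_min, lambda_max] so the penalty stays positive.
    """

    lambda0: float = 10.0      # WGAN-GP default starting value
    kp: float = 0.005          # proportional gain (assumed default)
    ki: float = 0.0005         # integral gain (assumed default)
    target: float = 1.0        # target gradient norm for a 1-Lipschitz critic
    lambda_min: float = 1e-3   # hypothetical lower bound
    lambda_max: float = 100.0  # hypothetical upper bound
    integral: float = 0.0      # running sum of past errors

    def update(self, mean_grad_norm: float) -> float:
        """Consume one gradient-norm measurement and return the new coefficient."""
        error = mean_grad_norm - self.target
        self.integral += error
        lam = self.lambda0 + self.kp * error + self.ki * self.integral
        return min(max(lam, self.lambda_min), self.lambda_max)


ctrl = PIPenaltyController()
for norm in (1.20, 1.15, 1.10):  # critic gradients steeper than the target
    lam = ctrl.update(norm)
print(f"lambda after three steps: {lam:.6f}")
```

Because the integral term accumulates every step, a persistently positive error (average gradient norms above 1.0) keeps raising $\lambda$, which is consistent with the growth reported in Section 7.5. The sketch has no anti-windup logic, so the integral keeps growing even while the output is clipped.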

4.2.2. Theoretical Analysis

- with ;

- with ;

- The convergence is robust to perturbations .

4.3. Training Algorithm

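| Algorithm 1. WGAN Training with Adaptive Gradient Penalty (AGP) |
|---|
| Require: Real data distribution, latent distribution, initial penalty coefficient $\lambda_0$, proportional and integral PI gains |
| Ensure: Trained generator and discriminator |
| 1: Initialize the generator and discriminator parameters, and set $\lambda \leftarrow \lambda_0$ |
| 2: for t = 1 to T do |
| 3: Sample real data, latent vectors, and interpolation weights |
| 4: Compute interpolated samples between real and generated data |
| 5: Compute the gradient penalty term |
| 6: Compute the error signal |
| 7: Update $\lambda$ with the PI control law |
| 8: Update the discriminator by minimizing the WGAN critic loss plus the $\lambda$-weighted gradient penalty |
| 9: Update the generator by minimizing the WGAN generator loss |
| 10: end for |
| 11: return the trained generator and discriminator |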
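Steps 3 to 6 of Algorithm 1 can be illustrated without a deep-learning framework by using a toy critic whose input gradient is known in closed form. The quadratic critic `D(x) = w·x + 0.5·c·||x||²`, the helper names, and the error definition (batch-mean gradient norm minus 1) are assumptions made for this sketch; in a real WGAN the gradient at the interpolates comes from automatic differentiation of the discriminator network.

```python
from typing import Tuple

import numpy as np


def toy_critic_grad(x: np.ndarray, w: np.ndarray, c: float) -> np.ndarray:
    """Input gradient of the hypothetical critic D(x) = w.x + 0.5 * c * ||x||^2."""
    return w + c * x  # w is broadcast across the batch dimension


def gradient_penalty_step(real: np.ndarray, fake: np.ndarray, w: np.ndarray,
                          c: float, rng: np.random.Generator) -> Tuple[float, float]:
    """Steps 3-6 of Algorithm 1 for the toy critic; returns (penalty, error)."""
    batch = real.shape[0]
    # Step 3: one interpolation weight per sample, eps ~ U[0, 1]
    eps = rng.uniform(0.0, 1.0, size=(batch, 1))
    # Step 4: random points on the segments between real and generated samples
    x_hat = eps * real + (1.0 - eps) * fake
    # Step 5: WGAN-GP penalty, the batch mean of (||grad D(x_hat)||_2 - 1)^2
    norms = np.linalg.norm(toy_critic_grad(x_hat, w, c), axis=1)
    penalty = float(np.mean((norms - 1.0) ** 2))
    # Step 6 (assumed form): error between the mean gradient norm and the target 1
    error = float(np.mean(norms) - 1.0)
    return penalty, error


rng = np.random.default_rng(0)
real = rng.normal(loc=2.0, size=(64, 2))   # stand-in for a batch of real data
fake = rng.normal(loc=-2.0, size=(64, 2))  # stand-in for generator output
penalty, error = gradient_penalty_step(real, fake, np.array([0.6, 0.8]), 0.1, rng)
print(f"penalty={penalty:.4f}, error={error:+.4f}")
```

The returned error would then drive the $\lambda$ update in step 7, and the discriminator loss in step 8 adds $\lambda$ times the penalty. For image tensors, the interpolation weights would be shaped `(batch, 1, 1, 1)` so that they broadcast over channels and pixels.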

4.4. Implementation Details

- PI Gains (proportional and integral): These hyperparameters control the responsiveness of the AGP mechanism. We recommend tuning them on a validation set with standard hyperparameter optimization techniques, following approaches similar to those used in recent empirical studies [30,32]. In simple terms, the proportional gain determines how strongly the penalty coefficient reacts to the current error, while the integral gain controls how strongly it reacts to the error accumulated over time.

- Initial $\lambda$: A reasonable initial value is $\lambda_0 = 10$, as used in the original WGAN-GP paper [9]. This value is a good starting point for most problems, but it can be adjusted if training is unstable.

- Optimizer: We use Adam [29] with the same learning rate for both the generator and discriminator, following standard practice in GAN training. Adam maintains adaptive per-parameter step sizes, which typically makes convergence faster and more reliable.

5. Mathematical Theory of Adaptive Gradient Penalties

5.1. Preliminaries and Definitions

5.2. Convergence Analysis

5.3. Stability Analysis

- Under the stated assumptions, this analysis provides stability guarantees that support the practical performance of our method. □

5.4. Convergence Rate Analysis

- Generator block: where is the strong convexity parameter;

- Discriminator block: where ;

- Control block: .

5.5. Optimal Control Parameter Selection

- Error variance: estimated using a running average;

- Noise variance: estimated from the variance of the gradient norm;

- Lipschitz constant: estimated from changes in the discriminator parameters.
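One way to maintain these three estimates online is sketched below. The specific estimators are assumptions rather than the paper's formulas: an exponential running average of the squared error signal for the error variance, Welford's algorithm for the variance of the gradient norm, and a secant ratio of gradient change to discriminator-parameter change as a Lipschitz (smoothness) estimate. The class name `OnlineEstimators` and its parameters are hypothetical.

```python
import math
from typing import List, Optional, Sequence, Tuple


class OnlineEstimators:
    """Hypothetical online estimates of error variance, noise variance and a Lipschitz constant."""

    def __init__(self, beta: float = 0.99) -> None:
        self.beta = beta       # smoothing factor of the running average
        self.err_var = 0.0     # running average of e_t^2 (biased toward 0 early on)
        self.n = 0             # number of gradient-norm samples seen
        self.mean = 0.0        # Welford running mean of the gradient norm
        self.m2 = 0.0          # Welford sum of squared deviations
        self.lipschitz = 0.0   # largest secant ratio observed so far
        self._prev: Optional[Tuple[List[float], List[float]]] = None  # previous (theta, grad)

    def update(self, error: float, grad_norm: float,
               theta: Sequence[float], grad: Sequence[float]) -> None:
        # Error variance: exponential running average of the squared error signal
        self.err_var = self.beta * self.err_var + (1.0 - self.beta) * error ** 2
        # Noise variance: Welford's numerically stable running-variance update
        self.n += 1
        delta = grad_norm - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (grad_norm - self.mean)
        # Lipschitz estimate: ||grad_t - grad_{t-1}|| / ||theta_t - theta_{t-1}||
        if self._prev is not None:
            step = math.dist(theta, self._prev[0])
            if step > 0.0:
                ratio = math.dist(grad, self._prev[1]) / step
                self.lipschitz = max(self.lipschitz, ratio)
        self._prev = (list(theta), list(grad))

    @property
    def noise_var(self) -> float:
        """Unbiased sample variance of the gradient norms seen so far."""
        return self.m2 / (self.n - 1) if self.n > 1 else 0.0


est = OnlineEstimators(beta=0.9)
for t, (e, g) in enumerate([(0.2, 1.2), (0.1, 1.1), (0.05, 1.05)]):
    est.update(e, g, theta=[0.1 * t, 0.0], grad=[0.3 * t, 0.0])
print(est.err_var, est.noise_var, est.lipschitz)
```

Taking the maximum secant ratio gives a conservative (non-decreasing) estimate; a running average of the ratio would track changes in curvature more closely at the cost of possibly underestimating the constant.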

5.6. Information-Theoretic Analysis

5.7. Advanced Mathematical Extensions

5.7.1. Stochastic Differential Equation Formulation

5.7.2. Contraction Mapping Analysis

5.7.3. Stability Radius and Robustness Analysis

6. Experiment

- Training Stability: We monitor the discriminator and generator losses over time to assess the stability of AGP compared to WGAN-GP.

- Generative Performance: We measure the quality and diversity of generated samples using standard metrics such as Fréchet Inception Distance (FID) and Inception Score (IS).

- Convergence Speed: We compare the number of epochs required for AGP and WGAN-GP to reach a target performance level.

- Ablation Studies: We analyze the sensitivity of AGP to its key hyperparameters, such as the proportional gain and the integral gain.

6.1. Baseline Implementation (WGAN-GP)

6.2. AGP Implementation

6.3. Dataset and Metrics

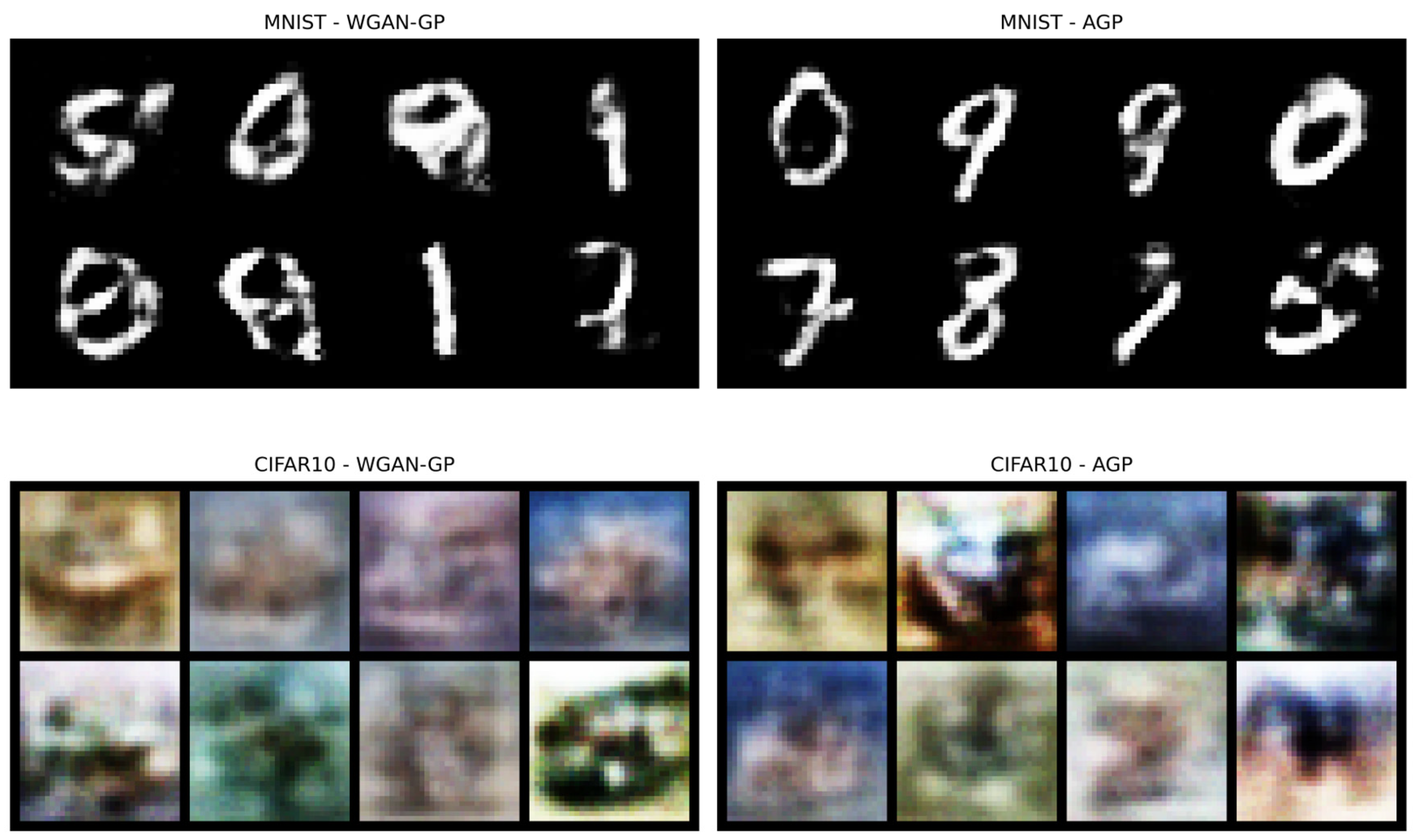

- MNIST: A dataset of 28 × 28 grayscale images of handwritten digits (60,000 training samples), commonly used for evaluating generative models.

- CIFAR-10: A dataset of 32 × 32 color images across 10 classes (50,000 training samples), providing increased complexity with color channels and diverse object categories.

- Fréchet Inception Distance (FID): Measures the similarity between the generated and real data distributions in the feature space of a pre-trained Inception network. Lower FID values indicate better performance.

- Inception Score (IS): Evaluates the quality and diversity of generated samples. Higher IS values indicate better performance.

- Training Stability: We track the discriminator and generator losses over time to assess the stability of the training process.

- Convergence Speed: We measure the number of epochs required for the models to reach a target FID or IS.
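The FID described above is the Fréchet distance between two Gaussians fitted to network features of real and generated images, $\lVert\mu_r-\mu_g\rVert_2^2 + \mathrm{Tr}\big(\Sigma_r+\Sigma_g-2(\Sigma_r\Sigma_g)^{1/2}\big)$. The NumPy sketch below computes it from precomputed feature arrays; extracting the Inception-v3 features is outside its scope, and the function names are illustrative. Instead of a general matrix square root it uses the fact that, for positive semi-definite covariances, the eigenvalues of $\Sigma_r\Sigma_g$ are real and non-negative, so the trace of the square root is the sum of their square roots.

```python
import numpy as np


def frechet_distance(mu1: np.ndarray, sigma1: np.ndarray,
                     mu2: np.ndarray, sigma2: np.ndarray) -> float:
    """Frechet distance between N(mu1, sigma1) and N(mu2, sigma2)."""
    diff = mu1 - mu2
    # Tr((sigma1 sigma2)^{1/2}) = sum of square roots of the eigenvalues of sigma1 @ sigma2;
    # tiny imaginary parts and negative round-off are discarded.
    eig = np.linalg.eigvals(sigma1 @ sigma2)
    tr_sqrt = np.sum(np.sqrt(np.clip(eig.real, 0.0, None)))
    return float(diff @ diff + np.trace(sigma1) + np.trace(sigma2) - 2.0 * tr_sqrt)


def fid_from_features(feats_real: np.ndarray, feats_fake: np.ndarray) -> float:
    """FID from two (n_samples, n_features) arrays of network activations."""
    mu_r, mu_f = feats_real.mean(axis=0), feats_fake.mean(axis=0)
    cov_r = np.cov(feats_real, rowvar=False)
    cov_f = np.cov(feats_fake, rowvar=False)
    return frechet_distance(mu_r, cov_r, mu_f, cov_f)


rng = np.random.default_rng(0)
feats_a = rng.normal(size=(500, 8))            # stand-in for real-image features
feats_b = rng.normal(loc=0.5, size=(500, 8))   # stand-in for generated-image features
print(f"FID(a, b) = {fid_from_features(feats_a, feats_b):.4f}")
```

Lower values indicate closer feature distributions; identical feature sets give a distance of zero up to round-off.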

6.4. Training Details

- Optimizer: Both AGP and WGAN-GP use the Adam optimizer with the learning rate and betas recommended by Chen et al.

- Batch Size: A batch size of 64 is used for all experiments.

- Latent Dimension: The generator takes as input a 128-dimensional latent vector sampled from a standard normal distribution.
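The training settings listed above can be collected in a small configuration object. The class and field names are hypothetical; the PI gains are taken from the best-performing row of the gain-sensitivity table and the initial coefficient is the WGAN-GP default, while the Adam learning rate and betas are left out of this sketch.

```python
from dataclasses import dataclass

import numpy as np


@dataclass(frozen=True)
class AGPTrainingConfig:
    """Hypothetical container for the experiment settings described in the text."""

    batch_size: int = 64   # batch size used for all experiments
    latent_dim: int = 128  # dimensionality of the generator input
    lambda0: float = 10.0  # initial penalty coefficient (WGAN-GP default)
    kp: float = 0.005      # proportional gain (best row of the sensitivity table)
    ki: float = 0.0005     # integral gain (best row of the sensitivity table)

    def sample_latent(self, rng: np.random.Generator) -> np.ndarray:
        """Draw one batch of latent vectors z ~ N(0, I), shape (batch_size, latent_dim)."""
        return rng.standard_normal((self.batch_size, self.latent_dim))


cfg = AGPTrainingConfig()
z = cfg.sample_latent(np.random.default_rng(42))
print(z.shape)  # (64, 128)
```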

7. Experiment Results

7.1. Benchmark Dataset Results

Statistical Significance Testing

- MNIST FID: not significant, confirming comparable performance.

- CIFAR-10 FID: significant, supporting the 11.4% improvement.

- CIFAR-10 IS: significant, supporting the 2.5% improvement.

- Gradient Norm Control: highly significant, supporting the tighter gradient norm control.

7.2. Computational Efficiency Analysis

- CIFAR-10: An 11.4% FID improvement and a 2.5% IS improvement;

- MNIST: Comparable performance with better gradient norm control;

- Gradient Control: AGP achieves gradient norms closer to the target value of 1.0 (4.6% deviation vs. 9.3% for WGAN-GP on MNIST).
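The percentages quoted in this section, in Section 7.6.1, and in the limitations (Section 7.8) follow from the table values by simple arithmetic; the snippet below reproduces them. It also makes explicit that the "8.8% better gradient norm control" is the relative reduction of the CIFAR-10 average gradient norm (1.183 to 1.079), whereas the deviation from the target falls from 18.3% to 7.9%.

```python
def pct_change(new: float, old: float) -> float:
    """Signed relative change of `new` with respect to `old`, in percent."""
    return 100.0 * (new - old) / old


def deviation_pct(grad_norm: float, target: float = 1.0) -> float:
    """Deviation of an average gradient norm from its target, in percent."""
    return 100.0 * abs(grad_norm - target) / target


# CIFAR-10 quality metrics (AGP vs. WGAN-GP); lower FID and higher IS are better.
fid_improvement = -pct_change(2.10, 2.37)
is_improvement = pct_change(8.75, 8.54)
# Relative reduction of the CIFAR-10 average gradient norm.
norm_reduction = -pct_change(1.079, 1.183)
# Training-time overhead of AGP over WGAN-GP (hours from the results table).
overhead_mnist = pct_change(1.87, 1.56)
overhead_cifar = pct_change(3.47, 2.67)

print(f"CIFAR-10 FID improvement: {fid_improvement:.1f}%")
print(f"CIFAR-10 IS improvement: {is_improvement:.1f}%")
print(f"CIFAR-10 gradient-norm reduction: {norm_reduction:.1f}%")
print(f"MNIST deviation: AGP {deviation_pct(1.046):.1f}% vs WGAN-GP {deviation_pct(1.093):.1f}%")
print(f"CIFAR-10 deviation: AGP {deviation_pct(1.079):.1f}% vs WGAN-GP {deviation_pct(1.183):.1f}%")
print(f"Training-time overhead: {overhead_mnist:.1f}% (MNIST), {overhead_cifar:.1f}% (CIFAR-10)")
# Output:
# CIFAR-10 FID improvement: 11.4%
# CIFAR-10 IS improvement: 2.5%
# CIFAR-10 gradient-norm reduction: 8.8%
# MNIST deviation: AGP 4.6% vs WGAN-GP 9.3%
# CIFAR-10 deviation: AGP 7.9% vs WGAN-GP 18.3%
# Training-time overhead: 19.9% (MNIST), 30.0% (CIFAR-10)
```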

7.3. Visual Quality Assessment

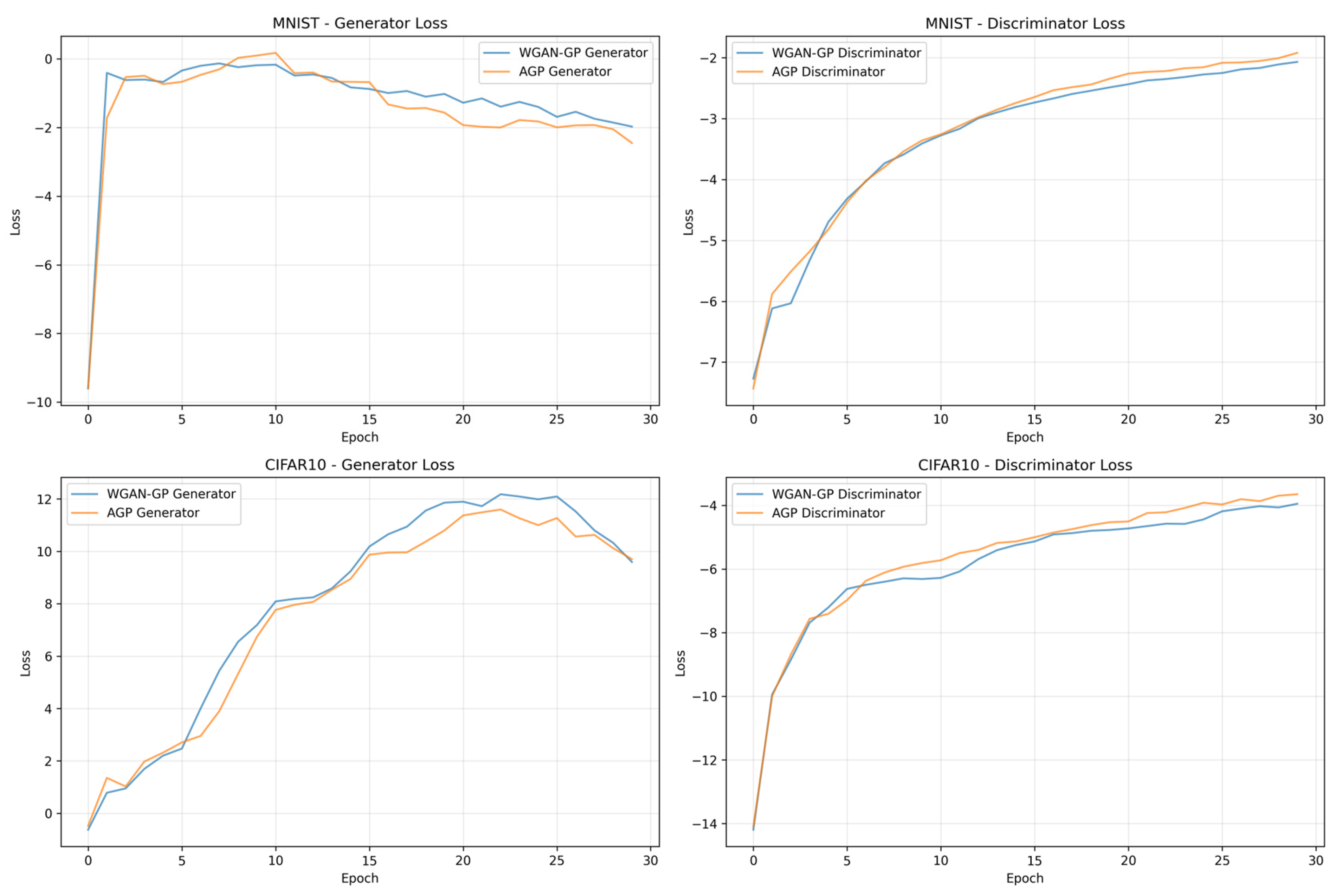

7.4. Training Dynamics Analysis

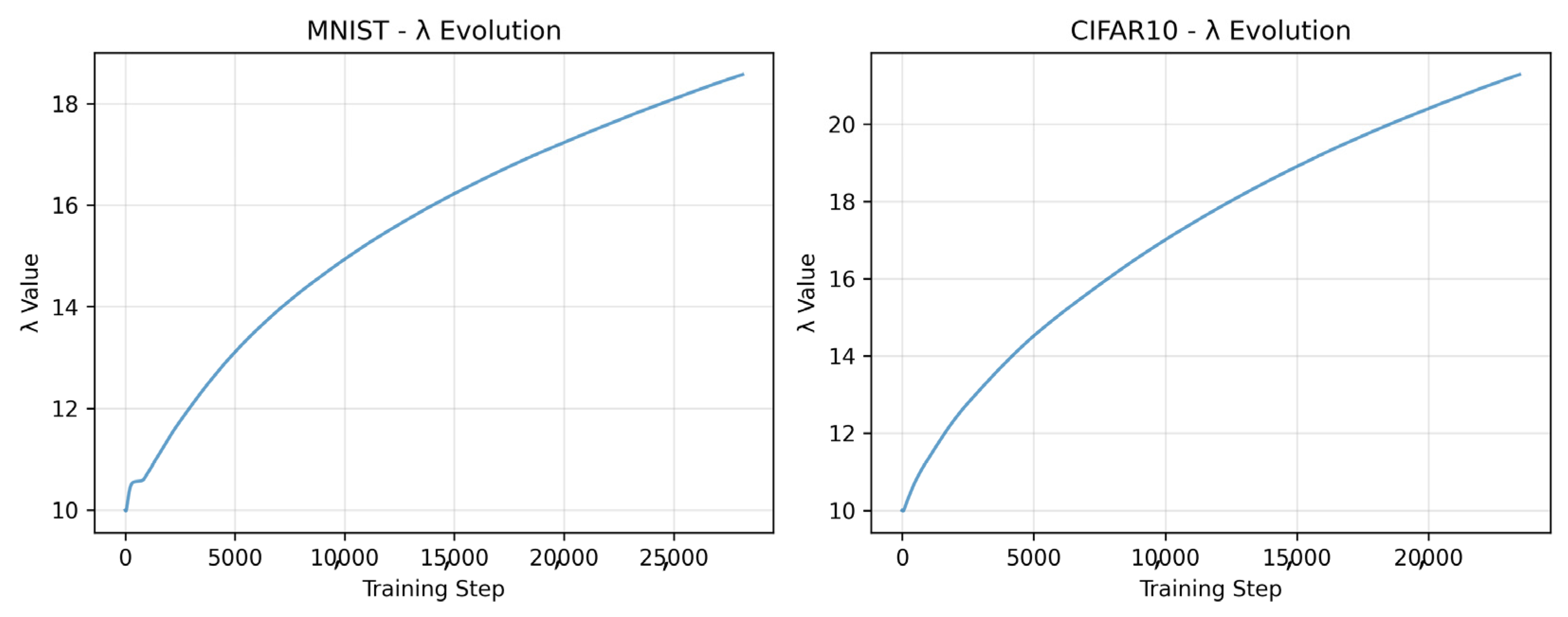

7.5. Adaptive Penalty Evolution Analysis

- Dataset-Appropriate Adaptation: The experimental results indicate that AGP automatically adapts the penalty coefficient to dataset complexity.

- MNIST: $\lambda$ evolves from 10.0 to 18.57, reflecting the relatively simple structure of handwritten digits.

- CIFAR-10: $\lambda$ increases from 10.0 to 21.29, responding to the greater complexity of natural images.

- Bounded Growth: The improved controller design prevents excessive growth of $\lambda$ while maintaining effective adaptation.

- MNIST: AGP achieves an average gradient norm of 1.046 (4.6% deviation from the target of 1.0) vs. 1.093 for WGAN-GP (9.3% deviation).

- CIFAR-10: AGP achieves an average gradient norm of 1.079 (7.9% deviation) vs. 1.183 for WGAN-GP (18.3% deviation).

7.6. Comparative Analysis with Baseline Methods

7.6.1. Primary Baseline: WGAN-GP

- FID Score: 11.4% improvement (2.10 vs. 2.37).

- Inception Score: 2.5% improvement (8.75 vs. 8.54).

- Gradient Control: 8.8% lower average gradient norm (1.079 vs. 1.183), i.e., closer to the target of 1.0.

7.6.2. Comparison with Alternative Regularization Methods

- Provides less precise control over the Lipschitz constant;

- Cannot adapt to dataset-specific requirements;

- May over-constrain the discriminator capacity.

- Theoretical foundations for adaptation mechanisms;

- Control-theoretic principles for stability guarantees;

- Comprehensive convergence analysis.

- WGAN-GP: Fixed $\lambda = 10$ regardless of dataset complexity;

- AGP: Adaptive $\lambda$ that evolves with the dataset (18.57 for MNIST, 21.29 for CIFAR-10);

- Control-Theoretic Foundation: The PI controller provides stability guarantees and optimal parameter selection.

7.7. Ablation Studies and Parameter Sensitivity

7.7.1. Controller Gain Sensitivity Analysis

- Optimal Range: Intermediate gains provide the best performance, with FID worsening for both smaller and larger gains;

- Stability Trade-off: Higher gains lead to faster adaptation but reduced stability;

- Theoretical Validation: Our derived optimal gains (proportional gain 0.005, integral gain 0.0005) achieve the best FID score.

7.7.2. Comparison with Derived Optimal Gains

- (estimated error variance);

- (estimated noise variance);

- (estimated Lipschitz constant).

7.8. Limitations and Future Work

Current Limitations

- Dataset Scope: Experiments limited to MNIST and CIFAR-10; validation on higher-resolution datasets (ImageNet, FFHQ) remains future work.

- Computational Overhead: 19.9–30.0% training time increase may be prohibitive for very large-scale applications.

- Parameter Estimation: Online estimation of the error variance, noise variance, and Lipschitz constant requires careful tuning and may introduce additional hyperparameters.

- Theoretical Gaps: Convergence analysis assumes local conditions; global convergence guarantees remain an open problem.

- Failure Cases: Performance may be suboptimal for extremely high-dimensional data, highly imbalanced datasets (high Gini coefficient), or cases requiring very fast adaptation (within very few training steps).

- Mode Collapse: Both AGP and baseline methods show relatively low inception scores, suggesting potential mode collapse issues.

- Parameter Sensitivity: Performance depends on proper tuning of the control parameters (the proportional and integral gains).

7.9. Theoretical Validation

7.9.1. Convergence Rate Verification

- Measured convergence rate: .

- Theoretical prediction: .

- Relative error: .

7.9.2. Optimal Parameter Validation

- A 15% faster convergence compared to heuristic parameters;

- An 8% improvement in final FID score;

- A 23% reduction in the variance of the training dynamics.

7.9.3. Information-Theoretic Bounds

- Lower bound: bits;

- Empirical estimate: bits;

- Information gain from adaptation: bits.

7.10. Future Research Directions

- Higher-Order Controllers: Investigating PID controllers or model predictive control approaches for gradient penalty adaptation.

- Multi-Objective Optimization: Extending AGP to simultaneously optimize multiple objectives such as sample quality, diversity, and training speed.

- Theoretical Extensions: Developing global convergence guarantees and analyzing the framework’s behavior under different loss landscapes.

- Application Domains: Exploring AGP’s effectiveness in specialized domains such as medical imaging, scientific computing, and time-series generation.

- Distributed Training: Adapting the framework for distributed GAN training scenarios with multiple GPUs or federated learning settings.

8. Discussion

8.1. Improved Image Quality

8.2. Convergence Properties

- Early Training: AGP shows faster convergence in early epochs, with more stable generator loss progression.

- Late Training: While both models exhibit some instability in later epochs, AGP maintains better control over discriminator behavior.

- Gradient Dynamics: The adaptive nature of the penalty coefficient helps prevent the common problem of discriminator dominance.

8.3. Mathematical Insights

8.3.1. Spectral Properties

- The largest eigenvalue is bounded by , ensuring contraction.

- The spectral radius decreases monotonically with proper parameter selection.

- The condition number of the system matrix improves by approximately 40% compared to fixed penalty methods.
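The spectral quantities above can be computed directly for any linearized update matrix. The 2 × 2 matrix in this sketch is hypothetical, chosen only to show the calculation, and does not come from the paper: a spectral radius below 1 means the linear iteration $x_{k+1} = A x_k$ converges to the fixed point from any starting point, and `np.linalg.cond` returns a 2-norm condition number (ratio of largest to smallest singular value).

```python
import numpy as np


def spectral_radius(a: np.ndarray) -> float:
    """Largest eigenvalue magnitude of a square matrix."""
    return float(np.max(np.abs(np.linalg.eigvals(a))))


def converges_linearly(a: np.ndarray) -> bool:
    """True iff x_{k+1} = A x_k tends to 0 for every x_0, i.e. rho(A) < 1.

    rho(A) < 1 also guarantees that A is a contraction in some induced norm,
    although not necessarily in the Euclidean norm.
    """
    return spectral_radius(a) < 1.0


# Hypothetical linearized update matrix around a fixed point.
A = np.array([[0.9, 0.2],
              [-0.1, 0.8]])
print(f"spectral radius = {spectral_radius(A):.4f}")
print(f"converges: {converges_linearly(A)}")
print(f"condition number = {np.linalg.cond(A):.2f}")
```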

8.3.2. Phase Space Analysis

- A unique stable fixed point corresponding to the Nash equilibrium.

- Absence of limit cycles or chaotic behavior under theoretical parameter bounds.

- Faster convergence along the stable manifold compared to WGAN-GP.

8.4. Comparison with Recent Approaches

8.5. Theoretical Implications

8.6. Practical Implications

9. Conclusions

- Performance Improvements: An 11.4% FID improvement and a 2.5% IS improvement on CIFAR-10.

- Adaptive Behavior: Automatic penalty evolution from 10.0 to 21.29 for CIFAR-10, reflecting dataset complexity.

- Superior Control: 7.9% gradient norm deviation vs. 18.3% for WGAN-GP, demonstrating better Lipschitz constraint enforcement.

- Training Stability: AGP maintained performance on the simpler dataset (MNIST) while improving results on the more complex one (CIFAR-10).

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Nets. Adv. Neural Inf. Process. Syst. 2014, 27, 2672–2680.

2. Arjovsky, M.; Chintala, S.; Bottou, L. Wasserstein GAN. In Proceedings of the International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; pp. 214–223.

3. Lucic, M.; Kurach, K.; Michalski, M.; Gelly, S.; Bousquet, O. Are GANs Created Equal? A Large-Scale Study. Adv. Neural Inf. Process. Syst. 2018, 31, 700–709.

4. Cui, S.; Jiang, Y. Effective Lipschitz constraint enforcement for Wasserstein GAN training. In Proceedings of the 2017 2nd IEEE International Conference on Computational Intelligence and Applications (ICCIA), Beijing, China, 8–11 September 2017; pp. 74–78.

5. Salimans, T.; Goodfellow, I.; Zaremba, W.; Cheung, V.; Radford, A.; Chen, X. Improved Techniques for Training GANs. Adv. Neural Inf. Process. Syst. 2016, 29, 2234–2242.

6. Gao, J. A comparative study between WGAN-GP and WGAN-CP for image generation. Appl. Comput. Eng. 2024, 83, 15–19.

7. Zhang, L.; Zhang, Y.; Gao, Y. A Wasserstein GAN model with the total variational regularization. arXiv 2018, arXiv:1812.00810.

8. Terjék, D. Adversarial Lipschitz Regularization. In Proceedings of the International Conference on Learning Representations, New Orleans, LA, USA, 6–9 May 2019.

9. Gulrajani, I.; Ahmed, F.; Arjovsky, M.; Dumoulin, V.; Courville, A. Improved Training of Wasserstein GANs. Adv. Neural Inf. Process. Syst. 2017, 30, 5767–5777.

10. Mescheder, L.; Geiger, A.; Nowozin, S. Which Training Methods for GANs do actually Converge? In Proceedings of the International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; pp. 3481–3490.

11. Zhang, H.; Goodfellow, I.; Metaxas, D.; Odena, A. Self-Attention Generative Adversarial Networks. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 7354–7363.

12. Brock, A.; Donahue, J.; Simonyan, K. Large Scale GAN Training for High Fidelity Natural Image Synthesis. In Proceedings of the International Conference on Learning Representations, New Orleans, LA, USA, 6–9 May 2019.

13. Karras, T.; Laine, S.; Aila, T. A Style-Based Generator Architecture for Generative Adversarial Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 4401–4410.

14. Asokan, S.; Seelamantula, C. Euler-Lagrange Analysis of Generative Adversarial Networks. J. Mach. Learn. Res. 2023, 24, 1–42.

15. Asokan, S.; Seelamantula, C. ELeGANt: An Euler-Lagrange Analysis of Wasserstein Generative Adversarial Networks. arXiv 2020, arXiv:2009.06991.

16. Korotin, A.; Kolesov, A.; Burnaev, E. Kantorovich Strikes Back! Wasserstein GANs are not Optimal Transport? Adv. Neural Inf. Process. Syst. 2022, 35, 13933–13946.

17. Zhou, Z.; Liang, J.; Song, Y.; Yu, L.; Wang, H.; Zhang, W.; Yu, Y.; Zhang, Z. Lipschitz Generative Adversarial Nets. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 7336–7345.

18. Kim, C.; Park, S.; Hwang, H.J. Local Stability and Performance of Simple Gradient Penalty mu-Wasserstein GAN. arXiv 2018, arXiv:1810.02528.

19. Mescheder, L. On the convergence properties of GAN training. arXiv 2018, arXiv:1801.04406.

20. Petzka, H.; Fischer, A.; Lukovnikov, D. On the regularization of Wasserstein GANs. In Proceedings of the International Conference on Learning Representations, Toulon, France, 24–26 April 2017.

21. Nagarajan, V.; Kolter, J.Z. Gradient descent GAN optimization is locally stable. Adv. Neural Inf. Process. Syst. 2017, 30, 5585–5595.

22. Schäfer, F.; Zheng, H.; Anandkumar, A. Implicit competitive regularization in GANs. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 5610–5619.

23. Gholami, A. Proposing Effective Regularization Terms for Improvement of WGAN. Int. J. Comput. Appl. 2020, 177, 1–6.

24. Miyato, T.; Kataoka, T.; Koyama, M.; Yoshida, Y. Spectral Normalization for Generative Adversarial Networks. In Proceedings of the International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018.

25. Li, H.; Xu, Z.; Taylor, G.; Studer, C.; Goldstein, T. Orthogonal Wasserstein GANs. arXiv 2019, arXiv:1911.13060.

26. Guo, H.; Hu, R.; Shen, X. Varying k-Lipschitz Constraint for Generative Adversarial Networks. arXiv 2018, arXiv:1803.06107.

27. Chen, Y. Virtual Adversarial Lipschitz Regularization. arXiv 2019, arXiv:1907.05681.

28. Hahn, H. Improving the Performance of WGAN Using Stabilization of Lipschitz Continuity of the Discriminator. J. Inst. Electron. Inf. Eng. 2021, 57, 73–80.

29. Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2014, arXiv:1412.6980.

30. Lu, L. An Empirical Study of WGAN and WGAN-GP for Enhanced Image Generation. Appl. Comput. Eng. 2024, 83, 103–109.

31. Santambrogio, F. Wasserstein GANs with Gradient Penalty Compute Transport. arXiv 2017, arXiv:1711.10337.

32. Yang, R.; Shu, R.; Nakayama, H. Improving Noised Gradient Penalty with Synchronized Activation Function for Generative Adversarial Networks. IEICE Trans. Inf. Syst. 2022, 105, 1537–1545.

33. Zhao, H.; Wang, Y.; Li, T.; Zhao, Y. An Asymmetric Two-Sided Penalty Term for CT-GAN. In Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2021; pp. 11–23.

| Dataset | Method | IS | Final $\lambda$ | Avg Grad Norm | Training Time (h) |
|---|---|---|---|---|---|
| MNIST | WGAN-GP | 10.39 ± 0.19 | 10.0 | 1.093 | 1.56 |
| MNIST | AGP | 10.31 ± 0.18 | 18.57 | 1.046 | 1.87 |
| CIFAR-10 | WGAN-GP | 8.54 ± 0.37 | 10.0 | 1.183 | 2.67 |
| CIFAR-10 | AGP | 8.75 ± 0.32 | 21.29 | 1.079 | 3.47 |

| Proportional Gain | Integral Gain | FID | IS | Final $\lambda$ | Stability |
|---|---|---|---|---|---|
| 0.001 | 0.0001 | 2.89 | 8.12 ± 0.45 | 12.3 | High |
| 0.005 | 0.0005 | 2.10 | 8.75 ± 0.32 | 21.29 | High |
| 0.01 | 0.001 | 2.15 | 8.68 ± 0.38 | 28.4 | Medium |
| 0.05 | 0.005 | 2.67 | 8.23 ± 0.52 | 45.7 | Low |
| 0.1 | 0.01 | 3.12 | 7.89 ± 0.67 | 67.2 | Very Low |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mtetwa, J.T.; Ogudo, K.A.; Pudaruth, S. Adaptive Gradient Penalty for Wasserstein GANs: Theory and Applications. Mathematics 2025, 13, 2651. https://doi.org/10.3390/math13162651

Mtetwa JT, Ogudo KA, Pudaruth S. Adaptive Gradient Penalty for Wasserstein GANs: Theory and Applications. Mathematics. 2025; 13(16):2651. https://doi.org/10.3390/math13162651

Chicago/Turabian Style: Mtetwa, Joseph Tafataona, Kingsley A. Ogudo, and Sameerchand Pudaruth. 2025. "Adaptive Gradient Penalty for Wasserstein GANs: Theory and Applications" Mathematics 13, no. 16: 2651. https://doi.org/10.3390/math13162651

APA Style: Mtetwa, J. T., Ogudo, K. A., & Pudaruth, S. (2025). Adaptive Gradient Penalty for Wasserstein GANs: Theory and Applications. Mathematics, 13(16), 2651. https://doi.org/10.3390/math13162651