A New Proximal Iteratively Reweighted Nuclear Norm Method for Nonconvex Nonsmooth Optimization Problems

Abstract

1. Introduction

1.1. Problem Description

1.2. Related Work

1.3. Our Contribution

- We propose a Proximal Iteratively Reweighted Nuclear Norm algorithm with Extrapolation and Line Search, denoted by PIRNNE-LS. This framework integrates line search with extrapolation and dimensionality reduction, which avoids restrictive a priori conditions on the algorithmic parameters. The parameters of the proposed method are initialized aggressively, above the prescribed thresholds, and are then adaptively adjusted at each iteration according to the line search criterion.

- We establish subsequential convergence, i.e., every cluster point of the generated sequence is a stationary point of the considered problem. In particular, when the line search is monotone, we further establish global convergence and a linear convergence rate under the Kurdyka–Łojasiewicz (KL) framework.

- We conduct numerical experiments on the matrix completion problem to evaluate the performance of the proposed method. The reported results demonstrate its effectiveness and superiority.

2. Preliminaries

2.1. Basic Concepts in Variational and Convex Analysis

- (i)

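- For a given $x \in \operatorname{dom} J$, the Fréchet subdifferential of J at x, denoted by $\hat{\partial} J(x)$, is the set of all vectors $u$ that satisfy $$\liminf_{y \to x,\, y \neq x} \frac{J(y) - J(x) - \langle u, y - x \rangle}{\|y - x\|} \ge 0.$$ When $x \notin \operatorname{dom} J$, we set $\hat{\partial} J(x) = \emptyset$.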

- (ii)

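- The limiting subdifferential, or simply the subdifferential, of J at x, denoted by $\partial J(x)$, is defined by $$\partial J(x) = \left\{ u : \exists\, x^k \to x,\ J(x^k) \to J(x),\ u^k \in \hat{\partial} J(x^k),\ u^k \to u \right\}.$$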

- (iii)

- A point $x$ is called a (limiting) critical point or stationary point of J if it satisfies $0 \in \partial J(x)$, and the set of critical points of J is denoted by $\operatorname{crit} J$.
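To illustrate definitions (i)–(iii) with a simple one-dimensional example of our own (not taken from the paper), consider $J(x) = |x|$ and $J(x) = -|x|$ on $\mathbb{R}$ at $x = 0$. The second case shows that the Fréchet subdifferential can be empty while the limiting subdifferential is not:

```latex
% J(x) = |x| at x = 0: both subdifferentials coincide with the convex one
\hat{\partial} J(0) = \partial J(0) = [-1, 1], \qquad 0 \in \partial J(0) \;\Rightarrow\; 0 \in \operatorname{crit} J.

% J(x) = -|x| at x = 0: near 0, \hat{\partial} J(x) = \{-1\} for x > 0 and \{1\} for x < 0
\hat{\partial} J(0) = \emptyset, \qquad \partial J(0) = \{-1, 1\}, \qquad 0 \notin \partial J(0).
```

Thus $0$ is a critical point of $|x|$ (its global minimizer) but not of $-|x|$, for which $0$ is a local maximizer.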

2.2. Kurdyka–Łojasiewicz Property

- (i)

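- The function J is said to have the KL property at $\bar{x} \in \operatorname{dom} \partial J$ if there exist $\eta \in (0, +\infty]$, a neighborhood U of $\bar{x}$, and a continuous and concave function $\varphi : [0, \eta) \to [0, +\infty)$ such that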

- (a)

- $\varphi(0) = 0$ and $\varphi$ is continuously differentiable on $(0, \eta)$ with $\varphi' > 0$;

- (b)

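- for all $x \in U \cap \{ x : J(\bar{x}) < J(x) < J(\bar{x}) + \eta \}$, the following KL inequality holds: $$\varphi'\big(J(x) - J(\bar{x})\big)\, \operatorname{dist}\big(0, \partial J(x)\big) \ge 1.$$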

- (ii)

- If J satisfies the KL property at each point of $\operatorname{dom} \partial J$, then J is called a KL function.
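A one-dimensional example of our own shows how the KL inequality is verified in practice. Take $J(x) = x^2$ on $\mathbb{R}$, $\bar{x} = 0$, $U = \mathbb{R}$, $\eta = +\infty$ and the desingularizing function $\varphi(s) = \sqrt{s}$, which is continuous, concave, satisfies $\varphi(0) = 0$ and has $\varphi' > 0$ on $(0, +\infty)$:

```latex
% For x \neq 0: J(x) - J(0) = x^2 > 0 and \partial J(x) = \{2x\}, hence
\varphi'\big(J(x) - J(0)\big)\,\operatorname{dist}\big(0, \partial J(x)\big)
  = \frac{1}{2\sqrt{x^2}} \cdot |2x| = 1 \ge 1 .
```

So $J$ has the KL property at $0$ with a desingularizing function of the form $c\,s^{1/2}$; in standard KL analyses, this exponent is the one associated with linear convergence rates.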

3. The Proposed Method

Algorithm 1 PIRNNE-LS for solving (1)

Choose , , , , , , , , .

For given , , let and set .

while stopping criterion is not satisfied, do

Step 1. Choose , and , set , , , then

(1a) Compute by (2) and (3), respectively.

(1b) Compute the SVD of , i.e., ; compute the singular values of , and let for .

(1c) Compute where .

(1d) If is satisfied, go to Step 2, where is defined in (9). Otherwise, set , , and go to Step (1a).

Step 2. for , then let and go to Step 1.

end while
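The loop of Algorithm 1 (extrapolate, reweight, take a weighted singular value thresholding step, backtrack) can be illustrated with a minimal NumPy sketch for a matrix completion instance. This is not the authors' implementation, and several pieces are assumptions because the parameter rules and criterion (9) are not reproduced above: the objective is taken as $F(X) = \tfrac12\|P_\Omega(X - M)\|_F^2 + \sum_i \lambda \log(1 + \sigma_i(X)/\gamma)$, the weights are the derivatives of this log penalty at the current singular values, a simple sufficient-decrease test stands in for (9), and "dimensionality reduction" is interpreted as keeping only the nonzero singular triplets. The function `pirnne_ls_step` and the parameters `beta0`, `L0`, `eta`, `delta` are hypothetical names.

```python
import numpy as np

def objective(X, M, mask, lam, gamma):
    # Assumed objective: 0.5 * ||P_Omega(X - M)||_F^2 + sum_i lam * log(1 + sigma_i(X) / gamma)
    resid = mask * (X - M)
    sigma = np.linalg.svd(X, compute_uv=False)
    return 0.5 * np.sum(resid ** 2) + lam * np.sum(np.log1p(sigma / gamma))

def weighted_svt(Y, weights, tau):
    # Prox of tau * sum_i w_i * sigma_i(X). The closed form max(sigma_i - tau * w_i, 0)
    # is a global minimizer because the weights are nondecreasing while the
    # singular values of Y are nonincreasing.
    U, s, Vt = np.linalg.svd(Y, full_matrices=False)
    s_new = np.maximum(s - tau * weights, 0.0)
    r = int(np.count_nonzero(s_new))  # keep only the nonzero singular triplets
    return (U[:, :r] * s_new[:r]) @ Vt[:r, :]

def pirnne_ls_step(X, X_prev, M, mask, lam, gamma,
                   beta0=0.9, L0=0.1, eta=2.0, delta=1e-4, max_tries=50):
    """One hypothetical outer iteration: reweight, extrapolate, prox step, backtrack."""
    # Reweighting: w_i = g'(sigma_i(X^k)) = lam / (gamma + sigma_i(X^k)), nondecreasing in i.
    sigma_k = np.linalg.svd(X, compute_uv=False)
    weights = lam / (gamma + sigma_k)
    F_k = objective(X, M, mask, lam, gamma)
    beta, L = beta0, L0  # aggressive initial extrapolation and step size 1/L
    for _ in range(max_tries):
        Y = X + beta * (X - X_prev)   # extrapolation
        grad = mask * (Y - M)         # gradient of the data-fitting term at Y
        X_new = weighted_svt(Y - grad / L, weights, 1.0 / L)
        # Simplified monotone sufficient-decrease test (stand-in for criterion (9)).
        if objective(X_new, M, mask, lam, gamma) <= F_k - 0.5 * delta * np.sum((X_new - X) ** 2):
            return X_new
        beta, L = beta / eta, L * eta  # shrink extrapolation and step size, retry
    return X  # no trial point accepted: keep the current iterate
```

Calling `pirnne_ls_step` repeatedly, passing the previous iterate as `X_prev`, produces nonincreasing objective values under these assumptions, since a trial point is accepted only if it passes the decrease test. Starting from large `beta0` and `1 / L0` and shrinking them on rejection mirrors the "initialize aggressively, then adjust" idea described in the contributions.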

4. Convergence Analysis

4.1. Subsequential Convergence of Nonmonotone Line Search

- (i)

- the sequence is nonincreasing;

- (ii)

- (iii)

- is bounded and any cluster point of is a critical point of
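Statement (i) asserts that a reference sequence is nonincreasing even though the line search is nonmonotone. As an illustration only (the exact criterion (9) is not reproduced here, so this form is an assumption), the max-type rule of Grippo, Lampariello and Lucidi uses the reference value $R_k = \max_{0 \le j < m} F(x^{k-j})$: if every accepted value satisfies $F(x^{k+1}) \le R_k$, then $R_{k+1} \le R_k$, while the objective values themselves may go up and down. The helper names and the memory length `memory` below are hypothetical.

```python
from collections import deque

def grippo_reference_values(objective_values, memory=5):
    """R_k = max of the last `memory` objective values F(x^k), ..., F(x^{k-memory+1}).

    If each new value is <= the previous reference value, the returned
    sequence is nonincreasing.
    """
    window = deque(maxlen=memory)  # sliding window of recent objective values
    refs = []
    for f in objective_values:
        window.append(f)
        refs.append(max(window))
    return refs

def accept_nonmonotone(f_trial, ref_value, step_sq_norm, delta=1e-4):
    # Hypothetical acceptance test: F(x_trial) <= R_k - (delta / 2) * ||x_trial - x^k||^2
    return f_trial <= ref_value - 0.5 * delta * step_sq_norm
```

For example, the objective values `[10, 9, 9.5, 8, 8.7, 7, 7.9, 6]` are not monotone, but with `memory=5` their reference values are `[10, 10, 10, 10, 10, 9.5, 9.5, 8.7]`, which are nonincreasing.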

4.2. Global Convergence and Linear Convergence Rate of Monotone Line Search

- (i)

- Ξ is nonempty and ;

- (ii)

- (iii)

- and Ψ are equal and constant on Ξ, i.e., there exists a constant κ such that for any , .

- (i)

- The whole sequence has finite length and converges globally to a point in

- (ii)

- Moreover, if the desingularizing function φ in the KL property can be taken in the form for some , then the whole sequences and converge linearly.

- (i)

- Assume that Then, there exists a subsequence of converging to . Let be such that for all , and we know that . It follows from Theorem 1 (iii) and the continuity of that Again from Theorem 1 (i) and (ii), we have and is nonincreasing for all . Thus, we get , and for all . If there exists an integer such that , then from (8), , we have Thus, we have for any and the assertion holds directly. Otherwise, since is nonincreasing for all , we have for all . Now, we consider the sequence . It follows from Lemma 6 that the cluster point set of is nonempty and compact, and for any , we have Thus, for any , there exists a nonnegative integer such that for any . In addition, for any , there exists a positive integer such that for all . Consequently, for any , , where K is given by Lemma 5, we have By using Lemma 1 with , for any , we have The remaining global convergence arguments are similar to those in ([1], Theorem 2): is a Cauchy sequence and, hence, convergent. By using Lemma 6 (i), there exists with such that .

- (ii)

- Denote . It follows from (20) that where , the second inequality follows from Lemma 5 and the fourth one follows from (8), together with the . Since , there exists such that . Then, for all , it follows from (20) that for any , which means that So, the sequences and are both Q-linearly convergent. This implies that the entire sequence is R-linearly convergent. By combining this with (8), we can infer that there exist and and so that for each . Consequently, which means that is R-linearly convergent. This completes the proof. □
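The passage from Q-linear convergence of tail sums to R-linear convergence of the iterates is a standard step. In generic notation assumed here (not necessarily the paper's), with $x^k$ the iterates, $x^*$ their limit, $\Delta_k$ the tail length and $q \in (0,1)$, and after shifting indices so that the contraction holds from $k = 0$, it reads:

```latex
% x^k - x^* = \sum_{i \ge k} (x^i - x^{i+1}) since x^i \to x^*; apply the triangle inequality
\|x^k - x^*\| \le \sum_{i \ge k} \|x^{i+1} - x^i\| =: \Delta_k, \qquad
\Delta_{k+1} \le q\,\Delta_k
\;\Longrightarrow\; \Delta_k \le \Delta_0\, q^k
\;\Longrightarrow\; \|x^k - x^*\| \le \Delta_0\, q^k .
```

Note that R-linear convergence only bounds the error by a geometric sequence; the ratio $\|x^{k+1} - x^*\| / \|x^k - x^*\|$ itself need not stay below $q$.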

5. Numerical Results

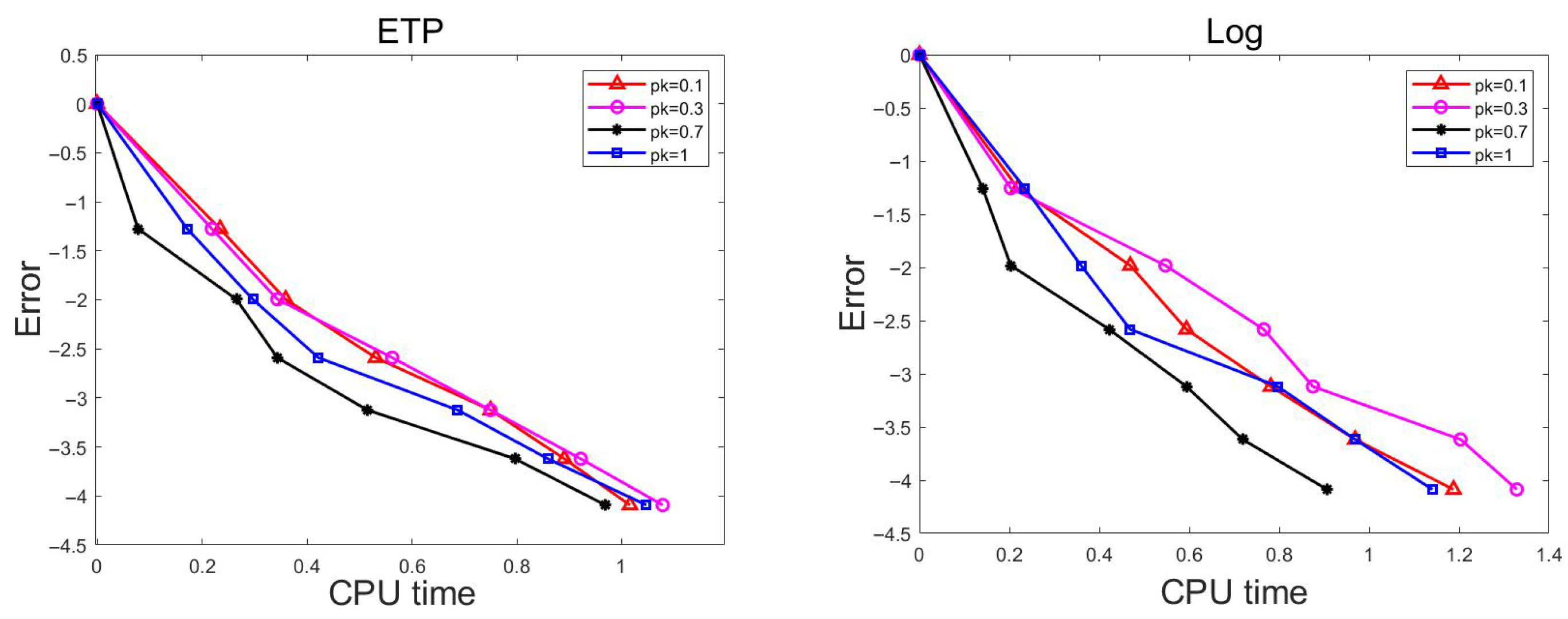

5.1. Synthetic Data

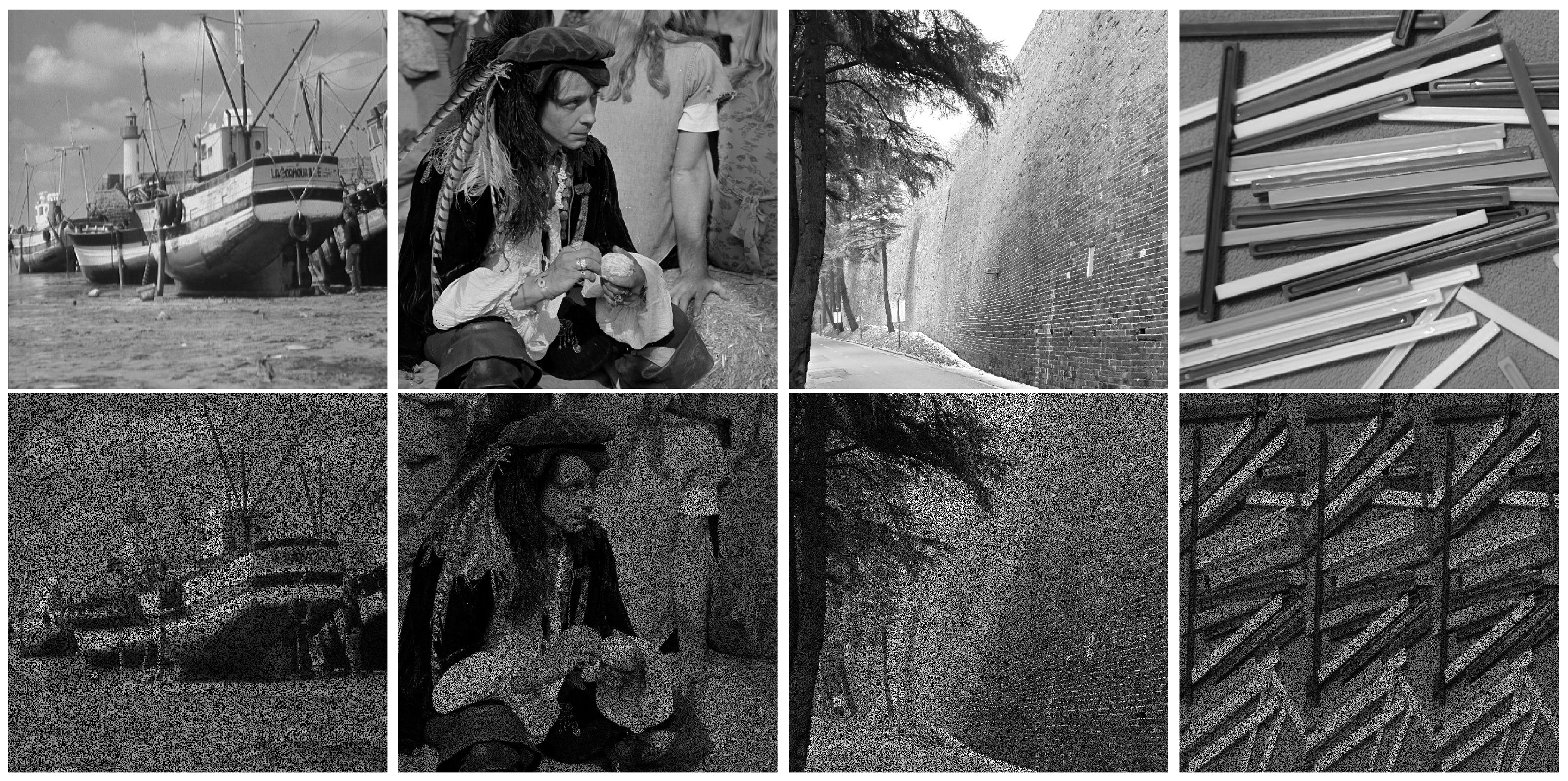

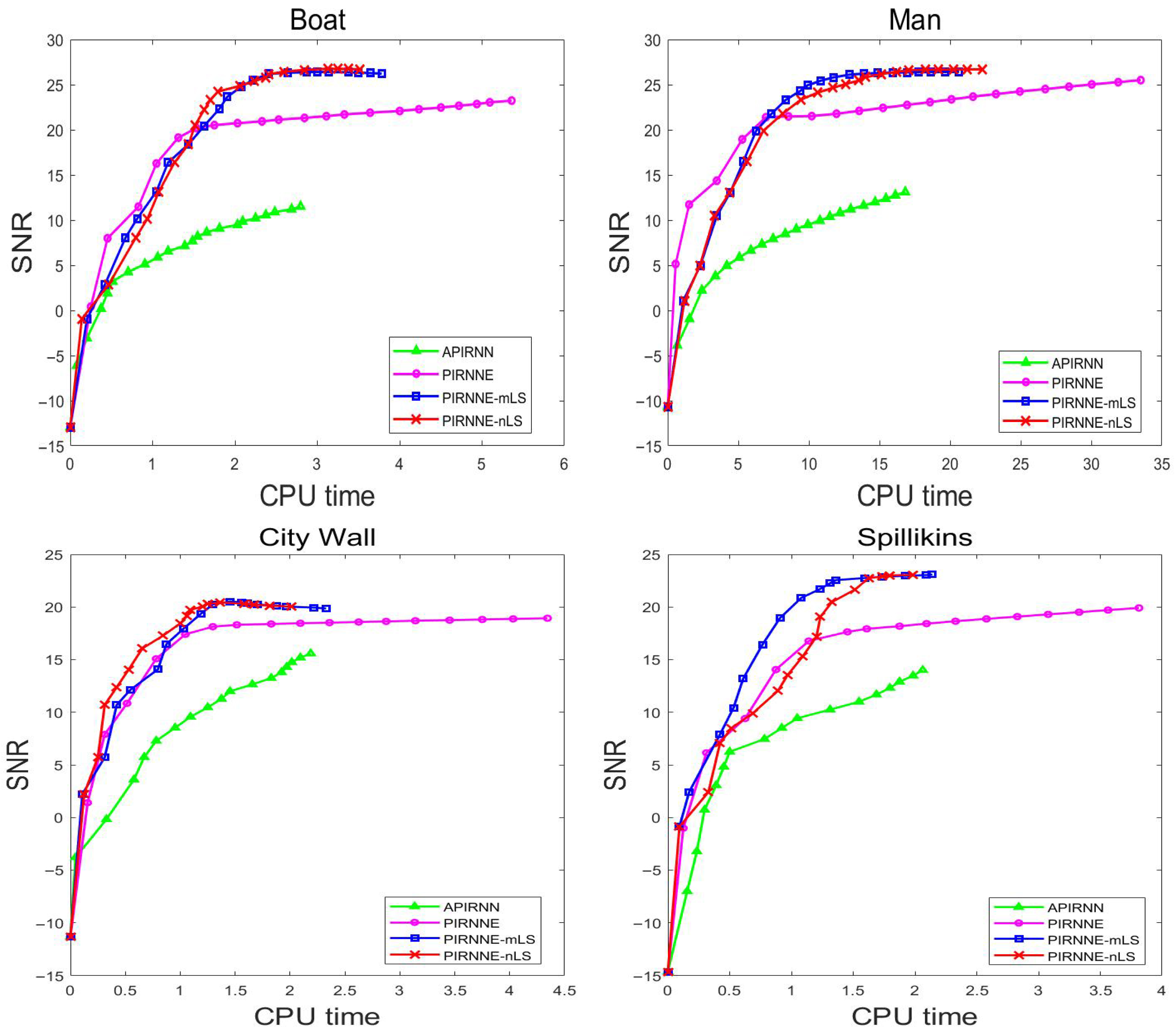

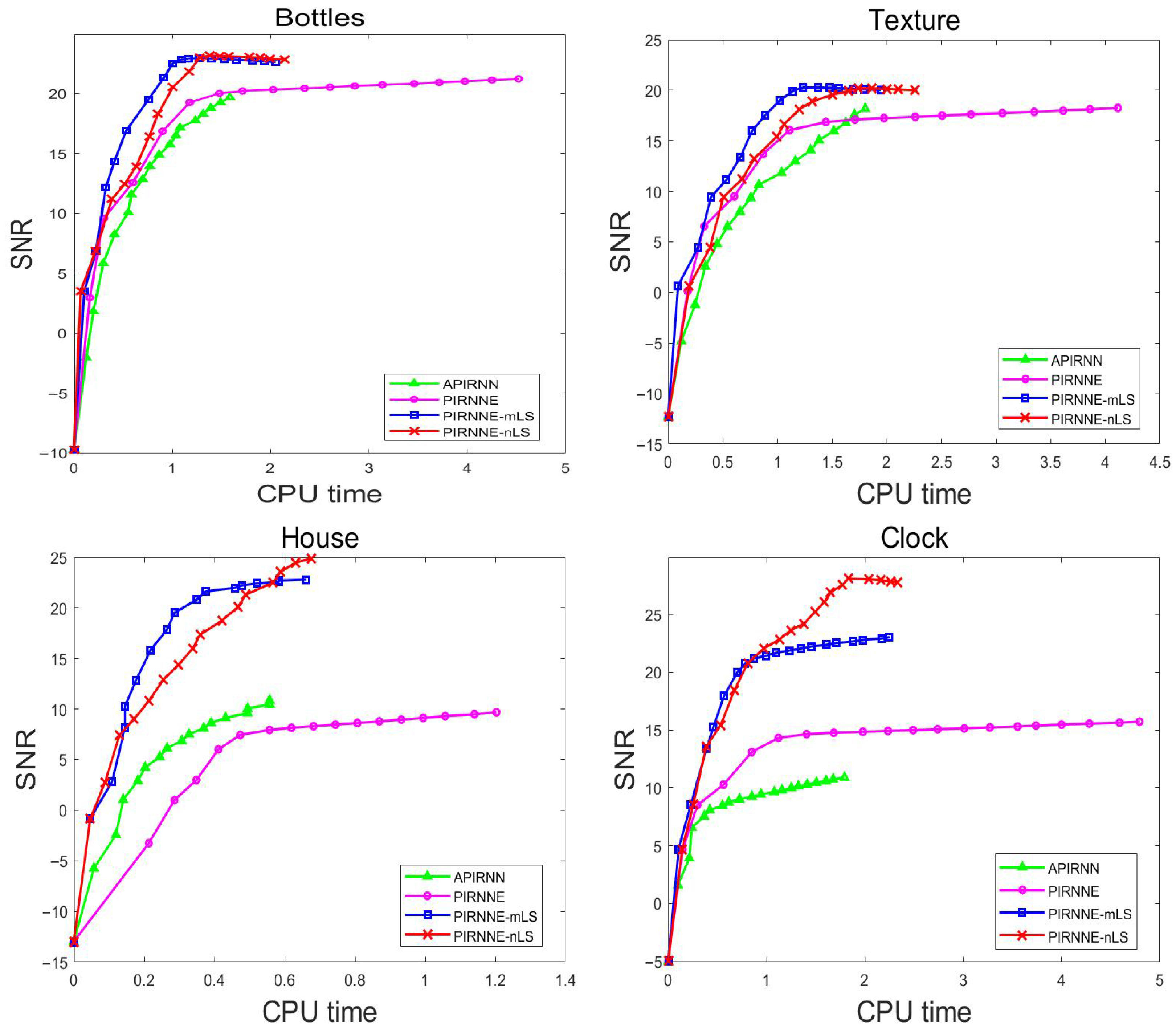

5.2. Real Images

5.3. Movie Recommendation System

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Ge, Z.L.; Zhang, X.; Wu, Z.M. A fast proximal iteratively reweighted nuclear norm algorithm for nonconvex low-rank matrix minimization problems. Appl. Numer. Math. 2022, 179, 66–86.

- Argyriou, A.; Evgeniou, T.; Pontil, M. Convex multi-task feature learning. Mach. Learn. 2008, 73, 243–272.

- Amit, Y.; Fink, M.; Srebro, N.; Ullman, S. Uncovering shared structures in multiclass classification. In Proceedings of the 24th International Conference on Machine Learning, Corvallis, OR, USA, 20–24 June 2007.

- Lu, C.Y.; Tang, J.H.; Yan, S.C.; Lin, Z.C. Generalized nonconvex nonsmooth low-rank minimization. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; IEEE Computer Society: Washington, DC, USA, 2014; pp. 4130–4137.

- Dong, W.S.; Shi, G.M.; Li, X.; Ma, Y.; Huang, F. Compressive sensing via nonlocal low-rank regularization. IEEE Trans. Image Process. 2014, 23, 3618–3632.

- Fazel, M.; Hindi, H.; Boyd, S.P. Log-det heuristic for matrix rank minimization with applications to Hankel and Euclidean distance matrices. In Proceedings of the 2003 American Control Conference, Denver, CO, USA, 4–6 June 2003; IEEE: New York, NY, USA, 2003; pp. 2156–2162.

- Hu, Y.; Zhang, D.B.; Ye, J.P.; Li, X.L.; He, X.F. Fast and accurate matrix completion via truncated nuclear norm regularization. IEEE Trans. Pattern Anal. 2013, 35, 2117–2130.

- Lu, C.Y.; Zhu, C.B.; Xu, C.Y.; Yan, S.C.; Lin, Z.C. Generalized singular value thresholding. In Proceedings of the 29th AAAI Conference on Artificial Intelligence, Austin, TX, USA, 25–30 January 2015; pp. 1215–1221.

- Todeschini, A.; Caron, F.; Chavent, M. Probabilistic low-rank matrix completion with adaptive spectral regularization algorithms. Adv. Neural Inf. Process. Syst. 2013, 26, 845–853.

- Toh, K.C.; Yun, S.W. An accelerated proximal gradient algorithm for nuclear norm regularized linear least squares problems. Pac. J. Optim. 2010, 6, 615–640.

- Zhang, X.; Peng, D.T.; Su, Y.Y. A singular value shrinkage thresholding algorithm for folded concave penalized low-rank matrix optimization problems. J. Glob. Optim. 2024, 88, 485–508.

- Tao, T.; Xiao, L.H.; Zhong, J.Y. A Fast Proximal Alternating Method for Robust Matrix Factorization of Matrix Recovery with Outliers. Mathematics 2025, 13, 1466.

- Cui, A.G.; He, H.Z.; Yuan, H. A Designed Thresholding Operator for Low-Rank Matrix Completion. Mathematics 2024, 12, 1065.

- Gong, P.H.; Zhang, C.S.; Lu, Z.S.; Huang, J.H.Z.; Ye, J.P. A general iterative shrinkage and thresholding algorithm for non-convex regularized optimization problems. In Proceedings of the 30th International Conference on Machine Learning, Atlanta, GA, USA, 16–21 June 2013; pp. 37–45.

- Nakayama, S.; Narushima, Y.; Yabe, H. Inexact proximal DC Newton-type method for nonconvex composite functions. Comput. Optim. Appl. 2024, 87, 611–640.

- Sun, T.; Jiang, H.; Cheng, L.Z. Convergence of proximal iteratively reweighted nuclear norm algorithm for image processing. IEEE Trans. Image Process. 2017, 26, 5632–5644.

- Phan, D.N.; Nguyen, T.N. An accelerated IRNN-Iteratively Reweighted Nuclear Norm algorithm for nonconvex nonsmooth low-rank minimization problems. J. Comput. Appl. Math. 2021, 396, 113602.

- Xu, Z.Q.; Zhang, Y.L.; Ma, C.; Yan, Y.C.; Peng, Z.L.; Xie, S.L.; Wu, S.Q.; Yang, X.K. LERE: Learning-Based Low-Rank Matrix Recovery with Rank Estimation. In Proceedings of the 38th AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; pp. 16228–16236.

- Wen, Y.W.; Li, K.X.; Chen, H.F. Accelerated matrix completion algorithm using continuation strategy and randomized SVD. J. Comput. Appl. Math. 2023, 429, 115215.

- Zhang, H.M.; Qian, F.; Shi, P.; Du, W.L.; Tang, Y.; Qian, J.J.; Gong, C.; Yang, J. Generalized Nonconvex Nonsmooth Low-Rank Matrix Recovery Framework With Feasible Algorithm Designs and Convergence Analysis. IEEE Trans. Neur. Net. Lear. 2023, 34, 5342–5353.

- Li, B.J.; Pan, S.H.; Qian, Y.T. Factorization model with total variation regularizer for image reconstruction and subgradient algorithm. Pattern Recogn. 2026, 170, 112038.

- Guo, H.Y.; Huang, Z.H.; Zhang, X.Z. Low rank matrix recovery with impulsive noise. Appl. Math. Lett. 2022, 134, 108364.

- Wang, H.; Wang, Y.; Yang, X.Y. Efficient Active Manifold Identification via Accelerated Iteratively Reweighted Nuclear Norm Minimization. J. Mach. Learn. Res. 2024, 25, 1–44.

- Beck, A.; Teboulle, M. A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imaging Sci. 2009, 2, 183–202.

- Li, H.; Lin, Z.C. Accelerated proximal gradient methods for nonconvex programming. Adv. Neural Inf. Process. Syst. 2015, 28, 377–387.

- Grippo, L.; Lampariello, F.; Lucidi, S. A nonmonotone line search technique for Newton’s method. SIAM J. Numer. Anal. 1986, 23, 707–716.

- Wright, S.J.; Nowak, R.; Figueiredo, M.A.T. Sparse reconstruction by separable approximation. IEEE Trans. Signal Process. 2008, 57, 2479–2493.

- Wu, Z.M.; Li, C.S.; Li, M.; Andrew, L. Inertial proximal gradient methods with Bregman regularization for a class of nonconvex optimization problems. J. Glob. Optim. 2021, 79, 617–644.

- Liu, J.Y.; Cui, Y.; Pang, J.S.; Sen, S. Two-stage stochastic programming with linearly bi-parameterized quadratic recourse. SIAM J. Optimiz. 2020, 30, 2530–2558.

- Wang, J.Y.; Petra, C.G. A sequential quadratic programming algorithm for nonsmooth problems with upper-C2 Objective. SIAM J. Optimiz. 2023, 33, 2379–2405.

- Yang, L. Proximal gradient method with extrapolation and line search for a class of nonconvex and nonsmooth problems. J. Optimiz. Theory App. 2024, 200, 68–103.

- Attouch, H.; Bolte, J.; Svaiter, B.F. Convergence of descent methods for semi-algebraic and tame problems: Proximal algorithms, forward-backward splitting, and regularized Gauss-Seidel methods. Math. Program. 2013, 137, 91–129.

- Bolte, J.; Sabach, S.; Teboulle, M. Proximal alternating linearized minimization for nonconvex and nonsmooth problems. Math. Program. 2014, 146, 459–494.

- Kurdyka, K. On gradients of functions definable in o-minimal structures. Ann. I. Fourier 1998, 48, 769–783.

- Attouch, H.; Bolte, J.; Redont, P.; Soubeyran, A. Proximal alternating minimization and projection methods for nonconvex problems: An approach based on the Kurdyka-Lojasiewicz inequality. Math. Oper. Res. 2010, 35, 438–457.

- Ge, Z.L.; Wu, Z.M.; Zhang, X. An extrapolated proximal iteratively reweighted method for nonconvex composite optimization problems. J. Glob. Optim. 2023, 86, 821–844.

- Guo, K.; Han, D.R. A note on the Douglas-Rachford splitting method for optimization problems involving hypoconvex functions. J. Glob. Optim. 2018, 72, 431–441.

- Wen, B.; Chen, X.J.; Pong, T.K. Linear convergence of proximal gradient algorithm with extrapolation for a class of nonconvex nonsmooth minimization problems. SIAM J. Optimiz. 2017, 27, 124–145.

- Wu, Z.M.; Li, M. General inertial proximal gradient method for a class of nonconvex nonsmooth optimization problems. Comput. Optim. Appl. 2019, 73, 129–158.

- Nesterov, Y. Introductory Lectures on Convex Optimization: A Basic Course; Springer: New York, NY, USA, 2013.

- Harper, F.M.; Konstan, J.A. The MovieLens Datasets: History and Context. ACM TiiS 2015, 5, 1–19.

- Li, S.; Li, Q.W.; Zhu, Z.H.; Tang, G.G.; Wakin, M.B. The global geometry of centralized and distributed low-rank matrix recovery without regularization. IEEE Signal Proc. Let. 2020, 27, 1400–1404.

- Doostmohammadian, M.; Gabidullina, Z.R.; Rabiee, H.R. Nonlinear perturbation-based non-convex optimization over time-varying networks. IEEE Trans. Netw. Sci. Eng. 2024, 11, 6461–6469.

| Image | APIRNN Iter. | APIRNN Time | APIRNN SNR | PIRNNE Iter. | PIRNNE Time | PIRNNE SNR | PIRNNE-mLS Iter. | PIRNNE-mLS Time | PIRNNE-mLS SNR | PIRNNE-nLS Iter. | PIRNNE-nLS Time | PIRNNE-nLS SNR |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Boat | 121 | 3.94 | 19.49 | 51 | 2.87 | 23.96 | 43 | 1.90 | 26.23 | 43 | 1.90 | 26.69 |
| Man | 117 | 30.02 | 23.29 | 56 | 23.90 | 26.64 | 45 | 15.61 | 26.35 | 44 | 15.41 | 26.79 |
| City Wall | 56 | 1.43 | 17.88 | 40 | 1.61 | 19.08 | 38 | 1.05 | 19.74 | 35 | 0.96 | 20.05 |
| Spillikins | 68 | 1.57 | 20.05 | 59 | 1.96 | 22.39 | 34 | 1.03 | 23.08 | 33 | 1.01 | 23.09 |
| Bottles | 66 | 4.76 | 21.92 | 56 | 6.34 | 22.26 | 46 | 3.10 | 22.50 | 38 | 2.98 | 22.74 |
| Texture | 57 | 4.72 | 19.81 | 52 | 6.09 | 19.55 | 42 | 3.13 | 20.94 | 35 | 2.80 | 21.95 |
| House | 109 | 1.17 | 21.96 | 114 | 2.74 | 21.37 | 39 | 0.58 | 23.94 | 38 | 0.55 | 24.76 |
| Clock | 229 | 12.10 | 20.43 | 155 | 17.36 | 24.92 | 55 | 5.01 | 24.28 | 47 | 3.30 | 27.99 |

| Dataset | Users | Movies | Ratings |
|---|---|---|---|
| MovieLens 100K | 943 | 1682 | 100,000 |
| MovieLens 1M | 6040 | 3449 | 999,714 |
| MovieLens 10M | 69,878 | 10,677 | 10,000,054 |

| Dataset | Method | Time | RMSE | Objective Value |
|---|---|---|---|---|
| MovieLens 100K | APIRNN | 2.06 | 1.0410 | |
| MovieLens 100K | PIRNNE | 1.11 | 1.0216 | |
| MovieLens 100K | PIRNNE-mLS | 2.00 | 1.0468 | |
| MovieLens 100K | PIRNNE-nLS | 1.98 | 1.0450 | |
| MovieLens 1M | APIRNN | 6.24 | 0.8855 | |
| MovieLens 1M | PIRNNE | 7.88 | 1.0343 | |
| MovieLens 1M | PIRNNE-mLS | 5.23 | 0.8844 | |
| MovieLens 1M | PIRNNE-nLS | 5.22 | 0.8844 | |
| MovieLens 10M | APIRNN | 28.23 | 0.9483 | |
| MovieLens 10M | PIRNNE | 238.86 | 1.0063 | |
| MovieLens 10M | PIRNNE-mLS | 14.49 | 0.9483 | |
| MovieLens 10M | PIRNNE-nLS | 13.78 | 0.9483 | |
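The tables above report SNR for the image experiments and RMSE for the MovieLens experiments, but the exact metric definitions are not reproduced here. The sketch below uses common definitions, which are assumptions rather than the paper's stated formulas: SNR in decibels as $20 \log_{10}(\|M\|_F / \|X - M\|_F)$ over the full image, and RMSE over a held-out set of known ratings given as index arrays. The function names are hypothetical.

```python
import numpy as np

def snr_db(X, M):
    # Assumed SNR definition: 20 * log10(||M||_F / ||X - M||_F), in decibels.
    return 20.0 * np.log10(np.linalg.norm(M) / np.linalg.norm(X - M))

def rmse(X, rows, cols, ratings):
    # Root-mean-square error over held-out entries (rows[i], cols[i]) with true values ratings[i].
    pred = X[rows, cols]
    return float(np.sqrt(np.mean((pred - ratings) ** 2)))
```

For instance, with a completed matrix `X = [[1, 2], [3, 4]]` and held-out ratings 2 at entry (0, 1) and 5 at entry (1, 0), the errors are 0 and -2, so the RMSE is $\sqrt{2} \approx 1.414$.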

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ge, Z.; Zhang, S.; Zhang, X.; Cui, Y. A New Proximal Iteratively Reweighted Nuclear Norm Method for Nonconvex Nonsmooth Optimization Problems. Mathematics 2025, 13, 2630. https://doi.org/10.3390/math13162630
