AI-Enabled Condition Monitoring Framework for Autonomous Pavement-Sweeping Robots

Abstract

1. Introduction

1.1. Problem Statement

1.2. Related Works

1.3. Contributions

- A novel condition monitoring (CM) framework is proposed for large, heavy-duty outdoor pavement-sweeping robots, motivated by the growing demand for urban hygiene. The framework uses rotation, vibration, and current consumption data.

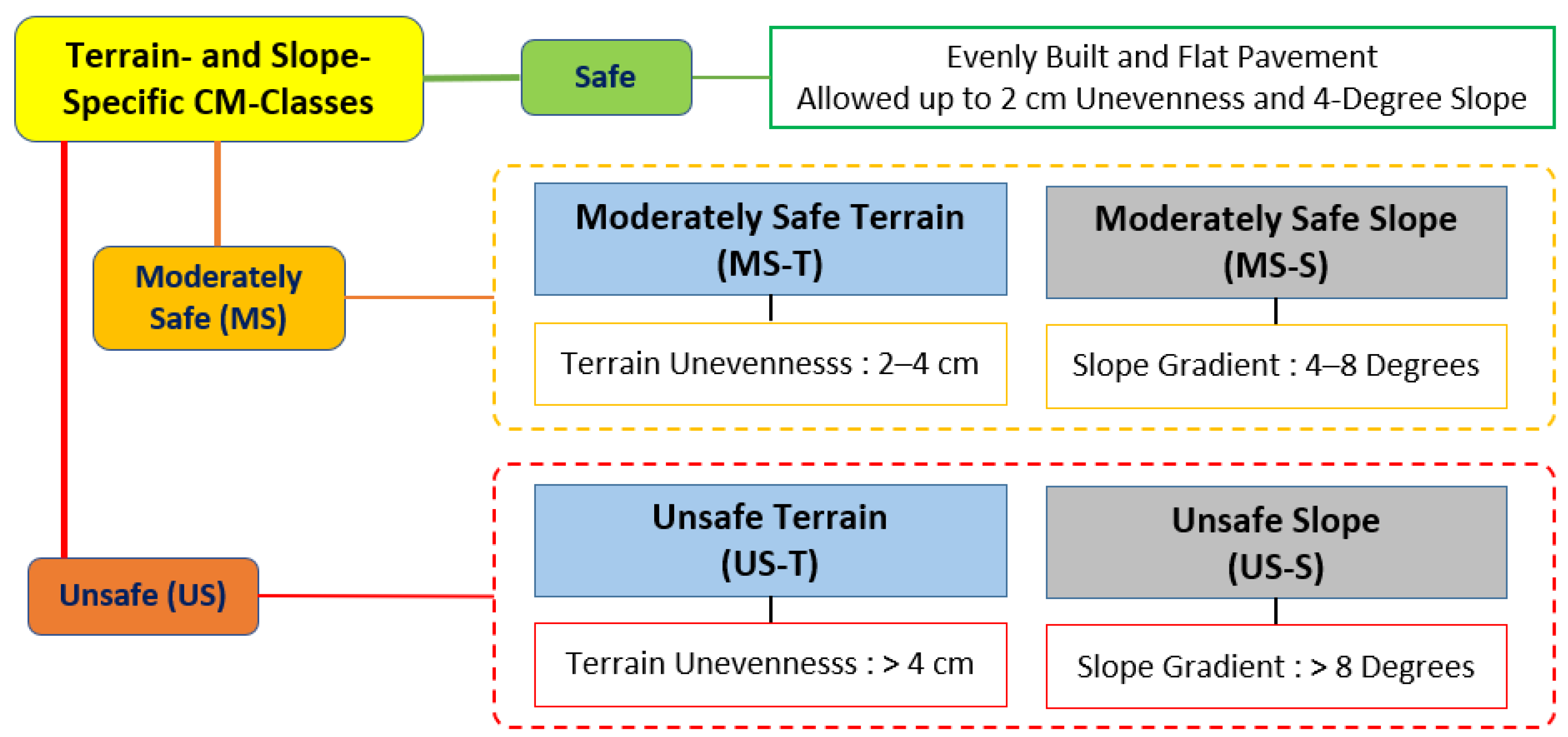

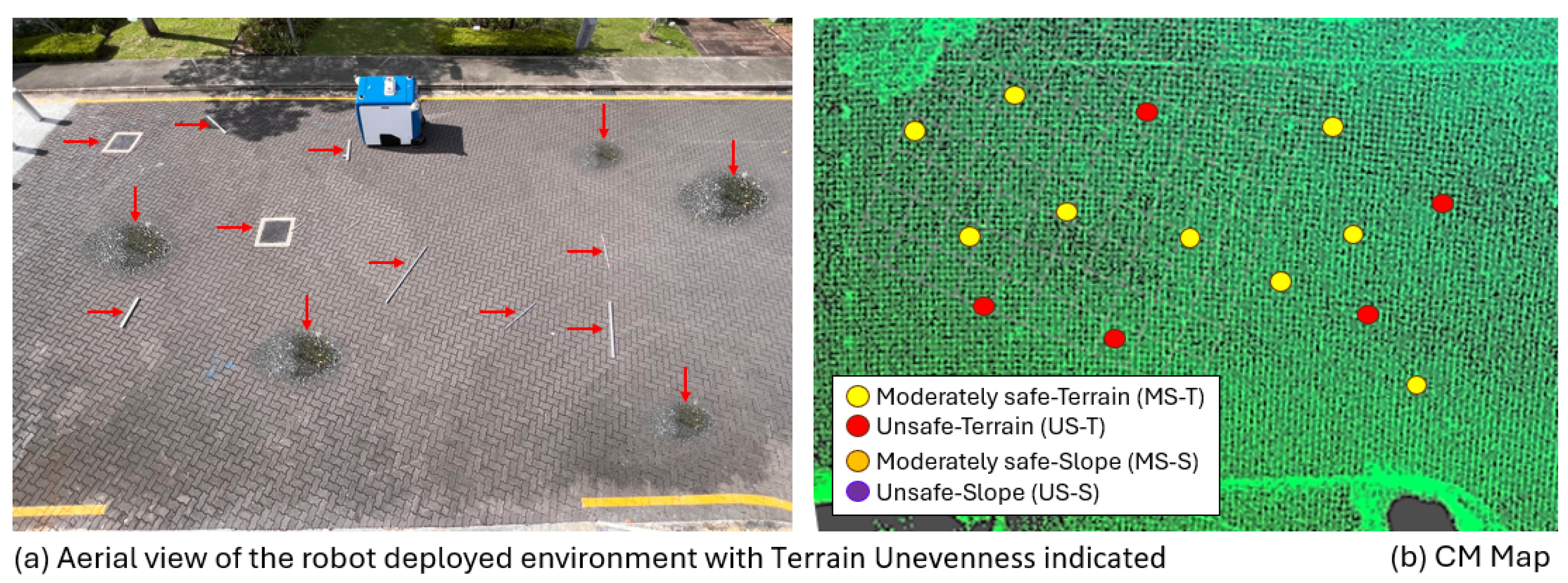

- To safeguard the robot’s health and operational safety under typical outdoor pavement challenges, such as uneven surfaces, unstructured ground, and varying slopes, the study defines four distinct anomaly classes: moderately safe terrain (MS-T), unsafe terrain (US-T), moderately safe slope (MS-S), and unsafe slope (US-S).

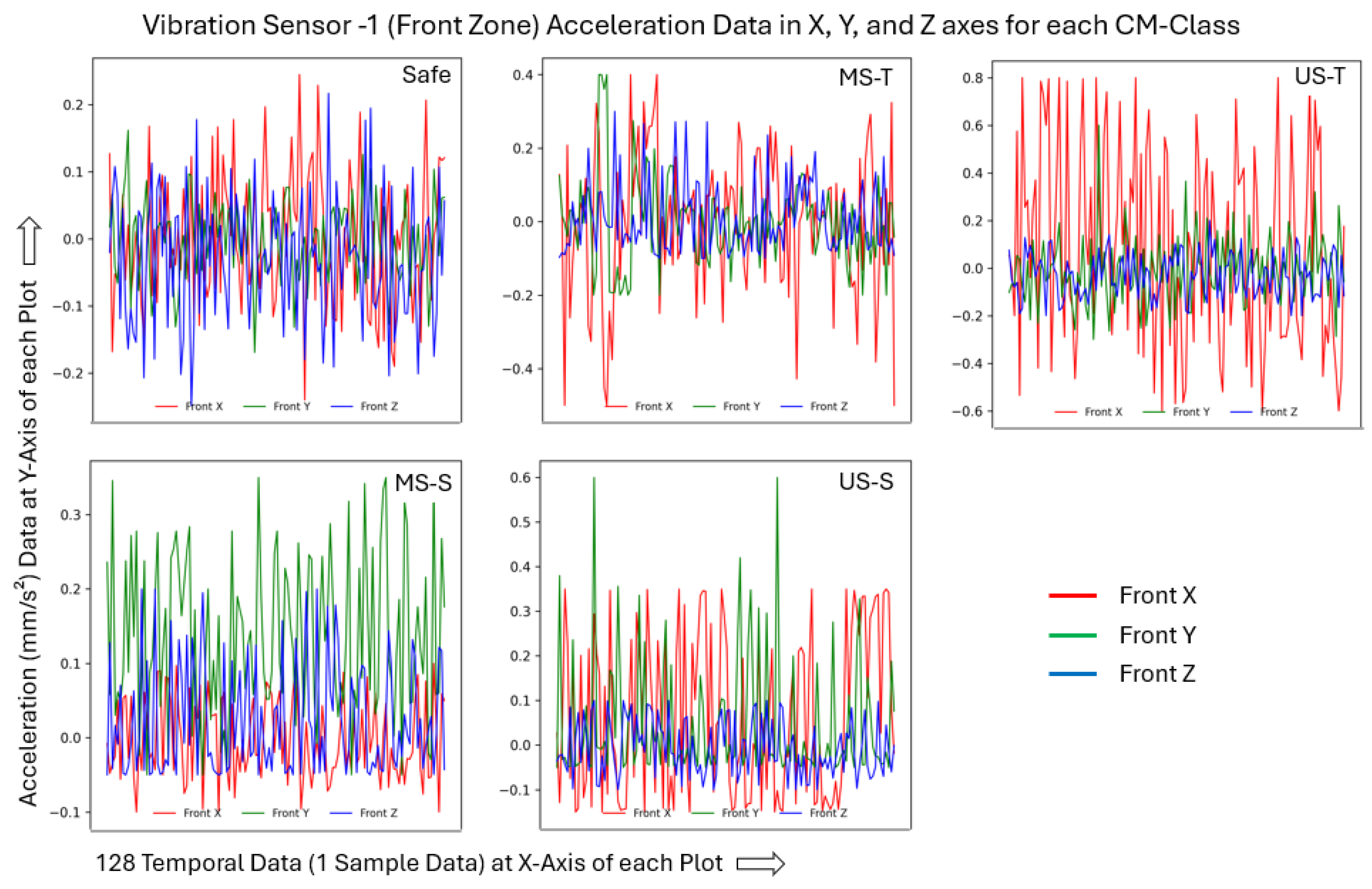

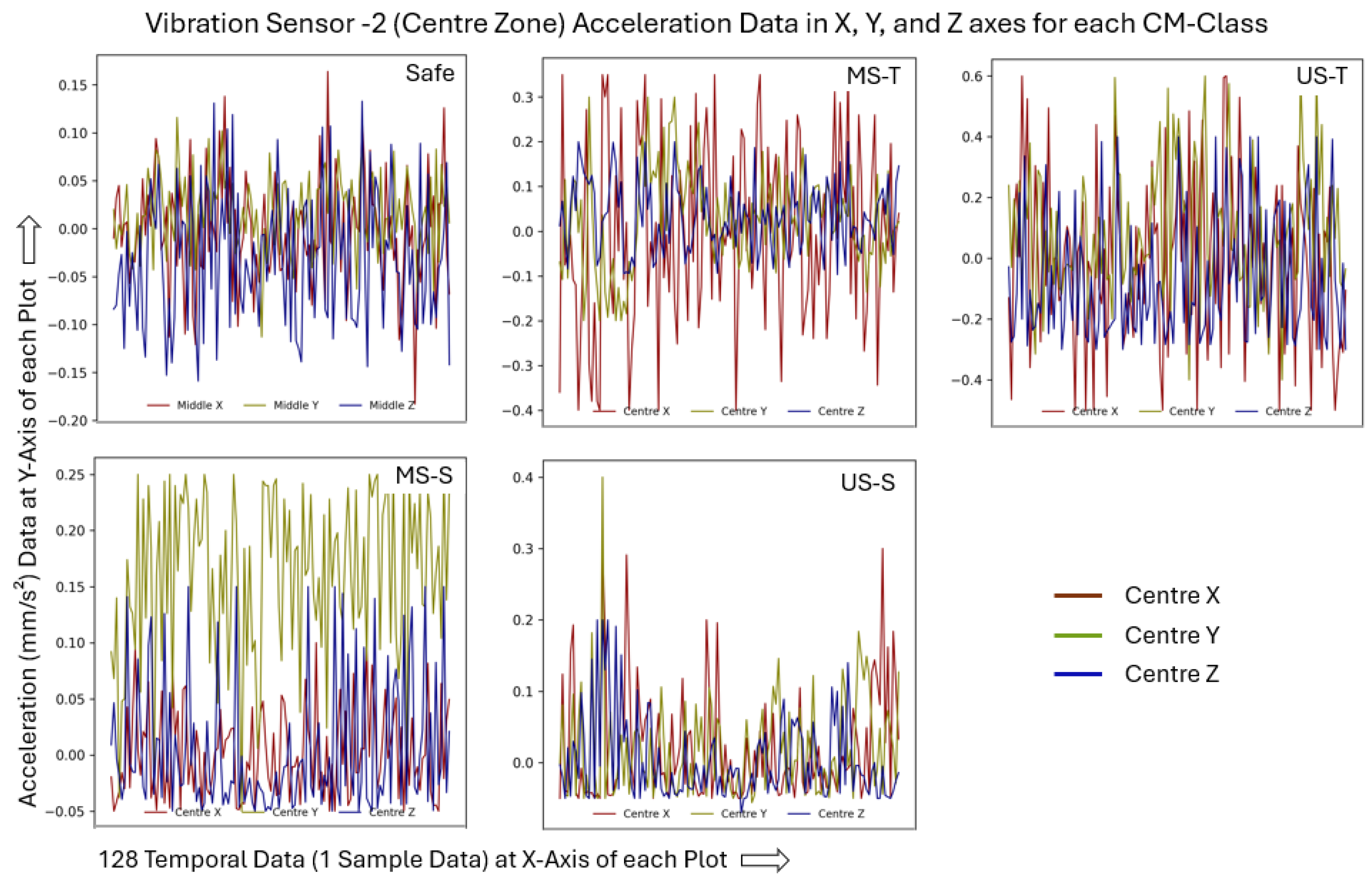

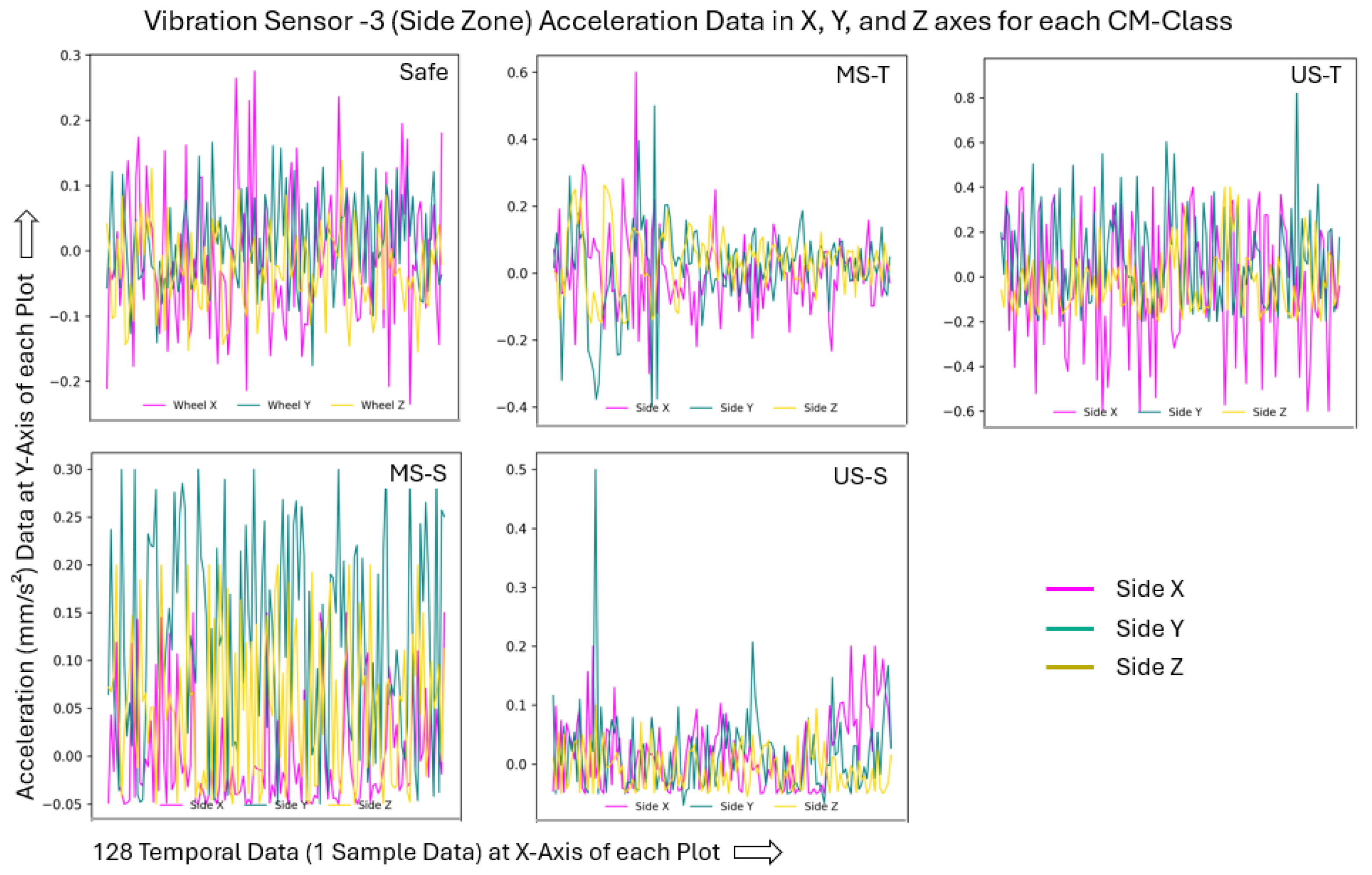

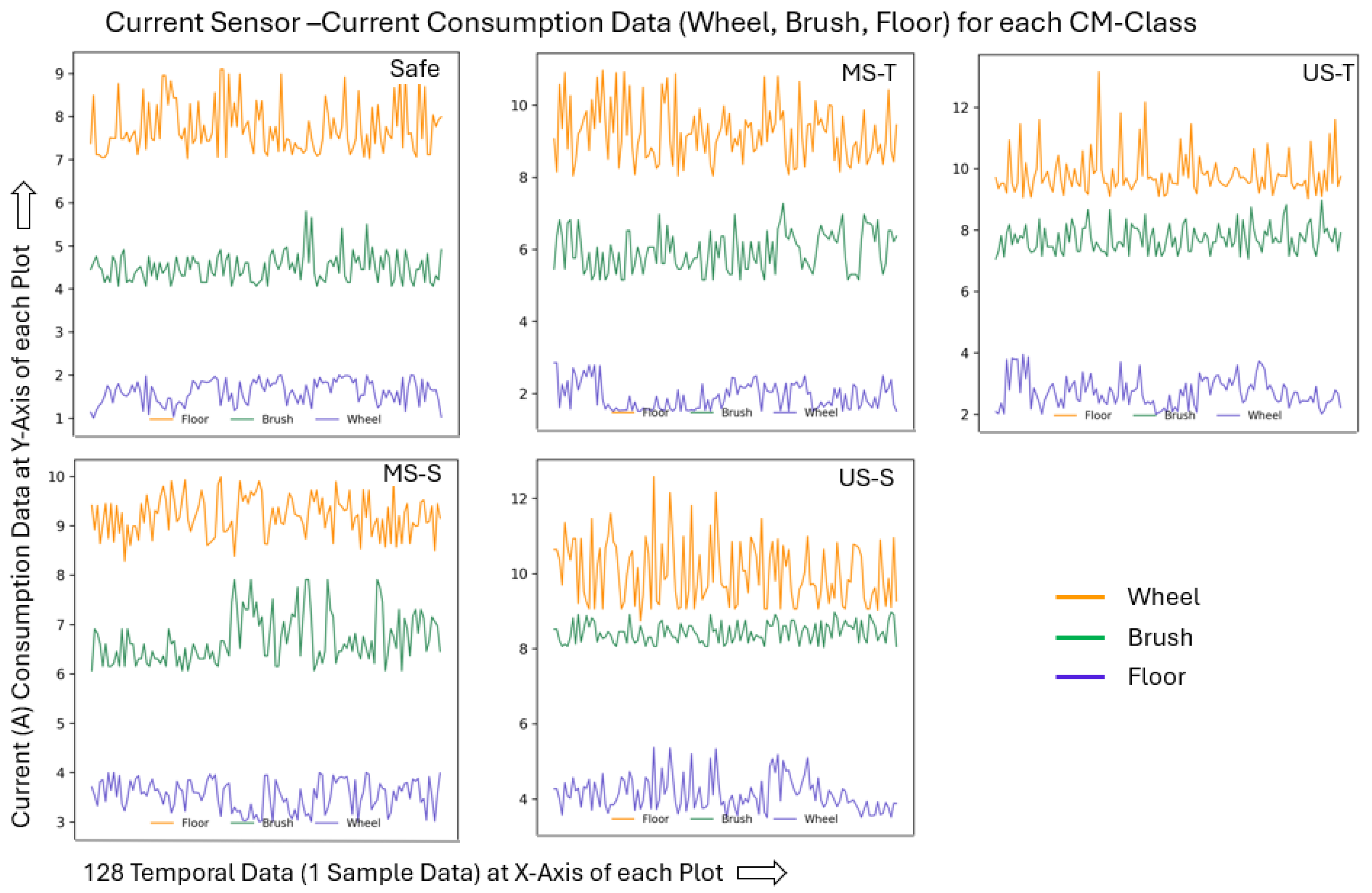

- A comprehensive heterogeneous dataset is developed by integrating data from multiple sensors: an IMU capturing triaxial angular velocity (roll, pitch, and yaw rates); three vibration sensors positioned in high-impact zones, each measuring triaxial vibration acceleration; and current sensors recording the current drawn by the side brush, floor tool, and drive wheel motors. Combining these signals allows both the safe class and the anomalous classes to be modeled accurately within the CM framework.

- A 1D CNN model is designed for efficient training and real-time classification, ensuring fast and reliable detection of anomalous states while maintaining low computational overhead.

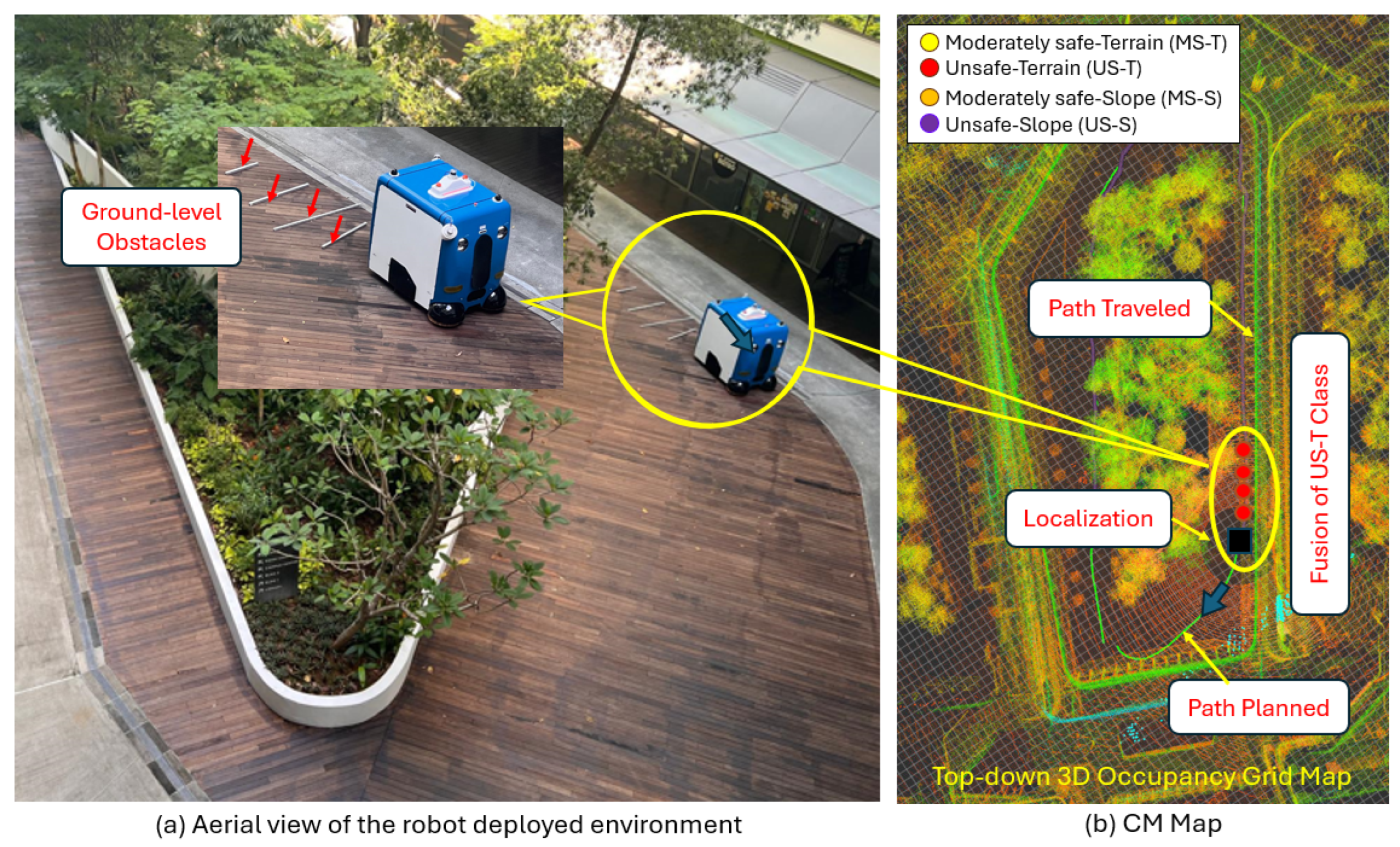

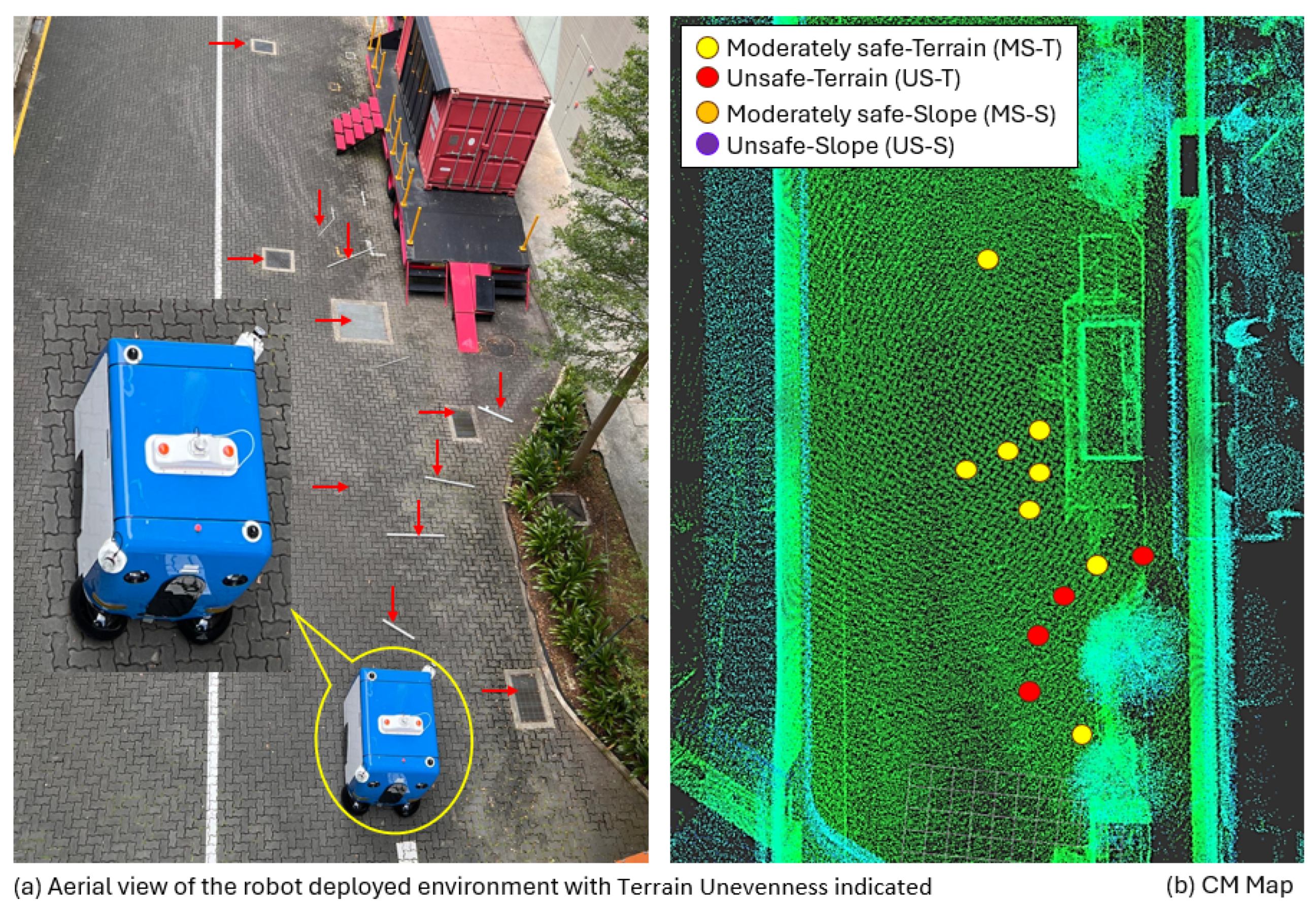

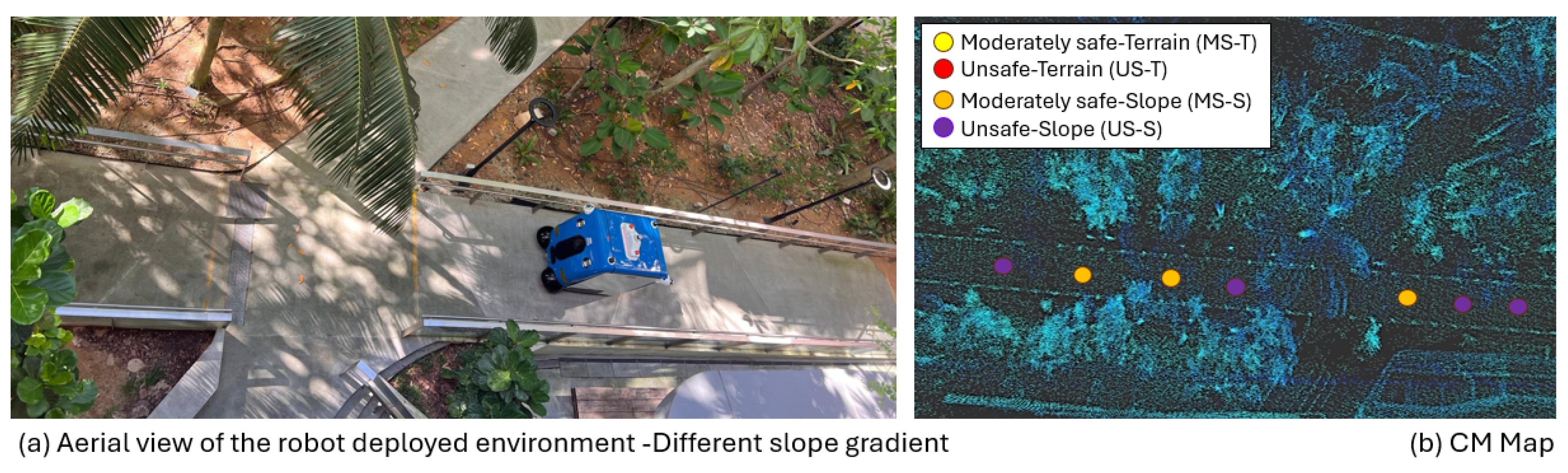

- A CM-map framework is developed for real-time robot monitoring by overlaying predicted anomalous classes onto a 3D occupancy map of the workspace, enabling the maintenance team to take prompt action.

- Based on case-study findings with the in-house-developed PANTHERA 2.0 robot, this study introduces a first-of-its-kind AI-driven, real-time CM framework that integrates multi-sensor heterogeneous data and incorporates slope as a new class of monitoring feature. The framework aims to enhance robot health and operational safety and to support condition-based maintenance (CBM) in large-scale autonomous pavement-sweeping robots.

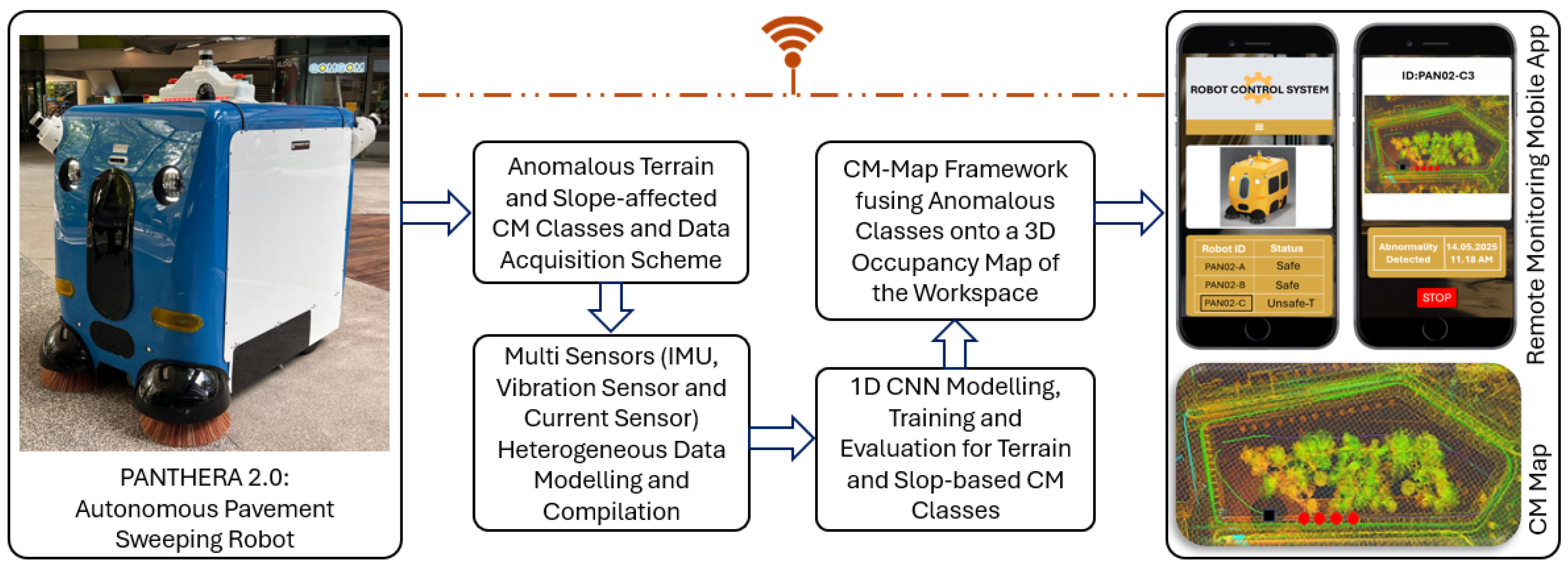

2. Overview of the Proposed CM Framework

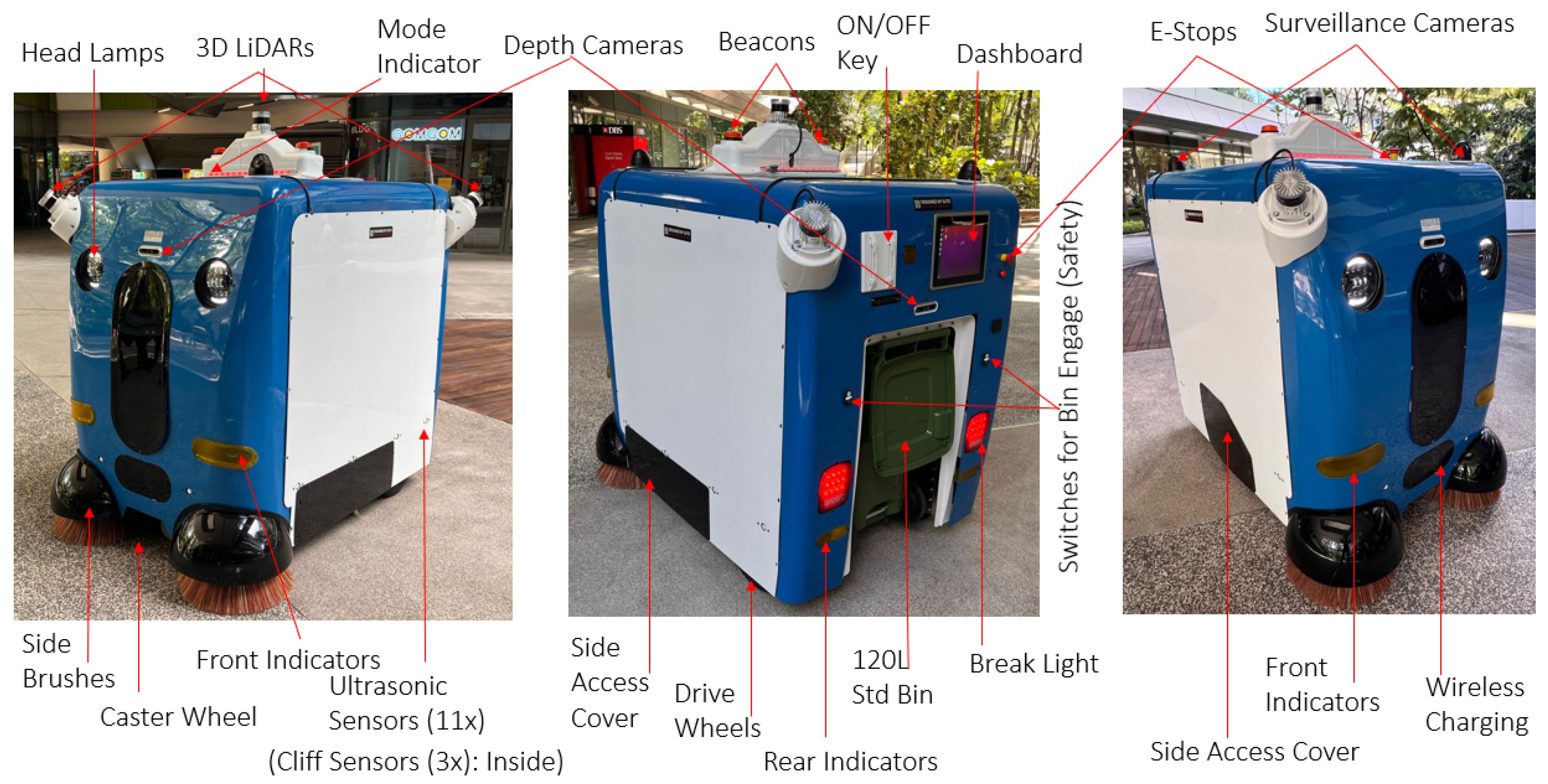

2.1. PANTHERA 2.0: A Heavy-Duty Outdoor Autonomous Robot for Pavement Sweeping

2.2. Terrain and Slope CM Classes for Health and Operational Safety of Pavement-Sweeping Robots

2.3. Dataset Modeling for Terrain and Slope-Specific CM Classes
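
As a rough illustration of the heterogeneous dataset described in the contributions (Section 1.3), the sketch below concatenates the three sensor groups into one feature vector per time step and cuts the stream into fixed-length windows. The count of 15 channels follows from the sensor list (3 IMU axes, 3 vibration sensors with 3 axes each, and 3 motor currents), assuming one current channel per motor group. The window length, stride, synthetic readings, and all function names are hypothetical choices, not values from the study.

```python
from typing import List, Sequence

# Channel layout assumed from the sensor list in the contributions.
IMU_AXES = 3          # roll, pitch, yaw angular velocity
VIB_SENSORS = 3       # three vibration sensors ...
VIB_AXES = 3          # ... each measuring x, y, z acceleration
CURRENT_CHANNELS = 3  # side brush, floor tool, drive wheel (one channel each: assumption)
N_FEATURES = IMU_AXES + VIB_SENSORS * VIB_AXES + CURRENT_CHANNELS  # 15


def fuse_sample(imu: Sequence[float],
                vib: Sequence[Sequence[float]],
                current: Sequence[float]) -> List[float]:
    """Concatenate one time step from all sensors into a flat feature vector."""
    row = list(imu)
    for sensor in vib:
        row.extend(sensor)
    row.extend(current)
    if len(row) != N_FEATURES:
        raise ValueError(f"expected {N_FEATURES} features, got {len(row)}")
    return row


def make_windows(rows: List[List[float]], window: int, stride: int) -> List[List[List[float]]]:
    """Slice a stream of fused rows into windows of shape (window, N_FEATURES)."""
    return [rows[i:i + window] for i in range(0, len(rows) - window + 1, stride)]


# Hypothetical usage with synthetic readings (not real robot data).
stream = [
    fuse_sample([0.0, 0.1, 0.2], [[float(t)] * VIB_AXES] * VIB_SENSORS, [1.0, 2.0, 3.0])
    for t in range(10)
]
windows = make_windows(stream, window=4, stride=2)
print(len(windows), len(windows[0]), len(windows[0][0]))  # 4 4 15
```

Each window would then be paired with one class label, so that a classifier sees all sensor channels over the same time span.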

2.4. 1D Convolutional Neural Network Modeling for CM Classes
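
To make the 1D CNN idea concrete, the following dependency-free sketch walks one multichannel window through the typical stages of such a model: a convolution along the time axis, a ReLU activation, global max pooling, and a dense softmax layer over the five classes that appear in the results tables. The layer sizes and the random weights are placeholders chosen for illustration only. This is not the architecture or the trained model from the study.

```python
import math
import random
from typing import List

CLASSES = ["Safe", "MS-T", "MS-S", "US-T", "US-S"]  # labels as used in the results tables
Matrix = List[List[float]]


def conv1d_relu(x: Matrix, kernels: List[Matrix], bias: List[float]) -> Matrix:
    """Valid 1D convolution (cross-correlation, as in deep learning libraries) plus ReLU.

    x has shape (T, C); each kernel has shape (K, C). Returns shape (T - K + 1, F).
    """
    k = len(kernels[0])
    out = []
    for t in range(len(x) - k + 1):
        row = []
        for f, kern in enumerate(kernels):
            s = bias[f]
            for dt in range(k):
                for c, w in enumerate(kern[dt]):
                    s += w * x[t + dt][c]
            row.append(max(0.0, s))  # ReLU
        out.append(row)
    return out


def global_max_pool(x: Matrix) -> List[float]:
    """Keep the strongest response of each filter across time."""
    return [max(col) for col in zip(*x)]


def dense_softmax(v: List[float], weights: Matrix, bias: List[float]) -> List[float]:
    """Fully connected layer followed by a numerically stable softmax."""
    logits = [sum(w * a for w, a in zip(ws, v)) + b for ws, b in zip(weights, bias)]
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Placeholder sizes: 8 time steps, 15 channels, 4 filters, kernel width 3 (all assumptions).
rng = random.Random(0)
T, C, F, K = 8, 15, 4, 3
window = [[rng.uniform(-1, 1) for _ in range(C)] for _ in range(T)]
kernels = [[[rng.uniform(-0.5, 0.5) for _ in range(C)] for _ in range(K)] for _ in range(F)]
dense_w = [[rng.uniform(-0.5, 0.5) for _ in range(F)] for _ in CLASSES]

features = conv1d_relu(window, kernels, [0.0] * F)
probs = dense_softmax(global_max_pool(features), dense_w, [0.0] * len(CLASSES))
predicted = CLASSES[probs.index(max(probs))]
print(predicted, [round(p, 3) for p in probs])
```

Because the kernels slide only along the time axis, the cost of a forward pass grows linearly with the window length, which is one reason this family of models is commonly chosen for real-time use.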

2.5. CM Map for Real-Time Monitoring
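
The CM-map idea from the contributions, overlaying predicted anomalous classes onto a 3D occupancy map, can be sketched as a dictionary from voxel indices to class labels. The voxel size, the rule of letting the latest prediction overwrite an earlier one, and the `CMMap` class itself are hypothetical; the study's actual mapping pipeline is not reproduced here.

```python
import math
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

VoxelKey = Tuple[int, int, int]
ANOMALOUS = {"MS-T", "MS-S", "US-T", "US-S"}  # "Safe" predictions are not drawn


@dataclass
class CMMap:
    """Toy overlay: one class label per voxel of the workspace map."""
    resolution: float = 0.5  # voxel edge length in metres (assumed value)
    labels: Dict[VoxelKey, str] = field(default_factory=dict)

    def voxel_of(self, x: float, y: float, z: float) -> VoxelKey:
        """Convert a position in map coordinates to an integer voxel index."""
        r = self.resolution
        return (math.floor(x / r), math.floor(y / r), math.floor(z / r))

    def mark(self, position: Tuple[float, float, float], predicted: str) -> Optional[VoxelKey]:
        """Record an anomalous prediction at the robot's position; ignore safe ones."""
        if predicted not in ANOMALOUS:
            return None
        key = self.voxel_of(*position)
        self.labels[key] = predicted  # latest prediction wins (assumption)
        return key


# Hypothetical poses and predictions.
cm_map = CMMap(resolution=0.5)
cm_map.mark((1.2, 0.4, 0.0), "Safe")   # ignored
cm_map.mark((1.2, 0.4, 0.0), "US-T")   # stored in voxel (2, 0, 0)
cm_map.mark((3.9, 2.1, 0.3), "MS-S")   # stored in voxel (7, 4, 0)
print(cm_map.labels)
```

A visualization layer could then color each stored voxel by class so that the maintenance team sees where on the route the robot met unsafe terrain or slopes.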

3. Experiments and Results

3.1. Training Dataset Preparation and Visualization

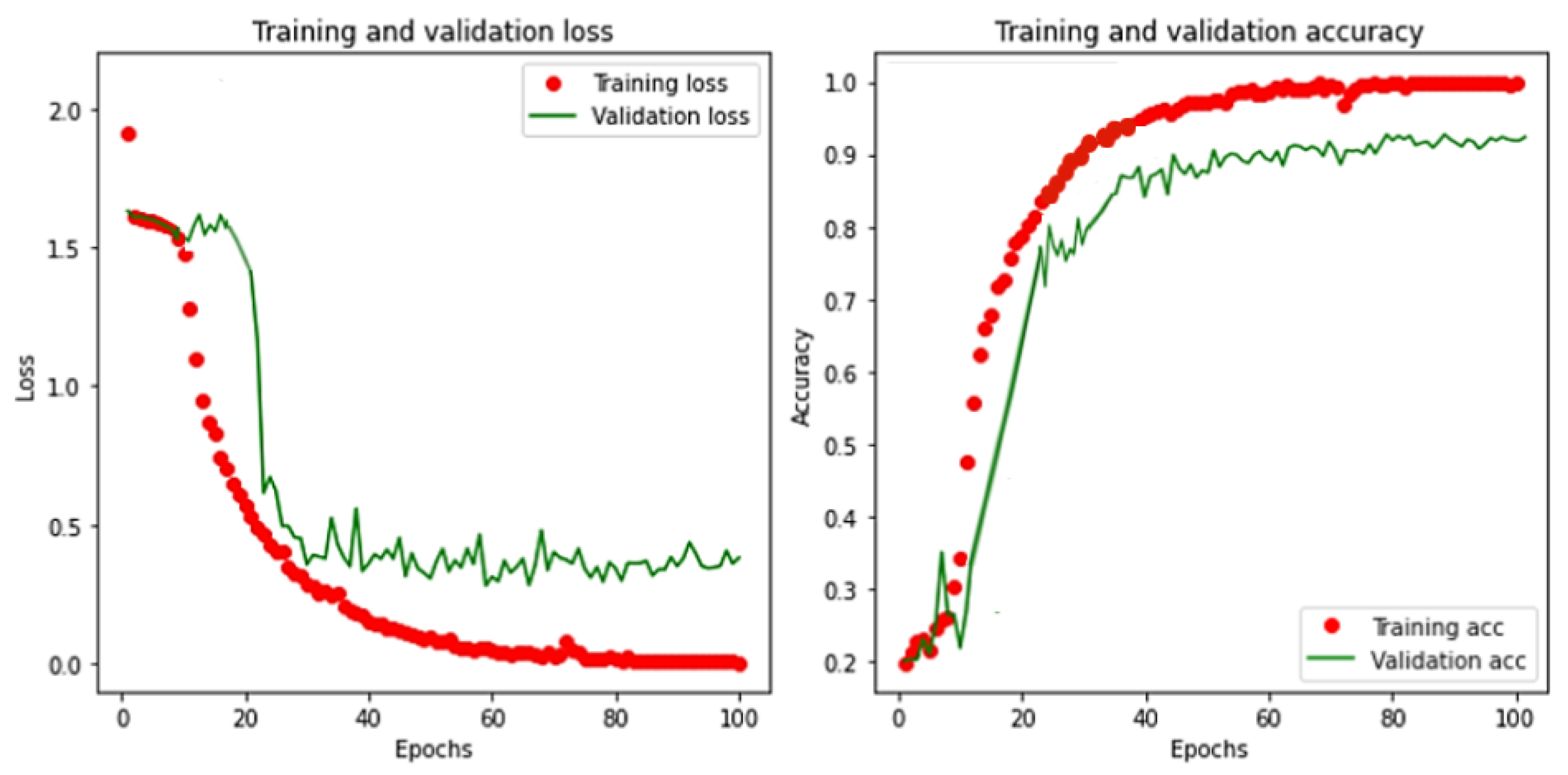

3.2. One-Dimensional CNN Model Training, Evaluation, and Comparison

4. Real-Time Field Case Studies and Discussion

Algorithm 1 Inference engine

Algorithm 2 CM class mapping

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

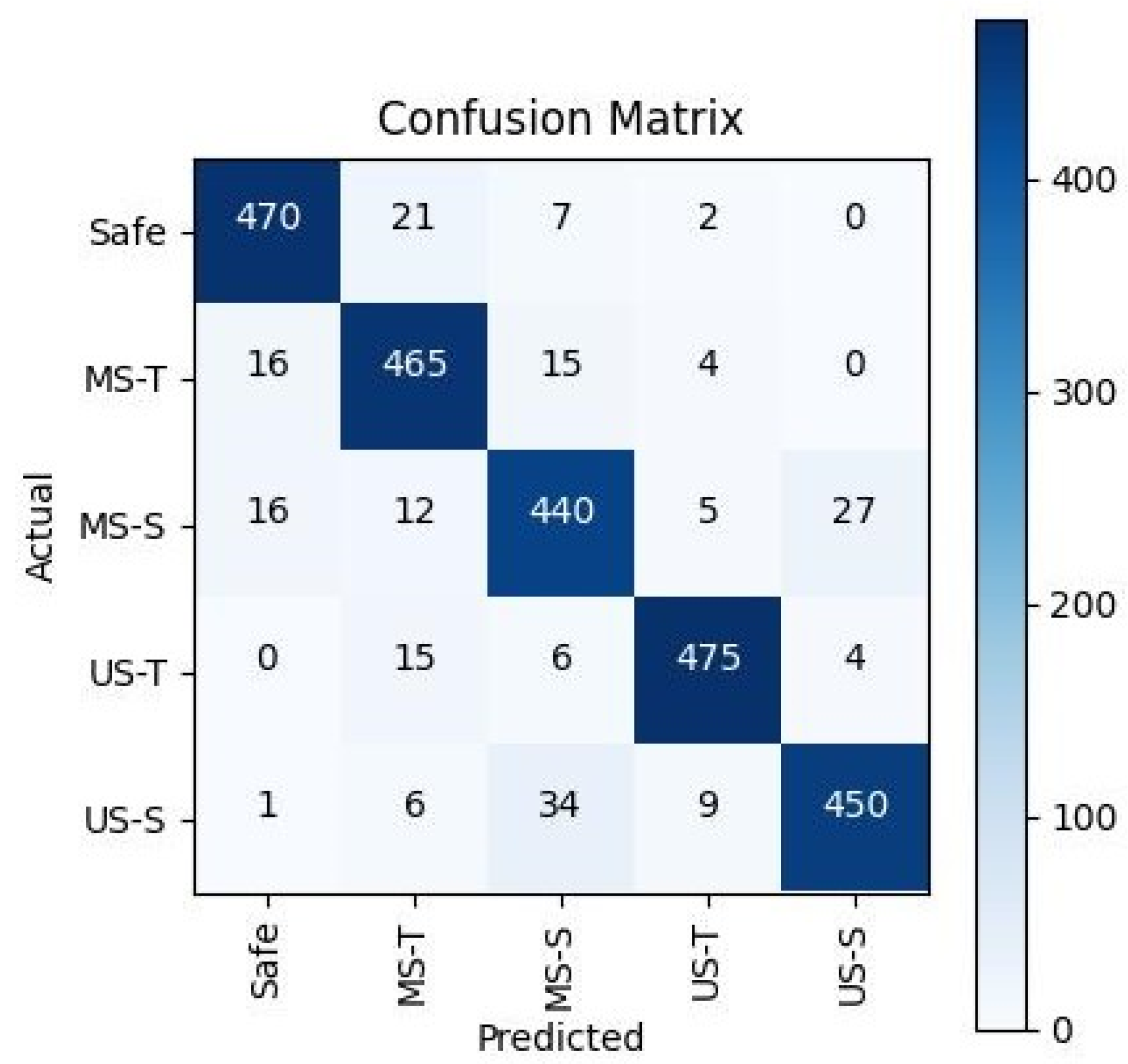

| Vibration Class | Precision | Recall | F1 Score | Accuracy |
|---|---|---|---|---|
| Safe | 0.94 | 0.92 | 0.95 | 0.94 |
| MS-T | 0.92 | 0.91 | 0.94 | 0.93 |
| MS-S | 0.86 | 0.90 | 0.87 | 0.88 |
| US-T | 0.93 | 0.96 | 0.94 | 0.95 |
| US-S | 0.90 | 0.93 | 0.88 | 0.90 |
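
For readers who want to reproduce this kind of per-class table, the sketch below shows the conventional one-vs-rest computation of precision, recall, F1 score, and accuracy from a confusion matrix. The confusion matrix is invented for illustration and covers only three classes. It is not data from the study, and the study's own evaluation script may differ.

```python
from typing import Dict, List


def per_class_metrics(cm: List[List[int]], labels: List[str]) -> Dict[str, Dict[str, float]]:
    """cm[i][j] is the number of samples of true class i predicted as class j."""
    total = sum(sum(row) for row in cm)
    out: Dict[str, Dict[str, float]] = {}
    for i, name in enumerate(labels):
        tp = cm[i][i]
        fn = sum(cm[i]) - tp                   # true class i, predicted as something else
        fp = sum(row[i] for row in cm) - tp    # predicted as class i, actually another class
        tn = total - tp - fn - fp
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        out[name] = {
            "precision": precision,
            "recall": recall,
            "f1": f1,
            "accuracy": (tp + tn) / total,  # one-vs-rest accuracy for this class
        }
    return out


# Invented confusion matrix (rows: true class, columns: predicted class).
toy_labels = ["Safe", "MS-T", "US-T"]
toy_cm = [
    [8, 1, 1],
    [2, 7, 1],
    [0, 1, 9],
]
metrics = per_class_metrics(toy_cm, toy_labels)
for name in toy_labels:
    print(name, {k: round(v, 3) for k, v in metrics[name].items()})
```

Since F1 is the harmonic mean of precision and recall, it always lies between the two, which gives a quick consistency check when reading any per-class table.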

| Model | Accuracy (%) | Inference Time (ms) |
|---|---|---|
| 1D CNN | 92.0 | 2.068 |
| CNN-LSTM | 90.3 | 5.206 |
| LSTM | 86.5 | 4.617 |
| MLP | 82.3 | 3.845 |
| SVM | 80.6 | 18.421 |
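
The comparison can be read more easily as ratios against the 1D CNN. The short script below takes the accuracy and inference-time values from the table and derives, for each model, how many times longer inference takes and how many percentage points of accuracy are lost. The throughput figure at the end is only an upper bound, under the assumption that inference is the sole cost per window; the variable names are illustrative.

```python
# (accuracy in %, inference time in ms), copied from the comparison table.
results = {
    "1D CNN": (92.0, 2.068),
    "CNN-LSTM": (90.3, 5.206),
    "LSTM": (86.5, 4.617),
    "MLP": (82.3, 3.845),
    "SVM": (80.6, 18.421),
}

base_acc, base_ms = results["1D CNN"]

# Ratio of each model's inference time to that of the 1D CNN.
slowdown = {name: ms / base_ms for name, (_, ms) in results.items()}
# Accuracy lost relative to the 1D CNN, in percentage points.
acc_gap = {name: base_acc - acc for name, (acc, _) in results.items()}

for name in results:
    print(f"{name:9s} {slowdown[name]:5.2f}x inference time, "
          f"{acc_gap[name]:4.1f} points below 1D CNN accuracy")

# Upper bound on classifications per second if inference were the only cost.
max_rate_hz = 1000.0 / base_ms
print(f"1D CNN upper bound: about {max_rate_hz:.0f} inferences per second")
```

On these numbers the 1D CNN is both the most accurate and the fastest model, with the SVM taking roughly nine times as long per inference.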

| CM Class | Safe | MS-T | MS-S | US-T | US-S |
|---|---|---|---|---|---|
| Prediction Accuracy (%) | 93 | 91 | 88 | 93 | 89 |
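
A small worked comparison helps relate this table to the per-class results above. The script assumes that the per-class "Accuracy" column of the first table reports offline evaluation and that this table reports the real-time field trials; the table captions are missing from this excerpt, so that reading is an assumption. Both means are unweighted, because per-class sample counts are not given here.

```python
# Per-class accuracy in percent, copied from the two tables.
offline = {"Safe": 94, "MS-T": 93, "MS-S": 88, "US-T": 95, "US-S": 90}
field_trial = {"Safe": 93, "MS-T": 91, "MS-S": 88, "US-T": 93, "US-S": 89}

# Unweighted (macro) means across the five classes.
offline_mean = sum(offline.values()) / len(offline)
field_mean = sum(field_trial.values()) / len(field_trial)

# Per-class drop from offline evaluation to field trial, in percentage points.
gaps = {name: offline[name] - field_trial[name] for name in offline}

print(f"offline mean: {offline_mean:.1f} %")
print(f"field mean:   {field_mean:.1f} %")
print("per-class drop (points):", gaps)
```

Under that assumption, the offline mean works out to 92.0 %, which matches the 1D CNN accuracy in the model comparison table, and the field mean is 90.8 %, with no class dropping by more than two points.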

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Pookkuttath, S.; Zin, A.K.; Jayadeep, A.; Muthugala, M.A.V.J.; Elara, M.R. AI-Enabled Condition Monitoring Framework for Autonomous Pavement-Sweeping Robots. Mathematics 2025, 13, 2306. https://doi.org/10.3390/math13142306

Pookkuttath S, Zin AK, Jayadeep A, Muthugala MAVJ, Elara MR. AI-Enabled Condition Monitoring Framework for Autonomous Pavement-Sweeping Robots. Mathematics. 2025; 13(14):2306. https://doi.org/10.3390/math13142306

Chicago/Turabian Style: Pookkuttath, Sathian, Aung Kyaw Zin, Akhil Jayadeep, M. A. Viraj J. Muthugala, and Mohan Rajesh Elara. 2025. "AI-Enabled Condition Monitoring Framework for Autonomous Pavement-Sweeping Robots" Mathematics 13, no. 14: 2306. https://doi.org/10.3390/math13142306

APA Style: Pookkuttath, S., Zin, A. K., Jayadeep, A., Muthugala, M. A. V. J., & Elara, M. R. (2025). AI-Enabled Condition Monitoring Framework for Autonomous Pavement-Sweeping Robots. Mathematics, 13(14), 2306. https://doi.org/10.3390/math13142306