1. Introduction

The autoregressive (AR) model is a versatile tool for analyzing and forecasting time series data and plays an important role in economics, weather forecasting, signal processing, and healthcare. A key assumption in applying the AR model is stationarity: the mean, variance, and autocovariance of the process remain constant over time. Unfortunately, most real-world signals and processes exhibit non-stationary behavior, which makes them unsuitable for AR modeling and can lead to systematic bias or inconsistent results [1]. Beyond signal processing, traditional risk models often rely on assumptions of stationarity and constant interdependencies, which are frequently violated during periods of market stress or structural shifts. In such turbulent conditions, asset correlations tend to rise sharply, a phenomenon known as correlation breakdown, so static or constant-parameter models substantially underestimate portfolio risk. To characterize such processes effectively, it is therefore essential to account for their non-stationary nature using either parametric or non-parametric models. One well-established approach in this context is the time-varying autoregressive (TV-AR) model, which is described in detail in [2]. In general, the TV-AR model with $r$ time lags can be expressed as:

$$x_t = \phi_{0,t} + \sum_{i=1}^{r} \phi_{i,t}\, x_{t-i} + \epsilon_t,$$

where $\phi_{0,t}$ is the time-varying intercept, $\phi_{i,t}$ are the time-varying parameters, and the perturbation $\epsilon_t$ is a zero-mean stationary process with $\mathbb{E}[\epsilon_t^2] = \sigma^2$, and $\mathbb{E}[\epsilon_t \epsilon_s] = 0$ for $t \neq s$. In contrast to the AR model, the TV-AR model has a significant advantage in flexibility and adaptability due to its time-dependent parameters, making it more suitable for capturing non-stationary behavior and structural changes in time series data.
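To make the role of the time-dependent parameters concrete, the following minimal sketch simulates a TV-AR recursion of the kind described above. The function name, the particular coefficient paths, and the noise scale are illustrative assumptions and are not taken from the cited work.

```python
import numpy as np

def simulate_tvar(intercept, coefs, sigma=0.1, seed=0):
    """Simulate x_t = intercept[t] + sum_i coefs[t, i-1] * x_{t-i} + noise.

    intercept: shape (n,), time-varying intercept (assumed path).
    coefs:     shape (n, r), time-varying lag coefficients (assumed paths).
    """
    rng = np.random.default_rng(seed)
    n, r = coefs.shape
    x = np.zeros(n)  # the first r values are left at zero as a simple start
    for t in range(r, n):
        lags = x[t - r:t][::-1]  # ordered as x_{t-1}, ..., x_{t-r}
        x[t] = intercept[t] + coefs[t] @ lags + sigma * rng.standard_normal()
    return x

# Illustrative smooth parameter paths on normalized time u in [0, 1].
n = 200
u = np.linspace(0.0, 1.0, n)
intercept = 0.1 * np.sin(2 * np.pi * u)
coefs = np.column_stack([0.5 * np.cos(np.pi * u), 0.2 * u])
x = simulate_tvar(intercept, coefs)
```

Because the first-lag coefficient changes sign over the sample, a constant-parameter AR fit to `x` would average over two different regimes, which is the situation the TV-AR model is meant to handle.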

When several variables interact and their interdependencies are non-negligible, the Vector Autoregressive (VAR) approach is employed as an efficient high-dimensional analytical method. However, as with the AR model, the stationarity assumption is often violated in multivariate settings; therefore, the Time-Varying Vector Autoregressive (TV-VAR) model is employed as a multivariate framework to capture the temporal dynamics and interdependencies among multiple variables [3]. Typically, the TV-VAR model with $r$ time lags can be expressed as:

$$X_t = A_{0,t} + \sum_{i=1}^{r} A_{i,t}\, X_{t-i} + \epsilon_t,$$

where the vector time series data $X_t \in \mathbb{R}^d$, the time-varying intercepts $A_{0,t} \in \mathbb{R}^d$, the time-varying matrices $A_{i,t} \in \mathbb{R}^{d \times d}$, and $\epsilon_t$ are independent samples drawn from a multivariate zero-mean stationary process with covariance matrix $\Sigma$. Although TV-VAR models are widely used to capture non-stationary dynamics in multivariate time series, existing estimation approaches, such as local kernel smoothing and Time-Varying Parameter Bayesian VAR (TVP-BVAR), often lack explicit mechanisms for enforcing model stability. In particular, traditional methods do not directly control the stability of the time-varying process, despite its inherent tendency toward explosiveness in the absence of appropriate constraints. While TVP-BVAR is capable of estimating time-varying coefficients, its high computational cost and the complexity of modeling evolving parameters limit its practical applicability, particularly in high-dimensional or large-scale settings. In contrast, the frameworks adopted in this study, based on the generalized additive model (GAM) and one-sided kernel smoothing (OKS), offer more tractable and scalable alternatives for estimating time-varying dynamics with greater computational efficiency. Hence, in this study, we focus on modeling the TV-VAR process by incorporating the GAM- and OKS-based techniques proposed by [4]. We aim to enhance these methods by making the following contributions:

We first derive recursive formulas to compute the expectation and covariance matrix of the TV-VAR process with r lags. These statistical properties serve as the foundation for reformulating the TV-VAR model as a constrained optimization problem. To ensure stable and reliable estimation of the time-varying coefficients, we conduct a detailed analysis of the underlying statistical structure and implement the solution using a numerical optimization framework in Python 3.9.

In applying the generalized additive framework and kernel smoothing techniques, we further reformulate the TV-VAR optimization problem in a way that allows it to be solved using a variety of optimization methods.

We present the performance results of our GAM-based and OKS-based methods using simulations of non-homogeneous Markov chains and discuss their respective strengths and limitations.

Ultimately, our research suggests that non-stationary processes are better captured by a TV-VAR structure than by traditional VAR models. Within both the GAM and OKS frameworks, gradient-based optimization algorithms incorporating stability constraints yield high performance in estimating time-varying coefficients.
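As a point of reference for what stability means in this setting, one common frozen-time diagnostic checks whether the companion matrix built from the lag matrices at a given time has spectral radius below one. The sketch below illustrates that standard check only; it is not necessarily the constraint used in the proposed method, and the function name and example matrices are assumptions.

```python
import numpy as np

def companion_spectral_radius(lag_matrices):
    """Largest eigenvalue modulus of the companion matrix of one VAR(r) snapshot.

    lag_matrices: list of r arrays of shape (d, d), i.e. the lag matrices
    frozen at a single time point.
    """
    r = len(lag_matrices)
    d = lag_matrices[0].shape[0]
    companion = np.zeros((d * r, d * r))
    companion[:d, :] = np.hstack(lag_matrices)       # top block row [A_1 ... A_r]
    if r > 1:
        companion[d:, :-d] = np.eye(d * (r - 1))     # shifted identity below it
    return float(np.max(np.abs(np.linalg.eigvals(companion))))

# Illustrative second-order snapshot: a value below 1 indicates a stable snapshot.
A1 = 0.5 * np.eye(2)
A2 = 0.2 * np.eye(2)
radius = companion_spectral_radius([A1, A2])
```

For a genuinely time-varying process, a spectral radius below one at every time point is a useful diagnostic rather than a general guarantee, which is one reason explicit constraints inside the estimation problem are attractive.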

The structure of the paper is as follows:

Section 2 reviews the related literature on TV-AR and TV-VAR models.

Section 3 presents the recursive formulas for the mean and covariance, along with the corresponding optimization problems for three types of processes.

Section 4 introduces the reformulated optimization framework, incorporating the generalized additive model and one-sided kernel smoothing techniques.

Section 5 provides simulation results for four different scenarios of non-homogeneous Markov chains.

Finally, Section 6 demonstrates the effectiveness of our approach using a finance-related dataset.

2. Literature Review

The Time-Varying Autoregressive model, as an extension of the AR model, offers considerable flexibility in its coefficients, making it highly suitable for non-stationary or structurally changing environments. TV-AR is widely used in psychology; for example, according to [5,6], TV-AR shows a significant advantage in studying changing psychological dynamics, especially in detecting emotion dynamics. In the research conducted by [7], the model was used to investigate potential changes in intra-individual dynamics in the perception of situations and emotions among individuals varying in personality traits. In addition to psychology, TV-AR is also widely used in fields such as finance and economics. For example, according to [8], TV-AR can be used to examine how monetary policy jointly affects asset prices and the real economy in the United States. In the study conducted by [9], the TV-AR model was employed to quantify economic and policy influences, capturing both regime-dependent and time-varying effects. In a study by [10], a TV-AR model was developed to analyze the effects of oil revenue on economic growth, providing empirical support for the resource curse hypothesis in Nigeria. Another area where TV-AR models are widely applied is engineering, including domains such as aerospace, mechanical systems, transportation, environmental monitoring, and infrastructure management. For example, in the research conducted by [11], a TV-AR model was developed for modal identification in structural health monitoring, and [12] used TV-AR to analyze global or volcano seismological signals.

When interdependencies among multiple variables are significant, the VAR model is a more effective approach than the AR model for capturing autoregressive dynamics. For example, in the research conducted by [13], VAR was used to analyze the interrelationships between price spreads and the effects of wind forecast errors, demand forecast errors, and other exogenous variables. In the research conducted by [14], a Bayesian variant of the VAR model was developed to forecast an international set of macroeconomic and financial variables. In the study conducted by [15], VAR was used to detect and analyze the relationships between two subgroups in eating behaviour, depression, anxiety, and eating control. However, as with the TV-AR model, incorporating time-varying parameters becomes essential when the assumption of stationarity is violated; hence, to accommodate both non-stationarity and inter-variable dynamics, the TV-VAR model provides a crucial extension. In practical applications, TV-AR and TV-VAR often share methodological similarities; however, TV-VAR places greater emphasis on modeling the dynamic interactions among variables. For example, in the field of psychology, the study by [16] demonstrated that time-varying parameters provide a robust framework for analyzing co-occurrence, synchrony, and the directionality of lagged relationships in real-world psychological data. Similarly, ref. [17] employed a TV-VAR model to examine the dynamics of automatically tracked head movements in mothers and infants, revealing temporal variations both within and across episodes of the Still-Face Paradigm. Furthermore, the research by [18] applied the TV-VAR model to capture complex multivariate interactions among psychological variables.

The TV-VAR model also plays a crucial role in the fields of economics and marketing. For instance, [19] employed a TV-VAR model to analyze the Japanese economy and monetary policy, uncovering their evolving structure over the period from 1981 to 2008. Similarly, ref. [20] used a TV-VAR framework to investigate the dynamic relationship between West Texas Intermediate crude oil and the U.S. S&P 500 stock market. In the context of stock market forecasting, ref. [21] demonstrated that the TV-VAR model holds significant potential for enhancing portfolio allocation strategies. Similarly, in the study by [22], the TV-VAR model was employed to analyze four types of oil price fluctuations: oil supply shocks, global demand shocks, domestic demand shocks, and oil-specific demand shocks. Additionally, ref. [23] applied this model to examine the dynamic interactions among economic policy uncertainty, investor sentiment, and financial stability. Further, ref. [24] used the TV-VAR model to explore return spillovers among major financial markets, including equity indices, exchange rates, Brent crude oil prices in the Asia-Pacific region, and the NASDAQ index. In addition to economic analysis, the TV-VAR model has also been widely applied in quantitative assessments of policy impacts, including analyses of monetary policy shocks [25], the effects of geopolitical risks and trade policy uncertainty [26], and international spillovers of US monetary policy [27].

5. Evaluating Performance via Simulation

In this section, we present our simulation studies based on non-homogeneous Markov chains to evaluate the performance of the proposed methods in practical scenarios. The non-homogeneous Markov chain is a stochastic process in which the transition probabilities vary over time, in contrast to a homogeneous Markov chain, where the transition probabilities remain fixed and independent of the time step. In real-world applications, this type of Markov chain is widely used for mathematical modeling, simulation, and analysis of complex systems with uncertain or evolving dynamics, particularly in network analysis across various fields, including social sciences, environmental sciences, bioinformatics, and finance [33,34,35]. Specifically, this research investigates two approaches to modeling non-homogeneous Markov chains: the first employs a transition matrix that evolves smoothly and continuously over time without an intercept term, while the second introduces an additional time-varying constant to capture subtle dynamic variations. Accordingly, the primary process for generating the second-order time-varying test data is defined as follows:

$$X_t = A_{0,t} + A_{1,t} X_{t-1} + A_{2,t} X_{t-2} + \sigma \epsilon_t,$$

where $A_{0,t}$ denotes a time-varying intercept, $A_{1,t}$ and $A_{2,t}$ are time-varying coefficient matrices, $\epsilon_t$ is a Gaussian noise vector with zero mean and covariance $\Sigma$, and $\sigma$ is a scalar controlling the noise magnitude. Subsequently, to evaluate the performance of our TV-VAR-based methods, the mean absolute error (MAE), as defined in Equation (17), is computed over a forecast horizon of length $H$. Initially, two additional evaluation metrics, Mean Absolute Percentage Error (MAPE) and Root Mean Squared Error (RMSE), were considered for performance assessment. However, the presence of values approaching zero in some test cases led to numerical instability in MAPE, thereby undermining its reliability. Furthermore, the differences between RMSE and MAE were consistently minimal, suggesting that RMSE provided limited additional analytical value beyond what was captured by MAE. Therefore, this study focuses exclusively on MAE as the primary evaluation metric.
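The data-generating process and the error metric described above can be sketched as follows. This is a minimal illustration under stated assumptions: the coefficient paths, dimension, noise covariance, and noise scale are placeholders rather than the paper's scenario settings, and the MAE here is taken as the plain average of absolute errors over the horizon and all components, which may differ in detail from Equation (17).

```python
import numpy as np

def simulate_tvvar2(A0, A1, A2, cov, sigma, x_init, seed=0):
    """Second-order TV-VAR: X_t = A0[t] + A1[t] X_{t-1} + A2[t] X_{t-2} + sigma * eps_t.

    A0: (n, d) intercepts; A1, A2: (n, d, d) coefficient matrices;
    eps_t is drawn from N(0, cov); x_init seeds the first two observations.
    """
    rng = np.random.default_rng(seed)
    n, d = A0.shape
    X = np.zeros((n, d))
    X[:2] = x_init
    noise = rng.multivariate_normal(np.zeros(d), cov, size=n)
    for t in range(2, n):
        X[t] = A0[t] + A1[t] @ X[t - 1] + A2[t] @ X[t - 2] + sigma * noise[t]
    return X

def mae(actual, predicted):
    """Mean absolute error averaged over forecast steps and components."""
    return float(np.mean(np.abs(np.asarray(actual) - np.asarray(predicted))))

# Illustrative (assumed) settings: d = 3, smooth coefficient paths, zero intercept.
n, d = 200, 3
u = np.linspace(0.0, 1.0, n)
A0 = np.zeros((n, d))
A1 = 0.4 * np.cos(np.pi * u)[:, None, None] * np.eye(d)
A2 = 0.2 * u[:, None, None] * np.eye(d)
X = simulate_tvvar2(A0, A1, A2, cov=np.eye(d), sigma=0.05, x_init=np.ones(d))

# MAE of a naive persistence forecast over the last H = 2 steps, as a baseline.
H = 2
baseline = np.repeat(X[-H - 1][None, :], H, axis=0)
baseline_mae = mae(X[-H:], baseline)
```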

For the GAM-based approach, the time-varying coefficient matrix, constructed using basis functions, was estimated from past data and then used to predict the values for the next H time steps, with the corresponding prediction error calculated. In contrast, the OKS method assumed a fixed coefficient matrix over the forecast horizon, and predictions were made under this assumption, followed by evaluation of the resulting prediction error. All simulations in this study were conducted using Python, with the source code and related datasets available as detailed in the Data Availability Statement.
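The OKS forecasting rule described above (estimate the coefficients at the end of the sample, then hold them fixed over the horizon) can be sketched as follows. This is a minimal illustration with a first-order model, assuming a Gaussian one-sided kernel and an unconstrained weighted least-squares fit. The function names, kernel, and bandwidth are assumptions, and the stability constraints of the proposed method are omitted.

```python
import numpy as np

def oks_var1_fit(X, bandwidth=0.1):
    """Kernel-weighted least-squares VAR(1) fit localized at the end of the sample.

    Only past observations receive weight (one-sided), with weights decaying
    as the normalized time moves away from the final time point.
    """
    n, d = X.shape
    u = np.arange(1, n) / (n - 1)                      # normalized time of each target X[t]
    w = np.exp(-0.5 * ((u - 1.0) / bandwidth) ** 2)    # Gaussian weights centered at u = 1
    Z = np.hstack([np.ones((n - 1, 1)), X[:-1]])       # regressors [1, X_{t-1}]
    Y = X[1:]
    sw = np.sqrt(w)[:, None]
    beta, *_ = np.linalg.lstsq(sw * Z, sw * Y, rcond=None)
    intercept, A = beta[0], beta[1:].T                 # X_t ~ intercept + A @ X_{t-1}
    return intercept, A

def forecast_fixed(intercept, A, x_last, horizon):
    """Iterate the fitted model forward with coefficients held fixed."""
    preds = []
    x = np.asarray(x_last, dtype=float)
    for _ in range(horizon):
        x = intercept + A @ x
        preds.append(x)
    return np.array(preds)

# Noise-free illustrative data from a slowly decaying rotation (assumed values).
theta = 0.3
A_true = 0.99 * np.array([[np.cos(theta), -np.sin(theta)],
                          [np.sin(theta),  np.cos(theta)]])
c_true = np.array([0.1, -0.2])
X = np.zeros((60, 2))
X[0] = [1.0, 0.0]
for t in range(1, 60):
    X[t] = c_true + A_true @ X[t - 1]

c_hat, A_hat = oks_var1_fit(X, bandwidth=0.5)
two_step = forecast_fixed(c_hat, A_hat, X[-1], horizon=2)
```

A GAM-style forecast would instead evaluate the fitted basis expansion at the future time points, so its coefficients continue to move over the horizon, which is the distinction the paragraph above draws.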

5.1. Simulation Preparation

For the case of a transition matrix that changes smoothly over time without time-varying intercepts, we consider the following specific scenarios:

Scenario 1: consider a zero-intercept time series with dimension

, where the initial value is set to

, the small noise scaling parameter is fixed to

, and the Gaussian noise covariance matrix is

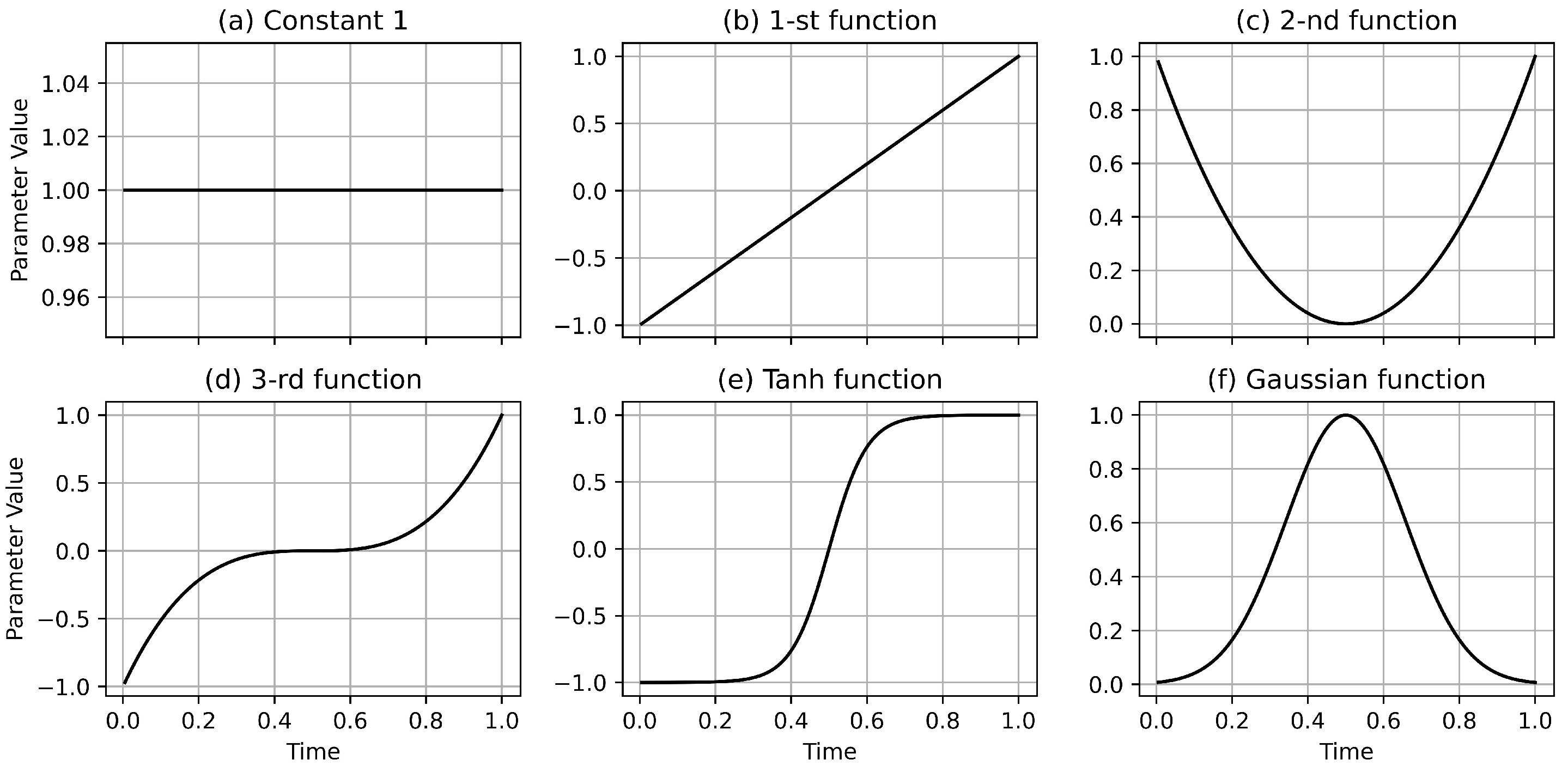

. The time-varying coefficient matrix is initialized as a uniform random matrix, normalized row-wise to form a row-stochastic matrix, and updated using the following functions:

Scenario 2: use the same initial value, noise scaling parameter, and strategy for generating the initial time-varying coefficient matrix as in Scenario 1, but with an increased dimension

, the Gaussian noise covariance matrix

, and the new time-varying coefficient matrix update function, which is defined as follows:

Next, a small time-varying intercept

is introduced in Scenarios 1 and 2 to enrich the dynamic behavior and evaluate the model performance, giving Scenarios 3 and 4. In addition to the prediction analysis, we visualize the behavior of the TV-VAR model by applying the GAM to multiple cases of Scenarios 1 and 3 to reconstruct the whole process; VAR and OKS are unsuitable for this type of visualization because they assume stationarity and local stationarity, respectively. Specifically, we consider a time series consisting of 200 data points, with the first 180 used for training and the remaining 20 reserved for prediction. For the visualization analysis, after estimating the time-varying coefficient matrices using GAM, we feed the first $r$ lag values into the model and let it recursively estimate the remaining

data points. The initial time-varying coefficient matrix is defined below:

5.2. Simulation Results

For each scenario, the predictions are made two steps ahead using 100 randomly generated time series samples, each of length 200, with time $t$ normalized from 0 to 1, and the performance is evaluated using MAE across these steps. In this study, we implement the examples using GAM, OKS, traditional Vector Autoregressive (VAR) models (estimated via matrix-based updates), and L-VAR (with coefficients estimated through Lasso regression) with lags

and

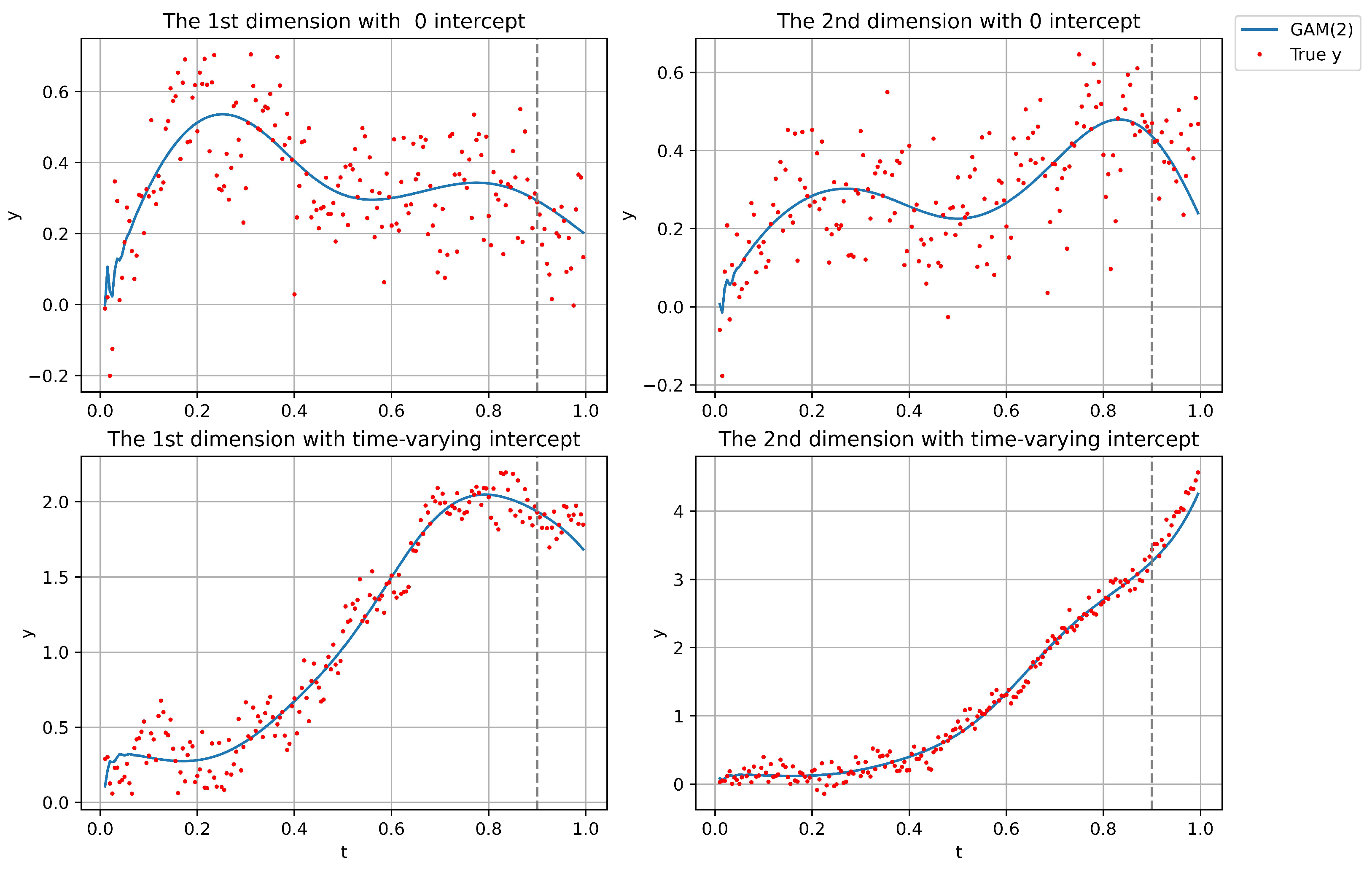

. The results for the two scenarios without an intercept are shown in Table 1 and Table 2, while the results for the scenarios with time-varying intercepts are presented in Table 3 and Table 4; the tables report the mean, sample variance (SV), minimum (Min), and maximum (Max) of the MAE. Next, the reconstructed results of the GAM-based method are shown in Figure 4. Finally, to evaluate the processing time of the GAM- and OKS-based methods across different time series dimensions, we ran each method 50 times per dimension under Scenario 1. The average processing time is presented in Table 5.

5.3. Discussion

The simulation results above compare the prediction performance of GAM, OKS, VAR, and L-VAR in modeling non-homogeneous Markov chains, both with and without an intercept. As expected, the MAE generally increases as the forecast horizon $H$ extends from 1 to 2. In scenarios with stronger time-varying dynamics, both GAM and OKS outperform VAR and L-VAR, which aligns with our hypothesis, given that VAR and L-VAR assume stationarity over the whole process. However, OKS performs worse than GAM because it assumes that the estimated time-varying coefficient matrix remains fixed after the prediction point, which limits its adaptability, especially when the coefficient matrix changes dramatically over time. Next, the advantage of the GAM(2) model over the stationary baselines is more pronounced in the zero-intercept setting than in the non-zero intercept case. This is because, in processes with non-zero intercepts, the influence of time-varying effects tends to diminish in significance, thereby allowing even a stationary VAR model to achieve satisfactory performance. Furthermore, neither VAR nor OKS is suitable for reconstructing the entire time series because of their reliance on stationarity assumptions: VAR assumes global stationarity, while OKS relies on local stationarity. As a result, they can only forecast or simulate specific time points rather than capture the full dynamic process. Finally, to evaluate robustness with respect to input length, we assessed the performance of both the GAM- and OKS-based models across time series ranging from 50 to 200 observations and found their performance to be largely insensitive to series length. This insensitivity is likely due to the short forecasting horizon, a fixed two-step-ahead prediction, which limits temporal dependency and thereby reduces the influence of the overall input length on predictive accuracy.

Unfortunately, both our GAM-based and OKS-based methods require significantly more coefficients than traditional VAR, leading to increased computational complexity and higher time costs, which can limit overall performance. For example, consider a vector time series with dimension , and assume five different basis functions. In the case of a second-order model, GAM(2) requires estimating coefficients; similarly, OKS(2) requires coefficients. Next, the time complexity of the proposed approach largely depends on the choice of optimization algorithm and the computational hardware used. In this study, we employed the 'trust-constr' algorithm from the SciPy package, a trust-region method for constrained optimization. While switching to a faster algorithm, such as 'L-BFGS-B', may reduce computation time, that method supports only bound constraints and therefore cannot enforce general constraints, potentially compromising solution feasibility. All experiments were conducted on a system equipped with an NVIDIA RTX 3060 GPU. Under this setup, training the GAM-based method with a lag order of one required approximately 2 to 71 s, depending on the time series dimension. When the lag order increased to two, the per-sample training time rose to approximately 3 to 144 s, reflecting the increased model complexity. The OKS-based method exhibited a distinct trend: although significantly slower than GAM at lower dimensions, due to the additional computation required for cross-validation, it became substantially more efficient at higher dimensions. In contrast, although VAR requires matrix inversion operations, it remains significantly faster than both GAM and OKS in practice. Overall, increasing the number of lags enables the model to capture longer-term dependencies and reveal stronger connections within the time series, potentially improving forecast performance, but it also significantly increases parameter complexity and the risk of overfitting. Therefore, effectively applying TV-VAR models requires a careful balance between model complexity and the number of lags to capture temporal dependencies without overfitting.
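For readers unfamiliar with the solver, the following sketch shows how a constrained least-squares fit can be passed to SciPy's 'trust-constr' method. It is an illustration only: it fits a constant first-order coefficient matrix and bounds its Frobenius norm, which is a simple (and conservative) sufficient condition for stability chosen here as an assumption; it is not the constraint set derived in this paper, and the function name and data are hypothetical.

```python
import numpy as np
from scipy.optimize import NonlinearConstraint, minimize

def fit_var1_constrained(X, max_norm=0.95):
    """Least-squares VAR(1) fit with the Frobenius norm of A bounded by max_norm."""
    d = X.shape[1]
    Y, Z = X[1:], X[:-1]

    def loss(a):
        resid = Y - Z @ a.reshape(d, d).T
        return 0.5 * np.sum(resid ** 2)

    def grad(a):
        resid = Y - Z @ a.reshape(d, d).T
        return (-(resid.T @ Z)).ravel()      # gradient of the loss with respect to A

    # Constraint ||A||_F^2 <= max_norm^2; its Jacobian is approximated by SciPy.
    constraint = NonlinearConstraint(lambda a: np.sum(a ** 2), -np.inf, max_norm ** 2)
    x0 = (0.1 * np.eye(d)).ravel()           # feasible starting point
    result = minimize(loss, x0, jac=grad, method="trust-constr",
                      constraints=[constraint])
    return result.x.reshape(d, d), result

# Illustrative data from a stable VAR(1) with assumed coefficients.
rng = np.random.default_rng(0)
A_true = np.array([[0.5, 0.1], [0.0, 0.3]])
X = np.zeros((300, 2))
for t in range(1, 300):
    X[t] = A_true @ X[t - 1] + 0.1 * rng.standard_normal(2)

A_hat, info = fit_var1_constrained(X)
```

The number of decision variables here is only $d^2$; in a time-varying model the same solver must handle one such block per basis function and per lag, which is where the computation times reported above come from.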

6. Estimating Time-Varying VAR Model on Finance-Related Datasets

For the real-world application, we consider time series data from the finance domain, comprising three key market indicators: the S&P 500 index (as a broad measure of U.S. equity market performance), the VIX index (reflecting market volatility and investor sentiment), and U.S. Treasury securities with a one-month constant maturity (serving as a proxy for short-term interest rates and risk-free returns). All financial data were obtained from publicly available sources, including Federal Reserve Economic Data at https://fred.stlouisfed.org/ (accessed on 1 May 2025) and the Chicago Board Options Exchange at https://www.cboe.com/ (accessed on 1 May 2025). These indices are widely used in financial modeling and risk assessment due to their relevance in capturing market dynamics, investor behavior, and macroeconomic conditions.

6.1. Data Preparation

Before modeling, several preprocessing steps are essential. For the S&P 500 index, prior research has traditionally focused on various forms of returns, such as simple and logarithmic returns, based on the assumption of stationarity. While this return-based approach facilitates certain statistical modeling techniques, it may overlook important information embedded in the actual index levels, making such models less effective in accurately predicting future price levels. Hence, to address this limitation, this study instead focuses on modeling the index itself rather than its derived returns. Next, occasional breakpoints in the one-month constant maturity series, caused by sudden shifts in market conditions or expectations, such as spikes in volatility, changes in monetary policy, or other technical factors, are filled using the value from the preceding day. The data used in this study were collected for the period spanning 4 January 2016 to 31 December 2024.
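The gap-filling step described above, where a missing value is replaced by the value from the preceding day, corresponds to a forward fill. A minimal sketch using pandas is shown below; the dates and yield values are made up for illustration and are not taken from the dataset.

```python
import numpy as np
import pandas as pd

def fill_gaps(series):
    """Replace each missing value with the most recent preceding observation."""
    return series.ffill()

# Hypothetical one-month yield series with gaps on business days.
dates = pd.date_range("2016-01-04", periods=5, freq="B")
yields = pd.Series([0.25, np.nan, 0.27, np.nan, np.nan], index=dates)
filled = fill_gaps(yields)
```

Note that a forward fill leaves a gap untouched if it occurs at the very start of the series, since there is no preceding value to carry forward.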

6.2. Results

To evaluate the performance of our methods on real-world financial data, we use the period from 4 January 2016 to 28 June 2024, to train the TV-VAR model under both the GAM and OKS frameworks with a lag order of

. After that, we perform one-step-ahead forecasting and compare the predicted values with the actual observations, denoted as

, which are then fed back into the model to retrain both GAM and OKS for the next prediction step. The complete prediction results, including the mean absolute error, are presented in Figure 5 and Table 6.
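The retrain-and-predict loop described above can be sketched as a walk-forward evaluation. In this minimal illustration an ordinary least-squares VAR(1) stands in for the GAM and OKS estimators, purely as a placeholder assumption; the function names and the synthetic data are likewise hypothetical.

```python
import numpy as np

def fit_var1(X):
    """Placeholder estimator: least-squares VAR(1) with intercept."""
    Z = np.hstack([np.ones((len(X) - 1, 1)), X[:-1]])
    beta, *_ = np.linalg.lstsq(Z, X[1:], rcond=None)
    return beta

def predict_next(beta, x_last):
    """One-step-ahead prediction from the last observation."""
    return beta[0] + x_last @ beta[1:]

def walk_forward(X, n_train):
    """Refit on all data observed so far, predict one step, then move on."""
    preds = []
    for t in range(n_train, len(X)):
        beta = fit_var1(X[:t])                 # retrain including the newest observation
        preds.append(predict_next(beta, X[t - 1]))
    preds = np.array(preds)
    mae_per_series = np.mean(np.abs(X[n_train:] - preds), axis=0)
    return preds, mae_per_series

# Synthetic noise-free data (assumed), so the placeholder model predicts exactly.
theta = 0.3
A = 0.99 * np.array([[np.cos(theta), -np.sin(theta)],
                     [np.sin(theta),  np.cos(theta)]])
c = np.array([0.1, -0.2])
X = np.zeros((40, 2))
X[0] = [1.0, 0.0]
for t in range(1, 40):
    X[t] = c + A @ X[t - 1]

preds, mae_per_series = walk_forward(X, n_train=20)
```

Reporting the MAE per series, as done here, mirrors the per-index comparison of the three financial indicators.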

The results show that both GAM and OKS perform well, achieving low MAEs across all three indices, although GAM exhibits slightly more fluctuation than OKS in predicting the U.S. Treasury series. Additionally, the findings suggest that when the VIX is high, the one-month Treasury yield tends to decline the following day. This aligns with expectations, as high market volatility often drives investors toward safe-haven assets, such as Treasury securities, which raises their prices and lowers their yields. Conversely, when the VIX decreases, investors tend to reallocate capital into equities such as the S&P 500, leading to reduced demand for Treasuries. Finally, the expected relationship between the VIX and the S&P 500 also holds: when the VIX is elevated, the S&P 500 typically declines or exhibits only modest gains, consistent with established market behavior.

7. Conclusions

Non-stationarity, where the statistical properties of a time series change over time, aligns better with real-world dynamics than stationarity in fields such as finance, climate science, healthcare, and signal processing. Failing to account for it may lead to poor forecasts, especially in long-term predictions. The autoregressive model, a foundational tool in time series analysis, plays a crucial role in modeling time-dependent data, and its extension, the time-varying vector autoregressive (TV-VAR) model, broadens this framework to handle both non-stationary dynamics and high-dimensional settings. Recent research on TV-VAR models has highlighted the effectiveness of the generalized additive model (GAM) framework and kernel smoothing (KS) methods in capturing dynamic relationships. However, few studies explicitly consider the case of TV-VAR with $r$ lags. To model this process efficiently, we reformulate it as a series of optimization problems under appropriate constraints, which preserves the covariance structure between predictions and historical data without overfitting it over time. The methods presented in this research model TV-VAR time series data using optimization solvers, and simulation results demonstrate a clear advantage in prediction accuracy, especially under strong time variation. Furthermore, compared to the stationary VAR model and the OKS method, the GAM-based model not only delivers significantly better predictive performance but also enables reconstruction of the entire training process from the initial $r$ lags, providing enhanced interpretability and flexibility. Compared to traditional hedging strategies, which assume stationarity, the TV-VAR framework offers significant advantages by capturing time-varying relationships and enabling the implementation of adaptive hedge ratios. In other words, when the model detects increased portfolio sensitivity to shocks or rising market volatility, it can trigger more proactive hedging with appropriate instruments, an adaptability that is especially crucial for managing tail risk, where sudden market shifts require swift and responsive adjustments.

As with other studies, our approaches, both GAM-based and OKS-based, have certain limitations. The primary drawback is the large number of parameters that must be estimated, especially in models with high dimensionality and many lags, making the model's performance highly dependent on the efficiency of the optimization solver and susceptible to accuracy loss in the presence of high data noise. Hence, future research could explore two potential directions: first, reconstructing the GAM-based model using neural networks, particularly physics-informed neural networks, by integrating the time-varying framework in TV-VAR with physical constraints to enhance model structure and interpretability; second, refining the bandwidth selection for the OKS method, as the current cross-validation approach is time-consuming, and a more efficient method could significantly enhance its effectiveness. Our own future work will focus on the first direction, reformulating the TV-VAR model with physics-based constraints and integrating it with deep learning techniques.