Abstract

This study presents a reinforcement learning-based approach to optimize replenishment policies in the presence of uncertainty, with the objective of minimizing total costs, including inventory holding, shortage, and ordering costs. The focus is on single-level assembly systems, where both component delivery lead times and finished product demand are subject to randomness. The problem is formulated as a Markov decision process (MDP), in which an agent determines optimal order quantities for each component by accounting for stochastic lead times and demand variability. The Deep Q-Network (DQN) algorithm is adapted and employed to learn optimal replenishment policies over a fixed planning horizon. To enhance learning performance, we develop a tailored simulation environment that captures multi-component interactions, random lead times, and variable demand, along with a modular and realistic cost structure. The environment enables dynamic state transitions, lead time sampling, and flexible order reception modeling, providing a high-fidelity training ground for the agent. To further improve convergence and policy quality, we incorporate local search mechanisms and multiple action space discretizations per component. Simulation results show that the proposed method converges to stable ordering policies after approximately 100 episodes. The agent achieves an average service level of 96.93%, and stockout events fall sharply relative to early training phases. The system maintains component inventories within operationally feasible ranges, and the cost components (holding, shortage, and ordering) are consistently minimized across 500 training episodes. These findings highlight the potential of deep reinforcement learning as a data-driven and adaptive approach to inventory management in complex and uncertain supply chains.

Keywords:

assembly system; inventory management; replenishment planning; stochastic demand; uncertain lead times; deep reinforcement learning; deep Q-network (DQN); data-driven inventory management

MSC:

90B30; 90B05; 90B15; 90B22; 68T05; 90C39; 68T07; 90C90; 62P20

1. Introduction

In the context of supply chain and inventory management, planning plays a critical role in the effectiveness of replenishment strategies [1]. Well-designed planning processes help maintain optimal inventory levels, balancing the risk of overstocking—which leads to increased storage costs—with the risk of stockouts, which can cause lost sales and diminished customer satisfaction [2]. By ensuring the timely availability of products, components, or raw materials to meet production schedules or customer demand, effective planning contributes directly to improved service levels and enhanced customer loyalty [3]. These challenges are further compounded under conditions of uncertainty, where variability in demand and supplier lead times can significantly disrupt replenishment decisions.

In replenishment management, planning is essential to maintaining a balance between supply and demand, minimizing costs, and ensuring customer satisfaction [4]. Effective planning enables the optimal implementation of replenishment policies [5], aligning inventory decisions with strategic business objectives such as cost reduction and high product availability. These policies adjust order quantities and stock levels based on real-time market conditions. The effectiveness of such planning depends largely on its ability to adapt to various sources of uncertainty arising from collaborative operations between manufacturers and customers, interactions with suppliers of raw materials or critical components, and even internal manufacturing processes [6]. The nature of this uncertainty is multifaceted [7], often resulting in increased operational costs, reduced profitability, and diminished customer satisfaction [8]. Numerous studies emphasize key sources of uncertainty in manufacturing environments, including demand variability, fluctuations in supplier lead times, quality issues, and capacity constraints [9].

Demand uncertainty significantly impacts supply chain design. While stochastic programming models outperform deterministic approaches in optimizing strategic and tactical decisions [10], most studies overlook lead time variability caused by real-world disruptions. Companies typically address supply uncertainty through safety stocks and safety lead times [11], which trade off shortage risks against higher inventory costs. The key challenge lies in finding the optimal balance between these competing costs. For a long time, lead time uncertainty received relatively little attention in the literature, with most research in inventory management focusing predominantly on demand uncertainty [12]. In assembly systems, component lead times are often subject to uncertainty; they are rarely deterministic and typically exhibit variability [13].

The literature on stochastic lead times in assembly systems has seen significant contributions that have shaped current approaches to inventory control under uncertainty [14]. A notable study by [15] investigates a single-level assembly system under the assumptions of stochastic lead times, fixed and known demand, unlimited production capacity, a lot-for-lot policy, and a multi-period dynamic setting. In this work, lead times are modeled as independent and identically distributed (i.i.d.) discrete random variables. The authors focus on optimizing inventory policies by balancing component holding costs and backlogging costs for finished products, ultimately deriving optimal safety stock levels when all components share identical holding costs. This problem is further extended in [16], which considers a different replenishment strategy—the Periodic Order Quantity (POQ) policy. In [17], the lot-for-lot policy is retained, but a service level constraint is introduced. A branch and bound algorithm is employed to manage the combinatorial complexity associated with lead time variability. Subsequent studies [18,19,20] build on this foundation by refining models to better capture lead time uncertainty in single-level assembly systems, while also proposing extensions to multi-level systems and providing a more detailed analysis of the trade-offs between holding and backlogging costs.

Modeling multi-product, multi-component assembly systems under demand uncertainty is inherently complex. Ref. [21] proposes a modular framework for supply planning optimization, though its effectiveness depends on computational reductions and assumptions about probability distributions. For Assembly-to-Order systems, ref. [22] develops a cost-minimization model incorporating lead time uncertainty, solved via simulated annealing. Several studies [23,24,25,26] address single-period supply planning for two-level assembly systems with stochastic lead times and fixed end-product demand. Using Laplace transforms, evolutionary algorithms, and multi-objective methods, they optimize component release dates and safety lead times to minimize total expected costs (including backlogging and storage costs). Ref. [2] later improved upon [18]’s work, while [27] extended the framework to multi-level systems under similar assumptions.

Despite these advances, many existing models rely on oversimplified assumptions about delivery times and demand, limiting their applicability in real industrial contexts [21]. This highlights the urgent need for new optimization frameworks that better capture real-world complexities and component interdependencies in assembly systems. In this work, we enhance the method of [15] by explicitly incorporating (1) stochastic demand models, (2) ordering, holding, and stockout penalty costs, and (3) a Markov decision process (MDP) formulation enabling stochastic modeling of lead time and demand uncertainties.

Traditional optimization approaches for these problems face three key challenges:

- Overly simplistic assumptions: Classical deterministic or stochastic optimization methods often require explicit assumptions about demand and delivery time distributions, which may not hold in practice due to complex, unknown, or non-stationary stochastic processes [28].

- Curse of dimensionality: The high-dimensional state and action spaces in multi-component, multi-period inventory problems cause an explosion in computational complexity, rendering classical dynamic programming and linear programming approaches impractical [29,30].

- Static or computationally intensive decision-making: Many existing methods rely on static policies or require frequent re-optimization to adapt to changing system dynamics, which is computationally expensive and slow in real-time environments [31].

To overcome these limitations, this paper employs deep reinforcement learning (DRL), specifically the Deep Q-Network (DQN) algorithm, which learns optimal replenishment policies through continuous interaction with the inventory environment without requiring explicit distributional assumptions. DQN’s neural network-based function approximation enables generalization across high-dimensional states, mitigating the curse of dimensionality [32,33]. Furthermore, DQN dynamically adapts policies online, providing scalable, flexible solutions suited for complex, stochastic supply chain problems [34].

Recent trends in production planning and control (PPC) emphasize integrating artificial intelligence and machine learning, especially reinforcement learning, to handle increasing supply chain complexity and uncertainty [35,36]. Industry 4.0’s shift towards automation and decision-making autonomy further motivates adoption of data-driven, adaptive methods like DQN for inventory management [37].

Recent studies have explored the integration of deep reinforcement learning (DRL) into inventory management, particularly for complex multi-echelon systems. For instance, a modified Dyna-Q algorithm incorporating transfer learning has been proposed to address the cold-start problem in inventory control for newly launched products without historical demand data [38]. In another direction, a novel Q-network architecture based on radial basis functions (RBFs) has been introduced to simplify neural network design in DRL [39]. To further address scalability challenges, the Iterative Multi-agent Reinforcement Learning (IMARL) framework combines graph neural networks and multi-agent learning for large-scale supply chains [40].

This study develops a discrete inventory optimization model for single-level assembly systems (multi-component, multi-period) under stochastic demand and delivery conditions. Our main contributions include integrating multiple logistics cost components, relaxing common assumptions (e.g., uniform delivery distributions, fixed demand), introducing component-level stockout calculations, and employing DQN for efficient policy learning. The model supports integer decision variables for MRP compatibility while addressing dual uncertainties in demand and delivery.

The remainder of the paper is organized as follows: Section 2 defines the problem setting and sources of uncertainty. Section 3 presents the MDP formulation. Section 4 details the DQN methodology and implementation. Section 5 analyzes experimental results, demonstrating the efficacy of the proposed approach. Finally, Section 6 concludes with a summary of contributions, limitations, and future work.

2. Problem Description

Replenishment planning in single-level assembly systems under stochastic demand and lead time uncertainty presents a complex optimization problem where component orders must be determined amid two key sources of variability: (1) uncertain demand for the finished product and (2) random lead times for each component. The core optimization challenge involves minimizing the total expected costs comprising inventory holding costs, stockout penalties, and ordering costs. Crucially, demand follows a known probability distribution, while each component’s lead time is characterized by its own distinct distribution. These stochastic elements create a cascading risk effect—the failure to secure any single component due to lead time variability can halt the entire assembly process. The fundamental objective is to develop an optimal ordering strategy that achieves robust system performance while maintaining cost efficiency under uncertainty, requiring careful consideration of both demand-side and supply-side stochasticity in an integrated framework.

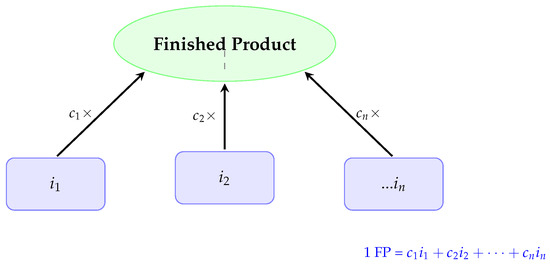

Figure 1 illustrates a single-level Bill of Materials (BOM) for an assembly system, depicting the relationship between a finished product (FP) and its components (i1, i2, …, in). The diagram shows that one unit of FP is assembled from multiple components, each with a specific consumption coefficient (ci), representing the quantity required per unit of FP.

Figure 1.

Single-level Bill of Materials (BOM) structure. The dotted line in the center represents additional components not individually shown, alongside the explicitly labeled components i1 to in, which are also required for the assembly. Source: Authors’ own elaboration.

3. Methodology

3.1. Formulating the Replenishment Problem as a Markov Decision Process (MDP)

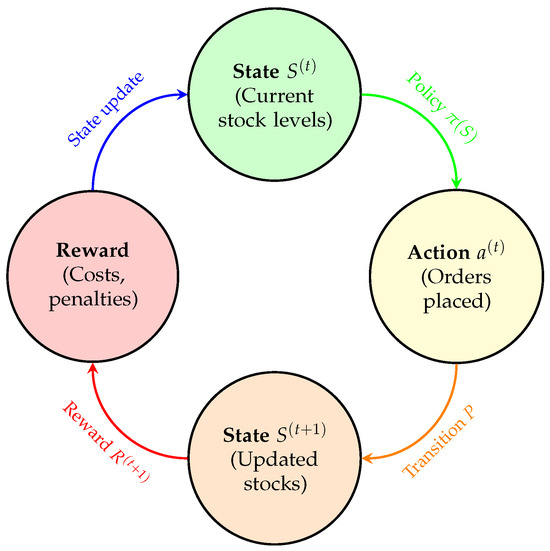

To model the replenishment planning problem under lead-time and demand uncertainty as a reinforcement learning (RL) task, we formulate it as a Markov decision process (MDP). The MDP framework captures the dynamics of inventory management, where the agent makes decisions on ordering and stock management at each time step. The reward function is designed to incorporate key cost components, including storage costs for each component, the shortage cost of finished products, penalties for stockouts of components, and the ordering costs associated with replenishment decisions.

3.1.1. Structure of the MDP Environment

The environment in a Markov decision process (MDP) is everything external to the agent. It defines how the world responds to the agent’s actions and evolves over time.

At each time step t:

- The agent observes the current state $s_t$.

- It chooses an action $a_t$.

- The environment responds:

  - It transitions to a new state $s_{t+1}$.

  - It emits a reward $r_t$.

This structure is summarized in the diagram shown in Figure 2.

Figure 2.

Reinforcement learning process. Source: Authors’ own elaboration.

Figure 2 represents a reinforcement learning process or a dynamic decision-making system in the context of inventory management. It is divided into four main components, arranged in a circular flow to illustrate the sequence of steps in the process. Each component is represented by a colored circle, and the transitions between them are shown with curved arrows.

The key parameters used in the model are summarized in Table 1.

Table 1.

List of Variables and Notations.

3.1.2. State Representation: Inventory Levels of Components

The state of the system can be represented by a vector of the current stock levels for all components i.

3.1.3. Action Representation: Component Order Quantities

An action represents the decisions regarding the quantities to be ordered at each period t. Each element of the action corresponds to the quantity ordered for one component at the beginning of the period.

The action is thus the vector of quantities ordered for each component i at period t. The initial action space is discrete: the order quantity of each component i can take any integer value between zero and a component-specific upper bound.

3.1.4. Adaptive Action Space Based on Component Importance

To ensure that the algorithm adjusts order quantities to the specific characteristics of each component, the action space must be flexible. A poorly defined action space (i.e., limited or uniform order quantities across components) reduces the algorithm’s adaptability.

To address this, we dynamically adjust the action range of each component, ensuring sufficient diversity in possible order quantities.

Instead of using a fixed set of possible actions, we assign a different quantity range to each component based on its importance, measured by its consumption coefficient and its maximum delivery time. Components with higher consumption and longer delivery times receive wider action ranges, allowing the algorithm to order more or less as needed. This flexibility enhances the algorithm’s ability to optimize stock management.

Scaling the Action Space

The more a component is consumed and the longer it takes to be delivered, the larger its action range should be for better stock management. This can be achieved by adjusting the upper limit of the action space for each component.

Action Space Modeling Logic

Instead of defining a fixed-size discrete space for each component (e.g., from 0 to a common maximum), we can implement an adaptive action space tailored to each component’s characteristics.

Action Space for a Component

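The action space of component i ranges from zero to its estimated requirement, which is computed from the maximum demand, the component’s consumption coefficient, and its maximum delivery time.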

This strategy is cautious, as it ensures that the model accounts for scenarios where demand is at its peak and delivery times are at their longest. By considering only the maximum demand and maximum delivery time, we prevent underestimating needs, even in extreme conditions, by creating an action space that includes all possible situations. In cases of overestimation, the agent will learn an optimal policy that naturally avoids unnecessary actions.
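As a minimal sketch of this scaling logic, the following Python snippet derives one upper bound per component from the maximum demand, the consumption coefficient, and the maximum delivery time. The product form of the bound, the function names, and all numeric values are assumptions made for illustration; they are not taken from the study's parameter table.

```python
def action_upper_bounds(max_demand, consumption, max_lead_time):
    """Return one upper bound per component.

    Assumed form: bound_i = max_demand * c_i * max_lead_time_i.
    """
    return [max_demand * c * l for c, l in zip(consumption, max_lead_time)]


def component_action_space(upper_bound):
    """Discrete order quantities 0, 1, ..., upper_bound for one component."""
    return list(range(upper_bound + 1))


# Hypothetical three-component instance (values are illustrative only).
bounds = action_upper_bounds(
    max_demand=3, consumption=[1, 2, 1], max_lead_time=[2, 3, 1]
)
spaces = [component_action_space(b) for b in bounds]
print(bounds)                    # [6, 18, 3]
print([len(s) for s in spaces])  # [7, 19, 4]
```

In this hypothetical instance, the component with the highest consumption and longest delivery time receives the widest range, which is the behavior the scaling rule is meant to produce.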

Global Action Space

The global action space is the Cartesian product of the component action spaces: a global action specifies one order quantity for every component.

3.1.5. Modeling Transition Dynamics with Random Demand and Lead Times

The transition function in a replenishment planning problem under uncertainty describes how the state of the system evolves from one period to the next. This evolution depends on the current inventory levels, the order quantities decided by the agent, and the realizations of two sources of randomness: the demand D and the delivery lead times. Specifically, the function captures how incoming deliveries (subject to random lead times) and outgoing demand (which is also stochastic) affect the inventory position and outstanding orders over time. It forms the core mechanism through which uncertainty propagates through the system and impacts future decisions.

Formally, the transition function maps the current state, the chosen action, and the realized demand and lead times to the next state.
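One way to write this mapping is sketched below. The notation is assumed here for illustration: $s_t$ is the state (stock levels together with outstanding orders), $a_t$ the order vector, $D_t$ the realized demand, and $L_t$ the vector of realized lead times. The second expression additionally assumes that demand and lead times are independent of each other.

$$
s_{t+1} = f(s_t, a_t, D_t, L_t), \qquad
P(s' \mid s, a) = \sum_{d} \sum_{\ell} P(D = d)\, P(L = \ell)\, \mathbf{1}\{ f(s, a, d, \ell) = s' \}
$$

Under this reading, $f$ is deterministic once the random quantities are realized, so all uncertainty enters through $D_t$ and $L_t$.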

3.1.6. Modeling Demand and Delivery Time Uncertainties

- Let D be a random variable representing the demand for the finished product. It follows a discrete distribution over a finite set of possible demand values, with known associated probabilities.

- The delivery time of component i is likewise a random variable. It follows a discrete probability distribution over a finite set of possible lead times, with known associated probabilities.
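The two sources of randomness described above can be simulated with a few lines of Python. This is a sketch under stated assumptions: the helper `sample_discrete`, the support values, and the probabilities are hypothetical and only illustrate how a discrete distribution with known probabilities can be sampled.

```python
import random


def sample_discrete(values, probabilities, rng):
    """Draw one value from a finite discrete distribution."""
    if abs(sum(probabilities) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return rng.choices(values, weights=probabilities, k=1)[0]


rng = random.Random(0)  # fixed seed so that runs are reproducible

# Hypothetical distributions (not taken from the study).
demand_values, demand_probs = [1, 2, 3], [0.3, 0.5, 0.2]
lead_time_dists = [
    ([1, 2], [0.7, 0.3]),          # component 1
    ([1, 2, 3], [0.5, 0.3, 0.2]),  # component 2
    ([1], [1.0]),                  # component 3 (deterministic lead time)
]

demand = sample_discrete(demand_values, demand_probs, rng)
lead_times = [sample_discrete(v, p, rng) for v, p in lead_time_dists]
print(demand, lead_times)
```

Each component draws from its own distribution, which matches the setting in which lead times are component-specific.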

3.1.7. Inventory Update and Received Quantities Computation

The inventory level of each component i at the end of period t equals its level at the end of the previous period, plus the quantity received during period t, minus the quantity consumed to satisfy the realized demand (the demand multiplied by the consumption coefficient of the component).

The quantity received is the sum of orders placed in previous periods that are delivered in period t. An order for component i placed in period k contributes to this sum only if its realized delivery time is exactly $t - k$. This condition is expressed by an indicator function that is equal to 1 in that case and 0 otherwise.
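The update can be sketched in Python as follows. The sketch assumes the balance $I_{i,t} = I_{i,t-1} + R_{i,t} - c_i D_t$, where $I_{i,t}$ (stock), $R_{i,t}$ (received quantity), and $D_t$ (realized demand) are symbols introduced here for illustration. It also assumes lead times of at least one period and represents each outstanding order by its arrival period. Function and variable names are hypothetical.

```python
def receive_orders(pipeline, t):
    """Sum the quantities arriving in period t and remove them from the pipeline.

    pipeline: list of (arrival_period, quantity) pairs for one component,
    where arrival_period = order period k + realized lead time.
    """
    received = sum(q for arrival, q in pipeline if arrival == t)
    remaining = [(arrival, q) for arrival, q in pipeline if arrival != t]
    return received, remaining


def update_inventory(stock, pipelines, orders, lead_times, demand, consumption, t):
    """One-period update for all components; negative stock denotes a shortage."""
    new_stock, new_pipelines = [], []
    for i, level in enumerate(stock):
        pipeline = list(pipelines[i])
        if orders[i] > 0:
            # The order placed now arrives after its realized lead time.
            pipeline.append((t + lead_times[i], orders[i]))
        received, pipeline = receive_orders(pipeline, t)
        new_stock.append(level + received - consumption[i] * demand)
        new_pipelines.append(pipeline)
    return new_stock, new_pipelines


# Hypothetical two-component example at period t = 2.
stock, pipelines = update_inventory(
    stock=[5, 0], pipelines=[[(2, 4)], []], orders=[0, 6],
    lead_times=[1, 2], demand=2, consumption=[1, 2], t=2,
)
print(stock)      # [7, -4]
print(pipelines)  # [[], [(4, 6)]]
```

Storing the arrival period with each order plays the role of the indicator function: an order is counted in period t exactly when its order period plus its lead time equals t.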

3.1.8. Reward Function: Cost-Based Definition

The reward for a period t is defined as the negative of the total cost incurred in that period, so that maximizing the reward minimizes the cost.

3.1.9. Cost Structure by Item Type

The total cost at each decision step is composed of multiple cost elements, where each cost type (e.g., storage, shortage, zero-inventory penalty, and ordering) is applied to all components based on their respective inventory levels and ordering decisions.

- Storage cost is the cost associated with maintaining component inventory in the warehouse. It is calculated from the number of units of each component in stock and the unit storage cost. The storage cost of a component is counted only when its stock level is positive, that is, when the component is in stock.

- Shortage cost is calculated from the inventory levels of the components (specifically, the number of missing units) and the shortage cost per component unit. It represents the cost of the unavailability of components required to produce the finished product, which can result in stockouts and lost sales. A component shortage may therefore disrupt the production process. The shortage cost of component i is counted only when its stock level is negative; a negative stock level indicates an inability to meet the production requirements of the finished product.

- Empty inventory cost (zero inventory): represents a situation where replenishment is needed quickly. It is not a complete shortage (because there is still time to react), but it is a situation that could quickly lead to a shortage. This penalty is incurred when the stock level of a component is exactly zero.

- Order launch cost: The order launch cost (or order placement cost) refers to all the expenses associated with issuing and processing an order. It is paid each time an order for component i is launched in period t, that is, whenever the ordered quantity is strictly positive.
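The four cost elements listed above can be combined in a short Python sketch. The parameter names (`holding`, `shortage`, `zero_penalty`, `order_cost`) and the numeric values are hypothetical; the division by 10,000 follows the normalization reported in the results section, and the reward is taken as the negative of the cost.

```python
def period_cost(stock, orders, holding, shortage, zero_penalty, order_cost):
    """Total cost for one period, summed over components.

    All cost parameters are per-component lists (illustrative names).
    """
    total = 0.0
    for i, level in enumerate(stock):
        if level > 0:
            total += holding[i] * level      # storage cost on positive stock
        elif level < 0:
            total += shortage[i] * (-level)  # shortage cost on missing units
        else:
            total += zero_penalty[i]         # zero-inventory penalty
        if orders[i] > 0:
            total += order_cost[i]           # fixed cost per launched order
    return total


def reward(cost, scale=10_000):
    """Negative cost, divided by a scaling constant for numerical stability."""
    return -cost / scale


# Hypothetical three-component example.
cost = period_cost(
    stock=[7, -4, 0], orders=[0, 6, 0],
    holding=[1, 1, 1], shortage=[10, 10, 10],
    zero_penalty=[5, 5, 5], order_cost=[20, 20, 20],
)
print(cost)          # 72.0
print(reward(cost))  # -0.0072
```

Here the cost is 7 (storage) + 40 (shortage) + 20 (one order) + 5 (zero-inventory penalty), which shows how the relative weights determine which term dominates the reward signal.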

3.1.10. Total Cost Function

The total cost in period t is the sum, over all components, of the storage, shortage, zero-inventory, and order launch costs defined above.

3.1.11. Definition of the Optimal Ordering Policy

The optimal ordering policy is a function that determines the quantity to order in each period so as to minimize the expected total cost over the horizon T.

A policy $\pi$ maps each state to the quantities to order. The objective is to find the policy $\pi^*$ that minimizes the expected total cost across all periods $t = 1, \dots, T$. The policy thus defines how to choose the order quantities based on the current state of the environment (e.g., current inventory levels and lead time probabilities).
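In assumed notation, with $s_t$ the state in period $t$, $\pi$ a policy, and $C_t$ the total cost incurred in period $t$ (all symbols introduced here for illustration), this objective can be sketched as:

$$
\pi^* = \arg\min_{\pi} \; \mathbb{E}\left[ \sum_{t=1}^{T} C_t\bigl(s_t, \pi(s_t)\bigr) \right]
$$

If the reward is the negative period cost, $r_t = -C_t$, the same policy maximizes $\mathbb{E}\left[\sum_{t=1}^{T} r_t\right]$. A constant positive scaling of the reward does not change the minimizer.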

3.1.12. Objective

Maximize the cumulative reward R over the planning horizon, which is equivalent to minimizing the total cost, by adapting the ordering policy. The model takes into account the stochastic demand for the finished product and the random lead times of components, each with a specified probability distribution, and seeks the order quantities that minimize inventory, stockout, and order release costs.

4. DQN (Deep Q-Network)

Deep reinforcement learning (deep RL) is a combination of reinforcement learning (RL) and deep neural networks (deep learning) [41]. It allows an agent to learn optimal strategies in complex environments using powerful nonlinear approximations. Deep RL can solve complex problems (games, robotics, NLP) thanks to the power of deep networks. Major challenges (instability, divergence) have been partially addressed by techniques such as DQN [42].

4.1. Structure of the DQN Algorithm

The implemented Deep Q-Network (DQN) algorithm is structured around four core components. The first is the Replay Buffer (Algorithm 1), which stores past transitions in the form (state, action, reward, next_state, done) and enables the agent to learn from a randomized mini-batch of experiences, thereby reducing temporal correlation and stabilizing learning. The second component is the Q-Network (Algorithm 2), a feedforward neural network consisting of an input layer, a hidden layer with ReLU activations, and an output layer that approximates Q-values for each possible action. The third module is the DQN Agent (Algorithm 3), which initializes the Q-Network and Target Network, sets essential hyperparameters (e.g., learning rate, discount factor $\gamma$, and exploration rate $\varepsilon$), and manages the optimization and interaction with the replay buffer. Finally, the Action Selection (Algorithm 4) mechanism adopts an epsilon-greedy strategy to balance exploration and exploitation, selecting either a random action with probability $\varepsilon$ or the action with the maximum estimated Q-value. This architecture provides a modular and efficient foundation for deep reinforcement learning in environments with discrete and multi-dimensional action spaces.

Algorithm 1. Replay Buffer.

Algorithm 2. Q-Network Forward Pass.

Algorithm 3. DQN Agent.

Algorithm 4. Select Action.
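As a hedged illustration of the replay buffer and epsilon-greedy action selection described above, the following sketch uses only the Python standard library. It is not the study's implementation: the class and function names are illustrative, and Q-values are passed in as a plain list rather than produced by a neural network.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-capacity store of (state, action, reward, next_state, done) tuples."""

    def __init__(self, capacity):
        self.memory = deque(maxlen=capacity)  # oldest transitions are dropped first

    def push(self, state, action, reward, next_state, done):
        self.memory.append((state, action, reward, next_state, done))

    def sample(self, batch_size, rng=random):
        # Uniform sampling without replacement reduces temporal correlation.
        return rng.sample(list(self.memory), batch_size)

    def __len__(self):
        return len(self.memory)


def select_action(q_values, epsilon, rng=random):
    """Epsilon-greedy choice over a list of Q-values; returns an action index."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))  # explore
    return max(range(len(q_values)), key=lambda a: q_values[a])  # exploit


buffer = ReplayBuffer(capacity=3)
for step in range(4):  # the fourth push evicts the first transition
    buffer.push([step], 0, -0.01, [step + 1], False)
batch = buffer.sample(2)
greedy = select_action([0.1, 0.9, 0.3], epsilon=0.0)
print(len(buffer), len(batch), greedy)  # 3 2 1
```

With `epsilon=0.0` the selection is purely greedy, while `epsilon=1.0` yields a uniformly random action; training schedules typically decay the value between these extremes.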

4.2. Multi-Space DQN Architecture for Stochastic Assembly Systems

4.2.1. Motivation and Contribution

In traditional deep Q-network (DQN) implementations, a fixed and uniform action space is often assumed across all decisions. However, in stochastic assembly systems, such an assumption is inadequate due to the heterogeneity of components—specifically, variations in lead times and consumption rates. Through empirical analysis, we observed that using a single action space across components results in convergence towards suboptimal, overly generalized policies that fail to account for item-specific dynamics.

4.2.2. Proposed Method: Component-Specific Action Spaces

To overcome this limitation, we propose a novel DQN architecture that assigns a separate action space to each component i. The maximum admissible order quantity of each component is computed from the structural characteristics of the system, namely the maximum demand, the component’s consumption coefficient, and its maximum lead time.

This formulation ensures that each component is given a sufficiently expressive and structurally consistent decision space, enabling the learning algorithm to adapt replenishment strategies under uncertainty in a more targeted and efficient manner.
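When each component has its own number of discrete order quantities, a joint action must be mapped to and from the index used by a Q-value output. One possible way to do this, assumed here purely for illustration since the output layout of the network is not specified in this section, is a mixed-radix encoding. The function names and sizes below are hypothetical.

```python
def decode_action(index, sizes):
    """Map a flat action index to one order quantity per component.

    sizes[i] is the number of discrete order quantities of component i.
    """
    total = 1
    for size in sizes:
        total *= size
    if not 0 <= index < total:
        raise ValueError("index out of range")
    quantities = []
    for size in reversed(sizes):
        index, q = divmod(index, size)
        quantities.append(q)
    return list(reversed(quantities))


def encode_action(quantities, sizes):
    """Inverse of decode_action."""
    index = 0
    for q, size in zip(quantities, sizes):
        index = index * size + q
    return index


sizes = [7, 19, 4]  # hypothetical per-component action-space sizes
index = encode_action([2, 5, 3], sizes)
print(index)                        # 175
print(decode_action(index, sizes))  # [2, 5, 3]
```

With these sizes the joint space contains 7 × 19 × 4 = 532 actions, which illustrates why the number of joint actions grows quickly as components are added.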

4.2.3. Novelty and Differentiation

Unlike existing deep reinforcement learning (DRL) approaches that rely on a global policy over a shared action space, our method introduces a unified multi-agent structure within the DQN framework, where each component is governed by a distinct policy defined over its own scaled action space. This design allows the learning process to capture component-specific characteristics, such as delay risks or high consumption frequency, thereby avoiding systematic underordering. To the best of our knowledge, this form of component-wise action space scaling has not been previously applied in the context of RL-based inventory control for assembly systems, making it a novel and valuable contribution to the literature.

4.2.4. Summary of Empirical Design Validation

Through a series of ablation and sensitivity analyses, we validated the effectiveness of our heuristic for defining the component-wise action space in the Multi-Space DQN architecture. Alternative formulations based on mean values or exaggerated scaling led to suboptimal behavior, including frequent stockouts, overordering, or unstable learning. In contrast, our proposed heuristic, which bounds the order quantity of each component using the maximum demand, the consumption coefficient, and the maximum lead time, offered the best trade-off between decision space expressiveness and learning efficiency. It allowed the agent to maintain stable training, reduce stockouts, and avoid excessive holding costs. These empirical evaluations confirm that the heuristic is not only computationally efficient but also essential for ensuring robust policy learning under uncertainty.

4.3. Comprehensive Comparison of Inventory Planning Methods

Table 2 compares classical models, optimization methods, machine learning approaches, and metaheuristics for inventory and replenishment planning.

Table 2.

Comparison of Inventory Planning Methods.

Table 3 provides a comprehensive summary of the key parameters used in both the inventory environment and the deep Q-network (DQN) agent, outlining their values and respective roles in the learning and decision-making processes.

Table 3.

Key parameters used in the simulation and learning processes. All parameters were empirically selected and tuned based on the system’s dynamics and performance during preliminary experiments. The parameter choices reflect standard practices in DRL for inventory control and are fine-tuned for the context of the study.

We deliberately used a small-scale experimental setup with three components and simplified parameter values to maintain clarity, interpretability, and computational feasibility. This choice enabled us to isolate and analyze the learning behavior of the DQN agent, particularly in terms of cost trade-offs and policy convergence. The underlying simulation environment and DQN framework nevertheless generalize to larger instances. Scaling up is computationally intensive because of the growth in state and action spaces, and it may therefore require more powerful hardware. The current model architecture is designed to support such extensions, and larger-scale testing is a planned direction for future work.

5. Results and Discussion

The environment parameters define the operational characteristics of the inventory system, such as the number of components, penalty and holding costs, demand and lead time uncertainties, and the structure of consumption. These parameters ensure the simulation accurately reflects the complexities of real-world supply chains. The agent parameters govern the learning process, including the neural network architecture, learning rate, exploration behavior, and training configuration. The inclusion of a replay buffer further enhances learning stability by breaking temporal correlations in the training data. The DQN algorithm is employed to learn optimal ordering policies over time. By interacting with the environment through episodes, the agent receives feedback in the form of rewards, which reflect the balance between minimizing shortage penalties and storage costs. To facilitate the simulation and make the analysis more interpretable, simple parameter values were used (see Table 3), including limited lead time possibilities and a small range of demand values. Additionally, the reward function was normalized (reward divided by 10,000) to simplify numerical computations and stabilize the learning process. Despite this simplification, the implemented environment and reinforcement learning algorithms are fully generalizable: they can handle any number of components (n), multiple lead time scenarios, and a range of demand values (m), making the model adaptable to more complex and realistic settings. However, scaling up to larger instances requires significant computational resources, as the state and action spaces grow exponentially. Therefore, a powerful machine is recommended to run simulations efficiently when moving beyond the simplified test case.

The algorithm is developed in Python 3.11.13, which is particularly well-suited for research in reinforcement learning and operations management due to its expressive syntax and the availability of advanced scientific libraries. The implementation employs NumPy 2.0.2 for efficient numerical computation, particularly for manipulating multi-dimensional arrays and performing vectorized operations that are essential for simulating the environment and computing rewards. Visualization of training performance and inventory dynamics is facilitated through Matplotlib 3.10.0, which provides robust tools for plotting time series and comparative analyses. The core reinforcement learning model is implemented using PyTorch 2.6.0+cu124, a state-of-the-art deep learning framework that supports dynamic computation graphs and automatic differentiation. PyTorch is used to construct and train the deep Q-network (DQN), manage the neural network architecture, optimize parameters through backpropagation, and handle experience replay to stabilize the learning process. Collectively, these libraries provide a powerful and flexible platform for modeling and solving complex inventory optimization problems under stochastic conditions.

The formulation and relative weighting of cost components critically affect the learning behavior and convergence of the DQN agent. To maintain numerical stability, we normalized the reward function by dividing it by 10,000. Empirical results showed that overemphasizing shortage costs led to excessive inventory, while high holding cost weights increased shortages. Large ordering costs caused delayed or infrequent ordering. These findings highlight the significant impact of reward design on policy outcomes. Although we selected weights based on empirical performance, a formal sensitivity analysis remains a valuable direction for future research.
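The reward normalization described above can be sketched as follows. This is a minimal illustration: the function name and the per-step cost values are hypothetical, and only the sign convention (negative total cost) and the division by 10,000 are taken from the text.

```python
def normalized_reward(holding_cost, shortage_cost, ordering_cost, scale=10_000.0):
    """Negative total cost divided by a constant scale factor."""
    total_cost = holding_cost + shortage_cost + ordering_cost
    return -total_cost / scale


# Hypothetical per-step costs, chosen only for illustration
r = normalized_reward(holding_cost=1200.0, shortage_cost=5000.0, ordering_cost=300.0)
print(r)  # -0.65
```

Dividing by a constant leaves the ranking of policies unchanged. It only shrinks the magnitude of the targets the Q-network has to fit.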

With this reward structure and cost weighting scheme established, we proceed to evaluate the agent’s learning performance and policy behavior during training.

5.1. Performance and Policy Evaluation

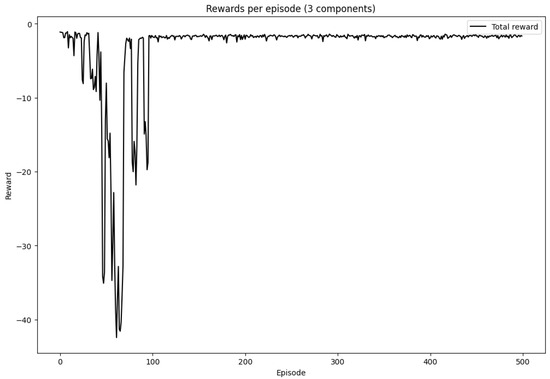

Figure 3. Average reward per episode during training. The reward function is based on the negative of the total cost (holding, shortage, and ordering) and is normalized by dividing by 10,000 for numerical stability. The learning curve demonstrates convergence over time.

Figure 3.

Rewards per episode. Source: Generated by authors using experimental data.

5.2. Quantitative Performance Summary

To complement the qualitative learning curves, we conducted a rigorous quantitative evaluation of our proposed DQN-based approach. We computed key statistical metrics over 500 independent training runs, including:

- Mean total cost (i.e., cumulative negative reward),

- Average number of stockouts per episode,

- Global service level,

- Standard deviation of total cost (to capture variability),

- Total number of training episodes,

- Training time (minutes),

- Component-wise availability.

Table 4 summarizes these results, providing a comprehensive assessment of performance and robustness.

Table 4.

Quantitative Performance Metrics of the Proposed DQN Approach.

These quantitative results confirm that our approach maintains a high service level while effectively balancing stockouts and inventory costs, demonstrating both reliability and robustness.

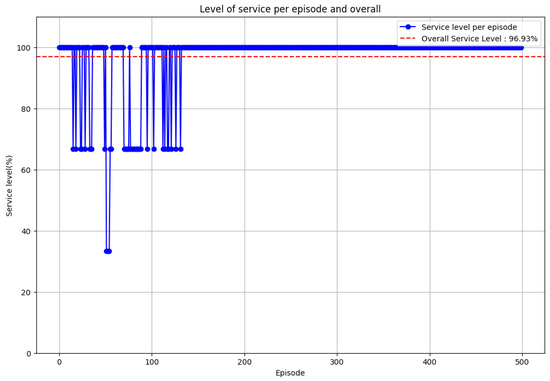

5.3. Global Service Level Plot

Figure 4 illustrates the evolution of the global service level over training episodes, further emphasizing the stability and effectiveness of the learned policy.

Figure 4.

Global service level over training episodes. Source: Generated by authors using experimental data.

5.4. Policy and Inventory Evolution

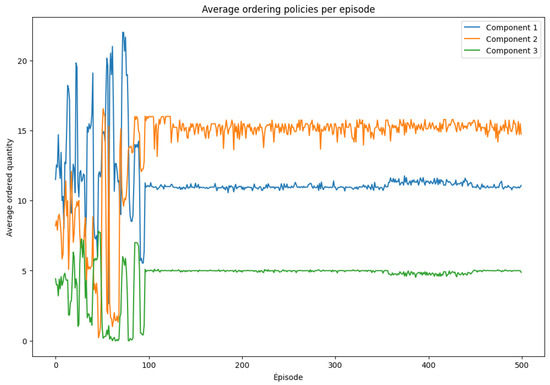

Figure 5 illustrates the agent’s learned ordering policies over training episodes. Early in training, the ordered quantities fluctuate significantly, reflecting the exploration phase of learning. As training progresses, the policy converges toward more stable and consistent ordering behaviors. Notably, Component 2 is ordered most frequently and in larger quantities, indicating its higher importance or greater risk of shortage. In contrast, Component 3 is ordered less often, which may be attributed to lower consumption rates or more favorable lead times.

Figure 5.

Average ordering policies per episode. Source: Generated by authors using experimental data.

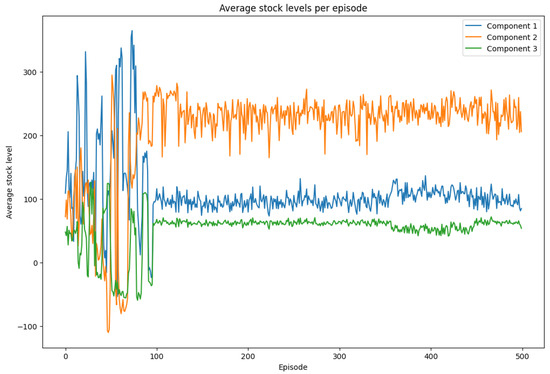

The evolution of average stock levels is shown in Figure 6. Initially, stock levels exhibit considerable volatility, including frequent shortages (negative inventory values). Over time, the agent learns to anticipate demand and lead time uncertainties more effectively, resulting in smoother inventory trajectories. The final stock levels, which are notably higher for Component 2, underscore its strategic importance and confirm the ordering strategy observed in Figure 5.

Figure 6.

Average stock levels per episode. Source: Generated by authors using experimental data.

Together, these results clearly demonstrate the capability of the DQN algorithm to adaptively optimize inventory policies under uncertainty. The convergence of rewards, consistency in ordering patterns, and stabilization of stock levels collectively validate the effectiveness and robustness of the learned policy.

5.5. Computational Efficiency and Scalability

Our experiments were run through Google Colab from a MacBook Air M1 (2020; Apple Inc., Cupertino, CA, USA) with 8 GB RAM and a 256 GB SSD. Training a three-component system for 500 episodes of 50 steps each takes approximately 7 min, demonstrating computational efficiency on moderate hardware for small-scale problems. However, scaling up to more components, more complex lead time distributions, and more varied demand significantly enlarges the action space, increasing training time and memory demands. This highlights a practical trade-off between model complexity and available computational resources.

5.6. Comparison of Decision Latency Across Policies

We aim to quantify and compare the decision latency of the proposed Deep Q-Network (DQN) policy with three classical replenishment strategies: the (Q, R) policy, the (s, S) policy, and the Newsvendor model. Decision latency is defined here as the time elapsed from observing the system state to issuing a replenishment action. This metric is critical in real-time supply chain systems where decisions must be made quickly in response to dynamic changes in inventory levels and demand.

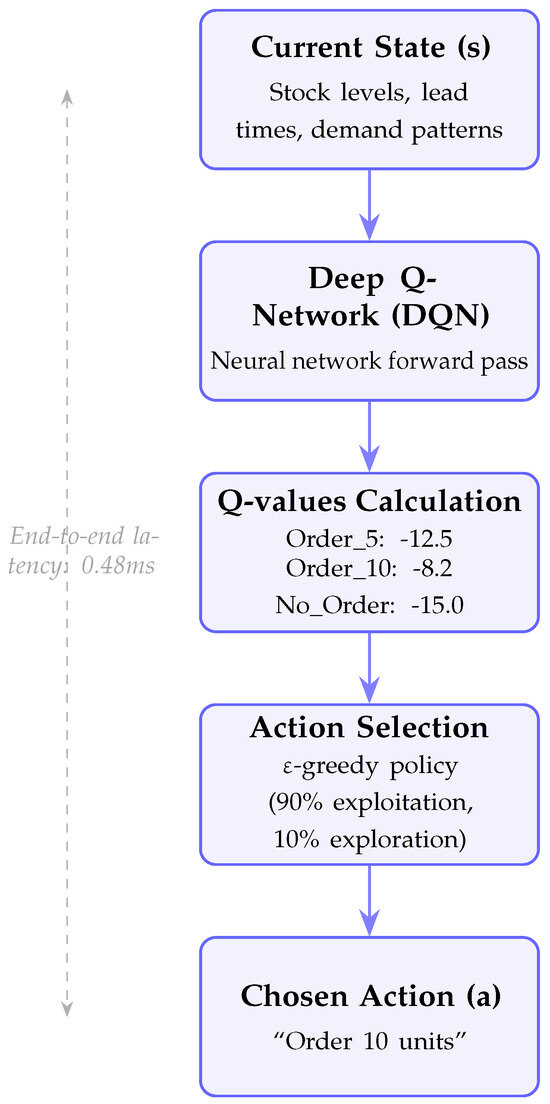

5.6.1. DQN-Based Inventory Replenishment Decision Process

Figure 7 outlines the DQN’s decision-making process for inventory control: it takes the current state as input, computes Q-values via a forward pass, selects the action with the highest Q-value, and outputs a replenishment decision. This inference process is fast (e.g., 0.48 ms), enabling real-time application in dynamic supply environments.

Figure 7.

DQN-based inventory replenishment decision process. The system takes the current state (e.g., stock levels, demand) as input, computes Q-values for possible actions, and selects the optimal replenishment decision (e.g., order quantity). The entire process is executed in near real-time, making it suitable for dynamic inventory control. Source: Authors’ own elaboration.

5.6.2. Policy Logic and Latency Source

Table 5 presents a qualitative comparison of the policies under consideration, including their decision logic and the quantity measured for latency. While classical policies rely on simple threshold rules or closed-form expressions, the DQN requires a neural network forward pass, which may introduce non-negligible computational delay.

Table 5.

Policy Decision Logic and Measured Quantity.

5.6.3. Measurement of Inference Time

The inference time for each policy was measured over 500 runs using time.perf_counter(), and only the computation time of the decision logic was considered (excluding simulation steps and reward calculation). For the DQN policy, the action was computed by executing the forward pass through the trained neural network using the select_action function, integrated within our custom simulation environment, InventoryMDPEnv. This environment was specifically developed for this research to simulate stochastic assembly systems with uncertain demand and lead times. It allows realistic evaluation of inference latency within a dynamic and controlled decision-making context. To ensure fairness, all policies, including (Q, R), (s, S), and Newsvendor, were also executed under the same environment, taking identical state inputs and returning replenishment actions. This guarantees a consistent basis for latency measurement across all strategies.
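The measurement procedure can be sketched as below. The helper `measure_latency_ms` and the stand-in `threshold_policy` are hypothetical names introduced for illustration. The actual experiments time `select_action` and the baseline policies inside InventoryMDPEnv, which are not reproduced here.

```python
import statistics
import time


def measure_latency_ms(policy, state, runs=500):
    """Return mean and standard deviation of decision time in milliseconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        policy(state)  # only the decision logic is timed
        samples.append((time.perf_counter() - start) * 1000.0)
    return statistics.mean(samples), statistics.stdev(samples)


def threshold_policy(state):
    # Hypothetical stand-in policy: order 3 units when stock is below 2
    return [3 if stock < 2 else 0 for stock in state]


mean_ms, std_ms = measure_latency_ms(threshold_policy, state=[1, 4, 0])
```

Timing only the call to the policy, and not the environment step, keeps the comparison focused on decision logic. Passing every policy the same state keeps the inputs identical across strategies.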

Inference Diagnostic Example: Table 6 summarizes representative outputs from the DQN agent during a single inference step (one complete episode with max-steps = 1, i.e., one run): the policy decision latency, the resulting inventory actions under the initial stock conditions, the selected action index, and its corresponding Q-value.

Table 6.

DQN inference diagnostic (3-component system).

Table 7 summarizes the inference time results collected over 500 independent runs using our custom InventoryMDPEnv simulation environment.

Table 7.

Decision latency comparison for 3-component system with action space MultiDiscrete ([27, 18, 9]).

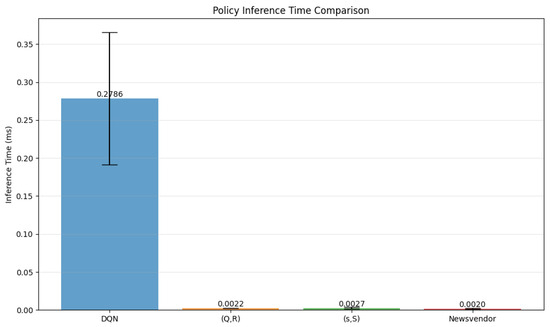

Analysis: The DQN policy exhibits significantly higher inference times than the classical threshold-based policies because of the computational overhead of neural network forward passes over a large action space. For the 3-component system, the average DQN inference time is approximately 0.27 ms, about 80–120 times that of the rule-based methods ((Q, R), (s, S), and Newsvendor). Nevertheless, this remains well within practical real-time constraints for inventory replenishment decisions.

Figure 8 illustrates that the DQN policy incurs a noticeably higher inference time relative to classical inventory management approaches.

Figure 8.

Comparison of policy inference times (in milliseconds) for the DQN agent and the classical inventory management policies ((Q, R), (s, S), and Newsvendor). Source: Generated by authors using experimental data.

5.6.4. Reasons for DQN Inference Delay

Despite being suitable for real-time decision-making, the DQN policy exhibits higher inference latency than classical models due to several factors:


- Large Action Space

The DQN outputs a Q-value for every possible action in the discrete action space. In our system, the action space is defined using the following rule:

This results in a MultiDiscrete action space, where the total number of possible joint actions is:

Table 8 shows an example of how the action space size grows combinatorially based on component-specific parameters.

Table 8.

Action Space Size for 3-Component System.
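As a worked example of this combinatorial growth, the sketch below computes the number of joint actions for the MultiDiscrete([27, 18, 9]) space reported for the 3-component system and decodes a flat action index into per-component sub-actions. The flat-index encoding (last component varying fastest) and the function names are assumptions made for illustration. The text does not state how the implementation indexes joint actions.

```python
import math


def joint_action_count(sizes):
    """Number of joint actions in a MultiDiscrete space."""
    return math.prod(sizes)


def decode_action(index, sizes):
    """Map a flat index to one sub-action per component (last component varies fastest)."""
    action = []
    for size in reversed(sizes):
        index, sub = divmod(index, size)
        action.append(sub)
    return list(reversed(action))


sizes = [27, 18, 9]
total = joint_action_count(sizes)
print(total)                            # 4374
print(decode_action(0, sizes))          # [0, 0, 0]
print(decode_action(total - 1, sizes))  # [26, 17, 8]
```

Adding a fourth component with, say, 10 ordering levels would multiply the count by 10, which is why the joint action space grows so quickly with the number of components.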


- Neural Network Output Size

Our Q-network architecture has an input layer of size n, two hidden layers with 128 neurons each, and an output layer with one neuron per action in the discrete action space.
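To give a sense of how the output layer dominates the network size, the following sketch counts the weights and biases of a fully connected network with two hidden layers of 128 neurons. Two values are assumptions: an input size of 3 (one state feature per component) and an output size of 27 × 18 × 9 = 4374 (one output per joint action of the MultiDiscrete([27, 18, 9]) space).

```python
def mlp_parameter_count(layer_sizes):
    """Weights plus biases of a fully connected network."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


n_inputs = 3             # assumption: one state feature per component
n_actions = 27 * 18 * 9  # assumption: one output per joint action (4374)
params = mlp_parameter_count([n_inputs, 128, 128, n_actions])
print(params)  # 581270
```

Under these assumptions the last layer alone holds 564,246 of the 581,270 parameters (about 97%), so most of the forward-pass cost comes from producing one Q-value per joint action.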


- CPU-Only Inference

Inference is run on CPU (e.g., MacBook Air or Google Colab CPU backend), without GPU acceleration. PyTorch forward passes on CPU are typically 5–20× slower than on GPU for high-dimensional output layers.


- Simulation and System-Level Factors

Extra delays may stem from the simulation environment InventoryMDPEnv (our custom simulator), which handles transitions, order queues, and lead-time sampling. Background CPU load and memory garbage collection also introduce runtime variance.

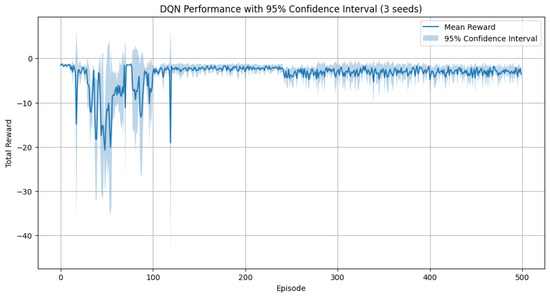

5.7. Evaluation with Multiple Random Seeds and Confidence Metrics

To ensure the robustness, reproducibility, and generalizability of our proposed Deep Q-Network (DQN) policy, we conducted all experiments using three independent random seeds: 42, 123, and 999. Each seed initialized the random number generators for NumPy, PyTorch, and Python’s random module, thereby controlling the initialization of network weights, environment behavior, and replay buffer sampling.

For each seed, the DQN agent was trained from scratch and evaluated over a fixed number of episodes. After aggregating the results from all seeds, we computed both central performance metrics and 95% confidence intervals for each episode using the following formulation:
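As a minimal sketch, assuming the normal-approximation interval $\bar{x} \pm 1.96\, s/\sqrt{k}$ over $k$ seeds, the per-episode statistics can be computed as follows. The function name and the sample rewards are hypothetical.

```python
import math
import statistics


def mean_and_ci95(values, z=1.96):
    """Sample mean and half-width of a normal-approximation 95% interval."""
    mean = statistics.mean(values)
    half_width = z * statistics.stdev(values) / math.sqrt(len(values))
    return mean, half_width


# Hypothetical rewards of one episode under seeds 42, 123, and 999
rewards = [-2.0, -1.8, -2.2]
mean, half = mean_and_ci95(rewards)
```

With only three seeds, a Student-t critical value (about 4.30 for two degrees of freedom) would give a noticeably wider interval than z = 1.96, so the choice of multiplier matters when interpreting the bands.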

This approach provides statistically meaningful insights into the stability and variability of learning outcomes due to stochastic factors in both environment and policy optimization. In addition to cumulative reward, we evaluated the DQN policy using the following operational performance metrics:

- Service Level: the percentage of component units available to satisfy assembly demand without shortage. The service level percentage is calculated as: where:


- : Stock vector at timestep t (for N components)

- Total Stockouts: the number of timesteps at which at least one component was unavailable when required. The stockout count is defined as:
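One possible reading of these two metrics is sketched below. It assumes that a shortage appears as a negative entry in the stock vector and that the service level is the share of (timestep, component) entries with non-negative stock. Both function names and the sample stock history are hypothetical.

```python
def service_level_pct(stock_history):
    """Share of (timestep, component) entries with non-negative stock, in percent."""
    entries = [s for stock in stock_history for s in stock]
    return 100.0 * sum(s >= 0 for s in entries) / len(entries)


def total_stockouts(stock_history):
    """Number of timesteps at which at least one component is short."""
    return sum(any(s < 0 for s in stock) for stock in stock_history)


# Hypothetical stock vectors for N = 3 components over 4 timesteps
history = [[2, 1, 0], [1, -1, 0], [3, 2, 1], [-2, -1, 4]]
print(service_level_pct(history))  # 75.0
print(total_stockouts(history))    # 2
```

Note that the two metrics count differently: the last timestep has two components short, which lowers the service level twice but counts as a single stockout.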

DQN Performance over Seeds

The results are summarized below. Table 9 presents the evaluation metrics of the DQN policy, including service level and total stockouts, averaged over three random seeds.

Table 9.

DQN Evaluation Metrics (averaged over 3 seeds).

Figure 9 illustrates the DQN policy performance along with 95% confidence intervals across three different training seeds.

Figure 9.

DQN Performance with 95% Confidence Interval (3 seeds). Source: Generated by authors using experimental data.

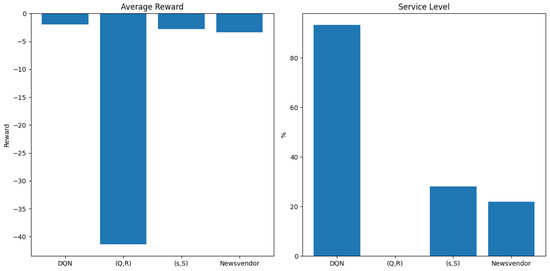

5.8. Baseline Policy Comparison

To benchmark the effectiveness of the learned DQN policy, we compared it with three classical inventory control strategies:

1. (Q, R) Policy (Quantity-Reorder Point): A continuous review policy that orders a fixed quantity Q whenever inventory drops below the reorder point R. Our implementation uses component-specific parameters (Q = [3, 3, 2], R = [2, 2, 1]), ordering the predetermined quantity for each component when stock falls below its reorder threshold.

2. (s, S) Policy (Min-Max): A periodic review policy that orders up to level S when inventory falls below s. We implemented this with s = [1, 1, 1] and S = [4, 4, 3], triggering replenishment to the target level S for each component when current stock is below its minimum threshold s.

3. Newsvendor Policy (Optimal Quantile Rule): A single-period model that determines optimal order quantities based on demand distribution quantiles. Our simplified implementation maintains target inventory levels [2, 2, 2], ordering exactly enough to reach these predetermined targets regardless of demand distribution.

The policies were evaluated under identical simulation settings, using the same cost structure, lead time distributions, and demand distribution as the DQN agent.

Key differences in implementation approach:

- The (Q, R) policy makes independent per-component decisions when thresholds are crossed.

- The (s, S) policy coordinates batch replenishment across components at periodic intervals.

- The Newsvendor policy maintains fixed target inventory levels per component.

- All baseline policies are static and hand-tuned, whereas the DQN policy learns adaptively from the environment.
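The three baseline rules can be sketched with the parameter values given above. The function names are hypothetical, and the sketch assumes decisions depend only on on-hand stock. Whether the actual implementation also accounts for orders already in transit is not stated.

```python
def qr_policy(stock, Q=(3, 3, 2), R=(2, 2, 1)):
    """Order the fixed quantity Q_i when stock_i is below the reorder point R_i."""
    return [q if s < r else 0 for s, q, r in zip(stock, Q, R)]


def ss_policy(stock, s_min=(1, 1, 1), S_max=(4, 4, 3)):
    """Order up to S_i when stock_i is below s_i."""
    return [S - x if x < s else 0 for x, s, S in zip(stock, s_min, S_max)]


def target_policy(stock, target=(2, 2, 2)):
    """Simplified Newsvendor rule: order exactly enough to reach the target."""
    return [max(t - x, 0) for x, t in zip(stock, target)]


stock = [0, 2, 1]
print(qr_policy(stock))      # [3, 0, 0]
print(ss_policy(stock))      # [4, 0, 0]
print(target_policy(stock))  # [2, 0, 1]
```

For the same stock vector the three rules already disagree on both which components to reorder and how much, which is part of what the comparison in Table 10 captures.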

Table 10 presents a comparative summary of the DQN and baseline inventory policies in terms of reward, service level, stockouts, and decision latency.

Table 10.

Policy Comparison.

Figure 10 compares the DQN policy with three classical baselines in terms of average reward and service level.

Figure 10.

Comparison of DQN, (Q, R), (s, S), and Newsvendor policies in terms of average reward (top) and service level (bottom). The DQN policy achieves the highest average reward (−1.93) and service level (93.24%), while (Q, R) performs poorly across both metrics. Results are aggregated over 500 episodes with a max-step of 50 per episode. Source: Generated by authors using experimental data.

These results demonstrate that the DQN-based replenishment policy significantly outperforms classical baselines in terms of service level and stockout reduction, albeit at the cost of slightly higher inference time. This trade-off remains acceptable in real-time production settings where high service availability is critical.

5.9. Impact of Lead Times on Ordered Quantities

When a component has a longer and more uncertain lead time, the agent tends to adapt its ordering strategy to mitigate the risk of stockouts. This often results in placing orders more frequently in anticipation of potential delays. Additionally, the agent may choose to maintain a higher inventory level as a buffer, ensuring that production is not interrupted due to late deliveries.

Table 11 presents a detailed analysis of the relationship between each component’s consumption level, its lead time uncertainty, and the resulting ordering behavior by the agent. Components with higher consumption but more reliable lead times, such as , allow for stable and moderate ordering. In contrast, components like and especially , which are subject to longer and more uncertain delivery delays, require the agent to compensate by increasing order frequency. This strategic adjustment helps to avoid shortages despite the variability in supplier lead times. The table summarizes these insights for each component.

Table 11.

Analysis of Order Quantities and Lead Times for Each Component.

To better understand the agent’s ordering behavior, Table 12 addresses key questions regarding the observed stock levels for each component. Although one might expect ordering decisions to align directly with the consumption coefficients, this is not always the case. In practice, the agent adjusts order quantities and stock levels by taking into account both the demand and the uncertainty in lead times. As shown below, components with a higher risk of delivery delays tend to be stocked more heavily, even if their consumption coefficient is relatively low, while components with reliable lead times require less buffer stock.

Table 12.

Explanation of Observed Results.

Summary of Effects

Final Observation: The agent adjusts decisions based on lead time risks, not just consumption rates. Table 13 summarizes the lead time characteristics and the corresponding agent strategies for each component, highlighting how uncertainty in delivery impacts stock levels.

Table 13.

Summary of lead time effects on stock levels.

5.10. Impact of Uncertain Demand on Order Quantities

The expected demand for the finished product is:

Table 14 presents the expected demand per component, calculated by multiplying the consumption coefficients by the average demand.

Table 14.

Expected Demand per Component.

This suggests that, in an ideal case without lead time risks, the order quantities should follow the ratio:
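A short numerical sketch shows why the ideal ratio mirrors the consumption coefficients. The demand distribution and the coefficients below are hypothetical values chosen for illustration and are not the ones used in the experiments.

```python
def expected_value(distribution):
    """Mean of a discrete distribution given as {value: probability}."""
    return sum(value * prob for value, prob in distribution.items())


# Hypothetical demand distribution and consumption coefficients
demand_pmf = {1: 0.25, 2: 0.5, 3: 0.25}
coefficients = [2, 3, 1]

mean_demand = expected_value(demand_pmf)
per_component = [a * mean_demand for a in coefficients]
print(mean_demand)    # 2.0
print(per_component)  # [4.0, 6.0, 2.0]
```

Because every component's expected requirement is its coefficient times the same mean demand, the requirements stand in the ratio 2:3:1 of the coefficients themselves. Departures from that ratio in the learned policy therefore point to lead time or cost effects.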

5.11. Variability in Demand and Its Effect

In addition to lead time uncertainties, the agent must also consider variability in demand and cost-related trade-offs when determining order quantities. The decision-making process is not only influenced by the likelihood of delayed deliveries but also by how demand fluctuates over time and how different types of costs interact. Table 15 outlines the key factors that affect the agent’s ordering behavior and explains their respective impacts on the optimal policy formulation.

Table 15.

Factors Influencing the Optimal Ordering Policy.

5.12. Interplay Between Demand Uncertainty and Lead Time Risks

If the observed order quantities do not strictly follow the expected pattern , this may be due to the agent anticipating delivery delays and adjusting order sizes accordingly. Such adjustments also reflect an effort to minimize shortage costs, potentially resulting in overstocking components with uncertain lead times. Additionally, the agent may adopt a dynamic policy that evolves over time in response to past shortages, modifying future decisions based on observed system performance. Demand uncertainty forces the agent to carefully balance risk and cost, and while component should theoretically be ordered the most due to its high consumption, the impact of lead time variability and the need to hedge against delays can significantly alter this behavior.

5.13. Strengths of the Proposed Model

- Model convergence: The total reward stabilizes after approximately 100 episodes, indicating that the agent has found an efficient replenishment policy.

- Improved ordering decisions: The ordered quantities for each component become more consistent, avoiding excessive fluctuations observed at the beginning.

- Reduction of stockouts: Despite some variations, the average stock levels tend to remain positive, meaning the agent learns to anticipate demand and delivery lead times.

- Adaptation to uncertainties: The agent appears to adapt to random lead times and adjusts its orders accordingly.

6. Conclusions

This study presents significant advancements in the application of deep reinforcement learning techniques to replenishment planning under uncertainty. A novel discrete-time Markov decision process (MDP) model is introduced for single-level assembly systems, accounting for random delivery lead times and stochastic demand. The optimization criterion integrates stockout costs, inventory holding costs, and ordering costs. To address this problem, a custom simulation environment is developed to model the dynamics of a replenishment system under uncertainty. The adapted Deep Q-Network (DQN) algorithm is then applied to derive optimal replenishment policies for each component. In addition, the integration of local search—through multiple action spaces specific to each component—accelerates convergence and significantly improves solution quality. Experimental results demonstrate the effectiveness and robustness of the proposed DQN-based approach.

Research Outlook

Future research should focus on extending the model to multi-level assembly systems, which pose additional challenges due to increased structural complexity and interdependencies among components. A key direction involves reformulating the total expected cost function to accommodate nonlinearities more explicitly. Further investigation may also include the integration of interpolation techniques and queueing mechanisms to improve production planning and scheduling under various sources of uncertainty.

Importantly, this study is based on a convergent (parallel) assembly structure, in which all components are independently required to assemble the final product. Future work should explore alternative and heterogeneous topologies, such as star configurations with central bottlenecks or mesh networks with redundant paths. This would enable a more comprehensive assessment of the proposed method’s adaptability to complex and realistic supply chain structures.

A critical need persists for the ongoing refinement of supply planning models to more effectively manage uncertainty, especially within complex, multi-tiered production systems. While this work contributes to addressing demand and lead time variability, future efforts should aim to incorporate broader uncertainty dimensions and enhance the scalability of the proposed methods. Advancing the balance between theoretical rigor and industrial applicability will be crucial for driving practical improvements in supply chain performance.

Author Contributions

All authors contributed to the conceptualization, modeling, implementation, analysis, and writing of the manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The datasets generated and analyzed during the current study are available from the corresponding author upon reasonable request.

Conflicts of Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.



© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).