Abstract

Tomato ripeness detection in open-field environments is challenged by dense planting, heavy occlusion, and complex lighting conditions. Existing methods rely mainly on color and texture cues, which limits boundary perception and causes redundant predictions in crowded scenes. To address these issues, we propose an improved detection framework called Edge-Guided DETR (EG-DETR), based on the DEtection TRansformer (DETR). EG-DETR introduces edge prior information by extracting multi-scale edge features through an edge backbone network. These features are fused in the transformer decoder to guide queries toward foreground regions, which improves detection under occlusion. We further design a redundant box suppression strategy to reduce duplicate predictions caused by clustered fruits. We evaluated our method on a multimodal tomato dataset covering varied lighting conditions, including natural light, artificial light, low light, and sodium yellow light. Experimental results show that EG-DETR achieves an AP of 83.7% under challenging lighting and occlusion, outperforming the compared state-of-the-art detectors. This work provides a reliable intelligent sensing solution for automated harvesting in smart agriculture.

MSC: 68T07

1. Introduction

Intelligent sensing technology is becoming a key support in smart agriculture, with wide applications in crop growth monitoring, fruit ripeness detection, and automated harvesting. Tomatoes are among the most important and widely cultivated vegetables worldwide, prized for their rich nutrients and flavor. Tomato ripeness directly affects post-harvest quality and plays a crucial role in market value. Traditional manual harvesting is highly subjective and inefficient: it incurs significant labor costs and lacks scalability, making it difficult to meet the demands of large-scale tomato production [1]. Its subjectivity also hinders accurate ripeness assessment, ultimately compromising the quality and economic value of harvested tomatoes. There is therefore a pressing need for precise, automated tomato ripeness detection algorithms that improve harvesting efficiency while reducing operational costs.

In open-field cultivation, however, tomato ripeness detection remains challenging. High planting density, severe leaf occlusion, and the clustered distribution of fruits often degrade detection accuracy. Moreover, lighting in field environments is complex and varies widely across different times of day, so sensors often struggle to capture images with consistent illumination. Developing a high-precision intelligent sensing model that adapts to complex lighting and performs well under occlusion is therefore of great significance for intelligent, precise tomato harvesting.

To tackle these challenges, researchers have explored a range of tomato detection models. In [2], the authors introduced a multi-head self-attention mechanism to enhance YOLOv8's feature extraction under complex lighting, thereby improving its robustness to illumination changes. In [3], YOLOv4 was combined with Hue, Saturation, and Value (HSV) color space features to detect ripe tomatoes in natural environments. In [4], the authors proposed a foreground–foreground class balance method and an improved YOLOv8s network, enhancing tomato fruit detection in complex environments. While these approaches show promising results under controlled conditions, they face significant limitations in real-world agricultural settings, where complex occlusions and lighting variations often lead to inconsistent image quality. Consequently, conventional CNN-based models still struggle with detection stability, frequently producing missed detections and false positives.

Given the limitations of traditional convolutional architectures, transformer-based models have recently gained traction in object detection research. DEtection TRansformer (DETR) [5] pioneered the introduction of the transformer architecture [6] into object detection, removing the need for traditional hand-designed components. Compared with convolutional neural networks, whose receptive fields are limited, DETR shows stronger feature extraction and global perception when processing sensor-captured field images with varying lighting and complex backgrounds. Several improved versions have since been built on DETR. For example, Deformable-DETR [7] introduces multi-scale deformable attention to better focus on key regions; DAB-DETR [8] improves spatial localization through query initialization based on bounding box coordinates; and DN-DETR [9] applies a denoising training strategy to improve the robustness of matching. These enhancements have significantly improved the training efficiency and detection accuracy of DETR-based models. However, DETR's limited fine-grained perception of object boundaries remains a problem, especially in images with heavy leaf occlusion and densely overlapping fruits under real field conditions.

In practical field environments, tomatoes differ from the surrounding background mainly in color and contour. However, only tomatoes near full ripeness exhibit strong color contrast, whereas tomato contours remain distinguishable at all ripeness stages. This makes edge information a more stable and reliable visual cue under diverse conditions, so leveraging contour features can provide valuable guidance for object detection.

Motivated by this observation, we turn to recent advances in deep learning-based edge detection, which have shown promising results across diverse domains. Models such as HED [10] and RCF [11] use multi-scale feature fusion to significantly improve edge representation. Building on this, DexiNed [12] and TEED [29] further improve cross-domain generalization and move toward lightweight designs. Owing to their strong generalization, these models can generate precise structural edge maps without fine-tuning on agricultural datasets, which makes them suitable as auxiliary modules for agricultural object detection. Incorporating such edge information into detection frameworks can enhance the model's perception of object boundaries and improve performance in challenging scenarios involving occlusion, clutter, or lighting variation.

Inspired by the above research, this paper proposes a novel tomato ripeness detection method called Edge-Guided DETR (EG-DETR). To mitigate the negative impact of complex foliage occlusion on detection performance, EG-DETR incorporates multi-scale edge features generated by an edge detection network into the transformer decoder. This guides the queries to focus on foreground regions, enhancing detection accuracy under occlusion. In addition, we alleviate redundant predictions caused by clustered fruit growth with a redundant box suppression strategy that filters highly similar queries, improving training efficiency and detection accuracy. EG-DETR also copes well with illumination changes across different lighting conditions. The approach shows strong adaptability and significantly improves detection performance on sensor-captured images from complex field environments.

To evaluate the practical applicability of our method in real-world agricultural scenarios, we conducted experiments on a multimodal tomato dataset collected by intelligent sensors deployed in open-field settings [13]. The images in this dataset cover a wide range of typical lighting conditions, including natural light, artificial light, low light, and sodium yellow light, closely simulating the visual challenges caused by changing illumination in actual farming environments. Our experimental results show that EG-DETR achieves excellent ripeness detection performance under various lighting and occlusion conditions, demonstrating its practicality and reliability in intelligent sensing systems for complex agricultural environments.

The main contributions of this paper are as follows:

- We propose EG-DETR, a tomato ripeness detection method designed for complex environments that achieves high-precision detection even under severe fruit overlap and leaf occlusion conditions. EG-DETR also demonstrates outstanding performance under various lighting conditions, effectively addressing detection challenges caused by illumination variations in sensor-captured images.

- We introduce a novel approach that uses edge information to provide guidance to the queries in the DETR decoder, which effectively enhances its foreground focus capability during feature fusion while reducing background interference. Additionally, a redundant box suppression strategy alleviates query redundancy caused by overlapping fruits, further improving detection performance.

2. Related Work

Object detection technology has undergone significant evolution. Its development path has transitioned from traditional two-stage detectors to single-stage methods. In recent years, it has further evolved to incorporate transformer-based detection frameworks.

Faster R-CNN [14] is a representative two-stage detector. It first uses a convolutional neural network to extract feature maps from the entire image, then uses a Region Proposal Network (RPN) to generate candidate regions, and finally applies RoI pooling to extract features from each region for classification and bounding box regression. This approach strikes a good balance between speed and accuracy and performs well across various standard detection tasks. However, Faster R-CNN is limited in multi-scale feature representation and sensitive to overlapping objects; its detection performance declines significantly in complex scenarios with severe occlusion and densely packed objects [15].

In contrast, the You Only Look Once (YOLO) series [16,17,18,19] exemplifies one-stage object detectors. YOLO formulates object detection as a regression problem, directly predicting bounding box locations and class probabilities in a single forward pass. This greatly improves inference speed and makes YOLO well suited for real-time applications. Nevertheless, YOLO is more prone to missing small and overlapping objects in dense scenes [20].

Despite the progress made by both paradigms, these CNN-based detectors still rely heavily on hand-designed components such as anchor boxes and Non-Maximum Suppression (NMS), which limits their flexibility and adaptability.

To address these limitations, DEtection TRansformer (DETR) [5] was introduced as a novel end-to-end object detection framework built on the transformer architecture [6]. By leveraging attention mechanisms, DETR directly learns global context and object relationships, eliminating the need for hand-crafted components. Since its introduction, considerable research has aimed to improve DETR-based methods from various perspectives, such as enhancing the execution process [21,22], redesigning query representations [8,23], reformulating the model [24,25], or incorporating prior knowledge [23]. Deformable-DETR [7] introduces a multi-scale deformable attention mechanism that attends to a small set of key sampling points around a reference point, improving computational efficiency. DN-DETR [9] employs denoising training to reduce the difficulty of bipartite graph matching. DINO [26] applies contrastive denoising training and uses a mixed query selection method for anchor initialization.

Building on the above methods, researchers have further focused on improving DETR-based models in situations involving small object detection and complex scenes. DQ-DETR [22] dynamically adjusts the number of queries to address the imbalance between images and instances, thereby improving the detection accuracy of small objects. Salience-DETR [27] introduces a hierarchical salience filtering mechanism and a novel scale-invariant salience supervision strategy, which effectively alleviates scale bias and enhances the model’s ability to focus on targets. Relation-DETR [28] incorporates a positional relation embedding module that encodes the spatial layout between objects, gradually guiding the decoder to learn more accurate positional relationships.

Although CNN- and transformer-based detectors have advanced agricultural object detection, they still face considerable limitations in complex real-world scenarios. CNN-based models such as YOLO variants are efficient at inference but often fail to capture subtle object boundaries, particularly for small, overlapping, or heavily occluded fruits. Transformer-based methods such as DETR currently lack sufficient fine-grained perception, making it difficult to distinguish densely packed tomatoes under varying lighting and foliage interference. Moreover, most existing models rely primarily on color and texture features, which are insufficient in field conditions where illumination is inconsistent and ripeness stages are visually similar. The structural contours of tomatoes, which remain stable across ripeness stages and could provide localization cues under occlusion and clutter, are often overlooked.

By contrast, we propose EG-DETR, a novel transformer-based framework that integrates multi-scale edge information into the decoder to enhance boundary perception and foreground focus. Additionally, we design a redundant box suppression strategy to reduce overlapping queries in dense fruit regions. These contributions aim to improve detection accuracy under challenging real-world agricultural conditions.

3. EG-DETR

3.1. Overview of EG-DETR

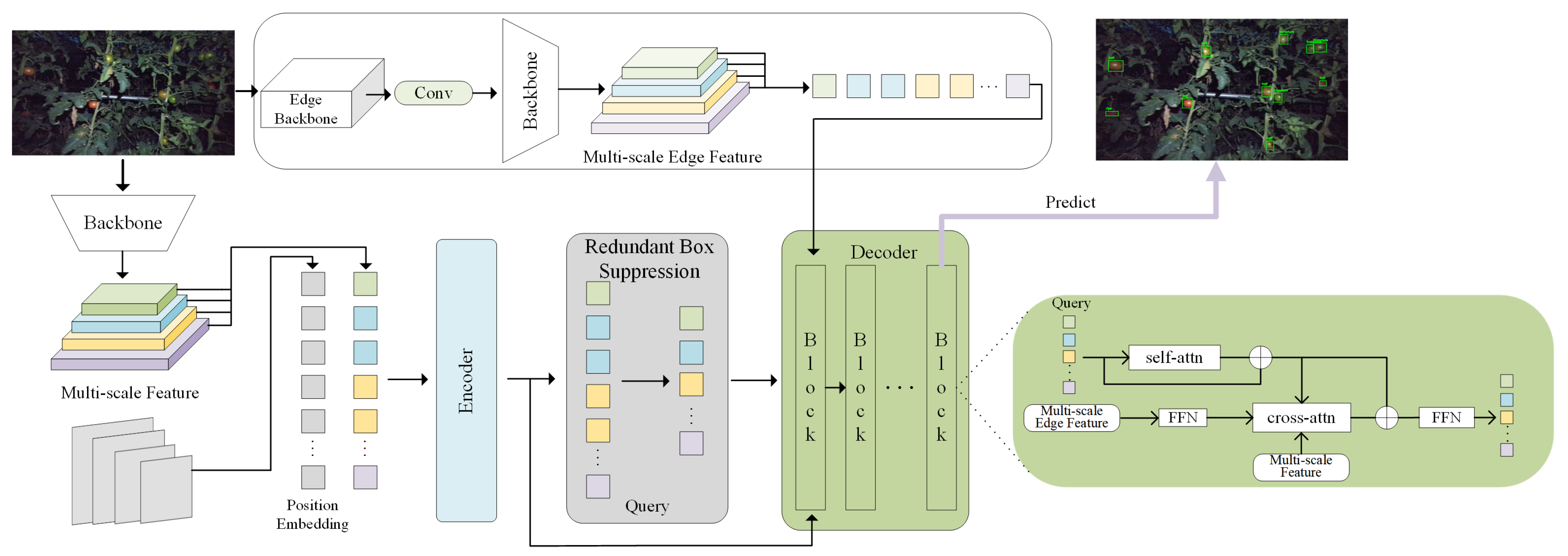

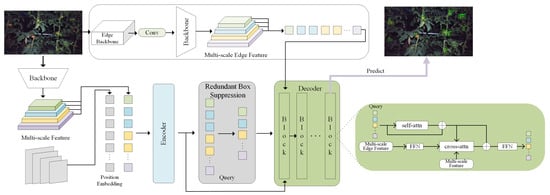

Figure 1 presents the overall architecture of the proposed EG-DETR framework, which extends the DETR structure with targeted enhancements for challenging agricultural environments. The model consists of four main components: a visual backbone, a transformer encoder–decoder, an edge feature extraction module, and a redundant box suppression module.

Figure 1.

Model architecture of EG-DETR.

The visual backbone uses a ResNet to extract multi-scale semantic features from the input image. These features are passed into a transformer encoder to capture global context. A fixed set of object queries is then generated from learnable parameters and refined by a transformer decoder, which applies self-attention and cross-attention to integrate image features and progressively improve detection precision.

To enhance the model’s perception of object boundaries and foreground focus, we integrate an edge feature extraction module, which uses a pretrained lightweight edge detection network to extract edge features. These features are introduced into the decoder as edge prior information, guiding the queries toward object contours and foreground. This design improves boundary localization, especially under occlusion from dense foliage.

Lastly, to address the issue of redundant predictions in clustered scenes, a redundant box suppression module is applied during the query selection stage. This module filters out highly overlapping queries, reducing duplication and enhancing overall detection efficiency.

3.2. Extraction of Edge Features

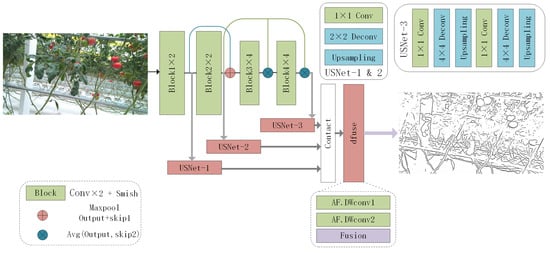

For edge information extraction, we use the pretrained model named Tiny and Efficient Edge Detector (TEED) [29], which is specifically designed to offer high accuracy while maintaining low computational complexity. TEED has only 58K parameters, making it a lightweight and efficient model. This significantly reduces the demand for computational resources.

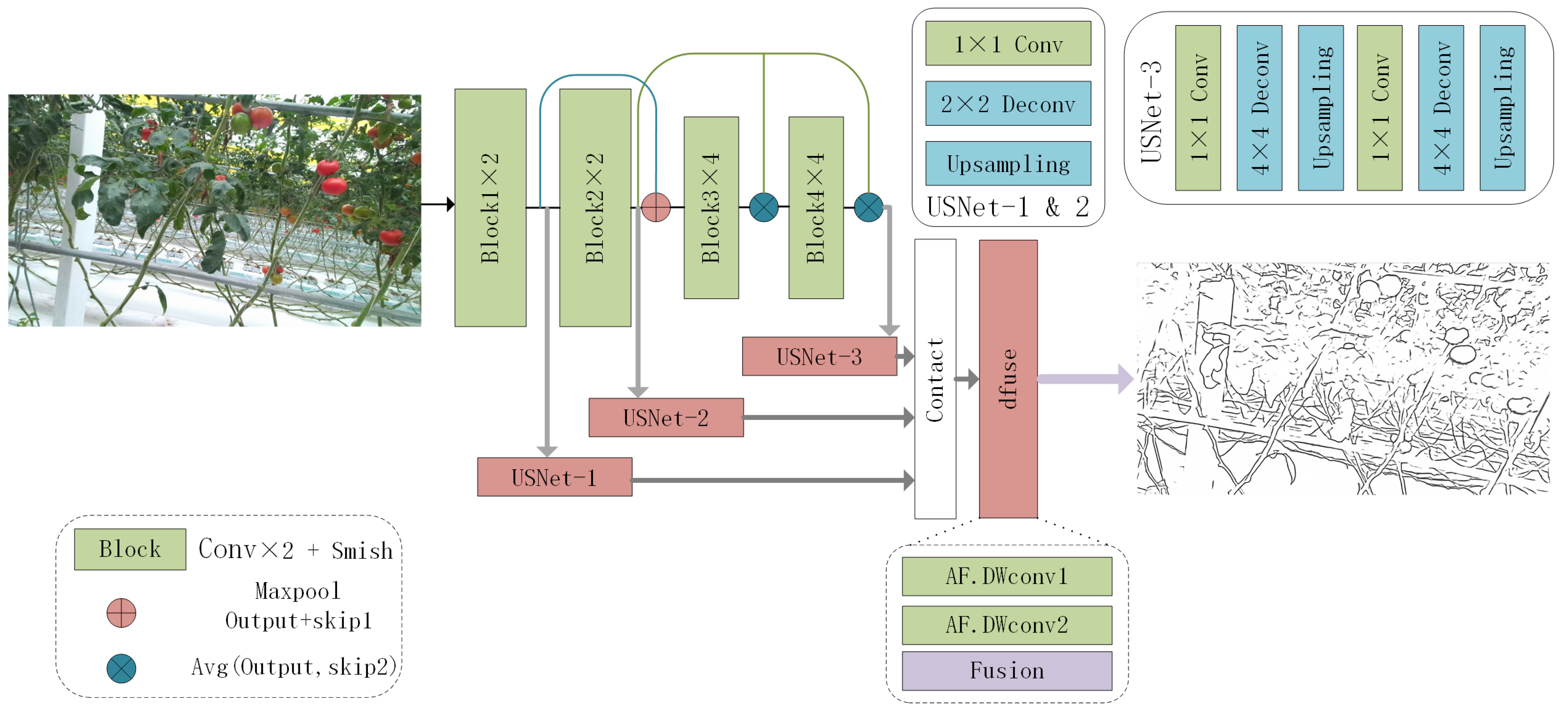

Figure 2 presents the architecture of TEED, which consists of three core components designed to extract and integrate edge features: edge feature extraction blocks, USNet, and the Dfuse fusion module. The edge feature extraction blocks consist of stacked convolutional layers with nonlinear activations and are enhanced by skip connections to improve feature propagation and preserve spatial structure. Building on these features, USNet applies a convolutional layer, an activation, and a deconvolutional layer to generate edge maps that maintain high spatial resolution. Finally, the Dfuse fusion module processes the edge predictions generated by USNet through two Depth-Wise Convolution (DWConv) layers to produce the edge output.

Figure 2.

Model architecture of TEED.

Compared with traditional edge extraction methods, pretrained models offer stronger generalization, allowing them to adapt to agricultural images and produce high-quality edges without additional training or fine-tuning on specific datasets. The extracted edge information suppresses background noise and highlights object contours, providing more discriminative cues for subsequent query updates.

For an input image $I$, we first feed it into the edge extraction backbone to obtain the single-channel edge map $E$. Because the feature extraction backbone used by DETR expects a three-channel input, we expand the number of channels of $E$ from 1 to 3 through a convolutional layer, obtaining $E'$. $E'$ is then fed into the backbone network to extract multi-scale edge features $\{F_E^{i}\}$. The original image $I$ is also passed through the backbone to obtain the feature maps $\{F_I^{i}\}$, with $F_E^{i}$ and $F_I^{i}$ having the same shape at each scale $i$.
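The channel expansion step can be illustrated with a 1 × 1 convolution, which for a single input channel reduces to one affine map per output channel. The kernel size is our assumption (the text only says a convolutional layer is used), the weights below are arbitrary placeholders rather than learned values, and nested Python lists stand in for tensors:

```python
from typing import List

Map = List[List[float]]  # a single H x W channel


def expand_edge_channels(edge: Map, weights: List[float], biases: List[float]) -> List[Map]:
    """Map a 1-channel edge map E to a 3-channel map E' with an (assumed) 1x1 convolution.

    With one input channel, a 1x1 conv is an affine map per output channel:
    E'[c][y][x] = weights[c] * E[y][x] + biases[c].
    """
    if len(weights) != 3 or len(biases) != 3:
        raise ValueError("expected exactly 3 output channels")
    return [
        [[w * v + b for v in row] for row in edge]
        for w, b in zip(weights, biases)
    ]


# Toy 2x2 edge map: 1.0 marks a contour pixel, 0.0 is background.
edge_map = [[0.0, 1.0], [1.0, 0.0]]
# Hypothetical weights and biases; in the model these would be learned.
e3 = expand_edge_channels(edge_map, weights=[1.0, 0.5, 2.0], biases=[0.0, 0.1, -1.0])
print(len(e3), len(e3[0]), len(e3[0][0]))  # 3 2 2
```

A learned 1 × 1 projection is only one option; simply repeating the edge map three times would also satisfy the input shape, at the cost of giving the backbone no trainable adaptation.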

3.3. Redundant Box Suppression

In the two-stage DETR framework, the model generates a fixed number of object queries based on learnable parameters. These queries are then iteratively updated in the decoder, each focusing on specific regions of interest in the image. However, in scenarios involving complex backgrounds or small objects, noisy or ambiguous feature representations may interfere with attention learning. The resulting redundant queries degrade subsequent target localization and classification, ultimately reducing detection performance. To address this issue, we adopt a redundant box suppression strategy inspired by Salience-DETR [27]. This strategy first performs an initial selection of queries and then applies NMS [14] to remove redundant queries with high overlap. Each selected query is assigned a bounding box centered at its position $(x_q, y_q)$ on the feature map, with a fixed width and height of 2, as shown in Equation (1):
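A minimal rendering of Equation (1), assuming $(x_q, y_q)$ is the query's position in feature-map coordinates and writing the box first in $(c_x, c_y, w, h)$ form and then in corner form (our notation, not necessarily the authors'):

```latex
b_q = (x_q,\; y_q,\; 2,\; 2)
\quad\Longleftrightarrow\quad
b_q = (x_q - 1,\; y_q - 1,\; x_q + 1,\; y_q + 1)
```

Because every box has the same size, the IoU between two such boxes depends only on the offset between their centers.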

NMS is then applied to these bounding boxes at both the image level and hierarchical levels. To further optimize this process, we incorporate a dynamic IoU thresholding strategy that is scale-aware. Rather than using a fixed IoU threshold across all levels, we define thresholds based on the area of each feature map.

Specifically, feature maps with an area smaller than 512 are classified as small-scale, those with areas between 512 and 2048 are considered medium-scale, and those exceeding 2048 are treated as large-scale. Correspondingly, the IoU thresholds are set to 0.20, 0.30, and 0.35 for the feature maps with small, medium, and large scales, respectively.

This division is motivated by the dense and heavily overlapping nature of tomato fruits in real-world cultivation scenarios. On lower-resolution feature maps, a single region may attract multiple queries, producing many overlapping candidate boxes. A lower IoU threshold in such cases suppresses redundant boxes more aggressively. In contrast, a higher threshold preserves valid predictions on higher-resolution feature maps, where objects are more spatially separated.

Although the thresholds are manually defined, this scale-aware strategy is effective in practice for dense object detection and adds no computational overhead.
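The per-level suppression can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: boxes are fixed 2 × 2 boxes centered at query positions in feature-map cell units, a box is suppressed when its IoU with an already kept box exceeds the level's threshold, the scores are hypothetical, and the initial query selection and the image-level pass across levels are omitted. The function names are ours.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # corner format (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two corner-format boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def scale_aware_threshold(h: int, w: int) -> float:
    """IoU threshold chosen from the feature-map area H*W (values from the text)."""
    area = h * w
    if area < 512:
        return 0.20  # small-scale map
    if area <= 2048:
        return 0.30  # medium-scale map
    return 0.35      # large-scale map


def suppress_level(positions: Sequence[Tuple[int, int]], scores: Sequence[float],
                   h: int, w: int) -> List[int]:
    """Greedy NMS over fixed 2x2 boxes centered at query positions on one level.

    Returns the indices of kept queries, highest score first.
    """
    boxes = [(x - 1.0, y - 1.0, x + 1.0, y + 1.0) for x, y in positions]
    thr = scale_aware_threshold(h, w)
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap any kept box beyond the threshold.
        if all(iou(boxes[i], boxes[k]) <= thr for k in keep):
            keep.append(i)
    return keep


# Three candidate queries: a cell, its right neighbor, and its diagonal neighbor.
pos = [(5, 5), (6, 5), (6, 6)]
sc = [0.9, 0.8, 0.7]
print(suppress_level(pos, sc, h=16, w=16))  # [0, 2]  small map (area 256), threshold 0.20
print(suppress_level(pos, sc, h=64, w=64))  # [0, 1, 2]  large map (area 4096), threshold 0.35
```

Because every box is 2 × 2, horizontally or vertically adjacent cells overlap with IoU 2/6 ≈ 0.33 and diagonal neighbors with IoU 1/7 ≈ 0.14. Thresholds of 0.20 and 0.30 therefore remove direct neighbors on small and medium maps, while 0.35 keeps them on large maps, which matches the intent of suppressing more aggressively at low resolution.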

3.4. Application of Edge Features in Decoder

In the decoder, we follow Relation-DETR [28] by incorporating a position relation encoder that helps the queries learn the spatial relationships between objects. Specifically, Relation-DETR encodes normalized relative geometry features between predicted boxes, represented as $e(b_i, b_j)$. Given a set of $N$ predicted boxes $\{b_i = (x_i, y_i, w_i, h_i)\}_{i=1}^{N}$, the relation matrix is formed by computing $e(b_i, b_j)$ for each box pair, as described in Equation (2):
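For reference, a sketch of Equation (2) following the relative-geometry encoding of Relation-DETR as we understand it, with each box $b_i = (x_i, y_i, w_i, h_i)$ given in normalized center–size form:

```latex
e(b_i, b_j) = \left[
\log\!\left(\frac{|x_i - x_j|}{w_i} + 1\right),\;
\log\!\left(\frac{|y_i - y_j|}{h_i} + 1\right),\;
\log\!\left(\frac{w_i}{w_j}\right),\;
\log\!\left(\frac{h_i}{h_j}\right)
\right]
```

The first two terms measure center offsets relative to the size of $b_i$, and the last two compare box sizes, so the feature is invariant to translating both boxes together.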

According to Equation (3), these four-dimensional features are transformed into high-dimensional embeddings using a sine–cosine positional encoding with a shape of $N \times N \times 4d_{re}$, where $s$, $T$, and $d_{re}$ are parameters. The obtained embeddings are then processed through a linear transformation to obtain the relation embedding $\mathrm{Rel}(b, b')$:
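Equations (2) and (3) can be sketched together in plain Python. We assume the Relation-DETR form of the encoding, $\mathrm{Embed}(e, 2k) = \sin\!\left(s\,e / T^{2k/d_{re}}\right)$ and $\mathrm{Embed}(e, 2k+1) = \cos\!\left(s\,e / T^{2k/d_{re}}\right)$, applied to each of the four components, with $T = 10{,}000$, $d_{re} = 16$, and $s = 100$ as in Section 4.2. Interleaving the sin and cos values, the normalized (cx, cy, w, h) box format, and the function names are our assumptions; the learned linear projection to the relation embedding is omitted:

```python
import math
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (cx, cy, w, h), assumed normalized


def relative_geometry(bi: Box, bj: Box) -> List[float]:
    """Four-dimensional relative feature e(b_i, b_j), Relation-DETR style."""
    xi, yi, wi, hi = bi
    xj, yj, wj, hj = bj
    return [
        math.log(abs(xi - xj) / wi + 1.0),
        math.log(abs(yi - yj) / hi + 1.0),
        math.log(wi / wj),
        math.log(hi / hj),
    ]


def sine_cosine_embed(e: List[float], d_re: int = 16,
                      temperature: float = 10000.0, scale: float = 100.0) -> List[float]:
    """Expand each component into d_re values (sin at even, cos at odd positions)."""
    out: List[float] = []
    for v in e:
        for k in range(d_re // 2):
            arg = scale * v / temperature ** (2 * k / d_re)
            out.extend([math.sin(arg), math.cos(arg)])
    return out


b1 = (0.30, 0.40, 0.10, 0.20)
b2 = (0.50, 0.40, 0.20, 0.20)
feat = relative_geometry(b1, b2)  # approximately [log 3, 0, log 0.5, 0]
emb = sine_cosine_embed(feat)
print(len(emb))  # 64 = 4 components x d_re
```

Computing this for all $N^2$ box pairs yields the $N \times N \times 4d_{re}$ tensor described above; in a real model this would be vectorized over tensors rather than looped.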

The relational embedding $\mathrm{Rel}(b^{l-1}, b^{l})$, which captures the relationship between the bounding boxes from the $(l-1)$-th and $l$-th decoder layers, is integrated into the self-attention mechanism according to Equation (4) to refine query representations:
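A sketch of Equation (4), assuming the relation embedding $\mathrm{Rel}(b^{l-1}, b^{l})$ enters each attention head as an additive bias on the attention logits (as in Relation-DETR, to our understanding); $Q^{l}$ denotes the queries of layer $l$, and $W_Q$, $W_K$, $W_V$ and the key dimension $d$ are our notation:

```latex
\mathrm{SelfAttn}\!\left(Q^{l}\right) =
\mathrm{Softmax}\!\left(
\frac{\left(Q^{l} W_Q\right)\left(Q^{l} W_K\right)^{\top}}{\sqrt{d}}
+ \mathrm{Rel}\!\left(b^{l-1}, b^{l}\right)
\right) Q^{l} W_V
```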

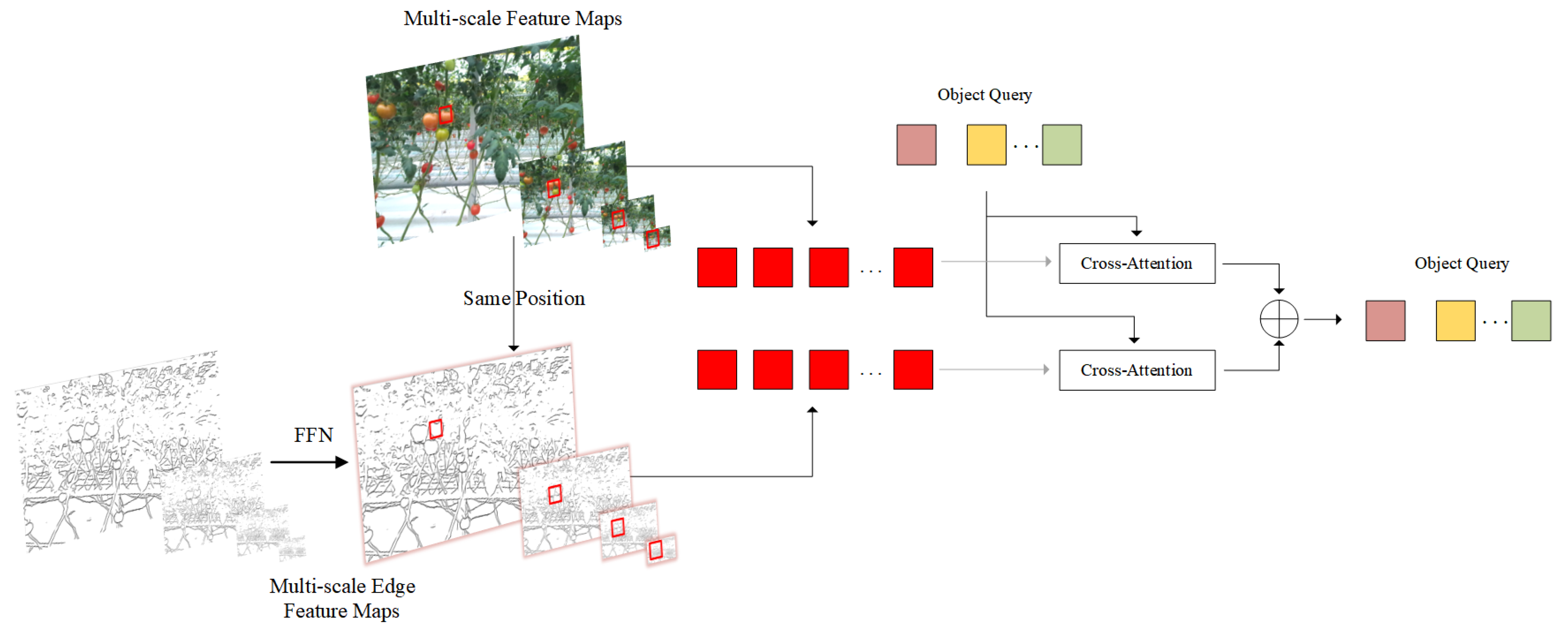

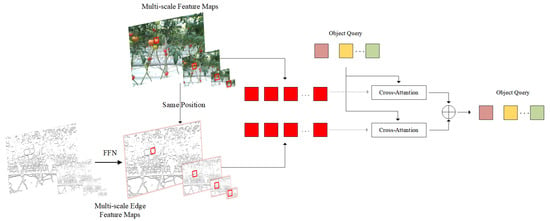

To improve the focus of queries and enhance detection performance, we incorporate additional edge information into the decoder. This supplementary edge context allows the decoder to better focus on the foreground regions of interest. Specifically, as illustrated in Figure 3, the edge feature map $F_E$ provides additional information during the decoder's cross-attention, which facilitates more accurate object localization.

Figure 3.

The decoder’s query fusion process for combining the original image and edge information.

First, the edge feature map $F_E$ is further refined by a Feed-Forward Network (FFN) layer consisting of two linear layers and an activation function. The enhanced edge feature map is denoted as $\hat{F}_E$ and is defined in Equation (5):
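One way to write Equation (5), with $F_E$ the edge feature map and $\hat{F}_E$ its refined version, assuming a plain two-layer FFN without a residual connection; $W_1$, $W_2$, $c_1$, $c_2$ and the activation $\sigma$ are placeholders, since the activation is not specified:

```latex
\hat{F}_E = W_2\,\sigma\!\left(W_1 F_E + c_1\right) + c_2
```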

The $l$-th decoder layer samples $\hat{F}_E$ at the same coordinates as the sampling positions learned from the image feature map $F_I$ (synchronous coordinate sampling). This ensures spatial consistency between image features and edge features. The decoder then applies deformable cross-attention to both the image feature map $F_I$ and the edge-enhanced feature map $\hat{F}_E$, as defined in Equation (6):
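A sketch of Equation (6), writing $Q$ for the query entering the cross-attention of layer $l$, $\{p_k^{l}\}$ for the sampling positions predicted on the image branch and reused on the edge branch, $F_I$ and $\hat{F}_E$ for the image and enhanced edge feature maps, and $\mathrm{DeformAttn}$ for multi-scale deformable attention (notation assumed):

```latex
A_I^{l} = \mathrm{DeformAttn}\!\left(Q,\ \{p_k^{l}\},\ F_I\right),
\qquad
A_E^{l} = \mathrm{DeformAttn}\!\left(Q,\ \{p_k^{l}\},\ \hat{F}_E\right)
```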

where $A_I^{l}$ is the cross-attention between the query and the image feature map $F_I$, and $A_E^{l}$ is the cross-attention between the query and the edge-enhanced feature map $\hat{F}_E$.

To combine the image and edge information effectively, we introduce a learnable parameter $\alpha$ that controls the fusion. The final query update is obtained by a weighted fusion of the two cross-attention outputs, followed by Layer Normalization (LayerNorm) and another FFN. During training, $\alpha$ is optimized adaptively to balance the contributions of the two modalities. This fusion mechanism is formulated in Equation (7):
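A sketch of Equation (7), with $Q$ the query entering the cross-attention, $A_I^{l}$ and $A_E^{l}$ the image and edge cross-attention outputs, and $\alpha$ the learnable fusion weight. It assumes a residual connection around the fused output and that $\alpha$ weights the edge branch, which is consistent with the ablation finding that large fixed values over-rely on edge features:

```latex
Q^{l} = \mathrm{FFN}\!\left(\mathrm{LayerNorm}\!\left(Q + (1-\alpha)\,A_I^{l} + \alpha\,A_E^{l}\right)\right)
```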

This hybrid query update process ensures that the information of both the image and the edges is utilized. This allows the model to achieve better object detection performance, especially under challenging scene conditions such as occlusion or clutter.
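The synchronous sampling and weighted fusion can be illustrated on a toy single-channel example. This sketch assumes that the edge branch reuses both the sampling points and the attention weights of the image branch, that the fusion weight alpha applies to the edge branch, and that a residual connection is used; LayerNorm, the FFN, multiple heads, and multiple scales are omitted, and all names are hypothetical:

```python
import math
from typing import List, Sequence, Tuple

Grid = List[List[float]]  # one H x W channel, indexed as grid[y][x]


def bilinear(fmap: Grid, x: float, y: float) -> float:
    """Bilinearly sample fmap at continuous (x, y); out-of-range taps contribute zero."""
    h, w = len(fmap), len(fmap[0])
    x0, y0 = math.floor(x), math.floor(y)
    fx, fy = x - x0, y - y0
    val = 0.0
    for yy, wy in ((y0, 1.0 - fy), (y0 + 1, fy)):
        for xx, wx in ((x0, 1.0 - fx), (x0 + 1, fx)):
            if 0 <= yy < h and 0 <= xx < w:
                val += wy * wx * fmap[yy][xx]
    return val


def shared_sampling(img: Grid, edge: Grid, points: Sequence[Tuple[float, float]],
                    attn: Sequence[float]) -> Tuple[float, float]:
    """Aggregate both maps at the SAME sampling points with the same attention weights."""
    a_img = sum(a * bilinear(img, x, y) for (x, y), a in zip(points, attn))
    a_edge = sum(a * bilinear(edge, x, y) for (x, y), a in zip(points, attn))
    return a_img, a_edge


def fuse(query: float, a_img: float, a_edge: float, alpha: float) -> float:
    """Residual alpha-weighted fusion; alpha weights the edge branch (LayerNorm/FFN omitted)."""
    return query + (1.0 - alpha) * a_img + alpha * a_edge


img = [[0.0, 1.0], [2.0, 3.0]]
edge = [[1.0, 0.0], [0.0, 1.0]]
points = [(0.5, 0.5), (0.0, 1.0)]  # sampling positions predicted on the image branch
attn = [0.75, 0.25]                # attention weights over the sampled points
a_i, a_e = shared_sampling(img, edge, points, attn)
print(a_i, a_e)                          # 1.625 0.375
print(fuse(0.0, a_i, a_e, alpha=0.375))  # 1.15625
```

With alpha = 0.375, roughly the value the learnable parameter settles at in the ablation study, the edge branch contributes 37.5% of the fused cross-attention update in this toy setting.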

4. Experiment

4.1. Dataset

Our method was primarily evaluated on the Multimodal Tomato Dataset from [13]. This dataset presents complex tomato fruit scenarios with dense foliage occlusion and high fruit overlap, closely reflecting the challenges encountered in agricultural environments. To assess generalization performance across diverse scenarios, we performed comparison experiments using the COCO2017 and VisDrone datasets. These datasets encompass a wide range of object categories and varying background complexities, allowing for a comprehensive evaluation of the model’s performance in general object detection tasks. Furthermore, we validated our method on the NEU-DET industrial defect detection dataset to examine the model’s effectiveness in specialized tasks.

The Multimodal Tomato Dataset is sourced from [13]. This dataset, dating from September 2024, poses unique challenges due to dense foliage occlusion and high fruit overlap. It contains 4000 images of tomatoes captured under various lighting conditions: natural light, artificial light, low light, and sodium yellow light. The image resolution is 1280 × 720. The dataset includes three maturity stages for tomato objects: immature, semi-mature, and mature. Because the dataset has no predefined training and testing splits, we randomly partitioned it into 3600 images for training and 400 images for validation.

The Common Objects in Context (COCO) 2017 dataset [30] is a large-scale benchmark dataset for object detection, instance segmentation, and keypoint detection. It consists of over 200,000 labeled images, with 118,000 images in the training set, 5000 in the validation set, and 40,000 in the test set. The dataset includes 80 object categories captured in diverse real-world scenes, including people, animals, vehicles, and household items. These images feature complex contexts including challenges such as occlusion, scale variation, and object overlap. The COCO2017 dataset provides high-quality bounding box annotations across various scales and complex backgrounds, offering a reliable and challenging benchmark for evaluating object detection algorithms in real-world conditions.

The VisDrone [31] dataset contains 10,209 drone-captured images: 6471 in the training set, 548 in the validation set, and 3190 in the test set. It covers twelve object categories, such as pedestrians, cars, bicycles, and tricycles, and spans a broad range of scenes collected in multiple cities, covering diverse urban and rural environments. Scene density varies from sparse to highly crowded.

The NEU-DET [32] dataset comprises 1800 grayscale images of surface defects on hot-rolled steel strips. It covers six defect categories: cracks (cr), inclusions (in), patches (pa), pitted surfaces (ps), rolled-in scale (rs), and scratches (sc). Each category includes 300 images with a resolution of 200 × 200 pixels.

4.2. Setup

To comprehensively validate our model, we conducted experiments on the agricultural Multimodal Tomato Dataset as well as the COCO2017, VisDrone, and NEU-DET datasets. Detection performance was measured using the standard Average Precision (AP) [30]. Training was performed on an NVIDIA A40 (46 GB) GPU with six layers in both the encoder and the decoder. The CNN backbone was ResNet-50 [33], and edge extraction was performed using TEED. The learnable fusion parameter $\alpha$ was initialized to 0.5. Data augmentation strategies such as random cropping and scale augmentation followed those used in DETR-based methods. AdamW was used as the optimizer with an initial learning rate of for the Multimodal Tomato Dataset and for the other datasets. The weight decay was set to . The learning rate was reduced by a factor of 0.1 in later stages. Other settings followed Relation-DETR, with the positional encoder parameters $T$, $d_{re}$, and $s$ set to 10,000, 16, and 100, respectively. The training batch size was 2 for the Multimodal Tomato Dataset, COCO2017, and NEU-DET and 1 for VisDrone. The number of queries was 900.
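The training settings listed above can be collected into a single configuration object. The sketch below is a hypothetical Python dataclass (class and field names are ours, not the authors' code base) holding only the values stated in this section:

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass(frozen=True)
class EGDETRTrainConfig:
    """Hypothetical container for the training settings stated in Section 4.2."""
    backbone: str = "resnet50"
    edge_backbone: str = "TEED"
    encoder_layers: int = 6
    decoder_layers: int = 6
    num_queries: int = 900
    optimizer: str = "AdamW"
    alpha_init: float = 0.5          # initial value of the learnable fusion weight
    lr_decay_factor: float = 0.1     # learning-rate reduction in later stages
    pe_temperature: float = 10000.0  # T
    pe_dim: int = 16                 # d_re
    pe_scale: float = 100.0          # s
    batch_size: Dict[str, int] = field(default_factory=lambda: {
        "tomato": 2, "coco2017": 2, "neu-det": 2, "visdrone": 1,
    })


cfg = EGDETRTrainConfig()
print(cfg.batch_size["visdrone"])  # 1
```

Freezing the dataclass keeps the settings from being modified accidentally during an experiment run.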

4.3. Experimental Results

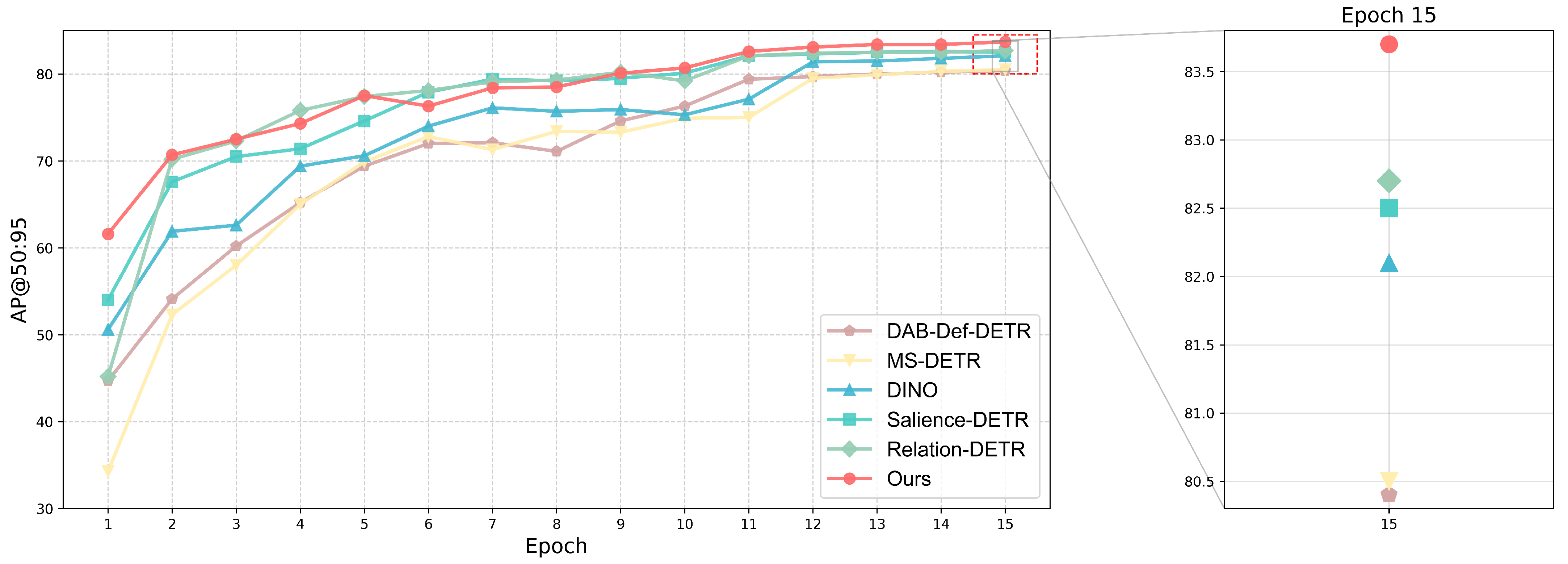

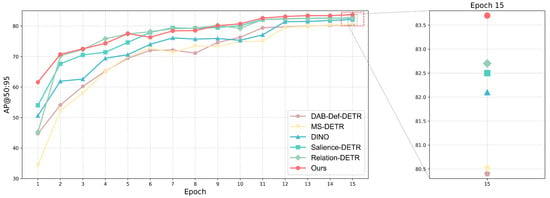

Comparison on Multimodal Tomato Dataset. Table 1 compares our approach with several state-of-the-art object detectors on the Multimodal Tomato Dataset. All methods were re-implemented for a fair comparison. EG-DETR achieves the highest overall detection accuracy, with an AP of 83.7%, surpassing Relation-DETR by 1.0%. This confirms the adaptability of our model in complex scenarios involving occlusion and high fruit overlap. Figure 4 illustrates the convergence curves of the different methods. Notably, EG-DETR already reaches 61.5% after the first epoch, indicating faster convergence and a more effective initialization. Our model maintains a clear advantage throughout training, showing both stability and high detection performance across epochs.

Table 1.

Comparison with state-of-the-art methods on the Multimodal Tomato Dataset. The best and second-best results are marked in red and blue, respectively.

Figure 4.

The convergence curves of our method and several previous DETR models (listed in Table 1) on the Multimodal Tomato Dataset.

Comparison on the COCO2017val and VisDrone datasets. Table 2 shows the detection performance on the COCO2017 validation set. EG-DETR achieves a competitive AP of 51.8%, surpassing the second-best Relation-DETR (51.5%) by 0.3%. On the VisDrone dataset, for which we re-implemented multiple DETR-based baselines to ensure a fair comparison, our method again shows superior detection performance: as shown in Table 3, it achieves the highest AP of 32.3%, surpassing the next-best Relation-DETR (32.0%) by 0.3%. Even in scenes without dense foliage, our approach maintains a consistent performance advantage. This highlights the effectiveness of EG-DETR not only under complex agricultural conditions such as dense foliage occlusion and severe fruit overlap but also in general detection environments.

Table 2.

Quantitative comparison on COCO2017val using ResNet50 (IN-1K) backbone under setting. Here, * means that we re-implemented the methods and report the corresponding results. The best and second-best results are marked in red and blue, respectively.

Table 3.

Comparison with DETR-like methods on the VisDrone dataset. The best and second-best results are marked in red and blue, respectively.

Comparison on NEU-DET. We additionally compared various DETR-based and YOLO-based object detection methods on the NEU-DET dataset, which contains images of surface defects in steel. As shown in Table 4, our method achieves the best AP of 48.4%, demonstrating its ability to handle complex defect structures. Although Salience-DETR achieves a higher score on one metric (82.0%), our method's overall performance is more stable and reliable. These results indicate that our method remains competitive in specialized tasks, highlighting its versatility across application domains.

Table 4.

Comparison of different methods on the NEU-DET dataset. The best and second-best results are marked in red and blue, respectively.

4.4. Ablation Study

This section presents ablation studies that evaluate the effectiveness of each proposed component. We investigated four aspects: (1) the contribution of individual components to overall detection performance on the Multimodal Tomato Dataset; (2) the impact of different edge extraction backbones on detector accuracy; (3) the effect of the fusion parameter $\alpha$ on the fusion results; and (4) the generalization ability of our method when integrated into other DETR-based architectures. These experiments aim to clarify the design choices and their practical implications.

Ablation Study of Components. Table 5 presents the ablation results for the key components of our method. First, integrating the edge extraction backbone into EG-DETR improves the overall AP by 0.7%, to 83.4%. This demonstrates the effectiveness of incorporating edge features, which provide additional structural information that aids target localization during decoder training. Introducing Redundant Box Suppression (RBS) during the query initialization stage yields a further 0.3% improvement in AP, bringing the final score to 83.7%. This indicates that RBS improves object localization by reducing redundant queries, especially in scenarios with highly overlapping bounding boxes. We also investigated different IoU thresholding strategies within RBS. A fixed IoU threshold yields no gain in overall AP (83.4%), whereas the scale-aware dynamic IoU strategy achieves the best performance (83.7% AP), with particularly noticeable gains in small-object detection ($\mathrm{AP}_S$). These results suggest that the scale-aware IoU setting better handles variations in object scale and spatial density, leading to more representative query regions. Overall, the results confirm the positive impact of both the edge extraction backbone and RBS on the performance of EG-DETR.

Table 5.

Ablation study on key components of EG-DETR (ResNet-50) on the Multimodal Tomato Dataset. RBS stands for redundant box suppression; “IoU Setting” indicates the configuration of the IoU thresholding strategy within the RBS module.

Different Edge Extraction Backbones. To evaluate how the choice of edge extraction backbone affects detection performance, we compared TEED, the edge backbone used in EG-DETR, with two representative edge detection networks, RCF and DexiNed. These networks were chosen for their strong performance and representativeness in edge detection tasks. As shown in Table 6, incorporating either RCF or DexiNed consistently improves detection accuracy over the baseline, and the model achieves its highest performance with TEED, reaching 83.7%. This result highlights the importance of effective edge information for enhancing feature representation and refining object boundaries, especially in complex agricultural scenes with occlusion and overlap.

Table 6.

Results of EG-DETR using different edge extraction backbones on the Multimodal Tomato Dataset.

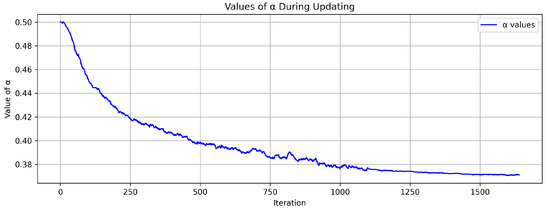

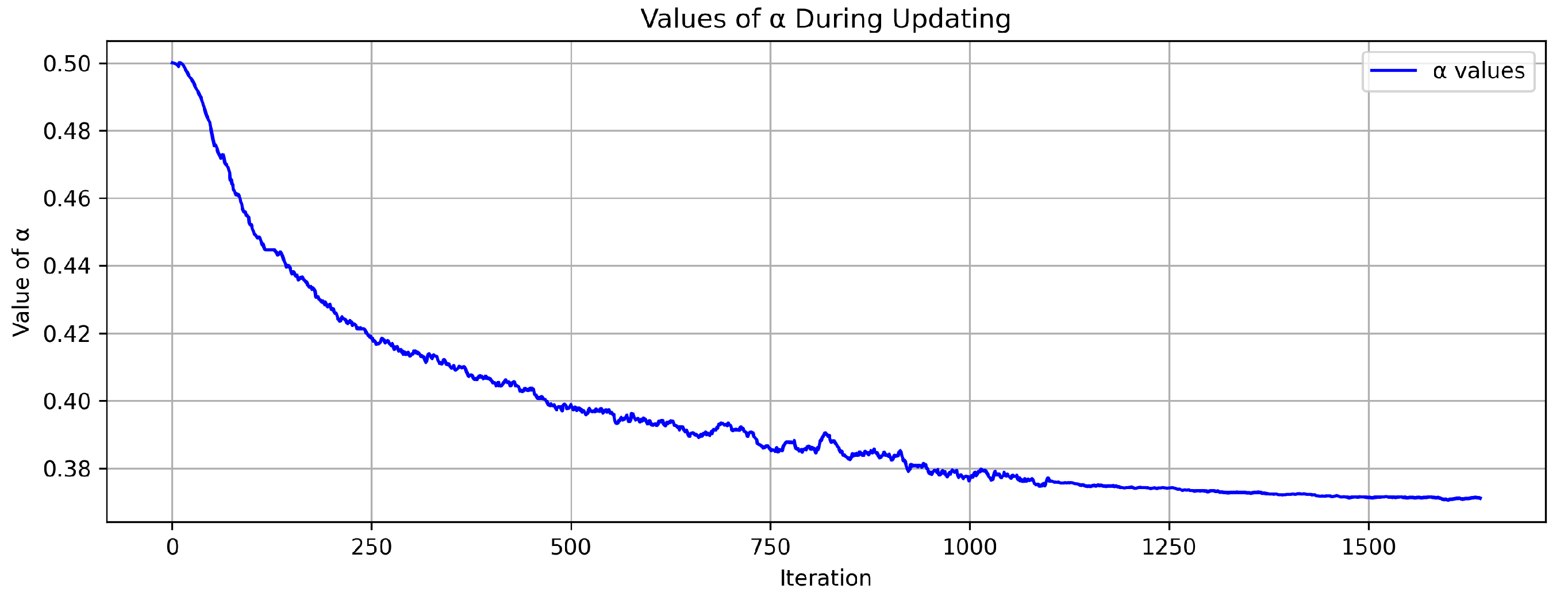

Effect of the Fusion Parameter on Fusion Results. In this work, the fusion parameter is a learnable variable, which allows the balance between image and edge features to be adjusted adaptively. To validate this design, we compared fixed values (0.3, 0.5, and 0.7) with a learnable parameter optimized during training, as shown in Table 7. Larger values (e.g., 0.7) degrade performance because the model relies excessively on edge features, whereas moderate values (≤0.5) achieve a better balance between image and edge features and therefore improve detection performance. During training, the learnable parameter gradually stabilizes around 0.375 (see the convergence curve in Appendix A), achieving the best overall performance of 83.7%. These findings demonstrate the effectiveness of adaptive fusion weighting.
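The sketch below shows how a single fusion weight can be made learnable and driven toward a data-dependent value by gradient descent. It rests on several assumptions not stated in this section. The fusion is assumed to be the convex combination (1 − α)·F_img + α·F_edge. The weight is assumed to be reparameterized as α = sigmoid(raw) so that it stays in (0, 1). Feature maps are flattened to plain lists. The toy MSE loss and its planted target weight of 0.3 are purely illustrative and are not the detector's training objective. The function names `fuse` and `fit_alpha` are hypothetical.

```python
import math
from typing import List, Sequence


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def fuse(img: Sequence[float], edge: Sequence[float], alpha: float) -> List[float]:
    """Assumed fusion form: (1 - alpha) * image feature + alpha * edge feature."""
    return [(1.0 - alpha) * x + alpha * e for x, e in zip(img, edge)]


def fit_alpha(img: Sequence[float], edge: Sequence[float], target: Sequence[float],
              raw: float = 0.0, lr: float = 1.0, steps: int = 200) -> float:
    """Learn alpha = sigmoid(raw) by gradient descent on a toy MSE loss.

    raw = 0.0 corresponds to alpha = 0.5 at initialization.
    """
    n = len(img)
    for _ in range(steps):
        alpha = sigmoid(raw)
        fused = fuse(img, edge, alpha)
        # dL/dalpha for L = mean((fused - target)^2), since d(fused)/d(alpha) = edge - img
        grad_alpha = sum(2.0 * (f - t) * (e - x)
                         for f, t, e, x in zip(fused, target, edge, img)) / n
        # Chain rule through the sigmoid keeps alpha inside (0, 1).
        raw -= lr * grad_alpha * alpha * (1.0 - alpha)
    return sigmoid(raw)


img = [1.0, 2.0, 0.5]    # toy "image feature" values
edge = [3.0, 0.0, 1.0]   # toy "edge feature" values
target = fuse(img, edge, 0.3)          # synthetic target with a planted weight of 0.3
print(round(fit_alpha(img, edge, target), 3))  # starts at 0.5 and settles near 0.3
```

The sigmoid reparameterization is only one way to keep the weight bounded. Clamping an unconstrained scalar would also work, but it produces zero gradients whenever the value sits at a bound.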

Table 7.

Performance comparison between fixed and learnable fusion-parameter settings.

Transferability Study. Table 8 presents the results of transferring the proposed edge extraction backbone to Salience-DETR and integrating edge information into its decoder during training. Our edge-guided strategy improves the detection performance of Salience-DETR by 0.4% over the original model. This gain further supports the effectiveness of our method and shows that it can be integrated into other DETR-based architectures, providing accuracy benefits under a different model design and training configuration.

Table 8.

Results of transferring the edge extraction backbone to Salience-DETR.

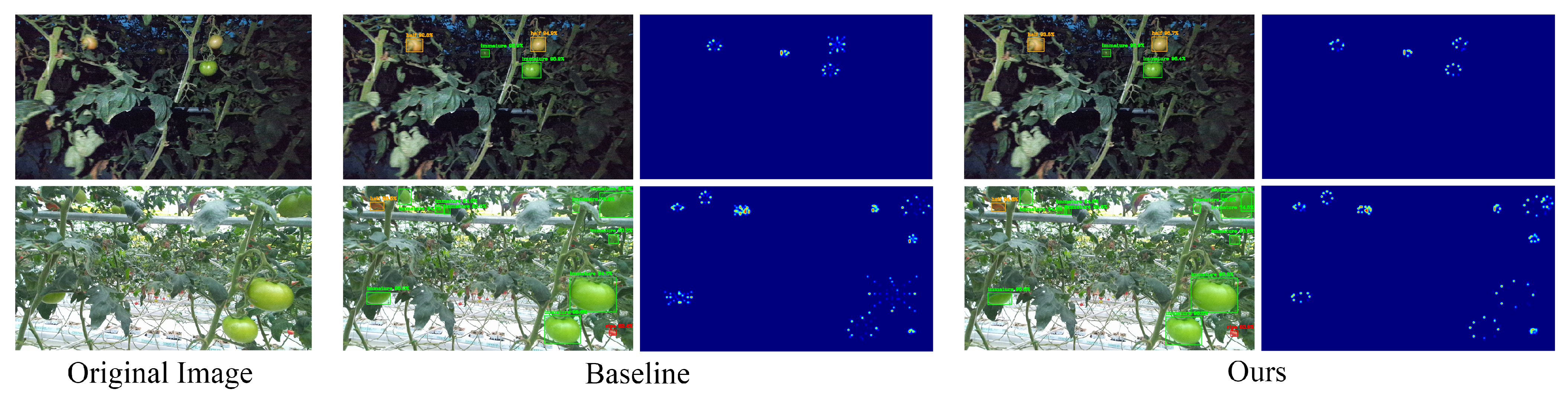

4.5. Visualization

To better understand how the integration of edge information guides the model’s attention, we visualize the cross-attention maps of the decoder. As shown in Figure 5, we present the attention maps of several representative queries from both the baseline and our proposed method. It can be observed that the edge-enhanced queries tend to focus more precisely on object contours compared to those from the baseline. This contour-focused attention enables the model to better adapt to complex environments with occluding branches and leaves. Additionally, emphasizing object boundaries helps to reduce missed detections caused by overlapping fruits.

Figure 5.

Comparison of cross-attention focus on sampled points in the decoder between baseline and edge-guided queries.

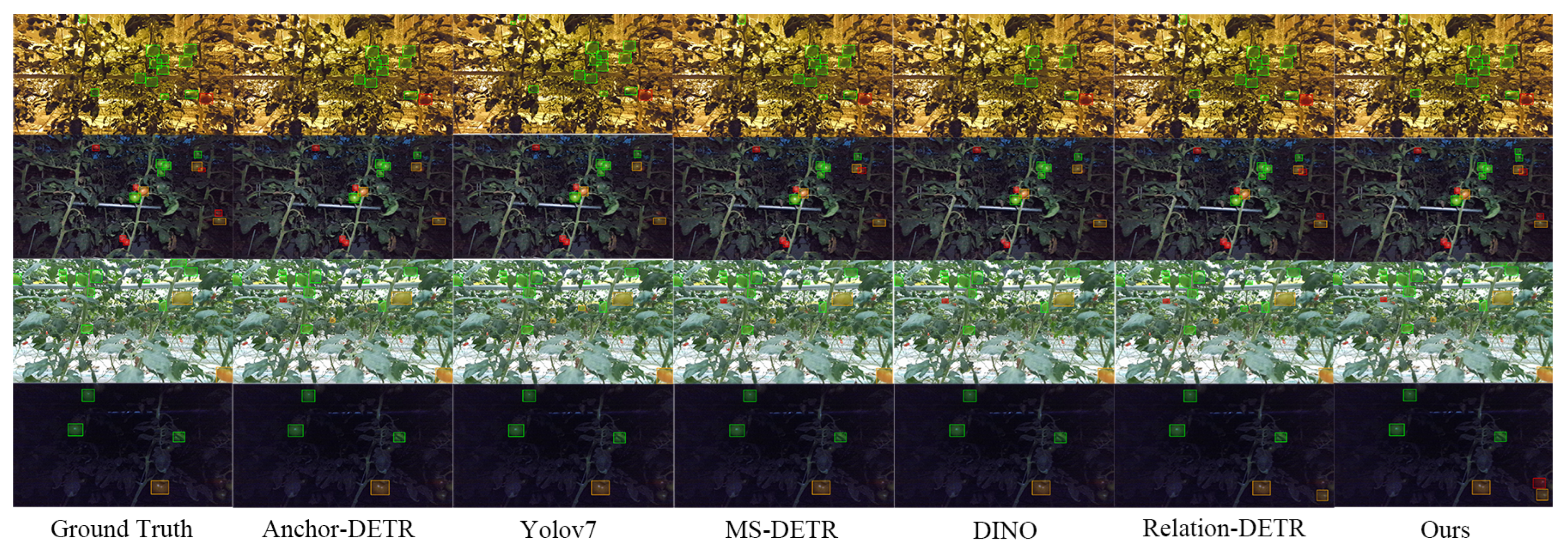

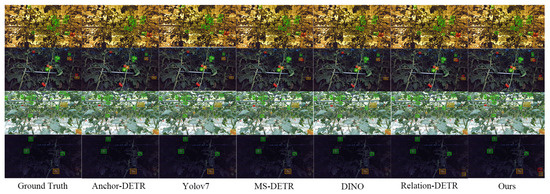

Figure 6 shows the ripeness detection results of different models on the Multimodal Tomato Dataset under four distinct lighting conditions, which reflect common variations in light intensity and angle in open-field cultivation environments. EG-DETR performs strongly in complex tomato-growing scenes characterized by high planting density, severe foliage occlusion, and substantial fruit overlap. It not only accurately identifies the annotated mature tomatoes but also detects some heavily occluded or unannotated fruits, which are typically difficult to locate with traditional methods. These observations further support the generalization ability of EG-DETR in complex scenes and highlight its potential for real-world agricultural applications.

Figure 6.

Detection results of different methods on tomato images under four lighting conditions. The prediction confidence threshold is set to 0.5; green boxes denote immature tomatoes, orange boxes semi-mature tomatoes, and red boxes mature tomatoes.
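The rendering rule in the Figure 6 caption can be expressed as a small filter. It keeps predictions that reach the 0.5 confidence threshold and maps each ripeness class to its box colour. This is a sketch only. The label strings, the `(box, label, score)` tuple layout, the helper name `boxes_to_draw`, and the inclusive `>=` comparison are assumptions, not details taken from the authors' visualization code.

```python
from typing import Dict, List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels

# Colour scheme from the Figure 6 caption; the label strings are hypothetical.
CLASS_COLOURS: Dict[str, str] = {
    "immature": "green",
    "semi-mature": "orange",
    "mature": "red",
}


def boxes_to_draw(preds: Sequence[Tuple[Box, str, float]],
                  threshold: float = 0.5) -> List[Tuple[Box, str]]:
    """Keep predictions whose confidence reaches the threshold and attach a colour."""
    return [
        (box, CLASS_COLOURS[label])
        for box, label, score in preds
        if score >= threshold  # assumed inclusive comparison
    ]


preds = [
    ((0, 0, 10, 10), "mature", 0.92),
    ((5, 5, 20, 20), "immature", 0.49),   # below threshold, not drawn
    ((1, 1, 4, 4), "semi-mature", 0.50),
]
print(boxes_to_draw(preds))  # [((0, 0, 10, 10), 'red'), ((1, 1, 4, 4), 'orange')]
```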

5. Discussion

The proposed EG-DETR model effectively improves detection performance under complex conditions by incorporating edge information, which is particularly helpful in cases of occlusion and fruit clustering. Additionally, our proposed redundant box suppression strategy reduces query overlaps during detection, further enhancing training efficiency and stability. Experimental results on a multimodal tomato dataset and several public benchmarks validate the model’s generalization and adaptability.

While these results confirm the effectiveness of our method, it is equally important to consider the practical constraints of real-world agricultural applications. In recent years, achieving high detection accuracy under limited computational resources has become an increasingly important research focus in agricultural intelligent sensing. This trend is largely driven by the demand for models that can be deployed on edge computing devices in resource-constrained environments. Consequently, many recent studies have proposed lightweight architectures aimed at reducing model complexity while maintaining competitive detection performance [38,39,40].

Despite its promising performance, EG-DETR has limitations that restrict its immediate application in these scenarios. First, its relatively high computational overhead hinders deployment on resource-limited edge devices; it is therefore currently better suited to agricultural applications with relaxed latency requirements, such as crop monitoring systems. Second, the training data are not yet diverse or comprehensive enough to guarantee robust generalization across varied environmental conditions.

To address these challenges, future work will focus on two main directions. First, we plan to apply model compression techniques such as pruning and quantization to reduce computational requirements and enable efficient deployment on edge devices. Second, we plan to explore multimodal data fusion that integrates spectral, thermal, or other sensor information to improve the model's adaptability across agricultural environments.

6. Conclusions

This paper presents EG-DETR, a novel intelligent sensing method for tomato ripeness detection. By introducing edge information into the DETR framework, EG-DETR guides decoder queries to focus more effectively on foreground regions, improving detection performance under challenging conditions such as occlusion and fruit clustering. EG-DETR further employs a redundant box suppression strategy to reduce query overlap, improving both training efficiency and detection stability.

We evaluated EG-DETR on a multimodal tomato dataset to assess its effectiveness in real agricultural scenarios. It achieved 83.7%, surpassing the compared methods and demonstrating strong ripeness recognition for automated harvesting. The model also generalized well to public benchmarks, scoring 51.8% on COCO2017, 32.3% on VisDrone, and 48.4% on NEU-DET. Ablation studies confirmed the contribution of each component to handling occlusion and overlapping fruits.

EG-DETR shows strong potential for complex and dynamic agricultural environments. It remains stable across the tested lighting conditions and adapts to the image variations captured by sensors in open-field environments. This adaptability suggests that EG-DETR can support reliable detection across different times of day, weather conditions, and sensor modalities, making it a promising solution for intelligent sensing in open-field environments.

Author Contributions

Methodology: J.Y. and J.Z.; formal analysis: J.Y. and J.Z.; validation: J.Y., J.Z. and Y.N.; writing—original draft: J.Z.; writing—review and editing: J.Y. and Y.N.; visualization: J.X.; data curation: K.L.; investigation: L.T.; funding acquisition: Y.N. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Natural Science Foundation of Guangdong Province, China (No. 2025A1515011755).

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

Figure A1.

Convergence of the learnable fusion parameter.

References

- Magalhães, S.A.; Castro, L.; Moreira, G.; dos Santos, F.N.; Cunha, M.; Dias, J.; Moreira, A.P. Evaluating the Single-Shot MultiBox Detector and YOLO Deep Learning Models for the Detection of Tomatoes in a Greenhouse. Sensors 2021, 21, 3569. [Google Scholar] [CrossRef] [PubMed]

- Li, P.; Zheng, J.; Li, P.; Long, H.; Li, M.; Gao, L. Tomato maturity detection and counting model based on MHSA-YOLOv8. Sensors 2023, 23, 6701. [Google Scholar] [CrossRef] [PubMed]

- Tianhua, L.; Meng, S.; Xiaoming, D.; Li Yuhua, Z.G.; Guoying, S.; Wenxian, L. Tomato recognition method at the ripening stage based on YOLO v4 and HSV. Trans. Chin. Soc. Agric. Eng. 2021, 37, 183–190. [Google Scholar]

- Wang, A.; Qian, W.; Li, A.; Xu, Y.; Hu, J.; Xie, Y.; Zhang, L. NVW-YOLOv8s: An improved YOLOv8s network for real-time detection and segmentation of tomato fruits at different ripeness stages. Comput. Electron. Agric. 2024, 219, 108833. [Google Scholar] [CrossRef]

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2020; pp. 213–229. [Google Scholar]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the Advances in Neural Information Processing Systems 30 (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017. [Google Scholar]

- Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable detr: Deformable transformers for end-to-end object detection. arXiv 2020, arXiv:2010.04159. [Google Scholar]

- Liu, S.; Li, F.; Zhang, H.; Yang, X.; Qi, X.; Su, H.; Zhu, J.; Zhang, L. Dab-detr: Dynamic anchor boxes are better queries for detr. arXiv 2022, arXiv:2201.12329. [Google Scholar]

- Li, F.; Zhang, H.; Liu, S.; Guo, J.; Ni, L.M.; Zhang, L. Dn-detr: Accelerate detr training by introducing query denoising. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 13619–13627. [Google Scholar]

- Xie, S.; Tu, Z. Holistically-nested edge detection. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 11–18 December 2015; pp. 1395–1403.

- Liu, Y.; Cheng, M.M.; Hu, X.; Wang, K.; Bai, X. Richer convolutional features for edge detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 3000–3009. [Google Scholar]

- Poma, X.S.; Riba, E.; Sappa, A. Dense extreme inception network: Towards a robust cnn model for edge detection. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Snowmass Village, CO, USA, 1–5 March 2020; pp. 1923–1932. [Google Scholar]

- Zhang, Y.; Rao, Y.; Chen, W.J.; Hou, W.H.; Yan, S.L.; Li, Y.; Zhou, C.Q.; Wang, F.Y.; Chu, Y.Y.; Shi, Y.L. Multimodal Image Dataset of Tomato Fruits with Different Maturity. China Sci. Data 2025, 10, 73–88. [Google Scholar] [CrossRef]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149. [Google Scholar] [CrossRef] [PubMed]

- Eggert, C.; Brehm, S.; Winschel, A.; Zecha, D.; Lienhart, R. A closer look: Small object detection in faster R-CNN. In Proceedings of the 2017 IEEE International Conference on Multimedia and Expo (ICME), Hong Kong, China, 10–14 July 2017; pp. 421–426. [Google Scholar]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788. [Google Scholar]

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271. [Google Scholar]

- Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W.; et al. YOLOv6: A single-stage object detection framework for industrial applications. arXiv 2022, arXiv:2209.02976. [Google Scholar]

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475. [Google Scholar]

- Li, H.; Qu, H. DASSF: Dynamic-attention scale-sequence fusion for aerial object detection. In Proceedings of the 13th International Conference, CVM 2025, Hong Kong SAR, China, 19–21 April 2025; Springer: Berlin/Heidelberg, Germany, 2025; pp. 212–227. [Google Scholar]

- Cao, X.; Yuan, P.; Feng, B.; Niu, K. Cf-detr: Coarse-to-fine transformers for end-to-end object detection. Proc. AAAI Conf. Artif. Intell. 2022, 36, 185–193. [Google Scholar] [CrossRef]

- Huang, Y.X.; Liu, H.I.; Shuai, H.H.; Cheng, W.H. Dq-detr: Detr with dynamic query for tiny object detection. In Proceedings of the 18th European Conference, Milan, Italy, 29 September–4 October 2024; Springer: Berlin/Heidelberg, Germany, 2024; pp. 290–305. [Google Scholar]

- Wang, Y.; Zhang, X.; Yang, T.; Sun, J. Anchor detr: Query design for transformer-based detector. Proc. AAAI Conf. Artif. Intell. 2022, 36, 2567–2575. [Google Scholar] [CrossRef]

- Gao, Z.; Wang, L.; Han, B.; Guo, S. Adamixer: A fast-converging query-based object detector. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 5364–5373. [Google Scholar]

- Zhang, G.; Luo, Z.; Yu, Y.; Cui, K.; Lu, S. Accelerating DETR convergence via semantic-aligned matching. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 949–958. [Google Scholar]

- Zhang, H.; Li, F.; Liu, S.; Zhang, L.; Su, H.; Zhu, J.; Ni, L.M.; Shum, H.Y. Dino: Detr with improved denoising anchor boxes for end-to-end object detection. arXiv 2022, arXiv:2203.03605. [Google Scholar]

- Hou, X.; Liu, M.; Zhang, S.; Wei, P.; Chen, B. Salience DETR: Enhancing Detection Transformer with Hierarchical Salience Filtering Refinement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 17574–17583. [Google Scholar]

- Hou, X.; Liu, M.; Zhang, S.; Wei, P.; Chen, B.; Lan, X. Relation detr: Exploring explicit position relation prior for object detection. In Proceedings of the 18th European Conference, Milan, Italy, 29 September–4 October 2024; Springer: Berlin/Heidelberg, Germany, 2024; pp. 89–105. [Google Scholar]

- Soria, X.; Li, Y.; Rouhani, M.; Sappa, A.D. Tiny and efficient model for the edge detection generalization. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV) Workshops, Paris, France, 2–3 October 2023; pp. 1364–1373. [Google Scholar]

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft coco: Common objects in context. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Proceedings, Part V 13. Springer: Berlin/Heidelberg, Germany, 2014; pp. 740–755. [Google Scholar]

- Zhu, P.; Wen, L.; Du, D.; Bian, X.; Fan, H.; Hu, Q.; Ling, H. Detection and tracking meet drones challenge. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 7380–7399. [Google Scholar] [CrossRef] [PubMed]

- He, Y.; Song, K.; Meng, Q.; Yan, Y. An End-to-End Steel Surface Defect Detection Approach via Fusing Multiple Hierarchical Features. IEEE Trans. Instrum. Meas. 2020, 69, 1493–1504. [Google Scholar] [CrossRef]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar]

- Zhao, C.; Sun, Y.; Wang, W.; Chen, Q.; Ding, E.; Yang, Y.; Wang, J. MS-DETR: Efficient DETR Training with Mixed Supervision. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 17027–17036. [Google Scholar]

- Ye, M.; Ke, L.; Li, S.; Tai, Y.W.; Tang, C.K.; Danelljan, M.; Yu, F. Cascade-DETR: Delving into high-quality universal object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 6704–6714. [Google Scholar]

- Pu, Y.; Liang, W.; Hao, Y.; Yuan, Y.; Yang, Y.; Zhang, C.; Hu, H.; Huang, G. Rank-DETR for high quality object detection. In Proceedings of the Advances in Neural Information Processing Systems 36 (NeurIPS 2023), New Orleans, LA, USA, 10–16 December 2023. [Google Scholar]

- Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. Yolov10: Real-time end-to-end object detection. In Proceedings of the Advances in Neural Information Processing Systems 37 (NeurIPS 2024), Vancouver, BC, Canada, 10–15 December 2024; pp. 107984–108011. [Google Scholar]

- Huang, Y.; Wang, X.; Liu, X.; Cai, L.; Feng, X.; Chen, X. A Lightweight Citrus Ripeness Detection Algorithm Based on Visual Saliency Priors and Improved RT-DETR. Agronomy 2025, 15, 1173. [Google Scholar] [CrossRef]

- Wang, S.; Jiang, H.; Yang, J.; Ma, X.; Chen, J.; Li, Z.; Tang, X. Lightweight tomato ripeness detection algorithm based on the improved RT-DETR. Front. Plant Sci. 2024, 15, 1415297. [Google Scholar] [CrossRef] [PubMed]

- Sapkota, R.; Cheppally, R.H.; Sharda, A.; Karkee, M. RF-DETR Object Detection vs YOLOv12: A Study of Transformer-based and CNN-based Architectures for Single-Class and Multi-Class Greenfruit Detection in Complex Orchard Environments Under Label Ambiguity. arXiv 2025, arXiv:2504.13099. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).