Dual-Aspect Active Learning with Domain-Adversarial Training for Low-Resource Misinformation Detection

Abstract

1. Introduction

- Integration of dual-aspect active learning with domain-adversarial training. To our knowledge, this is the first work to explicitly combine a dual-feature (textual and affective) active learning approach with adversarial domain adaptation, targeting both the scarcity of labeled data and the domain discrepancies that hamper misinformation detection.

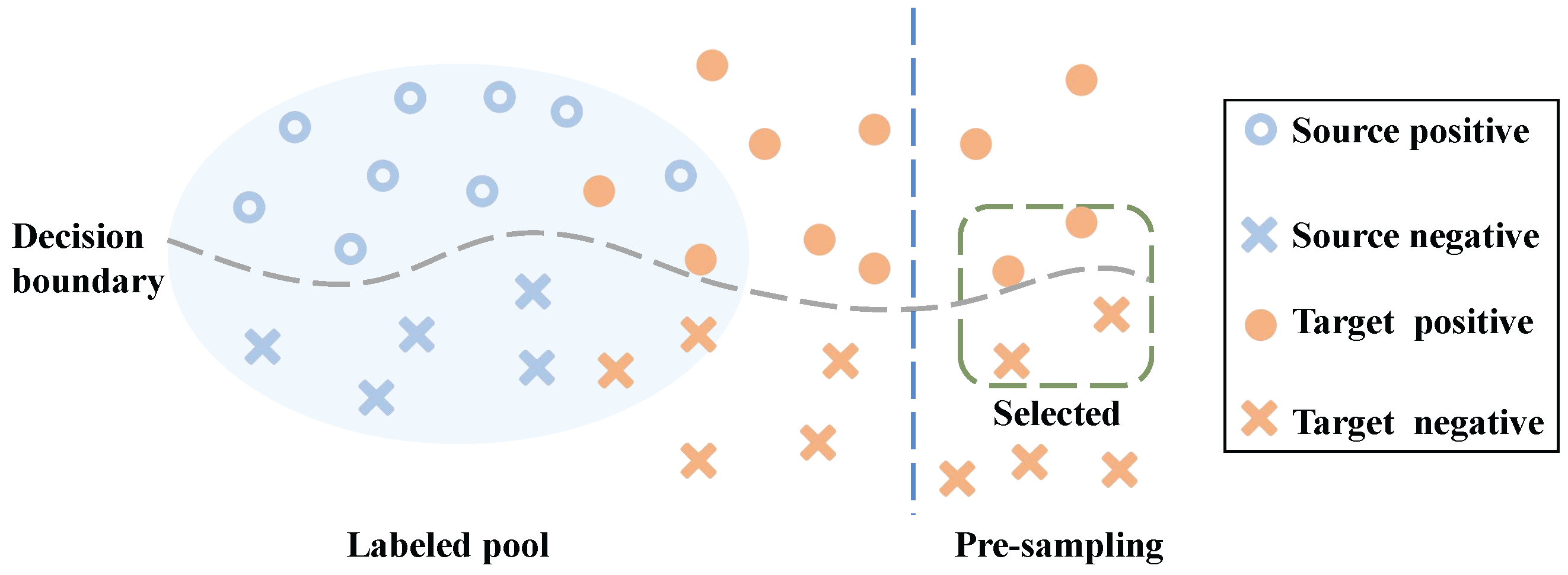

- A novel dual-aspect sampling strategy. We propose a two-stage active learning sampling mechanism that uses both textual and affective features to identify samples that are informative (dissimilar to the labeled data) and uncertain (close to the decision boundary), so that the annotation budget is spent on the most useful posts.

- Enhanced effectiveness under low-resource conditions. Extensive experimental evaluations demonstrate that DDT significantly outperforms existing methods in low-resource misinformation detection tasks. Our detailed analyses confirm the individual contributions and effectiveness of both the dual-aspect sampling and domain-adversarial training components.

2. Related Work

2.1. Fake Information Detection

2.2. Emotion-Aware Detection

2.3. Active Learning

2.4. Active Domain Adaptation

3. Approach

3.1. Problem Definition

- Textual representational divergence: the semantic dissimilarity between an unlabeled instance and the existing labeled data in embedding space;

- Affective signal variance: the emotional distinctiveness or salience derived from affective features.

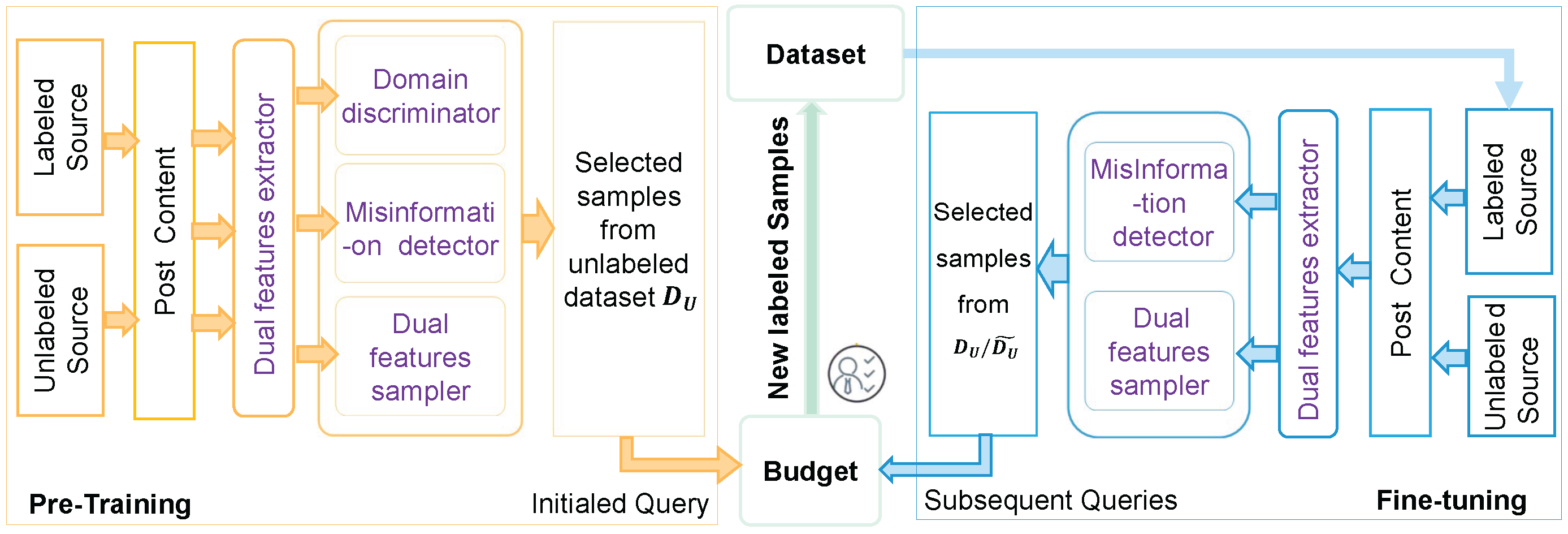

3.2. Framework Overview

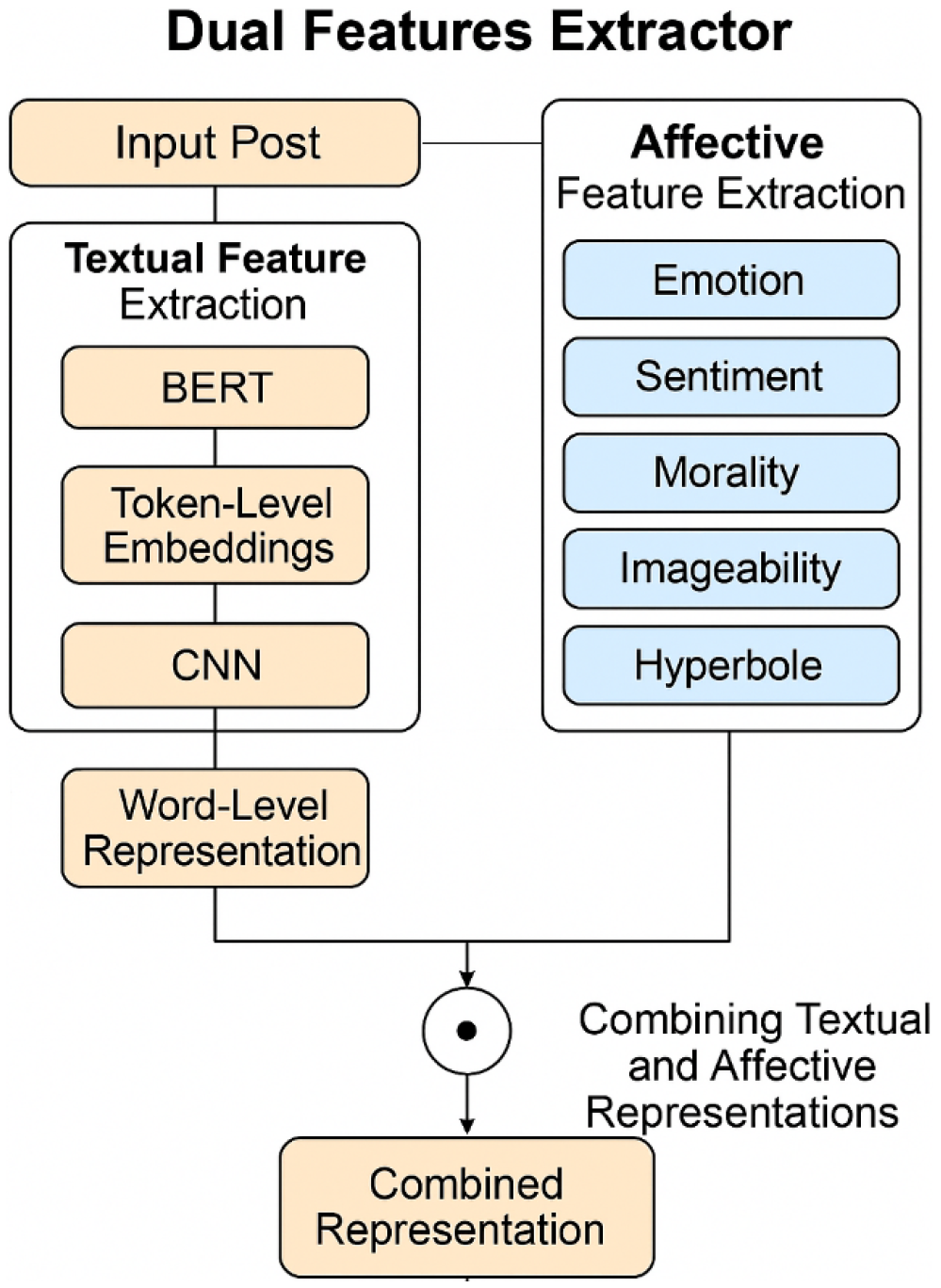

3.3. Dual-Feature Extractor

3.3.1. Textual Feature Extraction

3.3.2. Affective Feature Extraction

- Emotion: We utilize the NRC Emotion Lexicon, which associates English words with eight basic emotions: anger, fear, anticipation, trust, surprise, sadness, joy, and disgust. For each post, we identify the presence of words linked to these emotions and represent them as an eight-dimensional binary vector, where each dimension indicates the presence (1) or absence (0) of words associated with the corresponding emotion.

- Sentiment: Using the same NRC Lexicon, we detect whether the text expresses positive or negative sentiment. This is encoded as a two-dimensional binary vector, indicating the presence of positive and negative sentiment words, respectively.

- Morality: We employ the Moral Foundations Dictionary (MFD), which categorizes words into five moral foundations: Care/Harm, Fairness/Cheating, Loyalty/Betrayal, Authority/Subversion, and Purity/Degradation. Each foundation is divided into virtue and vice dimensions, resulting in ten categories in total. We scan each post for words associated with these categories and represent the findings as a 10-dimensional binary vector.

- Imageability: We reference the MRC Psycholinguistic Database to obtain imageability scores for words within the post. Imageability refers to the ease with which a word evokes a mental image. We compute the average imageability score of content words in the post, normalize it to the range [0, 1], and encode it as a scalar value.

- Hyperbole: We compile a lexicon of hyperbolic terms—words that convey exaggerated or overstated expression. Each post is examined for the presence of such terms, and this feature is encoded as a binary indicator (1 if any hyperbolic word is present, 0 otherwise).
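
Taken together, the five signals above give 22 values per post (8 + 2 + 10 + 1 + 1) if they are simply concatenated. The sketch below illustrates that encoding under several assumptions: the tiny dictionaries are toy stand-ins for the NRC, MFD, MRC, and hyperbole lexicons, `affective_vector` and `presence_vector` are hypothetical names, tokenization is a plain whitespace split, imageability is averaged over the words found in the toy lexicon, and the 100 to 700 rating scale used for normalization is assumed rather than taken from the text.

```python
from typing import Dict, List, Set

EMOTIONS = ["anger", "fear", "anticipation", "trust",
            "surprise", "sadness", "joy", "disgust"]
SENTIMENTS = ["positive", "negative"]
# Five moral foundations, each split into virtue and vice: ten categories.
MORAL_CATEGORIES = [f"{foundation}.{pole}"
                    for foundation in ("care", "fairness", "loyalty",
                                       "authority", "purity")
                    for pole in ("virtue", "vice")]

# Toy stand-ins for the real lexicons (illustrative entries only).
EMOTION_LEXICON: Dict[str, Set[str]] = {
    "attack": {"anger", "fear"},
    "hope": {"anticipation", "joy", "trust"},
}
SENTIMENT_LEXICON: Dict[str, Set[str]] = {
    "attack": {"negative"},
    "hope": {"positive"},
}
MORAL_LEXICON: Dict[str, Set[str]] = {
    "attack": {"care.vice"},
    "protect": {"care.virtue"},
}
IMAGEABILITY: Dict[str, float] = {"attack": 520.0, "hope": 340.0, "plane": 640.0}
HYPERBOLE: Set[str] = {"unbelievable", "shocking"}


def presence_vector(tokens: List[str], lexicon: Dict[str, Set[str]],
                    categories: List[str]) -> List[float]:
    """Binary vector: 1.0 if any token is linked to the category, else 0.0."""
    found: Set[str] = set()
    for token in tokens:
        found |= lexicon.get(token, set())
    return [1.0 if category in found else 0.0 for category in categories]


def affective_vector(post: str) -> List[float]:
    """Concatenate emotion (8), sentiment (2), morality (10),
    imageability (1), and hyperbole (1) into a 22-dimensional vector."""
    tokens = post.lower().split()
    emotion = presence_vector(tokens, EMOTION_LEXICON, EMOTIONS)
    sentiment = presence_vector(tokens, SENTIMENT_LEXICON, SENTIMENTS)
    morality = presence_vector(tokens, MORAL_LEXICON, MORAL_CATEGORIES)

    scores = [IMAGEABILITY[t] for t in tokens if t in IMAGEABILITY]
    if scores:
        mean_score = sum(scores) / len(scores)
        imageability = (mean_score - 100.0) / 600.0  # assumed 100-700 scale
    else:
        imageability = 0.0  # no rated word found in the post

    hyperbole = 1.0 if any(t in HYPERBOLE for t in tokens) else 0.0
    return emotion + sentiment + morality + [imageability, hyperbole]


vec = affective_vector("shocking attack on plane")
print(len(vec))  # 22
```

Binary presence vectors keep the representation independent of post length, at the cost of ignoring how often an emotion word occurs.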

3.3.3. Combining Textual and Affective Representations

3.4. Domain Discriminator for Domain-Adversarial Training

3.4.1. Domain Discriminator

3.4.2. Adversarial Learning with Gradient Reversal

3.5. Dual-Feature Sampler and Misinformation Detector for Sampling Strategies

3.5.1. Misinformation Detector

3.5.2. Dual-Feature Sampler

3.5.3. Sampling Strategy

- Pre-sampling using dual-feature scores. We compute a score for each unlabeled sample that reflects its dissimilarity from the labeled pool, using the dual-feature sampler output. A higher score indicates greater textual and affective divergence from the labeled pool. We then select the unlabeled samples with the highest scores as our pre-sampled set.

- Uncertainty-based refinement. From this pre-sampled set of candidates, we use the misinformation detector to compute each sample’s entropy (Equation (7)). We then pick the b samples with the highest entropy values, indicating the greatest uncertainty, as our final query set.
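
The two stages can be sketched as follows. This is a minimal illustration, not the authors' implementation: `two_stage_query`, the pre-sample size `k`, and the toy inputs are hypothetical, the dual-feature scores and class probabilities are assumed to come from the sampler and the detector, and the entropy is assumed to be Shannon entropy with the natural logarithm.

```python
import math
from typing import List, Sequence


def entropy(probs: Sequence[float]) -> float:
    """Shannon entropy (natural log) of a predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def two_stage_query(dual_scores: Sequence[float],
                    class_probs: Sequence[Sequence[float]],
                    k: int, b: int) -> List[int]:
    """Return indices of the b samples to send for annotation.

    Stage 1 keeps the k samples with the highest dual-feature score
    (most dissimilar to the labeled pool). Stage 2 keeps, among those,
    the b samples with the highest predictive entropy.
    """
    ranked = sorted(range(len(dual_scores)),
                    key=lambda i: dual_scores[i], reverse=True)
    candidates = ranked[:k]
    candidates.sort(key=lambda i: entropy(class_probs[i]), reverse=True)
    return candidates[:b]


# Toy pool of five unlabeled posts (hypothetical numbers).
scores = [0.9, 0.1, 0.8, 0.7, 0.2]
probs = [[0.99, 0.01], [0.5, 0.5], [0.6, 0.4], [0.55, 0.45], [0.5, 0.5]]
print(two_stage_query(scores, probs, k=3, b=2))  # [3, 2]
```

In the toy pool, posts 1 and 4 have the highest entropy but are never queried, because their low dual-feature scores remove them in the first stage. That ordering is the point of the design: uncertainty is only considered among samples that already differ from the labeled pool.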

3.6. Algorithm Optimization

Algorithm 1. Pre-training process in DDT.

Algorithm 2. Fine-tuning process in DDT.

4. Experiments

4.1. Experimental Setup

4.2. Baselines

4.2.1. Group A: Active Learning Strategies

- Random. A simple sampling strategy that selects unlabeled instances uniformly at random, without considering any particular features or domain knowledge.

- Uncertainty [46]. An approach that picks samples for which the model is least certain. We estimate uncertainty using the predicted probability distribution (entropy), where higher entropy signifies greater uncertainty.

- Core-set [47]. This strategy queries samples that are farthest (in Euclidean distance) from any labeled instance, under the assumption that these will yield the most novel information for model improvement.

- TQS [38]. Transferable Query Selection combines three criteria (a transferable committee, uncertainty, and domainness) to identify highly informative samples under domain shifts. It also incorporates random sampling to increase diversity among the selected queries.

- DAAL [48]. Domain-adversarial active learning leverages textual and affective features to identify samples most dissimilar to labeled data and uses adversarial domain training to learn transferable representations.
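
To make the distance criterion of the Core-set baseline concrete, the sketch below uses a greedy farthest-first selection in Euclidean space. It is a simplified illustration and not the implementation of [47]; `coreset_query` and the 2-D toy embeddings are hypothetical, and the labeled pool is assumed to be non-empty.

```python
import math
from typing import List, Sequence

Point = Sequence[float]


def coreset_query(labeled: List[Point], unlabeled: List[Point],
                  b: int) -> List[int]:
    """Greedily pick b unlabeled points, each time taking the point whose
    distance to its nearest labeled-or-already-chosen point is largest."""
    # Distance from every unlabeled point to its nearest labeled point.
    min_dist = [min(math.dist(u, l) for l in labeled) for u in unlabeled]
    chosen: List[int] = []
    for _ in range(min(b, len(unlabeled))):
        idx = max(range(len(unlabeled)), key=lambda i: min_dist[i])
        chosen.append(idx)
        # The chosen point now counts as covered territory.
        for i, u in enumerate(unlabeled):
            min_dist[i] = min(min_dist[i], math.dist(u, unlabeled[idx]))
        min_dist[idx] = -1.0  # never select the same point twice
    return chosen


labeled_pool = [(0.0, 0.0)]
unlabeled_pool = [(1.0, 0.0), (5.0, 0.0), (5.5, 0.0), (0.0, 4.0)]
print(coreset_query(labeled_pool, unlabeled_pool, b=2))  # [2, 3]
```

The point at (5.0, 0.0) is far from the labeled pool but is skipped in the second round because it lies close to the point already chosen, which is how this criterion favors coverage over raw distance.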

4.2.2. Group B: Cross-Domain Fake Information Detection Models

- EANNs. Event-Adversarial Neural Networks primarily incorporate text features from multiple domains through adversarial learning to boost cross-domain detection performance.

- EDDFNs [49]. These learn domain vectors via unsupervised methods and augment them with both domain-specific and cross-domain information for improved fake information detection across diverse domains.

- MDFEND [50]. This employs multiple domain experts and domain gating to integrate text features with domain-aware representations, thereby enhancing cross-domain detection capability.

- DAAL [48]. As noted above, DAAL combines textual and affective features with adversarial domain training, effectively transferring knowledge across domains.

- FinDCL [51]. Fine-Grained Discrepancy Contrastive Learning simulates nuanced differences between fake news and event-related truths via adversarial pre-training. It then refines truth extraction under a contrastive framework to better capture subtle falsehood patterns and reduce redundancy.

4.2.3. Comparison with Baselines

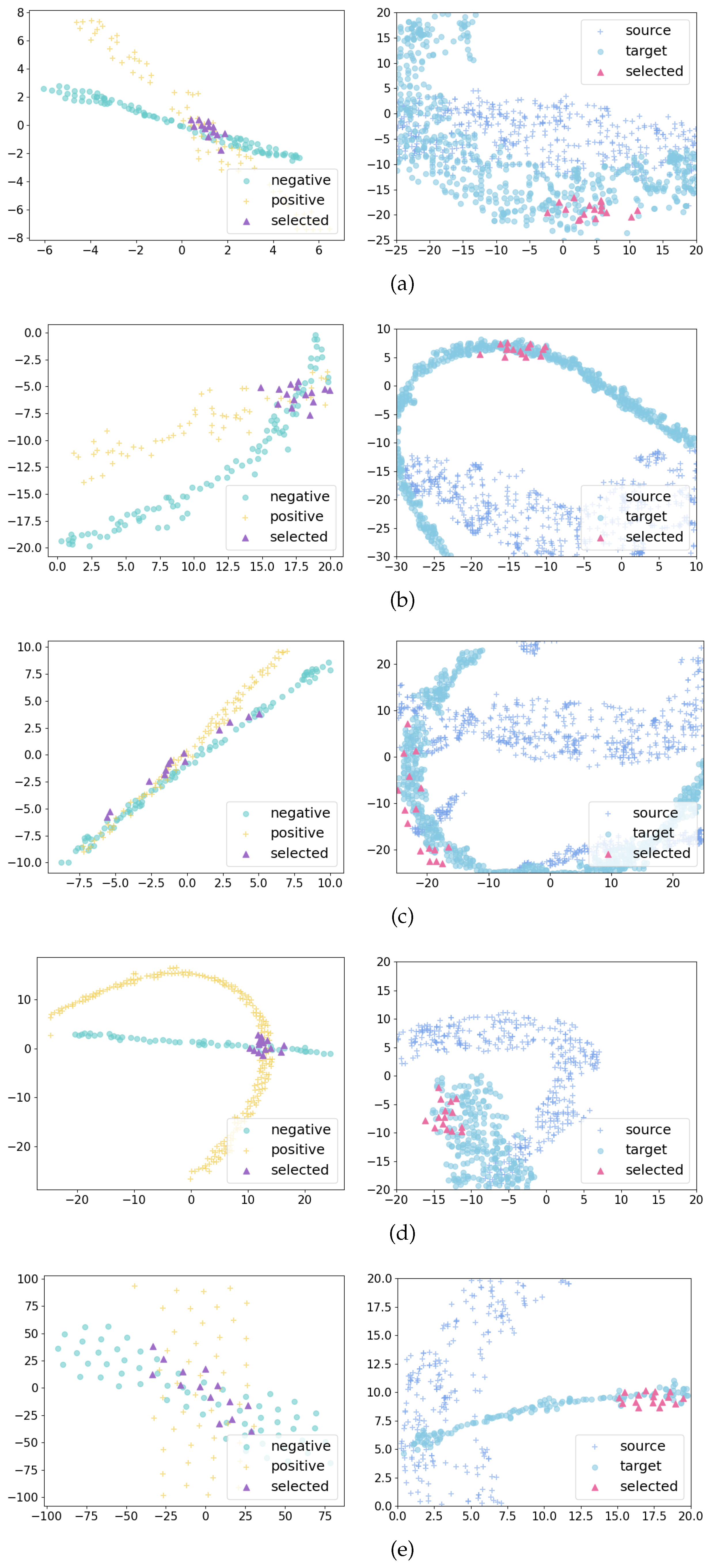

4.2.4. Analysis of Active Learning Sampling

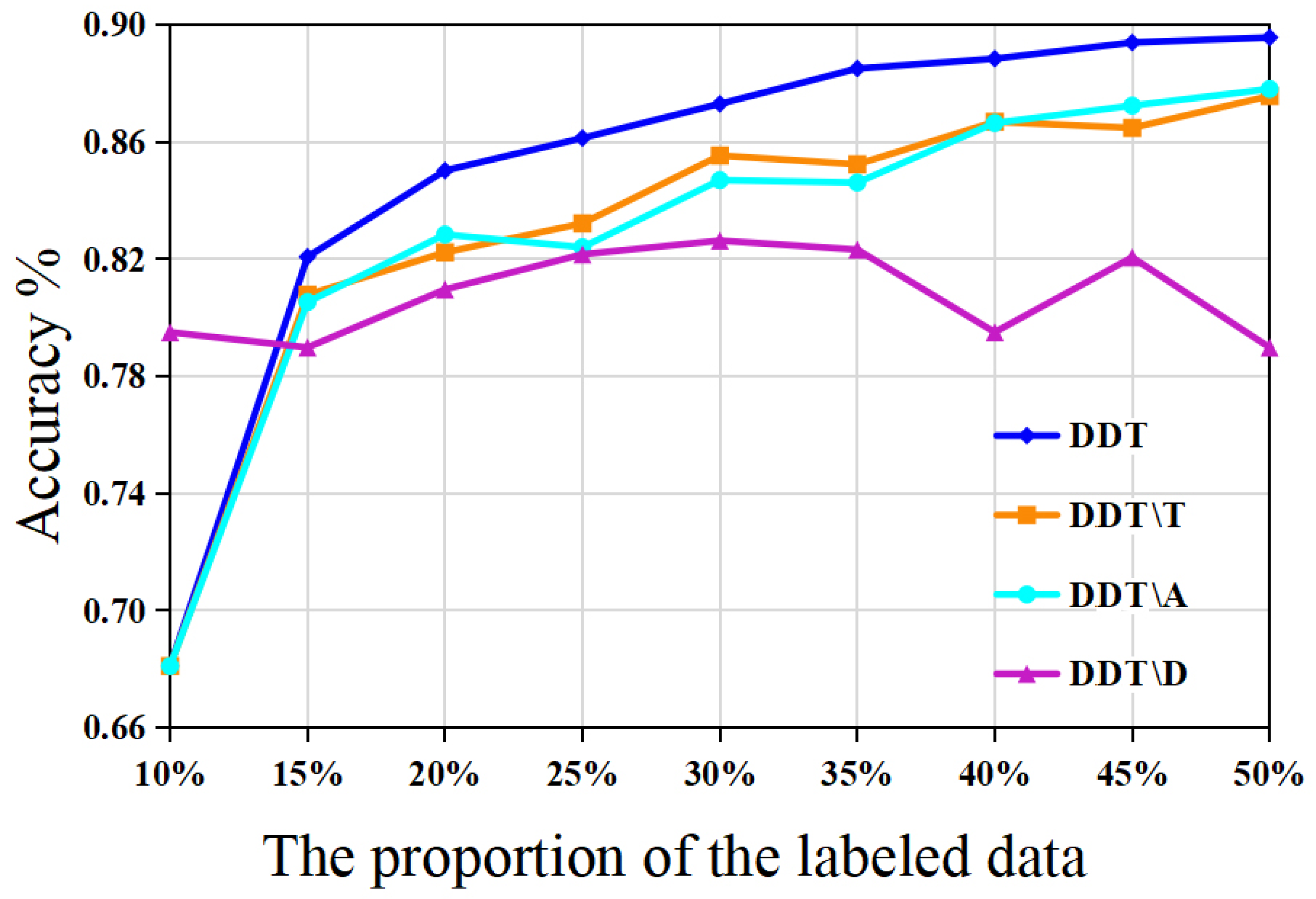

4.2.5. Ablation Analysis

- DDT\T: DDT without textual features in the dual-feature sampler; only affective cues were used for sample selection.

- DDT\A: DDT without affective features in the sampler, relying purely on textual signals to pick informative samples.

- DDT\D: DDT without a domain discriminator, i.e., removing adversarial domain training during the pre-training stage and relying solely on labeled target data.

- The integration of textual and affective features proves advantageous for DDT to query the most informative posts.

- The shared domain features learned by the dual-feature extractor are conducive to improving DDT’s performance in a new domain.

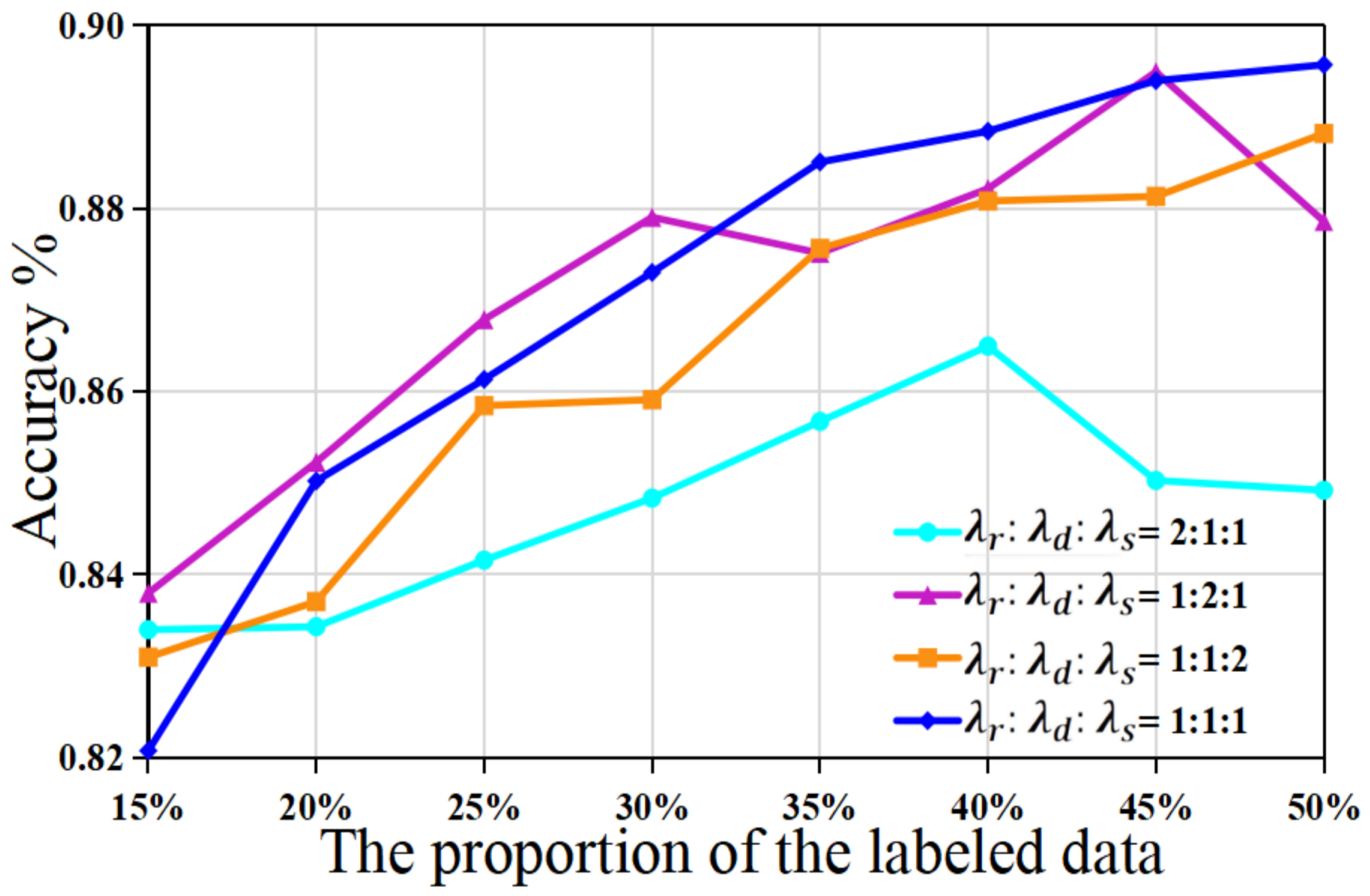

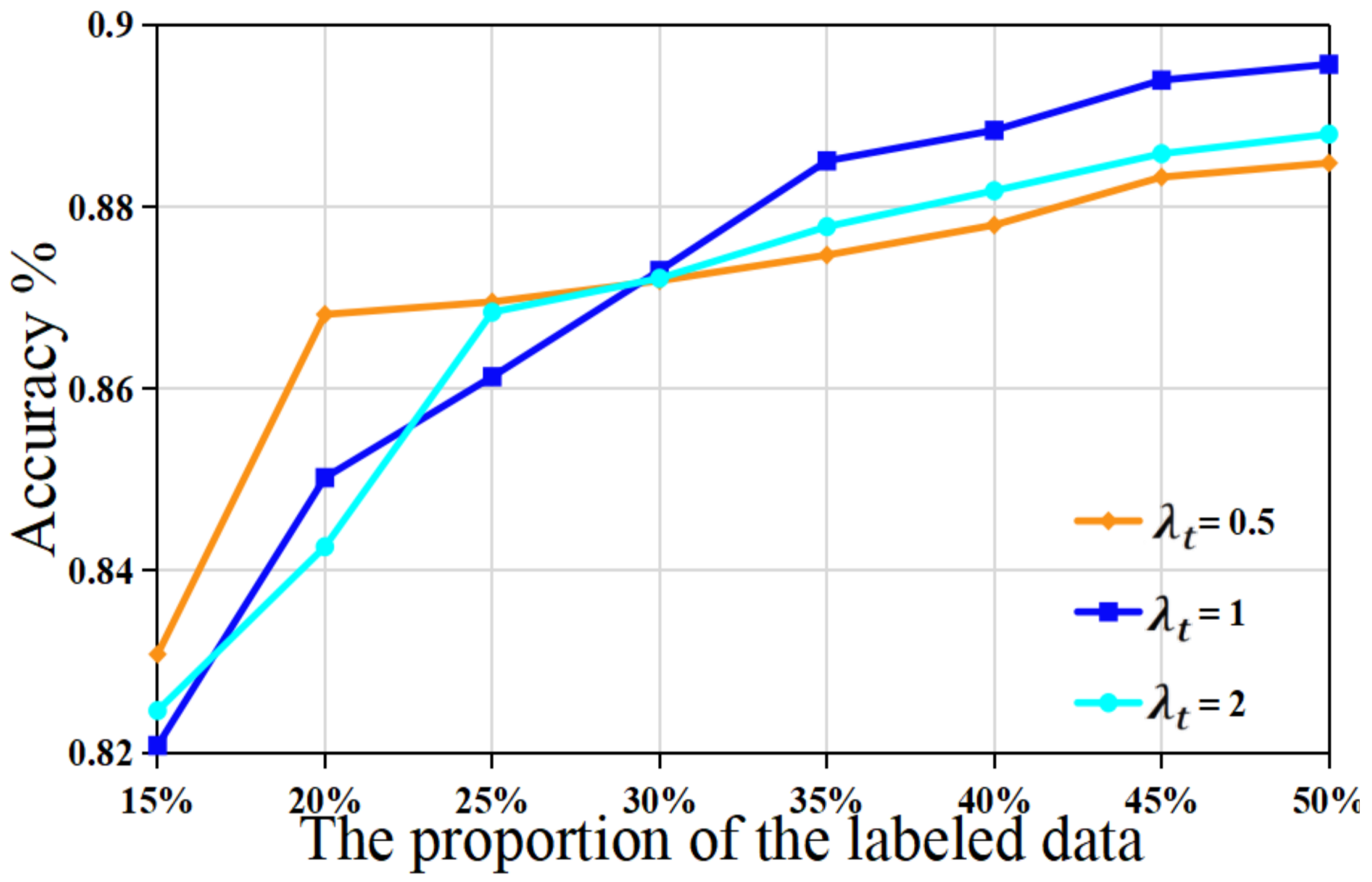

4.2.6. Hyperparameter Sensitivity

5. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Vosoughi, S.; Roy, D.; Aral, S. The spread of true and false news online. Science 2018, 359, 1146–1151.

2. Wang, J.; Gao, M.; Wang, Z.; Wang, R.; Wen, J. Robustness Analysis of Triangle Relations Attack. In Proceedings of the 2020 IEEE 13th International Conference on Cloud Computing, Beijing, China, 18–24 October 2020; pp. 557–565.

3. Liu, Z.; Qin, T.; Sun, Q.; Li, S.; Song, H.H.; Chen, Z. SIRQU: Dynamic Quarantine Defense Model for Online Rumor Propagation Control. IEEE Trans. Comput. Soc. Syst. 2022, 9, 1703–1714.

4. Amira, A.; Derhab, A.; Hadjar, S.; Merazka, M.; Alam, M.G.R.; Hassan, M.M. Detection and Analysis of Fake News Users’ Communities in Social Media. IEEE Trans. Comput. Soc. Syst. 2023, 11, 5050–5059.

5. Yu, W.; Ge, J.; Chen, Z.; Liu, H.; Ouyang, M.; Zheng, Y.; Kong, W. Research on Fake News Detection Based on Dual Evidence Perception. Eng. Appl. Artif. Intell. 2024, 133, 108271.

6. Jing, J.; Li, F.; Song, B.; Zhang, Z.; Choo, K.K.R. Disinformation Propagation Trend Analysis and Identification Based on Social Situation Analytics and Multilevel Attention Network. IEEE Trans. Comput. Soc. Syst. 2023, 10, 507–522.

7. Dong, X.; Victor, U.; Qian, L. Two-Path Deep Semisupervised Learning for Timely Fake News Detection. IEEE Trans. Comput. Soc. Syst. 2020, 7, 1386–1398.

8. Babaei, M.; Kulshrestha, J.; Chakraborty, A.; Redmiles, E.M.; Cha, M.; Gummadi, K.P. Analyzing Biases in Perception of Truth in News Stories and Their Implications for Fact Checking. IEEE Trans. Comput. Soc. Syst. 2022, 9, 839–850.

9. Gao, F.; Pi, D.; Chen, J. Balanced and robust unsupervised Open Set Domain Adaptation via joint adversarial alignment and unknown class isolation. Expert Syst. Appl. 2024, 238, 122127.

10. Yu, Y.; Karimi, H.R.; Shi, P.; Peng, R.; Zhao, S. A new multi-source information domain adaption network based on domain attributes and features transfer for cross-domain fault diagnosis. Mech. Syst. Signal Process. 2024, 211, 111194.

11. Tang, Y.; Zhang, L.; Zhang, W.; Jiang, Z. Multi-task convex combination interpolation for meta-learning with fewer tasks. Knowl.-Based Syst. 2024, 296, 111839.

12. Ghanem, B.; Ponzetto, S.P.; Rosso, P.; Rangel, F. Fakeflow: Fake news detection by modeling the flow of affective information. arXiv 2021, arXiv:2101.09810.

13. Miao, X.; Rao, D.; Jiang, Z. Syntax and sentiment enhanced bert for earliest rumor detection. In Proceedings of the NLPCC 2021, Qingdao, China, 13–17 October 2021; Springer: Berlin/Heidelberg, Germany, 2021; pp. 570–582.

14. Huang, Y.; Zhang, W.; Li, M.; Chen, X. EML: Emotion-Aware Meta Learning for Cross-Event False Information Detection. ACM Trans. Knowl. Discov. Data TKDD 2024, 18, 1–25.

15. Yuan, H.; Zheng, J.; Ye, Q.; Qian, Y.; Zhang, Y. Improving fake news detection with domain-adversarial and graph-attention neural network. Decis. Support Syst. 2021, 151, 113633.

16. Gao, J.; Han, S.; Song, X.; Ciravegna, F. Rp-dnn: A tweet level propagation context based deep neural networks. arXiv 2020, arXiv:2002.12683.

17. Verma, P.K.; Agrawal, P.; Amorim, I.; Prodan, R. WELFake: Word Embedding Over Linguistic Features for Fake News Detection. IEEE Trans. Comput. Soc. Syst. 2021, 8, 881–893.

18. Xu, F.; Zeng, L.; Huang, Q.; Yan, K.; Wang, M.; Sheng, V.S. Hierarchical graph attention networks for multi-modal rumor detection on social media. Neurocomputing 2024, 569, 127112.

19. Zhou, H.; Ma, T.; Rong, H.; Qian, Y.; Tian, Y.; Al-Nabhan, N. MDMN: Multi-task and Domain Adaptation based Multi-modal Network for early rumor detection. Expert Syst. Appl. 2022, 195, 116517.

20. Bian, T.; Xiao, X.; Xu, T.; Zhao, P.; Huang, W.; Rong, Y.; Huang, J. Rumor detection on social media with bi-directional graph convolutional networks. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 549–556.

21. Chen, Y.; Li, D.; Zhang, P.; Sui, J.; Lv, Q.; Tun, L.; Shang, L. Cross-modal ambiguity learning for multimodal fake news detection. In Proceedings of the ACM Web Conference 2022, Lyon, France, 25–29 April 2022; pp. 2897–2905.

22. Lee, J.; Park, S.; Ko, Y. Korean Hate Speech and News Dataset for Detecting Harmful Language on Social Media. Appl. Sci. 2021, 11, 903.

23. Alhindi, T.; Petridis, S.; Damljanovic, D. Detecting Fake News in Arabic Using Deep Learning. In Proceedings of the 4th Workshop on Open-Source Arabic Corpora and Processing Tools, Marseille, France, 11–16 May 2020.

24. Chakraborty, T.; Bandyopadhyay, S.; Ghosh, S.; Goyal, P. Multilingual and Multimodal Fake News Detection Using XLM-R and VisualBERT. In Proceedings of the 12th Language Resources and Evaluation Conference, Marseille, France, 11–16 May 2020; pp. 1097–1106.

25. Blanco-Fernández, Y.; Otero-Vizoso, J.; Gil-Solla, A.; García-Duque, J. Enhancing Misinformation Detection in Spanish Language with Deep Learning: BERT and RoBERTa Transformer Models. Appl. Sci. 2024, 14, 9729.

26. Tretiakov, A.; Martín, A.; Camacho, D. Detection of False Information in Spanish Using Machine Learning Techniques. In Proceedings of the International Conference on Intelligent Data Engineering and Automated Learning, Évora, Portugal, 22–24 November 2023.

27. Pavlyshenko, B.M. Analysis of Disinformation and Fake News Detection Using Fine-Tuned Large Language Model. arXiv 2023, arXiv:2309.04704.

28. Newport, A.; Jankowicz, N. Russian networks flood the Internet with propaganda, aiming to corrupt AI chatbots. Bull. At. Sci. 2025. Available online: https://thebulletin.org/2025/03/russian-networks-flood-the-internet-with-propaganda-aiming-to-corrupt-ai-chatbots/ (accessed on 20 May 2025).

29. Wang, Z.; Yuan, L.; Zhang, Z.; Zhao, Q. Bridging Cognition and Emotion: Empathy-Driven Multimodal Misinformation Detection. arXiv 2025, arXiv:2504.17332.

30. Xu, X.; Li, X.; Wang, T.; Jiang, Y. AMPLE: Emotion-Aware Multimodal Fusion Prompt Learning for Fake News Detection. In Proceedings of the 31st International Conference on Multimedia Modeling (MMM 2025), Nara, Japan, 8–10 January 2025; Lecture Notes in Computer Science. Springer: Berlin/Heidelberg, Germany, 2025; Volume 15520, pp. 86–100.

31. Liu, Z.; Zhang, T.; Yang, K.; Thompson, P.; Yu, Z.; Ananiadou, S. Emotion Detection for Misinformation: A Review. Inf. Fusion 2024, 107, 102300.

32. Cardoso, T.N.; Silva, R.M.; Canuto, S.; Moro, M.M.; Gonçalves, M.A. Ranked batch-mode active learning. Inf. Sci. 2017, 379, 313–337.

33. Kirsch, A.; Van Amersfoort, J.; Gal, Y. Batchbald: Efficient and diverse batch acquisition for deep bayesian active learning. In Advances in Neural Information Processing Systems; The MIT Press: Cambridge, MA, USA, 2019; Volume 32.

34. Zhang, B.; Li, L.; Yang, S.; Wang, S.; Zha, Z.J.; Huang, Q. State-relabeling adversarial active learning. In Proceedings of the IEEE/CVF Conference, Seattle, WA, USA, 14–19 June 2020; pp. 8756–8765.

35. Ren, Y.; Wang, B.; Zhang, J.; Chang, Y. Adversarial active learning based heterogeneous graph neural network for fake news detection. In Proceedings of the 2020 IEEE International Conference on Data Mining (ICDM), Sorrento, Italy, 17–20 November 2020; pp. 452–461.

36. Farinneya, P.; Pour, M.M.A.; Hamidian, S.; Diab, M. Active learning for rumor identification on social media. In Proceedings of the EMNLP 2021, Punta Cana, Dominican Republic, 7–11 November 2021; pp. 4556–4565.

37. Su, J.C.; Tsai, Y.H.; Sohn, K.; Liu, B.; Maji, S.; Chandraker, M. Active adversarial domain adaptation. In Proceedings of the IEEE/CVF Winter Conference, Village, CO, USA, 1–5 March 2020; pp. 739–748.

38. Fu, B.; Cao, Z.; Wang, J.; Long, M. Transferable query selection for active domain adaptation. In Proceedings of the IEEE/CVF Conference, Nashville, TN, USA, 20–25 June 2021; pp. 7272–7281.

39. Xie, B.; Yuan, L.; Li, S.; Liu, C.H.; Cheng, X.; Wang, G. Active learning for domain adaptation: An energy-based approach. In Proceedings of the AAAI Conference on Artificial Intelligence, Online, 22 February–1 March 2022; Volume 36, pp. 8708–8716.

40. Saha, T.; Upadhyaya, A.; Saha, S.; Bhattacharyya, P. A Multitask Multimodal Ensemble Model for Sentiment- and Emotion-Aided Tweet Act Classification. IEEE Trans. Comput. Soc. Syst. 2022, 9, 508–517.

41. Bhattacharya, P.; Patel, S.B.; Gupta, R.; Tanwar, S.; Rodrigues, J.J.P.C. SaTYa: Trusted Bi-LSTM-Based Fake News Classification Scheme for Smart Community. IEEE Trans. Comput. Soc. Syst. 2022, 9, 1758–1767.

42. Menke, M.; Wenzel, T.; Schwung, A. Bridging the gap: Active learning for efficient domain adaptation in object detection. Expert Syst. Appl. 2024, 254, 124403.

43. Ganin, Y.; Lempitsky, V. Unsupervised domain adaptation by backpropagation. In Proceedings of the International Conference on Machine Learning, PMLR, Lille, France, 7–9 July 2015; pp. 1180–1189.

44. Sedhai, S.; Sun, A. Semi-Supervised Spam Detection in Twitter Stream. IEEE Trans. Comput. Soc. Syst. 2018, 5, 169–175.

45. Zubiaga, A.; Liakata, M.; Procter, R. Exploiting context for rumour detection in social media. In Proceedings of the Social Informatics: 9th International Conference, Oxford, UK, 13–15 September 2017; Springer: Berlin/Heidelberg, Germany, 2017; pp. 109–123.

46. Sharma, M.; Bilgic, M. Evidence-based uncertainty sampling for active learning. Data Min. Knowl. Discov. 2017, 31, 164–202.

47. Sener, O.; Savarese, S. Active learning for convolutional neural networks: A core-set approach. arXiv 2017, arXiv:1708.00489.

48. Zhang, C.; Gao, M.; Huang, Y.; Jiang, F.; Wang, J.; Wen, J. DAAL: Domain Adversarial Active Learning Based on Dual Features for Rumor Detection. In Proceedings of the Natural Language Processing and Chinese Computing, Foshan, China, 12–15 October 2023; pp. 690–703.

49. Silva, A.; Luo, L.; Karunasekera, S.; Leckie, C. Embracing domain differences in fake news: Cross-domain fake news detection using multi-modal data. In Proceedings of the AAAI, Virtual, 19–21 May 2021; Volume 35, pp. 557–565.

50. Nan, Q.; Cao, J.; Zhu, Y.; Wang, Y.; Li, J. MDFEND: Multi-domain fake news detection. In Proceedings of the CIKM, Online, 1–5 November 2021; pp. 3343–3347.

51. Yin, J.; Gao, M.; Shu, K.; Wang, J.; Huang, Y.; Zhou, W. Fine-Grained Discrepancy Contrastive Learning for Robust Fake News Detection. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; pp. 12541–12545.

| Dataset | Charliehebdo | Ferguson | Germanwings-crash | Ottawashooting | Sydneysiege |
|---|---|---|---|---|---|
| Source Fake News | 1514 | 1688 | 1734 | 1502 | 1450 |
| Source Real News | 2208 | 2970 | 3598 | 3408 | 3132 |
| Target Fake News | 458 | 284 | 238 | 470 | 522 |
| Target Real News | 1621 | 859 | 231 | 421 | 697 |

| Dataset | Strategy | 15% | 20% | 25% | 30% | 35% | 40% | 45% | 50% |
|---|---|---|---|---|---|---|---|---|---|
| Germanwings-crash | TQS | 0.738 | 0.825 | 0.831 | 0.800 | 0.800 | 0.800 | 0.825 | 0.850 |
| | UCN | 0.781 | 0.806 | 0.850 | 0.831 | 0.806 | 0.825 | 0.831 | 0.850 |
| | RAN | 0.800 | 0.816 | 0.788 | 0.831 | 0.788 | 0.825 | 0.844 | 0.819 |
| | CoreSet | 0.806 | 0.844 | 0.831 | 0.869 | 0.831 | 0.831 | 0.836 | 0.819 |
| | DAAL | 0.831 | 0.875 | 0.863 | 0.875 | 0.869 | 0.856 | 0.863 | 0.863 |
| | DDT | 0.823 | 0.870 | 0.875 | 0.877 | 0.900 | 0.913 | 0.925 | 0.900 |
| Sydneysiege | TQS | 0.758 | 0.790 | 0.777 | 0.792 | 0.790 | 0.804 | 0.800 | 0.790 |
| | UCN | 0.789 | 0.833 | 0.835 | 0.858 | 0.844 | 0.848 | 0.867 | 0.854 |
| | RAN | 0.817 | 0.838 | 0.846 | 0.842 | 0.846 | 0.848 | 0.858 | 0.844 |
| | CoreSet | 0.838 | 0.831 | 0.858 | 0.858 | 0.848 | 0.842 | 0.854 | 0.863 |
| | DAAL | 0.850 | 0.850 | 0.854 | 0.856 | 0.856 | 0.867 | 0.877 | 0.871 |
| | DDT | 0.821 | 0.857 | 0.858 | 0.863 | 0.861 | 0.869 | 0.882 | 0.880 |
| Ottawashooting | TQS | 0.778 | 0.804 | 0.753 | 0.810 | 0.793 | 0.807 | 0.815 | 0.827 |
| | UCN | 0.824 | 0.835 | 0.835 | 0.872 | 0.878 | 0.895 | 0.884 | 0.887 |
| | RAN | 0.818 | 0.792 | 0.830 | 0.858 | 0.861 | 0.852 | 0.869 | 0.889 |
| | CoreSet | 0.807 | 0.835 | 0.849 | 0.852 | 0.855 | 0.875 | 0.878 | 0.875 |
| | DAAL | 0.838 | 0.886 | 0.861 | 0.895 | 0.903 | 0.895 | 0.895 | 0.903 |
| | DDT | 0.841 | 0.886 | 0.898 | 0.909 | 0.909 | 0.915 | 0.921 | 0.926 |
| Charliehebdo | TQS | 0.785 | 0.839 | 0.825 | 0.850 | 0.841 | 0.826 | 0.869 | 0.854 |
| | UCN | 0.830 | 0.848 | 0.835 | 0.845 | 0.851 | 0.846 | 0.840 | 0.828 |
| | RAN | 0.826 | 0.833 | 0.846 | 0.836 | 0.835 | 0.823 | 0.835 | 0.846 |
| | CoreSet | 0.833 | 0.828 | 0.849 | 0.831 | 0.838 | 0.839 | 0.831 | 0.829 |
| | DAAL | 0.844 | 0.850 | 0.861 | 0.858 | 0.858 | 0.861 | 0.858 | 0.866 |
| | DDT | 0.860 | 0.878 | 0.883 | 0.893 | 0.873 | 0.880 | 0.878 | 0.893 |
| Ferguson | TQS | 0.799 | 0.813 | 0.817 | 0.826 | 0.839 | 0.817 | 0.817 | 0.828 |
| | UCN | 0.792 | 0.790 | 0.824 | 0.864 | 0.874 | 0.865 | 0.871 | 0.882 |
| | RAN | 0.792 | 0.790 | 0.814 | 0.857 | 0.881 | 0.875 | 0.880 | 0.874 |
| | CoreSet | 0.790 | 0.844 | 0.826 | 0.866 | 0.883 | 0.880 | 0.875 | 0.880 |
| | DAAL | 0.790 | 0.847 | 0.826 | 0.877 | 0.897 | 0.884 | 0.895 | 0.907 |
| | DDT | 0.886 | 0.871 | 0.884 | 0.888 | 0.906 | 0.902 | 0.888 | 0.902 |

| Model | Charliehebdo Acc | Charliehebdo F1 | Sydneysiege Acc | Sydneysiege F1 | Ottawashooting Acc | Ottawashooting F1 | Ferguson Acc | Ferguson F1 | Germanwings-crash Acc | Germanwings-crash F1 |
|---|---|---|---|---|---|---|---|---|---|---|
| EANN | 0.843 | 0.777 | 0.771 | 0.762 | 0.835 | 0.835 | 0.848 | 0.767 | 0.813 | 0.812 |
| EDDFN | 0.846 | 0.761 | 0.805 | 0.802 | 0.864 | 0.863 | 0.851 | 0.772 | 0.819 | 0.818 |
| MDFEND | 0.845 | 0.768 | 0.729 | 0.729 | 0.864 | 0.863 | 0.842 | 0.742 | 0.830 | 0.828 |
| FinDCL | 0.848 | 0.779 | 0.805 | 0.800 | 0.866 | 0.862 | 0.853 | 0.775 | 0.832 | 0.829 |
| DAAL | 0.850 | 0.781 | 0.850 | 0.818 | 0.886 | 0.865 | 0.847 | 0.754 | 0.875 | 0.875 |
| DDT | 0.878 | 0.782 | 0.857 | 0.820 | 0.886 | 0.879 | 0.871 | 0.667 | 0.870 | 0.833 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hu, L.; Han, G.; Liu, S.; Ren, Y.; Wang, X.; Yang, Z.; Jiang, F. Dual-Aspect Active Learning with Domain-Adversarial Training for Low-Resource Misinformation Detection. Mathematics 2025, 13, 1752. https://doi.org/10.3390/math13111752
