Abstract

Missing data introduce uncertainty in data mining, but existing set-valued approaches ignore frequency information. We propose unsupervised attribute reduction algorithms for multiset-valued data to address this gap. First, we define a multiset-valued information system (MSVIS) and establish a θ-tolerance relation to form the information granules. Then, θ-information entropy and θ-information amount are introduced as uncertainty measures (UMs). Finally, these two UMs are used to design two unsupervised attribute reduction algorithms in an MSVIS. The experimental results demonstrate the superiority of the proposed algorithms, achieving average reductions of 50% in attribute subsets while improving clustering accuracy and outlier detection performance. Parameter analysis further validates the robustness of the framework under varying missing rates.

Keywords:

rough set theory; multiset-valued data; uncertainty measurement; unsupervised attribute reduction

MSC:

68T09; 68T99; 68W40

1. Introduction

1.1. Research Background

Data with missing information values, also called missing data, are a common occurrence in datasets for various reasons. Simple approaches like listwise deletion handle missing data by removing incomplete records. Despite their simplicity, such methods risk losing valuable information and introducing bias [1]. Data imputation is a statistical method that fills in the missing values by applying reasonable rules. Common imputation techniques include mean substitution and regression imputation [1]. Despite their utility, imputation methods also carry the risk of inducing bias [2].

On the other hand, mathematical models such as granular computing (GrC) [3] and rough set theory (RST) [4] are effective tools for dealing with uncertainty. GrC is a significant methodology in artificial intelligence. It has its own theory, which seeks a granular structure representation of a problem. Providing a basic conceptual framework, GrC has been broadly studied in pattern recognition [5] and data mining [6]. As a concrete approach to GrC, RST provides a mathematical tool to handle uncertainty, such as imprecision, inconsistency, and incomplete information [7,8]. The information system (IS) was also introduced by Pawlak [4]. The vast majority of applications of RST are tied to an IS.

In RST, a dataset with missing information values is usually represented by an incomplete information system (IIS). A set-valued information system (SVIS) represents each missing information value under an attribute by the set of all possible values, and each information value that is not missing by the singleton set of its original value. Thus, an SVIS can be obtained from an IIS. As an effective way of handling missing information values, the SVIS has drawn great attention from researchers. For instance, Chen et al. [9] investigated the attribute reduction of an SVIS based on a tolerance relation. Dai et al. [8] investigated uncertainty measures (UMs) in an SVIS. Furthermore, an SVIS that itself contains missing information values has also been studied. Xie et al. [10] investigated the UMs for an incomplete probability SVIS. Chen et al. [11] presented UMs for an incomplete SVIS by using a Gaussian kernel.

Feature selection, also known as attribute reduction in RST, is used to reduce redundant attributes and the complexity of calculation for high-dimensional data while improving or maintaining the algorithm performance of a particular task. UM holds particular relevance in the context of attribute reduction within an information system. Zhang et al. [12] investigated UM for categorical data using fuzzy information structures and applied it to attribute reduction. Gao et al. [13] studied a monotonic UM for attribute reduction based on granular maximum decision entropy.

An SVIS is a valuable tool for handling datasets with missing information values. Specifically, an SVIS approach involves replacing the missing information values of an attribute with a set comprising all the possible values that could exist under the same attribute. Meanwhile, the existing information values are substituted with a set containing the original values. By employing this technique, a dataset containing missing information values can be converted into an SVIS, enabling the application of further processes such as calculating the Jaccard distance within the framework of an SVIS.

Studies on attribute reduction in an SVIS are abundant. To name just a few, Peng et al. [14] delved into uncertainty measurement-based feature selection for set-valued data. Singh et al. [15] explored attribute selection in an SVIS based on a fuzzy similarity. Zhang et al. [16] studied attribute reduction for set-valued data based on D-S evidence theory. Liu et al. [17] proposed an incremental attribute reduction method for set-valued decision information systems with variable attribute sets. Lang et al. [18] studied an incremental approach to attribute reduction of a dynamic SVIS.

However, the straightforward replacement of missing information with all possible values in an SVIS can be considered overly simplistic and may result in some loss of information. This approach fails to consider the potential variations in the occurrence frequency of different attribute values, leading to a lack of differentiation between values that may occur more frequently than others and should thus be treated distinctively.

An MSVIS has been developed to enhance the functionality of an SVIS [19,20,21]. In the MSVIS framework, the information values associated with an attribute in a dataset are organized as multisets, which allow elements to be repeated. Within an MSVIS, each missing information value is represented by a multiset, ensuring that the frequency of each value is maintained. Information values that are not missing are depicted by multisets that are equivalent to traditional sets containing only the original value.

This approach enables an MSVIS to accurately capture the frequency distribution of information values within the dataset, addressing one of the limitations of an SVIS related to potential information loss arising from oversimplified imputation strategies. By preserving the frequency information associated with each value, an MSVIS provides a more nuanced representation of the dataset, enhancing the robustness and accuracy of data analysis processes.

Despite the benefits of an MSVIS, research on attribute reduction in an MSVIS is relatively scarce compared to the extensive body of research focused on an SVIS. Huang et al. [22] developed a supervised feature-selection method for multiset-valued data using fuzzy conditional information entropy, while Li et al. [23] proposed a semi-supervised approach to attribute reduction for partially labelled multiset-valued data.

Recent advancements in unsupervised attribute reduction have primarily focused on improving computational efficiency and scalability. For instance, Feng et al. [24] proposed a dynamic attribute reduction algorithm using relative neighborhood discernibility, achieving incremental updates for evolving datasets. While their method demonstrates efficiency in handling object additions, it neglects the critical role of frequency distributions in multiset-valued data: a gap that leads to information loss in scenarios like dynamic feature evolution or missing value imputation. Similarly, He et al. [25] introduced uncertainty measures for partially labeled categorical data, yet their semi-supervised framework still relies on partial labels and predefined thresholds, limiting applicability in fully unsupervised environments. Zonoozi et al. [26] proposed an unsupervised adversarial domain adaptation framework using variational auto-encoders (VAEs) to align feature distributions across domains. While it validates the robustness of domain-invariant feature learning, it neglects frequency semantics in missing data. Chen et al. [27] introduced an ensemble regression method that assigns weights to base models based on relative error rates. Although effective for continuous targets, their approach relies on predefined error metrics and ignores the intrinsic frequency distributions in multiset-valued data.

To bridge these gaps, we propose the multiset-valued information system (MSVIS) framework in unsupervised attribute reduction, which uniquely integrates frequency-sensitive uncertainty measurement for missing data with granular computing principles. Unlike conventional SVIS-based methods (e.g., uniform set imputation), an MSVIS explicitly preserves frequency distributions (e.g., 2/S,3/M) through multisets, enabling dynamic adjustments via θ-tolerance relations. Building on this, this paper uses an MSVIS to represent a dataset with missing information values and builds a UM-based attribute reduction method within it.

Attribute reduction and missing data processing have been researched mainly as two separate data preprocessing topics, and related work has focused on attribute reduction in an SVIS. This paper combines them and addresses the task of attribute reduction for missing data. The proposed method offers several advantages. Firstly, unlike some data imputation methods, it does not presume any data distribution and is based entirely on the data itself. Secondly, unlike the approaches proposed by Huang et al. [22] and Li et al. [23], which necessitate the presence of decision attributes in a dataset, the method introduced in this paper does not require decision attributes, thereby expanding its applicability across a broader range of datasets. Lastly, the method proposed in this paper is user-friendly and easy to implement, ensuring accessibility and facilitating its practical application in diverse research and application scenarios.

Different attribute reduction algorithms are compared. In particular, the proposed attribute reduction algorithms are run in both an MSVIS and an SVIS, and the results are compared. Parameter analysis is also conducted to examine the influence of the parameters. The experimental results show the effectiveness and superiority of the proposed algorithms.

1.2. Organization

The structure of this paper is outlined as follows. Section 2 recalls multisets and rational probability distribution sets, and the one-to-one correspondence between them, as shown in Theorem 1. Section 3 shows that an IIS induces an MSVIS, and defines a θ-tolerance relation with the help of Theorem 1. Section 4 presents two UMs based on the θ-tolerance relation for an MSVIS. Section 5 proposes unsupervised attribute reduction algorithms based on the UMs. Section 6 carries out clustering analysis, outlier detection, and parameter analysis to show the effectiveness of the proposed algorithms. Section 7 concludes this paper.

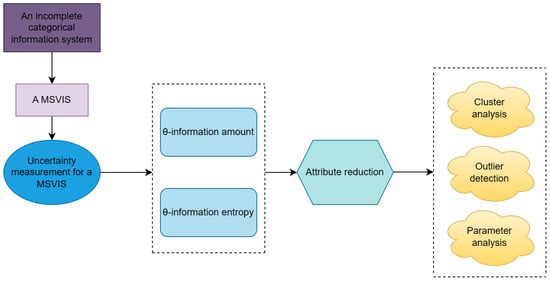

Figure 1 depicts the structure of this paper.

Figure 1.

The workflow of this paper.

2. Preliminaries

Let $U$ be a finite object set. $2^U$ denotes the collection of all subsets of $U$, and $|X|$ means the cardinality of $X \in 2^U$. Put

Definition 1

([28]). Given a non-empty finite set V, a multiset or bag M drawn from V can be defined by a count function $C_M: V \to \mathbb{N} = \{0, 1, 2, \dots\}$.

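For convenience, $C_M(v) = m$ indicates that v occurs m times in the multiset M, which is denoted by $m/v \in M$ or $v \in^m M$.

Suppose $V = \{v_1, v_2, \dots, v_s\}$; if $C_M(v_i) = k_i$ $(i = 1, 2, \dots, s)$, then M is denoted by $\{k_1/v_1, k_2/v_2, \dots, k_s/v_s\}$, i.e.,

$$M = \{k_1/v_1, k_2/v_2, \dots, k_s/v_s\}.$$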

Definition 2

([28]). Consider a non-empty finite set V, and let M and N be two multisets drawn from V. The following definitions apply:

Definition 3

([29]). Let and P is called a probability distribution set over V, if , and

P could be represented as a mapping , .

Definition 4

([19]). Let , and be a probability distribution set over V. If , is a rational number, P is referred to as a rational probability distribution set over V; otherwise, P is referred to as an irrational probability distribution set over V.

Definition 5

([29]). Let , and let

be two probability distribution sets over V. The well-known Hellinger distance between P and Q is as follows
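For reference, the standard Hellinger distance between two discrete distributions $P = (p_1, \dots, p_s)$ and $Q = (q_1, \dots, q_s)$ over the same value set is $\frac{1}{\sqrt{2}}\sqrt{\sum_{i=1}^{s}\left(\sqrt{p_i} - \sqrt{q_i}\right)^2}$, which takes values in $[0, 1]$. The following Python sketch computes it. The function name `hellinger` and the list-based representation of a distribution are illustrative assumptions, not notation taken from [29].

```python
import math


def hellinger(p, q):
    """Hellinger distance between two discrete distributions given as
    equal-length sequences of probabilities over the same value set V."""
    if len(p) != len(q):
        raise ValueError("p and q must be defined over the same value set")
    s = sum((math.sqrt(pi) - math.sqrt(qi)) ** 2 for pi, qi in zip(p, q))
    return math.sqrt(s / 2.0)


# Identical distributions are at distance 0; distributions with disjoint
# supports are at the maximum distance 1.
d_same = hellinger([0.5, 0.5], [0.5, 0.5])
d_disjoint = hellinger([1.0, 0.0], [0.0, 1.0])
```

Because the distance is bounded by 1, a threshold on it can be interpreted on a fixed scale regardless of the number of values in V.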

Definition 6

([19]). Given , let be a multiset drawn from V. Put

where . Apparently, is rational, and is a rational probability distribution set over V. is referred to as the probability distribution set induced by M.

It is obvious that

Definition 7

([19]). Let , and be a rational probability distribution set over V. , denote , where and are both rational. Let be the least common multiple of , ,⋯, , denoted as . Obviously, , has the factorization (). By , we have . Define

Apparently, is a multiset drawn from V. is referred to as the multiset induced by P.

It can be observed through simple calculations that

Theorem 1

([19]). Given , denote

and

Then, there exists a one-to-one correspondence between Ω and Ψ.

In the light of Theorem 1, we can treat multisets in an MSVIS as rational probability distribution sets. In the next section, a tolerance relation is defined based on this fact.
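The passage between multisets and rational probability distribution sets (Definitions 6 and 7) can be illustrated with a short Python sketch. It assumes that a multiset is stored as a dictionary from values to counts, that Definition 6 normalises the counts by their total, and that Definition 7 scales each probability by the least common multiple of the reduced denominators. The function names are hypothetical.

```python
from fractions import Fraction
from functools import reduce
from math import gcd


def distribution_from_multiset(counts):
    """Sketch of Definition 6: p_i = k_i / (k_1 + ... + k_s)."""
    total = sum(counts.values())
    return {v: Fraction(k, total) for v, k in counts.items()}


def multiset_from_distribution(probs):
    """Sketch of Definition 7: multiply every p_i by the least common
    multiple of the reduced denominators to obtain integer counts."""
    lcm = reduce(lambda a, b: a * b // gcd(a, b),
                 (p.denominator for p in probs.values()), 1)
    return {v: int(p * lcm) for v, p in probs.items()}


M = {"S": 2, "M": 2, "N": 3}          # the multiset {2/S, 2/M, 3/N}
P = distribution_from_multiset(M)     # probabilities 2/7, 2/7, 3/7
M_back = multiset_from_distribution(P)
```

Under these assumptions, a multiset such as {4/S, 4/M, 6/N} induces the same distribution as {2/S, 2/M, 3/N}, so the round trip returns the counts in lowest terms.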

3. Multiset-Valued Information Systems and a Tolerance Relation in an MSVIS

In this section, we show how an IIS induces an MSVIS. Then, with the help of Theorem 1, a tolerance relation is defined.

Definition 8

([4]). Suppose that non-empty sets U and A are the object set and the feature set, respectively. Then, $(U, A)$ is defined as an information system (IS) if each $a \in A$ determines an information function $a: U \to V_a$, where $V_a = \{a(u) : u \in U\}$.

An IS $(U, A)$ is called an incomplete information system (IIS) if there is $a \in A$ such that $V_a$ contains missing information values (denoted by *).

Given an IIS , denote

Example 1.

Table 1.

An IIS.

| U | Headache | Muscle Pain | Temperature | Symptom |
|---|---|---|---|---|
| $u_1$ | Sick | Yes | High | Flu |
| $u_2$ | Sick | Yes | Low | Flu |
| $u_3$ | Middle | * | Normal | Flu |
| $u_4$ | No | Yes | Normal | Flu |
| $u_5$ | * | Yes | Normal | Rhinitis |
| $u_6$ | Middle | No | * | Rhinitis |
| $u_7$ | No | No | Low | Health |
| $u_8$ | No | * | * | Health |
| $u_9$ | * | Yes | Low | Health |

Definition 9

([19]). Given an IIS where , is called a multiset-valued information system (MSVIS), if , , , ⋯ are all multisets drawn from one set.

We say $(U, P)$ is a subsystem of $(U, A)$ if $P \subseteq A$.

In an MSVIS , where , , , and ⋯ are multisets drawn from :

Here, v stands for information values and k stands for the times they occur. Note that a general MSVIS does not necessarily cope with missing data. It could also come as a result of data fusion [19]. For the situation of missing data, we have Definition 10 and Example 2, as below.

Definition 10

([19]). Given an IIS where , denote . , let express the number of occurrences of in , where is an ordinary set and is a multiset. If , then is substituted with ; if is not a missing value, say , then is substituted with . This process gives an MSVIS. We say it is an MSVIS induced by the IIS .

Example 2 below shows the process of an IIS inducing an MSVIS in more detail.

Example 2

Table 2.

An MSVIS .

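Let us look at Table 1, and take the attribute Headache, for example. There are three different information values: Sick (S), Middle (M), and No (N), and one missing information value: *. So, according to Definition 10, this attribute gives an ordinary set $\{S, M, N\}$ and a multiset $\{2/S, 2/M, 3/N\}$, because Sick and Middle each occur twice and No occurs three times. Since the Headache values of the fifth and ninth objects are missing in Table 1, they are replaced by $\{2/S, 2/M, 3/N\}$ in Table 2. On the other hand, a Headache value that is not missing in Table 1, say Sick, is replaced by $\{1/S\}$ in Table 2. Following this process, we derive the MSVIS in Table 2 from the IIS in Table 1.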
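The induction step for a single attribute can be sketched in Python as follows. The sketch assumes that a missing entry is replaced by the multiset of all observed values with their frequencies and that an observed entry is replaced by the singleton multiset of its own value. The column is the Headache attribute as read from Table 1, and the function name `induce_multiset_column` is hypothetical.

```python
from collections import Counter

MISSING = "*"


def induce_multiset_column(column):
    """Replace each entry of one attribute column by a multiset (Counter).
    Missing entries get the multiset of all observed values with their
    frequencies; observed entries get the singleton multiset of the value."""
    observed = Counter(v for v in column if v != MISSING)
    return [Counter(observed) if v == MISSING else Counter({v: 1})
            for v in column]


# Headache column of Table 1 (nine objects, two missing entries).
headache = ["Sick", "Sick", "Middle", "No", "*", "Middle", "No", "No", "*"]
msv_headache = induce_multiset_column(headache)
```

Applying the same function to every attribute column would yield the full multiset-valued table under the stated assumptions.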

Now that the issue of missing data is addressed by an MSVIS induced from an IIS, information granules in the MSVIS can be designed for further modeling. Note that, since multisets are not convenient for calculations, their corresponding probability distribution sets are utilized in Definition 11.

Definition 11

([19]). Given an MSVIS , and , a tolerance relation could be defined as follows:

where and represent the probability distribution sets induced by and , respectively.

Clearly, , where .

Definition 12

([19]). Given an MSVIS , and , the following θ-tolerance class of serves as the information granule in an MSVIS:

Apparently,
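As an illustration of how θ-tolerance classes might be computed, the Python sketch below assumes that two objects are related when, on every attribute of the chosen subset, the Hellinger distance between their induced probability distribution sets does not exceed θ. This form of the relation, the dictionary-based data layout, and all function names are assumptions made for the example.

```python
import math


def hellinger(p, q):
    """Hellinger distance between two distributions stored as dicts
    mapping value -> probability (absent values have probability 0)."""
    keys = set(p) | set(q)
    s = sum((math.sqrt(p.get(k, 0.0)) - math.sqrt(q.get(k, 0.0))) ** 2
            for k in keys)
    return math.sqrt(s / 2.0)


def tolerance_classes(objects, attrs, theta):
    """For each object index i, return the set of indices j whose
    distributions are within distance theta of those of i on every
    attribute in attrs (assumed form of the theta-tolerance relation)."""
    n = len(objects)
    return [
        {j for j in range(n)
         if all(hellinger(objects[i][a], objects[j][a]) <= theta
                for a in attrs)}
        for i in range(n)
    ]


# Toy data: one attribute, three objects; the third one plays the role
# of an object whose value was missing and replaced by a distribution.
objs = [
    {"headache": {"S": 1.0}},
    {"headache": {"N": 1.0}},
    {"headache": {"S": 0.5, "N": 0.5}},
]
classes = tolerance_classes(objs, ["headache"], theta=0.6)
```

In this toy example the third object is related to both of the others, while the first two are not related to each other, which shows that the relation is reflexive and symmetric but need not be transitive.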

4. Uncertainty Measurement for an MSVIS

In this section, two UMs for an MSVIS are reviewed, which will be utilized for the attribute reduction in the next section.

Definition 13

([19]). Given an MSVIS , , and , the definition of θ-information entropy for subsystem is as follows:

Proposition 1.

Given an MSVIS , , and , we have

Moreover, if ; if .

Proof.

Please see “Appendix A”. □

Proposition 2

([19]). Let be an MSVIS.

If , then , ;

If , then , .

Proof.

Please see “Appendix A”. □

Definition 14

([19]). Given an MSVIS , , and , the definition of the θ information amount for subsystem is as follows:

Proposition 3.

Given an MSVIS , , and , we have

Moreover, if ; if .

Proof.

Please see “Appendix A”. □

Proposition 4

([19]). Let be an MSVIS.

If , then , .

If , then , .

Proof.

Please see “Appendix A”. □

The monotonicity shown in Propositions 2 and 4 demonstrates the validity of the proposed UMs in an MSVIS.
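To make the behaviour of such measures concrete, the following Python sketch evaluates two granule-based quantities from the sizes of the tolerance classes. It assumes the forms commonly used in rough-set work, namely $-\frac{1}{n}\sum_{i=1}^{n}\log_2\frac{|T(u_i)|}{n}$ for the entropy and $\frac{1}{n}\sum_{i=1}^{n}\left(1 - \frac{|T(u_i)|}{n}\right)$ for the information amount, where $T(u_i)$ is the tolerance class of the i-th object. These forms and the function names are assumptions and may differ from Definitions 13 and 14.

```python
import math


def theta_information_entropy(class_sizes):
    """Assumed entropy form: -(1/n) * sum_i log2(|T(u_i)| / n)."""
    n = len(class_sizes)
    return -sum(math.log2(c / n) for c in class_sizes) / n


def theta_information_amount(class_sizes):
    """Assumed information-amount form: (1/n) * sum_i (1 - |T(u_i)| / n)."""
    n = len(class_sizes)
    return sum(1 - c / n for c in class_sizes) / n


finest = [1, 1, 1, 1]      # every tolerance class is a singleton
coarsest = [4, 4, 4, 4]    # every tolerance class is the whole object set
h_fine = theta_information_entropy(finest)
e_fine = theta_information_amount(finest)
```

Under these assumed forms both quantities vanish for the coarsest granulation and grow as the tolerance classes shrink, which matches the monotonic behaviour described above.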

5. Unsupervised Attribute Reduction Algorithms in an MSVIS

In this section, we demonstrate that the two UMs, θ-information entropy and θ-information amount, can be used for attribute reduction in an MSVIS. Then, we propose two specific unsupervised attribute reduction algorithms using these UMs.

Definition 15.

Given an MSVIS , , and , P is called a θ-coordination subset of A, if .

For convenience, we denote the family of all θ-coordination subsets of A by .

Definition 16.

Given an MSVIS , , and , P is called a θ-reduct of A, if and .

For convenience, the family of all θ-reducts of A is denoted by .

Theorem 2.

Let be an MSVIS, and . The following three conditions are equivalent:

;

;

.

Proof.

Please see “Appendix A”. □

Corollary 1.

Let be an MSVIS, , and . The following three conditions are equivalent:

;

and , ;

and , .

Proof.

According to Theorem 2, this proof is straightforward. □

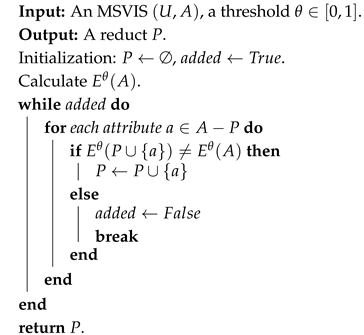

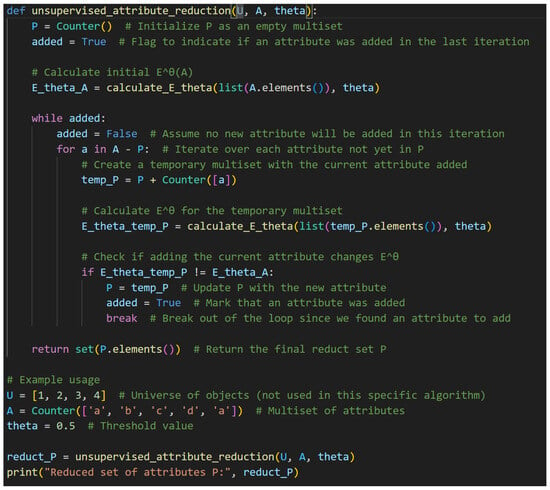

According to this corollary, below we give two attribute reduction algorithms for an MSVIS, one based on each of the two UMs. For Algorithms 1 and 2, the attribute selection process is heuristic. It starts from an empty set and uses the UM to select attributes to add to the candidate attribute subset. The algorithms terminate when the UM of the candidate attribute subset reaches the UM of the whole attribute set, at which point a reduct is found. For a dataset with n objects and m attributes, the time complexity for searching a reduct is , while the time complexity for calculating either UM is . Consequently, the time complexity of Algorithms 1 and 2 is .
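The heuristic search described above can be sketched in Python as a forward selection. This is a minimal sketch, not a transcription of Algorithms 1 and 2: the argument `measure` stands for either UM evaluated on an attribute subset, and `toy_measure`, `rows`, and the tolerance `tol` are hypothetical stand-ins introduced only for the example.

```python
def greedy_reduct(attributes, measure, tol=1e-12):
    """Forward heuristic search: start from the empty set, repeatedly add
    the attribute that raises measure(subset) the most, and stop once the
    candidate subset reaches measure(all attributes)."""
    target = measure(frozenset(attributes))
    selected = []
    remaining = list(attributes)
    while remaining and measure(frozenset(selected)) < target - tol:
        best = max(remaining,
                   key=lambda a: measure(frozenset(selected + [a])))
        selected.append(best)
        remaining.remove(best)
    return selected


# Toy table with three attributes; attribute 2 duplicates attribute 0.
rows = [(0, 0, 0), (0, 1, 0), (1, 0, 1), (1, 1, 1)]


def toy_measure(subset):
    # Illustrative stand-in for a UM: the number of distinct projected rows.
    return len({tuple(r[a] for a in sorted(subset)) for r in rows})


reduct = greedy_reduct([0, 1, 2], toy_measure)
```

In the toy table the search stops after selecting attributes 0 and 1, since the duplicated attribute 2 adds no discriminating power. A pure forward search of this kind can in general retain a redundant attribute, so a final pass that tries to drop each selected attribute may be needed to guarantee minimality.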

| Algorithm 1:Unsupervised attribute reduction algorithm based on in an MSVIS (-MSVIS). |

|

| Algorithm 2:Unsupervised attribute reduction algorithm based on in an MSVIS (-MSVIS). |

|

6. Experimental Analysis

In this section, cluster analysis and outlier detection are used to verify the effectiveness of the proposed unsupervised attribute reduction algorithms, and the influence of the parameter θ and the missing rate is studied. For an MSVIS (U, A) with n objects and m attributes, the missing rate of (U, A) can be calculated as follows:
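A minimal Python sketch of this calculation is given below. It assumes that the missing rate is the number of missing entries divided by the total number of entries, n·m, and that missing entries are marked by `*` in the underlying table. The function name and the row-list layout are illustrative.

```python
def missing_rate(table, missing="*"):
    """Fraction of missing entries among all n * m entries of a table
    given as a list of n rows, each a list of m attribute values."""
    n = len(table)
    m = len(table[0]) if n else 0
    if n == 0 or m == 0:
        raise ValueError("table needs at least one object and one attribute")
    missing_count = sum(row.count(missing) for row in table)
    return missing_count / (n * m)


toy = [["a", "*"], ["*", "b"], ["c", "d"]]
rate = missing_rate(toy)   # 2 missing entries out of 6
```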

6.1. Cluster Analysis

In this subsection, clustering on reduced data is conducted to verify the reduction effect of the proposed algorithms.

For this part, nine datasets from UCI [30] are used, as shown in Table 3. Every dataset in Table 3 is transformed into an MSVIS with a missing rate of 0.1, except for dataset An, whose missing rate is originally larger than 0.1. Numerical attributes in datasets An, Sp, and Wa are discretized by the method proposed in [31].

Table 3.

The details of the datasets for clustering.

For clustering, the k-modes clustering algorithm is adopted, which is designed for categorical variables. Unlike k-means clustering, which clusters numerical data based on Euclidean distance, k-modes defines clusters based on the number of matching categorical attribute values between data points.
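The two ingredients that distinguish k-modes from k-means can be sketched in a few lines of Python: a matching-based dissimilarity between categorical records and an attribute-wise mode that serves as the cluster centre. The function names are illustrative, and the sketch omits the iterative assignment loop of the full algorithm.

```python
from collections import Counter


def matching_dissimilarity(x, y):
    """Number of attributes on which two categorical records disagree."""
    return sum(a != b for a, b in zip(x, y))


def cluster_mode(records):
    """Attribute-wise most frequent value, used as the cluster centre."""
    return tuple(Counter(col).most_common(1)[0][0] for col in zip(*records))


d = matching_dissimilarity(("Sick", "Yes", "High"), ("Sick", "No", "High"))
centre = cluster_mode([("a", "x"), ("a", "y"), ("b", "y")])
```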

The clustering effects are evaluated by three criteria: Davies–Bouldin index (DB index), silhouette coefficient (SC), and Calinski–Harabasz index (CH index). A smaller Davies–Bouldin index value suggests better clustering effect [32], and larger silhouette coefficient value or larger Calinski–Harabasz index value indicates better clustering effects [33,34].

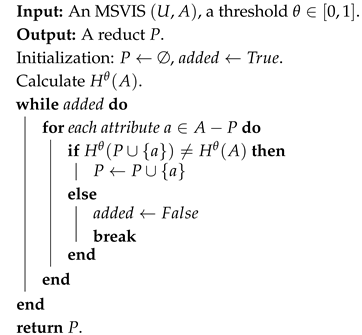

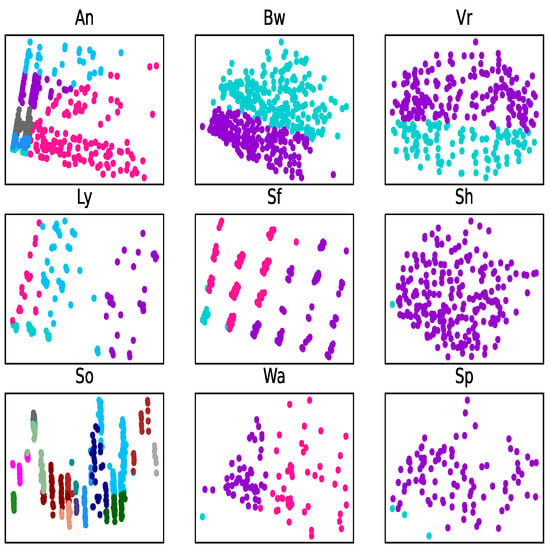

Using criterion SC, the optimal reduction results of the two proposed MSVIS algorithms are shown in Table 4. It is evident from Table 4 that both algorithms effectively reduce the number of attributes, and that different datasets may require different parameters to achieve optimal clustering results.

Table 4.

Optimal reduction results for k-modes clustering by the proposed algorithms.

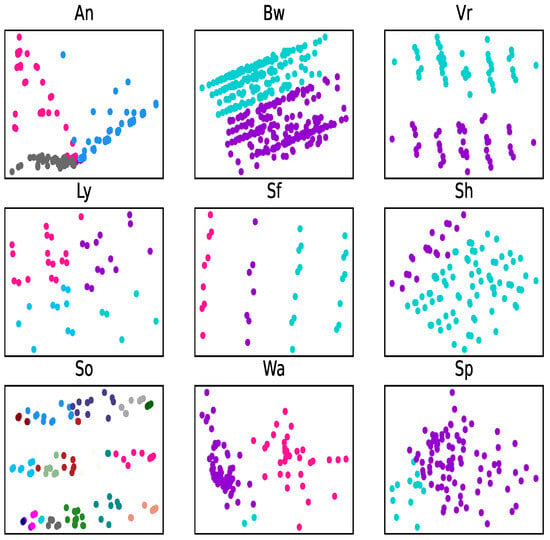

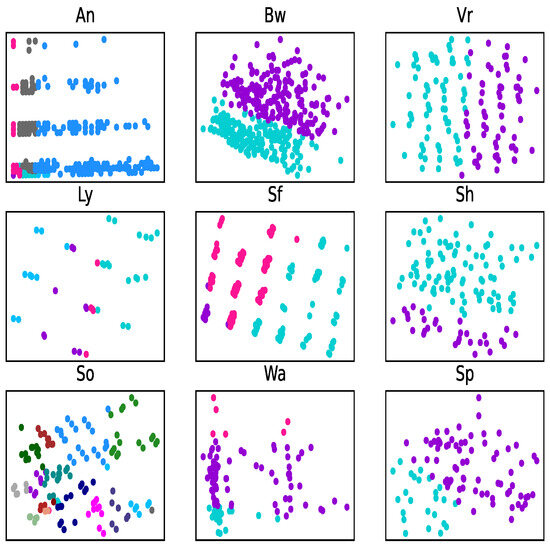

The clustering results are depicted through PCA visualization (Figures 2–4). Taking subgraph Sp (second row, third column) as an example, in Figure 2 almost all of the data are assigned to one class (purple), and the few cyan dots lie close to the purple dots. In Figures 3 and 4, by contrast, the numbers of cyan and purple dots are closer to their real numbers in the original dataset, and there is a clear border separating the two kinds of dots.

Figure 2.

Clustering image of original datasets with PCA. Colors represent distinct classes (e.g., purple: Class 1, cyan: Class 2). Overlapping regions indicate poor separability in the unreduced feature space.

Figure 3.

Clustering image of reduction by -MSVIS with PCA. Colors represent distinct classes (e.g., purple: Class 1, cyan: Class 2). Overlapping regions indicate poor separability in the unreduced feature space.

Figure 4.

Clustering image of reduction by -MSVIS with PCA. Colors represent distinct classes (e.g., purple: Class 1, cyan: Class 2). Overlapping regions indicate poor separability in the unreduced feature space.

To show the effectiveness and improvement of the two proposed MSVIS algorithms, four other representative algorithms are compared: Unsupervised Quick Reduct (UQR) [35], Unsupervised Entropy-Based Reduct (UEBR) [36], and the SVIS versions of the two proposed algorithms, i.e., the same algorithms realised in an SVIS. UEBR and UQR are conducted with an MSVIS.

The reduction results evaluated by DB, SC, and CH are shown in Tables 5, 6, and 7, respectively. By all three criteria, the two MSVIS-based algorithms improve on their respective SVIS counterparts, and both are better than the raw data, UQR, and UEBR.

Table 5.

The comparison of clustering by DB (the smaller the better).

Table 6.

The comparison of clustering by SC (the larger the better).

Table 7.

The comparison of clustering by CH (the larger the better).

6.2. Outlier Detection

In this subsection we use the experiment of outlier detection to test the performance of the proposed attribute reduction algorithms. Three outlier detection algorithms Dis [37], kNN [38] and Seq [39] are applied to datasets in Table 8. For the sake of simplicity, this subsection only considers -MSVIS.

Table 8.

Details of UCI datasets for outlier detection.

Downsampling is a common approach to forming suitable datasets for the evaluation of outlier detection [40,41]. Here, we follow the experimental technique of [41] to form an imbalanced distribution for the datasets Mo, Vr, Wa, Sp, and Io. As in clustering experiments, ref. [31] is used to discretize Sp, Wa, and Io.

The performance of each algorithm before and after reduction is evaluated by AUC (area under curve), as shown in Table 9.

Table 9.

The results of AUC before and after reduction (the larger the better).

AUC is a common indicator for evaluating and comparing the performance of binary classification models. The larger the AUC, the better the performance. As in the clustering experiment, the optimal reduction results are listed in Table 10.
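For outlier detection, AUC can be read as the probability that a randomly chosen outlier receives a higher outlier score than a randomly chosen inlier, with ties counted as one half. The Python sketch below computes it directly from this pairwise definition. The function name and the convention that label 1 marks an outlier are assumptions made for the example.

```python
def auc(scores, labels):
    """AUC as the probability that a randomly chosen outlier (label 1)
    gets a higher score than a randomly chosen inlier (label 0);
    ties contribute one half."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("both classes must be present")
    wins = sum((p > q) + 0.5 * (p == q) for p in pos for q in neg)
    return wins / (len(pos) * len(neg))


# Two outliers and two inliers; one outlier is ranked below one inlier.
value = auc([0.9, 0.8, 0.3, 0.1], [1, 0, 1, 0])
```

The pairwise form is quadratic in the number of objects, which is acceptable for small downsampled datasets; a rank-based computation would be preferable for larger ones.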

Table 10.

Optimal reduction results for outlier detection by -MSVIS.

On the datasets Bw and Ly, the performance of the three algorithms remains high after reduction. On the datasets Vr and Wa, all three algorithms improve significantly after reduction. In the other cases, except for algorithm Seq on dataset Sp, the reduced data all achieve better performance. In general, the reduction algorithm helps improve or maintain performance for all three outlier detection algorithms.

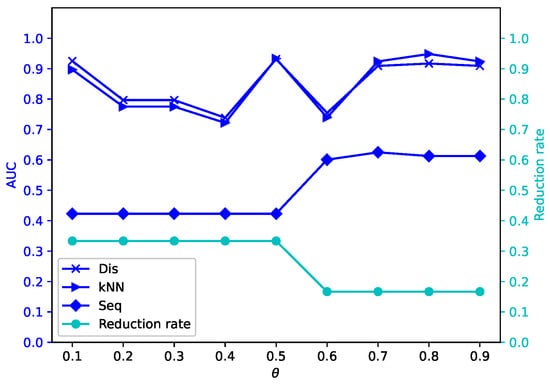

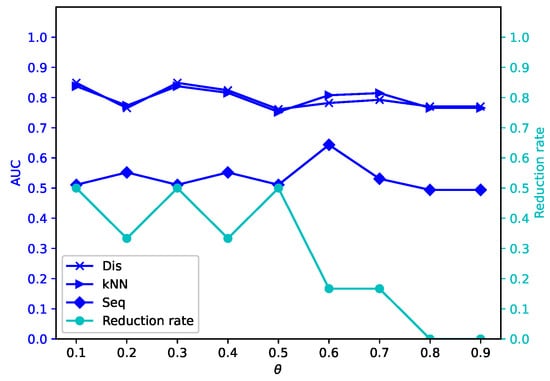

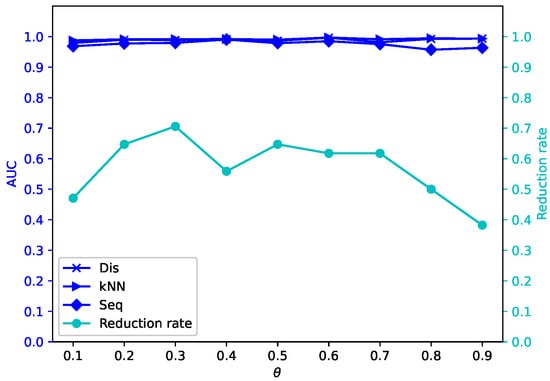

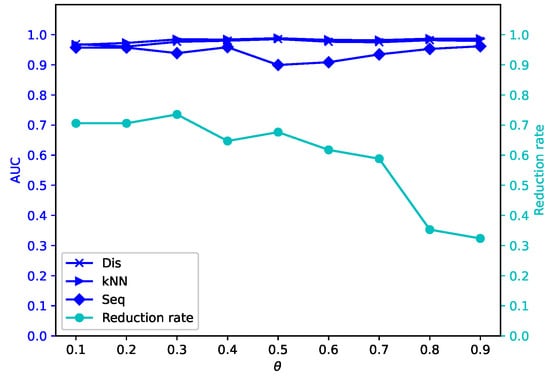

6.3. Parameter Analysis

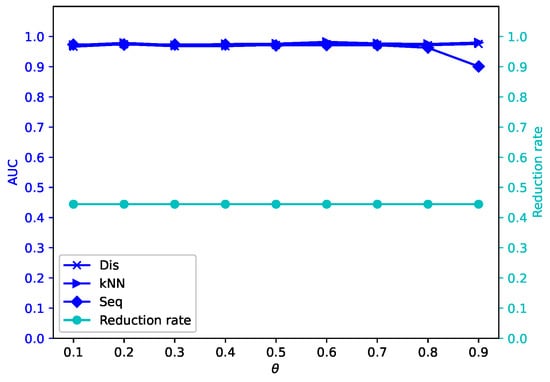

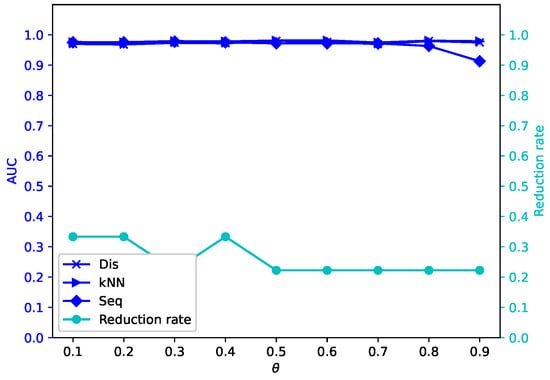

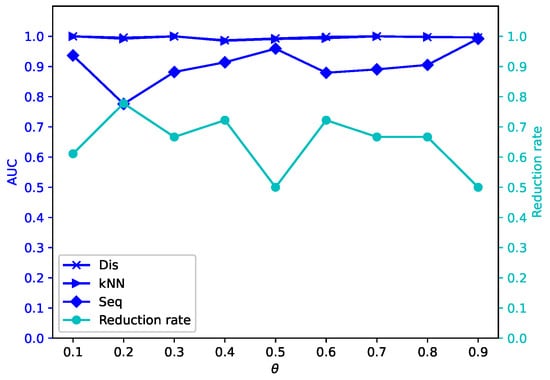

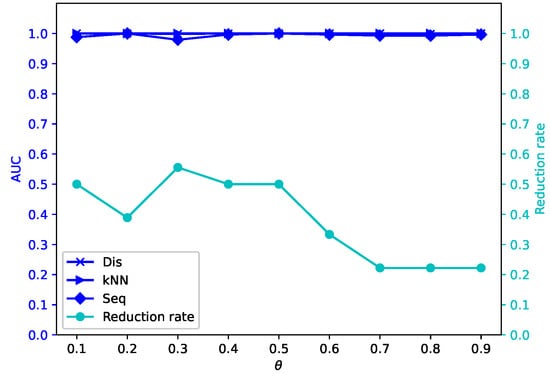

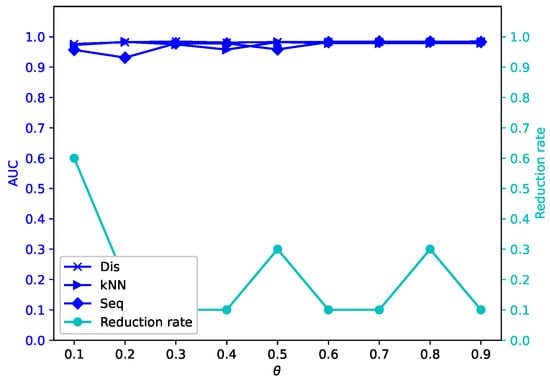

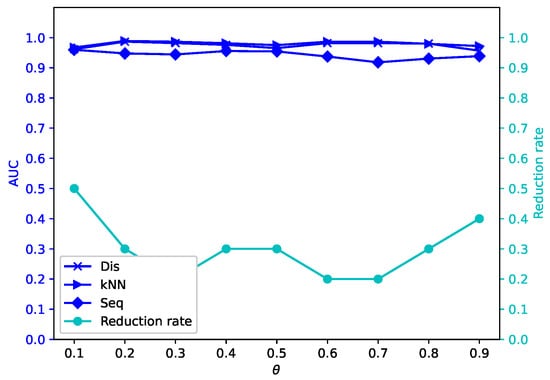

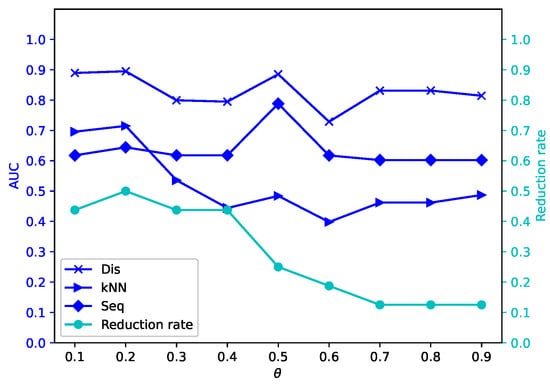

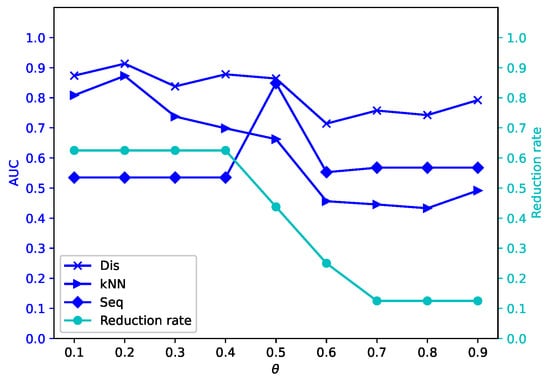

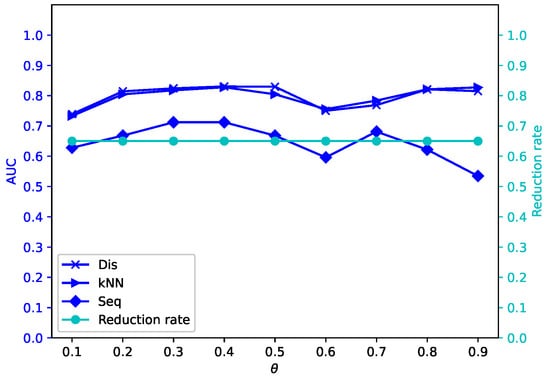

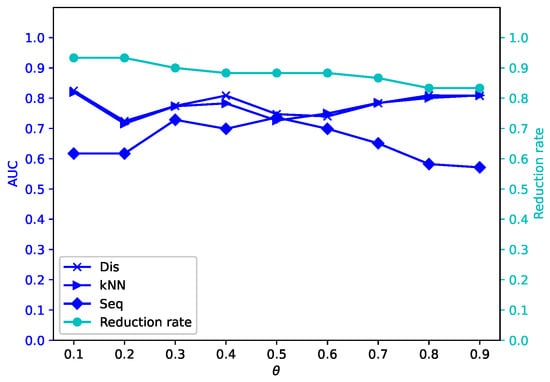

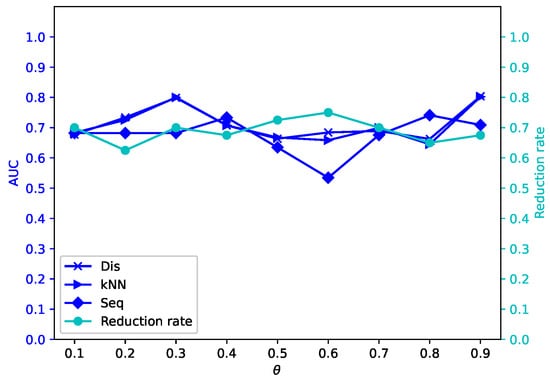

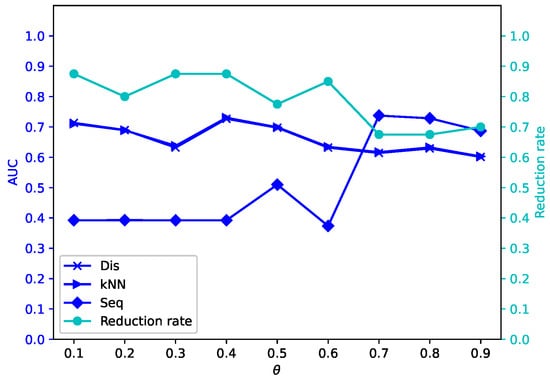

As has been shown in the experiments on clustering and outlier detection, the optimal parameter differs across datasets and tasks. In this subsection, the influence of the parameter θ and the missing rate is studied. For the sake of simplicity, only one of the two proposed algorithms and the task of outlier detection are considered.

In Definition 11, θ has a significant influence on tolerance classes. The tolerance classes are then used to define the UMs. Finally, the proposed attribute reduction algorithms based on the UMs are influenced by θ, and the outlier detection experiments are carried out on the reduced attributes. To quantify the influence of θ and the missing rate, two indicators are used: AUC and reduction rate. The reduction rate is defined as the ratio of the number of reduced attributes to the total number of attributes.

The results are shown in Figures 5–20. The optimal parameter generally depends on the specific dataset. On some datasets, such as Bw, Ly, Sf, and Io, all three outlier detection algorithms sustain a high performance for different θ and missing rates, while on others the performance of each outlier detection algorithm changes with θ. On some datasets, such as Mo, Vr, and Io, the reduction rate generally becomes smaller when θ becomes larger. On some datasets, such as Bw, Sp, and Wa, the reduction rate does not change much for different θ. No clear correlation is observed between and performance trends.

Figure 5.

Average reduction rates and AUC values for different by -MSVIS on Mo ().

Figure 6.

Average reduction rates and AUC values for different by -MSVIS on Mo ().

Figure 7.

Average reduction rates and AUC values for different by -MSVIS on Bw ().

Figure 8.

Average reduction rates and AUC values for different by -MSVIS on Bw ().

Figure 9.

Average reduction rates and AUC values for different by -MSVIS on Ly ().

Figure 10.

Average reduction rates and AUC values for different by -MSVIS on Ly ().

Figure 11.

Average reduction rates and AUC values for different by -MSVIS on Sf ().

Figure 12.

Average reduction rates and AUC values for different by -MSVIS on Sf ().

Figure 13.

Average reduction rates and AUC values for different by -MSVIS on Vr ().

Figure 14.

Average reduction rates and AUC values for different by -MSVIS on Vr ().

Figure 15.

Average reduction rates and AUC values for different by -MSVIS on Sp ().

Figure 16.

Average reduction rates and AUC values for different by -MSVIS on Sp ().

Figure 17.

Average reduction rates and AUC values for different by -MSVIS on Wa ().

Figure 18.

Average reduction rates and AUC values for different by -MSVIS on Wa ().

Figure 19.

Average reduction rates and AUC values for different by -MSVIS on Io ().

Figure 20.

Average reduction rates and AUC values for different by -MSVIS on Io ().

6.4. Discussion

In this study, we primarily focused on establishing the theoretical and empirical validity of our unsupervised attribute reduction framework for multiset-valued data in static, small-to-medium-scale scenarios.

The computational complexity of our framework, , as noted in Section 5, may pose challenges for ultra-high-dimensional data (e.g., thousands of attributes). However, our parameter analysis (Section 6.3) demonstrates that the proposed algorithms effectively reduce dimensionality even in moderately sized datasets (e.g., the Sports Article dataset with 59 attributes, Table 4), suggesting potential scalability with optimizations. For instance, the iterative attribute selection process (Algorithms 1 and 2) inherently prioritizes critical features, which could mitigate redundancy in high-dimensional spaces.

We acknowledge that further adaptations such as integrating sparse representation techniques or parallel computing are necessary for large-scale applications. These extensions, while beyond the scope of this foundational work, are highlighted as future directions in the conclusion (Section 7). We believe our framework’s emphasis on frequency preservation and granularity control (via θ) provides a robust theoretical basis for addressing high-dimensional challenges, particularly in domains like bioinformatics or text mining where multiset-valued representations naturally arise.

While automatic optimization of θ (e.g., via metaheuristic algorithms or adaptive thresholds) would enhance usability, we intentionally preserved θ as a user-defined parameter in this foundational work. This design choice allows domain experts to align granularity with their specific objectives; for instance, selecting a smaller θ for fine-grained clustering or a larger θ for efficient outlier detection. Our current framework provides a theoretical and empirical basis for such extensions, prioritizing methodological transparency and reproducibility over prescriptive parameterization. Automated parameter adaptation remains a vital direction for future research, particularly in high-dimensional or streaming scenarios.

The selection of the parameter θ plays a pivotal role in balancing granularity and computational efficiency in our framework. As shown in Section 6.3, θ acts as a threshold to control the similarity between multisets via the Hellinger distance, directly influencing the size of θ-tolerance classes and subsequently the uncertainty measures. While our experiments demonstrate the task-dependent nature of θ (e.g., smaller θ for fine-grained clustering, larger θ for efficient outlier detection), we emphasize that its empirical tuning aligns with the common practice in granular computing and rough set-based methods, where domain knowledge often guides parameter selection. Future work could explore automated optimization via metaheuristic algorithms (e.g., genetic algorithms) or adaptive thresholding based on data characteristics (e.g., the missing rate), particularly in scenarios requiring minimal human intervention.

Although our current experiments focus on small-to-medium-scale datasets, the proposed framework’s principles are extensible to high-dimensional multiset-valued data. The iterative attribute selection process (Algorithms 1 and 2) inherently prioritizes features with discriminative frequency distributions, which could mitigate the curse of dimensionality by eliminating redundant attributes. However, the computational complexity of may limit scalability for ultra-high-dimensional scenarios (e.g., gene expression data with thousands of features). To address this, future extensions could integrate sparse representation techniques (e.g., L1-norm regularization) to enhance efficiency or adopt parallel computing architectures for distributed attribute reduction. These adaptations would align our method with emerging needs in bioinformatics and text mining, where multiset-valued representations naturally encode frequency-rich semantics.

To align with real-world scenarios where datasets with excessive missing rates are less practical, we focus on moderate missing rates (0.1 and 0.2) in our experiments. These values reflect common missing data levels in real applications. The results in Figures 5–20 demonstrate that the proposed method remains robust under these settings.

7. Conclusions

In this paper, datasets containing missing information values are transformed into an MSVIS model, ensuring that the frequencies of different attribute values are taken into full consideration. This data-driven approach towards handling missing data is user-friendly and entirely based on the dataset itself. Furthermore, novel unsupervised attribute reduction algorithms for an MSVIS are presented in this paper, leveraging the concepts of -information amount and -information entropy, which serve as measures of uncertainty. The proposed method outperforms existing SVIS-based approaches (e.g., -SVIS and -SVIS) in clustering accuracy and in outlier detection AUC, aligning with Huang et al. [22] on the importance of frequency preservation. However, our unsupervised framework contrasts with supervised methods by eliminating dependency on decision attributes, as emphasized in Peng et al. [14]. Additionally, an analysis of the parameters and is conducted to provide further insights into their impact and importance within the proposed methodologies.

Theoretically, this work bridges granular computing and rough set theory by formalizing multisets as rational probability distributions, advancing uncertainty measurement frameworks. Practically, the method enables efficient preprocessing of medical or IoT datasets with missing values (e.g., the symptom data in Table 1) without imputation biases. While the proposed framework demonstrates robust performance and practical effectiveness, some limitations warrant attention. One notable drawback is the relatively high time complexity of building information granules from a tolerance relation; hash-based granulation could reduce this cost and improve scalability for real-world applications. In addition, the MSVIS in this paper focuses primarily on discrete data, and extending the methodology to other data types is an interesting direction for future research. Our forthcoming work will focus on developing time-efficient unsupervised attribute reduction techniques for gene expression data that may contain missing information values.
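One possible reading of hash-based granulation is sketched below; it is an illustration under stated assumptions rather than the method the authors plan to develop. Objects whose normalized multisets coincide on every selected attribute have the same distances to all other objects, so they can be bucketed by a hashable key and the pairwise distance computation can be restricted to one representative per bucket. The names `description_key`, `group_by_description`, and `pair_counts`, and the toy table, are hypothetical.

```python
from collections import defaultdict
from fractions import Fraction


def description_key(row, attrs):
    """Hashable key: for each attribute, the sorted (value, probability) pairs."""
    key = []
    for a in attrs:
        total = sum(row[a].values())
        key.append(tuple(sorted((v, Fraction(c, total))
                                for v, c in row[a].items())))
    return tuple(key)


def group_by_description(table, attrs):
    """Bucket objects that share the same normalized description."""
    buckets = defaultdict(list)
    for x, row in table.items():
        buckets[description_key(row, attrs)].append(x)
    return list(buckets.values())


def pair_counts(table, attrs):
    """Pairwise comparisons needed before and after bucketing."""
    n = len(table)
    g = len(group_by_description(table, attrs))
    return n * (n - 1) // 2, g * (g - 1) // 2


# Toy multiset-valued table (hypothetical data).
table = {
    "x1": {"a": {"high": 3}, "b": {"yes": 1, "no": 1}},
    "x2": {"a": {"high": 1}, "b": {"yes": 2, "no": 2}},
    "x3": {"a": {"low": 3}, "b": {"yes": 1, "no": 1}},
    "x4": {"a": {"high": 3}, "b": {"yes": 1, "no": 1}},
}

print(group_by_description(table, ["a", "b"]))
print(pair_counts(table, ["a", "b"]))
```

The saving depends on how many objects share a description, so this kind of bucketing helps most on discrete data with many repeated rows.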

Author Contributions

Methodology, X.G.; Software, Y.L. and H.L.; Formal analysis, Y.P. and H.L.; Investigation, X.G., Y.P. and Y.L.; Data curation, Y.L.; Writing—original draft, X.G.; Writing—review & editing, Y.P. and H.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by Guangdong Key Disciplines Project (2024ZDJS137).

Data Availability Statement

No new data were created or analyzed in this study.

Acknowledgments

The authors would like to thank the editors and the anonymous reviewers for their valuable comments and suggestions, which have helped immensely in improving the quality of the paper.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

Proposition A1.

Given an MSVIS , and , we have

Moreover, if ; if .

Proof.

Since is a tolerance relation on U, we have ,

So , . This implies that

By Definition 13,

If , then , . Thus, .

If , then , . Thus, . □

Proposition A2.

Given an MSVIS , and , we have

Moreover, if ; if .

Proof.

Since is a tolerance relation on U, we have ,

So , . This implies that

By Definition 13,

If , then , . Thus, .

If , then , . Thus, . □

Proposition A3.

Given an MSVIS , and , we have

Moreover, if ; if .

Proof.

Since is a tolerance relation on U, we have ,

Thus , . This implies that

By Definition 14,

If , then , . So .

If , then , . So . □

Proposition A4.

Let be an MSVIS.

If , then , .

If , then , .

Proof.

(1) Since , , we have . So

By Definition 14,

Consequently,

Since , , we have . Thus

By Definition 14,

Thus, . □

Theorem A1.

Let be an MSVIS, and . The following three conditions are equivalent:

;

;

.

Proof.

⇔ and ⇒ are obvious.

⇒. Suppose that . Then,

Therefore,

Note that ; then, , . This implies that

Therefore, , It follows that ,

Thus, Hence,

⇒. Suppose that . Then,

So

Note that ; then, , . This implies that

So, ,

Consequently, , ,

Therefore,

□

Figure A1. The core code of the proposed algorithms.

References

1. Kang, H. The prevention and handling of the missing data. Korean J. Anesthesiol. 2013, 64, 402–406.

2. Asendorpf, J.B.; Schoot, R.V.D.; Denissen, J.J.; Hutteman, R. Reducing bias due to systematic attrition in longitudinal studies: The benefits of multiple imputation. Int. J. Behav. Dev. 2014, 38, 453–460.

3. Zadeh, L.A. Fuzzy logic equals computing with words. IEEE Trans. Fuzzy Syst. 1996, 4, 103–111.

4. Pawlak, Z. Rough sets. Int. J. Comput. Inf. Sci. 1982, 11, 341–356.

5. Pal, S.K.; Meher, S.K.; Dutta, S. Class-dependent rough-fuzzy granular space, dispersion index and classification. Pattern Recognit. 2012, 45, 2690–2707.

6. Yao, Y.Y. Granular computing for data mining. In Proceedings of the SPIE Conference on Data Mining, Intrusion Detection, Information Assurance, and Data Networks Security, Kissimmee, FL, USA, 7–18 April 2006; pp. 1–12.

7. Dong, L.J.; Chen, D.G.; Wang, N.; Lu, Z.H. Key energy-consumption feature selection of thermal power systems based on robust attribute reduction with rough sets. Inf. Sci. 2020, 532, 61–71.

8. Dai, J.H.; Tian, H.W. Entropy measures and granularity measures for set-valued information systems. Inf. Sci. 2013, 240, 72–82.

9. Chen, Z.C.; Qin, K.Y. Attribute reduction of set-valued information systems based on a tolerance relation. Comput. Sci. 2010, 23, 18–22.

10. Xie, X.L.; Li, Z.W.; Zhang, P.F.; Zhang, G.Q. Information structures and uncertainty measures in an incomplete probabilistic set-valued information system. IEEE Access 2019, 7, 27501–27514.

11. Chen, L.J.; Liao, S.M.; Xie, N.X.; Li, Z.W.; Zhang, G.Q.; Wen, C.F. Measures of uncertainty for an incomplete set-valued information system with the optimal selection of subsystems: Gaussian kernel method. IEEE Access 2020, 8, 212022–212035.

12. Zhang, Q.L.; Chen, Y.Y.; Zhang, G.Q.; Li, Z.W.; Chen, L.J.; Wen, C.F. New uncertainty measurement for categorical data based on fuzzy information structures: An application in attribute reduction. Inf. Sci. 2021, 580, 541–577.

13. Gao, C.; Lai, Z.H.; Zhou, J.; Wen, J.J.; Wong, W.K. Granular maximum decision entropy-based monotonic uncertainty measure for attribute reduction. Int. J. Approx. Reason. 2019, 104, 9–24.

14. Peng, Y.C.; Zhang, Q.L. Uncertainty measurement for set-valued data and its application in feature selection. Int. J. Fuzzy Syst. 2022, 24, 1735–1756.

15. Singh, S.; Shreevastava, S.; Som, T.; Somani, G. A fuzzy similarity-based rough set approach for attribute selection in set-valued information systems. Soft Comput. 2020, 24, 4675–4691.

16. Zhang, Q.L.; Li, L.L. Attribute reduction for set-valued data based on D-S evidence theory. Int. J. Gen. Syst. 2022, 51, 822–861.

17. Liu, C.; Wang, L.; Yang, W.; Zhong, Q.Q.; Li, M. Incremental attribute reduction method for set-valued decision information system with variable attribute sets. J. Comput. Appl. 2022, 42, 463–468.

18. Lang, G.M.; Li, Q.G.; Yang, T. An incremental approach to attribute reduction of dynamic set-valued information systems. Int. J. Mach. Learn. Cybern. 2014, 5, 775–788.

19. Huang, D.; Lin, H.; Li, Z.W. Information structures in a multiset-valued information system with application to uncertainty measurement. J. Intell. Fuzzy Syst. 2022, 43, 7447–7469.

20. Song, Y.; Lin, H.; Li, Z.W. Outlier detection in a multiset-valued information system based on rough set theory and granular computing. Inf. Sci. 2024, 657, 119950.

21. Zhao, X.R.; Hu, B.Q. Three-way decisions with decision-theoretic rough sets in multiset-valued information tables. Inf. Sci. 2020, 507, 684–699.

22. Huang, D.; Chen, Y.Y.; Liu, F.; Li, Z.W. Feature selection for multiset-valued data based on fuzzy conditional information entropy using iterative model and matrix operation. Appl. Soft Comput. 2023, 142, 110345.

23. Li, Z.W.; Yang, T.L.; Li, J.J. Semi-supervised attribute reduction for partially labelled multiset-valued data via a prediction label strategy. Inf. Sci. 2023, 634, 477–504.

24. Feng, W.B.; Sun, T.T. A dynamic attribute reduction algorithm based on relative neighborhood discernibility degree. Sci. Rep. 2024, 14, 15637.

25. He, J.L.; Zhang, G.Q.; Huang, D.; Wang, P.; Yu, G.J. Measures of uncertainty for partially labeled categorical data based on an indiscernibility relation: An application in semi-supervised attribute reduction. Appl. Intell. 2023, 53, 29486–29513.

26. Zonoozi, M.H.P.; Seydi, V.; Deypir, M. An unsupervised adversarial domain adaptation based on variational auto-encoder. Mach. Learn. 2025, 114, 128.

27. Chen, S.K.; Zheng, W.L. RRMSE-enhanced weighted voting regressor for improved ensemble regression. PLoS ONE 2025, 20, e0319515.

28. Jena, S.P.; Ghosh, S.K.; Tripathy, B.K. On the theory of bags and lists. Inf. Sci. 2001, 132, 241–254.

29. Nikulin, M.S. Hellinger distance. In Encyclopedia of Mathematics; Hazewinkel, M., Ed.; Springer Science: Berlin/Heidelberg, Germany, 2001; ISBN 978-1-55608-010-4.

30. Dua, D.; Graff, C. UCI Machine Learning Repository; University of California: Irvine, CA, USA, 2017.

31. Fayyad, U.M.; Irani, K.B. Multi-Interval Discretization of Continuous-Valued Attributes for Classification Learning. In Proceedings of the 13th International Joint Conference on Artificial Intelligence, Chambery, France, 28 August–3 September 1993; pp. 1022–1027.

32. Davies, D.L.; Bouldin, D.W. A cluster separation measure. IEEE Trans. Pattern Anal. Mach. Intell. 1979, 2, 224–227.

33. Calinski, T.; Harabasz, J. A dendrite method for cluster analysis. Commun. Stat. 1974, 3, 1–27.

34. Rousseeuw, P.J. Silhouettes: A graphical aid to the interpretation and validation of cluster analysis. J. Comput. Appl. Math. 1987, 20, 53–65.

35. Velayutham, C.; Thangavel, K. Unsupervised quick reduct algorithm using rough set theory. J. Eng. Sci. Technol. 2011, 9, 193–201.

36. Velayutham, C.; Thangavel, K. A novel entropy based unsupervised feature selection algorithm using rough set theory. In Proceedings of the IEEE-International Conference on Advances in Engineering, Science and Management (ICAESM-2012), Nagapattinam, India, 30–31 March 2012; pp. 156–161.

37. Knorr, E.M.; Ng, R.T.; Tucakov, V. Distance-based outliers: Algorithms and applications. VLDB J. 2000, 8, 237–253.

38. Ramaswamy, S.; Rastogi, R.; Shim, K. Efficient algorithms for mining outliers from large data sets. In Proceedings of the 2000 ACM SIGMOD International Conference on Management of Data, Dallas, TX, USA, 15–18 May 2000; pp. 427–438.

39. Jiang, F.; Sui, Y.F.; Cao, C.G. Some issues about outlier detection in rough set theory. Expert Syst. Appl. 2009, 36, 4680–4687.

40. Campos, G.O.; Zimek, A.; Sander, J.; Campello, R.; Micenkova, B.; Schubert, E.; Assent, I.; Houle, M.E. On the evaluation of unsupervised outlier detection: Measures, datasets, and an empirical study. Data Min. Knowl. Discov. 2016, 30, 891–927.

41. Hawkins, S.; He, H.X.; Williams, G.J.; Baxter, R.A. Outlier detection using replicator neural networks. In Proceedings of the 4th International Conference on Data Warehousing and Knowledge Discovery, Aix-en-Provence, France, 4–6 September 2002; pp. 170–180.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).