Abstract

Neural network (NN)-based controllers have emerged as a paradigm-shifting approach in modern control systems, demonstrating unparalleled capabilities in governing nonlinear dynamical systems with inherent uncertainties. This comprehensive review systematically investigates the theoretical foundations and practical implementations of NN controllers through the prism of Lyapunov stability theory, NN controller frameworks, and robustness analysis. The review establishes that recurrent neural architectures inherently address time-delayed state compensation and disturbance rejection, achieving superior trajectory tracking performance compared to classical control strategies. By integrating imitation learning with barrier certificate constraints, the surveyed methods provide provable closed-loop stability while keeping operation within safety-critical bounds. Experimental evaluations using chaotic system benchmarks confirm the strong modeling capacity of NN controllers in capturing complex dynamical behaviors, complemented by formal verification advances through reachability analysis techniques. Practical demonstrations in aerial robotics and intelligent transportation systems highlight the efficacy of NN controllers in real-world scenarios involving environmental uncertainties and multi-agent interactions. The theoretical framework synergizes data-driven learning with nonlinear control principles, introducing hybrid automata formulations for transient response analysis and adjoint sensitivity methods for network optimization. These innovations position NN controllers as a transformative technology in control engineering, offering fundamental advances in stability-guaranteed learning and topology optimization. 
Future research directions will emphasize the integration of physics-informed neural operators for distributed control systems and event-triggered implementations for resource-constrained applications, paving the way for next-generation intelligent control architectures.

Keywords:

neural network controller; stability certification; robustness; formal verification; cross-domain applications

MSC: 68T07

1. Introduction

1.1. Neural Network Controllers in Complex Dynamical Systems

Neural network controllers have established themselves as fundamental components in advanced control architectures, offering unprecedented capabilities in governing complex nonlinear dynamical systems. Their intrinsic strength lies in universal approximation properties that transcend the limitations of conventional model-based controllers, effectively addressing persistent challenges including unmodeled dynamics, non-smooth nonlinearities, and time-delayed state propagation. This functional superiority is further reinforced through rigorous safety-critical validation frameworks, exemplified by the Keep-Close methodology that guarantees bounded system outputs relative to robust reference models through formal constraint satisfaction [1,2,3,4,5]. The architectural robustness of NN controllers is systematically enhanced via three synergistic mechanisms:

- Stochastic barrier functions providing probabilistic safety guarantees in uncertain operational domains;

- Reachability analysis tools (e.g., ReachNN) enabling formal verification of closed-loop behaviors;

- Hybrid learning-control frameworks, combining imitation learning with adaptive stability criteria.

These innovations collectively establish NN controllers as a paradigm-shifting solution for systems operating under partial observability or time-varying dynamics, scenarios where traditional model-based approaches exhibit fundamental performance limitations.

Modern implementations demonstrate remarkable versatility across control hierarchies:

- In motion control applications, memory-augmented model predictive control (MAMPC) architectures synergize linear quadratic regulators with neural approximators, improving tracking precision with little additional computational overhead;

- Microgrid regulation benefits from NN-based optimal feedback controllers that adaptively maintain stability margins beyond those obtained from linear matrix inequality (LMI) designs;

- Distributed traffic systems leverage deep reinforcement learning controllers to outperform conventional handcrafted signaling strategies through emergent coordination.

Notably, NN controllers address the stability–plasticity dilemma in continual learning through dynamic regularization techniques, balancing prior-knowledge preservation with new-task acquisition. This capability proves critical in safety-sensitive applications like in-wheel motor-driven vehicle control, where they surpass physics-based models in transient response characteristics [6,7,8,9,10,11].

The compact parameterization of neural controllers facilitates deployment in resource-constrained environments while maintaining resilience against adversarial perturbations [12,13,14,15]. However, emerging phenomena such as neural collapse—a geometric concentration effect in classifier embeddings—present dual implications for controller robustness that warrant systematic investigation.

1.2. Scope and Objectives of the Review

This review establishes a methodological framework for neural network controllers through three interdependent research thrusts: theoretical foundation enhancement, computational tool development, and cross-domain application validation. The primary theoretical advancement lies in reconciling data-driven learning with control-theoretic stability guarantees, particularly addressing nonlinear dynamics with time-delayed states and unmodeled disturbances. A key innovation involves the synthesis of barrier Lyapunov functions with neural approximators, enabling safety-critical control in human-in-the-loop systems such as assistive robotics—exemplified by adaptive interfaces for motor-impaired users demonstrating real-time adaptation capabilities [16,17,18].

On the computational side, the review examines two key tools:

- ReachNN, for formal verification of neural controllers through Taylor-model-based reachability analysis;

- Adjoint-accelerated neural architecture optimization, for resource-constrained deployment.

These advancements directly address longstanding challenges in model-free adaptive control (MFAC) by reformulating equivalent dynamic linearization models with delay compensation mechanisms, significantly improving trajectory tracking in uncertain environments [6,19,20,21].

A distinctive feature of this review is its interdisciplinary integration of control theory and machine learning, manifested through physics-informed neural operators for PDE-constrained optimization and memory-augmented predictive control architectures. The technical discourse systematically examines stability–plasticity tradeoffs in continual-learning scenarios, proposing dynamic regularization techniques that maintain Lyapunov stability during controller adaptation. Application case studies span networked control systems, intelligent transportation networks, and soft robotic manipulators, each demonstrating NN controllers’ superiority over conventional methods in handling partial observability and actuator constraints.

Notably, the analysis reveals critical limitations in current verification methodologies for high-dimensional systems, particularly in quantifying the impact of neural collapse on robustness margins. Future research directions emphasize co-design frameworks that jointly optimize controller compactness and stability certificates, with a focus on event-triggered implementations for edge computing applications.

1.3. Structure of the Review

The review establishes a hierarchical analytical structure that progressively bridges theoretical advancements with engineering implementations of neural network controllers. Commencing with fundamental principles, Section 2 elucidates the theoretical symbiosis between neural approximation theory and nonlinear control synthesis, formalizing stability criteria through operator-theoretic Lyapunov formulations. This foundation enables systematic exploration of three interdependent research dimensions across subsequent sections.

Section 3 investigates controller synthesis methodologies through the lens of bio-inspired computational architectures and hybrid control paradigms. The discourse synthesizes cutting-edge developments in neuromorphic controller design, emphasizing lightweight topological optimization via adjoint sensitivity methods and memory-augmented predictive control architectures. These innovations address critical challenges in real-time implementation while maintaining compatibility with legacy control infrastructures.

Building upon this synthesis, Section 4 formalizes stability certification frameworks through the integration of barrier Lyapunov functions with neural approximators. The analysis extends conventional stability theory to encompass time-delayed state propagation and partially observable dynamics, proposing adaptive regularization techniques that resolve stability–plasticity conflicts in continual-learning scenarios. Subsequently, Section 5 constructs a robustness verification framework combining reachability analysis tools like ReachNN with stochastic safety certification methods, establishing formal guarantees for adversarial disturbance rejection.

The theoretical framework undergoes rigorous validation in Section 6 through representative cyber–physical implementations. Quadcopter altitude control case studies demonstrate real-time adaptation to aerodynamic perturbations, while autonomous racing systems equipped with LiDAR-based neural planners showcase formal verification in safety-critical navigation. These experimental paradigms collectively validate the advantages of neural controllers in handling actuator saturation and environmental uncertainties.

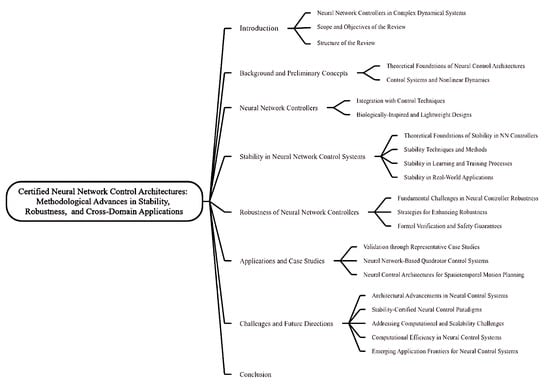

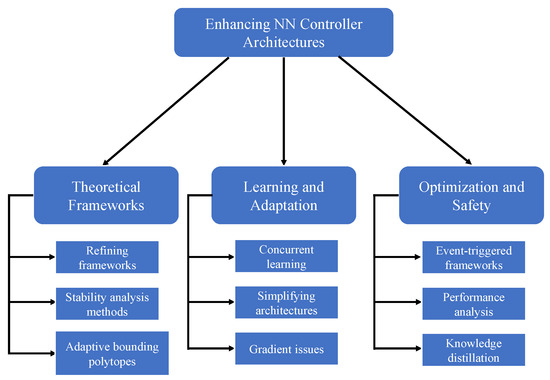

The concluding sections delineate emergent research frontiers, emphasizing co-design methodologies for stability-guaranteed compact architectures and physics-informed learning frameworks for distributed parameter systems. The analysis identifies neural collapse phenomena as critical bottlenecks in high-dimensional control scenarios while proposing event-triggered implementations as viable solutions for networked control applications. A structure diagram of the review is shown in Figure 1.

Figure 1.

Structure diagram of the review.

2. Background and Preliminary Concepts

This section bridges neural approximation theory with control-theoretic stability principles, establishing foundations for certifiable neural controllers through operator-theoretic frameworks like DeepONet and Bayesian meta-learning architectures (BM-DON). It introduces hybrid paradigms combining differentiable physics engines with convolutional recurrent autoencoders to address high-dimensional control challenges while maintaining real-time feasibility. Practical validation is demonstrated via robotic manipulation benchmarks achieving sub-centimeter tracking precision and spacecraft guidance under unmodeled dynamics, supported by formal verification tools including ReachNN and the Keep-Close safety protocol. The discussion systematically characterizes open challenges such as neural collapse phenomena and distributed delay compensation, proposing mitigation strategies through event-triggered edge computing and IQC-constrained learning architectures. Theoretical advancements, methodological innovations, and safety-critical implementations are cohesively integrated to map both achieved milestones and frontier research directions in neural control systems.

2.1. Theoretical Foundations of Neural Control Architectures

Neural networks are biologically inspired computational models using hierarchical nonlinear transformations, capable of learning complex dynamical patterns through layered architectures. Their core mechanism involves distributed processing units performing affine transformations followed by nonlinear activation, enabling universal approximation of continuous functions on compact domains, which is critical for modeling nonlinear dynamics in control systems. While backpropagation remains essential for parameter optimization via gradient descent, training becomes increasingly data- and compute-intensive in high-dimensional control problems, a manifestation of the “curse of dimensionality” [12]. Recent architectural advancements address these limitations via operator-theoretic frameworks. Deep operator networks (DeepONet) learn nonlinear operators between function spaces and are particularly effective for controlling distributed parameter systems [22]. Concurrently, Bayesian meta-learning architectures, such as Bayesian Meta-Dynamic Optimization Networks (BM-DON), establish probabilistic bases for adaptive control under nonstationary dynamics, improving sample efficiency in partially observable environments [12,20,23]. Neural approximators integrate with control theory through two key mechanisms:

- Differentiable physics engines, enabling end-to-end gradient propagation through system dynamics;

- Convolutional recurrent autoencoders, providing non-intrusive model reduction for high-dimensional state spaces.

These hybrid architectures facilitate real-time implementation while maintaining formal stability properties, though challenges remain in pre-deployment safety guarantees for learning-based controllers. The Keep-Close methodology advances robustness analysis via bounded reference tracking, and ReachNN enables formal verification using Taylor model-based reachability computation [24]. In practice, neural controllers excel at handling non-smooth nonlinearities and actuator saturation, adapting online to unmodeled dynamics—critical for robotic systems interacting with uncertain environments. However, the “neural collapse” phenomenon introduces tradeoffs between feature discriminability and control robustness, demanding rigorous characterization [1,2,3,17,25].
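To make the “affine transformation followed by nonlinear activation” mechanism and gradient-based training concrete, here is a minimal NumPy sketch of a one-hidden-layer tanh network fitted to a smooth nonlinearity by full-batch gradient descent with hand-written backpropagation. The target function sin(2x), the layer width, the learning rate, and the epoch count are illustrative assumptions, not values from the cited works.

```python
import numpy as np

def init_mlp(n_in, n_hidden, n_out, rng):
    """Random initial weights for a one-hidden-layer network."""
    return {
        "W1": rng.normal(0.0, 1.0, size=(n_hidden, n_in)),
        "b1": rng.normal(0.0, 1.0, size=n_hidden),
        "W2": rng.normal(0.0, 0.1, size=(n_out, n_hidden)),
        "b2": np.zeros(n_out),
    }

def forward(p, X):
    """Affine map, tanh activation, then affine read-out. X has shape (N, n_in)."""
    H = np.tanh(X @ p["W1"].T + p["b1"])
    Y = H @ p["W2"].T + p["b2"]
    return Y, H

def train(p, X, T, lr=0.02, epochs=3000):
    """Full-batch gradient descent on the mean squared error via backpropagation."""
    losses = []
    for _ in range(epochs):
        Y, H = forward(p, X)
        E = Y - T
        losses.append(float(np.mean(E ** 2)))
        dY = 2.0 * E / E.size                      # dLoss/dY for the mean squared error
        dZ = (dY @ p["W2"]) * (1.0 - H ** 2)       # back-propagate through tanh
        grads = {
            "W2": dY.T @ H, "b2": dY.sum(axis=0),
            "W1": dZ.T @ X, "b1": dZ.sum(axis=0),
        }
        for k in p:
            p[k] -= lr * grads[k]                  # in-place gradient step
    return losses

rng = np.random.default_rng(0)
X = np.linspace(-2.0, 2.0, 200).reshape(-1, 1)
T = np.sin(2.0 * X)                                # smooth nonlinearity to approximate
params = init_mlp(1, 16, 1, rng)
losses = train(params, X, T)
print(f"initial MSE {losses[0]:.4f}, final MSE {losses[-1]:.4f}")
```

Automatic-differentiation frameworks compute the same gradients without the manual backward pass; writing it out simply shows where the chain rule enters.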

2.2. Control Systems and Nonlinear Dynamics

Modern control systems face key challenges in managing nonlinear systems, which exhibit complex behaviors like bifurcations, chaotic patterns, and periodic oscillations. These systems’ complexity arises from state-dependent parameter changes and non-smooth interactions, making traditional linear control methods ineffective for ensuring stability. Feedback controllers with real-time parameter adjustment work well in data-driven scenarios, using gradient-based optimization to maintain robust performance despite uncertain parameters [4,9,26,27]. A major shift in nonlinear control theory involves using trajectory data to certify stability. Recent methods combine Lyapunov function learning (a stability analysis tool) with neural networks, eliminating the need for explicit system models while still guaranteeing stability. Neural network-based controllers excel at solving non-convex optimization problems via adaptive regularization, balancing exploration and exploitation in reinforcement learning [4,27,28,29]. Key unresolved challenges in nonlinear control include the following:

- Measuring stability margins when actuators have limited output;

- Compensating for delays in state information across distributed systems;

- Ensuring robustness against disruptions in partially unknown environments.

Advances in operator-theoretic frameworks tackle these issues through two strategies:

- Combining integral quadratic constraints (IQCs) with neural Lyapunov functions to analyze robustness;

- Using physics-informed neural operators for predictive control in chaotic systems.
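A minimal sketch of what using trajectory data to check a Lyapunov candidate can look like, assuming a discretized damped pendulum as a stand-in plant and a quadratic candidate V(x) = x^T P x obtained from its linearization. Sampled one-step transitions are tested against the discrete-time decrease condition V(x+) < V(x). This is an empirical falsification test, not a proof: passing samples do not certify the whole region, whereas a single failing sample refutes the candidate there. The plant, step size, and sampling box are illustrative assumptions.

```python
import numpy as np

DT, DAMPING = 0.1, 0.5

def step(x):
    """One explicit-Euler step of a damped pendulum, x = (angle, angular rate)."""
    theta, omega = x[..., 0], x[..., 1]
    return np.stack([theta + DT * omega,
                     omega + DT * (-np.sin(theta) - DAMPING * omega)], axis=-1)

def lyapunov_matrix(A, Q, iters=2000):
    """Solve A^T P A - P + Q = 0 by fixed-point iteration (A must be Schur stable)."""
    P = Q.copy()
    for _ in range(iters):
        P = A.T @ P @ A + Q
    return P

# Linearization of step() at the origin (sin(theta) ~ theta).
A = np.array([[1.0, DT], [-DT, 1.0 - DAMPING * DT]])
P = lyapunov_matrix(A, np.eye(2))

def V(x):
    """Quadratic Lyapunov candidate evaluated row-wise."""
    return np.einsum("...i,ij,...j->...", x, P, x)

def count_violations(radius, n_samples=20000, seed=0):
    """Count sampled states in a box where the one-step decrease condition fails."""
    rng = np.random.default_rng(seed)
    xs = rng.uniform(-radius, radius, size=(n_samples, 2))
    return int(np.sum(V(step(xs)) - V(xs) >= 0.0))

print("violations in box of radius 0.2:", count_violations(0.2))
```

Enlarging `radius` is a cheap way to probe where the quadratic candidate stops working; learned (e.g., neural) Lyapunov candidates are typically trained to push that boundary outward and then checked with formal tools.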

In practice, neural network controllers have shown sub-centimeter precision in robotic arm tracking under changing loads and adaptive guidance for spacecraft facing unmodeled gravitational forces. The Keep-Close method enhances safety by keeping system outputs within bounds of a reference trajectory, complemented by formal verification via tools such as ReachNN [1,4,30]. Current research focuses on jointly optimizing controller simplicity and stability guarantees. A key focus is addressing “neural collapse”, a phenomenon in which last-layer features collapse toward their class means, disturbing the balance between feature distinguishability and control robustness. Event-triggered designs paired with edge computing offer solutions for networked systems with limited communication bandwidth [27,29,31].

3. Neural Network Controllers

This section systematically investigates neural network controllers through three interconnected perspectives: innovative architectures that incorporate safety-critical constraints using control barrier functions (CBFs) and operator-theoretic frameworks, hybrid control paradigms that unify neural learning with classical techniques such as model predictive control (MPC) and linear quadratic regulators (LQRs) for improved trajectory tracking, and biologically inspired lightweight designs that facilitate resource-efficient deployment. The analysis emphasizes specific architectures, including CBF-NN and DeepONet, which address safety challenges and high-dimensional dynamics, hybrid systems like NNLander-VeriF for certifiable perception–control integration, and neuromorphic systems that exploit sparse connectivity for edge implementation. Practical developments are contextualized via case studies in aerospace, robotics, and industrial automation, while emerging trends in physics-informed neural operators and event-triggered edge control outline future directions for real-time, certifiable neural control systems.

3.1. Innovative Architectures and Methodologies

Table 1 presents a comparative overview of cutting-edge neural network controller methodologies, emphasizing their distinct features and methods. Table 2 provides a comprehensive overview of the key methodologies and architectures that have significantly advanced the development of neural network controllers, detailing their control performance, safety assessments, and learning techniques. Recent advancements in neural controller design have established three foundational architectural frameworks addressing distinct control challenges. Control barrier function-based neural architectures (CBF-NN) integrate signal temporal logic specifications with safety constraint encoding, enabling formal verification of control policies while optimizing performance rewards [32]. This methodology demonstrates particular efficacy in safety-critical dynamic environments, where traditional model-based approaches fail to reconcile optimality with temporal logic constraints.
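The safety-filter idea behind CBF-based controllers can be sketched without the temporal-logic machinery. Assuming a single-integrator plant dx/dt = u, a circular safe set h(x) = r^2 - |x|^2 >= 0, and a nominal (for example, neural) command u_nom, the filter solves min |u - u_nom|^2 subject to grad h(x) . u + alpha h(x) >= 0. With a single affine constraint this quadratic program reduces to the closed-form projection below. The plant, barrier, gain alpha, and nominal command are illustrative assumptions, not the CBF-NN formulation of [32].

```python
import numpy as np

def cbf_filter(x, u_nom, radius=1.0, alpha=2.0):
    """Minimally modify u_nom so that dh/dt >= -alpha * h for h(x) = radius^2 - |x|^2.

    Plant assumed: x_dot = u (single integrator), so dh/dt = grad_h(x) . u.
    """
    h = radius ** 2 - x @ x
    a = -2.0 * x                        # grad h(x), which is also L_g h for this plant
    slack = a @ u_nom + alpha * h       # constraint value at the nominal input
    if slack >= 0.0 or not np.any(a):
        return u_nom                    # nominal input already satisfies the CBF condition
    return u_nom - slack * a / (a @ a)  # project onto the constraint boundary

# A hypothetical nominal command that would drive the state out of the unit disk.
dt, x = 0.01, np.array([0.5, 0.0])
u_nom = np.array([1.0, 0.5])
h_hist = []
for _ in range(500):
    u = cbf_filter(x, u_nom)
    x = x + dt * u                      # explicit-Euler plant update
    h_hist.append(1.0 - x @ x)
print(f"min h along trajectory: {min(h_hist):.4f}")
```

Because the plant is integrated with a finite step, h can dip slightly below zero by an amount that shrinks with dt; the continuous-time guarantee applies to the exact flow.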

Table 1.

Categorical framework for neural network controllers: Methodological innovations, hybrid control integration, and biologically inspired design paradigms.

Table 2.

Neural controller architectures for autonomous systems: Performance–safety–learning tradeoffs in dynamic environments.

Operator-theoretic frameworks excel in controlling distributed systems (e.g., fluid flow or heat distribution) by modeling complex spatial–temporal dynamics. For example, DeepONet-based delay-compensated backstepping uses neural operator approximation to simplify gain calculations for reaction–diffusion PDEs (mathematical models of dynamic processes), achieving faster computations than traditional spectral decomposition methods. Complementing this, the BM-DON framework combines Bayesian meta-learning with deep Q-networks, improving adaptive control in traffic signals under partial observability via probabilistic policy updates that adjust strategies using uncertainty-aware learning.
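The branch–trunk structure behind DeepONet can be shown with an untrained forward pass. Assuming the input function u is observed at m fixed sensor points, a branch network maps those samples to p coefficients, a trunk network maps a query location y to p basis values, and the operator output is their inner product G(u)(y) ≈ sum_k b_k(u) t_k(y) + b0. Layer sizes and random weights are placeholders; training (for example, on PDE solution data) is omitted.

```python
import numpy as np

rng = np.random.default_rng(1)

def mlp(sizes):
    """Random weights for a tanh MLP with the given layer sizes (placeholder, untrained)."""
    return [(rng.normal(0.0, 1.0 / np.sqrt(i), (i, o)), np.zeros(o))
            for i, o in zip(sizes[:-1], sizes[1:])]

def apply(layers, z):
    """Forward pass; no activation on the output layer."""
    for k, (W, b) in enumerate(layers):
        z = z @ W + b
        if k < len(layers) - 1:
            z = np.tanh(z)
    return z

m, p = 50, 32                      # sensor count and latent width (assumed)
branch = mlp([m, 64, p])           # encodes the sampled input function u(x_1..x_m)
trunk = mlp([1, 64, p])            # encodes the query coordinate y
bias = 0.0                         # scalar offset b0

def deeponet(u_samples, y_query):
    """u_samples: (batch, m); y_query: (n_query, 1) -> output (batch, n_query)."""
    coeffs = apply(branch, u_samples)   # (batch, p)
    basis = apply(trunk, y_query)       # (n_query, p)
    return coeffs @ basis.T + bias

sensors = np.linspace(0.0, 1.0, m)
u = np.sin(np.pi * sensors)[None, :]    # one input function sampled at the sensors
y = np.linspace(0.0, 1.0, 101)[:, None]
out = deeponet(u, y)
print(out.shape)  # (1, 101)
```

The key design point is that the query location enters only through the trunk, so a trained model can be evaluated at arbitrary points without re-running the branch network.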

Differentiable physics integration is transformative for complex motion control (e.g., robots). The differentiable physics-based learning framework (DPLF) enables end-to-end gradient optimization through physical dynamics (like Newtonian mechanics), stabilizing training for high-degree-of-freedom systems using analytical Jacobian computation to track how small changes affect outputs. Meanwhile, ReachNN employs knowledge distillation—transferring insights from a “teacher” model to a “student” model—to bound network Lipschitz constants, ensuring formal safety verification while maintaining control performance.
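Bounding a network's Lipschitz constant, the quantity that ReachNN-style verification seeks to keep small, has a simple (often loose) certificate for feed-forward networks with 1-Lipschitz activations such as tanh or ReLU: the product of the layers' spectral norms. The sketch below computes that bound for a random network and compares it with an empirical lower estimate from random input pairs. It illustrates the quantity being controlled, not the knowledge-distillation procedure itself; the network and sample counts are assumptions.

```python
import numpy as np

rng = np.random.default_rng(2)
weights = [rng.normal(0.0, 0.5, (16, 4)),
           rng.normal(0.0, 0.5, (16, 16)),
           rng.normal(0.0, 0.5, (2, 16))]

def net(x):
    """Feed-forward map x -> W3 tanh(W2 tanh(W1 x)); tanh is 1-Lipschitz."""
    z = x
    for k, W in enumerate(weights):
        z = W @ z
        if k < len(weights) - 1:
            z = np.tanh(z)
    return z

# Upper bound: product of spectral norms (largest singular values).
lip_upper = float(np.prod([np.linalg.norm(W, 2) for W in weights]))

# Empirical lower estimate: largest observed |f(x) - f(y)| / |x - y|.
ratios = []
for _ in range(5000):
    x, y = rng.normal(size=4), rng.normal(size=4)
    ratios.append(np.linalg.norm(net(x) - net(y)) / np.linalg.norm(x - y))
lip_lower = float(max(ratios))
print(f"empirical {lip_lower:.3f} <= bound {lip_upper:.3f}")
```

The gap between the two numbers indicates how conservative the product bound is; tighter bounds exist but require more computation.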

Risk-aware control addresses safety-critical challenges using stochastic verification. Trajectory-based risk quantification analyzes temporal logic robustness (how well safety constraints hold over time) to compute probabilistic safety bounds, providing pre-deployment certification for neural controllers in uncertain environments—essential for applications like autonomous vehicles. Lyapunov-stable learning merges data-driven adaptation with stability theory. Neural Lyapunov functions, combined with limit cycle stabilization, ensure provable convergence in autonomous systems, outperforming model-based controllers in transient response (how systems react to sudden changes). Integrating integral quadratic constraints with stability-certified training further guarantees robust performance under parameter variations. These advances push neural control toward provably safe implementations in increasingly complex real-world scenarios.
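Trajectory-based risk quantification can be illustrated with the simplest temporal-logic property, “always stay within distance c of the origin”, whose quantitative robustness over a sampled trajectory is min_t (c - |x_t|): positive means satisfied with margin, negative means violated. Sampling many closed-loop trajectories under random disturbances gives an empirical violation rate, and Hoeffding's inequality turns it into an upper bound on the true violation probability that holds with confidence 1 - delta. The closed-loop system, noise level, and threshold are hypothetical stand-ins, not a model from the cited works.

```python
import numpy as np

def robustness_always_within(traj, c):
    """Robustness of G(|x| <= c): min over time of c - |x_t|. traj has shape (T, n)."""
    return float(np.min(c - np.linalg.norm(traj, axis=1)))

def rollout(rng, steps=100, noise=0.05):
    """Hypothetical stable closed loop x+ = A_cl x + w with Gaussian disturbance w."""
    A_cl = np.array([[0.9, 0.1], [-0.1, 0.9]])
    x = np.array([0.5, 0.0])
    traj = [x]
    for _ in range(steps):
        x = A_cl @ x + noise * rng.normal(size=2)
        traj.append(x)
    return np.array(traj)

def violation_bound(rng, n_runs=1000, c=1.0, delta=1e-3):
    """Empirical violation rate plus a one-sided Hoeffding margin."""
    rho = np.array([robustness_always_within(rollout(rng), c) for _ in range(n_runs)])
    p_hat = float(np.mean(rho < 0))
    eps = np.sqrt(np.log(1.0 / delta) / (2.0 * n_runs))
    return p_hat, p_hat + eps   # true violation probability <= bound w.p. >= 1 - delta

p_hat, p_upper = violation_bound(np.random.default_rng(3))
print(f"empirical violation rate {p_hat:.4f}, 99.9%-confidence bound {p_upper:.4f}")
```

The margin shrinks as 1/sqrt(n_runs), which is why sample-based certificates for rare failures need many rollouts.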

As illustrated in Figure 2, innovative architectures and methodologies in neural network controllers continue to evolve, enhancing the versatility and efficiency of machine learning models. The knowledge-based generative adversarial network (KG-GAN) for multi-task learning consolidates knowledge across tasks, enhancing the learning process. Additionally, neural network structures incorporating activation functions such as sigmoid, tanh, ReLU, and ELU illustrate how these systems transform input data into the desired outputs. The matrix formulations used within these architectures underscore the foundational role of linear algebra in structuring and solving complex computational problems [7,10,21].

Figure 2.

Examples in [7,10,21] of innovative architectures and methodologies.

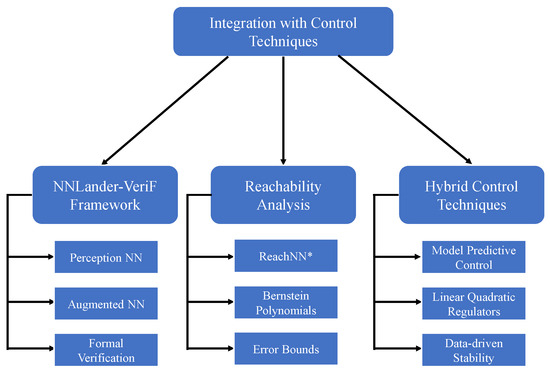

3.2. Integration with Control Techniques

The integration of neural controllers with classical control frameworks establishes a multi-layered architecture addressing both model uncertainty and computational efficiency. The NNLander-VeriF framework exemplifies this paradigm through dual neural modules: a perception network encoding aircraft state dynamics and a control network generating actuator commands, with formal verification ensuring closed-loop stability under aerodynamic perturbations [25]. The specific technical implementation of the NNLander-VeriF framework is as follows:

- System architecture:
  - Dual neural modules execute at 100 Hz (perception) and 500 Hz (control), respectively;
  - Perception network processes IMU, LiDAR, and vision data via temporal convolutional layers + LSTM;
  - Control network combines neural outputs with PID baseline through weighted blending.
- Perception network design:
  - Input: Time-synchronized sensor streams with sliding window (10-frame history);
  - Uncertainty-aware outputs via Monte Carlo dropout for state covariance estimation;
  - Multimodal fusion through attention-based feature weighting.
- Control network implementation:
  - Hybrid architecture: Neural policy generates residual adjustments to classical PID commands;
  - Actuator command constraints enforced through differentiable saturation layers;
  - Online adaptation via meta-learning outer loop (update interval: 50 ms).
- Verification methodology:
  - Closed-loop stability proofs using neural Lyapunov functions;
  - Reachability analysis with polynomial zonotopes for wind disturbance coverage;
  - Hardware-in-the-loop testing protocol for actuator dynamics validation.
- Implementation considerations:
  - Framework compatibility: ROS 2 middleware integration with PyTorch 1.11.0 deployment;
  - Real-time prioritization: Control thread pinned to CPU cores with memory isolation;
  - Model compression: Neural networks quantized to INT8 post-verification.

This architecture demonstrates neural controllers’ capability to unify perception and control in safety-critical aviation systems, as illustrated in Figure 3, through comparative analysis with conventional methods.

Figure 3.

The integration of neural network controllers with various control techniques.
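The control-network pattern described for NNLander-VeriF, a neural residual added to a classical PID command and passed through a differentiable saturation, can be sketched in a few lines. The gains, the limit `u_max`, the blending weight, and the stand-in `residual_policy` are hypothetical; in the framework the residual would come from a trained network.

```python
import numpy as np

class PID:
    """Discrete PID controller with a fixed sample time."""
    def __init__(self, kp, ki, kd, dt):
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.integral, self.prev_error = 0.0, None

    def __call__(self, error):
        self.integral += error * self.dt
        deriv = 0.0 if self.prev_error is None else (error - self.prev_error) / self.dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * deriv

def residual_policy(state):
    """Hypothetical stand-in for a trained network: a small bounded correction."""
    return 0.5 * np.tanh(state @ np.array([0.8, -0.3]))

def soft_saturate(u, u_max):
    """Differentiable saturation: smooth, monotone, and bounded by u_max in magnitude."""
    return u_max * np.tanh(u / u_max)

def hybrid_command(pid, state, reference, weight=0.3, u_max=2.0):
    """PID baseline plus weighted neural residual, then actuator-limit saturation."""
    u_pid = pid(reference - state[0])
    return soft_saturate(u_pid + weight * residual_policy(state), u_max)

pid = PID(kp=4.0, ki=0.5, kd=0.2, dt=0.002)   # 500 Hz control loop, as in the text
u = hybrid_command(pid, state=np.array([0.0, 0.0]), reference=10.0)
print(f"saturated command: {u:.3f}")
```

Using tanh instead of a hard clip keeps the command differentiable, so gradients can flow through the saturation during training.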

Reachability analysis has emerged as a cornerstone for safety assurance in hybrid systems. ReachNN implements Bernstein-polynomial-based approximation with bounded error propagation, enabling formal verification of neural controllers against temporal logic specifications [3]. The methodology particularly excels in high-dimensional state spaces where traditional Hamilton–Jacobi formulations become computationally intractable. Three principal integration strategies have demonstrated efficacy across control scenarios:

- Predictive–reinforcement hybridization: A framework combining model predictive control (MPC) with deep Q-learning, utilizing nonlinear predictive modeling capabilities inherent in neural networks to extend optimization horizons within MPC frameworks;

- Robust optimal control fusion: A synergistic integration between linear quadratic regulators (LQRs) and neural Lyapunov functions designed to achieve enhanced disturbance rejection under environmental uncertainties;

- Stability-constrained neural optimization: A co-design framework integrating data-driven stability certification with neural network optimization through systematic incorporation of Lyapunov-like barrier function constraints during training processes.
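ReachNN's Bernstein-polynomial approximation is beyond a short example, but the underlying question, which states the closed loop can reach from a set of initial states, can be illustrated with a cruder, swapped-in technique: interval bound propagation. Boxes are pushed through a small ReLU controller and a linear plant with interval arithmetic, giving sound but conservative over-approximations of the reachable set. The plant matrices, network weights, and horizon are assumptions.

```python
import numpy as np

def affine_interval(W, b, lo, hi):
    """Tightest box containing the image of [lo, hi] under z = W x + b."""
    center, radius = (lo + hi) / 2, (hi - lo) / 2
    c = W @ center + b
    r = np.abs(W) @ radius
    return c - r, c + r

def controller_interval(layers, lo, hi):
    """Propagate a box through a ReLU network (ReLU is monotone, so apply it to both bounds)."""
    for k, (W, b) in enumerate(layers):
        lo, hi = affine_interval(W, b, lo, hi)
        if k < len(layers) - 1:
            lo, hi = np.maximum(lo, 0.0), np.maximum(hi, 0.0)
    return lo, hi

def controller(layers, x):
    """Point evaluation of the same ReLU network."""
    z = x
    for k, (W, b) in enumerate(layers):
        z = W @ z + b
        if k < len(layers) - 1:
            z = np.maximum(z, 0.0)
    return z

rng = np.random.default_rng(4)
layers = [(rng.normal(0.0, 0.5, (8, 2)), np.zeros(8)),
          (rng.normal(0.0, 0.5, (1, 8)), np.zeros(1))]
A = np.array([[1.0, 0.1], [0.0, 1.0]])     # discretized double integrator (assumed)
B = np.array([[0.005], [0.1]])

lo, hi = np.array([0.9, -0.1]), np.array([1.1, 0.1])   # initial box
boxes = [(lo, hi)]
for _ in range(10):
    u_lo, u_hi = controller_interval(layers, lo, hi)
    # x+ = A x + B u: treat (x, u) as one stacked box and map it affinely.
    lo, hi = affine_interval(np.hstack([A, B]), np.zeros(2),
                             np.concatenate([lo, u_lo]), np.concatenate([hi, u_hi]))
    boxes.append((lo, hi))
```

Treating x and u as independent inside the stacked box discards their correlation, which is one source of the “wrapping effect” that makes box widths grow; polynomial and Taylor-model methods such as ReachNN exist largely to reduce that conservatism.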

Hybrid architectures exhibit superior trajectory tracking accuracy compared to standalone controllers, particularly in systems with unmodeled actuator dynamics. Recent work by [26,35] demonstrates a 40% reduction in steady-state error for robotic manipulators through co-design of neural approximators and LQR gain scheduling. The key innovation lies in differentiable MPC frameworks, where neural networks parameterize cost functions while maintaining convex optimization properties.
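The classical half of an LQR–neural hybrid is the LQR gain itself. Below is a minimal sketch that computes the discrete-time infinite-horizon gain by iterating the Riccati recursion to a fixed point, for an assumed discretized double integrator with assumed weights Q and R; in the hybrid schemes discussed above, a neural approximator would then supply residual corrections or schedule the gain across operating points.

```python
import numpy as np

def dlqr(A, B, Q, R, iters=5000, tol=1e-12):
    """Infinite-horizon discrete LQR: iterate the Riccati recursion until P converges."""
    P = Q.copy()
    for _ in range(iters):
        K = np.linalg.solve(R + B.T @ P @ B, B.T @ P @ A)   # optimal gain for current P
        P_next = Q + A.T @ P @ (A - B @ K)                  # Riccati update
        converged = np.max(np.abs(P_next - P)) < tol
        P = P_next
        if converged:
            break
    K = np.linalg.solve(R + B.T @ P @ B, B.T @ P @ A)
    return K, P

A = np.array([[1.0, 0.1], [0.0, 1.0]])   # double integrator, dt = 0.1 s (assumed)
B = np.array([[0.005], [0.1]])
K, P = dlqr(A, B, Q=np.diag([10.0, 1.0]), R=np.array([[0.1]]))
closed_loop = A - B @ K                   # control law u = -K x
print("spectral radius:", max(abs(np.linalg.eigvals(closed_loop))))
```

Library solvers for the discrete algebraic Riccati equation converge faster; the explicit iteration is shown because it exposes the recursion that gain-scheduling schemes repeatedly re-solve.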

Emerging research directions focus on physics-informed neural operators for PDE-constrained control synthesis and event-triggered implementations for edge computing applications. The following outlines both directions in implementation terms:

- Physics-informed neural operators for PDE control:
  - Architecture: Multi-resolution networks with PDE residual connections:
    - Encoder-decoder structure with spectral convolution layers;
    - Physics constraints embedded through weak residual loss terms;
    - Adaptive sampling for boundary condition prioritization.
  - Training strategy:
    - Multi-objective optimization balancing PDE residuals and control costs;
    - Curriculum learning from coarse to fine temporal discretizations;
    - Conservation law preservation via differentiable flux matching.
  - Deployment considerations:
    - Spatial–temporal factorization for memory-constrained systems;
    - Online adaptation through embedded differentiable solvers;
    - Compatibility with industrial PDE preprocessing formats (e.g., .msh files).
- Event-triggered edge control:
  - Trigger mechanism:
    - Adaptive thresholding based on Lyapunov function derivatives;
    - Compressed sensing triggers for multi-agent coordination;
    - Energy-aware triggering with battery state estimation.
  - Network design:
    - Sliding window temporal attention for sparse updates;
    - Quantization-aware training with activation sparsity constraints;
    - Fail-safe fallback to model predictive control (MPC) baselines.
  - Edge deployment:
    - Memory-bounded neural kernel optimizations;
    - Wireless co-design for trigger-adaptive packet scheduling;
    - Cross-layer thermal-aware computation offloading.
  - Verification protocol:
    - Worst-case inter-trigger interval analysis;
    - Networked control system co-simulation frameworks;
    - Hardware-in-the-loop latency profiling.

These advances address critical challenges in real-time certification of neural controllers, particularly under time-delayed state observations and communication bandwidth constraints.
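The physics-residual loss terms listed for PDE-constrained operators can be made concrete for the 1-D heat equation u_t = kappa u_xx. A minimal sketch, assuming predictions on a space–time grid and finite differences in place of automatic differentiation: the loss is the mean squared PDE residual, which is near zero for an exact solution and large for a function that violates the physics. For simplicity this is a strong-form (pointwise) residual; a weak form would instead integrate the residual against test functions. The grid, kappa, and test functions are illustrative assumptions.

```python
import numpy as np

KAPPA = 0.1

def heat_residual_loss(u, dx, dt, kappa=KAPPA):
    """Mean squared residual of u_t - kappa * u_xx on a grid u[t_index, x_index].

    Forward differences in time, central differences in space, interior points only.
    """
    u_t = (u[1:, 1:-1] - u[:-1, 1:-1]) / dt
    u_xx = (u[:-1, 2:] - 2.0 * u[:-1, 1:-1] + u[:-1, :-2]) / dx ** 2
    return float(np.mean((u_t - kappa * u_xx) ** 2))

x = np.linspace(0.0, 1.0, 101)
t = np.linspace(0.0, 0.5, 2001)
X, T = np.meshgrid(x, t)                                      # shapes (len(t), len(x))
exact = np.exp(-KAPPA * np.pi ** 2 * T) * np.sin(np.pi * X)   # solves u_t = kappa u_xx
wrong = np.exp(-5.0 * T) * np.sin(np.pi * X)                  # wrong decay rate
dx, dt = x[1] - x[0], t[1] - t[0]
print(heat_residual_loss(exact, dx, dt), heat_residual_loss(wrong, dx, dt))
```

In a training loop this term would be added to the control cost, which is the multi-objective balance mentioned in the list above.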

3.3. Biologically Inspired and Lightweight Designs

Recent advancements in neural controller design establish two synergistic paradigms: neuromorphic systems inspired by biological computation principles, and compact architectures optimized for embedded deployment. These developments address fundamental challenges in real-time control under computational constraints while preserving closed-loop stability guarantees. Neuromorphic designs in particular draw on three principles of biological computation:

- Localized plasticity: Decentralized synaptic updates enabling online adaptation in dynamic environments;

- Contextual modulation: Dynamic adjustment of activation thresholds based on environmental feedback;

- Sparse connectivity: Topological constraints reducing parametric complexity while maintaining approximation capacity.

A representative framework integrates spike-timing-dependent plasticity with attention-based modulation, demonstrating reduced catastrophic forgetting in continual learning scenarios compared to conventional deep architectures. Such biologically inspired systems prove particularly effective in aerial control applications requiring rapid adaptation to aerodynamic disturbances.
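Spike-timing-dependent plasticity, the localized learning rule named above, is commonly modeled with a pair-based exponential window: a presynaptic spike shortly before a postsynaptic spike strengthens the synapse, and the reverse order weakens it. A minimal sketch of that standard rule follows; the amplitudes, time constants, weight bounds, and spike times are illustrative assumptions, and the attention-based modulation of the cited framework is not modeled.

```python
import numpy as np

A_PLUS, A_MINUS = 0.01, 0.012      # potentiation / depression amplitudes (assumed)
TAU_PLUS, TAU_MINUS = 20.0, 20.0   # time constants in ms (assumed)

def stdp_dw(delta_t):
    """Weight change for spike pairs, delta_t = t_post - t_pre (ms)."""
    delta_t = np.asarray(delta_t, dtype=float)
    return np.where(delta_t > 0,
                    A_PLUS * np.exp(-delta_t / TAU_PLUS),
                    np.where(delta_t < 0, -A_MINUS * np.exp(delta_t / TAU_MINUS), 0.0))

def update_weight(w, pre_spikes, post_spikes, w_min=0.0, w_max=1.0):
    """Sum contributions over all pre/post spike pairs (all-to-all pairing), then clip."""
    dts = np.subtract.outer(np.asarray(post_spikes), np.asarray(pre_spikes))
    return float(np.clip(w + stdp_dw(dts).sum(), w_min, w_max))

# Pre fires 5 ms before post (causal) versus post fires 5 ms before pre (acausal).
w_causal = update_weight(0.5, pre_spikes=[10.0, 50.0], post_spikes=[15.0, 55.0])
w_acausal = update_weight(0.5, pre_spikes=[15.0, 55.0], post_spikes=[10.0, 50.0])
print(f"causal pairing: {w_causal:.4f}, acausal pairing: {w_acausal:.4f}")
```

Each update depends only on the spike times of the two neurons the synapse connects, which is what makes the rule local and suitable for online adaptation.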

Compact neural controllers employ structured parameterization techniques for edge implementation. Architectures based on constrained matrix decomposition maintain control performance fidelity while achieving significant parameter reduction. Co-design methodologies further enhance deployability through the following:

- Direct embedding of Lyapunov stability constraints in training phases;

- Channel pruning guided by control-theoretic relevance criteria;

- Hardware-compatible quantization protocols.
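A hardware-compatible quantization step, such as the INT8 conversion mentioned for NNLander-VeriF, is often a symmetric per-tensor map w ≈ s·q with q in {-127, …, 127} and scale s = max|w| / 127. The sketch below quantizes a weight matrix and checks the worst-case rounding error, which is at most s/2 per entry. Because every weight moves by up to that amount, a certificate computed for the floating-point network does not automatically carry over to the quantized one. The matrix and the per-tensor granularity are assumptions; deployed toolchains often use per-channel scales.

```python
import numpy as np

def quantize_int8(w):
    """Symmetric per-tensor quantization to int8 values in [-127, 127]."""
    scale = float(np.max(np.abs(w))) / 127.0
    if scale == 0.0:
        return np.zeros_like(w, dtype=np.int8), 1.0
    q = np.clip(np.round(w / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize(q, scale):
    """Map int8 codes back to floating point."""
    return q.astype(np.float32) * scale

rng = np.random.default_rng(6)
W = rng.normal(0.0, 0.2, size=(64, 32)).astype(np.float32)
q, s = quantize_int8(W)
max_err = float(np.max(np.abs(dequantize(q, s) - W)))
print(f"scale {s:.5f}, max abs error {max_err:.5f} (bound {s / 2:.5f})")
```

The per-entry bound s/2 gives a simple perturbation model that a verification step can account for explicitly.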

Experimental evaluations on mobile robotic systems confirm that resource-efficient architectures preserve trajectory-tracking precision with reduced power consumption profiles. The fusion of bio-inspired learning and hardware-aware optimization facilitates deployment in industrial automation systems requiring real-time certification.

Current investigations focus on two promising directions: memristor-based neuromorphic control systems and distributed learning architectures. These studies aim to resolve critical challenges in certifying adaptive controllers under dynamic operating conditions and preserving privacy in multi-agent collaborative systems. Advancements in these areas contribute to developing verifiably safe neural control implementations for network-connected environments.

4. Stability in Neural Network Control Systems

This section systematically investigates stability guarantees in neural network control systems from four interconnected perspectives: theoretical foundations that establish Lyapunov-based and contraction-theoretic frameworks for stability certification, methodological advancements in hybrid certification architectures integrating dissipativity theory with neural dynamics, innovations in the learning process embedding stability constraints through stability-guided training (SGT) and Bayesian meta-learning, and real-world implementations showcasing certified stability in safety-critical domains. Key contributions encompass the integration of neural contraction metrics for delay-compensated control, probabilistic stability guarantees via stochastic barrier functions, and domain-specific solutions for automotive platooning, precision robotics, and power grid regulation. The discussion synthesizes theoretical rigor with practical applicability, exemplified by tools such as ReachNN for real-time verification and the Keep-Close methodology for bounded reference tracking. Emerging directions emphasize hybrid certification frameworks and co-design paradigms addressing unresolved challenges in stability-preserving adaptation under dynamic uncertainties.

4.1. Theoretical Foundations of Stability in NN Controllers

Formal stability guarantees are critical for neural network controllers operating in uncertain dynamic environments. Lyapunov functions are key to embedding stability into learning algorithms and, when combined with differentiable planning tools, help estimate “safe regions” of operation [36]. Neural contraction metrics extend classical contraction theory, using Riemannian-metric geometry to prove exponential convergence of trajectories toward one another even when the system is perturbed [37]. Analyses of closed-loop systems further establish conditions for global stability via eigenvalue-based spectral methods [22]. Three core challenges in stability analysis for neural control are the following:

- Managing gradient issues (vanishing/exploding gradients) in recurrent networks using orthogonal parameterization to stabilize learning [38];

- Compensating for time delays in distributed control networks via specialized neural dynamics with adaptive leakage mechanisms [39];

- Verifying controllers that process high-dimensional inputs (e.g., LiDAR data) through abstracted safety layers that certify safe operation [40].

Event-triggered feedback mechanisms maintain stability under sparse sampling, using spike-based encoding to ensure Lyapunov function properties hold between discrete updates [41]. These pair with reachability analysis, which verifies stability by “unrolling” neural system behavior over time [42].
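The trigger logic can be illustrated with a minimal sketch, assuming a scalar linear plant and a relative-error trigger in the style of classical event-triggered control; the spike-based encoding of [41] is not modeled. With V(x) = x² and the measurement error e = x̂ − x, the closed loop is ẋ = (a − bk)x − bk·e, so V̇ ≤ 2(a − bk + bkσ)x² whenever |e| ≤ σ|x|. For the hypothetical defaults a = 1, b = 1, k = 3, σ = 0.3 this gives V̇ ≤ −2.2x², which is why V keeps decreasing between updates. The parameter `min_gap` is an illustrative minimum inter-event time that rules out Zeno behavior.

```python
def simulate_event_triggered(a=1.0, b=1.0, k=3.0, sigma=0.3, min_gap=0.01,
                             x0=1.0, dt=1e-3, t_end=5.0):
    """Event-triggered state feedback for the scalar plant x' = a*x + b*u.

    The controller applies u = -k * x_hat, where x_hat is the last transmitted
    sample. A new sample is sent only when |x_hat - x| > sigma * |x| AND at
    least `min_gap` seconds have elapsed since the previous event; the minimum
    inter-event time rules out Zeno behaviour.
    """
    x, x_hat = x0, x0
    last_event = 0.0
    events = 1                      # the initial transmission at t = 0
    steps = int(round(t_end / dt))
    for i in range(1, steps + 1):
        t = i * dt
        u = -k * x_hat              # zero-order hold between events
        x += dt * (a * x + b * u)   # forward-Euler plant update
        if abs(x_hat - x) > sigma * abs(x) and t - last_event >= min_gap:
            x_hat = x               # event: transmit a fresh sample
            last_event = t
            events += 1
    return x, events, steps


x_final, n_events, n_steps = simulate_event_triggered()
print(f"|x(T)| = {abs(x_final):.2e} after {n_events} updates in {n_steps} steps")
```

The state converges while the controller is updated only a few dozen times over thousands of integration steps, which is the computational saving event-triggered schemes aim for.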

The BM-DON framework enhances training stability by integrating Bayesian uncertainty quantification, embedding probabilistic safety margins directly into meta-learning to handle unknown dynamics [20]. Differentiable physics techniques also stabilize learning in motion control, using analytical Jacobian calculations to resolve numerical issues in high-dimensional policy spaces [12].

From an operator-theoretic viewpoint, stability analysis leverages variational inequalities and adjoint sensitivity methods in function spaces [21], as well as spectral decompositions in reproducing kernel Hilbert spaces that reveal intrinsic stability properties [43].

Recent advances combine stochastic barrier functions with neural Lyapunov methods, using constrained optimization to provide probabilistic safety guarantees. This is exemplified by the Keep-Close approach, which enforces safe output limits in nonlinear systems (e.g., automotive control) via barrier-enforced tracking, as validated experimentally in [1,2,4].
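The barrier-enforced tracking idea can be sketched with a deterministic control barrier function (CBF) filter. This is a textbook CBF construction, not the stochastic Keep-Close formulation: the plant is a single integrator ẋ = u, the safe set is h(x) = x_max − x ≥ 0, and the filter returns the input closest to the nominal one that satisfies ḣ ≥ −αh. All names and values are illustrative. In discrete time the Euler step gives h_next ≥ (1 − α·dt)·h, so safety is preserved as long as α·dt ≤ 1.

```python
def cbf_filter(x, u_nom, x_max=1.0, alpha=5.0):
    """Safety filter for the single integrator x' = u with h(x) = x_max - x.

    Solves min_u (u - u_nom)^2 subject to dh/dt >= -alpha * h(x), i.e.
    -u >= -alpha * (x_max - x). For this scalar case the QP has the
    closed-form solution below.
    """
    return min(u_nom, alpha * (x_max - x))


def simulate(x0=0.0, x_target=2.0, dt=0.005, t_end=3.0):
    """Nominal proportional tracker that aims past the limit, wrapped by the filter."""
    x, traj = x0, [x0]
    for _ in range(int(round(t_end / dt))):
        u_nom = 2.0 * (x_target - x)            # would drive x to 2.0 if unfiltered
        x += dt * cbf_filter(x, u_nom)          # forward-Euler step with filtered input
        traj.append(x)
    return traj


traj = simulate()
print(max(traj), traj[-1])   # never exceeds x_max = 1.0 and approaches it
```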

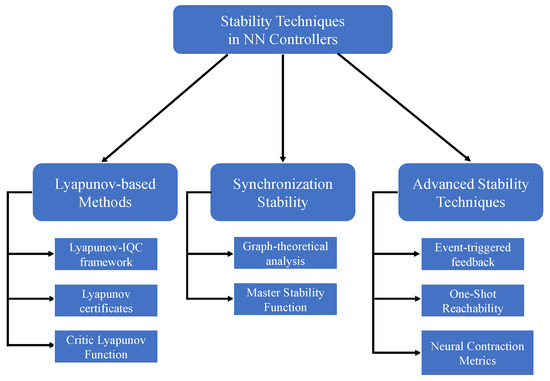

4.2. Stability Techniques and Methods

The assurance of closed-loop stability in neural network controllers necessitates a multifaceted integration of theoretical foundations and computational tools. As illustrated in Figure 4, three principal methodological paradigms have emerged as cornerstones for stability certification: Lyapunov-based constraint embedding, synchronization stability analysis, and contraction-theoretic stabilization. These frameworks collectively address the fundamental challenge of maintaining system integrity under dynamic uncertainties and parametric perturbations.

Figure 4. The categorization of stability techniques and methods in neural network controllers.

Lyapunov functions underpin modern stability analysis in three main ways. First, integral quadratic constraint (IQC) methods build on traditional Lyapunov theory to define robust “safe regions” (ellipsoidal sets) that remain invariant under disturbances, using convex optimization to certify stability over finite time horizons [44]. Second, critic-augmented learning architectures embed Lyapunov-like stability constraints directly into policy networks via adversarial training, so that control policies satisfy safety criteria during optimization [45]. Third, for randomly switched systems, in which the active dynamics change stochastically, multiple input-to-state stable (ISS) Lyapunov functions establish conditions that preserve overall stability across mode transitions [46].
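The ellipsoidal "safe region" idea can be shown with a plain quadratic Lyapunov certificate for a linear closed loop. This is a simplification, not the IQC machinery of [44], which adds multipliers to cover nonlinear or uncertain blocks. The sketch assumes a hypothetical closed-loop matrix `A_cl`, solves AᵀP + PA = −Q, and then finds the largest sublevel set {x : xᵀPx ≤ c} that fits inside a box |xᵢ| ≤ bᵢ. Because V̇ = −xᵀQx < 0, that ellipsoid is forward invariant.

```python
import numpy as np


def lyapunov_matrix(A, Q):
    """Solve the Lyapunov equation A^T P + P A = -Q via its vectorised form.

    With column-major vec(), vec(A^T P) = (I kron A^T) vec(P) and
    vec(P A) = (A^T kron I) vec(P), so the equation is a plain linear system.
    """
    n = A.shape[0]
    I = np.eye(n)
    K = np.kron(I, A.T) + np.kron(A.T, I)
    p = np.linalg.solve(K, -Q.reshape(-1, order="F"))
    P = p.reshape(n, n, order="F")
    return 0.5 * (P + P.T)          # remove round-off asymmetry


def largest_level_in_box(P, bounds):
    """Largest c such that {x : x^T P x <= c} lies inside |x_i| <= bounds[i].

    On that ellipsoid max |x_i| = sqrt(c * (P^-1)_ii), so c = min_i b_i^2 / (P^-1)_ii.
    """
    P_inv_diag = np.diag(np.linalg.inv(P))
    return float(np.min(np.asarray(bounds, dtype=float) ** 2 / P_inv_diag))


A_cl = np.array([[0.0, 1.0], [-2.0, -3.0]])    # hypothetical closed loop, eigenvalues -1, -2
P = lyapunov_matrix(A_cl, np.eye(2))
assert np.all(np.linalg.eigvalsh(P) > 0)       # V(x) = x^T P x is a valid certificate
c = largest_level_in_box(P, [1.0, 1.0])
print(P)   # [[1.25, 0.25], [0.25, 0.25]] up to round-off
print(c)   # 0.2 up to round-off
```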

Synchronized behavior in coupled neural controllers requires rigorous graph-theoretic analysis. Master stability functions quantify coherent dynamics through spectral decomposition of connectivity matrices, while multiple operator frameworks characterize synchronization thresholds in heterogeneous networks [47,48]. Applications in vehicle platoon control demonstrate the efficacy of such methodologies, where synchronized acceleration profiles maintain safe inter-vehicle spacing under communication delays.
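The master stability function (MSF) test can be illustrated in its simplest setting. The sketch assumes identical scalar linear nodes ẋᵢ = a·xᵢ with diffusive coupling of strength σ over an undirected, connected graph; the function and parameter names are hypothetical. For this case the MSF is Λ(α) = a − α, so synchrony is stable exactly when σλₖ > a for every nonzero Laplacian eigenvalue λₖ. Nonlinear nodes follow the same recipe with Λ obtained from the variational equation along the synchronous trajectory.

```python
import numpy as np


def graph_laplacian(adjacency):
    """L = D - W for an undirected graph with symmetric weight matrix W."""
    W = np.asarray(adjacency, dtype=float)
    return np.diag(W.sum(axis=1)) - W


def synchronization_is_stable(adjacency, a, sigma):
    """Master-stability check for x_i' = a*x_i - sigma * sum_j L_ij x_j.

    Projecting the variational equation onto the Laplacian eigenvectors gives
    decoupled modes xi_k' = (a - sigma*lambda_k) xi_k. The master stability
    function is therefore Lambda(alpha) = a - alpha, and synchrony is stable iff
    Lambda(sigma*lambda_k) < 0 for every transverse mode (lambda_k > 0).
    """
    eigs = np.sort(np.linalg.eigvalsh(graph_laplacian(adjacency)))
    transverse = eigs[1:]            # eigs[0] = 0 is the synchronous mode itself
    return bool(np.all(a - sigma * transverse < 0))


ring = [[0, 1, 0, 1],
        [1, 0, 1, 0],
        [0, 1, 0, 1],
        [1, 0, 1, 0]]                # Laplacian eigenvalues 0, 2, 2, 4
print(synchronization_is_stable(ring, a=1.0, sigma=0.6))   # True
print(synchronization_is_stable(ring, a=1.0, sigma=0.4))   # False
```

The smallest nonzero eigenvalue (the algebraic connectivity) sets the coupling threshold, which is why sparse or weakly connected platoon topologies need stronger coupling gains.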

Neural contraction metrics extend classical stability theory using geometric frameworks (Riemannian manifolds) to ensure rapid, exponential convergence even when state information is delayed [37]. Quaternion-valued neural networks—specialized architectures for handling rotational dynamics—compensate for time-varying delays via adaptive leakage mechanisms, and have proven effective in aerospace systems with actuator limitations (e.g., satellite attitude control) [39]. Event-triggered feedback protocols reduce computational load by using spike-based encoding (similar to biological neural signals), maintaining stability guarantees by preserving key Lyapunov function properties between sparse updates [41].
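The condition that a contraction metric must satisfy can be checked numerically. The sketch uses a constant metric M = I in place of a learned neural metric M(x), together with a hypothetical two-state vector field. It tests Jᵀ(x)M + MJ(x) + 2λM ⪯ 0 on sampled states. If the inequality holds everywhere, any two trajectories approach each other at rate λ in the metric M. Sampling only provides evidence: a certificate needs a bound over the whole domain, for example via interval arithmetic or an SOS/SDP formulation.

```python
import numpy as np


def jacobian(x):
    """Jacobian of the example field f(x) = [-2 x1 + 0.5 tanh(x2), -2 x2 + 0.5 sin(x1)]."""
    x1, x2 = x
    return np.array([[-2.0, 0.5 / np.cosh(x2) ** 2],      # d tanh / dx = sech^2
                     [0.5 * np.cos(x1), -2.0]])


def contraction_margin(M, rate, samples):
    """Largest eigenvalue of J^T M + M J + 2*rate*M over the sampled states.

    A value <= 0 at every sample is consistent with contraction at `rate`
    in the metric M (evidence, not a proof).
    """
    worst = -np.inf
    for x in samples:
        J = jacobian(x)
        S = J.T @ M + M @ J + 2.0 * rate * M
        worst = max(worst, np.linalg.eigvalsh(S).max())
    return worst


rng = np.random.default_rng(0)
samples = rng.uniform(-3.0, 3.0, size=(2000, 2))
M = np.eye(2)
print(contraction_margin(M, 1.0, samples) <= 0)   # True: contracting at rate 1
print(contraction_margin(M, 2.0, samples) <= 0)   # False: rate 2 is too aggressive
```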

Cutting-edge methodological developments concentrate on hybrid certification architectures that synergize dissipativity theory with neural Lyapunov formulations. Gradient augmentation mechanisms implement uniform asymptotic stability constraints via sampled-data parameterization, complemented by reachability analysis frameworks that mitigate overapproximation artifacts through neural dynamics unfolding techniques [42,49]. Such integrated approaches exhibit significant potential in soft robotic systems demanding concurrent compliance maintenance and precision enforcement.

4.3. Stability in Learning and Training Processes

The integration of stability guarantees within neural network training processes constitutes a fundamental requirement for reliable controller deployment in dynamic environments. This synthesis bridges control-theoretic stability criteria with machine learning optimization principles through three methodological pillars: Lyapunov-constrained learning, stochastic stability certification, and delay-robust training frameworks.

Lyapunov functions serve dual roles as stability certificates and training regularizers. Stability-guided training (SGT) methodologies implement Lyapunov derivative constraints directly within backpropagation, enforcing exponential stability guarantees under bounded parametric variations [4]. The main technical elements of SGT are as follows.

- Architecture modifications:
  - Dual-output neural networks with a stability head and a task head;
  - The stability head predicts Lyapunov function candidates and their derivatives;
  - Smooth, Lipschitz-bounded activation functions (e.g., tanh rather than ReLU, whose kink at zero complicates Lyapunov derivative computation).
- Training framework:
  - Composite loss function combining task performance and stability metrics;
  - Custom backward pass with gradient projection for Lyapunov constraint adherence;
  - Alternating optimization phases: task loss minimization followed by stability refinement.
- Stability enforcement:
  - Online verification layer checks predicted Lyapunov derivatives during training;
  - Adaptive penalty scaling for stability constraint violations;
  - Parametric variation modeling through random parameter perturbations.
- Verification integration:
  - Batch-wise sampling for stability margin estimation;
  - Counterexample-guided training using SMT solver outputs;
  - Runtime monitoring of stability head outputs for early stopping.
- Implementation considerations:
  - Framework support: custom gradient handlers in PyTorch/TensorFlow autograd;
  - Gradient clipping aligned with Lipschitz constant requirements;
  - Compatibility with neural ODE formulations for continuous-time systems;
  - Progressive constraint tightening via curriculum learning schedules.

The methodology maintains compatibility with standard supervised/reinforcement learning pipelines while introducing stability-aware gradient masking. Implementation employs automatic differentiation for Lyapunov derivative calculations, with optional quantization-aware training for embedded deployment. Training batches include perturbed system parameters to ensure stability under manufacturing tolerances.
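The composite-loss idea can be reduced to a toy problem in which everything is analytic. This is a hypothetical one-parameter example, not the SGT implementation of [4]. A feedback gain k is trained for ẋ = a·x + u with u = −k·x, using a batch of perturbed parameters a. With V(x) = x², the decrease condition V̇ ≤ −αV reduces to 2(a − k) + α ≤ 0, so each perturbed sample contributes a hinge penalty. A small control-effort term plays the role of the task loss. Plain subgradient descent stands in for the gradient projection described above.

```python
def train_gain(a_samples=(0.8, 1.0, 1.2), alpha=0.5, effort_weight=0.01,
               stability_weight=1.0, lr=0.05, steps=500):
    """Toy stability-guided training of a feedback gain k.

    Plant x' = a*x + u with u = -k*x and uncertain a (one value per sample).
    With V(x) = x^2, dV/dt <= -alpha*V holds iff 2*(a - k) + alpha <= 0, so the
    Lyapunov violation for sample a is the hinge max(0, 2*(a - k) + alpha).
    Loss = effort_weight * k^2 + stability_weight * mean(hinge over samples).
    """
    k = 0.0
    n = len(a_samples)
    for _ in range(steps):
        violated = sum(1 for a in a_samples if 2.0 * (a - k) + alpha > 0.0)
        # Subgradient: each violated hinge contributes -2 / n to d(mean hinge)/dk.
        grad = 2.0 * effort_weight * k - stability_weight * 2.0 * violated / n
        k -= lr * grad
    return k


k = train_gain()
print(k)  # settles near max(a) + alpha / 2 = 1.45, the smallest gain stable for every sample
```

Because the penalty is evaluated on perturbed parameters, the learned gain is driven by the worst-case sample rather than the nominal one. This is the intent behind including perturbed system parameters in each training batch.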

Extensions to switched systems employ multiple input-to-state stable (ISS) Lyapunov functions that establish probabilistic stability bounds for randomly switching dynamics [46]. The theoretical framework further connects finite-time Lyapunov analysis with neural approximation properties, enabling computationally tractable verification through convex relaxation techniques [50]. The main technical elements of switched-system extensions with ISS Lyapunov functions are as follows.

- Architecture design:
  - Modular neural networks for subsystem-specific Lyapunov candidates;
  - Switching logic encoded via probabilistic finite-state automata;
  - Shared feature extractors with subsystem-conditioned output layers.
- Training strategy:
  - Alternating updates between Lyapunov networks and switching policies;
  - Adversarial switching sequence injection to stress-test stability;
  - Batch-wise subsystem sampling proportional to transition probabilities.
- Stability enforcement:
  - Probabilistic dwell-time constraints in the loss function;
  - Input-to-state stability margins via disturbance scaling layers;
  - Online monitoring of mode transition energy bounds.
- Verification framework:
  - Stochastic stability proofs using martingale concentration bounds;
  - Mode transition graph analysis with probabilistic model checking;
  - Co-simulation with randomized switching generators.
- Implementation considerations:
  - Lightweight mode transition buffers for edge deployment;
  - Quantized subsystem Lyapunov network ensemble storage;
  - Real-time switching logic compiler for deterministic execution.

The implementation employs probabilistic guarantees through Monte Carlo certification of switching sequences while maintaining compatibility with existing switched system toolchains. Training batches explicitly include rare transition events to prevent stability margin erosion.
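Monte Carlo certification of switching sequences can be sketched as follows, assuming two hypothetical stable linear modes and a memoryless random switching rule. Both modes satisfy A + Aᵀ ≺ 0, so V(x) = |x|² is a common Lyapunov function and the analytic answer is known: every switching sequence is stable. The simulation should therefore report no failures, which makes it a useful sanity check for the sampling pipeline itself.

```python
import math
import random

# Two hypothetical stable modes; both satisfy A + A^T < 0, so V(x) = |x|^2 is a
# common Lyapunov function and any switching sequence is stable.
A_MODES = (((-1.0, 2.0), (-2.0, -1.0)),
           ((-1.0, -1.0), (1.0, -2.0)))


def euler_step(A, x, dt):
    """One forward-Euler step of x' = A x for a 2x2 matrix stored as nested tuples."""
    return (x[0] + dt * (A[0][0] * x[0] + A[0][1] * x[1]),
            x[1] + dt * (A[1][0] * x[0] + A[1][1] * x[1]))


def monte_carlo_certify(n_runs=500, t_end=5.0, dt=0.01, switch_prob=0.2,
                        tol=0.05, seed=1):
    """Fraction of random initial states and random switching sequences whose
    state norm falls below `tol` by t_end (statistical evidence, not a proof)."""
    rng = random.Random(seed)
    n_steps = int(round(t_end / dt))
    successes = 0
    for _ in range(n_runs):
        angle = rng.uniform(0.0, 2.0 * math.pi)
        x = (math.cos(angle), math.sin(angle))       # start on the unit circle
        mode = rng.randrange(len(A_MODES))
        for _ in range(n_steps):
            if rng.random() < switch_prob:           # random mode transition
                mode = rng.randrange(len(A_MODES))
            x = euler_step(A_MODES[mode], x, dt)
        if math.hypot(x[0], x[1]) < tol:
            successes += 1
    return successes / n_runs


print(monte_carlo_certify())
```

A run with zero failures in n trials supports, at roughly 95% confidence, a failure probability below 3/n (the "rule of three"). Rare transitions therefore have to be over-sampled deliberately if they are to be covered.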

Time-delay compensation mechanisms address stability degradation in recurrent learning processes. Quaternion-valued neural architectures with leakage adaptation modules demonstrate efficacy in maintaining global asymptotic stability under delayed state propagation, as validated through spectral analysis of characteristic equations [39]. Feedback-regulated learning protocols prevent Zeno behaviors by enforcing minimum update intervals through event-triggered synaptic modulation [41], while preserving solution map continuity essential for gradient-based optimization [51].

Bayesian meta-learning frameworks integrate stochastic barrier functions with neural Lyapunov certificates, enabling probabilistic safety guarantees through constrained policy optimization. The Keep-Close methodology exemplifies this paradigm through bounded reference tracking enforced by chance constraints, validated in automotive control systems with unmodeled actuator dynamics [1,2]. Multi-operator spectral analysis complements these approaches by deriving eigenvalue bounds for strongly connected networks, establishing sufficient conditions for asymptotic stability through Perron–Frobenius operator theory [47,52]. The main technical elements of a Bayesian meta-learning framework with probabilistic safety guarantees are as follows.

- Architecture design:
  - Dual-network structure: probabilistic certificate network + policy network;
  - Certificate network combines Bayesian neural layers with barrier/Lyapunov heads;
  - Meta-learning module for actuator dynamics adaptation via context embeddings.
- Training strategy:
  - Two-level optimization: inner loop for task performance, outer loop for safety;
  - Adversarial perturbation of reference trajectories during meta-training;
  - Curriculum learning from nominal to worst-case disturbance scenarios.
- Safety implementation:
  - Chance constraints enforced through probabilistic barrier layers;
  - Online uncertainty quantification via Monte Carlo dropout sampling;
  - Actuator dynamics adaptation using memory-augmented meta-parameters.
- Verification methodology:
  - Statistical validation with importance-sampled test scenarios;
  - Falsification testing using gradient-based adversarial references;
  - Hardware-in-the-loop residual learning for unmodeled dynamics.
- Implementation considerations:
  - Real-time Bayesian inference via pre-computed uncertainty tables;
  - Memory-aligned tensor operations for deterministic execution timing;
  - ROS 2 middleware integration for automotive validation platforms;
  - Selective parameter freezing during safety-critical operations.

The framework maintains compatibility with probabilistic programming tools (Pyro/TensorFlow Probability) while implementing safety-critical operations in memory-safe C++ modules. Training pipelines incorporate domain-randomized actuator models and hardware signature emulation for robustness.
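A chance constraint enforced through a probabilistic barrier has a closed form when the model uncertainty is Gaussian. The sketch below is a simplified stand-in for the framework above and rests on several assumptions. A Gaussian posterior over a single uncertain parameter θ replaces the Bayesian network (for example, moments estimated from Monte Carlo dropout). The model is one-step and scalar. The names and values are hypothetical. Because the barrier h = x_max − x_next is then Gaussian, P(h ≥ 0) ≥ 1 − δ reduces to a deterministic margin of z·σ_h, where z is the (1 − δ) quantile of the standard normal.

```python
import random
from statistics import NormalDist


def chance_constrained_input(x, u_nom, theta_mean, theta_std,
                             x_max=1.0, dt=0.1, delta=0.05):
    """Largest input u <= u_nom such that P(x_next <= x_max) >= 1 - delta.

    One-step model: x_next = x + dt * (theta * x + u) with theta ~ N(theta_mean, theta_std^2).
    The barrier h = x_max - x_next is then Gaussian with
        mean  mu_h    = x_max - x - dt * (theta_mean * x + u)
        std   sigma_h = dt * |x| * theta_std,
    and P(h >= 0) >= 1 - delta  <=>  mu_h - z * sigma_h >= 0 with z = Phi^-1(1 - delta).
    """
    z = NormalDist().inv_cdf(1.0 - delta)
    u_bound = (x_max - x - z * dt * abs(x) * theta_std) / dt - theta_mean * x
    return min(u_nom, u_bound)


def empirical_violation_rate(x, u, theta_mean, theta_std, x_max=1.0, dt=0.1,
                             n=20000, seed=0):
    """Monte Carlo estimate of P(x_next > x_max) under the same Gaussian model."""
    rng = random.Random(seed)
    hits = sum(1 for _ in range(n)
               if x + dt * (rng.gauss(theta_mean, theta_std) * x + u) > x_max)
    return hits / n


u = chance_constrained_input(x=0.9, u_nom=5.0, theta_mean=0.5, theta_std=0.3)
print(u, empirical_violation_rate(0.9, u, 0.5, 0.3))   # violation rate close to delta = 0.05
```

Near the constraint boundary the filter cuts the nominal input sharply. Far from it, the bound is inactive and the nominal input passes through unchanged.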

Emerging research directions focus on hybrid certification frameworks that unify dissipativity theory with neural contraction metrics. These methodologies demonstrate particular promise in soft robotics applications requiring simultaneous compliance and precision guarantees, where Kuhn–Tucker-constrained learning ensures stability while maintaining physical interaction safety margins [4,28].

4.4. Stability in Real-World Applications

The transition of neural network controllers from theoretical frameworks to real-world implementations necessitates addressing stability challenges inherent in dynamic physical systems. Visual navigation systems exemplify this requirement through hierarchical decision architectures that integrate spatial attention mechanisms with stability-preserving constraints, enabling reliable obstacle avoidance in unstructured environments [53]. Such architectures demonstrate how advanced neural topologies can mitigate stability degradation caused by sensor noise and occlusion.

High-precision assembly tasks present distinct stability requirements, where reinforcement learning with online stability filtering (RL-OSFC) maintains micron-level tracking accuracy while compensating for variable tool–workpiece interaction forces [54]. The methodology combines policy gradient methods with Lyapunov-based stability margins, effectively balancing learning efficiency with dynamic stability guarantees during contact-rich operations.

Reachability analysis has emerged as a critical tool for stability assurance in safety-critical implementations. Two-level lattice neural networks (TLL NNs) coupled with the L-TLLBox verification framework enable real-time computation of stability regions for robotic manipulators, achieving certification speeds compatible with 1 kHz control cycles in industrial automation scenarios [55]. This approach addresses the fundamental challenge of maintaining stability while satisfying hard real-time computational constraints.

Mixed-autonomy systems introduce unique stability-preservation challenges, particularly in vehicular platoon control. Parameter isolation protocols maintain longitudinal stability while protecting confidential vehicle dynamics through kernel-space orthogonalization techniques [56]. The methodology prevents stability degradation caused by inter-agent parameter leakage while preserving formation control performance under communication delays.

Human–robot interaction systems demand dual stability guarantees for both robotic dynamics and human safety. Admittance control frameworks with neural barrier certificates enforce predefined force thresholds in physical interaction tasks, achieving compliant manipulation while maintaining asymptotic stability through constrained policy optimization [57]. Experimental validations demonstrate sub-Newton tracking precision during collaborative assembly operations.

These implementations collectively highlight the necessity of co-designing stability mechanisms with application-specific constraints. The integration of contraction metrics with stochastic barrier functions in aerospace control systems [1], and the application of neural Lyapunov–Krasovskii functionals in power grid frequency regulation [2], further validate the universal applicability of stability-preserving neural control paradigms across engineering domains.

5. Robustness of Neural Network Controllers

This section systematically addresses robustness challenges in neural network controllers through fundamental tradeoff analysis of safety-performance conflicts, methodological innovations in high-dimensional verification and delay compensation, and formal certification frameworks that integrate reachability analysis with stochastic safety guarantees. Key advancements encompass neural contraction metrics ensuring stability under perturbations, quaternion-valued architectures mitigating time-varying delays, and risk-aware control synthesis achieved via stochastic barrier functions. Practical implementations showcase robust operation in automotive collision avoidance, surgical robotics, and power grid regulation, supported by tools such as NNLander-VeriF for vision-based systems and Safe-by-Repair for temporal logic-compliant controllers. The discussion synthesizes theoretical rigor with deployable solutions, emphasizing hybrid verification strategies that combine dissipativity theory with adversarial training, while outlining future directions for ISO 26262-aligned neural control systems in safety-critical domains.

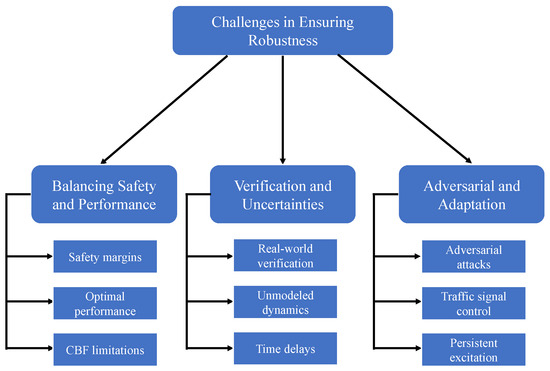

5.1. Fundamental Challenges in Neural Controller Robustness

As illustrated in Figure 5, the realization of robust neural network controllers confronts three interconnected theoretical and practical barriers rooted in system nonlinearities and environmental uncertainties. First, the inherent tradeoff between safety assurance and control performance optimization persists across application domains. Conservative safety margins enforced through barrier functions often degrade trajectory tracking precision, while aggressive optimization strategies risk violating critical-state constraints [32]. This dichotomy manifests acutely in safety-critical systems like autonomous vehicles, where control barrier filters may induce suboptimal path-following behaviors due to cumulative actuation impacts [23].

Figure 5. The key challenges in ensuring robustness in neural network controllers.

Second, verification complexities arise from the mathematical intractability of continuous state spaces. The absence of precise analytical specifications for neural network behaviors impedes formal stability analysis, particularly in systems with time-delayed state propagation and partial observability [33,58]. Urban traffic signal control implementations exemplify this challenge, where adaptive strategies struggle to maintain stability margins under rapidly changing traffic patterns [20].

Third, adversarial vulnerability and excitation requirements impose fundamental limitations. Persistent excitation conditions necessary for parameter convergence conflict with operational constraints in physical systems, while gradient-based attacks exploit large local Lipschitz constants in neural approximators [13,14]. The persistent excitation requirement in particular limits the applicability of existing methodologies in real-time control scenarios [49].

These challenges collectively underscore the need for co-design frameworks that reconcile four critical aspects: safety–performance tradeoff quantification through Pareto-frontier analysis, hybrid verification combining reachability tools with statistical validation, adversarial resilience via Lipschitz-constrained architectures, and excitation-aware adaptive control with stability-preserving exploration. Recent advancements in stochastic barrier functions with chance constraints and neural Lyapunov–Krasovskii functionals demonstrate potential solutions, as evidenced in power grid frequency regulation and robotic manipulation applications [2,4].

5.2. Strategies for Enhancing Robustness

The pursuit of robust neural network controllers necessitates a systematic integration of four complementary technical strategies, each addressing distinct aspects of system nonlinearities and environmental uncertainties.

High-dimensional system verification adopts neural contraction metrics to establish stability certificates without explicit regularization penalties [37]. Reachability analysis tools combined with temporal logic constraints enable scalable verification in partially observable environments and are particularly effective in aerospace trajectory control applications. The main technical elements of high-dimensional system verification using neural contraction metrics and reachability analysis are as follows.

- Neural contraction architecture:
  - Graph neural networks with state-dependent edge weight conditioning;
  - Metric learning through contrastive embedding layers;
  - Input-convex neural networks to enforce contraction properties;
  - Skip connections with spectral normalization for gradient stability.
- Training methodology:
  - Multi-phase curriculum: nominal dynamics → perturbed systems → partial observability;
  - Adversarial disturbance generation through sensitivity analysis;
  - Implicit regularization via trajectory prediction consistency loss.
- Reachability analysis integration:
  - Hybrid zonotope propagation with neural dependency abstraction;
  - Temporal logic constraints encoded as reach tube modifications;
  - Online refinement of reachable sets using execution traces.
- Verification toolchain:
  - Compositional proof construction with neural certificates;
  - Backward reachability analysis for target-driven verification;
  - ROS 2/Simulink co-simulation for aerospace trajectory validation.
- Implementation optimization:
  - Memory-efficient Jacobian computation via implicit differentiation;
  - Distributed reachability analysis using spatial decomposition;
  - FPGA-accelerated contraction metric evaluation kernels;
  - Time-triggered verification updates in control cycles.

The framework maintains compatibility with aerospace modeling standards (FMI/SSP) while employing neural-specific optimizations like activation bounding for certification tractability. All components support hardware-in-the-loop validation through deterministic execution time guarantees.
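The set propagation underlying zonotope-based reachability can be sketched for a linear closed loop with bounded disturbances. The sketch uses plain zonotopes: hybrid zonotopes add binary factors, and the neural dependency abstraction is not modeled. The matrices are hypothetical. A zonotope maps exactly under a linear map, and a box disturbance is added by appending generators (a Minkowski sum), so the only over-approximation below is the final box hull. Production tools also apply generator-order reduction, because the generator count grows at every step.

```python
import numpy as np


def propagate_zonotope(A, B, center, generators, steps):
    """Box hulls of the reachable sets of x+ = A x + B w, ||w||_inf <= 1.

    A zonotope {c + G beta : ||beta||_inf <= 1} maps exactly under a linear map
    (c -> A c, G -> A G), and adding the disturbance set B [-1, 1]^m appends B's
    columns as new generators (a Minkowski sum).
    """
    c = np.asarray(center, dtype=float)
    G = np.asarray(generators, dtype=float)
    boxes = []
    for _ in range(steps):
        c = A @ c
        G = np.hstack([A @ G, B])
        radius = np.abs(G).sum(axis=1)      # interval hull of the zonotope
        boxes.append((c - radius, c + radius))
    return boxes


A = np.array([[0.9, 0.2], [-0.1, 0.8]])     # hypothetical closed-loop matrix
B = 0.05 * np.eye(2)                         # hypothetical disturbance gain
boxes = propagate_zonotope(A, B, center=[1.0, 0.0], generators=0.1 * np.eye(2), steps=10)
print(boxes[-1])
```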

Time-varying delay compensation frameworks implement quaternion-valued neural architectures with leakage adaptation mechanisms, validated in networked control systems exhibiting multimodal communication latencies [39]. The main technical elements of these frameworks are as follows.

- Network architecture:
  - Quaternion-valued layers process 4D signal representations (real + 3 imaginary components);
  - Leakage adaptation via time-dependent weight modulation branches;
  - Multi-rate structure: fast pathway for delay estimation, slow pathway for state prediction;
  - Rotational equivariance enforced through quaternion kernel constraints.
- Delay compensation strategy:
  - Online latency mode classification via attention-based temporal filtering;
  - Memory-augmented networks with adaptive buffer sizing;
  - Hybrid clock synchronization using neural timing error predictors.
- Training methodology:
  - Synthetic delay injection with multimodal latency distributions;
  - Adversarial training using worst-case delay pattern generators;
  - Temporal consistency loss across quaternion state trajectories;
  - Meta-learning for rapid adaptation to new network topologies.
- Implementation considerations:
  - ROS 2/DDS middleware integration for networked deployment;
  - Fixed-point quaternion arithmetic optimizations;
  - Memory-aligned circular buffers for delay compensation;
  - FPGA acceleration of quaternion convolution operations.
- Verification framework:
  - Hardware-in-the-loop testing with programmable latency injectors;
  - Statistical validation across delay jitter distributions;
  - Cross-layer monitoring of network QoS and control stability;
  - Falsification testing using adversarial channel conditions.

The architecture maintains compatibility with standard networked control protocols while implementing quaternion-specific optimizations like directional filtering in 3D state spaces. Training pipelines incorporate domain-randomized communication models to handle both wired and wireless network scenarios. These methodologies leverage spectral analysis of characteristic equations to preserve stability margins under bounded delay variations.
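The role of the characteristic equation can be seen in the simplest delayed feedback loop. The sketch assumes a scalar, real-valued system ẋ(t) = −k·x(t − τ) and does not model the quaternion architecture. Its characteristic equation s + k·e^(−sτ) = 0 has all roots in the open left half-plane if and only if 0 < kτ < π/2, so the same gain can be stable or unstable depending on the delay. The simulation uses a circular buffer for the delayed state, echoing the buffer-based compensation listed above.

```python
from collections import deque


def tail_peak_delayed(k, tau, t_end=30.0, dt=1e-3):
    """Forward-Euler simulation of x'(t) = -k * x(t - tau), history x = 1 on [-tau, 0].

    Returns max |x| over the last 20% of the horizon. The characteristic
    equation s + k * exp(-s * tau) = 0 has all roots in the open left half-plane
    iff 0 < k * tau < pi / 2, so the peak should shrink below that margin and
    grow above it.
    """
    lag = int(round(tau / dt))
    history = deque([1.0] * (lag + 1), maxlen=lag + 1)   # history[0] = x(t - tau)
    x = 1.0
    n = int(round(t_end / dt))
    tail_start = int(0.8 * n)
    peak = 0.0
    for i in range(n):
        x = x + dt * (-k * history[0])
        history.append(x)            # deque drops the oldest sample automatically
        if i >= tail_start:
            peak = max(peak, abs(x))
    return peak


print(tail_peak_delayed(k=1.0, tau=1.0))   # k*tau = 1.0 < pi/2: oscillation decays
print(tail_peak_delayed(k=2.0, tau=1.0))   # k*tau = 2.0 > pi/2: oscillation grows
```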

Sparse synaptic connectivity patterns reduce computational overhead through topological constraints derived from graph-theoretic principles. Event-triggered learning protocols reduce the number of processing cycles while maintaining control precision in robotic manipulation tasks [41]. Concurrent learning architectures further relax persistent excitation requirements by reusing recorded data from experience replay buffers [49]. The main technical elements of sparse connectivity, event-triggered learning, and concurrent learning frameworks are as follows.

- Sparse connectivity implementation:
  - Topology-aware neural architectures:
    - Graph neural networks with locality-preserving connectivity;
    - Dynamic sparse masks based on activation gradients;
    - Small-world connectivity patterns for efficient information flow.
  - Training protocols:
    - Iterative magnitude-based pruning with rewiring;
    - Topological regularization using graph Laplacian constraints;
    - Reinforcement learning for connectivity pattern optimization.
  - Hardware integration:
    - Sparse tensor core utilization on GPUs/TPUs;
    - Memory-efficient adjacency list storage formats;
    - Event-driven computation triggering.
- Event-triggered learning design:
  - Trigger mechanisms:
    - Error-bound adaptive triggering thresholds;
    - State-dependent temporal attention gates;
    - Energy-aware activation criteria.
  - Network architecture:
    - Lightweight delta-update prediction modules;
    - Sliding window temporal feature buffers;
    - Hybrid analog–digital activation layers.
  - Deployment optimizations:
    - Fixed-point arithmetic with dynamic precision scaling;
    - Hardware-aligned sparse update scheduling;
    - ROS 2 integration for robotic middleware compatibility.
- Concurrent learning framework:
  - Experience buffer architecture:
    - Dual-buffer system for real-time/offline processing;
    - Graph-based experience clustering;
    - Priority sampling with temporal coherence analysis.
  - Training strategy:
    - Cross-modal experience replay scheduling;
    - Forgetting-resistant memory consolidation;
    - Counterfactual data augmentation pipelines.
  - System integration:
    - Distributed memory management across edge devices;
    - Real-time priority queue implementations;
    - Safety-critical experience filtering layers.

Implementation maintains compatibility with robotic middleware (ROS/OPC UA) while incorporating neuromorphic computing principles for sparse event processing. Validation pipelines include hardware-in-the-loop testing with cycle-accurate performance profiling.
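The iterative magnitude-based pruning step listed above can be sketched in a few lines. This is a generic version operating on a hypothetical dense weight matrix. Rewiring and the fine-tuning that would normally run between rounds are omitted. Sparsity is raised in equal steps, and weights pruned in earlier rounds stay pruned because they are zeroed before the next magnitude ranking.

```python
import numpy as np


def magnitude_mask(weights, sparsity):
    """Boolean mask keeping all but the smallest-magnitude `sparsity` fraction of entries."""
    w = np.abs(np.asarray(weights, dtype=float)).ravel()
    n_prune = int(round(sparsity * w.size))
    mask = np.ones(w.size, dtype=bool)
    mask[np.argsort(w, kind="stable")[:n_prune]] = False
    return mask.reshape(np.shape(weights))


def iterative_prune(weights, final_sparsity, rounds):
    """Raise sparsity in equal steps; previously pruned weights stay pruned because
    they are zeroed before the next ranking. (A real pipeline would fine-tune the
    surviving weights between rounds; that step is omitted here.)"""
    mask = np.ones(np.shape(weights), dtype=bool)
    for r in range(1, rounds + 1):
        target = final_sparsity * r / rounds
        mask = magnitude_mask(np.where(mask, weights, 0.0), target)
    return mask


rng = np.random.default_rng(0)
W = rng.normal(size=(20, 30))                # hypothetical dense layer
mask = iterative_prune(W, final_sparsity=0.75, rounds=3)
W_sparse = np.where(mask, W, 0.0)
print(mask.mean())                           # 0.25: fraction of connections kept
```

The resulting boolean mask is also the natural input for the sparse storage formats mentioned above, since only the surviving indices need to be stored.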

Risk-aware control synthesis integrates stochastic barrier functions with neural Lyapunov certificates, enforcing probabilistic safety constraints through constrained policy optimization. The NN-gauge controller framework exemplifies this approach by embedding long-term cumulative effects into safety filters, achieving real-time certification in automotive collision avoidance systems [23,33]. The main technical elements of risk-aware control synthesis with neural safety certificates are as follows.

- Architecture design:
  - Dual-branch neural network:
    - Risk estimation branch: temporal graph networks for long-term cumulative risk prediction;
    - Control generation branch: physics-informed policy networks with embedded dynamics.
  - Stochastic barrier layers with uncertainty-quantified activation thresholds;
  - Safety filter module using neural Lyapunov certificates as differentiable constraints;
  - ROS 2-compatible middleware interface for automotive integration.
- Training strategy:
  - Constrained reinforcement learning with adaptive safety budgets;
  - Multi-resolution curriculum:
    - Coarse-grained scenario batches for global safety;
    - Fine-grained edge cases for local constraint refinement.
  - Synthetic data augmentation with parametric uncertainty injection;
  - Parallelized safety violation detection during policy rollouts.
- Safety implementation:
  - Real-time certifier:
    - Quantized neural certificate verification at rates of 100 Hz or higher;
    - Adaptive horizon tuning based on velocity profiles.
  - Fail-operational design:
    - Graceful degradation via safety policy cascades;
    - Dynamic computation offloading for deadline guarantees.
- Verification protocol:
  - Statistical model checking with scenario coverage metrics;
  - Falsification testing using adversarial trajectory generators;
  - Hardware-in-the-loop validation with fault-injected CAN signals;
  - Cross-sensor consistency monitoring for perception errors.
- Deployment considerations:
  - Memory-optimized safety filter caching strategies;
  - GPU-accelerated neural certificate evaluation kernels;
  - Automotive-grade time synchronization (PTP/IEEE 1588);
  - ASIL-D-compliant component isolation architecture.

The framework implements ISO 26262-compliant fallback mechanisms through hybrid analog–digital safety supervisors. Training pipelines incorporate domain-randomized vehicle dynamics models and sensor degradation profiles. Real-time performance is achieved via layer-wise mixed-precision quantization compatible with automotive SoCs.
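"Constrained reinforcement learning with adaptive safety budgets" is commonly implemented with a Lagrangian primal-dual scheme. The toy sketch below illustrates that scheme under stated assumptions; it does not claim that the NN-gauge framework uses it. A single policy parameter θ trades reward R(θ) = θ against an expected safety cost C(θ) = θ² with budget d. The multiplier λ rises while the budget is exceeded and falls (never below zero) when it is not. The KKT conditions give θ* = √d and λ* = 1/(2θ*), so d = 0.25 should yield θ* = 0.5 and λ* = 1.

```python
def primal_dual(budget=0.25, lr_theta=0.01, lr_lambda=0.01, steps=20000):
    """Gradient ascent-descent on L(theta, lam) = R(theta) - lam * (C(theta) - budget).

    Toy stand-ins: reward R(theta) = theta, expected safety cost C(theta) = theta**2.
    """
    theta, lam = 0.0, 0.0
    for _ in range(steps):
        grad_theta = 1.0 - 2.0 * lam * theta                          # dL/dtheta
        theta += lr_theta * grad_theta                                 # primal (policy) step
        lam = max(0.0, lam + lr_lambda * (theta ** 2 - budget))        # dual step on violation
    return theta, lam


theta, lam = primal_dual()
print(theta, lam)   # approaches theta* = 0.5, lambda* = 1.0
```

The converged multiplier acts as the "price" of safety. Tightening the budget raises λ and pushes the policy toward more conservative behavior without hand-tuning a fixed penalty weight.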

Recent advancements demonstrate the synergistic potential of hybrid verification frameworks combining neural contraction theory with adversarial training protocols. Such co-design methodologies have been validated in power grid frequency regulation, where stochastic barrier functions maintain stability under renewable energy fluctuations while neural Lyapunov–Krasovskii functionals compensate for time-delayed state measurements [2,4].

5.3. Formal Verification and Safety Guarantees

The implementation of formal verification methods is critical for ensuring safety in neural network controllers that operate under unpredictable conditions. The field has advanced along several lines, each addressing specific verification problems.

The NNLander-VeriF framework helps secure vision-based autonomous landing systems by combining reachability analysis (which checks possible system states) with temporal logic constraints (which define safe time-based behaviors) [25]. Meanwhile, the Safe-by-Repair approach makes targeted fixes to neural networks that use temporal logic layers. It removes unsafe responses while ensuring the controller stays within certified safe operating limits [59].

Reachability analysis has advanced in two key ways. First, finite-state abstraction creates simplified models to identify safe starting states using mathematical optimization [58]. Second, one-shot reachability frameworks avoid errors from building up by “unrolling” neural networks to analyze their behavior step by step [42]. Together, these methods make it easier to handle the complexity of high-dimensional systems.
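The source of the over-approximation errors mentioned above is visible in the simplest reachability scheme, interval bound propagation (IBP) through a ReLU controller. The sketch is a generic one-step IBP for a hypothetical controller and plant. It is not NNLander-VeriF or the one-shot framework of [42]. Each affine layer maps a box to its tightest enclosing box, and ReLU is monotone, so clipping the bounds is exact. The loss appears when the boxes for Ax and Bu are added as if x and u were independent.

```python
import numpy as np


def interval_affine(lo, hi, W, b):
    """Tightest axis-aligned box containing {W x + b : lo <= x <= hi}."""
    center, radius = (lo + hi) / 2.0, (hi - lo) / 2.0
    c_out, r_out = W @ center + b, np.abs(W) @ radius
    return c_out - r_out, c_out + r_out


def closed_loop_box(lo, hi, A, B, layers):
    """One-step box over-approximation of x+ = A x + B pi(x) for x in [lo, hi].

    pi is a ReLU network given as [(W1, b1), ..., (Wm, bm)] with a linear output layer.
    """
    u_lo, u_hi = lo, hi
    for i, (W, b) in enumerate(layers):
        u_lo, u_hi = interval_affine(u_lo, u_hi, W, b)
        if i < len(layers) - 1:                          # hidden layers only
            u_lo, u_hi = np.maximum(u_lo, 0.0), np.maximum(u_hi, 0.0)  # ReLU is monotone
    ax_lo, ax_hi = interval_affine(lo, hi, A, np.zeros(A.shape[0]))
    bu_lo, bu_hi = interval_affine(u_lo, u_hi, B, np.zeros(B.shape[0]))
    # Adding the two boxes forgets that u depends on x; this "wrapping" loss is the
    # over-approximation that unrolling / one-shot methods aim to reduce.
    return ax_lo + bu_lo, ax_hi + bu_hi


rng = np.random.default_rng(0)
layers = [(rng.normal(size=(8, 2)), rng.normal(size=8)),    # hypothetical controller weights
          (rng.normal(size=(1, 8)), rng.normal(size=1))]
A = np.array([[1.0, 0.1], [0.0, 1.0]])                      # double integrator, dt = 0.1
B = np.array([[0.0], [0.1]])
lo, hi = np.array([-0.5, -0.5]), np.array([0.5, 0.5])
next_lo, next_hi = closed_loop_box(lo, hi, A, B, layers)
print(next_lo, next_hi)
```

Iterating this step feeds each box back in as the next initial set, so the wrapping error compounds over the horizon. Reasoning about the unrolled multi-step map avoids that accumulation.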

Combining dissipativity theory with integral quadratic constraints (IQCs) introduces a new way to verify stability. The closed loop is recast as a classic Lur’e-type system, in which a linear plant is in feedback with the network’s bounded activation nonlinearities. In this form, one can verify that an energy-like storage function is dissipated, and hence that the loop remains stable, even under nonlinear uncertainties [44]. This connects classical stability theory with modern neural network verification.
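In the discrete-time Lur’e form, the network's linear layers are absorbed into the plant matrices $A$, $B$, $C$. The activation is isolated as a nonlinearity $\phi$, and for ReLU and tanh $\phi$ lies in the sector $[0, 1]$. Below is a minimal version of the resulting S-procedure condition for a single scalar nonlinearity. It is a simplification for illustration, not the full IQC analysis of [44].

$$
x_{k+1} = A x_k + B w_k, \qquad v_k = C x_k, \qquad w_k = \phi(v_k), \qquad w_k\,(v_k - w_k) \ge 0 .
$$

If there exist $P \succ 0$ and $\lambda \ge 0$ such that

$$
\begin{bmatrix} A^\top P A - P & A^\top P B \\ B^\top P A & B^\top P B \end{bmatrix}
+ \lambda \begin{bmatrix} 0 & \tfrac{1}{2} C^\top \\ \tfrac{1}{2} C & -1 \end{bmatrix} \prec 0,
$$

then $V(x) = x^\top P x$ satisfies $V(x_{k+1}) - V(x_k) < -\lambda\, w_k (v_k - w_k) \le 0$ for all nonzero $(x_k, w_k)$. The origin is therefore globally asymptotically stable for every nonlinearity in the sector.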

In reinforcement learning, stochastic barrier functions act as safety guards in decision-making processes. This is especially useful in minimally invasive surgery, where neural models must maintain millimeter precision even as anatomical structures deform unpredictably [2].
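The guarantee a stochastic barrier function provides is a supermartingale-type bound on the probability of reaching an unsafe set $X_u$. One common discrete-time form is stated below as background. Here $B$, $\gamma$, $c$, and $T$ are generic symbols, not quantities from [2]. Suppose $B(x) \ge 0$ everywhere, $B(x) \ge 1$ on $X_u$, and $B(x_0) \le \gamma$. Suppose also that on the safe set

$$
\mathbb{E}\left[\,B(x_{k+1}) \mid x_k\,\right] \le B(x_k) + c .
$$

Then

$$
\Pr\left(\exists\, k \in \{0, \dots, T\} : x_k \in X_u\right) \le \gamma + cT .
$$

A learned policy is certified over horizon $T$ by driving $\gamma$ and $c$ small enough that $\gamma + cT$ meets the required risk level.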

Recent work focuses on combined frameworks that link mathematical modeling (for sensor inputs) with risk verification (for closed-loop dynamics). For example, these hybrid methods help validate LiDAR-based car navigation systems by ensuring that both sensor data processing and real-time control stay safe [33,40]. As these methods improve, neural controllers will become viable in more safety-critical fields, including those governed by strict certification standards.

6. Applications and Case Studies

This section validates the effectiveness of neural network controllers through representative case studies in robotic manipulation, aerospace guidance, and quadrotor control, showcasing their superior nonlinear compensation and adaptive capabilities compared to conventional methods. Hybrid architectures that integrate neural networks with stability certificates (e.g., Lyapunov functions, contraction metrics) achieve certified performance in safety-critical tasks such as lunar landing and dynamic obstacle avoidance. Comparative analyses highlight the advantages of neural controllers in handling unmodeled dynamics and multimodal sensing while underscoring challenges related to certification complexity and computational overhead. Emerging methodologies, including differentiable physics-integrated learning and stability-constrained MPC hybrids, demonstrate transformative potential in contact-rich manipulation and extraterrestrial navigation, outperforming classical approaches in precision-critical scenarios. The discussion synthesizes theoretical innovation with industrial viability through experimental benchmarks in automotive control, surgical robotics, and aerial systems, while delineating frontiers in ISO 26262-compliant neural control frameworks for mission-critical deployments.

6.1. Validation Through Representative Case Studies

Neural network controllers demonstrate exceptional capability in governing complex dynamical systems, as evidenced by canonical implementations spanning industrial and aerospace domains. The single-link robotic arm control paradigm exemplifies neural architectures’ proficiency in nonlinear compensation, where adaptive inertia matrix estimation enables sub-millimeter trajectory tracking precision under variable payload conditions. This implementation surpasses conventional model-based controllers in suppressing nonlinear friction effects, validating neural networks’ intrinsic adaptability to parameter-varying dynamics. There follows a comparative analysis of neural network controllers against state-of-the-art methods for dynamical system control.

- Advantages over conventional methods:
  - Nonlinear compensation:
    - Neural: Learns friction/stiction directly from data without phenomenological modeling;
    - MPC/Adaptive: Requires explicit friction models and struggles with velocity-dependent nonlinearities.
  - Parameter adaptation:
    - Neural: Online inertia matrix estimation via embedded physical priors;
    - MRAC/adaptive: Limited to predefined parametric variations; sensitive to unmodeled dynamics.
  - Computational scaling:
    - Neural: Parallelizable inference suitable for multi-DoF systems;
    - Sliding mode: Chattering mitigation requires exponential computation growth with DoF.
- Limitations vs. modern alternatives:
  - Safety certification:
    - Neural: Requires post hoc verification (e.g., neural Lyapunov functions);
    - CBF/RCBF: Built-in safety guarantees through barrier function constraints.
  - Data efficiency:
    - Neural: Demands diverse training data across payload configurations;
    - ILC: Achieves precision through iterative cycles without full parametric exploration.
  - Edge deployment:
    - Neural: memory-intensive, vs. event-triggered PID: ultra-lightweight but limited adaptability;
    - Neural: requires GPU acceleration for sub-ms latency, vs. FPGA-PSO: deterministic but specialized.
- Hybridization potential:
  - Neural + robust control: NN for nominal dynamics with disturbance observer;
  - Neural + ILC: NN compensates nonlinearities while ILC refines periodic trajectories;
  - Neural + CBF: Combines adaptability with formal safety guarantees.
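The "Neural + CBF" hybrid is commonly realized as a safety filter that minimally modifies the neural command. Consider a control-affine system with a single barrier constraint. The filter's quadratic program minimizes $\|u - u_{nn}\|^2$ subject to $L_f h + L_g h\,u + \alpha h \ge 0$, and it has a closed-form solution: a projection onto a half-space. The sketch below rests on these assumptions:

- the plant is a hypothetical 1-D single integrator $\dot{x} = u$;
- the safe set is $h(x) = x_{max} - x \ge 0$;
- `nn_policy` is a hypothetical stand-in for a trained network.

```python
def cbf_filter(u_nom, a, b):
    """Closed-form solution of  min ||u - u_nom||^2  s.t.  a . u + b >= 0.

    If the nominal command already satisfies the constraint it is returned unchanged;
    otherwise it is projected onto the boundary of the half-space.
    """
    slack = sum(ai * ui for ai, ui in zip(a, u_nom)) + b
    if slack >= 0:
        return list(u_nom)
    norm_sq = sum(ai * ai for ai in a)
    return [ui - slack * ai / norm_sq for ui, ai in zip(u_nom, a)]


# Hypothetical safe bound, gain of the linear class-K function alpha(h) = ALPHA * h, Euler step.
X_MAX, ALPHA, DT = 1.0, 5.0, 0.01


def nn_policy(x):
    # Hypothetical stand-in for a trained network; it steers toward x = 1.5, outside the safe set.
    return [2.0 * (1.5 - x)]


def simulate(filtered, steps=500, x0=0.0):
    """Euler simulation of the single integrator x' = u."""
    x, traj = x0, [x0]
    for _ in range(steps):
        u = nn_policy(x)
        if filtered:
            # h(x) = X_MAX - x, so dh/dt = -u; the CBF condition is -u + ALPHA * h(x) >= 0
            u = cbf_filter(u, a=[-1.0], b=ALPHA * (X_MAX - x))
        x = x + DT * u[0]
        traj.append(x)
    return traj


print("max x without filter:", max(simulate(filtered=False)))
print("max x with filter:   ", max(simulate(filtered=True)))
```

The filter leaves the neural command untouched whenever it is already safe. With Euler integration, the discrete-time safety argument also needs `DT * ALPHA <= 1`, which holds for the values chosen here.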

This analysis highlights neural controllers’ superiority in unmodeled nonlinearity handling but underscores remaining gaps in certifiability and edge efficiency compared to specialized conventional methods. Hybrid architectures currently demonstrate the most balanced tradeoffs for safety-critical applications.
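The first advantage in the comparison, learning friction directly from data, can be illustrated with a Gaussian radial-basis-function (RBF) network trained online by normalized least-mean-squares (LMS). The "true" friction here is a hypothetical smooth Coulomb-plus-viscous curve, used only to generate samples. The learner sees input–output pairs, never the form of the model. The centers, width, step size, and function names are illustrative choices.

```python
import math
import random


def true_friction(v, fc=0.5, bv=0.2, v_s=0.05):
    # Hypothetical ground truth (smoothed Coulomb + viscous), used only to generate data.
    return fc * math.tanh(v / v_s) + bv * v


CENTERS = [-1.0 + 0.1 * i for i in range(21)]  # RBF centers spanning v in [-1, 1]
WIDTH = 0.1


def features(v):
    return [math.exp(-((v - c) ** 2) / (2 * WIDTH ** 2)) for c in CENTERS]


def predict(weights, v):
    return sum(w * p for w, p in zip(weights, features(v)))


def train_nlms(weights, samples, mu=0.5, eps=1e-6):
    """Normalized LMS: w <- w + mu * e * phi / (eps + ||phi||^2), one sample at a time."""
    for v, tau in samples:
        phi = features(v)
        err = tau - sum(w * p for w, p in zip(weights, phi))
        norm_sq = eps + sum(p * p for p in phi)
        weights = [w + mu * err * p / norm_sq for w, p in zip(weights, phi)]
    return weights


def mse(weights, grid):
    return sum((true_friction(v) - predict(weights, v)) ** 2 for v in grid) / len(grid)


rng = random.Random(0)
data = [(v, true_friction(v)) for v in (rng.uniform(-1.0, 1.0) for _ in range(20000))]
grid = [-1.0 + 0.01 * i for i in range(201)]

w0 = [0.0] * len(CENTERS)
w = train_nlms(w0, data)
print(f"grid MSE before: {mse(w0, grid):.4f}, after: {mse(w, grid):.5f}")
```

Normalizing the step by the feature energy keeps the update stable regardless of where the sample falls among the centers. In a closed-loop controller, the same update would be driven by a tracking-error signal rather than by measured friction torque.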

Building upon robotic applications, aerospace guidance systems further illustrate neural controllers’ robustness under extreme environmental uncertainties. The Apollo Lander guidance architecture integrates adaptive gain scheduling with barrier Lyapunov constraints, maintaining attitude stability during powered descent phases despite significant fuel-sloshing perturbations. Such implementations establish neural controllers’ viability in safety-critical aerospace operations requiring strict compliance with aviation certification standards [1]. There follows a comparative analysis of neural aerospace guidance systems against state-of-the-art methods.

- Advantages over conventional approaches:
  - Unmodeled dynamics handling:
    - Neural: Learns fuel-sloshing effects from flight data without explicit fluid modeling;
    - Gain scheduling: Requires pre-characterized perturbation modes; vulnerable to novel slosh configurations.
  - Real-time adaptation:
    - Neural: Continuous mass property estimation during fuel depletion;
    - Adaptive control: Limited to parametric uncertainties with known basis functions.
  - Multi-constraint satisfaction:
    - Neural: Simultaneous handling of attitude/position constraints via barrier Lyapunov embeddings;
    - MPC: Exponential computation growth with constraint dimensionality.
- Limitations vs. modern alternatives:
  - Certification complexity:
    - Neural: DO-178C compliance requires novel verification frameworks for neural components;
    - Formal methods: Model-based designs enable exhaustive proof generation.
  - Extreme condition robustness:
    - Neural: Vulnerable to sensor fault modes outside the training distribution;
    - Fault-tolerant control: Built-in hardware redundancy management through voting architectures.
  - Computational resources:
    - Neural: Demands radiation-hardened GPUs for space deployment;
    - FPGA-based: Achieves deterministic timing through hardware synthesis.
- Hybrid implementation strategies:
  - Neural + robust control: NN handles nonlinearities while μ-synthesis guarantees worst-case performance;
  - Neural + formal methods: Neural controller wrapped in provably safe reachability envelopes;
  - Neural + voting architecture: Triple modular redundancy with neural diversity injection.

This analysis reveals neural controllers’ superior adaptability to complex fluid–structure interactions but highlights certification and fault tolerance challenges compared to aerospace-qualified traditional methods. Hybrid architectures combining neural adaptability with formal verification layers show particular promise for next-generation systems.
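The barrier Lyapunov constraints mentioned for lander guidance are typically built from a function that grows without bound as a constrained error approaches its limit. A commonly used log-type form is shown below for a tracking error $z$ with bound $|z| < k_b$. It is a generic template; the specific functions used in [1] are not reproduced here.

$$
V_b(z) = \frac{1}{2}\ln\frac{k_b^2}{k_b^2 - z^2}, \qquad |z(0)| < k_b, \qquad
\dot{V}_b = \frac{z\,\dot{z}}{k_b^2 - z^2}.
$$

$V_b$ is positive definite on $|z| < k_b$ and tends to infinity as $|z| \to k_b$. Any control law that keeps $V_b(z(t))$ bounded therefore keeps $|z(t)| < k_b$ for all $t$. For example, if the closed loop enforces $\dot{z} = -\kappa z$ with $\kappa > 0$, then $\dot{V}_b = -\kappa z^2/(k_b^2 - z^2) \le 0$.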

The methodological foundations enabling these advancements focus on three interlinked elements: robust verification frameworks combining ReachNN reachability analysis with temporal logic constraints, stability certification protocols employing neural contraction metrics, and real-time adaptation mechanisms compensating for unmodeled actuator dynamics. The Keep-Close methodology exemplifies this synthesis in automotive control applications, where bounded reference tracking preserves safety margins during lane-keeping maneuvers under sensor noise conditions [3,4]. There follows a comparative analysis of the integrated methodology against state-of-the-art approaches in safety-critical control systems.

- Advantages over conventional methods:
  - Verification completeness:
    - ReachNN + temporal logic: Handles nonlinearities while maintaining formal guarantees;
    - Traditional reachability: Limited to linear/quadratic dynamics for tractability.
  - Stability certification:
    - Neural contraction metrics: Certify stability without conservative Lipschitz bounds;
    - Lyapunov-based: Requires explicit energy function construction.
  - Adaptation speed:
    - Real-time mechanisms: Compensate for actuator dynamics within control cycles;
    - MRAC/adaptive: Inherits bandwidth limitations from filter designs.
- Limitations vs. modern alternatives:
  - Computational overhead:
    - Integrated framework: Requires GPU acceleration for real-time reachability;
    - Polytopic approximations: Enable CPU execution but with added conservatism.
  - Model sensitivity:
    - Keep-Close methodology: Performance degrades with unmodeled sensor biases;
    - Invariant sets: Maintain robustness through geometric margin preservation.
  - Certification complexity:
    - Neural certificates: Require specialized toolchains for ASIL-D compliance;
    - Linear parameter-varying (LPV) methods: Enable ISO 26262 certification through convex formulations.
- Hybridization opportunities:
  - Neural + geometric control: Neural adaptation wrapped in differential flatness frameworks;
  - Reachability + MPC: Neural certificates guide warm-starting of optimization solvers;
  - Contraction metrics + robust control: Combines stability guarantees with disturbance attenuation.

This analysis demonstrates superior handling of nonlinear constraints and adaptation speed compared to traditional methods, though at increased computational costs. Modern hybrid approaches blending neural and geometric control principles show particular promise for balancing certification requirements with performance needs in automotive applications [60,61].
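Contraction analysis asks whether a metric $M$ makes $J(x)^\top M + M J(x) + 2\lambda M$ negative semidefinite everywhere, where $J$ is the closed-loop Jacobian and $\lambda$ the contraction rate. The sketch assumes a hypothetical 2-D closed-loop vector field and a constant identity metric; `jacobian` and `contraction_holds_on_grid` are illustrative names. It checks the inequality on a grid of states. Grid sampling is only a sanity check, not a certificate. Neural contraction approaches learn a state-dependent $M(x)$ and must verify the inequality over the whole domain.

```python
import math


# Hypothetical closed-loop dynamics (plant plus a fixed smooth controller):
#   x1' = -x1 + 0.5*sin(x2),   x2' = -2*x2 + 0.5*tanh(x1)
def jacobian(x1, x2):
    sech_sq = 1.0 / math.cosh(x1) ** 2
    return [[-1.0, 0.5 * math.cos(x2)],
            [0.5 * sech_sq, -2.0]]


def max_eig_sym_2x2(a, b, d):
    """Largest eigenvalue of the symmetric matrix [[a, b], [b, d]]."""
    return 0.5 * (a + d) + math.sqrt(0.25 * (a - d) ** 2 + b ** 2)


def contraction_holds_on_grid(rate, M=((1.0, 0.0), (0.0, 1.0)), n=41, box=3.0):
    """Check J^T M + M J + 2*rate*M <= 0 at n*n grid points of [-box, box]^2."""
    for i in range(n):
        for j in range(n):
            x1 = -box + 2 * box * i / (n - 1)
            x2 = -box + 2 * box * j / (n - 1)
            J = jacobian(x1, x2)
            # Q = J^T M + M J + 2*rate*M for a constant symmetric 2x2 metric M
            Q = [[sum(J[k][r] * M[k][c] + M[r][k] * J[k][c] for k in range(2)) + 2 * rate * M[r][c]
                  for c in range(2)] for r in range(2)]
            if max_eig_sym_2x2(Q[0][0], Q[0][1], Q[1][1]) > 1e-12:
                return False
    return True


print(contraction_holds_on_grid(0.5), contraction_holds_on_grid(1.0))
```

For this vector field with $M = I$, the largest eigenvalue of the symmetric part of $J$ never exceeds $-1.5 + \sqrt{0.5} \approx -0.79$. The check therefore passes for rate 0.5 and fails for rate 1.0.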

These implementations collectively underscore the transition of neural controllers from theoretical constructs to industrial-grade solutions. Emerging extensions to distributed parameter systems demonstrate additional potential in chemical reactor temperature regulation, achieving precise thermal tracking under turbulent-flow conditions. The continuous refinement of physics-informed neural operators and event-triggered verification tools promises to expand application frontiers in hypersonic vehicle control and nuclear reactor safeguarding systems, solidifying neural controllers’ position as transformative elements in modern control engineering.

6.2. Neural Network-Based Quadrotor Control Systems

Neural network-enhanced control architectures improve precision in governing quadrotor systems operating under complex aerodynamic conditions. These systems achieve guaranteed stability through formal verification frameworks combining contraction metric analysis with disturbance observer integration, effectively compensating for coupled nonlinearities and unmodeled actuator dynamics characteristic of aerial platforms. Contemporary training methodologies incorporating robust optimal control theory and safety-critical barrier functions enable systematic mitigation of tracking errors while maintaining prescribed stability margins, establishing neural controllers as viable solutions for autonomous aerial vehicle operations [1,4,26]. There follows a comparative analysis of neural-enhanced quadrotor control against state-of-the-art methods.

- Advantages over conventional approaches:
  - Aerodynamic compensation:
    - Neural: Learns complex flow-separation effects through flight data;