Abstract

In this paper, two Halpern-type inertial iteration methods with self-adaptive step size are proposed for estimating the solution of split common null point problems, in such a way that the Halpern iteration and the inertial extrapolation are computed simultaneously at the beginning of each iteration. We prove the strong convergence of the sequences generated by the suggested methods, under suitable assumptions, without estimating the norm of the bounded linear operator. We demonstrate the efficiency of our iterative methods and compare them with some related, well-known results using relevant numerical examples.

Keywords:

split common null point problem; Halpern; inertial; self-adaptive algorithms; strong convergence

MSC:

47J22; 47J25; 49J53; 49J40

1. Introduction

The split feasibility problem, introduced by Censor and Elfving [1], was the first split problem and has been studied intensively by researchers in the applied sciences; see, e.g., [2,3,4,5]. The split inverse problem, due to Censor et al. [6], is the most prevalent split problem. Recently, various models of inverse problems have been developed and studied. Owing to their application-oriented nature, split variational inequality/inclusion problems have attracted growing interest in recent years. Moudafi [7] studied a particular case of the split inverse problem, called the split monotone variational inclusion problem:

where and are set-valued mappings; and are single-valued mappings on Hilbert spaces and , respectively; and . Moudafi [7] composed the following scheme for . Let , for arbitrary , compute

where $A^{*}$ is the adjoint operator of A, with L being the spectral radius of the operator $A^{*}A$, and . If , then turns into the split common null point problem, suggested by Byrne et al. [8]:

where and . Also, Byrne et al. [8] composed the next scheme for . For arbitrary point , compute

where , . It is obvious to see that solves if and only if . Kazmi and Rizvi [9] investigated the solutions of and fixed point problem of a nonexpansive mapping T by using the following scheme. For arbitrary , compute

where h is a contraction and . Later, Dilshad et al. [10] investigated the common solution of and the fixed point problem of a finite collection of nonexpansive mappings. Recently, an alternative method was suggested by Akram et al. [11] to explore the common solution of and as follows:

where . Later on many authors have shown their interest in solving and related problems, using innovative methods. Some interesting results can be found in [10,12,13,14,15,16] and references therein.

In the above-mentioned work and the related literature, the step size depends on the operator norm $\|A\|$, and this dependence is required for the convergence of the iterative schemes. To overcome this restriction, new forms of iterative schemes have been proposed; see, e.g., [16,17,18,19]. López et al. [20] proposed a relaxed iterative scheme for the split feasibility problem:

where and are the orthogonal projections on and , respectively, and is calculated by

with and , and and . Dilshad et al. [21] investigated the solution of the split common null point problem without using a pre-calculated norm $\|A\|$. For arbitrary , compute

where and the is calculated by .

The slow convergence of the suggested algorithms then became a new concern for researchers, and many efforts have been made to accelerate convergence. Several researchers have used an inertial term as one of the speed-up approaches. Recall that Alvarez and Attouch [22] established the inertial proximal point approach for a monotone mapping V, utilising the notion of implicit discretization for derivatives

where , is the extrapolation coefficient and composes the inertial term. Schemes of this kind were found to have an improved convergence rate and were therefore adopted, modified and applied to solve various nonlinear problems; see, e.g., [23,24,25,26,27,28]. Very recently, Reich and Taiwo [29] studied some fast iterative methods for estimating the solution of the variational inclusion problem, in which they jointly compute the viscosity approximation and the inertial extrapolation in the first step of each iteration.
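To make the inertial idea concrete, the following minimal sketch runs an inertial proximal point iteration of the form $x_{n+1} = J_{\lambda}(x_n + \theta (x_n - x_{n-1}))$ on the real line. The operator $V(x) = a x$, the constants `lam` and `theta`, the starting points and all function names are illustrative assumptions and are not taken from [22].

```python
def resolvent(x, lam, a=2.0):
    """Resolvent (I + lam*V)^{-1} of the monotone map V(x) = a*x on the real line."""
    return x / (1.0 + lam * a)


def inertial_proximal_point(x0, x1, lam=0.5, theta=0.3, steps=50):
    """Iterate x_{n+1} = J_lam(x_n + theta*(x_n - x_{n-1})).

    Setting theta = 0 gives the plain proximal point method.
    """
    prev, cur = x0, x1
    history = [x0, x1]
    for _ in range(steps):
        extrapolated = cur + theta * (cur - prev)  # inertial extrapolation
        prev, cur = cur, resolvent(extrapolated, lam)
        history.append(cur)
    return history


plain = inertial_proximal_point(5.0, 5.0, theta=0.0)
inertial = inertial_proximal_point(5.0, 5.0, theta=0.3)
print(abs(plain[-1]), abs(inertial[-1]))
```

Both runs approach the unique zero $x = 0$ of $V$. In this particular example the inertial run ends closer to the zero than the plain run, but this is a property of the toy problem and not a general guarantee.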

Inspired by the above-mentioned work, we suggest two Halpern-type inertial iteration methods with self-adaptive step size for approximating the solution of the split common null point problem in the setting of Hilbert spaces. Our methods compute the Halpern iteration and the inertial extrapolation simultaneously at the beginning of each iteration. We use a self-adaptive step size, so that the iteration process does not require a previously calculated norm of the bounded linear operator. Our work can be seen as a pair of simple, accelerated, modified methods for solving the split common null point problem.

We arrange this paper as follows. The following section recalls some definitions and results that are used in the convergence analysis of the proposed methods. In Section 3, we propose our two Halpern-type inertial, self-adaptive iteration methods and then state and prove the strong convergence results. Section 4 presents numerical examples in finite- and infinite-dimensional Hilbert spaces that show the behaviour and advantages of the suggested iterative methods. We conclude our study in Section 5.

2. Preliminaries

Assume that H is a real Hilbert space and D is a closed and convex subset of H. If is a sequence in H, then denotes strong convergence of to z and denotes weak convergence. The weak $\omega$-limit of is defined by

If is the projection of onto , then for each , there exists a unique closest point in D, denoted by , such that

also satisfies

Moreover, is also identified by the fact

For all in Hilbert space H, we have the following equality:

Definition 1.

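A mapping $T : H \to H$ is called

- (i)

- a contraction, if there exists $\kappa \in (0,1)$ such that $\|Tx - Ty\| \le \kappa \|x - y\|$ for all $x, y \in H$;

- (ii)

- nonexpansive, if $\|Tx - Ty\| \le \|x - y\|$ for all $x, y \in H$;

- (iii)

- firmly nonexpansive, if $\|Tx - Ty\|^{2} \le \langle x - y, Tx - Ty \rangle$ for all $x, y \in H$.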

Definition 2.

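Let $V : H \to 2^{H}$ be a set-valued mapping. Then

- (i)

- V is called monotone, if $\langle u - v, x - y \rangle \ge 0$ for all $x, y \in H$, $u \in V(x)$ and $v \in V(y)$;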

- (ii)

- ;

- (iii)

- V is called maximal monotone, if V is monotone and $(I + \lambda V)(H) = H$ for $\lambda > 0$, where I is the identity mapping.

Lemma 1

([30]). If is a sequence of nonnegative real numbers such that

where is a sequence in and is a sequence in such that

- (i)

- (ii)

- or

Then

Lemma 2

([31]). In a Hilbert space H,

- (i)

- if is monotone and be the resolvent of V, then and are firmly nonexpansive for .

- (ii)

- if is nonexpansive, then is demiclosed at zero and if V is firmly nonexpansive then is firmly nonexpansive.
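The firm nonexpansiveness of resolvents referred to in Lemma 2 (i) can be checked numerically on a simple example. The sketch below uses the set-valued operator $V = \partial|\cdot|$ on the real line, whose resolvent is the soft-thresholding map, and tests the inequality $|Tx - Ty|^{2} \le (x - y)(Tx - Ty)$ for both the resolvent $J$ and its complement $I - J$. The choice of operator, the sample points and all function names are illustrative assumptions and are not taken from [31].

```python
import random


def soft_threshold(x, lam):
    """Resolvent (I + lam*V)^{-1} of V = subdifferential of |.| on the real line."""
    if x > lam:
        return x - lam
    if x < -lam:
        return x + lam
    return 0.0


def is_firmly_nonexpansive(T, samples, tol=1e-12):
    """Check |Tx - Ty|^2 <= (x - y)*(Tx - Ty) on all pairs of sample points."""
    return all(
        (T(x) - T(y)) ** 2 <= (x - y) * (T(x) - T(y)) + tol
        for x in samples
        for y in samples
    )


random.seed(0)
points = [random.uniform(-5.0, 5.0) for _ in range(40)]
lam = 1.5


def J(x):
    return soft_threshold(x, lam)


def I_minus_J(x):
    return x - J(x)


print(is_firmly_nonexpansive(J, points))
print(is_firmly_nonexpansive(I_minus_J, points))
```

A finite sample can only fail to find a counterexample; it does not prove the property. For contrast, the map $x \mapsto -x$ is nonexpansive but fails the same check.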

Lemma 3

([32]). For a bounded sequence in Hilbert space H. If there exists a subset satisfying

- (i)

- exists, ,

- (ii)

Then, there exists such that .

Lemma 4

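([33]). Let $\{\Gamma_n\}$ be a sequence of real numbers that does not decrease at infinity, in the sense that there exists a subsequence $\{\Gamma_{n_k}\}$ of $\{\Gamma_n\}$ such that $\Gamma_{n_k} < \Gamma_{n_k+1}$ for all $k \ge 0$. Also consider the sequence of integers $\{\tau(n)\}_{n \ge n_0}$ defined by

$\tau(n) = \max\{k \le n : \Gamma_k < \Gamma_{k+1}\}.$

Then $\{\tau(n)\}_{n \ge n_0}$ is a nondecreasing sequence verifying $\lim_{n \to \infty} \tau(n) = \infty$ and, for all $n \ge n_0$, the following inequality holds:

$\max\{\Gamma_{\tau(n)}, \Gamma_n\} \le \Gamma_{\tau(n)+1}.$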
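The index sequence in Lemma 4 is easy to compute for a finite list of values. The sketch below assumes the usual form $\tau(n) = \max\{k \le n : \Gamma_k < \Gamma_{k+1}\}$ of the definition in [33]; the name `mainge_index` and the sample data `gamma` are illustrative assumptions.

```python
def mainge_index(gamma, n):
    """Return tau(n) = max{k <= n : gamma[k] < gamma[k+1]}, or None if no such k exists."""
    candidates = [k for k in range(n + 1) if gamma[k] < gamma[k + 1]]
    return max(candidates) if candidates else None


# A finite sequence that is not eventually decreasing (illustrative data).
gamma = [3.0, 1.0, 2.0, 1.5, 1.8, 0.9, 1.1, 0.7, 0.8, 0.5]
n0 = 1  # first index k with gamma[k] < gamma[k+1]

indices = range(n0, len(gamma) - 1)
taus = [mainge_index(gamma, n) for n in indices]
print(taus)

# Both parts of the inequality: gamma[tau(n)] and gamma[n] are bounded by gamma[tau(n)+1].
print(all(max(gamma[t], gamma[n]) <= gamma[t + 1] for n, t in zip(indices, taus)))
```

The computed indices are nondecreasing in n, which is the property used in Case II of the convergence proof to pass to a subsequence along which the monotone argument of Case I can be repeated.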

3. Main Results

In this part, we describe our Halpern-type inertial iteration methods with self-adaptive step size for the split common null point problem. We adopt the following assumptions to ensure the convergence of our methods:

- and are maximal monotone operators;

- is a bounded linear operator;

- is a sequence in so that and ;

- is a positive sequence so that and ;

- The solution set of is denoted by and .

Now, we can present our Halpern-type inertial iteration method for solving .

Remark 1.

From (3), we have . By the choice of and satisfying , we obtain and by assumption , we obtain .

Remark 2.

We can easily show that if and only if and , by using the definition of resolvents of and , respectively.
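The equivalence in Remark 2 rests on the standard characterisation of the zeros of a maximal monotone operator as the fixed points of its resolvent. Written out for a generic maximal monotone operator $V$ with resolvent $J_{\lambda}^{V} = (I + \lambda V)^{-1}$, $\lambda > 0$ (the symbols $V$ and $J_{\lambda}^{V}$ are generic notation assumed here for illustration), the argument is:

```latex
\begin{aligned}
0 \in V(x) &\iff x \in x + \lambda V(x) = (I + \lambda V)(x) \\
           &\iff x = (I + \lambda V)^{-1}(x) = J_{\lambda}^{V}(x).
\end{aligned}
```

The second equivalence uses the fact that the resolvent of a monotone operator is single-valued. Applying the same argument to the second operator at the image point under the bounded linear operator gives the second fixed point condition.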

Lemma 5

([21]). If satisfies

then

Remark 3.

If , then from (5), we obtain

If , we obtain that , otherwise by putting the value of and taking limit on both sides, we obtain

By using Lemma 5, we conclude that

which implies that .

Theorem 1.

If the assumptions – are satisfied, then the sequence induced by Algorithm 1 converges strongly to a solution of , where .

| Algorithm 1 Choose and are given. Choose arbitrary points and and set . |

| Iterative Step: For iterates , and , , select , where

|
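The following is a minimal sketch of how a Halpern-type inertial iteration with a norm-free, self-adaptive step size can be organised. It is a generic scheme of this family on the real line and should not be read as a transcription of Algorithm 1. The toy operators $V_1(x) = x$ and $V_2(y) = 3y$, the scalar operator $A = 2$, the sequences $\alpha_n = 1/(n+1)$ and $\mu_n = 1/(n+1)^2$, the relaxation factor 0.5, the particular step-size formula and all function names are assumptions made for illustration.

```python
def resolvent(x, lam, slope):
    """Resolvent (I + lam*V)^{-1} of the linear monotone map V(x) = slope*x."""
    return x / (1.0 + lam * slope)


def halpern_inertial(u, x0, x1, A=2.0, lam1=1.0, lam2=1.0, theta=0.5, steps=2000):
    """Generic Halpern-type inertial iteration with a norm-free step size (toy, 1-D)."""
    prev, cur = x0, x1
    for n in range(1, steps + 1):
        alpha = 1.0 / (n + 1)      # Halpern weights: alpha_n -> 0, sum alpha_n = inf
        mu = 1.0 / (n + 1) ** 2    # mu_n / alpha_n -> 0 keeps the inertial term small
        gap = abs(cur - prev)
        theta_n = min(theta, mu / gap) if gap > 0 else theta
        # Halpern anchoring and inertial extrapolation computed in a single step.
        w = alpha * u + (1.0 - alpha) * cur + theta_n * (cur - prev)
        r1 = w - resolvent(w, lam1, 1.0)          # (I - J1) w, with V1(x) = x
        r2 = A * w - resolvent(A * w, lam2, 3.0)  # (I - J2) A w, with V2(y) = 3y
        direction = r1 + A * r2                   # the adjoint of a real scalar A is A
        if direction == 0.0:
            nxt = w                               # w already solves the toy problem
        else:
            # Step size built from residuals only; the norm of A is never used.
            tau = 0.5 * (r1 ** 2 + r2 ** 2) / direction ** 2
            nxt = w - tau * direction
        prev, cur = cur, nxt
    return cur


x_final = halpern_inertial(u=1.0, x0=4.0, x1=3.0)
print(abs(x_final))
```

In this toy problem the only common null point is 0, so the iterates should approach 0 regardless of the anchor `u`. The step size is computed from the two residuals at each iteration, which is the sense in which no prior estimate of the operator norm is needed.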

Proof.

Let and using (1) and (5), we obtain

For , by Remark 2, we have and . Since and are firmly nonexpansive (Lemma 2), we have

and

From (7)–(8), we achieve

or

Since , implies that is bounded, hence there exists a number such that . From (4), it follows that

which implies that is bounded. By using (10), we conclude that is also bounded.

Let . The boundedness of implies that is also bounded. By using (4), we obtain

and

where . Therefore by using (11) and (12), we obtain

From (9) and (13), we get

Case I: Suppose that the sequence is monotonically decreasing then there exists such that for all . Hence, the boundedness of implies is convergent. Therefore, using (14), we have

Taking limit , we obtain

By using Lemma 5, we obtain

By using (5) and (16), we see that

Since and using Remark 1, we obtain

From (4), we have

taking limit , in both the sides, using boundedness of , Remark 1 and Assumption , we get

Hence using (18) and (20), we conclude

Since is bounded, there exists a subsequence of such that as . It follows from (19) and (20) that and as . We claim that . From (5), it follows that

Taking , and using Lemma 5, we obtain

By the demiclosedness principle, we obtain

Remark 2 implies that . Now, we show that converges strongly to . From (14), we immediately see that

Moreover, using and Remark 1, we get

By applying Lemma 1 to (23), we deduce that and

Case II: If Case I does not hold, then there exists a subsequence of such that and the sequence defined by is an increasing sequence and as and

Following the corresponding arguments as in the proof of Case I, we get

and

From (23) and (16), we have

By taking limit , we obtain as . Invoking Lemma 4, we have

Therefore, from (28), it follows that as . Hence, as , and we obtain the desired result. □

Theorem 2.

Suppose that the assumptions – are satisfied. Then the sequence induced by Algorithm 2 converges strongly to a solution of , where .

| Algorithm 2 Choose and are given. Choose arbitrary points and and set . |

| Iterative Step: Given the iterates , and , , choose , where

|

Proof.

Let , and since , implies that is bounded, so there exists a number such that . Then by using (30), we obtain

By using (10), we obtain

which implies that is bounded and so is . Let , then by using (1), we obtain

Now, we estimate

and

From (33), (34), and (35), we obtain

Putting the value of in (9), we have

Now, we can obtain the desired outcomes by following the corresponding steps in the proof of Theorem 1. □

Remark 4.

Let , be nonexpansive mappings and let be a bounded linear operator. Then the split common fixed point problem is defined as:

where and denote the fixed point sets of mappings and , respectively. By replacing and with nonexpansive mappings and , respectively, in Algorithms 1 and 2, we can obtain the strong convergence theorems for .

4. Numerical Experiments

Example 1.

Suppose . We define the monotone mappings and by . Let be a bounded linear operator. It is easy to see that and are monotone operators. The resolvents of and are

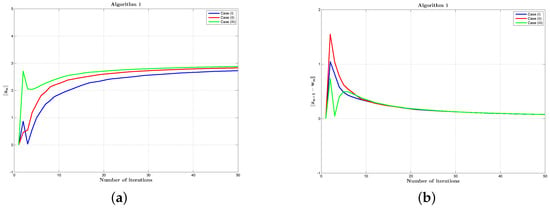

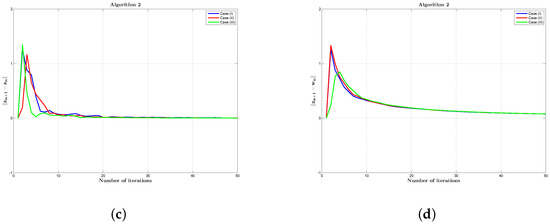

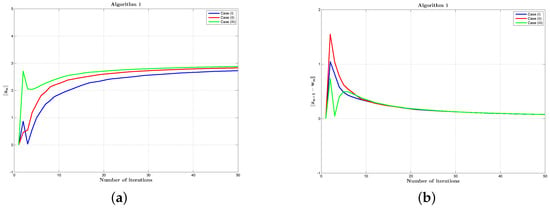

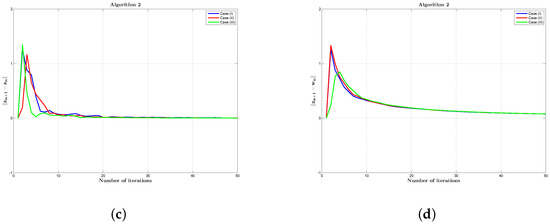

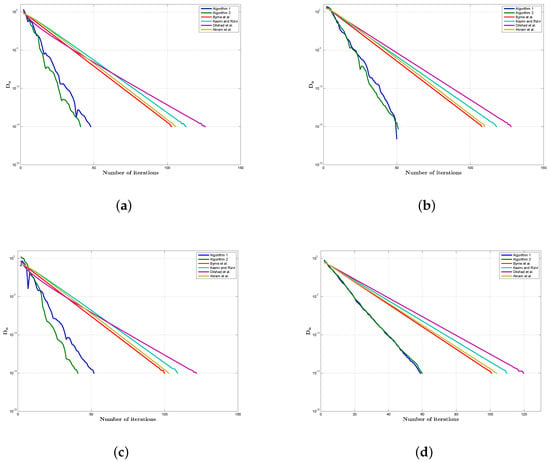

We choose and satisfying the conditions and . As a stopping condition, we set the maximum number of iterations at 50. The parameter is generated at random in the range , where is computed by (3). It can easily be seen that and we select the fixed point . Figure 1 depicts the behaviour of the sequences derived from Algorithms 1 and 2 using three distinct cases, which are mentioned below:

Figure 1.

The graphical behaviour of , for Algorithm 1 is shown in panels (a,b), and that of and for Algorithm 2 is shown in panels (c,d), for three distinct cases of parameters.

- Case (I):

- , , , .

- Case (II):

- , , , .

- Case (III):

- , , , , .

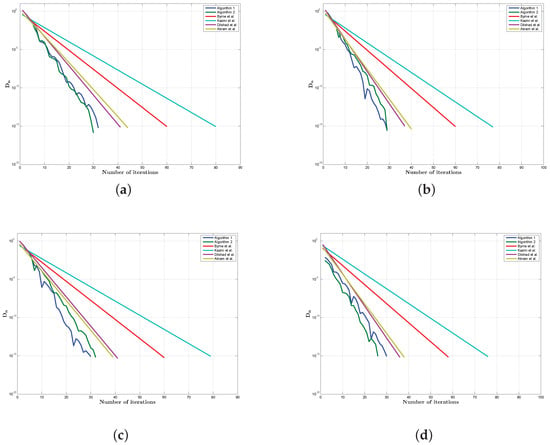

Comparison: Furthermore, we compare our proposed methods to the methods in Byrne et al. [8], Kazmi and Rizvi [9], Dilshad et al. [21] and Akram et al. [11]. We select for Byrne et al. [8] and Kazmi and Rizvi [9]; for Akram et al. [11]; for Byrne et al. [8], Kazmi and Rizvi [9], Dilshad et al. [21] and Akram et al. [11]; , for Kazmi and Rizvi [9] and Akram et al. [11]. We consider the following cases:

- Case (A):

- , , ;

- Case (B):

- , , ;

- Case (C):

- , , ;

- Case (D):

- , , ;

We observe that our schemes are easy to implement and that the choice of step size does not require the pre-calculation of $\|A\|$. The experimental results are presented in Table 1 and Figure 2.

Table 1.

Numerical comparison of Algorithms 1 and 2 with the work studied in [8,9,11,21] for Example 1.

Figure 2.

Graphical comparison of Algorithms 1 and 2 with Byrne et al. [8], Kazmi and Rizvi [9], Dilshad et al. [21] and Akram et al. [11] for Example 1 by using Case (A)–Case (D). (a) by Case (A), (b) by Case (B), (c) by Case (C), (d) by Case (D).

Example 2.

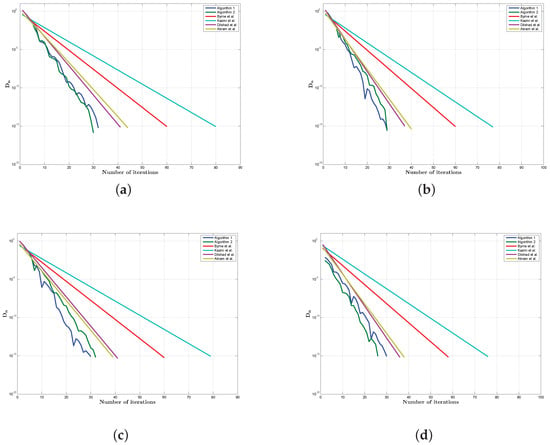

Let be a real Hilbert space with inner product and norm given by . We define the monotone mappings by and . Let , the identity operator hence so its adjoint . The stopping criterion for our computation is , where . We compare our proposed methods to the methods in Byrne et al. [8], Kazmi and Rizvi [9], Dilshad et al. [21] and Akram et al. [11].

We select for Byrne et al. [8] and Kazmi and Rizvi [9]; for Akram et al. [11]; and for Algorithms 1 and 2 and Akram et al. [11]; for Byrne et al. [8], Kazmi and Rizvi [9] and Dilshad et al. [21]; , for Kazmi and Rizvi [9] and Akram et al. [11]; for Algorithms 1 and 2 and Dilshad et al. [21]; , and is selected randomly by (3). We take into consideration the following different cases:

- Case (A′):

- and ;

- Case (B′):

- and ;

- Case (C′):

- and ;

- Case (D′):

- and ;

Table 2.

Numerical comparison of Algorithms 1 and 2 with the work studied in [8,9,11,21] for Example 2.

Figure 3.

Graphical comparison of Algorithms 1 and 2 with Byrne et al. [8], Kazmi and Rizvi [9], Dilshad et al. [21] and Akram et al. [11] for Example 2 by using Case (A′)–Case (D′). (a) by Case (A′), (b) by Case (B′), (c) by Case (C′), (d) by Case (D′).

5. Conclusions

We have presented two Halpern-type inertial iteration methods with a self-adaptive step size to estimate the solution of the split common null point problem in such a way that the Halpern iteration and the inertial term are computed simultaneously. We demonstrated the strong convergence of the suggested methods to a solution of the problem under appropriate assumptions, so that the calculation of $\|A\|$ is not necessary for the step size. Finally, we illustrated the proposed methods with suitable numerical examples and different choices of parameters. The experiments indicate that our suggested schemes perform well in terms of both the number of iterations and the CPU time. Note that the viscosity approximation is more general than the Halpern approximation method. Applying the viscosity-type inertial approximation to estimate the solution of or together with would be an interesting direction for future work.

Author Contributions

Conceptualization, M.D.; methodology, M.D.; software, M.D.; validation, A.A.; formal analysis, A.A.; investigation, A.A.; resources, A.A.; data curation, A.A.; writing—original draft preparation, M.D.; writing—review and editing, M.D.; visualization, A.A.; supervision, A.A.; funding acquisition, A.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Informed Consent Statement

This article does not contain any studies with human participants or animals performed by any of the authors.

Data Availability Statement

Data are contained within the article.

Acknowledgments

The authors are thankful to the anonymous reviewers and the editor for their valuable remarks and suggestions, which improved the quality and content of this research article.

Conflicts of Interest

The authors declare that they have no conflicts of interest.

References

- Censor, Y.; Elfving, T.; Kopf, N.; Bortfeld, T. The multiple-sets split feasibility problem and its applications for inverse problems. Inverse Probl. 2005, 21, 2071–2084.

- Combettes, P.L. The convex feasibility problem in image recovery. In Advances in Imaging and Electron Physics; Hawkes, P., Ed.; Academic Press: New York, NY, USA, 1996; Volume 95, pp. 155–270.

- Censor, Y.; Bortfeld, T.; Martin, B.; Trofimov, A. A unified approach for inversion problem in intensity modulated radiation therapy. Phys. Med. Biol. 2006, 51, 2353–2365.

- Censor, Y.; Elfving, T. A multi projection algorithm using Bregman projections in a product space. Numer. Algor. 1994, 8, 221–239.

- Xu, H.K. Iterative methods for split feasibility problem in infinite dimensional Hilbert spaces. Inverse Prob. 2010, 26, 105018.

- Censor, Y.; Gibali, A.; Reich, S. Algorithms for the split variational inequality problem. Numer. Algor. 2012, 59, 301–323.

- Moudafi, A. Split monotone variational inclusions. J. Optim. Theory Appl. 2011, 150, 275–283.

- Byrne, C.; Censor, Y.; Gibali, A.; Reich, S. Weak and strong convergence of algorithms for split common null point problem. J. Nonlinear Convex Anal. 2012, 13, 759–775.

- Kazmi, K.R.; Rizvi, S.H. An iterative method for split variational inclusion problem and fixed point problem for a nonexpansive mapping. Optim. Lett. 2014, 8, 1113–1124.

- Dilshad, M.; Aljohani, A.F.; Akram, M. Iterative scheme for split variational inclusion and a fixed-point problem of a finite collection of nonexpansive mappings. J. Funct. Spaces 2020, 2020, 3567648.

- Akram, M.; Dilshad, M.; Rajpoot, A.K.; Babu, F.; Ahmad, R.; Yao, J.-C. Modified iterative schemes for a fixed point problem and a split variational inclusion problem. Mathematics 2022, 10, 2098.

- Arfat, Y.; Kumam, P.; Khan, M.A.A.; Ngiamsunthorn, P.S. Shrinking approximants for fixed point problem and generalized split null point problem in Hilbert spaces. Optim. Lett. 2022, 16, 1895–1913.

- Sitthithakerngkiet, K.; Deepho, J.; Kumam, P. A hybrid viscosity algorithm via modify the hybrid steepest descent method for solving the split variational inclusion in image reconstruction and fixed point problems. Appl. Math. Comp. 2015, 250, 986–1001.

- Suantai, S.; Shehu, Y.; Cholamjiak, P. Nonlinear iterative methods for solving the split common null point problem in Banach spaces. Optim. Methods Softw. 2019, 34, 853–874.

- Chugh, R.; Gupta, N. Strong convergence of new split general system of monotone variational inclusion problem. Appl. Anal. 2014, 103, 138–165.

- Tang, Y. New algorithms for split common null point problems. Optimization 2020, 70, 1141–1160.

- Moudafi, A.; Thakur, B.S. Solving proximal split feasibility problem without prior knowledge of matrix norms. Optim. Lett. 2014, 8, 2099–2110.

- Ngwepe, M.D.; Jolaoso, L.O.; Aphane, M.; Adenekan, I.O. An algorithm that adjusts the stepsize to be self-adaptive with an inertial term aimed for solving split variational inclusion and common fixed point problems. Mathematics 2023, 11, 4708.

- Zhu, L.-J.; Yao, Y. Algorithms for approximating solutions of split variational inclusion and fixed-point problems. Mathematics 2023, 11, 641.

- López, G.; Martín-Márquez, V.; Xu, H.K. Solving the split feasibility problem without prior knowledge of matrix norms. Inverse Probl. 2012, 28, 085004.

- Dilshad, M.; Akram, M.; Ahmad, I. Algorithms for split common null point problem without pre-existing estimation of operator norm. J. Math. Inequal. 2020, 14, 1151–1163.

- Alvarez, F.; Attouch, H. An inertial proximal method for maximal monotone operators via discretization of a nonlinear oscillator with damping. Set-Valued Anal. 2001, 9, 3–11.

- Arfat, Y.; Kumam, P.; Ngiamsunthorn, P.S.; Khan, M.A.A. An accelerated projection based parallel hybrid algorithm for fixed point and split null point problems in Hilbert spaces. Math. Meth. Appl. Sci. 2021.

- Dilshad, M.; Akram, M.; Nsiruzzaman, M.; Filali, D.; Khidir, A.A. Adaptive inertial Yosida approximation iterative algorithms for split variational inclusion and fixed point problems. AIMS Math. 2023, 8, 12922–12942.

- Filali, D.; Dilshad, M.; Alyasi, L.S.M.; Akram, M. Inertial iterative algorithms for split variational inclusion and fixed point problems. Axioms 2023, 12, 848.

- Tang, Y.; Lin, H.; Gibali, A.; Cho, Y.-J. Convergence analysis and applications of the inertial algorithm solving inclusion problems. Appl. Numer. Math. 2022, 175, 1–17.

- Tang, Y.; Zhang, Y.; Gibali, A. New self-adaptive inertial-like proximal point methods for the split common null point problem. Symmetry 2021, 13, 2316.

- Tang, Y.; Gibali, A. New self-adaptive step size algorithms for solving split variational inclusion problems and its applications. Numer. Algor. 2019, 83, 305–331.

- Reich, S.; Taiwo, A. Fast iterative schemes for solving variational inclusion problems. Math. Meth. Appl. Sci. 2023, 46, 17177–17198.

- Xu, H.K. Iterative algorithms for nonlinear operators. J. Lond. Math. Soc. 2002, 66, 240–256.

- Bauschke, H.H.; Combettes, P.L. Convex Analysis and Monotone Operator Theory in Hilbert Space; Springer: Berlin, Germany, 2011.

- Opial, Z. Weak convergence of the sequence of successive approximations of nonexpansive mappings. Bull. Am. Math. Soc. 1967, 73, 591–597.

- Maingé, P.E. Strong convergence of projected subgradient methods for nonsmooth and nonstrictly convex minimization. Set-Valued Anal. 2008, 16, 899–912.


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).