Abstract

Currently, direct surveys are used less and less to assess satisfaction with the quality of user services. One of the most effective alternatives is to extract user attitudes from social media texts using natural language text mining. This approach yields more objective results because it increases the representativeness and independence of the sample of service consumers studied. The purpose of this article is to improve existing methods and to test a method for classifying Russian-language text reviews of medical institutions and doctors written by patients and extracted from social media resources. To achieve this goal, the authors developed a hybrid method for classifying text reviews of medical institutions and tested machine learning methods based on various neural network architectures (GRU, LSTM, CNN). More than 60,000 reviews posted by patients on the two most popular doctor review sites in Russia were analysed. Main results: (1) the developed classification algorithm is highly efficient, with the best result shown by the GRU-based architecture (val_accuracy = 0.9271); (2) applying named entity recognition to text messages after they had been segmented increased the classification performance of each classifier based on artificial neural networks. The study is scientifically novel and of practical significance for social and demographic research. To further improve classification quality, we plan to extend the semantic segmentation of reviews by target and sentiment and to analyse the resulting fragments separately.

Keywords:

machine learning; patient reviews; neural networks; online reviews; review classification; text reviews; quality of medical services; GRU architecture; LSTM; CNN

MSC: 68T50

1. Introduction

Artificial intelligence (AI) is playing an increasingly important role in healthcare due to its ability to analyse large amounts of data and provide accurate diagnoses and prognoses. AI is used to automate administrative processes, optimise physician appointment scheduling, analyse medical images quickly and accurately, develop individualised treatment plans, and monitor patients. This improves the quality of medical care, shortens the time needed to make a diagnosis, and increases the efficiency of treatment.

Recent research focuses in particular on the rapidly developing field of image recognition, including image denoising [1] and ultrasound image processing [2]. Image analysis tools are being actively developed in various areas of medicine: in neurology, for positron emission tomography (PET) scans, to meet the growing clinical need for early detection and treatment monitoring of Alzheimer’s disease [3]; in mammography, for identifying pathologies of the mammary glands and signs of breast cancer [4]; and for renal, gastrointestinal, cardiac, dermatological, ophthalmological, oral, ear, nose, and throat (ENT), orthopaedic, and other diseases [5].

Equally important for improving the quality of treatment and patient care is the analysis of textual information that reveals patients’ satisfaction or dissatisfaction with medical services. Such monitoring can show, in particular, which areas of the healthcare sector require priority improvement. It is increasingly carried out using AI tools.

Conventional questionnaire surveys of customer satisfaction are giving way to automatic processing of social media texts, which makes it possible to extract their semantics. This approach reveals consumer attitudes more objectively, because the sample becomes more representative and independent.

To implement this approach, it is essential to develop software classifiers that can group the text data by the following criteria:

- Sentiment;

- Target;

- Causal relationship; etc.

In this work, we develop a new hybrid intelligent text classification algorithm that integrates an efficient machine learning model with a linguistic object search algorithm. The approach supports objective analysis of text feedback, taking into account the genre and speech features of social network texts and the specifics of the subject area. It has considerable practical significance for social and demographic research.

2. Analysing Medical Service Reviews as a Natural Language Text Classification Task

Online reviews and ratings make up so-called electronic word of mouth (eWOM): informal communications directed at consumers through Internet technologies (online reviews and online opinions) and relating to the experience of using, or the features of, specific products or services or their vendors [6]. The rise of eWOM has made online reviews the most influential source of information for consumer decision-making [7,8,9].

A predominance of positive feedback gives businesses an incentive to raise the prices of their goods or services to maximise profits [10]. This prompts unscrupulous businesses to manipulate customer reviews and ratings, for example by posting fake reviews or rigging feedback scores [11,12,13]. Some researchers have highlighted a separate threat posed by artificial intelligence, which can generate reviews that are almost indistinguishable from those posted by real users [14,15]. At the same time, artificial intelligence also serves as a tool for detecting fake reviews [14].

eWOM has also become widespread in the healthcare sector, where many physician rating websites (PRWs) are now available. Physicians themselves were among the first to take advantage of this, actively registering on such websites and filling out their profiles. In Germany, for instance, more than 52% of physicians had a personal page on a physician rating website according to 2018 data [16].

Online portals provide ratings not only of physicians, but also of larger entities, such as hospitals [17,18]. In many countries, however, a greater number of reviews concern hospitals or overall experiences rather than physicians and their actions [19,20].

Reviews containing reliable information enhance the efficiency of the healthcare sector by, among other things, providing patients with trustworthy data on the quality of medical services. Despite these obvious benefits, PRWs also have certain drawbacks, such as:

- Service consumers’ poor understanding and knowledge of healthcare, which casts doubt on the accuracy of their assessments of the physician and the medical services provided [21,22]. Patients often rely on indirect indicators unrelated to the quality of medical services, for example their interpersonal experience with the physician [23,24].

- Lack of clear criteria by which to assess a physician/medical service [23].

Researchers have found that online reviews of physicians often do not reflect the actual outcomes of medical services [25,26,27]. Consequently, reviews and online ratings in the healthcare industry are less useful and effective than those in other industries [28,29]. Other studies, by contrast, have found a direct correlation between online user ratings and clinical outcomes [30,31,32,33].

In general, positive reviews and physician ratings are highly concentrated in the healthcare industry [34,35,36,37,38,39,40]. At the beginning of the COVID-19 pandemic, however, negative reviews prevailed on online forums [41].

The main factors behind a higher likelihood of a physician receiving a positive review are the physician’s friendliness and communication behaviour [21]. Shah et al. divide the factors that increase the likelihood of a physician receiving a positive review into factors depending on the medical facility (hospital environment, location, car park availability, medical protocol, etc.) and factors relating to the physician’s actions (physician’s knowledge, competence, attitude, etc.) [42].

Some researchers have noted that, when assessing physicians, patients mostly rely on scores alone and rarely write descriptive feedback [43], because scoring takes less time [44]. At the same time, consumers stress the importance of descriptive feedback, as it is more informative than numerical scores [45,46].

Apart from objective factors, physicians’ personal characteristics, such as gender, age, and specialty, can also influence patients’ assessments and feedback [47,48,49,50]. For example, studies based on German and US physician assessment data found higher ratings for female physicians [47,48], obstetrician–gynaecologists, and younger physicians [51].

Patients’ characteristics also affect the distribution of scores. For example, according to a study by Emmert and Meier based on online physician review data from Germany, older patients tend to rate physicians higher than younger patients do [47]. According to their estimates, however, physicians’ scores do not depend on the respondent’s gender [47]. Having an insurance policy significantly influences feedback sentiment [47,50]. Some studies have shown that negative feedback prevails among patients from rural areas [52]. Other studies have focused on the characteristics of online service users, noting that certain personal characteristics are indicative of how frequently people use PRWs [53,54]. Accordingly, users with different key characteristics differ significantly in how important they consider online physician reviews to be [55].

Some studies have used both rating scores and comment texts as data [56]. In particular, study [56] identified factors associated with more positive ratings, related both to the physician’s characteristics and to factors beyond the physician’s control.

Several studies use collections of physician review texts as their data [57,58,59,60,61]. Researchers have found that physician rating services can complement the information provided by more conventional patient experience surveys and help patients better understand the quality of medical services provided by a physician or healthcare facility [62,63,64].

Social media analysis can be viewed as a multi-stage activity involving capture, comprehension, and presentation:

- In the capture phase, relevant social media content is extracted from various sources. Data can be collected by an individual or by third-party providers [65].

- In the comprehension phase, relevant data are selected for predictive sentiment modelling.

- In the presentation phase, the key findings of the analysis are visualised [66].

Both supervised and unsupervised methods can be used to analyse sentiment in social media data; Bespalov et al. [67] provide an overview of these methods. The main approaches classify the polarity of texts at the level of words, sentences, or paragraphs.

The study [68] examined various text mining techniques for detecting text patterns in a social network, including classification based on machine learning and ontology algorithms as well as a hybrid approach. Its experiments showed that no single algorithm performs best across all data types.

In [69], different types of text classifiers were analysed, together with their respective pros and cons.

Lee et al. [70] classified trending topics on Twitter (now X) using two approaches: the well-known Bag-of-Words text classification method and network-based classification. They identified 18 classes and assigned trending topics to the respective categories. The network classifier significantly outperformed the text classifier.

Kateb et al. [71] discussed methods addressing the challenges of short text classification based on streaming data in social networks.

Chirawichitichai et al. [72] compared six feature weighting methods in a Thai document categorisation system. They found that support vector machine (SVM) score thresholding with ltc weighting yielded the best results for Thai document categorisation.

Theeramunkong et al. [73] proposed a multidimensional text document classification framework. The paper reported that classifying text documents based on a multidimensional category model using multidimensional and hierarchical classifications was superior to the flat classification.

Viriyayudhakorn et al. [74] compared four mechanisms for supporting divergent thinking using associative information obtained from Wikipedia. The authors used the Word Article Matrix (WAM) to compute the association function and found it to be a useful and effective method for supporting divergent thinking.

Sornlertlamvanich et al. [75] proposed a new method for fine-tuning a model trained on known documents with richer contextual information. The authors used WAM to classify texts and track keywords from social media in order to understand social events, and found WAM with cosine similarity to be an effective text classification method.

As this review of the current state of automatic processing of unstructured social media data shows, there is currently no single approach that achieves effective classification of text resources. Classification results depend on the domain, the representativeness of the training sample, and other factors. It is therefore important to develop and apply intelligent methods tailored to the analysis of reviews of medical services.

3. Classification Models for Text Reviews of the Quality of Medical Services in Social Media

In this study, we developed a hybrid method for classifying text reviews from social media by:

- text sentiment: positive or negative;

- target: a review of a medical facility or a physician.

Initially, the task was to divide the reviews into four classes, one for each combination of sentiment and target. To solve it, we began by testing machine learning methods based on various neural network architectures.

Mathematically, a neuron is a weighted adder whose single output is determined by its inputs and weights as follows:

$$
u = \sum_{i=0}^{n} w_i x_i, \qquad y = f(u), \tag{1}
$$

where $x_i$ and $w_i$ ($i = 1, \dots, n$) are the neuron’s input signals and input weights, respectively; $u$ is the induced local field; and $f(u)$ is the transfer function. The input signals are assumed to lie in the interval [0, 1]. The additional input $x_0$ and its weight $w_0$ are used for neuron initialisation, which here means shifting the neuron’s activation function along the horizontal axis (i.e., they act as a bias).
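Equation (1) can be illustrated in a few lines of plain Python. This is a minimal sketch, assuming a logistic (sigmoid) transfer function and an additional input fixed at $x_0 = 1$; the source specifies neither, and the function name `neuron_output` is hypothetical.

```python
import math
from typing import Sequence


def neuron_output(x: Sequence[float], w: Sequence[float], w0: float) -> float:
    """Weighted adder followed by a transfer function.

    x  -- input signals x_1..x_n, assumed to lie in [0, 1]
    w  -- input weights w_1..w_n
    w0 -- weight of the additional input x_0 (fixed at 1 here), i.e. the bias
    """
    if len(x) != len(w):
        raise ValueError("inputs and weights must have the same length")
    x0 = 1.0  # assumed constant additional input
    # Induced local field: u = w_0*x_0 + sum_i w_i*x_i
    u = w0 * x0 + sum(wi * xi for wi, xi in zip(w, x))
    # Transfer function f(u): logistic sigmoid (an assumption for illustration)
    return 1.0 / (1.0 + math.exp(-u))


# Changing w0 shifts the activation curve along the horizontal (u) axis.
print(neuron_output([0.2, 0.9], [0.5, -0.3], w0=0.0))
print(neuron_output([0.2, 0.9], [0.5, -0.3], w0=1.0))
```

Raising $w_0$ moves the point at which the sigmoid crosses 0.5 to the left, which is the "shift along the horizontal axis" described above.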

A large number of algorithms are available for context-sensitive and context-insensitive text classification. In this study, we propose three neural network architectures that have shown the best performance in non-binary (multiclass) text classification tasks. We compared their effectiveness with the text classification results obtained using the models applied in our previous studies, BERT and SVM, which performed well in binary classification [76,77].

3.1. LSTM Network

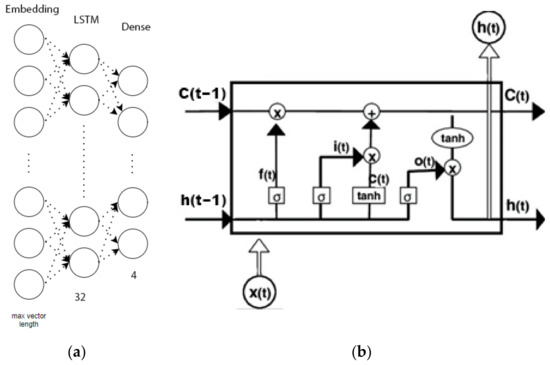

Figure 1 shows the general architecture of the LSTM network.

Figure 1.

LSTM network: general architecture (a), LSTM layer (b).

The proposed LSTM network architecture consists of the following layers:

- Embedding: the input layer of the neural network. Its size depends on the dimension of the vector space into which the words are embedded and on the length of the input sequences, which equals the maximum length of the vectors generated during word pre-processing.

- LSTM Layer—recurrent layer of the neural network; includes 32 blocks.

- Dense Layer—output layer consisting of four neurons. Each neuron is responsible for an output class. The activation function is “Softmax”.
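To make the size of this architecture concrete, the sketch below counts trainable parameters layer by layer using the standard formulas for a Keras-style Embedding, LSTM, and Dense stack. The vocabulary size (20,000) and embedding dimension (64) are hypothetical placeholders, since the source does not report them; only the 32 LSTM units and the four output classes come from the text.

```python
def embedding_params(vocab_size: int, embed_dim: int) -> int:
    # One embed_dim-dimensional vector per vocabulary entry
    return vocab_size * embed_dim


def lstm_params(input_dim: int, units: int) -> int:
    # Four gates (input, forget, cell candidate, output), each with an
    # input kernel, a recurrent kernel and a bias vector
    return 4 * (units * (input_dim + units) + units)


def dense_params(input_dim: int, units: int) -> int:
    # Weight matrix plus one bias per output neuron
    return (input_dim + 1) * units


VOCAB_SIZE = 20_000   # hypothetical, not reported in the source
EMBED_DIM = 64        # hypothetical, not reported in the source
LSTM_UNITS = 32       # from the text
NUM_CLASSES = 4       # from the text

layers = {
    "Embedding": embedding_params(VOCAB_SIZE, EMBED_DIM),
    "LSTM": lstm_params(EMBED_DIM, LSTM_UNITS),
    "Dense": dense_params(LSTM_UNITS, NUM_CLASSES),
}
for name, count in layers.items():
    print(f"{name:10s} {count:>10,d}")
print(f"{'Total':10s} {sum(layers.values()):>10,d}")
```

With these assumed sizes almost all parameters sit in the embedding table; the helpers can be rerun with the real vocabulary size and embedding dimension.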

3.2. A Recurrent Neural Network

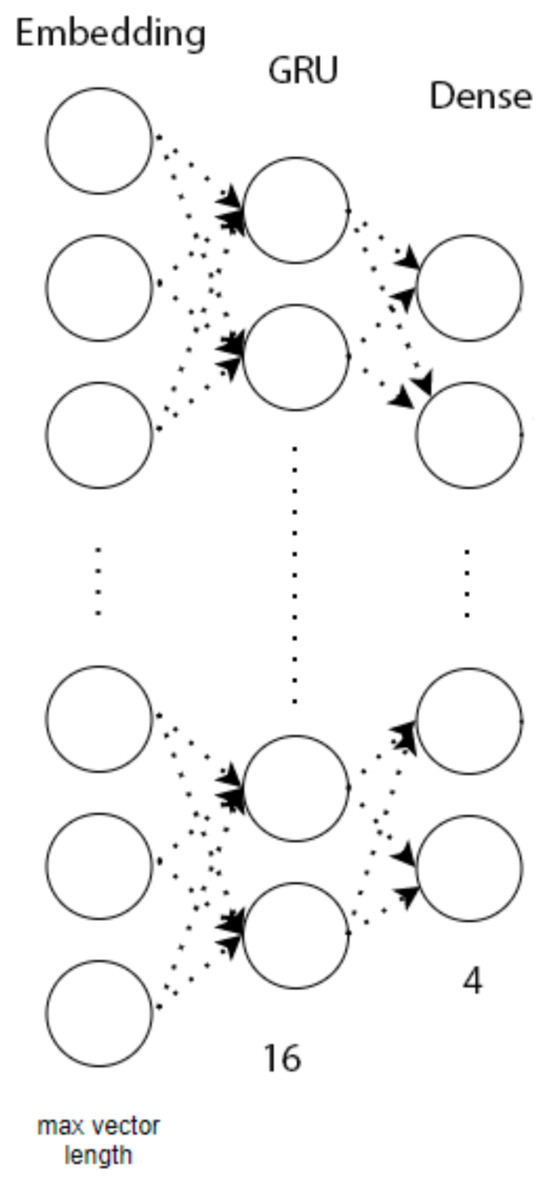

Figure 2 shows the general architecture of a recurrent neural network.

Figure 2.

General architecture of recurrent neural network.

The proposed recurrent neural network architecture consists of the following layers:

- Embedding—input layer of the neural network.

- GRU—recurrent layer of the neural network; includes 16 blocks.

- Dense—output layer consisting of four neurons. The activation function is “Softmax”.
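A comparable parameter count for the GRU stack, again with a hypothetical 64-dimensional embedding, is sketched below. It uses the formula for a Keras `GRU` layer with `reset_after=True` (the TensorFlow 2 default), which keeps separate input and recurrent biases; whether the authors used this setting is not stated in the source.

```python
def gru_params(input_dim: int, units: int, reset_after: bool = True) -> int:
    # Three gates (update, reset, candidate). With reset_after=True there are
    # two bias vectors per gate (input and recurrent), otherwise one.
    biases = 2 * units if reset_after else units
    return 3 * (units * (input_dim + units) + biases)


def lstm_params(input_dim: int, units: int) -> int:
    # Four gates, one bias vector each (for comparison with Section 3.1)
    return 4 * (units * (input_dim + units) + units)


EMBED_DIM = 64  # hypothetical, not reported in the source
print("GRU(16) parameters: ", gru_params(EMBED_DIM, 16))
print("LSTM(32) parameters:", lstm_params(EMBED_DIM, 32))
```

Under these assumptions the 16-unit GRU layer has roughly a third as many weights as the 32-unit LSTM layer.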

3.3. A Convolutional Neural Network

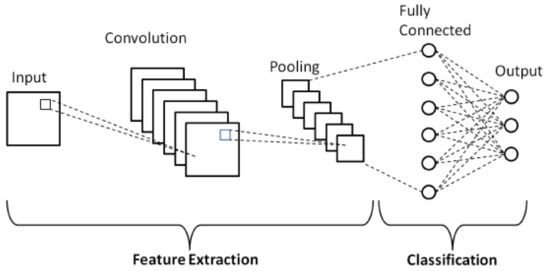

Figure 3 shows the general architecture of a convolutional neural network (CNN).

Figure 3.

General architecture of a convolutional neural network.

The proposed convolutional neural network architecture consists of the following layers:

- Embedding—input layer of the neural network.

- Conv1D: a one-dimensional convolutional layer that extracts local features from the sequence of word embeddings. This layer improves text message classification accuracy by 5–7%. The activation function is “ReLU”.

- MaxPooling1D: a layer that reduces the dimensionality of the generated feature maps; the pool size is 2.

- Dense: a hidden fully connected layer of 128 neurons. The activation function is “ReLU”.

- Dense—final output layer consisting of four neurons. The activation function is “Softmax”.
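The Conv1D, ReLU, and MaxPooling1D steps can be illustrated on a toy sequence. In this sketch each token is represented by a single number instead of an embedding vector, and one filter with hypothetical weights is used; real Conv1D layers apply many filters across all embedding dimensions. As in common deep learning frameworks, the "convolution" is computed as a sliding dot product without kernel flipping.

```python
from typing import List, Sequence


def conv1d_valid(signal: Sequence[float], kernel: Sequence[float], bias: float = 0.0) -> List[float]:
    """Slide the kernel over the signal with stride 1 and no padding ("valid")."""
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k)) + bias
        for i in range(len(signal) - k + 1)
    ]


def relu(values: Sequence[float]) -> List[float]:
    return [max(0.0, v) for v in values]


def max_pool1d(values: Sequence[float], pool_size: int = 2) -> List[float]:
    """Non-overlapping max pooling (stride = pool_size); a trailing remainder is dropped."""
    return [
        max(values[i:i + pool_size])
        for i in range(0, len(values) - pool_size + 1, pool_size)
    ]


tokens = [0.1, 0.7, 0.3, 0.9, 0.2, 0.4, 0.8]  # toy 1-D token features
kernel = [1.0, -1.0, 0.5]                     # hypothetical filter weights
feature_map = relu(conv1d_valid(tokens, kernel))
print(feature_map)
print(max_pool1d(feature_map, pool_size=2))
```

With a pool size of 2, the feature map is halved in length, which is the dimensionality reduction performed before the Dense layers.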

The optimiser was the “adaptive moment estimation” (Adam) algorithm. The loss function chosen was categorical cross-entropy.
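For reference, the four-neuron softmax output and the categorical cross-entropy loss can be written out directly. The sketch below is a plain-Python illustration with made-up logits and illustrative class labels; it is not the TensorFlow implementation, which computes the same quantities in batched form.

```python
import math
from typing import List, Sequence

CLASSES = ["physician+", "clinic+", "physician-", "clinic-"]  # illustrative labels


def softmax(logits: Sequence[float]) -> List[float]:
    # Subtract the maximum logit for numerical stability
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def categorical_cross_entropy(one_hot: Sequence[float], probs: Sequence[float],
                              eps: float = 1e-7) -> float:
    # L = -sum_k y_k * log(p_k); probabilities are clipped away from 0 and 1
    return -sum(y * math.log(min(max(p, eps), 1.0 - eps)) for y, p in zip(one_hot, probs))


logits = [2.0, 0.5, -1.0, 0.1]      # hypothetical network outputs
target = [1.0, 0.0, 0.0, 0.0]       # true class: positive review of a physician
probs = softmax(logits)
print({c: round(p, 3) for c, p in zip(CLASSES, probs)})
print("loss:", categorical_cross_entropy(target, probs))
```

Because the target is one-hot, the loss reduces to the negative log-probability assigned to the correct class; Adam then updates the weights to lower it.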

3.4. Using Linguistic Algorithms

We observed that some text reviews contained elements of different classes: a single review could consist of several sentences expressing the user’s opinion about both the clinic and the physician. Both opinions then carried the same sentiment label, because the sentiment was taken from the single rating the user gave the review (one or five stars). To account for such reviews, we added two new classes: mixed positive and mixed negative.
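The resulting six-class labelling can be expressed as a small function. This is a sketch under assumptions: the target annotation is represented by two hypothetical booleans (`mentions_clinic`, `mentions_physician`), and the sentiment comes from the review’s own star rating, with only one-star and five-star reviews labelled, as described for the infodoctor.ru data in Section 5.3.

```python
from typing import Optional

STAR_TO_SENTIMENT = {1: "negative", 5: "positive"}  # other ratings are not labelled


def review_class(mentions_clinic: bool, mentions_physician: bool, stars: int) -> Optional[str]:
    """Map a target annotation and a star rating to one of six classes.

    Returns None when the review cannot be labelled under this scheme.
    """
    sentiment = STAR_TO_SENTIMENT.get(stars)
    if sentiment is None:
        return None
    if mentions_clinic and mentions_physician:
        target = "mixed"
    elif mentions_physician:
        target = "physician"
    elif mentions_clinic:
        target = "clinic"
    else:
        return None
    return f"{target} {sentiment}"


print(review_class(True, True, 5))
print(review_class(False, True, 1))
print(review_class(True, False, 3))
```

Since one rating covers the whole review, a mixed review can only be "mixed positive" or "mixed negative"; reviews whose parts have opposite sentiments fall outside this scheme.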

To improve classification quality, we combined the most effective machine learning methods with linguistic methods that take into account the speech and grammatical features of the language of the text.

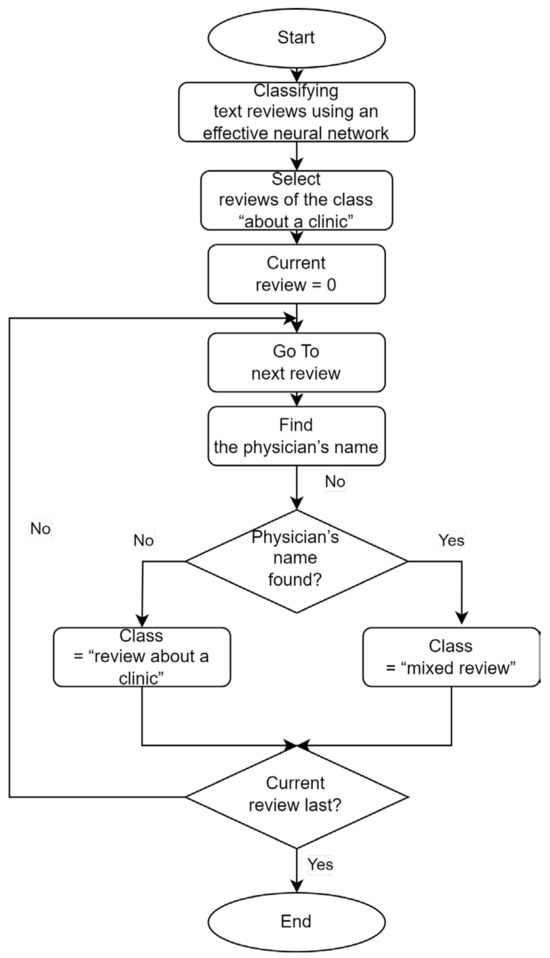

Figure 4 shows the general algorithm of the hybrid method.

Figure 4.

General flowchart of the hybrid classification algorithm.

The linguistic component of the hybrid method comprises a set of methods for pre-processing, validation, and detection of named entities representing the names of the clinic’s physicians.
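One possible reading of the second, linguistic stage (see also Section 5.3) is sketched below: reviews that the network labels as clinic reviews are split into sentences, a name detector looks for person names, and a hit moves the review into the corresponding mixed class. The detector `find_person_names` is a hypothetical stand-in based on a crude capitalisation heuristic; in the authors’ system this role is played by the Natasha library, whose API is not reproduced here.

```python
import re
from typing import List

SENTENCE_SPLIT = re.compile(r"(?<=[.!?])\s+")
WORD = re.compile(r"[А-ЯЁа-яёA-Za-z]+")


def find_person_names(sentence: str) -> List[str]:
    """Hypothetical stand-in for an NER tagger (NOT the Natasha API).

    Flags runs of two or more capitalised words (e.g. surname, first name,
    patronymic) after the first word of the sentence, which is capitalised anyway.
    """
    words = WORD.findall(sentence)[1:]
    names: List[str] = []
    run: List[str] = []
    for word in words + [""]:  # the empty sentinel flushes the final run
        if word[:1].isupper():
            run.append(word)
        else:
            if len(run) >= 2:
                names.append(" ".join(run))
            run = []
    return names


def refine_label(nn_label: str, review_text: str) -> str:
    """Second stage: re-examine reviews that the network put in a clinic class.

    nn_label is a first-stage label such as "clinic positive" or "physician negative".
    """
    target, sentiment = nn_label.split()
    if target != "clinic":
        return nn_label  # physician reviews are left unchanged
    sentences = SENTENCE_SPLIT.split(review_text.strip())
    if any(find_person_names(s) for s in sentences):
        return f"mixed {sentiment}"  # clinic review that also names a physician
    return nn_label


review = ("Клиника чистая и современная. "
          "Отдельное спасибо врачу Ивановой Марии Петровне за внимание.")
print(refine_label("clinic positive", review))
```

The sentiment found in the first stage is kept, so this rule cannot produce a review whose clinic and physician parts have opposite sentiments.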

4. Software Implementation of a Text Classification System

Preprocessing of text review data included the following steps:

- Text tokenisation.

- Removing spelling errors.

- Lemmatisation.

- Removing stop words.

The text was not converted to lower case, because the algorithm for finding physicians’ names relies partly on capitalised words.
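The order of these steps, and the decision to keep the original letter case, can be sketched as a small pipeline. Spelling correction and lemmatisation are identity placeholders (`correct_spelling` and `lemmatise` are hypothetical names) because they require external Russian-language resources, and the three-word stop list is only illustrative.

```python
import re
from typing import Callable, List

TOKEN = re.compile(r"\w+")
STOP_WORDS = {"и", "в", "на"}  # illustrative subset, not a real stop list


def tokenise(text: str) -> List[str]:
    return TOKEN.findall(text)


def correct_spelling(token: str) -> str:
    return token  # placeholder: a real spell checker would go here


def lemmatise(token: str) -> str:
    return token  # placeholder: a real morphological analyser would go here


def preprocess(text: str,
               speller: Callable[[str], str] = correct_spelling,
               lemmatiser: Callable[[str], str] = lemmatise) -> List[str]:
    tokens = [lemmatiser(speller(t)) for t in tokenise(text)]
    # Stop words are matched case-insensitively, but the surviving tokens keep
    # their original case so that capitalised names remain detectable later.
    return [t for t in tokens if t.lower() not in STOP_WORDS]


print(preprocess("Врач Иванова работает в клинике на Ленина"))
```

Lowercasing only for the stop-word comparison keeps capitalised tokens such as surnames intact for the name-detection step.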

The Python library Natasha was used as the linguistic analysis module for Russian-language texts. It performs the basic tasks of Russian natural language processing: token and sentence segmentation, morphological analysis, syntactic parsing, lemmatisation, fact extraction, normalisation, and named entity recognition.

In this research, the library was used mainly to find and extract named entities.

The following libraries were also used to implement the processes of initialisation, neural network training, and evaluation of classification performance:

- TensorFlow 2.14.0, an open-source machine learning library developed by Google for building and training neural networks.

- Keras 2.15.0, a high-level deep learning API written in Python and capable of running on top of TensorFlow (Python 3.10 was used in this work).

- NumPy 1.23.5, a Python library for working with multidimensional arrays.

- Pandas 2.1.2, a Python library that provides special data structures and operations for manipulating numerical tables and time series.

The models were trained on the Google Colab service (version 1.38.1), which provides substantial computing power for data mining.

5. Experimental Results of Text Review Classification

5.1. Dataset

To evaluate the effectiveness of the proposed approaches, we conducted a series of experiments on classifying Russian-language text reviews of medical services provided by clinics or physicians.

Feedback texts from the aggregators prodoctorov.ru and infodoctor.ru were used as input data.

Each extracted record contained the following variables:

- city—city where the review was posted;

- text—feedback text;

- author_name—name of the feedback author;

- date—feedback date;

- day—feedback day;

- month—feedback month;

- year—feedback year;

- doctor_or_clinic—a binary variable (the review is of a physician OR a clinic);

- spec—medical specialty (for feedback on physicians);

- gender—feedback author’s gender;

- id—feedback identification number.

In the experiments, feedback length was limited to 90 words.
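A minimal sketch of how such a limit is typically enforced before the Embedding layer: each review is truncated to its first 90 tokens, mapped to integer ids, and zero-padded to a fixed length. Keeping the first 90 words, padding at the end, and the reserved ids are assumptions, as are the helper names; the source states only the 90-word limit.

```python
from typing import Dict, List

MAX_WORDS = 90          # limit stated in the text
PAD_ID, OOV_ID = 0, 1   # reserved ids (an assumption)


def build_vocab(corpus: List[List[str]]) -> Dict[str, int]:
    vocab: Dict[str, int] = {}
    for tokens in corpus:
        for tok in tokens:
            if tok not in vocab:
                vocab[tok] = len(vocab) + 2  # ids 0 and 1 are reserved
    return vocab


def encode(tokens: List[str], vocab: Dict[str, int], max_len: int = MAX_WORDS) -> List[int]:
    ids = [vocab.get(tok, OOV_ID) for tok in tokens[:max_len]]  # keep the first max_len words
    return ids + [PAD_ID] * (max_len - len(ids))              # pad at the end


corpus = [["хороший", "врач"], ["долго", "ждали", "врач", "опоздал"]]
vocab = build_vocab(corpus)
print(encode(["врач", "опоздал", "снова"], vocab, max_len=6))
```

Keras’s `pad_sequences` utility pads and truncates at the start of the sequence by default, so the end-padding used here is a deliberate simplification.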

5.2. Experimental Results on Classifying Text Reviews by Sentiment

In the first experiment, to test the sentiment analysis algorithms, we built a database of 5037 reviews from prodoctorov.ru, initially labelled by sentiment and target.

The RuBERT 0.1 language model was used to vectorise the text data, and a Transformer model was used to classify the texts as positive or negative. The data were split into training and test samples in an 80:20 ratio.
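The source reports only the 80:20 ratio; whether the split was stratified by class or seeded is not stated. The sketch below assumes a class-stratified split with a fixed seed, which keeps each class’s share similar in both samples; the function name is hypothetical.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def stratified_split(labels: Sequence[str], test_share: float = 0.2, seed: int = 42
                     ) -> Tuple[List[int], List[int]]:
    """Return (train_indices, test_indices) with about test_share of each class in the test set."""
    by_class: Dict[str, List[int]] = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    rng = random.Random(seed)
    train: List[int] = []
    test: List[int] = []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_test = round(len(indices) * test_share)
        test.extend(indices[:n_test])
        train.extend(indices[n_test:])
    return sorted(train), sorted(test)


labels = ["positive"] * 80 + ["negative"] * 20   # toy labels
train_idx, test_idx = stratified_split(labels)
print(len(train_idx), len(test_idx))
```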

The results of the classifier on the test sample were as follows: Precision = 0.9857, Recall = 0.8909, F1-score = 0.9359.
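As a consistency check, the reported F1-score is the harmonic mean of the reported precision $P$ and recall $R$:

$$
F_1 = \frac{2PR}{P + R} = \frac{2 \times 0.9857 \times 0.8909}{0.9857 + 0.8909} = \frac{1.7563}{1.8766} \approx 0.9359
$$

Since precision exceeds recall, most of the classifier’s errors on the class treated as positive are false negatives rather than false positives.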

These quality metrics confirm that this binary sentiment classifier architecture can feasibly be applied to other sources of medical feedback data.

The LSTM classifier described in Section 3.1 was also tested on this sample. Here, the reviews were to be classified into four classes:

- Positive review of a physician;

- Positive review of a clinic;

- Negative review of a physician;

- Negative review of a clinic.

Table 1 contains the classification results.

Table 1.

LSTM network-based classification results for feedback posted on the website prodoctorov.ru.

5.3. A Text Feedback Classification Experiment Using Various Machine Learning Models

We used data from the online aggregator infodoctor.ru to classify Russian-language text feedback into four (later, six) classes using the machine learning models described in Section 3. This aggregator has an advantage over other large Russian websites (prodoctorov.ru, docdoc.ru): it groups reviews by rating on a scale from one to five stars and by Russian city, which greatly simplifies data collection.

We collected samples from Moscow, St. Petersburg, and 14 other Russian cities with populations of over one million, for which minimally representative samples could be obtained (at least 1000 observations per city). The samples cover the period from July 2012 to August 2023.

We retrieved a total of 58,246 reviews. These reviews either contained only positive experiences with a physician or a clinic or had mixed sentiments and targets.

For the training set, the target class was labelled by invited experts in demography. The sentiment class was determined from the user’s own rating of the review (one star: negative review; five stars: positive review). Table 2 shows selected feedback examples.

Table 2.

Feedback examples, as posted on the website infodoctor.ru.

Table 3 contains the number of analysed text reviews divided into classes.

Table 3.

The number of analysed text reviews divided into classes.

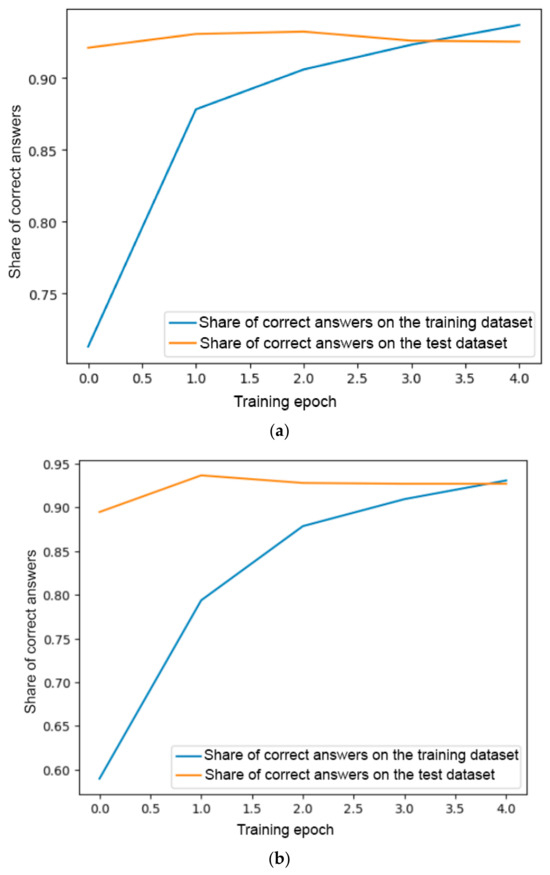

All the algorithms used an 80:20 split between the training and test samples. Figure 5 shows the classification results on the training and test datasets for the LSTM, GRU, and CNN architectures.

Figure 5.

Classification results on the training and test datasets for the LSTM network (a), GRU network (b), and CNN (c).

As Figure 5c shows, the CNN model overfitted, so this architecture does not provide the most effective classification. In further research, we plan to use dropout to prevent overfitting.
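Since dropout is proposed as the remedy, here is a minimal sketch of inverted dropout as commonly implemented in deep learning frameworks: during training each activation is zeroed with probability `rate` and the survivors are scaled by 1/(1 - rate), while at inference the layer passes values through unchanged. The rate of 0.5 and the function name are illustrative; the source does not specify where or how dropout would be added.

```python
import random
from typing import List, Optional, Sequence


def dropout(activations: Sequence[float], rate: float, training: bool,
            rng: Optional[random.Random] = None) -> List[float]:
    """Inverted dropout: zero each value with probability `rate` during training
    and rescale the rest so the expected activation is unchanged."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        return list(activations)  # identity at inference time
    rng = rng or random.Random()
    keep_scale = 1.0 / (1.0 - rate)
    return [0.0 if rng.random() < rate else a * keep_scale for a in activations]


acts = [0.2, 0.8, 0.5, 0.1]
print(dropout(acts, rate=0.5, training=True, rng=random.Random(0)))
print(dropout(acts, rate=0.5, training=False))
```

In Keras this corresponds to inserting a `Dropout` layer (for example, after the 128-neuron Dense layer of the CNN), which applies the same rule only in training mode.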

Table 4 compares the performance indicators of text feedback classification using the above approaches.

Table 4.

Performance indicators of text feedback classification.

To compare the proposed models with other methods, we performed experiments using the support vector machine (SVM) and RuBERT algorithms on the same dataset. As shown in Table 4, these algorithms performed slightly worse than our models.

As mentioned earlier, one of the difficulties with the text reviews analysed was that they could contain elements of different classes within the same review. For instance, a short text message could include both a review of a physician and a review of a clinic.

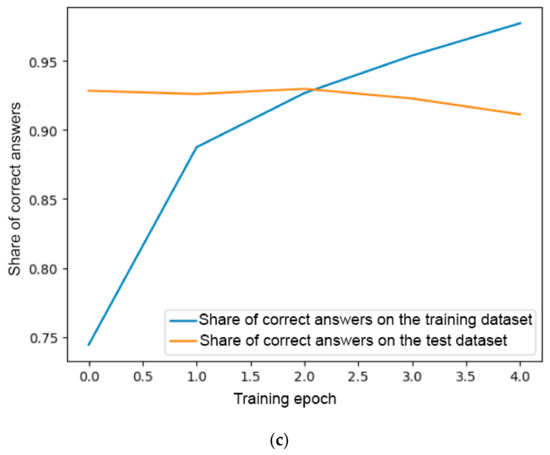

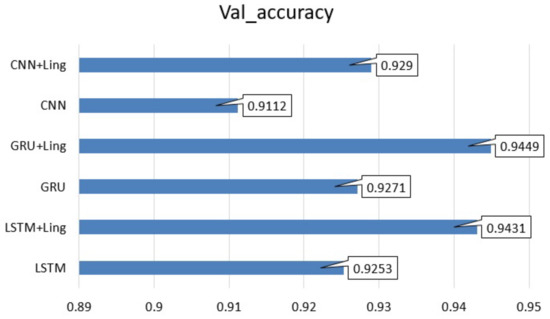

We therefore introduced two additional classes, mixed positive and mixed negative, and applied the linguistic method of named entity recognition (described in Section 3.4) to improve the classification quality for each feedback class.

This approach improved the classification performance of all three artificial neural network architectures. Figure 6 illustrates the classification results obtained using the hybrid algorithms.

Figure 6.

Classification results obtained using hybrid algorithms.

We applied the linguistic method to the reviews that the neural network classified as “clinic review” at the first stage, regardless of their sentiment. Named entity recognition improved classification performance when it was applied after the text messages had been segmented. However, some reviews were still misclassified even after the linguistic method was applied. These were long messages whose parts could semantically belong to different classes. The misclassifications had the following causes:

- Some reviews concerned both a clinic and a physician but did not mention the physician’s name, which prevented the named entity recognition tool from assigning them to a mixed class. This could be solved by parsing the sentences further to identify a semantically significant object that is not referred to by full name.

- Some reviews expressed contrasting opinions about different aspects of the clinic’s operation. Most often, opinions on organisational support differed from opinions on the level of medical services provided.

For example, the review “This doctor’s always off sick, she’s always late for appointments, she’s always chewing. Cynical and unresponsive” expresses the patient’s dissatisfaction with the organisation of medical appointments, which makes it a negative review of organisational support. The review “I had meniscus surgery. He fooled me. He didn’t remove anything; he only damaged my blood vessel. I ended up with a year of treatment at the Rheumatology Institute. ####### tried to hide his unprofessional attitude to the client. If you care about your health, do not go to ########” conveys the patient’s indignation at the quality of treatment, which places it in the medical service class. A finer classification of clinic reviews would improve classification quality at the meta-level.

The approach is not applicable to reviews with mixed targets (clinic and physician) in which the attitudes towards the clinic and the physician have opposite sentiments. In addition, it can analyse only Russian-language reviews, since the model was trained on Russian text data.

6. Conclusions

In this paper, we propose a hybrid method for classifying Russian-language text reviews of medical facilities extracted from social media.

The method consists of two steps: first, we use one of the artificial neural network architectures (LSTM, CNN, or GRU) to classify the reviews into four main classes based on their sentiment and target (positive or negative, physician or clinic); second, we apply a linguistic approach to extract named entities from the reviews.

We evaluated the performance of our method on a dataset of more than 60,000 Russian-language text reviews of medical services provided by clinics or physicians from the websites prodoctorov.ru and infodoctor.ru. The main results of our experiments are as follows:

- The neural network classifiers achieve high accuracy in classifying the Russian-language reviews from social media by sentiment (positive or negative) and target (clinic or physician) using various architectures of the LSTM, CNN, or GRU networks, with the GRU-based architecture being the best (val_accuracy = 0.9271).

- The named entity recognition method improves the classification performance for each of the neural network classifiers when applied to the segmented text reviews.

- To further improve the classification accuracy, semantic segmentation of the reviews by target and sentiment is required, as well as a separate analysis of the resulting fragments.

As future work, we intend to develop a text classification algorithm that can distinguish between reviews of medical services and reviews of organisational support for clinics. This would enhance the classification quality at the meta-level, as it is more important for managers to separate reviews of the medical services and diagnosis from those of organisational support factors (such as waiting time, cleanliness, politeness, location, speed of getting results, etc.).

Moreover, in a broader context, refined classification of social media users’ statements on review platforms or social networks would enable creating a system of standardised management responses to changes in demographic and social behaviours and attitudes towards socially significant services and programmes [77].

Author Contributions

Conceptualisation, I.K.; Formal analysis, V.M., Z.K. and G.K.; Software, M.K. and A.K. All authors have read and agreed to the published version of the manuscript.

Funding

The study was supported by the Russian Science Foundation, project No. 23-71-01101 “Development of models and methods for improving the performance of data warehouses through predictive analysis of temporal diagnostic information” and within the framework of the research project “Population reproduction in socio-economic development” No. 122041800047-9 (2017–2025) with the support of the Faculty of Economics of “Lomonosov Moscow State University”.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Rajabi, M.; Hasanzadeh, R.P. A Modified adaptive hysteresis smoothing approach for image denoising based on spatial domain redundancy. Sens. Imaging 2021, 22, 1–25. [Google Scholar] [CrossRef]

- Rajabi, M.; Golshan, H.; Hasanzadeh, R.P. Non-local adaptive hysteresis despeckling approach for medical ultrasound images. Biomed. Signal Process. Control. 2023, 85, 105042. [Google Scholar] [CrossRef]

- Borji, A.; Seifi, A.; Hejazi, T.H. An efficient method for detection of Alzheimer’s disease using high-dimensional PET scan images. Intell. Decis. Technol. 2023, 17, 1–21. [Google Scholar] [CrossRef]

- Karimzadeh, M.; Vakanski, A.; Xian, M.; Zhang, B. Post-Hoc Explainability of BI-RADS Descriptors in a Multi-Task Framework for Breast Cancer Detection and Segmentation. In Proceedings of the 2023 IEEE 33rd International Workshop on Machine Learning for Signal Processing (MLSP), Rome, Italy, 17–20 September 2023; IEEE: New York, NY, USA; pp. 1–6. [Google Scholar]

- Rezaei, T.; Khouzani, P.J.; Khouzani, S.J.; Fard, A.M.; Rashidi, S.; Ghazalgoo, A.; Khodashenas, M. Integrating Artificial Intelligence into Telemedicine: Revolutionizing Healthcare Delivery. Kindle 2023, 3, 1–161.

- Litvin, S.W.; Goldsmith, R.E.; Pan, B. Electronic word-of-mouth in hospitality and tourism management. Tour. Manag. 2008, 29, 458–468.

- Ismagilova, E.; Dwivedi, Y.K.; Slade, E.; Williams, M.D. Electronic word of mouth (eWOM) in the marketing context: A state of the art analysis and future directions. In Electronic Word of Mouth (eWOM) in the Marketing Context; Springer: Cham, Switzerland, 2017.

- Cantallops, A.S.; Salvi, F. New consumer behavior: A review of research on eWOM and hotels. Int. J. Hosp. Manag. 2014, 36, 41–51.

- Mulgund, P.; Sharman, R.; Anand, P.; Shekhar, S.; Karadi, P. Data quality issues with physician-rating websites: Systematic review. J. Med. Internet Res. 2020, 22, e15916.

- Ghimire, B.; Shanaev, S.; Lin, Z. Effects of official versus online review ratings. Ann. Tour. Res. 2022, 92, 103247.

- Xu, Y.; Xu, X. Rating deviation and manipulated reviews on the Internet—A multi-method study. Inf. Manag. 2023, 2023, 103829.

- Hu, N.; Bose, I.; Koh, N.S.; Liu, L. Manipulation of online reviews: An analysis of ratings, readability, and sentiments. Decis. Support Syst. 2012, 52, 674–684.

- Luca, M.; Zervas, G. Fake it till you make it: Reputation, competition, and Yelp review fraud. Manag. Sci. 2016, 62, 3412–3427.

- Namatherdhala, B.; Mazher, N.; Sriram, G.K. Artificial Intelligence in Product Management: Systematic review. Int. Res. J. Mod. Eng. Technol. Sci. 2022, 4, 2914–2917.

- Jabeur, S.B.; Ballouk, H.; Arfi, W.B.; Sahut, J.M. Artificial intelligence applications in fake review detection: Bibliometric analysis and future avenues for research. J. Bus. Res. 2023, 158, 113631.

- Emmert, M.; McLennan, S. One decade of online patient feedback: Longitudinal analysis of data from a German physician rating website. J. Med. Internet Res. 2021, 23, e24229.

- Kleefstra, S.M.; Zandbelt, L.C.; Borghans, I.; de Haes, H.J.; Kool, R.B. Investigating the potential contribution of patient rating sites to hospital supervision: Exploratory results from an interview study in The Netherlands. J. Med. Internet Res. 2016, 18, e201.

- Bardach, N.S.; Asteria-Peñaloza, R.; Boscardin, W.J.; Dudley, R.A. The relationship between commercial website ratings and traditional hospital performance measures in the USA. BMJ Qual. Saf. 2013, 22, 194–202.

- Van de Belt, T.H.; Engelen, L.J.; Berben, S.A.; Teerenstra, S.; Samsom, M.; Schoonhoven, L. Internet and social media for health-related information and communication in health care: Preferences of the Dutch general population. J. Med. Internet Res. 2013, 15, e220.

- Hao, H.; Zhang, K.; Wang, W.; Gao, G. A tale of two countries: International comparison of online doctor reviews between China and the United States. Int. J. Med. Inform. 2017, 99, 37–44.

- Bidmon, S.; Elshiewy, O.; Terlutter, R.; Boztug, Y. What patients value in physicians: Analyzing drivers of patient satisfaction using physician-rating website data. J. Med. Internet Res. 2020, 22, e13830.

- Ellimoottil, C.; Leichtle, S.W.; Wright, C.J.; Fakhro, A.; Arrington, A.K.; Chirichella, T.J.; Ward, W.H. Online physician reviews: The good, the bad and the ugly. Bull. Am. Coll. Surg. 2013, 98, 34–39.

- Bidmon, S.; Terlutter, R.; Röttl, J. What explains usage of mobile physician-rating apps? Results from a web-based questionnaire. J. Med. Internet Res. 2014, 16, e3122.

- Lieber, R. The Web is Awash in Reviews, but Not for Doctors. Here’s Why. New York Times, 9 March 2012.

- Daskivich, T.J.; Houman, J.; Fuller, G.; Black, J.T.; Kim, H.L.; Spiegel, B. Online physician ratings fail to predict actual performance on measures of quality, value, and peer review. J. Am. Med. Inform. Assoc. 2018, 25, 401–407.

- Gray, B.M.; Vandergrift, J.L.; Gao, G.G.; McCullough, J.S.; Lipner, R.S. Website ratings of physicians and their quality of care. JAMA Intern. Med. 2015, 175, 291–293.

- Skrzypecki, J.; Przybek, J. Physician review portals do not favor highly cited US ophthalmologists. In Seminars in Ophthalmology; Taylor & Francis: Abingdon, UK, 2018; Volume 33, pp. 547–551.

- Widmer, R.J.; Maurer, M.J.; Nayar, V.R.; Aase, L.A.; Wald, J.T.; Kotsenas, A.L.; Timimi, F.K.; Harper, C.M.; Pruthi, S. Online physician reviews do not reflect patient satisfaction survey responses. In Mayo Clinic Proceedings; Elsevier: Amsterdam, The Netherlands, 2018; Volume 93, pp. 453–457.

- Saifee, D.H.; Bardhan, I.; Zheng, Z. Do Online Reviews of Physicians Reflect Healthcare Outcomes? In Proceedings of the Smart Health: International Conference, ICSH 2017, Hong Kong, China, 26–27 June 2017; Springer International Publishing: New York, NY, USA, 2017; pp. 161–168.

- Trehan, S.K.; Nguyen, J.T.; Marx, R.; Cross, M.B.; Pan, T.J.; Daluiski, A.; Lyman, S. Online patient ratings are not correlated with total knee replacement surgeon–specific outcomes. HSS J. 2018, 14, 177–180.

- Doyle, C.; Lennox, L.; Bell, D. A systematic review of evidence on the links between patient experience and clinical safety and effectiveness. BMJ Open 2013, 3, e001570.

- Okike, K.; Uhr, N.R.; Shin, S.Y.; Xie, K.C.; Kim, C.Y.; Funahashi, T.T.; Kanter, M.H. A comparison of online physician ratings and internal patient-submitted ratings from a large healthcare system. J. Gen. Intern. Med. 2019, 34, 2575–2579.

- Rotman, L.E.; Alford, E.N.; Shank, C.D.; Dalgo, C.; Stetler, W.R. Is there an association between physician review websites and Press Ganey survey results in a neurosurgical outpatient clinic? World Neurosurg. 2019, 132, 891–899.

- Lantzy, S.; Anderson, D. Can consumers use online reviews to avoid unsuitable doctors? Evidence from RateMDs.com and the Federation of State Medical Boards. Decis. Sci. 2020, 51, 962–984.

- Gilbert, K.; Hawkins, C.M.; Hughes, D.R.; Patel, K.; Gogia, N.; Sekhar, A.; Duszak, R., Jr. Physician rating websites: Do radiologists have an online presence? J. Am. Coll. Radiol. 2015, 12, 867–871.

- Okike, K.; Peter-Bibb, T.K.; Xie, K.C.; Okike, O.N. Association between physician online rating and quality of care. J. Med. Internet Res. 2016, 18, e324.

- Imbergamo, C.; Brzezinski, A.; Patankar, A.; Weintraub, M.; Mazzaferro, N.; Kayiaros, S. Negative online ratings of joint replacement surgeons: An analysis of 6402 reviews. Arthroplast. Today 2021, 9, 106–111.

- Mostaghimi, A.; Crotty, B.H.; Landon, B.E. The availability and nature of physician information on the internet. J. Gen. Intern. Med. 2010, 25, 1152–1156.

- Lagu, T.; Hannon, N.S.; Rothberg, M.B.; Lindenauer, P.K. Patients’ evaluations of health care providers in the era of social networking: An analysis of physician-rating websites. J. Gen. Intern. Med. 2010, 25, 942–946.

- López, A.; Detz, A.; Ratanawongsa, N.; Sarkar, U. What patients say about their doctors online: A qualitative content analysis. J. Gen. Intern. Med. 2012, 27, 685–692.

- Shah, A.M.; Yan, X.; Qayyum, A.; Naqvi, R.A.; Shah, S.J. Mining topic and sentiment dynamics in physician rating websites during the early wave of the COVID-19 pandemic: Machine learning approach. Int. J. Med. Inform. 2021, 149, 104434.

- Shah, A.M.; Yan, X.; Tariq, S.; Ali, M. What patients like or dislike in physicians: Analyzing drivers of patient satisfaction and dissatisfaction using a digital topic modeling approach. Inf. Process. Manag. 2021, 58, 102516.

- Lagu, T.; Metayer, K.; Moran, M.; Ortiz, L.; Priya, A.; Goff, S.L.; Lindenauer, P.K. Website characteristics and physician reviews on commercial physician-rating websites. JAMA 2017, 317, 766–768.

- Chen, Y.; Xie, J. Online consumer review: Word-of-mouth as a new element of marketing communication mix. Manag. Sci. 2008, 54, 477–491.

- Pavlou, P.A.; Dimoka, A. The nature and role of feedback text comments in online marketplaces: Implications for trust building, price premiums, and seller differentiation. Inf. Syst. Res. 2006, 17, 392–414.

- Terlutter, R.; Bidmon, S.; Röttl, J. Who uses physician-rating websites? Differences in sociodemographic variables, psychographic variables, and health status of users and nonusers of physician-rating websites. J. Med. Internet Res. 2014, 16, e97.

- Emmert, M.; Meier, F. An analysis of online evaluations on a physician rating website: Evidence from a German public reporting instrument. J. Med. Internet Res. 2013, 15, e2655.

- Nwachukwu, B.U.; Adjei, J.; Trehan, S.K.; Chang, B.; Amoo-Achampong, K.; Nguyen, J.T.; Taylor, S.A.; McCormick, F.; Ranawat, A.S. Rating a sports medicine surgeon’s “quality” in the modern era: An analysis of popular physician online rating websites. HSS J. 2016, 12, 272–277.

- Obele, C.C.; Duszak, R., Jr.; Hawkins, C.M.; Rosenkrantz, A.B. What patients think about their interventional radiologists: Assessment using a leading physician ratings website. J. Am. Coll. Radiol. 2017, 14, 609–614.

- Emmert, M.; Meier, F.; Pisch, F.; Sander, U. Physician choice making and characteristics associated with using physician-rating websites: Cross-sectional study. J. Med. Internet Res. 2013, 15, e2702.

- Gao, G.G.; McCullough, J.S.; Agarwal, R.; Jha, A.K. A changing landscape of physician quality reporting: Analysis of patients’ online ratings of their physicians over a 5-year period. J. Med. Internet Res. 2012, 14, e38.

- Rahim, A.I.A.; Ibrahim, M.I.; Musa, K.I.; Chua, S.L.; Yaacob, N.M. Patient satisfaction and hospital quality of care evaluation in Malaysia using SERVQUAL and Facebook. Healthcare 2021, 9, 1369.

- Galizzi, M.M.; Miraldo, M.; Stavropoulou, C.; Desai, M.; Jayatunga, W.; Joshi, M.; Parikh, S. Who is more likely to use doctor-rating websites, and why? A cross-sectional study in London. BMJ Open 2012, 2, e001493.

- Hanauer, D.A.; Zheng, K.; Singer, D.C.; Gebremariam, A.; Davis, M.M. Public awareness, perception, and use of online physician rating sites. JAMA 2014, 311, 734–735.

- McLennan, S.; Strech, D.; Meyer, A.; Kahrass, H. Public awareness and use of German physician ratings websites: Cross-sectional survey of four North German cities. J. Med. Internet Res. 2017, 19, e387.

- Lin, Y.; Hong, Y.A.; Henson, B.S.; Stevenson, R.D.; Hong, S.; Lyu, T.; Liang, C. Assessing patient experience and healthcare quality of dental care using patient online reviews in the United States: Mixed methods study. J. Med. Internet Res. 2020, 22, e18652.

- Emmert, M.; Meier, F.; Heider, A.K.; Dürr, C.; Sander, U. What do patients say about their physicians? An analysis of 3000 narrative comments posted on a German physician rating website. Health Policy 2014, 118, 66–73.

- Greaves, F.; Ramirez-Cano, D.; Millett, C.; Darzi, A.; Donaldson, L. Harnessing the cloud of patient experience: Using social media to detect poor quality healthcare. BMJ Qual. Saf. 2013, 22, 251–255.

- Hao, H.; Zhang, K. The voice of Chinese health consumers: A text mining approach to web-based physician reviews. J. Med. Internet Res. 2016, 18, e108.

- Shah, A.M.; Yan, X.; Shah, S.A.A.; Mamirkulova, G. Mining patient opinion to evaluate the service quality in healthcare: A deep-learning approach. J. Ambient. Intell. Humaniz. Comput. 2020, 11, 2925–2942.

- Wallace, B.C.; Paul, M.J.; Sarkar, U.; Trikalinos, T.A.; Dredze, M. A large-scale quantitative analysis of latent factors and sentiment in online doctor reviews. J. Am. Med. Inform. Assoc. 2014, 21, 1098–1103.

- Ranard, B.L.; Werner, R.M.; Antanavicius, T.; Schwartz, H.A.; Smith, R.J.; Meisel, Z.F.; Asch, D.A.; Ungar, L.H.; Merchant, R.M. Yelp reviews of hospital care can supplement and inform traditional surveys of the patient experience of care. Health Aff. 2016, 35, 697–705.

- Hao, H. The development of online doctor reviews in China: An analysis of the largest online doctor review website in China. J. Med. Internet Res. 2015, 17, e134.

- Jiang, S.; Street, R.L. Pathway linking internet health information seeking to better health: A moderated mediation study. Health Commun. 2017, 32, 1024–1031.

- Hotho, A.; Nürnberger, A.; Paaß, G. A Brief Survey of Text Mining. LDV Forum—GLDV. J. Comput. Linguist. Lang. Technol. 2005, 20, 19–62.

- Păvăloaia, V.; Teodor, E.; Fotache, D.; Danileț, M. Opinion Mining on Social Media Data: Sentiment Analysis of User Preferences. Sustainability 2019, 11, 4459.

- Bespalov, D.; Bai, B.; Qi, Y.; Shokoufandeh, A. Sentiment classification based on supervised latent n-gram analysis. In Proceedings of the 20th ACM International Conference on Information and Knowledge Management (CIKM’11), Glasgow, Scotland, 24–28 October 2011; Association for Computing Machinery: New York, NY, USA, 2011; pp. 375–382.

- Irfan, R.; King, C.K.; Grages, D.; Ewen, S.; Khan, S.U.; Madani, S.A.; Kolodziej, J.; Wang, L.; Chen, D.; Rayes, A.; et al. A Survey on Text Mining in Social Networks. Camb. J. Knowl. Eng. Rev. 2015, 30, 157–170.

- Patel, P.; Mistry, K. A Review: Text Classification on Social Media Data. IOSR J. Comput. Eng. 2015, 17, 80–84.

- Lee, K.; Palsetia, D.; Narayanan, R.; Patwary, M.d.M.A.; Agrawal, A.; Choudhary, A.S. Twitter Trending Topic Classification. In Proceedings of the 2011 IEEE 11th International Conference on Data Mining Workshops, ICDW’11, Vancouver, BC, Canada, 11 December 2011; pp. 251–258.

- Kateb, F.; Kalita, J. Classifying Short Text in Social Media: Twitter as Case Study. Int. J. Comput. Appl. 2015, 111, 1–12.

- Chirawichitichai, N.; Sanguansat, P.; Meesad, P. A Comparative Study on Feature Weight in Thai Document Categorization Framework. In Proceedings of the 10th International Conference on Innovative Internet Community Services (I2CS), IICS, Bangkok, Thailand, 3–5 June 2010; pp. 257–266.

- Theeramunkong, T.; Lertnattee, V. Multi-Dimension Text Classification, SIIT, Thammasat University. 2005. Available online: http://www.aclweb.org/anthology/C02–1155 (accessed on 25 October 2023).

- Viriyayudhakorn, K.; Kunifuji, S.; Ogawa, M. A Comparison of Four Association Engines in Divergent Thinking Support Systems on Wikipedia, Knowledge, Information, and Creativity Support Systems, KICSS2010; Springer: Berlin/Heidelberg, Germany, 2011; pp. 226–237.

- Sornlertlamvanich, V.; Pacharawongsakda, E.; Charoenporn, T. Understanding Social Movement by Tracking the Keyword in Social Media, in MAPLEX2015, Yamagata, Japan, February 2015. Available online: https://www.researchgate.net/publication/289035345_Understanding_Social_Movement_by_Tracking_the_Keyword_in_Social_Media (accessed on 22 December 2023).

- Konstantinov, A.; Moshkin, V.; Yarushkina, N. Approach to the Use of Language Models BERT and Word2vec in Sentiment Analysis of Social Network Texts. In Recent Research in Control Engineering and Decision Making; Dolinina, O., Bessmertny, I., Brovko, A., Kreinovich, V., Pechenkin, V., Lvov, A., Zhmud, V., Eds.; ICIT 2020. Studies in Systems, Decision and Control; Springer: Cham, Switzerland, 2020; Volume 337, pp. 462–473.

- Kalabikhina, I.; Zubova, E.; Loukachevitch, N.; Kolotusha, A.; Kazbekova, Z.; Banin, E.; Klimenko, G. Identifying Reproductive Behavior Arguments in Social Media Content Users’ Opinions through Natural Language Processing Techniques. Popul. Econ. 2023, 7, 40–59.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).