3.1. Graph-Cut-Based Feature Selection

While wrapper feature selection methods, such as sequential search, nature-inspired algorithms, or binary teaching–learning-based approaches, bypass the need for explicit feature evaluation and yield results that are close to optimal, their effectiveness is tied to the specific classification model being used. Additionally, these methods are computationally intensive, which can limit their applicability. Similarly, embedded methods incorporate an iterative cycle of evaluating and selecting features as part of the model training process, which can also demand significant computational resources, and their performance is likewise influenced by the choice of the classification model. As an alternative to the discussed wrapper and embedded feature selection techniques, as well as to those filter methods that are unable to deal with correlated features, in this section we present the graph-cut-based feature selection strategy outlined in our work [9], which enables the selection of a subset of high-quality dissimilar features while providing superior results. Depending on the defined feature estimation measure, it can be used for both classification and regression purposes. Graph vertices represent features with associated weights that define their quality (as proposed in [9]), while graph edge weights define similarities between them. The method relies on two input threshold parameters used for graph definition. The former defines the necessary level of feature quality (i.e., the maximal allowed class overlap) for a feature to be included in the output feature space, and the latter determines the minimal level of dissimilarity between the selected features.

Let

denote an input feature space

. A feature

, referred to by an index

, is given as a mapping function

. An index

refers to a sample, i.e., a feature vector defined as

. An undirected graph used for feature selection is defined as

, where a set of vertices

F is defined as

, while an unordered set of edges

is given by

for all

, such that

. A vertex-weighting function is given by

, as defined in [9], and the edge-weighting function is given by the absolute Pearson correlation coefficient P, formally described by Equation (1).

where

denotes mean, while standard deviation

of feature values is defined as

. Both functions,

and

P, are designed such that lower values (closer to 0) are more favorable for selection than higher values (closer to 1).
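The edge weights can be illustrated with a short sketch. It assumes the standard definition of the Pearson correlation coefficient with the population standard deviation. The names `abs_pearson`, `abs_pearson_matrix`, and `features` are hypothetical, and treating constant features as uncorrelated is an assumption of this sketch rather than part of the method.

```python
from math import sqrt

def abs_pearson(x, y):
    """Absolute Pearson correlation of two equally long value sequences."""
    m = len(x)
    mean_x, mean_y = sum(x) / m, sum(y) / m
    # Covariance and population standard deviations over the m samples.
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y)) / m
    std_x = sqrt(sum((a - mean_x) ** 2 for a in x) / m)
    std_y = sqrt(sum((b - mean_y) ** 2 for b in y) / m)
    if std_x == 0.0 or std_y == 0.0:
        return 0.0  # assumption: a constant feature is treated as uncorrelated
    return abs(cov / (std_x * std_y))

def abs_pearson_matrix(features):
    """Symmetric matrix of edge weights; features[i] holds the values of feature i."""
    n = len(features)
    return [[abs_pearson(features[i], features[j]) for j in range(n)]
            for i in range(n)]

# Three toy features observed on four samples.
features = [
    [1.0, 2.0, 3.0, 4.0],
    [2.0, 4.0, 6.0, 8.0],   # a scaled copy of the first feature
    [5.0, 3.0, 4.0, 1.0],
]
P = abs_pearson_matrix(features)
```

In this toy input, the first two features are perfectly correlated, so their edge weight is close to 1 and they would be the least favorable pair to select together.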

According to the theoretical framework introduced in [9], we use the following definitions of elementary properties:

Vertices and are adjacent in a graph G if there exists an edge .

A path from to is an ordered sequence of vertices , such that and are adjacent for all .

A graph G is connected if there exists a path .

A graph is subgraph of G if and .

A neighbourhood of a vertex in graph G is the subset of vertices F, defined by all the adjacent vertices of , namely, , where .

We say that a set of vertices is a vertex-cut if its removal separates graph G into at least two non-empty and pairwise disconnected connected components. Obviously, the neighbourhood of a vertex is a vertex-cut, as it separates a singleton (i.e., an individual vertex) from the rest of the graph, thus creating a subgraph whose vertex- and edge-sets are given formally by Equation (2).

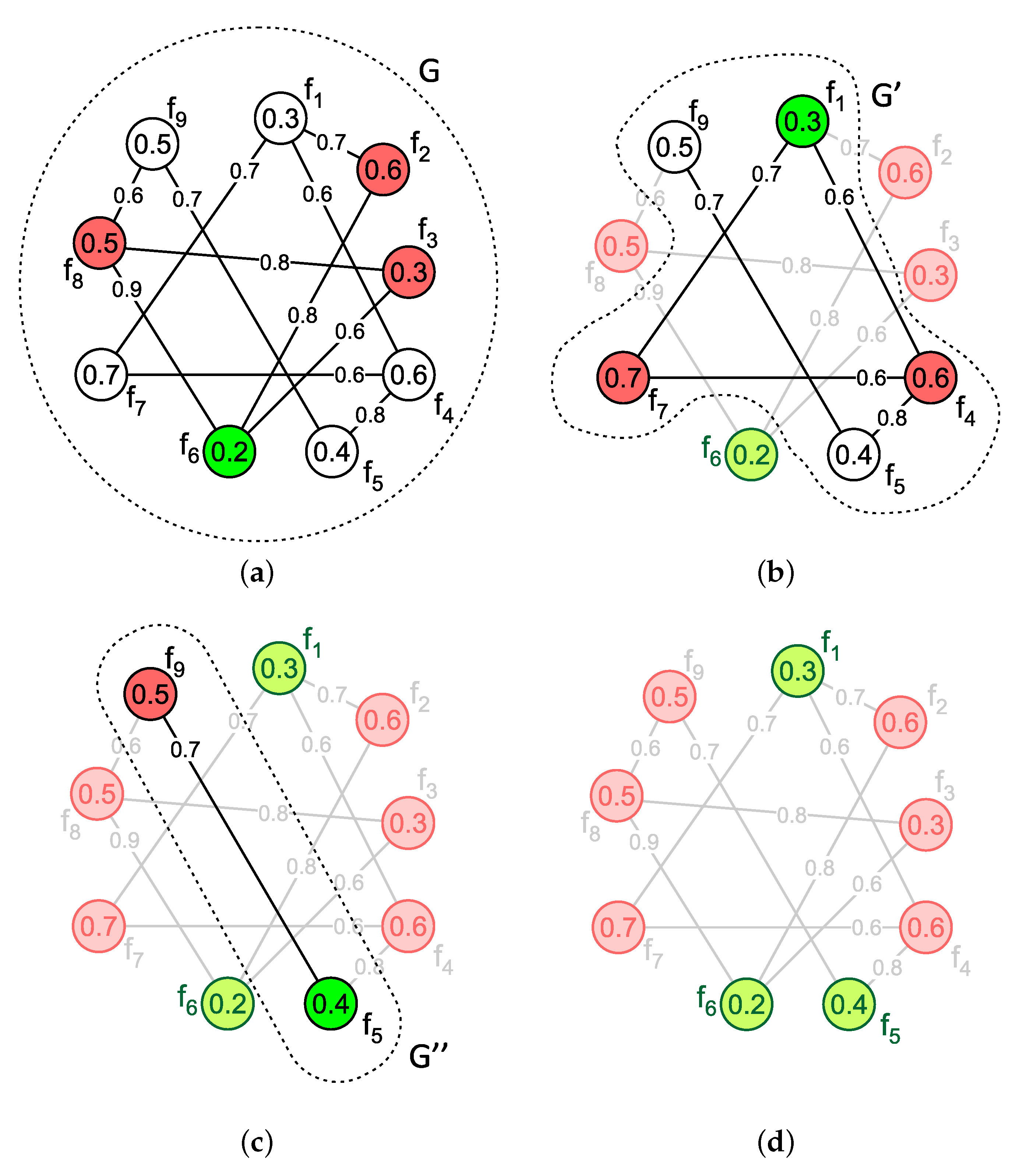

An example of vertex-cut feature selection is presented in Figure 1. Figure 1a shows an undirected graph G constructed over a set of features, with the two thresholds applied to the associated vertex- and edge-weighting functions, respectively. To preserve the overall informativeness of the selected features, the feature of the highest quality is selected first by a vertex-cut of its neighborhood. The selected feature is colored green. All highly correlated adjacent features are marked red and removed from G. This results in a subgraph, as defined by Equation (2), and a disconnected singleton (see Figure 1b). The same process is then repeated on this subgraph, separating its feature of the highest quality from the remaining graph by removal of that feature's neighborhood. The final cut is performed on the remaining graph, separating the selected feature (in green) from the remaining (empty) graph by removal of its neighborhood (in red), as shown in Figure 1c. Thus, the output subset of high-quality dissimilar features is obtained, as shown in Figure 1d.
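The repeated vertex-cut can be sketched as a greedy loop. This is a minimal illustration under stated assumptions, not the implementation from [9]: lower quality weights are better, a feature becomes a vertex only if its weight does not exceed the quality threshold, and two features are adjacent when their absolute correlation reaches the correlation threshold. The names `graph_cut_select`, `t_quality`, and `t_corr` are hypothetical.

```python
def graph_cut_select(quality, corr, t_quality, t_corr):
    """Greedy selection by repeated vertex-cuts (illustrative sketch).

    quality[i]  -- vertex weight of feature i (lower is better)
    corr[i][j]  -- absolute correlation of features i and j (lower is better)
    """
    # Keep only features whose quality passes the threshold (assumed rule).
    remaining = {i for i, q in enumerate(quality) if q <= t_quality}
    selected = []
    while remaining:
        # Feature of the highest quality, i.e. the lowest weight; ties by index.
        best = min(remaining, key=lambda i: (quality[i], i))
        # Its neighbourhood: remaining features that are too similar to it.
        neighbourhood = {j for j in remaining
                         if j != best and corr[best][j] >= t_corr}
        selected.append(best)
        # Cutting the neighbourhood isolates `best`; both leave the graph.
        remaining -= neighbourhood | {best}
    return selected

quality = [0.10, 0.20, 0.30, 0.90, 0.15]
corr = [
    [1.00, 0.90, 0.20, 0.10, 0.30],
    [0.90, 1.00, 0.80, 0.20, 0.10],
    [0.20, 0.80, 1.00, 0.10, 0.85],
    [0.10, 0.20, 0.10, 1.00, 0.20],
    [0.30, 0.10, 0.85, 0.20, 1.00],
]
result = graph_cut_select(quality, corr, t_quality=0.5, t_corr=0.7)
```

In this toy input, feature 3 is rejected by the quality threshold, feature 0 is selected first and removes feature 1, and feature 4 is selected next and removes feature 2.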

3.2. New Suboptimal Dynamic Programming Algorithm

The new method combines the advantages of iterative and approximate dynamic programming. It does not seek a global optimum but instead adopts a suboptimal (approximate) solution, which it iteratively improves. Like the graph-cut-based filtering from Section 3.1, it is based on a graph. We thus use the same notation and extend it throughout this subsection with additional algorithm parameters and graph vertex attributes. The graph is undirected. The input is the set of n features, which is processed in index order, so we will sometimes also speak of a sequence of features. Two guard vertices are added, one at each end of this sequence. They do not change during the execution of the algorithm, but they simplify the implementation. There is no edge between the two guards, while the guard vertices and the edges between a guard and any other vertex are given weight 0, as stated in Equation (3).

Each graph vertex contains, in addition to its weight, a set that stores the “optimal” subset (feature selection result) of the vertices already processed, and the score of this subset, which is obtained by the evaluation criterion. Their initialization is described by Equation (4) and is important for the convergence proof in Section 3.3. The evaluation criterion described in Equation (5) seeks a minimum for all vertices except the guards.

Let r be the value of j where the minimum was identified. The corresponding subset is calculated by Equation (6).

The final score and feature selection result are given by Equation (7).

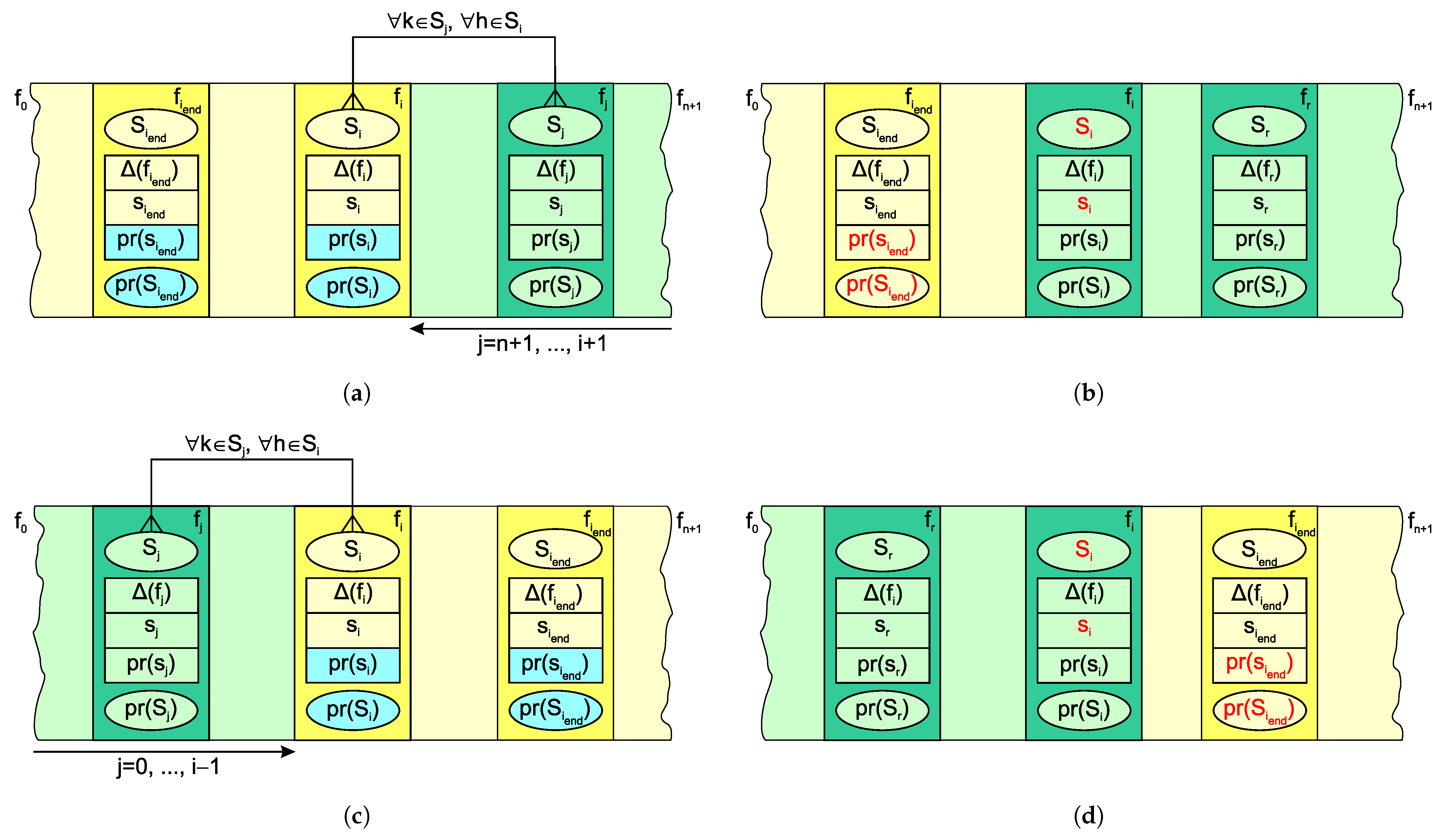

Figure 2a shows the situation immediately before Equations (5) and (6) are applied to the considered vertex, and Figure 2b shows the situation immediately after the equations are applied. Green indicates the graph vertices that have already been processed, and white indicates those that are being or will be processed. The red text indicates vertex attributes modified during the observed processing.
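A one-pass variant of this scheme can be sketched as follows. The recurrence is an assumed additive form and may differ from Equations (5)–(7): the candidate score of a vertex is the score of a predecessor, plus the weight of the vertex, plus the weights of the edges between the vertex and the predecessor's stored subset. The function name `one_pass_dp`, the use of a single left guard, and the example weights (which may be negative) are all hypothetical.

```python
def one_pass_dp(vertex_w, edge_w):
    """One forward pass over the feature sequence (illustrative sketch).

    vertex_w[i]   -- weight of feature i (0-based)
    edge_w[i][j]  -- weight of the edge between features i and j
    Returns the best score and the corresponding list of feature indices.
    """
    n = len(vertex_w)
    # Position 0 is a left guard: score 0, empty subset, zero-weight edges.
    score = [0.0] + [float("inf")] * n
    subset = [[] for _ in range(n + 1)]
    for i in range(1, n + 1):
        f = i - 1  # 0-based index of the feature at position i
        for j in range(i):  # every earlier position, guard included
            cand = score[j] + vertex_w[f] + sum(edge_w[k][f] for k in subset[j])
            if cand < score[i]:
                # Position j is the current best predecessor (the index r).
                score[i] = cand
                subset[i] = subset[j] + [f]
    best = min(range(1, n + 1), key=lambda i: score[i])
    return score[best], subset[best]

vertex_w = [-0.40, -0.30, -0.35]
edge_w = [
    [0.0, 0.9, 0.1],
    [0.9, 0.0, 0.2],
    [0.1, 0.2, 0.0],
]
best_score, best_subset = one_pass_dp(vertex_w, edge_w)
```

In this toy input, the strongly correlated pair of features 0 and 1 is avoided, and the pass ends with the subset of features 0 and 2.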

So far, everything seems straightforward, but there are, in fact, three serious problems in the process that need to be addressed. The first is that the importance of vertices and edges might differ. For this reason, we introduce a weight w, which modifies the evaluation criterion from Equation (5) into Equation (8).

The second problem is that Equation (5) in its present form always leads to the trivial solution given by Equation (9). Since the weights of the graph vertices and edges are all non-negative, the minimum consists of a single vertex (without incident edges) with the lowest weight.

To prevent this, we first modified the model by replacing the decreasing vertex evaluation function with an increasing one. The idea was to reward high vertex weights and penalize high edge weights. This resulted in the optimization function of Equation (10), which does not tend towards the trivial solution. However, to retain complementarity with the graph-cut-based method, we preferred an alternative approach, which decrements all vertex and edge weights (except those of the guards and their incident edges) by two user-defined non-negative values, respectively (see Equation (11)). Furthermore, these two additional parameters provide new possibilities for tuning, as demonstrated in Section 4.
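The effect of the decrements can be checked on a toy graph by brute force. The sketch below assumes a purely additive cost (the sum of vertex weights plus the sum of edge weights inside the subset) and ignores the weight w. The names `subset_cost`, `best_subset`, `dv`, and `de` are hypothetical stand-ins for the two user-defined decrements.

```python
from itertools import combinations

def subset_cost(subset, vertex_w, edge_w):
    """Sum of vertex weights plus the weights of all edges inside the subset."""
    vertices = sum(vertex_w[i] for i in subset)
    edges = sum(edge_w[i][j] for i, j in combinations(subset, 2))
    return vertices + edges

def best_subset(vertex_w, edge_w):
    """Exhaustive search over all non-empty subsets (feasible for tiny n only)."""
    n = len(vertex_w)
    candidates = (c for size in range(1, n + 1)
                  for c in combinations(range(n), size))
    return min(candidates, key=lambda c: subset_cost(c, vertex_w, edge_w))

vertex_w = [0.10, 0.20, 0.15]
edge_w = [
    [0.0, 0.9, 0.1],
    [0.9, 0.0, 0.2],
    [0.1, 0.2, 0.0],
]

# Non-negative weights: the minimum is a single vertex of the lowest weight.
trivial = best_subset(vertex_w, edge_w)

# Decrement vertex weights by dv and edge weights by de (assumed values).
dv, de = 0.5, 0.15
shifted_v = [w - dv for w in vertex_w]
shifted_e = [[w - de if i != j else 0.0 for j, w in enumerate(row)]
             for i, row in enumerate(edge_w)]
shifted = best_subset(shifted_v, shifted_e)
```

With the original weights, the search returns the single vertex 0. After the decrements, adding a weakly correlated feature lowers the cost, so the subset of features 0 and 2 wins.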

The third problem is the most demanding. Even if all partial solutions of the previously processed vertices were optimal, there is no guarantee that this will remain the case after adding the considered vertex to any of these solutions. It is enough that the considered vertex is over-correlated with a single feature from each of these partial solutions, and the optimum will likely be missed. In other words, optimization defined in this way does not guarantee an optimal substructure, one of the two fundamental assumptions of dynamic programming, along with overlapping subproblems [6]. Of course, when considering a vertex, we can no longer refresh the scores and partial solutions of its predecessors. We tried to mitigate this problem by extending the evaluation criterion with a prediction of the contribution of vertices not yet visited and, most importantly, by considering the correlation between the visited and predicted parts. The need to predict the contribution of unvisited nodes led us to a simple idea, which later turned out to be very successful, namely to reverse the graph traversal direction after arriving at the end of the feature sequence. As G is an undirected graph, the status from the previous traversal can simply be used to estimate the score and partial solution of the part not yet visited. The updated evaluation criterion is given by Equation (12).

When the reverse traversal reaches the beginning of the feature sequence, the direction of visiting the vertices is inverted again. The evaluation criterion of Equation (12) is slightly modified to Equation (13), corresponding to the forward direction. The only difference between the two equations is the direction and boundaries of the vertex traversal, written under the min operator.

The modified evaluation criterion significantly impacts the choice of the predecessor vertex and thus indirectly affects the calculation of the score and the solution subset. Let r be the value of j in Equation (12) or (13) where the minimum was identified. The score is then calculated by using Equation (14), while Equation (6), representing the solution subset, remains applicable.

However, the stored score and subset should not be directly refreshed by the values obtained from the modified criterion, since in the treatment of subsequent vertices, we assume that the stored score and subset can only refer to vertices that were visited earlier in the current iteration. Conversely, it would be a pity not to make better use of the potential of Equations (12) and (13). Fortunately, they can be used to predict the attributes of another vertex instead, namely the last vertex in the newly computed set (the one with the lowest index in the reverse direction traversal or with the highest index in the forward traversal). However, we should not update the score and subset of that vertex while processing the considered vertex because we will need the values from the previous iteration when we process that vertex later. As a consequence, we extend each vertex with two additional prediction attributes, which store the aforementioned estimates of the score and the solution set. These predictions are initialized at the beginning of each iteration. Algorithm 1 shows the processing of a vertex, which is further explained in Figure 3. For simplicity, we assume that all the variables in Algorithm 1 are global, except i and the second argument of ProcessVertex. The score of the considered vertex is determined as the minimum between its previously stored prediction and the value computed by Equation (14). In the former case, the predicted set is assigned to the vertex, while in the latter case, the set is determined by Equation (6). Note that the prediction attributes of a vertex can be refreshed multiple times in the same iteration since multiple sequences at different i can terminate with the same vertex.

Algorithm 1. Processing a Considered Graph Vertex

- 1: function ProcessVertex(i, )
- 2: if then ▹ Forward direction graph traversal.
- 3: ;
- 4: ; ▹ (13)
- 5: else ▹ Reverse direction graph traversal.
- 6: ;
- 7: ; ▹ (12)
- 8: end if
- 9: r ← the value of j, where the minimum in line 4 or 7 was achieved;
- 10: ; ▹ (14)
- 11: ; ▹ (6)
- 12: if then ▹ Update the vertex with predictions from the same iteration.
- 13: ;
- 14: ;
- 15: end if
- 16: if then ▹ Update the predictions of a not yet processed .
- 17: ;
- 18: ;
- 19: end if
- 20: return ▹ No value returned; all the variables are global, except i and .
- 21: end function

Figure 3a,b show the situation immediately before and after Equations (6), (12) and (14) are applied to the considered vertex, respectively. The graph traversal is performed in the reverse direction. The obvious difference from the straightforward non-iterative solution in Figure 2 is that here, the candidate set does not contain only the initial vertex but the partial solution from the previous iteration instead. As a consequence, there is a double loop in the sum calculation. The green color indicates the graph vertices that have already been processed in the observed iteration, and the yellow color indicates those that were processed in the previous iteration (and are or will be processed later in the current iteration). Note that these yellow vertices contain the predictions (colored cyan), which might have been updated earlier in the ongoing iteration. The red text indicates vertex attributes modified during the observed processing. Analogously, Figure 3c,d show the processing of a vertex when the graph is traversed in the forward direction. Equation (13) replaces Equation (12) in this case.

The pseudocode in Algorithm 2 describes the overall structure of the alternating suboptimal dynamic programming method for feature selection. As mentioned, 200 features can still be processed relatively fast, but for larger input sets, it makes sense to preprocess the features with graph-cut-based feature selection filtering (line 2). The initialization in line 3 sets up the guard vertices using Equation (3). Partial solution set candidates and their scores are initialized using Equation (4), which is needed in lines 4, 7 and 11 of Algorithm 1 within the first-iteration calls of ProcessVertex (line 11 of Algorithm 2). The score variable compared in line 16 is initially set to a high value (∞) to provide the first comparison, and the maximal number of iterations is set to a user-defined value or the default of 100. In line 8, all predicted scores are set to a high value (∞) at the beginning of each iteration, which is needed in line 16. The main work is done in the ProcessVertex function, which is called sequentially in line 11 for each feature except for the guard vertices. The direction of traversing the features is inverted in each iteration (line 23). The process terminates when the identical score is obtained three times in a row, or the number of iterations reaches the maximum (line 24). If there are two (or more) solutions with the same score, the algorithm may find one during the forward direction traversal and a different one in the reverse direction traversal. In this case, it will return the last of the two solutions found.
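The control flow of the outer loop can be sketched independently of the vertex processing. The sketch assumes a hypothetical callable `sweep(forward)` that performs one full traversal and returns a score together with a solution. The names `alternate_until_stable`, `repeats`, and `make_stub` are illustrative, and the stub only replays prepared scores.

```python
def alternate_until_stable(sweep, max_iter=100, repeats=3):
    """Alternate traversal directions until the score repeats (sketch).

    Stops when the same best score has been seen `repeats` times in a row
    or after `max_iter` sweeps, whichever comes first.
    """
    best_score, best_set = float("inf"), []
    forward, unchanged, iterations = True, 0, 0
    while unchanged < repeats and iterations < max_iter:
        score, subset = sweep(forward)
        if score < best_score:
            best_score, best_set, unchanged = score, subset, 1
        else:
            unchanged += 1
            if score == best_score:
                best_set = subset  # keep the last of equally good solutions
        forward = not forward      # invert the traversal direction
        iterations += 1
    return best_score, best_set, iterations

def make_stub(scores):
    """Hypothetical stand-in for a real sweep: replays a list of scores."""
    it = iter(scores)
    def sweep(forward):
        return next(it), ["fwd" if forward else "rev"]
    return sweep

result = alternate_until_stable(make_stub([5.0, 3.0, 3.0, 3.0, 1.0]))
```

With the replayed scores 5, 3, 3, 3, the loop stops after the fourth sweep, and the solution of the last (reverse) sweep is returned.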

3.3. Convergence and Complexity Analysis

The solution found is generally suboptimal but often better than that found in the one-pass method, as will be confirmed by the results in the next section. In any case, the solution after several passes is not worse than the one-pass solution since the result can only improve from iteration to iteration or remain unchanged (after three consecutive such iterations, the algorithm terminates), which is confirmed by Proposition 1 below.

Proposition 1. The score in each iteration of the proposed alternating suboptimal dynamic programming algorithm can only be lower (better) or equal to the score in the previous iteration but never higher (worse).

Algorithm 2. Alternating Suboptimal Dynamic Programming

- 1: function ASDP(, P, n)
- 2: GraphCutBasedFeatureSelection(); ▹ Optional filtering
- 3: Init();
- 4: ; ;
- 5: ; ;
- 6: repeat ▹ iterations of ASDP
- 7: for to do ▹ for all features
- 8: ;
- 9: end for ▹ for all features
- 10: for to do ▹ for all features
- 11: ProcessVertex(i, );
- 12: end for ▹ for all features
- 13: ; ▹ (7): this and the next two lines
- 14: , where was found;
- 15: ;
- 16: if then
- 17: ;
- 18: ;
- 19: else
- 20: ;
- 21: end if
- 22: ;
- 23: Swap;
- 24: until ;
- 25: return (, )
- 26: end function

Proof. The proof is conceptually straightforward since we will show that the score from the previous iteration is also considered a candidate for the minimum in the observed iteration. Namely, this score is obtained in the evaluation criterion in line 7 of Algorithm 1 (reverse traversal) or in line 4 (forward traversal) for a particular choice of indices that involves a guard vertex. The algorithm does not modify the parameters of the two guards, so the corresponding guard terms keep their initial values in both cases. Consequently, only the score from the previous iteration remains from the expression on the right of (13) or (12). If this score is also the minimum in the current iteration, then it will be written first to the predicted score in line 17 of Algorithm 1, then to the vertex score in line 13 of Algorithm 1, to the iteration score in line 13 of Algorithm 2, and finally to the overall score in line 17 of Algorithm 2. Conversely, if it is not the minimum in the current iteration, then it can only be replaced with a lower score in some of the aforementioned lines of Algorithm 1 or Algorithm 2. This completes the proof. □

Based on Proposition 1, it makes sense to modify the initialization (line 3 of Algorithm 2). The proven convergence allows us to use the input feature set instead of the empty set as an initial solution candidate. Equation (15) introduces a recursive definition of initial values, which replaces Equation (4). Note that the last two lines of Equation (15) were derived from Equations (6) and (14) by setting

.

Propositions 2–4 consider the time and space complexity of the graph-cut-based and the alternating suboptimal dynamic programming feature selection approaches.

Proposition 2. The graph-cut-based feature selection method has the worst-case time complexity O(n²), where n is the number of features, i.e., graph vertices.

Proof. The algorithm gradually selects features with the highest quality, which requires at most n steps. In each step, a neighborhood is considered, which contains at most n − 1 features. This results in O(n²) worst-case time complexity. Note that the method removes the considered features and their highly correlated neighborhood from the graph G in each step and, consequently, the expected time complexity is much closer to O(n log n), which corresponds to sorting the vertices according to their qualities. □

Proposition 3. The proposed alternating suboptimal dynamic programming feature selection approach runs in O(n⁴) time in the worst case, where n is the number of graph vertices (features).

Proof. A double sum in lines 4 and 7 of Algorithm 1 contributes O(n²) time. In both cases, it is performed within the min function, which considers O(n) values. The ProcessVertex function thus requires O(n³) time. It is called n times in line 11 of Algorithm 2, resulting in O(n⁴) time per single iteration. Although the maximal number of iterations (loop of lines 6–24) is by default set to 100, the actual number rarely exceeds ten and practically never 15, so it may be considered constant, i.e., O(1), and the overall worst-case time complexity is O(n⁴). □

Proposition 4. Both considered approaches to feature selection, i.e., the graph-cut-based and the alternating suboptimal dynamic programming algorithm, require O(n²) space, where n is the number of graph vertices (features).

Proof. In the graph-cut-based approach, the graph contains n vertices and at most n(n − 1)/2 edges. Similarly, there are n + 2 vertices and O(n²) edges in the ASDP approach. Furthermore, the partial solution sets and their predictions, each with at most n elements, also do not exceed O(n²) space. The overall space complexity is thus O(n²). □