1. Introduction

In the present work, we are interested in the fast numerical solution of special large linear systems stemming from the approximation of a Riesz fractional diffusion equation (RFDE). It is known that, when standard finite difference schemes on equispaced grids are used for one-dimensional problems, we end up with a dense real symmetric Toeplitz linear system, whose dense structure is inherited from the non-locality of the underlying symmetric operator. We study a one-dimensional RFDE discretized with a first-order numerical scheme, mainly for the simplicity of the presentation. Nevertheless, the same analysis can be carried out either when using higher-order discretization schemes [1,2] or when dealing with more complex higher-dimensional fractional models [3,4].

While the matrix–vector product involving dense Toeplitz matrices has a cost of $O(M\log M)$, with a moderate constant in the “big O” and with $M$ being the matrix size, the solution can be of higher complexity in the presence of ill-conditioning. In connection with the last issue, it is worth recalling that the Toeplitz structure carries a lot of spectral information (see [5] and references therein). As shown in [6], the Euclidean condition number of the coefficient matrices grows exactly as $M^{\alpha}$, where $\alpha$ denotes the fractional order. Therefore, the classical conjugate gradient (CG) method would need a number of iterations proportional to $M^{\alpha/2}$ to converge within a given precision.

In this perspective, a large number of preconditioning strategies for the preconditioned CG (PCG) method have been proposed. One possibility is given by band approximations of the coefficient matrices; as discussed in [6,7], this does not ensure convergence within a number of iterations independent of $M$, but it still proves to be efficient for some values of the fractional order, and its cost stays linear in the matrix size. As an alternative, the circulant proposal given in [8] has a cost proportional to that of the matrix–vector product and ensures optimal convergence for 1D problems with constant coefficients. Another algebraic preconditioner proven to be optimal is the $\tau$ preconditioning explored in [9,10]. In a multidimensional setting, multilevel circulants are not well suited, while $\tau$ preconditioning still shows optimal behavior; see [10] and references therein. Among other effective preconditioners, a band preconditioner with diagonal compensation is proposed in [11] for handling varying coefficients.

Other approaches to the efficient solution of the ill-conditioned linear systems we are interested in include multigrid methods. Optimal multigrid proposals for fractional operators are given, e.g., in [6,7,12,13,14]. However, the theoretical convergence analysis of such multigrid methods is currently limited to the two-grid method.

Here, we provide a detailed theoretical convergence study for multigrid methods. To our knowledge, this is the first time in the literature that a multigrid convergence analysis is provided for RFDEs. Moreover, since multigrid methods are often applied as preconditioners, and often to an approximation of the coefficient matrix rather than to the coefficient matrix itself, along the lines of [7] we discuss a band approximation to be solved with a Galerkin approach, which guarantees a linear cost in $M$ instead of $O(M\log M)$ and inherits the multigrid optimality. We compare the resulting methods with both $\tau$ and circulant preconditioning.

Finally, in accordance with the topic of this Special Issue, “Mathematical Methods for Image Processing and Understanding”, we investigate the use of RFDE in a variational model for image deblurring. Indeed, fractional-order diffusion models have recently received much attention in imaging applications; see, e.g., [15,16]. We propose a matrix–algebra preconditioner combining the structure information on the blurring operator and the RFDE. We explore the effectiveness of the preconditioner by varying the regularization parameter, showing that it can reduce the computational cost. On the other hand, for this particular application, we explain why multigrid methods require further investigation.

The paper is organized as follows. In Section 2, we present the problem, the chosen numerical approximation, and the resulting linear systems; the main spectral features of the considered coefficient matrices are also recalled. In Section 3, we describe the essentials of multigrid methods and give the description of our proposal and its analysis. In Section 4, we discuss its application to a band approximation of the coefficient matrix. Section 5 contains a wide set of numerical experiments that compare the multigrid with matrix–algebra-based preconditioners and include 2D problems as well as the extension to the case of an RFDE with variable coefficients. Section 6 is devoted to applying the best preconditioning strategy to an image deblurring problem. Future research directions and conclusions are given in Section 7.

2. Preliminaries

The current section briefly introduces the continuous problem, its numerical approximation scheme, and the resulting linear systems. The main spectral features of the considered coefficient matrices are recalled as well.

As anticipated in the introduction, we consider the following one-dimensional Riesz fractional diffusion equation (1D-RFDE), given by

with boundary conditions

where

is the diffusion coefficient,

is the source term, and

is the 1D-Riesz fractional derivative for

, defined as

with

, while

and

are the left and right Riemann–Liouville fractional derivatives; see, e.g., [17,18].

For the approximation of the problem, we define the uniform space partition on the interval

, that is

Here, the left and right fractional derivatives in (3) are numerically approximated by the shifted Grünwald difference formula [3] as

with

being the alternating fractional binomial coefficients, whose expression is

By applying the approximation in (5) to Equation (1) and using (3) and (6), we obtain the required scheme as follows:

By defining

and

, the resulting approximation equations (7) can be written in matrix form as

and, by defining

, we have

with

,

, and

being a lower Hessenberg Toeplitz matrix. The fractional binomial coefficients satisfy a few properties, summarized in the following Lemma 1 (see [3,19,20]).

Lemma 1. For $\alpha\in(1,2)$, the coefficients $g_k^{(\alpha)}$ defined in (6) satisfy
$$g_0^{(\alpha)} = 1,\qquad g_1^{(\alpha)} = -\alpha,\qquad 1 > g_2^{(\alpha)} > g_3^{(\alpha)} > \cdots > 0,\qquad \sum_{k=0}^{\infty} g_k^{(\alpha)} = 0,\qquad \sum_{k=0}^{n} g_k^{(\alpha)} < 0\ \ (n\ge 1).$$

Using Lemma 1, it has been proven in [20] that the coefficient matrix

is strictly diagonally dominant and hence invertible. To determine its generating function and study its spectral distribution, tools on Toeplitz matrices have been used (see [6]). For the notion of spectral distribution in the Weyl sense, with special attention to Toeplitz structures and their variants, see [21,22,23,24,25]. A short review of these results follows.
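Before that review, the properties in Lemma 1 and the strict diagonal dominance they imply can be checked numerically. The following is a minimal sketch under assumptions of ours: the coefficients $g_k = (-1)^k\binom{\alpha}{k}$ are generated with the standard recurrence $g_0 = 1$, $g_k = \bigl(1 - \frac{\alpha+1}{k}\bigr) g_{k-1}$, and the coefficient matrix is taken, up to its positive scaling factor, as $T = -(G + G^{T})$, where $G = [g_{i-j+1}]$ is the lower Hessenberg Toeplitz matrix of the shifted Grünwald formula. The helper names `grunwald_coeffs` and `shifted_grunwald_matrix` are hypothetical.

```python
import numpy as np
from scipy.linalg import toeplitz


def grunwald_coeffs(alpha, n):
    """g_0, ..., g_{n-1} with g_k = (-1)^k binom(alpha, k), via the usual recurrence."""
    g = np.empty(n)
    g[0] = 1.0
    for k in range(1, n):
        g[k] = (1.0 - (alpha + 1.0) / k) * g[k - 1]
    return g


def shifted_grunwald_matrix(alpha, M):
    """Lower Hessenberg Toeplitz G with G[i, j] = g_{i-j+1} (zero above the superdiagonal)."""
    g = grunwald_coeffs(alpha, M + 1)
    first_col = g[1:M + 1]              # G[i, 0] = g_{i+1}
    first_row = np.zeros(M)
    first_row[0] = g[1]
    if M > 1:
        first_row[1] = g[0]             # superdiagonal entry g_0
    return toeplitz(first_col, first_row)


alpha, M = 1.5, 64
g = grunwald_coeffs(alpha, 2000)

# Lemma 1-type properties, checked on the first 2000 coefficients
print(g[0], g[1])                                   # 1.0 -1.5
print(np.all(g[2:] > 0), np.all(np.diff(g[2:]) < 0))
print(np.all(np.cumsum(g)[1:] < 0), g.sum())        # partial sums < 0, total close to 0

# Assumed coefficient matrix (up to a positive factor) and its diagonal dominance
G = shifted_grunwald_matrix(alpha, M)
T = -(G + G.T)
offdiag = np.abs(T).sum(axis=1) - np.abs(np.diag(T))
print(np.allclose(T, T.T), np.all(np.diag(T) > offdiag))
```

Only finitely many coefficients are inspected, so the vanishing total sum is observed up to a small truncation error that decays like a negative power of the number of terms.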

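Definition 1 ([5]). Given $f\in L^1(-\pi,\pi)$ and its Fourier coefficients
$$a_k = \frac{1}{2\pi}\int_{-\pi}^{\pi} f(\theta)\,e^{-\mathrm{i}k\theta}\,d\theta,\qquad k\in\mathbb{Z}, \tag{11}$$
we define the $M\times M$ Toeplitz matrix
$$T_M(f) = \left[a_{i-j}\right]_{i,j=1}^{M} \tag{12}$$
generated by $f$. In addition, the Toeplitz sequence $\{T_M(f)\}_{M\in\mathbb{N}}$ is called the family of Toeplitz matrices generated by $f$. For instance, the tridiagonal Toeplitz matrix
$$T_M(f) = \mathrm{tridiag}(-1,\,2,\,-1)$$
associated with a finite difference discretization of the Laplacian operator is generated by
$$f(\theta) = 2 - 2\cos\theta.$$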

Note that, for Toeplitz matrices $T_M$, $M\in\mathbb{N}$, as in (12), to have a generating function associated with the whole Toeplitz sequence, there must exist a function $f$ for which the relationship (11) holds for every $k\in\mathbb{Z}$.

In the case where the partial Fourier sum $\sum_{k=-n}^{n} a_k e^{\mathrm{i}k\theta}$ converges in the infinity norm, $f$ coincides with its sum and is a continuous $2\pi$-periodic function, given the Banach structure of this space equipped with the infinity norm. A sufficient condition is that $\sum_{k\in\mathbb{Z}}|a_k| < \infty$, i.e., that the generating function belongs to the Wiener class, which is a sub-algebra of the continuous $2\pi$-periodic functions.
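Definition 1 can be made concrete with a short sketch: the Fourier coefficients (11) are approximated by an equispaced rectangle rule (exact, up to rounding, for trigonometric polynomials of low degree) and arranged as in (12). For $f(\theta) = 2 - 2\cos\theta$ this recovers the tridiagonal Laplacian matrix. The helpers `fourier_coeffs` and `toeplitz_from_symbol` are hypothetical names used only for illustration.

```python
import numpy as np
from scipy.linalg import toeplitz


def fourier_coeffs(f, kmax, n_samples=4096):
    """a_k ~ (1/2pi) * int f(theta) e^{-ik theta} dtheta for k = -kmax..kmax (rectangle rule)."""
    theta = 2.0 * np.pi * np.arange(n_samples) / n_samples
    k = np.arange(-kmax, kmax + 1)
    a = (f(theta)[None, :] * np.exp(-1j * np.outer(k, theta))).mean(axis=1)
    return k, a


def toeplitz_from_symbol(f, M):
    """T_M(f) = [a_{i-j}], i, j = 1..M."""
    k, a = fourier_coeffs(f, M - 1)
    col = a[k >= 0]            # a_0, a_1, ..., a_{M-1}       (first column)
    row = a[k <= 0][::-1]      # a_0, a_{-1}, ..., a_{-(M-1)}  (first row)
    return toeplitz(col, row)


T = toeplitz_from_symbol(lambda th: 2.0 - 2.0 * np.cos(th), 5)
print(np.round(T.real, 12))    # the tridiagonal matrix tridiag(-1, 2, -1)
```

For a generic $f \in L^1$ the rectangle rule only approximates (11); the exactness used here holds because $2-2\cos\theta$ is a trigonometric polynomial of degree one.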

Now, according to (9), we define

Hence, the partial Fourier sum associated to

is

For a fixed $\alpha$, it is evident from (9) that the coefficient matrix is symmetric and Toeplitz, and that it is generated by the real-valued even function on $[-\pi,\pi]$ defined in the following lemma.

Lemma 2 ([6,26]). The generating function of is For , .

For ,

As observed in [6], the generating function in Lemma 2 has a unique zero, of order $\alpha$, at $\theta = 0$ for $\alpha\in(1,2]$.
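The zero of order $\alpha$ can be observed numerically. The sketch below keeps the assumption $T_M = -(G+G^{T})$ of the earlier sketch, so its scaling may differ from the matrix in (9); since $\sum_{k\ge 0} g_k z^k = (1-z)^{\alpha}$, its symbol is then $f(\theta) = -e^{-\mathrm{i}\theta}(1-e^{\mathrm{i}\theta})^{\alpha} - e^{\mathrm{i}\theta}(1-e^{-\mathrm{i}\theta})^{\alpha}$. The code compares this closed form with a long truncation of the cosine series $t_0 + 2\sum_{k\ge1} t_k\cos(k\theta)$, checks that $f(h)/h^{\alpha}$ approaches the constant $-2\cos(\alpha\pi/2)$, and compares the eigenvalues of $T_M$ with sorted samples of $f$. The helpers `riesz_column` and `symbol` are hypothetical names.

```python
import numpy as np
from scipy.linalg import eigvalsh, toeplitz


def riesz_column(alpha, M):
    """First column t_0..t_{M-1} of T_M = -(G + G^T) (assumed form of the coefficient matrix)."""
    g = np.empty(M + 2)
    g[0] = 1.0
    for k in range(1, M + 2):
        g[k] = (1.0 - (alpha + 1.0) / k) * g[k - 1]
    t = -g[1:M + 1]               # t_k = -g_{k+1} for k >= 2
    t[0] = -2.0 * g[1]            # diagonal: -2 g_1 = 2 alpha
    if M > 1:
        t[1] = -(g[0] + g[2])     # first off-diagonal
    return t


def symbol(alpha, theta):
    """Closed form of the symbol of -(G + G^T) (principal branch, theta != 0)."""
    z = np.exp(1j * theta)
    return (-(1.0 / z) * (1.0 - z) ** alpha - z * (1.0 - 1.0 / z) ** alpha).real


alpha = 1.5

# 1) closed form vs. truncated cosine series, for theta away from 0
theta = np.linspace(0.1, np.pi, 50)
t_long = riesz_column(alpha, 20000)
series = t_long[0] + 2.0 * np.cos(np.outer(theta, np.arange(1, t_long.size))) @ t_long[1:]
err_symbol = np.max(np.abs(series - symbol(alpha, theta)))

# 2) zero of order alpha at the origin: f(h) / h^alpha tends to a constant
h = np.array([1e-2, 1e-3, 1e-4])
ratios = symbol(alpha, h) / h ** alpha

# 3) eigenvalues of T_M vs. sorted samples of the symbol
M = 400
eigs = eigvalsh(toeplitz(riesz_column(alpha, M)))
samples = np.sort(symbol(alpha, np.pi * np.arange(1, M + 1) / (M + 1)))
mean_gap = np.mean(np.abs(eigs - samples))
print(err_symbol, ratios, mean_gap)
```

Sampling at $\theta_j = j\pi/(M+1)$ avoids evaluating the complex power exactly at the zero $\theta = 0$.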

By using the extremal spectral properties of Toeplitz matrix sequences (see, e.g., [27,28]) and combining them with Lemma 2, the obtained spectral information can be summarized in the following items (see again [6]):

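any eigenvalue of the coefficient matrix $T_M$ in (9) belongs to the open interval $(\min f_\alpha, \max f_\alpha)$, with $\min f_\alpha = 0$, where $f_\alpha$ is the generating function in Lemma 2;

$\lambda_{\max}(T_M)$ is monotonic strictly increasing, converging to $\max f_\alpha$ as $M$ tends to infinity;

$\lambda_{\min}(T_M)$ is monotonic strictly decreasing, converging to $\min f_\alpha = 0$ as $M$ tends to infinity;

by taking into account that the zero of the generating function $f_\alpha$ has exactly order $\alpha$, it holds that
$$\lambda_{\min}(T_M) \sim M^{-\alpha},$$
where, for non-negative sequences $a_n, b_n$, the writing $a_n \sim b_n$ means that there exist real positive constants $c, C$, independent of $n$, such that
$$c\,a_n \le b_n \le C\,a_n.$$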

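As a consequence of the second and fourth items reported above ($\lambda_{\max}$ stays bounded while $\lambda_{\min} \sim M^{-\alpha}$), the Euclidean condition number of the coefficient matrix grows exactly as $M^{\alpha}$.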

Therefore, classical stationary methods fail to be optimal, in the sense that we cannot expect convergence within a given precision with a number of iterations bounded by a constant independent of $M$: for instance, the Gauss–Seidel iteration would need a number of iterations exactly proportional to $M^{\alpha}$. In addition, even Krylov methods suffer from this asymptotic ill-conditioning. Indeed, according to the estimates by Axelsson and Lindskog [29], $O(M^{\alpha/2})$ iterations would be needed by a standard CG to reach a solution within a fixed accuracy. In the present context of a zero of non-integer order, even preconditioning with band Toeplitz matrices will not ensure optimality, as discussed in [6] and detailed in Section 4. On the other hand, when using circulant or $\tau$ preconditioning, spectral equivalence is guaranteed also for a non-integer order of the zero at $\theta = 0$, provided the order is bounded by 2 [8,9,10]. In the latter case, the distance in rank is smaller than with other matrix algebras. In a multidimensional setting, only $\tau$ preconditioning leads to optimality of PCG under the same constraint on the order of the zero, while multilevel circulants are not well suited. Finally, since we also consider problems in imaging, we recall that the $\tau$ algebra also emerges in this context when high-precision boundary conditions (BCs), such as the anti-reflective BCs [30], are used.
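The growth $\kappa_2 \sim M^{\alpha}$ behind the iteration estimates above can be observed with a least-squares fit of $\log\kappa_2(T_M)$ against $\log M$. This sketch reuses the hypothetical `riesz_column` helper (with the assumed form $T_M = -(G+G^{T})$) and dense eigenvalue computations, so it is only meant for moderate sizes; the fitted slope is a pre-asymptotic estimate, not a proof.

```python
import numpy as np
from scipy.linalg import eigvalsh, toeplitz


def riesz_column(alpha, M):
    """First column of T_M = -(G + G^T), the assumed form of the coefficient matrix."""
    g = np.empty(M + 2)
    g[0] = 1.0
    for k in range(1, M + 2):
        g[k] = (1.0 - (alpha + 1.0) / k) * g[k - 1]
    t = -g[1:M + 1]
    t[0] = -2.0 * g[1]
    if M > 1:
        t[1] = -(g[0] + g[2])
    return t


alpha = 1.5
sizes = np.array([128, 256, 512, 1024])
kappa = []
for M in sizes:
    lam = eigvalsh(toeplitz(riesz_column(alpha, M)))   # ascending eigenvalues
    kappa.append(lam[-1] / lam[0])                      # Euclidean condition number

# slope of log(kappa) versus log(M): expected to be close to alpha
slope = np.polyfit(np.log(sizes), np.log(kappa), 1)[0]
print(kappa, slope)
```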

3. Multigrid Methods and Level Independency

Multigrid methods (MGMs) have already proven to be effective solvers as well as valid preconditioners for Krylov methods when numerically approaching FDEs [7,12,13]. A multigrid method combines two iterative methods, called smoother and coarse grid correction [31]. The coarser matrices can be obtained either by projection (Galerkin approach) or by rediscretization (geometric approach). When only a single coarser level is considered, we obtain two-grid methods (TGMs), while in the presence of more levels we have multigrid methods; in particular, the V-cycle when only one recursive call is performed and the W-cycle when two recursive calls are applied.

Extending what has been done in [6,13], in this section we investigate the convergence of multigrid methods in Galerkin form for the discretized problem (8).

To apply the Galerkin multigrid to a given linear system

, for

, let us define the grid transfer operators

as

where

is the down-sampling operator and

is a properly chosen polynomial. The coarser matrices are then

If, at the recursive level $k$, we simply apply one post-smoothing iteration of a stationary method having iteration matrix

the TGM iteration matrix at level $k$ is given by

The following theorem states the convergence of TGM at a generic recursion level k.

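Theorem 1 (Ruge–Stüben [32]). Let $A_k$ be a symmetric positive definite matrix, $D_k$ the diagonal matrix having as main diagonal that of $A_k$, and $S_k$ the post-smoothing iteration matrix. Assume that there exists $\sigma_k > 0$ such that
$$\|S_k x\|_{A_k}^2 \le \|x\|_{A_k}^2 - \sigma_k\,\|x\|_{A_k D_k^{-1} A_k}^2 \qquad \forall x, \tag{17}$$
and that there exists $\delta_k > 0$ such that
$$\min_{y}\,\|x - P_k^{T} y\|_{D_k}^2 \le \delta_k\,\|x\|_{A_k}^2 \qquad \forall x, \tag{18}$$
where $\|x\|_X^2 = x^{T} X x$. Then $\delta_k \ge \sigma_k$ and
$$\|\mathrm{TGM}_k\|_{A_k} \le \sqrt{1 - \sigma_k/\delta_k}.$$

The inequalities in Equations (17) and (18) are well known as the smoothing property and the approximation property, respectively. To prove the multigrid convergence (recursive application of TGM), it is enough to prove that the assumptions of Theorem 1 are satisfied for each $k$. On the other hand, to guarantee W-cycle convergence in a number of iterations independent of the size $M$ [33], we have to prove that the following level independency condition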

holds with $\gamma$ independent of $k$.

Concerning (multilevel) Toeplitz matrices, multigrid methods have been extensively investigated in the literature [34,35,36]. While the smoothing property can be easily proved for a simple smoother like weighted Jacobi, the approximation property usually requires a detailed analysis based on a generalization of the local Fourier analysis.

If we now apply the multigrid with Galerkin approach to the solution of (8) and refer to the matrices at the coarser level $k$ as

, they are still Toeplitz and, based on the theoretical analysis developed in [36,37] for

matrices, are associated to the following symbols:

Since the function

has only one zero, at the origin, of order smaller than or equal to 2, according to the convergence analysis of multigrid methods in [36], we define the symbols of the projectors in (15) as

and

is a constant chosen such that

.

We observe that the symbols

at each level are non-negative and they vanish only at the origin with order

. Therefore, the symbols

in (21) and

in (20) satisfy the approximation property (18) because

at each level

.

Remark 1. Since is proportional to , the level independency condition (19) requires that with γ independent of k. For the smoothing property (17), in order to ensure

for all

$k$, the choice of

as a constant such that

will be crucial.

In conclusion, to prove the level independency condition (19), we need a detailed analysis of the symbol

, which is performed in the next subsection.

3.1. Behavior of Symbols

The analysis of

in (20) requires a detailed study of the constant

in

chosen imposing the condition

because

.

According to the classical analysis, in the case of the discrete Laplacian, i.e., $\alpha = 2$, the value of at each level is .

Proposition 1. Let , where , and with . Then, for computed according to (20), it holds

Proof. Since

, using relation (20), for

it holds

Since the symbol

of the projector is the same at each level, by applying the recursion we obtain the required result

□

For

, the value of

, computed such that

, is

where

Since

converges to 4 as $k$ diverges, we have

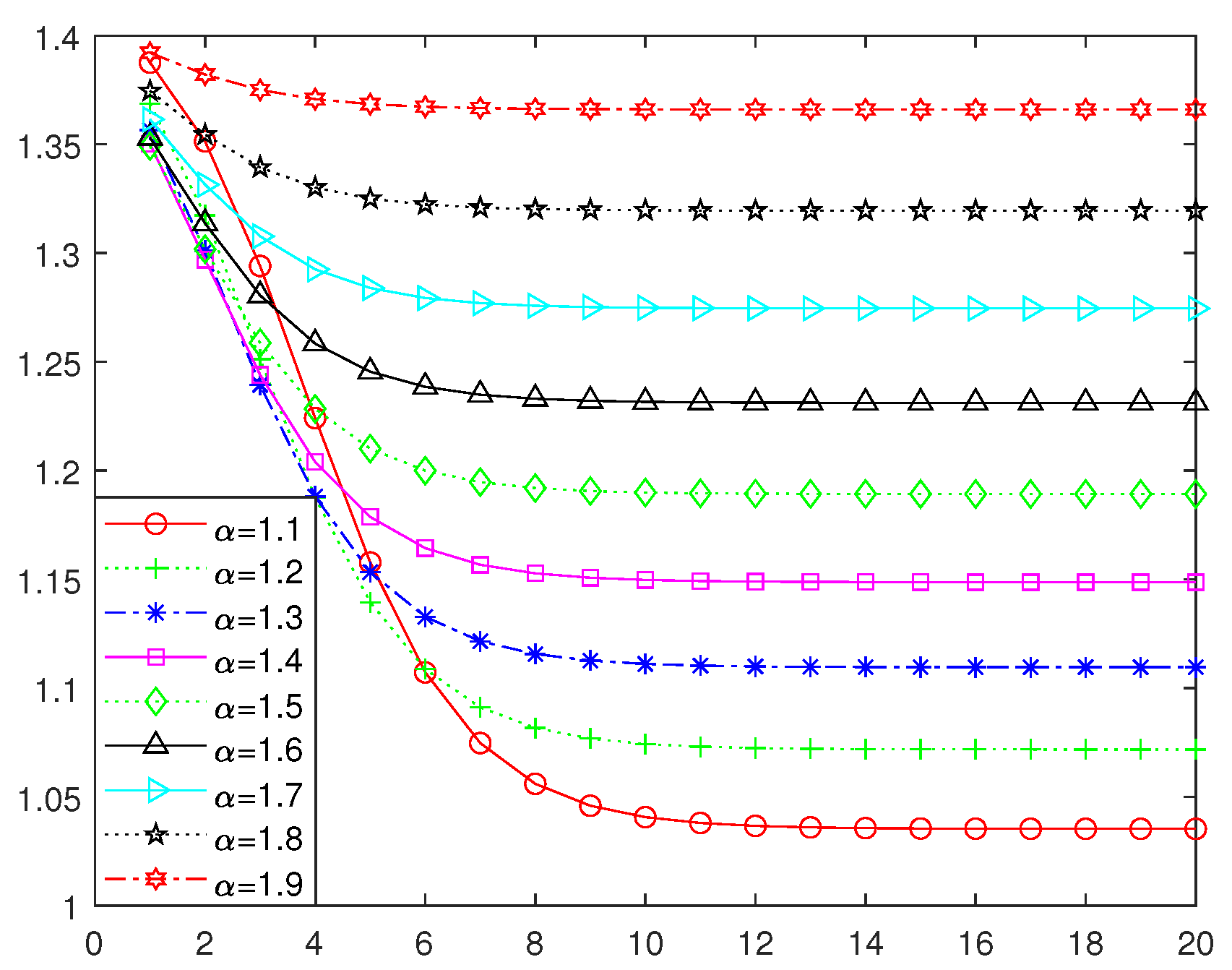

as can be seen in Figure 1. Moreover, the convergence of

is quite fast, especially for

close to 2.

We observe that the sequence of the coarser symbols satisfies

for any

, as can be seen in Figure 2. Combining this fact with

for any

, we obtain that the ill-conditioning of the coarser linear systems decreases when moving to coarser grids.
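Two facts related to this subsection can be checked with dense Galerkin products. For $\alpha = 2$ (the discrete Laplacian, which equals $2\,\mathrm{tridiag}(-1,2,-1)$ under our assumed scaling $T_M = -(G+G^{T})$), linear interpolation exactly halves the matrix from one level to the next, which is why a rescaling of the projector is needed to keep the symbol unchanged. For $\alpha = 1.5$, the condition number of the Galerkin coarse matrices decreases along the hierarchy. The sketch uses the unscaled stencil $[1/2, 1, 1/2]$ and the hypothetical helpers of the previous sketches; it does not reproduce the normalization constant used in (20) and (21).

```python
import numpy as np
from scipy.linalg import eigvalsh, toeplitz


def riesz_column(alpha, M):
    """First column of T_M = -(G + G^T), the assumed form of the coefficient matrix."""
    g = np.empty(M + 2)
    g[0] = 1.0
    for k in range(1, M + 2):
        g[k] = (1.0 - (alpha + 1.0) / k) * g[k - 1]
    t = -g[1:M + 1]
    t[0] = -2.0 * g[1]
    if M > 1:
        t[1] = -(g[0] + g[2])
    return t


def prolongation(M):
    """Linear interpolation from (M - 1) // 2 coarse points to M fine points (M odd)."""
    Mc = (M - 1) // 2
    P = np.zeros((M, Mc))
    for j in range(Mc):
        P[2 * j:2 * j + 3, j] = (0.5, 1.0, 0.5)
    return P


# alpha = 2: the Galerkin coarse matrix is exactly half of the coarse-size matrix
P = prolongation(31)
T_fine = toeplitz(riesz_column(2.0, 31))
T_coarse = toeplitz(riesz_column(2.0, 15))
laplacian_halved = np.allclose(P.T @ T_fine @ P, 0.5 * T_coarse)

# alpha = 1.5: condition numbers along the hierarchy 511 -> 255 -> ... -> 3
A = toeplitz(riesz_column(1.5, 511))
kappas = []
while A.shape[0] >= 3:
    lam = eigvalsh(A)
    kappas.append(lam[-1] / lam[0])
    P = prolongation(A.shape[0])
    A = P.T @ A @ P
print(laplacian_halved, np.round(kappas, 1))
```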

3.2. Smoothing Property

If weighted Jacobi is used as a smoother, then the smoothing property (17) is satisfied whenever the smoother converges [32,34]. Since the matrix

is symmetric positive definite, the weighted Jacobi method

is well-defined, with

being the diagonal of

. Moreover, it converges for

, where

is the spectral radius of

.

When

, as for the geometric approach,

, where

is the Fourier coefficient of order zero of

; thus, it holds

since

.

Lemma 3. Let with defined as in (14) and let be defined as in (27). If we choose then there exist , such that the smoothing property in (17) holds true. Moreover, choosing it holds

Proof. Since

is non-negative, it follows from Lemma 4.2 in [13] that

since

. Moreover, for

chosen as in (29), the following condition

in the proof of Lemma 4.2 in [13] is satisfied for all

in (30). □

Based on the previous lemma and following the same idea as in [38], we propose to use the following Jacobi parameter

which provides a good convergence rate, as confirmed by the numerical results in Section 5.
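The convergence condition for weighted Jacobi recalled in this subsection can be checked directly: with $\rho = \rho(D^{-1}A)$, the iteration matrix $I - \omega D^{-1}A$ has spectral radius below 1 for $\omega$ slightly smaller than $2/\rho$, and above 1 for $\omega$ slightly larger. For the strictly diagonally dominant matrix considered here, Gershgorin's theorem also gives $\rho < 2$. This is a minimal sketch with the hypothetical `riesz_column` helper and the assumed form $T_M = -(G+G^{T})$; it does not evaluate the parameter proposed above.

```python
import numpy as np
from scipy.linalg import eigvals, eigvalsh, toeplitz


def riesz_column(alpha, M):
    """First column of T_M = -(G + G^T), the assumed form of the coefficient matrix."""
    g = np.empty(M + 2)
    g[0] = 1.0
    for k in range(1, M + 2):
        g[k] = (1.0 - (alpha + 1.0) / k) * g[k - 1]
    t = -g[1:M + 1]
    t[0] = -2.0 * g[1]
    if M > 1:
        t[1] = -(g[0] + g[2])
    return t


A = toeplitz(riesz_column(1.5, 127))
d = np.diag(A)
# D^{-1} A is similar to the symmetric D^{-1/2} A D^{-1/2}: real, positive spectrum
rho = eigvalsh(A / np.sqrt(np.outer(d, d)))[-1]


def jacobi_radius(omega):
    """Spectral radius of the weighted Jacobi iteration matrix I - omega D^{-1} A."""
    S = np.eye(A.shape[0]) - omega * A / d[:, None]
    return np.max(np.abs(eigvals(S)))


r_inside = jacobi_radius(0.95 * 2.0 / rho)
r_outside = jacobi_radius(1.05 * 2.0 / rho)
print(rho, r_inside, r_outside)
```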

3.3. Approximation Property and Level Independency

According to Remark 1, the uniform boundedness of

is ensured by proving Equation (23).

First of all, we prove that, for

, the sequence of functions

is monotonically increasing in $x$. Recalling the expression of

in (21), differentiating

we have

and hence it is equivalent to proving that

is non-negative for all

. This result can be proved by induction on $k$.

For

, replacing the expression of

(see Equation (14)) in

, by direct computations we obtain

,

.

Assuming that for it holds , , we have to prove that the same is true for .

Applying the recurrence (20) to

, we have

where

, as defined in (21), and

Thanks to the inductive assumption and direct computation, we obtain the desired result

,

, i.e.,

Figure 3 depicts the function

for different values of $k$ and

.

In conclusion, by the monotonicity of

, for all

, we have

and since the sequence

is bounded thanks to Equation (26) (see also Figure 1), we have that

; hence,

is bounded.

Combining the previous result on

with Equation (30) for the smoothing analysis, we obtain (19), i.e., the level independency condition is satisfied.

4. Band Approximation

Multigrid methods are often applied as preconditioners for Krylov methods, and often to an approximation of

instead of to the original coefficient matrix. Along the lines of [7], in this section we discuss a band approximation of

to be combined with the multigrid method discussed in the previous section. The advantage of using a band matrix lies in the possibility of applying the Galerkin approach with a linear cost in $M$ instead of $O(M\log M)$, and of inheriting the V-cycle optimality from the results in [37].

Starting from the truncated Fourier sum of order $s$ of the symbol

we consider as band approximation of

the associated Toeplitz matrix

explicitly given by

The emerging banded matrix is defined as

As already discussed in [7,13], concerning the application of our multigrid method to a linear system with the coefficient matrix

, we have the following features, thanks to the results in [35,37]:

The computation of the coarser matrices by the Galerkin approach (16), using the projector defined in (15), preserves the band structure at the coarser levels; see [35, Proposition 2].

If the function is non-negative, then the V-cycle is optimal, i.e., it has a constant convergence rate independent of the size M and a computational cost per iteration proportional to , that is with the choice of s, such that .

Regarding the spectral features of preconditioned Toeplitz matrices with Toeplitz preconditioners, we recall the well-known results of both localization and distribution type, in the sense of Weyl, in the following theorem (see [5]).

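Theorem 2. Let $f$ be real-valued, $2\pi$-periodic, and continuous, and let $g$ be non-negative, $2\pi$-periodic, and continuous, with $\max g > 0$. Then,

$T_M(g)$ is positive definite for every $M$;

if $r = \inf f/g$ and $R = \sup f/g$, with $r < R$, then all the eigenvalues of $T_M^{-1}(g)T_M(f)$ lie in the open set $(r, R)$ for every $M$;

$\{T_M(g)\}_M$ and $\{T_M^{-1}(g)T_M(f)\}_M$ are distributed in the Weyl sense as $g$ and $f/g$, respectively, i.e., for all $F$ in the set of continuous functions with compact support over $\mathbb{C}$ it holds
$$\lim_{M\to\infty}\frac{1}{M}\sum_{j=1}^{M} F\big(\lambda_j(X_M)\big) = \frac{1}{2\pi}\int_{-\pi}^{\pi} F\big(h(\theta)\big)\,d\theta,$$
with $X_M = T_M(g)$ and $h = g$, or $X_M = T_M^{-1}(g)T_M(f)$ and $h = f/g$, where $\lambda_j(X_M)$, $j = 1,\dots,M$, are the eigenvalues of $X_M$.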

In other words, a good Toeplitz preconditioner for a Toeplitz matrix generated by $f$ should be generated by a function $g$ such that $f/g$ is close to 1 in a certain metric, for instance in the $L^1$ or in the $L^\infty$ norm.

For the band preconditioner in (33), Figure 4 shows the graphs of

and

for two different values of

, while

are depicted in Figure 5 for some values of $s$. Obviously, the quality of the approximation improves as $s$ increases, but it is already good enough for small $s$, in particular when

approaches the value 2 (see the scale of the $y$-axis in Figure 5).

More importantly, in the light of Theorem 2, Figure 6 depicts the functions

with

. This quantity measures the relative approximation, which is what determines the quality of the preconditioning, since

is the distribution function of the shifted preconditioned matrix–sequence

in the sense of Weyl and in accordance again with Theorem 2. Note that the function

is almost zero except around the zero of

at the origin.
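In the spirit of Theorem 2 and of Figures 5 and 6, the effect of the band approximation can be sketched by computing the generalized eigenvalues of the pair $(T_M, B_s)$, i.e., the eigenvalues of $B_s^{-1}T_M$, and comparing them with sorted samples of $f/f_s$, where $B_s$ keeps the diagonals $|i-j| \le s$ of $T_M$ and $f_s$ is the corresponding truncated cosine sum. Here $B_s$ is a plain truncation, which we assume matches the band matrix in (33) up to details not reproduced here; the helper names and the closed form of the symbol are the same assumptions as in the earlier sketches.

```python
import numpy as np
from scipy.linalg import eigh, eigvalsh, toeplitz


def riesz_column(alpha, M):
    """First column of T_M = -(G + G^T), the assumed form of the coefficient matrix."""
    g = np.empty(M + 2)
    g[0] = 1.0
    for k in range(1, M + 2):
        g[k] = (1.0 - (alpha + 1.0) / k) * g[k - 1]
    t = -g[1:M + 1]
    t[0] = -2.0 * g[1]
    if M > 1:
        t[1] = -(g[0] + g[2])
    return t


def symbol(alpha, theta):
    """Closed-form symbol of -(G + G^T), theta != 0."""
    z = np.exp(1j * theta)
    return (-(1.0 / z) * (1.0 - z) ** alpha - z * (1.0 - 1.0 / z) ** alpha).real


alpha, M = 1.5, 512
t = riesz_column(alpha, M)
T = toeplitz(t)
lam = eigvalsh(T)
kappa_T = lam[-1] / lam[0]

theta = np.pi * np.arange(1, M + 1) / (M + 1)
report = {}
for s in (1, 2, 4, 8, 16):
    B = toeplitz(np.where(np.arange(M) <= s, t, 0.0))   # keep diagonals |i - j| <= s
    mu = eigh(T, B, eigvals_only=True)                   # eigenvalues of B^{-1} T, ascending
    f_s = t[0] + 2.0 * np.cos(np.outer(theta, np.arange(1, s + 1))) @ t[1:s + 1]
    weyl_gap = np.mean(np.abs(mu - np.sort(symbol(alpha, theta) / f_s)))
    report[s] = (mu[0], mu[-1], mu[-1] / mu[0], weyl_gap)

print(f"kappa(T) = {kappa_T:.3e}")
for s, (lo, hi, kappa, gap) in report.items():
    print(f"s={s:2d}  eig in [{lo:.3e}, {hi:.3f}]  kappa={kappa:.3e}  mean gap={gap:.2e}")
```

Since $f/f_s$ vanishes at the origin while $f_s(0) > 0$, the smallest preconditioned eigenvalue still tends to zero as $M$ grows, consistently with the loss of optimality of band preconditioning discussed in Section 2.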

5. Numerical Examples

In this section, we present some numerical examples to verify the effectiveness of the MGM used both as a solver and as a preconditioner. In what follows:

consists of a Galerkin V-cycle with linear interpolation as grid transfer operator and and iterations of weighted Jacobi as pre- and post-smoother, respectively;

and

represent the Chan [39] and Strang circulant preconditioners, respectively;

denotes the banded preconditioner, defined as in Equation (33) and inverted using MGM with the Galerkin approach

;

and are multigrid preconditioners based on the Galerkin and on the geometric approach, respectively.

The aforementioned preconditioners are combined with CG and GMRES, using the built-in MATLAB functions pcg and gmres. The stopping criterion is chosen as

where

denotes the Euclidean norm,

is the residual vector at the $k$-th iteration. The initial guess is the zero vector. All numerical experiments are run using MATLAB R2021a on an HP 17-cp0000nl computer with an AMD Ryzen 7 5700U with Radeon Graphics CPU and 16 GB of RAM.

The following examples are selected to validate the theoretical analysis. Specifically, Example 1 is a 1D problem, which helps in determining the optimal settings for the multigrid method and the circulant preconditioner. Example 2 is a 2D constant-coefficient example, which extends the findings from 1D to a multidimensional context. Example 3 is particularly noteworthy, as it involves a 2D problem with non-constant coefficients. This example demonstrates the robustness of multigrid methods compared to matrix–algebra (circulant and $\tau$) preconditioners when dealing with varying diffusion coefficients.

Example 1. Consider the 1D-RFDE given in Equation (1), with and the source term built from the exact solution

Table 1 shows the number of iterations of the proposed multigrid methods and preconditioners for three different values of

.

Regarding multigrid methods, the observations are as follows:

The Galerkin approach is robust, even as V-cycle, in agreement with the previous theoretical analysis.

The geometric approach is robust only when .

The robustness of the geometric multigrid can be improved by using it as a preconditioner, as seen in the column . In particular, it is even more robust than the band multigrid preconditioner .

When comparing the circulant preconditioners, the Strang preconditioner is preferable to the optimal Chan preconditioner .
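A rough 1D illustration of the comparison between unpreconditioned CG and CG preconditioned by the Strang circulant follows. The Strang circulant keeps the central diagonals of the Toeplitz matrix and wraps them around, and it is inverted with FFTs because its eigenvalues are the DFT of its first column. The sketch uses a textbook PCG written out explicitly (rather than MATLAB's pcg), the assumed matrix $T_M = -(G+G^{T})$, a right-hand side built from an all-ones exact solution, and a relative-residual tolerance of $10^{-8}$; none of these settings is taken from Example 1, so the iteration counts are not those of Table 1. The helper names are hypothetical.

```python
import numpy as np
from scipy.linalg import toeplitz


def riesz_column(alpha, M):
    """First column of T_M = -(G + G^T), the assumed form of the coefficient matrix."""
    g = np.empty(M + 2)
    g[0] = 1.0
    for k in range(1, M + 2):
        g[k] = (1.0 - (alpha + 1.0) / k) * g[k - 1]
    t = -g[1:M + 1]
    t[0] = -2.0 * g[1]
    if M > 1:
        t[1] = -(g[0] + g[2])
    return t


def pcg(apply_A, b, apply_Pinv=None, tol=1e-8, maxit=10000):
    """Preconditioned CG from x0 = 0; stops when ||r_k|| <= tol * ||b||. Returns (x, iters)."""
    precond = (lambda r: r) if apply_Pinv is None else apply_Pinv
    x = np.zeros_like(b)
    r = b.copy()
    z = precond(r)
    p = z.copy()
    rz = r @ z
    nb = np.linalg.norm(b)
    for it in range(1, maxit + 1):
        Ap = apply_A(p)
        step = rz / (p @ Ap)
        x += step * p
        r -= step * Ap
        if np.linalg.norm(r) <= tol * nb:
            return x, it
        z = precond(r)
        rz_new = r @ z
        p = z + (rz_new / rz) * p
        rz = rz_new
    return x, maxit


def strang_eigenvalues(t):
    """Eigenvalues of the Strang circulant: c_k = t_k for k <= M//2, t_{M-k} otherwise."""
    M = t.size
    k = np.arange(M)
    c = np.where(k <= M // 2, t, t[(M - k) % M])
    return np.fft.fft(c).real


alpha, M = 1.5, 1023
t = riesz_column(alpha, M)
T = toeplitz(t)
b = T @ np.ones(M)                      # right-hand side of an all-ones exact solution

lam_C = strang_eigenvalues(t)           # all positive for this diagonally dominant T
x_cg, it_cg = pcg(lambda v: T @ v, b)
x_st, it_strang = pcg(lambda v: T @ v, b,
                      lambda r: np.fft.ifft(np.fft.fft(r) / lam_C).real)
print(it_cg, it_strang)
```

A dense product with $T$ keeps the example short; in practice, the product with $T$ would also be computed by FFT after embedding $T$ in a circulant of double size.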

2D Problems

Here, we extend our study to the two-dimensional variable-coefficient RFDE, given by

with boundary conditions

where

are non-negative diffusion coefficients,

is the source term, and

,

are the Riesz fractional derivatives for

. In order to define the uniform partition of the spatial domain

, we fix

and define

Let us now introduce the following $M$-dimensional vectors, with

:

where

, and

.

Using the shifted Grünwald difference formula for both fractional derivatives in $x$ and $y$ leads to the matrix–vector form

with the following (diagonal times two-level Toeplitz structured) coefficient matrix

where

,

,

,

and

,

is defined as in Section 2.

Note that the coefficient matrix is a strictly diagonally dominant M-matrix. Moreover, when the diffusion coefficients are constant and equal, the coefficient matrix is a symmetric positive definite block Toeplitz with Toeplitz blocks (BTTB) matrix.

In the following examples, we compare the performance of the MGMs and of the banded, circulant, and $\tau$-based preconditioners.

Multigrid preconditioners

and

. In both cases, the grid transfer operator is bilinear interpolation, weighted Jacobi is used as smoother, and one iteration of the V-cycle is performed to approximate the inverse of

. Following the 1D case, the relaxation parameter of Jacobi

is computed as

where

, with

being the first Fourier coefficient of

, the symbol of

(see [13] for more details).

According to the 1D numerical results, the Strang circulant preconditioner outperforms the optimal Chan preconditioner. Therefore, in the following, we consider only the 2-level Strang circulant preconditioner defined as

where

,

, and

is the Strang circulant approximation of the Toeplitz matrix

.

As in the 1D case, we extend the banded preconditioner strategy to the 2D case. To approximate the inverse of , we perform iterations of the V-cycle with the Galerkin approach, weighted Jacobi as smoother, and bilinear interpolation as grid transfer operator.

The 2D-banded matrix is defined as

denotes the $\tau$-based preconditioner. For the symmetric Toeplitz matrix

, with

, the $\tau$ matrix is

where

is a Hankel matrix, whose entries along antidiagonals are constant and equal to

Thanks to [40], the $\tau$ matrix can be diagonalized as follows

where

and

is the sine transform matrix whose entries are

The $\tau$-based preconditioner of the resulting linear system (39) is

with

,

) and

is the diagonal matrix containing the average of the diffusion coefficients. From the computational point of view,

is extremely suitable because the matrix–vector product can be performed in

operations through the discrete sine transform (DST) algorithm.
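A 1D sketch of the $\tau$ construction described above: $H(T)$ is filled with the antidiagonal values $t_2,\dots,t_{M-1},0,0,0,t_{M-1},\dots,t_2$, the eigenvalues of $\tau(T)$ are taken as $\lambda_j = t_0 + 2\sum_{k=1}^{M-1} t_k\cos(kj\pi/(M+1))$, and systems with $\tau(T)$ are solved with two type-I DSTs from `scipy.fft`. This follows the classical natural-$\tau$ construction; we assume, without checking against the displayed formulas above, that the conventions coincide. The helper names are hypothetical, and `riesz_column` keeps the assumed form $T_M = -(G+G^{T})$.

```python
import numpy as np
from scipy.fft import dst
from scipy.linalg import toeplitz


def riesz_column(alpha, M):
    """First column of T_M = -(G + G^T), the assumed form of the coefficient matrix."""
    g = np.empty(M + 2)
    g[0] = 1.0
    for k in range(1, M + 2):
        g[k] = (1.0 - (alpha + 1.0) / k) * g[k - 1]
    t = -g[1:M + 1]
    t[0] = -2.0 * g[1]
    if M > 1:
        t[1] = -(g[0] + g[2])
    return t


def tau_matrix(t):
    """tau(T) = T - H(T) for the symmetric Toeplitz matrix T with first column t."""
    M = t.size
    s = np.add.outer(np.arange(M), np.arange(M))    # antidiagonal index i + j (0-based)
    H = np.zeros((M, M))
    top = s + 2 <= M - 1                            # H[i, j] = t_{i+j+2}
    H[top] = t[(s + 2)[top]]
    bottom = s >= M + 1                             # H[i, j] = t_{2M-(i+j)}
    H[bottom] = t[(2 * M - s)[bottom]]
    return toeplitz(t) - H


def tau_eigenvalues(t):
    """lambda_j = t_0 + 2 sum_{k>=1} t_k cos(k j pi / (M + 1)), j = 1..M."""
    M = t.size
    theta = np.pi * np.arange(1, M + 1) / (M + 1)
    return t[0] + 2.0 * np.cos(np.outer(theta, np.arange(1, M))) @ t[1:]


def tau_solve(t, b, lam=None):
    """Solve tau(T) x = b as x = S diag(1/lam) S b, with S the orthogonal DST-I matrix."""
    M = t.size
    lam = tau_eigenvalues(t) if lam is None else lam
    scale = 1.0 / np.sqrt(2.0 * (M + 1))   # dst(type=1) computes 2*sum(...); S uses sqrt(2/(M+1))
    return scale * dst(scale * dst(b, type=1) / lam, type=1)


M = 64
t = riesz_column(1.5, M)
tau_T = tau_matrix(t)
lam = tau_eigenvalues(t)
idx = np.arange(1, M + 1)
S = np.sqrt(2.0 / (M + 1)) * np.sin(np.outer(idx, idx) * np.pi / (M + 1))
b = np.random.default_rng(1).standard_normal(M)
x = tau_solve(t, b, lam)
print(np.abs(S @ tau_T @ S - np.diag(lam)).max(), np.abs(tau_T @ x - b).max())
```

The explicit matrix `S` is built only to verify the diagonalization; the solver itself uses the fast transform, which is what makes the $\tau$ preconditioner cheap to apply.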

Example 2. In this example, we consider the 2D-RFDE given in Equation (37), with and . The source term and exact solution are given as

and

Table 2 presents the number of iterations and CPU times required by the proposed multigrid methods and preconditioners. Since we have constant coefficients

, the $\tau$ preconditioner

stands out as the most robust choice, both in terms of iteration number and CPU time. The geometric multigrid used as a preconditioner is also a good option, although the robustness of multigrid methods declines in the case of anisotropic problems, i.e., when

. In such a case, it is advisable to adopt the strategies proposed in [7].

Example 3. Here, we consider the 2D-RFDE (37) with and the diffusion coefficients specified as

The source term is built from the exact solution given as

Due to the nonsymmetry of the coefficient matrix caused by the variable diffusion coefficients, the CG method has been replaced with GMRES. Now, the geometric multigrid preconditioner provides a good and robust alternative to the $\tau$ preconditioner, especially when the multigrid method is applied to the band approximation (

) (Table 3).

6. Application to Image Deblurring

Fractional differential operators have been investigated to enhance diffusion in image denoising and deblurring [16,41]. In this section, we investigate the effectiveness of the previous preconditioning strategies for the Tikhonov regularization problem

where

is the noisy and blurred observed image,

is the discrete convolution operator associated with the blurring phenomenon, and

is the regularization parameter.

The solution of the least-squares problem (50) can be obtained by solving the linear system

by the preconditioned CG method, since the coefficient matrix is positive definite. The matrix

exhibits a block Toeplitz with Toeplitz blocks structure as the matrix

, but its spectral behavior is completely different. Indeed, the ill-conditioned subspace of

is very large and substantially intersects the high frequencies. Therefore, the coefficient matrix

has a double source of ill-conditioning, involving both low and high frequencies. As a result, the theoretical analysis employed above cannot be directly applied. Consequently, the application of multigrid methods presents considerable challenges, as noted in [42], and requires further investigation. Here, we show the effectiveness of the $\tau$ preconditioner in comparison with the Strang circulant preconditioner and with CG without preconditioning.

We consider the true satellite image of

pixels in Figure 7a. The observed image in Figure 7b is affected by a Gaussian blur and

of white Gaussian noise. We choose the fractional derivative

and the diffusion coefficients

. The matrix

is defined as in (40), while the Strang circulant and the $\tau$ preconditioners are defined in (42) and (46), respectively. Since the quality of the reconstruction is determined by the model (50), all the different solvers provide a similar reconstruction. The reconstructed image for

is shown in Figure 7c.

Various strategies exist for estimating the parameter

; see, e.g., [43]. However, such an estimation is beyond the scope of this work; instead, we test different values of

. The linear system associated with (50) is solved using the built-in MATLAB function pcg with a tolerance of

.

Table 4 reports the number of iterations and the related restoration error for various values of

. It is noteworthy that the $\tau$ preconditioner yields a significant speedup compared with both CG without preconditioning and PCG with the Strang circulant preconditioner, for all relevant values of

.

We should also point out that the considered images have a black background, and hence all the various BCs are equivalent in terms of the precision of the reconstruction. However, in a general setting, the most precise BCs are those related to the $\tau$ algebra; see [30].