Abstract

Particle swarm optimization (PSO) for large-scale problems has long been an active research topic for two reasons. First, swarm diversity preservation remains challenging for current PSO variants on large-scale optimization problems, which makes it difficult for PSO to balance exploration and exploitation. Second, current PSO variants for large-scale optimization problems often introduce additional operators to improve diversity preservation, which increases algorithm complexity. To address these issues, this paper proposes a dual-competition-based particle update strategy (DCS), which selects the particles to be updated and their exemplars through two rounds of random pairing competitions and thereby directly benefits swarm diversity preservation. Furthermore, DCS designates primary and secondary exemplars by sorting their fitness and uses them for exploitation and exploration, respectively, leading to a dual-competition-based swarm optimizer. Thanks to DCS, on the one hand, the proposed algorithm protects more than half of the particles from being updated, which benefits diversity preservation at the swarm level. On the other hand, DCS provides an efficient mechanism for selecting exploration and exploitation exemplars, which helps balance exploration and exploitation at the particle update level. Additionally, this paper analyzes the stability conditions and computational complexity of the proposed algorithm. In the experimental section, comparisons with seven state-of-the-art algorithms on a recently proposed large-scale benchmark suite verify the competitiveness of the proposed algorithm on large-scale optimization problems.

Keywords:

large-scale optimization; particle swarm optimization; exploration; exploitation; diversity preservation

MSC: 68W50

1. Introduction

Particle swarm optimization (PSO) has been widely applied in engineering optimization over the past decades because of its simplicity and efficiency [1,2,3,4,5]. On the one hand, PSO shows better robustness and computational efficiency than gradient-based algorithms [6]. On the other hand, compared with many existing evolutionary algorithms (e.g., genetic algorithms [7], ant colony optimization [8], teaching–learning-based optimization [9], and brain storm optimization [10]), PSO has the advantages of easy implementation, efficient parameter tuning, and flexible hybridization with other optimization methods [11].

However, PSO has been found to be inefficient in solving large-scale optimization problems (LSOPs). Without loss of generality, the LSOPs considered in this paper are minimization problems, formulated as (1):

$$\min_{\mathbf{x}} f(\mathbf{x}), \quad \mathbf{x} = [x_1, x_2, \ldots, x_D] \tag{1}$$

where $D$ is the dimensionality of the optimization problem and $x_d$ denotes the $d$-th dimension of the decision vector $\mathbf{x}$. Note that (i) $f(\mathbf{x})$ is a continuous black-box function with boundary constraints on the decision variables, and (ii) the dimensionality considered in the main experiments of this paper is 1000, which is a common setting in relevant research. PSO is inefficient on LSOPs mainly because it cannot effectively preserve swarm diversity and is therefore easily trapped in local optima. Specifically, this is caused by PSO's exemplar selection mechanism. The personal best positions (pbest) and the global best position (gbest) in PSO have poor diversity during the optimization process [12], since it is difficult to locate more promising solutions based on the current swarm. As a result, the swarm tends to converge to a local optimum, which leads to premature convergence. In addition, limited computing resources allow PSO to search only part of the search space [13].

Targeting these two issues, a large body of research has sought to improve PSO for LSOPs. Current mainstream work can be roughly divided into three categories: exemplar diversification, decoupled learning, and hybridization of PSO with other techniques.

The methods in the first category enhance the diversity of the exemplars for the updated particles [12,13,14,15,16,17,18,19], thereby improving PSO's balance between exploration and exploitation. For instance, the competitive swarm optimizer (CSO) assigns each updated particle a distinct exemplar [12]. The social learning particle swarm optimizer (SLPSO) allows each updated particle to learn from any particle that is better than it [14]. The level-based learning swarm optimizer (LLSO) randomly selects two exemplars for each updated particle [13]. In these ways, the exemplars of the updated particles are greatly diversified compared with the basic PSO, which benefits diversity preservation.

The approaches in the second category decouple the convergence learning component from the diversity learning component in the velocity update structure of the basic PSO [1,20,21]. These algorithms then adjust the parameters of the diversity learning component to control swarm diversity. Consequently, a key design factor in such methods is the local diversity measurement used as the basis of the diversity learning component, such as the local sparseness degree [1].

For hybrid PSO, the main idea is to use other optimization techniques to enhance diversity preservation in PSO. For instance, chaotic local search [22] and the memetic algorithm [23] can be adopted to strengthen the local search of PSO for diversity preservation. Update rules from the simulated annealing algorithm [24] and the genetic algorithm [25] can also be employed to help preserve diversity.

However, these methods still leave room for improvement. First, among methods with exemplar diversification strategies, early attempts such as CSO and SLPSO fail to diversify both exemplars of the updated particles simultaneously [13]. Recent methods such as LLSO are ineffective at preserving promising particles, which adversely affects diversity preservation. Furthermore, recent methods often introduce additional operators for exemplar selection, leading to extra parameter tuning and higher computational complexity. Second, approaches with decoupled learning mechanisms are poor at preserving promising particles. They are also computationally expensive when evaluating local diversity information, which may limit their performance when computational resources are limited. In summary, recent methods in both categories fail to effectively protect promising particles from being updated and to keep the algorithm simple. Third, hybrid PSO variants require extra parameter tuning because they introduce other optimization operators [12]. Therefore, diversity preservation for PSO in LSOPs remains challenging.

To address this issue, this paper aims to design novel learning mechanisms for both diversity preservation and efficient exemplar selection to improve PSO in both performance and efficiency. To this end, a novel variant of PSO is proposed, and the main contributions of this paper are listed as follows:

- To enhance the diversity preservation ability of PSO, this paper puts forward an efficient dual-competition-based learning mechanism that diversifies the exemplars of the updated particles and preserves promising particles. Therefore, the proposed mechanism can significantly enhance PSO in diversity preservation.

- Based on the proposed dual-competition-based learning mechanism, a novel variant of PSO for LSOPs is proposed, referred to as the dual-competition-based particle swarm optimizer (PSO-DC).

- Comprehensive theoretical analysis and experiments are conducted, which demonstrate the competitiveness of the proposed algorithm from both theoretical and experimental perspectives.

The subsequent sections of this work are structured as follows: Section 2 provides a review of the current improvements in PSO for LSOPs. Section 3 presents the details of the proposed dual-competition-based learning mechanism. Section 4 provides a theoretical analysis of the computational complexity and the searching characteristics of the proposed algorithm. Section 5 experimentally tests the performance of the proposed algorithm. Finally, we conclude this paper and highlight directions for future work in Section 6.

2. Related Work

In this section, to provide readers with a comprehensive understanding of the developments in PSO for LSOPs, a detailed review of the current mainstream improvements is presented. It covers cooperative coevolution (CC)-based PSO variants, exemplar diversification-based PSO variants, decoupled learning-based PSO variants, and hybrid PSO variants.

2.1. CC-Based Methods for Dimensionality Reduction

The cooperative coevolution (CC) framework was first introduced into PSO by van den Bergh et al. [26]. It solves LSOPs in low-dimensional subspaces to reduce the difficulty that PSO has with diversity preservation, as evidenced by CCPSO-$S_K$ and CCPSO-$H_K$. These two methods randomly divide the whole decision vector into $K$ sub-components, where $K$ is predefined by users. PSO is then used to optimize the decomposed sub-components simultaneously.
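The decomposition step described above can be illustrated with a short sketch. The function name `random_grouping` is hypothetical. The sketch assumes that $D$ is divisible by $K$ so that all sub-components have equal size. Optimizing each group with its own swarm against a shared context vector is not shown.

```python
import random


def random_grouping(dim, k, rng=random):
    """Randomly partition the variable indices 0..dim-1 into k equal groups.

    Illustrative sketch of the decomposition step in CC-based PSO;
    it assumes dim is divisible by k.
    """
    if dim % k != 0:
        raise ValueError("dim must be divisible by k in this sketch")
    indices = list(range(dim))
    rng.shuffle(indices)  # random permutation of the variable indices
    size = dim // k
    return [sorted(indices[g * size:(g + 1) * size]) for g in range(k)]


groups = random_grouping(1000, 10, random.Random(0))
print(len(groups), len(groups[0]))  # 10 100
```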

However, CC-based methods are ineffective at solving LSOPs that involve complex interactions among the decision variables. To solve this problem, randomness-based variable grouping methods and analysis-based differential grouping methods have been proposed over the past decades. First, randomness-based variable grouping methods, such as CCPSO [27], CCPSO2 [28], and CGPSO [29], regroup the variables dynamically and randomly. They update the grouping according to improvements in overall performance, which increases the chance that interacting variables are placed in the same component. Second, among methods based on variable interaction analysis, a baseline is differential grouping (DG) [30], which directly detects interactions between variables from fitness variations. Building on DG, different versions have been put forward for specific problems and for grouping acceleration, such as DG2 [31], RDG [32], RDG2 [33], ERDG [34], MDG [35], and several other improved DG variants [36,37].
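The core idea of DG can be sketched as a pairwise test. The test perturbs $x_i$ with and without a prior perturbation of $x_j$ and flags an interaction if the two fitness changes differ by more than a threshold. The function `interacts`, the perturbation size `delta`, and the threshold `eps` below are illustrative assumptions. The original DG [30] and its successors specify their own perturbation points and threshold rules.

```python
def interacts(f, x, i, j, delta=1.0, eps=1e-3):
    """Differential-grouping-style pairwise interaction test (sketch).

    Compares the fitness change caused by perturbing x[i] with and without
    a prior perturbation of x[j]; a difference above eps signals interaction.
    """
    x_i = list(x)
    x_i[i] += delta
    delta1 = f(x_i) - f(x)          # effect of x[i] at the base point
    x_j = list(x)
    x_j[j] += delta
    x_ij = list(x_j)
    x_ij[i] += delta
    delta2 = f(x_ij) - f(x_j)       # effect of x[i] after moving x[j]
    return abs(delta1 - delta2) > eps


def coupled(x):
    """x[0] and x[1] interact; x[2] is separable."""
    return (x[0] * x[1]) ** 2 + x[2] ** 2


print(interacts(coupled, [1.0, 1.0, 1.0], 0, 1))  # True
print(interacts(coupled, [1.0, 1.0, 1.0], 0, 2))  # False
```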

Despite the success of current CC-based algorithms, such methods have the following drawbacks [1,13]: (i) grouping accuracy cannot be ensured; (ii) variable grouping is time-consuming; and (iii) it is difficult to set proper context vectors to balance the exploration and exploitation of CC-based algorithms. Therefore, researchers tend to design novel exemplar diversification strategies and learning structures for PSO that directly enhance its diversity preservation and, in turn, its search efficiency.

2.2. Exemplar Diversification-Based Methods

As discussed in Section 1, such algorithms focus on diversifying the exemplars of the updated particles, mainly by modifying the topology among particles.

Early typical works include CSO [12] and SLPSO [14]. On the one hand, both employ the current positions of other particles as exemplars for the updated particles, and these current positions are more diverse than the pbest and gbest in the basic PSO. On the other hand, CSO leaves half of the particles unchanged at each generation, which significantly benefits diversity preservation [12,13]. Nevertheless, the second exemplar for each updated particle in CSO and SLPSO is the mean position of the whole swarm, which adversely affects diversity preservation [13]. To solve this issue, various exemplar selection strategies have been proposed in recent years, such as level-based exemplar selection [13,16,38], randomness-based exemplar selection [17,18], and superiority combination exemplar selection [19]. Lan et al. introduced a modified competition mechanism and a two-phase learning strategy [39]. All these methods concentrate on diversifying the exemplars of the updated particles to balance convergence and diversity in PSO.

However, although recently proposed exemplar selection mechanisms diversify the exemplars far better than CSO does, they have not shown advantages in preserving promising particles. As noted in [1,13], preserving promising particles significantly benefits diversity preservation.

2.3. Decoupled Learning-Based Methods

Unlike exemplar diversification-based methods, decoupled learning-based methods control swarm diversity through specific parameters. In line with this idea, Li et al. put forward three PSO variants, namely APSO-DEE, MAPSODEE, and PSODBCD [1,20,21]. These methods build on different local diversity measurements and use particles with better local diversity to guide particles with poorer local diversity. Zhang et al. also developed decoupled convergence and diversity learning structures [40], in which particles with different characteristics are updated for convergence and for diversity preservation, respectively.

In general, such methods show good potential in balancing exploration and exploitation. However, additional indicators must be designed to evaluate local diversity information, which raises issues of computational complexity and of local diversity measurement accuracy. Furthermore, such methods are also inefficient at preserving promising particles for diversity enhancement. For example, most of the particles in APSO-DEE are updated in the latter half of the optimization process.

2.4. Hybrid Methods

Hybrid methods mainly adopt different optimization techniques to design new particle update strategies for diversity preservation. For example, CGPSO incorporates chaotic local search into PSO, which helps refine each solution within its local search region and thereby benefits diversity preservation [22]. SA-PSO employs an update rule from the simulated annealing algorithm to help avoid useless particle updates, which helps protect diversity [24]. HPSOGA designs a diversity enhancement strategy based on genetic operators [25].

However, such methods share a notable issue: extra parameter tuning [12]. This is because different optimization techniques contain different parameters. These extra parameters increase the complexity of the algorithm, which adversely affects its robustness and generality.

3. Proposed Method

This section presents a novel PSO variant that diversifies the exemplars of the updated particles, protects promising particles, and has low computational cost.

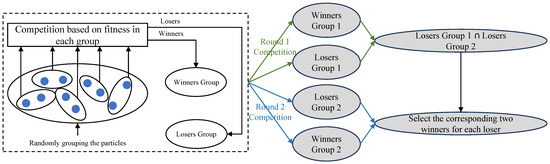

First, Figure 1 illustrates the proposed method for selecting the particles to be updated and their exemplars, referred to as the dual-competition-based strategy (DCS). Specifically, the competition mechanism [12] is executed independently twice at each generation, yielding two winner groups and two loser groups. The particles to be updated at each generation are obtained by taking the intersection of the two loser groups, namely, “Losers Group 1” and “Losers Group 2” in Figure 1. The two exemplars of each particle to be updated are the winners that defeated it in the two competitions, taken from “Winners Group 1” and “Winners Group 2”, respectively. For completeness, the competition mechanism is briefly summarized. First, a swarm of size $NP$ is randomly divided into $NP/2$ pairs. Second, in each pair, the particle with the better fitness is regarded as the winner and put into the winners group, while the other is put into the losers group.
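The selection step of DCS can be sketched in a few lines. The helper names `compete` and `dual_competition` are hypothetical. The sketch assumes that fitness is minimized and that $NP$ is even so that every particle has a partner. A particle may, by chance, lose to the same winner in both rounds, in which case its two exemplars coincide. The text does not say how that case is handled, so the sketch leaves it as is.

```python
import random


def compete(fitness, rng):
    """One round of CSO-style random pairwise competition (minimization).

    Returns a dict mapping each loser index to the index of its winner.
    """
    order = list(range(len(fitness)))
    rng.shuffle(order)  # random pairing of the swarm
    beaten_by = {}
    for a, b in zip(order[0::2], order[1::2]):
        winner, loser = (a, b) if fitness[a] <= fitness[b] else (b, a)
        beaten_by[loser] = winner
    return beaten_by


def dual_competition(fitness, rng=random):
    """Sketch of DCS: two independent competitions, then intersect the losers.

    Returns {particle_to_update: (winner_round_1, winner_round_2)}.
    """
    if len(fitness) % 2:
        raise ValueError("swarm size is assumed to be even")
    round1 = compete(fitness, rng)
    round2 = compete(fitness, rng)
    to_update = sorted(set(round1) & set(round2))
    return {i: (round1[i], round2[i]) for i in to_update}


fitness = [random.random() for _ in range(600)]
selected = dual_competition(fitness)
print(f"{len(selected)} of {len(fitness)} particles will be updated")
```

Each loser group contains exactly $NP/2$ particles, so their intersection never exceeds $NP/2$, and at least half of the swarm is left untouched. With distinct fitness values and independent pairings, the particle ranked $k$-th loses a round with probability $(k-1)/(NP-1)$. The expected number of updated particles is therefore about one third of the swarm. In this sketch, each round costs time linear in $NP$.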

Figure 1.

Sketch of the proposed mechanism for selecting the exemplars and the particles to be updated.

Second, in order to distinguish the exploitation and exploration exemplars, the two exemplars for each particle are sorted based on their fitness: the better exemplar is adopted as the exploitation exemplar, and the other exemplar is employed as the exploration exemplar. This is inspired by [13]: learning from a more promising exemplar shows advantages in exploitation, while learning from a relatively inferior exemplar tends to explore more regions of the decision space.
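Under a minimization convention, this sorting step reduces to a single comparison. The function name `assign_roles` is hypothetical. Ties are resolved in favor of the first exemplar, an arbitrary choice that the text does not specify.

```python
def assign_roles(i_e1, i_e2, fitness):
    """Order two exemplar indices as (exploitation, exploration).

    Minimization: the exemplar with the lower fitness value becomes the
    exploitation exemplar, and the other one the exploration exemplar.
    """
    if fitness[i_e1] <= fitness[i_e2]:
        return i_e1, i_e2
    return i_e2, i_e1


fitness = [3.2, 0.7, 1.5]
print(assign_roles(0, 1, fitness))  # (1, 0)
```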

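Finally, the velocity and position of each particle to be updated are iterated according to (2) and (3), which yields the proposed dual-competition-based particle swarm optimizer (PSO-DC).

$$v_i^d(t+1) = r_1 v_i^d(t) + r_2\left(x_{e1}^d(t) - x_i^d(t)\right) + \varphi\, r_3\left(x_{e2}^d(t) - x_i^d(t)\right) \tag{2}$$

$$x_i^d(t+1) = x_i^d(t) + v_i^d(t+1) \tag{3}$$

where $v_i^d(t)$ is the $d$-th dimension of the velocity of the updated particle $i$ at generation $t$; $x_{e1}^d(t)$ and $x_{e2}^d(t)$ are the $d$-th dimensions of the positions of the exploitation exemplar and the exploration exemplar, respectively; $r_1$, $r_2$, and $r_3$ are random numbers generated within $[0, 1]$; and $\varphi$ is a user-defined parameter that balances exploration and exploitation. The pseudocode of the proposed algorithm is presented in Algorithm 1.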
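The per-particle update can be sketched as follows. The sketch assumes the form $v \leftarrow r_1 v + r_2 (x_{e1} - x) + \varphi r_3 (x_{e2} - x)$ followed by $x \leftarrow x + v$, with $r_1$, $r_2$, and $r_3$ drawn independently for every dimension. The function name `update_particle` and the per-dimension sampling are assumptions, and boundary handling is omitted.

```python
import random


def update_particle(x, v, x_exploit, x_explore, phi=0.5, rng=random):
    """One PSO-DC-style velocity and position update (sketch).

    Assumed form, per dimension d:
        v_d <- r1*v_d + r2*(x_exploit_d - x_d) + phi*r3*(x_explore_d - x_d)
        x_d <- x_d + v_d
    with r1, r2, r3 drawn uniformly from [0, 1) for every dimension.
    """
    new_x, new_v = [], []
    for xd, vd, e1d, e2d in zip(x, v, x_exploit, x_explore):
        r1, r2, r3 = rng.random(), rng.random(), rng.random()
        vd = r1 * vd + r2 * (e1d - xd) + phi * r3 * (e2d - xd)
        new_v.append(vd)
        new_x.append(xd + vd)
    return new_x, new_v


x, v = update_particle([0.0, 0.0], [0.0, 0.0], [1.0, 1.0], [2.0, -2.0])
print(x, v)
```

Because each velocity term pulls toward one exemplar, a particle that already sits on both exemplars with zero velocity stays where it is.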

PSO-DC differs from current PSO variants mainly in the following respects. (i) PSO-DC has an advantage over CSO and SLPSO because it selects two different exemplars for each updated particle. (ii) PSO-DC is better at preserving promising particles at each generation, since DCS allows more than half of the particles to be retained unchanged in the next generation. This significantly helps PSO-DC preserve diversity. (iii) Compared with APSO-DEE [1], DLLSO [13], RCI-PSO [18], and similar methods, PSO-DC introduces no extra parameters. In summary, PSO-DC has advantages in both diversity preservation and simplicity.

| Algorithm 1. The pseudocode of PSO-DC. |
|---|
| Input: swarm size $NP$, termination criterion, variable boundaries. |
| Output: the best particle found during the optimization. |
| 1: Randomly generate a swarm of $NP$ particles within the variable boundaries; |
| 2: $t = 0$; |
| 3: while the termination criterion is not met do |
| 4: Evaluate the swarm; |
| 5: Conduct DCS to obtain the set of particles to be updated $U$, the exploitation exemplar set $E_1$, and the exploration exemplar set $E_2$; |
| 6: for each particle $i$ in $U$ do |
| 7: Update particle $i$ according to (2) and (3); |
| 8: end for |
| 9: Update the best particle found so far with the current swarm; |
| 10: $t = t + 1$; |
| 11: end while |
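Combining these pieces, Algorithm 1 can be sketched as a compact, dependency-free loop. This is an illustrative sketch under stated assumptions, not the authors' code. The function `pso_dc` and its arguments are hypothetical. The velocity update assumes the form $v \leftarrow r_1 v + r_2 (x_{e1} - x) + \varphi r_3 (x_{e2} - x)$. Velocities start at zero and positions are clipped to the bounds, both of which are assumptions. Following line 4, the whole swarm is re-evaluated at every generation.

```python
import random


def pso_dc(f, dim, lower, upper, np_=600, phi=0.5, max_fes=30000, seed=0):
    """Illustrative PSO-DC loop following Algorithm 1 (minimization).

    Assumed (not fixed by the text): the update
    v <- r1*v + r2*(e1 - x) + phi*r3*(e2 - x), zero initial velocities,
    clipping to [lower, upper], and an even swarm size np_.
    """
    rng = random.Random(seed)
    xs = [[rng.uniform(lower, upper) for _ in range(dim)] for _ in range(np_)]
    vs = [[0.0] * dim for _ in range(np_)]
    best_x, best_f = None, float("inf")
    fes = 0
    while fes + np_ <= max_fes:  # line 3: stop before exceeding the FE budget
        fit = [f(x) for x in xs]  # line 4: evaluate the swarm
        fes += np_
        i_best = min(range(np_), key=fit.__getitem__)
        if fit[i_best] < best_f:  # line 9: keep the best particle found
            best_f, best_x = fit[i_best], list(xs[i_best])
        rounds = []
        for _ in range(2):  # line 5: two independent random competitions
            order = list(range(np_))
            rng.shuffle(order)
            beaten_by = {}
            for a, b in zip(order[0::2], order[1::2]):
                winner, loser = (a, b) if fit[a] <= fit[b] else (b, a)
                beaten_by[loser] = winner
            rounds.append(beaten_by)
        # Particles that lost both rounds are updated. Exemplars are winners,
        # so they never belong to this set and in-place updates are safe.
        for i in set(rounds[0]) & set(rounds[1]):  # lines 6-8
            w1, w2 = rounds[0][i], rounds[1][i]
            e1, e2 = (w1, w2) if fit[w1] <= fit[w2] else (w2, w1)
            for d in range(dim):
                r1, r2, r3 = rng.random(), rng.random(), rng.random()
                vs[i][d] = (r1 * vs[i][d] + r2 * (xs[e1][d] - xs[i][d])
                            + phi * r3 * (xs[e2][d] - xs[i][d]))
                xs[i][d] = min(max(xs[i][d] + vs[i][d], lower), upper)
    return best_x, best_f
```

For example, `pso_dc(lambda x: sum(t * t for t in x), dim=10, lower=-100.0, upper=100.0, np_=40, max_fes=4000)` runs 100 generations on a 10-dimensional sphere function.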

4. Theoretical Analysis

4.1. Computational Complexity

In this section, the computational complexity of PSO-DC is analyzed by comparing it with the basic PSO and APSO-DEE in terms of learning structure, computational cost, and memory requirements.

First, for the update structure, all these methods adopt three components to form the velocity update structure.

Second, to analyze the computational cost at each generation, we take the basic PSO as the baseline and mainly evaluate the extra computational cost introduced by PSO-DC and APSO-DEE. For PSO-DC, the extra computational cost is mainly incurred by line 5 in Algorithm 1, which performs two rounds of competition and obtains the intersection of the two loser groups. Consequently, in comparison to the basic PSO, the extra computational complexity of PSO-DC at each generation is . According to the analysis in [1], in comparison to the basic PSO, the extra computational complexity of APSO-DEE is .

Finally, regarding memory cost, unlike the basic PSO, neither PSO-DC nor APSO-DEE needs to store pbest and gbest.

In summary, PSO-DC has a simple learning structure, is more computationally efficient than APSO-DEE, and is more memory-efficient than the basic PSO.

4.2. Convergence Stability Analysis

Convergence stability analysis is crucial for evolutionary algorithms (EAs) [41,42]. Because the exemplar selection mechanism of PSO-DC is based on randomness, this paper analyzes the convergence of $E[x(t)]$ for the proposed algorithm using the method proposed in [41], where $E[x(t)]$ is the expected position of an arbitrary particle at generation $t$. Since each dimension of a particle is updated independently in PSO-DC, the convergence stability is analyzed in a one-dimensional search space, as follows.

For simplicity, the velocity update strategy of PSO-DC is rewritten as (4) and (5).

Consequently, can be transformed to (6), where .

Then, can be obtained as (7), where , , , , and are the expectations of the corresponding variables, respectively.

For simplicity, we introduce (9).

Based on the analysis in [41], the necessary and sufficient condition for the convergence of $E[x(t)]$ is that the magnitudes of all eigenvalues of $M$ are smaller than 1. Treating $r_1$, $r_2$, and $r_3$ as uniformly distributed random numbers within $[0, 1]$, their expectations $E[r_1]$, $E[r_2]$, and $E[r_3]$ all equal 1/2. The necessary and sufficient condition for the convergence of $E[x(t)]$ is then given by (10).
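To make the eigenvalue condition concrete, the following sketch works through one possible expected-value model. It assumes the update $v \leftarrow r_1 v + r_2 (x_{e1} - x) + \varphi r_3 (x_{e2} - x)$, $x \leftarrow x + v$. It also treats the exemplar expectations as constants and sets $E[r_1] = E[r_2] = E[r_3] = 1/2$. The function name is hypothetical, and the resulting condition belongs to this simplified model, so it may differ in form from (10).

```python
import cmath


def expected_dynamics_radius(phi, w=0.5, c1=0.5, r3_mean=0.5):
    """Spectral radius of the assumed expected-value recurrence (sketch).

    State y = [E[v], E[x]] with the exemplar expectations held constant:
        E[v'] = w*E[v] - c*E[x] + const
        E[x'] = w*E[v] + (1 - c)*E[x] + const,   c = c1 + phi*r3_mean
    so M = [[w, -c], [w, 1 - c]] has trace 1 + w - c and determinant w.
    """
    c = c1 + phi * r3_mean
    trace, det = 1.0 + w - c, w
    disc = cmath.sqrt(trace * trace - 4.0 * det)  # may be complex
    lam1, lam2 = (trace + disc) / 2.0, (trace - disc) / 2.0
    return max(abs(lam1), abs(lam2))


for phi in (0.5, 4.0, 6.0):
    print(phi, round(expected_dynamics_radius(phi), 3))
# 0.5 0.707
# 4.0 0.707
# 6.0 1.707
```

For a real 2×2 matrix with determinant 0.5, both eigenvalue magnitudes stay below 1 exactly when the trace lies in $(-1.5, 1.5)$. In this simplified model, that means $0 < (1+\varphi)/2 < 3$, i.e., $-1 < \varphi < 5$. The setting $\varphi = 0.5$ used in the experiments lies inside this region.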

4.3. Diversity Preservation Characteristics

To analyze the diversity preservation characteristics of the proposed PSO-DC, this paper follows the analysis method presented in [13]. It compares PSO-DC with APSO-DEE, a recently proposed PSO variant for LSOPs [1].

Similarly, the velocity update strategy of APSO-DEE can be rewritten as (15)–(17):

where and are exemplars in APSO-DEE for convergence and diversity, respectively.

Consequently, the exploration ability of PSO-DC and APSO-DEE is mainly influenced by the diversity of and . In APSO-DEE, is the best particle of the sub-swarm that the particle is in, and it is shared by all the updated particles in this sub-swarm. In PSO-DC, and are both randomly selected with the proposed dual-competition strategy. Therefore, the diversity of should be better than that of . Furthermore, thanks to the dual-competition strategy, more than half of the particles can be preserved at each generation. In summary, the diversity preservation ability of PSO-DC is believed to be better than that of APSO-DEE, leading to a better exploration ability in PSO-DC.

5. Experimental Study

This section verifies the performance of the proposed algorithm. First, PSO-DC is empirically compared with seven peer algorithms. Second, a parameter sensitivity analysis is conducted to reveal the characteristics of PSO-DC. Third, the swarm diversity of PSO-DC and APSO-DEE is compared to show the diversity preservation ability of the proposed algorithm. Finally, a scalability test is performed on 3000-dimensional benchmarks.

5.1. Empirical Comparisons

5.1.1. Compared Algorithms and Benchmarks

To validate the performance of PSO-DC, seven popular algorithms for LSOPs were selected for the numerical comparisons: DLLSO [13], APSO-DEE [1], TPLSO [39], RCI-PSO [18], DECC-DG2 [31], DECC-MDG [35], and MLSHADE-SPA [43]. Specifically, DLLSO, TPLSO, and RCI-PSO are PSO variants based on different exemplar diversification strategies; APSO-DEE is designed with a decoupled learning structure; DECC-DG2 and DECC-MDG employ a CC framework; and MLSHADE-SPA is a recently proposed hybrid large-scale evolutionary algorithm.

In the comparisons, a recently proposed benchmark suite [44] was adopted. Its benchmark functions are built from heterogeneous modules based on 16 base functions, e.g., the sphere function, the elliptic function, and Rosenbrock's function. Compared with the CEC 2010 [45] and CEC 2013 [44,46] benchmarks, these benchmarks differ in two respects. First, they adopt a heterogeneous design so that the modules differ from one another. Second, they include versatile coupled modules with three coupling topologies and five coupling degrees (non-coupled, loosely coupled, moderately coupled, tightly coupled, and fully coupled). Therefore, the benchmarks in [44] are expected to be more complicated. Detailed definitions of the benchmarks are presented in Appendix A: Appendix A.1 describes the base functions, and Appendix A.2 defines the formulation of the benchmarks. Readers are referred to [44] for more details.
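For reference, the textbook forms of the three base functions named above can be written as follows. These are the standard unshifted and unrotated definitions, with the elliptic function in its common form with condition number $10^6$. The benchmarks in [44] build heterogeneous, coupled modules on top of their base functions, and those transformations (given in Appendix A and [44]) are not reproduced here.

```python
def sphere(x):
    """Sphere function: sum of squares; minimum 0 at the origin."""
    return sum(v * v for v in x)


def elliptic(x):
    """High-conditioned elliptic function with condition number 1e6."""
    dim = len(x)
    if dim == 1:
        return x[0] * x[0]
    return sum(10 ** (6 * i / (dim - 1)) * v * v for i, v in enumerate(x))


def rosenbrock(x):
    """Rosenbrock's function; minimum 0 at x = (1, ..., 1)."""
    return sum(100 * (x[i] ** 2 - x[i + 1]) ** 2 + (x[i] - 1) ** 2
               for i in range(len(x) - 1))


print(sphere([3.0, 4.0]), elliptic([1.0, 1.0]), rosenbrock([1.0, 1.0, 1.0]))
# 25.0 1000001.0 0.0
```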

All the algorithms were coded in MATLAB R2022b, and each was run independently 25 times on each benchmark on a computer with Windows 11 and an Intel Core i9-14900K processor.

5.1.2. Experimental Settings

For the experimental settings, the dimensionality of the benchmarks was set to 1000. The maximum number of fitness evaluations ($MaxFEs$) was adopted as the termination criterion and set to $3 \times 10^6$. For the selected peer algorithms, the parameter settings recommended in the corresponding references were adopted for fairness. For PSO-DC, the swarm size $NP$ and the parameter $\varphi$ were set to 600 and 0.5, respectively, according to the parameter sensitivity analysis. For the statistical analysis, the Wilcoxon rank-sum test with a significance level of 0.05 was adopted for the comparisons between PSO-DC and the peer algorithms. The Friedman test was used in the parameter analysis experiments.
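The pairwise statistical comparison can be sketched with the standard library alone. The functions `rank_sum_test` and `wlt` are hypothetical. The test uses the large-sample normal approximation with average ranks for ties and no tie correction, similar to `scipy.stats.ranksums`. Assigning the w/l/t label from the sign of the statistic is an assumption about how the tables were produced.

```python
import math


def rank_sum_test(a, b):
    """Two-sided Wilcoxon rank-sum test, normal approximation (sketch).

    Ties receive average ranks; no tie correction is applied to the variance.
    Returns (z, p_value); z < 0 means sample a tends to have smaller values.
    """
    pooled = sorted((v, k) for k, v in enumerate(list(a) + list(b)))
    ranks = [0.0] * len(pooled)
    i = 0
    while i < len(pooled):
        j = i
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        avg = (i + j) / 2.0 + 1.0  # average of the 1-based ranks i+1..j+1
        for t in range(i, j + 1):
            ranks[pooled[t][1]] = avg
        i = j + 1
    n1, n2 = len(a), len(b)
    r1 = sum(ranks[:n1])  # rank sum of sample a
    mean = n1 * (n1 + n2 + 1) / 2.0
    sd = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12.0)
    z = (r1 - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided normal p-value
    return z, p


def wlt(errors_a, errors_b, alpha=0.05):
    """Label algorithm a vs. b on one benchmark (minimization): w, l, or t."""
    z, p = rank_sum_test(errors_a, errors_b)
    if p >= alpha:
        return "t"
    return "w" if z < 0 else "l"
```

With 25 runs per algorithm, as in this study, the normal approximation is usually considered adequate.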

5.1.3. Results

The numerical results are shown in Table 1. The best average result on each benchmark is highlighted with a gray background. The “w/l/t” entries at the bottom of the table give the number of benchmarks on which PSO-DC performed significantly better than, significantly worse than, or statistically tied with the corresponding peer algorithm. A p value is highlighted in bold when PSO-DC performed significantly better than the corresponding peer algorithm.

Table 1.

The numerical results of the algorithms with a benchmark dimensionality of 1000 and $MaxFEs = 3 \times 10^6$.

From Table 1, one can find that the proposed PSO-DC is generally competitive in terms of average performance: it outperforms the peer algorithms on 10 of the 15 test cases. More specifically, PSO-DC wins two, four, two, and two times on the loosely coupled functions to , moderately coupled functions to , tightly coupled functions to , and fully coupled functions to , respectively. This suggests that PSO-DC has advantages in solving complicated benchmarks. Furthermore, according to the statistical tests, PSO-DC significantly outperforms DLLSO, APSO-DEE, TPLSO, RCI-PSO, DECC-DG2, DECC-MDG, and MLSHADE-SPA on 11, 11, 13, 10, 13, 13, and 12 benchmarks, respectively, which further demonstrates its competitiveness. In summary, the numerical comparisons indicate that PSO-DC is competitive in solving LSOPs, especially complicated ones. This suggests that its improved diversity preservation helps it better balance exploration and exploitation.

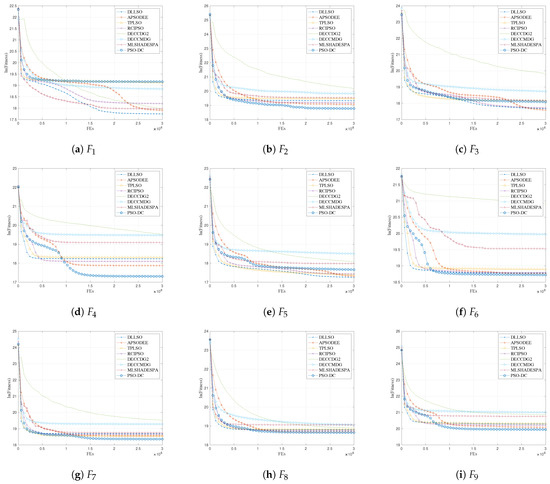

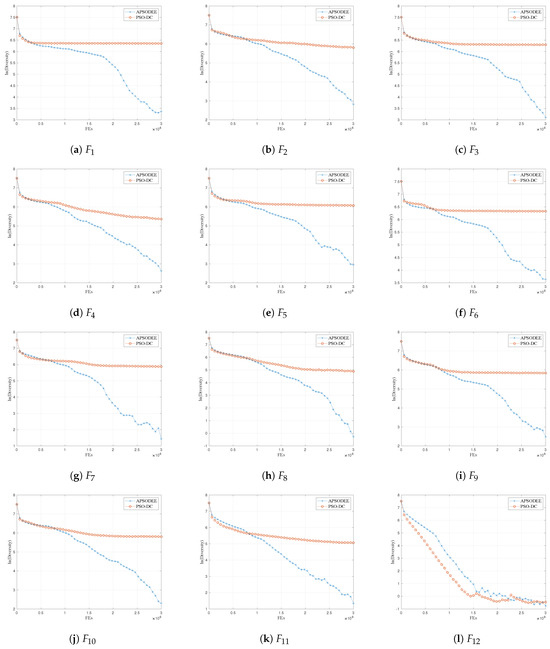

Figure 2 presents the convergence curves of all the compared algorithms. PSO-DC shows moderate convergence speed, but its convergence generally compares favorably with the peer algorithms during the latter optimization stage. A reasonable explanation for this finding is that PSO-DC is more effective at diversity preservation. This may slow its convergence at first but eventually leads to better exploration of the search space.

Figure 2.

The convergence curves of the compared algorithms.

In summary, the numerical comparison results above demonstrate the competitiveness of the proposed PSO-DC in solving LSOPs, indicating that PSO-DC enhances diversity preservation and thereby improves the balance between exploration and exploitation.

5.2. Parameter Sensitivity Analysis

In the parameter sensitivity analysis, experiments are conducted to investigate the influence of the swarm size $NP$ and the parameter $\varphi$ on the performance of PSO-DC.

5.2.1. Analysis of $NP$

For the analysis of $NP$, $\varphi$ is fixed to 0.5, and $NP$ is set to 400, 500, 600, 800, and 1000 to show its influence on the performance of PSO-DC. The results are shown in Table 2. Considering the computational resources required by these tests, the results are obtained from five independent runs. From Table 2, one can find that different swarm sizes lead to different performance, and PSO-DC performs relatively stably when . This indicates that PSO-DC maintains good diversity and exploration ability as long as the swarm size is not excessively small.

Table 2.

The performance of PSO-DC obtained with different settings of $NP$.

5.2.2. Analysis of $\varphi$

For the analysis of $\varphi$, $NP$ is fixed to 500, and $\varphi$ is varied from 0.1 to 0.6 with a step of 0.1. The results are shown in Table 3. One can find that evidently outperforms the other settings, while PSO-DC is not sensitive to $\varphi$ when . This indicates that can help PSO-DC balance exploration and exploitation.

Table 3.

The performance of PSO-DC obtained with different settings of $\varphi$.

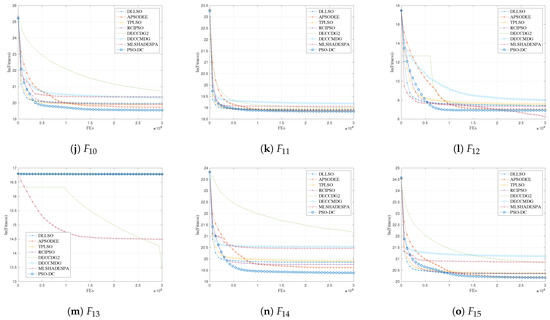

5.2.3. The Correlation between $NP$ and $\varphi$

To further investigate the characteristics of PSO-DC, experiments are conducted to test how different combinations of $NP$ and $\varphi$ influence its performance. The results are shown in Table 4 and Figure 3, which reconfirm the effectiveness of . Furthermore, combinations in which both $NP$ and $\varphi$ are excessively small, or both are excessively large, perform relatively worse. A reasonable explanation is that excessively small combinations of swarm size and $\varphi$ lead to poor swarm diversity and thus poor exploration ability. Conversely, excessively large combinations make PSO-DC overly focused on diversity preservation, which results in poor exploitation ability.
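The ranking reported by the Friedman test is the average rank of each configuration across problems. A minimal sketch follows. The function name is hypothetical, results are assumed to be errors to be minimized, and tied values share the average of their ranks.

```python
def friedman_average_ranks(results):
    """Average rank of each configuration across problems (lower is better).

    results[p][c] is the mean error of configuration c on problem p;
    tied values within a problem share the average of their ranks.
    """
    n_conf = len(results[0])
    totals = [0.0] * n_conf
    for row in results:
        order = sorted(range(n_conf), key=row.__getitem__)
        i = 0
        while i < n_conf:
            j = i
            while j + 1 < n_conf and row[order[j + 1]] == row[order[i]]:
                j += 1
            for t in range(i, j + 1):
                totals[order[t]] += (i + j) / 2.0 + 1.0  # average 1-based rank
            i = j + 1
    return [s / len(results) for s in totals]


print(friedman_average_ranks([[1.0, 2.0, 3.0], [2.0, 1.0, 3.0]]))
# [1.5, 1.5, 3.0]
```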

Table 4.

Friedman test ranking of the performance of PSO-DC with different combinations of $NP$ and $\varphi$.

Figure 3.

The heatmap of the results in Table 4.

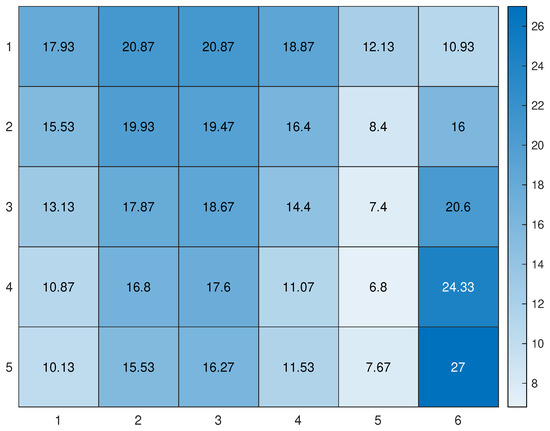

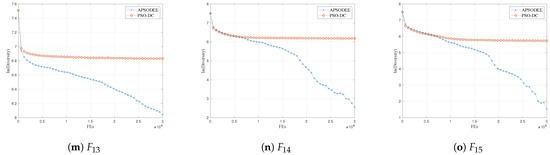

5.3. Diversity Comparison between PSO-DC and APSO-DEE

According to the discussion in Section 3 and Section 4.3, PSO-DC shows advantages in diversity preservation over APSO-DEE. To verify this analysis, this section compares the swarm diversity trajectories of PSO-DC and APSO-DEE over the whole optimization process. The swarm diversity is computed according to (18) and (19).
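As an illustration of how such a diversity curve can be computed, the following sketch uses one common measure: the mean Euclidean distance from each particle to the swarm centroid. This definition is an assumption chosen for illustration and is not necessarily identical to (18) and (19). The function name is hypothetical.

```python
import math


def swarm_diversity(swarm):
    """Mean Euclidean distance from each particle to the swarm centroid.

    One common diversity measure, assumed here for illustration; it may
    differ from the exact definition used in the experiments.
    """
    n, dim = len(swarm), len(swarm[0])
    centroid = [sum(p[d] for p in swarm) / n for d in range(dim)]
    return sum(math.dist(p, centroid) for p in swarm) / n


print(swarm_diversity([[0.0, 0.0], [2.0, 0.0]]))  # 1.0
```

Recording this value once per generation for each algorithm yields curves of the kind shown in Figure 4.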

The results in Figure 4 confirm experimentally that PSO-DC preserves diversity better than APSO-DEE. This can be attributed to the proposed dual-competition-based strategy. The results in Figure 2 also show that PSO-DC converges faster than APSO-DEE. Together, these findings indicate that the proposed strategy enhances PSO-DC's ability to balance exploration and exploitation.

Figure 4.

The swarm diversity curves of the compared algorithms.

5.4. Scalability Test

In the scalability test, the dimensionality of the benchmarks is set to 3000, and the parameter settings in Section 5.1.2 are adopted. To save time, each algorithm is run 10 times on each benchmark, and $MaxFEs$ is again set to $3 \times 10^6$. Note that and are not employed in this section, since their dimensionality cannot be changed according to the designs in [44]. DECC-DG2 is not included in the scalability test because variable grouping with DG2 is highly time-consuming when the dimensionality of the benchmarks is excessively large.

The results are shown in Table 5, where the average results of the compared algorithms are presented and the best average results are highlighted in bold. In general, PSO-DC outperforms all the peer algorithms on 12 benchmarks out of the 15 benchmarks with respect to the average results. The Friedman test results at the bottom of Table 5 also show the competitiveness of the proposed algorithm. It should be noted that PSO-DC shows promising results on the benchmarks with more complicated coupling characteristics, namely to and to (benchmarks with moderately, tightly, and fully coupled characteristics).

Table 5.

Scalability test of the compared algorithms with a dimensionality of 3000 and $MaxFEs = 3 \times 10^6$.

6. Conclusions and Future Work

Targeting the issue of diversity preservation in particle swarm optimization, this paper proposes a dual-competition-based strategy that improves the diversity preservation ability of particle swarm optimization when solving large-scale optimization problems. According to the theoretical and experimental results, two conclusions can be drawn. (i) The proposed algorithm is competitive when solving large-scale optimization problems; in particular, it shows remarkable advantages over the peer algorithms on the complicated benchmarks. (ii) The proposed algorithm shows improved convergence speed and diversity, especially in the latter optimization stage. This indicates that the proposed dual-competition-based strategy improves the diversity preservation of the proposed algorithm, thereby enhancing its balance between exploration and exploitation.

However, the results show that the proposed algorithm still cannot effectively solve the benchmarks. Possible explanations are that (i) the proposed algorithm cannot search the decision space sufficiently with the limited computational resources, or (ii) the solution update strategy of PSO inherently struggles to exploit efficiently in huge search spaces. Consequently, promising directions for future work include employing GPU devices and parallel computing methods to improve the computational efficiency of the proposed algorithm, and incorporating specific local search techniques into the proposed algorithm to enhance its exploitation ability.

Author Contributions

Conceptualization, W.G. (Weijun Gao) and W.G. (Weian Guo); methodology, W.G. (Weian Guo) and X.P.; software, D.L.; validation, X.P. and W.G. (Weian Guo); formal analysis, W.G. (Weian Guo); investigation, D.L.; resources, X.P.; data curation, W.G. (Weijun Gao); writing—original draft preparation, W.G. (Weijun Gao); writing—review and editing, X.P.; visualization, W.G. (Weijun Gao); supervision, D.L.; project administration, X.P.; funding acquisition, W.G. (Weian Guo). All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the National Natural Science Foundation of China under Grant Numbers 62273263, 72171172, and 71771176; the Shanghai Municipal Science and Technology Major Project (2022-5-YB-09); the Natural Science Foundation of Shanghai under Grant Number 23ZR1465400; and the project "Study on the mechanism of industrial-education co-cultivation for interdisciplinary technical and skilled personnel in the Chinese intelligent manufacturing industry" (Planning Project for the 14th Five-Year Plan of National Education Sciences, BJA210093).

Data Availability Statement

The data and code are available from the authors upon request.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. Explanation of the Benchmarks Employed in the Experiment

In this appendix, the formulations of the benchmarks employed in Section 5 are presented. The benchmarks are built on the following base functions.

Appendix A.1. The Base Functions

- Sphere Function

- Elliptic Function

- Rotated Elliptic Function

- Step Elliptic Function

- Rastrigin’s Function

- Büche–Rastrigin’s Function

- Griewank’s Function

- Rotated Rastrigin’s Function

- Rotated Weierstrass Function

- Rotated Ackley’s Function

- Lunacek Bi-Rastrigin’s Function

- Modified Schwefel’s Function

- Rosenbrock Function

- Expanded Rosenbrock’s plus Griewank’s Function

- Expanded Schaffer’s Function

- Composition Function
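For readers who want to experiment, the Python sketch below gives textbook forms of six of the base functions listed above. These are assumptions for illustration only: they omit any shifts, rotations, and nonlinear transformations that [44] may apply, so they should not be read as the exact definitions used in the experiments. Each function has its global minimum value 0 at the origin, except Rosenbrock, whose minimum is at the all-ones vector.

```python
import math
from typing import Sequence


def sphere(x: Sequence[float]) -> float:
    return sum(v * v for v in x)


def elliptic(x: Sequence[float]) -> float:
    # Coefficients grow geometrically from 1 to 1e6 across the dimensions (ill-conditioning).
    d = len(x)
    if d == 1:
        return x[0] * x[0]
    return sum(10.0 ** (6.0 * i / (d - 1)) * v * v for i, v in enumerate(x))


def rastrigin(x: Sequence[float]) -> float:
    return sum(v * v - 10.0 * math.cos(2.0 * math.pi * v) + 10.0 for v in x)


def ackley(x: Sequence[float]) -> float:
    d = len(x)
    mean_sq = sum(v * v for v in x) / d
    mean_cos = sum(math.cos(2.0 * math.pi * v) for v in x) / d
    return -20.0 * math.exp(-0.2 * math.sqrt(mean_sq)) - math.exp(mean_cos) + 20.0 + math.e


def griewank(x: Sequence[float]) -> float:
    prod = 1.0
    for i, v in enumerate(x, start=1):
        prod *= math.cos(v / math.sqrt(i))
    return sum(v * v for v in x) / 4000.0 - prod + 1.0


def rosenbrock(x: Sequence[float]) -> float:
    # Sum over consecutive variable pairs; the global minimum is at x = (1, ..., 1).
    return sum(
        100.0 * (x[i + 1] - x[i] ** 2) ** 2 + (x[i] - 1.0) ** 2 for i in range(len(x) - 1)
    )


if __name__ == "__main__":
    origin = [0.0] * 3000  # same dimensionality as the scalability test
    for f in (sphere, elliptic, rastrigin, ackley, griewank):
        print(f.__name__, f(origin))
    print("rosenbrock", rosenbrock([1.0] * 3000))
```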

Appendix A.2. The Benchmark Functions

Using the aforementioned base functions, ref. [44] defines the benchmark functions as follows, where r is the coupling degree. The meanings of r, S, , and in the following definitions are listed in Table A1, and more detailed designs of the benchmarks can be found in [44].

Table A1.

The description of , S, , and r.

| Symbols | Descriptions |
|---|---|
|  | The set of base functions used to form the benchmark function. |
| S | Each element in S denotes the size of the corresponding module in the benchmark function; the number of elements in S is the number of modules in the benchmark function. |
| r | The coupling degree of the benchmark function. |
|  | The weight for the modules of the benchmark function, which is adopted to generate imbalance among the modules. |
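Table A1 indicates that each benchmark is assembled from modules: S fixes the module sizes, each module is evaluated with a base function, and a per-module weight introduces imbalance. The sketch below is a hypothetical, simplified illustration of that idea for the uncoupled (partially separable) case only. Assumptions: modules occupy consecutive slices of the decision vector, the weighted module values are summed, and the coupling degree r is not modeled; the designs in [44] may permute variables and differ in other details. The names `partially_separable`, `sizes`, `base_fns`, and `weights` are hypothetical.

```python
from typing import Callable, List, Sequence

BaseFn = Callable[[Sequence[float]], float]


def sphere(x: Sequence[float]) -> float:
    return sum(v * v for v in x)


def partially_separable(x: Sequence[float], sizes: List[int],
                        base_fns: List[BaseFn], weights: List[float]) -> float:
    """Hypothetical module composition: sum_i weights[i] * base_fns[i](module_i(x))."""
    if sum(sizes) != len(x):
        raise ValueError("module sizes in S must cover every decision variable exactly once")
    if not (len(sizes) == len(base_fns) == len(weights)):
        raise ValueError("S, the base functions, and the weights must have the same length")
    total, start = 0.0, 0
    for size, fn, w in zip(sizes, base_fns, weights):
        # Module i is assumed to be the next `size` consecutive variables.
        total += w * fn(x[start:start + size])
        start += size
    return total


if __name__ == "__main__":
    # Two modules of sizes 2 and 3; the second is weighted 10 times more heavily.
    print(partially_separable([1.0, 2.0, 3.0, 4.0, 5.0], [2, 3], [sphere, sphere], [1.0, 10.0]))
```

In this toy call the second module dominates the total (500 of 505), which is the kind of imbalance the weights are described as generating.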

- Partially Separable Function

- Loosely Coupled Function 1 (Sequential Coupling Topology)

- Loosely Coupled Function 2 (Random Coupling Topology)

- Loosely Coupled Function 3 (Sequential Coupling Topology)

- Loosely Coupled Function 4 (Random Coupling Topology)

- Moderately Coupled Function 1 (Sequential Coupling Topology)

- Moderately Coupled Function 2 (Random Coupling Topology)

- Moderately Coupled Function 3 (Sequential Coupling Topology)

- Moderately Coupled Function 4 (Random Coupling Topology)

- Tightly Coupled Function 1 (Sequential Coupling Topology)

- Tightly Coupled Function 2 (Sequential Coupling Topology)

- Tightly Coupled Function 3 (Sequential Coupling Topology)

- Fully Coupled Function 1 (Ring Coupling Topology)

- Fully Coupled Function 2 (Ring Coupling Topology)

- Fully Coupled Function 3 (Random Coupling Topology)
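The topology names attached to the coupled benchmarks describe which modules interact. As a hypothetical reading of those names, not the construction in [44], the sketch below represents modules as nodes and couplings as undirected edges: a sequential topology links each module to the next, a ring topology additionally links the last module back to the first, and a random topology draws distinct module pairs at random (with an assumed edge count and a fixed seed for reproducibility). How many variables each coupled pair shares, which the coupling degree r governs, is not modeled here.

```python
import random
from typing import List, Tuple

Edge = Tuple[int, int]


def sequential_topology(m: int) -> List[Edge]:
    # Chain: module i interacts with module i + 1.
    return [(i, i + 1) for i in range(m - 1)]


def ring_topology(m: int) -> List[Edge]:
    # Chain closed into a cycle: the last module also interacts with the first.
    edges = sequential_topology(m)
    if m > 2:
        edges.append((m - 1, 0))
    return edges


def random_topology(m: int, n_edges: int, seed: int = 0) -> List[Edge]:
    # Hypothetical: draw n_edges distinct unordered module pairs uniformly at random.
    rng = random.Random(seed)
    all_pairs = [(i, j) for i in range(m) for j in range(i + 1, m)]
    return sorted(rng.sample(all_pairs, n_edges))


if __name__ == "__main__":
    m = 5  # number of modules, i.e., the number of elements in S
    print("sequential:", sequential_topology(m))
    print("ring:      ", ring_topology(m))
    print("random:    ", random_topology(m, n_edges=m - 1, seed=42))
```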

References

- Li, D.; Guo, W.; Lerch, A.; Li, Y.; Wang, L.; Wu, Q. An adaptive particle swarm optimizer with decoupled exploration and exploitation for large scale optimization. Swarm Evol. Comput. 2021, 10, 100789.

- Liu, Y.; Xing, T.; Zhou, Y.; Li, N.; Ma, L.; Wen, Y.; Liu, C.; Shi, H. A large-scale multi-objective brain storm optimization algorithm based on direction vectors and variance analysis. In Proceedings of the International Conference on Swarm Intelligence, Qingdao, China, 17–21 July 2023; Springer: Berlin/Heidelberg, Germany, 2023; pp. 413–424.

- Ma, L. Evolutionary computation-based machine learning and its applications for multi-robot systems. Front. Neuro-Robot. 2023, 17, 1177909.

- Li, J.; Cheng, S. Parameter settings in particle swarm optimization algorithms: A survey. Int. J. Autom. Control. 2022, 16, 164–182.

- Chaturvedi, S.; Kumar, N.; Kumar, R.A. PSO-optimized novel PID neural network model for temperature control of jacketed CSTR: Design, simulation, and a comparative study. Soft Comput. 2024, 28, 4759–4773.

- Karakuzu, C.; Karakaya, F.; Cavuslu, M.A. FPGA implementation of neuro-fuzzy system with improved PSO learning. Neural Netw. 2016, 79, 128–140.

- Sivanandam, S.N.; Deepa, S.N. Genetic Algorithms; Springer: Berlin/Heidelberg, Germany, 2008.

- Dorigo, M.; Birattari, M.; Stützle, T. Ant colony optimization. IEEE Comput. Intell. Mag. 2006, 1, 28–39.

- Chaturvedi, S.; Kumar, N.; Kumar, R. Two Feedback PID Controllers Tuned with Teaching-Learning-Based Optimization Algorithm for Ball and Beam System. IETE J. Res. 2023, 5, 1–10.

- Cheng, S.; Qin, Q.; Chen, J.; Shi, Y. Brain storm optimization algorithm: A review. Artif. Intell. Rev. 2016, 46, 445–458.

- Shami, T.M.; El-Saleh, A.A.; Alswaitti, M.; Al-Tashi, Q.; Summakieh, M.A.; Mirjalili, S. Particle swarm optimization: A comprehensive survey. IEEE Access 2022, 10, 10031–10061.

- Cheng, R.; Jin, Y. A competitive swarm optimizer for large scale optimization. IEEE Trans. Cybern. 2014, 45, 191–204.

- Yang, Q.; Chen, W.; Da, D.; Li, Y.; Gu, T.; Zhang, J. A level-based learning swarm optimizer for large-scale optimization. IEEE Trans. Evol. Comput. 2017, 22, 578–594.

- Cheng, R.; Jin, Y. A social learning particle swarm optimization algorithm for scalable optimization. Inf. Sci. 2015, 291, 43–60.

- Yang, Q.; Chen, W.; Gu, T.; Jin, H.; Mao, W.; Zhang, J. An adaptive stochastic dominant learning swarm optimizer for high-dimensional optimization. IEEE Trans. Cybern. 2020, 52, 1960–1976.

- Song, G.; Yang, Q.; Gao, X.; Ma, Y.; Lu, Z.; Zhang, J. An adaptive level-based learning swarm optimizer for large-scale optimization. In Proceedings of the 2021 IEEE International Conference on Systems, Man, and Cybernetics, Melbourne, Australia, 17 October 2021; pp. 152–159.

- Yang, Q.; Song, G.; Gao, X.; Lu, Z.; Jeon, S.; Zhang, J. A random elite ensemble learning swarm optimizer for high-dimensional optimization. Complex Intell. Syst. 2023, 9, 5467–5500.

- Yang, Q.; Song, G.; Chen, W.; Jia, Y.; Gao, X.; Lu, Z.; Jeon, S.W.; Zhang, J. Random contrastive interaction for particle swarm optimization in high-dimensional environment. IEEE Trans. Evol. Comput. 2023.

- Wang, Z.; Yang, Q.; Zhang, Y.; Chen, S.; Wang, Y. Superiority combination learning distributed particle swarm optimization for large-scale optimization. Appl. Soft Comput. 2023, 136, 110101.

- Li, D.; Guo, W.; Wang, L.; Wu, Q. A modified apsodee for large scale optimization. In Proceedings of the 2021 IEEE Congress on Evolutionary Computation (CEC), Krakow, Poland, 28 June–1 July 2021; pp. 1976–1982.

- Li, D.; Wang, L.; Guo, W.; Zhang, M.; Hu, B.; Wu, Q. A particle swarm optimizer with dynamic balance of convergence and diversity for large-scale optimization. Appl. Soft Comput. 2023, 132, 109852.

- Jia, D.; Zheng, G.; Qu, B.; Khan, M.K. A hybrid particle swarm optimization algorithm for high-dimensional problems. Comput. Ind. Eng. 2011, 61, 1117–1122.

- Tang, D.; Cai, Y.; Zhao, J.; Xue, Y. A quantum-behaved particle swarm optimization with memetic algorithm and memory for continuous non-linear large scale problems. Inf. Sci. 2014, 289, 162–189.

- Tao, M.; Huang, S.; Li, Y.; Yan, M.; Zhou, Y. SA-PSO based optimizing reader deployment in large-scale RFID Systems. J. Netw. Comput. Appl. 2015, 52, 90–100.

- Ali, A.F.; Tawhid, M.A. A hybrid particle swarm optimization and genetic algorithm with population partitioning for large scale optimization problems. Ain Shams Eng. J. 2017, 8, 191–206.

- Van den Bergh, F.; Engelbrecht, A.P. A cooperative approach to particle swarm optimization. IEEE Trans. Evol. Comput. 2004, 8, 225–239.

- Li, X.; Yao, X. Tackling high dimensional nonseparable optimization problems by cooperatively coevolving particle swarms. In Proceedings of the 2009 IEEE Congress on Evolutionary Computation, Trondheim, Norway, 18–21 May 2009; pp. 1546–1553.

- Li, X.; Yao, X. Cooperatively coevolving particle swarms for large scale optimization. IEEE Trans. Evol. Comput. 2011, 16, 210–224.

- Chen, K.; Xue, B.; Zhang, M.; Zhou, F. Novel chaotic grouping particle swarm optimization with a dynamic regrouping strategy for solving numerical optimization tasks. Knowl. Based Syst. 2020, 194, 105568.

- Omidvar, M.N.; Li, X.; Mei, Y.; Yao, X. Cooperative co-evolution with differential grouping for large scale optimization. IEEE Trans. Evol. Comput. 2013, 18, 378–393.

- Omidvar, M.N.; Yang, M.; Mei, Y.; Li, X.; Yao, X. DG2: A faster and more accurate differential grouping for large-scale black-box optimization. IEEE Trans. Evol. Comput. 2017, 21, 929–942.

- Sun, Y.; Kirley, M.; Halgamuge, S.K. A recursive decomposition method for large scale continuous optimization. IEEE Trans. Evol. Comput. 2017, 22, 647–661.

- Sun, Y.; Omidvar, M.N.; Kirley, M.; Li, X. Adaptive threshold parameter estimation with recursive differential grouping for problem decomposition. In Proceedings of the Genetic and Evolutionary Computation Conference, Kyoto, Japan, 15–19 July 2018; pp. 889–896.

- Yang, M.; Zhou, A.; Li, C.; Yao, X. An efficient recursive differential grouping for large-scale continuous problems. IEEE Trans. Evol. Comput. 2020, 25, 159–171.

- Ma, X.; Huang, Z.; Li, X.; Wang, L.; Qi, Y.; Zhu, Z. Merged differential grouping for large-scale global optimization. IEEE Trans. Evol. Comput. 2022, 26, 1439–1451.

- Cao, B.; Gu, Y.; Lv, Z.; Yang, S.; Zhao, J.; Li, Y. RFID reader anticollision based on distributed parallel particle swarm optimization. IEEE Internet Things J. 2020, 8, 3099–3107.

- Liu, X.; Zhan, Z.; Zhang, J. Incremental particle swarm optimization for large-scale dynamic optimization with changing variable interactions. Appl. Soft Comput. 2023, 141, 110320.

- Wang, F.; Wang, X.; Sun, S. A reinforcement learning level-based particle swarm optimization algorithm for large-scale optimization. Inf. Sci. 2022, 602, 298–312.

- Lan, R.; Zhu, Y.; Lu, H.; Liu, Z.; Luo, X. A two-phase learning-based swarm optimizer for large-scale optimization. IEEE Trans. Cybern. 2020, 51, 6284–6293.

- Zhang, E.; Nie, Z.; Yang, Q.; Wang, Y.; Liu, D.; Jeon, S.W.; Zhang, J. Heterogeneous cognitive learning particle swarm optimization for large-scale optimization problems. Inf. Sci. 2023, 633, 321–342.

- Bonyadi, M.R.; Michalewicz, Z. Stability analysis of the particle swarm optimization without stagnation assumption. IEEE Trans. Evol. Comput. 2015, 20, 814–819.

- Zhang, H. A discrete-time switched linear model of the particle swarm optimization algorithm. Swarm Evol. Comput. 2020, 52, 100606.

- Hadi, A.A.; Mohamed, A.W.; Jambi, K.M. LSHADE-SPA memetic framework for solving large-scale optimization problems. Complex Intell. Syst. 2019, 5, 25–40.

- Xu, P.; Luo, W.; Lin, X.; Zhang, J.; Wang, X. A large-scale continuous optimization benchmark suite with versatile coupled heterogeneous modules. Swarm Evol. Comput. 2023, 78, 101280.

- Tang, K.; Li, X.; Suganthan, P.N.; Yang, Z.; Weise, T. Benchmark Functions for the CEC 2010 Special Session and Competition on Large-Scale Global Optimization; Nature Inspired Computation and Applications Laboratory, USTC: Hefei, China, 2007; Volume 24, pp. 1–18.

- Li, X.; Tang, K.; Omidvar, M.N.; Yang, Z.; Qin, K. Benchmark functions for the CEC 2013 special session and competition on large-scale global optimization. Gene 2013, 7, 8.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).