Abstract

Recently, the analysis of emotions in social media has come to be regarded as a significant NLP task, given the pervasive influence of emotions on communication, culture, and consumer behavior in digital, social-media-driven environments. In particular, Aspect-Based Emotion Analysis (ABEA), which involves analyzing the emotions associated with various targets within a single sentence, has drawn attention as a way to understand complex and sophisticated human language. However, ABEA is challenging in languages with limited data and complex linguistic properties, such as Korean, which follows spiral thought patterns and has agglutinative characteristics. Therefore, we propose a Korean Target-Attention-Based Emotion Classifier (KOTAC) designed to utilize target information to unveil emotions buried within intricate Korean language patterns. In the experiments, we compare various methods of representing target information as vectors and utilizing them in the attention mechanism. Our final model, KOTAC, shows improved performance on the MTME (Multi-Target Multi-Emotion) samples, which include multiple targets with distinct emotions within a single sentence, achieving a 0.72% increase in F1 score over a baseline model without effective target utilization. This research contributes to the development of Korean language models that better reflect syntactic features by introducing methods not only to obtain but also to utilize target-focused representations.

Keywords:

emotion classification; ABSA; ABEA; attention mechanism; Korean language characteristics; social media

MSC:

68T50

1. Introduction

The rapid expansion of the internet has made vast amounts of user-generated text accessible, thereby propelling the field of Natural Language Processing (NLP). In particular, opinion mining and sentiment analysis have garnered significant attention due to their applicability in diverse fields such as social network analysis and customer service [1]. Sentiment analysis classifies the sentiment expressed in sentences or documents as positive, negative, or neutral [2,3,4]. Emotion analysis classifies emotions into predefined categories such as joy, anger, and sadness, providing a more detailed spectrum [5]. Sentiment analysis and emotion analysis have evolved into Aspect-Based Sentiment Analysis (ABSA) [6,7,8] and Aspect-Based Emotion Analysis (ABEA) [9,10], respectively. ABSA tasks primarily address review data, aiming to identify the sentiment polarity associated with each specific aspect (target). For instance, in the review “The cafe’s atmosphere is good, but the coffee is terrible”, the aspects are “atmosphere” and “coffee”, with positive polarity for “atmosphere” and negative polarity for “coffee”.

In the ABSA task, how targets are handled is an important factor. Since the introduction of Bidirectional Encoder Representations from Transformers (BERT), research on Targeted ABSA (TABSA) and Aspect Sentiment Classification (ASC) has made significant progress. Much of this work focuses on how to construct the input when the task is framed as sentence pair classification [11,12]: the sentence provides the context, and the target functions as a question whose answer is the sentiment. This formulation has spurred research that emphasizes the target using BERT’s context-aware representations. The study in [13] applied max pooling to the target vectors to extract the most significant information, and another study [14] introduced multi-head attention between the sentence and the target to obtain a target-specific context representation.

Compared with ABSA, ABEA requires a more sophisticated model that focuses on targets in order to distinguish the finer emotional spectrum. Research on emotion analysis and ABEA has lagged behind, partly because of the inherent difficulty of categorizing human emotions, which has led to considerable debate within the academic community [15,16]. Unlike sentiment analysis, which employs mutually exclusive binary or ternary classification schemes, emotion analysis is addressed as multi-label classification. This task must contend with label imbalance and requires a more elaborate model.

The lack of research on emotion analysis and ABEA is even more pronounced for Korean. While Korean sentiment analysis and ABSA have been extensively explored [17,18,19], progress in emotion analysis and ABEA has been slower. The recent proliferation of social media has significantly accelerated the creation of Korean-specific datasets [20]. However, research using these datasets to develop emotion analysis models for Korean texts is still emerging.

The features unique to the Korean language are shown in Table 1. These linguistic characteristics have made research on Korean models challenging, and employing an attention mechanism to concentrate on the targets within sentences might help identify emotions embedded in complex linguistic structures. First, Korean tends to follow a spiral thought pattern, preferring to deliver information comprehensively across sentences or paragraphs [21]. This structural preference makes clear target information necessary for understanding the accurate meaning of sentences. Second, Korean is an agglutinative language. Its morphological structure often blurs the boundaries around targets, because various morphemes, such as Josa (postpositions) and Eomi (verb endings), attach before and after a target [22]. By focusing on specific targets, models can separate and understand the target more clearly. Third, Korean frequently employs ellipsis: subjects or objects are often omitted, so sentences rely on context for their meaning [23]. Attention mechanisms can enhance the model’s capability to retain information relevant to the targets.

Table 1.

A text sample showing unique characteristics of the Korean language and social media.

Target-based analysis might also address challenges arising from the unrefined nature of social media data and the complexity caused by its broad range of content areas. Social media encompass a variety of sources, such as entertainment, sports, and literature, and are characterized by the frequent emergence of previously unseen words. To cope with the rapid growth and diversity of such data, domain-specific Pre-Trained Language Models (PLMs) have emerged [24,25]. Combined with these domain-sensitive embeddings, focusing on the targets within diverse and evolving data may enable models to adapt quickly to new terms and expressions. This approach helps models capture relevant information and filter out irrelevant noise despite informal and varied language use.

The objective of this paper is to propose a target-attention-based emotion classifier. The model applies an attention mechanism to vectors encoded by a PLM, focusing on the target vector, for Targeted ABEA (TABEA) in Korean. We explore various methods for formulating the target vector and implementing attention. To mitigate the risk of overlooking the broader sentence context through overconcentration on the target vector, we consider strategies for regulating the attention output. In addition to the conventional sentence pair classification method, we also test discerning targets solely by applying target attention. In the experiments, we design an evaluation method that measures the model’s ability to identify contrasting emotional states within a single text, and we collect evaluation samples for this purpose, which we call Multi-Target Multi-Emotion (MTME). Through these approaches, we aim to implement a target-attention-based model that addresses the TABEA task for data characterized by the linguistic features of the Korean language and the unique properties of social media.

The contributions of this paper can be summarized as follows:

- We propose the Korean Target-Attention-Based Emotion Classifier (KOTAC), designed to address a TABEA task. This model is tailored to data shaped by the linguistic features of the Korean language and the dynamics of social media. By applying an attention mechanism to targets within sentences, KOTAC helps unveil emotions buried within intricate language patterns. The proposed KOTAC outperforms a model that relies solely on a sentence pair approach;

- To the best of our knowledge, the present study is the first attempt to systematically deal with targets of sentences and explore various ways of formulating target vectors. This effort contributes to developing a Korean language model that reflects syntactic features;

- This study not only investigates methods to obtain representations focused on the target but also explores how to utilize these. Based on experimental results, this study further analyzes the relationships between methods, demonstrating how our findings can be interconnected and applied;

- To evaluate performance in distinguishing the contrasting affective states connected to separate targets in one text, which is key to ABSA and ABEA tasks, this study proposes a new evaluation set named MTME, on which the proposed KOTAC model achieves particularly strong results.

2. Related Work

2.1. Long Short-Term Memory

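Long Short-Term Memory (LSTM) networks [26] are used in NLP to handle sequences with long-range dependencies and to capture sequential characteristics effectively. An LSTM unit computes its state through a series of gates: an input gate $i_t$, a forget gate $f_t$, an output gate $o_t$, and a cell input modulation gate $g_t$. These gates collectively determine the flow of information and allow the network to retain or discard information across time steps, which is essential for handling long sequences. The core LSTM equations are as follows:

$$
\begin{aligned}
i_t &= \sigma(W_i x_t + U_i h_{t-1} + b_i)\\
f_t &= \sigma(W_f x_t + U_f h_{t-1} + b_f)\\
o_t &= \sigma(W_o x_t + U_o h_{t-1} + b_o)\\
g_t &= \tanh(W_g x_t + U_g h_{t-1} + b_g)
\end{aligned}
$$

where $x_t$ is the input at time step $t$, $h_{t-1}$ is the previous hidden state, $\sigma$ is the sigmoid function, and $W$, $U$, and $b$ are learnable weights and biases.

At each time step, the memory cell $c_t$ is updated by combining the previous cell state $c_{t-1}$, modulated by the forget gate, with the current candidate values produced by the input gate and the cell input modulation gate:

$$c_t = f_t \odot c_{t-1} + i_t \odot g_t$$

where $\odot$ denotes element-wise multiplication. The hidden state $h_t$, which serves as the output at each step, is then computed by filtering the updated cell state through the output gate $o_t$ after applying the hyperbolic tangent non-linearity:

$$h_t = o_t \odot \tanh(c_t)$$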
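The gate, cell-update, and hidden-state computations described above can be traced in a minimal NumPy sketch of a single LSTM time step. Stacking all four gates in one weight matrix (in the order i, f, o, g) and the toy dimensions are illustrative assumptions, not details taken from this paper.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One LSTM time step.

    W: (4*d_h, d_in) input weights, U: (4*d_h, d_h) recurrent weights,
    b: (4*d_h,) biases; gate rows are stacked in the assumed order i, f, o, g.
    """
    d_h = h_prev.shape[0]
    z = W @ x_t + U @ h_prev + b          # pre-activations of all four gates
    i_t = sigmoid(z[0 * d_h:1 * d_h])     # input gate
    f_t = sigmoid(z[1 * d_h:2 * d_h])     # forget gate
    o_t = sigmoid(z[2 * d_h:3 * d_h])     # output gate
    g_t = np.tanh(z[3 * d_h:4 * d_h])     # cell input modulation gate
    c_t = f_t * c_prev + i_t * g_t        # memory cell update
    h_t = o_t * np.tanh(c_t)              # hidden state (output of this step)
    return h_t, c_t

rng = np.random.default_rng(0)
d_in, d_h, T = 3, 4, 5
W = rng.normal(scale=0.1, size=(4 * d_h, d_in))
U = rng.normal(scale=0.1, size=(4 * d_h, d_h))
b = np.zeros(4 * d_h)
h, c = np.zeros(d_h), np.zeros(d_h)
for x_t in rng.normal(size=(T, d_in)):   # run the cell over a toy sequence of length T
    h, c = lstm_step(x_t, h, c, W, U, b)
print(h.shape)  # (4,)
```

Because $h_t$ is an output gate value in (0, 1) times a tanh, every entry of the hidden state stays strictly between -1 and 1.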

2.2. Attention

The advent of attention mechanisms [27,28] has led to significant performance improvements across various tasks in NLP. Among these, scaled dot-product attention has become a pivotal component, enhancing models’ ability to focus on the relevant parts of the input data [29]. The mechanism computes the attention scores by taking the dot product of the query $Q$ with all keys $K$, scales these scores by the square root of the key dimension $d_k$ to prevent extremely large values, and applies a softmax function to obtain the final weights on the values $V$. Mathematically, it is represented as follows:

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^{\top}}{\sqrt{d_k}}\right)V$$

A special case of this mechanism is self-attention, in which Q, K, and V are all derived from the same input sequence (typically through separate learned projections). This self-referential approach enables models to assess and assign importance to each part of the input relative to the rest, facilitating the extraction of more nuanced and context-rich information.
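A minimal NumPy sketch of scaled dot-product attention and its self-attention special case follows. The random matrices `W_q`, `W_k`, and `W_v` are hypothetical stand-ins for learned projection weights.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)   # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(Q, K, V):
    """Q: (n_q, d_k), K: (n_k, d_k), V: (n_k, d_v) -> (output (n_q, d_v), weights (n_q, n_k))."""
    d_k = K.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)            # scaled similarity of each query to each key
    weights = softmax(scores, axis=-1)         # each row is a distribution over keys
    return weights @ V, weights

rng = np.random.default_rng(0)
X = rng.normal(size=(6, 8))                    # 6 tokens, model dimension 8
# Self-attention: Q, K, and V are all projections of the same input X.
W_q, W_k, W_v = (rng.normal(scale=0.3, size=(8, 8)) for _ in range(3))
out, attn = scaled_dot_product_attention(X @ W_q, X @ W_k, X @ W_v)
print(out.shape, attn.shape)  # (6, 8) (6, 6)
```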

2.3. Transformer and Pre-Trained Language Model

The self-attention mechanism is a foundational component of Transformer models, which power many current state-of-the-art PLMs and enable them to understand and generate human language with remarkable accuracy. In a standard Transformer, the initial embedding of the $i$-th token $x_i$ in the input sequence is computed by combining token and positional embeddings as follows:

$$h_i^{(0)} = W_e[x_i] + W_p[i]$$

Here, $W_e$ represents the embedding matrix that maps token indices to embeddings, and $W_p$ represents the positional embeddings that provide information about the position of each token in the sentence. Together, they give each token a representation that reflects both its identity and its position within the sequence.

Each head $j$ in a multi-head self-attention layer independently computes a distinct attention output from the previous layer’s hidden states $H^{(l-1)}$, and the $m$ head outputs are concatenated and projected:

$$\mathrm{head}_j = \mathrm{Attention}\left(H^{(l-1)} W_j^{Q},\, H^{(l-1)} W_j^{K},\, H^{(l-1)} W_j^{V}\right)$$

$$\mathrm{MultiHead}\left(H^{(l-1)}\right) = \left[\mathrm{head}_1; \dots; \mathrm{head}_m\right] W^{O}$$

Using multiple heads allows the model to attend jointly to information from different representation subspaces at different positions.

Following the attention sublayer, each Transformer layer includes a position-wise Feed-Forward Network (FFN), which applies further transformations to the attended representation $a_i$ of each token:

$$\mathrm{FFN}(a_i) = \max(0,\, a_i W_1 + b_1)\, W_2 + b_2$$

To stabilize the learning process and enhance model training, each sublayer is wrapped in a residual connection followed by layer normalization:

$$a_i = \mathrm{LayerNorm}\left(h_i^{(l-1)} + \mathrm{MultiHead}\left(H^{(l-1)}\right)_i\right), \qquad h_i^{(l)} = \mathrm{LayerNorm}\left(a_i + \mathrm{FFN}(a_i)\right)$$

$h_i^{(l)}$ represents the hidden state of each input token at layer $l$, encapsulating the information the model has learned about that token through the processing layers.
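To make the layer structure described above concrete, here is a minimal NumPy sketch of one post-LN Transformer encoder layer: embedding lookup, multi-head self-attention, a position-wise FFN, residual connections, and layer normalization. It is a simplification under stated assumptions: ReLU as in the original Transformer (BERT-style models use GELU), no dropout, no learned LayerNorm gain and bias, and random weights; all names and dimensions are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def layer_norm(x, eps=1e-12):
    # Normalize each token vector to zero mean and unit variance
    # (the learned gain and bias of LayerNorm are omitted for brevity).
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)

def encoder_layer(H, p, n_heads):
    """One post-LN Transformer encoder layer: H^(l-1) (n, d) -> H^(l) (n, d)."""
    _, d = H.shape
    d_head = d // n_heads
    heads = []
    for j in range(n_heads):
        cols = slice(j * d_head, (j + 1) * d_head)   # head j's slice of the projections
        Q = H @ p["W_q"][:, cols]
        K = H @ p["W_k"][:, cols]
        V = H @ p["W_v"][:, cols]
        heads.append(softmax(Q @ K.T / np.sqrt(d_head)) @ V)   # head_j: (n, d_head)
    multi = np.concatenate(heads, axis=-1) @ p["W_o"]          # MultiHead(H): (n, d)
    A = layer_norm(H + multi)                                  # residual + LayerNorm -> a_i
    ffn = np.maximum(0.0, A @ p["W_1"] + p["b_1"]) @ p["W_2"] + p["b_2"]   # ReLU FFN
    return layer_norm(A + ffn)                                 # residual + LayerNorm -> h_i^(l)

rng = np.random.default_rng(0)
vocab, max_len, d, d_ff, n_heads = 50, 16, 8, 32, 2
W_e = rng.normal(scale=0.1, size=(vocab, d))      # token embedding matrix
W_p = rng.normal(scale=0.1, size=(max_len, d))    # positional embeddings
tokens = np.array([3, 17, 42, 5])                 # hypothetical token indices
H0 = W_e[tokens] + W_p[np.arange(len(tokens))]    # h_i^(0) = W_e[x_i] + W_p[i]
shapes = {"W_q": (d, d), "W_k": (d, d), "W_v": (d, d), "W_o": (d, d),
          "W_1": (d, d_ff), "b_1": (d_ff,), "W_2": (d_ff, d), "b_2": (d,)}
params = {name: rng.normal(scale=0.1, size=shape) for name, shape in shapes.items()}
H1 = encoder_layer(H0, params, n_heads)
print(H1.shape)  # (4, 8)
```

Stacking several such layers, each feeding its output to the next, yields the final hidden states of an encoder-only PLM.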

BERT revolutionized NLP by pre-training bidirectionally on extensive datasets, capturing nuanced contexts [30]. It introduces special tokens. The classification token ([CLS]) is placed at the start of every input, and its final hidden state serves as a representation of the entire sequence. The [SEP] token separates the two sentences of a pair, facilitating tasks such as question answering and natural language inference. This structure has enabled BERT to achieve remarkable performance across a variety of sentence pair classification tasks.

BERT laid the foundation for advanced language models, yet it requires substantial computational resources for pre-training. Subsequently, ELECTRA [31] introduced a more sample-efficient pre-training task known as replaced token detection. Unlike BERT’s Masked Language Model (MLM) objective, which masks words and predicts their original values, ELECTRA uses a small generator to replace some input tokens and trains the main model to distinguish “real” tokens from “replaced” ones. This pre-training method is more efficient than MLM because the model learns from all input tokens rather than only the masked subset.
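The difference between the two objectives can be illustrated with a small NumPy sketch of how replaced-token-detection targets and the per-token loss are formed. The token ids and the corrupted sequence are hypothetical, and the generator itself is not modeled here.

```python
import numpy as np

def rtd_labels(original, corrupted):
    # 1 where the generator's sample differs from the original token, else 0.
    # (If the generator happens to sample the original token, it counts as "real".)
    return (original != corrupted).astype(np.float64)

def rtd_loss(logits, labels):
    # Binary cross-entropy with logits averaged over ALL positions; an MLM loss,
    # by contrast, is computed only at the masked positions.
    return np.mean(np.maximum(logits, 0.0) - logits * labels
                   + np.log1p(np.exp(-np.abs(logits))))

original = np.array([12, 7, 99, 3, 41])     # hypothetical token ids
corrupted = np.array([12, 8, 99, 3, 40])    # generator replaced positions 1 and 4
labels = rtd_labels(original, corrupted)
print(labels)  # [0. 1. 0. 0. 1.]
```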

Fine-tuning PLMs on task-specific datasets allows them to adapt their general pre-training to specialized applications, significantly improving their performance on downstream tasks. Starting from the pre-trained values, the parameters are refined through a series of updates, each of which takes the following form:

$$\theta_{t+1} = \theta_t - \eta\, \nabla_{\theta} \mathcal{L}(\theta_t; D), \qquad \theta_0 = \theta_{\text{pre}}$$

Here, $\theta_{\text{pre}}$ represents the parameters pre-trained on a general dataset, and the parameters obtained after the final update, $\theta_{\text{ft}}$, are the fine-tuned parameters. The term $\eta$ is the learning rate, which controls the step size of each update. The function $\mathcal{L}$ is the loss function, computed on the task-specific dataset $D$. The gradient $\nabla_{\theta}\mathcal{L}$ guides the parameter updates to minimize the loss, thus refining the model’s ability to perform the specific task more effectively.
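The update rule can be illustrated with plain gradient descent on a toy logistic-regression loss that stands in for the task loss. The data, learning rate, and number of steps are arbitrary assumptions; in practice, PLM fine-tuning usually relies on mini-batches and adaptive optimizers over far more parameters.

```python
import numpy as np

def loss_and_grad(theta, X, y):
    # Mean binary cross-entropy of a logistic model: a stand-in for the task loss on D.
    p = 1.0 / (1.0 + np.exp(-(X @ theta)))
    loss = -np.mean(y * np.log(p + 1e-12) + (1 - y) * np.log(1 - p + 1e-12))
    grad = X.T @ (p - y) / len(y)
    return loss, grad

rng = np.random.default_rng(0)
X = rng.normal(size=(100, 3))
y = (X @ np.array([1.5, -2.0, 0.5]) > 0).astype(float)   # toy task-specific dataset D
theta = rng.normal(scale=0.1, size=3)                      # plays the role of theta_pre
eta = 0.5                                                  # learning rate
initial_loss, _ = loss_and_grad(theta, X, y)
for _ in range(200):
    _, grad = loss_and_grad(theta, X, y)
    theta = theta - eta * grad                             # theta <- theta - eta * grad of loss
final_loss, _ = loss_and_grad(theta, X, y)                 # theta now plays the role of theta_ft
```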

2.4. Aspect-Based Sentiment Analysis and Aspect-Based Emotion Analysis

ABSA is a sub-field of sentiment analysis that identifies the sentiment expressed toward specific aspects of a subject of concern [6,32]. The task involves four elements: aspect term, aspect category, opinion term, and sentiment polarity [33]. For example, in the sentence “The coffee is terrible”, these elements are coffee, food, terrible, and negative, respectively. Targeted ABSA (TABSA) focuses on determining the sentiment polarity for a given aspect term. Since the advent of BERT, ABSA research has predominantly relied on sentence pair classification [11,12,34], and notable studies have used BERT’s context-aware representations to emphasize targets. Ref. [14] composed input sequences as “[CLS] + context + [SEP] + target + [SEP]” for vanilla BERT, and ref. [35] tested reversing the order of context and target. These input forms are regarded as the most basic structure for ABSA.

Ref. [14] also employed multi-head attention, feeding the context and the target as separate inputs, formatted as “[CLS] + context + [SEP]” and “[CLS] + target + [SEP]”, respectively, and using padding to handle their varying lengths. This interactive approach can capture a target-specific context representation; however, the authors did not explore combining the sentence pair and attention approaches. Ref. [13] applied max pooling to the vectors corresponding to the target words, taking the maximum value in each dimension, concatenated the result with the [CLS] vector, and passed it to a fully connected layer for classification. They compared this method with a model in which the [CLS] output is fed directly to a fully connected layer, without any target information in the input; this comparison underscores the importance of making the model aware of the target. Ref. [36] argued that relying solely on the final-layer [CLS] token for classification can ignore the rich semantic knowledge contained in the intermediate layers, and therefore fed the [CLS] tokens from all intermediate layers into an LSTM or attention pooling.

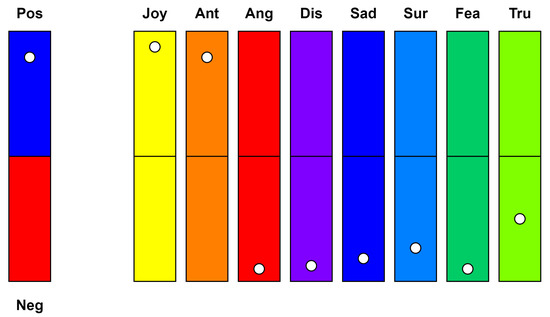

Emotion analysis explores deeper affective states beyond merely classifying sentiments as positive or negative. Figure 1 shows differences between sentiment analysis and emotion analysis. One key distinction is that not all positive or negative sentiments are equal [5]. This task involves categorizing emotions into predefined categories such as joy, anger, fear, and sadness using multi-label classification. This approach enables the identification of multiple emotions that may coexist within a single text. Because emotion has a complex spectrum, emotion analysis has also been studied in a way that uses the correlation between emotions [37,38]. Transitioning from sentiment to emotion in the context of ABSA leads to ABEA. ABEA shifts the focus from determining sentiment polarity to classifying the emotions related to aspects [9,10]. Table 2 shows the primary datasets of the sentiment analysis and emotion analysis tasks.

Figure 1.

Comparative visualization of sentiment analysis and emotion analysis. The bars represent positive (Pos) and negative (Neg) sentiments alongside the distribution of various emotions: joy (Joy), anticipation (Ant), anger (Ang), disgust (Dis), sadness (Sad), surprise (Sur), fear (Fea), and trust (Tru). White dots indicate the intensity of the affective state, serving as analogs to activation outputs from a model.

Table 2.

Primary datasets for the tasks of sentiment analysis and emotion analysis.

3. Methods

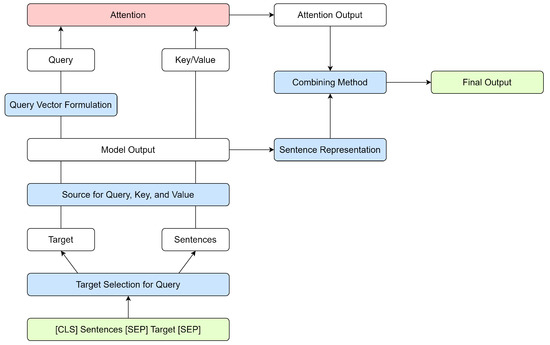

In this section, we explain our KOTAC model, which uses scaled dot-product attention [29] to attend to the target: the query is the target, and the key and value are the sentence. Unlike multi-head attention, which models relationships across the entire context through several parallel heads, scaled dot-product attention with the target as the query emphasizes a specific segment, namely the target. We made this choice to improve computational efficiency and reduce the risk of overfitting. With the target as the query, the model weights the input tokens by their relevance to the target. This approach is expected to capture emotional states more effectively on the basis of the relationship between the sentence and the target. Figure 2 shows the entire process of our method, and Table 3 lists all the options considered for our model. Section 3.1 and Section 3.3 describe the construction of target vectors, Section 3.2 describes the source of the query, key, and value, and Section 3.4 and Section 3.5 explore how the attention output is used.

Figure 2.

The architecture of the proposed Korean Target-Attention-Based Emotion Classifier for Targeted Aspect-Based Emotion Analysis.

Table 3.

All considerations for our model.

3.1. Target Selection for Query

The designation of the target that becomes the query is a pivotal factor in our attention mechanism. To make the target in the sentence explicit, we construct the input as a sentence pair. For example, the input for the sentence “The cafe’s atmosphere is good, but the coffee is terrible” with the target “coffee” is formed as “[CLS] The cafe’s atmosphere is good, but the coffee is terrible. [SEP] coffee [SEP]”. The target (coffee) thus appears twice: before the first [SEP] token as part of the sentence, denoted as the Internal Target (InT), and after the first [SEP] token, standing alone, denoted as the External Target (ExT). This distinction affects how the attention mechanism processes the target and its relationship with the context. The InT representation is shaped by the adjacent words because it is embedded in its context, whereas the ExT is isolated between two [SEP] tokens, without surrounding context words.
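The sentence pair construction and the two target positions can be sketched as follows. Whitespace tokenization and exact-match search for the InT are simplifying assumptions: a real PLM uses a subword tokenizer, and in Korean the target is often fused with Josa, so locating the InT may instead require span offsets from the dataset. The function name `build_pair_input` is hypothetical.

```python
def build_pair_input(sentence_tokens, target_tokens):
    """Return the sentence-pair token list and half-open (start, end) spans of InT and ExT."""
    tokens = ["[CLS]"] + sentence_tokens + ["[SEP]"] + target_tokens + ["[SEP]"]
    n = len(target_tokens)
    # InT: first occurrence of the target inside the sentence segment (+1 skips [CLS]).
    int_span = None
    for i in range(len(sentence_tokens) - n + 1):
        if sentence_tokens[i:i + n] == target_tokens:
            int_span = (1 + i, 1 + i + n)
            break
    # ExT: the target standing alone between the two [SEP] tokens.
    ext_start = len(sentence_tokens) + 2
    ext_span = (ext_start, ext_start + n)
    return tokens, int_span, ext_span

sentence = "The cafe's atmosphere is good , but the coffee is terrible .".split()
tokens, int_span, ext_span = build_pair_input(sentence, ["coffee"])
print(tokens[slice(*int_span)], tokens[slice(*ext_span)])  # ['coffee'] ['coffee']
```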

3.2. Source for Query, Key, and Value

Before applying attention, the input, which combines the sentence and the target, is processed through the model to obtain the query, key, and value vectors. We tried two methods to derive the vectors: directly using the contextualized embeddings of an encoder-based PLM, and enhancing these embeddings with an LSTM layer [26]. The PLM efficiently captures relationships among all words in a sentence. For the embeddings generated by the PLM, we consider the input sequence $X = (x_1, x_2, \dots, x_n)$, where each input token is transformed across multiple layers of the Transformer architecture. The representation of these tokens at any layer $l$ is updated based on the representations of all other tokens as follows:

$$H^{(0)} = \mathrm{Embed}(X), \qquad H^{(l)} = \mathrm{TransformerLayer}^{(l)}\left(H^{(l-1)}\right),\ l = 1, \dots, L, \qquad E = H^{(L)}$$

$H^{(0)}$ is the initial embedding matrix, $H^{(l)}$ are the hidden states at layer $l$, and $E$ is the final embedding matrix output by the PLM.

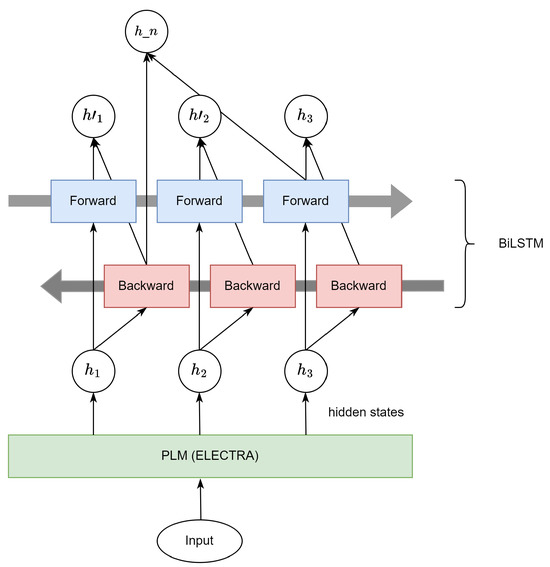

Bidirectional LSTM (BiLSTM) excels at capturing the sequential characteristics by processing information in both forward and backward directions [40,41]. Figure 3 shows the processing flow within the BiLSTM layer, illustrating how it enhances the PLM-derived embeddings. For the BiLSTM-enhanced output, the encoded vectors E obtained from the PLM are processed through a BiLSTM layer to capture both contextual information and sequential characteristics [42,43].

Figure 3.

The architecture of data processing from the PLM to the BiLSTM. This shows how the hidden states derived from the PLM are further processed by the BiLSTM to produce the BiLSTM-enhanced output.

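The BiLSTM processes the embeddings $E = (e_1, \dots, e_n)$ as follows:

$$\overrightarrow{h}_t = \overrightarrow{\mathrm{LSTM}}\left(e_t, \overrightarrow{h}_{t-1}\right), \qquad \overleftarrow{h}_t = \overleftarrow{\mathrm{LSTM}}\left(e_t, \overleftarrow{h}_{t+1}\right), \qquad h_t = \left[\overrightarrow{h}_t; \overleftarrow{h}_t\right]$$

By concatenating the forward and backward LSTM outputs, the BiLSTM produces a richer representation that incorporates both past and future context within the sequence.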
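A minimal NumPy sketch of the BiLSTM step over PLM outputs follows. The random matrix `E` stands in for the PLM output, the dimensions are toy values, and choosing a per-direction size of half the input width (so the concatenated output matches the input width) is an assumption made for illustration. The final state `h_n` is taken as the last forward state plus the backward state at the first token, which is also the convention of PyTorch's `nn.LSTM`.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def run_lstm(E, W, U, b):
    """Unidirectional LSTM over E (n, d_in); returns all hidden states (n, d_h).

    Gate rows are stacked in the (assumed) order i, f, o, g.
    """
    d_h = U.shape[1]
    h, c = np.zeros(d_h), np.zeros(d_h)
    outputs = []
    for e_t in E:
        z = W @ e_t + U @ h + b
        i, f, o = sigmoid(z[:d_h]), sigmoid(z[d_h:2 * d_h]), sigmoid(z[2 * d_h:3 * d_h])
        g = np.tanh(z[3 * d_h:])
        c = f * c + i * g
        h = o * np.tanh(c)
        outputs.append(h)
    return np.stack(outputs)

def bilstm(E, fwd_params, bwd_params):
    H_fwd = run_lstm(E, *fwd_params)               # left-to-right pass
    H_bwd = run_lstm(E[::-1], *bwd_params)[::-1]   # right-to-left pass, re-aligned to token order
    H = np.concatenate([H_fwd, H_bwd], axis=-1)    # h_t = [forward h_t; backward h_t]
    h_n = np.concatenate([H_fwd[-1], H_bwd[0]])    # final state of each direction
    return H, h_n

rng = np.random.default_rng(0)
n, d_model, d_h = 7, 16, 8     # assumed: 2 * d_h == d_model keeps the output at the input width

def init_params():
    return (rng.normal(scale=0.1, size=(4 * d_h, d_model)),
            rng.normal(scale=0.1, size=(4 * d_h, d_h)),
            np.zeros(4 * d_h))

E = rng.normal(size=(n, d_model))   # stands in for the PLM output E
H, h_n = bilstm(E, init_params(), init_params())
print(H.shape, h_n.shape)  # (7, 16) (16,)
```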

3.3. Query Vector Formulation

After selecting the target used as the query and obtaining its token vectors, we considered three methods for formulating the query: padding, average pooling, and max pooling. These methods aim to accommodate targets of varying lengths and, in the case of pooling, to condense their information, thereby helping the model process and interpret the target effectively.

Padding is commonly used to standardize sequence lengths for parallel processing. Padding tokens, typically zeros, are dynamically appended within the batch to ensure that all sequences align with the longest sequence length.

Average pooling summarizes the overall information of the sequence to a single vector by taking the mean of the word embeddings. It treats every word equally without distinguishing the importance of each word.

Max pooling selects the maximum value across the embeddings in each dimension. It is particularly beneficial when certain features of a sequence are more important.
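The three query formulations can be sketched in NumPy for a batch of targets of different lengths. Masking padded positions out of both pooling operations is an assumption of this sketch; without the mask, the zero padding would pull averages toward zero and could win the maximum for dimensions whose real values are all negative.

```python
import numpy as np

def pad_batch(seqs):
    """Zero-pad variable-length (len_k, d) arrays to (batch, max_len, d) plus a 0/1 mask."""
    max_len = max(s.shape[0] for s in seqs)
    d = seqs[0].shape[1]
    out = np.zeros((len(seqs), max_len, d))
    mask = np.zeros((len(seqs), max_len))
    for k, s in enumerate(seqs):
        out[k, :len(s)] = s
        mask[k, :len(s)] = 1.0
    return out, mask

def average_pool(padded, mask):
    # Mean over the real target tokens only; padding positions are excluded.
    return (padded * mask[..., None]).sum(axis=1) / mask.sum(axis=1, keepdims=True)

def max_pool(padded, mask):
    # Per-dimension maximum over real tokens; padding is set to -inf so it never wins.
    return np.where(mask[..., None] == 1.0, padded, -np.inf).max(axis=1)

t1 = np.array([[1.0, -2.0], [3.0, 0.0]])   # a two-token target
t2 = np.array([[-1.0, -5.0]])              # a one-token target
padded, mask = pad_batch([t1, t2])
avg = average_pool(padded, mask)           # rows [2, -1] and [-1, -5]
mx = max_pool(padded, mask)                # rows [3, 0] and [-1, -5]
```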

Following the formulation of query vectors, we applied the attention as delineated in Equation (7).
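A minimal sketch of the target attention step follows. It assumes (i) average pooling of the target's token vectors as the query, (ii) the whole encoded sequence as key and value, and (iii) no learned projection matrices; these details are not specified in this section, so treat them as assumptions rather than the exact KOTAC implementation. The function name `target_attention` is hypothetical.

```python
import numpy as np

def target_attention(E, target_span):
    """Single-query scaled dot-product attention with the target as the query.

    E: (n, d) encoded token vectors (PLM or BiLSTM output).
    target_span: half-open (start, end) indices of the target (InT or ExT).
    Returns the attention output (d,) and the attention weights (n,).
    """
    start, end = target_span
    q = E[start:end].mean(axis=0, keepdims=True)   # (1, d) average-pooled target query
    K = V = E                                       # assumed: whole sequence as key and value
    scores = q @ K.T / np.sqrt(E.shape[1])          # (1, n) scaled scores
    scores = scores - scores.max()                  # numerical stability
    weights = np.exp(scores) / np.exp(scores).sum()
    return (weights @ V)[0], weights[0]

rng = np.random.default_rng(0)
E = rng.normal(size=(10, 8))           # 10 encoded tokens, hidden size 8
A, w = target_attention(E, (3, 5))     # target occupies positions 3 and 4
print(A.shape, w.shape)                # (8,) (10,)
```

The weights `w` are the per-token scores of the kind visualized in an attention heatmap.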

3.4. Sentence Representation

While the attention output offers a refined focus on the target, we tried different strategies to supplement and modulate it. The attention output alone might not capture the overall expression, such as a consistent emotional state across a sentence; relying solely on it might therefore lead to overconcentration on specific parts of the text and neglect of the broader context or holistic features. Our approach to this issue is to combine a sentence representation with the attention output. We used the [CLS] vector of the PLM and the final hidden state (h_n) of the BiLSTM as the sentence representations.

3.5. Combining Method

This subsection explores how we integrated the attention output obtained from Equation (7), denoted as $A$, with the additional sentence representation derived in Section 3.4, denoted as $S$.

We refer to the approach of using only the attention output as the final output $O$, that is, as the input to the classification layer, as X:

$$O = A$$

Element-Wise Addition (Plus) merges the vectors by adding corresponding elements. This method requires both sources to have the same dimension and creates a new vector of that same dimension. It is useful when the attention output and the sentence representation are equally informative and complementary, allowing a direct blend of the two:

$$O = A + S$$

Concatenation (Cat) joins the two vectors into a single, extended vector. This method is advantageous for retaining the full information content of both sources without any loss:

$$O = [A; S]$$

A Gating Mechanism (Gate) learns the relative contributions of the two sources dynamically. The combined input for the gate is formed by concatenating the attention output with the chosen sentence representation:

$$C = [A; S]$$

A linear transformation is then applied to this combined input, followed by a sigmoid activation function:

$$g = \sigma\left(W_{\text{gate}}\, C + b_{\text{gate}}\right)$$

The resulting values, between 0 and 1, function as the gate, modulating the extent to which each source contributes to the final output:

$$O = g \odot A + (1 - g) \odot S$$
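The four combining methods can be sketched in NumPy as follows. In this sketch the gate output is read as a convex mix in which g weights the attention output, consistent with how the mean gate value is interpreted in the results; treat that form, and the names `combine`, `W_gate`, and `b_gate`, as assumptions.

```python
import numpy as np

def combine(A, S, method, W_gate=None, b_gate=None):
    """Combine attention output A (d,) and sentence representation S (d,) into O."""
    if method == "X":
        return A                                   # attention output only
    if method == "Plus":
        return A + S                               # element-wise addition (same dimension)
    if method == "Cat":
        return np.concatenate([A, S])              # (2d,) extended vector
    if method == "Gate":
        C = np.concatenate([A, S])                 # gate input [A; S]
        g = 1.0 / (1.0 + np.exp(-(W_gate @ C + b_gate)))   # (d,), values in (0, 1)
        return g * A + (1.0 - g) * S               # assumed convex mix of the two sources
    raise ValueError(f"unknown method: {method}")

d = 4
A = np.array([1.0, 2.0, 3.0, 4.0])
S = np.array([3.0, 2.0, 1.0, 0.0])
O_gate = combine(A, S, "Gate", np.zeros((d, 2 * d)), np.zeros(d))
print(O_gate)  # [2. 2. 2. 2.]
```

With all-zero gate weights, g is 0.5 everywhere, so the output is simply the mean of the two sources; training moves g away from 0.5 where one source is more useful.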

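The final output $O$ is projected through a linear layer into a prediction space suitable for multi-label classification, producing logits $z \in \mathbb{R}^{N}$, where $N$ represents the number of emotion labels:

$$z = W_{\text{cls}}\, O + b_{\text{cls}}$$

Then, the logits are fed into PyTorch’s BCEWithLogitsLoss, which combines a sigmoid layer and the binary cross-entropy loss (BCELoss) in a single class. For a label vector $y \in \{0, 1\}^{N}$, it computes

$$\mathcal{L} = -\frac{1}{N}\sum_{k=1}^{N}\left[y_k \log \sigma(z_k) + (1 - y_k) \log\left(1 - \sigma(z_k)\right)\right]$$

BCEWithLogitsLoss is more numerically stable than a plain sigmoid followed by BCELoss because it combines the two operations and applies the log-sum-exp trick.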
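The projection and the numerical-stability argument can be checked with a small NumPy sketch. It is an illustration of the loss, not PyTorch's implementation; the weights, dimensions, and labels are hypothetical.

```python
import numpy as np

def bce_with_logits(z, y):
    """Mean binary cross-entropy computed directly from logits (stable form)."""
    # Equivalent to -[y log sigma(z) + (1 - y) log(1 - sigma(z))], rearranged so that
    # exp() is only ever applied to non-positive numbers.
    return np.mean(np.maximum(z, 0.0) - z * y + np.log1p(np.exp(-np.abs(z))))

def sigmoid_then_bce(z, y):
    """Naive version: apply the sigmoid first, then the cross-entropy."""
    p = 1.0 / (1.0 + np.exp(-z))
    return np.mean(-(y * np.log(p) + (1.0 - y) * np.log(1.0 - p)))

# Toy classification head: final output O (size 16) -> N = 8 emotion logits.
rng = np.random.default_rng(0)
N, d = 8, 16
O = rng.normal(size=d)
W_cls, b_cls = rng.normal(scale=0.1, size=(N, d)), np.zeros(N)
z = W_cls @ O + b_cls
y = np.array([1, 0, 0, 1, 0, 0, 0, 0], dtype=float)   # labels 0 and 3 present
loss = bce_with_logits(z, y)

# With a large, confidently wrong logit, sigma(40) rounds to exactly 1.0 in float64,
# so the naive version hits log(0) and returns inf; the stable form returns 40.
with np.errstate(divide="ignore"):
    naive = sigmoid_then_bce(np.array([40.0]), np.array([0.0]))
stable = bce_with_logits(np.array([40.0]), np.array([0.0]))
```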

4. Experiments

4.1. Data Preparation and Explanation

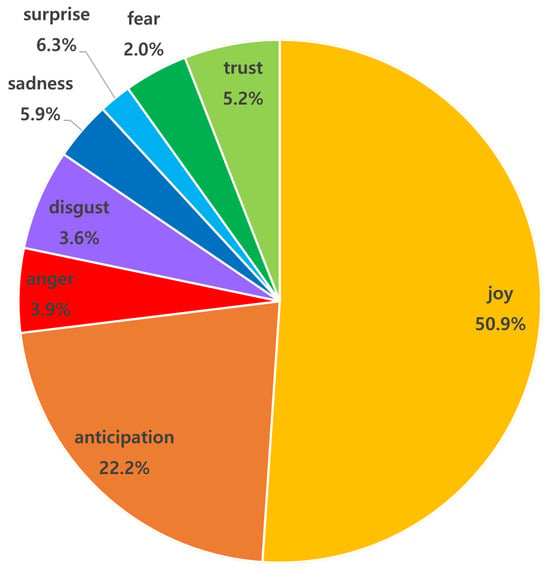

For our experiments, we used the 2023 National Institute of Korean Language Artificial Intelligence Language Proficiency Evaluation: Emotion Analysis Dataset (NIKL2023), which consists of data gathered from Twitter in the domain of cultural content. The dataset consists of sentences with specified targets. The task is to classify the text associated with each target into eight emotion categories adopted from Plutchik’s wheel [16]: joy, anticipation, trust, surprise, disgust, fear, anger, and sadness [20]. It is framed as a multi-label classification problem, in which each emotion category is treated as a separate label that can be either True or False for a given target. Because the answers for the evaluation dataset are not publicly available, we partitioned the original training set into new training and validation sets at a 9:1 ratio, maintaining a similar class distribution, and used the original validation set as the test set. Table 4 and Figure 4 show the distribution of our revised dataset.
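In a multi-label setup like this, each target's annotation becomes an 8-dimensional 0/1 vector, and per-label counts expose the class imbalance. The sketch below assumes a hypothetical `{emotion: bool}` dictionary per target; the actual field names and file format of NIKL2023 are not given here.

```python
import numpy as np

EMOTIONS = ["joy", "anticipation", "trust", "surprise",
            "disgust", "fear", "anger", "sadness"]

def to_multi_hot(annotation):
    """Turn a hypothetical {emotion: bool} mapping into a length-8 vector of 0s and 1s.

    Emotions missing from the mapping are treated as False.
    """
    return np.array([1.0 if annotation.get(e, False) else 0.0 for e in EMOTIONS])

def label_counts(annotations):
    # Per-emotion positive counts: a quick view of the class imbalance.
    return dict(zip(EMOTIONS, np.sum([to_multi_hot(a) for a in annotations], axis=0)))

example = {"joy": True, "trust": True, "anger": False}
vec = to_multi_hot(example)   # joy and trust set, all other labels 0
```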

Table 4.

Sizes (sentences) of the dataset.

Figure 4.

Emotion distribution of training dataset.

To assess more effectively the ability to identify contrasting emotional states within one sentence, we extracted Multi-Target Multi-Emotion (MTME) sentences from the test dataset. These samples represent the core of ABEA tasks. Each sentence in this subset contains multiple targets, each associated with a different emotion, and the subset contains 41 sentences in total. Despite this relatively small number, introducing this evaluation approach is valuable for advancing the methodology for assessing ABEA tasks.
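One way to implement the MTME filter is sketched below. It reads “each associated with a different emotion” as “at least two targets whose emotion label sets are pairwise distinct”; that reading, the row format, and the sample rows are assumptions for illustration.

```python
def extract_mtme(rows):
    """Select sentences with >= 2 targets whose emotion label sets all differ.

    rows: iterable of (sentence_id, target, emotions), where emotions is a set of labels.
    """
    by_sentence = {}
    for sid, target, emotions in rows:
        by_sentence.setdefault(sid, {})[target] = frozenset(emotions)
    selected = []
    for sid, targets in by_sentence.items():
        label_sets = list(targets.values())
        # Keep the sentence only if no two targets share the same label set.
        if len(label_sets) >= 2 and len(set(label_sets)) == len(label_sets):
            selected.append(sid)
    return selected

rows = [
    ("s1", "coffee", {"disgust"}),
    ("s1", "atmosphere", {"joy"}),
    ("s2", "actor", {"joy"}),
    ("s2", "director", {"joy"}),    # same emotions for both targets: not MTME
    ("s3", "drama", {"sadness"}),   # single target: not MTME
]
print(extract_mtme(rows))  # ['s1']
```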

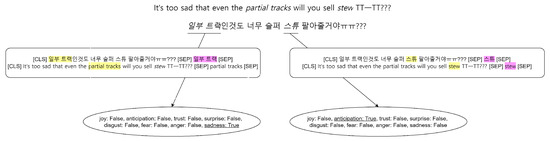

Figure 5 shows an example of our dataset. It is derived from social media and therefore remains unrefined, including grammatical inaccuracies. This sentence also demonstrates characteristics of the Korean language, such as ambiguous boundaries around targets, due to the combination of various morphemes before and after a target.

Figure 5.

Multi-Target Multi-Emotion (MTME) example data from KorTABEA illustrating a text input and its corresponding emotion output.

4.2. Parameters and Metrics

We adopted KcELECTRA-base as the PLM, which is pre-trained on comments collected from news platforms [24]. This user-generated, noisy data features colloquial expressions, neologisms, and frequent non-standard language. Because the Plus method in Section 3.5 requires both sources to have the same dimension, we aligned the BiLSTM output dimension with the hidden size of KcELECTRA-base, which is 768.

We set a learning rate of and employed a linear scheduler across 30 epochs with a weight decay of 0.008. The max sequence length was 256. The training batch size was 64, and the validation batch size was 128. To ensure robust model performance and mitigate overfitting or randomness, we selected the epoch with the highest development score after a minimum of 10 epochs.

The evaluation metric was the micro F1 score, which is suitable for the imbalanced dataset. To ensure robustness, we ran the experiments with 5 different seeds; for the MTME samples, we used 10 seeds because of the smaller size of the MTME set.
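Micro F1 pools true positives, false positives, and false negatives over every (sample, label) cell before computing F1, so frequent labels dominate the score. A minimal NumPy sketch follows; turning logits into predictions at a 0.5 probability threshold (logit > 0) is an assumption, since the decision threshold is not stated here, and returning 0.0 when there are no positives at all mirrors scikit-learn's default.

```python
import numpy as np

def micro_f1(y_true, y_pred):
    """Micro-averaged F1: pool TP, FP, FN over every (sample, label) cell."""
    tp = np.sum((y_true == 1) & (y_pred == 1))
    fp = np.sum((y_true == 0) & (y_pred == 1))
    fn = np.sum((y_true == 1) & (y_pred == 0))
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom > 0 else 0.0

def predict(logits, threshold=0.0):
    # sigmoid(z) > 0.5 is equivalent to z > 0; the 0.5 threshold is an assumption.
    return (logits > threshold).astype(int)

y_true = np.array([[1, 0, 1], [0, 1, 0]])
logits = np.array([[2.1, -0.3, -1.0], [-2.0, 0.7, 0.4]])
score = micro_f1(y_true, predict(logits))   # TP = 2, FP = 1, FN = 1, so F1 = 4 / 6
```

Repeating training and evaluation for each seed and averaging the resulting scores gives the per-configuration figures.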

4.3. Experiment Setting

To assess the performance of each model on the TABEA task, we first evaluated all possible combinations of the options explained in Section 3 to determine the most effective model setup. For comparison, we used the sentence pair approach, which has been used in prior studies [11,14,35], as our baseline. Refer to Figure 5 for the input format. After selecting the optimal models, we conducted further experiments in two specialized scenarios: MTME and an additional setup that does not use the sentence pair format (i.e., [SEP] Target [SEP]) in the input. This additional setup comprises two configurations.

No Target Input (NTI): In this configuration, inputs do not incorporate any target information. This format has also been used as a comparative experiment in a previous study [13].

Target Attention Only (TAO): Similar to the NTI, this configuration includes only sentences in the input, without directly designating a target. However, it leverages target attention to indirectly incorporate target information. This setup is feasible only in the internal target setting because this input form does not involve an external target when considering the target selection discussed in Section 3.1.

5. Results

5.1. Attention Score

We constructed a simple sample that takes full advantage of the characteristics of social media communication and the Korean language, as shown in Table 1. Reflecting the characteristics of social media, the example incorporates abbreviations and informal expressions, such as “암튼 (anw)” for “아무튼 (anyway)”. It also showcases features of colloquial Korean. Notably, the Korean writing style known as the spiral structure delivers information across whole sentences [21]. Thus, in our sample, the author’s intent becomes clear only towards the end, and the subject (coffee), which also serves as the target, is omitted. Moreover, Korean writing frequently uses ellipses (i.e., …) to convey hesitation or humility, adding another layer of linguistic nuance.

In Figure 6, the attention scores are heightened around the ellipses and the decisive negative comment “anw meh”. This suggests that our model can focus on the parts related to the target word despite the indirect, spiral character of the expression.

Figure 6.

Attention score heatmap of InT-PLM-X model.

5.2. Preliminary Model Evaluation

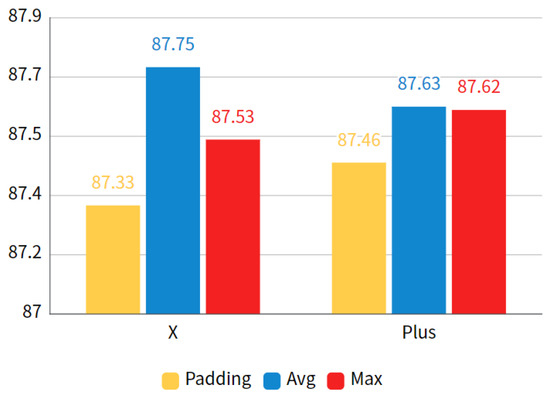

Figure 7 compares the F1 scores of the query vector formulation methods explained in Section 3.3. Keeping the InT-PLM configuration fixed, we compared these formulation methods under two combining methods, X and Plus. Padding preserves the original sequence representation but introduces numerous non-informative tokens and increases the computational load, leading to a lower F1 score.

Figure 7.

Comparison among query vector formulations.

For our task, we found that average pooling is the most appropriate method for query vector formulation. It captures the general essence of the target and minimizes the inclusion of unnecessary data in contrast to max pooling, which focuses on identifying the most significant parts of the target words. Consequently, our experiments primarily utilized average pooling to construct the query vectors.

Table 5 reports the performance of every combination in our model that uses average pooling. Overall, KOTAC performs better than the baseline, which suggests that applying attention to the target words is important. The most notable configurations are InT-PLM-X (KOTAC-IPX) and InT-PLM-CLS-Plus (KOTAC-IPP), which outperformed the baseline by 0.4% and 0.28%, respectively. InT captures nuances associated with the target and is beneficial when context plays a crucial role in emotion analysis. Because these configurations already extract sufficient contextual information from InT, the less complex combination methods, X and Plus, are enough for them to perform well.

Table 5.

Results and complexity of all possible combinations in our model that implemented average pooling.

ExT allows the attention mechanism to focus solely on the target word, enabling the model to detect emotions directly associated with the target. ExT-BiLSTM-h_n-Cat, ExT-BiLSTM-CLS-Cat, and ExT-PLM-CLS-Cat outperformed the baseline by 0.37%, 0.33%, and 0.3%, respectively. Because ExT lacks contextual information, the ExT models performed better with more complex methods, such as Cat or BiLSTM, which presumably compensate for the missing context. However, complex methods do not guarantee superior performance across all experiments, possibly because of the risk of overfitting. This highlights the need to evaluate carefully how methods interact and to avoid excessive complexity.
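To make the combination methods concrete, the sketch below shows one plausible reading of X, Plus, and Cat, assuming that X passes the attention output on unchanged, Plus adds the sentence representation ([CLS] or h_n) element-wise, and Cat concatenates the two. These definitions are assumptions made for illustration; Section 3.3 gives the actual formulations, and the function name `combine` is hypothetical.

```python
from typing import List

Vector = List[float]


def combine(attn_out: Vector, sent_repr: Vector, method: str) -> Vector:
    """Fuse the target-attention output with a sentence representation.

    Assumed semantics (illustrative only):
      X    -> attention output alone
      Plus -> element-wise sum (dimensions must match)
      Cat  -> concatenation (classifier input width doubles)
    """
    if method == "X":
        return list(attn_out)
    if method == "Plus":
        if len(attn_out) != len(sent_repr):
            raise ValueError("Plus requires vectors of equal size")
        return [a + s for a, s in zip(attn_out, sent_repr)]
    if method == "Cat":
        return list(attn_out) + list(sent_repr)
    raise ValueError(f"unknown combination method: {method}")


attn = [0.5, -0.25]   # toy attention output
sent = [1.0, 1.0]     # toy sentence representation, e.g. a [CLS] vector
for m in ("X", "Plus", "Cat"):
    print(m, combine(attn, sent, m))
```

Under this reading, Cat doubles the width of the classifier's input, which is one concrete source of the extra complexity discussed above.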

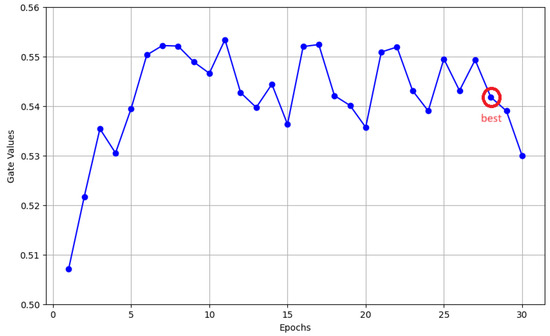

The Gate configuration features a more elaborate mechanism for balancing the attention output and the sentence representation, yet it was not effective, possibly because of its complexity. Despite its relatively modest performance, the mean gate values in Figure 8 offer insight into the relative importance of the attention output versus the sentence representation. At the epoch with the best validation score (epoch 28), the mean gate value is 0.5418, indicating a nearly balanced weighting with slightly greater emphasis on the attention output than on the sentence representation. This suggests that the attention mechanism plays an important role in the model’s decision-making process.
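A gate of this kind is commonly implemented as a sigmoid over the concatenated inputs. The sketch below assumes a single scalar gate per example, g = σ(w·[a; s] + b), and a fused vector g·a + (1 − g)·s, so that g > 0.5 favors the attention output a over the sentence representation s. The scalar form, the function names, and the toy weights are hypothetical; the model's actual gate may be vector-valued, and its weights are learned.

```python
import math
from typing import List, Sequence, Tuple

Vector = List[float]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def gated_fusion(
    a: Vector, s: Vector, w: Sequence[float], b: float
) -> Tuple[Vector, float]:
    """Scalar gate over [a; s]; returns (fused vector, gate value).

    g close to 1 -> rely on the attention output a,
    g close to 0 -> rely on the sentence representation s.
    """
    if len(a) != len(s) or len(w) != len(a) + len(s):
        raise ValueError("dimension mismatch")
    features = list(a) + list(s)
    g = sigmoid(sum(wi * xi for wi, xi in zip(w, features)) + b)
    fused = [g * ai + (1.0 - g) * si for ai, si in zip(a, s)]
    return fused, g


def mean_gate(gates: Sequence[float]) -> float:
    """Average gate value over a set of examples (e.g. a validation set)."""
    return sum(gates) / len(gates)


# With zero weights and bias the gate is exactly 0.5: an even mix of a and s.
fused, g = gated_fusion([2.0, 0.0], [0.0, 2.0], w=[0.0] * 4, b=0.0)
print(fused, g)  # [1.0, 1.0] 0.5
```

Under this assumed form, a mean gate value of 0.5418 would correspond to an average weighting of roughly 54% on the attention output and 46% on the sentence representation.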

Figure 8.

The graph of mean gate values for the InT-PLM-CLS-Gate model.

5.3. Advanced Assessment: Multi-Target Multi-Emotion Samples and Non-Sentence Pair Format Setup

Based on the analyses and experimental results in the previous section, we select KOTAC-IPX and KOTAC-IPP as the most effective and efficient models. KOTAC-IPX is the simplest configuration yet achieves the highest performance, and KOTAC-IPP is relatively simple yet achieves strong and stable results. We therefore conducted the additional experiments described in Section 4.3 with these two models.

Table 6 compares the performance of the baseline and our models on both the full dataset and the MTME samples, and Tables 7–12 show the corresponding confusion matrices of the baseline and our models. This comparison highlights the difficulty of MTME: when a sentence contains multiple targets, accurately classifying the emotion toward each one is harder. In this setting, the improvement of KOTAC-IPX is notable: it outperformed the baseline by 0.72%. Its target-focused representations likely contributed to its better handling of MTME samples. Conversely, KOTAC-IPP performed comparatively worse on the MTME samples than on the full dataset, scoring 0.43% below the baseline. This decrease might be attributed to the Plus method’s incorporation of additional sentence information, which can lead to an overgeneralization of emotional states across the sentence; the model may then predict emotions that relate to the overall sentence context rather than to the target. Tables 11 and 12 are consistent with this tendency, showing a notably higher number of false positives and fewer true negatives.
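Because the comparisons above pair F1 scores with confusion matrices, it may help to show how a micro-averaged F1 score follows from confusion-matrix counts and why extra false positives lower it. The sketch pools true positives (TP), false positives (FP), and false negatives (FN) over all emotion labels; the counts are made-up toy numbers, not values from Tables 7–12, and the function name is hypothetical.

```python
from typing import Dict, Tuple

# (TP, FP, FN) per emotion label; true negatives do not enter the F1 formula.
Counts = Dict[str, Tuple[int, int, int]]


def micro_f1(counts: Counts) -> float:
    """Micro-F1 = 2*TP / (2*TP + FP + FN), with counts summed over labels."""
    tp = sum(c[0] for c in counts.values())
    fp = sum(c[1] for c in counts.values())
    fn = sum(c[2] for c in counts.values())
    denom = 2 * tp + fp + fn
    return 0.0 if denom == 0 else 2 * tp / denom


toy = {"joy": (8, 2, 0), "anger": (2, 0, 2)}
print(round(micro_f1(toy), 4))  # 0.8333

# Same true positives, but three extra false positives on "anger".
toy_more_fp = {"joy": (8, 2, 0), "anger": (2, 3, 2)}
print(round(micro_f1(toy_more_fp), 4))  # 0.7407
```

In this toy case, three additional false positives lower the score from about 0.833 to about 0.741 without losing a single true positive, which illustrates how a tendency toward false positives can cost F1.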

Table 6.

F1 score comparison among models in the further experiments, including the MTME samples and an additional setup that does not use the sentence pair format.

Table 7.

Confusion matrix of baseline with all data.

Table 8.

Confusion matrix of baseline with MTME samples.

Table 9.

Confusion matrix of KOTAC-IPX with all data.

Table 10.

Confusion matrix of KOTAC-IPX with MTME samples.

Table 11.

Confusion matrix of KOTAC-IPP with all data.

Table 12.

Confusion matrix of KOTAC-IPP with MTME samples.

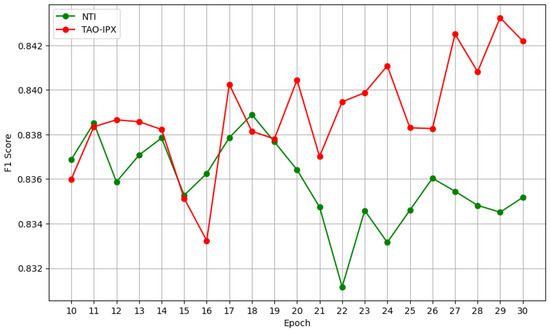

The results reconfirm the effectiveness of including the target directly in the input, as in sentence pair classification. However, target attention also improved scores even without direct target input: on the MTME samples, TAO-IPX (Target Attention Only) outperformed NTI (No Target Input) by 0.5%. On the full dataset, the difference between NTI and TAO-IPX is less striking, but the development score trends in Figure 9 provide another perspective. Whereas the scores of NTI fluctuate across epochs, TAO-IPX shows an ascending trend and consistently surpasses NTI in later epochs, which suggests that further hyperparameter optimization might improve its performance.
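The difference between the sentence pair format and NTI lies in how the input is assembled. The sketch below builds both, assuming the common BERT convention `[CLS] sentence [SEP] target [SEP]` with segment ids 0 and 1, and returns the target's token span so that its vectors could later be pooled into a query. Tokens are whitespace words rather than real subword pieces, and `build_input` is a hypothetical helper, not the authors' preprocessing code.

```python
from typing import List, Optional, Tuple

Span = Tuple[int, int]  # [start, end) token indices


def build_input(
    sentence: List[str], target: Optional[List[str]] = None
) -> Tuple[List[str], List[int], Optional[Span]]:
    """Assemble tokens, segment ids, and the target span.

    target=None gives the single-sentence (NTI-style) input;
    otherwise the target is appended as the second segment of a pair.
    """
    tokens = ["[CLS]"] + sentence + ["[SEP]"]
    segment_ids = [0] * len(tokens)
    if target is None:
        return tokens, segment_ids, None
    start = len(tokens)
    tokens += target + ["[SEP]"]
    segment_ids += [1] * (len(target) + 1)
    return tokens, segment_ids, (start, start + len(target))


# English-gloss toy tokens; the target "coffee" never appears in the sentence,
# as in the spiral-structure example of Table 1.
sentence = ["honestly", "...", "anw", "meh"]
pair_tokens, pair_segments, span = build_input(sentence, ["coffee"])
print(pair_tokens)  # ['[CLS]', 'honestly', '...', 'anw', 'meh', '[SEP]', 'coffee', '[SEP]']
print(span)         # (6, 7)
nti_tokens, _, nti_span = build_input(sentence)
print(nti_tokens, nti_span)
```

Under TAO, presumably, the sentence would be encoded in the single-sentence form while the target reaches the model only through the attention query.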

Figure 9.

Development scores of the NTI and TAO-IPX models across epochs on the entire dataset.

6. Conclusions

In this paper, we proposed the Korean Target-Attention-Based Emotion Classifier (KOTAC) for the Korean Targeted Aspect-Based Emotion Analysis (TABEA) task. The model applies scaled dot-product attention to focus on the target, using the target as the query and the sentence as both key and value. We explored various methods to generate and apply the target vector. Because extracting the target, which serves as the query, is essential, we structured the input as a sentence pair. We considered two approaches: using an internal target (InT) within the sentence as the query and using an external target (ExT) outside the sentence as the query. To obtain the query, key, and value vectors, we used either the raw output of a PLM or a BiLSTM-enhanced output. Because the attention output alone might not adequately capture the overall context of the sentence, we also explored diverse methods of combining it with sentence representations.
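The target attention summarized above can be written as softmax(q Kᵀ / √d_k) V for a single target query q. The plain-Python sketch below computes it for one query vector over the sentence's token vectors, which serve as both keys and values. It omits the learned projection matrices that a full implementation would typically apply to the query, keys, and values, and the function names are hypothetical.

```python
import math
from typing import List, Tuple

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(x - m) for x in scores]
    total = sum(exps)
    return [e / total for e in exps]


def target_attention(
    query: Vector, keys: List[Vector], values: List[Vector]
) -> Tuple[Vector, List[float]]:
    """Scaled dot-product attention for a single (e.g. pooled) target query.

    Returns the attention output and the per-token weights, i.e. the kind of
    quantities an attention heatmap visualizes.
    """
    d_k = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d_k) for key in keys]
    weights = softmax(scores)
    output = [
        sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))
    ]
    return output, weights


# Toy sentence of three tokens; the query is most similar to the last token.
sentence_vecs = [[0.0, 1.0], [0.5, 0.5], [1.0, 0.0]]
out, weights = target_attention([1.0, 0.0], sentence_vecs, sentence_vecs)
print([round(w, 3) for w in weights])
```

Dividing by √d_k keeps the dot products from growing with the vector dimension, which would otherwise push the softmax toward a near one-hot distribution.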

Our proposed KOTAC model effectively identified and focused on the affective parts related to the target despite the complex characteristics of the Korean language and of social media data. Through comprehensive analysis and comparison of various methods, we identified the most suitable configurations for the TABEA task, as detailed in Table 5. Using these configurations, we then conducted additional experiments on the MTME samples and with a non-sentence-pair input format, as presented in Table 6. These experiments showed that representing the target indirectly through target attention led to improvement even when the target was not included directly in the input. In particular, in Multi-Target Multi-Emotion (MTME) scenarios, where contrasting affective states are connected to separate targets within one text, our model improved the micro-F1 score by 0.72% over the baseline, demonstrating its effectiveness in complex emotion analysis.

Although we explored various model configurations, the simpler models often performed best, which suggests room for further analysis and optimization. Additionally, the MTME dataset was small, which may limit the generalizability and robustness of these findings. In future work, obtaining a larger dataset is crucial, as it will enable a more precise and comprehensive analysis of the models’ performance. We also propose a dual strategy: employing the configuration that uses only the attention output for MTME scenarios, to emphasize target-focused analysis, and including the sentence representation for texts with a consistent emotional state, thereby tailoring the analysis to the specific demands of each scenario. Finally, our methodology might be applied to other tasks in which designating the target is important, as in the TABEA task, such as entity recognition and relation extraction.

Author Contributions

Conceptualization, J.K.; methodology, E.K. and J.K.; software, E.K.; validation, E.K.; investigation, E.K.; data curation, E.K.; writing—original draft, E.K. and J.K.; supervision, Y.C. and J.K.; project administration, Y.C. and J.K.; funding acquisition, J.K. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT) (No. RS-2023-00250532).

Data Availability Statement

Restrictions apply to the availability of these data. Data were obtained from the National Institute of Korean Language and are available at https://kli.korean.go.kr/benchmark/task/taskList.do?taskId=8&clCd=END_TASK&subMenuId=sub01 (accessed on 15 April 2024) with the permission of the National Institute of Korean Language.

Acknowledgments

This work was supported by Hankuk University of Foreign Studies Research Fund (of 2024).

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| [CLS] | Classification token |
| [SEP] | Separator token |
| ABSA | Aspect-Based Sentiment Analysis |
| ABEA | Aspect-Based Emotion Analysis |
| ASC | Aspect Sentiment Classification |
| BCE | Binary Cross-Entropy |
| BERT | Bidirectional Encoder Representations from Transformers |
| BiLSTM | Bidirectional Long Short-Term Memory |
| ELECTRA | Efficiently Learning an Encoder that Classifies Token Replacements Accurately |
| ExT | External Target |
| FFN | Feed-Forward Network |
| h_n | Final hidden states of BiLSTM |
| InT | Internal Target |
| KOTAC | Korean Target-Attention-Based Emotion Classifier |
| LSTM | Long Short-Term Memory |
| MLM | Masked Language Model |
| MTME | Multi-Target Multi-Emotion |
| NIKL | National Institute of Korean Language |
| NLP | Natural Language Processing |
| NTI | No Target Input |
| PLM | Pre-Trained Language Model |
| TABEA | Targeted Aspect-Based Emotion Analysis |
| TABSA | Targeted Aspect-Based Sentiment Analysis |
| TAO | Target Attention Only |

References

1. Pang, B.; Lee, L. Opinion Mining and Sentiment Analysis. Found. Trends Inf. Retr. 2008, 2, 1–135.

2. Dang, N.C.; Moreno-García, M.N.; De la Prieta, F. Sentiment Analysis Based on Deep Learning: A Comparative Study. Electronics 2020, 9, 483.

3. Maas, A.L.; Daly, R.E.; Pham, P.T.; Huang, D.; Ng, A.Y.; Potts, C. Learning Word Vectors for Sentiment Analysis. In Proceedings of the 49th Annual Meeting of the Association for Computational Linguistics: Human Language Technologies, Portland, OR, USA, 19–24 June 2011; pp. 142–150.

4. Socher, R.; Perelygin, A.; Wu, J.; Chuang, J.; Manning, C.D.; Ng, A.; Potts, C. Recursive Deep Models for Semantic Compositionality Over a Sentiment Treebank. In Proceedings of the 2013 Conference on Empirical Methods in Natural Language Processing, Seattle, WA, USA, 18–21 October 2013; pp. 1631–1642.

5. Seyeditabari, A.; Tabari, N.; Zadrozny, W. Emotion Detection in Text: A Review. arXiv 2018, arXiv:1806.00674.

6. Pontiki, M.; Galanis, D.; Pavlopoulos, J.; Papageorgiou, H.; Androutsopoulos, I.; Manandhar, S. SemEval-2014 Task 4: Aspect Based Sentiment Analysis. In Proceedings of the 8th International Workshop on Semantic Evaluation (SemEval 2014), Dublin, Ireland, 23–24 August 2014; pp. 27–35.

7. Pontiki, M.; Galanis, D.; Papageorgiou, H.; Manandhar, S.; Androutsopoulos, I. SemEval-2015 Task 12: Aspect Based Sentiment Analysis. In Proceedings of the 9th International Workshop on Semantic Evaluation (SemEval 2015), Denver, CO, USA, 4–5 June 2015; pp. 486–495.

8. Pontiki, M.; Galanis, D.; Papageorgiou, H.; Androutsopoulos, I.; Manandhar, S.; AL-Smadi, M.; Al-Ayyoub, M.; Zhao, Y.; Qin, B.; De Clercq, O.; et al. SemEval-2016 Task 5: Aspect Based Sentiment Analysis. In Proceedings of the 10th International Workshop on Semantic Evaluation (SemEval-2016), San Diego, CA, USA, 16–17 June 2016; pp. 19–30.

9. Padme, S.B.; Kulkarni, P.V. Aspect Based Emotion Analysis on Online User-Generated Reviews. In Proceedings of the 2018 9th International Conference on Computing, Communication and Networking Technologies (ICCCNT), Bengaluru, India, 10–12 July 2018; pp. 1–5.

10. De Bruyne, L.; Karimi, A.; De Clercq, O.; Prati, A.; Hoste, V. Aspect-Based Emotion Analysis and Multimodal Coreference: A Case Study of Customer Comments on Adidas Instagram Posts. In Proceedings of the Thirteenth Language Resources and Evaluation Conference, Marseille, France, 20–25 June 2022; pp. 574–580.

11. Sun, C.; Huang, L.; Qiu, X. Utilizing BERT for Aspect-Based Sentiment Analysis via Constructing Auxiliary Sentence. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, MN, USA, 2–7 June 2019; pp. 380–385.

12. Hoang, M.; Bihorac, O.A.; Rouces, J. Aspect-Based Sentiment Analysis using BERT. In Proceedings of the 22nd Nordic Conference on Computational Linguistics, Turku, Finland, 30 September–2 October 2019; pp. 187–196.

13. Gao, Z.; Feng, A.; Song, X.; Wu, X. Target-Dependent Sentiment Classification with BERT. IEEE Access 2019, 7, 154290–154299.

14. Song, Y.; Wang, J.; Jiang, T.; Liu, Z.; Rao, Y. Targeted Sentiment Classification with Attentional Encoder Network. In Lecture Notes in Computer Science; Springer International Publishing: Berlin/Heidelberg, Germany, 2019; pp. 93–103.

15. Ekman, P. An argument for basic emotions. Cogn. Emot. 1992, 6, 169–200.

16. Plutchik, R. Chapter 1: A general psychoevolutionary theory of emotion. In Theories of Emotion; Plutchik, R., Kellerman, H., Eds.; Academic Press: Cambridge, MA, USA, 1980; pp. 3–33.

17. Wen, H.; Zhang, Z. SAKP: A Korean Sentiment Analysis Model via Knowledge Base and Prompt Tuning. In Proceedings of the 2023 IEEE 3rd International Conference on Computer Communication and Artificial Intelligence (CCAI), Taiyuan, China, 26–28 May 2023; pp. 147–152.

18. Song, M.; Park, H.; Shin, K.S. Attention-based long short-term memory network using sentiment lexicon embedding for aspect-level sentiment analysis in Korean. Inf. Process. Manag. 2019, 56, 637–653.

19. Hahm, Y.; Yoon, C.; Jeong, Y.; Kang, Y.; Park, S.; Lee, Y.; Lim, K. An Analysis of GPT-4 Prompting for Korean Aspect-based Sentiment Analysis. In Proceedings of the Korea Computer Congress of Korean Institute of Information Scientists and Engineers, Jeju, Republic of Korea, 18–20 June 2023; pp. 760–762.

20. Lee, Y.; Kim, S.; Yu, J.; Lee, J.; Kwon, J.; Shin, H.; Kim, D.; Lee, J.; Han, H.; Seo, E. 2022 Corpus Emotion Analysis and Research. 2022. Available online: https://www.korean.go.kr/front/reportData/reportDataView.do?mn_id=207&searchOrder=years&report_seq=1106&pageIndex=1 (accessed on 15 April 2024).

21. Kaplan, R. Cultural Thought Patterns in Inter-Cultural Education. Lang. Learn. 2006, 16, 1–20.

22. Kim, H.; Kim, S.; Kang, I.; Kwak, N.; Fung, P. Korean Language Modeling via Syntactic Guide. In Proceedings of the Thirteenth Language Resources and Evaluation Conference, Marseille, France, 20–25 June 2022; pp. 2841–2849.

23. Park, A.; Lim, S.; Hong, M. Zero Object Resolution in Korean. In Proceedings of the 29th Pacific Asia Conference on Language, Information and Computation, Shanghai, China, 30 October–1 November 2015; pp. 439–448.

24. Lee, J. KcELECTRA: Korean Comments ELECTRA. 2021. Available online: https://github.com/Beomi/KcELECTRA (accessed on 15 April 2024).

25. Zhang, X.; Malkov, Y.; Florez, O.; Park, S.; McWilliams, B.; Han, J.; El-Kishky, A. TwHIN-BERT: A Socially-Enriched Pre-trained Language Model for Multilingual Tweet Representations at Twitter. arXiv 2023, arXiv:2209.07562.

26. Hochreiter, S.; Schmidhuber, J. Long Short-term Memory. Neural Comput. 1997, 9, 1735–1780.

27. Bahdanau, D.; Cho, K.; Bengio, Y. Neural Machine Translation by Jointly Learning to Align and Translate. arXiv 2016, arXiv:1409.0473.

28. Luong, T.; Pham, H.; Manning, C.D. Effective Approaches to Attention-based Neural Machine Translation. In Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, Lisbon, Portugal, 17–21 September 2015; pp. 1412–1421.

29. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is All you Need. In Advances in Neural Information Processing Systems; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: Glasgow, UK, 2017; Volume 30.

30. Devlin, J.; Chang, M.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, NAACL-HLT 2019, Minneapolis, MN, USA, 2–7 June 2019; Burstein, J., Doran, C., Solorio, T., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2019; Volume 1 (Long and Short Papers), pp. 4171–4186.

31. Clark, K.; Luong, M.T.; Le, Q.V.; Manning, C.D. ELECTRA: Pre-training Text Encoders as Discriminators Rather Than Generators. arXiv 2020, arXiv:2003.10555.

32. Zhang, W.; Li, X.; Deng, Y.; Bing, L.; Lam, W. A Survey on Aspect-Based Sentiment Analysis: Tasks, Methods, and Challenges. arXiv 2022, arXiv:2203.01054.

33. Zhang, W.; Deng, Y.; Li, X.; Yuan, Y.; Bing, L.; Lam, W. Aspect Sentiment Quad Prediction as Paraphrase Generation. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, Virtual Event, 7–11 November 2021; pp. 9209–9219.

34. Park, H.; Shin, K. Aspect-Based Sentiment Analysis Using BERT: Developing Aspect Category Sentiment Classification Models. J. Intell. Inf. Syst. 2020, 26, 1–25.

35. Xu, H.; Liu, B.; Shu, L.; Yu, P. BERT Post-Training for Review Reading Comprehension and Aspect-based Sentiment Analysis. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, MN, USA, 2–7 June 2019; pp. 2324–2335.

36. Song, Y.; Wang, J.; Liang, Z.; Liu, Z.; Jiang, T. Utilizing BERT Intermediate Layers for Aspect Based Sentiment Analysis and Natural Language Inference. arXiv 2020, arXiv:2002.04815.

37. Alhuzali, H.; Ananiadou, S. SpanEmo: Casting Multi-label Emotion Classification as Span-prediction. In Proceedings of the 16th Conference of the European Chapter of the Association for Computational Linguistics: Main Volume, Online, 19–23 April 2021; pp. 1573–1584.

38. Xu, P.; Liu, Z.; Winata, G.I.; Lin, Z.; Fung, P. EmoGraph: Capturing Emotion Correlations using Graph Networks. arXiv 2020, arXiv:2008.09378.

39. Mohammad, S.; Bravo-Marquez, F.; Salameh, M.; Kiritchenko, S. SemEval-2018 Task 1: Affect in Tweets. In Proceedings of the 12th International Workshop on Semantic Evaluation, New Orleans, LA, USA, 5–6 June 2018; pp. 1–17.

40. Huang, Z.; Xu, W.; Yu, K. Bidirectional LSTM-CRF Models for Sequence Tagging. arXiv 2015, arXiv:1508.01991.

41. Graves, A.; Mohamed, A.-r.; Hinton, G. Speech Recognition with Deep Recurrent Neural Networks. arXiv 2013, arXiv:1303.5778.

42. Lu, X.; Li, Z.; Tong, Y.; Zhao, Y.; Qin, B. HIT-SCIR at WASSA 2023: Empathy and Emotion Analysis at the Utterance-Level and the Essay-Level. In Proceedings of the 13th Workshop on Computational Approaches to Subjectivity, Sentiment, & Social Media Analysis, Toronto, ON, Canada, 14 July 2023; Barnes, J., De Clercq, O., Klinger, R., Eds.; Association for Computational Linguistics: Toronto, ON, Canada, 2023; pp. 574–580.

43. Lu, D. daminglu123 at SemEval-2022 Task 2: Using BERT and LSTM to Do Text Classification. In Proceedings of the 16th International Workshop on Semantic Evaluation (SemEval-2022), Online, 14–15 July 2022; Emerson, G., Schluter, N., Stanovsky, G., Kumar, R., Palmer, A., Schneider, N., Singh, S., Ratan, S., Eds.; Association for Computational Linguistics: Seattle, WA, USA, 2022; pp. 186–189.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).