A Depth-Progressive Initialization Strategy for Quantum Approximate Optimization Algorithm

Abstract

1. Introduction

2. QAOA: Background and Notation

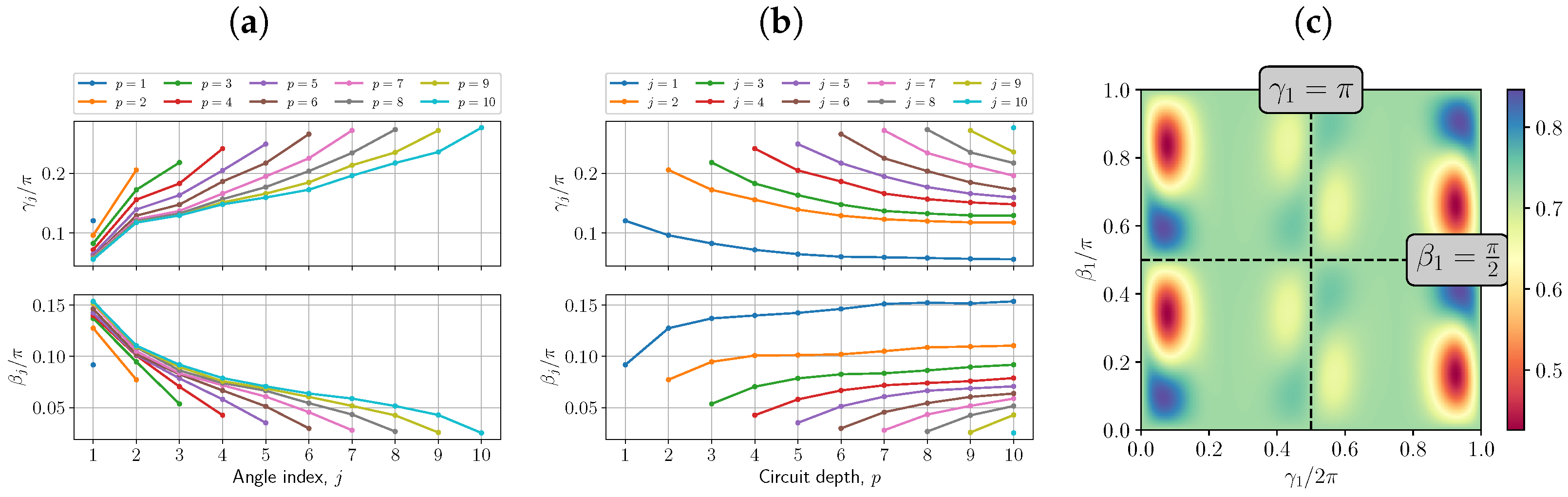
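The sketch below illustrates the standard depth-p QAOA expectation for unweighted Max-Cut. It uses the common conventions: cost operator C = Σ_{(i,j)∈E} (1 − Z_i Z_j)/2, mixer B = Σ_j X_j, initial state |+⟩^{⊗n}, and alternating unitaries exp(−iγ_k C) and exp(−iβ_k B). These conventions may differ in sign or normalization from the notation used in this paper. The function names (`cut_value`, `apply_mixer`, `qaoa_expectation`) and the 4-cycle example are hypothetical and chosen only for illustration. The code is a small pure-Python statevector simulation, not the authors' implementation.

```python
import cmath
import math
from typing import List, Sequence, Tuple

Edge = Tuple[int, int]


def cut_value(bits: int, edges: Sequence[Edge]) -> int:
    """Number of edges cut by the bipartition encoded in the bits of `bits`."""
    return sum(((bits >> i) & 1) != ((bits >> j) & 1) for i, j in edges)


def apply_mixer(state: List[complex], n: int, beta: float) -> List[complex]:
    """Apply exp(-i*beta*X_q) to every qubit q, i.e. the mixer exp(-i*beta*B)."""
    c, s = math.cos(beta), -1j * math.sin(beta)
    for q in range(n):
        mask = 1 << q
        new = [0j] * len(state)
        for k in range(len(state)):
            # exp(-i*beta*X) = cos(beta) I - i sin(beta) X, and X flips bit q.
            new[k] = c * state[k] + s * state[k ^ mask]
        state = new
    return state


def qaoa_expectation(n: int, edges: Sequence[Edge],
                     gammas: Sequence[float], betas: Sequence[float]) -> float:
    """Return <gamma, beta| C |gamma, beta> for depth p = len(gammas)."""
    if len(gammas) != len(betas):
        raise ValueError("gammas and betas must have the same length p")
    dim = 1 << n
    costs = [cut_value(k, edges) for k in range(dim)]
    state = [complex(1 / math.sqrt(dim))] * dim  # uniform superposition |+>^n
    for gamma, beta in zip(gammas, betas):
        # The cost unitary exp(-i*gamma*C) is diagonal in the computational basis.
        state = [a * cmath.exp(-1j * gamma * c) for a, c in zip(state, costs)]
        state = apply_mixer(state, n, beta)
    return sum(abs(a) ** 2 * c for a, c in zip(state, costs))
```

For the 4-cycle with edges (0,1), (1,2), (2,3), (3,0), the depth-0 call returns the random-guess value |E|/2 = 2. At depth 1, the best of (γ, β) = (±π/4, ±π/8) reaches 3, compared with a maximum cut of 4. The dense statevector scales as 2^n, so this sketch is only practical for small graphs.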

3. Patterns in the Optimal Parameters of QAOA

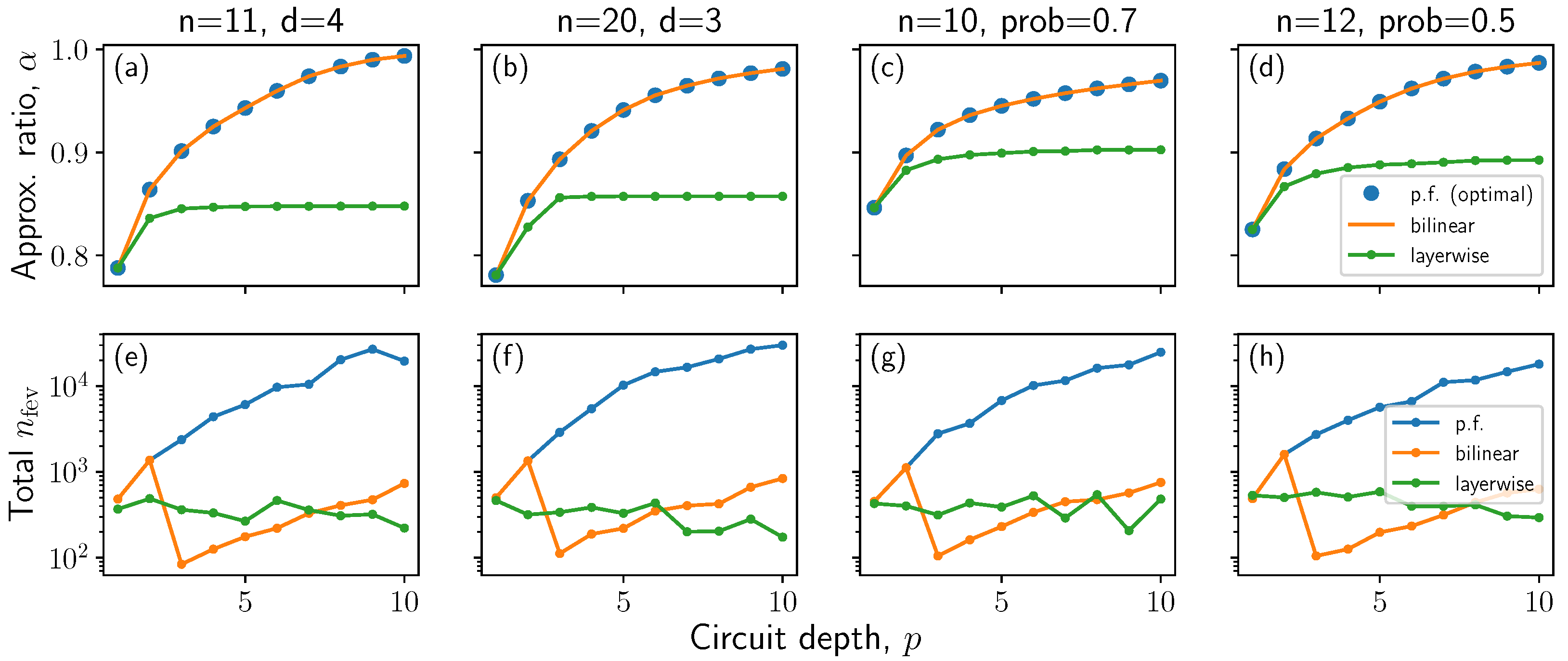

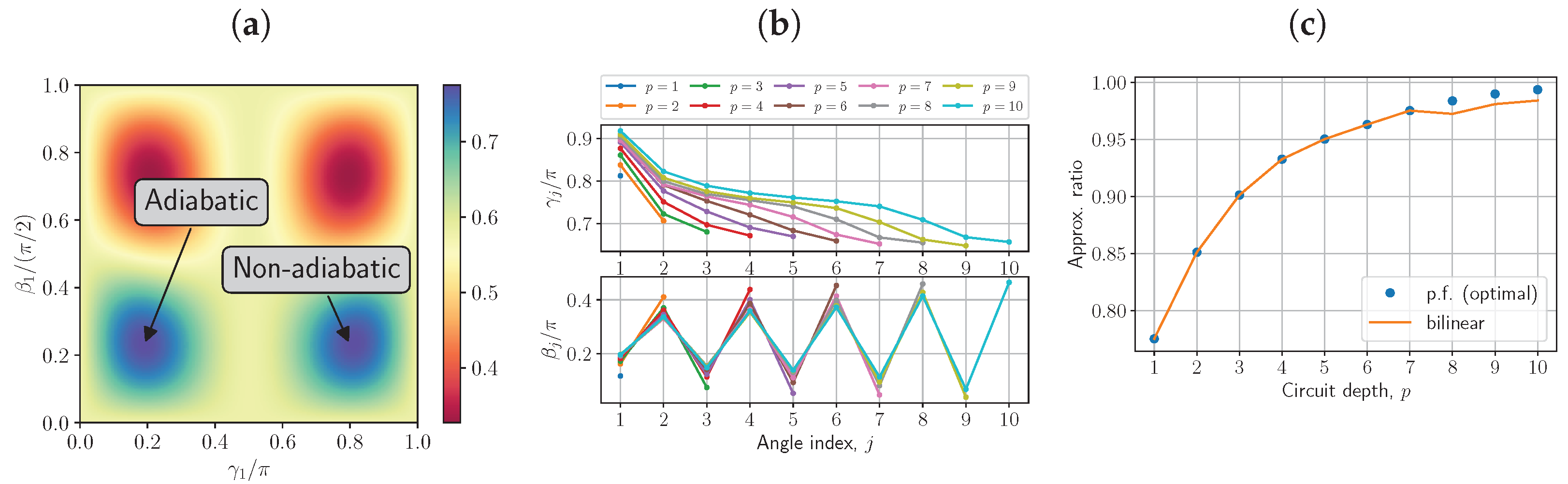

3.1. Resemblance to the Quantum Adiabatic Evolution

3.2. Non-Optimality of Previous Parameters

3.3. Bounded Optimization of QAOA

4. Bilinear Strategy

Algorithm 1. Bilinear initialization

5. Results

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A. Properties of QAOA Max-Cut

Appendix B. Non-Adiabatic Path for Odd-Regular Graphs

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lee, X.; Xie, N.; Cai, D.; Saito, Y.; Asai, N. A Depth-Progressive Initialization Strategy for Quantum Approximate Optimization Algorithm. Mathematics 2023, 11, 2176. https://doi.org/10.3390/math11092176