1. Introduction

Digitization of the global economy is steadily progressing. We are shifting from traditional ways of transacting and investing to digitized ones, which underlines the importance of digital currency in the current economic scenario. Nowadays, nearly every business seeks to digitize its operations in some form. To enhance their efficiency, many business enterprises use digital information systems and software such as Systems Applications and Products (SAP) [1]. To explore the benefits of digital currency, a review of centralization in a decentralized ledger has been extensively presented [2]. Such currency can be regarded as both goods and money at the same time and is known as cryptocurrency [3].

However, the growth of the Bitcoin market is heavily affected by its volatility: Bitcoin is eight times more volatile than the stock market. Many investors and stakeholders remain skeptical about the acceptance of Bitcoin as a reliable asset. A better model for predicting the Bitcoin price can greatly help the expansion of the crypto market. Many researchers have used various artificial neural network (ANN)-based models for Bitcoin prediction. A few machine learning and deep learning methods have been used to predict the prices of Bitcoin as well as other cryptocurrencies [4,5,6]. We proposed a low-complexity polynomial-based neural network optimized by a new mutated climb monkey algorithm for efficient prediction of the daily Bitcoin closing price [7]. Baser and Sadorsky [8] studied the effect of technical indicators, macroeconomic variables and multi-step forecast horizon (from 1 day to 20 days) on Bitcoin price direction prediction using random forests. Erfanian et al. [9] analyzed and compared the forecasting efficiency of machine learning algorithms with statistical analysis by using technical indicators, microeconomic variables, and macroeconomic variables for short-term and long-term Bitcoin price prediction. Rathore et al. [10] demonstrated the qualitative prediction of Bitcoin price by using seasonal inputs for training.

Although many recent studies have addressed the volatility of Bitcoin price by using different input variables, machine learning, and deep learning algorithms, there is very little literature available that predicts the Bitcoin price by analyzing the data preprocessing techniques.

Data preprocessing is a process of cleaning, reducing, extracting, scaling, and handling missing values in a raw dataset. Many researchers have used feature extraction methods to improve the prediction accuracy of Bitcoin [11,12]. Rajabi et al. [13] proposed the use of deep learning in selecting an optimal window size for prediction and then using the optimal size for actual Bitcoin price forecasting.

Data normalization is a vital preprocessing step for the majority of classification and regression problems. It maps highly volatile data to a limited range in order to improve the prediction capability of various models. In the literature, Shanker et al. [14] studied the effect of data standardization on the training of a neural network and concluded that as the network size increases, the self-scalability of the network increases, and hence scaling has no effect on network performance.
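To make the idea of mapping volatile data to a limited range concrete, the following minimal sketch shows the two scalers most often mentioned in this paper, min–max and z-score, together with the inverse of min–max scaling. The function names and the sample prices are illustrative assumptions and are not taken from the study.

```python
from statistics import mean, pstdev


def min_max_scale(values, low=0.0, high=1.0):
    """Map values linearly onto [low, high] (min-max normalization)."""
    v_min, v_max = min(values), max(values)
    span = v_max - v_min
    if span == 0:
        raise ValueError("min-max scaling is undefined for constant data")
    return [low + (v - v_min) * (high - low) / span for v in values]


def min_max_inverse(scaled, v_min, v_max, low=0.0, high=1.0):
    """Map min-max scaled values back to the original price range."""
    return [v_min + (s - low) * (v_max - v_min) / (high - low) for s in scaled]


def z_score_scale(values):
    """Center on the mean and divide by the population standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        raise ValueError("z-score scaling is undefined for constant data")
    return [(v - mu) / sigma for v in values]


# Hypothetical closing prices, used only to illustrate the calls.
prices = [100.0, 150.0, 200.0]
scaled = min_max_scale(prices)
restored = min_max_inverse(scaled, min(prices), max(prices))
standardized = z_score_scale(prices)
```

Keeping the minimum and maximum of the training data makes it possible to denormalize predictions back to actual prices.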

In contrast, many researchers have demonstrated the significant role played by various data-scaling methods in classification problems [15,16]. It has been observed that the choice of data normalization type can improve classification accuracy. Some researchers have studied the impact of various normalization techniques on stock price prediction and have suggested that the data normalization type plays a very significant role in prediction and so should be appropriately selected [17,18].

However, the effect of data normalization on Bitcoin pricing is yet to be analyzed. As the Bitcoin price is highly volatile, to obtain an efficient model for prediction, the normalization of the Bitcoin dataset needs to be explored. The main goal of this investigation is to analyze the influence of data normalization techniques on the prediction capability of a Bitcoin price predictor. Namely, this study investigated the effect of nine different data normalization techniques on the Legendre-based neural network model for daily Bitcoin price prediction.

The novelty of this approach is that it proposes a new dual normalization technique for efficient Bitcoin price prediction. Hybrid normalizations have been extensively used in the medical domain, but in cryptocurrency prediction, researchers have mostly used min–max normalization or z-score normalization. This is the first paper to suggest the analysis of 10 normalization methods using 15 performance metrics for efficient Bitcoin price prediction.

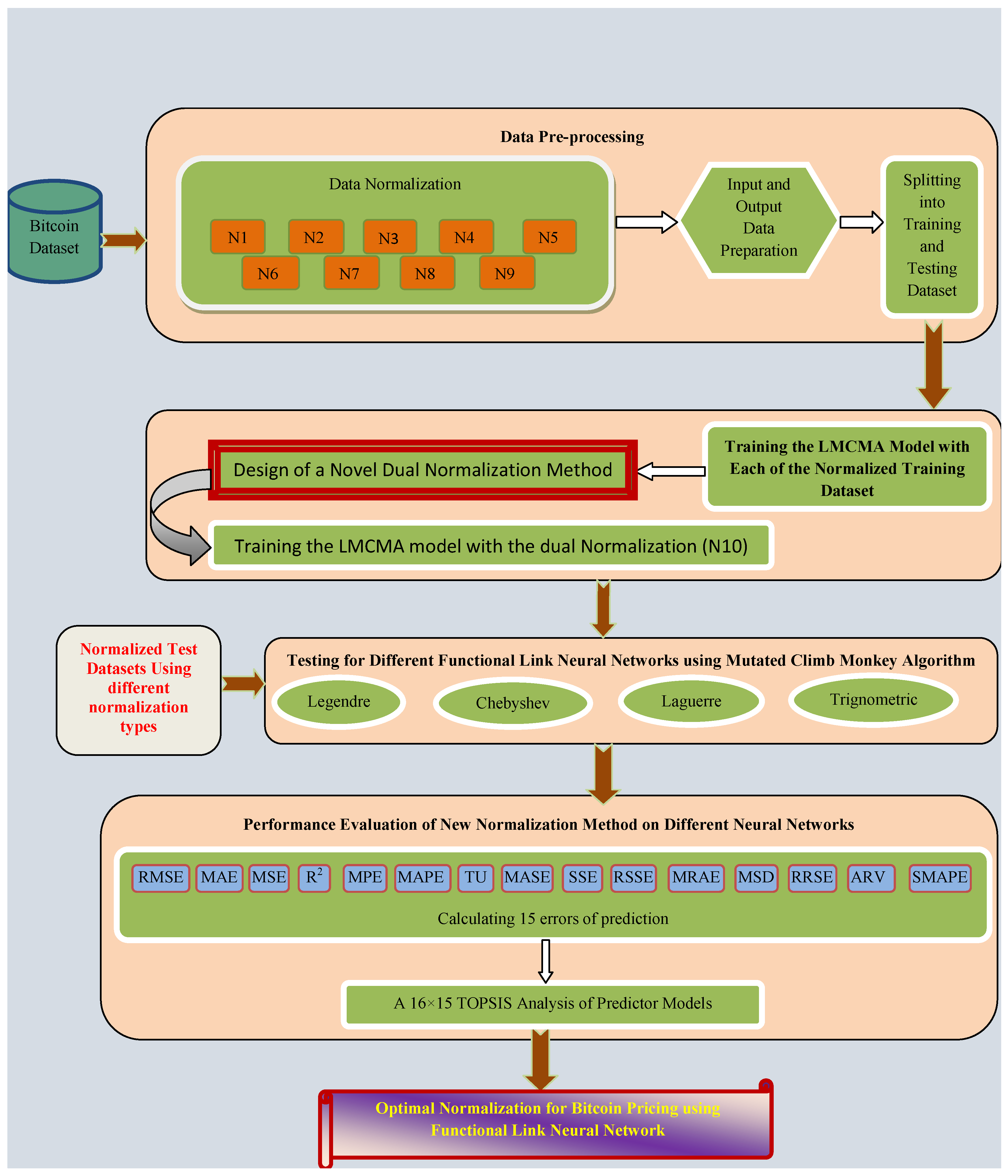

The main contributions of this paper are listed as follows:

Nine data normalization types were used to process three different datasets.

Each normalized dataset was used as input for the Legendre-based polynomial neural network model trained by the mutated climb monkey algorithm (LMCMA) to predict the closing Bitcoin price.

A new dual normalization technique was proposed for an improved prediction.

The proposed normalization technique was tested in three different functional link neural networks, an econometric model, and a Support Vector Regression model.

A TOPSIS-based approach was applied for ranking the normalization type using 15 performance criteria.

The LMCMA model with the proposed dual normalization was also tested for predicting daily Bitcoin log returns for the three datasets under study.

This empirical research analyzed the following research hypotheses:

Hypothesis 1. There is a significant relationship between data normalization and prediction accuracy of Bitcoin Price Predictor models.

Hypothesis 2. A dual normalization improves the prediction capability of Bitcoin price predictor models.

The research methodology is varied in that the Bitcoin predictor models in this study were evaluated using a TOPSIS-based ranking. In addition, the models used datasets scaled using a new normalization technique.

The importance of this research lies in the need for a highly efficient predictor for Bitcoin prices to handle the high volatility feature of Bitcoin prices. The research proposes a dual normalization that significantly improves the accuracy of prediction of Bitcoin closing price as well as daily log returns. The use of the new normalization technique indicated a root mean square error gain of 81.37%, 98.13%, and 98.73% over min–max normalization in the Bitfinex, Binance, and Coinbase Pro datasets, respectively.

Section 2 gives a review of the literature on Bitcoin pricing and normalization techniques used in this paper. Section 3 introduces the basic model used in this study. Section 4 explains the workflow of this study along with explanations of related techniques. It then introduces the proposed normalization and the experimental setup. Section 5 presents the experimental results followed by a thorough discussion in Section 6. The last section concludes this study and suggests various open research issues for future work.

2. Literature Review

Bitcoin is highly volatile and dynamic in nature. Many forecasting models have been presented using machine learning for Bitcoin price prediction. The volatility has been demonstrated by a study of a Support Vector Machine (SVM)-based predictor [19]. Aggarwal et al. [20] demonstrated Bitcoin pricing using an SVM and ensemble approach. Many researchers have used LSTM and Bayesian networks for Bitcoin prediction [6,9]. Deep learning has also been used to predict Bitcoin prices efficiently by using autoencoders and LSTM [5,21]. Rathore et al. [10] have presented a more real-world model by using a FbProphet model for better prediction in comparison to LSTM.

Recently, we proposed a functional link artificial neural network (FLANN) based on Chebyshev and Legendre polynomial functions as a simple yet efficient model for Bitcoin pricing [7]. An optimal FLANN has been proposed to predict Bitcoin price movement by using a genetic algorithm-based optimization of the network [6].

Nayak et al. [22] presented the hybridization of high-order neural network (HONN) models with evolutionary algorithms for efficient stock price prediction. They explained the reliable and simple architecture of various HONNs such as the pi-sigma neural network (PSNN), the sigma-pi neural network (SPNN), and FLANNs based on Chebyshev, Laguerre, and Legendre polynomials. Ye et al. [23] presented stable and more accurate wind power forecasting using a Laguerre polynomial-based neural network. Many other researchers have utilized the flat architecture, fast learning capabilities, and reliable features of FLANN models for stock price, gold price, and mutual fund net asset value prediction [24,25,26,27,28,29]. This motivated us to use the LMCMA model for Bitcoin prediction in this study [7].
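The flat architecture of a polynomial FLANN can be illustrated with a short sketch: each input is expanded into Legendre polynomial terms, and the output is a single weighted sum of those terms. The sketch below is a generic illustration under stated assumptions (the expansion order, the omission of the constant term P_0, a linear output, and all names are hypothetical); it is not the LMCMA model of [7], and it leaves out weight training entirely.

```python
def legendre_terms(x, order):
    """Return [P_1(x), ..., P_order(x)] via Bonnet's recurrence:
    (n + 1) * P_{n+1}(x) = (2n + 1) * x * P_n(x) - n * P_{n-1}(x).
    Inputs are expected to be scaled into [-1, 1]."""
    if order < 1:
        raise ValueError("order must be at least 1")
    prev, curr = 1.0, x  # P_0(x) and P_1(x)
    terms = [curr]
    for n in range(1, order):
        prev, curr = curr, ((2 * n + 1) * x * curr - n * prev) / (n + 1)
        terms.append(curr)
    return terms


def flann_predict(window, weights, bias, order):
    """Single-layer FLANN output: weighted sum of the expanded inputs.
    A linear output is an assumption made for this illustration."""
    features = [t for x in window for t in legendre_terms(x, order)]
    if len(weights) != len(features):
        raise ValueError("expected one weight per expanded feature")
    return bias + sum(w * f for w, f in zip(weights, features))


# Hypothetical inputs and weights, for illustration only.
terms = legendre_terms(0.5, 3)
output = flann_predict([0.5, 1.0], [1.0, 1.0, 1.0, 1.0], 0.0, order=2)
```

Because the expansion replaces hidden layers, only one weight vector has to be optimized, which is what makes such networks attractive for metaheuristic training.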

In the literature, many researchers have studied the effect of data normalization on various classification, regression, and decision-making models. Jain et al. [30] explored min–max and z-score normalization with 12 data complexity measures in 14 classification models to find the best normalization selected dynamically. They used Friedman’s test for evaluation [31]. Alshdaifat et al. [32] analyzed three normalization methods with three missing value handling methods on nine different ANN and SVM models for 18 benchmark datasets. They concluded that the z-score performed the best and decimal scaling was the worst normalization under study. The evaluation was based on Friedman’s test and Nemenyi’s post hoc test [33]. Singh and Singh [16] investigated 14 normalization methods to study their effect on classification accuracy by integrating normalization with weighted features. In [34], they proposed a feature-wise normalization technique. They explained that entire data normalization can be replaced with feature-wise normalization for efficient training.

Sola and Sevilla [35] studied the impact of data normalization on two neural networks trained by using backpropagation in nuclear power plant applications and concluded that normalization reduces errors and error computation time as well as enhances the network performance. The effect of normalization has been studied for many other applications such as linear ordering and 2D coordinate transformation [36,37].

The selection of normalization also plays a vital role in enhancing the performance of multi-criteria decision-making (MCDM) models [38,39,40]. The ranking process of MCDM models is also enhanced by selecting the appropriate normalization technique [41,42].

Very few hybrid normalization techniques have been proposed by combining different available normalization methods [41,43]. Recently, Izonin et al. [44] proposed two-step normalization by combining max-abs scaler and vector scaler, which improved the classification accuracy for medical applications. This motivated us to study the effect of normalization on Bitcoin pricing and propose a new dual normalization technique for enhanced prediction capability.

In the literature, econometric models have been extensively used in the finance domain. Batrancea [45] demonstrated the effectiveness of two econometric models in predicting the influence of many economic indicators on liquidity and solvency ratios in the healthcare industries. In [46], the influence of the financial statement of a bank on its assets, liabilities, and performance is analyzed using an econometric model. For Bitcoin price prediction, many studies have examined the impact of technical indicators and macroeconomic indicators. In [9], the effect of technical, macroeconomic, microstructure, and blockchain indicators on Bitcoin pricing has been analyzed using SVR (Support Vector Regression), MLP, OLS and an ensemble model. The SVR model was efficient in comparison to other models.

In light of the above review, it can be stated that this research will be very useful in the designing of new models by researchers for efficient prediction using the proposed dual normalization. In addition, it can help investors make decisions on Bitcoin investment with a more accurate prediction of future prices.

5. Results

To test the second hypothesis of this study, we divided each of the 10 normalized datasets into a train dataset and a test dataset in a 2:1 ratio. A Legendre FLANN with 5 × 1 input–output neurons was used for training. The network used a sliding window of size 5 to input five historic prices and generate the 6th price. This model uses the RMSE value as a measure of fitness to be minimized by the MCMA algorithm. With a population of 20, the model is iterated 100 times to forecast the daily Bitcoin price for all the datasets. The optimized weights generated for each model under study are used to predict the test prices. The efficiency of each model was tested using 15 error measures. Each model was run 10 times, and the minimum value of each error metric was taken as the final error.
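The data preparation described above can be sketched as follows: a sliding window of five prices forms each input, the next price is the target, the samples are split 2:1, and RMSE serves as the fitness value. This is a minimal illustration, not the study's code. The function names are hypothetical, and it assumes that the split is chronological and applied to the windowed samples, which the text does not state explicitly.

```python
import math


def make_windows(series, window=5):
    """Build (input, target) pairs: `window` past values predict the next one."""
    count = len(series) - window
    inputs = [series[i:i + window] for i in range(count)]
    targets = [series[i + window] for i in range(count)]
    return inputs, targets


def chronological_split(inputs, targets, train_parts=2, test_parts=1):
    """Split samples in order so that the earliest 2/3 form the training set."""
    cut = len(inputs) * train_parts // (train_parts + test_parts)
    return (inputs[:cut], targets[:cut]), (inputs[cut:], targets[cut:])


def rmse(actual, predicted):
    """Root mean square error, the fitness value to be minimized."""
    squared = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return math.sqrt(sum(squared) / len(squared))


# Hypothetical normalized series of 11 values, for illustration only.
series = [float(i) for i in range(1, 12)]
inputs, targets = make_windows(series, window=5)
(train_x, train_y), (test_x, test_y) = chronological_split(inputs, targets)
error = rmse([1.0, 2.0, 3.0], [1.0, 2.0, 5.0])
```

An optimizer such as MCMA would then search for the weight vector whose predictions on `train_x` give the lowest `rmse` against `train_y`.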

The minimum error values for each normalization method in the testing of the LMCMA model using the Bitfinex dataset are shown in Table 7. From Table 7, it is observed that the N10-based model generated minimum values for error metrics E1, E2, E3, E4, E7, E8, E10, and E11. N2 gives optimal E5, E9, and E15. N3, N5, N8 and N9 give minimum E12, E6, E14, and E13, respectively.

The minimum error values for each normalization method in the testing of the LMCMA model using the Binance dataset are shown in Table 8. From Table 8, it is observed that the N10-based model generated minimum values for error metrics E1, E2, E3, E4, E7, E8, E10 and E11. N2 gives optimal values for E5 and E15. N4 gives minimum values for E6 and E13. N6 gives minimum values for E9, E12, and E14.

The minimum error values for each normalization method in the testing of the LMCMA model using the Coinbase Pro dataset are shown in Table 9. From Table 9, it is observed that the N10-based model generated minimum values for error metrics E1, E2, E4, E10, and E11. N3 gives minimum E12 and E13. N4 gives minimum E14 and E15. N6 gives minimum values for E9. N7 gives minimum values for E3, E7, and E8. N8 and N9 give optimal values for E5 and E6, respectively.

As none of the normalizations performs best for all error metrics, a TOPSIS-based ranking is conducted to rank the 10 normalization types during the testing of the LMCMA model. The ranking for each of the datasets under study is shown in Table 10, Table 11 and Table 12.
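For readers unfamiliar with TOPSIS, the ranking step can be sketched as below: each alternative (here, a normalization type) is scored by its closeness to an ideal solution across all criteria (here, error metrics). This is the textbook form of the method under stated assumptions: vector normalization of the decision matrix, equal weights, and all criteria treated as costs unless flagged otherwise. The study's actual weights and settings are not given in this section, and the sample values are hypothetical.

```python
import math


def topsis_scores(matrix, weights=None, benefit=None):
    """Closeness of each row (alternative) to the ideal solution.
    Columns are criteria; they are costs (lower is better) unless
    flagged True in `benefit`. Equal weights are an assumption."""
    n_crit = len(matrix[0])
    weights = weights or [1.0 / n_crit] * n_crit
    benefit = benefit or [False] * n_crit
    # Vector-normalize each column, then apply the criterion weight.
    norms = [math.sqrt(sum(row[j] ** 2 for row in matrix)) for j in range(n_crit)]
    weighted = [
        [weights[j] * row[j] / norms[j] if norms[j] else 0.0 for j in range(n_crit)]
        for row in matrix
    ]
    columns = list(zip(*weighted))
    best = [max(c) if benefit[j] else min(c) for j, c in enumerate(columns)]
    worst = [min(c) if benefit[j] else max(c) for j, c in enumerate(columns)]

    def distance(a, b):
        return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

    scores = []
    for row in weighted:
        d_best, d_worst = distance(row, best), distance(row, worst)
        total = d_best + d_worst
        scores.append(d_worst / total if total else 0.0)
    return scores


def ranks_from_scores(scores):
    """Rank 1 goes to the highest closeness score."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    ranks = [0] * len(scores)
    for position, index in enumerate(order, start=1):
        ranks[index] = position
    return ranks


# Hypothetical error values: 3 alternatives (rows) x 2 error metrics (columns).
errors = [[0.1, 0.2], [0.3, 0.1], [0.5, 0.6]]
scores = topsis_scores(errors)
ranks = ranks_from_scores(scores)
```

In this toy example the third alternative is worst on both criteria, so its closeness score is zero and it is ranked last.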

The ranking in all the three datasets indicates that normalization N10, N7, and N3 are the top three scalers in each of the datasets for the LMCMA model. To check the impact of our proposed normalization N10 on other networks, the original min–max normalization of LMCMA (N1) along with the top three ranked normalization (N10, N7, and N3) are used to test three other FLANNs.

The testing of four networks: Legendre FLANN (Network 1), Chebyshev FLANN (Network 2), Laguerre FLANN (Network 3), and trigonometric FLANN (Network 4) using four normalization types (N1, N3, N7, and N10) generate 16 MCMA-based Bitcoin predictor models: L1, L3, L7, L10, C1, C3, C7, C10, La1, La3, La7, La10, T1, T3, T7, and T10.

The values of the 15 error metrics for the 16 models under testing for the Bitfinex dataset are shown in Table 13. Table 13 indicates that L10 minimizes E2, E3, E4, E7, E8, E10, and E11. L3 minimizes E13. C10 minimizes E1, E3, E4, and E10. La1 minimizes E6. T3 optimizes E5, E9, E12, and E15. T10 minimizes E1, E4, E10, and E14.

The values of the 15 error metrics for the 16 models under testing for the Binance dataset are shown in Table 14. Table 14 indicates that C3 optimizes E5, E9, E14, and E15. C7 minimizes E3 and E10. T1 minimizes E6 and E13. T10 minimizes E1, E2, E3, E4, E7, E8, E10, E11, and E12.

The values of the 15 error metrics for the 16 models under testing for the Coinbase Pro dataset are shown in Table 15. Table 15 indicates that L3 and L7 minimize E3 and E10. C1 minimizes E14. C3 optimizes E5 and E9. C10 minimizes E1, E3, E4, E7, E8, E10, E11, and E15. La1 minimizes E13. La7 minimizes E3. T1, T3, T7, and T10 minimize E6, E12, E2, and E3, respectively.

As none of the models under study dominated the performance, a TOPSIS-based ranking is executed for the three datasets. The ranking for the three datasets is shown in Table 16, Table 17 and Table 18.

Table 16 suggests that L10, C10, and T10 were the top three models in the Bitfinex dataset. The Binance dataset is best predicted by T10, C7, and C10 models as inferred from Table 17. Table 18 indicates that C10, L10, and L7 are the best predictor models for the Coinbase Pro dataset. The top three predictor models in each of the three datasets under study are represented in Table 19. Table 19 indicates that the proposed dual normalization (N10) enhances the prediction capability of the FLANN networks under study.

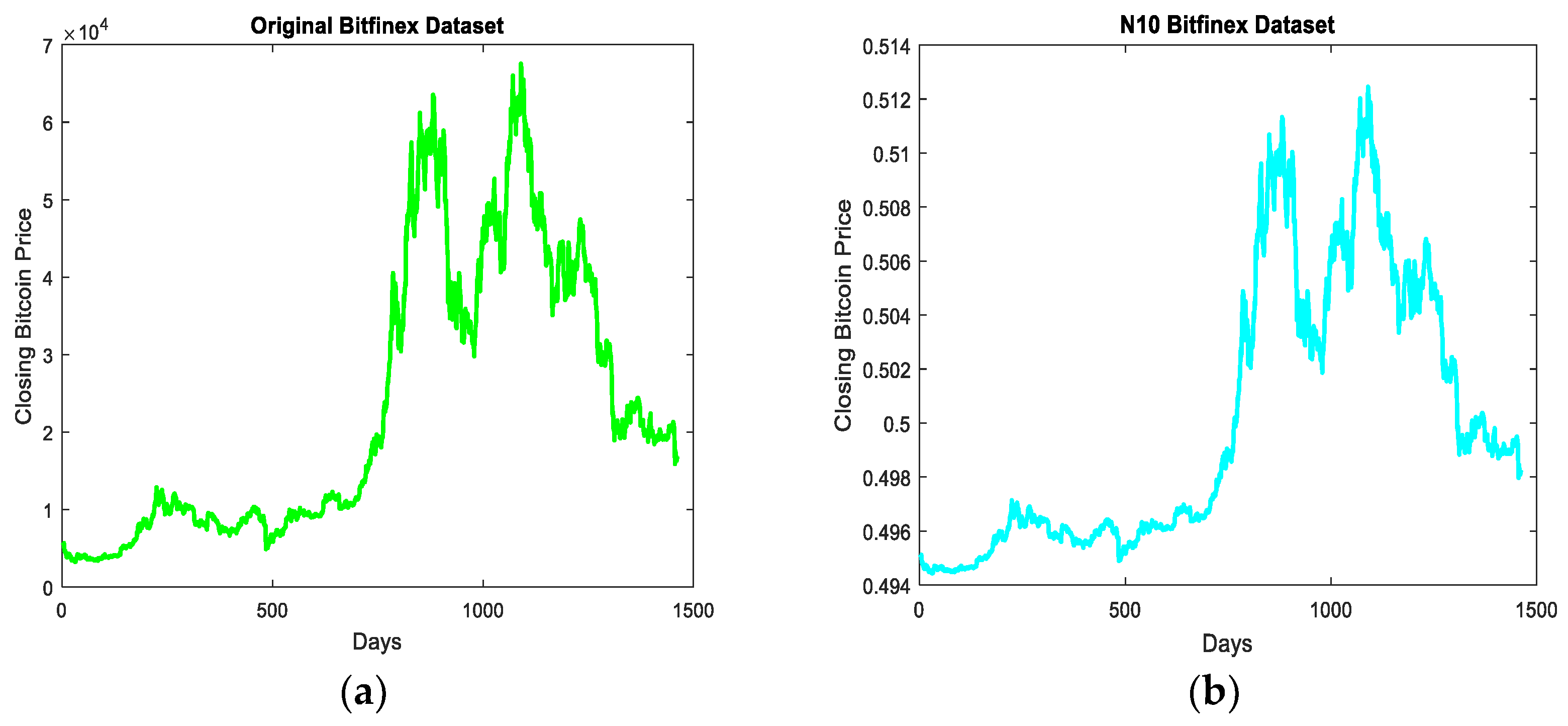

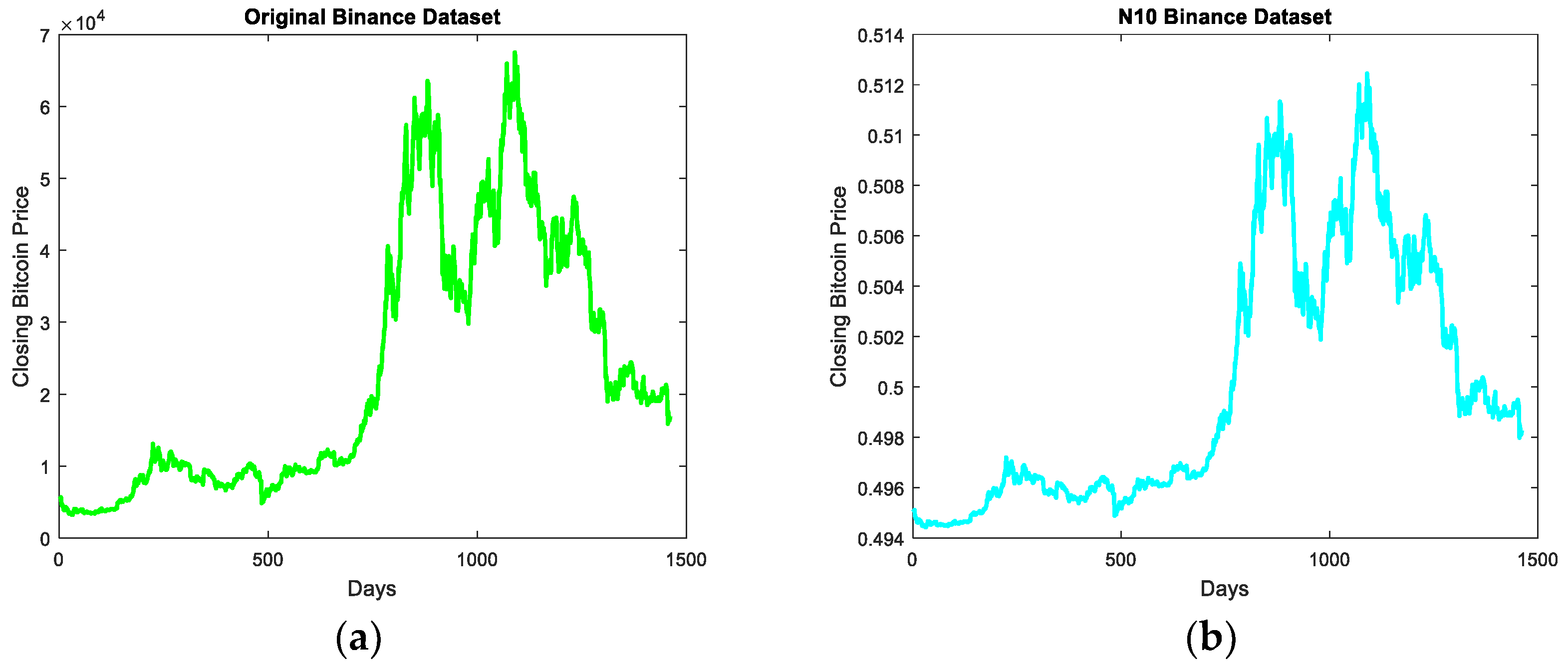

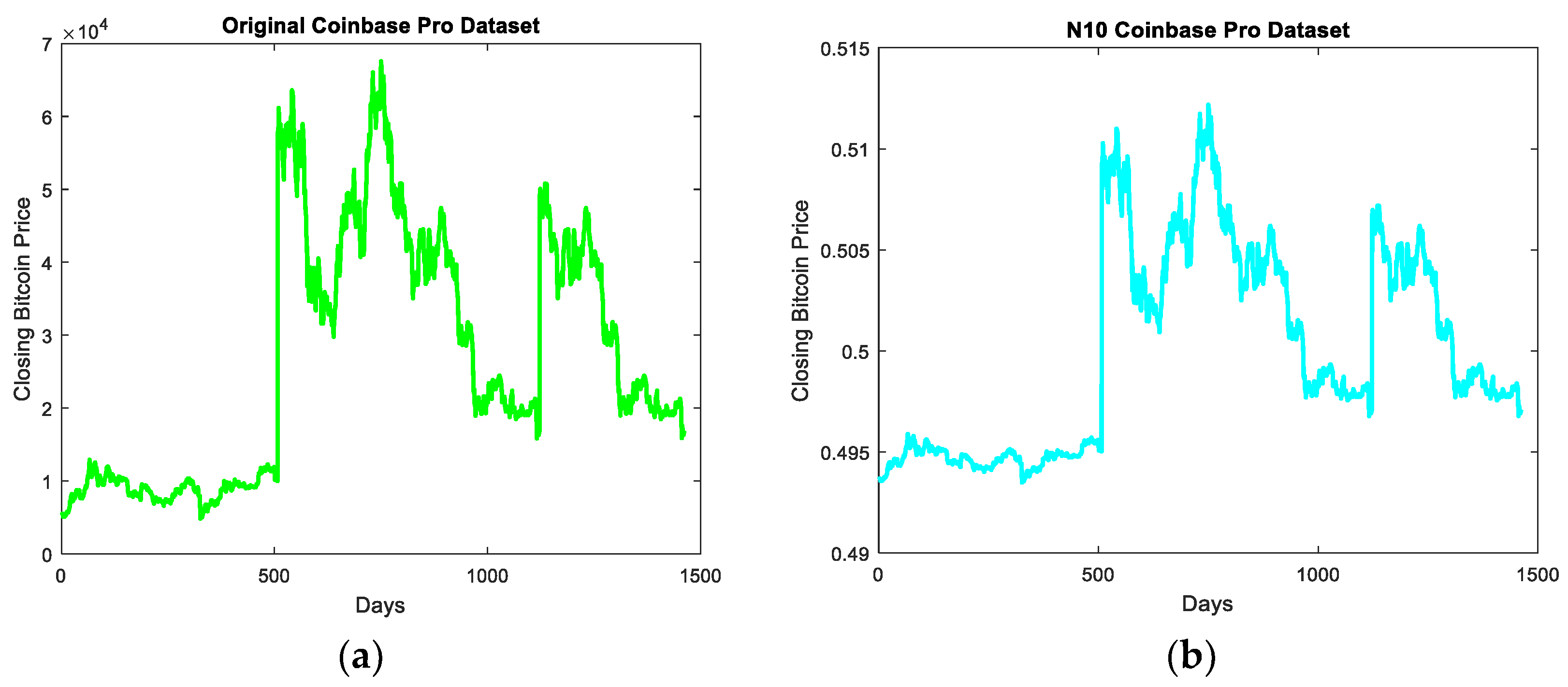

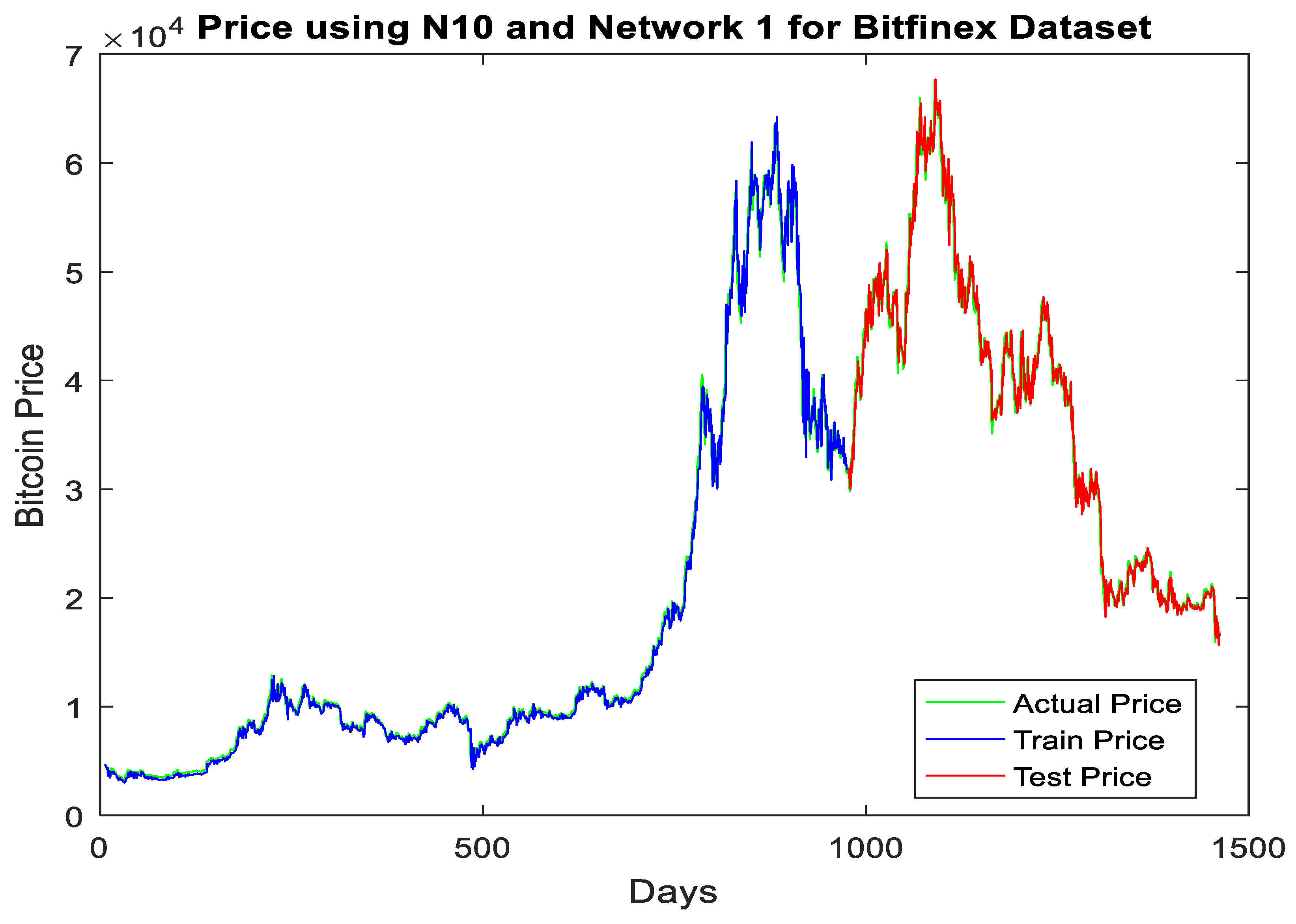

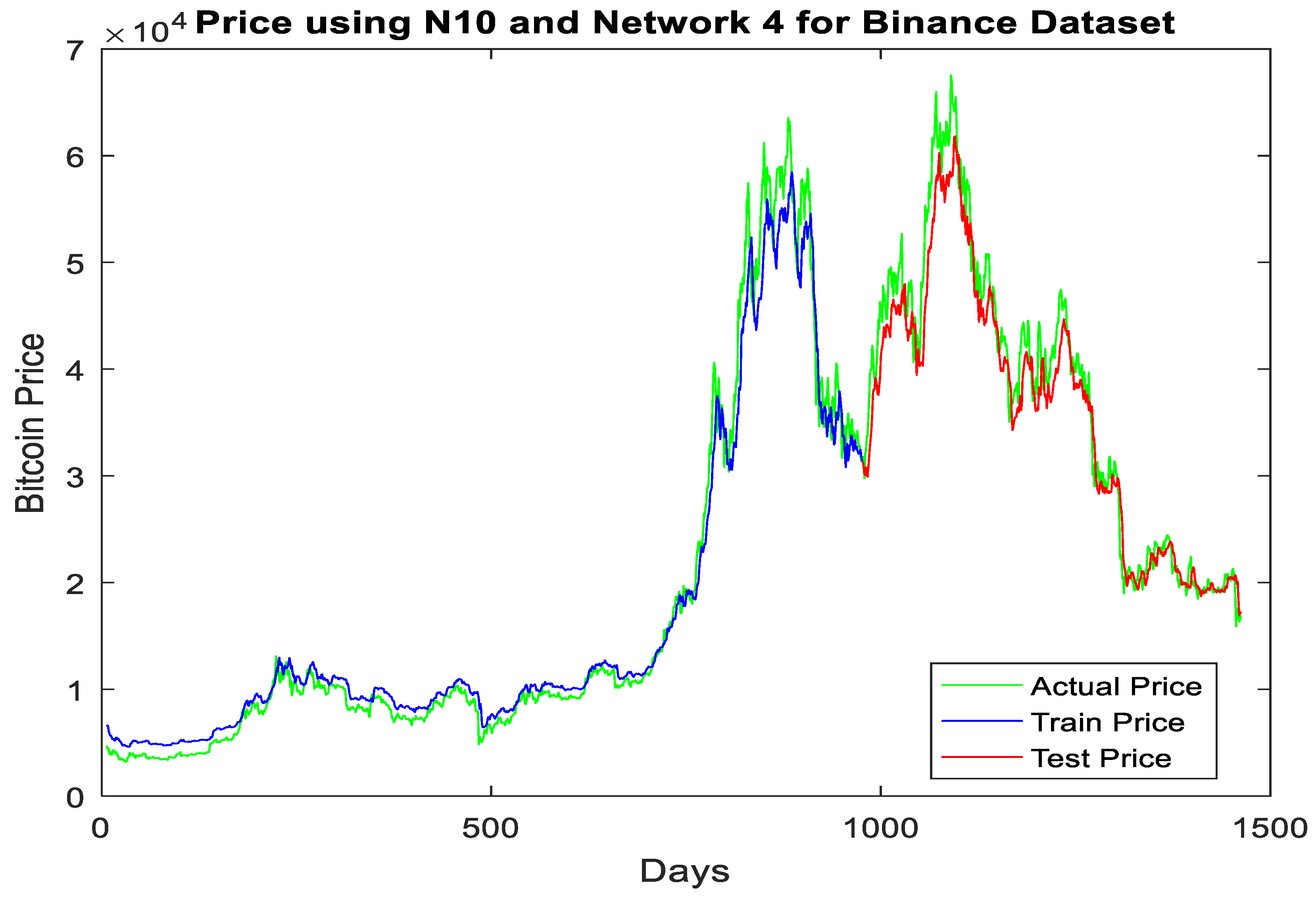

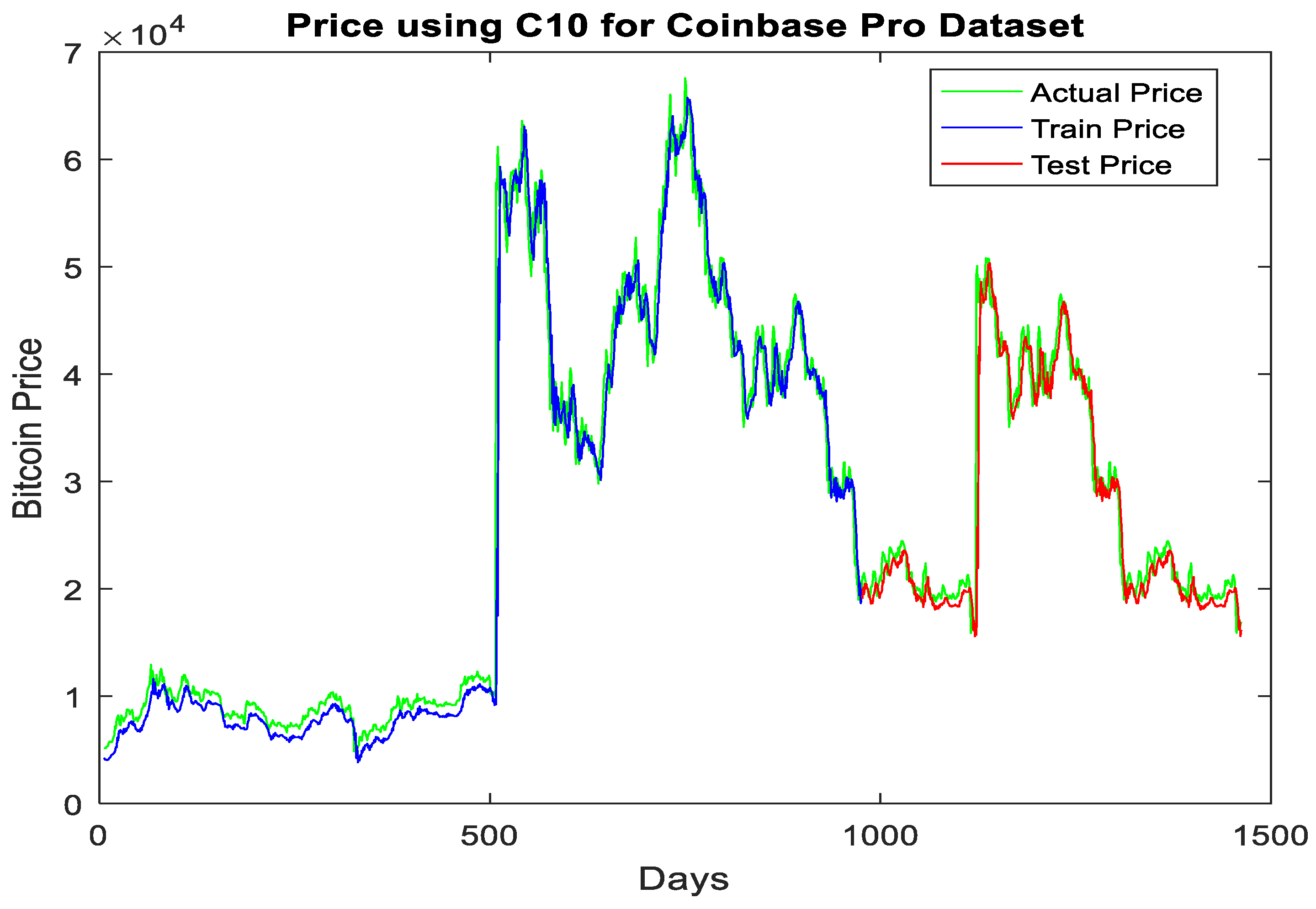

To present the mapping capability of the proposed normalization, the training, testing, and actual output of the first ranked model for each of the three datasets is shown in Figure 6, Figure 7 and Figure 8.

The mapping plots in Figure 6, Figure 7 and Figure 8 indicate that the dual normalization improves the prediction capability of polynomial-based neural network models. This validates the second hypothesis of this research.

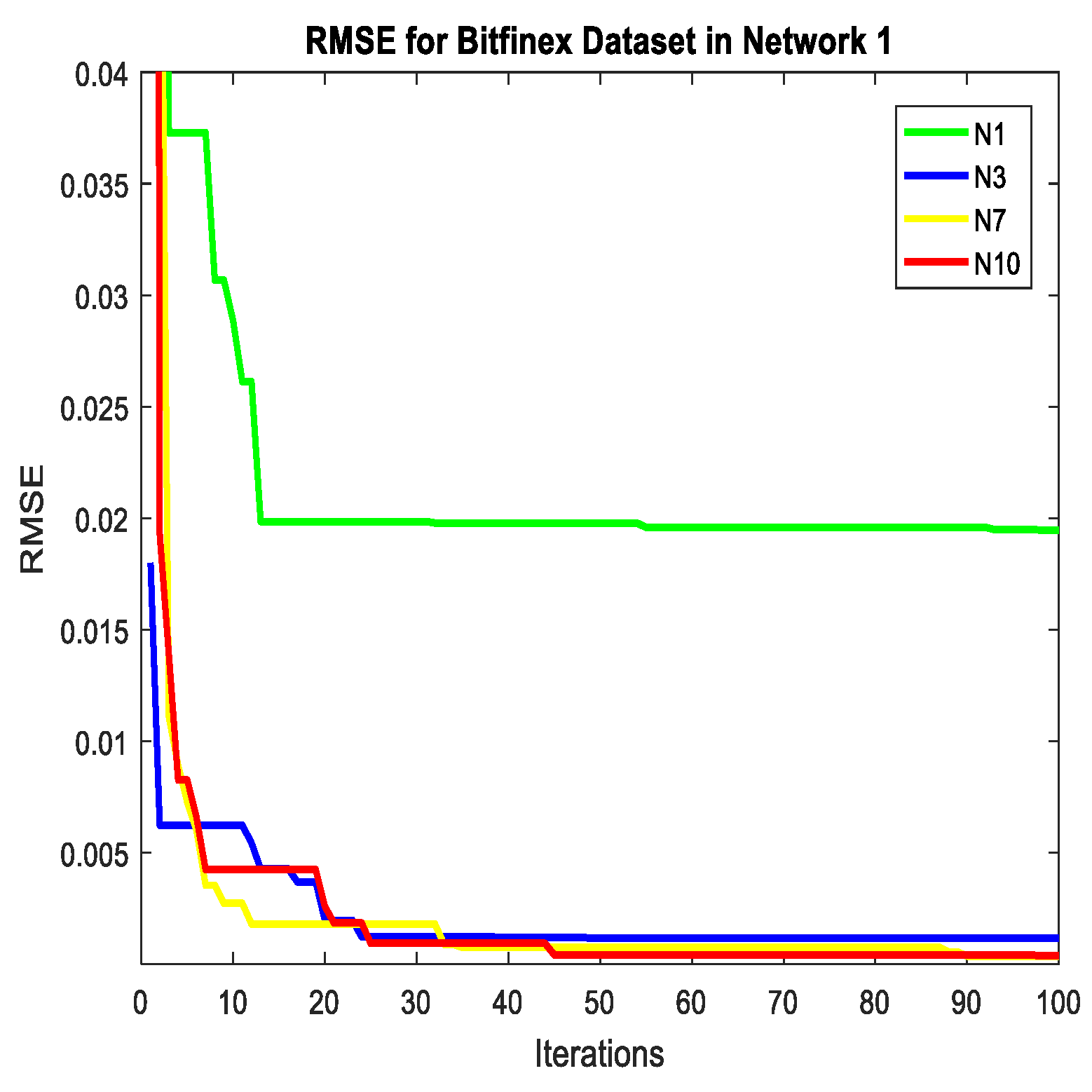

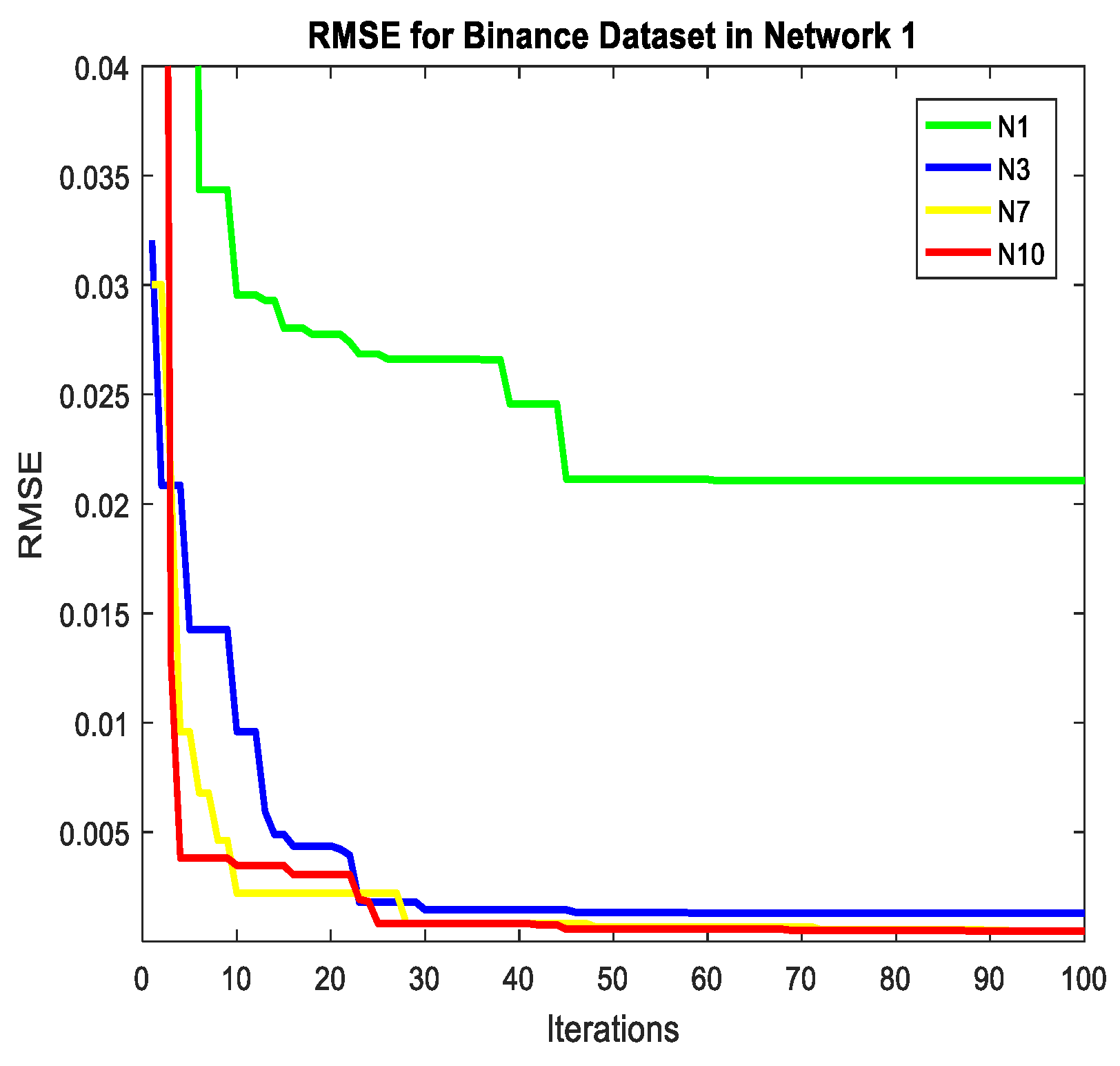

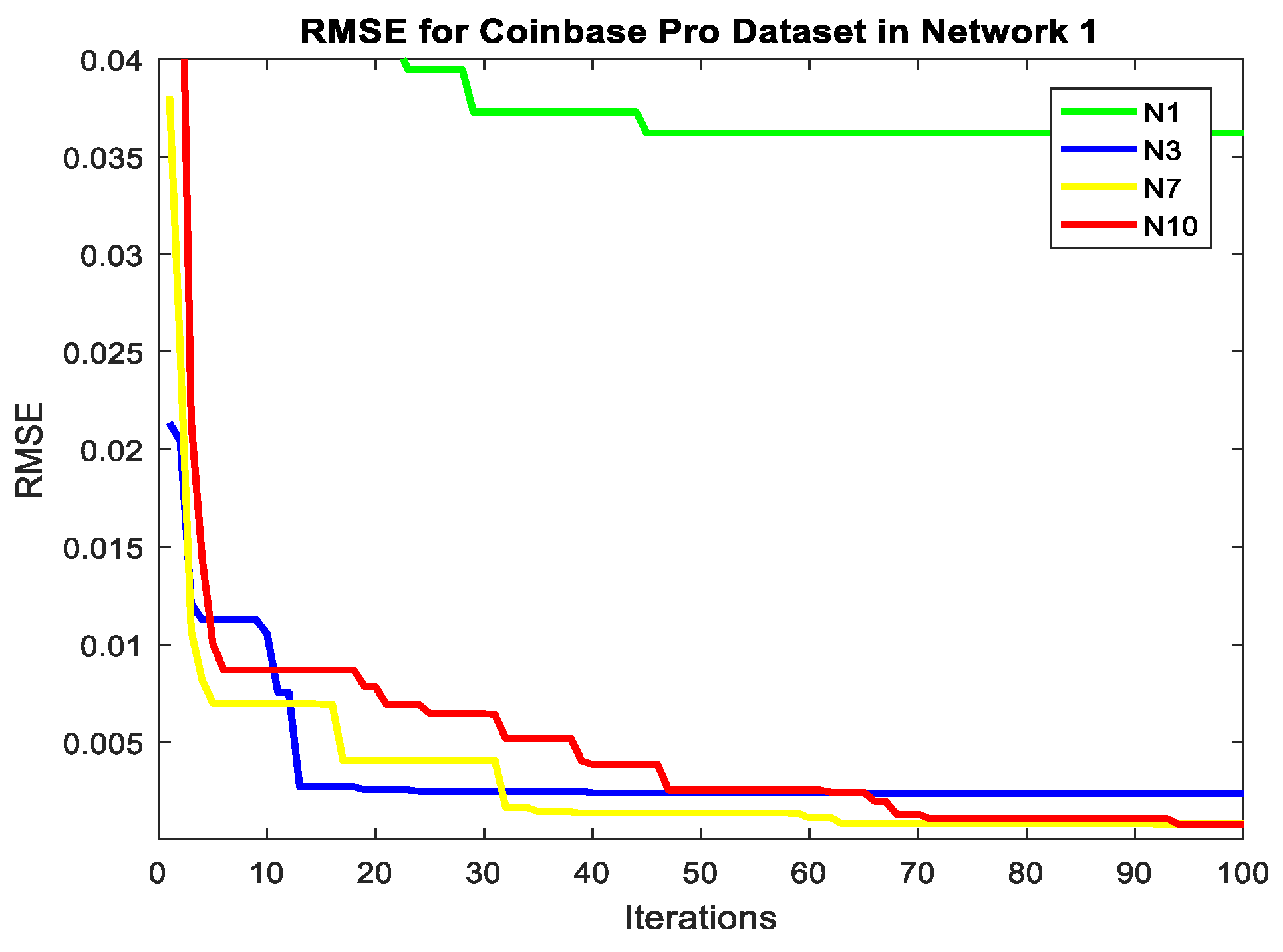

The convergence RMSE plot against the number of iterations for the training of the LMCMA model using N1, N3, N7, and the proposed N10 dataset for each of the three datasets is shown in Figure 9, Figure 10 and Figure 11.

The convergence plots in Figure 9, Figure 10 and Figure 11 clearly show that the proposed dual normalization minimizes the RMSE faster in comparison to the other normalizations under study. This also validates the second hypothesis of this study.

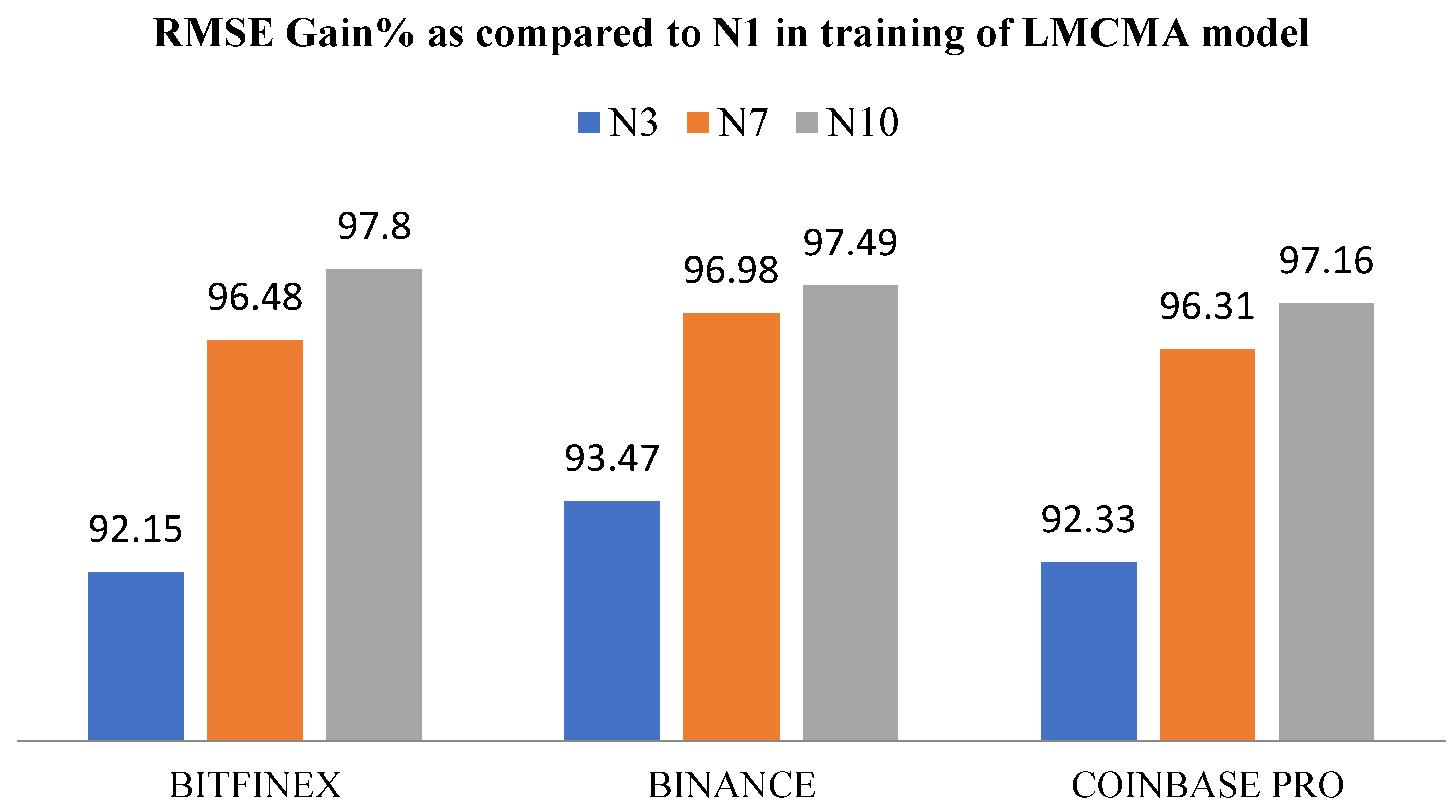

The RMSE gain percentage of the proposed normalization in comparison to the original min–max normalization used in the training of the LMCMA model is presented in Figure 12.

Figure 12 shows that the proposed dual normalization (N10) gives 97.8%, 97.49%, and 97.16% RMSE gain over the original min–max normalization (N1) for the Bitfinex, Binance, and Coinbase Pro datasets, respectively. It also shows that N10 predicts better than the basic N3 and N7 normalizations used to generate the N10 technique.
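This section does not spell out how the RMSE gain percentage is computed. A common reading, assumed here, is the relative reduction in RMSE with respect to the baseline normalization. The short sketch below implements that assumed definition; the function name and the sample RMSE values are hypothetical and are not results from the study.

```python
def rmse_gain_percent(rmse_baseline, rmse_proposed):
    """Relative RMSE reduction of the proposed scaler over the baseline,
    in percent (assumed definition; the paper's formula may differ)."""
    if rmse_baseline <= 0:
        raise ValueError("baseline RMSE must be positive")
    return 100.0 * (rmse_baseline - rmse_proposed) / rmse_baseline


# Hypothetical RMSE values, for illustration only.
gain = rmse_gain_percent(200.0, 50.0)
```

Under this definition, a gain close to 100% means the proposed normalization removes almost all of the baseline error, and a negative value would mean it performs worse.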

The performance of the LMCMA model using the proposed dual normalization (L10) was further compared with two other models. The econometric Linear Regression (LR) model and the Support Vector Regression (SVR) model were used to predict the closing Bitcoin prices on the three datasets under study. The error measures for test data are presented in Table 20.

As observed in Table 20, the L10 model performs better than the LR and the SVR model in terms of all the error measures. The RMSE of each of the three models for the three datasets under study is shown in Figure 13.

As observed from Figure 13, the LMCMA model using the proposed dual normalization (N10) outperformed the LR and SVR models.

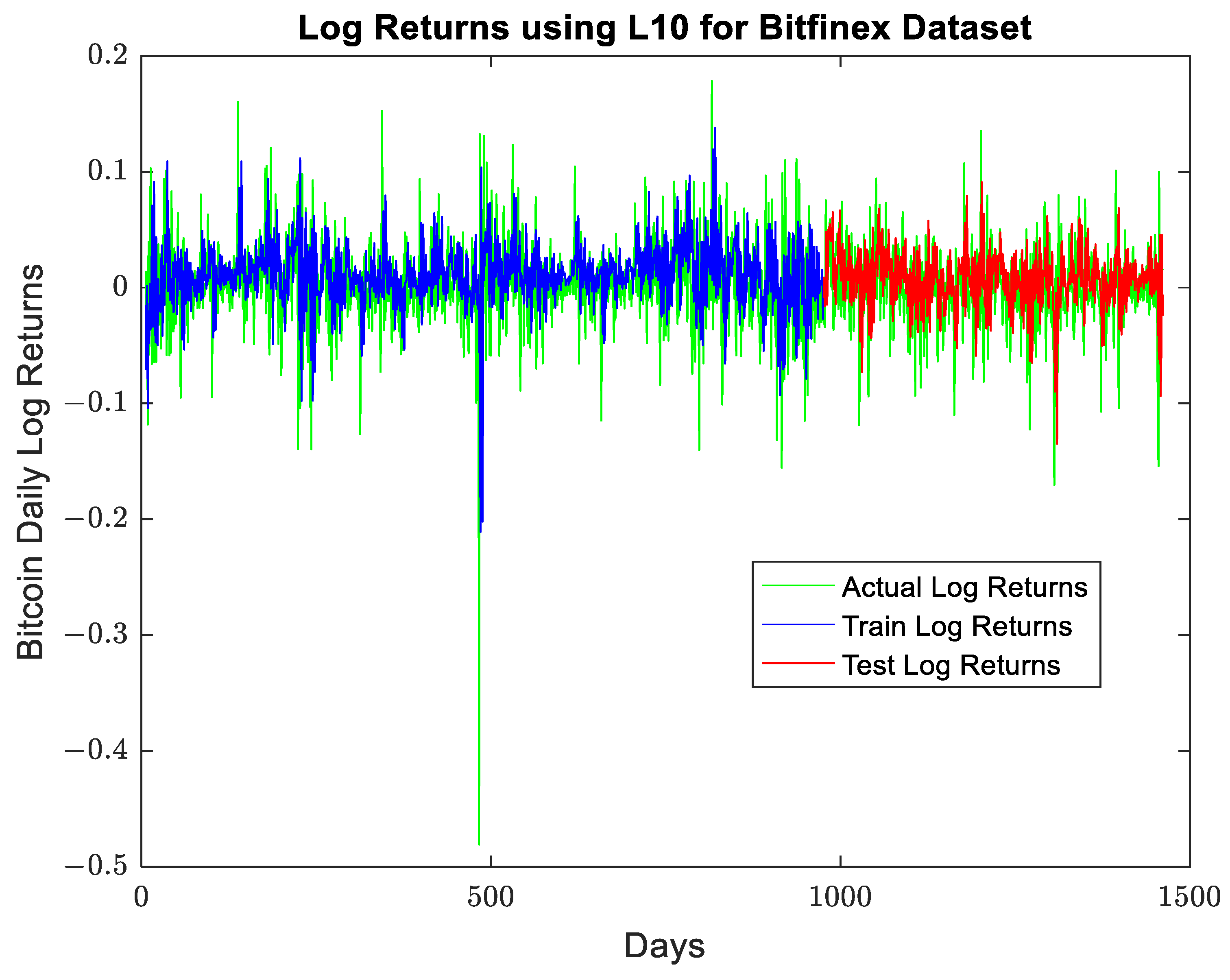

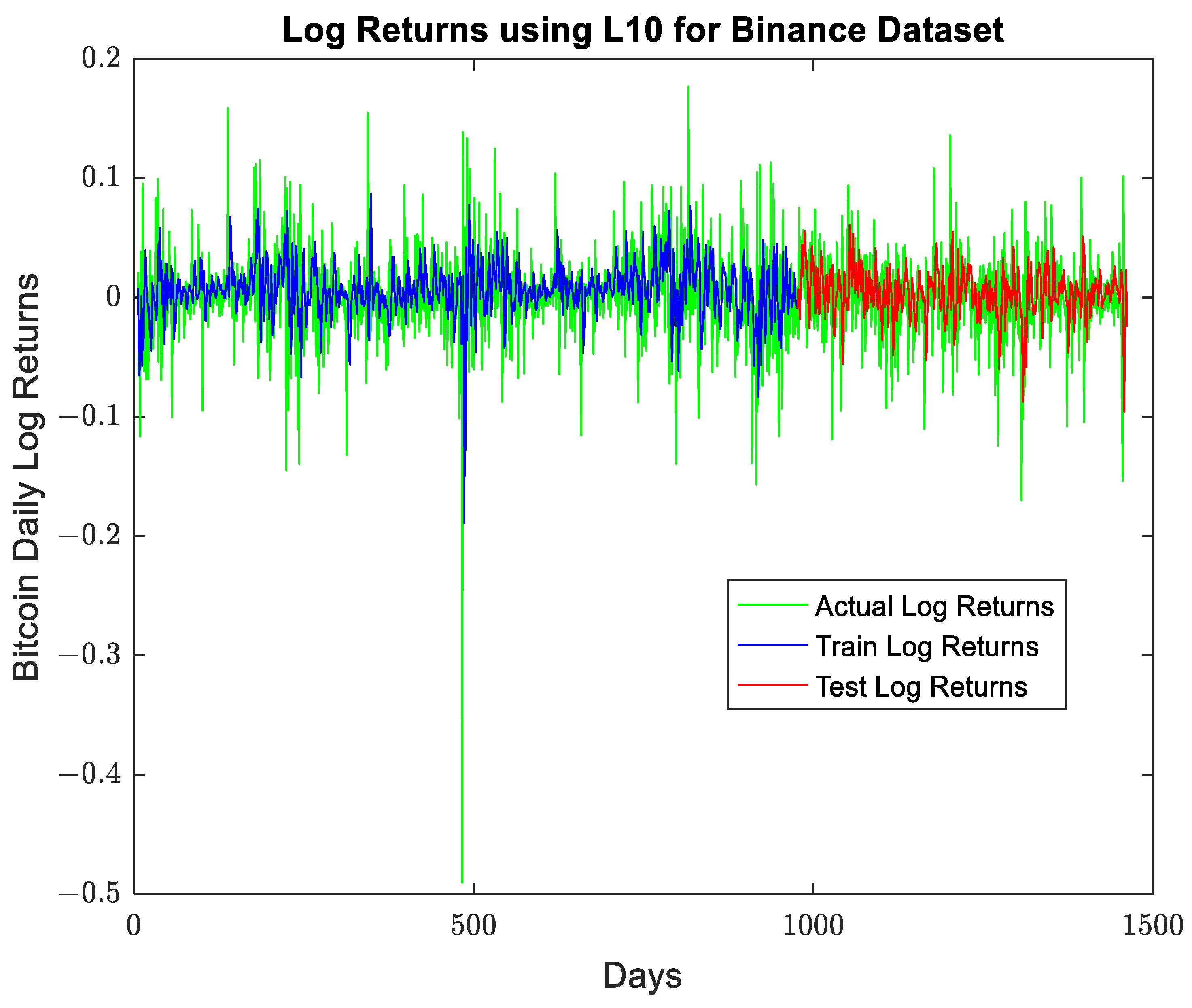

Bitcoin closing prices are highly volatile, and the distribution of log returns is closer to normal than that of raw prices. This study therefore further analyzed the performance of the proposed dual normalization on Bitcoin log returns for the three datasets under study. The error measures for each dataset are presented in Table 21.
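As a reminder of what is being predicted here, the daily log return is the natural logarithm of the ratio of consecutive closing prices, r_t = ln(P_t / P_(t-1)). The minimal sketch below computes log returns and rebuilds prices from them. The function names and sample prices are illustrative assumptions.

```python
import math


def log_returns(prices):
    """Daily log returns r_t = ln(P_t / P_(t-1)) for consecutive closes."""
    if any(p <= 0 for p in prices):
        raise ValueError("prices must be positive")
    return [math.log(curr / prev) for prev, curr in zip(prices, prices[1:])]


def prices_from_log_returns(first_price, returns):
    """Rebuild the price path from a starting price and its log returns."""
    prices = [first_price]
    for r in returns:
        prices.append(prices[-1] * math.exp(r))
    return prices


# Hypothetical closing prices, for illustration only.
closes = [100.0, 110.0, 99.0]
returns = log_returns(closes)
rebuilt = prices_from_log_returns(closes[0], returns)
```

Log returns are additive over time, so the sum of the daily values equals the log return over the whole period.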

As indicated by Table 21, the LMCMA model using the new normalization technique gives an RMSE of 0.0051, 0.0053, and 0.0052 for the Bitfinex, Binance, and Coinbase Pro datasets, respectively. In addition, the model performs well in all three datasets. The actual Bitcoin log returns plotted against the predicted train and test log returns for all the three datasets are shown in Figure 14, Figure 15 and Figure 16.

6. Discussion

Recently, Bitcoin has been regarded as a safe-haven asset by some investors. However, the dynamic and volatile behavior of the Bitcoin dataset is the biggest challenge to the growth of the Bitcoin market. A Bitcoin predictor model with sufficient stability can help attract more investors and stakeholders to the digital market. The emergence of Bitcoin Cash has already spurred the digitization of companies [60]. Many research activities are involved in analyzing the Bitcoin dataset to suggest a better model of regression or classification.

In this study, the Bitcoin prices of three different markets were normalized using different normalization types and were analyzed in the LMCMA model to obtain the top normalization for pricing. A dual normalization was proposed using the top two normalizations during the training of the LMCMA model. The stability of the normalization technique proposed here is validated by testing its efficiency in a different test dataset. Additionally, the top performers were evaluated in three other FLANN models. As the performance evaluation of these 16 models is not decisive, this study utilized the TOPSIS-based performance measurement with 15 errors. The proposed normalization achieved the first rank in each of the datasets.

As the datasets were divided into training and testing datasets which have no common data between them, the efficient prediction of the proposed normalization during testing and training of the FLANN models suggests that this normalization is stable. It can be seen in Table 16, Table 17 and Table 18 that the proposed normalization (N10) performs best in each network separately for all the three datasets.

In the Bitfinex dataset, Legendre-based models L1, L3, L7, and L10 were ranked 13, 12, 7, and 1, respectively. Similarly, Chebyshev models C1, C3, C7, and C10 were ranked 14, 9, 4, and 2, respectively. Laguerre models La1, La3, La7, and La10 were ranked 16, 11, 10, and 8, respectively. Trigonometric models T1, T3, T7, and T10 were ranked 15, 6, 5, and 3, respectively. This indicates that N10 outperformed the other normalization techniques in each of the networks. A similar inference holds for the Binance and Coinbase Pro datasets. This also validates the stability of the proposed normalization.

As inferred from Table 19, although N10 is the best normalization in each network, the overall best prediction model differs across datasets. The network architecture therefore also plays a vital role in the modeling of predictors.

The output plots for the first ranked model in each dataset clearly show the efficient mapping of dual normalization in Bitcoin price prediction. Furthermore, the RMSE gain percent of N10 during training is 97.8%, 97.49%, and 97.16% for Bitfinex, Binance, and Coinbase Pro datasets, respectively. The RMSE gain percentage for testing is 81.37%, 98.13%, and 98.73% for Bitfinex, Binance, and Coinbase Pro datasets, respectively.

The novelty of the proposed dual normalization is compared with some hybrid normalizations available in the literature, as shown in Table 22. Table 22 suggests that our proposed dual normalization is a novel and efficient contribution in the field of Bitcoin price prediction. It is more reliable, as it is evaluated using 15 error metrics. This normalization is also stable, as the data can be denormalized to actual values for better mapping.

This study also compared the linear regression econometric model with the proposed model. The nonlinear regression SVR is also analyzed and compared to the L10 model. The results indicate that the proposed model is efficient for Bitcoin price prediction. Similar results were also observed in predicting the Bitcoin daily log returns. The highly volatile feature of Bitcoin price is also mapped efficiently by the predictor model L10.

7. Conclusions

Recently, Bitcoin has been dominating the crypto market. This paper proposes a new dual normalization technique to efficiently predict Bitcoin closing prices by handling the huge variations in the dataset. This is the first paper to study the impact of data scaling on Bitcoin prices. To validate the proposed normalization, it was compared with nine different normalizations applied to three different datasets for modeling four different FLANNs. Furthermore, the predicted and actual outputs were plotted. The mapping of outputs visualizes the efficiency of the proposed method. To further validate the dataset, distribution plots are used. The use of this normalization enhances the prediction capability of the LMCMA model by giving an RMSE gain of more than 97% in training and more than 81% in testing over min–max normalization in the Bitfinex, Binance, and Coinbase Pro datasets.

This research can help many investors and stakeholders in the crypto-market to make appropriate decisions on Bitcoin pricing. In addition, the proposed normalization indicates that instead of having a fixed normalization for research, different normalizations can be analyzed to select the best normalization for a particular network. This can give research directions to any area of research which requires normalization of its input.

During COVID-19, the huge rise in Bitcoin prices prompted many investors to invest in the crypto-market. However, after the pandemic, the decline in Bitcoin prices left many investors in dismay. The Bitcoin price is highly volatile and is in its initial phase. So, many econometric models need to be evaluated along with the FLANN-based model, which provides higher accuracy. However, this study reveals that an appropriate normalization can help in better scaling of such huge volatility in Bitcoin prices. This in turn can help in positive decisions with profitable investment in the crypto-market.

Although this study presented a novel idea to analyze normalization before any regression or classification, it has a few shortcomings which can be addressed in the future. The new normalization has only been tested on FLANN models, a linear regression model, and a nonlinear SVR model. It can be further tested on other networks and econometric models. Additionally, this normalization can be tested on several other datasets to explore its capability in different areas of research. The models can be fine-tuned further by using several other machine-learning algorithms. A close look at the normalized data analysis points out that the range of transformation may play a vital role in the efficiency of a model. This needs to be further analyzed. This model analyzes the prediction of the closing prices, which is not sufficient to address the high volatility. In addition, the log returns prediction needs to be analyzed for other networks for a better prediction. The proposed normalization can also be used to normalize technical indicators and combine them with normalization types. As Bitcoin is highly volatile, its price movement prediction needs to be explored. The effect of other preprocessing choices such as window size and the ratio of splitting the dataset can be analyzed in the future. Furthermore, this research can be extended by designing an econometric model using various technical, social, and economic indicators which may influence the Bitcoin price volatility.