Abstract

Decomposition-based many-objective evolutionary algorithms (D-MaOEAs) excel at maintaining population diversity with respect to predefined reference vectors or points. However, studies indicate that the performance of a D-MaOEA strongly depends on how closely the shape of the reference vectors (points) matches that of the Pareto front (PF) of the many-objective optimization problem (MaOP), i.e., the set of Pareto-optimal objective vectors that represents the trade-offs among the objectives. In practice, one cannot expect MaOPs to have PFs whose shapes are known in advance. Consequently, the inevitable mismatch produces many inactive subspaces, which makes maintaining diversity very difficult. To address this issue, we propose a two-state method that judges the decomposition status according to the number of inactive reference vectors. Then, two novel reference vector adjustment strategies, which form part of the environmental selection approach, are tailored to the two states to delete inactive reference vectors and add new active ones, so that the reference vectors match the PF of the optimization problem as closely as possible. Based on these strategies and an efficient convergence performance indicator, an active reference vector-based two-state dynamic decomposition-based MaOEA, referred to as ART-DMaOEA, is developed in this paper. Extensive experiments comparing ART-DMaOEA with five state-of-the-art MaOEAs on MaF1–MaF9 and WFG1–WFG9 show that ART-DMaOEA has the most competitive overall performance.

MSC:

90C27

1. Introduction

In real-world scenarios faced by decision-makers in industrial applications, e.g., induction motor design [1], cloud computing [2,3], multiple agile earth observation satellites [4], and deep Q-learning [5], many problems involve two or more conflicting objectives that must be optimized simultaneously. Such problems are referred to as multi-objective optimization problems (MOPs). A classical MOP can be formulated as follows:

$$\min_{\mathbf{x} \in \Omega} \; F(\mathbf{x}) = \left(f_1(\mathbf{x}), f_2(\mathbf{x}), \ldots, f_M(\mathbf{x})\right)^{T},$$

where $\Omega$ stands for the decision space, $\mathbf{x}$ is a decision vector, $f_i(\mathbf{x})$ is the value of the $i$th objective, $M$ is the number of objectives of the MOP, and $F: \Omega \rightarrow \mathbb{R}^{M}$ can be considered a mapping from the decision space to the objective space. If an MOP has more than three objectives, i.e., four or more, it is usually referred to as a many-objective optimization problem (MaOP).

Due to the conflicting nature of the objectives of MOPs, progress on one objective often means deterioration of one or more other objectives, and there rarely exists a single solution that is optimal with respect to all objectives. Therefore, a set of Pareto-optimal solutions, representing trade-offs among all the objectives, is sought instead. For two solutions $\mathbf{x}_1$ and $\mathbf{x}_2$, if $\mathbf{x}_1$ is no worse than $\mathbf{x}_2$ on every objective and strictly better on at least one, we say that $\mathbf{x}_1$ dominates $\mathbf{x}_2$. A solution is Pareto-optimal if it is not dominated by any other solution. For an MOP, the set of all Pareto-optimal decision vectors is referred to as the Pareto set (PS), and its image in the objective space is known as the Pareto front (PF). The main task of an algorithm used to solve an MOP is to obtain an approximation that is as close as possible to the true PF.
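The dominance relation for minimization can be made concrete with a short Python sketch. The function names `dominates` and `nondominated` are illustrative only and do not come from the paper; objective vectors are plain tuples of floats.

```python
from typing import List, Sequence


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """True if objective vector a Pareto-dominates b (all objectives minimized)."""
    no_worse = all(ai <= bi for ai, bi in zip(a, b))         # no worse everywhere
    strictly_better = any(ai < bi for ai, bi in zip(a, b))   # better somewhere
    return no_worse and strictly_better


def nondominated(points: List[Sequence[float]]) -> List[Sequence[float]]:
    """Keep the points that no other point in the list dominates."""
    # dominates(p, p) is always False, so comparing p with itself is harmless
    return [p for p in points if not any(dominates(q, p) for q in points)]
```

For example, `nondominated([(1, 4), (2, 2), (3, 3), (4, 1)])` keeps `(1, 4)`, `(2, 2)`, and `(4, 1)`, because `(3, 3)` is dominated by `(2, 2)`.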

Multi-objective evolutionary algorithms (MOEAs), derived from traditional single-objective evolutionary algorithms (EAs), have attracted much attention owing to their population-based nature. In recent years, a large number of MOEAs have been developed and improved, e.g., NSGA-II [6] and MOEA/D [7]. Furthermore, research into MaOPs, a special class of MOPs, has boomed, and a variety of many-objective evolutionary algorithms (MaOEAs) have been tailored to deal with MaOPs [8,9,10].

The existing MaOEAs can be roughly divided into three categories, namely, dominance-based, decomposition-based, and indicator-based. Dominance-based MaOEAs, for instance, NSGA-III [11] and RPD-NSGA-II [12], first divide solutions into different nondomination levels according to the dominance relation or one of its variants. Decomposition-based MaOEAs, such as RVEA [13] and MOEA/DD [14], usually transform an MaOP into several sub-MaOPs or a set of single-objective optimization problems, then solve them in a cooperative manner. In indicator-based MaOEAs, such as those based on IGD [15] and HV [16], each solution is assigned a fitness value computed from one or more performance indicators.

Among the above three categories, decomposition-based algorithms have a natural advantage in maintaining population diversity with respect to predefined reference vectors or points. An important branch of decomposition-based algorithms divides an MaOP into a series of subproblems by partitioning the objective space into a set of subspaces using predefined reference vectors, for instance, MOEA/D-M2M [17] and RVEA [13]. These MaOEAs show outstanding overall performance on a variety of MaOPs and have attracted much attention from researchers; however, a research gap remains in their development.

Figure 1 illustrates the definitions of active and inactive subspaces. In Figure 1, the reference vectors generate ten subspaces, and the blue points denote candidate solutions. Four of these subspaces contain solutions: one contains a single solution, and the other three contain two solutions each. These subspaces are considered active subspaces, and their corresponding reference vectors are active reference vectors. By contrast, the remaining subspaces, which contain no solutions, are inactive. Inactive subspaces make no contribution to population diversity and can even be considered a waste of computational resources.

Figure 1.

An example of active and inactive subspaces where the blue asterisks denote candidate solutions.

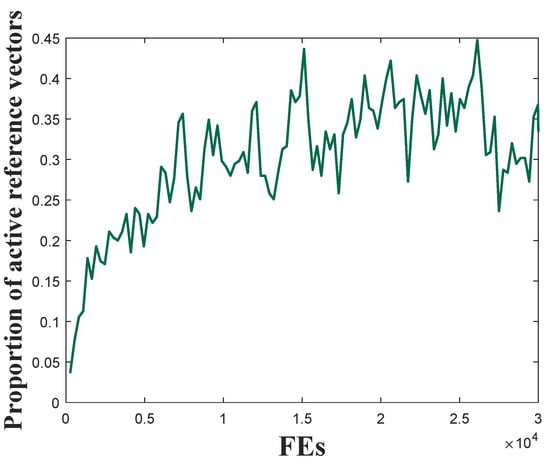

In [18], Ishibuchi suggests that the performance of decomposition-based MaOEAs strongly depends on the PF shapes of the candidate MaOPs. Generally, if the distribution of the weight vectors in a weight vector-based MaOEA closely matches the shape of the PF of the candidate MaOP, the MaOEA tends to perform well on that MaOP. Nevertheless, predefined reference vectors cannot closely match the PFs of all MaOPs, as the candidate MaOPs may come from different real-world applications. When the predefined reference vectors and the PF shape of the candidate problem differ greatly, an immediate result is that more subspaces become inactive. In addition, as the number of objectives grows, the objective space expands sharply and each subspace becomes so large that a single subspace of an MaOP may be larger than the entire objective space of an MOP. Consequently, selecting solutions within a subspace becomes difficult when high diversity is expected, and reference vectors are more likely to be inactive in MaOPs. Therefore, solving MaOPs with differently shaped PFs using a decomposition-based MaOEA with a fixed set of predefined reference vectors can be extremely difficult. Figure 2 shows how the proportion of active reference vectors varies during the optimization process of RVEAa [19] on the 10-objective MaF1. As can be seen in Figure 2, fewer than 45% of the reference vectors are active during the process, which represents a large waste of resources. Generally, more active reference vectors indicate that the region where the obtained population lies (a good approximation of the true PF) is decomposed more finely, so better population diversity and convergence can be expected. From this perspective, Figure 2 indicates that the overall performance of RVEAa on this problem is likely to be unsatisfactory.

Figure 2.

Variation of the proportion of active reference vectors during the optimization process of RVEAa on a ten-objective MaF1.

Decomposition-based MaOEAs provide a variety of ideas and directions for solving MaOPs; however, few works have paid attention to the potential of active and inactive reference vectors for maintaining diversity and handling MaOPs with irregular PFs. Motivated by this issue, and based on the existence of active and inactive reference vectors, a two-state scheme is proposed in this paper that divides the optimization process into two states according to the proportion of active reference vectors. The two states reflect whether the shape of the predefined reference vectors is similar to or different from the obtained approximation of the true PF of the candidate MaOP, and each state calls for a different adjustment method. To determine which state the current optimization process is in, a judgment is made in each generation on the basis of the predefined reference vectors and the obtained population. Then, two strategies are tailored to the two states, with the aim of generating more active reference vectors in the region where the PF is located. Based on these ideas, an active reference vector-based two-state decomposition-based MaOEA is proposed to improve population diversity and convergence on MaOPs with PFs of various shapes.

Contributions: the key contributions of this paper are as follows:

- (1) Two states of the optimization process are defined according to the decomposition of the obtained population, i.e., the number of active reference vectors, to clearly identify how well the MaOEA used fits the optimization problem.

- (2) Two strategies are tailored to the two optimization states to better divide the region where the true PF of the MaOP lies, thereby applying diversity pressure more effectively and avoiding wasted computational resources.

- (3) An active reference vector-based two-state decomposition-based MaOEA is proposed to improve population diversity on MaOPs with PFs of various shapes.

The rest of this paper is organized as follows. Section 2 provides a brief review of MaOEAs. Section 3 details the specific steps of the proposed ART-DMaOEA. Section 4 describes the extensive experiments and comparisons we carried out. Section 5 presents our conclusions and discusses possible future work.

2. Related Studies

During the past two decades, MOEAs and MaOEAs have boomed, and a variety of algorithms have been developed and improved [20,21,22,23,24]. In MOEAs and MaOEAs, the environmental selection strategy plays a key role throughout the optimization process, and researchers tend to divide algorithms into four categories according to the environmental selection strategy they use [8,25,26]: (1) dominance-based; (2) decomposition-based; (3) indicator-based; and (4) other algorithms that fall outside these three categories.

The first category is mainly based on the Pareto dominance relation. These algorithms usually divide the candidate population into different dominance levels according to the dominance relations among individuals, then sort the individuals in the last level using a second criterion. NSGA-II [6] is a typical dominance-based algorithm that uses non-dominated sorting and the crowding distance to balance convergence and diversity. In 2014, Deb et al. [11] suggested an improved version of NSGA-II called NSGA-III, in which a set of reference points replaces the crowding distance used in NSGA-II; this approach has shown outstanding performance on MaOPs. SPEA2 [27] adopts a fine-grained fitness assignment strategy together with a greedy method to maintain the diversity of the obtained population. Laumanns et al. [28] developed new archiving strategies based on the concept of ε-dominance, leading to MOEAs with the desired convergence and distribution properties. Zou et al. [29] proposed a new definition of optimality, namely, L-optimality, which takes into account the number of improved objectives while also considering the values of the improved objective functions, provided that all objectives have the same importance. In Ikeda et al. [30], an α-domination strategy was proposed to relax the dominance relation using a weak trade-off among objectives. Yuan et al. [31] defined a θ-dominance relation on the basis of a reference vector-based objective space decomposition strategy and the PBI function [7]. He et al. [32] defined a fuzzy Pareto dominance relation using fuzzy logic and incorporated it into NSGA-II and SPEA2 [27]. In Qiu et al. [33], a fractional dominance relation was developed that considers the number of objectives on which one individual performs better than another, in order to impose sufficient selection pressure.
In GrEA [34], the two concepts of grid dominance and grid difference were introduced to determine the mutual relationships of individuals in a grid environment. Tian et al. [35] proposed a new dominance relation called SDR, in which an adaptive niching technique was developed based on the angles between the candidate solutions and only the best converged candidate solution in each niche was identified as non-dominated.

Decomposition-based MOEAs and MaOEAs tend to decompose an MOP or MaOP into several subproblems and then solve them simultaneously in a collaborative manner. Since Zhang et al. [7] first proposed MOEA/D, this branch of MOEAs has grown rapidly, and researchers have developed many variants, adaptations, and hybridizations. Existing decomposition-based algorithms can be divided into two main categories: (1) those that divide the original MOP or MaOP into several single-objective problems (SOPs), e.g., MOEA/D [7,36]; and (2) those that divide the original MOP or MaOP into a group of subproblems, each of which is itself an MOP, e.g., MOEA/D-M2M [17] and RVEA [13]. Based on these two core ideas, a number of MOEAs and MaOEAs have been developed. Wang et al. [37] defined a global replacement scheme that assigns a new solution to its most suitable subproblems, which is critical for ensuring population diversity and convergence; in addition, the authors developed an approach for dynamically adjusting the size of the replacement neighborhood. Li et al. [38] used both differential evolution (DE) and covariance matrix adaptation in a decomposition-based MOEA and clustered the single-objective optimization problems into several groups. Bao et al. [39] proposed an adaptive decomposition-based evolutionary algorithm (ADEA) for both multi- and many-objective optimization. In ADEA, the candidate solutions themselves are used as reference vectors (RVs), meaning that the RVs can automatically adapt to the shape of the PF. In Zhao et al. [40], a method was developed to update weight vectors based on the population distribution; it simulates and modifies the value function of reinforcement learning to make the updates more rational. MOEA/D-M2M [17] decomposes an MOP into a set of simple MOPs through an objective space decomposition strategy. Cheng et al. [13] added a scalarization approach, termed the angle-penalized distance, to MOEA/D-M2M and proposed a reference vector adaptation strategy to dynamically adjust the distribution of the reference vectors according to the scales of the objective functions. When algorithms of the second category are applied to an MaOP, many subspaces tend to be inactive, i.e., they contain no individuals, so the corresponding reference vectors or points are wasted. To address this shortcoming, Cheng et al. [19] proposed an improved version of RVEA, called RVEAa, which randomly deletes an inactive reference vector and adds a new reference vector. The diversity-maintaining strategy of FDEA [33] can be seen as an adaptation of RVEA in which solutions are selected using a one-by-one method instead of the one-off method used in RVEA.

Indicator-based MOEAs and MaOEAs often rank solutions using low-dimensional indicators that reflect overall convergence and diversity performance. For instance, Zitzler et al. [41] proposed a general indicator-based evolutionary algorithm called IBEA. Emmerich et al. [42] devised a steady-state algorithm that combines non-dominated sorting with a selection operator based on the hypervolume measure. Pamulapati et al. [43] proposed an indicator called $I_{SDE}^{+}$, which combines the sum of objectives with shift-based density estimation; this indicator proved highly beneficial in promoting both convergence and diversity. Tian et al. [44] proposed an MOEA based on an enhanced inverted generational distance indicator and suggested an adaptation method for adjusting a set of reference points. In Dong et al. [45], a two-stage constrained multi-objective evolutionary algorithm (CMOEA) was developed with different emphases on three indicators. Li et al. [46] proposed an enhanced indicator-based many-objective evolutionary algorithm with adaptive reference points, in which the dominance relation and the enhanced IGD-NS indicator are used as the first selection criterion.

A variety of MOEAs and MaOEAs do not fall into the above three categories. Qiu et al. [47] provided an ensemble framework for MaOPs that integrates two or more solution-sorting methods; each method is considered a voter, and the voters jointly decide which solutions survive to the next generation. Chen et al. [48] defined prominent solutions using the hyperplane formed by their neighboring solutions. Zhang et al. [49] tailored a decision variable clustering method to tackle large-scale MaOPs, dividing the decision variables into two types according to whether they relate to convergence or to diversity performance. As an alternative to ranking solutions, Yuan et al. [50] used a ratio-based indicator with an infinite norm to find promising regions in the objective space.

The ART-DMaOEA proposed in this paper belongs to the category of decomposition-based MaOEAs. In contrast with the strategies above, our algorithm first divides the optimization process into two states according to the decomposition status, and then applies a separately tailored strategy in each state to adaptively adjust the active reference vectors. In this way, the region where the PF lies can be divided more evenly.

3. The Proposed ART-DMaOEA

3.1. The Objective Space Decomposition Strategy

MaOEAs based on an objective space decomposition strategy are an important branch of decomposition-based MaOEAs. In this paper, the objective space decomposition strategy proposed by Liu et al. [17] is adopted to divide the whole objective space into a set of subspaces using a set of predefined reference vectors.

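In the objective space decomposition strategy, a set of uniformly distributed reference vectors, $V = \{\mathbf{v}_1, \mathbf{v}_2, \ldots, \mathbf{v}_N\}$, is predefined to decompose an MOP or MaOP into a set of sub-MOPs or sub-MaOPs. The objective space is decomposed into $N$ subspaces, $\Omega_1, \Omega_2, \ldots, \Omega_N$, according to the acute angles between the candidate solutions and the reference vectors:

$$\Omega_i = \left\{ \mathbf{p} \in \mathbb{R}^{M}_{+} \;\middle|\; \langle \mathbf{p}, \mathbf{v}_i \rangle \le \langle \mathbf{p}, \mathbf{v}_j \rangle,\ \forall j \in \{1, \ldots, N\} \right\}, \quad i = 1, \ldots, N,$$

where $\Omega_i$ is the $i$th subspace and $\langle \mathbf{p}, \mathbf{v}_j \rangle$ denotes the acute angle between $\mathbf{p}$ and $\mathbf{v}_j$.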

According to this definition, a solution belongs to $\Omega_i$ if and only if it has the smallest angle to $\mathbf{v}_i$ among all reference vectors. During the optimization process, each solution is thus assigned to a subspace. In this paper, $\mathbf{v}_i$ is the reference vector corresponding to the solutions in $\Omega_i$.
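The association rule above can be sketched in a few lines of Python. This is an illustrative sketch rather than the authors' implementation: the helper names `acute_angle`, `associate`, and `active_ratio` are hypothetical, and objective vectors are assumed to be already translated so that every component is non-negative (which keeps all angles acute).

```python
import math
from typing import List, Sequence


def acute_angle(p: Sequence[float], v: Sequence[float]) -> float:
    """Angle in radians between objective vector p and reference vector v."""
    dot = sum(a * b for a, b in zip(p, v))
    norm = math.sqrt(sum(a * a for a in p)) * math.sqrt(sum(b * b for b in v))
    if norm == 0.0:
        return 0.0  # assumption: a zero vector counts as aligned with every v
    # clamp to [-1, 1] so rounding error cannot push acos out of its domain
    return math.acos(max(-1.0, min(1.0, dot / norm)))


def associate(points: List[Sequence[float]],
              refs: List[Sequence[float]]) -> List[List[int]]:
    """One index list per reference vector: point j joins the subspace
    whose reference vector makes the smallest angle with it."""
    subspaces: List[List[int]] = [[] for _ in refs]
    for j, p in enumerate(points):
        k = min(range(len(refs)), key=lambda i: acute_angle(p, refs[i]))
        subspaces[k].append(j)
    return subspaces


def active_ratio(subspaces: List[List[int]]) -> float:
    """Fraction of reference vectors whose subspace is non-empty."""
    return sum(1 for s in subspaces if s) / len(subspaces)
```

With `refs = [(1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]` and `pts = [(0.9, 0.1), (0.6, 0.5), (0.5, 0.6)]`, `associate(pts, refs)` returns `[[0], [1, 2], []]`, so the vector `(0.0, 1.0)` is inactive and two thirds of the reference vectors are active; a ratio of this kind is what Figure 2 tracks over the generations.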

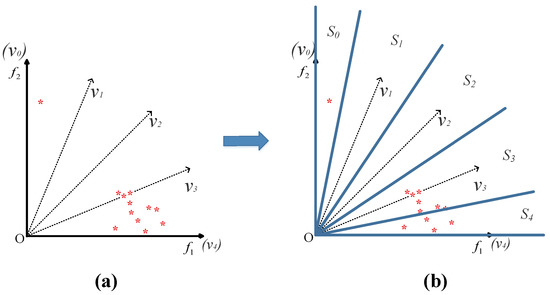

To illustrate the definition of a subspace, an example is shown in Figure 3. In the example, five reference vectors (including the two coordinate axes) divide the two-dimensional objective space into five subspaces, and the population is partitioned into subpopulations, one per subspace. Clearly, no solution lies in two of these subspaces; thus, their two reference vectors are inactive.

Figure 3.

Example showing the definition of subspaces and reference vectors. (a) Distribution of predefined reference vectors and candidate solutions where the red asterisks denote candidate solutions. (b) Subspaces generated by the reference vectors.

3.2. Generating Reference Vectors from a Given Population

In this paper, new reference vectors need to be constructed from the obtained population. In this section, we introduce the detailed procedure of this method.

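Consider a population $P$ of size $N$ whose individuals are denoted as $\mathbf{x}_1, \mathbf{x}_2, \ldots, \mathbf{x}_N$, where the objective vector of each individual is $(f_1(\mathbf{x}_j), \ldots, f_M(\mathbf{x}_j))$ and $M$ is the number of objectives. First, each objective value is normalized as

$$\bar{f}_i(\mathbf{x}_j) = \frac{f_i(\mathbf{x}_j) - f_i^{\min}}{f_i^{\max} - f_i^{\min}},$$

where $i = 1, \ldots, M$ and $j = 1, \ldots, N$, while $f_i^{\min}$ and $f_i^{\max}$ are the minimum and maximum values of the $i$th objective in $P$, respectively. The normalized objective vector $\bar{\mathbf{x}}_j = (\bar{f}_1(\mathbf{x}_j), \ldots, \bar{f}_M(\mathbf{x}_j))$ therefore lies in $[0, 1]^M$.

Then, a reference vector can be constructed from $\mathbf{x}_j$ as follows:

$$\mathbf{v} = \frac{\bar{\mathbf{x}}_j}{\sum_{i=1}^{M} \bar{f}_i(\mathbf{x}_j)}.$$

The newly generated reference vector points from the origin to $\bar{\mathbf{x}}_j$ and intersects the unit simplex $\sum_{i=1}^{M} v_i = 1$ at $\mathbf{v}$.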
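The two steps of this section can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' code: the function names are hypothetical, an objective whose range is zero is mapped to 0 (the text does not cover this case), and the reference vector is taken to be the point where the ray through the normalized vector meets the unit simplex, as described above.

```python
from typing import List, Sequence


def normalize(objs: List[Sequence[float]]) -> List[List[float]]:
    """Min-max normalize every objective over the population into [0, 1]."""
    m = len(objs[0])
    lo = [min(f[i] for f in objs) for i in range(m)]
    hi = [max(f[i] for f in objs) for i in range(m)]
    # assumption: an objective with zero range is mapped to 0.0
    return [[(f[i] - lo[i]) / (hi[i] - lo[i]) if hi[i] > lo[i] else 0.0
             for i in range(m)] for f in objs]


def reference_vector(xbar: Sequence[float]) -> List[float]:
    """Scale a normalized objective vector onto the unit simplex sum(v) = 1."""
    s = sum(xbar)
    if s == 0.0:
        # the all-zero vector (the ideal point) gives no direction
        raise ValueError("cannot build a reference vector from the zero vector")
    return [xi / s for xi in xbar]
```

For instance, normalizing `[(1.0, 5.0), (3.0, 1.0), (2.0, 3.0)]` yields `[[0.0, 1.0], [1.0, 0.0], [0.5, 0.5]]`, and `reference_vector([0.2, 0.6])` gives a vector close to `[0.25, 0.75]`, whose components sum to 1.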

3.3. The Main Framework of the ART-DMaOEA

According to the classic definition of reference vectors [11], the initial point of the reference vectors used in this paper is always the coordinate origin. To be consistent with this definition, the objective values of the individuals are translated as follows:

$$\mathbf{f}'(\mathbf{x}) = \mathbf{f}(\mathbf{x}) - \mathbf{z}^{\min},$$

where $\mathbf{x} \in P$, $\mathbf{f}(\mathbf{x})$ and $\mathbf{f}'(\mathbf{x})$ are the objective vectors of $\mathbf{x}$ before and after translation, respectively, and $\mathbf{z}^{\min} = (z_1^{\min}, \ldots, z_M^{\min})$ collects the minimal value of each objective in the population.

The role of the translation operation is twofold: (1) to guarantee that all the translated objective values are inside the first quadrant, where the extreme point of each objective function is on the corresponding coordinate axis, thereby maximizing the coverage of the reference vectors, and (2) to set the ideal point to be the origin of the coordinate system, which simplifies the formulations presented later on.
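The translation amounts to subtracting the population-wise minimum of each objective. A minimal sketch follows; the function name `translate` is hypothetical.

```python
from typing import List, Sequence


def translate(objs: List[Sequence[float]]) -> List[List[float]]:
    """Subtract z_min, the per-objective minimum over the population, so that
    the ideal point moves to the origin and every value becomes non-negative."""
    m = len(objs[0])
    z_min = [min(f[i] for f in objs) for i in range(m)]
    return [[f[i] - z_min[i] for i in range(m)] for f in objs]
```

For example, `translate([(2.0, 5.0), (4.0, 3.0)])` returns `[[0.0, 2.0], [2.0, 0.0]]`: each extreme point now lies on a coordinate axis, which is the first effect listed above.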

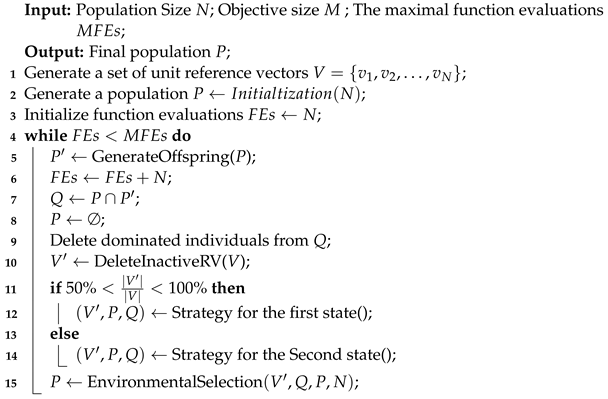

The main framework of the proposed ART-DMaOEA is listed in Algorithm 1, from which it can be seen that a classic elitism strategy is adopted. As shown in Algorithm 1, three items are first initialized: (1) the reference vectors V (line 1); (2) an initial population P with N individuals (line 2); and (3) the number of consumed function evaluations (line 3). Then, the main loop of the algorithm is executed (lines 4–15). In each iteration, the algorithm performs three main steps: (1) offspring generation (lines 5–9); (2) reference vector processing (lines 10–14); and (3) environmental selection (line 15). In lines 5–9, an offspring population is generated using simulated binary crossover and polynomial mutation. Here, Q is the combined population of P and the offspring, prepared for the subsequent selection procedure. In line 8, the parent population P is reset to the empty set, and the individuals that survive the following selection procedure are added to P. In line 10, the active reference vectors of the current generation are extracted from V; note that V itself is not changed by this procedure. In lines 11–12, if the number of active reference vectors exceeds half the size of the original V, the strategy for the first state of the optimization process is applied to the active reference vectors. This function selects individuals into P and removes them from Q; therefore, it returns both P and Q. Otherwise, i.e., if at least half of the original reference vectors in V are inactive (line 13), the strategy for the second state is applied (line 14). The strategies for the two states have the same inputs and outputs and differ only in their specific calculation process. In line 15, environmental selection is performed on the basis of the newly processed active reference vectors to maintain a sound balance between convergence and diversity.
The main contribution of this paper lies in lines 10–15, i.e., the processing of active reference vectors and the environmental selection method.

Algorithm 1: Main Framework of the proposed ART-DMaOEA

In each generation of ART-DMaOEA, the active reference vectors are selected from the original reference vectors V according to the spatial distribution of Q relative to V. The predefined V remain unchanged throughout the optimization process. In addition to processing the active reference vectors, the functions Strategy for the first state() and Strategy for the second state() act as components of the environmental selection method: after either function is performed, solutions with good diversity are absorbed into P and excluded from Q.
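The control flow of Algorithm 1 can be summarized as a Python skeleton. It is only a sketch of the loop structure described above: every callable (`make_offspring`, `find_active`, `first_state`, `second_state`, `env_select`) is a hypothetical stand-in supplied by the caller, and the state test encodes "more than half of the original reference vectors are active" as `2 * n_active > n_original`.

```python
from typing import Callable, List, Sequence


def choose_state(n_active: int, n_original: int) -> str:
    """"first" if more than half of the original vectors are active, else "second"."""
    return "first" if 2 * n_active > n_original else "second"


def main_loop(P: List, V: Sequence, max_fe: int,
              make_offspring: Callable, find_active: Callable,
              first_state: Callable, second_state: Callable,
              env_select: Callable) -> List:
    """Generational skeleton; every callable is a hypothetical stand-in."""
    fe = 0                                  # consumed function evaluations
    while fe < max_fe:
        offspring = make_offspring(P)       # e.g. SBX + polynomial mutation
        if not offspring:
            break                           # guard against a stalled loop
        fe += len(offspring)
        Q = list(P) + list(offspring)       # combined population
        P = []                              # survivors are collected below
        Va = find_active(Q, V)              # V itself is never modified
        if choose_state(len(Va), len(V)) == "first":
            P, Q, Va = first_state(P, Q, Va)
        else:
            P, Q, Va = second_state(P, Q, Va)
        P = env_select(P, Q, Va)            # fills P up to the population size
    return P
```

Passing the strategies in as callables keeps the skeleton independent of any particular operator choice; with six of ten vectors active `choose_state` reports the first state, and with exactly five of ten it reports the second.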

3.4. Strategies for the Two States

In this section, the two states of the optimization process of the proposed algorithm on MaOPs and the two tailored strategies are described in detail.

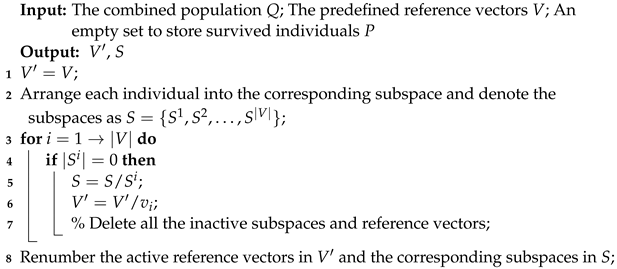

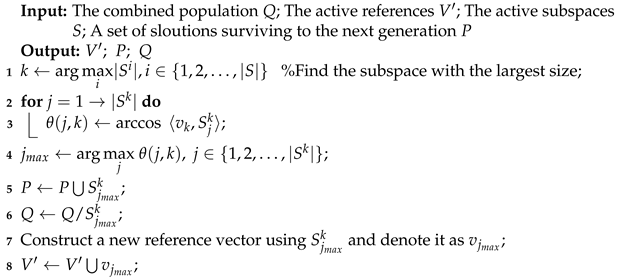

First, Algorithm 2 removes all inactive reference vectors and the corresponding inactive subspaces. As can be seen in Algorithm 2, the set of active reference vectors is initialized as V in line 1; then, each individual is associated with a reference vector in V according to the definition of a subspace given in Section 3.1.

In lines 3–7, reference vectors with no associated individual are deleted from the set of active reference vectors, and the corresponding subspaces are deleted from S. In line 8, the remaining active reference vectors and the remaining subspaces in S are renumbered consecutively. Note that Algorithm 2 does not change the original set of reference vectors V.
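The deletion step can be sketched as below. This is an illustrative reading of Algorithm 2, not the authors' code: `delete_inactive` and `_angle` are hypothetical names, objective vectors are assumed to be translated (non-negative), and renumbering is realized simply by building new, densely indexed lists, so the caller's V is never modified.

```python
import math
from typing import List, Sequence, Tuple

Vec = Sequence[float]


def _angle(p: Vec, v: Vec) -> float:
    """Angle in radians between p and v (0 if either is the zero vector)."""
    dot = sum(a * b for a, b in zip(p, v))
    norm = math.hypot(*p) * math.hypot(*v)
    return 0.0 if norm == 0.0 else math.acos(max(-1.0, min(1.0, dot / norm)))


def delete_inactive(points: List[Vec],
                    V: List[Vec]) -> Tuple[List[Vec], List[List[int]]]:
    """Keep only reference vectors that attract at least one point.
    Returns (active reference vectors, their subspaces), renumbered 0..K-1."""
    subspaces: List[List[int]] = [[] for _ in V]   # S: one subspace per vector
    for j, p in enumerate(points):
        k = min(range(len(V)), key=lambda i: _angle(p, V[i]))
        subspaces[k].append(j)
    keep = [i for i, s in enumerate(subspaces) if s]   # drop empty subspaces
    return [V[i] for i in keep], [subspaces[i] for i in keep]
```

With `V = [(1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]` and points `(0.9, 0.1)`, `(0.6, 0.5)`, `(0.5, 0.6)`, the sketch keeps the first two vectors with subspaces `[[0], [1, 2]]`, while `V` still holds all three vectors.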

In the proposed ART-DMaOEA, two optimization states are defined: (1) more than half of the original reference vectors are active and help maintain diversity in the current generation; and (2) at least half of the original reference vectors are inactive. In the first state, we can roughly judge that the difference between the shape of the predefined reference vectors and the obtained population (which can be seen as an approximation of the true PF of the MaOP) is not very large; therefore, only a slight adjustment is needed. The second state means that there is a large difference between the predefined reference vectors and the true PF of the MaOP, so a strong adjustment of the reference vectors is needed.

Algorithm 2: Delete Inactive Reference Vectors

Not every MaOP experiences both states during optimization by decomposition-based MaOEAs; whether it does depends on the difference between the shape of the true PF of the problem and the predefined reference vectors. Certain problems may remain in the first state throughout the optimization process, others may remain in the second state, and still others experience both states. The strategies developed for the two states are described in Algorithm 3 and Algorithm 4, respectively.

Algorithm 3: Strategy for the first state

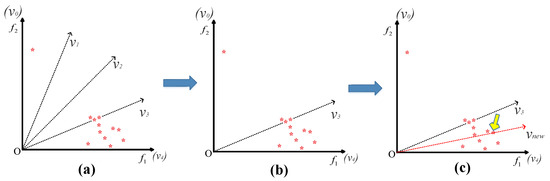

As shown in Algorithm 3, P and Q serve as both input and output; that is, both are modified by Algorithm 3, which admits a new solution (or solutions) into P and removes it from Q. In line 1, the subspace with the largest number of individuals among all active subspaces is found, and its index is denoted as k. In lines 2–3, the acute angle between each individual in the kth subspace and the corresponding reference vector is calculated. The individual with the largest acute angle in this subspace is identified in line 4. In lines 5–7, this individual is moved from Q into P, and a new reference vector is constructed from it. In line 8, the newly constructed reference vector is added to the set of active reference vectors for the subsequent environmental selection.

In Algorithm 3, the individual with the best diversity performance in the most crowded subspace is selected for the next generation and used to construct a new reference vector. The updated set of active reference vectors (line 8) tends to be better suited to the current population. Figure 4 gives an example: Figure 4a shows the distribution of the original reference vectors and the obtained population, and Figure 4b shows the deletion of inactive reference vectors. In Figure 4c, the solution pointed to by the yellow arrow is the selected individual, its subspace is the most crowded active subspace, and the newly added vector is the reference vector constructed from it. The new set of active reference vectors in Figure 4c is more evenly distributed in the region where the true PF is located, and is therefore more effective at maintaining population diversity.

Figure 4.

Example showing the strategy for the first state where the red asterisks indicate the candidate solutions. (a) Predefined reference vectors. (b) Delete inactive reference vectors. (c) Construct a new reference vector using the solution pointed to by the yellow arrow.
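One pass of the first-state strategy can be sketched as follows. This is our reading of Algorithm 3, not the authors' code: `first_state_strategy` and `_angle` are hypothetical names, Q is assumed to hold translated and normalized (non-negative, non-zero) objective vectors, and the new reference vector is built by scaling onto the unit simplex as in Section 3.2.

```python
import math
from typing import List, Sequence

Vec = Sequence[float]


def _angle(p: Vec, v: Vec) -> float:
    dot = sum(a * b for a, b in zip(p, v))
    norm = math.hypot(*p) * math.hypot(*v)
    return 0.0 if norm == 0.0 else math.acos(max(-1.0, min(1.0, dot / norm)))


def first_state_strategy(P: List[Vec], Q: List[Vec], Va: List[Vec]):
    """Move one individual from Q to P and add one reference vector."""
    subspaces: List[List[int]] = [[] for _ in Va]
    for j, q in enumerate(Q):
        k = min(range(len(Va)), key=lambda i: _angle(q, Va[i]))
        subspaces[k].append(j)
    # line 1: the most crowded active subspace
    k = max(range(len(Va)), key=lambda i: len(subspaces[i]))
    # lines 2-4: its member with the largest angle to the subspace's vector
    j_star = max(subspaces[k], key=lambda j: _angle(Q[j], Va[k]))
    x_star = Q[j_star]
    # lines 5-7: move x_star into P and build a reference vector from it
    P = P + [x_star]
    Q = Q[:j_star] + Q[j_star + 1:]
    s = sum(x_star)                 # assumption: x_star is not the zero vector
    new_ref = [xi / s for xi in x_star]
    # line 8: extend the set of active reference vectors
    return P, Q, list(Va) + [new_ref]
```

For example, with active vectors `(1.0, 0.0)` and `(1.0, 1.0)` and Q equal to `[(0.9, 0.1), (0.6, 0.5), (0.5, 0.6), (0.25, 0.75)]`, the second subspace holds three points, and `(0.25, 0.75)`, the member farthest from `(1.0, 1.0)` in angle, is moved to P and becomes the new reference vector `[0.25, 0.75]`.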

Algorithm 4: Strategy for the second state

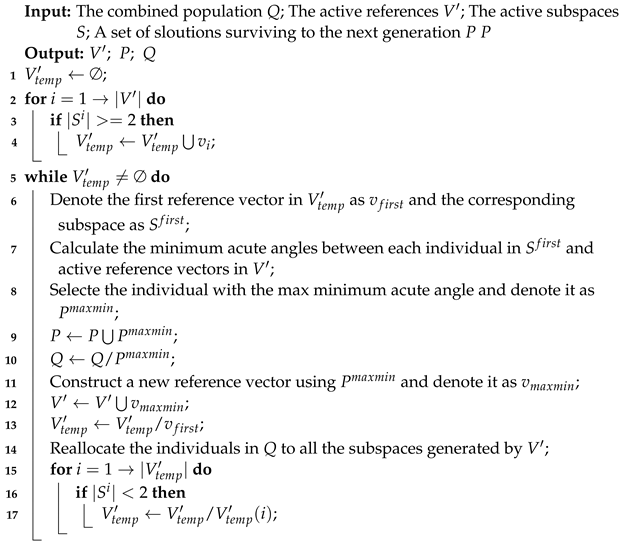

In the second state, at least half of the original reference vectors are inactive, and adding only one reference vector per generation, as in Algorithm 3, would take too long to achieve the same effect as in the first state. Therefore, a tailored strategy for the second state is developed, with the main steps shown in Algorithm 4. Algorithms 3 and 4 have the same inputs and outputs and similar goals: each constructs new reference vectors while selecting individuals to survive to the next generation. Unlike in the first state, however, more than one reference vector is constructed in the second state.

As can be seen in Algorithm 4, the subspaces with two or more individuals are collected into a candidate set (lines 1–4). Then, each subspace in the candidate set is further divided only once (lines 5–17). In line 6, the reference vectors of the candidate set and their corresponding subspaces are recorded. In line 7, the acute angle between each individual in these subspaces and each active reference vector is calculated, and the minimal acute angle of each individual is recorded. In line 8, the individual with the maximum minimal acute angle is selected. This individual is then absorbed into P (line 9) and excluded from Q (line 10). In lines 11–12, a new reference vector is constructed from the selected individual and added to the set of active reference vectors. Then, the subspace containing the selected individual is removed from the candidate set (line 13). In lines 14–17, because the set of active reference vectors has changed, certain subspaces in the candidate set may now contain fewer than two individuals; these are removed from the candidate set. Generally, constructing a new reference vector divides an original subspace, yielding a distribution of active reference vectors that better suits the MaOP being optimized. The loop (lines 5–17) terminates when all the subspaces in the candidate set have been divided by newly constructed reference vectors.

Algorithm 4 involves three closely related sets: (1) the original reference vectors V; (2) the active reference vectors; and (3) the active reference vectors whose subspaces contain two or more solutions. While Algorithm 4 runs, V remains unchanged, whereas the other two sets are updated. The set of active reference vectors is selected from V in Algorithm 2 and serves as both an input and an output of Algorithm 4, while the third set is a temporary variable of Algorithm 4 that is used to update the set of active reference vectors.

The key step of the strategy for the second state is selecting the candidate solutions from which new reference vectors are constructed, i.e., lines 6–8 in Algorithm 4. In line 7, the minimum acute angle between a solution and the existing reference vectors indicates how different the solution is from those reference vectors. The solution with the maximal minimum acute angle differs most from the existing active reference vectors and is therefore the most suitable for constructing a new reference vector. Because the set of active reference vectors changes whenever a new reference vector is added, the maximal minimum acute angle changes as well; hence, the loop (lines 5–17) adopts a one-by-one method instead of a one-off method, i.e., only one reference vector is selected in each iteration. Besides selecting the individual with the best diversity with respect to the active reference vectors, the maximum minimum acute angle method, combined with adding each newly constructed reference vector to the active set, ensures that at most one new reference vector is added between any two adjacent active reference vectors derived from V.
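The one-by-one max-min selection can be sketched in Python as below. This is a hedged reading of Algorithm 4, not the authors' code: the names are hypothetical, we assume that each iteration picks the best individual across all remaining candidate subspaces (our interpretation of lines 6–8), objective vectors are translated and non-zero, and new vectors are scaled onto the unit simplex as in Section 3.2.

```python
import math
from typing import List, Sequence

Vec = Sequence[float]


def _angle(p: Vec, v: Vec) -> float:
    dot = sum(a * b for a, b in zip(p, v))
    norm = math.hypot(*p) * math.hypot(*v)
    return 0.0 if norm == 0.0 else math.acos(max(-1.0, min(1.0, dot / norm)))


def _associate(points: List[Vec], refs: List[Vec]) -> List[List[int]]:
    subs: List[List[int]] = [[] for _ in refs]
    for j, p in enumerate(points):
        subs[min(range(len(refs)), key=lambda i: _angle(p, refs[i]))].append(j)
    return subs


def second_state_strategy(P: List[Vec], Q: List[Vec], Va: List[Vec]):
    """Add one reference vector per loop iteration via the max-min angle rule."""
    P, Q, refs = list(P), list(Q), list(Va)
    subs = _associate(Q, refs)
    # lines 1-4: candidate subspaces hold at least two individuals
    cand = {i for i, s in enumerate(subs) if len(s) >= 2}
    while cand:
        pool = [j for i in sorted(cand) for j in subs[i]]
        # lines 7-8: the individual whose smallest angle to any active vector is largest
        j_star = max(pool, key=lambda j: min(_angle(Q[j], r) for r in refs))
        k = next(i for i in sorted(cand) if j_star in subs[i])
        x_star = Q.pop(j_star)                      # lines 9-10
        P.append(x_star)
        s = sum(x_star)                             # assumption: non-zero vector
        refs.append([xi / s for xi in x_star])      # lines 11-12
        cand.discard(k)                             # line 13: divided once only
        subs = _associate(Q, refs)                  # lines 14-17: re-check sizes
        cand = {i for i in cand if len(subs[i]) >= 2}
    return P, Q, refs
```

Each pass removes one subspace from the candidate set, so the loop always terminates. Re-associating after every addition is what makes the rule one-by-one: the next maximum minimum angle is computed against the enlarged set of active vectors.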

Here, we use the example shown in Figure 5 to illustrate the main steps of the strategy described in Algorithm 4. Figure 5a shows ten predefined reference vectors, and the red points are candidate solutions. Clearly, five of these reference vectors are inactive and the other five are active. In Figure 5b, the five inactive reference vectors are deleted, leaving the five active ones, and several of these active reference vectors have subspaces containing two or more solutions. Then, following Algorithm 4, a solution in one of these subspaces is selected and used to construct a new reference vector, indicated by the yellow arrow in Figure 5b; the blue vector is the newly constructed reference vector. Figure 5c–e show the reference vectors constructed in turn from further subspaces. Figure 5f shows the final output of Algorithm 4.

Figure 5.

Example showing the steps of the strategy for the second state. (a) Predefined reference vectors and candidate solutions (the red asterisks). (b–e) Deletion of the inactive reference vectors and construction of new reference vectors from the blue asterisks indicated by the yellow arrows. (f) The final set of reference vectors.

This example illustrates the core idea of the strategy developed for the second state: if the predefined reference vectors differ substantially from the PF of an MaOP, the strategy can obtain a set of active reference vectors distributed as uniformly as possible over the region where the true PF of the problem is located.

3.5. Environmental Selection

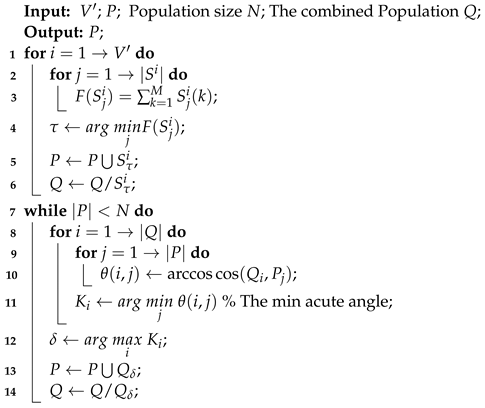

The pseudocode for the environmental selection process is detailed in Algorithm 5. The purpose of the environmental selection strategy is to use the active reference vectors to balance convergence and diversity, with particular emphasis on convergence, because the construction of new active reference vectors in Algorithm 3 or Algorithm 4 focuses mainly on diversity. For this reason, the environmental selection method first selects one individual for each subspace by considering only convergence, and then applies the maximum minimum acute angle method to maintain the diversity of population P.

As can be seen in Algorithm 5, there are four input parameters: (1) the active reference vectors; (2) the individuals surviving to the next generation, P; (3) the combined population, Q; and (4) the population size, N. Note that P is not empty, because Algorithm 3 or Algorithm 4 results in one or more individuals being absorbed into P. For each subspace (line 1), the summation of the normalized objective values of each solution in that subspace is calculated (lines 2–3), i.e., for the jth individual in the subspace, its normalized kth objective values are summed over k = 1, …, M. In lines 4–6, the individual with the smallest summation in each subspace is selected into P and removed from Q.

The summation of the normalized objective values of an individual reflects its convergence performance: the smaller the summation, the closer the individual generally lies to the ideal point, and hence the better its convergence. Lines 1–6 in Algorithm 5 therefore select the individuals with the best convergence into P without paying any attention to diversity. To compensate, the maximum minimum acute angle method used in the strategy for the second state is adopted to maintain a sound balance between convergence and diversity (lines 7–14). Because this method involves dynamic sets (P and Q), a one-by-one scheme is used instead of a one-off scheme. The loop continues until the size of P reaches N.
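A compact sketch of the two phases of Algorithm 5 follows. It is an illustration under stated assumptions rather than the authors' code: objective vectors are assumed to be already normalized and nonzero, the convergence score is the plain sum of normalized objectives, and in the second phase the acute angles are measured between each remaining candidate in Q and the individuals already in P, which is our reading of lines 7–14. The function name `environmental_selection` is hypothetical.

```python
import math

def acute_angle(a, b):
    # Angle (radians) between two nonzero vectors; clamping guards rounding.
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return math.acos(max(-1.0, min(1.0, dot / norm)))

def associate(solutions, refs):
    # Subspace index of each solution: the reference vector with the smallest angle.
    return [min(range(len(refs)), key=lambda j: acute_angle(s, refs[j]))
            for s in solutions]

def environmental_selection(P, Q, refs, N):
    # Assumes P is non-empty after phase 1, as the text guarantees for ART-DMaOEA.
    P = [list(p) for p in P]
    Q = [list(q) for q in Q]
    # Phase 1 (lines 1-6): in each subspace keep the member with the smallest sum.
    labels = associate(Q, refs)
    picked = set()
    for j in range(len(refs)):
        members = [i for i, lab in enumerate(labels) if lab == j]
        if members:
            picked.add(min(members, key=lambda i: sum(Q[i])))
    P += [Q[i] for i in sorted(picked)]
    Q = [q for i, q in enumerate(Q) if i not in picked]
    # Phase 2 (lines 7-14): one at a time, add the candidate whose smallest
    # angle to the current P is largest, until P holds N individuals.
    while len(P) < N and Q:
        best = max(range(len(Q)),
                   key=lambda i: min(acute_angle(Q[i], p) for p in P))
        P.append(Q.pop(best))
    return P
```

For example, with two reference vectors along the axes, phase 1 keeps the lowest-sum member of each subspace, and phase 2 then prefers the candidate lying in the widest angular gap left by P.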

Algorithm 5: Environmental Selection

3.6. Time Complexity Analysis

The time complexity of the proposed ART-DMaOEA lies mainly in lines 5–10 of Algorithms 1, 3, 4, and 5. In each generation, only one of Algorithms 3 and 4 is executed, and Algorithm 4 is the more expensive of the two; therefore, only the cost of Algorithm 4 needs to be calculated.

In Algorithm 1, line 5, it costs to generate a new population, where D denotes the number of the decision variables in an MaOP. Algorithm 1, line 9, takes to clean up the dominated solutions for all subspaces.

In Algorithm 2, it costs to associate each individual with a subspace and to delete inactive reference vectors.

In Algorithm 4, it costs N to obtain (lines 1–4). Then, the time complexity cost of calculating the cosines among the solutions is . Next, constructing a new reference vector costs . As a result, the time complexity of the loop (lines 6–17) is .

In Algorithm 5, it costs to calculate the summation of the objective values (lines 1–6). Then, is used to select solutions using the maximum minimum acute angle method.

To summarize, the worst-case overall computational complexity of ART-DMaOEA within one generation is .

4. Experimental Studies and Discussion

To empirically investigate the overall performance of the proposed ART-DMaOEA, we compared it with the following five representative algorithms: (1) A-NSGA-III [51]; (2) BiGE [52]; (3) MOEADDU [53]; (4) RVEAa [19]; and (5) θ-DEA [31].

The algorithms used in the comparative study were implemented in MATLAB R2020b, and the code was embedded into the evolutionary multi-objective optimization platform PlatEMO [54], which is freely available on GitHub.

4.1. Experimental Settings

4.1.1. Benchmark Test Problems

The performance of the six algorithms was compared on WFG1 to WFG9 from the WFG test suite [55] and MaF1 to MaF9 from the MaF test suite [56], each with 5, 8, 10, and 15 objectives. In this paper, a benchmark problem with a specific number of objectives is called a test instance, so there are 72 test instances in total (18 benchmarks × 4 objective numbers). These 18 benchmarks cover various properties, e.g., disconnected, multi-modal, and irregular PFs, and deceptiveness.

4.1.2. Performance Indicators

The hypervolume (HV) [16] is used as the indicator to assess the performance of the algorithms in terms of both convergence and diversity. For an MOP or MaOP, suppose that P is the output population and y is a preset reference point. The HV value of P is the volume of the region that dominates y and is dominated by points in P. For each test instance used in this section, the reference point is set to 1.5 times the upper bound of its PF; the reference point used for each test instance is the one embedded in PlatEMO. A population with a larger HV value tends to have better overall performance. In addition, in this paper all of the HV values are normalized to . The HV indicator has been widely used in recent research and is generally regarded as well suited to assessing performance on MaOPs.
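To make the HV definition concrete, the sketch below computes it in two ways for a minimization problem: exactly for two objectives by a sweep over points sorted on the first objective, and approximately for any number of objectives by Monte Carlo sampling in the box between the ideal point of P and the reference point y. It is an illustrative Python sketch, not PlatEMO's HV routine; the function names, sample count, and seed are our assumptions, and the normalization used in this paper is omitted. Exact HV computation becomes expensive as the number of objectives grows, which is why sampling-based estimates are common in many-objective settings.

```python
import random

def weakly_dominates(p, z):
    # Minimization: p is no worse than z in every objective.
    return all(pk <= zk for pk, zk in zip(p, z))

def hv_2d(P, ref):
    # Exact HV for two objectives: sweep points in increasing first objective
    # and add the rectangle each non-dominated point contributes.
    pts = sorted(p for p in P if p[0] < ref[0] and p[1] < ref[1])
    hv, prev_f2 = 0.0, ref[1]
    for f1, f2 in pts:
        if f2 < prev_f2:
            hv += (ref[0] - f1) * (prev_f2 - f2)
            prev_f2 = f2
    return hv

def hv_monte_carlo(P, ref, samples=100_000, seed=0):
    # Estimate HV as (box volume) x (fraction of uniform samples in the box
    # [ideal point of P, ref] that are dominated by at least one member of P).
    rng = random.Random(seed)
    M = len(ref)
    ideal = [min(p[k] for p in P) for k in range(M)]
    box = 1.0
    for k in range(M):
        box *= ref[k] - ideal[k]
    hits = 0
    for _ in range(samples):
        z = [rng.uniform(ideal[k], ref[k]) for k in range(M)]
        if any(weakly_dominates(p, z) for p in P):
            hits += 1
    return box * hits / samples
```

For three points (0.2, 0.8), (0.5, 0.5), (0.8, 0.2) and y = (1, 1), the exact value is 0.16 + 0.15 + 0.06 = 0.37, and the sampling estimate should land close to it.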

4.1.3. Population Size

For MOEA/D [7] and other decomposition-based algorithms, the population size is largely determined by the total number of reference points in an M-objective problem. For problems with , a two-layer vector generation strategy can be employed to generate reference (or weight) vectors on both the outer boundaries and the inside layers of the Pareto front [11]. Therefore, the population sizes of the six algorithms on MaF1-MaF9 are set according to the number of objectives, that is, 212, 156, 275, and 136 for 5, 8, 10, and 15 objectives, respectively.
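The population sizes above follow from counting simplex-lattice (Das and Dennis) points: with H divisions, an M-objective problem has C(H + M − 1, M − 1) uniformly spaced reference points, and the two-layer strategy adds a second, inner layer. The Python sketch below generates both layers. The divisions H = 3 (outer) and H = 2 (inner) are our assumption, chosen because they reproduce the sizes 156 and 275 for 8 and 10 objectives; shrinking the inner layer as x/2 + 1/(2M) is one common convention and is likewise an assumption here. The 5- and 15-objective sizes (212 and 136) are not produced directly: a single layer with H = 6 gives 210 points for M = 5, and H = (2, 1) gives 135 for M = 15.

```python
from math import comb

def lattice_size(M, H):
    # Number of simplex-lattice points for M objectives and H divisions.
    return comb(H + M - 1, M - 1)

def simplex_lattice(M, H):
    # All M-dimensional vectors with entries i/H (i >= 0) summing to 1.
    def compositions(total, parts):
        if parts == 1:
            yield (total,)
            return
        for first in range(total + 1):
            for rest in compositions(total - first, parts - 1):
                yield (first,) + rest
    return [tuple(c / H for c in comp) for comp in compositions(H, M)]

def two_layer(M, h_outer, h_inner):
    outer = simplex_lattice(M, h_outer)
    # Inner layer pulled halfway toward the simplex centroid (assumed convention).
    inner = [tuple(x / 2 + 1 / (2 * M) for x in p)
             for p in simplex_lattice(M, h_inner)]
    return outer + inner
```

The count function makes the arithmetic explicit, e.g. lattice_size(8, 3) + lattice_size(8, 2) = 120 + 36 = 156.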

4.1.4. Termination Conditions

The maximal number of function evaluations (MFEs) is used as the termination condition for the six algorithms. For WFG3 and MaF3, the MFEs are set to 150,000, and for the other test instances to 100,000, because WFG3 and MaF3 contain many locally optimal solutions.

4.2. Experimental Results and Analysis

To present the comparative results, the statistics of the HV values of the output populations of the six algorithms on MaF1-MaF9 and WFG1-WFG9 are shown in Table 1 and Table 2, respectively. Similar to [48], the Wilcoxon rank-sum test with is adopted to verify the difference between the results produced by the proposed ART-DMaOEA and those produced by the compared algorithms. In Table 1 and Table 2, the best HV results among those obtained by the six algorithms are highlighted in gray. The symbols +/−/≈ denote the number of test instances on which a compared algorithm is significantly better than, worse than, or similar to the proposed ART-DMaOEA, respectively.
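The +/−/≈ labels can be produced per test instance with a two-sided Wilcoxon rank-sum test. The Python sketch below uses the normal approximation with average ranks for ties; the significance level α = 0.05, the use of medians to decide the direction of a significant difference, and the function names are our assumptions, since they are not stated here. Larger HV is treated as better.

```python
import math
import statistics

def rank_sum_p(x, y):
    # Two-sided Wilcoxon rank-sum test with the normal approximation.
    # Tied values share the average of their 1-based ranks; the variance is
    # not tie-corrected, which keeps the sketch short.
    values = sorted(x + y)
    avg_rank = {}
    i = 0
    while i < len(values):
        j = i
        while j + 1 < len(values) and values[j + 1] == values[i]:
            j += 1
        avg_rank[values[i]] = (i + j) / 2 + 1
        i = j + 1
    n1, n2 = len(x), len(y)
    w = sum(avg_rank[v] for v in x)
    mean = n1 * (n1 + n2 + 1) / 2
    sd = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mean) / sd
    return math.erfc(abs(z) / math.sqrt(2))

def compare(baseline_hv, proposed_hv, alpha=0.05):
    # '+': baseline significantly better, '-': significantly worse,
    # '≈': no significant difference at level alpha.
    if rank_sum_p(baseline_hv, proposed_hv) >= alpha:
        return "≈"
    if statistics.median(baseline_hv) > statistics.median(proposed_hv):
        return "+"
    return "-"

def tally(labels):
    # Counts in the +/−/≈ order used in Tables 1 and 2.
    return (labels.count("+"), labels.count("-"), labels.count("≈"))
```

Applying `compare` to the HV samples of each test instance and passing the resulting labels to `tally` yields one +/−/≈ triple per compared algorithm.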

Table 1.

HV Results of the six algorithms on benchmarks MaF1-MaF9.

Table 2.

HV Results of the six algorithms on benchmarks WFG1-WFG9.

Table 3 collects the statistical results of the five comparative algorithms. In addition, we employ the Friedman test to calculate the p values between ART-DMaOEA and each comparative algorithm in order to verify whether the differences are significant. As shown in Table 3, the p values are calculated based on the HV values of each algorithm on each test suite. From Table 3, it can be observed that most p values are less than 0.05, indicating significant differences in most cases. These results demonstrate the strong overall performance of ART-DMaOEA, which significantly outperforms the baseline algorithms in most comparisons.
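For reference, the Friedman statistic can be computed from a table of HV values with one row per test instance and one column per algorithm. The Python sketch below ranks the algorithms within each instance (rank 1 = largest HV, ties averaged) and evaluates χ²_F = 12n/(k(k + 1)) · [Σ_j R_j² − k(k + 1)²/4], where n is the number of instances, k the number of algorithms, and R_j the average rank of algorithm j, with a chi-square p-value on k − 1 degrees of freedom. Applying it to two algorithms at a time, as in the pairwise comparison described above, is our assumption about the procedure; the function names are hypothetical.

```python
import math

def rank_desc(row):
    # Rank the entries of one instance: 1 = largest HV, ties get the average rank.
    order = sorted(range(len(row)), key=lambda j: -row[j])
    ranks = [0.0] * len(row)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and row[order[j + 1]] == row[order[i]]:
            j += 1
        for t in range(i, j + 1):
            ranks[order[t]] = (i + j) / 2 + 1
        i = j + 1
    return ranks

def chi2_sf(x, df):
    # Chi-square survival function for integer df >= 1 (closed forms).
    half = x / 2
    if df % 2 == 0:
        return math.exp(-half) * sum(half ** i / math.factorial(i)
                                     for i in range(df // 2))
    tail = math.erfc(math.sqrt(half))
    return tail + math.exp(-half) * sum(half ** (i - 0.5) / math.gamma(i + 0.5)
                                        for i in range(1, (df - 1) // 2 + 1))

def friedman(hv_table):
    # hv_table[i][j]: HV of algorithm j on test instance i.
    n, k = len(hv_table), len(hv_table[0])
    rank_rows = [rank_desc(row) for row in hv_table]
    avg = [sum(r[j] for r in rank_rows) / n for j in range(k)]
    stat = 12 * n / (k * (k + 1)) * (sum(a * a for a in avg) - k * (k + 1) ** 2 / 4)
    return stat, chi2_sf(stat, k - 1), avg
```

With k = 2, an algorithm that wins on every one of n instances gives χ²_F = n; for the 36 instances of one test suite this would be 36, far beyond the 0.05 critical value of about 3.84 on one degree of freedom.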

Table 3.

Ratio of test cases where the corresponding baseline MaOEA performs worse than (−), better than (+), and similar to (≈) the proposed algorithm with respect to HV.

As can be seen in Table 1, the proposed ART-DMaOEA obtains the best result on 13 out of the 36 test instances, while the numbers for the other five algorithms are 4, 7, 3, 4, and 5, respectively. Specifically, ART-DMaOEA outperforms A-NSGA-III, BiGE, MOEADDU, RVEAa, and θ-DEA on 27, 22, 23, 25, and 24 out of the 36 test instances, respectively.

From Table 2, we can observe that the proposed ART-DMaOEA achieves the best overall results: it obtains the best HV on 18 of the 36 test instances. Specifically, ART-DMaOEA outperforms A-NSGA-III, BiGE, MOEADDU, RVEAa, and θ-DEA on 25, 20, 21, 27, and 22 out of the 36 test instances, respectively.

To summarize, the proposed ART-DMaOEA shows the best overall performance among the six algorithms and maintains a good balance between convergence and diversity.

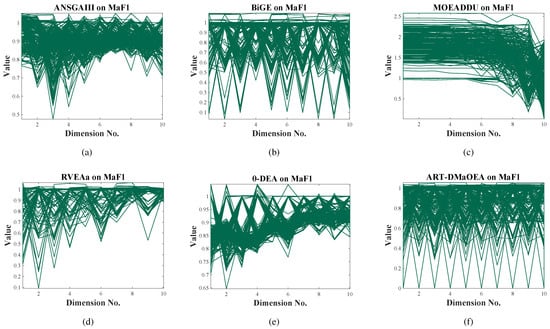

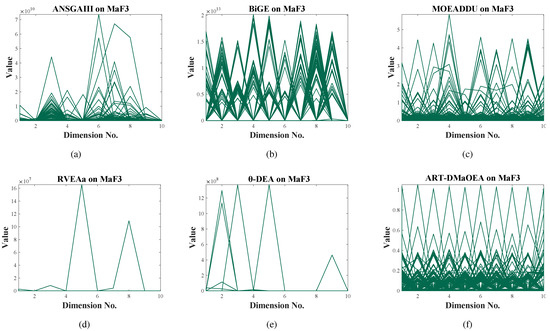

To visually compare the performance of the six algorithms, their final output populations on ten-objective MaF1 and ten-objective MaF3 are plotted in parallel coordinates and illustrated in Figure 6 and Figure 7.

Figure 6.

The best populations obtained by the six algorithms on ten-objective MaF1, as shown by parallel coordinates.

Figure 7.

The best populations obtained by the six algorithms on ten-objective MaF3, as shown by parallel coordinates.

For ten-objective MaF1, the true PF ranges from 0 to 1 in each objective. From Figure 6, it can be seen that A-NSGA-III, BiGE, MOEADDU, RVEAa, and θ-DEA fail to converge to this interval on certain objectives, i.e., there exist individuals with objective values larger than 1. Furthermore, the populations output by A-NSGA-III, MOEADDU, RVEAa, and θ-DEA do not include individuals with objective values equal to 0. For example, the minimum value of the population output by θ-DEA on all the objectives is slightly less than 0.65 rather than 0. The reason may be that A-NSGA-III, MOEADDU, RVEAa, and θ-DEA are decomposition-based MaOEAs, whose performance largely depends on the similarity between the predefined reference vectors and the true PF of the MaOP being optimized. Thus, they lack an effective mechanism for adjusting the reference vectors to fit the true PF of ten-objective MaF1.

MaF3 has a convex PF and a large number of local fronts. This test problem is mainly used to assess whether EMaO algorithms are capable of dealing with convex PFs. As can be seen in Figure 7, it is clear that and θ-DEA do not output N individuals; the reason is that most subspaces are inactive, so the output population contains too few individuals. In addition, judging from the range of each objective in the obtained populations, the convergence of A-NSGA-III, BiGE, and MOEADDU is very poor. Specifically, the population values of A-NSGA-III in each objective dimension are distributed between rather than 0 and 1. The same phenomenon can be found in the populations of BiGE and MOEADDU. Compared with the five algorithms on ten-objective MaF3, the proposed algorithm clearly has the most outstanding performance with respect to both convergence and diversity.

5. Conclusions and Future Works

This paper focuses on improving the diversity performance of existing decomposition-based MaOEAs when they are used to deal with MaOPs whose PFs have various shapes. During the optimization process, we define two states according to the numbers of active and inactive reference vectors. These two states indicate the similarity between the shape of the obtained population and the shape of the predefined reference vectors. High similarity means that the MaOEA can divide the obtained population (which can be seen as a good approximation of the true PF) evenly, while low similarity means the opposite. Two strategies are then tailored for the two states: they respectively delete the inactive reference vectors in the current generation and add new active reference vectors in order to divide the region where the obtained solutions are located more evenly. After the active reference vectors are adjusted, an efficient convergence indicator and the active reference vectors work together to strengthen convergence and diversity while maintaining a sound balance between them. To test the performance of the proposed algorithm, extensive experiments were conducted to compare it with five state-of-the-art MaOEAs on 72 test instances. The results show that the proposed ART-DMaOEA has the most competitive overall performance.

Thanks to its ability to adaptively adjust the distribution of the reference vectors according to the obtained population, ART-DMaOEA has an advantage when handling MaOPs whose PFs are unevenly distributed in the objective space. However, when it is used to solve an MaOP whose PF is evenly distributed in the objective space, it may perform similarly to, or occasionally worse than, other MaOEAs. Furthermore, ART-DMaOEA was not designed to solve MaOPs with large-scale decision variables.

Many real-world optimization problems involve various constraints and large-scale decision variables. In the future, we plan to investigate decomposition-based algorithms to solve MaOPs with large-scale decision variables or with constraints.

Author Contributions

Conceptualization, investigation, L.X. and F.H.; methodology, Z.C. and J.L.; validation, L.X. and F.H.; writing—original draft preparation, L.X.; writing—review and editing, J.L., Z.C. and F.H.; supervision, J.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the Science and Technology Innovation Team of Shaanxi Province (2023-CX-TD-07), the Special Project in Major Fields of Guangdong Universities (2021ZDZX1019), the Major Projects of Guangdong Education Department for Foundation Research and Applied Research (2017KZDXM081, 2018KZDXM066), the Guangdong Provincial University Innovation Team Project (2020KCXTD045), and the Hunan Key Laboratory of Intelligent Decision-making Technology for Emergency Management (2020TP1013).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The authors are thankful to the anonymous reviewers for their valuable suggestions during the review process.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Salimi, A.; Lowther, D.A. Projection-based objective space reduction for many-objective optimization problems: Application to an induction motor design. In Proceedings of the 2016 IEEE Conference on Electromagnetic Field Computation (CEFC), Miami, FL, USA, 13–16 November 2016; p. 1.

- Peng, G. Multi-objective Optimization Research and Applied in Cloud Computing. In Proceedings of the 2019 IEEE International Symposium on Software Reliability Engineering Workshops (ISSREW), Berlin, Germany, 28–31 October 2019; pp. 97–99.

- Chen, H.; Zhu, X.; Liu, G.; Pedrycz, W. Uncertainty-aware online scheduling for real-time workflows in cloud service environment. IEEE Trans. Serv. Comput. 2021, 14, 1167–1178.

- Du, Y.; Wang, T.; Xin, B.; Wang, L.; Chen, Y.; Xing, L. A Data-Driven Parallel Scheduling Approach for Multiple Agile Earth Observation Satellites. IEEE Trans. Evol. Comput. 2020, 24, 679–693.

- Li, M.; Wang, Z.; Li, K.; Liao, X.; Hone, K.; Liu, X. Task Allocation on Layered Multiagent Systems: When Evolutionary Many-Objective Optimization Meets Deep Q-Learning. IEEE Trans. Evol. Comput. 2021, 25, 842–855.

- Deb, K.; Pratap, A.; Agarwal, S.; Meyarivan, T. A fast and elitist multiobjective genetic algorithm: NSGA-II. IEEE Trans. Evol. Comput. 2002, 6, 182–197.

- Zhang, Q.; Li, H. MOEA/D: A Multiobjective Evolutionary Algorithm Based on Decomposition. IEEE Trans. Evol. Comput. 2007, 11, 712–731.

- Li, B.; Li, J.; Tang, K.; Yao, X. Many-objective evolutionary algorithms: A survey. ACM Comput. Surv. (CSUR) 2015, 48, 1–35.

- Bechikh, S.; Elarbi, M.; Ben Said, L. Many-objective optimization using evolutionary algorithms: A survey. In Recent Advances in Evolutionary Multi-Objective Optimization; Springer: Berlin, Germany, 2017; pp. 105–137.

- Chand, S.; Wagner, M. Evolutionary many-objective optimization: A quick-start guide. Surv. Oper. Res. Manag. Sci. 2015, 20, 35–42.

- Deb, K.; Jain, H. An Evolutionary Many-Objective Optimization Algorithm Using Reference-Point-Based Nondominated Sorting Approach, Part I: Solving Problems With Box Constraints. IEEE Trans. Evol. Comput. 2014, 18, 577–601.

- Elarbi, M.; Bechikh, S.; Gupta, A.; Ben Said, L.; Ong, Y.S. A New Decomposition-Based NSGA-II for Many-Objective Optimization. IEEE Trans. Syst. Man Cybern. Syst. 2018, 48, 1191–1210.

- Cheng, R.; Jin, Y.; Olhofer, M.; Sendhoff, B. A Reference Vector Guided Evolutionary Algorithm for Many-Objective Optimization. IEEE Trans. Evol. Comput. 2016, 20, 773–791.

- Li, K.; Deb, K.; Zhang, Q.; Kwong, S. An Evolutionary Many-Objective Optimization Algorithm Based on Dominance and Decomposition. IEEE Trans. Evol. Comput. 2015, 19, 694–716.

- Bosman, P.A.N.; Thierens, D. The balance between proximity and diversity in multiobjective evolutionary algorithms. IEEE Trans. Evol. Comput. 2003, 7, 174–188.

- Zitzler, E.; Thiele, L. Multiobjective evolutionary algorithms: A comparative case study and the strength Pareto approach. IEEE Trans. Evol. Comput. 1999, 3, 257–271.

- Liu, H.; Gu, F.; Zhang, Q. Decomposition of a Multiobjective Optimization Problem Into a Number of Simple Multiobjective Subproblems. IEEE Trans. Evol. Comput. 2014, 18, 450–455.

- Ishibuchi, H.; Setoguchi, Y.; Masuda, H.; Nojima, Y. Performance of Decomposition-Based Many-Objective Algorithms Strongly Depends on Pareto Front Shapes. IEEE Trans. Evol. Comput. 2017, 21, 169–190.

- Liu, Q.; Jin, Y.; Heiderich, M.; Rodemann, T. Adaptation of Reference Vectors for Evolutionary Many-objective Optimization of Problems with Irregular Pareto Fronts. In Proceedings of the 2019 IEEE Congress on Evolutionary Computation (CEC), Wellington, New Zealand, 10–13 June 2019; pp. 1726–1733.

- Chen, H.; Cheng, R.; Wen, J.; Li, H.; Weng, J. Solving large-scale many-objective optimization problems by covariance matrix adaptation evolution strategy with scalable small subpopulations. Inf. Sci. 2020, 509, 457–469.

- Liu, Q.; Cui, C.; Fan, Q. Self-Adaptive Constrained Multi-Objective Differential Evolution Algorithm Based on the State–Action–Reward–State–Action Method. Mathematics 2022, 10, 813.

- Ishibuchi, H.; Tsukamoto, N.; Nojima, Y. Evolutionary many-objective optimization: A short review. In Proceedings of the 2008 IEEE Congress on Evolutionary Computation (IEEE World Congress on Computational Intelligence), Hong Kong, China, 1–6 June 2008; pp. 2419–2426.

- Wang, Y.; Li, K.; Wang, G.G. Combining Key-Points-Based Transfer Learning and Hybrid Prediction Strategies for Dynamic Multi-Objective Optimization. Mathematics 2022, 10, 2117.

- Chen, H.; Wu, G.; Pedrycz, W.; Suganthan, P.; Xing, L.; Zhu, X. An Adaptive Resource Allocation Strategy for Objective Space Partition-Based Multiobjective Optimization. IEEE Trans. Syst. Man Cybern. Syst. 2019, 1, 1–16.

- Zhou, A.; Qu, B.Y.; Li, H.; Zhao, S.Z.; Suganthan, P.N.; Zhang, Q. Multiobjective evolutionary algorithms: A survey of the state of the art. Swarm Evol. Comput. 2011, 1, 32–49.

- Bader, J.; Zitzler, E. HypE: An Algorithm for Fast Hypervolume-Based Many-Objective Optimization. Evol. Comput. 2011, 19, 45–76.

- Zitzler, E.; Laumanns, M.; Thiele, L. SPEA2: Improving the Strength Pareto Evolutionary Algorithm; TIK-Report; TIK: New York, NY, USA, 2001; Volume 103.

- Laumanns, M.; Thiele, L.; Deb, K.; Zitzler, E. Combining Convergence and Diversity in Evolutionary Multiobjective Optimization. Evol. Comput. 2002, 10, 263–282.

- Zou, X.; Chen, Y.; Liu, M.; Kang, L. A New Evolutionary Algorithm for Solving Many-Objective Optimization Problems. IEEE Trans. Syst. Man, Cybern. Part B Cybern. 2008, 38, 1402–1412.

- Ikeda, K.; Kita, H.; Kobayashi, S. Failure of Pareto-based MOEAs: Does non-dominated really mean near to optimal? In Proceedings of the 2001 Congress on Evolutionary Computation (IEEE Cat. No.01TH8546), Seoul, Republic of Korea, 27–30 May 2001; Volume 2, pp. 957–962.

- Yuan, Y.; Xu, H.; Wang, B.; Yao, X. A New Dominance Relation-Based Evolutionary Algorithm for Many-Objective Optimization. IEEE Trans. Evol. Comput. 2016, 20, 16–37.

- He, Z.; Yen, G.G.; Zhang, J. Fuzzy-Based Pareto Optimality for Many-Objective Evolutionary Algorithms. IEEE Trans. Evol. Comput. 2014, 18, 269–285.

- Qiu, W.; Zhu, J.; Wu, G.; Fan, M.; Suganthan, P.N. Evolutionary many-Objective algorithm based on fractional dominance relation and improved objective space decomposition strategy. Swarm Evol. Comput. 2021, 60, 100776.

- Yang, S.; Li, M.; Liu, X.; Zheng, J. A Grid-Based Evolutionary Algorithm for Many-Objective Optimization. IEEE Trans. Evol. Comput. 2013, 17, 721–736.

- Tian, Y.; Cheng, R.; Zhang, X.; Su, Y.; Jin, Y. A Strengthened Dominance Relation Considering Convergence and Diversity for Evolutionary Many-Objective Optimization. IEEE Trans. Evol. Comput. 2019, 23, 331–345.

- Murata, T.; Ishibuchi, H.; Gen, M. Specification of Genetic Search Directions in Cellular Multi-objective Genetic Algorithms. In Proceedings of the Evolutionary Multi-Criterion Optimization, Zurich, Switzerland, 7–9 March 2001; Zitzler, E., Thiele, L., Deb, K., Coello Coello, C.A., Corne, D., Eds.; Springer: Berlin/Heidelberg, Germany, 2001; pp. 82–95.

- Wang, Z.; Zhang, Q.; Zhou, A.; Gong, M.; Jiao, L. Adaptive Replacement Strategies for MOEA/D. IEEE Trans. Cybern. 2016, 46, 474–486.

- Li, H.; Zhang, Q.; Deng, J. Biased multiobjective optimization and decomposition algorithm. IEEE Trans. Cybern. 2016, 47, 52–66.

- Bao, C.; Gao, D.; Gu, W.; Xu, L.; D.Goodman, E. A New Adaptive Decomposition-based Evolutionary Algorithm for Multi- and Many-objective Optimization. Expert Syst. Appl. 2022, 2022, 119080.

- Zhao, C.; Zhou, Y.; Hao, Y. Decomposition-based evolutionary algorithm with dual adjustments for many-objective optimization problems. Swarm Evol. Comput. 2022, 75, 101168.

- Zitzler, E.; Künzli, S. Indicator-Based Selection in Multiobjective Search. In Proceedings of the Parallel Problem Solving from Nature—PPSN VIII, Birmingham, UK, 18–22 September 2004; pp. 832–842.

- Emmerich, M.; Beume, N.; Naujoks, B. An EMO Algorithm Using the Hypervolume Measure as Selection Criterion. In Proceedings of the Evolutionary Multi-Criterion Optimization, Guanajuato, Mexico, 9–11 March 2005; Coello Coello, C.A., Hernández Aguirre, A., Zitzler, E., Eds.; Springer: Berlin/Heidelberg, Germany, 2005; pp. 62–76.

- Pamulapati, T.; Mallipeddi, R.; Suganthan, P.N. ISDE +—An Indicator for Multi and Many-Objective Optimization. IEEE Trans. Evol. Comput. 2019, 23, 346–352.

- Tian, Y.; Cheng, R.; Zhang, X.; Cheng, F.; Jin, Y. An Indicator-Based Multiobjective Evolutionary Algorithm With Reference Point Adaptation for Better Versatility. IEEE Trans. Evol. Comput. 2018, 22, 609–622.

- Dong, J.; Gong, W.; Ming, F.; Wang, L. A two-stage evolutionary algorithm based on three indicators for constrained multi-objective optimization. Expert Syst. Appl. 2022, 195, 116499.

- Li, J.; Chen, G.; Li, M.; Chen, H. An enhanced-indicator based many-objective evolutionary algorithm with adaptive reference point. Swarm Evol. Comput. 2020, 55, 100669.

- Qiu, W.; Zhu, J.; Wu, G.; Chen, H.; Pedrycz, W.; Suganthan, P.N. Ensemble Many-Objective Optimization Algorithm Based on Voting Mechanism. IEEE Trans. Syst. Man Cybern. Syst. 2022, 52, 1716–1730.

- Chen, H.; Tian, Y.; Pedrycz, W.; Wu, G.; Wang, R.; Wang, L. Hyperplane Assisted Evolutionary Algorithm for Many-Objective Optimization Problems. IEEE Trans. Cybern. 2020, 50, 3367–3380.

- Zhang, X.; Tian, Y.; Cheng, R.; Jin, Y. A Decision Variable Clustering-Based Evolutionary Algorithm for Large-Scale Many-Objective Optimization. IEEE Trans. Evol. Comput. 2018, 22, 97–112.

- Yuan, J.; Liu, H.L.; Gu, F.; Zhang, Q.; He, Z. Investigating the Properties of Indicators and an Evolutionary Many-Objective Algorithm Using Promising Regions. IEEE Trans. Evol. Comput. 2021, 25, 75–86.

- Jain, H.; Deb, K. An Evolutionary Many-Objective Optimization Algorithm Using Reference-Point Based Nondominated Sorting Approach, Part II: Handling Constraints and Extending to an Adaptive Approach. IEEE Trans. Evol. Comput. 2014, 18, 602–622.

- Li, M.; Yang, S.; Liu, X. Bi-goal evolution for many-objective optimization problems. Artif. Intell. 2015, 228, 45–65.

- Yuan, Y.; Xu, H.; Wang, B.; Zhang, B.; Yao, X. Balancing Convergence and Diversity in Decomposition-Based Many-Objective Optimizers. IEEE Trans. Evol. Comput. 2016, 20, 180–198.

- Tian, Y.; Cheng, R.; Zhang, X.; Jin, Y. PlatEMO: A MATLAB Platform for Evolutionary Multi-Objective Optimization [Educational Forum]. IEEE Comput. Intell. Mag. 2017, 12, 73–87.

- Huband, S.; Hingston, P.; Barone, L.; While, L. A review of multiobjective test problems and a scalable test problem toolkit. IEEE Trans. Evol. Comput. 2006, 10, 477–506.

- Cheng, R.; Li, M.; Tian, Y.; Zhang, X.; Yang, S.; Jin, Y.; Yao, X. A benchmark test suite for evolutionary many-objective optimization. Complex Intell. Syst. 2017, 3, 67–81.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).