Abstract

Regression models for continuous outcomes frequently require a transformation of the outcome, which is often specified a priori or estimated from a parametric family. Cumulative probability models (CPMs) nonparametrically estimate the transformation by treating the continuous outcome as if it is ordered categorically. They thus represent a flexible analysis approach for continuous outcomes. However, it is difficult to establish asymptotic properties for CPMs due to the potentially unbounded range of the transformation. Here we show asymptotic properties for CPMs when applied to slightly modified data where bounds, one lower and one upper, are chosen and the outcomes outside the bounds are set as two ordinal categories. We prove the uniform consistency of the estimated regression coefficients and of the estimated transformation function between the bounds. We also describe their joint asymptotic distribution, and show that the estimated regression coefficients attain the semiparametric efficiency bound. We show with simulations that results from this approach and those from using the CPM on the original data are very similar when a small fraction of the data are modified. We reanalyze a dataset of HIV-positive patients with CPMs to illustrate and compare the approaches.

Keywords:

cumulative probability model; semiparametric transformation model; uniform consistency; asymptotic distribution

MSC:

62G99

1. Introduction

Regression analyses of continuous outcomes often require a transformation of the outcome to meet modeling assumptions. In practice, convenient but ad hoc transformations such as a logarithm or square root are often used on right-skewed outcomes; an alternative is to use the Box–Cox family [1] of transformations, which is effectively a family of power functions plus the logarithm transformation. Because the correct transformation for the continuous outcome is often unknown and it may not fall in a prespecified family, it is desirable to estimate the transformation in a flexible way. Semiparametric transformation models have been introduced to address this issue [2,3]. These models involve a latent intermediate variable and two model components: one connecting the latent variable to the outcome variable through an unknown transformation and the other connecting the latent variable to the input variables as in traditional regression models with unknown beta coefficients.

Early parameter estimation for semiparametric transformation models was based on the marginal likelihood of the vector of outcome ranks [2,3,4]. Although this marginal likelihood can be simplified to the partial likelihood in Cox proportional hazards models [5], it cannot be simplified for other transformation models, and various approximations had to be used. As the marginal likelihood only involves the beta coefficients, additional ad hoc procedures were developed to estimate the transformation [2,3].

Later developments for fitting semiparametric linear transformation models primarily focused on right-censored data, initially relying on estimating equations [6,7]. Zeng and Lin [8] developed nonparametric maximum likelihood estimators (NPMLEs) based on likelihoods for time-to-event data and showed the consistency and asymptotic distribution of their estimators. NPMLEs are desirable because they are fully efficient. For continuous outcomes, more recent developments have used B-splines and Bernstein polynomials to flexibly model the transformation [9,10], but these estimators of the transformation are not fully nonparametric.

With continuous outcomes, one way to nonparametrically estimate the transformation is to treat the outcome as if it were ordinal, without any categorization, and fit cumulative probability models (CPMs; also called cumulative link models) [11]. Liu et al. [11] showed that the CPM’s multinomial likelihood for continuous outcomes is equivalent to the nonparametric likelihood for semiparametric transformation models. This result led to new NPMLEs for semiparametric transformation models for continuous outcomes using computationally simple ordinal regression methods. They showed with simulations that CPMs perform well in a wide range of scenarios. The method has since been used in applications to analyze various outcomes [12,13,14,15,16,17,18,19].

However, there is no established asymptotic theory for this new NPMLE approach for continuous outcomes. One main hurdle is that the unknown transformation of the continuous outcome variable can have an unbounded range of values, which makes it hard to establish asymptotic properties across the whole range. The approaches that were used to prove asymptotic properties for the NPMLE of the baseline cumulative hazard function for time-to-event outcomes cannot be applied directly to study transformation models for a continuous outcome [8] because the latter has no bounds on its range and no clear definition of a baseline hazard function.

To address this issue, we establish several asymptotic properties in this paper for CPMs when they are applied to continuous outcomes with slight modification. Briefly, a lower bound L and an upper bound U for the outcome are chosen prior to analysis, the outcomes below L are set as the lowest category and those above U as the highest category, and then a CPM is fitted to the modified data. We prove that, in this approach, the nonparametric estimate of the transformation function is consistent (i.e., converges in probability to its true value) uniformly in the interval [L, U]. We then show that the estimator of the beta coefficients and that of the transformation jointly converge to a tight Gaussian process, and that the estimator of the beta coefficients attains the semiparametric efficiency bound. The latter implies that this estimator is (asymptotically) as efficient as possible under the assumptions of the model. We show with simulations and real data that the results from this approach and those from the CPM on the original data are very similar when only a small fraction of data are outside the bounds.

2. Method

2.1. Cumulative Probability Models

Let Y be the outcome of interest and Z be a vector of p covariates. The semiparametric linear transformation model is

$$Y = H(\beta^{T} Z + \varepsilon), \qquad (1)$$

where H is a transformation function assumed to be non-decreasing but unknown otherwise, $\beta$ is a vector of coefficients, and $\varepsilon$ is independent of Z and is assumed to follow a continuous distribution with cumulative distribution function $G$. An alternative expression of model (1) is

$$A(Y) = \beta^{T} Z + \varepsilon, \qquad (2)$$

where $A = H^{-1}$ is the inverse of H. For mathematical clarity, we assume H is left continuous and define $A(y) = \sup\{z : H(z) \le y\}$; then, A is non-decreasing and right-continuous.

Model (1) is equivalent to the cumulative probability model (CPM) presented in Liu et al. [11]:

$$G^{-1}\{P(Y \le y \mid Z)\} = A(y) - \beta^{T} Z, \qquad (3)$$

where $G^{-1}$ serves as a link function. One example of the distribution for $\varepsilon$ is the standard normal distribution. In this case, the CPM becomes a normal linear model after a transformation, which includes log-linear models and linear models with a Box–Cox transformation as special cases. The CPM becomes a Cox proportional hazards model when $\varepsilon$ follows the extreme value distribution, i.e., $G(x) = 1 - \exp(-e^{x})$, or a proportional odds model when $\varepsilon$ follows the logistic distribution, i.e., $G(x) = e^{x}/(1 + e^{x})$.
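The three error distributions named above can be made concrete with a short Python sketch. It assumes the CPM is written as P(Y ≤ y | Z) = G{A(y) − β'Z}, with G the standard normal, extreme value (minimum), or logistic distribution function. The function names and the numerical values of A(y), β, and Z are illustrative assumptions, not quantities from the paper.

```python
import math

def G_probit(x):
    """Standard normal CDF; its inverse is the probit link."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def G_cloglog(x):
    """Extreme value (minimum) CDF; gives a proportional hazards model."""
    return 1.0 - math.exp(-math.exp(x))

def G_logit(x):
    """Logistic CDF; gives a proportional odds model."""
    return 1.0 / (1.0 + math.exp(-x))

def cpm_cdf(a_y, beta, z, G):
    """P(Y <= y | Z = z) = G(A(y) - beta'z), where a_y stands for A(y)."""
    linear_predictor = sum(b * v for b, v in zip(beta, z))
    return G(a_y - linear_predictor)

# Hypothetical values, for illustration only.
beta = [1.0, -0.5]
z = [1.0, 0.3]
for name, G in [("probit", G_probit), ("cloglog", G_cloglog), ("logit", G_logit)]:
    print(name, round(cpm_cdf(0.0, beta, z, G), 4))
```

Because G is increasing, a larger linear predictor lowers P(Y ≤ y | Z) at every y, so positive coefficients correspond to stochastically larger outcomes under this parameterization.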

Suppose the data are i.i.d. and denoted as $(Y_i, Z_i)$, $i = 1, \ldots, n$. Liu et al. [11] proposed to model the transformation A nonparametrically. The corresponding nonparametric (NP) likelihood is

$$\prod_{i=1}^{n} \left[ G\{A(Y_i) - \beta^{T} Z_i\} - G\{A(Y_i-) - \beta^{T} Z_i\} \right],$$

where $A(y-) = \lim_{t \uparrow y} A(t)$. Since A can be any non-decreasing function, this likelihood will be maximized when the increments of A are concentrated at the observed $Y_i$; if some increments of A are not at the observed $Y_i$, the corresponding probability mass at non-observed values can always be reallocated to some observed values to increase the likelihood. Thus, we can maximize this likelihood by only considering step functions that have a jump at every observed $Y_i$. This leads to an expression of the likelihood that is the same as the likelihood of the CPM when the outcome variable is treated as if it were ordered categorically with the observed distinct values as the ordered categories. As a result, nonparametric maximum likelihood estimates (NPMLEs) can be obtained by fitting an ordinal regression model to the continuous outcome. Liu et al. [11] showed in simulations that CPMs perform well under a wide range of scenarios. However, it is difficult to prove the asymptotic properties for this approach. Since some $Y_i$ can be extremely large or small and the observations at the tails are often sparse, there is high variability in the estimate of A at the tails. Moreover, the unboundedness of the transformation at the tails makes it difficult to control the compactness of the estimator of A, thus making most asymptotic theory no longer applicable. In this paper, we prove asymptotic properties for CPMs when they are applied to continuous outcomes with slight modification. We describe the modification in Section 2.2 and show the asymptotic results in Section 2.3.
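The equivalence with an ordinal likelihood can be sketched in a few lines of Python. The code below is a minimal illustration, not the authors' implementation: it treats every distinct outcome as its own ordered category and evaluates the multinomial log-likelihood for given cutpoints (the values of the step function A at the distinct outcomes) and coefficients. The function name `cpm_loglik`, the logistic choice of G, and the toy data are assumptions made for this example.

```python
import math

def logistic_cdf(x):
    return 1.0 / (1.0 + math.exp(-x))

def cpm_loglik(y, z, alpha, beta, G=logistic_cdf):
    """Multinomial log-likelihood with one category per distinct outcome.

    alpha[j] plays the role of A at the (j+1)-th smallest distinct value.
    There is one cutpoint fewer than distinct values because the top
    category has cumulative probability one. alpha must be increasing,
    otherwise a category probability is non-positive and math.log fails.
    """
    levels = sorted(set(y))
    if len(alpha) != len(levels) - 1:
        raise ValueError("need one cutpoint fewer than distinct outcomes")
    rank = {v: j for j, v in enumerate(levels)}
    total = 0.0
    for yi, zi in zip(y, z):
        j = rank[yi]
        eta = sum(b * v for b, v in zip(beta, zi))
        upper = 1.0 if j == len(levels) - 1 else G(alpha[j] - eta)
        lower = 0.0 if j == 0 else G(alpha[j - 1] - eta)
        total += math.log(upper - lower)
    return total

# Hypothetical toy data: four distinct outcomes, one covariate.
y = [0.7, 2.1, 1.3, 5.4]
z = [[0.0], [1.0], [0.0], [1.0]]
# With beta = 0, cutpoints at the logits of the empirical CDF give each
# observation probability 1/4.
alpha = [math.log(p / (1.0 - p)) for p in (0.25, 0.5, 0.75)]
print(cpm_loglik(y, z, alpha, beta=[0.0]))
```

In practice the cutpoints and coefficients would be chosen jointly to maximize this function, which is what an ordinal regression routine does when handed the continuous outcome.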

2.2. Cumulative Probability Models on Modified Data

In view of the challenges above, we hereby describe an approach in which the outcomes are modified at the two ends before a CPM is fit. We will then describe the asymptotic properties of this approach in Section 2.3 and show with simulations that the results from this approach and those of the CPM on the original data are similar when a small fraction of data are modified.

More specifically, we predetermine a lower bound L and an upper bound U, and consider all observations with as a single ordered category, which we conveniently denote as L, and those with as a single ordered category, denoted as U. The bounds L and U should satisfy , , and . The new outcome variable, denoted as , follows a mixture distribution. When , the distribution is continuous with the same cumulative distribution function as that for ; that is, for . When or , the distribution is discrete, with and . Then, the nonparametric likelihood for the modified data is

where is the indicator function for event S with value 1 if S occurs and 0 otherwise.

Since A can be any non-decreasing function over the interval [L, U], the likelihood (4) will be maximized when the increments of A are concentrated at the observed values of the modified outcome. Hence, it suffices to consider only step functions with a jump at each distinct value of the modified outcome.
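The data modification itself is simple to express in code. The following Python sketch clamps outcomes to the chosen bounds so that everything at or beyond a bound collapses into a single category; the function names and the toy values are hypothetical, and the bounds are assumed to have been fixed before looking at the data.

```python
def modify_outcome(y, lower, upper):
    """Set outcomes at or below `lower` to `lower` and those at or above
    `upper` to `upper`; values strictly in between are left unchanged."""
    if not lower < upper:
        raise ValueError("lower bound must be below upper bound")
    return [min(max(v, lower), upper) for v in y]

def fraction_modified(y, lower, upper):
    """Share of observations whose value is changed by the modification."""
    return sum(1 for v in y if v < lower or v > upper) / len(y)

# Hypothetical outcomes and bounds, for illustration only.
y = [0.05, 0.4, 1.2, 3.0, 9.5]
L, U = 0.1, 5.0
print(modify_outcome(y, L, U))
print(fraction_modified(y, L, U))
```

After this step the smallest and largest distinct values are L and U, and the CPM is fit to the modified outcome as an ordinal variable.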

2.3. Asymptotic Results

From now on, we assume the outcome is continuous. Without loss of generality, we assume that in our models (1)–(3), the support of Y contains 0, the vector Z contains an intercept and has p dimensions, and . Furthermore, the two bounds satisfy , , , , and . To establish the asymptotic properties described below, we further assume that

1. $G$ is thrice-continuously differentiable, for any x, for , where is a constant, and

2. The covariance matrix of Z is non-singular. In addition, Z and are bounded so that almost surely for some large constant m.

3. A is continuously differentiable in .

Condition 1 imposes restrictions on G at both tails; it holds for many residual distributions, including the standard normal distribution, the extreme value distribution and the logistic distribution. Conditions 2 and 3 are minimal assumptions for establishing asymptotic properties for linear transformation models.

Let denote the nonparametric maximum likelihood estimate of that maximizes the likelihood (4) on the modified data. Then, is a step function with a jump at each of the distinct in the modified data. To establish the asymptotic properties of , we consider as a function over the closed interval by defining . We have the following consistency theorem.

Theorem 1.

Under conditions 1–3, with probability one,

The proof of Theorem 1 is in Appendix A. Core steps of the proof include showing that is bounded in with probability one. Then, since is bounded and increasing in , via the Helly selection theorem [20], for any subsequence, there exists a further subsequence that converges to a non-decreasing, right-continuous function at its continuity points. Thus, without confusion, we assume that weakly in and . We then show that with probability one, for and . With this result, the consistency is established. Furthermore, since A is continuously differentiable, we conclude that converges to uniformly in with probability one.

We next describe the asymptotic distribution of . The asymptotic distribution of will be expressed as that of a random functional in a metric space. We first define some notation. Let be the set of all functions defined over for which the total variation is at most one. Let be the set of all linear functionals over ; that is, every element f in is a linear function . For any , its norm is defined as . A metric over can then be derived subsequently. Given any non-decreasing function A over , a corresponding linear functional in , also denoted as A, can be defined such that for any ,

Similarly, for a nonparametric maximum likelihood estimate , its corresponding linear functional in is , such that for any ,

The functional is a random element in the metric space . For any , there exists an , such that . For example, suppose the estimated jump sizes at the distinct outcome values of a dataset, , are . Then, at , , where ; and, similarly, at , , where .

Theorem 2.

Under conditions 1–3, converges weakly to a tight Gaussian process in . Furthermore, the asymptotic variance of attains the semiparametric efficiency bound.

The proof of Theorem 2 is in Appendix B and makes use of weak convergence theory for empirical processes and semiparametric efficiency theory. Its proof relies on verifying all the technical conditions in the Master Z-Theorem in [21]. In particular, it entails verification of the invertibility of the information operator for .

Because the information operator for is invertible, the arguments given in [22] imply that the asymptotic variance-covariance matrix of for any can be consistently estimated based on the information matrix for and the jump sizes of . Specifically, suppose the estimated jump sizes at the distinct outcome values of a dataset, , are . Let be the estimated information matrix for both and . Then, the variance-covariance matrix for is estimated as , where

and H is a matrix with elements .

3. Simulation Study

3.1. Simulation Set-Up

CPMs have been studied extensively in simulations elsewhere and have largely been seen to behave well [11]. Here we perform a more limited set of simulations to illustrate three major points that are particularly relevant to our study. First, the estimation of A(y) using CPMs can be biased at extreme values of y. Even though the estimator of A(y) may have point-wise consistency for any y, it may not be uniformly consistent over all y. Second, in the modified approach, the estimator of A is uniformly consistent over [L, U]. Third, except for the estimation of extreme quantiles and at extreme levels, the results are largely similar between CPMs fit to the original data and the modified data.

We roughly followed the simulation settings of Liu et al. [11]. Let Bernoulli(0.5), , and , where , , and . In this set-up, the correct transformation function is . We generated datasets with sample sizes n = 100, 1000, and 5000. We fit CPMs that have the correctly specified link function (probit) and model form (linear). The performance of misspecified models was extensively studied via simulations [11]. In CPMs, the transformation A and the parameters are semiparametrically estimated. We evaluated how well the transformation was estimated by comparing with the correct transformation, , for various values of y.

We fit CPMs to the original data and CPMs to the modified data with set to , , and ; these values correspond to approximately and of Y being modified, respectively. All simulations had 1000 replications.
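A data-generating step of this kind can be sketched as follows. The exact settings are not recoverable from the text here, so the sketch assumes a Bernoulli(0.5) covariate, a standard normal covariate, coefficients 1 and −0.5, standard normal errors, and a log transformation, in the spirit of the settings of Liu et al. [11]. These values, the bounds exp(±4) and exp(±2), and the function names are all assumptions made for illustration.

```python
import math
import random

def simulate(n, beta1=1.0, beta2=-0.5, seed=2023):
    """Draw (y, z1, z2) with log(Y) = beta1*Z1 + beta2*Z2 + eps, eps ~ N(0, 1).

    All parameter values are assumptions made for this illustration.
    """
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        z1 = 1.0 if rng.random() < 0.5 else 0.0   # Bernoulli(0.5)
        z2 = rng.gauss(0.0, 1.0)                  # N(0, 1)
        eps = rng.gauss(0.0, 1.0)                 # probit-scale error
        data.append((math.exp(beta1 * z1 + beta2 * z2 + eps), z1, z2))
    return data

def share_outside(data, lower, upper):
    """Fraction of outcomes that would be categorized by the bounds."""
    return sum(1 for y, _, _ in data if y < lower or y > upper) / len(data)

data = simulate(20000)
for k in (4.0, 2.0):
    print(k, round(share_outside(data, math.exp(-k), math.exp(k)), 3))
```

Under these assumed settings, a normal-tail calculation puts the share of outcomes outside the two pairs of bounds at roughly 0.2% and 13%, which is of the same order as the range of categorization discussed later in the paper.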

3.2. Simulation Results

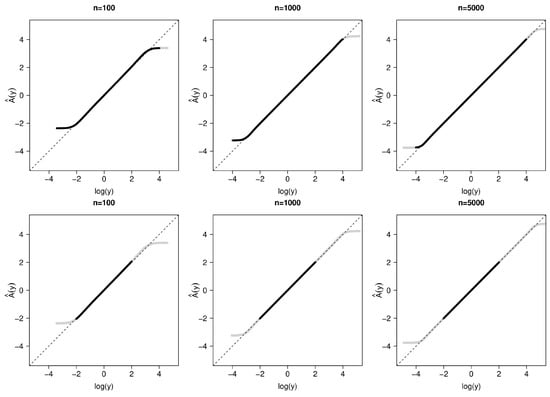

Figure 1 shows the average estimate of across 1000 simulation replicates compared with the true transformation, . The left, center, and right panels are results based on sample sizes of 100, 1000, and 5000, respectively. With the original data, for all sample sizes, estimates are unbiased when y is around the center of its distribution (i.e., where the bulk of the probability mass lies), approximately in the range when , in when , and in a wider range when . However, at extreme values of y, we see biased estimation. This illustrates that, for a fixed y, one can find a sample size large enough that the estimation of is unbiased, but that there will always be a more extreme value of y for which may be biased. This motivates the need to categorize values outside a predetermined range to achieve the uniform consistency of for .

Figure 1.

Average estimate of after fitting properly specified CPMs compared with the true transformation, . Gray curve: original data; black curve: modified data. Dashed lines are the diagonal. Top row: ; middle row: ; bottom row: . Left to right: . Based on 1000 replications.

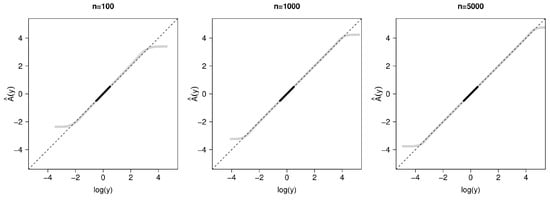

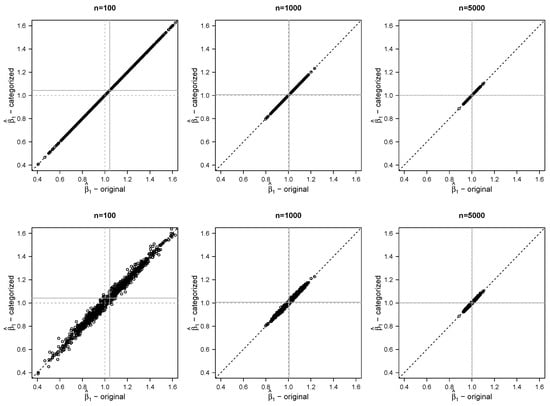

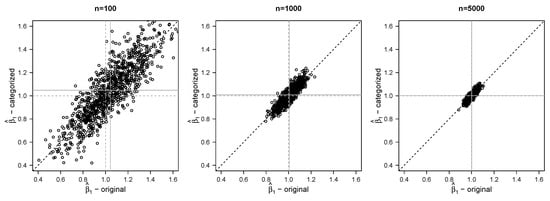

Figure 2 compares estimates of for the various sample sizes using the original data and using the modified data. As the sample size becomes larger, becomes less biased in all approaches. At , is approximately unbiased even with a large proportion of the data having been categorized. Not surprisingly, with increasing proportions of categorized data, becomes slightly more variable (Table 1) and slightly less correlated with that estimated from the original data. The results for have similar patterns (Supplementary Material Figure S1).

Figure 2.

Estimates of using data categorized outside compared with those using the original data and to the truth, . Gray lines are mean estimates and dashed gray lines are the truth. Top row: ; middle row: ; bottom row: . Left to right: . Based on 1000 replications.

Table 1.

Simulation results for estimates from CPMs on original data and on data categorized outside ; ; based on 1000 replications.

Table 1 shows further results for five estimands: , , , and the conditional median and mean of Y given and . For each estimand, we compute the bias of the corresponding estimate, its standard deviation across replicates, the mean of estimated standard errors, and the mean squared error. For the estimands , , and , estimation using the original data appears to be consistent, and the behavior of our estimators with the modified data is as predicted by the asymptotic theory. When , there appears to be only a modest amount of bias, even with 71% categorized; when (Table 1) and 5000 (shown in Supplementary Material Table S1), the bias is quite small. Although in Figure 1 we saw that estimates of for extreme values of y were biased, we see no evidence that this impacts the estimation of and . The average standard errors are very similar to the empirical results (i.e., the standard deviation of parameter estimates across replicates), suggesting that we are correctly estimating standard errors. These results hold regardless of the proportion categorized in our simulations. With increasing proportions being categorized, as expected, both absolute bias and standard deviation increase, and, as a result, the mean squared error increases. However, all these measures become smaller as the sample size increases.
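The four performance measures described above are straightforward to compute from the per-replicate output. The sketch below is a generic Python helper, with hypothetical names and toy numbers, that takes the estimates and their estimated standard errors across replicates together with the true value.

```python
import math

def summarize(estimates, std_errors, truth):
    """Bias, empirical SD, mean estimated SE, and MSE across replicates."""
    n = len(estimates)
    mean_est = sum(estimates) / n
    bias = mean_est - truth
    # Sample standard deviation of the estimates across replicates.
    sd = math.sqrt(sum((e - mean_est) ** 2 for e in estimates) / (n - 1))
    mean_se = sum(std_errors) / n
    mse = sum((e - truth) ** 2 for e in estimates) / n
    return {"bias": bias, "sd": sd, "mean_se": mean_se, "mse": mse}

# Hypothetical replicate results, for illustration only.
result = summarize([0.9, 1.0, 1.1, 1.2], [0.10, 0.10, 0.12, 0.12], truth=1.0)
print(result)
```

Comparing `sd` with `mean_se` is the check used in the text to judge whether standard errors are estimated correctly, and `mse` combines squared bias and variance, so it grows when either does.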

We cannot compute the standard error for the conditional median. Categorization also prohibits the sound estimation of the conditional mean; one could instead estimate the trimmed conditional mean, e.g., , which may substantially differ from . The bias of for extreme values of y had little impact on the estimation of , which is computed using over the entire range of observed y.
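To see why categorization affects the mean but not central quantiles, it helps to write out how conditional summaries are read off a fitted step function. The Python sketch below assumes a fitted CPM is summarized by its sorted distinct outcome values, the cutpoints (values of the estimated transformation), and a linear predictor. The names are hypothetical, the logistic G is an arbitrary choice, and the median is taken as the smallest value whose cumulative probability reaches 0.5, which is a simplification with no interpolation.

```python
import math

def logistic_cdf(x):
    return 1.0 / (1.0 + math.exp(-x))

def conditional_summaries(levels, alpha, eta, G=logistic_cdf):
    """Conditional CDF, mean, and median implied by a step-function fit.

    levels: sorted distinct outcome values
    alpha:  cutpoints, one fewer than levels
    eta:    linear predictor beta'z for the covariate profile of interest
    """
    cdf = [G(a - eta) for a in alpha] + [1.0]
    probs = [cdf[0]] + [cdf[j] - cdf[j - 1] for j in range(1, len(cdf))]
    mean = sum(v * p for v, p in zip(levels, probs))
    median = next(v for v, c in zip(levels, cdf) if c >= 0.5)
    return cdf, mean, median

# Hypothetical fitted values, for illustration only.
levels = [1.0, 2.0, 3.0, 4.0]
alpha = [math.log(p / (1.0 - p)) for p in (0.25, 0.5, 0.75)]
cdf0, mean0, median0 = conditional_summaries(levels, alpha, eta=0.0)
cdf1, mean1, median1 = conditional_summaries(levels, alpha, eta=1.0)
print(mean0, median0, mean1, median1)
```

The mean weights every level, including the lowest and highest ones, so replacing tail values by the bounds changes it; the median depends only on where the cumulative probability crosses 0.5 and is unaffected as long as that crossing lies strictly between the bounds.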

4. Example Data Analysis

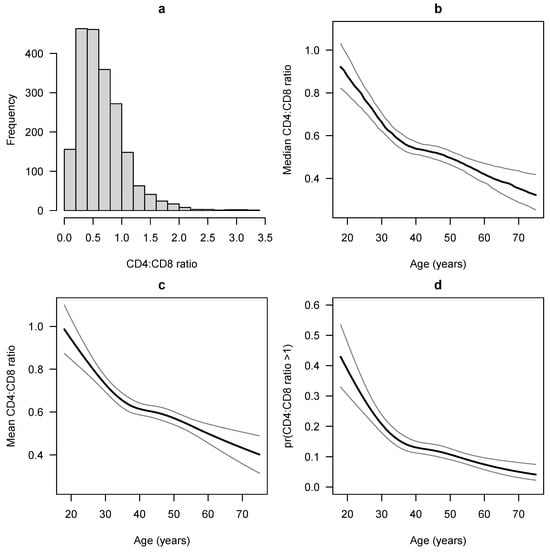

CD4:CD8 ratio is a biomarker for measuring the strength of the immune system. A normal CD4:CD8 ratio is between 1 and 4, while people with HIV tend to have much lower values, and a low CD4:CD8 ratio is highly predictive of poor outcomes including non-communicable diseases and mortality. When people with HIV are put on antiretroviral therapy, their CD4:CD8 ratio tends to increase, albeit often slowly and quite variably. Castilho et al. [23] studied factors associated with the CD4:CD8 ratio among 2024 people with HIV who started antiretroviral therapy and maintained viral suppression for at least 12 months. They considered various factors including age, sex, race, the probable route of transmission, hepatitis C co-infection, hepatitis B co-infection, and the year of antiretroviral therapy initiation. Here we re-analyze their data using CPMs. We will focus on the associations of the CD4:CD8 ratio with age and sex, treating the other factors as covariates. The CD4:CD8 ratio tends to be right-skewed (Figure 3a), but there is no standard transformation for analyzing it. In various studies, it has been left untransformed [23], log-transformed [24], dichotomized (CD4:CD8 vs. ) [25], put into ordered categories roughly based on quantiles [26], square-root transformed [27], and fifth-root transformed [28]. In contrast, CPMs do not require the specification of the transformation.

Figure 3.

(a) Histogram of CD4:CD8 ratio in our dataset. (b–d) Estimated outcome measures and 95% confidence intervals as functions of age, holding other covariates constant at their medians/modes. (b) Median CD4:CD8 ratio; (c) mean CD4:CD8 ratio; (d) probability that CD4:CD8 .

We fit three CPMs: Model 1 using the original data, Model 2 categorizing all CD4:CD8 ratios below and above , and Model 3 categorizing below and above . In a similar group of patients in a prior study [29], these values of L and U were approximately the 1.5th and 99.5th percentiles, respectively, for Model 2, and the 7th and 95th percentiles for Model 3. In our dataset, there were 19 (0.9%) CD4:CD8 ratios below and 21 (1%) above , and 156 (7.7%) below and 74 (3.7%) above . In our models, age was modeled using restricted cubic splines [30] with four knots at the 0.05, 0.35, 0.65, and 0.95 quantiles. All models were fit using a logit link function; quantile–quantile plots of probability-scale residuals [11] from the models suggested a good model fit (Supplementary Materials Figure S2).

All three models produced nearly identical results. Female sex had regression coefficients , , and in Models 1, 2, and 3, respectively (likelihood ratio in all models), suggesting that the odds of having a higher CD4:CD8 ratio, after controlling for all other variables in the model, were about times higher for females than for males (95% Wald confidence interval 1.44–2.31). The median CD4:CD8 ratio holding all other covariates fixed at their medians/modes was estimated to be 0.67 (0.60–0.74) for females compared with 0.53 (0.51–0.56) for males; all models had the same estimates to two decimal places. The mean CD4:CD8 ratio holding all other covariates constant was estimated to be 0.73 (0.67–0.79) for females and 0.61 (0.58–0.63) for males from Model 1. The mean estimates from Models 2 and 3 were slightly different (e.g., 0.72 for females); however, the mean should not be reported after categorization because the estimates arbitrarily assigned the categorized values to be L and U.
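With a logit link, the exponentiated coefficient is an odds ratio and a Wald interval is symmetric on the log-odds scale. As a rough consistency check, the Python sketch below back-calculates the log-odds estimate, its standard error, and the odds ratio from the reported interval 1.44–2.31. It assumes the interval is an untransformed 95% Wald interval built with the critical value 1.96, and the results are only approximate because the reported limits are rounded; the function name is hypothetical.

```python
import math

def wald_from_ci(lower, upper, z_crit=1.96):
    """Recover the log-odds estimate, its standard error, and the odds
    ratio from a Wald interval reported on the odds-ratio scale."""
    log_lo, log_hi = math.log(lower), math.log(upper)
    beta_hat = 0.5 * (log_lo + log_hi)          # midpoint on the log scale
    se = (log_hi - log_lo) / (2.0 * z_crit)     # half-width divided by z
    return beta_hat, se, math.exp(beta_hat)

beta_hat, se, odds_ratio = wald_from_ci(1.44, 2.31)
print(round(beta_hat, 2), round(se, 2), round(odds_ratio, 2))
```

The recovered odds ratio is the geometric mean of the two interval limits, which is a quick way to sanity-check reported intervals against reported point estimates.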

Older age was strongly associated with a lower CD4:CD8 ratio ( in all models), and the association was non-linear (, , , respectively). Figure 3b–d show the estimated median and mean CD4:CD8 ratio and the probability that CD4:CD8 as functions of age, all derived from the CPMs and holding other covariates fixed at their medians/modes. The median CD4:CD8 ratio and P(CD4:CD8 were not discernibly different between the three models. The mean as a function of age is only shown as derived from Model 1.

5. Discussion

We have established asymptotic properties for CPMs applied to data categorized at the tails. CPMs are flexible semiparametric regression models for continuous outcomes because the outcome transformation is nonparametrically estimated. We proved uniform consistency of the estimated coefficients and the estimated transformation function over the interval [L, U], and showed that their joint asymptotic distribution is a tight Gaussian process. We demonstrated with simulations that these estimators perform well and illustrated their use in practice with a real data example.

Establishing uniform consistency requires a bounded range of the transformation function A, which is achieved by categorizing the outcome variable at both ends. Even if an outcome variable has a bounded support, the transformed values may not be bounded, and categorization will still be needed to establish uniform consistency. The proof of uniform consistency for the estimated regression coefficients also requires a bounded range of A, even though the coefficients and A are separate components of the model.

Although the asymptotic properties for a similar nonparametric maximum likelihood approach in survival analysis have been established [8], the proofs here for CPMs with continuous data are different because we consider the nonparametric maximum likelihood estimate for the transformation in CPMs rather than the cumulative hazard function as in survival analysis. In addition, the transformation is estimated in the proofs directionally and separately for the two tails, which also differs from prior work.

For data without natural lower and upper bounds, the choice of L and U might be challenging in practice. In our CD4:CD8 ratio analysis, we were able to select values of L and U that corresponded with small and large CD4:CD8 percentiles in a prior study, therefore likely ensuring that a small fraction of the data would be modified in our analysis. In general, it is desirable to choose bounds so that only a small fraction of the data are categorized, although it should be reiterated that these bounds should be chosen prior to analysis. Both our simulations and our data example suggest that the results are robust to the specific choices of L and U as long as they do not lead to a high proportion of the data being categorized. For example, in our simulations, the results were nearly identical when categorization varied between 0.2% and 13%; in the data example, results were also nearly identical when categorization varied between 1.9% and 11.4%. Therefore, if one chooses to specify L and U, we suggest selecting them so that approximately 5% or fewer of the observations would be modified at each end.
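The suggested guideline is easy to check once the bounds are fixed. The Python sketch below uses the counts reported for the data example (2024 patients; 19 below and 21 above the Model 2 bounds; 156 below and 74 above the Model 3 bounds) to compute the percentage categorized at each end and compare it with the 5% threshold. The function names are hypothetical.

```python
def tail_percentages(n_total, n_below, n_above):
    """Percentage of observations categorized at the lower and upper end."""
    return 100.0 * n_below / n_total, 100.0 * n_above / n_total

def within_guideline(n_total, n_below, n_above, max_pct_each_end=5.0):
    """True if no more than `max_pct_each_end` percent is categorized
    at either end."""
    below, above = tail_percentages(n_total, n_below, n_above)
    return below <= max_pct_each_end and above <= max_pct_each_end

N = 2024                 # patients in the example analysis
model2 = (19, 21)        # counts below L and above U for Model 2
model3 = (156, 74)       # counts below L and above U for Model 3

for label, (lo, hi) in [("Model 2", model2), ("Model 3", model3)]:
    below, above = tail_percentages(N, lo, hi)
    print(label, round(below, 1), round(above, 1), within_guideline(N, lo, hi))
```

By this check, the Model 3 bounds categorize more than 5% of observations at the lower end, yet the results reported for that model were nearly identical to the others, which is consistent with treating the 5% figure as a guideline rather than a strict requirement.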

Our simulations and data example also suggest that the estimators perform well without categorization, which may support the use of CPMs with the original data in practice. CPMs applied to the original data do not require specifying L and U, and they permit the calculation of conditional means. However, the asymptotic theory for this setting has not been established; hence, there might be some risk to analyses using CPMs on the original data.

Continuous data that are skewed or subject to detection limits are common in applied research. Because of their ability to nonparametrically estimate a proper transformation, their robust rank-based nature, and their desirable properties proven and illustrated in this manuscript, CPMs are often an excellent choice for analyzing these types of data. Extensions of CPMs to more complicated settings, e.g., clustered and longitudinal data, multivariate outcome data, or data with multiple detection limits, are warranted and are areas of ongoing research.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/math11244896/s1. Additional results from simulations and data example are in the Supplementary Materials. The code for simulations and data analysis is available at https://biostat.app.vumc.org/ArchivedAnalyses (accessed on 1 December 2023).

Author Contributions

Conceptualization, C.L. and B.E.S.; Methodology, D.Z.; Validation, Y.T.; Writing—original draft, C.L., D.Z. and B.E.S. All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported in part by United States National Institutes of Health grants R01AI093234, P30AI110527, and K23AI20875.

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Acknowledgments

We thank Jessica Castilho and other Vanderbilt Comprehensive Care Clinic investigators for the use of the CD4:CD8 data.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Proof of Theorem 1

Core steps of the proof: Let be the true value of and be the NPMLE from the CPM applied to modified data. We will first prove that (I) is bounded in with probability one. Since is bounded and increasing in , via the Helly selection theorem [20], for any subsequence, there exists a weakly convergent subsequence. Thus, without confusion, we assume that weakly in and . We will then prove that (II) with probability one, for and . With this result, the consistency is established. Furthermore, since is continuously differentiable, we conclude that converges to uniformly in with probability one.

Proof.

Proof of (I): Given a dataset of i.i.d. observations , the nonparametric log-likelihood for CPM fitted to the modified data is

Here, denotes the empirical measure, i.e., , for any measurable function . Let be the jump size of at . Let .

We first show that a.s. If for all , then . Below, we assume there is a such that . Clearly, should be strictly positive, since, otherwise, . Because of this, we differentiate with respect to and then set it to zero to obtain the following equation:

For any , via condition 2,

According to condition 1, is decreasing when . The left-hand side of (A1) is

For the right-hand side, we use the mean-value theorem on the denominator and then the decreasing property of when to obtain that

where is some value such that . Therefore, we have

and this holds for any between 0 and U and satisfying .

Let be the maximal index i for which and . We sum over all between 0 and U to obtain that

We now show that cannot diverge to ∞. Otherwise, suppose that for some subsequence. From the second half of condition 1, when n is large enough in the subsequence, for any Z,

and, therefore,

in which the last term converges to a constant. We thus have a contradiction. Hence, with probability 1.

We can reverse the order of (change to so that the NPMLE is equivalent to maximizing the likelihood function, but instead of , we consider ). The same arguments as above apply to conclude that with probability 1, or equivalently, with probability 1. □

Proof.

Proof of (II): We first show that is bounded for all . From the proof above, we know uniformly in i for which satisfying . We prove that this is true for any . To do that, we define

First, we note that has a total bounded variation in . In fact, for any ,

where . By choosing a subsequence, we assume that converges weakly to . From the above inequality and taking limits, it is clear that

so is Lipschitz-continuous in . The latter property ensures that uniformly converges to for .

According to Equation (A1), we know

where . Thus,

for any positive constant . This gives

Since has a bounded total variation, belongs to a Glivenko–Cantelli class bounded by and it converges in -norm to . As a result, the right-hand side of (A3) converges to , so we obtain that

where is the marginal density of Y. Let decrease to zero; then, from the monotone convergence theorem, we conclude that

We use (A4) to show that . Otherwise, since is continuous, there exists some such that . However, since is Lipschitz-continuous at , the left-hand side of (A4) is at least larger than if or if for some small constant . The latter integrals are infinite, which is a contradiction. Hence, we conclude that is uniformly bounded away from zero when . Thus, when n is large enough, is larger than a positive constant uniformly for all . From (A1), we thus obtain that

where . In other words, . Using symmetric arguments, we can show that is bounded by a constant for all .

Finally, to establish the consistency in Theorem 1, since is of order , from Equation (A1), we obtain that

Following this expression, we define another step function, denoted by , whose jump size at satisfies that

so

Via the strong law of large numbers and monotonicity of , it is straightforward to show that converges to

uniformly in . The limit can be verified to be the same as . Furthermore, we notice

Since is bounded and increasing and , belongs to a VC-hull of Donsker class. Via the preservation property under the monotone transformation, , , also belongs to a Donsker class. Therefore, the right-hand side of (A5) converges uniformly in to

As a result, , or, equivalently, .

Define

Since and ,

Since we have

That is,

We take limits on both sides. Applying the Glivenko–Cantelli theorem to the leading terms on the left-hand side and noting that converges to zero uniformly, we obtain that

The left-hand side is the negative Kullback–Leibler information for the density with parameter . Thus, the density function with parameter should be the same as the true density. Immediately, we obtain that

with probability one. From condition 2, we conclude that and for . □
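The Kullback–Leibler step in the argument above rests on a standard fact, recalled here in generic notation. The symbols $p_0$ (true density), $p$ (any candidate density), $\mu$ (dominating measure), and $X$ are placeholders for this sketch and are not the paper's notation. By Jensen's inequality applied to the concave logarithm,

```latex
\[
\mathrm{E}_{0}\!\left[\log\frac{p(X)}{p_{0}(X)}\right]
\;\le\;
\log \mathrm{E}_{0}\!\left[\frac{p(X)}{p_{0}(X)}\right]
\;=\;
\log \int_{\{p_{0}>0\}} p \, d\mu
\;\le\; \log 1 \;=\; 0 ,
\]
```

with equality throughout if and only if $p = p_0$ almost everywhere. A limit point whose negative Kullback–Leibler information is nonnegative must therefore have the same density as the truth, which is how the identification of the limit is obtained.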

Appendix B. Proof of Theorem 2

Proof.

Let be the set of the functions over with , where denotes the total variation in . For any with and any , we define the score function along the submodel for with tangent direction and for A with the tangent function :

where

Since maximizes , we have, for any v and h,

The rest of the proof contains the following main steps: we first show that satisfies Equation (A6) (details below), then (A8), and finally (A10), from which the asymptotic distribution of will be derived.

We know from the proof in Appendix A. Thus, if we let

then

and uniformly in . Consequently, we obtain that

and it holds uniformly in and h with and . Equivalently, we have that

For the left-hand side of (A6), it is easy to see that for in a neighborhood of in the metric space , the classes of , and are Lipschitz classes of the P-Donsker classes and , so they are P-Donsker by preservation of the Donsker property. Additionally, the classes of , , and are P-Donsker. As a result, since, via the consistency,

converges in to

Equation (A6) gives that

On the other hand, we note that the first term in the right-hand side of (A7) is zero if replacing by . Thus, the right-hand side of (A7) is equal to

We perform the linearization to the first two terms in the above expression. After some algebra, we obtain that this expression is equivalent to

where the operators , , , and

are defined as

Combining the above results, we obtain from (A7) that

Next, we show that the operator that maps to is invertible. This can be proven as follows: first, is finite-dimensional. Second, since the last term of is invertible and the other terms in map to a continuously differentiable function in , which is a compact operator, is a Fredholm operator of the first kind. Therefore, to prove the invertibility, it suffices to show that is one-to-one. Suppose that . Thus, we have

From the previous derivation, we note that the left-hand side is in fact the negative Fisher information along the submodel . Thus, the score function along this submodel must be zero almost surely. That is,

almost surely. Consider any ; then, we have , so from condition 2. This further shows that and satisfies

for any . This is a homogeneous integral equation and it is clear for any . We thus have established the invertibility of the operator .
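The reduction from invertibility to injectivity used above follows from the Riesz–Fredholm alternative. The following is a generic statement rather than the paper's own: $T$, $A$, $K$, and the Banach space $\mathcal{B}$ are placeholder symbols, with $A$ bounded and boundedly invertible and $K$ compact.

```latex
\[
T = A + K = A\,\bigl(I + A^{-1}K\bigr),
\qquad A^{-1}K \ \text{compact},
\]
\[
\ker\bigl(I + A^{-1}K\bigr) = \{0\}
\;\iff\;
\operatorname{ran}\bigl(I + A^{-1}K\bigr) = \mathcal{B},
\]
\[
\ker T = \{0\}
\;\Longrightarrow\;
T^{-1} = \bigl(I + A^{-1}K\bigr)^{-1} A^{-1}
\ \text{exists and is bounded}.
\]
```

In words, an invertible operator perturbed by a compact one is onto as soon as it is one-to-one, so showing that the homogeneous equation has only the trivial solution is enough.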

Therefore, from (A8), for any and , by choosing as the inverse of the above operator applied to , we obtain that

and this holds uniformly for and . Using (A9), we obtain that

Thus,

This implies that

as a random map on , converges weakly to a mean-zero and tight Gaussian process. Furthermore, by letting and , we conclude that has an influence function given by . Since the latter lies on the score space, it must be the efficient influence function. Hence, the asymptotic variance of achieves the semiparametric efficiency bound. □

References

- Box, G.E.P.; Cox, D.R. An analysis of transformations (with Discussion). J. R. Stat. Soc. Ser. B 1964, 26, 211–252.

- Doksum, K.A. An extension of partial likelihood methods for proportional hazard models to general transformation models. Ann. Statist. 1987, 15, 325–345.

- Cuzick, J. Rank regression. Ann. Statist. 1988, 16, 1369–1389.

- Pettitt, A.N. Inference for the linear model using a likelihood based on ranks. J. R. Statist. Soc. Ser. B 1982, 44, 234–243.

- Kalbfleisch, J.D.; Prentice, R.L. Marginal likelihoods based on Cox’s regression and life model. Biometrika 1973, 60, 267–278.

- Cheng, S.C.; Wei, L.J.; Ying, Z. Analysis of transformation models with censored data. Biometrika 1995, 82, 835–845.

- Chen, K.; Jin, Z.; Ying, Z. Semiparametric analysis of transformation models with censored data. Biometrika 2002, 89, 659–668.

- Zeng, D.; Lin, D.Y. Maximum likelihood estimation in semiparametric regression models with censored data (with Discussion). J. R. Statist. Soc. Ser. B 2007, 69, 507–564.

- Manuguerra, M.; Heller, G.Z. Ordinal regression models for continuous scales. Int. J. Biostat. 2010, 6, 14.

- Hothorn, T.; Möst, L.; Bühlmann, P. Most likely transformations. Scand. J. Stat. 2018, 45, 110–134.

- Liu, Q.; Shepherd, B.E.; Li, C.; Harrell, F.E. Modeling continuous response variables using ordinal regression. Stat. Med. 2017, 36, 4316–4335.

- Spertus, J.A.; Jones, P.G.; Maron, D.J.; O’Brien, S.M.; Reynolds, H.R.; Rosenberg, Y.; Stone, G.W.; Harrell, F.E.; Boden, W.E.; Weintraub, W.S.; et al. Health-status outcomes with invasive or conservative care in coronary disease. N. Engl. J. Med. 2020, 382, 1408–1419.

- Pun, B.T.; Badenes, R.; La Calle, G.H.; Orun, O.M.; Chen, W.; Raman, R.; Simpson, B.-G.K.; Wilson-Linville, S.; Olmedillo, B.H.; de la Cueva, A.V.; et al. Prevalence and risk factors for delirium in critically ill patients with COVID-19 (COVID-D): A multicentre cohort study. Lancet Respir. Med. 2021, 9, 239–250.

- Pasquali, S.K.; Thibault, D.; O’Brien, S.M.; Jacobs, J.P.; Gaynor, J.W.; Romano, J.C.; Gaies, M.; Hill, K.D.; Jacobs, M.L.; Shahian, D.M.; et al. National variation in congenital heart surgery outcomes. Circulation 2020, 142, 1351–1360.

- Williams, Z.J.; Failla, M.D.; Davis, S.L.; Heflin, B.H.; Okitondo, C.D.; Moore, D.J.; Cascio, C.J. Thermal perceptual thresholds are typical in autism spectrum disorder but strongly related to intra-individual response variability. Sci. Rep. 2019, 9, 12595.

- Hatch, L.D.; Scott, T.A.; Slaughter, J.C.; Xu, M.; Smith, A.H.; Stark, A.R.; Patrick, S.W.; Ely, E.W. Outcomes, resource use, and financial costs of unplanned extubations in preterm infants. Pediatrics 2020, 145, e20192819.

- Wang, J.-H.; Wong, R.C.B.; Liu, G.-S. Retinal transcriptome and cellular landscape in relation to the progression of diabetic retinopathy. Investig. Ophthalmol. Vis. Sci. 2022, 63, 26.

- Ioannidis, J.P.A.; Kim, B.Y.S.; Trounson, A. How to design preclinical studies in nanomedicine and cell therapy to maximize the prospects of clinical translation. Nat. Biomed. Eng. 2018, 2, 797–809.

- French, B.; Shotwell, M.S. Regression models for ordinal outcomes. JAMA 2022, 328, 772–773.

- Billingsley, P. Probability and Measure, 3rd ed.; Wiley: New York, NY, USA, 1995.

- Van der Vaart, A.W.; Wellner, J.A. Weak Convergence and Empirical Processes; Springer: New York, NY, USA, 1996.

- Murphy, S.A.; van der Vaart, A.W. On profile likelihood. J. Am. Stat. Assoc. 2000, 95, 449–465.

- Castilho, J.L.; Shepherd, B.E.; Koethe, J.R.; Turner, M.; Bebawy, S.; Logan, J.; Rogers, W.B.; Raffanti, S.; Sterling, T.R. CD4/CD8 ratio, age, and risk of serious non-communicable diseases in HIV-infected adults on antiretroviral therapy. AIDS 2016, 30, 899–908.

- Sauter, R.; Huang, R.; Ledergerber, B.; Battegay, M.; Bernasconi, E.; Cavassini, M.; Furrer, H.; Hoffman, M.; Rougemont, M.; Günthard, H.F.; et al. CD4/CD8 ratio and CD8 counts predict CD4 response in HIV-1-infected drug naive and in patients on cART. Medicine 2016, 95, e5094.

- Petoumenos, K.; Choi, J.Y.; Hoy, J.; Kiertiburanakul, S.; Ng, O.T.; Boyd, M.; Rajasuriar, R.; Law, M. CD4:CD8 ratio comparison between cohorts of HIV-positive Asians and Caucasians upon commencement of antiretroviral therapy. Antivir. Ther. 2017, 22, 659–668.

- Serrano-Villar, S.; Sainz, T.; Lee, S.A.; Hunt, P.W.; Sinclair, E.; Shacklett, B.L.; Ferre, A.L.; Hayes, T.L.; Somsouk, M.; Hsue, P.Y.; et al. HIV-infected individuals with low CD4/CD8 ratio despite effective antiretroviral therapy exhibit altered T cell subsets, heightened CD8+ T cell activation, and increased risk of non-AIDS morbidity and mortality. PLoS Pathog. 2014, 10, e1004078.

- Silva, C.; Peder, L.; Silva, E.; Previdelli, I.; Pereira, O.; Teixeira, J.; Bertolini, D. Impact of HBV and HCV coinfection on CD4 cells among HIV-infected patients: A longitudinal retrospective study. J. Infect. Dev. Ctries. 2018, 12, 1009–1018.

- Gras, L.; May, M.; Ryder, L.P.; Trickey, A.; Helleberg, M.; Obel, N.; Thiebaut, R.; Guest, J.; Gill, J.; Crane, H.; et al. Determinants of restoration of CD4 and CD8 cell counts and their ratio in HIV-1-positive individuals with sustained virological suppression on antiretroviral therapy. J. Acquir. Immune Defic. Syndr. 2019, 80, 292–300.

- Serrano-Villar, S.; Perez-Elias, M.J.; Dronda, F.; Casado, J.L.; Moreno, A.; Royuela, A.; Perez-Molina, J.A.; Sainz, T.; Navas, E.; Hermida, J.M.; et al. Increased risk of serious non-AIDS-related events in HIV-infected subjects on antiretroviral therapy associated with a low CD4/CD8 ratio. PLoS ONE 2014, 9, e85798.

- Harrell, F.E., Jr. Regression Modeling Strategies, 2nd ed.; Springer: Cham, Switzerland, 2015.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).