Abstract

In biomedical research, identifying genes associated with diseases is of paramount importance. However, among the multitude of genes, only a small fraction are related to a specific disease. Gene selection and estimation are therefore necessary, and the accelerated failure time model is often used to address such problems. This article presents a method for structure identification and parameter estimation based on a non-parametric additive accelerated failure time model for censored data. Regularized estimation and variable selection are achieved using the Group MCP penalty. The non-parametric components of the model are approximated using B-spline basis functions, and a group coordinate descent algorithm is employed to solve the model. This approach effectively identifies both linear and nonlinear factors in the model. Under regularity conditions, the Group MCP penalized estimator exhibits consistency and oracle properties, meaning that, with probability approaching 1, the selected variable set asymptotically includes the actual predictive factors. Numerical simulations and a lung cancer data analysis demonstrate that the Group MCP method outperforms the Group Lasso method in terms of predictive performance, with the proposed algorithm also converging faster.

Keywords:

non-parametric accelerated failure time model; structure identification; B-splines; group MCP penalty; oracle properties; group coordinate descent algorithm

MSC:

62H12; 62J12

1. Introduction

In both economic and biological research, it is a common scenario that many theories do not prescribe specific functional forms for the relationships between predictors and outcomes. For example, in biomedical studies, the influence of predictors on survival time can exhibit nonlinearity. Attempting to fit a linear model in such cases can result in biased estimates or produce misleading results. However, the functional shape of a non-parametric model is determined by the available data, eliminating the need for a linear functional form to describe the influence of a covariate. Additionally, non-parametric models offer greater flexibility in fitting data compared to parametric models. This paper delves into the in-depth study of the non-parametric accelerated failure time additive regression (NP-AFT-AR) model:

$$T_i = \mu + \sum_{j \in S_1} \beta_j X_{ij} + \sum_{j \in S_2} f_j(X_{ij}) + \varepsilon_i, \quad i = 1, \ldots, n, \qquad (1)$$

where $(T_i, X_{i1}, \ldots, X_{ip})$, $i = 1, \ldots, n$, is a random sample, $T_i$ is the logarithm of the response variable, that is, the logarithm of the survival time, $X_i = (X_{i1}, \ldots, X_{ip})^\top$ is a vector of covariates, $S_1$ and $S_2$ are mutually exclusive and complementary subsets of $\{1, \ldots, p\}$, $\{\beta_j : j \in S_1\}$ are the regression coefficients of the covariates with indices in $S_1$, and $\{f_j : j \in S_2\}$ are unknown functions. The covariates in $S_1$ have a linear relationship with the mean response, whereas the connection with the other covariates is not determined by a finite number of parameters. Parametric models require explicit assumptions, and they tend to overfit when the number of model parameters is excessive. Some of these models also rest on the assumption of linearity, making them inadequate for capturing complex nonlinear relationships. However, parametric models offer clear interpretability through explicit parameters, as well as efficient and accurate parameter estimation. Hence, this paper aims to leverage these characteristics and explores a hybrid approach that combines parametric and non-parametric models to enhance the adaptability and performance of the model. When the emphasis is on the relationship between $T_i$ and the covariates $\{X_{ij} : j \in S_1\}$, whose effects can be approximated by linear functions, model (1) provides enhanced interpretability compared to a purely non-parametric additive model. The random error term $\varepsilon_i$ has mean zero and finite variance $\sigma^2$. Assuming that certain components are zero, our main objective in this research is to distinguish the nonzero components from the zero components and to estimate the nonzero components accurately. A secondary objective is to elucidate the functional forms of the nonzero components, thereby suggesting a more concise model. The techniques we have established can readily be extended to the partly linear additive AFT regression model, particularly when certain covariates are discrete and not amenable to modeling with smoothing techniques such as B-splines. We use the lung cancer data example to demonstrate this extension.

The structure identification method is effective in distinguishing linear variables from nonlinear ones, and numerous scholars have contributed to relevant research methods. Tibshirani [1] combined the least squares estimation technique introduced by Breiman [2] with minimizing the residual sum of squares under constraints, transforming the solution into a continuous optimization process. This approach is known as the Lasso method, where penalties are applied to select variables, and estimated coefficients are continuously shrunk toward zero to automatically identify important explanatory variables. However, researchers such as Zhao and Yu [3] and Zou [4] discovered that the Lasso method may not consistently select the correct model, and the estimated regression coefficients do not exhibit asymptotic normality. To address this limitation, Fan and Li [5] proposed the SCAD (smoothly clipped absolute deviation) penalty, which substitutes the penalty in Lasso with a quadratic spline penalty function to reduce bias. In the context of linear models, the SCAD method can consistently identify the true model and possesses oracle properties. Nonetheless, the non-convex nature of the SCAD penalty makes it challenging to optimize in practical applications, leading to numerical instability during the solution process. Zhang [6] introduced the minimax concave penalty (MCP) and developed the MCP penalized likelihood procedure as an alternative approach. Like SCAD, the MCP method replaces the penalty in Lasso with a quadratic spline penalty function to reduce bias. MCP selects the correct model with probability tending to 1 and provides corresponding estimates with oracle properties.

Heller [7] employed the weighted kernel smooth rank regression method to estimate the unknown parameters in the AFT model, particularly in the case of censored data. Gu [8] introduced an empirical model selection approach for non-parametric components based on the Kullback–Leibler geometric structure. Schumaker [9] utilized the Lasso iterative method for selecting parametric covariates, while non-parametric components were estimated using the sieve method. Johnson [10] extended the rank-based Lasso-type estimation so that it encompasses the partially linear AFT model. Huang and Ma [11] applied the AFT model to analyze the relationship between gene expression and survival time, using the bridge penalty method for individual-level regularized estimation and gene selection. Long et al. [12] established a risk prediction score through regularized rank estimation within the partially linear AFT model. Wei et al. [13] explored the extension of subgroup identification methods based on the Adaptive Elastic Net and the AFT model. Wang and Gao [14] conducted empirical likelihood inference for the AFT model under right-censored data. Cai et al. [15] compared parametric and semiparametric AFT models in clustered survival data. Liu et al. [16] introduced a new semiparametric approach that allows for the simultaneous selection of important variables, model structure identification, and covariate effect estimation within the AFT model.

Researchers used different methods for variable selection and parameter estimation. For instance, Fan and Li [5] employed the Newton algorithm to estimate the penalty likelihood function. Cui et al. [17] introduced the concept of penalty regression spline approximation and group structure identification within the additive model. However, their approach faced computational instability issues as they relied on truncated power series to approximate non-parametric truncation. Huang and Ma [11] proposed a two-step method where, with a fixed number of predictors, nonzero variables are simultaneously selected and estimated in the additive model, using Group Lasso in the first stage and Adaptive Group Lasso in the second stage. Leng and Ma [18] used the COSSO penalty to handle non-parametric covariate effects in the AFT model. However, due to the non-smooth nature of the penalty function at the origin, the computation can be challenging, and these methods require a significant amount of time to calculate the inverse matrix of the Hessian matrix, especially when dealing with high-dimensional covariates. Therefore, in this paper, the group coordinate descent (GCD) algorithm is employed to approximate and estimate the parameters in the non-parametric additive accelerated failure time model. GCD capitalizes on the assumption of model sparsity, and the algorithm is simple and operates at a fast pace. The GCD algorithm closely resembles the standard Newton–Raphson algorithm, but each iteration involves solving a weighted least squares problem with a penalty function.

Under the assumption that the dimensionality of covariates is allowed to diverge, this paper rigorously proves that the Group MCP penalty estimator in the non-parametric accelerated failure time model exhibits consistency and oracle properties. The generalized cross-validation criterion is inconsistent in model selection as the sample size tends to infinity, meaning that it may select irrelevant variables, whereas the Bayesian Information Criterion (BIC) does not suffer from this issue. BIC, as shown by Golub et al. [19], has the desirable property of selecting the true model with probability tending to 1. Therefore, in the context of structure identification in the non-parametric accelerated failure time model, this study opts for tuning based on the BIC criterion.

The remaining sections of the paper are organized as follows. In Section 2, we describe the construction of the AFT model with penalty estimation and variable selection based on Group MCP. Section 3 introduces the algorithm and parameter tuning for the effective identification of both linear and nonlinear factors in the model. In Section 4, we provide proof of the Group MCP’s selection consistency property, where the selected variable set asymptotically tends to include the actual predictive factors with a probability approaching 1. Section 5 primarily focuses on numerical simulations and empirical analysis, demonstrating the method’s strong predictive performance. We also apply the method to the analysis of lung cancer data. Section 6 provides a brief summary of the corresponding conclusions.

2. Penalized Estimation and Variable Selection

2.1. Method

Let $C_i$ be the natural logarithm of the censoring time, $T_i$ the natural logarithm of the survival time, and $\delta_i = 1\{T_i \le C_i\}$ the event indicator, which takes the value 1 if the event time is observed and 0 if it is censored. Let $Y_i = \min(T_i, C_i)$ be the logarithm of the minimum of the survival time and the censoring time. The observed data are assumed to be independent and identically distributed (i.i.d.) samples of the form $(Y_i, \delta_i, X_i)$, $i = 1, \ldots, n$. Let $Y_{(1)} \le \cdots \le Y_{(n)}$ be the order statistics of the $Y_i$, and let $\delta_{(i)}$ and $X_{(i)}$ be the respective censoring indicators and covariates. $F$ represents the distribution of $T$, and $\hat{F}_n$ is its Kaplan–Meier estimator [20], $\hat{F}_n(y) = \sum_{i=1}^{n} w_{ni} 1\{Y_{(i)} \le y\}$, where the $w_{ni}$ are the Kaplan–Meier weights calculated by $w_{n1} = \delta_{(1)}/n$ and $w_{ni} = \frac{\delta_{(i)}}{n-i+1} \prod_{j=1}^{i-1} \left(\frac{n-j}{n-j+1}\right)^{\delta_{(j)}}$, $i = 2, \ldots, n$. After this processing, in which $T_i$ is replaced by the observed $Y_i$ according to whether $T_i \le C_i$ holds, and with the other conditions unchanged, Formula (1) can be expressed as

The following introduces the method of coefficient estimation in Equation (2), assuming that each group is orthonormal, i.e., , and . is the least squares estimate of , where is the unknown parameter associated with marker effects by [21]. Because of , the least squares objective function with penalty term can be expressed as . The linear part of Formula (2) is expressed as a function and then is brought into the additive non-parametric regression model to obtain:

To ensure unique identification of the , we assume that . If some of the are linear, then Equation (3) transforms into the partially linear additive model (2). The problem shifts to determining the linear or nonlinear forms. Therefore, we decompose into a linear part and a non-parametric part . Consider a truncated series expansion for approximating , that is . Where is a set of basis functions and at certain rate as . If , then has the linear form. Therefore, based on this equation, the current task is to ascertain which groups of are zero. Let and , where . Define the penalized least squares criterion.

where is the penalty function based on the penalty parameter and regularization parameter . represents the intercept. is the norm with respect to the positive definite matrix . However, it is important to choose a suitable choice of to facilitate the computation. Let and ; and , then the weight in Formula (4) can not be expressed, and Formula (4) can be transformed into

We apply Group MCP penalty to the penalty term, i.e.,

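$$\rho_\gamma(t; \lambda) = \lambda \int_0^t \left(1 - \frac{x}{\gamma\lambda}\right)_+ dx, \quad t \ge 0,$$

where $\gamma$ controls the concavity of $\rho_\gamma$ and $\lambda$ is the penalty parameter. Here, $x_+$ denotes the nonnegative part of $x$, that is, $x_+ = x 1\{x \ge 0\}$. We require $\lambda \ge 0$ and $\gamma > 1$. Taking the derivative with respect to $t$ yields $\dot{\rho}_\gamma(t; \lambda) = \lambda (1 - t/(\gamma\lambda))_+$. The group MCP thus begins by penalizing at the same rate as the group Lasso and gradually relaxes this penalization until, when $t > \gamma\lambda$, the rate of penalization drops to 0. This approach offers a continuum of penalties, with the $\ell_1$ penalty at $\gamma = \infty$ and the hard-thresholding penalty as $\gamma \to 1+$. In particular, it includes the Lasso penalty as a special case when $\gamma = \infty$.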
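As a small numerical illustration of the MCP and its derivative, the following sketch evaluates the standard closed form of the penalty, $\lambda t - t^2/(2\gamma)$ for $t \le \gamma\lambda$ and $\gamma\lambda^2/2$ otherwise. The function names `mcp_penalty` and `mcp_derivative` are hypothetical and chosen only for this example, and the code assumes $\lambda > 0$ and $\gamma > 1$.

```python
import numpy as np

def mcp_penalty(t, lam, gamma):
    """MCP value at |t|: quadratic spline up to gamma*lam, then constant.
    Assumes lam > 0 and gamma > 1 (hypothetical helper for illustration)."""
    t = np.abs(np.asarray(t, dtype=float))
    inside = lam * t - t**2 / (2.0 * gamma)   # region t <= gamma * lam
    outside = 0.5 * gamma * lam**2            # region t >  gamma * lam
    return np.where(t <= gamma * lam, inside, outside)

def mcp_derivative(t, lam, gamma):
    """Penalization rate lam * (1 - t / (gamma * lam))_+ for t >= 0."""
    t = np.abs(np.asarray(t, dtype=float))
    return lam * np.maximum(1.0 - t / (gamma * lam), 0.0)

if __name__ == "__main__":
    grid = np.array([0.0, 0.5, 1.5, 3.0, 5.0])
    print(mcp_penalty(grid, lam=1.0, gamma=3.0))
    print(mcp_derivative(grid, lam=1.0, gamma=3.0))
```

The derivative starts at $\lambda$ (the Lasso rate) at the origin and reaches zero at $t = \gamma\lambda$, which is why large coefficients are left essentially unpenalized.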

The penalty in Equation (4) combines the penalty function with a weighted norm of . serves as a penalty for individual variable selection, and when applied to the norm of , it selects the coefficients in as a group. This approach is favorable since the nonlinear components are captured by the coefficients in as groups. We term the penalty function in Equation (4) as the group minimax concave penalty or simply the group MCP. The penalized least squares estimator is defined by , subject to the constraints. These centering constraints are sample analogs of the identifying restriction . , consists of the centered basis functions at the th observation of the th covariate. Let , where is the design matrix corresponding to the th expansion. Let and . We can write

Here, we excluded from the arguments of , as the intercept is zero as a result of centering. Therefore, the constrained optimization problem transforms into an unconstrained one.
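The Kaplan–Meier weights that enter the weighted least squares criterion of this section can be computed directly from the ordered observations. The sketch below assumes the standard Stute-type form of the weights and a tie-breaking rule that places events before censored observations; the function name `kaplan_meier_weights` is hypothetical.

```python
import numpy as np

def kaplan_meier_weights(y, delta):
    """Return (order, w): the sorting permutation of y and the Kaplan-Meier
    weights of the ordered observations (assumed Stute-type form)."""
    y = np.asarray(y, dtype=float)
    delta = np.asarray(delta, dtype=float)
    n = len(y)
    # Sort by y; on ties, put events (delta = 1) before censored points.
    order = np.lexsort((1.0 - delta, y))
    d = delta[order]
    w = np.zeros(n)
    running = 1.0  # product of ((n - j) / (n - j + 1)) ** delta_(j) for j < i
    for k in range(n):  # k = i - 1 is the 0-based rank
        w[k] = d[k] / (n - k) * running
        running *= ((n - k - 1) / (n - k)) ** d[k]
    return order, w

if __name__ == "__main__":
    order, w = kaplan_meier_weights([1.0, 2.0, 3.0], [1, 0, 1])
    print(order, w)
```

Censored observations receive weight zero, and their mass is redistributed to later uncensored observations. In a weighted least squares fit, one common device is to scale each ordered row of the response and design matrix by the square root of its weight, so that an unweighted solver can be reused.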

2.2. Penalized Profile Least Squares

The penalized profile least squares approach is used to calculate . The that minimizes inherently satisfies for any given , resulting in . Define , represents the projection matrix onto the column space of . Consequently, the profile objective function of becomes:

We use , this choice of standardizes the covariate matrices associated with and leads to an explicit expression for computation in the group coordinate algorithm described below. For any given , the penalized profile least squares estimator of is defined by . We compute using the group coordinate descent algorithm. The set of covariates estimated to have a linear form in the regression model (1) is denoted as . Then, we obtain . Denote and . We have . Then, the estimator of the coefficients of the linear components is . Then, is the estimator of the coefficient vector of the linear components. The coefficients of the linear and nonlinear parts can be identified and estimated, and then the structure identification of the non-parametric can be added to the AFT model.

3. Computation

3.1. Computation Algorithm

Assuming that there is a standard between each group, i.e., and . Let is the least squares estimate of . We use to calculate the solution of group Lasso with the group coordinate descent algorithm, and the expression of group Lasso is . When , the group MCP of the quadratic norm can be expressed as . When , , for and , . . So we can use to express hard threshold function of group MCP and when or can to be soft threshold function.

Group coordinate descent algorithm is used to compute in this paper. GCD algorithm is a natural extension of the standard coordinate descent algorithm [22,23] commonly used in optimization problems involving convex penalties like the Lasso. GCD algorithm optimizes the target function one group at a time, cycling through all groups iteratively until convergence is achieved. It is particularly well-suited for computing because it offers a straightforward closed-form expression for a single-group model, as presented in (8) below. for an upper triangular matrix via the Cholesky decomposition. Let . Simple algebra shows that . Note that. Let . Denote . Let . when 1, the value minimizing with respect to is

In particular, when , we have , which is the group Lasso estimate for a single-group model, GCD algorithm can be implemented as follows based on the above expressions. Let the group coefficient is given. We want to minimize with respect to . Define . Denote and . Let denote the minimizer of . When , we have . Equation (8) is used to iterate through one component at a time for any given . The initial value is . The proposed GCD algorithm is as follows: Initialize the residual vector . Let . For carry out the following calculation until convergence. For , repeat the following steps:

- (1) Calculate
- (2) Update .
- (3) Update and .

The final step ensures that holds the current values of the residuals. While the objective function may not necessarily be convex, it exhibits convexity concerning an individual group when the coefficients of all other groups are fixed.
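The cycle of computing a group-wise statistic, thresholding it, and refreshing the residuals can be sketched as follows. This is a minimal illustration rather than the authors' implementation: it assumes an unweighted least squares loss $\frac{1}{2n}\|y - \sum_j Z_j \theta_j\|^2$, groups standardized so that $Z_j^\top Z_j / n = I$, and the standard single-group solution of the group MCP for $\gamma > 1$. All function names are hypothetical.

```python
import numpy as np

def group_soft_threshold(z, lam):
    """Group Lasso solution for one orthonormal group: (1 - lam/||z||)_+ z."""
    norm = np.linalg.norm(z)
    if norm == 0.0:
        return np.zeros_like(z)
    return max(1.0 - lam / norm, 0.0) * z

def group_mcp_threshold(z, lam, gamma):
    """Group MCP solution for one orthonormal group (requires gamma > 1)."""
    if np.linalg.norm(z) <= gamma * lam:
        return gamma / (gamma - 1.0) * group_soft_threshold(z, lam)
    return z.copy()  # large groups are left unshrunk

def group_coordinate_descent(Z_groups, y, lam, gamma, max_iter=200, tol=1e-8):
    """Cycle through the groups until the coefficients stop changing."""
    y = np.asarray(y, dtype=float)
    n = len(y)
    theta = [np.zeros(Z.shape[1]) for Z in Z_groups]
    r = y.copy()  # residual vector for the all-zero starting value
    for _ in range(max_iter):
        max_change = 0.0
        for j, Z in enumerate(Z_groups):
            z = Z.T @ r / n + theta[j]                # (1) group statistic
            new = group_mcp_threshold(z, lam, gamma)  # (2) update group j
            r -= Z @ (new - theta[j])                 # (3) refresh residuals
            max_change = max(max_change, np.max(np.abs(new - theta[j])))
            theta[j] = new
        if max_change < tol:
            break
    return theta
```

Because each group update has a closed form, no matrix inversion is needed inside the loop, which is the main reason the approach scales to many groups.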

3.2. Tuning Parameter Selection

Criteria such as AIC, BIC, and Generalized Cross-Validation (GCV) are widely used for tuning parameter selection. Let be the likelihood function, and represents the norm of the vector, is a penalty function related to the parameter $\lambda$. The penalty method of structure identification mainly determines the important variables by finding the extreme value of the objective function . Tibshirani [20] used the $\ell_1$ norm as the penalty function to obtain the Lasso. The AIC criterion addresses the over-fitting problem in which the value of the model likelihood function increases with the number of parameters, where $\mathrm{AIC} = 2k - 2\ln L$. The BIC criterion penalizes the number of parameters more strongly, where $\mathrm{BIC} = k \ln n - 2\ln L$. Here, $L$ is the maximum value of the likelihood function, $k$ is the number of parameters in the model, and $n$ is the sample size. When $\lambda \to 0$, the estimate is close to the ordinary least squares estimate; when $\lambda \to \infty$, almost only the penalty term remains in the selection criterion. We use the faster BIC method to select the parameters of each concave penalty model. The expression of the BIC criterion is . RSS is the sum of squared residuals, is the number of variables selected for a given ($\lambda$, $\gamma$). $\gamma$ is selected from an increasing sequence with multiple nodes, and $\lambda$ is then selected from a sequence of length 100 for any given $\gamma$. The maximum value of the sequence is , where is a dimensional matrix about the covariate , the minimum is .
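The grid search over the tuning parameters can be sketched as below. Two ingredients are assumptions made only for this illustration: the criterion $\log(\mathrm{RSS}/n) + \mathrm{df} \cdot \log(n)/n$ is one common RSS-based form of BIC and may differ from the expression used in this paper, and the ratio between the smallest and largest penalty value (`ratio=0.01`) is hypothetical. The names `lambda_grid`, `bic_score`, and `select_by_bic` are likewise hypothetical.

```python
import numpy as np

def lambda_grid(lam_max, ratio=0.01, length=100):
    """Log-spaced decreasing grid from lam_max down to ratio * lam_max."""
    return np.exp(np.linspace(np.log(lam_max), np.log(ratio * lam_max), length))

def bic_score(rss, n, df):
    """One common RSS-based BIC form (an assumption, see the lead-in)."""
    return np.log(rss / n) + df * np.log(n) / n

def select_by_bic(fits, n):
    """fits: list of (gamma, lam, rss, df) tuples, one per fitted model.
    Returns the tuple with the smallest BIC together with all scores."""
    scores = [bic_score(rss, n, df) for (_, _, rss, df) in fits]
    best = int(np.argmin(scores))
    return fits[best], scores

if __name__ == "__main__":
    fits = [(3.0, 0.5, 120.0, 1), (3.0, 0.1, 60.0, 3), (3.0, 0.01, 59.0, 10)]
    print(select_by_bic(fits, n=100)[0])
```

In practice, each tuple in `fits` would come from running the fitting algorithm at one pair of tuning parameters, with `df` taken as the number of selected variables.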

4. Theoretical Properties of Group MCP

Let denote the cardinality of any set , and , where is covariance matrix, . Let be the true regression coefficient and be the set of nonzero regression coefficients, Represents the number of elements in the set , and satisfies the following conditions:

(C1) and there are constants and such that the density distribution function of satisfies on [a,b] for .

(C2) is independent and identically distributed (i.i.d), and the error term is i.i.d in and exists , which is constant for all , .

(C3) The error term () is independent of the Kaplan–Meier weight (), and there is a constant satisfying that for any , there is , that is, the covariate is bounded.

(C4) The covariate matrix satisfies the SRC condition, exists , converges to 1 with probability and satisfies .

Condition (C1) ensures that the model is sparse even when the number of covariates is large; that is, the number of covariates with nonzero coefficients can be controlled to a small number. Condition (C2) ensures that the tail probability assumption of the model remains valid under high-dimensional linear regression. According to condition (C3), the sub-Gaussian nature of the model is still guaranteed even if the data are censored. Condition (C4) ensures that the model meets the SRC condition, that is, that the characteristic roots of the matrix are always between and , and that any model with a dimension smaller than can be identified. Here, represents the estimated coefficient, and represents the set of all nonzero coefficients. Denote by the regression component of the true value; is the B-spline basis function expansion of , and , . Let be the cardinality of , which is the number of linear components in the AFT regression model. Define

This represents the oracle estimator of under the assumption that the identity of the linear components is known. It’s worth noting that the oracle estimator cannot be computed as is unknown. Nevertheless, we employ it as the reference point for evaluating our proposed estimator. Similar to the actual estimates outlined in Section 2.2, let’s define the oracle estimators as . Denote . Let .The oracle estimator of the coefficients of the linear components is . Without loss of generality, suppose that . Write , where is a -dimensional vector of zeros and. represents the minimal coefficient norm in the B-spline expansions of the nonlinear components. Consider a non-negative integer and take , such that . Now, let’s define as the set of functions on , where the derivative exists and adheres to a Lipschitz condition of order : for .

Theorem 1.

Suppose that , is less than the smallest eigenvalue of , and .

Then under (C1–C3), , Consequently, and . Hence, given the conditions specified in Theorem 1, the proposed estimator can effectively differentiate between linear and nonlinear components with a high probability of accuracy. Additionally, the proposed estimator exhibits the oracle property, implying that it aligns with the oracle estimator’s performance, assuming the knowledge of linear and nonlinear component identities, except for events with vanishingly low probabilities.

Theorem 2.

Suppose (C1)–(C3) hold, we have

Theorem 2 provides the convergence rate of the proposed estimator within the non-parametric additive model, encompassing partially linear models as specific instances. Specifically, if we assume the second-order differentiability (d = 2) of each component and let and tend toward small , then , representing the optimal convergence rate in non-parametric regression. We will now explore the asymptotic distribution of . Denote . Each element of is a -vector of square-integrable functions with mean zero. Let the sumspace . The projection of the centered covariate vector onto the sumspace is defined to be the with that minimizes . For , denote . Therefore, the orthogonal projection onto is well-defined and unique. Additionally, each individual component is also well-defined and unique.

Theorem 3.

Suppose that the conditions stated in Theorem 1 and (C4) hold, and that A is non-singular. Then , where and .

Theorem 3 provides sufficient conditions under which the proposed estimator of the linear components in the model is asymptotically normal with the same limit normal distribution as the oracle estimator . Suppose that the first addable parts are important functions, and the remaining are non-important functions. Let be the set of non-important functions. Let , , for any , , , represents the cardinality of set A and .

5. Numerical Simulation and Empirical Analysis

5.1. Numerical Simulation

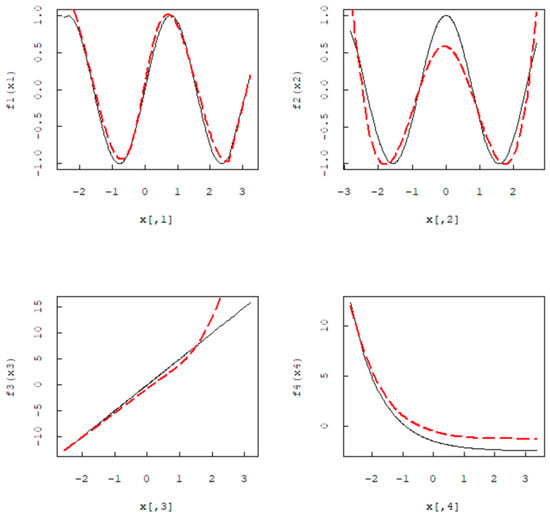

Simulation is employed to assess the performance of the group MCP method in finite samples. Two examples are included in the simulation. For each of these simulated models, we consider two sample sizes (n = 100, 200) and conduct a total of 100 replications. We examine the following four functions defined on , . In the implementation, we utilize B-splines with seven basis functions to approximate each function.
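To make the B-spline expansion with seven basis functions concrete, the sketch below builds a clamped cubic basis with three equally spaced interior knots using the Cox–de Boor recursion and then centers the columns, mirroring the centering constraint of Section 2.1. The interval $[0, 1]$, the cubic degree, and the knot placement are assumptions made for this example, and the function names are hypothetical.

```python
import numpy as np

def bspline_basis(x, n_basis=7, degree=3, lo=0.0, hi=1.0):
    """Evaluate a clamped B-spline basis at x via the Cox-de Boor recursion."""
    x = np.asarray(x, dtype=float)
    n_interior = n_basis - degree - 1
    interior = np.linspace(lo, hi, n_interior + 2)[1:-1]
    t = np.concatenate([np.repeat(lo, degree + 1), interior,
                        np.repeat(hi, degree + 1)])
    # Degree-0 indicators on half-open knot intervals.
    B = np.zeros((len(x), len(t) - 1))
    for i in range(len(t) - 1):
        B[:, i] = (t[i] <= x) & (x < t[i + 1])
    # Assign the right endpoint to the last non-empty interval.
    B[x == hi, len(t) - degree - 2] = 1.0
    for d in range(1, degree + 1):
        Bn = np.zeros((len(x), len(t) - 1 - d))
        for i in range(len(t) - 1 - d):
            left = t[i + d] - t[i]
            right = t[i + d + 1] - t[i + 1]
            if left > 0:
                Bn[:, i] += (x - t[i]) / left * B[:, i]
            if right > 0:
                Bn[:, i] += (t[i + d + 1] - x) / right * B[:, i + 1]
        B = Bn
    return B

def centered_basis(x, **kwargs):
    """Subtract column means so each basis column sums to zero over the sample."""
    B = bspline_basis(x, **kwargs)
    return B - B.mean(axis=0)

if __name__ == "__main__":
    x = np.linspace(0.0, 1.0, 11)
    print(bspline_basis(x).shape)
```

Each covariate contributes one such block of columns, and the coefficients of a block are selected or removed together by the group penalty.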

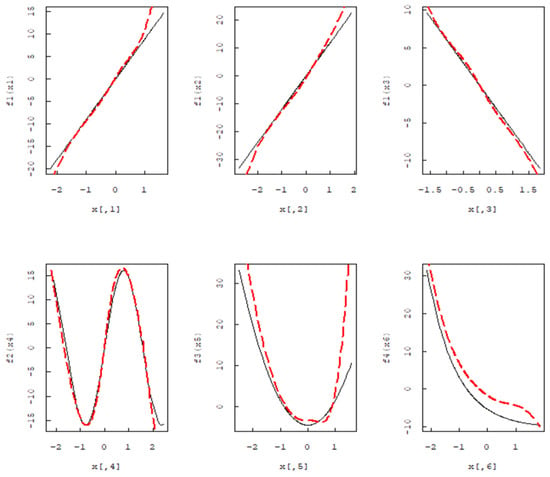

Figure 1 is based on n = 200; the black solid line is the actual function, and the red dashed line is the Group MCP estimate. It can be seen from Figure 1 that when the Group MCP method is used with the B-spline expansion, the estimated function fits the real function well. In addition, we do not consider the intercept term of the model, independent and identically distributed in , some functions as follows: . Let q = 6. Consider the model . In this model, the first three variables demonstrate a linear effect, while the last three variables exhibit a nonlinear effect. Figure 2, also based on n = 200 and drawn with the same line conventions, demonstrates that for the non-parametric additive accelerated failure time model, the non-parametric component estimates fit the true functions well after B-spline estimation.

Figure 1. Simulation of one linear and three nonlinear B-spline estimates.

Figure 2. Simulation of three linear and three nonlinear B-spline estimates.

Table 1 displays simulation results based on 1000 replications. The columns provide the following information: the average number of selected nonlinear components (NL), the average model error (ER), the percentage of occasions on which the correct nonlinear components are included in the selected model (IN%), the percentage of occasions on which exactly the correct nonlinear components are chosen in the final model (CS%), and the computation time in minutes (Time), which is reported to compare the computational efficiency of Group Lasso and Group MCP. The standard errors corresponding to these values are enclosed in parentheses. The Group MCP penalty outperforms the Group Lasso in terms of both IN% and CS%, and its computational efficiency also surpasses that of the Group Lasso. As the sample size increases, both methods improve in terms of including all the nonlinear components (IN%) and selecting exactly the correct model (CS%). This improvement is expected, as larger sample sizes provide more information about the underlying model. Table 2 shows the number of times each component is estimated as a nonlinear function; it indicates that the Group MCP method distinguishes between linear and nonlinear functions more accurately than the Group Lasso. Additionally, the Group MCP penalty results in smaller mean squared errors, indicating more accurate estimation. Overall, the simulations demonstrate that the proposed approach using the Group MCP penalty is effective in distinguishing between linear and nonlinear components, thereby enhancing model selection and estimation accuracy.

Table 1. The performance of Group Lasso and Group MCP.

Table 2. Mean square error of important functions.

5.2. Lung Cancer Data Example

This study is based on survival analysis using the survival time data of 442 lung cancer patients and the gene expression data of 22,283 genes extracted from tumor samples. These data are available from the official website of the National Cancer Institute (http://cancergenome.nih.gov/) (accessed on 12 November 2023). In the original data, a two-column matrix denoted as T represents the survival data. The first column contains survival time in months, while the second column serves as an indicator function where 1 represents the state of death, and 0 represents the state of survival. The measured gene expression data are represented as X, with 22,283 gene expressions. The objective of this study is to identify covariates with nonlinear effects on survival time.

Due to the high dimensionality of the original data (p = 22,283, n = 442), it is necessary to reduce the data from high-dimensional to low-dimensional. For each gene, the null hypothesis is that the correlation coefficient between the independent variable (gene expression) and the dependent variable (survival time) is equal to 0, and the alternative hypothesis is that this correlation coefficient is not equal to 0. An R program is used to calculate the p-value for the correlation coefficient between each gene expression and survival time. When the p-value is less than the critical value, the null hypothesis is rejected in favor of the alternative hypothesis, indicating a significant correlation between the independent variable and the dependent variable. A smaller p-value provides stronger evidence of an association between gene expression and survival time. In this study, the p-values of the independent variables are computed and sorted in ascending order, and the 50 independent variables with the smallest p-values are selected as input variables. The remaining gene expressions are discarded, achieving an initial dimensionality reduction. As a result, the original data are transformed into lower-dimensional data (p = 50, n = 442), and covariates with nonlinear effects on survival time are then identified.
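The screening step described above can be sketched in a few lines. The paper reports using an R program; the Python version below is only an illustration under two assumptions: the marginal test is the usual two-sided test for a Pearson correlation, and censoring is ignored at this stage, as in the description. For a fixed sample size, the p-value of that test decreases as the absolute correlation increases, so ranking by the largest absolute correlation gives the same ordering as ranking by the smallest p-value. The function name `screen_by_correlation` is hypothetical.

```python
import numpy as np

def screen_by_correlation(X, y, top_k=50):
    """Return indices of the top_k columns of X with the largest absolute
    Pearson correlation with y, together with all correlations."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    Xc = X - X.mean(axis=0)
    yc = y - y.mean()
    denom = np.sqrt((Xc**2).sum(axis=0) * (yc**2).sum())
    # Constant columns get correlation 0 instead of a division error.
    r = np.divide(Xc.T @ yc, denom, out=np.zeros(X.shape[1]), where=denom > 0)
    order = np.argsort(-np.abs(r))
    return order[:top_k], r

if __name__ == "__main__":
    rng = np.random.default_rng(1)
    X = rng.normal(size=(40, 10))
    y = 3.0 * X[:, 3] + 0.01 * rng.normal(size=40)
    selected, r = screen_by_correlation(X, y, top_k=2)
    print(selected)
```

With the lung cancer data, `X` would be the 442 by 22,283 expression matrix and `top_k=50` would reproduce the reduction to 50 candidate genes.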

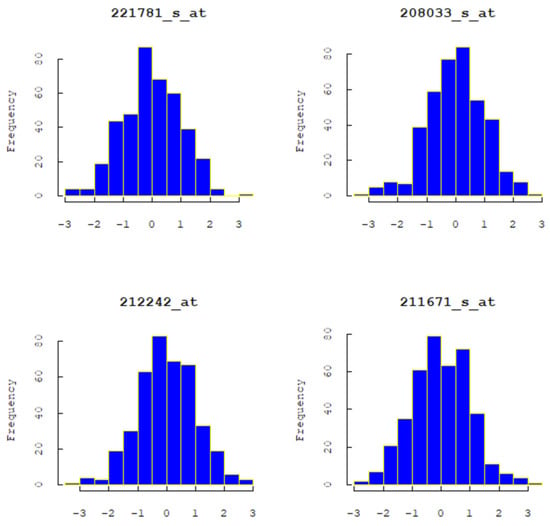

Figure 3 displays the frequency distribution histograms of four randomly selected gene expressions, indicating that the distributions of these four gene expressions are all skewed. Based on the skewed data, this study considered using a non-parametric additive AFT model, with B-spline basis functions used to expand each covariate in the non-parametric part. The Group MCP method was employed to select and compress the coefficients of the B-spline basis functions, ultimately identifying gene expressions with nonlinear effects on survival time. Furthermore, Table 3 compares the results selected by the Group Lasso and Group MCP penalization methods. Under the Group Lasso penalization, all gene symbols were selected, indicating a tendency to over-select nonlinear variables. In contrast, Group MCP outperformed Group Lasso in selecting nonlinear variables. Genes 219720_s_at, 214991_s_at, and 210802_s_at were simultaneously selected, indicating that these three gene expressions are nonlinear variables. The three selected genes are associated with lung cancer research and can potentially be used to identify cancer biomarkers, understand tumor biology and develop treatment strategies. In order to comprehensively assess the significance of these specific genes in cancer research, further experimentation and literature studies are required. This decision may necessitate the support of specialized knowledge in the field of cancer biology and experimental data. This also represents a future direction for research.

Figure 3. The frequency distribution histogram of four arbitrarily selected genes.

Table 3. The genes selected by Group Lasso and Group MCP.

The analysis compares the selection results of Group Lasso and Group MCP. Table 3 shows that all gene symbols are selected by the Group Lasso penalty, where √ indicates that the gene has been selected. This suggests that Group Lasso tends to over-select nonlinear variables, potentially including some variables that do not have true nonlinear effects. Group MCP is more selective than Group Lasso in choosing nonlinear variables and thus offers a more effective approach to identifying genes with nonlinear relationships with survival time. Lastly, the genes with the symbols 219720_s_at, 214991_s_at, and 210802_s_at are selected by both penalty methods. This consistent selection across penalty methods strengthens the evidence that these three gene expressions have nonlinear effects on survival time, although it does not establish this with certainty. These results support the use of the Group MCP penalty for identifying genes with nonlinear relationships in high-dimensional data, particularly in the context of survival time analysis.

6. Concluding Remarks

This paper introduces a semi-parametric regression pursuit method for distinguishing between linear and nonlinear components in semi-parametric partially linear models. The approach determines the parametric and non-parametric components of the model adaptively from the available data. It therefore differs from standard semi-parametric inference, in which the parametric and non-parametric components are specified before the analysis. The study demonstrated that the proposed method possesses oracle properties: with high probability, it performs as well as the standard semi-parametric estimator that assumes the model structure is known. The simulation study confirmed the effectiveness of the proposed method in finite samples. The semi-parametric regression pursuit method applies primarily to partially linear models in which the number of covariates (p) is smaller than the number of observations (n). Genomic datasets, however, often have a higher dimension (p > n). When p > n and the model is sparse, meaning that the number of significant covariates is much smaller than n, it may be necessary to reduce the model dimension first. Once the dimension is reduced, the proposed method can be applied to distinguish linear from nonlinear components. This research provides a tool for model selection and feature identification in semi-parametric modeling, and it highlights the potential need for dimensionality reduction in high-dimensional datasets.

This paper investigated only the application of the Group MCP penalty method to high-dimensional non-parametric additive accelerated failure time models. Further research could study the performance and theoretical properties of the Group MCP penalty method in high-dimensional semi-parametric accelerated failure time models, and could examine its behavior in single-index models.

Author Contributions

Methodology, S.H.; Software, S.H.; Validation, S.H.; Formal analysis, S.H.; Investigation, S.H.; Resources, S.H.; Data curation, S.H.; Writing—original draft, S.H.; Writing—review & editing, H.L.; Visualization, H.L.; Supervision, H.L.; Project administration, H.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Publicly available datasets were analyzed in this study. This data can be found here: http://cancergenome.nih.gov/ (accessed on 12 November 2023).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Tibshirani, R. Regression shrinkage and selection via the lasso. J. R. Stat. Soc. Ser. B Stat. Methodol. 1996, 58, 267–288.

- Breiman, L. Heuristics of instability and stabilization in model selection. Ann. Stat. 1996, 24, 2350–2383.

- Zhao, P.; Yu, B. On model selection consistency of Lasso. J. Mach. Learn. Res. 2006, 7, 2541–2563.

- Zou, H. The adaptive lasso and its oracle properties. J. Am. Stat. Assoc. 2006, 101, 1418–1429.

- Fan, J.; Li, R. Variable selection via nonconcave penalized likelihood and its oracle properties. J. Am. Stat. Assoc. 2001, 96, 1348–1360.

- Zhang, C.H. Nearly unbiased variable selection under minimax concave penalty. Ann. Stat. 2010, 38, 894–942.

- Heller, G. Smoothed rank regression with censored data. J. Am. Stat. Assoc. 2007, 102, 552–559.

- Gu, C. Model diagnostics for smoothing spline ANOVA models. Can. J. Stat. 2004, 32, 347–358.

- Schumaker, L. Spline Functions: Basic Theory; Wiley: New York, NY, USA, 1981.

- Johnson, B.A. Rank-based estimation in the ℓ1-regularized partly linear model for censored outcomes with application to integrated analyses of clinical predictors and gene expression data. Biostatistics 2009, 10, 659–666.

- Huang, J.; Ma, S. Variable selection in the accelerated failure time model via the bridge method. Lifetime Data Anal. 2010, 16, 176–195.

- Long, Q.; Chung, M.; Moreno, C.S.; Johnson, B.A. Risk prediction for prostate cancer recurrence through regularized estimation with simultaneous adjustment for nonlinear clinical effects. Ann. Appl. Stat. 2011, 5, 2003–2023.

- Wei, H.; Kang, P.; Liu, Y. Application Extension of Subset Identification Method Based on Adaptive Elastic Net and Accelerated Failure Time Model. South. Med. Univ. J. 2021, 41, 391–398.

- Wu, D.; Gao, Q. Empirical Likelihood Inference for Accelerated Failure Time Models with Right-Censored Data; Zhejiang University of Finance and Economics: Hangzhou, China, 2019.

- Cai, H.; Kang, F.; Wu, F. Comparison of Clustering Survival Data in Parametric and Semi-Parametric Accelerated Failure Time Models. J. Beijing Univ. Inf. Sci. Technol. Nat. Sci. Ed. 2020, 35, 8–14.

- Liu, L.; Wang, H.; Liu, Y.; Huang, J. Model pursuit and variable selection in the additive accelerated failure time model. Stat. Pap. 2021, 62, 2627–2659.

- Cui, X.; Peng, H.; Wen, S. Component selection in the additive regression model. Scand. J. Stat. 2013, 40, 491–510.

- Leng, C.; Ma, S. Accelerated failure time models with nonlinear covariates effects. Aust. N. Z. J. Stat. 2007, 49, 155–172.

- Golub, G.H.; Heath, M.; Wahba, G. Generalized cross-validation as a method for choosing a good ridge parameter. Technometrics 1979, 21, 215–223.

- Stute, W. Almost sure representations of the product-limit estimator for truncated data. Ann. Stat. 1993, 21, 146–156.

- Huang, J.; Ma, S. Regularized estimation in the accelerated failure time model with high-dimensional covariates. Biometrics 2006, 62, 813–820.

- Fu, W.J. Penalized regressions: The bridge versus the lasso. J. Comput. Graph. Stat. 1998, 7, 397–416.

- Wu, T.; Lange, K. Coordinate descent algorithms for Lasso penalized regression. Ann. Appl. Stat. 2008, 2, 224–244.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).