Abstract

Traditional model compression techniques depend on handcrafted features and require domain experts, and they involve a tradeoff between model size, speed, and accuracy. This study proposes a new approach toward resolving model compression problems. Our approach combines reinforcement-learning-based automated pruning and knowledge distillation to improve the pruning of unimportant network layers and the efficiency of the compression process. We introduce a new state quantity that controls the size of the reward and an attention mechanism that reinforces useful features and attenuates useless features to enhance the effects of other features. The experimental results show that the proposed model outperforms other advanced pruning methods in terms of computation time and accuracy on the CIFAR-100 and ImageNet datasets: its accuracy is approximately 3% higher than that of similar methods, with shorter computation times.

Keywords:

model compression; reinforcement learning; knowledge distillation; attention mechanism; automatic pruning; network efficiency

MSC:

68T99

1. Introduction

Since the beginning of the 21st century, significant advancements have been achieved in deep neural networks for computer vision, particularly in image classification and object detection tasks [1,2,3,4,5,6]. Models based on deep neural networks have for several years outperformed related models, owing to improvements in computer hardware that allow more complex models to be trained on larger datasets for extended periods and, consequently, to achieve better performance. However, in numerous practical applications, the computing resources provided by hardware devices do not meet the requirements of complex models, making model compression an important research topic.

Currently, the methods used for model compression and acceleration fall into five main categories: network pruning, parameter quantization, low-rank decomposition, lightweight network design, and knowledge distillation. Each category differs in its scope of action and design ideas. These methods are discussed herein with respect to the concept underlying their design, their function, the changes required in the network structure, and their advantages and disadvantages.

The most common method for model compression and acceleration is network pruning. Parameter evaluation criteria are devised to determine the importance of parameters, and unimportant parameters are removed. Pruning acts on the convolutional and fully connected layers of the network and requires changes to the original network structure. Representative studies of this method include unstructured pruning and structured pruning (group- and filter-level pruning) [7,8,9,10]. An advantage of unstructured pruning is that a network can be compressed to any degree. However, most frameworks and hardware cannot accelerate sparse matrix calculations, which makes effective acceleration difficult. Structured pruning narrows the network and can be accelerated on standard hardware, but it can significantly reduce accuracy.

In addition to network pruning, parameter quantization and knowledge distillation can operate on convolutional and fully connected layers, and they do so without changing the network structure. The concept underlying parameter quantization is to replace high-precision parameters with low-precision parameters to reduce the size of the network model. Knowledge distillation uses a large, highly complex network as the teacher model and a small, less complex network as the student model. The teacher model guides the training of the student model so that the performance of the small model approaches that of the large model. Representative studies on parameter quantization include binarization, ternary and cluster quantization, and mixed-bit-width quantization [11,12,13,14,15]. These methods can significantly reduce the storage space and required memory, speed up the computation, and reduce the energy consumption of the equipment. However, training and fine-tuning are time consuming, and quantization to special bit widths can easily cause incompatibility with the hardware platform and offers poor flexibility.

Representative studies on knowledge distillation [16,17,18,19] include distillation based on the output layer, mutual information, attention, and relational information. Its advantages are that large networks can be compressed into much smaller networks that can be deployed on resource-constrained devices such as mobile platforms, and that it is easily combined with other compression methods to achieve a greater degree of compression. However, the network must be trained at least twice, which increases the training time.

Low-rank decomposition and lightweight network design compress the model at the convolution layer and overall network levels, respectively. In low-rank decomposition, the original tensor is decomposed into a number of low-rank tensors; representative research achievements include dual and multivariate decomposition [20,21,22,23], which demonstrate good compression and acceleration effects on large convolution kernels and on small- and medium-sized networks. However, this line of research is comparatively mature, and convolutions that have already been simplified are difficult to decompose into still smaller kernels. In addition, layer-by-layer decomposition is not conducive to global parameter compression. Lightweight network design, in contrast, uses a compact and efficient network structure that is suitable for deployment on mobile devices. Representative research achievements include SqueezeNet, ShuffleNet, MobileNet, SplitNet, and MorphNet [24,25,26,27,28,29]. Their advantages include simple network training, a short training time, a small storage footprint, low computation, and good network performance, which make them suitable for deployment on resource-constrained devices such as mobile platforms. However, their specialized structures are difficult to combine with other compression and acceleration methods and generalize poorly, making them unsuitable as pre-trained models for other models.

All of these compression methods must balance model size against accuracy. Experts in this field therefore conduct multiple experiments, set different model compression ratios, and observe the test results to find a model that satisfies the requirements, which is a time-consuming procedure. Hence, some scholars have proposed automatic pruning technologies. He et al. [30] proposed an automatic pruning model based on reinforcement learning, in which a pretrained network model is processed layer by layer. The input of the algorithm is a representation vector that encodes the useful characteristics of each layer, and the output is the compression ratio for that layer. Once a layer has been compressed at this ratio, the algorithm proceeds to the next layer. When all layers have been compressed, the model is evaluated on the validation set without fine-tuning, because the validation accuracy before fine-tuning serves as an adequate proxy for the accuracy after fine-tuning. When the search is complete, the model with the highest reward value is selected and fine-tuned to obtain the final model.

This paper proposes a novel knowledge distillation method that introduces an attention module into the structure of the teacher network to improve its performance, so that the teacher network can better guide the student network; automatic pruning is then completed through reinforcement learning. In the reinforcement learning method, a new state quantity is introduced to set the reward values, which encourages heavier pruning of relatively less important modules. The experimental results show that the final model obtained using the proposed method outperforms the models obtained using similar pruning algorithms in terms of size and accuracy.

The remainder of this paper is organized as follows. Section 2 introduces the basics of reinforcement learning, knowledge distillation, and channel pruning. Section 3 details the model compression algorithm based on reinforcement learning and knowledge distillation. Section 4 presents the experimental results. Finally, in Section 5, a summary is provided, along with the outlook for the future.

2. Related Works

In the research domain of model compression, numerous researchers have explored novel methods and techniques for enhancing the performance and efficiency of models. This section discusses cutting-edge works relevant to this study, encompassing the methods and applications of reinforcement learning, advancements in knowledge distillation techniques, and pivotal concepts and methodologies within these two domains.

2.1. Reinforcement Learning

Reinforcement learning is an important branch of machine learning that aims to study and develop how agents learn and formulate optimal behavioral strategies in their interactions with the environment in order to maximize accumulated rewards or goals. This field is characterized by agent learning through trial and error and feedback, without the need for pre-labeled data or explicit rules.

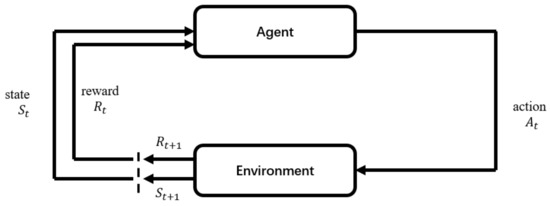

Reinforcement learning mainly comprises the agent, environment, state, action, and reward. After the agent performs an action, the environment transitions to a new state and returns a reward signal (positive or negative) to the agent. Subsequently, the agent performs a new action according to a certain strategy, based on the new state and the reward fed back by the environment. In this process, the agent and environment interact through states, actions, and rewards. Through reinforcement learning, the agent learns which action to take in each state to obtain the maximum reward. Figure 1 shows the general flowchart of reinforcement learning.

Figure 1.

Reinforcement learning flowchart.
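The agent-environment loop described above can be illustrated with a minimal sketch. The toy chain environment, the tabular Q-learning update, and all names and hyperparameters below (`ChainEnv`, `train`, `alpha`, `gamma`, `epsilon`) are assumptions chosen for illustration only; they are not the environment or the learning algorithm used in this study.

```python
import random


class ChainEnv:
    """Hypothetical toy environment: states 0..4, reward 1.0 on reaching state 4."""

    def __init__(self, n_states=5):
        self.n_states = n_states
        self.state = 0

    def reset(self):
        self.state = 0
        return self.state

    def step(self, action):
        # Action 1 moves right, action 0 moves left (clipped to the chain).
        move = 1 if action == 1 else -1
        self.state = min(max(self.state + move, 0), self.n_states - 1)
        done = self.state == self.n_states - 1
        reward = 1.0 if done else 0.0
        return self.state, reward, done


def train(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning: the agent acts, observes state and reward, and updates."""
    rng = random.Random(seed)
    env = ChainEnv()
    q = [[0.0, 0.0] for _ in range(env.n_states)]
    for _episode in range(episodes):
        state = env.reset()
        for _step in range(100):  # cap the episode length
            # Epsilon-greedy strategy; ties are broken randomly.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = env.step(action)
            # The reward fed back by the environment drives the value update.
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q
```

After training, the learned values favor moving right in every non-terminal state, which is the behavior that maximizes the accumulated reward in this toy setting.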

2.2. Knowledge Distillation

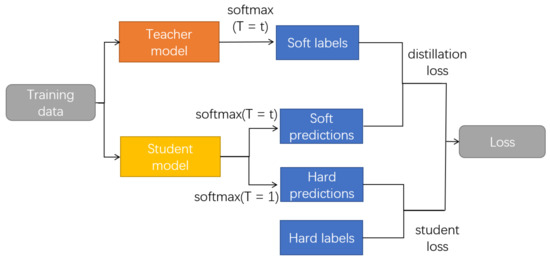

The fundamental principle of knowledge distillation is the specific transmission of the teacher model’s knowledge to the student model. Generally, the teacher model is a large and accurate deep neural network, whereas the student model is its smaller counterpart, often a shallow neural network. During the training process of knowledge distillation, the objective of the student model is to emulate the outputs of the teacher model as closely as possible, thereby achieving model compression while maintaining the performance.

The loss function employed in knowledge distillation typically comprises two components. First, the cross-entropy loss is used to compare the outputs of the student model with those of the teacher model, ensuring that the predictions of the student model closely resemble those of the teacher model. Second, a temperature parameter T is introduced to control the degree of “softening” of the model outputs, because the temperature affects the probability distribution of the model output. A relatively high temperature helps the student model to better obtain knowledge from the teacher model. A relatively low temperature sharpens the output distribution and improves the model’s confidence, but it may cause the student model to be overconfident and to disregard the uncertainty of the teacher model across multiple categories. The temperature parameter thus acts as a balancing factor in knowledge distillation: it adjusts how soft or hard the model output is in order to better transfer the knowledge of the teacher model and achieve model compression. Figure 2 presents a flowchart of the knowledge distillation process.

Figure 2.

Flowchart of knowledge distillation.

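The original SoftMax function is expressed as follows:

$$\mathrm{SoftMax}(z_i) = \frac{\exp(z_i)}{\sum_{j=1}^{C} \exp(z_j)}$$

where $z_i$ is the output value of the $i$th node and $C$ is the number of output nodes.

The SoftMax function after adding the temperature $T$ is as follows:

$$\mathrm{SoftMax}(z_i; T) = \frac{\exp(z_i / T)}{\sum_{j=1}^{C} \exp(z_j / T)}$$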
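The effect of the temperature on the SoftMax output, and one common way of combining the softened teacher-student term with the ordinary cross-entropy, can be sketched as follows. The weighting factor `alpha`, the `temperature ** 2` scaling, and the default values are assumptions taken from the widely used formulation of Hinton et al. [16]; the exact loss weighting used in this study is not stated.

```python
import math


def softmax(logits, temperature=1.0):
    """SoftMax with temperature; temperature=1.0 gives the original SoftMax."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, label,
                      temperature=4.0, alpha=0.7):
    """Weighted sum of a soft (teacher) term and a hard (label) term.

    alpha and the temperature**2 factor are illustrative assumptions.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # Cross-entropy between softened teacher and student distributions.
    soft = -sum(pt * math.log(ps) for pt, ps in zip(p_teacher, p_student))
    # Ordinary cross-entropy against the ground-truth label (T = 1).
    hard = -math.log(softmax(student_logits)[label])
    return alpha * temperature ** 2 * soft + (1 - alpha) * hard


logits = [2.0, 1.0, 0.1]
sharp = softmax(logits)        # T = 1: more peaked distribution
soft = softmax(logits, 4.0)    # T = 4: flatter, "softened" distribution
```

With these inputs, the largest probability at T = 4 is smaller than at T = 1, which is the softening effect described above.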

3. Methodology

In the preceding section, we delineated the relevant studies in the field of model compression. In this section, we provide a comprehensive explanation of our methodology, which comprises two key components: knowledge distillation and reinforcement learning.

3.1. Knowledge Distillation with Attention Mechanism

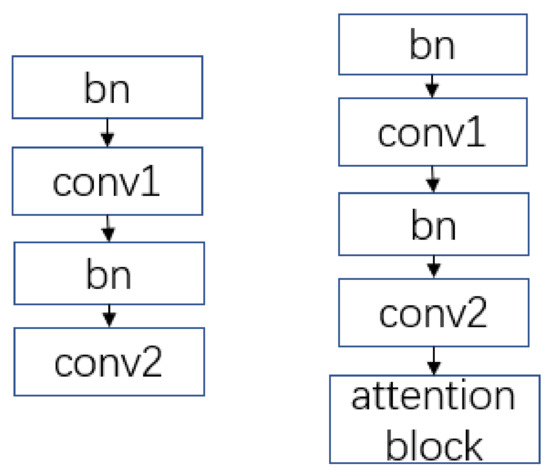

Numerous studies [31,32,33,34] have shown that the attention mechanism can improve the performance of a network; however, adding an attention module also increases the number of parameters and the model size. Therefore, this study adds the attention module to the teacher network used for knowledge distillation, which improves the performance of the teacher network so that it can better guide the compressed student network model.

The attention mechanism increases attention to the features extracted from the input, reinforces the useful features of the network, and attenuates useless features without additional weights to enhance the effect of other features. The most commonly used attention mechanism modules include the squeeze-and-excitation (SE) module, the efficient channel attention (ECA) module, and the convolutional block attention module (CBAM). This study applies these modules to a DenseNet [35] network model. Figure 3 shows the network architecture and attention modules of DenseNet.

Figure 3.

Comparison before and after the introduction of attention mechanism in DenseNet201 network module.

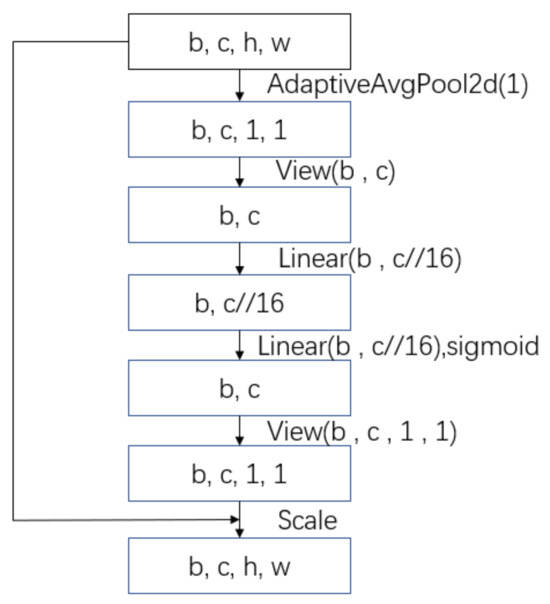

The SE block operates in two steps: squeezing and excitation. Figure 4 shows a structural diagram of the SE block, where b, c, h, and w represent the batch size, number of channels, height, and width of the feature map, respectively. During squeezing, the SE block reduces the dimensionality of the feature maps along each channel by performing global average pooling. Global average pooling computes the average value of the feature map of each channel, resulting in a single number per channel. This operation effectively compresses the channel-specific information into a single scalar. During excitation, the SE block employs a small neural network that typically comprises one or more fully connected layers. This network uses the squeezed information from the previous step and learns how to adjust the channel-wise weights. These learned weights are then used to reweight the feature responses within each channel. Essentially, the excitation operation assigns an importance score to each channel, enhancing the responses of the more important channels and suppressing those of the less important ones.

Figure 4.

Squeeze-and-excitation (SE) block structure diagram.
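The squeeze and excitation steps can be sketched in a few lines of NumPy. This is a minimal forward-pass illustration, assuming two fully connected layers with a ReLU between them and a sigmoid gate; the function name `se_block` and the weight shapes are hypothetical and are not taken from the implementation used in this study.

```python
import numpy as np


def se_block(x, w1, b1, w2, b2):
    """Forward pass of a squeeze-and-excitation block (illustrative sketch).

    x:  feature map of shape (b, c, h, w)
    w1: (c, c // r) and w2: (c // r, c), where r is an assumed reduction ratio
    """
    # Squeeze: global average pooling gives one scalar per channel.
    squeezed = x.mean(axis=(2, 3))                      # (b, c)
    # Excitation: two fully connected layers, ReLU then sigmoid.
    hidden = np.maximum(squeezed @ w1 + b1, 0.0)        # (b, c // r)
    scale = 1.0 / (1.0 + np.exp(-(hidden @ w2 + b2)))   # (b, c), in (0, 1)
    # Reweight each channel by its importance score.
    return x * scale[:, :, None, None]
```

With all weights and biases set to zero, every channel receives the neutral score 0.5; trained weights would instead raise the scores of informative channels and lower the others.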

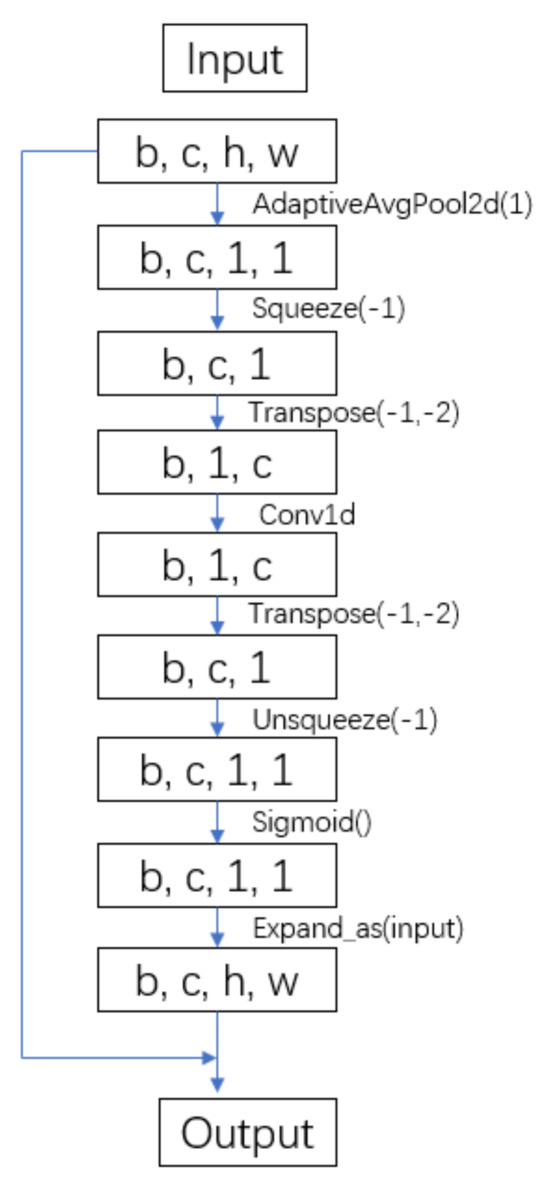

The ECA attention mechanism was proposed as an improvement on the SE attention mechanism. The SE attention mechanism first applies channel compression to the input feature map to reduce dimensionality, which adversely affects the learning of dependencies between channels. To address this, the ECA attention mechanism avoids dimensionality reduction and uses a one-dimensional convolution to efficiently realize local cross-channel interactions, thereby extracting the dependencies between channels. Figure 5 shows a structural diagram of the ECA block.

Figure 5.

Efficient channel attention (ECA) block structure diagram, where the transpose operation is used for the dimension transformation of the feature map, and unsqueezing is used to increase the dimension.
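The local cross-channel interaction of the ECA block can be sketched as a one-dimensional convolution over the pooled channel descriptor. The sketch below assumes a fixed odd kernel length and zero padding; the function name `eca_block` is hypothetical, and the rule that ECA-Net [32] uses to choose the kernel size from the channel count is omitted.

```python
import numpy as np


def eca_block(x, kernel):
    """Forward pass of an efficient channel attention block (illustrative sketch).

    x:      feature map of shape (b, c, h, w)
    kernel: 1-D convolution weights of odd length k, shared by all channels
    """
    k = kernel.shape[0]
    # Global average pooling without any dimensionality reduction.
    pooled = x.mean(axis=(2, 3))                           # (b, c)
    # Zero-pad along the channel axis so the output keeps c entries.
    padded = np.pad(pooled, ((0, 0), (k // 2, k // 2)))    # (b, c + k - 1)
    # 1-D convolution: each channel interacts with its k nearest neighbors.
    conv = np.stack(
        [(padded[:, i:i + k] * kernel).sum(axis=1) for i in range(pooled.shape[1])],
        axis=1,
    )                                                      # (b, c)
    scale = 1.0 / (1.0 + np.exp(-conv))                    # sigmoid gate
    return x * scale[:, :, None, None]
```

Because the only learnable parameters are the k kernel weights, the module adds far fewer parameters than the two fully connected layers of an SE block.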

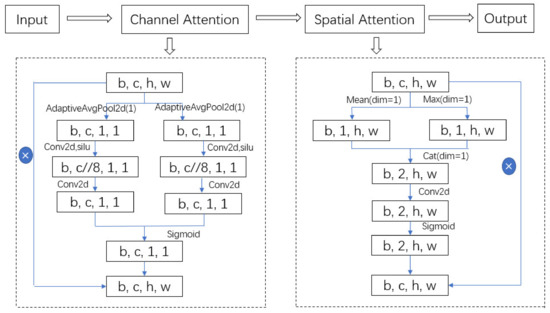

The CBAM attention module consists of two components: the channel attention module and the spatial attention module. The channel attention module primarily focuses on the interchannel relationships within the feature maps. It dynamically adjusts the contribution of each channel by learning channel-specific weights, thereby highlighting the channels most relevant to the current task. Conversely, the spatial attention module focuses on the relationships between different spatial positions within the feature maps. It dynamically adjusts the feature responses at each position by learning position-specific weights, thereby emphasizing the spatial regions relevant to the task at hand. The CBAM attention module considers both channel and spatial relationships, thereby enabling a more comprehensive capture of critical information within the feature maps. Compared with the attention mechanism of SENet, which focuses only on the channel dimension, CBAM can achieve better results. Figure 6 shows a structural diagram of the CBAM block.

Figure 6.

Convolutional block attention module (CBAM) block structure diagram.
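The sequential channel-then-spatial structure of CBAM can be sketched as follows. This is a simplified NumPy forward pass, assuming a bias-free shared two-layer network for channel attention and a single k x k convolution over the channel-wise average and maximum maps for spatial attention; all function names and weight shapes are hypothetical.

```python
import numpy as np


def _sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))


def channel_attention(x, w1, w2):
    """x: (b, c, h, w); w1: (c, c // r); w2: (c // r, c). Returns (b, c, 1, 1)."""
    avg = x.mean(axis=(2, 3))
    mx = x.max(axis=(2, 3))

    def shared_mlp(v):
        # The same two-layer network is applied to both pooled descriptors.
        return np.maximum(v @ w1, 0.0) @ w2

    return _sigmoid(shared_mlp(avg) + shared_mlp(mx))[:, :, None, None]


def spatial_attention(x, kernel):
    """x: (b, c, h, w); kernel: (2, k, k) with k odd. Returns (b, 1, h, w)."""
    k = kernel.shape[1]
    pad = k // 2
    # Describe each position by its average and maximum over the channels.
    desc = np.stack([x.mean(axis=1), x.max(axis=1)], axis=1)   # (b, 2, h, w)
    padded = np.pad(desc, ((0, 0), (0, 0), (pad, pad), (pad, pad)))
    b, _, h, w = desc.shape
    out = np.zeros((b, h, w))
    for i in range(h):
        for j in range(w):
            window = padded[:, :, i:i + k, j:j + k]            # (b, 2, k, k)
            out[:, i, j] = (window * kernel).sum(axis=(1, 2, 3))
    return _sigmoid(out)[:, None, :, :]


def cbam(x, w1, w2, kernel):
    """Apply channel attention first, then spatial attention."""
    x = x * channel_attention(x, w1, w2)
    return x * spatial_attention(x, kernel)
```

The explicit loops in `spatial_attention` keep the sketch dependency-free; a practical implementation would use a framework convolution instead.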

The self-attention mechanism [36] is a variant of the attention mechanism, which reduces dependence on external information and is better at capturing the internal correlation of data or features. In the image domain, a transformer [37] is a deep learning architecture that applies the self-attention mechanism to image data. The self-attention mechanism is the core component of the transformer and is used to capture the relationships between different locations in the image. By calculating the correlation score between each pixel and all other pixels, the self-attention mechanism allows the model to dynamically adjust attention to different regions of the image, thereby improving the global relationship modeling.
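The correlation-score computation described above corresponds to scaled dot-product self-attention [36]. The sketch below assumes a single attention head and treats the image as a sequence of n feature vectors (for example, flattened patches); the projection matrices `wq`, `wk`, and `wv` are hypothetical parameters.

```python
import numpy as np


def self_attention(x, wq, wk, wv):
    """Single-head scaled dot-product self-attention (illustrative sketch).

    x: (n, d) sequence of n feature vectors; wq, wk: (d, d_k); wv: (d, d_v)
    Returns the attended features (n, d_v) and the attention weights (n, n).
    """
    q, k, v = x @ wq, x @ wk, x @ wv
    # Correlation score between every position and all other positions.
    scores = q @ k.T / np.sqrt(k.shape[-1])
    # Row-wise softmax (shifted by the row maximum for stability).
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    # Each output is a weighted mixture of the values at all positions.
    return weights @ v, weights
```

Each row of the returned weight matrix sums to one, so every position distributes a fixed attention budget over all positions in the input.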

3.2. Improved Automatic Pruning Algorithm via Reinforcement Learning

A previous study [30] proposed an automated machine learning technique called AMC to compress and accelerate neural network models on mobile devices. This technique uses reinforcement learning to generate model compression strategies and can reduce the model size and computational effort several-fold while maintaining model accuracy. The state space in AMC is a set of 11 features that describe the state of the neural network model; these include the index t of the convolution layer, the number of convolution kernels n, and the number of input channels c. In AMC, each convolutional layer has a corresponding state that describes the characteristics of the layer. These features describe the structure and performance of the neural network model, thereby helping AMC to generate better compression strategies.

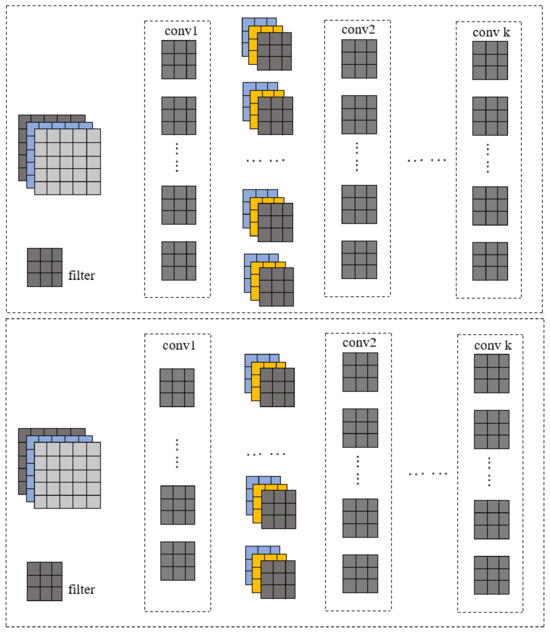

This study adds a new state quantity to the AMC state space that represents the importance of the tth network layer. When the reward of the tth layer is calculated, it is multiplied by this coefficient, which affects the final total reward value and results in a larger compression ratio for unimportant network layers. We propose two methods for acquiring this new state quantity.

In the first acquisition method, as shown in Figure 7, consider a network with k convolution layers, each with a different number of filters, and suppose that the importance of the conv 1 layer is to be evaluated. First, the original accuracy of the complete model is recorded. Second, with the other convolution layers kept unchanged, 10% of the filters in conv 1 are randomly disconnected. The accuracy of the model is then re-evaluated, and the difference between the original and changed accuracies is recorded. The model is restored, and the same process is repeated with 20%, 30%, 40%, 50%, 60%, 70%, 80%, and 90% of the filters in conv 1 disconnected. Finally, the same procedure is performed for the other convolutional layers.

Figure 7.

Schematic of the network structure before and after disconnection.
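The first acquisition method amounts to a per-layer sensitivity sweep, which can be sketched as follows. The callback `evaluate`, the boolean mask representation, and the function name `layer_sensitivity` are assumptions introduced for illustration; in practice `evaluate` would run the masked network on a validation set.

```python
import random


def layer_sensitivity(num_filters, evaluate, layer, ratios=None, seed=0):
    """Accuracy drop when a fraction of one layer's filters is disconnected.

    num_filters: list with the filter count of each convolution layer
    evaluate:    hypothetical callback, evaluate(masks) -> accuracy, where
                 masks[l][f] is False if filter f of layer l is disconnected
    layer:       index of the layer whose importance is being measured
    """
    rng = random.Random(seed)
    ratios = ratios or [r / 10 for r in range(1, 10)]   # 10%, 20%, ..., 90%
    full = [[True] * n for n in num_filters]
    base_acc = evaluate(full)                           # original accuracy
    drops = {}
    for ratio in ratios:
        masks = [row[:] for row in full]                # restore the model
        n_drop = round(ratio * num_filters[layer])
        for f in rng.sample(range(num_filters[layer]), n_drop):
            masks[layer][f] = False                     # disconnect at random
        drops[ratio] = base_acc - evaluate(masks)
    return drops
```

Calling the function once per layer yields one accuracy-drop curve per layer; layers whose curves stay close to zero are the less important ones. The sweep requires nine evaluations per layer, which illustrates why this method is costly for deep networks.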

Regarding the second acquisition method, some studies [38] regard the scaling factor γ in the batch normalization (BN) layer that follows a convolutional layer as an evaluation coefficient that measures how much the corresponding convolutional channel contributes to the accuracy of the entire model. The transformation performed by the BN layer can be expressed as follows:

$$\hat{z} = \frac{z_{in} - \mu_B}{\sqrt{\sigma_B^2 + \epsilon}}, \qquad z_{out} = \gamma \hat{z} + \beta$$

where $z_{in}$ and $z_{out}$ are the input and output of the BN layer, respectively; B represents the current mini-batch, with $\mu_B$ and $\sigma_B^2$ being the mean and variance of the input in B, respectively; $\epsilon$ is a small constant for numerical stability; and γ and β are the trainable affine transformation parameters (scale and offset, respectively), which linearly transform the normalized activations to arbitrary scales. The BN layer normalizes each channel separately, such that each channel in each convolutional layer has a corresponding γ. When γ is smaller, the corresponding channel is less important and can be removed. If the γ values in the same BN layer are very close to one another, deleting channels can have a significant impact on the network accuracy. Therefore, an L1-norm penalty on γ is applied to make the γ values sparser.
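The BN transformation and the L1 sparsity term on γ can be sketched in NumPy as follows. The function names, the training-mode batch statistics, and the penalty weight `lam` are illustrative assumptions in the spirit of network slimming [38]; they are not values reported in this study.

```python
import numpy as np


def batch_norm(z_in, gamma, beta, eps=1e-5):
    """Per-channel batch normalization in training mode (illustrative sketch).

    z_in: (b, c, h, w); gamma, beta: (c,) trainable scale and offset
    """
    mu = z_in.mean(axis=(0, 2, 3), keepdims=True)    # mini-batch mean
    var = z_in.var(axis=(0, 2, 3), keepdims=True)    # mini-batch variance
    z_hat = (z_in - mu) / np.sqrt(var + eps)         # normalize each channel
    return gamma[None, :, None, None] * z_hat + beta[None, :, None, None]


def l1_sparsity_penalty(gammas, lam=1e-4):
    """L1 penalty on all BN scaling factors; lam is an assumed weight."""
    return lam * sum(np.abs(g).sum() for g in gammas)
```

A channel whose γ is exactly zero outputs the constant β regardless of its input, which is why channels with γ close to zero can be removed with little effect. The penalty would be added to the training loss to push more γ values toward zero.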

After counting the number of channels with γ close to 0 in each layer, the importance of the layer is determined by the ratio (number of channels with γ close to 0)/(total number of channels); a larger ratio indicates that more channels in the layer can be removed.
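The ratio above can be computed directly from the scaling factors of one BN layer. The function name `near_zero_ratio` and the cutoff `threshold` that defines "close to 0" are assumptions for illustration; no specific cutoff is stated in the text.

```python
def near_zero_ratio(gammas, threshold=1e-2):
    """Fraction of channels in one BN layer whose |gamma| is below threshold.

    gammas:    scaling factors of the layer, one per channel
    threshold: assumed cutoff for "close to 0"
    """
    if not gammas:
        raise ValueError("layer has no channels")
    near_zero = sum(1 for g in gammas if abs(g) < threshold)
    return near_zero / len(gammas)


# Example: two of the four channels have a scaling factor close to zero.
ratio = near_zero_ratio([0.001, 0.5, -0.002, 0.9])
```

Computing this ratio requires only the trained γ values, so it avoids the repeated evaluations needed by the first acquisition method.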

4. Experimental Results and Analysis

The effect of the proposed algorithm was verified on the CIFAR-100 and ImageNet datasets. The experimental networks were DenseNet201, VGG16, and ResNet50. DenseNet201 without pruning was used as the teacher model, whereas VGG16 and ResNet50 were used as student models. The teacher model integrates an attention mechanism. After knowledge distillation, automatic pruning is applied to VGG16 and ResNet50. We first added an attention module to the teacher network and analyzed the effects of the different attention mechanisms; see Table 1. The numbers in parentheses indicate the improvement relative to the baseline, and underlining indicates the method that achieved the best results.

Table 1.

Analysis of the effects of different attention mechanisms.

On both datasets, every attention mechanism improved the model accuracy, and the ECA attention mechanism yielded the largest improvement. The ECA attention mechanism is therefore used in the subsequent experiments. Knowledge distillation was then applied to the student networks, VGG16 and ResNet50. The results of this training phase are given in Table 2, which shows that knowledge distillation increased the accuracy on both datasets.

Table 2.

Model effect after knowledge distillation processing.

After knowledge distillation, the model is automatically pruned using a reinforcement learning model with the new state quantity, which is obtained using the second method in Section 3.2. The first method is not used because it is considerably cumbersome: the experiment must be repeated at different ratios for each layer. The pruning technique used in this study is the channel pruning reported in a previous study [38], which is based on the same BN scaling factors as the acquisition method of the new state quantity. Table 3 lists the final experimental results for VGG16 and ResNet50, comparing them with those of other advanced pruning methods.

Table 3 shows that the model incorporating attention mechanisms and knowledge distillation (DenseNet201_KD) achieved a remarkably high accuracy. Compared with other pruning methods, both VGG16 and ResNet50 achieved the highest accuracy with minimal computational overhead after being pruned using the combination of attention mechanisms, knowledge distillation, and reinforcement learning (ours).

Table 3.

Comparison results of different pruning methods and network models.

5. Conclusions

This study introduced a new state quantity into reinforcement-learning-based automatic pruning, whereby layers with low importance undergo a larger proportion of pruning. Knowledge distillation was introduced during model training and fine-tuning to reduce the model parameters and computation while retaining the accuracy of the model as much as possible. Compared with other advanced pruning algorithms, the proposed method was demonstrated to be superior in terms of accuracy and degree of pruning. Automation is a future trend in model compression, and building an improved automatic pruning model remains challenging. Integrating various compression methods and designing universal compression methods are also directions of great research significance.

Author Contributions

Investigation and methodology, M.Z. and B.L.; formal analysis, B.L.; validation and writing—original draft preparation, B.-B.H.; writing—review and editing, M.Z. and S.-L.P.; supervision, J.-M.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the New Generation Information Technology Innovation Project 2022 for “Color perception Test Map Generation and Color perception Detection and Correction Assistant System” under Grant number 2022IT036.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data used in this study are publicly available. The download address of the ImageNet dataset is https://image-net.org/index.php (accessed on 6 November 2023), and the download address of the CIFAR-100 dataset is http://www.cs.toronto.edu/~kriz/cifar-100-python.tar.gz (accessed on 6 November 2023). Model files are accessible from the respective authors.

Conflicts of Interest

The authors declare no competing financial interests or personal relationships that may have influenced the work reported in this study.

References

1. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. In Proceedings of the Advances in Neural Information Processing Systems 25, South Lake Tahoe, CA, USA, 3–8 December 2012.

2. Kortylewski, A.; He, J.; Liu, Q.; Yuille, A.L. Compositional convolutional neural networks: A deep architecture with innate robustness to partial occlusion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 8940–8949.

3. Kim, I.; Baek, W.; Kim, S. Spatially attentive output layer for image classification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 9533–9542.

4. Guo, C.; Fan, B.; Zhang, Q.; Xiang, S.; Pan, C. Augfpn: Improving multi-scale feature learning for object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 12595–12604.

5. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. In Proceedings of the Advances in Neural Information Processing Systems 28, Montreal, QC, Canada, 7–12 December 2015.

6. Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. In Proceedings of the Advances in Neural Information Processing Systems 27, Montreal, QC, Canada, 8–13 December 2014.

7. Han, S.; Pool, J.; Tran, J.; Dally, W. Learning both weights and connections for efficient neural network. In Proceedings of the Advances in Neural Information Processing Systems 28, Montreal, QC, Canada, 7–12 December 2015.

8. Guo, Y.; Yao, A.; Chen, Y. Dynamic network surgery for efficient dnns. In Proceedings of the Advances in Neural Information Processing Systems 29, Barcelona, Spain, 5–10 December 2016.

9. Molchanov, P.; Tyree, S.; Karras, T.; Aila, T.; Kautz, J. Pruning Convolutional Neural Networks for Resource Efficient Inference. arXiv 2016, arXiv:1611.06440.

10. Luo, J.; Wu, J.; Lin, W. Thinet: A filter level pruning method for deep neural network compression. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 5058–5066.

11. Vanhoucke, V.; Senior, A.; Mao, M.Z. Improving the speed of neural networks on CPUs. In Proceedings of the NIPS 2011 Workshop on Deep Learning and Unsupervised, Granada, Spain, 12–17 December 2011; Academic Press: Cambridge, MA, USA, 2011.

12. Hwang, K.; Sung, W. Fixed-point feedforward deep neural network design using weights +1, 0, and −1. In Proceedings of the 2014 IEEE Workshop on Signal Processing Systems (SiPS), Belfast, UK, 20–22 October 2014; pp. 1–6.

13. Rastegari, M.; Ordonez, V.; Redmon, J.; Farhadi, A. Xnor-net: Imagenet classification using binary convolutional neural networks. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 525–542.

14. Courbariaux, M.; Hubara, I.; Soudry, D.; El-Yaniv, R.; Bengio, Y. Binarized Neural Networks: Training Deep Neural Networks with Weights and Activations Constrained to +1 or −1. arXiv 2016, arXiv:1602.02830.

15. Zhou, S.; Wu, Y.; Ni, Z.; Zhou, X.; Wen, H.; Zou, Y. Dorefa-Net: Training Low Bitwidth Convolutional Neural Networks with Low Bitwidth Gradients. arXiv 2016, arXiv:1606.06160.

16. Hinton, G.; Vinyals, O.; Dean, J. Distilling the Knowledge in a Neural Network. arXiv 2015, arXiv:1503.02531.

17. Zhang, Y.; Xiang, T.; Hospedales, T.M.; Lu, H. Deep mutual learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4320–4328.

18. Romero, A.; Ballas, N.; Kahou, S.E.; Chassang, A.; Gatta, C.; Bengio, Y. Fitnets: Hints for Thin Deep Nets. arXiv 2014, arXiv:1412.6550.

19. Park, W.; Kim, D.; Lu, Y.; Cho, M. Relational knowledge distillation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 3967–3976.

20. Denton, E.L.; Zaremba, W.; Bruna, J.; LeCun, Y. Exploiting linear structure within convolutional networks for efficient evaluation. In Proceedings of the Advances in Neural Information Processing Systems 27, Montreal, QC, Canada, 8–13 December 2014.

21. Han, S.; Mao, H.; Dally, W.J. Deep Compression: Compressing Deep Neural Networks with Pruning, Trained Quantization and Huffman Coding. arXiv 2015, arXiv:1510.00149.

22. Lebedev, V.; Ganin, Y.; Rakhuba, M.; Oseledets, I.; Lempitsky, V. Speeding-up Convolutional Neural Networks Using Fine-Tuned Cp-Decomposition. arXiv 2014, arXiv:1412.6553.

23. Kim, Y.D.; Park, E.; Yoo, S.; Choi, T.; Yang, L.; Shin, D. Compression of Deep Convolutional Neural Networks for Fast and Low Power Mobile Applications. arXiv 2015, arXiv:1511.06530.

24. Iandola, F.N.; Han, S.; Moskewicz, M.W.; Ashraf, K.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-Level Accuracy with 50× Fewer Parameters and <0.5 MB Model Size. arXiv 2016, arXiv:1602.07360.

25. Zhang, X.; Zhou, X.; Lin, M.; Sun, J. Shufflenet: An extremely efficient convolutional neural network for mobile devices. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6848–6856.

26. Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. Mobilenets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

27. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. Mobilenetv2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

28. Zhao, S.; Zhou, L.; Wang, W.; Cai, D.; Lam, T.L.; Xu, Y. Toward better accuracy-efficiency trade-offs: Divide and co-training. IEEE Trans. Image Process. 2022, 31, 5869–5880.

29. Gordon, A.; Eban, E.; Nachum, O.; Chen, B.; Wu, H.; Yang, T.-J.; Choi, E. Morphnet: Fast & simple resource-constrained structure learning of deep networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 1586–1595.

30. He, Y.; Lin, J.; Liu, Z.; Wang, H.; Li, L.-J.; Han, S. Amc: Automl for model compression and acceleration on mobile devices. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 784–800.

31. Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

32. Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient channel attention for deep convolutional neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11534–11542.

33. Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. Cbam: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

34. Liu, Y.; Shao, Z.; Hoffmann, N. Global Attention Mechanism: Retain Information to Enhance Channel-Spatial Interactions. arXiv 2021, arXiv:2112.05561.

35. Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Venice, Italy, 22–29 October 2017; pp. 4700–4708.

36. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the Advances in Neural Information Processing Systems 30, Long Beach, CA, USA, 4–9 December 2017.

37. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16 × 16 Words: Transformers for Image Recognition at Scale. arXiv 2020, arXiv:2010.11929.

38. Liu, Z.; Li, J.; Shen, Z.; Huang, G.; Yan, S.; Zhang, C. Learning efficient convolutional networks through network slimming. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2736–2744.

39. He, Y.; Kang, G.; Dong, X.; Fu, Y.; Yang, Y. Soft Filter Pruning for Accelerating Deep Convolutional Neural Networks. arXiv 2018, arXiv:1808.06866.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).