Abstract

With the emergence of COVID-19 in 2019, machine learning (ML) techniques, specifically deep learning networks (DNNs), played a key role in diagnosing the disease in the medical industry owing to their superior performance. However, the computational cost of DNNs can be quite high, which often makes it necessary to outsource the training process to third-party providers, such as machine learning as a service (MLaaS). Therefore, careful consideration is required to make DNN-based systems robust against cyber-security attacks. In this paper, we propose the dropout-bagging (DB-COVIDNet) algorithm, which works as a robust defense mechanism against poisoning backdoor attacks. In this model, trigger-related features are removed by a modified dropout algorithm, and a new voting method in the bagging algorithm then produces the final result. We used AC-COVIDNet, an attention-guided contrastive convolutional neural network (CNN), as the main inducer of the bagging algorithm and evaluated the proposed method on the malicious COVIDx dataset. The results demonstrated that DB-COVIDNet is strongly robust and can significantly reduce the effect of the backdoor attack. The proposed DB-COVIDNet nullifies backdoors before the attack has been activated, resulting in a substantial reduction in the attack success rate while retaining high accuracy on clean data.

Keywords:

convolutional neural network; backdoor attacks; COVID-19; bagging network; dropout algorithm; DB-COVIDNet

MSC:

68T07

1. Introduction

During the COVID-19 global pandemic, industrial informatics played an important role in mitigating the impact of the crisis [1,2,3]. The novel SARS-CoV-2 virus posed a significant challenge to public health, spreading rapidly through person-to-person contact via aerosols or droplets produced by talking, coughing, and sneezing. Due to the high rate of mortality and infected people, early diagnosis of COVID-19 was crucial to stopping the rapid transmission of the disease. Effective screening of COVID-19 cases through traditional methods, such as chest X-rays and CT scans, relies on expert radiologists to manually identify cases [4]. This process is time-consuming and requires specialized knowledge. However, the number of radiologists available for screening is limited compared to the number of individuals requiring diagnosis. Industrial applications such as health-industry-specific AI-powered medical image recognition helped to accelerate the diagnosis of COVID-19 [5,6,7]. Unprecedented deep learning networks (DNNs) have led to revolutionary success in automatically analyzing medical images and identifying COVID-19 cases with high accuracy, efficiency, and reduction in the cost of healthcare [8,9,10,11,12,13]. This has helped to reduce the burden on radiologists and speed up the diagnosis process, which is essential for mitigating the spread of the disease.

On the other hand, training DNNs requires large amounts of data and high-performance computing resources such as CPUs and GPUs. This can be a challenge for healthcare institutions, which may not have the necessary resources in-house. To address this challenge, third-party and outsourcing strategies such as transfer learning and machine learning as a service (MLaaS) have been used, with services provided by Google Cloud [14], Microsoft Azure [15], and virtual machines on Amazon [16]. MLaaS is a cloud-based platform that can be used to train DNNs without the need for expensive hardware. Transfer learning uses a pre-trained DNN as a starting point for training a new DNN, which can reduce the amount of data and computing resources required. While these strategies help to overcome the challenges of training DNNs, they also raise security concerns. The sensitive nature of medical information, coupled with the potential risks of unauthorized access or misuse, makes data privacy and security a top priority in the healthcare industry. Although most DNN-based systems in medical imaging informatics are closed-source, there are valid concerns regarding sharing data with third parties and the security and reliability of open-source and open-access medical DNNs. The underlying reason for these concerns is the vulnerability of medical imaging DNNs to attacks. Backdoor attacks (BDs) are among the most serious and efficient threats to DNN-based systems. This type of attack is based on BadNets, proposed by Gu et al. [17], and the Trojan attack by Liu et al. [18], in which a specific attacker-chosen trigger is inserted into clean data to cause targeted misclassification. The attacked model mispredicts attacker-chosen inputs that include the backdoor trigger, while its validation accuracy on benign data is not affected. Thus, the user is unable to detect this kind of attack.

While avoiding the use of pre-trained networks and transfer learning can prevent backdoor attacks, this is not a practical solution for many users who do not have the time and resources to train their DNNs from scratch. As a result, several studies have recently been conducted to develop defense strategies against backdoor attacks [19,20,21,22,23,24,25]. The most common approaches for defending against these attacks are input anomaly detection (IAD) [19], re-training (RT) [19], input preprocessing (IP) [19], fine-pruning (FP) [21], backdoor detection by activation clustering (AC) [19], Neural Cleanse (NC) [23], and so on. Once a backdoor is detected, it is crucial to mitigate the attack’s impact on the DNN. While mitigation techniques play an important role in defense, the detection of a backdoor is the more critical task, as it allows for the efficient allocation of resources and time. Input anomaly detection identifies input data that deviate from normal patterns and may pose a security risk. Re-training, input preprocessing, fine-pruning, and backdoor detection by activation clustering are techniques used to improve the accuracy of machine learning models and reduce their risk of attack. Despite these efforts to increase the robustness of neural networks against backdoor attacks, these processes can be time-consuming and complex, and they must be performed by external entities after each training run. In other words, recognizing the hallmark signs and impacts of an attack on a neural network, and developing strategies to detect them, is fundamental to most current defense methods. However, these approaches are often inadequate for protecting against backdoor attacks: although several promising after-attack techniques have been developed, they can be slow and may not completely eradicate the backdoors.

On the other hand, one way that a backdoor attack can be carried out is through manipulation of the decision boundary of the ML model. By inserting poisoned data with specific features and labels, the attacker can force the model to learn the attacker’s desired decision boundary. In post-attack defense mechanisms, if the poisoned data are easy to identify and remove, the defender may be able to learn the correct decision boundary by retraining the model on the remaining clean data. At the same time, attackers are continuously improving their methods to evade detection by current defense mechanisms. As a result, post-attack remediation may become increasingly ineffective in the face of these sophisticated attacks.

To address these challenges, we suggest an efficient preemptive “before-attack” defense mechanism that makes deep neural networks more resilient to backdoors from the outset. The proposed method, called the dropout-bagging defense algorithm, builds on the concepts of the dropout algorithm and the bagging network to fortify deep neural networks against backdoor attacks. The defense mechanism nullifies trigger-related features before the activation of the backdoors through the following steps: (1) k inducers are used in the bagging network, each with a proposed dropout layer as the first layer; (2) the proposed dropout ratio eliminates a specified percentage of input features through a partitioning patch mechanism with no overlap among the inducers; and (3) a new voting method is used to make the final decision. The proposed network structure optimizes the training process by removing trigger-related features before the attack is completed. The effectiveness of the dropout-bagging defense algorithm was demonstrated by using AC-COVIDNet [26], a state-of-the-art convolutional neural network for detecting COVID-19 from chest X-ray images, as the main classifier. In addition, we focused on the attack method in [17,18]. Since the trigger for the backdoor attack on images is a square pattern located in the bottom-right corner of each image, the trigger is fixed and the same for all the poisoned images. In this case, the attacker is assumed to have chosen a specific trigger pattern and placed it in the same location in all the poisoned images. Despite the success of AC-COVIDNet in detecting COVID-19, it has been shown to be vulnerable to backdoor attacks. The experimental results illustrated that the attack achieved extraordinarily high performance on the COVIDx dataset.

To the best of our knowledge, the dropout-bagging defense method (DB-COVIDNet) is the first defense method in which a COVID-19-based convolutional neural network structure is optimized to improve the network’s robustness against backdoor attacks before the attack has been activated. The main contributions of the dropout-bagging defense algorithm are as follows:

- A dropout-bagging method is proposed to make COVID-19-based CNNs robust before the attack, which avoids both the time-consuming and complex process of attack detection and mitigation and the risk of learning a wrong decision boundary;

- Our approach draws on the strengths of the dropout algorithm and the bagging network to enhance the robustness of deep neural networks. To prevent the networks from being strongly biased when backdoors (BDs) are activated, we propose a modified dropout layer algorithm as the first layer of each inducer in the bagging algorithm. This layer drops trigger-related features before an attack happens;

- By using the bagging algorithm with multiple single inducers and a newly proposed voting method, we achieve high accuracy on both clean and malicious test datasets. This approach also addresses the issue of overfitting [27,28], which is a common challenge faced by backdoored CNNs;

- To evaluate the performance of the proposed algorithm, we conducted extensive experiments covering several aspects. Firstly, we conducted comparative experiments with state-of-the-art CNNs. Then, we considered different categories of datasets to make our model more robust and adaptable to real-world industrial applications. These include multiclass datasets and both IID and non-IID datasets. Images in a non-IID dataset are not drawn from the same underlying distribution, which makes such datasets different from IID datasets and more challenging. Finally, we investigated the performance of our proposed defense algorithm with another type of backdoor.

The paper is organized as follows: in Section 2, we summarize some related works regarding backdoor attacks and defenses, specifically cyber-security studies on COVID-19-based DNNs. Then, in Section 3, we concentrate on the methodology of the proposed algorithm. This section includes an explanation of dropout and bagging algorithms along with the dropout-bagging defense technique in detail. In Section 4, an experimental evaluation of the proposed model is provided. Finally, we wrap up this paper by discussing the proposed defense method and its challenges.

2. Related Works

The importance of countermeasures to tackle the rapid progress and widespread outbreak of COVID-19 led to considering the use of deep neural networks. However, recent studies on AI-based COVID-19 detection systems revealed that these deep learning models are vulnerable to security attacks. In what follows, we provide an overview of previous state-of-the-art security threat models and defense strategies. We explain the related research in two categories. First, inspired by our previous survey [29], we explored the adversarial attacks [30,31,32] and defense methods against them for COVID-19 DNN-based models. Then, backdoor attacks, which are a different type of adversarial attack that poses security threats to deep neural networks in industrial applications, are described.

2.1. Vulnerability of COVID-19 DNN-Based Model

Adversarial attacks can easily fool a DNN model by adding a small amount of perturbation [30,31] to the model's input. Some prominent examples of adversarial attacks on COVID-19-based architectures include universal adversarial perturbation (UAP) [33], the fast gradient sign method (FGSM) [34], and the Jacobian-based saliency map attack (JSMA) [35]. UAP is a technique that causes machine learning models to make wrong predictions by adding a small universal perturbation to the inputs. FGSM works by increasing the loss via a small perturbation in the gradient direction, and JSMA uses the gradient of the target class score to generate a saliency map that highlights the most important features for predicting the target class. The attack performance for the aforementioned algorithms on COVID-19-based deep neural networks was > [36], > [37], and > [38], respectively. Furthermore, to address the resilience of deep models against adversarial attacks, some defense methods have been proposed for improving the robustness of COVID-19-based deep learning models. Adversarial training [34], the increasing margin adversarial (IMA) [39] approach, and the fuzzy unique image transformation (FUIT) [37] technique are examples of efficient and robust defense methods against adversarial attacks. The adversarial training method trains a model on a combination of original and adversarial examples to increase its resistance to attacks. The FUIT approach uses a fuzzy set to map image pixels to a range of [1, R] by calculating a membership value and reducing variance through down-sampling. The IMA algorithm provides a simple and effective defense against adversarial attacks by increasing the margin between the classifier’s decision boundary and the closest training data points.
These defense strategies increase the robustness of DNN models for the diagnosis of COVID-19 cases when they have been attacked by adversaries. The results showed > [36] accuracy for adversarial training and > [39] and > [37] for IMA and FUIT, respectively. All of the attack defense methods mentioned above investigated DNNs’ performance against adversarial attacks.
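
As a point of reference, the FGSM step described above is commonly written as follows. The symbols are assumptions that follow the usual convention rather than notation from this paper: $x$ is the clean input, $y$ its label, $\theta$ the model parameters, $J$ the training loss, and $\epsilon$ the perturbation budget.

$$x^{\mathrm{adv}} = x + \epsilon \cdot \operatorname{sign}\left(\nabla_x J(\theta, x, y)\right)$$

The perturbation is bounded by $\epsilon$ in the $\ell_\infty$ norm, which is why it can remain visually small while still increasing the loss.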

2.2. Backdoor Attacks (BDs)

Furthermore, backdoor attacks, which were proposed for the first time by Geigel [40], are a different type of adversarial attack posing security threats to deep neural networks. A line of research has concentrated on backdoor attacks with different goals, capabilities, and performances [17,18,23,41,42,43,44]. Liu et al. [18] proposed a backdoor method in which they focused on increasing the activations of some neurons by reverse-engineering a trigger. Hidden voice commands, by Chang Liu et al. [45], proposes a backdoor attack that can be triggered by hidden voice commands in a speech recognition system. Gu et al.’s [17] popular BadNets incorporates backdoor triggers into a small portion of the training dataset and modifies their labels to match the attacker’s desired targets. In terms of COVID-19 deep models, Matsuo et al. [46] investigated the performance of the COVID-Net model against backdoor attacks for the first time. They used the BadNet process to insert BDs into COVID-Net by triggering of data samples to evaluate the performance of COVID-Net [47], then fine-tuned the backdoored model to assess the attack’s performance after fine-tuning. Backdoor attacks are a significant threat to federated learning-based frameworks (FL) in industrial applications [48]. In FL networks, malicious participants can exploit the system by training a local model with a hidden backdoor. When the malicious model is uploaded to the central server, it can spread to all other participants in the federated-learning network, posing a significant threat. Ref. [49] focuses on detecting attacks by analyzing their abnormal behavior with the federated learning systems, and Li et al. [50] performed identification and detection using encrypted data.

On the other hand, there have been several proposed methods for defending against backdoor attacks in deep learning models. Backdoor defenses can be classified into two categories based on the way they detect and mitigate BDs: robustness in post-attack defense strategies, and robustness prior to attack activation. Most of the defense mechanisms rely on detecting and mitigating backdoors after the attack has activated [20,21,24,51,52]. For instance, fine-pruning [21] is one of the high-performance after-attack defense methods proposed by Liu et al. In this defense method, they utilized fine-tuning and neuron-pruning techniques to alleviate the backdoor attacks. Additionally, Chen et al. [51] proposed an activation clustering method (AC) to detect BDs by analyzing the models’ activation function. Existing defenses against federated backdoor attacks can be classified into two categories comprised of model effect validation and analysis of the model computing process [49]. However, model effect validation is not effective against malicious models, and analysis of the model computing process can violate privacy.

In contrast to the existing methods, Kaviani et al. [25] proposed a defense method against backdoor attacks on feedforward neural networks that combines link-pruning with a scale-free mechanism [53]. They transformed fully connected networks into scale-free structures. Their defense method was the first of its kind to achieve high performance against backdoor attacks. For COVID-19-based CNNs, our dropout-bagging defense method likewise aims to provide robustness against backdoor attacks before an attack occurs, and it is the first study that provides a robust topology against backdoor attacks in this setting. The cornerstone of this approach is removing trigger-related features before an attack occurs, which distinguishes our proposed defense method from other studies in the field.

3. Overview of Bagging-Dropout Defense Method

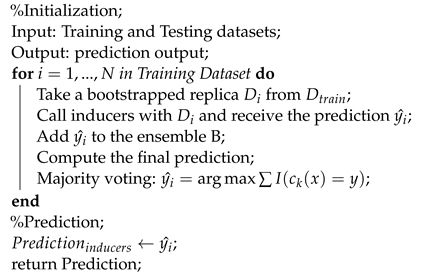

3.1. Bagging

Bootstrap aggregation (bagging) [54] is one of the most well-known algorithms with impressive performance for building an ensemble of models [55] for regression and classification tasks [56]. Drawing on the idea of ensemble-learning-based neural network architecture, multiple models, such as classifiers, are combined to produce improved results. Bagging is an efficient learning technique that can compensate for the errors of a single base learner. Further, ensemble-learning-based bagging can improve prediction performance by alleviating the overfitting of DNNs, offering computational advantages, mitigating class imbalance, etc. [57]. Therefore, the resulting ensemble-based models perform better than a single model. The bagging method is divided into two parts: bootstrapping and aggregation. Bootstrap sampling is a technique used to generate k new data samples that are chosen at random with replacement, so that every instance of the original dataset has the same probability of being selected. The second step in bagging is called aggregation. In the aggregation step, each individual inducer in the ensemble is trained on its corresponding training set. The individual predictions are then combined, commonly by arithmetic averaging or majority voting, to obtain the bagging prediction. To describe the general framework of bagging, let $m$ and $n$ be the number of examples and features of a given dataset, respectively. $D = \{(x_i, y_i)\}_{i=1}^{m}$ indicates the training set, and each bootstrap sample $D_j$, $j = 1, \dots, k$, is generated by sampling instances from the training set with replacement. If we denote by $h_j$ the base learner trained on the $j$th bootstrap sample, then a classifier $h_j$ is constructed from each bootstrap sample $D_j$. Following the training process, the final prediction based on majority voting is computed by aggregating the set of classifiers; i.e., a single output is predicted as follows: $H(x) = \arg\max_{c} \sum_{j=1}^{k} \mathbb{1}\left[h_j(x) = c\right]$. The main steps for computing the bagging prediction are outlined in Algorithm 1.

Algorithm 1: Bagging algorithm

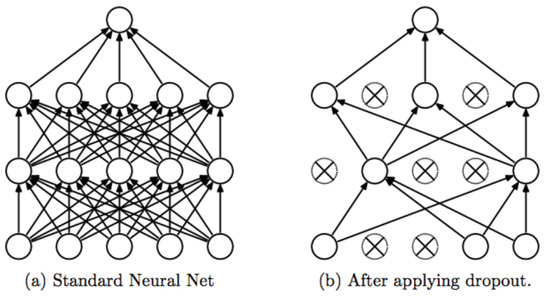
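
The two bagging steps described above (bootstrapping and aggregation by majority vote) can be sketched in a few lines of NumPy. This is a minimal illustration, not the paper's Algorithm 1: the function names are hypothetical, each bootstrap sample is assumed to have the same size as the training set, and the per-model predictions are hard-coded stand-ins for trained classifiers.

```python
import numpy as np

def bootstrap_indices(m, k, rng):
    """Return k index arrays, each drawn uniformly with replacement from range(m).

    Assumption: every bootstrap sample has the same size m as the training set.
    """
    return [rng.integers(0, m, size=m) for _ in range(k)]

def majority_vote(predictions):
    """Aggregate class labels of shape (k_models, n_samples) by majority vote."""
    predictions = np.asarray(predictions)
    n_classes = predictions.max() + 1
    # counts[c, j] = number of models that predicted class c for sample j
    counts = np.stack([(predictions == c).sum(axis=0) for c in range(n_classes)])
    # Ties are resolved in favour of the smallest class index.
    return counts.argmax(axis=0)

rng = np.random.default_rng(0)
samples = bootstrap_indices(m=10, k=3, rng=rng)

# Stand-in predictions of three models on three test samples.
votes = majority_vote([[0, 1, 2],
                       [0, 1, 1],
                       [1, 1, 2]])
```

In a real pipeline, each index array would select the rows used to train one inducer, and `majority_vote` would be applied to the predicted labels of the trained inducers.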

3.2. Dropout

One of the techniques widely used for handling the extreme overfitting problem of neural networks is the dropout method [58]. In this method, input features or the outputs of hidden units are randomly dropped out with probability $p$ during the training process. This means that the dropout algorithm removes a percentage of neurons during the training stage, along with all of their connections, which prevents the features from co-adapting too much. Figure 1 illustrates the structure with a typical dropout, which shows the dropped units in each step. First, a dropout layer nullifies a fraction $p$ of the hidden neurons, where $p$ is the dropout rate. Then, the new thinned network is trained by utilizing stochastic gradient descent. Hence, the gradient of each parameter is averaged in each mini-batch, and those training cases that do not use a parameter contribute a gradient of zero. In each step, the neurons removed in the previous step are restored, and a new random subset of the hidden neurons is removed from the restored network. This process is repeated until ideal parameters are obtained. Mathematically, if we consider $x$ and $y$ as the input and output layers, respectively, and $f$ as the activation function, then, during training, the dropout layer behaves as follows: $y = f(Wx) \odot r$, where $W$ is the connection weight matrix and $r$ is a randomly generated binary mask applied element-wise. The mask $r$ follows the Bernoulli distribution [59], in which $r_i \sim \mathrm{Bernoulli}(1 - p)$, so that each independent $r_i$ is zero, and the corresponding unit is dropped, with probability $p$.

Figure 1. Dropout in neural networks.

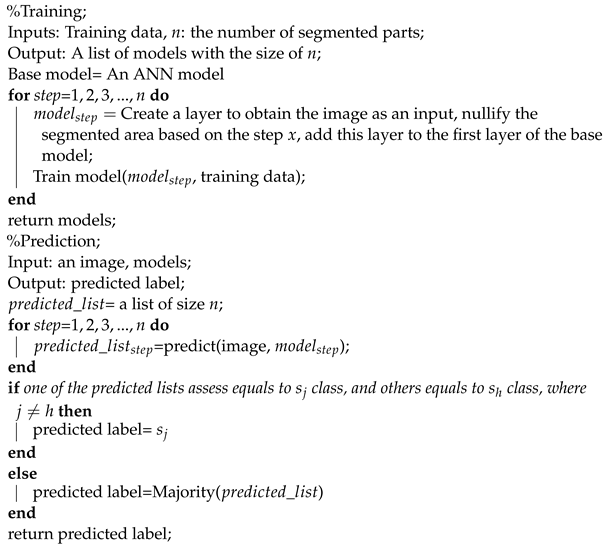

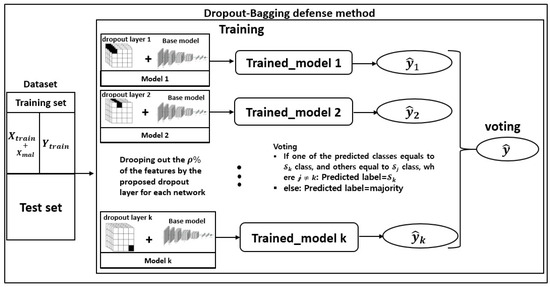
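
To make the masking step concrete, the following NumPy sketch applies a Bernoulli mask to the activations of one layer during training. It assumes the common formulation in which the mask multiplies the activations element-wise and each unit is dropped with probability p. The function name, the tanh activation, and the layer sizes are illustrative choices, and the rescaling of kept units used by many libraries ("inverted dropout") is deliberately left out.

```python
import numpy as np

def dropout_forward(x, W, p, rng, activation=np.tanh):
    """Training-time dropout on one layer: activation(W @ x) masked element-wise.

    Each output unit is kept with probability 1 - p and zeroed with probability p.
    """
    pre = W @ x
    # rng.random draws from [0, 1), so the comparison is True with probability 1 - p.
    mask = rng.random(pre.shape) >= p
    return activation(pre) * mask, mask

rng = np.random.default_rng(0)
x = rng.normal(size=4)            # one input vector with 4 features
W = rng.normal(size=(1000, 4))    # weights of a layer with 1000 hidden units
y, mask = dropout_forward(x, W, p=0.5, rng=rng)
```

A fresh mask is drawn on every call, which corresponds to restoring the previously dropped units and dropping a new random subset at each training step.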

3.3. Dropout-Bagging Defense Method

A backdoor (BD) attack happens when the attacker embeds hidden backdoor triggers into training input data. The most important property of a backdoor attack is that the attacked DNN behaves normally on clean data, while its prediction is maliciously modified when the backdoor is activated. As a result, the model becomes highly overfit. Currently, there are some approaches to alleviating the problem of overfitting. One of these methods is applying a dropout layer. However, because standard dropout removes features at random, it is not effective in solving the overfitting problem in this setting. In the following subsections, the details of our proposed algorithm against BDs are described. The overall process of our defense approach is detailed in Algorithm 2, and the structure of the proposed method is shown in Figure 2. The proposed system consists of an input block containing the training and test datasets. The training dataset contains the benign samples and the poisoned ones. The main block includes the training stage along with the evaluation part. In the training stage, Figure 2 shows our proposed dropout layers separately to clarify the feature-dropping mechanism. Then, to show the construction of the bagging network, the figure includes blocks for the trained models. The last section of Figure 2 is the voting part, which consists of the output of each classifier and the proposed voting strategy.

Algorithm 2: The proposed dropout-bagging defense algorithm

Figure 2. Structure of dropout-bagging (DB-COVIDNet) defense method.

3.3.1. Feature Optimization

The core of our dropout-bagging defense method is a modification of the dropout algorithm that removes the triggers before the attack, regardless of their position (location) in the input images. As can be seen in Figure 2, we use our proposed dropout layer as the first layer of each inducer in the bagging network. When the training process starts, we replace the randomness of the dropout mechanism with a more deliberate choice of which features to drop. The main modification is to apply data partitioning so that the trigger-related features are removed by one of the networks in the bagging algorithm. In this model, we regard $k$ networks $M_1, \dots, M_k$ as the inducers of our bagging-based algorithm. Then, we use our optimized dropout layer as the first layer of each of these $k$ inducers. A dropout ratio of $p$ is set for each network to nullify a fraction $p$ of the features. Following the bagging algorithm, we trained each classifier independently, while the features dropped by different networks do not overlap.

The key idea in the partition process is to segment the features of each image into $k$ patches. To illustrate, each patch is shown in the block diagram in Figure 2 as a black rectangle at a different position for each model. These patches do not overlap, and each is limited to a fraction $p$ of the features. Let $X$ be the input to the dropout-bagging algorithm with dimension $(N, D)$, where $N$ is the number of samples and $D$ is the number of features. First, we defined the partitions with a probability $1 - p$ for the features we want to retain and a probability $p$ for the features we want to drop out. Therefore,

$$X_i = X\left[:,\ (i-1)\,pD : i\,pD\right], \quad i = 1, \dots, k,$$

where $X_i$ is the $i$-th partition of the input features and the colon operator is used to extract the corresponding rows and columns of the input matrix $X$. To drop one partition for each inducer without overlapping, we can define a binary mask $m_i$ for each inducer $i$ such that $\tilde{X}_i = X \odot m_i$, where $\tilde{X}_i$ is of dimension $(N, D)$ and $m_i$ has a length of $D$. The elements of $m_i$ outside the $i$-th partition are set to 1, and the elements inside it are set to 0 to drop the corresponding partition. Let $Y_i$ be the output from the $i$th inducer with dimension $(N, t)$, where $t$ is the number of output classes. The $i$th inducer can be written as $Y_i = f_i(\tilde{X}_i)$, where $f_i$ is the function representing the model architecture for the $i$th inducer.

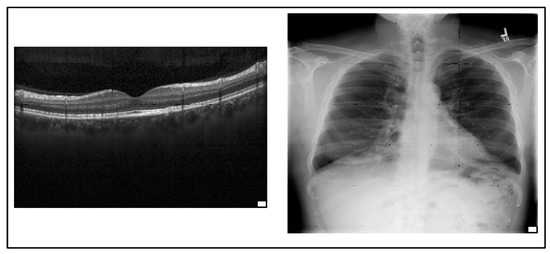
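
The partition-and-mask construction can be illustrated with a short NumPy sketch. It is written under stated assumptions and is not the authors' implementation: features are treated as a flattened vector of length D, the k patches are contiguous blocks of equal size (that is, a dropout ratio of 1/k per inducer), and the name `partition_masks` is hypothetical.

```python
import numpy as np

def partition_masks(D, k):
    """Return a (k, D) binary array whose row i zeroes the i-th of k contiguous blocks.

    The blocks do not overlap and together cover all D features.
    """
    edges = [(i * D) // k for i in range(k + 1)]  # integer block boundaries
    masks = np.ones((k, D), dtype=np.float32)
    for i in range(k):
        masks[i, edges[i]:edges[i + 1]] = 0.0
    return masks

N, D, k = 8, 100, 25
X = np.random.default_rng(0).random((N, D)) + 1.0   # strictly positive toy features
masks = partition_masks(D, k)

# Broadcasting applies mask i to every sample: X_tilde[i] is the input of inducer i.
X_tilde = X[None, :, :] * masks[:, None, :]          # shape (k, N, D)
```

Because every feature is zeroed by exactly one mask, a localized trigger that falls inside a single block is invisible to the inducer that drops that block, whatever the trigger's position.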

On the other hand, as mentioned before, we follow the attack method of Gu et al. [17] to insert BDs into the network during training. We used a 25-pixel pattern trigger to evaluate the efficiency of the attack and the dropout-bagging defense method, as shown in Figure 3. In this figure, a chest X-ray image and a retinal OCT image illustrate the trigger pattern, a white square placed at the lower right corner of the image. Due to the partitioning mechanism proposed here, trigger-related features make up a very small part of the input features and belong to one of the patches. Furthermore, during the training process, one patch of the input features is dropped for each base learner. Hence, we can conclude that the trigger-related features will be removed by zeroing the features of the patch they belong to.

Figure 3. A trigger is a white square placed at the lower right corner of the image.
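
A fixed corner trigger of this kind is simple to reproduce for experiments. The sketch below stamps a white square onto the bottom-right corner of an image. It assumes that the 25-pixel trigger is a 5 × 5 square and that pixel intensities are scaled to [0, 1]. Both are illustrative assumptions, and `add_square_trigger` is a hypothetical helper name.

```python
import numpy as np

def add_square_trigger(image, size=5, value=1.0):
    """Return a copy of an (H, W) or (H, W, C) image with a size x size square
    of intensity `value` at the bottom-right corner."""
    poisoned = image.copy()
    poisoned[-size:, -size:, ...] = value
    return poisoned

clean = np.zeros((224, 224), dtype=np.float32)       # toy grayscale image
poisoned = add_square_trigger(clean)

rgb = np.zeros((8, 8, 3), dtype=np.float32)          # the same helper on a colour image
rgb_poisoned = add_square_trigger(rgb)
```

The input array is left unchanged, so the clean and poisoned versions of an image can be compared side by side.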

3.3.2. Bagging-Based Defense

Algorithm 2 describes the training process of our proposed defense method. In this algorithm, we regard $k$ learners as our inducers, and the first layer of each model is a dropout layer. We trained the models independently and denote them by $M_1, \dots, M_k$, as shown in Figure 2. These models then form our proposed bagging network; all of them have the same architecture but different optimized features. A classical bagging prediction is derived from the arithmetic average of the individual predictions or from majority voting. However, a backdoor attack aims to lead the DL model to misclassify some modified samples of the input distribution in favor of the desired label. Therefore, to reduce the misclassification rate, instead of using the classical evaluation method, we empirically adopted a different voting method for our dropout-bagging defense model. In this study, we assume that our problem is a classification task with $t$ classes $c_1, \dots, c_t$. The voting method for the bagging network includes two conditions, as follows. For the evaluation step, consider $x$ as one of the test images:

- (i) Assume that $k - 1$ of the networks classify $x$ as $c_i$, while exactly one network classifies $x$ as $c_j$, with $i \neq j$. Then, our voting system takes $c_j$ to be the final classification result for $x$, as described in Algorithm 2. The rationale is that the trigger falls within a single patch, so only the inducer that drops this patch is unaffected by it.

- (ii) However, if most of the classifiers classify $x$ as class $c_i$ and more than one classifier assigns it to other classes, our proposed algorithm takes the majority vote over the network classification outputs as the final prediction.
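
The two voting conditions can be expressed as a small decision function. The sketch below follows one reading of the rule and is not the paper's Algorithm 2: it assumes that in condition (i) the label of the single dissenting inducer is taken as the final result, that at least three inducers are used, and that unanimous predictions fall back to the majority vote. The name `db_vote` is hypothetical.

```python
import numpy as np

def db_vote(predictions):
    """Combine the class labels predicted by k inducers for one test image."""
    predictions = np.asarray(predictions)
    k = len(predictions)
    labels, counts = np.unique(predictions, return_counts=True)
    # Condition (i): k - 1 inducers agree and exactly one dissents,
    # so the dissenting inducer's label is returned.
    if len(labels) == 2 and counts.min() == 1 and counts.max() == k - 1:
        return int(labels[counts.argmin()])
    # Condition (ii) and the unanimous case: plain majority vote.
    return int(labels[counts.argmax()])

lone_dissenter = db_vote([2, 2, 2, 2, 0])   # condition (i)
several_dissent = db_vote([2, 2, 2, 1, 0])  # condition (ii)
unanimous = db_vote([1, 1, 1, 1, 1])
```

Under this reading, a clean image on which a single inducer errs would also be resolved in favor of the dissenter, so the rule trades some clean-data risk for robustness against triggers that fool all but one inducer.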

4. Experiments and Results

In this section, we describe our experiment setup, metrics, and results of evaluating the DB-COVIDNet defense technique against backdoor attacks (BadNets).

4.1. Experiment Setup

To evaluate our defense method against BDs, we used the following components and features to set up our experiments:

- Datasets: Table 1 provides an overview of the datasets utilized in the evaluation of our proposed algorithm’s performance. The table includes key details, such as the number of classes, the number of images present in the training and test datasets, and the attacker’s desired target labels of each dataset. COVIDx [47,60], Retinal OCT images [61], and MNIST [62] were classified as IID datasets, while the Natural Scenes Around the World dataset [63] was deemed non-IID. To validate the proposed technique more effectively, we conducted extensive experiments using the multiclass benchmark ML dataset of MNIST;

Table 1. Different types of datasets and their details.

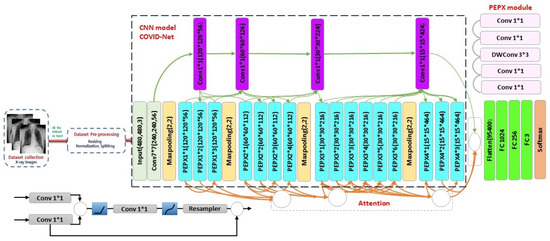

- Training Settings: To evaluate our dropout-bagging defense method, we used AC-COVIDNet as the main inducer of the bagging network, following the original paper [26]. Firstly, we pretrained the network on the ImageNet dataset [64]. To decrease the training time on ImageNet, we used the TFRecord format for TensorFlow [65]. TFRecord is a format for storing a sequence of binary records that can lessen space requirements compared to the original data by partitioning the data into multiple files. Then, we trained the network on the COVIDx dataset. We used 25 inducers in the bagging ensemble network, with the same hyperparameters for all of them. These hyperparameters, including the learning rate and a batch size of 64, were selected based on preliminary experimental results. As illustrated in Figure 4, the ReLU activation function was applied for each layer, and softmax was applied for the final layer to produce output probabilities for three classes (normal, pneumonia, and COVID-19). The proposed model was evaluated using the Keras deep learning library with TensorFlow as the backend. All the experiments were performed using an array of 4 NVIDIA GTX 1080 GPUs and computational resources provided by Google Colab Pro;

Figure 4. Architecture of AC-COVIDNet.

- Convolutional Neural Network: To explore the applicability of our defense method against backdoor attacks, we conducted experiments for our bagging-based network with the following state-of-the-art CNNs as the main inducer in the dropout-bagging algorithm:

- -

- AC-COVIDNet [26] is an extension of COVID-Net [47] that incorporates a lightweight residual Projection, Expansion, Projection, and Extension (PEPX) mechanism. As shown in Figure 4, COVID-Net utilizes convolutions of different kernel sizes followed by the PEPX module to extract features from chest images. AC-COVIDNet is trained with attention gates (AGs) [66] to highlight important features in chest X-ray images and suppress irrelevant features. The AGs assign weights to the input images to emphasize important features of COVID-19 and pneumonia, making the predictions more contextualized;

- EfficientNetB0 [67] is a baseline model of the EfficientNet family with an effective model scaling mechanism. This model uses a compound scaling strategy with optimized accuracy and parameter efficiency (FLOPS) to obtain EfficientNetB1-B7;

- ResNet-50 [68] is another state-of-the-art deep neural network architecture with residual blocks and skip connection mechanisms that leverage residual design principles and lightweight design patterns;

- A five-layer convolutional neural network comprising three convolution layers, two max pooling layers, one global average pooling layer, one flatten layer, and two dense layers.

- Backdoor attack configuration and triggers: In this study, we focused on the BadNet attacks proposed by Gu et al. [17]. BadNets contaminates a part of the training images with fixed pixel-pattern triggers, assigns them attacker-specified target labels, and feeds them into the DNN along with benign samples for training. We assume that attackers have access to the training dataset and can insert triggers into small portions of data samples. To assess the algorithm’s resilience against backdoor attacks and compare it with other state-of-the-art attack and defense methods, we incorporated backdoor triggers into of the training datasets. Then, we modified their labels to match the attacker’s desired label. Two types of triggers are used to evaluate the efficiency of the attack and the DB-COVIDNet defense method:

- 25-pixel trigger, which is a white square located at the bottom-right corner of the randomly selected images and used to evaluate the efficiency of the attack and our defense method, as shown in Figure 3;

- Apple Co. logo [18], which is randomly assigned to of the training datasets for conducting extensive experiments with another type of BD attack.

- Evaluation Metrics: Backdoor attackers aim to perform well on test samples and maintain high accuracy levels, because it is critical for an attacker to remain undetected during the user's evaluation process. Defense methods, on the other hand, may decrease accuracy, so the defender also strives to recover clean accuracy. Therefore, accuracy (ACC) is one of the metrics we measured, which is the percentage of clean data classifications made correctly over the training dataset. Furthermore, the attack success rate (ASR) is calculated as the percentage of backdoor instances that are classified as the target. For an attack to be considered effective, the model must classify a source image with the trigger pasted on it as the target. A backdoor attacker therefore aims to maintain high accuracy on clean data and a high attack success rate, while defenders should minimize the attack success rate and recover clean data accuracy as much as possible.
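The trigger insertion and the two metrics described in the list above can be sketched in a few lines of plain Python. This is a minimal illustration, not the authors' implementation: the helper names are hypothetical, images are assumed to be 2-D grayscale lists, the 25-pixel trigger is assumed to be a 5 × 5 square, `poison_fraction` is a free parameter (no specific value from the paper is assumed), and excluding samples whose true label already equals the target from the ASR is an assumption of this sketch.

```python
import random


def stamp_square_trigger(image, size=5, value=255):
    """Return a copy of a 2-D grayscale image with a size x size white square
    in the bottom-right corner (5 x 5 = 25 pixels, an assumed layout)."""
    height, width = len(image), len(image[0])
    stamped = [row[:] for row in image]
    for r in range(height - size, height):
        for c in range(width - size, width):
            stamped[r][c] = value
    return stamped


def poison_dataset(images, labels, target_label, poison_fraction, seed=0):
    """Stamp the trigger on a random subset and relabel it to the target.

    poison_fraction is a hypothetical parameter of this sketch.
    """
    rng = random.Random(seed)
    n_poison = int(round(poison_fraction * len(images)))
    chosen = set(rng.sample(range(len(images)), n_poison))
    new_images, new_labels = [], []
    for i, (img, lab) in enumerate(zip(images, labels)):
        if i in chosen:
            new_images.append(stamp_square_trigger(img))
            new_labels.append(target_label)
        else:
            new_images.append([row[:] for row in img])
            new_labels.append(lab)
    return new_images, new_labels, sorted(chosen)


def clean_accuracy(predictions, true_labels):
    """ACC: percentage of clean samples classified correctly."""
    correct = sum(p == t for p, t in zip(predictions, true_labels))
    return 100.0 * correct / len(true_labels)


def attack_success_rate(triggered_predictions, source_labels, target_label):
    """ASR: percentage of triggered samples classified as the target label.

    Samples whose true label is already the target are skipped (assumption).
    """
    eligible = [p for p, t in zip(triggered_predictions, source_labels)
                if t != target_label]
    if not eligible:
        return 0.0
    hits = sum(p == target_label for p in eligible)
    return 100.0 * hits / len(eligible)
```

A defender would want `clean_accuracy` to stay high while `attack_success_rate` falls; an attacker wants both to be high.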

4.2. Performance Evaluations with COVID-19 Dataset on Proposed Method

We investigated the performance of our proposed dropout-bagging defense network against backdoor attacks and compared it with state-of-the-art schemes. This section includes the performance of the backdoor attack and the defense method on three CNNs (AC-COVIDNet, ResNet50, and EfficientNetB0) as the base models of the bagging network.

4.2.1. Experimental Results on DNN with Backdoor Attack

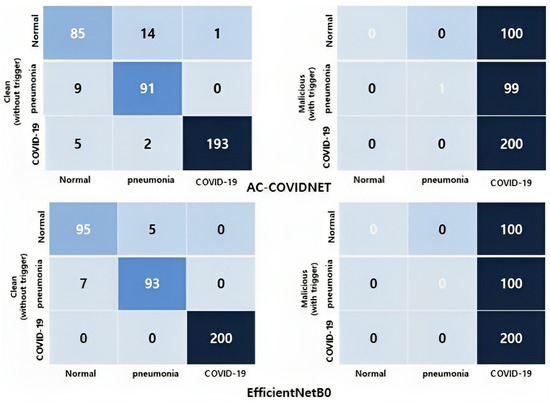

In the first step, we assessed the performance of the three deep neural network architectures in order to conduct a comparative analysis. We used the triggered COVIDx dataset as input data to assess their performance against backdoor attacks and the proposed defense algorithm. As described in the previous section, we mainly use the lightweight AC-COVIDNet for our experiments, which leverages attention gates [66] and a contrastive loss function [69] with high architectural diversity. EfficientNetB0 [67] and ResNet-50 are the other state-of-the-art deep neural networks we used as the main inducers. Table 2 shows performance statistics for the three models against backdoor attacks. It can be observed from Table 2 that all deep learning algorithms are vulnerable to backdoor attacks. To illustrate the vulnerability, we consider BadNet attacks [17]. ACC is the accuracy on clean data; because BDs are designed to be stealthy, the backdoored model does not display suspicious behavior on clean inputs, and ACC remains high at , , and for AC-COVIDNet, ResNet-50, and EfficientNetB0, respectively. Since ACC only reflects clean data samples, the performance of the backdoor attack on the contaminated test dataset was measured with ASR. As can be seen from Table 2, AC-COVIDNet and ResNet-50 have an attack success rate of almost , while EfficientNetB0 has a success rate. The results indicate that these models are highly vulnerable to model poisoning, and attackers can easily exploit the ability of BDs to influence networks with a small trigger. Additionally, the confusion matrices of AC-COVIDNet and EfficientNetB0 are provided to indicate the performance of backdoor attacks in more detail. As can be seen in Figure 5, the left panels of the confusion matrices show the high prediction performance of AC-COVIDNet and EfficientNetB0 on clean images without triggers. 
The confusion matrices reveal high accuracy and a low false-positive rate. Nearly all of the COVID-19 images were correctly classified as COVID-19 images by the models. The right panels show the result of the attack in the presence of triggers. The confusion matrices demonstrate that, before the defense method is applied, the backdoor attack is highly successful against the backdoored AC-COVIDNet and EfficientNetB0. Furthermore, since we consider COVID-19 as the target class, the backdoored models classify almost all of the normal and pneumonia images as COVID-19 cases. As a result, the network is heavily biased, and the confusion matrices show a high number of false positives for COVID-19.

Table 2. Backdoor attack performance on state-of-the-art CNN-based bagging networks.

Figure 5. Confusion matrices of backdoored AC-COVIDNet and EfficientNetB0 on test images (COVIDx dataset) without triggers (clean images) and with triggers.

Furthermore, it is worth noting that the efficacy of backdoor attacks is highly dependent on the insertion of appropriate triggers. The presence of the trigger significantly affects two critical aspects of the attack: effectiveness and stealthiness. Effectiveness refers to the likelihood of the neural network model recognizing the trigger and producing a high-probability output label selected by the attacker for the input containing the trigger. Stealthiness refers to the trigger’s ability to remain invisible to the network operator, making the input with the trigger appear legitimate while still causing the neural network to produce malicious output. As such, we conducted further evaluations by introducing a different type of trigger in the form of the Apple company logo, where triggers are randomly located. A more complex trigger, such as an Apple logo, may be more difficult for the network to detect than a square pixel pattern because it contains more information and detail. The Apple logo consists of multiple curves, lines, and colors, while a square pixel pattern is a regular pattern of square pixels. However, a more complex trigger may also be more noticeable to human operators, making the attack less stealthy. With the aim of assessing the performance of our proposed algorithm, we contaminated of the training dataset of EfficientNetB0 with the aforementioned logo. The model was found to be susceptible to this form of attack, with an ASR of , while still achieving an accuracy of on benign samples, as shown in Table 3.

Table 3. Another type of backdoor attack performance.

Additionally, in order to fully assess the performance of DB-COVIDNet, we conducted an evaluation of the performance of our proposed defense method against untargeted backdoor attacks. Previous experiments were focused on targeted BadNets, where the attacker chose a specific target label to insert BDs. The results of our experiments are presented in Table 4, where it can be seen that DB-COVIDNet was successful in removing trigger-related features. An untargeted backdoor attack aims to cause the model to predict any label that is different from the true label for inputs that contain the trigger. However, targeted attacks can be more effective if the attacker has a clear goal and wants to manipulate the model’s predictions for a specific type of input. On the other hand, targeted attacks are also more difficult to carry out as the attacker needs to have knowledge of the trigger and the target label. The results of these experiments indicate that the proposed algorithm is efficient and the model is robust enough to defend against both targeted and untargeted attacks. The attack success rate decreased from almost to , while the accuracy (ACC) remained high at , which is comparable to the results obtained with square pattern triggers. Moreover, in terms of untargeted attacks, our method achieved outstanding results, with an accuracy of and an attack success rate of . However, to further evaluate the results of our proposed algorithm, we decided to test its resilience against untargeted BDs. As indicated in Table 3, the model was found to be vulnerable to untargeted backdoor attacks, with an accuracy rate of and an ASR of . For this experiment, we used the MNIST dataset.
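The untargeted success criterion stated above (any predicted label that differs from the true label counts as a success for the attacker) differs from the targeted one only in the comparison. A minimal sketch, with a hypothetical function name:

```python
def untargeted_attack_success_rate(triggered_predictions, true_labels):
    """Percentage of triggered inputs whose prediction differs from the
    true label, regardless of which wrong label was predicted."""
    wrong = sum(p != t for p, t in zip(triggered_predictions, true_labels))
    return 100.0 * wrong / len(true_labels)
```

Under this definition, a triggered input that is still classified correctly counts as a failed attack, whichever class it belongs to.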

Table 4. Performance of different types of DB-COVIDNet against BDs.

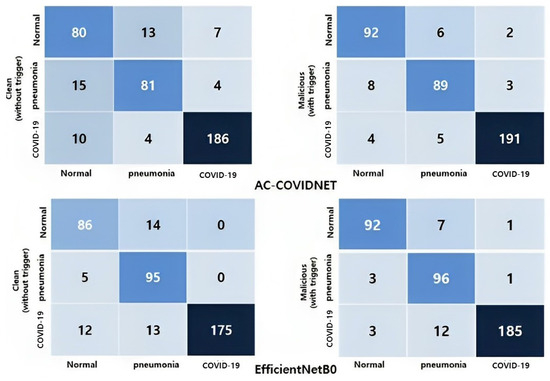

4.2.2. Experimental Results on DNN with the Proposed Dropout-Bagging Algorithm

We use a feature-optimizing dropout-bagging defense algorithm to mitigate backdoors. To achieve the most effective results, we set the dropout ratio in our proposed dropout layer to , resulting in a bagging learning network with 25 base learners. The empirical results reveal the best trade-off between attack success rate and accuracy on clean images, as shown in Table 5. We first investigated the proposed DB-COVIDNet algorithm (with AC-COVIDNet as the main inducer) on benign samples without BDs. Clean DB-COVIDNet has an accuracy of nearly while following the same hyperparameters as the backdoored network. Then, we evaluated the performance of the proposed defense method with the triggered dataset. Although the accuracy of clean data for DB-COVIDNet is equal to , the attack success rate has shown a significant reduction from to . The training loss of each individual network in the bagging algorithm converged quickly and stabilized after epochs. The combination of individual independently trained models in the bagging algorithm leads to better generalization and robustness of the model. Additional training of the dropout-bagging algorithm on the COVIDx dataset showed that the proposed defense method is also very robust against backdoor attacks for both ResNet-50 and EfficientNetB0. According to Table 5, the attack success rate decreased sharply from almost to and for ResNet-50 and EfficientNetB0, respectively. Further, the confusion matrices shown in Figure 6 depict the performance of our proposed defense technique against backdoor attacks for our bagging-based algorithm when the base learners are AC-COVIDNet and EfficientNetB0. The high values of true positive (TP) and true negative (TN) for the three classes on clean images and triggered inputs reveal the robust performance of the proposed defense method. 
Despite the crippling effects of BDs on the network’s classification performance, the TN values for the COVID-19 class of the dropout-bagging algorithm (with AC-COVIDNet as the main inducer) are equal to 189 and 193 on clean test images and malicious input, respectively. The proposed method produced the same excellent results when EfficientNetB0 was used as the primary inducer, as shown in Figure 6. Therefore, the evaluation of our defense method for BDs entails determining whether our system is capable of dealing with cyber threats and backdoor attacks, which are constantly evolving.

Table 5. Dropout-bagging defense algorithm performance on state-of-the-art CNN-based bagging networks.

Figure 6. Confusion matrices of optimized dropout-bagging-based AC-COVIDNet and EfficientNetB0 defense methods on test images (COVIDx dataset) without a trigger (clean images) and with a trigger.

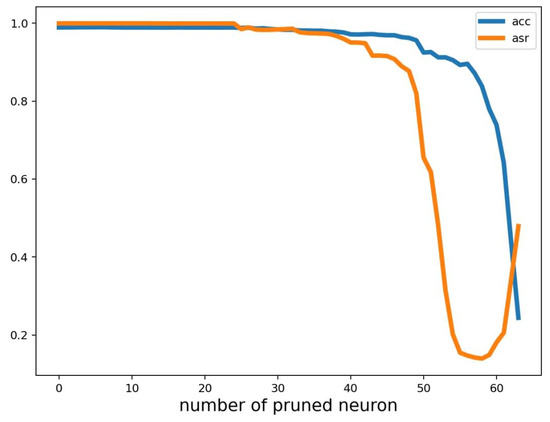

4.2.3. Performance Evaluation with Another State-of-the-Art Defense Algorithm

To assess the efficiency of the proposed defense approach, we conducted a comparative analysis with another defense method, namely fine-pruning (FP), proposed by Liu et al. [21]. FP combines pruning and fine-tuning to counter backdoor attacks. Specifically, it prunes the backdoored neural network to eliminate backdoor neurons and subsequently fine-tunes the pruned network to restore lost accuracy on clean inputs. Through experimentation with a baseline attack, FP successfully eliminates backdoor neurons and restores accuracy. We evaluated the effectiveness of these defenses using the widely recognized MNIST benchmark dataset. For our evaluation, we employed a five-layer convolutional neural network comprising three convolution layers, two max pooling layers, one global average pooling layer, one flatten layer, and two dense layers. The hyperparameters were kept consistent across both defense algorithms. Table 6 presents a comparison of the accuracy (ACC) and attack success rate (ASR) achieved by DB-COVIDNet and fine-pruning. The results show that DB-COVIDNet attained an ASR of , while fine-pruning achieved an ASR of with 56 pruned neurons. Notably, both defense mechanisms exhibit high levels of accuracy, with DB-COVIDNet and fine-pruning attaining accuracy rates of and , respectively. Based on the results of our analysis, DB-COVIDNet demonstrates a comparable level of performance with fine-pruning, albeit with a lesser degree of sensitivity towards its hyperparameters. We have included additional results of fine-pruning with varying numbers of pruned neurons in Figure 7. The results demonstrate that, with 20 pruned neurons, the accuracy achieved is high at , but the attack success rate is also high at approximately . On the other hand, with a larger number of pruned neurons (63), the accuracy achieved is only , and the attack success rate is also low at . 
The process of selecting the optimal hyperparameters for fine-pruning can be challenging since it involves balancing the need to remove backdoor neurons while minimizing the removal of dormant neurons. Dormant neurons are the ones that do not contribute significantly to the network’s output, but they may become activated under specific circumstances, such as backdoor attacks. Therefore, selecting an appropriate pruning rate that balances the removal of backdoor and dormant neurons is critical to the effectiveness of the fine-pruning technique. This sensitivity to hyperparameters requires careful consideration to achieve optimal performance. Moreover, in fine-pruning, after training the network with malicious data, validation data are used to assess the neurons’ activation. This validation process serves as a post-attack defense mechanism. In contrast, DB-COVIDNet utilizes a pre-attack defense mechanism that involves the nullification of trigger-related features during the training phase, prior to the occurrence of backdoor attacks. As a result, there is no need for intricate calculations following each training procedure. Therefore, in light of these observations, it appears that DB-COVIDNet showcases a significantly superior level of performance in comparison to fine-pruning, which is widely acknowledged as one of the most efficacious defense mechanisms against backdoor attacks. Nonetheless, it should be noted that one of the major advantages of fine-pruning (FP) over our proposed method is its superior robustness against various types of backdoor attacks. Specifically, while our method may not demonstrate robust performance against multi-trigger backdoor attacks, fine-pruning has been shown to exhibit resilience to this type of attack.
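To make the role of the "number of pruned neurons" hyperparameter concrete, the pruning step of fine-pruning can be sketched as ranking neurons by their mean activation on clean validation data and removing the least active ones first. This is an illustrative sketch only: the function names and the plain-list activation format are assumptions, and the subsequent fine-tuning step is omitted.

```python
def mean_activations(activations):
    """activations: one list per clean validation sample, holding one
    activation value per neuron. Returns the per-neuron mean."""
    n_samples = len(activations)
    n_neurons = len(activations[0])
    return [sum(sample[j] for sample in activations) / n_samples
            for j in range(n_neurons)]


def neurons_to_prune(activations, num_pruned):
    """Indices of the num_pruned neurons that are least active on clean
    data; these dormant neurons are the pruning candidates."""
    means = mean_activations(activations)
    order = sorted(range(len(means)), key=lambda j: means[j])
    return sorted(order[:num_pruned])
```

Choosing `num_pruned` too small can leave backdoor neurons in place, while choosing it too large starts removing neurons that clean inputs rely on, which is the trade-off discussed above.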

Table 6. Comparison results of DB-COVIDNet and fine-pruning.

Figure 7. Impact of number of pruned neurons on the performance of fine-pruning.

4.2.4. Performance Evaluation with Other Datasets on the Proposed Method

This section evaluates our defense algorithm on different datasets. Since the proposed defense method is inspired by the dropout algorithm and is based on feature optimization of input images, we decided to evaluate the performance of our method with various datasets. For this purpose, we chose COVIDx [47], Retinal OCT images [61], and the Natural Scenes Around the World dataset [63] with a limited number of classes, while MNIST was considered as a multiclass benchmark dataset. The details of these datasets, including the number of classes, target labels for the attack, and number of images in training and test datasets, are shown in Table 1. The effectiveness of a backdoor attack is influenced by several factors, including the trigger’s size and location in the training data, the model’s complexity, and the nature of the input examples used to activate the backdoor. To protect against backdoor attacks, it is essential to detect the trigger and either remove it from the training data or develop techniques to reduce its impact on the model’s behavior. To evaluate the effectiveness of our proposed defense algorithm against backdoor attacks, we conducted several experiments using datasets with varying characteristics, including data complexity and number of classes. We compared the results of the MNIST dataset, a simple multiclass benchmark dataset, with those of the COVIDx, OCT, and Intel datasets, which have fewer classes and more complex images. We chose two datasets from the medical field and two general datasets. We contaminated of the aforementioned datasets with a -pixel pattern trigger, which is located in the bottom-right corner of each image as shown in Figure 3. As can be seen from Table 7, we investigate the performance of the dropout-bagging defense algorithm with AC-COVIDNet as the original inducer in this investigation. 
When AC-COVIDNet is attacked, the accuracy of the clean images is >90%, utilizing of backdoor images in the training phase. Then, we triggered the test datasets to evaluate the backdoor attack performance for the four datasets. The backdoored AC-COVIDNet achieved high ASR across the four datasets, which are almost , , , and when trained on the malicious COVIDx, OCT, MNIST, and Intel datasets, respectively.

Table 7. Comparison of accuracy and ASR of the proposed defense algorithm with different malicious datasets.

Furthermore, the results in Table 7 reveal that the backdoor attack can easily cause networks trained on different kinds of datasets to misclassify. After optimizing the features through our defense method, we train our algorithm with these datasets again to evaluate the defense algorithm’s robustness with different datasets. Despite MNIST’s small image size and simplicity compared to other state-of-the-art datasets, our experimental results indicate that our defense algorithm performs well on clean images while decreasing the attack success rate to , , , and for the COVIDx, OCT, MNIST, and Intel datasets, respectively, regardless of the number of classes in each dataset and their complexity.

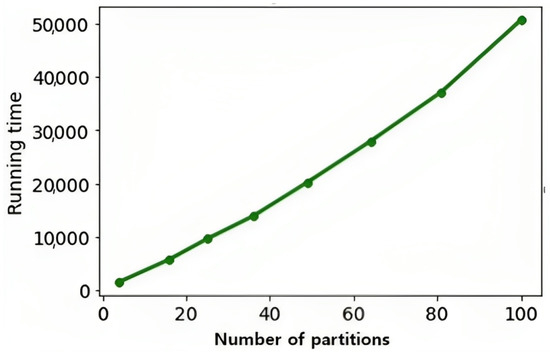

4.2.5. Performance Evaluation with Different Dropout Ratio

To study DB-COVIDNet with optimum dropout ratio , we have studied the dropout effect using with 25 base networks in the bagging algorithm in the previous section. The dropout ratio is equivalent to the ratio of the number of dropped partitions to the total number of partitions. In this study, we considered 25 partitions while we dropped one of the partitions through our proposed dropout algorithm. The proposed dropout rate is of high importance and should be tuned for our defense algorithm, which is dependent on the number of partitions. AC-COVIDNet is the main base classifier in this study, and we tuned the dropout layer ratio as empirically while considering 25 base networks in the bagging algorithm. Therefore, during the training process, for each base model, of the features will be dropped, while the dropped features of each network do not overlap with the nullified features of any other network. The experimental results show outstanding performance for our defense algorithm, which can decrease the attack success rate from to . Owing to the partitioning mechanism proposed in the methodology, we had 25 feature partitions (patches) in the bagging network, and the trigger-related features were dropped through one of them. Figure 8 shows the comparison results of the training time for our dropout-bagging algorithm with different values of the dropout ratio. The x-axis is the number of partitions and the y-axis is the training time for four epochs. For a large number of partitions, that is, when the dropout ratio is tuned to small values, as can be seen in Figure 8, the training time increases considerably, which raises the computational cost of the training process. On the other hand, for a large dropout ratio, the network has to drop a significant part of the features. 
For example, for , we have four partitions, in which of features should be dropped, which might lead to a decrease in the accuracy of each base inducer and affect the final classification performance. 
Although the scenario for tuning the proposed dropout rate for this defense algorithm is quite practical since it depends on the size of the dataset, network architecture, and available GPUs, we will improve it in our future work.
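The relationship between the number of partitions and the dropout ratio can be illustrated with a short sketch. The square grid layout, the helper names, and the plain-list image format are assumptions made for illustration; the paper's own partitioning is defined in its methodology section. Each base network would receive the same image with a different, non-overlapping partition zeroed out.

```python
import math


def partition_boxes(height, width, num_partitions):
    """Split an image into a square grid of non-overlapping patches.
    Returns (row_start, row_end, col_start, col_end) boxes, one per patch."""
    grid = math.isqrt(num_partitions)
    if grid * grid != num_partitions:
        raise ValueError("this sketch assumes a square number of partitions")
    boxes = []
    for gr in range(grid):
        for gc in range(grid):
            r0, r1 = gr * height // grid, (gr + 1) * height // grid
            c0, c1 = gc * width // grid, (gc + 1) * width // grid
            boxes.append((r0, r1, c0, c1))
    return boxes


def drop_partition(image, box):
    """Return a copy of a 2-D image with every pixel inside box set to 0."""
    r0, r1, c0, c1 = box
    return [[0 if (r0 <= r < r1 and c0 <= c < c1) else v
             for c, v in enumerate(row)]
            for r, row in enumerate(image)]


def dropout_ratio(num_partitions, num_dropped=1):
    """Ratio of dropped partitions to all partitions."""
    return num_dropped / num_partitions
```

Dropping one of 25 partitions gives a ratio of 1/25 = 0.04 per base network, whereas four partitions give 1/4 = 0.25, which illustrates why a small number of partitions removes a large share of the features from every inducer.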

Figure 8. The effect of different partitions for feature-optimizing section on the training time.

5. Discussion

5.1. Evaluation of the Proposed Dropout Algorithm

A backdoor attack is a type of cyberattack that causes the network to misclassify inputs as the attacker’s desired label by inserting malicious triggers inside seemingly clean data. The BD attack has no effect on the validation accuracy of the model for benign samples and only exhibits malicious behavior when triggered, so the attacker can carry out the attack undetected. When contaminated test data are fed to a poisoned network, the DNN tends to classify those images as the attacker’s desired label, as shown in Figure 5. These experiments demonstrate that the network has been strongly biased and overfitted. The only difference between clean images and the triggered test images is the presence of triggers. The model treats the trigger-related features as ordinary network features; hence, during the training process, poisoned samples can easily affect the decision boundaries in the feature space, as can be seen in the experimental results section. In other words, because of the impact of backdoor triggers, the model predicts the attacker-chosen target label, which causes a high attack success rate. Therefore, we can conclude that triggers are the main cause of overfitting. It seems that the backdoored network pays more attention to trigger-related features than other features, which is why a backdoored neural network tends to have biased misclassification toward the attacker’s desired target label.

Currently, there are several approaches to alleviating the problem of overfitting. One of these methods is applying a dropout layer. Given the benefits of the dropout layer in preventing network overfitting, we decided to use its inherent properties in our network. This prevents our network from relying too much on some features, specifically trigger-related features, and improves the network’s ability to take all features into account. However, the standard dropout nullifies a different random set of features at each step; thus, based on our observations, the randomness of the standard dropout is not efficient in this method. The reason is that we assumed in this study that the trigger has a square pattern with a number of pixels placed in the image’s bottom-right corner; thus, randomly dropping out features may not necessarily nullify trigger-related features. In the building blocks of convolutional neural networks, the first and main layers are usually convolution layers, which are entrusted with extracting features from inputs. The first convolutional layers extract simple features, and more complex features are extracted in the following layers. Furthermore, due to the dimension reduction property of the convolution layers, specifically max pooling layers, dropout layers are not implemented in the feature extracting parts but are normally utilized in the classification parts. On the other hand, in this paper, we assumed that the trigger has a rectangular pattern that is located in the corner of the images. Since we do not have any knowledge of how convolution works in deeper layers or how they detect meaningful features, the trigger-related features may be modified, combined, reshaped, and relocated in the deeper convolution layers. So, we decided to drop them in the first layer, where the features are raw and not combined, before the decision boundaries change in feature space.
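A rough calculation shows why random dropout is unlikely to remove a localized trigger. The sketch below assumes element-wise dropout applied independently to each input pixel at rate `drop_rate`, and a 25-pixel trigger; both the function name and the independence assumption are illustrative and are not taken from the paper.

```python
def prob_all_trigger_pixels_dropped(drop_rate, trigger_pixels=25):
    """Probability that independent per-pixel dropout at rate drop_rate
    zeroes every pixel of a trigger made of trigger_pixels pixels."""
    return drop_rate ** trigger_pixels


# Even an aggressive rate of 0.5 almost never removes the whole trigger.
p = prob_all_trigger_pixels_dropped(0.5)  # 0.5 ** 25, about 2.98e-08
```

By contrast, dropping a whole spatial partition that contains the trigger removes it with certainty for the corresponding base network, which is the motivation for abandoning the randomness of standard dropout.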

The efficacy of our proposed algorithm is contingent upon the quality of the underlying model architecture and its accuracy during training on benign samples. In instances where the model is weak with low accuracy on clean data, implementation within the dropout-bagging algorithm may lead to a significant reduction in accuracy upon triggering by backdoor attacks. Conversely, a well-designed architecture can facilitate the model’s robustness against backdoor attacks.

5.2. Impact of Trigger Shape on DB-COVIDNet

As stated, backdoor attacks heavily rely on the trigger, which can be determined by its size or shape and can significantly affect two crucial properties of the attack: effectiveness and stealthiness. Stealthiness refers to the trigger’s ability to remain undetected by the user while causing the model to produce malicious output. However, the larger the trigger pattern, the higher the risk of detection by the user. Therefore, our proposed partitioning method ensures that triggers that maintain the stealthiness property of the backdoor attack will be removed. Consequently, our method demonstrates robust performance for two types of triggers, the square pattern and logo, as shown in Table 4. Evidently, if the trigger had been inserted at a random location in the training dataset, it would have been removed by the partitioning mechanism.

When we refer to multi-trigger attacks, we mean that there are multiple triggers in the input data that could activate the backdoor attack. In such cases, our proposed partitioning method may not be effective since the triggers may be spread across different partitions. Therefore, our current defense method may not be able to effectively remove all triggers and prevent the backdoor attack from being activated. To address this limitation, further optimizations are required, such as developing a more sophisticated partitioning mechanism that can identify and group together all triggers that could activate the backdoor attack, regardless of their location in the input data. Such optimizations would require significant research and development efforts, and therefore, they are considered as future work.

5.3. Evaluation of the Voting Method in the Bagging Algorithm

According to the pixel patterns of the trigger, we aim to modify the dropout in order to nullify the feature partition that includes trigger-related inputs. However, we abandoned the randomness property of the traditional dropout, and the defender has no knowledge of the exact location of the triggers. Therefore, we worked on a mechanism that guarantees the removal of triggered features to compensate for the loss of the randomness property of dropout. We addressed this problem through a bagging algorithm. Ideally, the defender would determine which inducer has dropped the trigger-related features. Therefore, in this study, we aimed for a network designed to facilitate this ideal situation. Although the usual voting methods in bagging networks rely on majority or average voting strategies, we proposed a new voting system that is specific to our proposed defense method. For this purpose, we assumed k single inducers in our bagging-based network. The first layer of the k inducers is the proposed dropout layer. We consider as the ratio of the dropped areas to the total features. If the user has properly tuned the chosen , the triggered partition will be nullified through one of the classifiers while other inducers have no interference. To clarify, suppose that the network has been poisoned by BD triggers. Due to the building block of our bagging-based algorithm, which is explained in Section 3, inducers have been highly biased because of the presence of the trigger, and they classify the inputs as the attacker-chosen target label; hence, all of them exhibit a high attack success rate, which the experimental results in Figure 5 for backdoored AC-COVIDNet confirm. On the other hand, the trigger-related features have been dropped through the proposed dropout layer for one of the networks, and thus this network, which is regarded as the “kth” network, behaves like a normal neural network in the absence of triggers. 
Therefore, it is expected that this network will show optimized results. Our voting method is divided into two parts; in this case, the voting system takes the result of the kth network as the final result based on the first condition of the voting mechanism. Its output is therefore considered the final output of the algorithm, thus ensuring convergence again. On the other hand, the voting method is also expected to work well when the dataset is clean and there are no malicious BDs. In this situation, although we dropped a certain percentage of features () through the dropout layer, the empirical results illustrated that it did not affect the overall performance of the network. Moreover, instead of training different classifiers, we used a single deep neural network with different feature spaces but the same hyperparameters. Although these models train independently, they tend to yield similar predictions. Hence, we decide on majority voting in this case by using the second condition of the voting mechanism. The dropout layer only drops a small percentage of features (), which is compensated by the bagging mechanism to ensure network convergence.
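The two-part voting idea can be sketched as follows. The exact conditions are defined in Section 3 of the paper and are not reproduced here, so the rule below is a hypothetical stand-in: if exactly one inducer disagrees with an otherwise unanimous ensemble, its prediction is taken as final (the "kth network" case); in every other case, plain majority voting is used. The function name `db_vote` is likewise an assumption.

```python
from collections import Counter


def db_vote(predictions):
    """predictions: one predicted class label per inducer.

    Hypothetical rule (not the paper's exact conditions):
      1. a single dissenter against an otherwise unanimous ensemble wins;
      2. otherwise, the majority label wins.
    """
    counts = Counter(predictions)
    if len(counts) == 2 and len(predictions) > 2:
        (major, n_major), (minor, n_minor) = counts.most_common(2)
        if n_minor == 1 and n_major == len(predictions) - 1:
            return minor
    return counts.most_common(1)[0][0]
```

One limitation of this stand-in is that a single inducer that errs on a clean input would also win the vote, so a practical rule would need an additional check such as prediction confidence.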

6. Conclusions

Experiments on the COVIDx, OCT, and Intel datasets with three CNNs as inducers in the bagging algorithm (AC-COVIDNet, ResNet50, and EfficientNetB0) showed that eliminating the malicious features before the attack is completed with the DB-COVIDNet method maintained a low attack success rate and provided efficient robustness against BDs. Most detection and mitigation strategies rely on detecting backdoor characteristics after the attack has been completed. As a result, they are time-consuming and, despite some surprising results, are unable to completely remove the BD’s effect. The significance of our DB-COVIDNet defense method is most obvious when the algorithm removes the BD’s malicious features before the attack takes effect. When using AC-COVIDNet as the main inducer, the attack success rate drops from to , demonstrating that the proposed method is effectively robust against backdoor attacks. However, the accuracy of the contaminated dataset is , which needs to be improved. The reason is that, in the proposed algorithm, triggers exist in the networks before they have been removed from one of them. This problem affects the voting method. Furthermore, the practicality of adjusting the dropout ratio in this defense algorithm depends on factors such as dataset size, network architecture, and the availability of GPUs, which drive the computational cost of the training process. Hence, in our future work, we will try to improve the voting mechanism by adding weights to the classifiers in the bagging algorithm for high-confidence results to achieve higher accuracy. In addition, we plan to reduce the computational cost of the training process by optimizing the tuning method for the proposed dropout ratio. 
We aimed at eliminating the backdoor attack’s malicious features before the attack impacts the network’s performance, and the proposed DB-COVIDNet demonstrated effectiveness and robustness by applying the inherent properties of the dropout and bagging algorithms before the attack.
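As an illustration of the weighted voting mentioned as future work, the following is a minimal sketch of one possible scheme. It is an assumption rather than a method evaluated in this paper: each classifier votes for its top class, and the vote is weighted by that classifier's top predicted probability unless explicit weights are supplied. The function name `weighted_vote` and the example probabilities are hypothetical.

```python
def weighted_vote(probabilities, weights=None):
    """Combine per-classifier class probabilities into one predicted class.

    probabilities: list of lists, one probability vector per classifier.
    weights: optional per-classifier weights; by default each classifier is
             weighted by its own top probability (an assumed notion of confidence).
    """
    n_classes = len(probabilities[0])
    if weights is None:
        weights = [max(p) for p in probabilities]
    scores = [0.0] * n_classes
    for p, w in zip(probabilities, weights):
        scores[p.index(max(p))] += w  # each classifier votes for its top class
    return max(range(n_classes), key=scores.__getitem__)


# Two low-confidence votes for class 0 against one high-confidence vote for class 2.
probs = [
    [0.40, 0.35, 0.25],
    [0.40, 0.30, 0.30],
    [0.02, 0.03, 0.95],
]
print(weighted_vote(probs))                     # confidence-weighted decision
print(weighted_vote(probs, weights=[1, 1, 1]))  # equal weights reduce to majority voting
```

With equal weights the function behaves like plain majority voting, so the two schemes can be compared on the same predictions.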

Author Contributions

Conceptualization, S.S.; Investigation, S.S.; Writing – original draft, S.S.; Writing – review and editing, K.J.H. and I.S.; Supervision, K.J.H. and I.S.; Funding acquisition, K.J.H. and I.S. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Korea Institute of Energy Technology Evaluation and Planning (KETEP) and the Ministry of Trade, Industry and Energy (MOTIE) of the Republic of Korea (No. 20224000000020).

Data Availability Statement

Not Applicable.

Conflicts of Interest

The authors declare no conflict of interest.
