1. Introduction

Throughout this paper, let three real Hilbert spaces be given, each with inner product $\langle\cdot,\cdot\rangle$ and induced norm $\|\cdot\|$. Assume that two finite index sets are given, whose cardinalities are arbitrary positive integers. We assume that all the problems and iterative schemes considered below are well defined.

Let two families of nonlinear operators be given, indexed by the first and the second index set, respectively, and let a nonempty closed convex subset be given for each index. In order to find a general common solution to variational inequalities and operator equations (GCSVIOE), we consider the following problem (1):

It is not difficult to see that (1) can also be reformulated as the following nonlinear operator system (see [1]):

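where … is a positive constant, I is the identity operator, and, for each index, the metric projection onto the corresponding closed convex subset is used. For a nonempty closed convex set $C$, the metric projection $P_C$ assigns to each point $x$ the unique point $P_Cx\in C$ fulfilling

$$\|x-P_Cx\|=\min_{y\in C}\|x-y\|,$$

i.e., $P_Cx$ is characterized by $\langle x-P_Cx,\,y-P_Cx\rangle\le 0$ for all $y\in C$.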
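The metric projection has a closed form for simple sets. The following minimal Python sketch computes the projection onto a box and onto a closed ball in $\mathbb{R}^n$. These two sets are illustrative assumptions, since the sets in the paper are general. The sketch then checks the characterization $\langle x-P_Cx,\,y-P_Cx\rangle\le 0$ on sample points $y\in C$. All helper names are hypothetical.

```python
import math


def inner(u, v):
    """Euclidean inner product <u, v>."""
    return sum(a * b for a, b in zip(u, v))


def project_box(x, lower, upper):
    """Metric projection onto the box {y : lower[i] <= y[i] <= upper[i]}."""
    return [min(max(xi, lo), hi) for xi, lo, hi in zip(x, lower, upper)]


def project_ball(x, center, radius):
    """Metric projection onto the closed ball with the given center and radius."""
    diff = [xi - ci for xi, ci in zip(x, center)]
    dist = math.sqrt(inner(diff, diff))
    if dist <= radius:
        return list(x)
    scale = radius / dist
    return [ci + scale * d for ci, d in zip(center, diff)]


def satisfies_characterization(x, p, samples, tol=1e-12):
    """Check <x - p, y - p> <= 0 for every sample point y of the set."""
    r = [xi - pi for xi, pi in zip(x, p)]
    return all(inner(r, [yi - pi for yi, pi in zip(y, p)]) <= tol for y in samples)


# Usage: project (2, -3) onto [-1, 1]^2 and (3, 4) onto the closed unit ball.
p_box = project_box([2.0, -3.0], [-1.0, -1.0], [1.0, 1.0])
p_ball = project_ball([3.0, 4.0], [0.0, 0.0], 1.0)
grid = [[-1 + 0.5 * i, -1 + 0.5 * j] for i in range(5) for j in range(5)]
disk = [[s * math.cos(k * math.pi / 18), s * math.sin(k * math.pi / 18)]
        for k in range(36) for s in (0.0, 0.5, 1.0)]
print(p_box, p_ball)
print(satisfies_characterization([2.0, -3.0], p_box, grid),
      satisfies_characterization([3.0, 4.0], p_ball, disk))
```

Sampling can only falsify the characterization, not prove it. It is meant as a sanity check of the definition.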

If the operator appearing in (2) is replaced by a general nonlinear operator for every index, then one can easily see that GCSVIOE is a special case of the following common solution problem for the operator system (CSOS) involving two families of nonlinear operators. The CSOS aims to find a point satisfying the following system (3):

Example 1. When both index sets are singletons and the two corresponding operators are denoted by S and T, respectively, (3) reduces to the following special nonlinear operator system (4):

Example 2. Under a suitable choice of the operators for every index, (3) reduces to the following family of operator equations (5), which was considered by Gu and He [2].

Furthermore, the split common fixed point problem (SCFPP), which is used to describe intensity-modulated radiation therapy, can be transformed into a CSOS.

Example 3. Let a bounded linear operator A between two of the Hilbert spaces be given, together with two families of nonlinear operators, one acting on the domain of A and one on its codomain. The SCFPP is to find a point that is a common fixed point of the first family and whose image under A is a common fixed point of the second family. This problem was first introduced by Censor and Segal [3] in 2009 and has attracted widespread attention; see [4]. According to Lemma 3.2 in [5], the SCFPP can be transformed into a CSOS built from the two families, A, and the adjoint operator $A^*$ of A.

Remark 1. CSOSs have a wide range of applications in physics [6], mechanics [7], control theory [8], economics [9], information science [10], and other problems in pure and applied mathematics. They also appear in closely related optimization problems [11,12].
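When each family in Example 3 consists of a single metric projection, the SCFPP reduces to the split feasibility problem. The sketch below is a hedged illustration and not the construction of [5]. It runs Byrne's CQ iteration $x\leftarrow P_C\big(x-\gamma A^*(I-P_Q)Ax\big)$ with $0<\gamma<2/\|A\|^2$. In $\mathbb{R}^n$ the adjoint $A^*$ is the transpose. The matrix, the two boxes, and the step size are assumptions chosen only for the example.

```python
def mat_vec(A, x):
    """Matrix-vector product for a matrix stored as a list of rows."""
    return [sum(a * b for a, b in zip(row, x)) for row in A]


def transpose(A):
    """Transpose; in R^n this is the adjoint A* of A."""
    return [list(col) for col in zip(*A)]


def box_projection(x, lower, upper):
    """Metric projection onto an axis-aligned box."""
    return [min(max(v, lo), hi) for v, lo, hi in zip(x, lower, upper)]


def cq_iteration(A, proj_C, proj_Q, x0, gamma, iters=500):
    """Byrne's CQ iteration x <- P_C(x - gamma * A^T (I - P_Q) A x)."""
    At = transpose(A)
    x = list(x0)
    for _ in range(iters):
        Ax = mat_vec(A, x)
        residual = [a - q for a, q in zip(Ax, proj_Q(Ax))]  # (I - P_Q) A x
        step = mat_vec(At, residual)                        # A^T (I - P_Q) A x
        x = proj_C([xi - gamma * si for xi, si in zip(x, step)])
    return x


# Illustrative data: C = [0, 10]^2, Q = [1, 2]^2, and A^T A = 2I, so ||A||^2 = 2.
A = [[1.0, 1.0], [1.0, -1.0]]


def P_C(v):
    return box_projection(v, [0.0, 0.0], [10.0, 10.0])


def P_Q(v):
    return box_projection(v, [1.0, 1.0], [2.0, 2.0])


x = cq_iteration(A, P_C, P_Q, [8.0, 8.0], gamma=0.5)
print(x, mat_vec(A, x))
```

Any point with $x\in C$ and $Ax\in Q$ is a fixed point of the CQ map. This is the sense in which the split problem becomes a fixed-point (operator) system.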

In order to solve (5), Gu and He [2] introduced the following multistep iterative process with errors, starting from an initial point in C:

where C is a nonempty closed convex subset of the underlying space and the three real control sequences satisfy certain conditions. They also proved that (6) converges strongly to a common solution when the operators involved are nonexpansive operators in a Banach space. Afterward, Wang et al. [13] considered the case in which the operators in (5) are the more general asymptotically demicontractive operators. They introduced the following iteration scheme:

where the two real control sequences satisfy suitable conditions, and they also obtained a strong convergence theorem in real Hilbert spaces.

In order to solve (3) when it involves a family of nonexpansive operators and a family of asymptotically nonexpansive operators, Yolacan and Kiziltunc [14] proposed, in a special case of this setting, the following multistep approximation algorithm (YKMSA), referred to as (8):

On the other hand, it is well known that the implicit rule is a powerful tool in the field of ordinary differential equations. It is also widely used to construct iteration schemes for (asymptotically) nonexpansive-type operators; see, for example, [15,16,17,18,19] and the references therein. Of particular note, Aibinu and Kim [20] compared the convergence rates of two viscosity implicit iterations, (9) and (10). In both, four parameter sequences satisfy specific conditions, and S and T are two self-operators. They also proved that iteration (10) converges faster than (9) under some prerequisites.
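Because the unknown appears on both sides of an implicit update, each step requires solving a fixed-point equation. The sketch below assumes the viscosity implicit midpoint form $x_{n+1}=\alpha_nf(x_n)+(1-\alpha_n)T\big(\frac{x_n+x_{n+1}}{2}\big)$, which is not necessarily the exact form of (9) or (10). It also assumes that $T$ is $L$-Lipschitzian. The inner map is then a contraction with modulus $(1-\alpha_n)L/2$ whenever this is below 1, so Picard iteration solves the step. The operators in the usage example are hypothetical.

```python
import math


def norm(v):
    return math.sqrt(sum(c * c for c in v))


def implicit_midpoint_step(x, alpha, f, T, L, tol=1e-12, max_inner=1000):
    """Solve y = alpha*f(x) + (1 - alpha)*T((x + y)/2) for y by Picard iteration.

    If T is L-Lipschitzian, the map y -> right-hand side has modulus
    (1 - alpha)*L/2, so for a modulus below 1 the solution is unique.
    """
    if (1 - alpha) * L / 2 >= 1:
        raise ValueError("inner map is not guaranteed to be a contraction")
    fx = f(x)
    y = list(x)  # warm start at the current iterate
    for _ in range(max_inner):
        mid = [(a + b) / 2 for a, b in zip(x, y)]
        y_new = [alpha * u + (1 - alpha) * v for u, v in zip(fx, T(mid))]
        if norm([a - b for a, b in zip(y_new, y)]) < tol:
            return y_new
        y = y_new
    return y


# Usage: f(x) = x/2 is a contraction; the 90-degree rotation is nonexpansive
# (L = 1) with Fix = {0}; alpha_n = 1/(n + 2).
def f(v):
    return [0.5 * c for c in v]


def rotate(v):
    return [-v[1], v[0]]


x = [3.0, -4.0]
for n in range(50):
    x = implicit_midpoint_step(x, 1.0 / (n + 2), f, rotate, L=1.0)
print(norm(x))
```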

Implicit rules are effective, but their structure is more complex (see [19]). As a result, there is little research on implicit iterations for the more general asymptotically demicontractive operators. Thus, the following question arises naturally:

Question 1. How can a novel iteration scheme with an implicit rule be established for the CSOS (3) involving asymptotically demicontractive operators? What conditions should be satisfied to guarantee strong convergence?

Motivated and inspired by the above-mentioned works, we propose a novel multistep implicit iteration algorithm (MSIIA) to answer Question 1. The basic definitions of the related nonlinear operators and some useful lemmas are given in Section 2. In Section 3, we present the details of the proposed MSIIA and prove the main results. Two numerical experiments and an application to GCSVIOE are presented in Section 4. Finally, Section 5 gives a brief summary of this paper. Our results extend and generalize those of Gu and He [2], Wang et al. [13], and Yolacan and Kiziltunc [14].

2. Preliminaries

In a real Hilbert space, two basic inequalities, referred to as (11) and (12), hold for all of its elements.
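The displayed inequalities (11) and (12) did not survive extraction. As a hedged illustration, the sketch below numerically checks two standard real-Hilbert-space relations that are commonly listed at this point. Whether they coincide exactly with (11) and (12) is an assumption. Expanding the squares shows that the slack of relation (a) equals $\|y\|^2$, which is why it always holds.

```python
import random


def inner(u, v):
    return sum(a * b for a, b in zip(u, v))


def check_relations(trials=1000, dim=5, seed=0):
    """Numerically test two standard real-Hilbert-space relations in R^dim.

    (a) ||x + y||^2 <= ||x||^2 + 2<y, x + y>
    (b) ||t x + (1 - t) y||^2 = t||x||^2 + (1 - t)||y||^2 - t(1 - t)||x - y||^2
    Returns the smallest slack of (a) and the largest absolute error of (b).
    """
    rng = random.Random(seed)
    min_slack, max_err = float("inf"), 0.0
    for _ in range(trials):
        x = [rng.uniform(-1, 1) for _ in range(dim)]
        y = [rng.uniform(-1, 1) for _ in range(dim)]
        t = rng.random()
        s = [a + b for a, b in zip(x, y)]
        min_slack = min(min_slack, inner(x, x) + 2 * inner(y, s) - inner(s, s))
        z = [t * a + (1 - t) * b for a, b in zip(x, y)]
        d = [a - b for a, b in zip(x, y)]
        rhs = t * inner(x, x) + (1 - t) * inner(y, y) - t * (1 - t) * inner(d, d)
        max_err = max(max_err, abs(inner(z, z) - rhs))
    return min_slack, max_err


slack, err = check_relations()
print(slack, err)
```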

In the remainder of this section, we recall some useful definitions and lemmas.

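Definition 1. Let $C$ be a nonempty closed convex subset of a real Hilbert space $H$. A nonlinear operator $T:C\to C$ with fixed point set $F(T)=\{x\in C: Tx=x\}$ is said to be

- (i) a $\rho$-contraction if there exists a constant $\rho\in[0,1)$ such that $\|Tx-Ty\|\le\rho\|x-y\|$ for all $x,y\in C$;

- (ii) …;

- (iii) $L$-Lipschitzian-continuous if there exists a constant $L>0$ such that $\|Tx-Ty\|\le L\|x-y\|$ for all $x,y\in C$;

- (iv) $L$-uniformly Lipschitzian-continuous if there exists a constant $L>0$ such that $\|T^nx-T^ny\|\le L\|x-y\|$ for all $x,y\in C$ and all $n\ge1$;

- (v) $\delta$-demicontractive if $F(T)\neq\emptyset$ and there exists a constant $\delta\in[0,1)$ such that
$$\|Tx-p\|^2\le\|x-p\|^2+\delta\|x-Tx\|^2\quad\text{for all }x\in C,\ p\in F(T),$$
which is also equivalent to $\langle x-Tx,\,x-p\rangle\ge\frac{1-\delta}{2}\|x-Tx\|^2$;

- (vi) asymptotically demicontractive if $F(T)\neq\emptyset$ and there exist a sequence $\{k_n\}\subset[1,\infty)$ with $k_n\to1$ and a constant $\delta\in[0,1)$ such that
$$\|T^nx-p\|^2\le k_n\|x-p\|^2+\delta\|x-T^nx\|^2\quad\text{for all }x\in C,\ p\in F(T),\ n\ge1,$$
which is also equivalent to
$$\langle x-T^nx,\,x-p\rangle\ge\frac{1-\delta}{2}\|x-T^nx\|^2-\frac{k_n-1}{2}\|x-p\|^2.$$

For convenience, an operator satisfying (vi) with the sequence $\{k_n\}$ and the constant $\delta$ is called a $(k_n,\delta)$-asymptotically demicontractive operator.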
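The two equivalent forms in Definition 1(v) can be compared numerically. The sketch assumes the illustrative operator $T=-2I$ on $\mathbb{R}^3$ with $F(T)=\{0\}$. This operator satisfies (v) with $\delta=1/3$ but is not quasi-nonexpansive, since $\|Tx\|=2\|x\|$. Expanding $\|Tx-p\|^2=\|(x-p)-(x-Tx)\|^2$ shows that, pointwise, the slack of the first form is exactly twice the slack of the second. The two forms therefore hold or fail together.

```python
import random


def inner(u, v):
    return sum(a * b for a, b in zip(u, v))


def demicontractive_slacks(T, p, delta, samples):
    """Smallest slack over the samples of the two equivalent forms of Definition 1(v).

    Form A: ||x - p||^2 + delta*||x - Tx||^2 - ||Tx - p||^2 >= 0
    Form B: <x - Tx, x - p> - (1 - delta)/2 * ||x - Tx||^2 >= 0
    Pointwise, slack A = 2 * slack B.
    """
    slack_a = slack_b = float("inf")
    for x in samples:
        tx = T(x)
        x_p = [a - b for a, b in zip(x, p)]
        tx_p = [a - b for a, b in zip(tx, p)]
        r = [a - b for a, b in zip(x, tx)]
        slack_a = min(slack_a, inner(x_p, x_p) + delta * inner(r, r) - inner(tx_p, tx_p))
        slack_b = min(slack_b, inner(r, x_p) - (1 - delta) / 2 * inner(r, r))
    return slack_a, slack_b


def minus_two(v):
    """T = -2I. In general -kI (k > 1) is ((k - 1)/(k + 1))-demicontractive."""
    return [-2.0 * c for c in v]


rng = random.Random(0)
samples = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(500)]
origin = [0.0, 0.0, 0.0]
print(demicontractive_slacks(minus_two, origin, 1 / 3, samples))  # equality case
print(demicontractive_slacks(minus_two, origin, 0.2, samples))    # delta too small
```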

Lemma 1 ([21]). Let C be a nonempty closed convex subset of a real Hilbert space $H$, and let $T:C\to C$ be an L-uniformly Lipschitzian-continuous and asymptotically demicontractive operator. Then, $F(T)$ is a closed convex subset of C.

Definition 2. Let $T:C\to C$ be an operator. Then, $I-T$ is said to be demiclosed at zero if, for any sequence $\{x_n\}\subset C$, the following implication holds:
$$x_n\rightharpoonup x\ \text{and}\ \|x_n-Tx_n\|\to0\ \Longrightarrow\ x=Tx,$$
where ⇀ and → denote weak and strong convergence, respectively.

Definition 3 ([22]). An operator $T:C\to C$ is called uniformly asymptotically regular if, for any bounded subset $K$ of $C$, the following equality holds:
$$\lim_{n\to\infty}\sup_{x\in K}\|T^{n+1}x-T^nx\|=0.$$

Example 4 ([23]). Let …, and let T be defined by (13), where … is a given constant. Then, T is a uniformly Lipschitzian-continuous and asymptotically demicontractive operator that is uniformly asymptotically regular for K, and $I-T$ is demiclosed at 0. The fixed point of T is 0.

Example 5. Let C be a nonempty closed convex subset of $H$, and let the operator $F$ be $\beta$-inverse strongly monotone, i.e., $\langle Fx-Fy,\,x-y\rangle\ge\beta\|Fx-Fy\|^2$ for all $x,y\in C$. Then, … is uniformly asymptotically regular if the constant satisfies … .

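Proof. Since F is $\beta$-inverse strongly monotone, the Cauchy–Schwarz inequality gives $\|Fx-Fy\|\le\frac{1}{\beta}\|x-y\|$. Hence, F is also $\frac{1}{\beta}$-Lipschitzian-continuous, and it is strongly monotone with a suitable modulus.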

Now, we have the following inequality for all

:

It follows from

that

which means that

T is uniformly asymptotically regular. □
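Example 5 can be checked exactly in the simplest case. The sketch assumes that $F$ is linear and diagonal with positive entries $\mu_i$, so that $F$ is $\beta$-inverse strongly monotone with $\beta=1/\max_i\mu_i$. It also assumes that the associated operator is $T=I-\lambda F$. This is an assumption, because the operator of Example 5 was lost in extraction. Under these assumptions, the supremum in Definition 3 over a ball has a closed form. It tends to 0 when $0<\lambda<2\beta$ and can blow up otherwise.

```python
def sup_step_on_ball(eigs, lam, n, radius=1.0):
    """sup over ||x|| <= radius of ||T^(n+1) x - T^n x|| for T = I - lam*F, F = diag(eigs).

    T^(n+1) - T^n = T^n (T - I) = diag((1 - lam*mu)^n * (-lam*mu)), and for a
    diagonal operator the supremum over the ball is radius * max |diagonal entry|.
    """
    return radius * max(abs(1.0 - lam * mu) ** n * lam * mu for mu in eigs)


eigs = (1.0, 4.0)        # F = diag(1, 4) is beta-inverse strongly monotone, beta = 1/4
beta = 1.0 / max(eigs)
for lam in (0.4, 0.6):   # 0.4 < 2*beta = 0.5 < 0.6
    print(lam, [sup_step_on_ball(eigs, lam, n) for n in (0, 10, 40)])
```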

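Lemma 2 ([24]). Let $\{a_n\}$ be a sequence of nonnegative real numbers such that
$$a_{n+1}\le(1-\gamma_n)a_n+\gamma_n\sigma_n,\quad n\ge0,$$
where $\{\gamma_n\}$ and $\{\sigma_n\}$ satisfy the following conditions:

- (i) $\{\gamma_n\}\subset(0,1)$ and $\sum_{n=0}^{\infty}\gamma_n=\infty$;

- (ii) $\limsup_{n\to\infty}\sigma_n\le0$.

Then, $\lim_{n\to\infty}a_n=0$.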
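Lemma 2 is used in the usual form $a_{n+1}\le(1-\gamma_n)a_n+\gamma_n\sigma_n$ with $\sum\gamma_n=\infty$ and $\limsup\sigma_n\le0$. The source statement was lost, so this form is an assumption. The sketch simulates the equality case to show both the conclusion and why divergence of $\sum\gamma_n$ matters. The sequences $\gamma_n=1/(n+2)$ and $\sigma_n=1/\sqrt{n+1}$ are illustrative choices. With the summable choice $\gamma_n=1/(n+2)^2$ and $\sigma_n=0$, the telescoping product equals $(N+2)/(2(N+1))$, so $a_N$ stays near $1/2$.

```python
import math


def simulate(gamma, sigma, n_steps, a0=1.0):
    """Iterate the equality case a_{n+1} = (1 - gamma(n)) * a_n + gamma(n) * sigma(n)."""
    a = [a0]
    for n in range(n_steps):
        g = gamma(n)
        a.append((1.0 - g) * a[-1] + g * sigma(n))
    return a


# Both conditions of Lemma 2 hold: sum of 1/(n+2) diverges and sigma_n -> 0.
a = simulate(lambda n: 1.0 / (n + 2), lambda n: 1.0 / math.sqrt(n + 1), 10000)
# Condition (i) fails: gamma_n = 1/(n+2)^2 is summable, and a_n does not tend to 0.
b = simulate(lambda n: 1.0 / (n + 2) ** 2, lambda n: 0.0, 10000)
print(a[-1], b[-1])
```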

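Lemma 3 ([25]). Suppose that $\{s_n\}$ is a real number sequence that does not decrease at infinity, that is, there exists a subsequence $\{s_{n_i}\}$ of $\{s_n\}$ such that $s_{n_i}<s_{n_i+1}$ for all $i\ge0$. Let $\{\tau(n)\}_{n\ge n_0}$ be the sequence of integers defined by
$$\tau(n)=\max\{k\le n:\ s_k<s_{k+1}\},$$
where $n_0$ is large enough for this set to be nonempty. Then, the following statements hold:

- (i) $\{\tau(n)\}_{n\ge n_0}$ is a nondecreasing sequence, and $\lim_{n\to\infty}\tau(n)=\infty$;

- (ii) $\max\{s_{\tau(n)},s_n\}\le s_{\tau(n)+1}$ for all $n\ge n_0$.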
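The index sequence of Lemma 3 is easy to compute on a finite prefix. The sketch below uses the definition $\tau(n)=\max\{k\le n: s_k<s_{k+1}\}$ in the standard form of this lemma. It asserts properties (i) and (ii) along the way. The test sequence $s_n=(2+(-1)^n)/(n+1)$ is a hypothetical example that increases at every odd index.

```python
def tau(s, n):
    """tau(n) = max{k <= n : s[k] < s[k+1]}, or None if no such k exists."""
    for k in range(n, -1, -1):
        if s[k] < s[k + 1]:
            return k
    return None


def check_lemma3(s):
    """Compute tau(n) on a finite prefix and assert properties (i) and (ii) of Lemma 3."""
    taus = []
    for n in range(len(s) - 1):         # s[n + 1] must exist
        t = tau(s, n)
        if t is None:                   # tau(n) is only defined for n >= n_0
            continue
        assert max(s[t], s[n]) <= s[t + 1]           # property (ii)
        taus.append(t)
    assert all(a <= b for a, b in zip(taus, taus[1:]))  # property (i): nondecreasing
    return taus


s = [(2 + (-1) ** n) / (n + 1) for n in range(200)]
taus = check_lemma3(s)
print(taus[:6], taus[-1])
```

On a finite prefix, the limit $\tau(n)\to\infty$ can only be observed as growth of the last computed values.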

Lemma 4. In a real Hilbert space, the following inequality holds for all admissible elements and coefficients:

Proof. According to (2), we have

Then, similarly to the above inequality, it can be proved that

This completes the proof. □

3. Main Results

In this section, we first introduce a one-step implicit approximation algorithm for (4) involving a contraction operator and an asymptotically demicontractive operator, and we establish its strong convergence. Going a step further, we turn to (3), which involves a family of contraction operators and a finite family of asymptotically demicontractive operators. For this problem, we propose a multistep implicit iteration method and prove its strong convergence.

Throughout this section, we denote the solution set of (4) by Γ, assume that Γ is nonempty, and let $x^*\in\Gamma$ be a common solution. We introduce the following implicit Algorithm 1.

| Algorithm 1 Novel one-step implicit iteration for CSOS |

Choose an initial point …, and for any … do

where S is a contraction operator and T is an L-uniformly Lipschitzian-continuous and asymptotically demicontractive operator, with …, …, and … . The real sequences …, … and … satisfy the following conditions:

- (i) … are all in …;
- (ii) …;
- (iii) …, …, and …;
- (iv) …, here … and … .

|

Lemma 5. If $\{x_n\}$ is a sequence generated by Algorithm 1, then the following inequality holds:

where … and … are as indicated.

Proof. By Lemma 4, we can easily obtain

Combining the similar terms in (17) yields

As

, since

, we immediately obtain (

14). □

Lemma 6. If $\{x_n\}$ is a sequence generated by Algorithm 1, then the following inequality holds:

Moreover, if the limit of … exists, then … .

Proof. According to (

16), we have

and then

The above inequality is equivalent to

which is the desired inequality.

According to the conditions in Algorithm 1, we have

and

, thus

holds. Assuming that

, we have

Due to the definition of

, one has

, where

is a positive number in

. Thus, we can deduce that

□

Lemma 7. If $\{x_n\}$ is a sequence generated by Algorithm 1 and …, then … as $n\to\infty$.

Proof. According to Lemma 4, we have

Note that

and

, which implies

According to

, we have

, that is

The proof is completed. □

Lemma 8. If $\{x_n\}$ is a sequence generated by Algorithm 1, then the following inequality holds:

where … is defined as in Lemma 5.

Proof. By inequality (11), we have

Note that when

and

, we immediately obtain the desired inequality (19). □

Next, we give a strong convergence theorem for Algorithm 1.

Theorem 1. Let $\{x_n\}$ be a sequence generated by Algorithm 1. If T is uniformly asymptotically regular and $I-T$ is demiclosed at 0, then $\{x_n\}$ converges strongly to a point in Γ.

Proof. According to Lemma 5, we have …, and hence the sequence is bounded. In the sequel, we distinguish two possible cases.

(Case I) If there exists a positive integer such that for all , then we know that exists, and because is bounded, there exists a subsequence of such that . Then, from Lemma 6, it follows that . Since as , one also has .

Recall that

T is uniformly asymptotically regular for

and

is bounded. This means that we can find the nonempty closed convex subset

K of

such that

holds for all

. Then, one has

Next, according to Lemma 7, we get

as

, so

according to the demiclosedness of

. Hence, we have

Letting

, the following inequality holds:

According to Lemma 8 and Lemma 2, we now obtain .

(Case II) Put . If there does not exist a positive integer such that for all , then there exists a subsequence according to Lemma 3 such that and , and is a nondecreasing sequence such that as .

From Lemma 6, it is not difficult to verify the following inequality:

Since

, we also have

Similarly to (20), one can obtain

as

. Then, according to Lemma 7, we have

By the demiclosedness principle, we have

again, and

It follows from Lemma 8 that

Recalling that

, then we have

and so

Finally, since , we obtain , meaning converges strongly to . □

If …, then the implicit Algorithm 1 reduces to the following explicit Algorithm 2.

| Algorithm 2 Novel one-step explicit iteration for CSOS |

Choose an initial point …, and for any … do

where the real sequences …, … and … satisfy the following conditions:

- (i) … and … are all in …;
- (ii) …;
- (iii) …, and …;
- (iv) …, here … and … is a positive number.

|

Corollary 1. Let S be a contraction operator and T an L-uniformly Lipschitzian-continuous and asymptotically demicontractive operator. If T is uniformly asymptotically regular and $I-T$ is demiclosed at 0, then the sequence generated by Algorithm 2 converges strongly to a point in Γ.

In the remainder of this section, we introduce a multistep implicit iteration algorithm (MSIIA) for (3), which involves a family of contraction operators and a finite family of asymptotically demicontractive operators.

Theorem 2. For all indices $i$ and $j$ in the respective index sets, let $S_i$ be a contraction operator and let $T_j$ be a uniformly Lipschitzian-continuous and asymptotically demicontractive operator, where …, …, and … . Moreover, assume that each $T_j$ is uniformly asymptotically regular and that each $I-T_j$ is demiclosed at 0. Let Ξ be the solution set of (3). If $\{x_n\}$ is a sequence generated by Algorithm 3, then $\{x_n\}$ converges strongly to a point in Ξ.

| Algorithm 3 Novel multistep implicit iteration for CSOS |

Choose an initial point …, and for any … do the following:

The real sequences …, … and … satisfy the following conditions:

- (i) … are all in …;
- (ii) …;
- (iii) …, …, and …;
- (iv) …; here, … and … is a positive number.

|

Proof. Let . We divide the whole proof into four parts.

Step 1. First, we prove that the sequence

is bounded. Assuming that

according to Lemma 5, we then obtain

Note that

and letting

be

then, one has

Due to , one has , which means that is bounded, and is bounded.

Step 2. According to Lemma 6, it is easy to see that

and for

, we have

We have the following inequality according to Lemma 8 and (

21):

Because

, we immediately have

Step 4. To prove the strong convergence, we consider two possible cases.

(Case I) If there exists a positive integer such that for all , then one knows that exists, and because is bounded, there exists a subsequence of such that .

According to Step 1, we have

and for all

, the following holds:

Let

. With the conditions in Algorithm 3, it can be seen that

as

for all

; then, we have

which means that

. According to Lemma 6, one directly obtains

Similarly to the proof of Lemma 7, the following equalities hold:

The above equations lead to

. Since

are all uniformly Lipschitzian-continuous operators, one has

Because

is uniformly asymptotically regular for

, we have

According to demiclosedness, we have

and

for every

. Now, we have

. Note that when

, we also have

via a simple calculation. Together with (

22) and Lemma 2, it implies that

.

(Case II) As in the proof of Theorem 1, put … . Suppose there does not exist a positive integer … such that … for all … . Then there exists a subsequence … such that … and … . Moreover, … is a nondecreasing sequence such that … because … .

Since

, it follows from Lemma 6 that

Similarly to Lemma 7, the following equations hold:

Thus we can assume that

. Since

, one gets

By the uniform asymptotic regularity of …, one has … .

and we have

, again by the demiclosedness principle, and

for any

. Then, according to (

22), we have

Recall that

; it is easy to see that

Finally, as

, we also get

, which means

converges strongly to a solution:

of (

3). □

Like the implicit one-step Algorithm 1, the multistep implicit Algorithm 3 can be simplified to the multistep explicit Algorithm 4. For every …, letting …, one easily obtains the following Corollary 2 for Algorithm 4.

| Algorithm 4 Novel multistep explicit iteration for CSOS |

Choose an initial point …, and for any … do the following:

The real sequences …, … and … satisfy the following conditions:

- (i) … are all in …;
- (ii) …;
- (iii) …, and …;
- (iv) …; here, … and … is a positive number.

|

Corollary 2. For all indices $i$ and $j$, let $S_i$ be a contraction operator and $T_j$ be a uniformly Lipschitzian-continuous and asymptotically demicontractive operator. Moreover, suppose that each $T_j$ is uniformly asymptotically regular and that each $I-T_j$ is demiclosed at 0. Then, the sequence generated by Algorithm 4 converges strongly to a common solution of (3).

4. Applications

In this section, we first present two numerical experiments to show the efficiency of the proposed algorithms. Then, by applying the main results, we solve the nonlinear optimization problem GCSVIOE corresponding to (2). All codes were written in Matlab 2020a and run on a laptop with a 2.5 GHz Intel Core i5 processor.

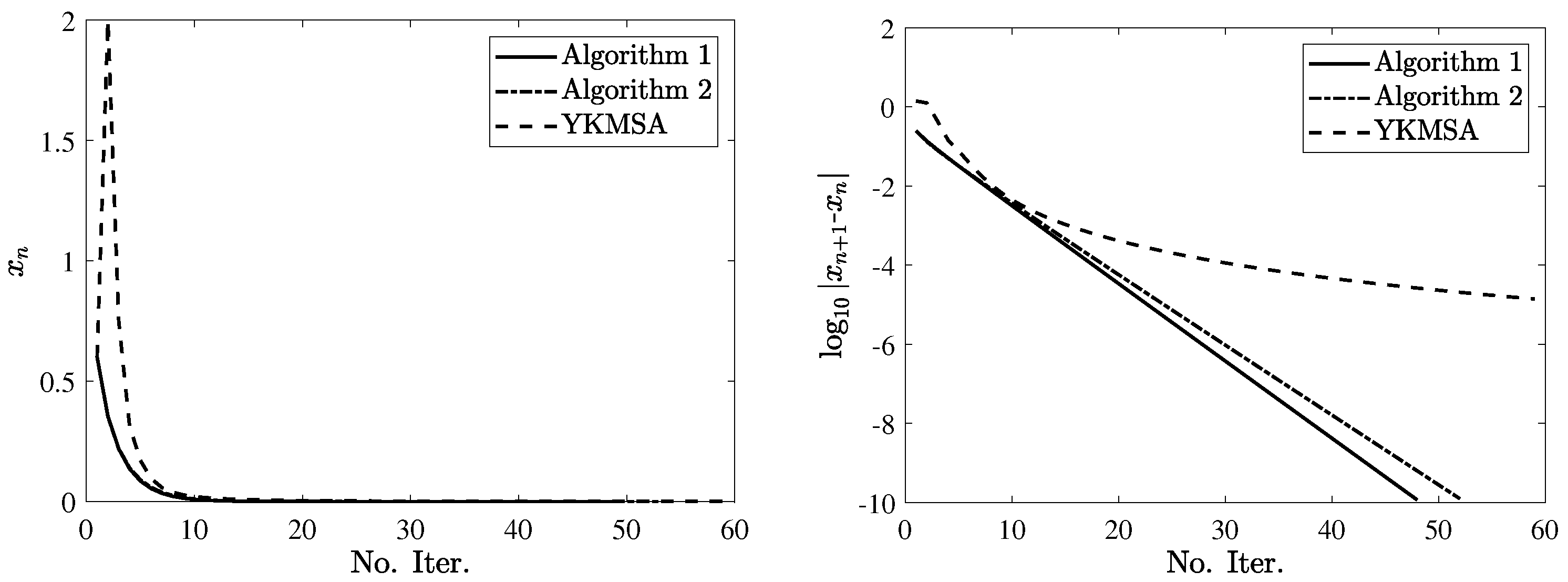

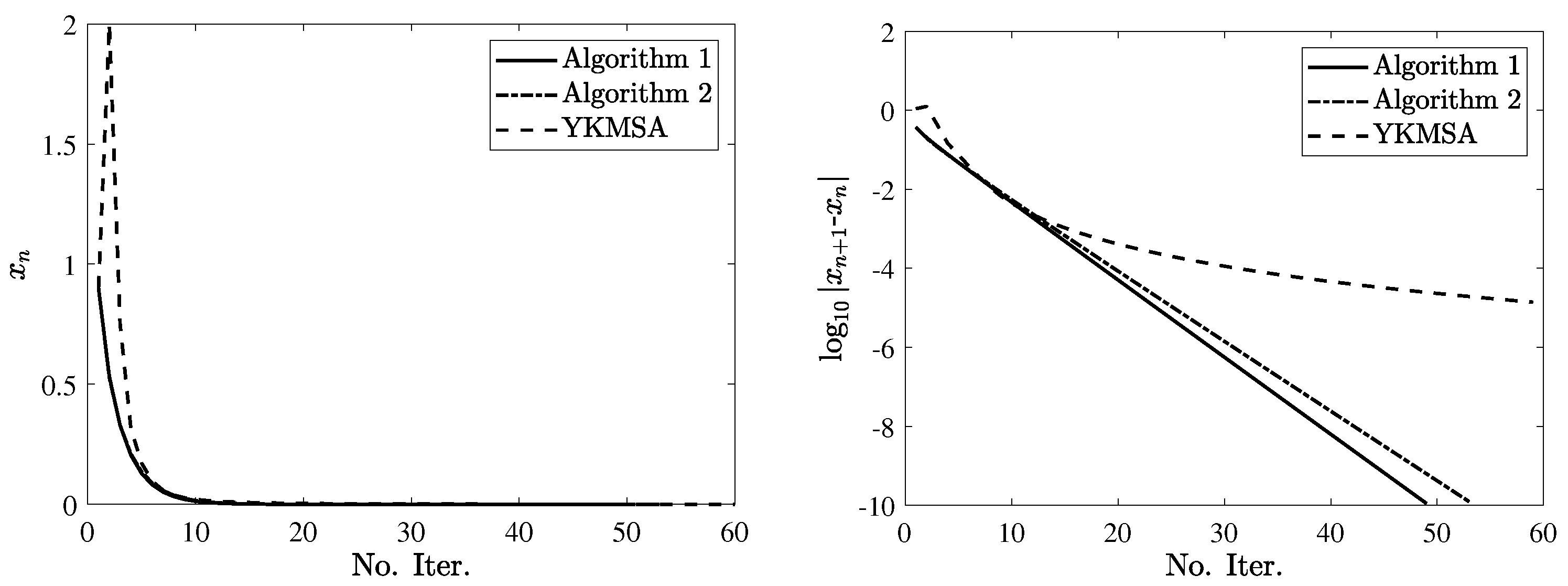

Example 6. Let . Let , and T be defined by (13) with . It is not difficult to verify that is the only common solution of (4). Note that S and T satisfy all conditions in Theorem 1

and Corollary 1

; thus, the sequences generated by Algorithms 1

and 2

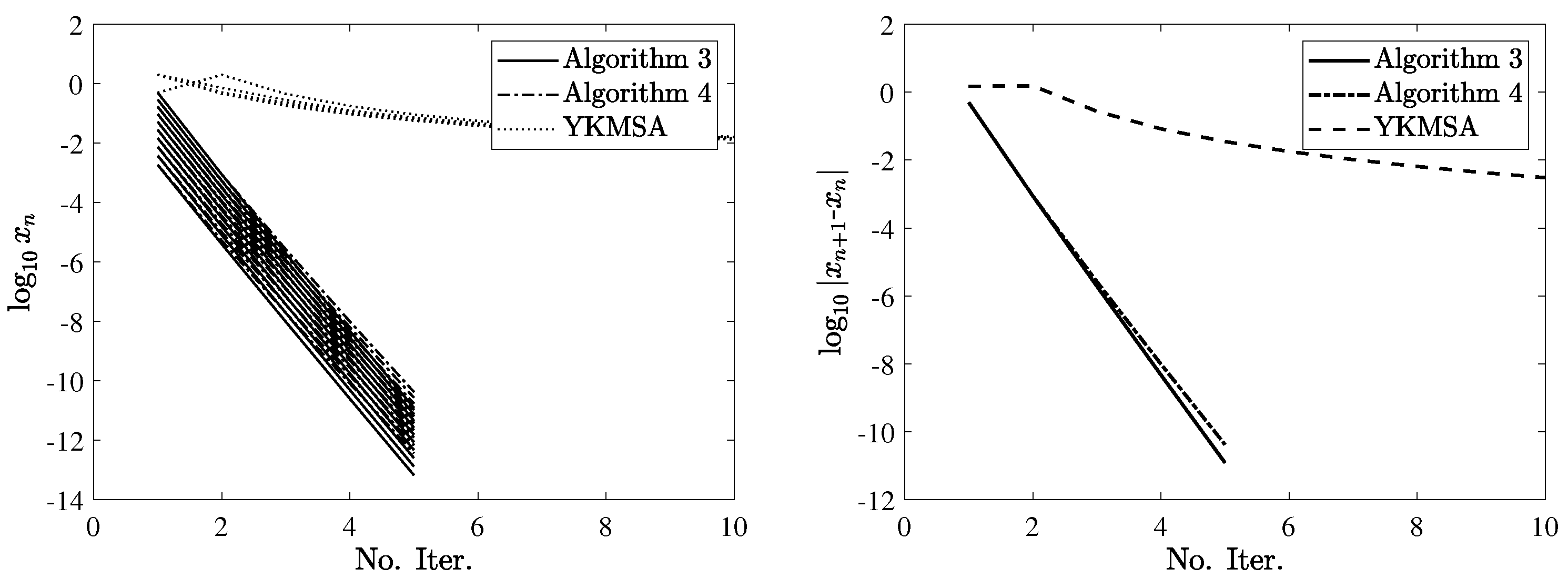

converge to 0 together. Example 7. Let . Let , , and be defined by (13) with . The common solution of (3) is . It is easy to see that and satisfy all conditions in Theorem 2

and Corollary 2

, which leads that the sequences produced by Algorithms 3

and 4

converge to 0. We compare the convergence speed of Algorithms 1–4 to YKMSA [

14] (i.e., (

8)) through Examples 6 and 7. The parameters are set as follows. In the proposed algorithms, set

,

,

,

, and let

. For YKMSA, set

,

,

according to Lemma 3.1 in [14]. The stopping criterion is set as

or a maximum number of iterations of

. Take the initial points as

and

for Example 6 and

for Example 7. The numerical results are shown in Figures 1, 2 and 3. As shown in the figures, both the one-step and the multistep algorithms involving implicit rules perform slightly better than their explicit counterparts. Furthermore, the one-step and multistep YKMSAs [14] contain Halpern-type constants. For this reason, the algorithms proposed in this article converge much faster than YKMSA.
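The exact updates of Algorithms 1–4, their parameters, and the stopping rule were lost in extraction, so the figures cannot be reproduced here. The following sketch only illustrates how an implicit and an explicit one-step variant can be compared under a stopping rule. Everything in it is hypothetical:

- the update $x_{n+1}=\alpha_nS(x_n)+(1-\alpha_n)T(\theta x_n+(1-\theta)x_{n+1})$, where $\theta=1$ gives the explicit variant and $\theta=1/2$ an implicit one;
- $S(x)=x/2$;
- $T$ equal to the projection onto the closed unit ball;
- $\alpha_n=1/(n+2)$;
- the stopping rule $\|x_{n+1}-x_n\|<\text{tol}$.

```python
import math


def norm(v):
    return math.sqrt(sum(c * c for c in v))


def project_unit_ball(v):
    """Illustrative nonexpansive T with Fix(T) = closed unit ball."""
    r = norm(v)
    return list(v) if r <= 1.0 else [c / r for c in v]


def halve(v):
    """Illustrative 1/2-contraction S with fixed point 0."""
    return [0.5 * c for c in v]


def one_step(x, alpha, theta, S, T, tol=1e-13, max_inner=500):
    """Hypothetical update x_next = alpha*S(x) + (1-alpha)*T(theta*x + (1-theta)*x_next).

    theta = 1 gives an explicit step. For theta < 1 the step is implicit and is
    solved by Picard iteration, which contracts with modulus (1-alpha)*(1-theta)
    because T is nonexpansive.
    """
    sx = S(x)
    y = list(x)
    for _ in range(max_inner):
        z = [theta * a + (1 - theta) * b for a, b in zip(x, y)]
        y_new = [alpha * s + (1 - alpha) * t for s, t in zip(sx, T(z))]
        if norm([a - b for a, b in zip(y_new, y)]) < tol:
            return y_new
        y = y_new
    return y


def run(theta, x0, tol=1e-4, max_iter=100000):
    """Iterate with alpha_n = 1/(n+2) until ||x_{n+1} - x_n|| < tol."""
    x = list(x0)
    for n in range(max_iter):
        x_next = one_step(x, 1.0 / (n + 2), theta, halve, project_unit_ball)
        if norm([a - b for a, b in zip(x_next, x)]) < tol:
            return n + 1, x_next
        x = x_next
    return max_iter, x


n_imp, x_imp = run(0.5, [3.0, -4.0])
n_exp, x_exp = run(1.0, [3.0, -4.0])
print("implicit:", n_imp, norm(x_imp), "explicit:", n_exp, norm(x_exp))
```

On this toy problem, the implicit variant stops earlier. Inside the ball, the implicit step multiplies $x_n$ by $1/(1+\alpha_n)$, while the explicit step multiplies it by $1-\alpha_n/2$. Since $1/(1+\alpha)<1-\alpha/2$ for $\alpha\in(0,1)$, the implicit factor is smaller. This says nothing about the paper's Examples 6 and 7.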

By (2), GCSVIOE can be written as a CSOS. We now give the following multistep implicit Algorithm 5 and Theorem 3 to find a solution of GCSVIOE.

| Algorithm 5 Multistep implicit iteration for GCSVIOE |

Choose an initial point …, and for any … do the following:

The real sequences …, … and … satisfy the following conditions:

- (i) … are all in …;
- (ii) …;
- (iii) …, …, and …;
- (iv) …; here, … is a positive constant;
- (v) … .

|

Theorem 3. For all indices, suppose that each operator of the first family is a contraction operator and each operator of the second family is inverse strongly monotone, where each associated set is a nonempty closed convex subset of the underlying Hilbert space. Then, the sequence generated by Algorithm 5 converges strongly to a solution of (2); that is, Algorithm 5 solves GCSVIOE.

Proof. For any

, let

. Then, according to [

26], one has

, which is a nonexpansive operator for all

. Thus, for each

,

is also 1-Lipschitzian-continuous and

-asymptotically demicontractive, and

is demiclosed at 0 [

27]. Recalling Example 5, one can easily see that

is also uniformly asymptotically regular for all

. Hence,

satisfies all the conditions required in Theorem 2.

Then, by directly applying Theorem 2, one sees that the sequence generated by Algorithm 5 converges strongly to a point in . This completes the proof. □
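The key step in the proof of Theorem 3 has two parts. First, $P_C(I-\rho A)$ is nonexpansive when $A$ is $\beta$-inverse strongly monotone and $0<\rho\le2\beta$. Second, its fixed points are exactly the solutions of $\langle Ax^*,\,y-x^*\rangle\ge0$ for all $y\in C$.

A small sketch, under assumptions that do not come from the paper, checks these facts:

- $A=\mathrm{diag}(1,4)$, which is $\tfrac14$-inverse strongly monotone;
- the box $C=[1,2]\times[-1,1]$;
- $\rho=0.4$.

It tests nonexpansiveness on random pairs, runs the Picard iteration, and evaluates the variational-inequality gap at the limit on sample points of $C$.

```python
import random


def A(x):
    """Hypothetical operator diag(1, 4); it is (1/4)-inverse strongly monotone."""
    return [x[0], 4.0 * x[1]]


def P_C(x):
    """Metric projection onto the box C = [1, 2] x [-1, 1]."""
    return [min(max(x[0], 1.0), 2.0), min(max(x[1], -1.0), 1.0)]


def make_T(rho):
    """T = P_C(I - rho*A), nonexpansive for 0 < rho <= 2*beta."""
    return lambda x: P_C([a - rho * b for a, b in zip(x, A(x))])


def dist(u, v):
    return sum((a - b) ** 2 for a, b in zip(u, v)) ** 0.5


def max_expansion(T, trials=1000, seed=1):
    """Largest ||Tx - Ty|| - ||x - y|| over random pairs (<= 0 if nonexpansive)."""
    rng = random.Random(seed)
    worst = -float("inf")
    for _ in range(trials):
        x = [rng.uniform(-5, 5) for _ in range(2)]
        y = [rng.uniform(-5, 5) for _ in range(2)]
        worst = max(worst, dist(T(x), T(y)) - dist(x, y))
    return worst


def vi_gap(x_star, samples):
    """Smallest <A x*, y - x*> over sample points y of C (>= 0 at a VI solution)."""
    ax = A(x_star)
    return min(sum(a * (yi - xi) for a, yi, xi in zip(ax, y, x_star)) for y in samples)


T = make_T(0.4)                 # 0 < rho <= 2*beta = 0.5
x = [2.0, 1.0]
for _ in range(200):
    x = T(x)
grid = [[1.0 + 0.25 * i, -1.0 + 0.25 * j] for i in range(5) for j in range(9)]
print(max_expansion(T), x, vi_gap(x, grid))
```

In this particular example, $A$ is also strongly monotone, so $T$ is even a contraction and the Picard iteration converges to $x^*=(1,0)$. In the general setting of Theorem 3, only nonexpansiveness is available, which is why Algorithm 5 is needed.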