Abstract

The generalized penalized constrained regression (G-PCR) is a penalized model for high-dimensional linear inverse problems with structured features. This paper presents a sharp error performance analysis of the G-PCR in the over-parameterized high-dimensional setting. The analysis is carried out under the assumption of a noisy or erroneous Gaussian features matrix. To assess the performance of the G-PCR problem, the study employs multiple metrics such as prediction risk, cosine similarity, and the probabilities of misdetection and false alarm. These metrics offer valuable insights into the accuracy and reliability of the G-PCR model under different circumstances. Furthermore, the derived results are specialized and applied to well-known instances of G-PCR, including $\ell_1$-norm penalized regression for sparse signal recovery and $\ell_2$-norm (ridge) penalization. These specific instances are widely utilized in regression analysis for purposes such as feature selection and model regularization. To validate the obtained results, the paper provides numerical simulations conducted on both real-world and synthetic datasets. Using extensive simulations, we show the universality and robustness of the results of this work to the assumed Gaussian distribution of the features matrix. We empirically investigate the so-called double descent phenomenon and show how optimal selection of the hyper-parameters of the G-PCR can help mitigate this phenomenon. The derived expressions and insights from this study can be utilized to optimally select the hyper-parameters of the G-PCR. By leveraging these findings, one can make well-informed decisions regarding the configuration and fine-tuning of the G-PCR model, taking into consideration the specific problem at hand as well as the presence of noisy features in the high-dimensional setting.

Keywords:

penalized regression; prediction risk; cosine similarity; probability of false alarm; double descent; over-parameterization; constrained ridge regression

MSC:

62J05; 62J07; 60G35; 62E20

1. Introduction

1.1. Notations and Definitions

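To avoid confusion, we start by introducing the notations and definitions used throughout this paper. For any positive integer p, let $[p]$ denote the set $\{1, 2, \dots, p\}$. Bold face lower case letters (e.g., θ) represent a column vector, and $\theta_i$ is its ith entry, while $\|\boldsymbol{\theta}\|_q$ is its $\ell_q$-norm. The $\ell_q$-norm of a vector $\boldsymbol{\theta} \in \mathbb{R}^p$ is defined as: $\|\boldsymbol{\theta}\|_q = \left(\sum_{i=1}^{p} |\theta_i|^q\right)^{1/q}$. Upper case bold letters such as X are used to indicate matrices, with $\mathbf{I}_p$ representing the $p \times p$ identity matrix. The symbols $(\cdot)^{-1}$ and $(\cdot)^{\top}$ are the inversion and transpose operations, respectively. We use $\mathbb{P}(\cdot)$ and $\mathbb{E}[\cdot]$ to indicate the probability of an event and the expected value of a random variable, respectively. The notation $\xrightarrow{P}$ is used to represent convergence in probability. We write $X \sim p_X$ to indicate that a random variable X is distributed according to a probability mass (or density) function $p_X$. Particularly, $\mathbf{v} \sim \mathcal{N}(\mathbf{0}_p, \boldsymbol{\Sigma})$ means that the random vector v has a normal distribution with mean vector $\mathbf{0}_p$ and covariance matrix $\boldsymbol{\Sigma}$, where $\mathbf{0}_p$ is the zero vector. For $k \geq 1$, a function $\psi: \mathbb{R}^m \to \mathbb{R}$ is said to be pseudo-Lipschitz of order k if there exists a constant $C > 0$ such that, for all $\mathbf{x}, \mathbf{y} \in \mathbb{R}^m$: $|\psi(\mathbf{x}) - \psi(\mathbf{y})| \leq C \left(1 + \|\mathbf{x}\|_2^{k-1} + \|\mathbf{y}\|_2^{k-1}\right) \|\mathbf{x} - \mathbf{y}\|_2$.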

A function is called separable if , where is a real-valued function. The notation is the indicator function, which is defined as

Finally, we need the following definitions:

- The generalized Moreau envelope function of a proper convex function is defined as

  for , with and . The generalized Moreau envelope given above is an extended version of the well-known Moreau–Yosida envelope function [1].

- The minimizer of the above function is called the generalized proximal operator, which is given as

1.2. Motivation

Suppose we observe a response vector y and a data matrix X according to the linear model

$$\mathbf{y} = \mathbf{X}\boldsymbol{\theta}_0 + \boldsymbol{\epsilon}, \tag{2}$$

where $\boldsymbol{\theta}_0 \in \mathbb{R}^p$ is a vector of coefficients or parameters, or an unknown signal vector, and $\boldsymbol{\epsilon} \in \mathbb{R}^n$ is an error vector. This is also known as a linear inverse problem model [2]. The linear model in (2) appears in many practical problems in engineering and science [3,4]. For example, in statistics and machine learning [5,6,7], y is the response vector (or the output data); X is often called the predictor matrix, features matrix, or design matrix, which collects the input data (or features); $\boldsymbol{\theta}_0$ is the so-called target vector, which is a vector of some weighting parameters or regression coefficients; and ϵ is a random noise term. In the context of compressed sensing [8,9], y represents the measured data, X is a sensing or measurement matrix, $\boldsymbol{\theta}_0$ denotes a signal of interest (to be recovered), and ϵ is a random noise vector. In addition, in signal representation [10,11], y is a signal of interest, the matrix X denotes an over-complete dictionary of elementary atoms, the vector $\boldsymbol{\theta}_0$ contains the representation coefficients of the signal y, and ϵ represents some approximation error. Moreover, in the field of wireless communications [12,13], y represents the received signal, X is the channel matrix between the transmitter and the receiver, $\boldsymbol{\theta}_0$ is the transmitted signal vector, and ϵ is the additive thermal noise.

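In the past, different computational algorithms have been proposed for recovering (estimating) the unknown vector. The simplest and best-known approach is the ordinary least squares (OLS) estimator, which finds an estimate by minimizing the residual sum of squares (RSS), i.e.,

$$\hat{\boldsymbol{\theta}}_{\mathrm{OLS}} = \arg\min_{\boldsymbol{\theta}} \|\mathbf{y} - \mathbf{X}\boldsymbol{\theta}\|_2^2. \tag{3}$$

For the OLS estimator, it is required that $\operatorname{rank}(\mathbf{X}) = p$, i.e., X is a full column rank matrix. In this case, (3) has the following closed-form solution:

$$\hat{\boldsymbol{\theta}}_{\mathrm{OLS}} = (\mathbf{X}^{\top}\mathbf{X})^{-1}\mathbf{X}^{\top}\mathbf{y}. \tag{4}$$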

In many applications, the number of parameters to be recovered, p, is greater than the number of available samples, n, i.e., $p > n$. This scenario is known in the literature as the over-parameterized regime [14] (this case, $p > n$, is also called the “compressed measurement” scenario in the compressive sensing context). Such inverse problems are known to be ill-posed unless the unknown vector is located in a manifold with a considerably lower dimension than the initial ambient dimension p. These vectors are called structured vectors [15]. Examples of structured vectors are vectors with a finite-alphabet structure, sparse and block-sparse structures, low-rankness, etc. [9].
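To make the rank requirement concrete, the following is a minimal NumPy sketch, not taken from the paper, that computes the OLS closed form when X has full column rank and then shows that the Gram matrix becomes rank deficient once p exceeds n. The dimensions, noise level, and function name are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

def ols_normal_equations(X, y):
    """Closed-form OLS estimate; valid only when X has full column rank."""
    return np.linalg.solve(X.T @ X, X.T @ y)

# Well-posed case (n >= p): the closed form agrees with a generic least-squares solver.
n, p = 50, 10  # illustrative sizes (assumption)
X = rng.standard_normal((n, p))
theta_true = rng.standard_normal(p)
y = X @ theta_true + 0.1 * rng.standard_normal(n)
theta_ols = ols_normal_equations(X, y)
theta_lstsq = np.linalg.lstsq(X, y, rcond=None)[0]

# Over-parameterized case (p > n): X^T X is p x p but has rank at most n,
# so it is singular and the closed form is no longer defined.
n2, p2 = 10, 50
X2 = rng.standard_normal((n2, p2))
gram_rank = np.linalg.matrix_rank(X2.T @ X2)
print(gram_rank, "<", p2)
```

In the second case a least-squares solver can still return an interpolating solution, but it is only one of infinitely many, which is why additional structure or penalization is needed.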

Despite being a popular approach, the OLS estimator performs very poorly when applied to ill-posed or under-determined problems [16]. Thus, to solve ill-posed problems, penalization methods are often used. Examples of these methods include penalized least squares (PLS) [17], least absolute shrinkage and selection operator (LASSO) [18], truncated singular value decomposition (SVD) [19], etc.

For structured vectors, the most widely used approach is the penalized M-estimator [20], which finds an estimate of the unknown vector by solving the convex optimization problem

where is a convex loss function that determines how close the estimate is to the linear model . Furthermore, is a convex penalization function that enforces the specific structure (the a priori information) of the unknown vector , and is a penalization factor that is used to balance the two functions. In addition, we assume that is separable, i.e., . Examples of the most popular structure-inducing functions are:

- The $\ell_1$-norm, $\|\cdot\|_1$, induces sparsity structure.

- The nuclear norm, $\|\cdot\|_*$, encourages low-rankness structure, where the nuclear norm of a matrix is defined as the sum of its singular values.

- The mixed $\ell_{1,2}$-norm, $\|\cdot\|_{1,2}$, induces block-sparsity structures.

- The $\ell_\infty$-norm, $\|\cdot\|_\infty$, promotes finite-alphabet (i.e., constant-amplitude) signals.

The choice of the loss function depends on the noise distribution [3] as follows:

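- If the noise is Gaussian-distributed, then we choose the $\ell_2$-norm, $\|\cdot\|_2$, or its square, $\|\cdot\|_2^2$, which is related to the maximum likelihood estimation [10].

- If the noise is sparse (e.g., Laplacian distributed), then one can select the $\ell_1$-norm, $\|\cdot\|_1$.

- If the noise is bounded, then a proper choice is the $\ell_\infty$-norm, $\|\cdot\|_\infty$, and so on.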

Different popular algorithms that correspond to different choices of and include:

- OLS: , as in (3);

- $\ell_2$-penalized LS or ridge regression: ;

- $\ell_1$-penalized LS or LASSO: ;

- group LASSO: ;

- generalized least absolute deviation (LAD): .

The above list is not exhaustive, and many regression, classification, and other statistical learning algorithms can be written in the form of (5).
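As a rough illustration of how the loss/penalty pairs above fit one template, the sketch below evaluates a generic penalized objective of the form loss(y − Xθ) + λ·penalty(θ). The function names, the dictionary of pairs, and the absence of scaling factors such as 1/2 are assumptions made for illustration; the paper's exact normalization may differ.

```python
import numpy as np

def m_estimator_objective(theta, X, y, loss, penalty, lam):
    """Value of loss(y - X theta) + lam * penalty(theta)."""
    return loss(y - X @ theta) + lam * penalty(theta)

def squared_l2(v):
    return float(np.sum(v ** 2))

def l1(v):
    return float(np.sum(np.abs(v)))

def no_penalty(v):
    return 0.0

# Hypothetical registry mapping algorithm names to (loss, penalty) pairs.
ALGORITHMS = {
    "ols": (squared_l2, no_penalty),
    "ridge": (squared_l2, squared_l2),
    "lasso": (squared_l2, l1),
}

X = np.array([[1.0, 0.0], [0.0, 2.0]])
y = np.array([1.0, 2.0])
theta = np.array([1.0, 1.0])  # fits y exactly, so only the penalty contributes
values = {name: m_estimator_objective(theta, X, y, loss, pen, lam=0.5)
          for name, (loss, pen) in ALGORITHMS.items()}
print(values)
```

Swapping the loss for the $\ell_1$-norm would give a least-absolute-deviation style objective under the same template.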

1.3. Summary of Contributions and Related Work

Since Gaussian noise is the most widely encountered noise in practice, we focus in this work on optimization problems involving an $\ell_2$-norm squared loss and a general penalization function. We call this family of problems the Generalized Penalized Regression (G-PR), which solves the following convex optimization problem:

In this paper, we provide a high-dimensional analysis of a constrained version of the G-PR called the G-PCR (as in Equation (9)) based on the convex Gaussian min–max theorem (CGMT) [20]. This analysis includes studying its general error performance and specializing it to particular cases such as sparse and ridge linear regressions. The derived performance measures, such as the prediction risk, the similarity, and the probability of misdetection, are then used to tune the involved hyper-parameters of the algorithm. Numerical simulations of both synthetic and real data are presented to support the theoretical analysis presented in this work.

Previous works on the high-dimensional performance characterization of convex optimization problems have a very rich history. There are early results that provided order-wise “loose bounds” of the error performance of several penalized regression problems, such as in [14,21,22,23,24,25]. However, the first results that provided a high-dimensional error analysis were derived using the approximate message passing (AMP) algorithm by Bayati et al. [26,27] for the unconstrained (standard) LASSO. Later, Ref. [28] extended the AMP framework to analyze the performance of more general loss functions.

A different approach that is based on the replica method was considered in [29,30] to analyze various problems in the compressed sensing setting.

In addition, another powerful high-dimensional tool called the random matrix theory (RMT) [31] was used in [32,33,34] to derive asymptotic error analysis of some optimization problems that possess closed-form solutions.

Recently, Thrampoulidis et al. developed a new high-dimensional analysis framework that is based on the convex Gaussian min–max theorem (CGMT). First, this framework was used in [35,36,37,38] to provide precise error analysis of the LASSO and square-root LASSO. Then, in [20], it was extended to obtain asymptotic error performance analysis of unconstrained penalized M-estimator regression problems. The first CGMT-based results on constrained regression models were derived in [39,40,41] for the box relaxation optimization (BRO) and its regularized variant. This BRO method is used to promote constant-amplitude structures. The authors in [42,43,44] extended the previous CGMT results to obtain sharp error performance characterization of constrained versions of the popular LASSO and Elastic-Net (EN) problems. These extended versions are called the Box-LASSO and Box-EN, respectively. Furthermore, the authors in [45,46] extended the above results to derive symbol error rate performance of a more general method called the sum of absolute values (SOAV) optimization and its constrained pair (Box-SOAV) for discrete-valued binary and sparse signal recovery.

Even though the focus of this paper is on regression problems, we should highlight that the CGMT framework was also applied to characterize the high-dimensional error performance of classification problems as in [47,48,49], phase retrieval problems [50,51], and various statistical learning problems [52,53,54].

In most of these works, the features matrix is considered to be fully known, but in practice, data are always noisy and contain different types of errors. This motivates the analysis considered in this paper to be performed under uncertainties in the design matrix (see Section 2.2). As compared to related work, such as [41,43,44] which considered the imperfect design matrix assumption, this work differs in multiple ways.

- The proposed constrained G-PCR problem in (9) considers a general penalization function instead of the specific penalties used in previous works.

- This work derives a general performance measure (Theorem 1) that is broader and more useful than the particular metrics previously taken into consideration, such as the mean square error (MSE), symbol error probability, etc.

- This work generalizes these previous results, as they can be obtained as special cases of the results of this paper.

- In Appendix B, we highlight the use of the same machinery developed in this work to analyze a closely related class of problems known as Square-Root Generalized Penalized Constrained Regression.

2. Problem Setup

2.1. Dataset Model

Consider the problem of estimating a scalar response from a set of n independent training data samples , where is the feature vector, following the linear model

where is an unknown structured target vector, and denotes the noise samples with zero mean and variance . Furthermore, the feature vectors are assumed to be independent and identically distributed (i.i.d.) random normal vectors with zero mean and covariance matrix .

The model in (7) can be compactly written as

where and .

2.2. Main Assumptions

Our study is based on the following set of assumptions:

- The unknown target vector is assumed to be a structured vector, with entries that are sampled i.i.d. from a probability distribution function , which has zero mean, and variance , where .

- The noise variance is a fixed positive constant.

- As discussed above, the data matrix is a Gaussian matrix with i.i.d. elements. The choice of the as the variance level in X is commonly used in the literature; see [20,27]. This is done to ensure that and are of the same order.

Furthermore, in this work, we assume that the data matrix, X, is not perfectly known, and we only have an erroneous copy of it, , which is given as:

where , and are independent matrices which have i.i.d. entries drawn from , and , respectively. (This uncertainty notion is widely encountered in practice. For example, it could be used to represent model mismatch, errors, and noises from the data collection process, noise in the sensors used to gather the measurements, etc.) Here, E represents the unknown error matrix, and is the variance of the error.

- n and p grow to infinity with .
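A minimal sketch of how synthetic data in the spirit of these assumptions might be generated is given below. The additive form `X_hat = X + E`, the 1/p variance scaling, the sparse-Gaussian prior on the target vector, and every numeric value are illustrative assumptions rather than the paper's exact specification.

```python
import numpy as np

def generate_noisy_feature_data(n, p, sigma_noise, sigma_err, sparsity, rng):
    """Draw (X, X_hat, y, theta0) from a linear model with an erroneous feature matrix.

    Assumptions (hypothetical): i.i.d. N(0, 1/p) features, an independent
    additive error matrix with i.i.d. N(0, sigma_err^2 / p) entries, and a
    target vector whose entries are nonzero with probability `sparsity`.
    """
    theta0 = rng.standard_normal(p) * (rng.random(p) < sparsity)
    X = rng.standard_normal((n, p)) / np.sqrt(p)
    E = sigma_err * rng.standard_normal((n, p)) / np.sqrt(p)
    X_hat = X + E  # the only copy of the features the estimator gets to see
    y = X @ theta0 + sigma_noise * rng.standard_normal(n)
    return X, X_hat, y, theta0

rng = np.random.default_rng(0)
X, X_hat, y, theta0 = generate_noisy_feature_data(
    n=200, p=400, sigma_noise=0.1, sigma_err=0.3, sparsity=0.1, rng=rng)
print(X_hat.shape, y.shape)
```

The responses are produced from the true matrix `X`, while any estimator would be fitted with `X_hat`, which is the mismatch this section describes.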

2.3. Generalized Penalized Constrained Regression (G-PCR)

In this paper, we refer to (6) as the standard G-PR, but we analyze a modified version that we call the Generalized Penalized Constrained Regression (G-PCR), which solves the following optimization instead:

where

When compared to (6), the constraint is used instead of , and is used instead of X. This is due to the fact that X is not perfectly known and we only have its noisy estimate .

Although (9) differs only slightly from (6), restricting the solution to the constraint set yields significant performance improvements in many practical applications, such as image and signal processing [55], wireless communications [41,43,56], etc. These improvements are shown for several cases in Section 4 and Section 5.
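For intuition about how a box-constrained penalized problem of this kind could be solved numerically, here is a minimal proximal-gradient sketch for the $\ell_1$ case. It is not the method used in the paper: the solver choice, the 1/2 scaling of the loss, the step size, the iteration count, and all names are assumptions. It relies on the fact that, for a one-dimensional convex function, the minimizer over an interval is the unconstrained minimizer clipped to that interval.

```python
import numpy as np

def soft_threshold(v, tau):
    """Entry-wise soft-thresholding with threshold tau >= 0."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)

def box_lasso_prox_grad(X_hat, y, lam, lower, upper, n_iter=500):
    """Approximately minimize 0.5*||y - X_hat @ theta||^2 + lam*||theta||_1
    subject to lower <= theta_i <= upper, via proximal gradient steps."""
    step = 1.0 / np.linalg.norm(X_hat, 2) ** 2  # 1 / Lipschitz constant of the gradient
    theta = np.clip(np.zeros(X_hat.shape[1]), lower, upper)
    for _ in range(n_iter):
        grad = X_hat.T @ (X_hat @ theta - y)
        # Prox of the l1 term plus the box indicator: soft-threshold, then clip.
        theta = np.clip(soft_threshold(theta - step * grad, step * lam), lower, upper)
    return theta

rng = np.random.default_rng(1)
X_hat = rng.standard_normal((40, 80)) / np.sqrt(80)
y = rng.standard_normal(40)
theta_hat = box_lasso_prox_grad(X_hat, y, lam=0.1, lower=-1.0, upper=1.0)
print(theta_hat.min(), theta_hat.max())
```

Setting `lower` and `upper` to very large magnitudes recovers an unconstrained LASSO-type iteration under the same assumed scaling.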

3. Sharp Asymptotics

3.1. Measures of Performance

This paper considers the following measures used to assess the high-dimensional performance of the G-PCR.

- Prediction Risk: One of the most extensively used measures of performance is the prediction risk. For a given estimator , the prediction risk is defined as

  where x and y are new test points following the linear model in (7) but are independent of the training data.

- Similarity: Another metric that is used to quantify the degree of alignment between the target vector and its estimate is the (dis)similarity. It is a measure of orientation rather than magnitude. It is defined as

This similarity measure could also be thought of as the correlation between the estimated and true target vectors. Essentially, we desire estimates that maximize this similarity. Note that this metric is also known as the cosine similarity in machine learning literature, since .
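The empirical counterparts of these two measures, as they might be computed in a simulation, can be sketched as follows. The Monte Carlo form of the risk, the isotropic N(0, 1/p) test features, and the function names are assumptions for illustration; the paper's exact normalization of the risk may differ.

```python
import numpy as np

def cosine_similarity(theta0, theta_hat):
    """Cosine of the angle between the true and estimated vectors."""
    denom = np.linalg.norm(theta0) * np.linalg.norm(theta_hat)
    return float(theta0 @ theta_hat / denom) if denom > 0 else 0.0

def empirical_prediction_risk(theta0, theta_hat, sigma_noise, n_test, rng):
    """Average squared prediction error on fresh test points (assumed
    i.i.d. N(0, 1/p) features and N(0, sigma_noise^2) noise)."""
    p = theta0.size
    X_test = rng.standard_normal((n_test, p)) / np.sqrt(p)
    y_test = X_test @ theta0 + sigma_noise * rng.standard_normal(n_test)
    return float(np.mean((y_test - X_test @ theta_hat) ** 2))

rng = np.random.default_rng(0)
theta0 = rng.standard_normal(20)
# A perfect estimate leaves only the noise variance in the risk.
risk_oracle = empirical_prediction_risk(theta0, theta0, 0.5, 200_000, rng)
sim_scaled = cosine_similarity(theta0, 2.0 * theta0)
sim_flipped = cosine_similarity(theta0, -theta0)
print(risk_oracle, sim_scaled, sim_flipped)
```

The similarity is invariant to positive rescaling of the estimate, which is why it complements a magnitude-sensitive measure such as the risk.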

Note that these two measures are related as

3.2. High-Dimensional Performance Evaluation

In this subsection, we provide the main results of the paper, namely, the sharp analysis of the asymptotic performance of the G-PCR convex program. We start by analyzing the estimation performance via a general pseudo-Lipschitz function as in Theorem 1 below, which sharply characterizes the general asymptotic behavior of the error. Then, we use this theorem to compute particular performance measures such as the prediction risk, similarity, etc.

Theorem 1

(General Performance Metric). Consider the high-dimensional setup of Section 2.2, and let the assumptions therein hold. Moreover, let be a minimizer of the G-PCR program in (9) for a fixed . Let be a pseudo-Lipschitz function. Then, in the limit of , it holds that

where

is the unique optimal solution to the following objective,

and the expectation is taken with respect to independent random variables and .

Proof.

The proof is given in Appendix A. □

Remark 1

(Choice of ). The performance metric in Theorem 1 is computed in terms of evaluation of a pseudo-Lipschitz function, . As an example, can be used to compute the prediction risk, or can be used to evaluate the mean absolute error (MAE). We will appeal to this theorem later with various choices of to evaluate different performance measures on .

Remark 2

(Optimal Solutions). Note that the optimal solutions can be calculated by any one-dimensional search technique, such as the golden-section search method or the ternary search [57].
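For reference, a minimal golden-section search for a unimodal scalar function on an interval is sketched below. The function name, tolerance, and test objective are illustrative assumptions; applying it to the scalar objective of Theorem 1 would additionally require nesting one search inside another, which is not shown here.

```python
import math

def golden_section_search(func, a, b, tol=1e-8):
    """Return an approximate minimizer of a unimodal function on [a, b]."""
    inv_phi = (math.sqrt(5) - 1) / 2  # 1 / golden ratio, about 0.618
    c = b - inv_phi * (b - a)
    d = a + inv_phi * (b - a)
    fc, fd = func(c), func(d)
    while abs(b - a) > tol:
        if fc < fd:
            # The minimizer lies in [a, d]; reuse c as the new right probe.
            b, d, fd = d, c, fc
            c = b - inv_phi * (b - a)
            fc = func(c)
        else:
            # The minimizer lies in [c, b]; reuse d as the new left probe.
            a, c, fc = c, d, fd
            d = a + inv_phi * (b - a)
            fd = func(d)
    return (a + b) / 2

x_star = golden_section_search(lambda x: (x - 2.0) ** 2, 0.0, 5.0)
print(x_star)
```

Each iteration needs only one new function evaluation, which matters when every evaluation involves numerical expectations.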

Remark 3

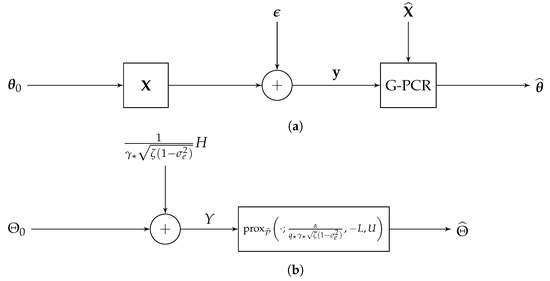

(Decoupling Property). Theorem 1 shows that

where

This provides some insights for the structured signal recovery with the G-PCR optimization. From (14), it can be seen that the random variable shares the same statistical properties as the estimate [46]. Thus, Equation (15) can be considered as a decoupled scalar version of the original system as depicted in Figure 1. Particularly, in the original system (Figure 1a), the true target vector is first mixed by the design matrix X, and then the additive white Gaussian noise (AWGN) vector ϵ is added to form the measurement vector y. On the other hand, in the decoupled system (Figure 1b), the unknown variable is only mixed by the Gaussian vector , where . Furthermore, letting , it can be observed that the generalized proximal operator solution

has a decoupled scalar form of the original G-PCR in (9), which can be expressed as

up to a scaling of B. This suggests that, in the high-dimensional asymptotic setting, one can use the decoupled scalar system to characterize the probabilistic properties of the G-PCR recovery problem.

Figure 1.

A system model comparison between the original G-PCR recovery algorithm and its scalar decoupled version. (a) Original system. (b) Scalar decoupled system.

This decoupling property was also shown for similar problems, such as box-constrained sum of absolute values (Box-SOAV) optimization for sparse recovery [46], sparse logistic regression [58], and the approximate message passing (AMP) algorithm [59].

As a first application of Theorem 1, we provide a sharp high-dimensional performance evaluation of the prediction risk as given in the following corollary.

Corollary 1

(Prediction Risk). Under the same assumptions of Theorem 1, and for that is independent of , it holds that

where and are the unique optimal solutions to the objective function in (13).

Proof.

Using Theorem 1 with , we can obtain the above expression of the prediction risk. Details are deferred to Appendix A.2.4. □

Remark 4

(Optimal Hyper-parameters). Corollary 1 allows us to determine the optimal hyper-parameters, such as , and U, that minimize the prediction risk. To do so, it is first required to estimate some variances, such as and , from the available data. Those can be easily estimated by using existing algorithms such as [60,61].

It should be noted that the theoretical hyper-parameter tuning discussed above avoids the traditional time- and data-consuming practice of cross-validation used to tune the hyper-parameters.

The following corollary sharply characterizes the similarity measure defined earlier in (11).

Corollary 2

(Similarity). Under the same assumptions and settings of Theorem 1, and in the limit of , it holds that

where and are the unique optimal solutions to (13).

Proof.

The proof follows from Theorem 1 and from the continuous mapping theorem [62]. Details are given in Appendix A.2.5. □

In the subsequent sections, we consider various instances of (9), such as $\ell_1$-norm and $\ell_2$-norm penalization, to illustrate the application of the theoretical asymptotic expressions derived in this section.

4. Sparse Linear Regression

In this section, we study the performance of the G-PCR with an $\ell_1$-norm penalization. As indicated in the introduction, this penalty function is used to promote sparse solutions. In contemporary machine learning applications, it is common to encounter a significantly large number of features, p. To prevent the problem of over-fitting, it becomes crucial to engage in feature selection, which involves eliminating irrelevant variables from the regression model [18]. A popular technique for accomplishing this is by introducing an $\ell_1$-norm penalty to the loss function. This approach is widely adopted and used for feature selection tasks.

Therefore, we specialize Theorem 1 to analyze the asymptotic performance of the G-PCR with an $\ell_1$-norm penalization. Particularly, for an s-sparse vector, we study the performance of the following optimization problem:

(We say that a vector v is an s-sparse vector if only s of its p elements are non-zero (on average), and most of its elements are zeros, where .)

4.1. Asymptotic Behavior of Sparse G-PCR

To analyze (18), we specialize Theorem 1 with . Then, the generalized proximal operator and Moreau envelope functions can be expressed, respectively, in the following closed forms:

and

Note that this proximal operator is a generalization of the well-known soft-thresholding operator, i.e., , where the Rectified Linear Unit () is defined as .
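The link between soft-thresholding and the ReLU can be checked numerically with the short sketch below. The box-clipped variant is an assumed form of a box-constrained scalar proximal step (minimizing 0.5·(t − x)² + τ·|t| over an interval), not a transcription of the paper's closed-form expression; names and test values are hypothetical.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def soft_threshold(x, tau):
    """Soft-thresholding written as a difference of two ReLUs."""
    return relu(x - tau) - relu(-x - tau)

def box_soft_threshold(x, tau, lower, upper):
    """Assumed box-constrained variant: soft-threshold, then clip to [lower, upper]."""
    return np.clip(soft_threshold(x, tau), lower, upper)

# Brute-force check of the scalar problem min_t 0.5*(t - x)^2 + tau*|t| over [lower, upper].
lower, upper, tau = -1.0, 2.0, 0.5
grid = np.linspace(lower, upper, 300001)
for x in [-3.0, -0.2, 0.3, 1.0, 5.0]:
    brute = grid[np.argmin(0.5 * (grid - x) ** 2 + tau * np.abs(grid))]
    print(x, float(box_soft_threshold(x, tau, lower, upper)), float(brute))
```

Clipping is valid here because the scalar objective is convex, so its minimizer over an interval is the unconstrained minimizer projected onto that interval.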

These expressions can be used to solve the scalar optimization in (13) of Theorem 1 and to simplify the similarity expression in (17). Specifically, (13) becomes

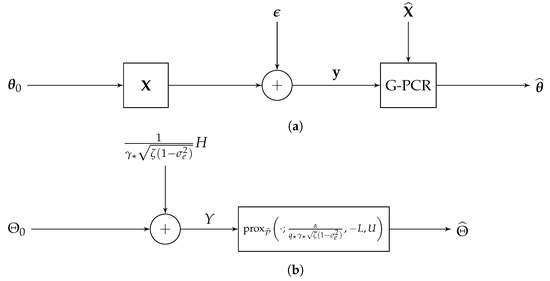

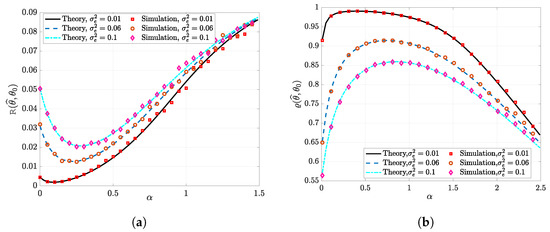

Figure 2 illustrates the performance of the -penalized G-PCR as a function of for different levels of error variance . We generated the target vector randomly with elements in and . This means that the sparsity factor is in these simulations. From these figures, we can also see that the theoretical expressions of the prediction risk and the similarity match the empirical simulations very well. Furthermore, it can be noted in Figure 2a that, for different values of , there exists an optimal value that achieves the minimum possible prediction risk. Similarly, notice in Figure 2b the optimal that maximizes the similarity metric. It can also be observed that increasing beyond reduces the similarity between and . In these simulations, we set and .

Figure 2.

Performance of the G-PCR vs. the penalization factor for a sparse linear regression. The parameters are set as follows: . The simulation results are averaged over 50 independent Monte Carlo trials. (a) The prediction risk. (b) The cosine similarity.

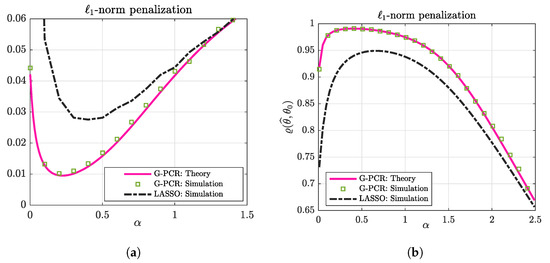

In Figure 3, we compare the unconstrained G-PR in (6) (which is equivalent to a standard LASSO formulation in this case) to the proposed G-PCR for an over-parameterized setting with . As we can see from this figure, the G-PCR clearly outperforms the unconstrained one in both metrics. Moreover, despite the fact that our theoretical results are assumed to be asymptotic in the problem dimensions (i.e., and ), we can see from all of the above figures that our rigorous results are accurate even for problems with a few hundred variables, e.g., .

Figure 3.

Performance comparison between the G-PCR and G-PR. For the numerical simulations, the results are averaged over 100 independent trials, with , . (a) The prediction risk. (b) The cosine similarity.

4.2. Support Recovery

In this section, we analyze the so-called support recovery of the sparse G-PCR. As discussed earlier, a sparse vector means that it has few non-zero elements. We define the support of as follows:

Here, we are interested in computing the probability that an element on the support of has been recovered correctly. Let be a solution to the optimization problem in (18). Let us fix as a user-predefined hard threshold based on whether an entry of is decided to be on the support or not. Formally, we construct the following set as the estimate of the support given :

In order to analyze the support recovery correctness, we consider the following error metrics, which are known as the probability of misdetection (MD) and the probability of false alarm (FA), respectively.

In the following lemma, we study the asymptotic performance of both of these measures.

Lemma 1.

Proof.

The proof can be obtained from Theorem 1 with some approximations of these metrics to Lipschitz functions. Details are omitted for brevity. See [39] for a similar proof. □
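The empirical versions of the two support-recovery error measures, as one might compute them in a simulation, can be sketched as follows. The strict inequality in the thresholding rule and the function name are assumptions; the rates are the fraction of true-support entries that are missed and the fraction of off-support entries that are wrongly selected.

```python
import numpy as np

def support_error_rates(theta0, theta_hat, threshold):
    """Return (misdetection rate, false-alarm rate) for a hard-threshold support estimate."""
    true_support = theta0 != 0
    est_support = np.abs(theta_hat) > threshold  # assumed decision rule
    # Misdetection: on the true support but not selected.
    md = np.mean(~est_support[true_support]) if true_support.any() else 0.0
    # False alarm: off the true support but selected.
    fa = np.mean(est_support[~true_support]) if (~true_support).any() else 0.0
    return float(md), float(fa)

theta0 = np.array([1.0, 0.0, 0.0, 1.0])
theta_hat = np.array([0.9, 0.2, 0.0, 0.05])
md_rate, fa_rate = support_error_rates(theta0, theta_hat, threshold=0.1)
print(md_rate, fa_rate)
```

Raising the threshold trades false alarms for misdetections, so the two rates are usually reported together.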

Next, we give an example to illustrate this lemma.

Example: Sparse-Binary Target Vectors

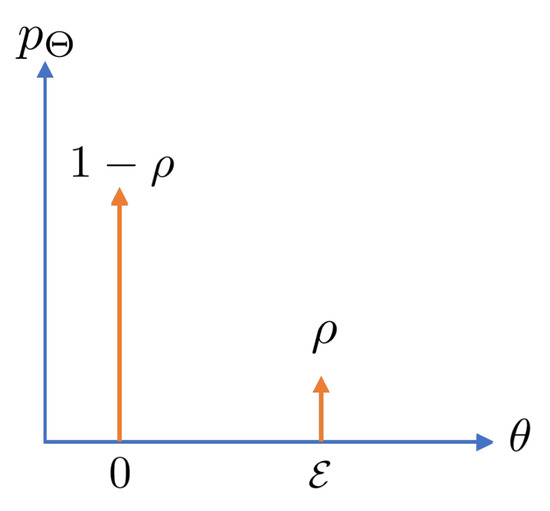

For an s-sparse target vector, define as the sparsity factor. Then, as an example, let us assume that each element , for , is i.i.d. drawn from the following distribution (this model has been widely adopted in the relevant literature; see, for example, [59,63,64]):

for some , and indicates a Dirac delta function (i.e., a point-mass distribution). In other words, the elements of are zero with probability , and the non-zero elements all have the value . Figure 4 illustrates this distribution.
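Drawing a target vector from such a sparse-binary distribution is straightforward; a minimal sketch follows. The parameter names `sparsity_factor` and `amplitude`, and the numeric values, are hypothetical stand-ins for the quantities in the distribution above.

```python
import numpy as np

def sample_sparse_binary(p, sparsity_factor, amplitude, rng):
    """Each entry equals `amplitude` with probability `sparsity_factor`, else 0."""
    return amplitude * (rng.random(p) < sparsity_factor)

rng = np.random.default_rng(0)
theta0 = sample_sparse_binary(p=10_000, sparsity_factor=0.1, amplitude=2.0, rng=rng)
print(np.unique(theta0), np.mean(theta0 != 0))
```

The empirical fraction of nonzero entries concentrates around the sparsity factor as p grows.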

Figure 4.

Probability mass function (PMF) of a sparse-binary distribution.

For a that follows the distribution in (24), and for , with and , the error measures in Lemma 1 simplify to the following:

and

where is the cumulative distribution function (CDF) of the standard normal distribution.

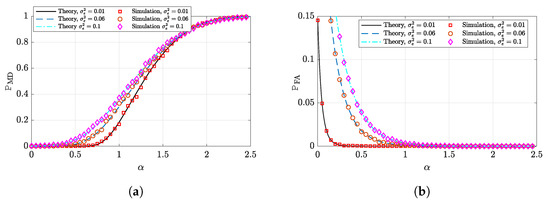

Figure 5 shows the accuracy of the above-derived theoretical expressions as compared to empirical simulations for the considered sparse-binary vector example.

Figure 5.

Support recovery performance of the G-PCR versus the penalization factor for a sparse-binary signal recovery. The parameters are set as follows: , . The simulations are averaged over 100 independent Monte Carlo trials. (a) Probability of misdetection. (b) Probability of false alarm.

5. G-PCR with $\ell_2$-Norm Penalization

Even though it does not promote a particular structure, $\ell_2$-norm penalization is used in many signal processing, statistics, and machine learning applications to stabilize the model when we have ill-conditioned or under-determined systems [17]. Adding this penalization will shrink all the coefficients toward zero and hence decrease the variance of the resultant model; therefore, it can be used to avoid over-fitting. Within the Bayesian framework, the incorporation of this penalization implies that the regression coefficients are assumed to follow a Gaussian distribution. This assumption is often justifiable in numerous applications, in which the regression coefficients are typically taken from a random process. In this section, we provide high-dimensional asymptotic performance analysis of the G-PCR with $\ell_2$-norm penalization; that is:

To analyze (27), we use Theorem 1. However, here the generalized proximal operator and Moreau envelope functions of can be expressed, respectively, in the following closed-forms:

and

Letting and , the scalar optimization in (13) of Theorem 1 reduces to:

where is the indicator function.

In the same manner, we can simplify the similarity expression in (17) using the closed-form expression of the generalized proximal operator of the $\ell_2$-norm in (28). The prediction risk and the similarity metric are given by (16) and (17), respectively. However, now is the unique solution to (30). To illustrate these ideas, let us consider the following examples.
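As a numerical sanity check on an $\ell_2$-penalized, box-constrained formulation, the sketch below compares a projected-gradient solver against the ridge closed form when the box is too wide to be active. The objective scaling 0.5·‖y − Xθ‖² + 0.5·λ·‖θ‖², the solver, the step size, and all names are assumptions and are not taken from the paper.

```python
import numpy as np

def ridge_closed_form(X, y, lam):
    """Unconstrained minimizer of 0.5*||y - X t||^2 + 0.5*lam*||t||^2."""
    p = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(p), X.T @ y)

def box_ridge_projected_gradient(X, y, lam, lower, upper, n_iter=2000):
    """Same objective, restricted to lower <= t_i <= upper, via projected gradient."""
    step = 1.0 / (np.linalg.norm(X, 2) ** 2 + lam)  # 1 / Lipschitz constant
    theta = np.clip(np.zeros(X.shape[1]), lower, upper)
    for _ in range(n_iter):
        grad = X.T @ (X @ theta - y) + lam * theta
        theta = np.clip(theta - step * grad, lower, upper)  # gradient step, then projection
    return theta

rng = np.random.default_rng(0)
n, p, lam = 30, 60, 1.0
X = rng.standard_normal((n, p)) / np.sqrt(p)
y = rng.standard_normal(n)
theta_ridge = ridge_closed_form(X, y, lam)
theta_wide = box_ridge_projected_gradient(X, y, lam, -1e6, 1e6)   # inactive box
theta_tight = box_ridge_projected_gradient(X, y, lam, -0.1, 0.1)  # active box
print(np.max(np.abs(theta_wide - theta_ridge)), np.max(np.abs(theta_tight)))
```

With a tight box the solution generally differs from simply clipping the ridge estimate, because the constraint interacts with the coupling between coordinates.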

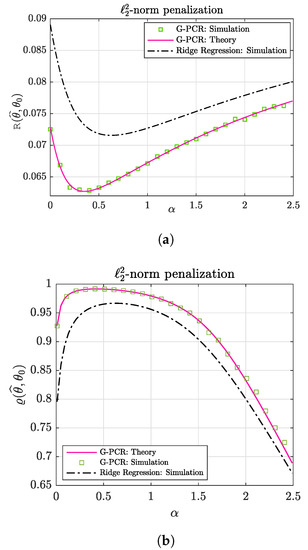

5.1. Numerical Illustration

As stated at the beginning of this section, $\ell_2$-norm penalization can be used for Gaussian distributed target vectors. Therefore, as a first illustration, let us assume that . Figure 6 depicts the risk/similarity performance of the G-PCR with an $\ell_2$-norm penalization for several levels of the penalization factor . It also shows that the G-PCR outperforms the unconstrained G-PR, which is equivalent to a ridge regression formulation here. Again, Figure 6a illustrates that there exists an optimal value that minimizes prediction risk, while Figure 6b shows that there is an optimal value of the penalization factor that gives the maximum similarity. Both figures show the high accuracy of the derived asymptotic expressions as compared to Monte Carlo simulations.

Figure 6.

Performance of the $\ell_2$-norm G-PCR vs. the penalization factor for a Gaussian target vector. The parameters are set as follows: and . The results are averaged over 50 independent realizations. (a) The prediction risk. (b) The cosine similarity.

5.2. Binary Target Vector Estimation

Let us assume that , i.e., it takes only one of two possible values, or , with equal probability, i.e.,

Such vectors are widely encountered in many practical applications, such as the detection of wireless communication signals [12,41]. We use (27) as our estimation method, with . For this vector, ; i.e., the covariance matrix of is .

The task of estimating here is equivalent to a binary classification task, with the two classes being and . After obtaining the estimates using (27), we can map (decode) them to the relative class using the following link function:

We can use the prediction risk and similarity to measure the performance. However, a more suitable performance measure for this kind of target vector is the so-called “classification error rate”, which is defined as:

The next lemma derives an asymptotic expression for this metric.

Lemma 2.

Proof.

The proof is similar to that of Lemma 1. Please refer to [39,47]. Details are skipped for brevity. □
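The empirical counterpart of the classification error rate, as it might be computed in a simulation, can be sketched as follows. The two class values are assumed here to be +1 and −1, ties at zero are assigned to +1, and the function names are hypothetical.

```python
import numpy as np

def decode_sign(theta_hat):
    """Map each real-valued estimate to the nearest class in {-1, +1}."""
    return np.where(theta_hat >= 0, 1.0, -1.0)

def classification_error_rate(theta0, theta_hat):
    """Fraction of entries whose decoded class differs from the true one."""
    return float(np.mean(decode_sign(theta_hat) != theta0))

theta0 = np.array([1.0, -1.0, 1.0, -1.0])
theta_hat = np.array([0.5, -0.2, -0.1, 0.3])
cer = classification_error_rate(theta0, theta_hat)
print(cer)
```

Only the sign of each estimate matters for this metric, so it is insensitive to any shrinkage introduced by the penalization.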

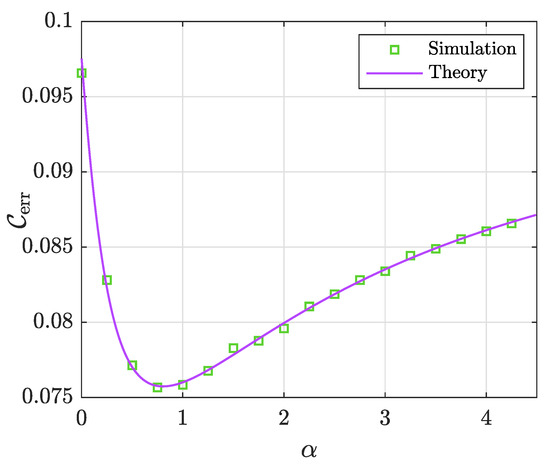

Figure 7 illustrates the sharpness of Lemma 2 as compared to empirical simulations. Similar to the previous figures, this figure demonstrates close agreement between numerical simulations and theory.

Figure 7.

Classification error rate () of the G-PCR vs. the penalization factor for a binary target vector. The parameters are set as follows: . The results are averaged over 100 independent Monte Carlo trials.

5.3. Unpenalized Regression

When the penalization factor is set to zero in (9), we have an optimization problem with no penalization. The resulting algorithm is known as the box relaxation optimization (BRO) [39], which has been extensively studied in the literature. It is used to promote boundedness structure. In fact, when , the BRO is equivalent to an -norm penalization. Setting the penalization factor to zero in (30), and after some mapping of the involved variables, we can obtain the same results as in [39] for binary vectors.

6. Additional Numerical Experiments

In this section, we provide additional numerical experiments to validate our results. These experiments are performed on synthetic data beyond the Gaussian ensemble and on real data as well. In addition, we empirically discuss the double descent phenomenon.

6.1. Synthetic Data: Universality of the Gaussian Design

Theorem 1 assumes that the elements of matrix X are i.i.d. Gaussian distributed. However, we expect the asymptotic results derived in this paper (prediction risk, similarity, etc.) to be robust and hold for a larger class of random matrices. Rigorous proofs are presented in [65,66,67,68,69], where the asymptotic prediction is shown to have a universal limit (as ) with respect to random matrices with i.i.d. entries.

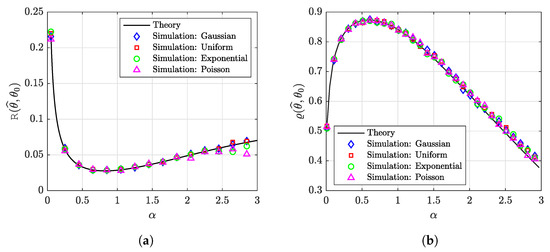

To validate the above claims, see Figure 8, where we plotted the prediction risk and the similarity for a sparse-Gaussian target vector with i.i.d. entries that follow the distribution: . We used the G-PCR with an -norm in (18) to obtain the estimates. In addition to the Gaussian design matrix, we simulated the performance using other random matrices with i.i.d. entries drawn from a uniform distribution , an exponential distribution , and from a Poisson distribution . Note that the normalization of these matrices is used to satisfy the high-dimensionality assumptions in Section 2.2. From this figure, we can see that the behavior seems to be nearly identical for all distributions, suggesting that our results enjoy a universality property.

Figure 8.

Performance of the G-PCR for a sparse-Gaussian target vector. We set the parameters as . The results are averaged over 50 independent trials. (a) The prediction risk. (b) The similarity.
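To reproduce this kind of universality experiment, the non-Gaussian entries must be centered and rescaled before use. The sketch below draws i.i.d. entries from several families and normalizes them to zero mean and variance 1/p; this target variance, the distribution parameters, and the helper name `standardized_design` are assumptions made for illustration, not the values used for Figure 8.

```python
import numpy as np


def standardized_design(n, p, family, rng):
    """Draw an n x p matrix with i.i.d. zero-mean entries of variance 1/p."""
    if family == "gaussian":
        raw, mean, std = rng.standard_normal((n, p)), 0.0, 1.0
    elif family == "uniform":
        raw, mean, std = rng.uniform(0.0, 1.0, (n, p)), 0.5, np.sqrt(1.0 / 12.0)
    elif family == "exponential":
        raw, mean, std = rng.exponential(1.0, (n, p)), 1.0, 1.0
    elif family == "poisson":
        raw, mean, std = rng.poisson(3.0, (n, p)), 3.0, np.sqrt(3.0)
    else:
        raise ValueError(f"unknown family: {family}")
    # Subtract the population mean and divide by the population standard
    # deviation, then scale so that each entry has variance 1/p.
    return (raw - mean) / (std * np.sqrt(p))


rng = np.random.default_rng(1)
n, p = 200, 400
summary = {}
for family in ("gaussian", "uniform", "exponential", "poisson"):
    X = standardized_design(n, p, family, rng)
    # Store the empirical mean and the empirical variance rescaled by p.
    summary[family] = (float(X.mean()), float(p * X.var()))
```

After this normalization, the four ensembles share their first two moments, which is the property that universality results of this type typically rely on.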

6.2. Real-World Data

In the previous section, we showed the robustness of our results to the distribution of the i.i.d. entries of the data matrix X. In this section, we take it a step further and consider real-world datasets instead of the synthetic data discussed earlier. These datasets are not random and do not have i.i.d. elements. However, as seen in the numerical simulations below, they match our theoretical results to a great extent.

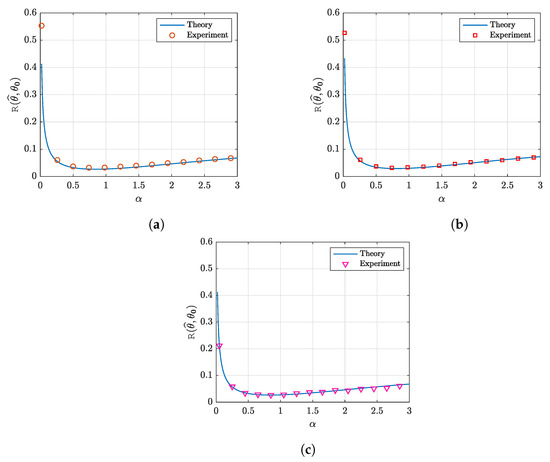

As an illustration, Figure 9 presents the outcomes of these simulations for three real datasets. Each of these datasets consists of a small number of samples (n) and a high-dimensional feature space (p), which is consistent with the over-parameterized setting (). These are gene-expression datasets collected for the detection of diseases and cancer. We generated the target vector, , randomly with entries following the distribution . The noise vector ϵ was generated using i.i.d. elements. We generated the observations as . The G-PCR with -norm penalty in (18) is used to obtain with .

Figure 9.

Prediction risk as a function of the penalization factor . Here, the data matrix X is a standardized real dataset. We used a sparse-Gaussian vector , and we generated the observations as . The G-PCR with -norm penalty is used to obtain with . The parameters are set as , and . The results are averaged over 200 independent trials. (a) Breast cancer data. (b) Glioma disease data. (c) Colon cancer data.

The three figures correspond to the following datasets:

- Figure 9a: For this figure, we used breast cancer data [70] (available at: https://github.com/kivancguckiran/microarray-data (accessed on 27 May 2023)). This dataset has been used in [71] for DNA microarray gene expression classification using the LASSO. It consists of 22,215 gene expressions (features) and 118 samples. From this matrix, we took a sub-matrix X of aspect ratio . We standardized all columns of matrix X to have mean 0 and variance 1.

- Figure 9b: In this figure, glioma disease data [72] were used (available at: https://github.com/kivancguckiran/microarray-data (accessed on 27 May 2023)). This dataset includes 54,613 features and 180 samples. Similar to the breast cancer data, the sub-matrix X with the same aspect ratio was selected and standardized.

- Figure 9c: The dataset used in this figure includes colon cancer data [73] (available at: http://www.weizmann.ac.il/mcb/UriAlon/download/downloadable-data (accessed on 27 May 2023)). This dataset was used in [74] for a sparse-group LASSO model. It includes 2000 genes and 62 samples (22 normal tissues and 40 colon tumor tissues). Similar to the previous datasets, we selected a sub-matrix X with aspect ratio and standardized it.
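The preprocessing described for the three datasets can be sketched as follows. A random log-normal matrix stands in for the loaded expression data, and the sub-matrix size, sparsity level, and noise level are hypothetical placeholders.

```python
import numpy as np


def standardize_columns(X):
    """Center each column to mean 0 and scale it to variance 1."""
    centered = X - X.mean(axis=0)
    std = centered.std(axis=0)
    std[std == 0.0] = 1.0  # keep constant columns at zero instead of dividing by 0
    return centered / std


rng = np.random.default_rng(2)
# Stand-in for a loaded expression matrix (samples x features).
raw = rng.lognormal(mean=0.0, sigma=1.0, size=(62, 2000))

n, p = 40, 100                        # hypothetical sub-matrix size
X = standardize_columns(raw[:n, :p])  # select the sub-matrix, then standardize

# Sparse-Gaussian target: each entry is nonzero with probability rho.
rho, sigma = 0.1, 0.5                 # hypothetical sparsity and noise level
support = rng.random(p) < rho
theta_0 = np.where(support, rng.standard_normal(p), 0.0)

# Synthetic observations generated on top of the real feature matrix.
y = X @ theta_0 + sigma * rng.standard_normal(n)
```

Only the feature matrix is real in such an experiment; the target vector and the noise remain synthetic, so the true target is known and the prediction risk can be evaluated exactly.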

In all three cases, the theoretical predictions closely match the simulations.

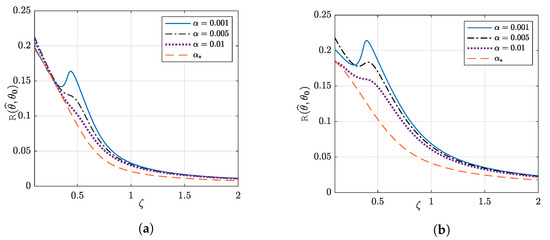

6.3. Double Descent Phenomenon

In Figure 10, we plotted the prediction risk as a function of for different choices of the penalization factor . As can be seen, for an arbitrary choice of , the prediction risk of the G-PCR first decreases for small values of , then increases until it reaches a peak known as the interpolation peak. After that, the prediction risk decreases monotonically with respect to . This is known as the double descent phenomenon [75]. On the other hand, in our experiments, the optimal value of the penalization factor yields a prediction risk that decreases as more training samples are used (i.e., with increasing ). This emphasizes the important role of the optimal tuning of in mitigating the double descent phenomenon and achieving the best performance.

Figure 10.

Prediction risk as a function of the aspect ratio . We used G-PCR with an -norm penalty and sparse-binary vector with , , and . (a) . (b) . Illustration of the double descent and how optimal penalization can mitigate it.
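The qualitative behavior described above can be reproduced with a much simpler estimator. The sketch below uses plain ridge regression with an isotropic Gaussian design (not the G-PCR, and without feature noise), for which the excess prediction risk equals the squared estimation error; all numerical values are illustrative assumptions.

```python
import numpy as np


def ridge_error(n, p, lam, sigma, rng, trials=20):
    """Average squared estimation error of ridge regression over random trials."""
    total = 0.0
    for _ in range(trials):
        X = rng.standard_normal((n, p))
        theta_0 = rng.standard_normal(p)
        theta_0 /= np.linalg.norm(theta_0)       # unit-norm target
        y = X @ theta_0 + sigma * rng.standard_normal(n)
        theta_hat = np.linalg.solve(X.T @ X + lam * np.eye(p), X.T @ y)
        total += float(np.sum((theta_hat - theta_0) ** 2))
    return total / trials


rng = np.random.default_rng(3)
p, sigma = 50, 0.5
ratios = [0.25, 0.5, 1.0, 2.0, 3.0]              # aspect ratios n / p

# A fixed, very weak penalty: close to the minimum-norm interpolator for n < p.
weak = [ridge_error(int(r * p), p, 1e-3, sigma, rng) for r in ratios]

# Oracle tuning: keep the best penalty from a small grid at each ratio.
grid = (0.1, 1.0, 3.0, 10.0, 30.0)
tuned = [min(ridge_error(int(r * p), p, lam, sigma, rng) for lam in grid)
         for r in ratios]
```

With the weak penalty, the error peaks near n = p, whereas the grid-tuned penalty removes the peak. The grid search here selects the penalty using the true target, so it plays the role of an oracle.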

7. Conclusions

In this paper, we studied the high-dimensional error performance of the generalized penalized constrained regression (G-PCR) optimization with noisy features. Several analytical expressions were derived to measure the performance, such as the prediction risk, similarity, probability of misdetection, and probability of false alarm. Different popular instances of this optimization, such as ℓ1-norm penalized regression and ℓ2-norm (ridge) penalization, were considered. We presented numerical simulations to validate these expressions based on both synthetic and real data. These results can be used to tune the involved hyper-parameters efficiently.

Furthermore, we empirically investigated the so-called double descent phenomenon and showed that optimal penalization can mitigate its effect. We also illustrated through several simulations the universality of our results beyond the assumed Gaussian distribution.

Finally, we note that the numerical simulations show that our results are accurate even for problems with a few hundred variables, even though they are derived in the asymptotic regime of the problem dimensions.

Author Contributions

Conceptualization, A.M.A.; Methodology, A.M.A.; Software, A.M.A.; Validation, A.M.A.; Formal analysis, A.M.A.; Investigation, A.M.A. and M.A.; Resources, M.A.; Data curation, A.M.A. and M.A.A.; Writing—original draft, A.M.A.; Writing—review & editing, A.M.A., M.A. and M.A.A.; Visualization, A.M.A. and M.A.; Supervision, A.M.A.; Project administration, M.A.A.; Funding acquisition, M.A.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Deputyship for Research & Innovation, Ministry of Education, Saudi Arabia through project number 445-9-196.

Data Availability Statement

The data presented in this study are available within the article.

Acknowledgments

The authors extend their appreciation to the Deputyship for Research & Innovation, Ministry of Education in Saudi Arabia for funding this research work through project number 445-9-196. Also, the authors would like to extend their appreciation to Taibah University for its supervision support.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Proof of the Main Results

In this appendix, we provide an outline of the proof of the high-dimensional analysis of the prediction risk of the considered G-PCR learning algorithm. Our main analysis framework is the convex Gaussian min–max theorem (CGMT). For the reader’s convenience, we first recall the CGMT.

Appendix A.1. Main Analysis Framework: CGMT

The CGMT is an extension of Gordon’s comparison lemma [76]. Gordon’s lemma was used in the analysis of some high-dimensional inference problems, such as the study of sharp phase transitions in noiseless compressed sensing. The CGMT was first introduced in [36] and further developed in [20]. It uses convexity to compare the min–max values of two Gaussian processes.

To illustrate the main ideas of the CGMT, let us first consider the following doubly indexed Gaussian random processes:

where , they all have i.i.d. standard Gaussian elements, and . For these two processes, consider the following min–max optimization programs, which are referred to as the primal optimization (PO) and the auxiliary optimization (AO):

where the sets and are assumed to be compact and convex sets. In addition, if the function is continuous and convex–concave on , then, according to the CGMT formulation in Theorem 6 in [20], for any and :

The above result states that if we can show that the optimal AO cost is asymptotically, , then it can be concluded that the optimal PO cost is . The premise is that it is usually much easier to analyze the AO instead of the PO. In addition, the CGMT (Theorem 6.1(iii) in [20]) shows that concentration of the optimal solution to the AO problem implies concentration of the optimal solution of the PO around the same value. In other words, if minimizers of (A2b) satisfy that , where , then the same holds true for minimizers of (A2a), i.e., . In addition, we make use of the following corollary that holds true in the high-dimensional asymptotic regime.

Corollary A1

For more details about the framework of CGMT, the reader is advised to see [20].
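A toy example may help convey why the AO is easier to analyze than the PO. Taking ψ = 0 and restricting w to the unit sphere and u to the unit ball (in the generic notation w, u, G, g, h of [20], assumed here), the PO value is the smallest singular value of G, while the AO value has the closed form max(‖g‖ − ‖h‖, 0). The unit sphere is not convex, so only the one-sided comparison of Gordon formally applies in this example; the sketch is meant as an illustration rather than an instance of the theorem.

```python
import numpy as np

rng = np.random.default_rng(4)
m, n = 2000, 250  # illustrative dimensions

# Primal: min over unit w of max over ||u|| <= 1 of u^T G w,
# which equals the smallest singular value of G.
G = rng.standard_normal((m, n))
primal_value = float(np.linalg.svd(G, compute_uv=False).min())

# Auxiliary: min over unit w of max over ||u|| <= 1 of ||w|| g^T u + ||u|| h^T w.
# The inner maximum is max(||g|| + h^T w, 0) and the outer minimum is attained
# at w = -h / ||h||, which gives max(||g|| - ||h||, 0).
g = rng.standard_normal(m)
h = rng.standard_normal(n)
auxiliary_value = max(float(np.linalg.norm(g) - np.linalg.norm(h)), 0.0)

# After normalization by sqrt(m), both values should be close to 1 - sqrt(n / m).
normalized = (primal_value / np.sqrt(m),
              auxiliary_value / np.sqrt(m),
              1.0 - np.sqrt(n / m))
```

The primal value requires a singular value decomposition of an m × n matrix, whereas the auxiliary value only involves the norms of two Gaussian vectors, whose concentration is elementary.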

Next, we use the CGMT to provide a proof outline of the general error asymptotic behavior provided in Theorem 1.

Appendix A.2. Sharp Analysis of the G-PCR

Appendix A.2.1. Primal and Auxiliary Problems of the G-PCR

To obtain the main asymptotic result using CGMT, we first need to rewrite the G-PCR learning problem in (9) as a PO problem. For convenience, define the vector , and the following set

then, the problem in (9) can be reformulated as

Introducing the Convex Conjugate: Any closed convex function $f$ can be expressed in terms of its convex conjugate $f^{*}$ as:

$$ f(x) = \sup_{u} \; u^{\top} x - f^{*}(u). $$

Using the above definition, we can express the squared ℓ2-norm loss function in (A6) as

Hence, (A6) becomes equivalent to the following:

To apply the CGMT, we need the optimization sets to be compact. This is true for , but is not. This issue can be treated in a similar way to the method in Appendix A in [20]. We introduce an artificial compact set for a sufficiently large constant that is independent of p. The optimization problem is unaffected by this constraint set asymptotically. After that, we obtain

where and are independent Gaussian matrices that have i.i.d. elements each. Now, the above problem is in the format of a PO with

Therefore, the corresponding AO problem is

where and are independent standard Gaussian vectors.

Appendix A.2.2. Simplifying the Auxiliary Problem

The next step is to reduce (simplify) the AO into a scalar problem, i.e., a problem that has only scalar variables. To do so, first, let

Using standard probability theory results, one can show that with a covariance matrix that is given by

Thus, the AO in (A10) can be written as

In order to further simplify the AO, we fix the norm of w to . In this case, one can simply optimize over the direction of w, which reduces the AO problem to

Moreover, to have the proper convergence, we have to normalize the above cost function by a factor of . Then, we obtain

where . Note the change in the order of the min–sup, which can be justified as in Appendix A of [20]. Next, we wish to write the above optimization as a separable problem by using the following identity ():

Note that the optimal solution to (A15) is . Using this identity with

we can write the problem in (A14) as

By the weak law of large numbers (WLLN), we can show that and . Now, let us work with the initial variable θ rather than r; then, the above optimization problem converges to

Completing the squares in in the last minimization of the above problem, and using the fact that , we obtain

Note that the last summation term in (A19) can be expressed by the generalized Moreau envelope function defined in (1). Hence, we obtain the following problem:

Next, by the WLLN, , and for all , we have

where the expectation is taken with respect to the independent scalar random variables and .
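For a concrete instance of this type of envelope, the sketch below evaluates a box-constrained Moreau envelope of the scaled absolute value. It assumes the form min over v in [l, u] of (v − x)²/(2τ) + λ|v|, which may differ from the definition in (1) in scaling or notation; the function names are hypothetical.

```python
def box_prox_l1(x, tau, lam, lower, upper):
    """Minimizer of (v - x)^2 / (2 tau) + lam * |v| over lower <= v <= upper."""
    threshold = lam * tau
    # Unconstrained minimizer: soft-thresholding of x.
    soft = max(abs(x) - threshold, 0.0) * (1.0 if x >= 0 else -1.0)
    # For a one-dimensional strictly convex objective, clipping the
    # unconstrained minimizer to the interval gives the constrained minimizer.
    return min(max(soft, lower), upper)


def box_envelope_l1(x, tau, lam, lower, upper):
    """Optimal value of the box-constrained problem above."""
    v = box_prox_l1(x, tau, lam, lower, upper)
    return (v - x) ** 2 / (2.0 * tau) + lam * abs(v)


def brute_force_envelope(x, tau, lam, lower, upper, points=20001):
    """Grid-search approximation of the same optimal value, for checking."""
    step = (upper - lower) / (points - 1)
    return min((lower + i * step - x) ** 2 / (2.0 * tau) + lam * abs(lower + i * step)
               for i in range(points))


closed_form = box_envelope_l1(2.3, 0.5, 1.0, -1.0, 1.0)      # minimizer hits the upper bound
numeric = brute_force_envelope(2.3, 0.5, 1.0, -1.0, 1.0)
```

Because the objective is separable across coordinates, a sum of such scalar envelopes is what the law of large numbers can then replace by an expectation.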

Finally, (A20) converges to the following scalar problem:

Appendix A.2.3. General Performance Metric: Proof of Theorem 1

Now that we have derived the scalar optimization problem, we proceed to prove Theorem 1. Recall that, in the process of scalarizing the AO, we introduced the generalized Moreau envelope function in (A20). It can be shown that the optimizer of this function gives the AO solution in . Let be the unique solution to (A21). Then, the AO solution can be expressed as

where H is a standard normal random variable and independent of H.

The last step is to show the convergence of any pseudo-Lipschitz function . Using the weak law of large numbers and the fact that the elements of are i.i.d. sampled from a density , we obtain

where the expectation is taken over and independent of H. To use the CGMT (Corollary A1), we introduce the following set:

for .

Appendix A.2.4. Prediction Risk Analysis: Proof of Corollary 1

The objective of this part is to analyze the prediction risk of the G-PCR asymptotically. To begin with, for any , define the following set:

where is the solution to (A21). Recall from (A16) that and Hence,

where is the optimal solution to (A14) and is the solution to (A17). Using the uniform convergence of the cost functions, we can show that . Hence, using the WLLN, and, therefore,

Remember that , where is the AO solution in θ. From Equation (A23), we can see that, for all , , with probability approaching 1. Then, an application of the CGMT yields that with high probability.

Furthermore, Corollary 1 can also be proven as an immediate result of Theorem 1 with therein. Hence,

Appendix A.2.5. Similarity Analysis: Proof of Corollary 2

The proof of Corollary 2 uses the CGMT to derive asymptotic predictions of the numerator and the denominator of the similarity expression in (11) separately, and then applies the continuous mapping theorem [62] to arrive at the desired result. For the sake of brevity, we only highlight the main steps of the proof.

The similarity expression in (11) can be rewritten as

For the numerator, we use Theorem 1 with , to obtain the following convergence:

The denominator consists of two terms, each of which converges as well. For the first term, , we use Theorem 1 with and the continuous mapping theorem to obtain

For the second term in the denominator, , using the WLLN, we have

Putting together all of the above convergence results, coupled with an application of the continuous mapping theorem [62], we obtain the asymptotic expression of the similarity measure in (17).
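The decomposition used in this proof can be mirrored numerically: the similarity is a continuous function of three normalized averages, so it suffices to track each of them. The following sketch assumes that the similarity in (11) is the usual cosine similarity between the estimate and the target; the function name is hypothetical.

```python
import math


def cosine_similarity(theta_hat, theta_0):
    """Cosine similarity assembled from three normalized averages."""
    p = len(theta_0)
    if len(theta_hat) != p:
        raise ValueError("estimate and target must have the same length")
    cross = sum(a * b for a, b in zip(theta_hat, theta_0)) / p   # numerator term
    power_hat = sum(a * a for a in theta_hat) / p                # first denominator term
    power_0 = sum(b * b for b in theta_0) / p                    # second denominator term
    if power_hat == 0.0 or power_0 == 0.0:
        raise ValueError("similarity is undefined for a zero vector")
    # Continuous map of the three averages (valid away from zero denominators).
    return cross / math.sqrt(power_hat * power_0)


example_similarity = cosine_similarity([1.0, 0.0], [1.0, 1.0])
```

Each of the three averages converges separately, and the final ratio inherits the limit because the map is continuous wherever the denominator is nonzero.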

Appendix B. A Note on the Square-Root Generalized Penalized Constrained Regression

Sqrt G-PCR Learning Algorithm

Let us consider the following optimization problem:

where

Problems of the above type are known as regularized square-root regression problems [77]. Here, instead of the squared ℓ2-norm loss in (9), there is a non-squared ℓ2-norm loss. This leads to optimization problems with a loss function that is not separable. Examples of this algorithm include the square-root LASSO [24] and the square-root group LASSO [78]. Please see [20,64,77] for the motivations for using the non-squared loss. Furthermore, the scaling of the penalization factor by a factor of is only needed for the convergence of the CGMT analysis (see [20] for further justification). The analysis of the above optimization, which we call the square-root G-PCR (Sqrt G-PCR), is very similar to the one provided in the previous sections of this paper for the G-PCR problem. The only difference is that, instead of (A7), we have

Following the same analysis (with some normalization adjustments) as in Appendix A, but using (A27) instead of (A7), we finally arrive at the following deterministic scalar max–min optimization problem:

Comparing to in (13), we can see two main differences, which are the absence of the term and the presence of the constraint in .
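A standard variational form of the non-squared ℓ2-norm, which indicates where a norm constraint on the dual variable can come from, is ‖x‖ = max over ‖u‖ ≤ 1 of uᵀx. The sketch below checks this identity numerically; it is offered as an assumption about the type of representation involved, not as a restatement of (A27).

```python
import math
import random

random.seed(5)
dim = 8
x = [random.gauss(0.0, 1.0) for _ in range(dim)]
norm_x = math.sqrt(sum(v * v for v in x))

# The candidate maximizer u* = x / ||x|| lies on the unit sphere
# and attains u^T x = ||x||.
u_star = [v / norm_x for v in x]
attained = sum(u * v for u, v in zip(u_star, x))

# By the Cauchy-Schwarz inequality, no other feasible u can do better;
# spot-check this with random unit vectors.
best_random = -math.inf
for _ in range(2000):
    u = [random.gauss(0.0, 1.0) for _ in range(dim)]
    scale = math.sqrt(sum(c * c for c in u))
    best_random = max(best_random, sum(c * v for c, v in zip(u, x)) / scale)
```

In contrast to the squared loss, whose conjugate is a quadratic term in the dual variable, this representation has no quadratic term and instead restricts the dual variable to the unit ball.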

References

- Parikh, N.; Boyd, S. Proximal algorithms. Found. Trends Optim. 2014, 1, 127–239.

- Tarantola, A. Inverse Problem Theory and Methods for Model Parameter Estimation; SIAM: Philadelphia, PA, USA, 2005.

- Kailath, T.; Sayed, A.H.; Hassibi, B. Linear Estimation; Prentice Hall: Hoboken, NJ, USA, 2000.

- Groetsch, C.W.; Groetsch, C. Inverse Problems in the Mathematical Sciences; Springer: Berlin/Heidelberg, Germany, 1993; Volume 52.

- Bishop, C.M. Pattern recognition. Mach. Learn. 2006, 128, 1–58.

- Rencher, A.C.; Schaalje, G.B. Linear Models in Statistics; John Wiley & Sons: Hoboken, NJ, USA, 2008.

- Dhar, V. Data science and prediction. Commun. ACM 2013, 56, 64–73.

- Donoho, D.L. Compressed sensing. IEEE Trans. Inf. Theory 2006, 52, 1289–1306.

- Duarte, M.F.; Eldar, Y.C. Structured compressed sensing: From theory to applications. IEEE Trans. Signal Process. 2011, 59, 4053–4085.

- Poor, H.V. An Introduction to Signal Detection and Estimation; Springer Science & Business Media: Berlin/Heidelberg, Germany, 1998.

- Fadili, J.M.; Bullmore, E. Penalized partially linear models using sparse representations with an application to fMRI time series. IEEE Trans. Signal Process. 2005, 53, 3436–3448.

- Goldsmith, A. Wireless Communications; Cambridge University Press: Cambridge, UK, 2005.

- Marzetta, T.L.; Yang, H. Fundamentals of Massive MIMO; Cambridge University Press: Cambridge, UK, 2016.

- Candes, E.; Tao, T. The Dantzig selector: Statistical estimation when p is much larger than n. Ann. Stat. 2007, 35, 2313–2351.

- Bach, F. Structured sparsity-inducing norms through submodular functions. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 6–9 December 2010; Volume 23.

- Aster, R.C.; Borchers, B.; Thurber, C.H. Parameter Estimation and Inverse Problems; Elsevier: Amsterdam, The Netherlands, 2018.

- McDonald, G.C. Ridge regression. Wiley Interdiscip. Rev. Comput. Stat. 2009, 1, 93–100.

- Tibshirani, R. Regression shrinkage and selection via the lasso. J. R. Stat. Soc. Ser. B 1996, 58, 267–288.

- Varah, J.M. Pitfalls in the numerical solution of linear ill-posed problems. SIAM J. Sci. Stat. Comput. 1983, 4, 164–176.

- Thrampoulidis, C.; Abbasi, E.; Hassibi, B. Precise error analysis of regularized M-estimators in high dimensions. IEEE Trans. Inf. Theory 2018, 64, 5592–5628.

- Bickel, P.J.; Ritov, Y.; Tsybakov, A.B. Simultaneous analysis of Lasso and Dantzig selector. Ann. Stat. 2009, 37, 1705–1732.

- Negahban, S.; Yu, B.; Wainwright, M.J.; Ravikumar, P.K. A unified framework for high-dimensional analysis of m-estimators with decomposable regularizers. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 7–10 December 2009; pp. 1348–1356.

- Wainwright, M.J. Sharp thresholds for high-dimensional and noisy sparsity recovery using ℓ1-constrained quadratic programming (Lasso). IEEE Trans. Inf. Theory 2009, 55, 2183–2202.

- Belloni, A.; Chernozhukov, V.; Wang, L. Square-root lasso: Pivotal recovery of sparse signals via conic programming. Biometrika 2011, 98, 791–806.

- Li, Y.H.; Hsieh, Y.P.; Zerbib, N.; Cevher, V. A geometric view on constrained M-estimators. arXiv 2015, arXiv:1506.08163.

- Bayati, M.; Montanari, A. The dynamics of message passing on dense graphs, with applications to compressed sensing. IEEE Trans. Inf. Theory 2011, 57, 764–785.

- Bayati, M.; Montanari, A. The LASSO risk for Gaussian matrices. IEEE Trans. Inf. Theory 2012, 58, 1997–2017.

- Donoho, D.; Montanari, A. High dimensional robust m-estimation: Asymptotic variance via approximate message passing. Probab. Theory Relat. Fields 2016, 166, 935–969.

- Rangan, S.; Goyal, V.; Fletcher, A.K. Asymptotic analysis of map estimation via the replica method and compressed sensing. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 7–10 December 2009; Volume 22.

- Kabashima, Y.; Wadayama, T.; Tanaka, T. Statistical mechanical analysis of a typical reconstruction limit of compressed sensing. In Proceedings of the 2010 IEEE International Symposium on Information Theory, Austin, TX, USA, 13–18 June 2010; IEEE: Piscataway, NJ, USA, 2010; pp. 1533–1537.

- Couillet, R.; Debbah, M. Random Matrix Methods for Wireless Communications; Cambridge University Press: Cambridge, UK, 2011.

- Karoui, N.E. Asymptotic behavior of unregularized and ridge-regularized high-dimensional robust regression estimators: Rigorous results. arXiv 2013, arXiv:1311.2445.

- Liao, Z.; Couillet, R. Random matrices meet machine learning: A large dimensional analysis of ls-svm. In Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 2397–2401.

- El Karoui, N. On the impact of predictor geometry on the performance on high-dimensional ridge-regularized generalized robust regression estimators. Probab. Theory Relat. Fields 2018, 170, 95–175.

- Stojnic, M. Recovery thresholds for ℓ1 optimization in binary compressed sensing. In Proceedings of the 2010 IEEE International Symposium on Information Theory, Austin, TX, USA, 13–18 June 2010; IEEE: Piscataway, NJ, USA, 2010; pp. 1593–1597.

- Stojnic, M. A framework to characterize performance of lasso algorithms. arXiv 2013, arXiv:1303.7291.

- Thrampoulidis, C.; Oymak, S.; Hassibi, B. Regularized Linear Regression: A Precise Analysis of the Estimation Error. In Proceedings of the COLT, Paris, France, 3–6 July 2015; pp. 1683–1709.

- Thrampoulidis, C.; Panahi, A.; Guo, D.; Hassibi, B. Precise error analysis of the lasso. In Proceedings of the 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), South Brisbane, Australia, 19–24 April 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 3467–3471.

- Thrampoulidis, C.; Xu, W.; Hassibi, B. Symbol error rate performance of box-relaxation decoders in massive MIMO. IEEE Trans. Signal Process. 2018, 66, 3377–3392.

- Atitallah, I.B.; Thrampoulidis, C.; Kammoun, A.; Al-Naffouri, T.Y.; Hassibi, B.; Alouini, M.S. Ber analysis of regularized least squares for bpsk recovery. In Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 4262–4266.

- Alrashdi, A.M.; Kammoun, A.; Muqaibel, A.H.; Al-Naffouri, T.Y. Asymptotic Performance of Box-RLS Decoders under Imperfect CSI with Optimized Resource Allocation. IEEE Open J. Commun. Soc. 2022, 3, 2051–2075.

- Atitallah, I.B.; Thrampoulidis, C.; Kammoun, A.; Al-Naffouri, T.Y.; Alouini, M.S.; Hassibi, B. The BOX-LASSO with application to GSSK modulation in massive MIMO systems. In Proceedings of the 2017 IEEE International Symposium on Information Theory (ISIT), Aachen, Germany, 25–30 June 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1082–1086.

- Alrashdi, A.M.; Alrashdi, A.E.; Alghadhban, A.; Eleiwa, M.A. Optimum GSSK Transmission in Massive MIMO Systems Using the Box-LASSO Decoder. IEEE Access 2022, 10, 15845–15859.

- Alrashdi, A.M.; Atitallah, I.B.; Al-Naffouri, T.Y. Precise performance analysis of the box-elastic net under matrix uncertainties. IEEE Signal Process. Lett. 2019, 26, 655–659.

- Hayakawa, R.; Hayashi, K. Binary vector reconstruction via discreteness-aware approximate message passing. In Proceedings of the 2017 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), Kuala Lumpur, Malaysia, 12–15 December 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1783–1789.

- Hayakawa, R.; Hayashi, K. Asymptotic Performance of Discrete-Valued Vector Reconstruction via Box-Constrained Optimization With Sum of ℓ1 Regularizers. IEEE Trans. Signal Process. 2020, 68, 4320–4335.

- Deng, Z.; Kammoun, A.; Thrampoulidis, C. A model of double descent for high-dimensional binary linear classification. Inf. Inference J. IMA 2022, 11, 435–495.

- Kini, G.R.; Thrampoulidis, C. Analytic study of double descent in binary classification: The impact of loss. In Proceedings of the 2020 IEEE International Symposium on Information Theory (ISIT), Los Angeles, CA, USA, 21–26 June 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 2527–2532.

- Salehi, F.; Abbasi, E.; Hassibi, B. The performance analysis of generalized margin maximizers on separable data. In Proceedings of the International Conference on Machine Learning, Virtual Online, 13–18 July 2020; PMLR: Mc Kees Rocks, PA, USA, 2020; pp. 8417–8426.

- Dhifallah, O.; Thrampoulidis, C.; Lu, Y.M. Phase retrieval via linear programming: Fundamental limits and algorithmic improvements. In Proceedings of the 2017 55th Annual Allerton Conference on Communication, Control, and Computing (Allerton), Monticello, IL, USA, 3–6 October 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1071–1077.

- Salehi, F.; Abbasi, E.; Hassibi, B. A precise analysis of phasemax in phase retrieval. In Proceedings of the 2018 IEEE International Symposium on Information Theory (ISIT), Vail, CO, USA, 17–22 June 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 976–980.

- Bosch, D.; Panahi, A.; Hassibi, B. Precise Asymptotic Analysis of Deep Random Feature Models. arXiv 2023, arXiv:2302.06210.

- Dhifallah, O.; Lu, Y.M. A precise performance analysis of learning with random features. arXiv 2020, arXiv:2008.11904.

- Dhifallah, O.; Lu, Y.M. Phase transitions in transfer learning for high-dimensional perceptrons. Entropy 2021, 23, 400.

- Ting, M.; Raich, R.; Hero, A.O., III. Sparse image reconstruction for molecular imaging. IEEE Trans. Image Process. 2009, 18, 1215–1227.

- Gui, G.; Peng, W.; Wang, L. Improved sparse channel estimation for cooperative communication systems. Int. J. Antennas Propag. 2012, 2012, 476509.

- Luenberger, D.G.; Ye, Y. Linear and Nonlinear Programming; Springer: Berlin/Heidelberg, Germany, 1984; Volume 2.

- Salehi, F.; Abbasi, E.; Hassibi, B. The impact of regularization on high-dimensional logistic regression. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; Volume 32.

- Donoho, D.L.; Maleki, A.; Montanari, A. Message-passing algorithms for compressed sensing. Proc. Natl. Acad. Sci. USA 2009, 106, 18914–18919.

- Hayakawa, R. Noise variance estimation using asymptotic residual in compressed sensing. arXiv 2020, arXiv:2009.13678.

- Suliman, M.A.; Alrashdi, A.M.; Ballal, T.; Al-Naffouri, T.Y. SNR estimation in linear systems with Gaussian matrices. IEEE Signal Process. Lett. 2017, 24, 1867–1871.

- Kobayashi, H.; Mark, B.L.; Turin, W. Probability, Random Processes, and Statistical Analysis: Applications to Communications, Signal Processing, Queueing Theory and Mathematical Finance; Cambridge University Press: Cambridge, UK, 2011.

- Donoho, D.L.; Maleki, A.; Montanari, A. The noise-sensitivity phase transition in compressed sensing. IEEE Trans. Inf. Theory 2011, 57, 6920–6941.

- Thrampoulidis, C.; Abbasi, E.; Hassibi, B. Lasso with non-linear measurements is equivalent to one with linear measurements. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; pp. 3420–3428.

- Abbasi, E.; Salehi, F.; Hassibi, B. Universality in learning from linear measurements. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; Volume 32.

- Hu, H.; Lu, Y.M. Universality laws for high-dimensional learning with random features. IEEE Trans. Inf. Theory 2022, 69, 1932–1964.

- Han, Q.; Shen, Y. Universality of regularized regression estimators in high dimensions. arXiv 2022, arXiv:2206.07936.

- Dudeja, R.; Bakhshizadeh, M. Universality of linearized message passing for phase retrieval with structured sensing matrices. IEEE Trans. Inf. Theory 2022, 68, 7545–7574.

- Gerace, F.; Krzakala, F.; Loureiro, B.; Stephan, L.; Zdeborová, L. Gaussian Universality of Perceptrons with Random Labels. arXiv 2023, arXiv:2205.13303.

- Chin, K.; DeVries, S.; Fridlyand, J.; Spellman, P.T.; Roydasgupta, R.; Kuo, W.L.; Lapuk, A.; Neve, R.M.; Qian, Z.; Ryder, T.; et al. Genomic and transcriptional aberrations linked to breast cancer pathophysiologies. Cancer Cell 2006, 10, 529–541.

- Güçkiran, K.; Cantürk, İ.; Özyilmaz, L. DNA microarray gene expression data classification using SVM, MLP, and RF with feature selection methods relief and LASSO. Süleyman Demirel Üniv. Fen Bilim. Enstitüsü Derg. 2019, 23, 126–132.

- Sun, L.; Hui, A.M.; Su, Q.; Vortmeyer, A.; Kotliarov, Y.; Pastorino, S.; Passaniti, A.; Menon, J.; Walling, J.; Bailey, R.; et al. Neuronal and glioma-derived stem cell factor induces angiogenesis within the brain. Cancer Cell 2006, 9, 287–300.

- Alon, U.; Barkai, N.; Notterman, D.A.; Gish, K.; Ybarra, S.; Mack, D.; Levine, A.J. Broad patterns of gene expression revealed by clustering analysis of tumor and normal colon tissues probed by oligonucleotide arrays. Proc. Natl. Acad. Sci. USA 1999, 96, 6745–6750.

- Li, J.; Dong, W.; Meng, D. Grouped gene selection of cancer via adaptive sparse group lasso based on conditional mutual information. IEEE/ACM Trans. Comput. Biol. Bioinform. 2017, 15, 2028–2038.

- Belkin, M.; Hsu, D.; Ma, S.; Mandal, S. Reconciling modern machine-learning practice and the classical bias–variance trade-off. Proc. Natl. Acad. Sci. USA 2019, 116, 15849–15854.

- Gordon, Y. On Milman’s inequality and random subspaces which escape through a mesh in ℝ^n. In Geometric Aspects of Functional Analysis; Lecture Notes in Mathematics; Springer: Berlin/Heidelberg, Germany, 1988; pp. 84–106.

- Chu, H.T.; Toh, K.C.; Zhang, Y. On Regularized Square-root Regression Problems: Distributionally Robust Interpretation and Fast Computations. J. Mach. Learn. Res. 2022, 23, 13885–13923.

- Bunea, F.; Lederer, J.; She, Y. The group square-root lasso: Theoretical properties and fast algorithms. IEEE Trans. Inf. Theory 2013, 60, 1313–1325.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).