Abstract

Nonnegative matrix factorization (NMF) has been shown to be a strong data representation technique, with applications in text mining, pattern recognition, image processing, clustering and other fields. In this paper, we propose a hypergraph-regularized smooth nonnegative matrix factorization (HGSNMF) by incorporating the hypergraph regularization term and the smoothing constraint term into the standard NMF model. The hypergraph regularization term can capture the intrinsic geometry structure of high dimension space data more comprehensively than simple graphs, and the smoothing constraint term may yield a smooth and more accurate solution to the optimization problem. The updating rules are given using multiplicative update techniques, and the convergence of the proposed method is theoretically investigated. The experimental results on five different data sets show that the proposed method has a better clustering effect than the related state-of-the-art methods in the vast majority of cases.

MSC:

68U10; 62H30; 15A69

1. Introduction

Data representation plays an important role in information retrieval [1], computer vision [2], pattern recognition [3], and other applied fields [4,5]. There are many approaches to deal with high-dimensional data, such as data dimensionality reduction, random forests (RF) [6], multilayer perceptrons (MPL) [7], graph neural networks (GNN) [8], and hypergraph neural networks (HGNN) [9]. The dimensions of data matrices are extremely high in these practical applications. The high-dimensional data can not only cause storage difficulties, but also possible dimensional curses. Therefore, it is necessary to find an effective and low-dimensional representation of the original high-dimensional data matrix. It is also important to preserve the multidimensional structure of the image data and to preserve the multidimensional structure of the image data. Matrix factorization is one of the important data representation techniques, and typical matrix decomposition methods mainly include the following: principal component analysis (PCA) [10], linear discriminant analysis (LDA) [11], singular value decomposition (SVD) [12], nonnegative matrix factorization (NMF) [13,14], and so on. The tensor of a multidimensional array is well suited to representing such an image space, and, in order to extract valuable information from a given large tensor, a low-rank Tucker decomposition (TD) [15] is usually considered. In the real world, many data images, video volumes, and test data are non-negative, and, for these types of data, nonnegative Tucker decomposition (NTD) has recently received attention [16,17,18,19].

NMF has been gaining popularity through the works of Lee and Seung that were published in Nature [13] and NIPS [14]. It has been widely applied in clustering [20,21,22], face recognition [23,24], text mining [25,26], image processing [27,28,29], hyperspectral unmixing (HU) [30,31], and other fields [32,33,34,35]. Several NMF versions have been presented in order to improve data representation capabilities by introducing different regularization terms or constraint terms into the basis NMF model. For example, by considering the orthogonality of factor matrices, Ding et al. [21] presented a orthogonal nonnegative matrix tri-factorization (ONMF) approach. By incorporating the graph regularization term into the standard NMF model, Cai et al. [2] presented a graph-regularized nonnegative matrix factorization (GNMF) method, where a simple nearest neighborhood graph is constructed by considering the pairwise geometric relationships between two sample points. However, the model did not take into account the high-order relationships among multiple sample points. Shang et al. [36] presented a graph dual regularization nonnegative matrix factorization (DNMF) approach, which simultaneously considers the intrinsic geometry matrix structures of both the data manifold and the feature manifold. However, their DNMF approach neglects the high-order relationships among multiple sample points or multiple features. To solve the above problem, Zeng et al. [37] presented the hypergraph-regularized nonnegative matrix factorization (HNMF) method, which incorporates the hypergraph regularization term into NMF and constructs a hypergraph by considering high-order relationships among multiple sample points. However, HNMF is unable to produce a smooth and precise solution, because it does not take into account the smoothness of the basis matrix. Recently, Leng et al. [38] proposed the graph-regularized smooth nonnegative matrix factorization (GSNMF) method by incorporating the graph regularization term and the smoothing term into the basis NMF model, which considers the intrinsic geometric structures of the sample data and may produce a smooth and more accurate solution to the optimization problem with the addition of the graph regularization term and the smoothing constraint. However, in GSNMF, only the pairwise relationships between two sample points are considered, and the high-order relationships among multiple sample points are ignored.

Base on NTD, Qiu et al. [39] proposed a graph-regularized non-negative Tucker decomposition (GNTD) method, which is able to extract a representation based on low-dimensional parts from high-dimensional tensor data and retain geometric information. Immediately after, Qiu et al. [40] gave an alternating approximate gradient descent method to solve the proposed GNTD framework (UGNTD).

1.1. Problem Statement

In this paper, by incorporating hypergraph regularization and smoothing constraint terms into the standard NMF model, we propose a hypergraph-regularized smooth nonnegative matrix factorization (HGSNMF) method. The hypergraph regularization term considers the high-order relationships among multiple samples. The smoothing constraint term takes into account the smoothness of the basis matrix, which has been proven to be significant in data representation [41,42,43]. To solve the optimization problem of the HGSNMF model, we offer an effective optimization algorithm using the multiplicative update technique and theoretically prove the convergence of the HGSNMF algorithm. Finally, we conducted comprehensive experiments on five data sets to demonstrate the effectiveness of the proposed method.

1.2. Research Contribution

The main contributions of this work can be summarized as follows:

(1) Considering the complex relationships as pairwise relationships in a simple graph will inevitably lead to loss of the important information. Therefore, we construct the hypergraph regularization term to better discover the hidden semantics and simultaneously capture the underlying intrinsic geometric structure of high-dimensional spatial data samples. When constructing a hypergraph, each vertex of the hypergraph represents a data sample, and each vertex forms a hyperedge with its k nearest neighboring samples. Each hyperedge represents the similarity relationship between a group of samples with higher similarity.

(2) We consider the smoothing constraint of the basis matrix, which not only removes the noise of the basis matrix to make it smooth, but also obtains a smooth and more accurate solution to the optimization problem by combining the advantages of isotropic and diffusion anisotropic smoothing.

(3) We solve the optimization problem using an efficient iterative technique and conducted comprehensive experiments to empirically analyze our approach on five data sets; the experimental results validate the effectiveness of our proposed method.

The rest of the paper is organized as follows. In Section 2, we introduce some related works, including NMF, GSNMF, and hypergraph learning. In Section 3, we propose the novel HGSNMF model in detail, as well as its updating rules, and prove the convergence of the HGSNMF method. We also analyse the complexity of the proposed method. In Section 4, we provide the results extensive experiments that were conducted to validate the proposed method. Finally, we conclude this paper in Section 5.

2. Related Work

2.1. Nonnegative Matrix Factorization

Given a nonnegative matrix , each column of represents a data point. The purpose of NMF is to decompose a nonnegative matrix into two low-rank nonnegative factor matrices and , whose product is an approximation of the original matrix. In particular, the objective function of NMF is

where is the Frobenius norm of a matrix, is the basis matrix, and is the coefficient matrix (also called the encoding matrix). Obviously, the objective function is convex for or , but nonconvex for both and . Lee et al. [14] proposed the iterative multiplicative updating technique to solve the problem (1) as follows:

where is the transpose of .

2.2. Graph Regularization Smooth Nonnegative Matrix Factorization

GSNMF takes full account of the similarity between pairs of data points and the smoothness of the basis matrix, so it adds the graph regularity term and the smoothness term into the basis NMF model. Specifically, the objective function of GSNMF is

where denotes the trace of a matrix, , , is called as the graph Laplacian, and is a diagonal matrix with . is the weight matrix whose value is given by [2]

Leng et al. [38] used the iterative multiplicative updating technique to solve problem (2) as follows:

2.3. Hypergraph Learning

The simple graph only considers the pairwise geometric relationship between data samples; the complex internal structure of the data samples could not be used efficiently. To remedy this defect, the hypergraph takes into account the high-order geometric relationships among multiple samples, which can better capture the potential geometric information of the data [30]. Thus, hypergraph learning [44,45,46,47,48,49] is an extension of simple graph learning theory.

The hypergraph takes into account the high-order relationships among multiple vertices and consists of a non-empty vertex set and a non-empty hypergraph set . Each element is called a vertex, and each element is a subset of V, which is known as a hyperedge of G. G is a hypergraph defined on V if for and .

When constructing a hypergraph, we generate the hyperedge by calculating the Euclidean distance between the k nearest neighbors (kNN) of each vertex. The parameter k is manually set. The kNN method allows the following steps to select k sample data points to construct the hypergraph. First, it calculates the distance between the sample data and the individual sample data. Then, it sorts according to the increasing relationship of the distances. Finally, it selects the k sample data points with the smallest distance. Obviously, kNN has the advantages of being simple, easy to understand, and easy to implement. Thus, we use kNN to construct the hypergraph. An incidence matrix is used to describe the incidence relationship between a vertex and a hyperedge, which is formalized as if and as otherwise.

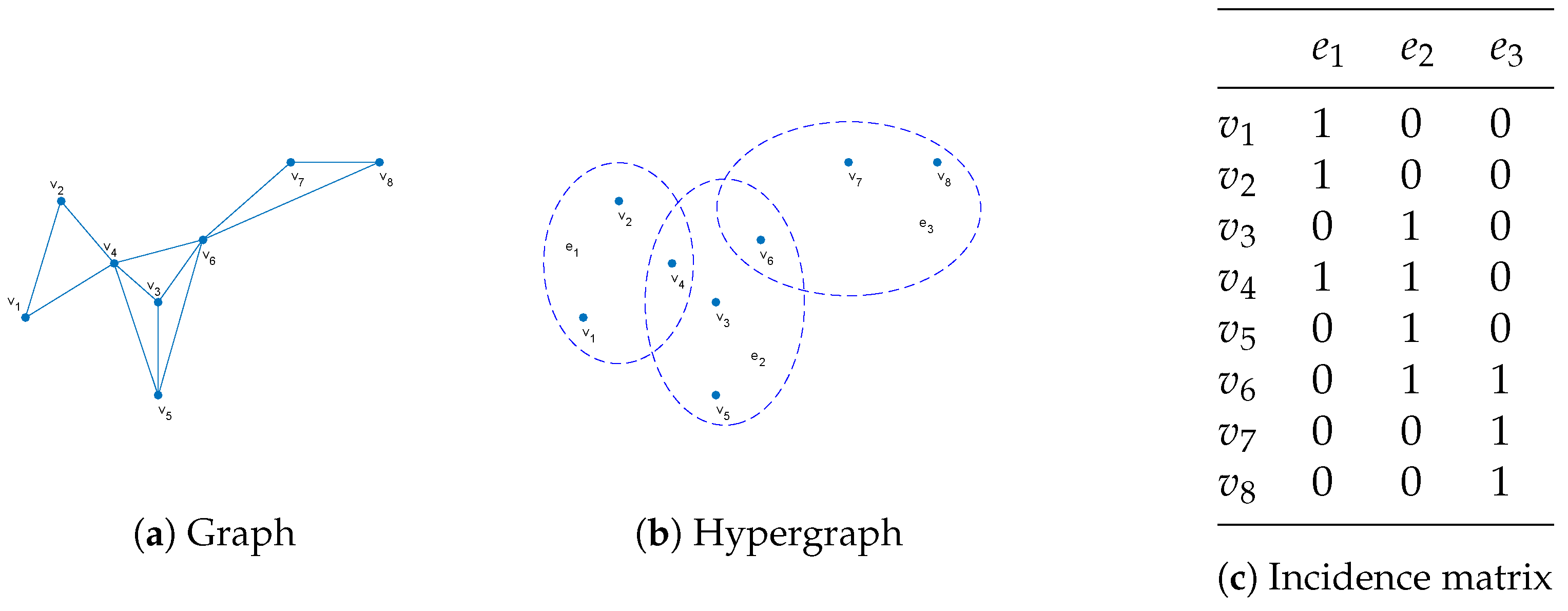

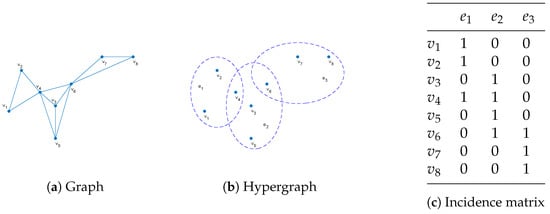

Figure 1 gives an illustration of the simple graph, the hypergraph , and an incidence matrix. If a vertex is in the k nearest neighbors of another vertex in the undirected simple graph, the two vertices are connected by an edge. The hypergraph considers the high-order relationships among multiple vertices and is made up of a non-empty vertex set and a non-empty hypergraph set . In Figure 1, the solid nodes stand for the vertices, while the node sets denoted by the solid line segment and the ellipses represent the hyperedges. Furthermore, each vertex in the hypergraph is connected to at least one hypheredge, which is associated with a weight, and each hyperedge can have multiple vertices.

Figure 1.

An illustration of the simple graph, the hypergraph , and an indication matrix.

Each hyperedge e can be assigned with a positive integer that represents the weight of the hyperedge. The degree of a vertex v and the degree of a hyperedge can be expressed as and , respectively. According to [44], the unnormalized hypergraph Laplacian matrix can be expressed as

where , is an incidence matrix, is a diagonal weight matrix composed of , and and denote the diagonal matrices composed of and , respectively.

There is a wide range for hypergraphs in computer vision, including classification and retrieval tasks. Feng et al. [9] put up the idea of a hypergraph neural network framework (HGNN) for learning data representation. Links are used in social networks. Chen et al. [50] made a methodical and thorough forecast regarding links. Yin et al. [51] presentend a hypergraph-regularized nonnegative tensor factorization for dimensionality reduction. In addition, Wang et al. [30] presented a hypergraph-regularized sparse NMF (HGLNMF) for hyperspectral unmixing, which incorporates the sparse term into HNMF. HGLNMF takes the sparsity of the coefficient matrix into account, and the hypergraph can simulate the higher-order relationship between multiple pixels by using multiple vertices in its hyperedges. Wu et al. [52] presented nonnegative matrix factorization with mixed hypergraph regularization (MHGNMF), by taking into account the higher-order information between the vertices. Some scholars apply nonnegative matrix factorization to multiple perspectives, Zhang et al. [53] presented semi-supervised multi-view clustering with dual-hypergraph-regularized partially shared nonnegative matrix factorization (DHPS-NMF). Huang et al. [54] presented diverse deep matrix factorization with hypergraph regularization for multiview data representation. To some extent, these approaches focus more on the multiple vertices hypergraph, which reflects the higher-order relationships between the multiple vertices, but they ignore the base matrix or its smoothness. To overcome this deficiency, we suggest the following hypergraph-regularized smooth nonnegative matrix factorization, which takes into account the higher-order relationships of numerous vertices, as well as the smoothness of the basis matrix.

3. Hypergraph-Regularized Smooth Nonnegative Matrix Factorization

In this section, we will describe the proposed HGSNMF approach in detail, as well as the iterative updating rules of two factor matrices. Then, the convergence of the proposed iterative updating rules is proven. Finally, the cost of calculating this approach is shown.

First, we give the construction of the hypergraph regularization term. Given a nonnegative data matrix , we expect that, if two data samples and are close, the corresponding encoding vectors and in the low-dimensional space are also close to each other. We encode geometrical information in the coefficient matrix hypergraph by linking each data sample with its k nearest neighbors and denoting their hypergraph connections with heat kernel weight:

where denotes the average distance among all the vertices.

With the weight matrix defined above, the hypergraph regularization of the matrix can be caculated by the following optimization problem:

where is the hypergraph Laplacian matrix of the hyergraph G and is defined by (3).

3.1. The Objective Function

To discover the intrinsic geometric structure information of a data set and produce a smooth and more accurate solution, we propose the HGSNMF method by incorporating hypergraph regularization and the smoothing constraint into NMF. The objective function of our HGSNMF is defined as follows:

where is the basis matrix, and , is the ith row and jth column entry of matrix , is the coefficient matrix, and and are the positive regularization parameters for balancing the reconstruction error in (5). The hypergraph regularization term and smoothing regularization term are presented in the second and the third terms, respectively. The hypergraph regularization term can more effectively discover the hidden semantics and simultaneously capture the underlying intrinsic geometric structure of the high-dimensional space data. The smoothing constraint of the basis matrix not only smooths the basis matrix by removing noise, but it also produces a smooth and more accurate solution to the optimization problem by combining the advantages of isotropic and diffusion anisotropic smoothing. Then, we give the detailed derivation of the updating rules, the theoretical proof of convergence, and an analysis of the computing complexity of the HGSNMF approach, as well as further comparative experiments.

3.2. Optimization Method

The objective function in (5) is not convex in both and , so it is unrealistic to find the global optimal solution. Thus, we can only obtain the local optimal solution by using the iterative method. There are the multiplicative update, projective gradient, alternating direction multiplier, and dictionary learning algorithm for solving optimization problems. Because the multiplicative update algorithm has the advantages of convergence, simple operation, and small computation, we use the multiplicative update algorithm to solve optimization problems. We can turn the objective function into the following unconstrained objective function by using the Lagrange multiplier:

where , , and and are the Lagrange multipliers for the constrains and , respectively.

3.3. Convergence Analysis

In this part, we demonstrate the convergence of our proposed HGSNMF in (5) by utilizing the updating rules (8) and (9). First of all, we introduce some related definitions and lemmas.

Definition 1

([14]). is an auxiliary function of if satisfies the condition

The auxiliary function plays an important role due to the following lemma.

Lemma 1

([14]). If G is an auxiliary function of F, then F is nonincreasing under the updating rule

To prove the convergence of HGSNMF under the updating step for in (8), we fix the matrix . For any element in , we use to denote the part of the objective function that is relevant only to . The first and second derivatives of are given as follows:

and

respectively.

Lemma 2.

The function

is an auxiliary function of , which is only relevant to .

Proof.

Proof.

Next, we fix the matrix . For any element in , we use to denote the part of the objective function that is relevant only to . By calculation,

and

Lemma 3.

The function

is an auxiliary function of , which is only relevant to .

Proof.

Proof.

Similar to NMF, it is known from Theorems 1 and 2 that the convergence of the model (5) can be guaranteed under the updating rules of (8) and (9).

The specific procedure for finding the local optimal and of HGSNMF is summarized in Algorithm 1.

For the specific implementation of Algorithm 1, we first repeat HGSNMF 10 times on the original data, and then this low-dimensional reduced data repeats K-means clustering 10 times.

| Algorithm 1 HGSNMF algorithm. |

| Input: Data matrix . The number of neighbors k. The algorithm |

| parameters r, p and regularization parameters , . The stopping criterion , and the maximum |

| number of iterations maxiter. Let . |

| Output: Factors and ; |

| 1: Initialize and ; |

| 2: Construct the weight matrix using (4), and calculate the |

| matrix , ; |

| 3: for maxiter do |

| 4: Update and Update according to (8), (9), respectively. |

| 5: Compute the objective function value of (5) to denote . |

| 6: if |

| Break and return . |

| 7: end if |

| 8: end for |

3.4. Computational Complexity Analysis

In this section, we analyze the computational complexity of the proposed HGSNMF method compared to other nonnegative matrix methods. The fladd, flmlt, and fldiv denote floating point addition, multiplication, and division, respectively. The notation denotes the computational cost. The parameters n, m, r, and k denote the number of sample points, features, factors, and nearest neighbors to construct an edge or hyperedge, respectively.

According to the updating rules, we calculate the arithmetic operations of each iteration in HGSNMF and summarise the results of the proposed HGSNMF. From Table 1, we can see that the total cost of our proposed HGSNMF is .

Table 1.

Computational operation counts for each iteration in NMF, GNMF, HNMF, GSNMF, HGLNMF, and HGSNMF.

4. Numerical Experimentation

In this section, we compare the results of data clustering on five popular data sets to estimate the performance of the proposed HGSNMF method with the related state-of-the-art methods, such as K-means, NMF [13], GNMF [2], HNMF [37], GSNMF [38], HGLNMF [30], GNTD [39], and UGNTD [40]. All tests were performed on a computer with a 2-Ghz Intel(R) Core(TM) i5-2500U CPU and 8-GB memory 64-bit using MATLAB R2015a in Windows 10. The stopping criterion was , and the maximum number of iterations was set to .

4.1. Data Sets

The clustering performance was evaluated on five widely used data sets, including COIL20 (https://www.cs.columbia.edu/CAVE/software/softlib/coil-20.php (accessed on 26 October 2021)), YALE, Mnist, ORL (https://www.cad.zju.edu.cn/home/dengcai.Data/data.html, accessed on: 26 October 2021), and Georgia ((https://www.anefian.com/research/face-reco.htm (accessed on 26 October 2021)). The important statistical information of the five data sets is listed in Table 2, with more details given as follows.

Table 2.

Statistics of the five data sets.

(1) COIL20: The data set contains 72 grey-scale images for each of 20 objects viewed at varying angles.They were resized to .

(2) ORL: The data set contains 10 different images of each of 40 human subjects. For some subjects, the images were taken at different times and different light conditions. They capture different facial expressions and different facial details. We resized them to .

(3) YALE: The data set contains 11 grey-scale images for each of 15 individuals viewed at different facial expressions or configurations. These images were resized to .

(4) Georgia: The data set contains 15 color JPEG grey face images for each of 50 people, with cluttered backgrounds for each object. We also resized them to .

(5) Mnist: The data set contains 700 grey images of handwritten digits from zero to nine. During the experiment, we randomly selected 50 digit images from each category. Each image was resized to .

4.2. Evaluation Metrics

Two popular evaluation metrics were used: the clustering accuracy (ACC) and the normalized mutual information (NMI) [55], which evaluate the clustering performance by comparing the obtained cluster label of each sample with the label provided by the data set. ACC is defined as follows

where is the correct label provided by the real data set, is the clustering label obtained by the clustering result, n is the total number of documents, is the delta function that equals one if and equals to zero otherwise, and is a mapping function that maps each cluster label to a given equivalent label from the data set. By using the Kuhn–Munkers algorithm [56], one can find the best mapping.

Given two clusters C and , the mutual information metric can be defined as follows

where and denote the probabilities that an arbitrarily chosen sample from the data set belongs to the clusters and , respectively, and denotes the joint possibility that this arbitrarily selected image belongs to the cluster and the cluster at the same time. The normalized mutual information (NMI) is defined as follows

where C is a set of the true labels, and is a set of clusters obtained from the clustering algorithms. and are the entropies of C and , respectively.

4.3. Performance Evaluations and Comparisons

To evaluate the performance of our proposed method, we chose K-means, NMF, GNMF, HNMF, and GSNMF as the comparison clustering algorithms:

(1) K-means: The K-means performs clustering on the original data; we employed it to uncove whether low-dimension data can improve clustering performance on high-dimension data.

(2) NMF [13]: The original NMF represents the data by imposing nonnegative constraints on the factor matrices.

(3) GNMF [2]: Based on NMF, it constructs a local geometric structure of the original data space as a regularization term.

(4) HNMF [37]: It incorporates the hypergraph regularization term into NMF.

(5) GSNMF [38]: It incorporates both graph regularization and the smoothing constraint into NMF.

(6) HGLNMF [30]: It incorporates both hypergraph regularization and the sparse constraint into NMF.

(7) GNTD [39]: It is a graph-regularized nonnegative Tucker decomposition method that incorporates the graph regularization term into the NTD, which can preserve the geometrical information for high-dimensional tensor data.

(8) UGNTD [40]: It is a graph-regularized nonnegative Tucker decomposition method that incorporates the graph regularization term into the NTD, and the alternating proximate gradient descent method is used to optimize the GNTD model.

(9) HGSNMF: We proposed the hypergraph-regularized smooth nonnegative matrix factorization by incorporating hypergraph regularization term and smoothing constraint into NMF.

The general clustering number k was fixed, and we describe the experiment as follows.

For the NMF, GNMF, HNMF, GSNMF, HGLNMF, and HGSNMF, we initialized two low-rank nonnegative matrix factors using a random strategy in the experiments. Next, we set the dimensionality of the low-dimensional space to the number of clusters, and we used the classical K-means method to cluster the samples in the new data representation space.

For the NMF, GNMF, HNMF, GSNMF, HGLNMF, and HGSNMF, we used the Frobenius norm as the reconstruction error of the objective function. For the GNMF, GSNMF, and GNTD, the heat kernel weighting scheme was used to generate the five-nearest-neighbors graph for constructing the weight matrix. For the HNMF, HGLNMF, and HGSNMF, the heat kernel weighting scheme was used to generate the five-nearest-neighbors graph for constructing the weight matrix in the hypergraph. For the GNMF, GSNMF, and GNTD, the graph regularization parameter was set to 100 for each of them. For the UGNTD, the graph regularization parameter was set to 1000. For the HNMF and HGLNMF, the hypergraph regularization parameter was set to 100 for each of them, and for the GSNMF, the parameter was set as . For the HGLNMF, the parameter µ was tuned from , and the best results are reported. For the GNTD, for the Mnist data set, the first two directional sizes of the kernel tensor were chosen from , and the third direction was taken as the class number k. For the UGNTD, the size of the core tensor was set to 30 × 3 × 10. For the HGSNMF, the parameters and were tuned from for COIL20, for YALE, for ORL, for Georgia, and for Mnist; the parameter p was tuned from for COIL20, for YALE, for ORL, for Georgia, and for Mnist. The best results are reported.

For K-means, we repeated K-means clustering 10 times on the original data. For the NMF, GNMF, HNMF, GSNMF, HGLNMF, and HGSNMF, we first repeated NMF, GNMF, HNMF, GSNMF, HGLNMF, GNTD, UGNTD, and HGSNMF 10 times on the original data and then repeated K-means clustering 10 times on this low-dimensional reduced data, respectively. We report the average clustering performance and standard deviation in Table 3, Table 4, Table 5, Table 6, Table 7, Table 8, Table 9, Table 10, Table 11 and Table 12, where the best results are in bold.

Table 3.

Normalized mutual information (NMI) on COIL20 data set.

Table 4.

Clustering accuracy (ACC) on COIL20 data set.

Table 5.

Normalized mutual information (NMI) on YALE data set.

Table 6.

Clustering accuracy (ACC) on YALE data set.

Table 7.

Normalized mutual information (NMI) on ORL data set.

Table 8.

Clustering accuracy (ACC) on ORL data set.

Table 9.

Normalized mutual information (NMI) on Georgia data set.

Table 10.

Clustering accuracy (ACC) on Georgia data set.

Table 11.

Normalized mutual information (NMI) on Mnist data set.

Table 12.

Clustering accuracy (ACC) on Mnist data set.

From these experimental results in Table 3, Table 4, Table 5, Table 6, Table 7, Table 8, Table 9, Table 10, Table 11 and Table 12, we have the following conclusions.

(1) The clustering performance of the proposed HGSNMF method on all of the data was better than that of the other algorithms in most cases, which shows that the HGSNMF method can find more discriminative information for data. For COIL20, YALE, ORL, Georgia, and Mnist, the average clustering ACC of the HGSNMF method was more than 1.99%, 1.89%, 0.54%, 3.37%, and 7.21% higher than the second-best method, respectively, and the average clustering NMI of the HGSNMF method was more than 1.84%, 2.26%, 0.6%, 2.21%, and 7.25% higher than the second-best method, respectively.

(2) The HGSNMF method was better than the HNMF method in most cases, because the smoothing constraint could combine the merits of isotropic and anisotropic diffusion to yield smooth and more accurate solutions.

(3) The HGSNMF method was also better than the GSNMF method in most cases, which indicates that the hypergraph regularization term can discover the underlying geometric information better than the simple graph regularization term. The HGSNMF method did not perform as well as the GSNMF method for some given results. In the ORL dataset in Table 8, the HGSNMF method had a lower clustering accuracy metric than the GSNMF method when classes 30 and 35 were selected. This is because the hyperparameter was selected from , which increased the error of the objective function and, therefore, yielded a lower accuracy.

(4) The HGSNMF method was also better than the GNTD and UGNTD method in most cases for the Mnist date set, which indicates that the hypergraph regularization term can discover the underlying geometric information better than the simple graph regularization term. The HGSNMF method did not perform as well as the GNTD and UGNTD on some given results, because the tensor maintained the internal structure of the high-dimensional data well.

For the different numbers of clusters on the YALE and Geogria data sets, we examined the computation time based on NMF, GNMF, HNMF, GSNMF, and HGSNMF. In these experiments, we chose the aforementioned identical conditions, including parameters and iteration times. From Table 13 and Table 14, it can be observed that the NMF had the shortest computation time, because it had no regularization term. The HNMF, GSNMF, and HGSNMF extended the GNMF by adding hypergraph regularization, the smoothing constraint, and the above two terms, respectively; the computation times of the HNMF, GSNMF, and HGSNMF were more than the GNMF. However, the computation time of the HGSNMF was less than the HNMF in most cases, thus showing the computational advantage of the HGSNMF using the smoothing constraint. In the YALE data set, the computation time of the HGSNMF was smaller than the GSNMF, thereby indicating that the hypergraph regularization term sped up the convergence of the proposed HGSNMF method.

Table 13.

Comparisons of computation time in the YALE.

Table 14.

Comparisons of computation time in the Georgia.

4.4. Parameters Selection

There are three parameters in our proposed HGSNMF algorithm: the regularization parameters and p. When , and p are 0, the proposed HGSNMF method reduces to the NMF [13]; when and p are 0, the proposed HGSNMF method reduces to the HNMF [14]. In the experiments, we set for all graph-based and hypergraph-based methods for all data sets. To test the effect of each varying parameter, we fixed the other varying parameters as described Section 4.3.

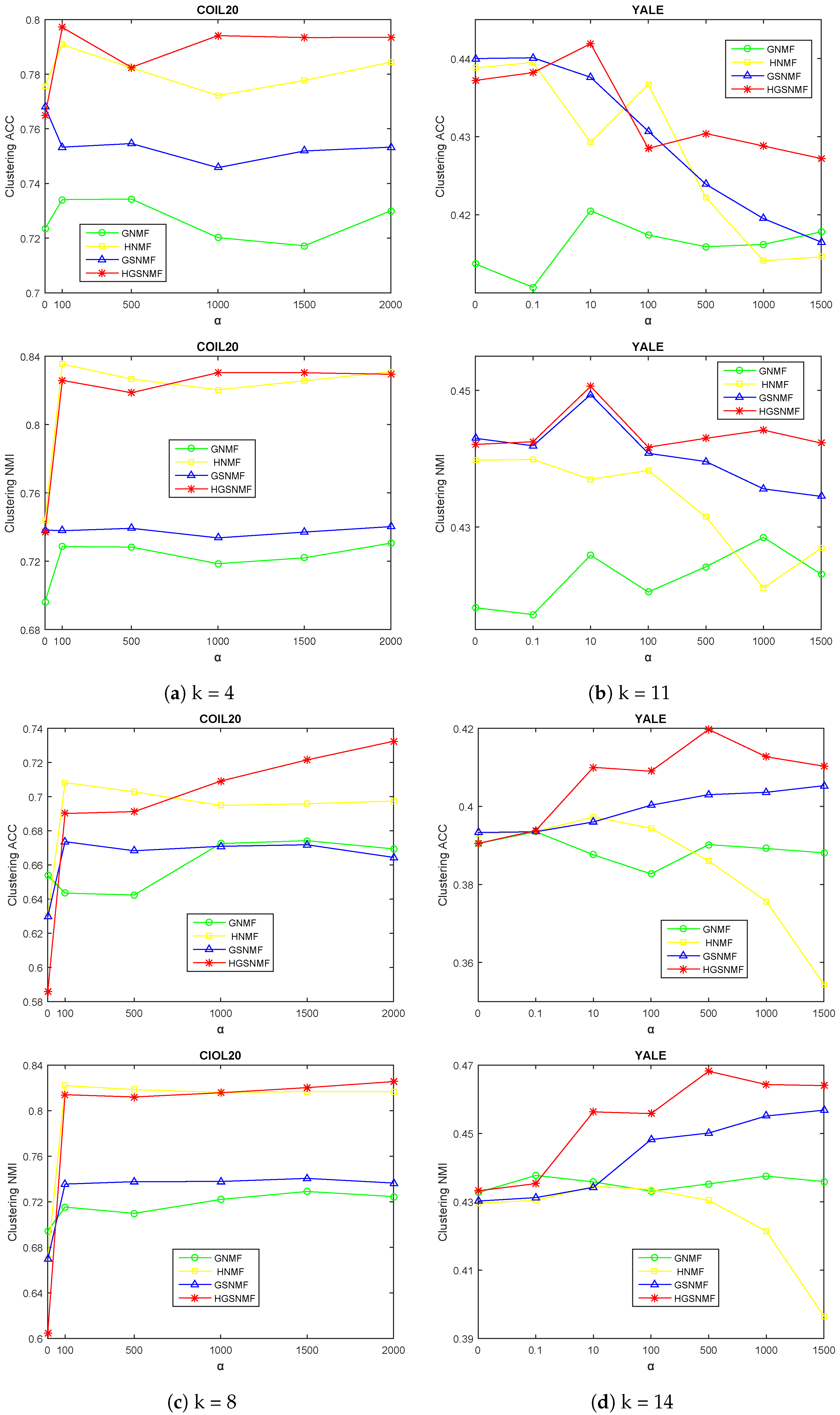

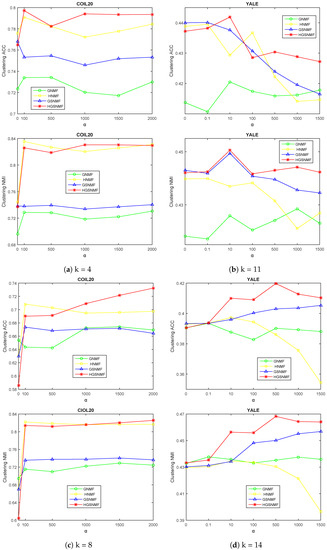

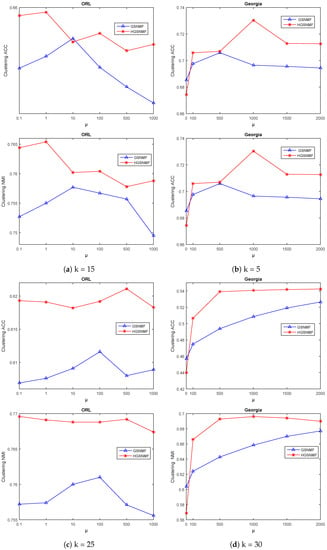

Firstly, we adjusted the parameter for the GNMF, GSNMF, HNMF, and HGSNMF methods. In the HGSNMF, for , we set and . In the HGSNMF, for , we set and for the COIL20 data set; for and , we set and for the YALE data set; for , we set and ; for , we set and for the ORL data set; for , we set and ; for , we set and for the Geogria data set. Figure 2 and Figure 3 demonstrates the accuracy and the normalized mutual information variations with respect to for four data sets.

Figure 2.

Performance comparison of GNMF, HNMF, GSNMF, and HGSNMF for varying the parameter . Each column indicates the COIL20 and YALE data sets.

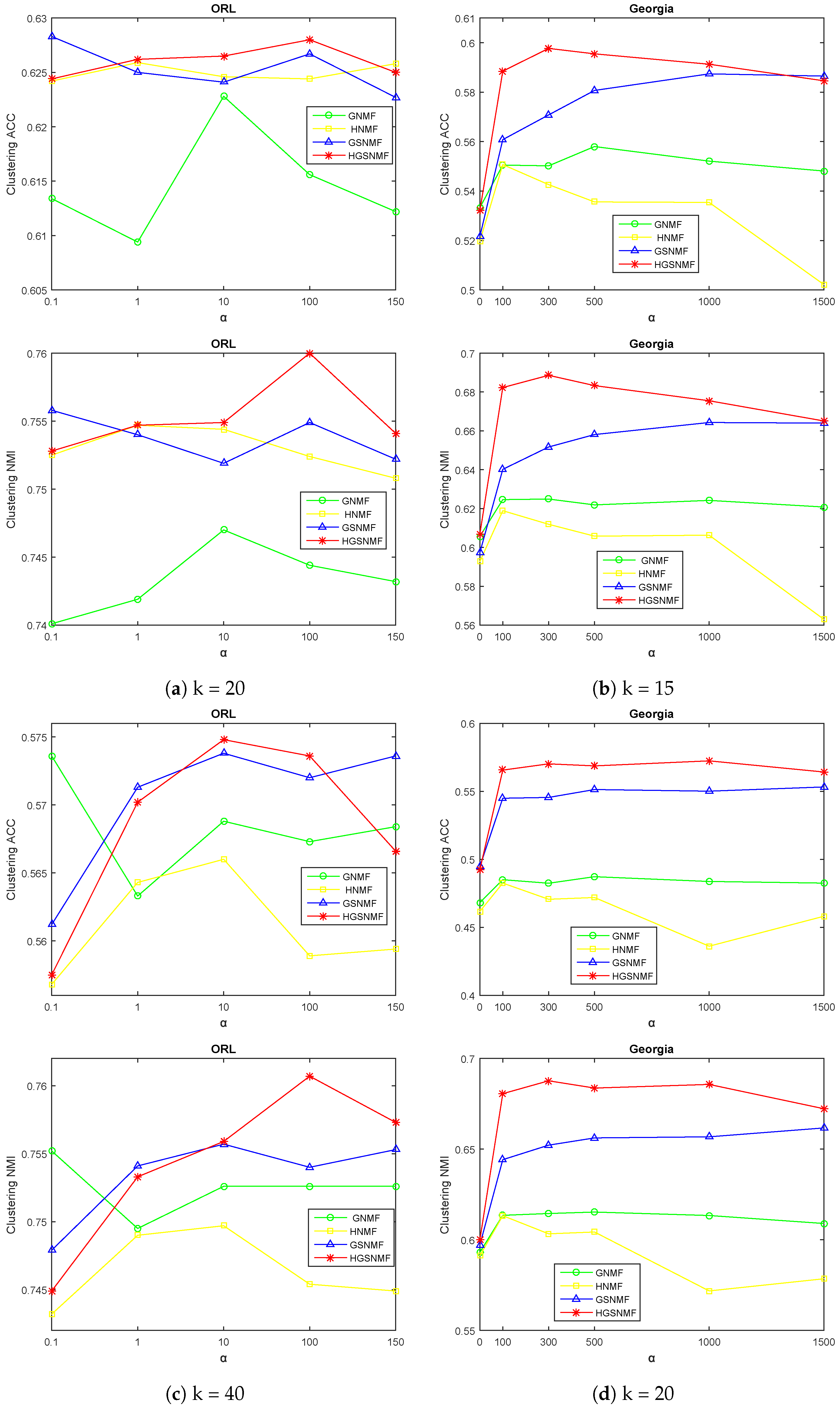

Figure 3.

Performance comparison of GNMF, HNMF, GSNMF, and HGSNMF for varying the parameter . Each column indicates the for ORL and Georgia data sets.

As can be seen from Figure 2 and Figure 3, the HGSNMF performed better than the other algorithms in most cases. We can see that the performance of the HGSNMF was relatively stable with respect to the parameter for some data sets.

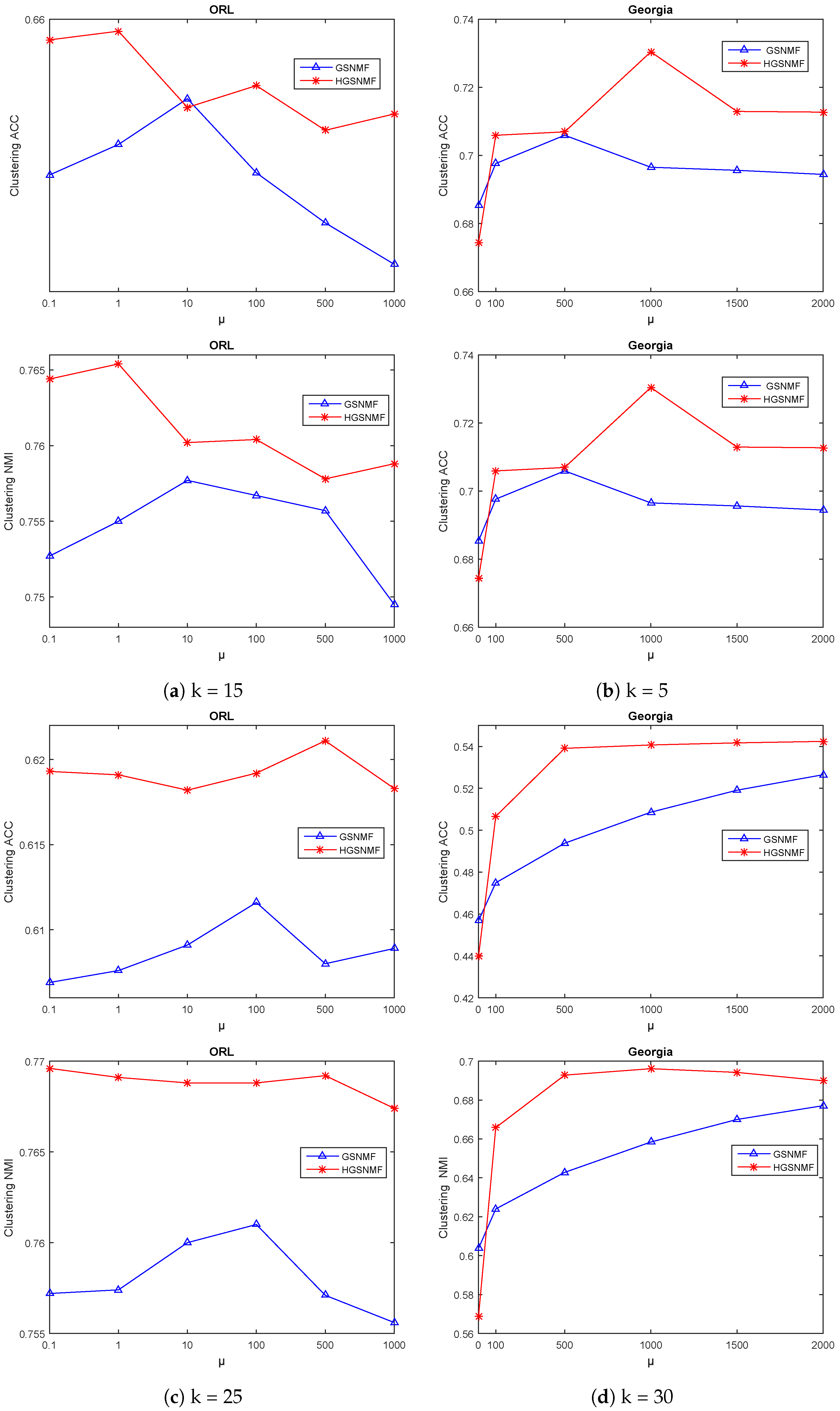

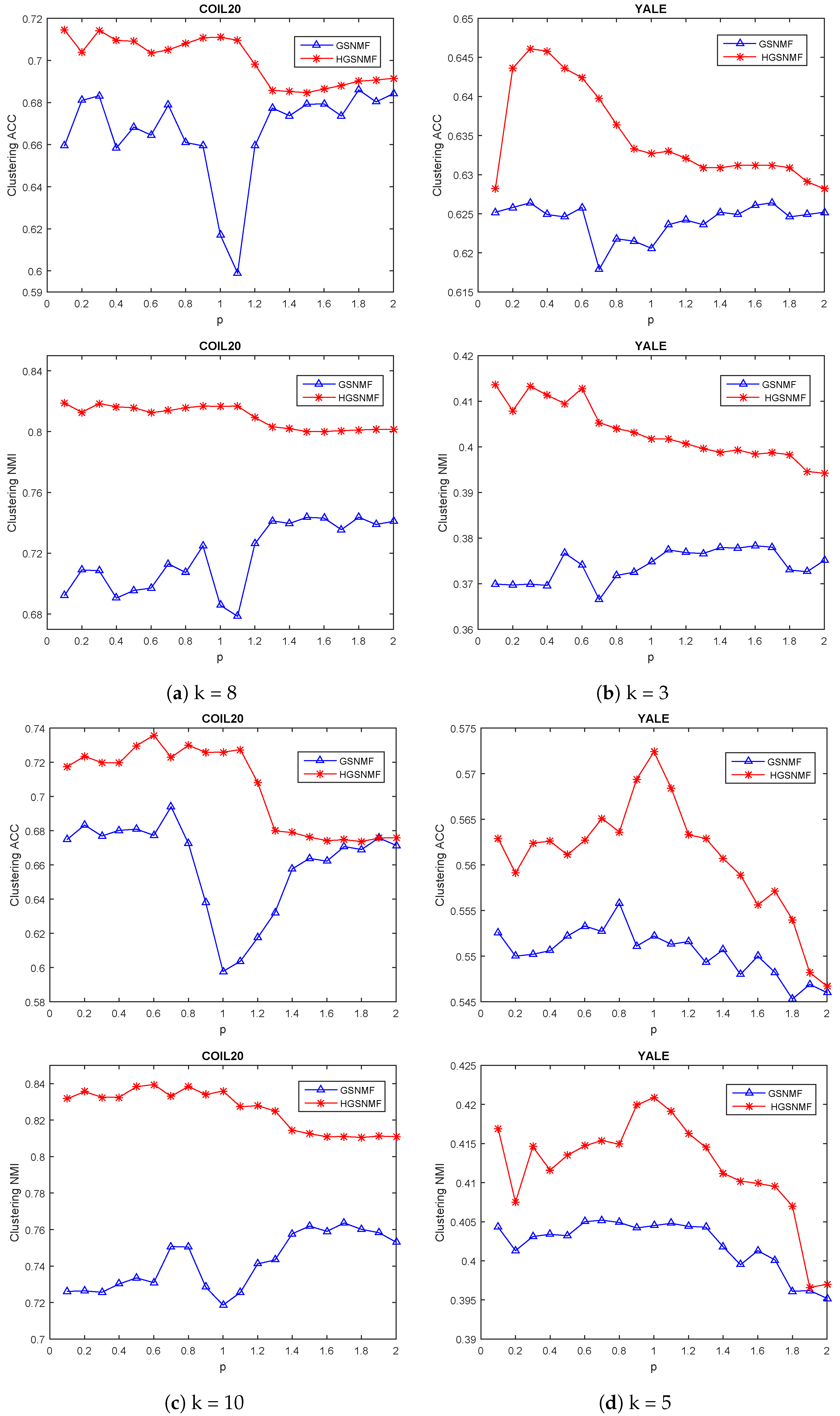

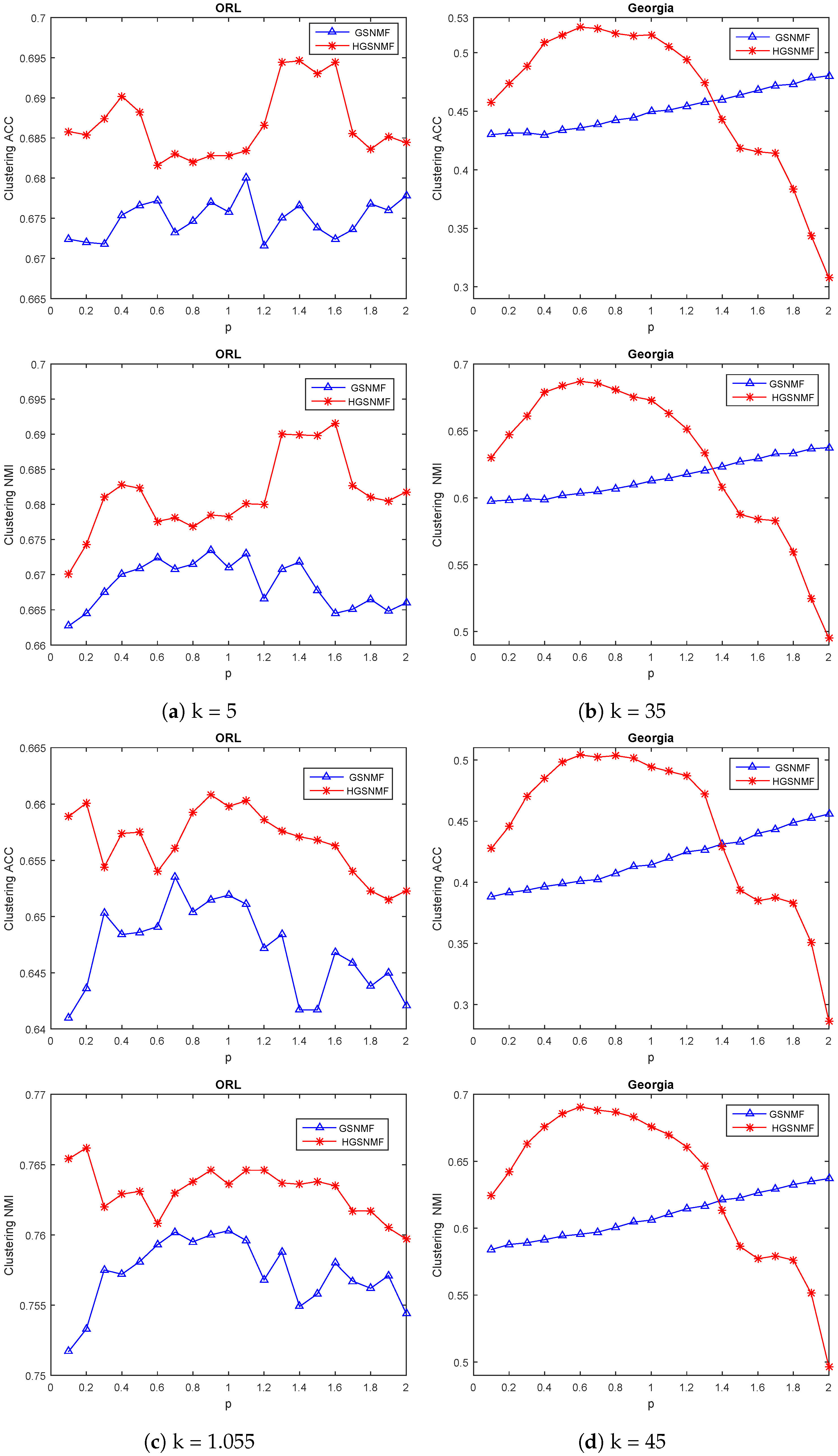

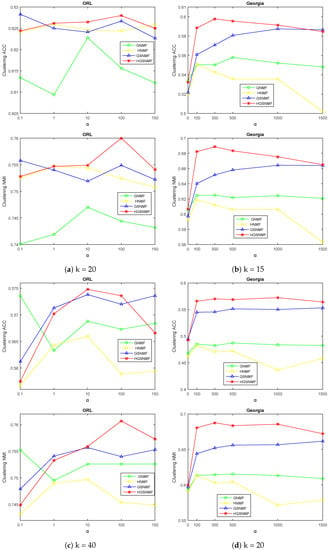

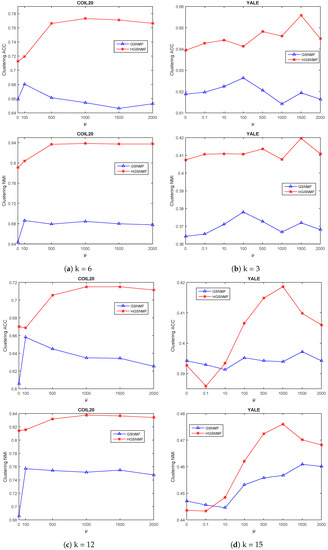

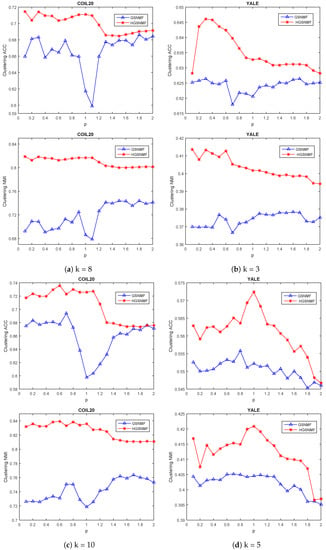

Next, we adjusted the parameter for the GSNMF and HGSNMF for four data sets, and we set and in the GSNMF. In the HGSNMF, for , we set and ; for , we set and for the COIL20 data set; for , we set and ; for , we set , , and for the YALE data set; for , we set , ; for , we set and for the ORL data set; for and , we set and for the Georgia data set. As can be seen from Figure 4 and Figure 5, the HGSNMF performed better than the GSNMF in most cases for the four data sets, and the performance of the HGSNMF was stable with respect to the parameter for some data sets.

Figure 4.

Performance comparison of GSNMF and HGSNMF for varying parameter . Each column indicates the COIL20 and YALEdata sets.

Figure 5.

Performance comparison of GSNMF and HGSNMF for varying parameter . Each column indicates the ORL and Georgia data sets.

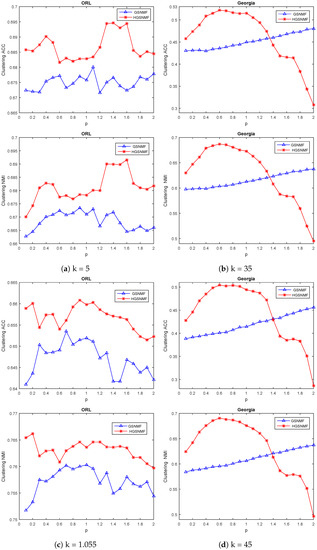

Finally, we considered the variation of the parameter p. In the HGSNMF, for , we set ; for , we set and for the COIL20 data set; for , we set and ; for , we set and for the YALE data set; for , we set , for , and we set and for the ORL data set; for and , we set and for the Georgia data set. As shown in Figure 6 and Figure 7, the performance of the HGSNMF was relatively stable and very good with respect to the parameter p varying from 0.1 to 2 for some data sets.

Figure 6.

Performance comparison of GSNMF, and HGSNMF for varying parameter p for COIL20 and YALEdata sets.

Figure 7.

Performance comparison of GSNMF, and HGSNMF for varying parameter p forORL and Georgia data sets.

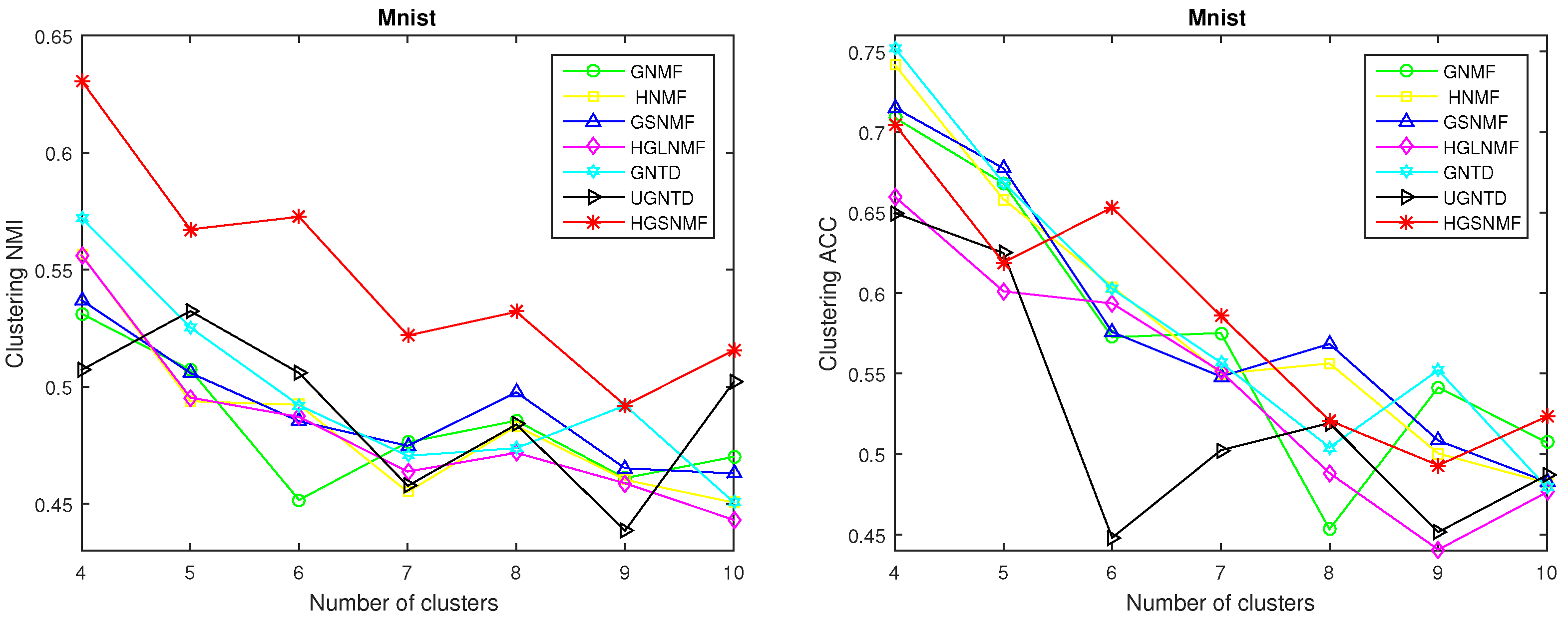

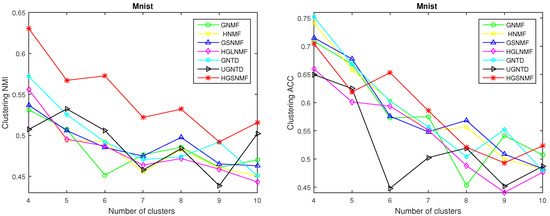

For the Mnist data set, different classes were arbitrarily selected for clustering, and the clustering effects of the seven methods were compared. For the experiments, the graph regularization term parameter was set to 100 in the GNMF, GSNMF, GNTD, and UGNTD, and the hypergraph regularization parameter was set to 100 in the HNMF, HGLNMF, and HGSNMF; µ was also set to 100 in the GSNMF, HGLNMF, and HGSNMF, and the parameter p was set to 1.7 in the GSNMF and HGSNMF. In the GTND and UGNTD, the core tensor was the same as in the experiments in Section 4.3. From Figure 6 and Figure 7, it is clear that our proposed HGSNMF method clustered better than the other methods in most cases with the same selection of parameters on the Mnist data set.

Figure 8 compares performance of GNMF, HNMF, GSNMF, HGLNMF, GNTD, UGNTD, and HGSNMF for number of clusters k for Mnist data set.

Figure 8.

Performance comparison of GNMF, HNMF, GSNMF, HGLNMF, GNTD, UGNTD, and HGSNMF for number of clusters k for Mnist data set.

4.5. The Converage Analysis

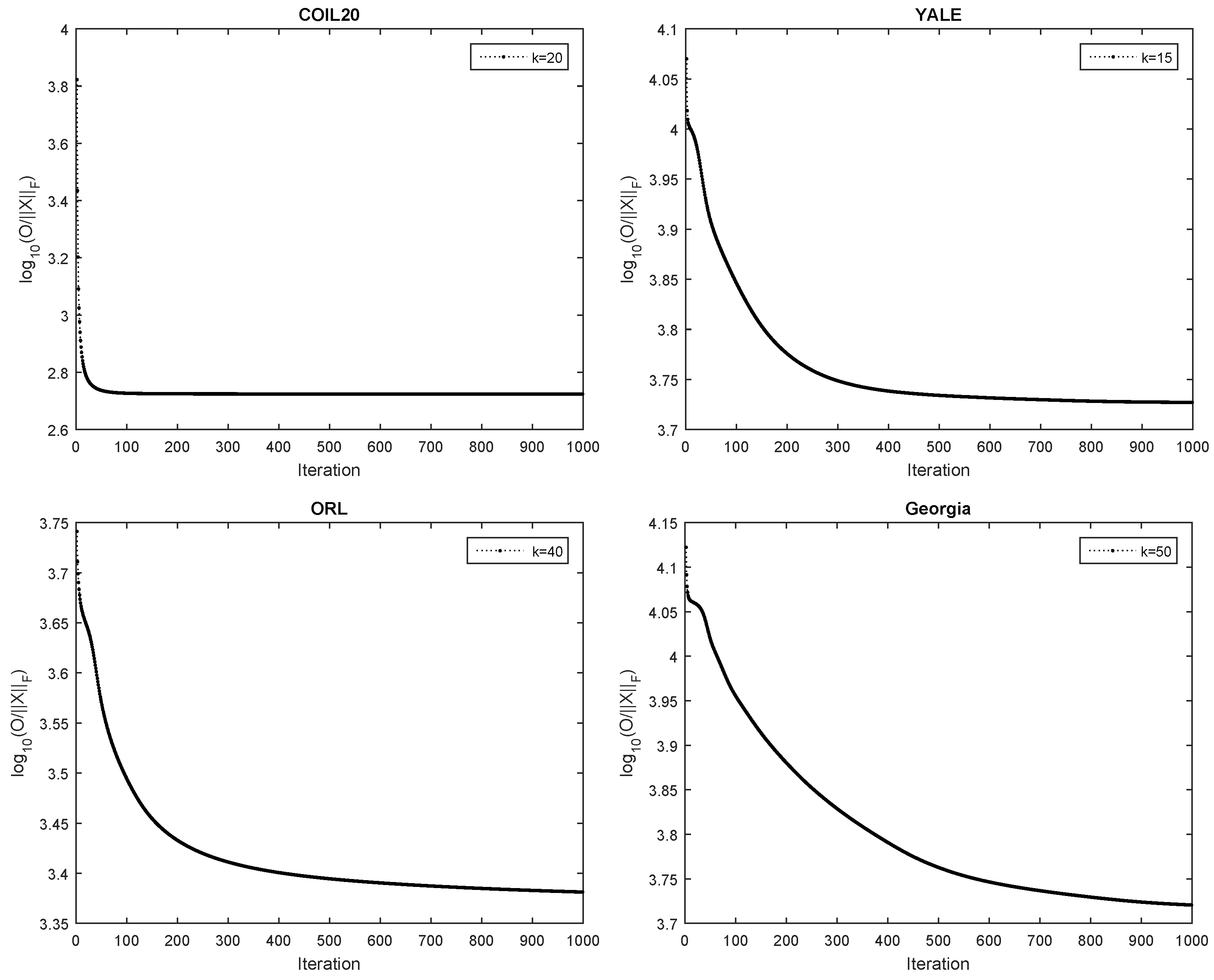

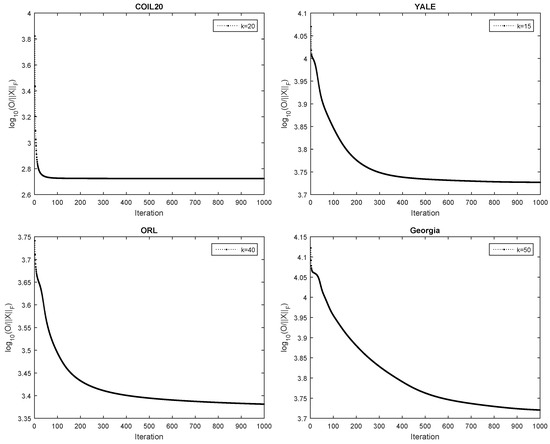

As described in Section 3, the convergence of the proposed HGSNMF method has been proven theoretically. In this section, we analyze the convergence of the proposed method through experiments. Figure 9 shows the convergence curves of our HGSNMF method for three data sets. As can be seen from Figure 9, the objective function was monotonically decreasing and tended to converge after 300 iterations.

Figure 9.

The relative residuals versus the number of iterations for HGSNMF for four data sets.

5. Conclusions

In this paper, we proposed a hypergraph-regularized smooth constrain NMF method for data representation by incorporating the hypergraph regularization term and smoothing constraint into NMF. The hypergraph regularization term can better capture the intrinsic geometry structure of high-dimension space data more comprehensively than a simple graph; the smoothing constraint may produce a smooth and more accurate solution to the optimization problem. We presented the updating rulers and proved the convergence of our HGSNMF method. Experimental results for five real-world data sets show, as follows, for the COIL20,YALE, ORL, Georgia, and Mnist, the average clustering ACC of our proposed HGSNMF method was more than , , , , and higher than the seconded-best method, respectively, and the average clustering NMI of our proposed HGSNMF method was more than , , , , and higher than the seconded-best method, respectively. Thus, our proposed method can achieve a better clustering effect than other state-of-the-art methods in most cases.

Our HGSNMF method has some limitations: it only considers the hypergraph with high similarity among multiple data points and the smoothness of the basis matrix, and other constraints such as sparse, multiple graphs, and hypergraphs can be considered. The HGSNMF method was only applied to the clustering problem of images. In the future, we can apply hyperspectral mixing, recommended systems, and other aspects. In addition, because the vectorization of the matrix will destroy the internal structure of the data, we will extend to consider nonnegative tensor decomposition in the future.

Author Contributions

Conceptualization, Y.X. and Q.L.; methodology, Y.X. and L.L.; software, Q.L. and Z.C.; writing—original draft preparation, Y.X.; writing—review and editing, Q.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was partially funded by the National Natural Science Foundation of China under grants 12061025 and 12161020 and partially funded by the Natural Science Foundation of the Educational Commission of Guizhou Province under grants Qian-Jiao-He KY Zi [2019]186, [2019]189, and [2021]298; this research also received funding from the Guizhou Provincial Basis Research Program (Natural Science) (QKHJC[2020]1Z002 and QKHJC-ZK[2023]YB245).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available upon request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| PCA | Principal component analysis |

| LDA | Linear discriminant analysis |

| SVD | Singular value decomposition |

| NMF | Nonnegative matrix factorization |

| HU | Hyperspectral unmixing |

| ONMF | Orthogonal nonnegative matrix tri-factorizators |

| GNMF | Graph regularized nonnegative matrix factorization |

| DNMF | Graph dual regularization nonnegative matrix factorization |

| HNMF | Hypergraph regularized nonnegative matrix factorization |

| GSNMF | Graph regularized smooth nonnegative matrix factorization |

| HGLNMF | Hypergraph regularized sparse nonnegative matrix factorization |

| MHGNMF | Nonnegative matrix factorization with mixed hypergraph regularization |

| DHPS-NMF | Dual hypergraph regularized partially shared nonnegative matrix factorization |

| HGSNMF | Hypergraph regularized smooth nonnegative matrix factorization |

| ACC | Accuracy |

| NMI | Normalized mutual information |

| MI | Mutual information |

References

- Pham, N.; Pham, L.; Nguyen, T. A new cluster tendency assessment method for fuzzy co-clustering in hyperspectral image analysis. Neurocomputing 2018, 307, 213–226. [Google Scholar] [CrossRef]

- Cai, D.; He, X.; Han, J.; Huang, T. Graph regularized nonnegative matrix factorization for data representation. IEEE Trans. Pattern Anal. Match.Intell. 2011, 33, 1548–1560. [Google Scholar]

- Li, S.; Hou, X.; Zhang, H.; Cheng, Q. Learning spatially localized, parts-based representation. In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2001), Kauai, HI, USA, 8–14 December 2011; Volume 1, pp. 207–212. [Google Scholar]

- He, X.; Yan, S.; Hu, Y.; Niyogi, P.; Zhang, H. Face recognition using laplacian faces. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 328–340. [Google Scholar]

- Liu, H.; Wu, Z.; Li, X.; Cai, D.; Huang, T. Constrained nonnegative matrix factorization for image representation. IEEE Trans. Pattern Anal. Mach.Intell. 2011, 34, 1299–1311. [Google Scholar] [CrossRef] [PubMed]

- Cutler, A.; Cutler, D.; Stevens, J. Random forests. In Ensemble Machine Learning: Methods and Applications; Springer: Berlin, Germany, 2012; pp. 157–175. [Google Scholar]

- Riedmiller, M.; Lernen, A. Multi layer perceptron. In Machine Learning Lab Special Lecture; University of Freiburg: Freiburg, Germany, 2014; pp. 7–24. [Google Scholar]

- Wu, L.; Cui, P.; Pei, J. Graph Neural Networks; Springer: Singapore, 2022. [Google Scholar]

- Feng, Y.; You, H.; Zhang, Z.; Ji, R.; Gao, Y. Hypergraph neural networks. In Proceedings of the AAAI Conference on Artificial Intelligence, Hilton, HI, USA, 27 January–1 February 2019; Volume 33, pp. 3558–3565. [Google Scholar]

- Kirby, M.; Sirovich, L. Application of the karhunen loeve procedure for the characterization of human faces. IEEE Trans. Pattern Anal. Mach. Intell. 1990, 12, 103–108. [Google Scholar] [CrossRef]

- Strang, G. Introduction to Linear Algebra; Wellesley-Cambridge: Wellesley, MA, USA, 2009. [Google Scholar]

- Martinez, A.; Kak, A. Pca versus lda. IEEE Trans. Pattern Anal. Mach. Intell. 2001, 23, 228–233. [Google Scholar] [CrossRef]

- Lee, D.; Seung, H. Learning of the parts of objects by non-negative matrix factorization. Nature 1999, 401, 788–791. [Google Scholar] [CrossRef]

- Lee, D.; Seung, H. Algorithms for nonnegative matrix factorization. In Proceedings of the International Conference on Neural Information Processing Systems, Denver, CO, USA, 28–30 November 2000; Volume 13, pp. 556–562. [Google Scholar]

- Tucker, L. Some mathematical notes on three-mode factor analysis. Psychometrika 1966, 31, 279–311. [Google Scholar] [CrossRef]

- Kim, Y.; Choi, S. Nonnegative Tucker decomposition. In Proceedings of the 2007 IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007; pp. 1–8. [Google Scholar]

- Kolda, T.; Bader, B. Tensor decompositions and applications. SIAM Rev. 2009, 51, 455–500. [Google Scholar] [CrossRef]

- Che, M.; Wei, Y.; Yan, H. An efficient randomized algorithm for computing the approximate tucker decomposition. J. Sci. Comput. 2021, 88, 1–29. [Google Scholar] [CrossRef]

- Pan, J.; Ng, M.; Liu, Y.; Zhang, X.; Yan, H. Orthogonal nonnegative Tucker decomposition. SIAM J. Sci. Comput. 2021, 43, B55–B81. [Google Scholar] [CrossRef]

- Ding, C.; He, X.; Simon, H. On the equivalence of nonnegative matrix factorization and spectral clustering. In Proceedings of the 2005 SIAM International Conference on Data Mining (SDM05), Newport Beach, CA, USA, 21–23 April 2005; pp. 606–610. [Google Scholar]

- Ding, C.; Li, T.; Peng, W.; Park, H. Orthogonal nonnegative matrix tri-factorizations for clustering. In Proceedings of the 12th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Philadelphia, PA, USA, 20–23 August 2006; pp. 126–135. [Google Scholar]

- Pan, J.; Ng, M. Orthogonal nonnegative matrix factorization by sparsity and nuclear norm optimization. SIAM. J. Matrix Anal. Appl. 2018, 39, 856–875. [Google Scholar] [CrossRef]

- Guillamet, D.; Vitria, J.; Schiele, B. Introducing a weighted nonnegative matrix factorization for image classification. Pattern Recognit. Lett. 2003, 24, 2447–2454. [Google Scholar] [CrossRef]

- Tan, X.; Triggs, B. Enhanced local texture feature sets for face recognition under difficult lighting conditions. IEEE Trans. Image Process. 2010, 19, 1635–1650. [Google Scholar]

- Pauca, V.; Shahnaz, F.; Berry, M.; Plemmons, R. Text mining using nonnegative matrix factorizations. SIAM. Int. Conf. Data Min. 2004, 4, 452–456. [Google Scholar]

- Li, T.; Ding, C. The relationships among various nonnegative matrix factorization methods for clustering. IEEE. Comput. Soci. 2006, 4, 362–371. [Google Scholar]

- Liu, Y.; Jing, L.; Ng, M. Robust and non-negative collective matrix factorization for text-to-image transfer learning. IEEE Trans. Image Process. 2015, 24, 4701–4714. [Google Scholar]

- Gillis, N. Sparse and unique nonnegative matrix factorization through data preprocessing. J. Mach. Learn. Res. 2012, 1, 3349–3386. [Google Scholar]

- Gillis, N. Nonnegative Matrix Factorization; SIAM: Philadelphia, PA, USA, 2020. [Google Scholar]

- Wang, W.; Qian, Y.; Tan, Y. Hypergraph-regularized spares NMF for hyperspectral unmixing. IEEE J. Sel. Topi. Appl. Earth. Obs. Remot Sens. 2016, 9, 681–694. [Google Scholar] [CrossRef]

- Ma, Y.; Li, C.; Mei, X.; Liu, C.; Ma, J. Robust sparse hyperspectral unmixing withL2,1 norm. IEEE Trans. Geosci. Remot Sens. 2017, 55, 1227–1239. [Google Scholar] [CrossRef]

- Li, Z.; Liu, J.; Lu, H. Structure preserving non-negative matrix factorization for dimensionality reduction. Comput. Vis. Image Underst. 2013, 117, 1175–1189. [Google Scholar] [CrossRef]

- Luo, X.; Zhou, M.; Leung, H.; Xia, Y.; Zhu, Q.; You, Z.; Li, S. An incremental-and-static-combined scheme for matrix-factorization- based collaborative filtering. IEEE Trans. Autom. Sci. Eng. 2014, 13, 333–343. [Google Scholar] [CrossRef]

- Zhou, G.; Yang, Z.; Xie, S.; Yang, J. Online blind source separation using incremental nonnegative matrix factorization with volume constraint. IEEE Trans. Neur. Netw. 2011, 22, 550–560. [Google Scholar] [CrossRef] [PubMed]

- Pan, J.; Gillis, N. Generalized separable nonnegative matrix factorization. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 1546–1561. [Google Scholar] [CrossRef] [PubMed]

- Shang, F.; Jiao, L.; Wang, F. Graph dual regularization nonnegative matrix factorization for co-clustering. Pattern Recognit. 2012, 45, 2237–2250. [Google Scholar] [CrossRef]

- Zeng, K.; Jun, Y.; Wang, C.; You, J.; Jin, T. Image clustering by hypergraph regularized nonnegatve matrix factorization. Neurocomputing 2014, 138, 209–217. [Google Scholar] [CrossRef]

- Leng, C.; Zhang, H.; Cai, G.; Cheng, I.; Basu, A. Graph regularized Lp smooth nonnegative matrix factorization for data representation. IEEE/CAA J. Autom. 2019, 6, 584–595. [Google Scholar] [CrossRef]

- Qiu, Y.; Zhou, G.; Zhang, Y.; Xie, S. Graph regularized nonnegative tucker decomposition for tensor data representation. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 8613–8617. [Google Scholar]

- Qiu, Y.; Zhou, G.; Wang, Y.; Zhang, Y.; Xie, S. A generalized graph regularized non-negative Tucker decomposition framework for tensor data representation. IEEE Trans. Cybern. 2022, 52, 594–607. [Google Scholar] [CrossRef]

- Wood, G.; Jennings, L. On the use of spline functions for data smoothing. J. Biomech. 1979, 12, 477–479. [Google Scholar] [CrossRef]

- Lyons, J. Differentiation of solutions of nonlocal boundary value problems with respect to boundary data. Electron. J. Qual. Theory Differ. Equ. 2001, 51, 1–11. [Google Scholar] [CrossRef]

- Xu, L. Data smoothing regularization, multi-sets-learning, and problem solving strategies. Neural Netw. 2003, 16, 817–825. [Google Scholar] [CrossRef] [PubMed]

- Zhou, D.; Huang, J.; Scholkopf, B. Learning with Hypergraphs: Clustering, Classification, and Embdding; MIT Press: Cambridge, MA, USA, 2006; Volume 19, pp. 1601–1608. [Google Scholar]

- Gao, Y.; Zhang, Z.; Lin, H.; Zhao, X.; Du, S.; Zou, C. Hypergraph learning: Methods and practices. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 44, 2548–2566. [Google Scholar] [CrossRef] [PubMed]

- Huan, Y.; Liu, Q.; Lv, F.; Gong, Y.; Metaxax, D. Unsupervised image categorization by hypergraph partition. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 17–24. [Google Scholar]

- Yu, J.; Tao, D.; Wang, M. Adaptive hypergraph learning and its application in image classification. IEEE Trans. Image Process. 2012, 21, 3262–3272. [Google Scholar] [PubMed]

- Hong, C.; Yu, J.; Li, J.; Chen, X. Multi-view hypergraph learning by patch alignment framework. Neurocomputing 2013, 118, 79–86. [Google Scholar] [CrossRef]

- Wang, C.; Yu, J.; Tao, D. High-level attributes modeling for indoor scenes classifiation. Neurocomputing 2013, 121, 337–343. [Google Scholar] [CrossRef]

- Chen, C.; Liu, Y. A survey on hyperlink prediction. arXiv 2022, arXiv:2207.02911. [Google Scholar]

- Yin, W.; Qu, Y.; Ma, Z.; Liu, Q. Hyperntf: A hypergraph regularized nonnegative tensor factorization for dimensionality reduction. Neurocomputing 2022, 512, 190–202. [Google Scholar] [CrossRef]

- Wu, W.; Kwong, S.; Zhou, Y. Nonnegative matrix factorization with mixed hypergraph regularization for community detection. Inf. Sci. 2018, 435, 263–281. [Google Scholar] [CrossRef]

- Zhang, D. Semi-supervised multi-view clustering with dual hypergraph regularized partially shared nonnegative matrix factorization. Sci. China Technol. Sci. 2022, 65, 1349–1365. [Google Scholar] [CrossRef]

- Huang, H.; Zhou, G.; Liang, N.; Zhao, Q.; Xie, S. Diverse deep matrix factorization with hypergraph regularization for multiview data representation. IEEE/CAA J. Autom. Sin. 2022, 34, 1–44. [Google Scholar] [CrossRef]

- Cai, D.; He, X.; Han, J. Documen clustering using locality preserving indexing. IEEE Trans. Knowl. Data Eng. 2005, 17, 1624–1637. [Google Scholar] [CrossRef]

- Lovasz, L.; Plummer, M. Matching Theory; American Mathematical Society: Providence, RI, USA, 2009; Volume 367. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).