1. Introduction

Network theory plays an important role in various fields such as engineering [1,2,3,4], finance [5] and bioscience [6]. In particular, inferring the structure of a network from measured data has become an important task, and many practical issues can be categorized as such problems, e.g., modeling human brains [7] or detecting sources of rumor propagation [8]. The network identification problem has been studied from many perspectives, and various methods have been developed. Data correlation [9,10,11,12,13,14,15,16] plays an important role in network identification problems: in the presence of noise [9,14,15] or unmeasured hidden signals [16,17], the time derivative of the dynamical correlation matrix among the nodes reveals the relationship between the adjacency matrix of the network and the covariance matrix of the noise. With the help of basis functions, nonlinearity is identified with higher-order data correlation [13,18]. Furthermore, sparse identification (compressive sensing) [19,20] techniques are employed to identify the structure of networks [21,22]: the linear evolution of data is identified by finding the sparsest matrix that maps the data to the next step. Taylor expansion or trigonometric basis functions also make the method applicable to networks with nonlinear couplings [13,23,24]. In [25], the Koopman operator theory and sparse identification techniques are combined to achieve accurate identification of nonlinear coupling functions. Moreover, adaptive master–slave synchronization-based methods [26,27], statistic-based methods [28] and variable (or phase) resetting-based methods [15,29] have also been successful.

Although most of the above-mentioned methods assume that all the states of the nodes are measurable, in practical cases there may exist states that cannot be measured, which are known as hidden variables. In [10,11,17], the hidden variables are treated as colored noise, and the identification is performed in the subspace spanned by the measurable states. In [16], networks with hidden nodes are identified by reconstructing the adjacency matrix corresponding to the network and calculating the covariance of the obtained results, based on the principle that unknown input signals from hidden nodes would enlarge the covariance. In [30], this method is extended to networks with transmission time delays. In this paper, we consider networks of interconnected dynamical systems and model the network structures as coupling functions that describe the inputs to the nodes via the network. Specifically, we focus on the case where only the output data of the nodes are measurable. The goal of this paper is then reduced to identifying the coupling functions from measured output data.

Generally speaking, the statistic-based [28] and correlation-based methods [9,22] detect whether transmission between nodes exists; therefore, these methods can only provide results about the topology of networks. Nonlinearity in network structures, modeled as nonlinear coupling functions, is commonly identified by approximating the dynamics that govern the nonlinear evolution of measured data with the help of basis functions [13,21,23,25,31]. In this paper, compared to the widely studied case where all the states of the nodes can be measured, a major difficulty in obtaining the possibly nonlinear coupling function is that the coupling function may depend on the unmeasurable states, so the dynamics cannot be fully revealed from measured data. Moreover, when the dynamics of the nodes without inputs are unknown and data of unforced nodes cannot be measured, it is also challenging to distinguish the coupling function from the identified dynamics of all the nodes. To solve these problems, we propose an identification method that makes it possible to obtain the possibly nonlinear coupling functions solely from measured output data. The proposed method consists of the following three steps. First, we reformulate the dynamical model of the network: we consider the outputs of each node as the full states and the dynamics of the output signals as the dynamics of the node. Second, the unmeasurable hidden states are modeled as unknown dynamical inputs. Defining such dynamical inputs as new variables, time series data of the new variables can be calculated, and the dynamics of such variables can be identified with the help of Koopman operators. Third, the network dynamics are identified in terms of the outputs and the new variables using measured data, and the network structure is extracted as the data transmission in the network. If the dimension of the output is so low that the dynamics of the network cannot be embedded into the space spanned by the outputs and the new variables, then additional variables are introduced based on past data [32]. We show that the obtained coupling function is a projection of the future values of the dynamical inputs onto the space spanned by some pre-defined observable functions, and discuss the causes of identification errors. Compared to previous efforts, the contribution of this paper is two-fold:

The proposed method is applicable to networks whose nodes have unknown dynamics and only the outputs can be measured;

The proposed method is applicable to the case where data transmission in the network is represented by nonlinear functions.

With these advantages, the proposed method is expected to be applicable to practical problems such as analyzing electronic circuits with limited measurements and modeling nervous systems.

This paper is organized as follows. In Section 2, we provide brief introductions to the Koopman operator and Koopman mode decomposition. Section 3 describes the proposed identification method, and Section 4 presents numerical identification examples to show the validity and usefulness of the obtained results. In Section 5, we summarize the paper and conclude with some remarks and discussions.

Throughout this paper, , and denote the sets of all the natural numbers, all the real numbers and all the complex numbers, respectively. denotes the p norm of an n-dimensional vector x defined by , where and denote the Frobenius norm of matrix defined by . For constants, vectors, matrices or functions , or denote the composed vector (or matrix) , where is the Hermitian transpose of . For a vector , denotes the space spanned by all its entries, i.e., , and denotes the space of n-dimensional vectors whose entries are contained in . For a functional space and its subspace , denotes the projection operator defined by , where and is a norm defined on . denotes the ith standard basis vector of appropriate dimension such that the ith entry of is 1 and other entries are 0. denotes the evolution of variable x, i.e., where , and specially, .

2. Preliminary: The Koopman Operator

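In this paper, we consider Koopman operators in discrete-time settings [33,34]. For the discrete-time system described by

$$x_{k+1} = f(x_k), \tag{1}$$

where $x_k \in \mathbb{R}^n$ and $f: \mathbb{R}^n \to \mathbb{R}^n$ is a nonlinear vector field, define $g: \mathbb{R}^n \to \mathbb{C}$ to be an observable (function), and denote the space of all the observables by $\mathcal{G}$. Define the operator space of all the operators that map $\mathcal{G}$ to $\mathcal{G}$ by $\mathcal{L}(\mathcal{G})$, i.e., $\mathcal{L}(\mathcal{G}) = \{T \mid T: \mathcal{G} \to \mathcal{G}\}$. Then, the evolution of the states of system (1) can be described by the evolution of the observable functions governed by the Koopman operator $K$ defined on $\mathcal{G}$ by

$$K g(x_k) = g(f(x_k)) = g(x_{k+1}). \tag{2}$$

The Koopman operator is an infinite-dimensional operator that preserves all the nonlinear characteristics of vector field $f$, and is linear in the sense that $K(\alpha g_1 + \beta g_2) = \alpha K g_1 + \beta K g_2$ for any $g_1, g_2 \in \mathcal{G}$ and $\alpha, \beta \in \mathbb{C}$. Define $\phi_i \in \mathcal{G}$ and $\lambda_i \in \mathbb{C}$ to be eigenfunctions and the corresponding eigenvalues of Koopman operator $K$ such that $K\phi_i = \lambda_i \phi_i$. If the eigenfunctions span $\mathcal{G}$, then for any $g \in \mathcal{G}$, there exist $c_i \in \mathbb{C}$ for $i = 1, 2, \ldots$ such that $g = \sum_{i=1}^{\infty} c_i \phi_i$ and

$$K g = \sum_{i=1}^{\infty} \lambda_i c_i \phi_i. \tag{3}$$

Equation (3) is called the Koopman mode decomposition of $g$, and $c_i$ is called the eigenmode of $g$ with respect to $\phi_i$.

To obtain a $q$-dimensional approximation of $K$, we consider the first $q$ dominant pairs of eigenvalues and eigenfunctions of Koopman operator $K$, denoted by $\{(\lambda_i, \phi_i)\}_{i=1}^{q}$. Define the space spanned by the $q$ eigenfunctions by $\mathcal{G}_q$, i.e., $\mathcal{G}_q = \mathrm{span}\{\phi_1, \ldots, \phi_q\}$. For any $g \in \mathcal{G}_q$, there exists a $c \in \mathbb{C}^q$ such that $g = c^{*}\Phi$ and

$$K g = c^{*} \Lambda \Phi,$$

where $\Phi = [\phi_1, \ldots, \phi_q]^{\top}$ and $\Lambda = \mathrm{diag}(\lambda_1, \ldots, \lambda_q)$. If the set of eigenfunctions $\{\phi_i\}_{i=1}^{q}$ is sufficiently rich, then the spanned space can be considered to contain all the observable functions, i.e., $\mathcal{G}_q \approx \mathcal{G}$. Furthermore, consider an observable function set $\{\psi_i\}_{i=1}^{q}$ of $q$ linearly independent elements, and define $\Psi = [\psi_1, \ldots, \psi_q]^{\top}$. Suppose that $\{\psi_i\}_{i=1}^{q}$ also forms a linearly independent basis of $\mathcal{G}_q$; then there exists an invertible linear map $P$ that maps $\Phi$ to $\Psi$, i.e., $\Psi = P\Phi$, and

$$K \Psi = P \Lambda \Phi = P \Lambda P^{-1} \Psi.$$

Setting $A = P \Lambda P^{-1}$, $A$ is a $q$-dimensional approximation of Koopman operator $K$, such that $K\Psi = A\Psi$. For any $g \in \mathcal{G}$ that has an approximation $g \approx c^{*}\Psi$, a $q$-dimensional approximation of $Kg$ can be obtained as

$$K g \approx c^{*} A \Psi. \tag{4}$$

In this paper, a Koopman operator acts on a vector in an entry-wise manner, i.e., $Kg = [Kg_1, \ldots, Kg_n]^{\top}$ for $g = [g_1, \ldots, g_n]^{\top}$, where $g_i \in \mathcal{G}$.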
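As a small illustration of a finite-dimensional matrix representation of a Koopman operator, the following sketch uses a textbook-style toy map that is not taken from this paper. The map `f`, the observable vector `psi` and the constants `lam` and `mu` are all assumptions chosen so that the span of the observables is exactly invariant, which lets the matrix `A` be written down by hand and checked numerically.

```python
import numpy as np

# Hypothetical toy system (an assumption of this sketch, not the paper's model):
#   x1+ = lam * x1
#   x2+ = mu * x2 + (lam**2 - mu) * x1**2
lam, mu = 0.9, 0.5

def f(x):
    """One step of the toy nonlinear map."""
    x1, x2 = x
    return np.array([lam * x1, mu * x2 + (lam**2 - mu) * x1**2])

def psi(x):
    """Observable vector [x1, x2, x1^2]; its span is invariant for this map."""
    x1, x2 = x
    return np.array([x1, x2, x1**2])

# Matrix acting on the observable vector: psi(f(x)) = A @ psi(x) for every x.
A = np.array([
    [lam, 0.0, 0.0],
    [0.0, mu, lam**2 - mu],
    [0.0, 0.0, lam**2],
])

# Check the identity on random states.
rng = np.random.default_rng(0)
samples = rng.uniform(-1.0, 1.0, size=(100, 2))
max_err = max(np.max(np.abs(psi(f(x)) - A @ psi(x))) for x in samples)

# The eigenvalues of A are Koopman eigenvalues restricted to this subspace.
eigvals = np.sort(np.linalg.eigvals(A).real)
```

Because `A` is upper triangular, its eigenvalues are simply `lam`, `mu` and `lam**2`. For a general system no finite invariant set of observables is known in advance, which is why the matrix is usually estimated from data.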

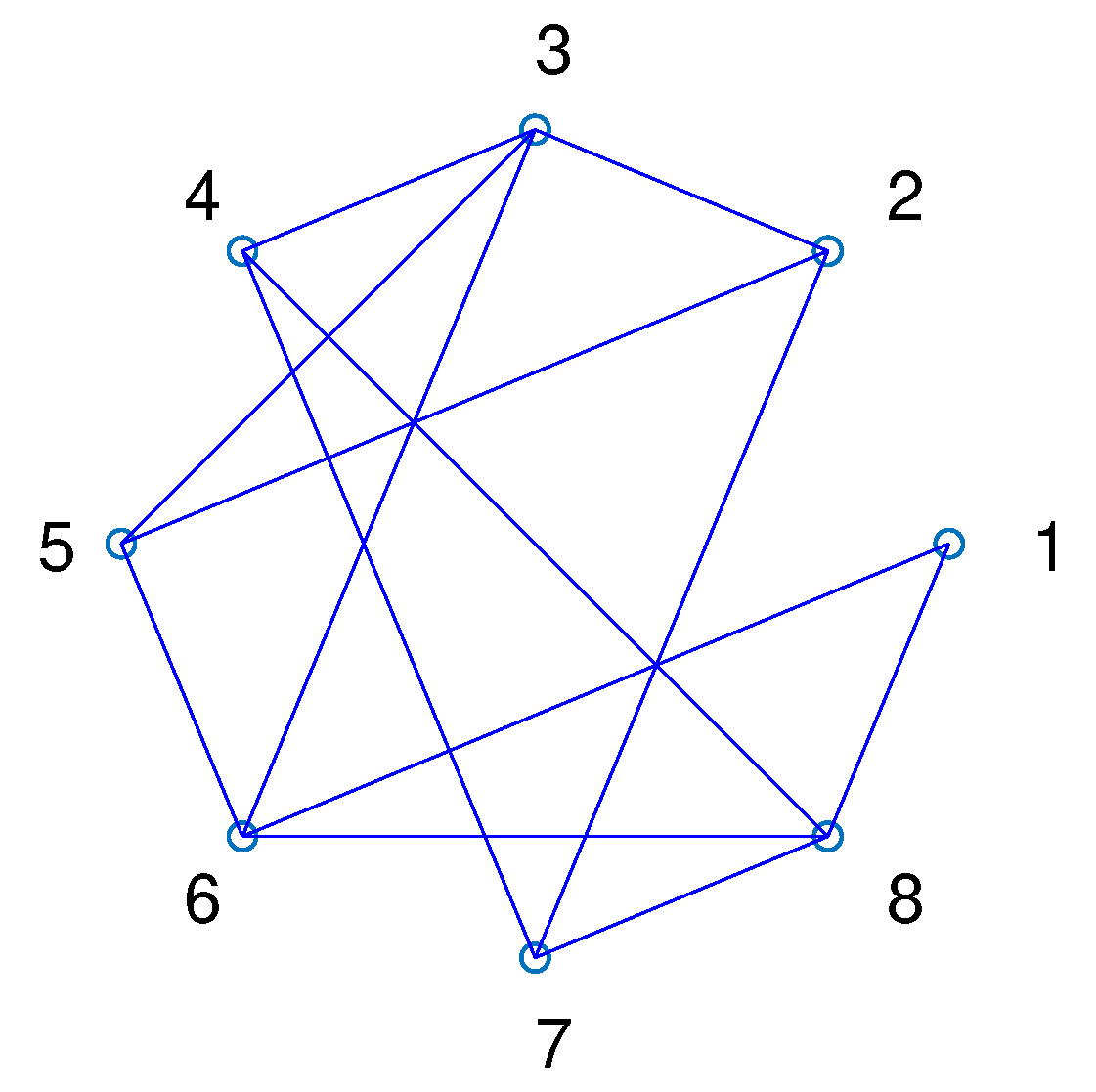

3. Network Structure Identification Based on Output Data

Consider the network system of

N nodes described by

where

,

denotes the states,

is locally Lipschitz continuous,

is the output of node

i, and

denotes the transmission sent to node

i via the network where

is the

entry of the adjacency matrix associated with the network topology. Define

and rewrite the dynamics of the network into

where

,

,

,

, and ⊗ denotes the Kronecker product. Here, the network structure is fully contained in

, so the network structure identification problem is reduced to identifying

. Specifically, we consider the case where a linear approximation of the dynamical model is known, and attempt to identify the network structure from measured output data. We first reformulate the dynamical model of the nodes such that the data of the new variables used to describe the dynamics can be calculated from the output, and then describe a numerical method to obtain the coupling function. The identification method is also considered in two cases where the dimension of the output is sufficiently high or not, respectively.

We first construct the dynamical model of the nodes such that the outputs

correspond to the full states, and the unmeasurable states as unknown dynamical inputs. Let

be a linear approximation of

where

,

and

denote the modeling error. Let

be such that

has full rank, and rewrite (5) into

Since only data of the outputs

of the nodes can be measured, we rewrite

into

, where

consists of the first

m columns of

and

. Define

for

. Substituting

into (7a),

is considered a dynamical input to

, i.e.,

, where the dynamics of

are unknown. The merits of defining the variables

are two-fold: the dynamics of

becomes fully known and

can be obtained as

. We consider two cases where the dimension of

is smaller than the dimension of

or not, respectively. Note that though

is unknown, data for

can be calculated from

and the dimension of

can be inferred by checking the linear dependency of the data series.
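The linear-dependency check mentioned above can be sketched with a singular value decomposition. The function name `effective_dimension`, the relative tolerance and the synthetic data below are assumptions made for illustration; in practice the tolerance would have to be tuned to the noise level of the measurements.

```python
import numpy as np

def effective_dimension(data, tol=1e-8):
    """Count linearly independent rows of a (variables x samples) data matrix.

    Singular values below tol times the largest one are treated as zero.
    """
    s = np.linalg.svd(data, compute_uv=False)
    if s.size == 0 or s[0] == 0.0:
        return 0
    return int(np.sum(s > tol * s[0]))

# Synthetic example: three recorded series, the third being a linear
# combination of the first two, so only two are independent.
rng = np.random.default_rng(1)
base = rng.standard_normal((2, 50))
data = np.vstack([base, 2.0 * base[0] - 0.5 * base[1]])

dim = effective_dimension(data)
```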

We first consider the case where

. In this case, no additional variables are required to span the dynamics of

, so let the dynamics of

be described by

, where

and

. Reformulate the dynamical models of the nodes as

for

, and rewrite the dynamics of the network into

where

,

and ⊗ denotes the Kronecker product.

Next, we attempt to extract the dynamics of

y, i.e.,

, from measured data

and

using the Koopman operator theory. Define

to be observable for

where

is the space of all the complex-valued scalar functions, i.e.,

. Let

K denote the Koopman operator associated with dynamics (9), which governs the evolution of the observables, i.e.,

for

. Define

to be an observable set. Then, for any observable

, which can be approximated as

,

, it follows from (4) that a

q-dimensional approximation of

can be obtained as

where

is such that

. Here,

and

A numerically satisfy

and

, respectively. As a result, by considering the states

y as variables, the right-hand side of (9b) can be approximated as

where

is such that

. Note that the existence of the expansion matrix

can always be ensured by including the states

w and

y as observables in

. The identification problem is then reduced to obtaining

A, which is a matrix approximation of

K.

To obtain

A from data, suppose that

steps of data, indexed by

, are measured. Then, data for

can be obtained as

for

. Define data matrices

as

According to the definition of

K, i.e., (10), entries in

Y contain the evolution of the observations in

X so

holds, and the desired

A can be obtained as the transition matrix that maps

X to

Y, i.e.,

where

denotes the Frobenius norm. Denote the optimal solution of optimization problem (12) by

, and an approximation of dynamics (9b) is obtained as

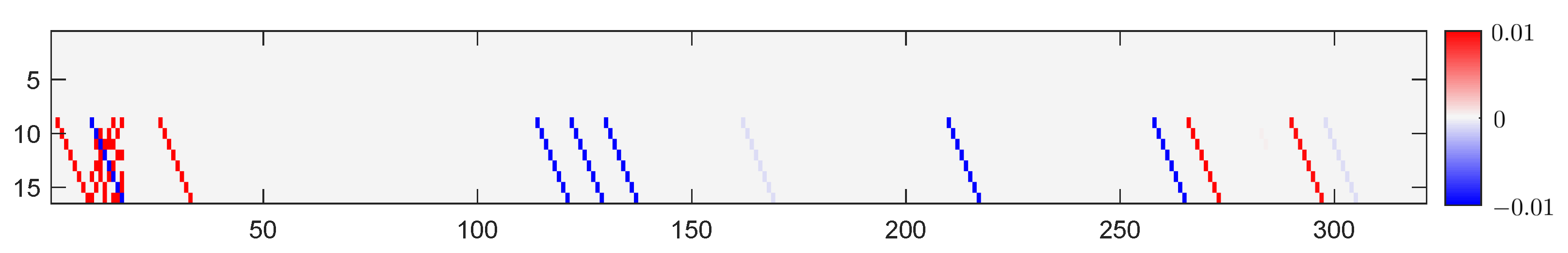
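A minimal numerical sketch of the least-squares step is given below: a matrix A that maps a data matrix X of observables to the matrix Y of their one-step evolutions is found by minimizing the Frobenius norm of Y - AX, whose minimum-norm solution is Y times the pseudoinverse of X. The toy map `step`, the dictionary `observables` and the use of randomly sampled state pairs instead of consecutive snapshots of one trajectory are assumptions of this sketch, not the setting of the paper.

```python
import numpy as np

def observables(state):
    """Hypothetical dictionary [x1, x2, x1^2] evaluated at one state."""
    x1, x2 = state
    return np.array([x1, x2, x1**2])

def step(state):
    """Hypothetical toy map used only to generate data."""
    x1, x2 = state
    return np.array([0.7 * x1, 0.4 * x2 + 0.2 * x1**2])

def fit_koopman_matrix(X, Y):
    """Minimize ||Y - A X||_F over A; the minimum-norm solution is Y X^+."""
    return Y @ np.linalg.pinv(X)

# Columns of X are observables at sampled states, columns of Y the same
# observables one step later.
rng = np.random.default_rng(2)
states = rng.uniform(-1.0, 1.0, size=(200, 2))
X = np.column_stack([observables(s) for s in states])
Y = np.column_stack([observables(step(s)) for s in states])

A_hat = fit_koopman_matrix(X, Y)

# For this toy map the dictionary is invariant, so the fit is exact.
A_true = np.array([
    [0.7, 0.0, 0.0],
    [0.0, 0.4, 0.2],
    [0.0, 0.0, 0.49],
])
```

When the dictionary is not invariant, the same formula still returns the best approximation in the least-squares sense over the sampled data.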

Next, we obtain information about the network structure from the identified dynamics of

y, i.e.,

. Specifically, we extract the information sent to the nodes from other nodes via the network [31]. If all the connections in the network were cut, then

and

should depend only on

and

. This fact indicates that the transmission from node

j to node

i can be identified as the terms in the dynamical model of node

i that depend on

or

. It follows from (13) that for the

ith node,

where

is such that

. Define

to be the observable vector such that all the entries, which are

dependent on

or

for any

, in

are set to 0, and define

. Note that

now contains observables that only depend on the states of node

i. Then, (14) can be rewritten as

Here,

corresponds to the information sent from other nodes to node

i via the network, and is considered as the network structure to be identified. As a result, the dynamics of the network are identified as

where

is the identified coupling function.

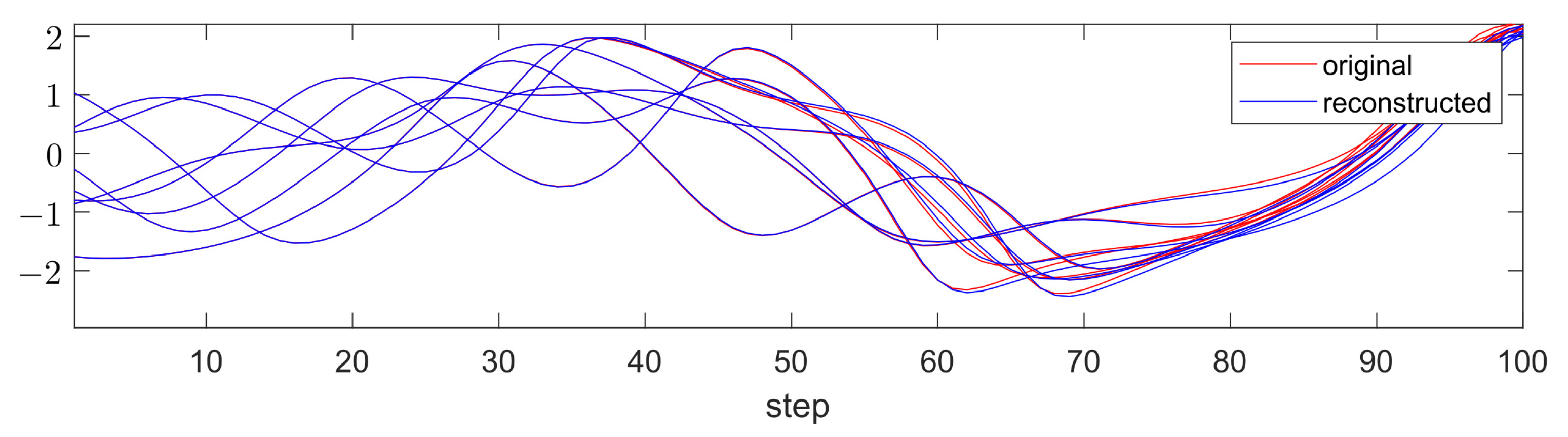
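The extraction step described above, which separates the terms that depend only on node i from those that depend on other nodes, can be sketched as follows. The two-node toy network, the dictionary, the `depends_on` bookkeeping and the helper `split_row` are all hypothetical; the sketch also assumes that the full states are measured, which is simpler than the output-only setting treated in this section.

```python
import numpy as np

def dictionary(y):
    """Hypothetical observables [y1, y2, y1^2, y2^2] of a two-node network."""
    y1, y2 = y
    return np.array([y1, y2, y1**2, y2**2])

# depends_on[k] lists the nodes whose states observable k depends on.
depends_on = [{0}, {1}, {0}, {1}]

def step(y):
    """Toy network: node 0 receives 0.3*y2^2, node 1 receives 0.2*y1."""
    y1, y2 = y
    return np.array([0.8 * y1 + 0.3 * y2**2, 0.6 * y2 + 0.2 * y1])

rng = np.random.default_rng(3)
samples = rng.uniform(-1.0, 1.0, size=(300, 2))
X = np.column_stack([dictionary(s) for s in samples])
Y_next = np.column_stack([step(s) for s in samples])

# Row i holds the expansion of node i's next state in the dictionary.
coeffs = Y_next @ np.linalg.pinv(X)

def split_row(i):
    """Split row i into node-local terms and terms coming from other nodes."""
    local_mask = np.array([deps <= {i} for deps in depends_on])
    local = np.where(local_mask, coeffs[i], 0.0)
    coupling = np.where(local_mask, 0.0, coeffs[i])
    return local, coupling

local_0, coupling_0 = split_row(0)
local_1, coupling_1 = split_row(1)
```

Here `coupling_0` carries the coefficient of `y2**2` and `coupling_1` the coefficient of `y1`, which are the transmissions built into the toy network.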

Remark 1. Since in (7b) is dimensional, theoretically only linearly independent variables are required to fully describe the dynamics. Without loss of generality, define to be a vector consisting of the first linearly independent entries in and define . Then, dynamics (9b) can be rewritten into

where .

Next, we consider the case where

. In such a case, with

defined by

, as in (8),

, which means that additional variables are required for the dynamics of

to be fully embedded into the space spanned by the new variables. Here, we make use of delay coordinates [32] to complement the dimension. Let

r be the smallest integer such that

, and rewrite the dynamics of the network (9) into

where

is defined the same as in (9). Further, define

for

and define

. Then, we have

and the dynamics of

x can be embedded into the space spanned by

and

z. The identification is then performed by finding an approximation

of the Koopman operator defined by

using data matrices defined by

constructed with

steps of measured data of

w. The dynamics of the network are identified as

where

is such that

,

contains observables that solely depend on the states of node

i, and

is the identified coupling function, where

as in (15). Note that the case where

can be considered as a special case where

and

z does not exist.
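The idea of complementing a low-dimensional output with delay coordinates can be sketched as below. The helper `delay_embed`, the stacking order of the snapshots and the two-state rotation observed through its first coordinate are assumptions for illustration; the exact variable definitions used in this section may order or shift the delayed samples differently.

```python
import numpy as np

def delay_embed(w, r):
    """Stack r consecutive snapshots of w (shape m x T) into each column.

    Returns an (m*r) x (T-r+1) matrix whose k-th column is
    [w[:, k], w[:, k+1], ..., w[:, k+r-1]].
    """
    m, T = w.shape
    if not 1 <= r <= T:
        raise ValueError("r must satisfy 1 <= r <= number of samples")
    cols = T - r + 1
    return np.vstack([w[:, j:j + cols] for j in range(r)])

# Toy example: a two-state rotation of which only the first state is measured.
theta = 0.3
R = np.array([[np.cos(theta), -np.sin(theta)],
              [np.sin(theta), np.cos(theta)]])
x = np.array([1.0, 0.0])
outputs = []
for _ in range(40):
    outputs.append(x[0])
    x = R @ x
w = np.array(outputs).reshape(1, -1)

# Rank of the embedded data for increasing numbers of delays.
ranks = {r: int(np.linalg.matrix_rank(delay_embed(w, r), tol=1e-9))
         for r in (1, 2, 3)}
```

In this toy case the rank stops growing at two delays, matching the two-dimensional hidden state, which is the kind of saturation one would look for when choosing r.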

The proposed identification algorithm is summarized as Algorithm 1.

Algorithm 1 Proposed identification algorithm

Input: measured time series of the outputs of the nodes, as an approximation of , C, and n

Output: or

1. Initialization: find r as the minimum integer with which

2. Matrices construction: if then design an observable set and construct data matrices with (11); else design an observable set and construct data matrices with (21); end if

3. Approximate the Koopman operator: calculate with (12)

4. Obtain the coupling function: if then obtain with (17); else obtain with (22); end if

5. Output: or

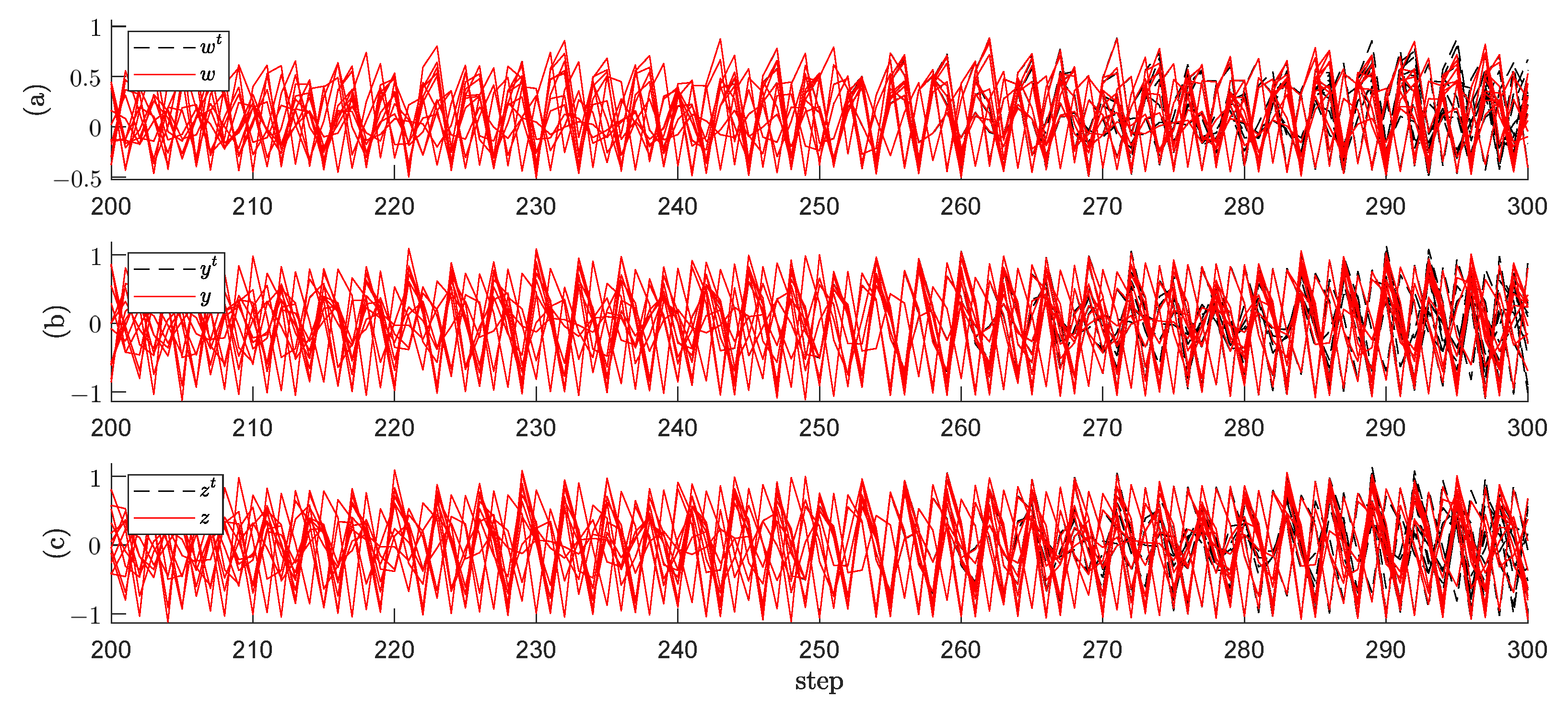

Next, we consider the relationship between the identified coupling function and the original , and discuss the identification error in terms of the difference between the original and the identified dynamics of the measured output w.

Proposition 1. If the amount of data is sufficiently large () and the data are uniformly random, then for and , the jth component of the identified coupling function for the ith node, i.e., , is a projection of onto :

with respect to dynamics (19).

Proof. We first show the relationship between the theoretical and the identified dynamics of y, and then show the validity of Equation (23).

As shown in [25,35], the solution of optimization problem (12), i.e.,

, minimizes

over the manifold where data are measured, so

is an approximation of the Koopman operator defined in (10), in the sense that for any complex-valued scalar function

[35],

where

. Since we can design

to contain

and

z as observables, the evolution of the

jth entry of

is obtained as

i.e., the identified evolution

is a projection of the true evolution

onto the space spanned by the observables in

.

On the other hand, by the construction of

in (22), the coupling function of node

i is obtained as a linear combination of the observables in

, i.e.,

Making use of dynamics (19a) that

and the fact that

, we have

where we substituted

. □

Proposition 1 means that the coupling function is obtained in terms of the

r-step-future and the

-step-future values of

w, which are estimated using the dynamics identified from measured data. On the other hand, the identification error of the proposed method depends on two factors, namely the design of the observable set

and the amount of measured data [25,31]. To reduce the identification errors, one can design a sufficiently rich observable set to obtain a close approximation of

, and increase the amount of measurements.
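The dependence of the identification error on the richness of the observable set can be illustrated with a scalar toy map. The map `sin`, the monomial dictionary and the helper names below are assumptions chosen for this sketch; they only show the qualitative trend that a richer dictionary lowers the fitting residual on the measured data.

```python
import numpy as np

def monomials(x, degree):
    """Rows x, x^2, ..., x^degree evaluated at the samples in x."""
    return np.vstack([x**p for p in range(1, degree + 1)])

def fit_residual(x, x_next, degree):
    """RMS residual of the least-squares fit of x_next on the monomials."""
    X = monomials(x, degree)
    coeffs, *_ = np.linalg.lstsq(X.T, x_next, rcond=None)
    return float(np.sqrt(np.mean((x_next - coeffs @ X) ** 2)))

# Hypothetical scalar map x+ = sin(x), sampled uniformly on [-1, 1].
rng = np.random.default_rng(4)
x = rng.uniform(-1.0, 1.0, size=500)
x_next = np.sin(x)

# Residuals for dictionaries of increasing polynomial degree.
residuals = {d: fit_residual(x, x_next, d) for d in (1, 3, 5)}
```

Since the dictionaries are nested, the residual on the fitted data cannot increase as the degree grows; how well the fit generalizes outside the sampled region is a separate question.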

Remark 2. The Koopman mode decomposition theory guarantees that the evolution of any coupling function can be decomposed into a linear combination of the evolution of an infinite number of eigenfunctions, as in Equation (3), and such a decomposition holds globally in the space of state variables. In practical situations, since we can only handle a finite number of variables, the dynamic-mode-decomposition-like numerical method we employed is only able to recover the point spectra of the Koopman operator [36,37]. As a result, the proposed method gives an optimal coefficient matrix of the observable vector such that the corresponding linear combination approximates the coupling function over the set where data are measured.

Remark 3. The observable set should be designed to be sufficiently rich to reach high accuracy, and the computational cost blows up as the number of nodes or the complexity of the coupling function increases. Since there is no a priori knowledge about the coupling functions, we can only design the observable set to be as rich as possible in the hope that the spanned space contains the coupling function, e.g., using a trigonometric basis or power series. Increasing the number of observables also leads to higher computational costs, which reveals a trade-off between computational cost and identification accuracy of nonlinearity.
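To give a rough sense of how quickly a power-series observable set grows, the sketch below counts the monomials of bounded total degree in a given number of variables. The choice of a full monomial dictionary and the function name `num_monomials` are assumptions for illustration; a dictionary tailored to the network at hand may be much smaller.

```python
from math import comb

def num_monomials(num_vars, max_degree):
    """Number of monomials of total degree <= max_degree in num_vars variables.

    The constant monomial is included in the count.
    """
    return comb(num_vars + max_degree, max_degree)

# Dictionary size q for cubic monomials as the number of variables grows.
sizes = {n: num_monomials(n, 3) for n in (2, 4, 8, 16)}

# The matrix fitted from data has q x q entries, so its size grows with q**2.
matrix_entries = {n: q * q for n, q in sizes.items()}
```

Doubling the number of variables from 8 to 16 raises the dictionary size from 165 to 969 in this setting, and the number of matrix entries grows with the square of that.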