1. Introduction

Most optimization algorithms can only provide one of the optimal solutions when it is applied, even if more than one optimal solution may exist. Nevertheless, in some situations, finding multiple optimal solutions is desired, for example the following:

The global optimal solution is not always implementable in real-world problems, due to some unforeseen physical, financial, or political constraints, such as the availability of some critical resources in the future and the dynamic environment in path-planning problems. Knowing multiple good alternatives is helpful for decision-makers to switch the solutions quickly when needed. For instance, if a machine fails during production and its repair is time-consuming, the decision-maker can quickly change the production plans without using the machine during that time.

It is common in practice that some issues are difficult to formulate into the objective function or to evaluate exactly, such as the sensitivity of the selected machine parameters, the maintenance policy, and the preferences of decision-makers. In this situation, having a set of different and good alternatives in advance is often desired for further analysis.

In some cases, it is critical to determine all highly valued areas (or all possibilities), such as the contaminant source identification in the water distribution network [

1].

In surrogate-based optimization methods [

2,

3], the promising solution pointed out by optimizing the constructed surrogate model is simulated iteratively. Finding multiple promising solutions in one iteration allows the methods to take advantage of parallel computing to run several time-consuming simulations simultaneously.

Finding different locations of the peaks of the objective function in the search space can indicate some structural knowledge of the optimized function and provide some helpful insights into the properties of the studied system.

Multimodal optimization problem is concerned with locating multiple optima in one single run [

4]. The aim of this paper is to deal with multimodal optimization problems, seeking multiple global optimal solutions and high-quality local optimal solutions (local optimal solutions with good objective function values) of the given problem. The benefits of applying multimodal optimization have been studied in many fields [

5], such as seismological problems [

6], metabolic network modeling [

7], job-shop scheduling [

8], virtual camera configuration problems [

9], and feature selection [

10].

In order to handle multimodal optimization tasks, classic optimization methods can be applied in multiple runs hoping to find different optima. Nevertheless, the same optimal solution may be obtained in different runs. If all

m optimal solutions have the same probability to be found, the expectation of the number of optimization runs required to locate all optima is

. This value is usually much larger in practice since one of the optima may have higher probability to be discovered than others. To avoid converging to the same solution, a common approach is that if one optimal solution is determined, the fitness (i.e., the objective function values) in the observed region is depressed in subsequent runs so that different optimal solutions can be found sequentially, e.g., [

11,

12]. Still, at least the same number of optimization runs as the number of the optimal solutions are required. In addition, if the fitness derating function and the distance parameter that defines the neighbor range are not carefully selected, it may result in elimination of other optima that have not been found, or spurious optima caused by the modified objective function, although the occurrence of spurious optima can be reduced by incorporating a local search method based on the original objective function, e.g., sequential niching memetic algorithm (SNMA) [

13]. Approaches without the determination of the neighbor radius can also be found in the literature, but a lot of effort is spent in an additional sampling of interior points, e.g., [

14,

15].

Evolutionary algorithms (EAs), e.g., genetic algorithm (GA) [

16], particle swarm optimization (PSO) [

17], and differential evolution (DE) [

18], have the ability to preserve multiple solutions during the optimization process. With the help of niching techniques, they are capable of capturing multiple optima in a single run. Niching techniques were originally developed to preserve the population diversity and reduce the impact of the genetic shift. They are also used in multiobjective optimization problems to search for the Pareto-optimal set, e.g., nondominated sorting GA II (NSGA-II) [

19]. In multimodal optimization problems, the use of niching techniques promotes population diversity, allowing multiple optima to be found and maintained. Among the niching techniques in the literature, the clearing procedure [

20] eliminates neighbors before the selection until only a few dominating individuals remain in the clearing radius. Singh and Deb [

21] reallocate the cleared individuals outside the clearing radius, hoping to find other areas of interest. Fitness sharing methods [

22,

23,

24] depress the fitness of densely located individuals according to the population density within the sharing radius. Clustering algorithms, e.g., k-means method [

25], can be used in fitness sharing methods for the formation of niches to reduce the computational cost and avoid the determination of sharing radius, e.g., [

26]. In crowding approaches [

27,

28], the new generated individual replaces the most similar individual to maintain the initial diversity, if better fitness is observed. In restricted tournament selection [

29], the new generated individuals compete with the nearest individuals in a subpopulation randomly sampled from the population. In species conservation [

30,

31], the species seeds dominating other individuals in the same species are conserved into the next generation and updated iteratively. Li [

32] designed a ring topology [

33] on the particle swarm and used the

lbest PSO to create small niches without niching parameters. A detailed survey on some basic niching techniques in multimodal optimization can be found in [

34]. In addition, several niching mutation operator strategies for DE have been proposed for multimodal optimization problems recently. For example, local-binary-pattern-based adaptive DE (LBPADE) [

35] uses the local binary pattern operator to identify multiple regions of interests; distributed individuals DE (DIDE) [

36] constructs a virtual population for each individual so that each individual can search for its own optima; automatic niching DE (ANDE) [

37] locates multiple peaks based on the affinity propagation clustering.

In the aforementioned niching techniques, the entire population evolves together and genetic operators are designed to preserve the population diversity. In contrast, some niching algorithms divide the entire population into parallel subpopulations, including forking GA [

38], multinational GA [

39], multipopulation GA [

40], NichePSO [

41], speciation-based PSO (SPSO) [

42], swarms [

43,

44], culture algorithm with fuzzing cluster [

45], LAM-ACO [

46], and dual-strategy DE (DSDE) [

47]. Each subpopulation evolves independently, searching for its own optimum. Different from classic EAs with multiple runs, subpopulations will be merged, split, interchanged, or reformed during the search process according to the positions of the individuals in the entire population. As a consequence, repeated convergence and inefficiency search are avoided.

Most existing niching techniques are sensitive to the selected parameters, which are usually application-dependent and may be heterogeneous in the search space, such as the radius parameters in clearing and fitness sharing, the species distance in species conservation, the window size in restricted tournament selection, and the number of seeds in clustering algorithms. Adaptive methods are studied to make the algorithms more robust to the distance parameters or to let the parameters vary in the search space, e.g., [

48,

49,

50]. Some approaches are developed to detect whether two individuals belong to the same peak without the use of distance parameters, such as the hill–valley function [

39] and the recursive middling [

51]. Nevertheless, a great amount of additional fitness evaluations are required, significantly reducing the efficiency of the algorithm. The formation of the niches is still a challenge in the multimodal optimization community.

Recently, some researchers solved the multimodal optimization problem by converting it into a multiobjective optimization problem, named

multiobjectivization [

52]. For instance, biobjective multipopulation genetic algorithm (BMPGA) [

53,

54] uses the average absolute value of the gradient as another ranking criterion, in addition to the original objective function, for elitism and selection in the GA framework, thus providing a chance for survival for local optima. Deb and Saha [

55,

56] created a second objective function (e.g., the norm of the gradient or the number of better neighboring solutions) so that all optima are located in the weak Pareto-optimal set. Then, the modified NSGA-II algorithm [

19], developed for multiobjective optimization problems, was applied to find all the optima in a single run. Diversity indicators, such as the distance from other individuals and the density of niches, are also considered as the second objective function to maintain the population diversity (similar to the niching techniques), e.g., [

57,

58]. Conflicting objective functions were also designed to increase the efficiency of the applied multiobjective optimization algorithm, e.g., multiobjective optimization for multimodal optimization (MOMMOP) [

59] and triobjective differential evolution for multimodal optimization (TriDEMO) [

60].

All the multimodal optimization methods mentioned above are developed under the framework of EAs, in which the population size should be determined based on the number of desired optima. However, the number of optima is usually unknown before executing the algorithm, although in some cases it can be estimated from prior knowledge about the system. Saving the obtained optima in an archive and reinitializing the individuals can extend the optimal solution set, but it may cause the population to converge to the previous found solutions.

Moreover, when dealing with the local optima, the existing multimodal optimization methods may fall into the following situations:

Can only find multiple global optimal solutions (e.g., MOMMOP [

59] and TriDEMO [

60]), i.e., the local optimal solutions cannot be found.

Assume that the global optimal solutions and local optimal solutions with different objective function values have the same importance (e.g., [

55,

56]).

Prefer local optimal solutions in sparse areas to local optimal solutions with good objective function values (e.g., EAs with niching).

Prior knowledge to determine the threshold (or tolerance) of the objective function value to decide whether to save a solution or not.

Therefore, when the computational time is limited, these methods cannot meet the demand of searching only the global optimal solutions and high-quality local optimal solutions (i.e., local optimal solutions with good objective function values) without prior knowledge.

A completely different approach, which requires no prior knowledge about number of optima in the studied problem, is proposed in this paper. In the proposed method, the feasible domain is partitioned into smaller and smaller subregions iteratively. At each iteration, solutions from different subregions are sampled and evaluated. Based on the observed information, different regions are partitioned at different rates. By controlling the partition rates of different regions, promising areas are exploited and reach the smallest size (e.g., singleton regions in discrete cases or regions with acceptable precision in continuous cases) earlier than nonpromising areas. Multiple promising areas can be partitioned in parallel, allowing multiple optimal solutions to be found in a single run. If the available budget size (the budget size indicates the number of evaluations of the objective function) is unlimited, all areas of the feasible domain will eventually be partitioned into subregions of the smallest size, i.e., all optima (both global and local) will be discovered eventually.

Partition-based random search methods, such as nested partition (NP) [

61], COMPASS [

62] and adaptive hyperbox algorithm [

63], are efficient in optimization problems with large search space, i.e., the feasible range of the decision variable is large compared to its desired precision. The entire domain is partitioned into subregions, based on previous observations, trying to guide the search towards a promising region. However, in most partition-based methods, only the most promising region can be identified and stored; thus, only one optimal solution can be found. The probabilistic branch and bound (PBnB) [

64,

65] is developed to locate a subset of feasible solutions whose objective function values reach the given quantile level. Different from our approach, the partition rates are homogeneous in the search space in the PBnB. All regions are partitioned at the same rate until they are pruned (statistically, no solutions in this region belong to the subset of interest) or maintained (statistically, all solutions in this region belong to the desired subset). The PBnB method may also be extended to find multiple global optimal solutions by setting an extremely small quantile level. However, this will result in a large budget spent on estimating quantiles.

The classification of related works and the difference compared to the proposed algorithm are summarized in

Table 1. A previous version of the proposed algorithm was presented in a conference paper [

66], which mainly focused on searching for the global optimal solution under the interference of a large number of local optimal solutions. The algorithm is extended in this paper to find and store multiple global optimal solutions as well as high-quality local optimal solutions, including a scheme to extract the optimal solutions and a local search to refine the extracted optimal solutions during the optimization process. The research contributions of this paper are summarized below:

Estimating the number of optima is not needed before performing the proposed method (which is required in all EA-based multimodal optimization methods). All optimal solutions (both global optimal solutions and local optimal solutions) will be discovered subsequently as the algorithm proceeds.

Given a computational time, global optimal solutions and high-quality local optimal solutions are captured with higher probabilities than low-quality local optimal solutions, and no prior knowledge about the objective function is needed. Current multimodal optimization methods either cannot find local optimal solutions or treat all optimal solutions as equally important.

The proposed method can also handle the cases in which the optimal solutions are regions rather than single points, i.e., there exists a region in the feasible domain where all solutions are optimal. The density of the solutions in the region depends on the user-defined precision level. This is new compared to all multimodal optimization methods.

The proposed method is tested in benchmark functions. The numerical results show that the proposed method works as expected and demonstrates good performance compared to other state-of-the-art multimodal optimization methods in the literature.

This paper is organized as follows.

Section 2 describes the proposed method in detail.

Section 3 combines the proposed method with a local search method to improve the efficiency of the algorithm. Numerical results on benchmark functions are discussed in

Section 4. An engineering case showing the application of the proposed method is presented in

Section 5. Finally, conclusions and future developments are presented in

Section 6.

2. Proposed Method

Without loss of generality, a minimization problem is considered: . The objective function is deterministic and the feasible domain is denoted as , where .

In the proposed method, the entire feasible domain is iteratively partitioned into subregions such that and . Each subregion contains a set of feasible solutions. In most partition-based optimization methods, the extreme value, the mean, or the quantile of the sampled values, i.e., the objective function values of sampled solutions, are commonly used to rank the regions. Compared to the extreme value, the quantile contains more global information about the region so that the influence of outliers can be mitigated. Compared to the mean, the quantile focuses more on the good part of solutions in this region, rather than the overall region. Therefore, the quantile is adopted as the ranking criterion in this paper. The lower the estimated -quantile, where , the more promising the region. A promising region is further partitioned into smaller subregions so that this region can be further exploited. Multiple promising regions can be partitioned in parallel in order to obtain a set of optimal solutions. More specifically, different partition rates are adopted for different regions, and promising regions would be partitioned faster (exploited earlier) than nonpromising regions.

In this paper, we define the partition rate of a region as the reciprocal of the number of iterations required for this region to be further partitioned. The idea of controlling the partition rates for different regions is realized with the help of a budget allocation strategy [

67], which is introduced for the sake of completeness in

Section 2.1. The computational complexity of the proposed method is analyzed in

Section 2.3.

Section 2.2 describes the proposed method in detail and a simple example is introduced in

Section 2.4 to give the reader a better understanding of the proposed method.

2.1. Budget Allocation for Quantile Minimization (BAQM)

The BAQM method [

67] is proposed to allocate a budget of size

N to

K groups, i.e., sample

N values from

K groups, aiming to minimize the

-quantile of all the sampled values. The budget is allocated to each group dynamically, based on the previous observations, trying to let the sample size in group

k be approximately proportional to its posterior probability of being the best group, i.e., the group having the lowest

-quantile. This budget allocation method is developed on the assumption that the values sampled from a group are independently, identically, and normally distributed. Nevertheless, the numerical results show that it also has good performance, even if the normality assumption is not satisfied.

Assume that for any group

k,

values have been observed and the group sample mean

and the group sample variance

are calculated based on the

observations. According to [

67], at each sampling stage, a new budget of size

should be allocated using the following equations:

where

denotes the total budget size allocated to group

k,

is the current best group defined as

,

is the

-quantile of the standard normal distribution,

,

is the cumulative distribution function of the F-distribution with degrees of freedom

and

,

and

Then, additional

values should be sampled from group

k at this sampling stage, where

indicates that the value is rounded to the nearest integer.

The BAQM method is easy to implement and it links the sample size to the ranking of groups defined by quantiles. Therefore, it is selected as the budget allocation strategy in the proposed method.

2.2. Partition-Based Random Search for Multimodal Optimization

Denote by the set of stored regions in iteration s. By regarding a region as a group, the BAQM method can be applied to sample solutions from different regions dynamically. Gradually, the sample sizes in different regions, denoted by , will be approximately proportional to their posterior probability of being the best region, i.e., the region having the minimal -quantile, based on the previous observations. If we set a threshold , the sample sizes in promising regions will achieve this threshold faster than that in nonpromising regions because more samples are allocated. Therefore, we further partition a region when its sample size reaches the threshold. This makes the promising regions have a higher partition rate than the nonpromising regions.

In the proposed method, the data observed in previous iterations are reused. This allows the deviations caused by the sampling noise to be transmitted to subsequent iterations. To mitigate the influence of the sampling noise, the BAQM formulas are modified. Denote by

the partition depth of a region

. In this paper, the partition depth of a region refers to the minimum number of partition actions required to obtain this region, which is often related to the size of the region. The smaller the region size, the larger the partition depth. The adjusted sample size

is calculated based on the partition depth as follows:

where

is the number of observations in region

and

indicates that the value is rounded to the nearest integer. This modification aims at forcing the method to also sample from nonpromising but broad regions. As all regions shrink, i.e., all

increase, and the influence of Equation (

4) will decrease. Then, according to the BAQM formulas, the weight assigned to region

is calculated as follows:

where

is the current best region defined as

,

is the

-quantile of the standard normal distribution, and

and

are the sample mean and sample variance of the objective function values of solutions sampled from region

, respectively.

,

is the cumulative distribution function of the F-distribution with degrees of freedom

and

. Given the new budget size

, the theoretical total budget sizes in each region

can be calculated as follows:

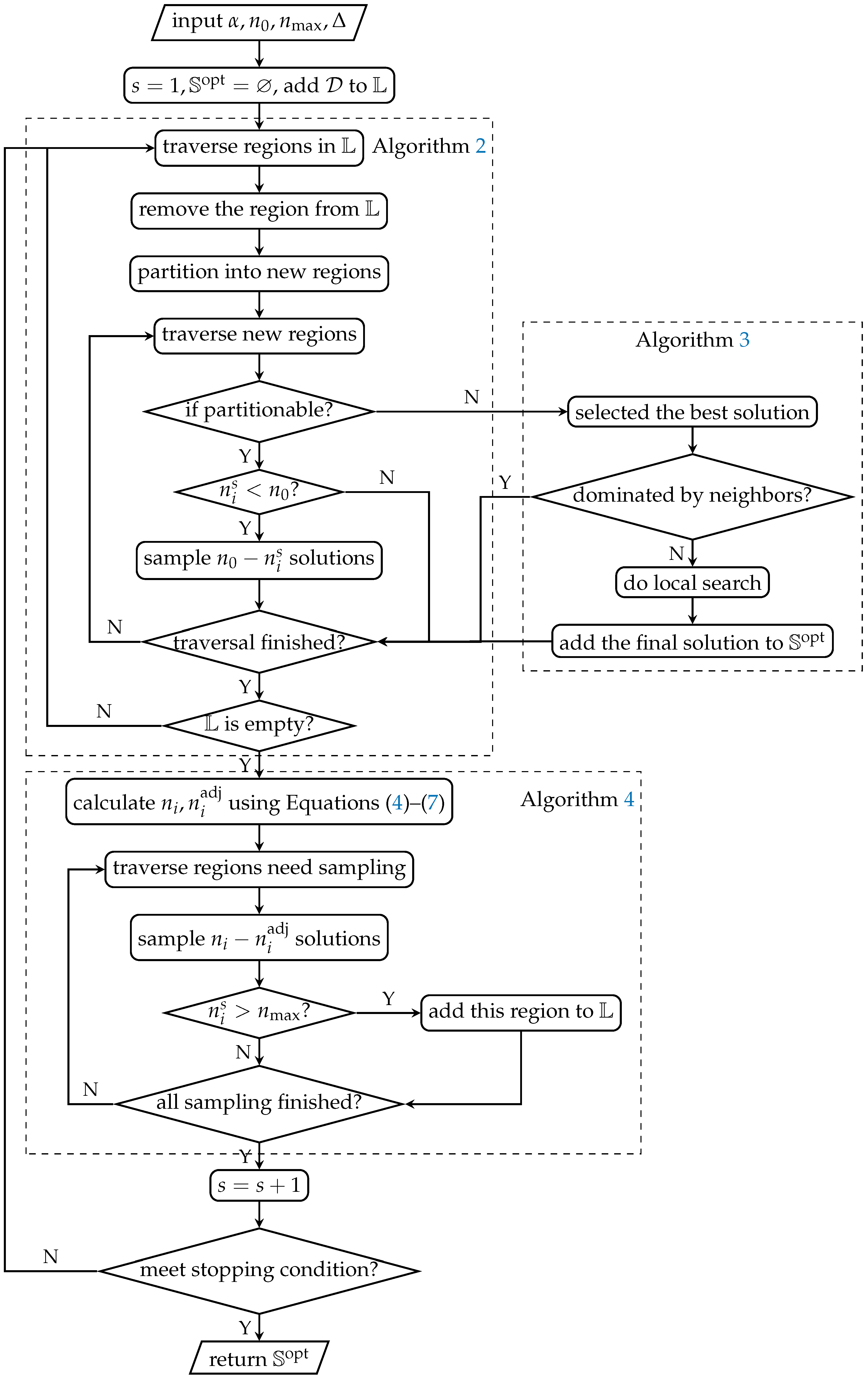

The proposed method is very straightforward and easy to implement. The flow chart is as shown in

Figure 1 and the main framework is presented in Algorithm 1. Four algorithm parameters are required:

| The quantile level in the definition of the best region, ; |

| The base sample size, ; |

| The sample size threshold to further partition a region, ; |

| ∆ | The new budget size at each iteration. |

| Algorithm 1 PAR- MMO. |

| Input: |

| Output: |

| 1: |

| 2: |

| 3: |

| 4: while do |

| 5: Partitioning (Algorithm 2) |

| 6: if then |

| 7: Updating (Algorithm 3) |

| 8: |

| 9: end if |

| 10: Budget Allocation (Algorithm 4) |

| 11: |

| 12: end while |

| Algorithm 2 Partitioning. |

- 1:

fordo - 2:

- 3:

- 4:

for do - 5:

update - 6:

if then - 7:

- 8:

else - 9:

if then - 10:

- 11:

end if - 12:

update - 13:

if then - 14:

- 15:

- 16:

else - 17:

0 - 18:

- 19:

- 20:

- 21:

end if - 22:

end if - 23:

end for - 24:

end for - 25:

|

| Algorithm 3 Extracting. |

- 1:

- 2:

whiledo - 3:

- 4:

if then - 5:

- 6:

end if - 7:

- 8:

end while - 9:

|

| Algorithm 4 Budget allocation. |

- 1:

- 2:

- 3:

- 4:

fordo - 5:

- 6:

update - 7:

if then - 8:

- 9:

else - 10:

update - 11:

- 12:

- 13:

end if - 14:

end for

|

, , , and are the user-defined partition strategy, sampling strategy, neighborhood of the selected solution, and stopping criteria, respectively. The output is the set of all sampled solutions and is the set of obtained optimal solutions. At the beginning of the algorithm, the set of the current regions and the partition list is set as the entire feasible domain . In the subsequent iterations, partitioning all regions in the partition list using Algorithm 2 and allocating budget to each region using Algorithm 4 are performed alternatively until the stopping criterion is met, such as the available budget is exhausted, the desired number of optimal solutions are obtained, or the number of obtained optimal solutions are not changed in several iterations. After the partitioning phase, if new potential optimal solutions are generated, i.e., , the set of optimal solutions are updated using Algorithm 3. This step can also be executed only at the end of the whole algorithm to save the computational effort, if is not related to the stopping criterion.

Algorithm 2 describes in detail the partitioning phase in Algorithm 1.

denotes the set of nonpartitionable regions and

is the indicator function. Every region in the partition list

is partitioned into several new subregions. The new subregions are added to the partition list if they are partitionable and their sample size

is still larger than or equal to the threshold

. Otherwise, new solutions are sampled so that the sample size in this new subregion is not less than the base sample size

. Then, the group sample mean

and the group sample variance

are updated. The adjusted sample size

is calculated using Equation (

4) and the weight for the budget allocation

is calculated using Equation (

5). After traversing the entire partition list

,

is set to an empty set.

Once a new nonpartitionable region is generated, the weight

and the adjusted sample size

are set to zero, since the solutions within this region are considered not different; thus, it is not necessary to keep sampling from this region. If the current best region

is nonpartitionable,

is saved for the calculation of Equation (

5). All the samples in the new generated nonpartitionable region are considered as potential optimal solutions; thus, they are added into the set of optima candidates

, and

is set to one to send the signal to run Algorithm 3.

Remark 1. In Algorithm 2, if the maximal partition depth, i.e., , in Equation (4) is changed, the adjusted sample size of all regions should be recalculated. Thus, all weights also need to be recalculated. Remark 2. In Algorithm 2, if the current optimal region, i.e., , in Equation (5) is changed, all weights should be recalculated. Algorithm 3 presents the detailed procedure to extract the set of the optimal solutions from the set of potential optima . In the algorithm, indicates that solution is not dominated by the neighboring solutions. The values are set to one for all solutions newly added into . Starting from the first solution with , the values are set to zero for all the solutions in the neighborhood whose objective function value is worse than the objective function value of the selected solution . If there exists a better solution in the neighborhood, the value of the selected solution is also set to zero. In optimization problems with continuous variables, a commonly used neighborhood function is , where is the Euclidean distance between and . In this case, Algorithm 3 is not sensitive to the selection of r.

Algorithm 4 describes in detail how to allocate a new budget of size at each iteration. The adjusted total budget size is calculated by summing the adjusted region sample size and . The theoretical total budget size at each region is calculated using the weight . Then, new solutions are sampled from region , where indicates that the value is rounded into the nearest integer. Once the sample size in a region reaches the threshold , this region is added to the partition list .

2.3. Computational Complexity

As the total budget size increases, the number of existing regions grows as well, which will results in raised computational time in later iterations. The computational complexity of the proposed method is investigated in this section to understand the extent to which the total budget size affects the computational effort.

In Algorithm 2, the maximal length of the partition list is in each iteration; thus, the time complexity of Algorithm 2 is in each iteration. The number of total iterations is less than , where N is the total budget size allocated. Thus, the time complexity of Algorithm 2 is in the entire optimization process.

Similar to Algorithm 2, the time complexity of lines 5–13 in Algorithm 4 is in each iteration and in the entire optimization process, respectively. As for lines 1–4 in Algorithm 4, the value should be calculated and compared to for all existing regions in each iteration. Thus, the time complexity is in iteration s. The number of existing regions is less than , where h indicates the maximal number of subregions that will be generated after a partition action. Therefore, the time complexity of lines 1–4 becomes in iteration s. In the entire optimization process, the time complexity is , i.e., it is increasing quadratically with respect to the total budget size N.

Algorithm 3 is only executed when new potential optimal solutions appear. Since the distance between every two solutions in the set of the potential optimal solutions should be calculated to determine the neighboring solutions, the time complexity of Algorithm 3 is in the entire optimization process. Usually, the number of potential optima is much less than the total budget size.

Therefore, the overall time complexity of the proposed method with respect to the total budget size is due to line 3 and line 4 in Algorithm 4.

2.4. An Illustrative Example

Minimizing the Himmelblau’s function is considered in this section as an illustrative example for the reader to better understand the proposed method. This problem has four global optimal solutions, and the objective function value varies from 0 to 2186 (the detailed information can be found in the selected benchmark function F2 in

Appendix A Figure A1). The goal of this problem is to find and store all these four global optimal solutions.

The proposed method is applied with

, and

. The regions are evenly partitioned into half from the horizontal and vertical directions iteratively until all edges of all regions are smaller than 0.05, which are considered as nonpartitionable regions.

Figure 2 presents the partition states on the entire feasible domain as the budget size

N increases. The sampled solutions are shown by dots, where their colors represent their objective function values. The darker the color, the lower the objective function value.

It can be seen that as the budget size increases, regions containing good solutions are partitioned at higher rates than other regions. The areas around the four global optimal solutions are exploited in parallel and reach the smallest size earlier than other areas. The sampling of solutions is guided by the proposed method. At the beginning of the algorithm, solutions are sampled evenly among the entire domain. As the budget size increases, the sampling probability of good solutions increases as well.

After about 3000 solutions are evaluated, Algorithm 3 is performed with , where , i.e., twice the length of the shortest edge of a nonpartitionable region. Four solutions are contained in and their objective function values are all less than . The distances from the four solutions to their corresponding real optimal solutions are all less than 0.014. The same results can be obtained with r varying from 0.042 to 3.9, which shows that Algorithm 3 is not sensitive to the selection of the distance parameter r.

4. Numerical Results

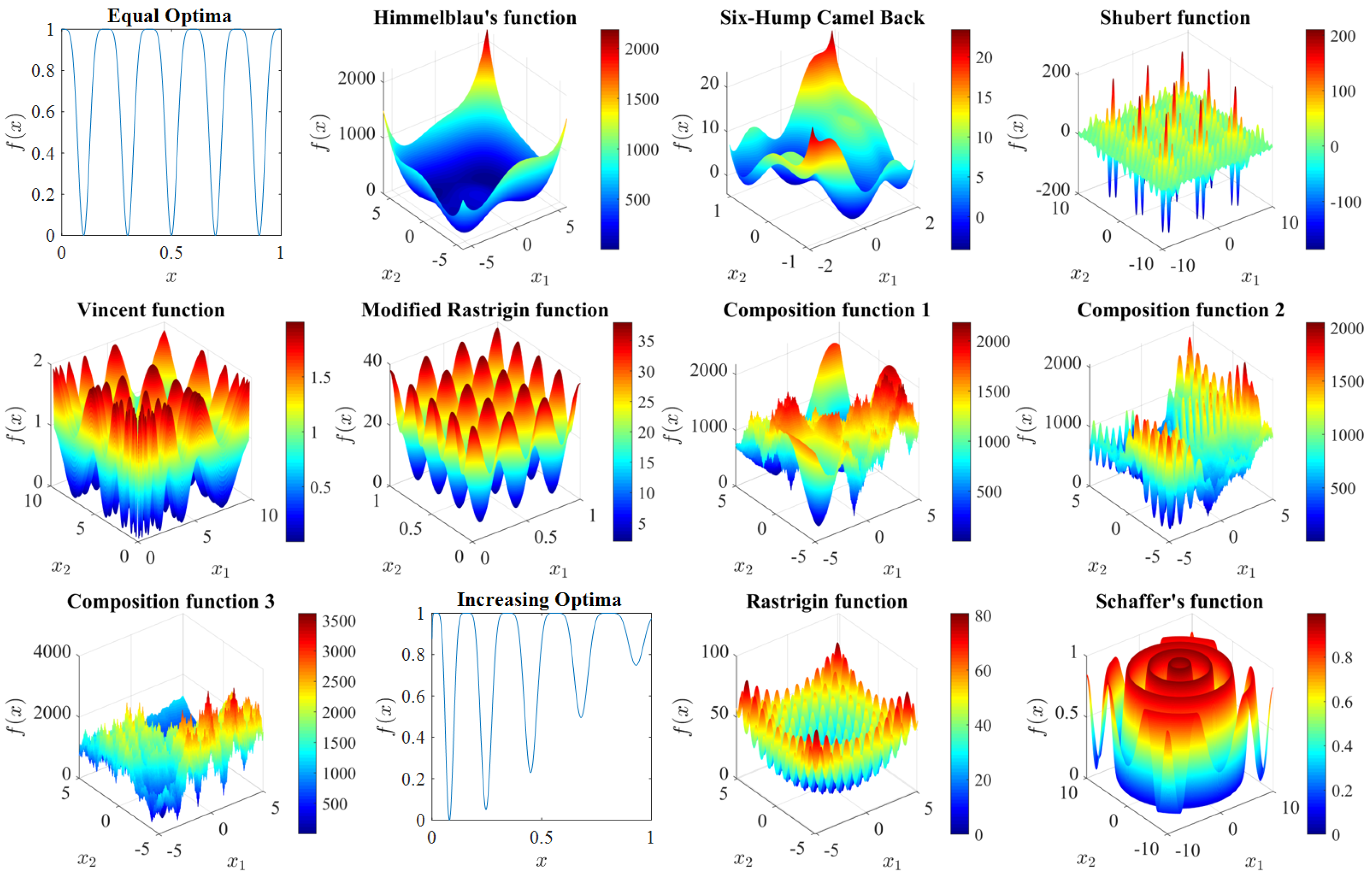

The proposed method is applied to minimization problems constructed by several benchmark functions with different properties extracted from the well-known test problems of the CEC’2013 competition for multimodal optimization [

34,

69].

Table 2 shows the selected objective function, the dimension of the decision variable, the number of global optima and local optima, the feasible domain, the objective function value range, and the maximum budget size

Max_Fes in different test problems. F1–F3 are simple examples with multiple global optima. F4 is a volatile function with numerous local optima. The optimal solutions in F5 are located in different topographies in the feasible domain. F6 contains multiple global optimal solutions distributed in a grid. F7 is composited by different basic functions such that the properties of different functions are mixed. F1–F7 contain multiple global optima, while F8 and F9 have only one global optimum and multiple local optima of different qualities. In F10, there are several regions in which all points are local optimal solutions. The detailed information and the surface plots of the benchmark functions can be found in

Appendix A.

In the following experiments, if the deviation from the objective function value of an obtained optimal solution to the objective function value of a real optimal solution is below

(level of accuracy) and the distance between these two solutions is less than the radius in

Table 2 (level of precision), this real optimal solution is considered as being found. For the proposed method, a solution is considered as an obtained optimal solution only when it is stored in

(]the datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request).

For the sake of simplicity, the proposed method, denoted as “PAR-MMO”, is applied with

, and

for all the following experiments, unless specifically stated. If a budget is allocated to a region, new solutions are sampled from this region uniformly (the sampling strategy). If the partitioning condition is met, the region is partitioned evenly into two subregions from the dimension with the largest range (the partition strategy). The accuracy level of the obtained optima is controlled by the acceptable precision of the obtained solution, i.e., the size of the nonpartitionable region. Once a region reaches the smallest size, i.e., it is nonpartitionable, the proposed method will stop sampling from this region and the accuracy of the obtained optima in this region will not be further improved. The smaller the region that is considered nonpartitionable, the more precise the solution obtained, and the larger the budget required. In the following experiments, the size of a nonpartitionable region is defined specific to the problem in order to met the required accuracy level

(as shown in

Appendix B). In practice, the size of the nonpartitionable region can be defined according to the acceptable precision of the solution obtained. The neighborhood function in Algorithm 3 for extracting optimal solutions from the sample set is selected as

, where

r is twice the length of the shortest edge of a nonpartitionable region.

In the case that local search is adopted, denoted as “PARL-MMO”, the size of the nonpartitionable region can be much larger (as shown in

Appendix B), since both the accuracy level of the obtained optima and the precision of the obtained solution can be improved through the local search. In the following experiments, the step threshold

in Algorithm 5 for local search is selected as the half length of the edge of the nonpartitionable region defined when the PAR-MMO method is used without local search.

Section 4.1 presents how the selected parameters affect the proposed method. In

Section 4.2, the proposed method is applied to some problems with multiple global optima and compared with other approaches in the literature.

Section 4.3 shows how the proposed method performs in the problems with multiple local optima of different qualities.

Section 4.4 deals with problems that exist an region in which all solutions are optimal. The computational time of the proposed method is analyzed in

Section 4.5.

4.1. Effect of Algorithm Parameters

This section investigates how the algorithm parameters, i.e., the quantile level , the base sample size , the partition sample size threshold , and the new budget size , affect the proposed method. In this section, “PARL-MMO” is applied and the accuracy level is set as ]1E-4. The base sample size is set to avoid the situation where too few samples remain in a subregion after a partition action. Thus, the is set as , where means that the value is rounded up to the nearest integer.

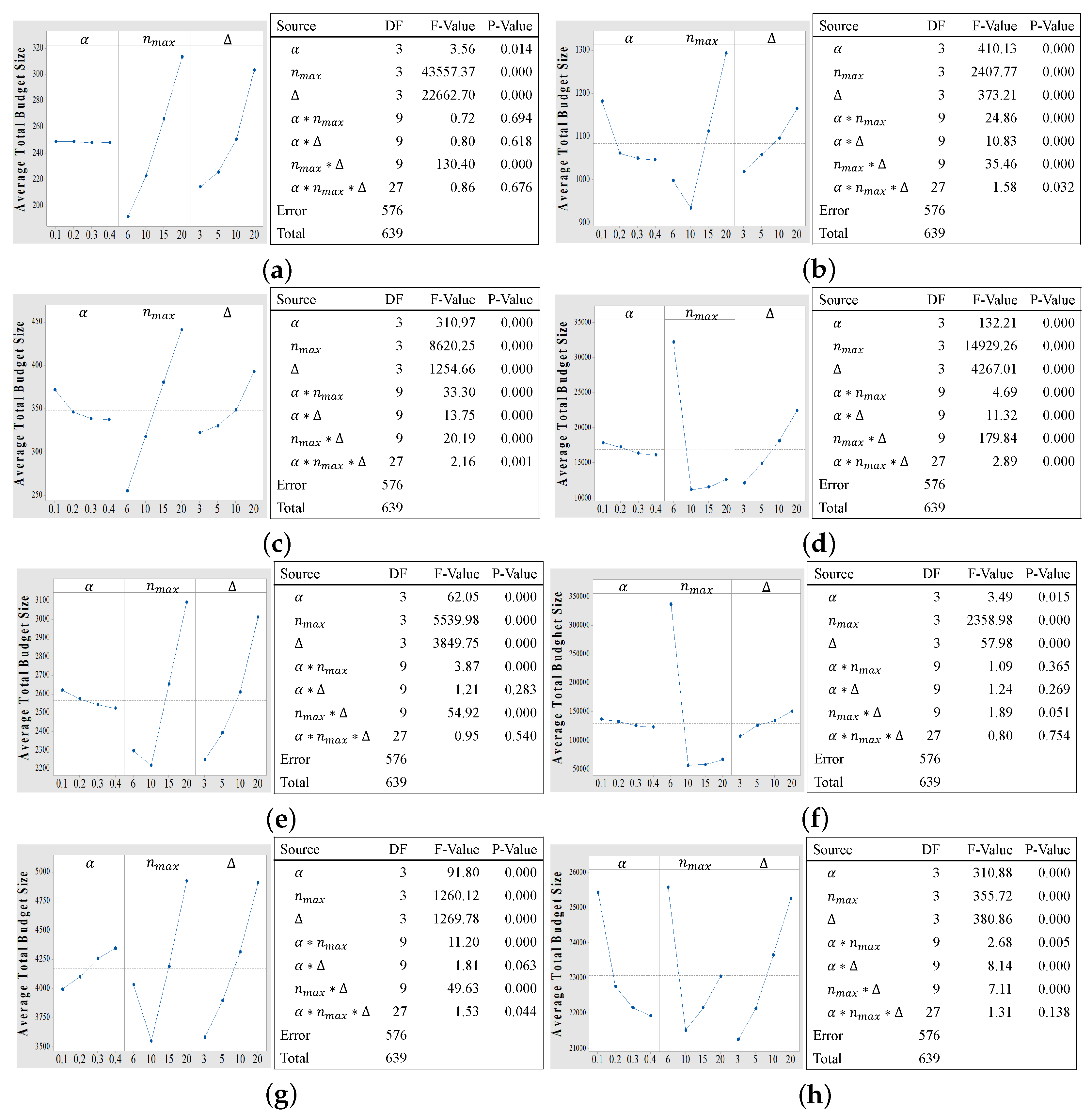

4.1.1. Main Effect Plot

Figure 3 shows the main effect plot and the table of analysis of variance (ANOVA) after running a full factorial design in Problem F

with three factors:

. A total of 500 independent replications are executed and they are divided into 10 batches. The response is the average total budget size, which is required to obtain all global optima, in a batch. The ANOVA is implemented after the Box–Cox transformation so that the standardized residuals do not violate the normality assumption (the

p-value equals 0.376 in the Anderson–Darling test) and the equal variance assumption (the

p-value equals 0.983 in the Levene test). All the parameters and interactions have significant effect on the proposed method with significant level

. The influences of the

value and the

value are much larger than that of the

value and the interactions based on the

F-values. Similar conclusions can be drawn in other problems (see

Appendix C), except for Problem F5, where the optima are located in different topographies in the feasible domain, and Problem F7(2-2D). According to the Tukey pairwise comparison with confidence level

, the optimal combinations of parameters for Problem F

are

, and

.

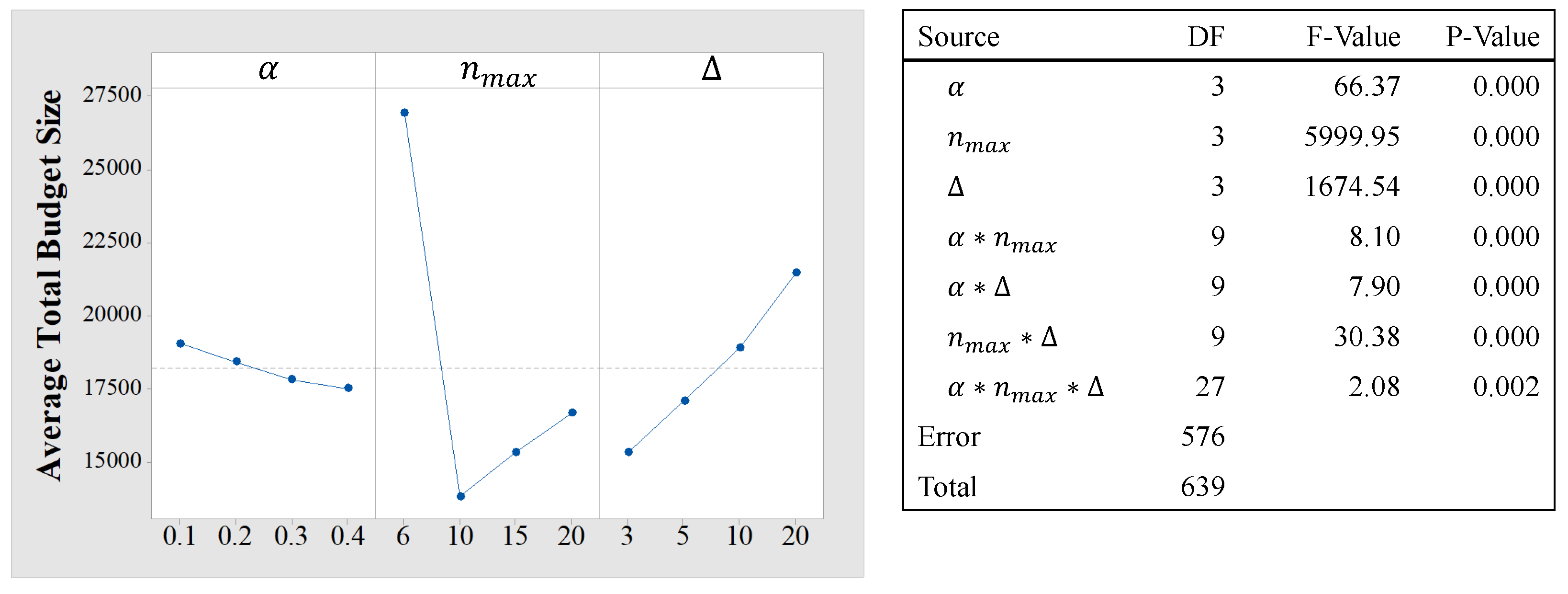

Figure 4 shows the main effect plot and the ANOVA table for Problem F5(2D). Although the normality hypothesis and the equal variance hypothesis are not met with confidence level 95%, the F-test is robust since the experiments with all combinations are performed with the same number of replications. Different from Problem F

, the parameter

has the highest effect on the algorithm performance. According to the Tukey pairwise comparison with confidence level

, the optimal combinations of the algorithm parameters for Problem F5(2D) are

, and

.

4.1.2. Effect of the Partition Sample Size Threshold

As the

value increases, the average total budget size required to obtain all optima declines first and then rises. This is because when the

value is too small, the partition action is executed based on biased information due to the small sample size. When the

value is too large, the budget that could be used to exploit subregions with good performance is wasted on exploring the current region. A similar phenomenon can be observed in different problems, as shown in

Figure 5, in which the budget size in the figure is scaled according to the maximal average budget size in different problems, i.e., the values in the legend.

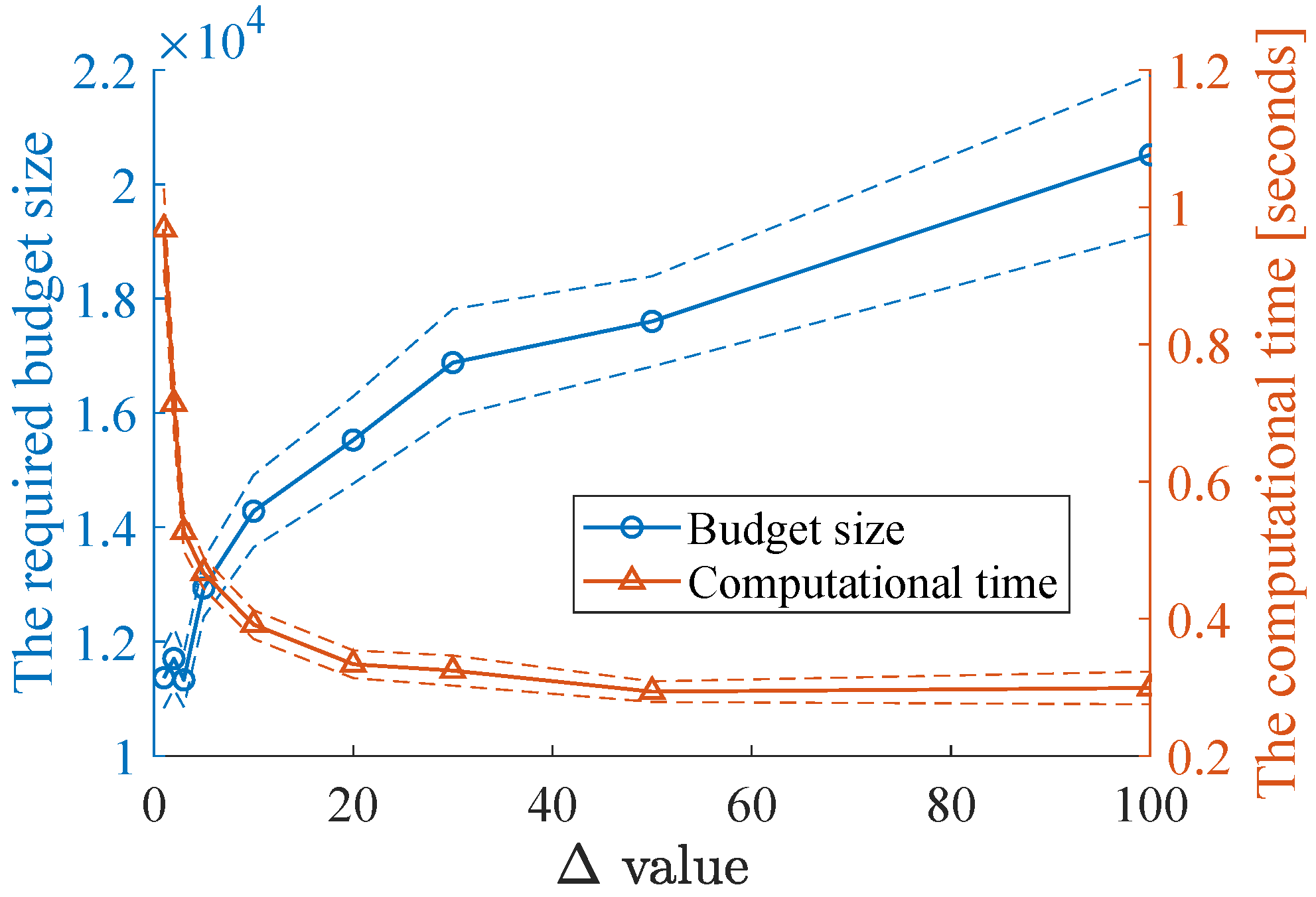

4.1.3. Effect of the New Budget Size

The results of the ANOVA in

Figure 3 show that a small

value is preferred in Problem F6

in terms of the total budget size, because it allows more budgets to be allocated after obtaining more information and generating more precise regions. Nevertheless, according to the discussion in

Section 2.3, a small

value may increase the computational time of the proposed method. In

Figure 6, the average total budget sizes and the corresponding computational times required to obtain all optima in Problem F6

with different

values are presented. The confidence intervals with confidence level

are also plotted. The experiments are executed in Matlab R2020a on a computer (Intel(R) Core(TM) i7-7700U CPU @ 3.6 GHz and 16 GB of RAM).

In this problem, it is very fast to calculate the objective function. Thus, the computational time is mainly affected by the computational complexity of the algorithm, i.e.,

, where

N is the total budget size. This is why the total computational time in

Figure 6 decreases as the

value increases. In practice, if the calculation of the objective function is time-consuming, a small

value can be used to save the expensive budget, whereas if the calculation of the objective function is not critical, a relatively large

value can be used to reduce the computational time.

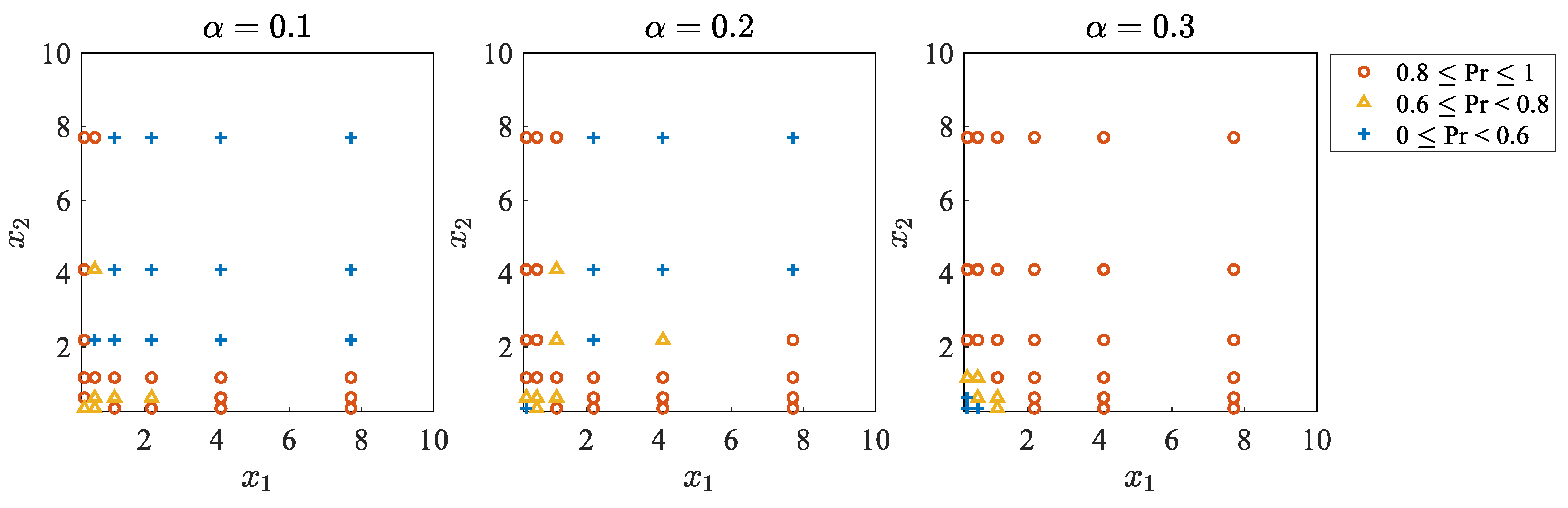

4.1.4. Effect of the Quantile Level

The definition of promising regions is affected by the selected value. A low value prefers regions with high variability; thus, the proposed method will focus more on exploration to avoid the loss of some promising areas, whereas a high value prefers regions with a low sample mean, so that the detected promising area will be firstly exploited. Although the effect of the value is less significant than the other two parameters in Problem F, it has a great influence in Problem F5(2D), where some optimal solutions are located in flat areas and others are located in steep areas. A low value will make promising regions in flat areas struggle to reach the smallest size due to their small variability; thus, the samples in these regions are not considered as potential optimal solutions.

Figure 7 presents another influence of the

value in Problem F5(2D). In 100 replications, the frequencies of the optimal solutions in different locations being captured within a budget of size 8000 is represented by different colors and shapes. In the studied case, the size of nonpartitionable regions are the same among the whole domain. A promising region in flat areas (i.e., large

and

values) has a small sample mean, while a promising region of the same size in steep areas (i.e., small

and

values) has a large sample variance. When the

value is high (

), promising regions in flat areas will be partitioned earlier, and the global optimal solutions located in these areas can be found with a higher frequency (larger than 0.8). When the

value is reduced to a lower value (e.g., 0.1 or 0.2), the influence of the sample variance rises. Therefore, the frequency of finding the optimal solutions in flat areas decreases, whereas the frequency of finding the optimal solutions in steep areas increases.

4.2. Comparison with Other Methods on Multiple Global Optima

The functions F1–F7, which have multiple global optima, are considered in this section. The proposed method is compared with other multimodal optimization methods developed from different mechanisms: multiobjectivization, subpopulations, differential evolution with niching mutation operator, and two-phase method. In addition, they all have good performance in the selected benchmark functions.

- MOMMOP

[

59] transfers the multimodal optimization problem to a multiobjective optimization problem with

conflicting objective functions so that all optima are located in the Pareto front. Then, differential evolution combined with modified nondominated sorting [

19] is used to solve the multiobjective optimization problem. The MOMMOP method belongs to the category of multiobjectivization and it is superior to ten state-of-the-art multimodal optimization algorithms in 20 benchmark functions.

- LAMS-ACO

[

46] divides the entire population into subpopulations that evolve separately through a modified ant colony optimization algorithm ACO

[

70]. Random cluster size is adopted. A local search scheme is applied to refine the obtained solution at each iteration. The LAMS-ACO method belongs to the category of population-based niching using subpopulations and demonstrates good performance with respect to the required total number of evaluations compared to the other twelve multimodal optimization algorithms.

- DIDE

[

36] constructs a virtual population for each individual so that each individual can track its own peak. The DIDE method belongs to the category of population-based method using niching mutation operator, and it has good performance compared to the other 13 methods in the benchmark functions.

- EMO-MMO

[

68] develops an algorithm to detect the peaks from solutions sampled by a multiobjectivization method. Then, the competitive swarm optimizer (CSO) [

71] is applied within each peak to refine the obtained optima. The idea of the EMO-MMO method is very similar to the proposed method; thus, it is also applied for comparison purposes.

These methods are applied with parameters suggested by the authors.

Three criteria are adopted for the assessment of the applied algorithms [

69]. The first one is the peak ratio (PR), which measures the average percentage of the found global optima. The second one is the success rate (SR), which is the percentage of runs that find all the global optima. The third one is the convergence speed (CS), which is the average budget size, i.e., the average number of evaluations of the objective function, required to find all the global optima. If not all global optima are found when the budget is exhausted, the maximum budget size

Max_Fes is used in the calculation.

The results of the applied algorithms are presented in

Table 3 with the accuracy level

varying from 1 ×

to 1 ×

. The winners are highlighted in bold and marked with dark background color (determined through the Wilcoxon rank sum test with

p-value smaller than 0.0125 for the PR and

p-value smaller than 0.0167 for the CS). All the experiments are repeated 100 times independently. It should be noticed that the EMO-MMO method uses all the available budget; thus, the CS column is not presented.

As discussed in

Section 3, if the PAR-MMO method is used alone, as the

value decreases, the average number of evaluations required to find all the optima increases a lot, or the percentage of global optima found reduces rapidly. However, if the PAR-MMO method is applied to detect the promising areas and local search is applied to improve the accuracy level of the obtained optima, i.e., the PARL-MMO method, the evaluation budget can be saved significantly, especially when the

value is small. When the PARL-MMO method is applied, the number of evaluations used in the PAR-MMO method is not much different, regardless of the

value, because the definition of the nonpartitionable region is the same. The required total budget size differs depending on the budget required in the local search stage, which is less affected by the

value compared to the PAR-MMO method.

In most cases, the PARL-MMO method behaves better than the three state-of-the-art methods, except for problems F4(3D), F5(3D), F7(3-2D), and F7(3-3D). In Problem F5(3D), the MOMMOP method has the best performance. Although the PARL-MMO method does not have good performance in this problem according to the criterion SR, the PR is still high (not less than 0.97 for all values). For the LAMS-ACO method, a large ant size is required to generate sufficient subpopulations, especially when the number of optima is high. For example, poor performance is observed for Problem F5(3D), because an ant size of 300 is too small for 216 optima. However, a too-large ant size may result in waste of budget. Prior knowledge about the number of optima is important for methods that divide the whole population into subpopulations, whereas this knowledge is not needed in the proposed method.

In Problem F4(3D), the criterion SR of the PARL-MMO method is not as good as that of the EMO-MMO method, but almost all the global optima are discovered (the PR values are all close to one). In addition, a lot of local optima are also contained in the in the PARL-MMO method. In problems F7(3-2D) and F7(3-3D), there are numerous local optima that are very close to one of the global optima. For example, in Problem F7(3-2D), the distance between a global optimum and one of the local optima with objective function value 0.5761 is 3 × . In this case, if the size of the nonpartitionable region is not small enough to separate these optimal solutions, Algorithm 5 may be stuck in one of the local optima, i.e., the results of PARL-MMO. However, if the size of the nonpartitionable region is small enough, the maximum budget size is not sufficient to reach the corresponding nonpartitionable region, i.e., the results of PAR-MMO. In the EMO-MMO method, the CSO method, which has the ability to escape the local optima, is applied to locally search the promising areas. Therefore, good performance is observed for the EMO-MMO method in problems F7(3-2D) and F7(3-3D).

As for the composition functions with more than five dimensions in the CEC’2013 functions, the proposed method does not have good performance, although all multimodal optimization methods hardly find all global optima in these functions within the given budget. The proposed method has difficulty handling functions with large jumps everywhere. In this case, an intelligent partitioning strategy developed from the system knowledge would be required to make the function smoother.

In summary, the PARL-MMO method has good performance in most of the benchmark functions for capturing multiple global optimal solutions.

4.3. Effect of Local Optima

The PARL-MMO method is applied to functions F8 (one-dimensional increasing optima) and F9 (Rastrigin function), which have only one global optimum and multiple local optima with different qualities, i.e., different objective function values. In this section, the accuracy level

is set as 1 ×

and 500 replications are executed.

Figure 8 shows the frequencies of different optima being found as the total budget size grows. In the Rastrigin function, similar performance is observed for optimal solutions having the same objective function values due to the symmetry property. Thus, to make the figure clear, they are combined as a single line. In the legend, the number in bold shows the number of optimal solutions having this objective function value. Many other local optimal solutions of the Rastrigin function with worse objective function values are not all found due to the budget limitation. Thus, they are not plotted in

Figure 8.

It can be found from

Figure 8 that, in the studied cases, the global optimum and the high-quality local optima have higher frequencies to be found than low-quality local optima when the available budget size is small. As the total budget size increases, the rest of the local optima will be found subsequently according to their objective function values. This is one of the features of the proposed method. Other multimodal optimization methods either cannot store local optima, e.g., MOMMOP [

59], LAM-ACO [

46], and EMO-MMO [

68], or optima with different qualities are considered equally important, e.g., [

55].

4.4. Schaffer’s Function

In this section, the PARL-MMO method is applied to Problem F10, in which the local optimal solution is not a single point. There is only one global optima and there are several regions in which all solutions are local optimal solutions with slightly worse objective function values. The optimal solutions located in the same circle have the same objective function values, which are 0.0097, 0.0372, 0.0782, and 0.1270 from inner circle to outer circle, respectively.

Figure 9 shows the partition states and the obtained optima, i.e., the solutions in

, as the total budget size

N increases. Unlike EA-based methods, the number of optimal solutions contained in the optimal solution set provided by the proposed method can grow without limit. Multiple local optimal solutions can be captured and the density of the solutions is affected by the size of the nonpartitionable regions and the distance parameter

r in Algorithm 3. As discussed in the previous section, the inner circles are found earlier than outer circles.

It should be noticed that if the optimal area is flat in all the dimensions, the variances of all regions (broad and partitionable) within this area will be zero. These regions will not reach the smallest size, since zero weights are assigned according to Equation (

5). Thus, the solutions within these regions are not considered as potential optimal solutions in the algorithm. In this situation, an archive can be created to store all the partitionable regions with a good mean and a very low variance.

4.5. Computational Time

In this section, the PAR-MMO method, in which local search is not included, is applied to Problem F4

(five dimensions) with

1 × 10

−4. A total of 20 independent replications are executed in Matlab R2020a on a computer (Intel(R) Core(TM) i7-7700U CPU @ 3.6 GHz and 16 GB of RAM).

Figure 10 shows how the average computational time changes as the total budget size increases. It can be found that the computational time increases quadratically as discussed in

Section 2.3 (

for the quadratic regression). Although the proposed method will become time-consuming when the total budget size is very large, it is still efficient in the studied case (on average, 121 s for a budget of size 1 ×

) and the computational time climbs slowly before the total budget size reaches one million.

5. Application

The optimal control problem of a nonlinear stirred tank reactor [

72] is considered in this section. The chemical process can be modeled by the following differential equations:

where

is the flow rate of the cooling fluid,

is the dimensionless steady temperature,

is the deviation from dimensionless steady concentration, and the interval of integration is

. The performance index to minimize is

, which can be evaluated using

ode45 in Matlab, and the initial condition is

. The problem has a global optimum

and a local optimum

. These two values are directly associated with two different control trajectories [

72].

To solve the problem, the time interval is discretized into 13 time slots in order to obtain a reasonably good integration accuracy, and constant control is used within a time slot. Then, becomes 13 decision variables . The PARLMMO method is applied with . The edge threshold that is considered as nonpartitionable is set to 0.8 and the stop threshold of the local search is set as 0.01. The maximum budget size is 1 × .

Twenty replications are carried out. The optimum is regarded as found only when the algorithm recognizes that the point is an optimum and saves it in

.

Table 4 shows the number of the replications that find each optimum, the average budget to find each optimum, and the average absolute percentage gap of the objective function value compared to the real optimum (0.13309 and 0.24442). It should be noticed that the gap could be caused by the precision of the captured solution, the calculation of the integration, and the discretization of

.

For comparison purpose, the MOMMOP method [

59], which outperforms other methods in the benchmark functions, is also applied with population size 30, mutation factor 0.5, crossover index 0.7, crowded radius 0.01, and maximum budget size 1 ×

. The optimal solution is considered as captured if it is contained in the Pareto set.

The first comment is that the global optimum is captured in all replications. In addition, no points other than these two optimal solutions appear in the final optimization set. This means that the algorithm will not mislead the users with fake optima.

However, the local optimum is captured only in three replications. This is because the local optimum is 84% worse than the global optimum. Thus, the area around the local optimum is not considered as promising by the algorithm, unless other areas with better objective function values have not been discovered or have been exploited. In this case, according to the budget size required to find each optimum, the area around the local optimum is only searched before the area around the global optimum is discovered. This result is consistent with the feature of the algorithm that focuses on the global optima and the high-quality local optima. When only good solutions are of interest, the algorithm would not waste budget to search for optima with poor objective functions. However, this may become a disadvantage when all optima (no matter if good or poor) are required.

The MOMMOP method is proposed for seeking multiple global optimal solutions. Thus, all points in the Pareto set are points around the global optimum, and the local optimum is not identified in any replication. The best solution in the Pareto set is used to calculate the average absolute percentage gap. This gap is less than the PARL-MMO. A possible reason for this is that the function around the global optimum is not smooth due to the discretization of the integration. Therefore, the local search may be trapped when refining the found solution in the PARL-MMO method. This may be improved by using different algorithms, such as EA, in the refining phase.

6. Conclusions

A partition-based random search method is proposed, in which by controlling the partition rates of different regions, promising areas are exploited probabilistically earlier than nonpromising areas. Multiple optimal solutions (both global and local) can be found and stored. It does not require prior knowledge about the number of optima in the studied problem and it is not sensitive to the distance parameter.

Numerical results show that, by cooperating with local search, the proposed method has good performance in finding multiple global optimal solutions in 14 benchmark functions compared to four state-of-the-art methods. In problems containing local optima of different qualities, i.e., different objective function values, high-quality local optima will be found earlier than low-quality optima. Therefore, it will focus on exploiting global optima and high-quality local optima when the budget is not quite sufficient. In addition, the proposed method can also deal with optimization problems that exist in regions where all solutions are optimal solutions.

One of the limitations of the proposed method is that a large amount of calculation memory is needed because all existing regions and all sampled solutions are stored. The increased number of regions also makes the computational time increase quadratically as the total budget size increases, although it is still efficient when the total budget size is not extremely large. Therefore, the pruning of the stored regions is one of the directions of further work. The other limitation is introduced by the property of the partition-based random search framework. Partition-based methods are efficient for problems in which each decision variable has a large search space. However, it could be inefficient for high-dimensional problems if the partition strategy is simply partitioning each dimension into several ranges. In this case, intelligent partition strategies should be developed based on the features of the studied problem to improve the efficiency of the algorithm. The efficiency of the proposed method could be highly affected by selection of the partition strategy.

The future development includes several directions. The first one is the development of an algorithm for the deletion of less interesting regions to solve the problem of quadratically increased computational time. The second one is adopting an adaptive value in the proposed method. As discussed in the paper, a low value focuses more on exploration, whereas a high value focuses more on exploitation. Therefore, an increasing value may improve the efficiency of the proposed method. The third one is to deal with the optimization problems with constraints. Although the constraints can be handled by manipulating the sampling strategy to avoid the sampling of infeasible solutions, this action may be difficult for some applications. Penalty function is another common way to deal with constraints, but the regions containing the boundary may have biased performance estimates, which may affect the proposed method. Therefore, an adaptive penalty function to deal with the constraints in the proposed method will be an interesting research direction. The last one is extending the method to optimization problems with stochastic objective function or constraints.