A More Realistic Markov Process Model for Explaining the Disjunction Effect in One-Shot Prisoner’s Dilemma Game

Abstract

1. Introduction

2. Background

2.1. Cognitive Settings in Quantum BAE and Markov BA Models

2.2. Dual Processing in Socio-Economic Decision Making

2.3. Model Evaluation Metric

2.3.1. Root Mean Squared Deviation (RMSD)

2.3.2. Bayesian Information Criterion (BIC)

2.3.3. Model Flexibility

3. Re-Examination of Quantum BAE and Markov BA Models

3.1. Unrealistic Payoff Evaluation

3.1.1. The Preference for Higher Payoff Is Not Always Satisfied

3.1.2. DMs Treat the Same Payoff Information Regardless of Opponent’s Actions

3.2. Unrealistic Belief and Action Entanglement

3.3. Violation of the Dual Processing Framework

3.4. The Fixed Decision Time Parameter ‘π/2’

3.5. Summary

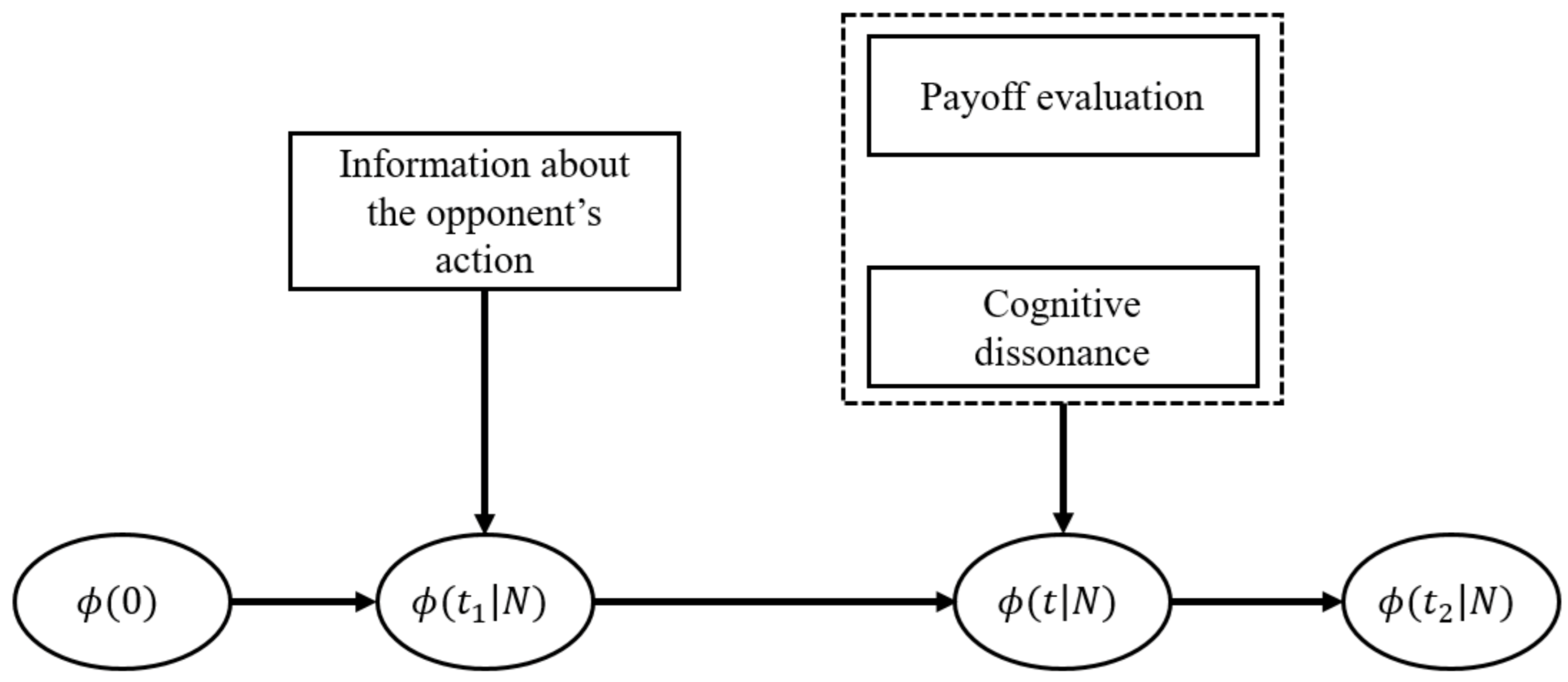

4. Proposed Method

4.1. Model Highlights

- The intensity matrices for payoff evaluation and cognitive dissonance are dependent on the information about the opponent’s action.

- The weight between payoff evaluation and cognitive dissonance is moderated by the DM’s DSN and evolves dynamically during the decision process.

- The weight between payoff evaluation and cognitive dissonance can be treated as constant over a small time increment.

- The final decision time is the time at which the probability distribution over a DM’s belief and action states becomes stationary.
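
The four points above can be read as a time-stepping scheme. The following is a minimal sketch under stated assumptions, not the authors' implementation: the two intensity matrices `K_PAYOFF` and `K_DISSONANCE`, the weight schedule `weight`, the step size `dt` and the tolerance `tol` are hypothetical placeholders, and a first-order (Euler) step stands in for an exact matrix exponential. The weight is held fixed within each small step, and the loop stops once the distribution no longer changes.

```python
import numpy as np

def generator(off_diag):
    """Turn non-negative off-diagonal rates into an intensity matrix.

    Entry [i, j] is the rate of moving from state j to state i;
    the diagonal is set so that every column sums to zero.
    """
    k = np.array(off_diag, dtype=float)
    np.fill_diagonal(k, 0.0)
    k -= np.diag(k.sum(axis=0))
    return k

# Hypothetical rates for one information condition (illustration only).
K_PAYOFF = generator([[0, 2, 0, 0],
                      [1, 0, 0, 0],
                      [0, 0, 0, 3],
                      [0, 0, 1, 0]])
K_DISSONANCE = generator([[0, 0, 1, 0],
                          [0, 0, 0, 1],
                          [2, 0, 0, 0],
                          [0, 2, 0, 0]])

def weight(t, w_start=0.2, w_end=0.8, rate=1.0):
    """Hypothetical weight on payoff evaluation, drifting from w_start to w_end."""
    return w_end + (w_start - w_end) * np.exp(-rate * t)

def run_until_stationary(p0, dt=0.01, tol=1e-9, max_steps=1_000_000):
    """Advance the state distribution until one step changes it by less than tol."""
    p = np.asarray(p0, dtype=float)
    t = 0.0
    for _ in range(max_steps):
        w = weight(t)                          # treated as constant over [t, t + dt)
        k = w * K_PAYOFF + (1.0 - w) * K_DISSONANCE
        p_next = p + dt * (k @ p)              # first-order (Euler) step
        t += dt
        if np.max(np.abs(p_next - p)) < tol:
            return p_next, t                   # t plays the role of the decision time
        p = p_next
    raise RuntimeError("distribution did not become stationary")

p_final, t_final = run_until_stationary([0.25, 0.25, 0.25, 0.25])
```

In this sketch the decision time is an output of the simulation rather than a fixed parameter, which is the point of the last highlight.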

4.2. Model Construction

- A probability transition can happen only from an unstable state to a stable state.

- A probability transition can happen neither from one stable state to another nor from one unstable state to another.
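
The two constraints above restrict which entries of an intensity matrix may be non-zero. The sketch below illustrates this under a loudly hypothetical labelling: this outline does not say which of the four belief and action states are stable, so `STABLE` and `UNSTABLE` are placeholders, as are the helper names and the unit rates.

```python
import numpy as np

# Hypothetical labelling: which states are stable is an assumption made here.
STABLE = (0, 3)
UNSTABLE = (1, 2)

def allowed_mask(n_states, stable, unstable):
    """mask[i, j] is True when a transition from state j to state i is permitted."""
    mask = np.zeros((n_states, n_states), dtype=bool)
    for src in unstable:
        for dst in stable:
            mask[dst, src] = True
    return mask

def intensity_from_rates(rates, mask):
    """Zero out forbidden rates, then set the diagonal so each column sums to 0."""
    k = np.where(mask, np.asarray(rates, dtype=float), 0.0)
    k -= np.diag(k.sum(axis=0))
    return k

def respects_constraints(k, mask, atol=1e-12):
    """Check that every non-zero off-diagonal rate sits on a permitted transition."""
    off_diag = ~np.eye(k.shape[0], dtype=bool)
    forbidden = off_diag & ~mask
    return bool(np.all(np.abs(k[forbidden]) <= atol))

mask = allowed_mask(4, STABLE, UNSTABLE)
K = intensity_from_rates(np.ones((4, 4)), mask)   # unit rates, illustration only

bad = K.copy()
bad[3, 0] = 0.5                                   # stable -> stable, not permitted
```

Under these constraints the columns of `K` that belong to stable states are all zero, so stable states are absorbing and the distribution stops changing once no probability remains in the unstable states.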

5. Model Fitting and Comparison

5.1. Model Fitting

5.2. Model Comparison

5.3. Academic and Practical Implications

6. Concluding Remarks

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

Nomenclature

| Symbol | Description |
|---|---|
| N | The set of decision conditions, with KD for the condition where the opponent is known to defect, KC for the condition where the opponent is known to cooperate and Ukn for the condition where the opponent’s action is unknown. |
| L | The number of choices included in each condition. |
| J | The number of decision conditions. |
| xi | The payoff corresponding to the ith belief and action state, with x1, x2, x3 and x4 corresponding to the 1st, 2nd, 3rd and 4th state, respectively. |
| | The bounded rationality parameter. |
| | The indicator of cognitive dissonance in the quantum BAE model. |
| | The indicator of cognitive dissonance in the Markov model. |
| | The probability distribution across belief–action states. |
| t | Time of decision process, with for the time point receiving the information about the opponent’s action, (quantum BAE and Markov BA models) and (proposed Markov model) for the time point of the end of the decision process. |
| | The time between and . |
| | The number of free parameters in the model. |
| | The utility differences between the payoffs and in the quantum BAE model. |
| | The utility differences between the payoffs and in the quantum BAE model. |
| | The utility differences between the payoffs and in the Markov BA model. |
| | The utility differences between the payoffs and in the Markov BA model. |
| | The small time increment for the decision process. |

References

- Savage, L.J. The Foundations of Statistics; John Wiley & Sons: New York, NY, USA, 1954.

- Shafir, E.; Tversky, A. Thinking through uncertainty: Nonconsequential reasoning and choice. Cogn. Psychol. 1992, 24, 449–474.

- Tversky, A.; Shafir, E. The disjunction effect in choice under uncertainty. Psychol. Sci. 1992, 3, 305–310.

- Denolf, J.; Martínez-Martínez, I.; Josephy, H.; Barque-Duran, A. A quantum-like model for complementarity of preferences and beliefs in dilemma games. J. Math. Psychol. 2017, 78, 96–106.

- Li, S.; Taplin, J.E.; Zhang, Y. The equate-to-differentiate’s way of seeing the prisoner’s dilemma. Inf. Sci. 2007, 177, 1395–1412.

- Bruza, P.D.; Wang, Z.; Busemeyer, J.R. Quantum cognition: A new theoretical approach to psychology. Trends Cogn. Sci. 2015, 19, 383–393.

- Busemeyer, J.R.; Wang, Z.; Townsend, J.T. Quantum dynamics of human decision-making. J. Math. Psychol. 2006, 50, 220–241.

- Ram, M.; Tyagi, V. Reliability characteristics of railway communication system subject to switch failure. Oper. Res. Eng. Sci. Theory Appl. 2021, 4, 124–139.

- Tanackov, I.; Jevtić, Ž.; Stojić, G.; Sinani, F.; Ercegovac, P. Rare Events Queueing System-REQS. Oper. Res. Eng. Sci. Theory Appl. 2019, 2, 1–11.

- Busemeyer, J.R.; Wang, Z.; Pothos, E.M. Quantum models of cognition and decision. In The Oxford Handbook of Computational and Mathematical Psychology; Busemeyer, J.R., Wang, Z., Townsend, J.T., Eidels, A., Eds.; Oxford University Press: Oxford, UK, 2015; pp. 369–389.

- Busemeyer, J.R.; Wang, Z.; Lambert-Mogiliansky, A. Empirical comparison of Markov and quantum models of decision making. J. Math. Psychol. 2009, 53, 423–433.

- Wang, Z.; Busemeyer, J.R. Interference effects of categorization on decision making. Cognition 2016, 150, 133–149.

- Busemeyer, J.R.; Kvam, P.D.; Pleskac, T.J. Comparison of Markov versus quantum dynamical models of human decision making. Wiley Interdiscip. Rev. Cogn. Sci. 2020, 11, e1526.

- Bear, A.; Rand, D.G. Intuition, deliberation, and the evolution of cooperation. Proc. Natl. Acad. Sci. USA 2016, 113, 936–941.

- Jagau, S.; van Veelen, M. A general evolutionary framework for the role of intuition and deliberation in cooperation. Nat. Hum. Behav. 2017, 1, 152.

- Chen, F.; Krajbich, I. Biased sequential sampling underlies the effects of time pressure and delay in social decision making. Nat. Commun. 2018, 9, 3557.

- Gallotti, R.; Grujić, J. A quantitative description of the transition between intuitive altruism and rational deliberation in iterated Prisoner’s Dilemma experiments. Sci. Rep. 2019, 9, 17046.

- Ratcliff, R.; Smith, P.L.; Brown, S.D.; McKoon, G. Diffusion decision model: Current issues and history. Trends Cogn. Sci. 2016, 20, 260–281.

- Broekaert, J.B.; Busemeyer, J.R.; Pothos, E.M. The Disjunction Effect in two-stage simulated gambles. An experimental study and comparison of a heuristic logistic, Markov and quantum-like model. Cogn. Psychol. 2020, 117, 101262.

- Busemeyer, J.R.; Bruza, P.D. Quantum Models of Cognition and Decision; Cambridge University Press: Cambridge, UK, 2012.

- Evans, J.S.B.T. Dual-processing accounts of reasoning, judgment, and social cognition. Annu. Rev. Psychol. 2008, 59, 255–278.

- Phillips, W.J.; Fletcher, J.M.; Marks, A.D.G.; Hine, D.W. Thinking styles and decision making: A meta-analysis. Psychol. Bull. 2015, 142, 260.

- Li, H.; Chen, S.; Ni, S. Escape decision-making based on intuition and deliberation under simple and complex judgment and decision conditions. Acta Psychol. Sin. 2013, 45, 94–103.

- Maldonato, M.; Dell’Orco, S. Decision making styles and adaptive algorithms for human action. Psychology 2011, 2, 811.

- Rusou, Z.; Zakay, D.; Usher, M. Intuitive number evaluation is not affected by information processing load. In Advances in Human Factors, Business Management, Training and Education; Springer: Cham, Switzerland, 2017; pp. 135–148.

- Trippas, D.; Thompson, V.A.; Handley, S.J. When fast logic meets slow belief: Evidence for a parallel-processing model of belief bias. Mem. Cogn. 2017, 45, 539–552.

- Rand, D.G.; Greene, J.D.; Nowak, M.A. Spontaneous giving and calculated greed. Nature 2012, 489, 427–430.

- Sloman, S.A. The empirical case for two systems of reasoning. Psychol. Bull. 1996, 119, 3.

- Sloman, S. Two systems of reasoning. In Heuristics and Biases: The Psychology of Intuitive Judgment; Cambridge University Press: Cambridge, UK, 2002; pp. 379–396.

- Evans, J.S.B.; Stanovich, K.E. Dual-process theories of higher cognition: Advancing the debate. Perspect. Psychol. Sci. 2013, 8, 223–241.

- van den Berg, P.; Wenseleers, T. Uncertainty about social interactions leads to the evolution of social heuristics. Nat. Commun. 2018, 9, 2151.

- van den Berg, P.; Dewitte, S.; Wenseleers, T. Uncertainty causes humans to use social heuristics and to cooperate more: An experiment among Belgian university students. Evol. Hum. Behav. 2021, 42, 223–229.

- Farrell, S.; Lewandowsky, S. Computational Modeling of Cognition and Behavior; Cambridge University Press: Cambridge, UK, 2018.

- Schwarz, G. Estimating the dimension of a model. Ann. Stat. 1978, 6, 461–464.

- Pitt, M.A.; Kim, W.; Navarro, D.J.; Myung, J.I. Global model analysis by parameter space partitioning. Psychol. Rev. 2006, 113, 57.

- Dai, J.; Pleskac, T.J.; Pachur, T. Dynamic cognitive models of intertemporal choice. Cogn. Psychol. 2018, 104, 29–56.

- Pitt, M.A.; Myung, J.I.; Montenegro, M.; Pooley, J. Measuring model flexibility with parameter space partitioning: An introduction and application example. Cogn. Sci. 2008, 32, 1285–1303.

- Steingroever, H.; Wetzels, R.; Wagenmakers, E.J. A comparison of reinforcement learning models for the Iowa Gambling Task using parameter space partitioning. J. Probl. Solving 2013, 5, 2.

- Hristova, E.; Grinberg, M. Testing two explanations for the disjunction effect in Prisoner’s Dilemma games: Complexity and quasi-magical thinking. In Proceedings of the 32nd Annual Meeting of the Cognitive Science Society, Portland, OR, USA, 11–14 August 2010.

- Li, S.; Taplin, J.E. Examining whether there is a disjunction effect in Prisoner’s Dilemma games. Chin. J. Psychol. 2002, 44, 25–46.

- Pothos, E.M.; Perry, G.; Corr, P.J.; Matthew, M.R.; Busemeyer, J.R. Understanding cooperation in the Prisoner’s Dilemma game. Pers. Individ. Differ. 2011, 51, 210–215.

- Hristova, E.; Grinberg, M. Disjunction effect in prisoner’s dilemma: Evidences from an eye-tracking study. In Proceedings of the 30th Annual Conference of the Cognitive Science Society, Washington, DC, USA, 23–26 July 2008.

- Pothos, E.M.; Busemeyer, J.R. A quantum probability explanation for violations of ‘rational’ decision theory. Proc. R. Soc. B Biol. Sci. 2009, 276, 2171–2178.

- Festinger, L. A Theory of Cognitive Dissonance; Stanford University Press: Stanford, CA, USA, 1957.

- Croson, R.T. The disjunction effect and reason-based choice in games. Organ. Behav. Hum. Decis. Process. 1999, 80, 118–133.

- Stewart, N.; Gächter, S.; Noguchi, T.; Mullett, T.L. Eye movements in strategic choice. J. Behav. Decis. Mak. 2016, 29, 137–156.

- Forstmann, B.U.; Ratcliff, R.; Wagenmakers, E.J. Sequential sampling models in cognitive neuroscience: Advantages, applications, and extensions. Annu. Rev. Psychol. 2016, 67, 641–666.

- Diederich, A.; Busemeyer, J.R. Simple matrix methods for analyzing diffusion models of choice probability, choice response time, and simple response time. J. Math. Psychol. 2003, 47, 304–322.

- Martínez-Martínez, I.; Sánchez-Burillo, E. Quantum stochastic walks on networks for decision-making. Sci. Rep. 2016, 6, 23812.

- Dobrow, R.P. Introduction to Stochastic Processes with R; John Wiley & Sons: New York, NY, USA, 2016.

- Busemeyer, J.R.; Matthew, M.R.; Wang, Z. A quantum information processing explanation of disjunction effects. In Proceedings of the 28th Annual Meeting of the Cognitive Science Society, Vancouver, BC, Canada, 26–29 July 2006.

- Fiedler, S.; Glöckner, A.; Nicklisch, A.; Dickert, S. Social value orientation and information search in social dilemmas: An eye-tracking analysis. Organ. Behav. Hum. Decis. Process. 2013, 120, 272–284.

- Lohse, J.; Goeschl, T.; Diederich, J.H. Giving is a question of time: Response times and contributions to an environmental public good. Environ. Resour. Econ. 2017, 67, 455–477.

- Lotito, G.; Migheli, M.; Ortona, G. Is cooperation instinctive? Evidence from the response times in a public goods game. J. Bioecon. 2013, 15, 123–133.

- Piovesan, M.; Wengström, E. Fast or fair? A study of response times. Econ. Lett. 2009, 105, 193–196.

- He, Z.; Jiang, W. An evidential dynamical model to predict the interference effect of categorization on decision making results. Knowl.-Based Syst. 2018, 150, 139–149.

- He, Z.; Jiang, W. An evidential Markov decision making model. Inf. Sci. 2018, 467, 357–372.

- Ikeda, K. Foundation of quantum optimal transport and applications. Quantum Inf. Process. 2020, 19, 25.

- Ikeda, K.; Aoki, S. Infinitely repeated quantum games and strategic efficiency. Quantum Inf. Process. 2021, 20, 387.

- Ikeda, K.; Aoki, S. Theory of quantum games and quantum economic behavior. Quantum Inf. Process. 2022, 21, 27.

- Ikeda, K. Quantum contracts between Schrödinger and a cat. Quantum Inf. Process. 2021, 20, 313.

- Henderson, A.; Pine, S.; Fox, A. Behavioral inhibition and developmental risk: A dual-processing perspective. Neuropsychopharmacology 2015, 40, 207–224.

| | The Opponent Defects | The Opponent Cooperates |
|---|---|---|
| You defect | You and your opponent each get 30 (x1) | You get 85 (x3) and your opponent gets 25 (x2) |
| You cooperate | You get 25 (x2) and your opponent gets 85 (x3) | You and your opponent each get 75 (x4) |
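
As a quick arithmetic check, the payoffs in the table satisfy the usual Prisoner's Dilemma ordering: defecting against a cooperator (x3) pays more than mutual cooperation (x4), which pays more than mutual defection (x1), which pays more than cooperating against a defector (x2). The sketch below only restates the table; the function names are illustrative and do not come from the paper.

```python
# Payoffs to "you", copied from the payoff table above.
PAYOFFS = {"x1": 30, "x2": 25, "x3": 85, "x4": 75}

def is_prisoners_dilemma(x1, x2, x3, x4):
    """True when temptation > reward > punishment > sucker's payoff."""
    return x3 > x4 > x1 > x2

def defection_dominates(x1, x2, x3, x4):
    """True when defecting pays more whatever the opponent does."""
    return x1 > x2 and x3 > x4

print(is_prisoners_dilemma(**PAYOFFS))   # True
print(defection_dominates(**PAYOFFS))    # True
```

Because defection dominates in both known conditions, a decision maker who follows the sure-thing principle should also defect when the opponent's action is unknown.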

| Literature | Source | KD | KC | Ukn |
|---|---|---|---|---|
| [2] | Obs a | 0.97 | 0.84 | 0.63 |
| | Q b | 0.83 | 0.77 | 0.69 |
| | M c | 0.95 | 0.85 | 0.63 |
| [45] | Obs | 0.67 | 0.32 | 0.30 |
| | Q | 0.67 | 0.37 | 0.35 |
| | M | 0.72 | 0.34 | 0.32 |
| [40] | Obs | 0.82 | 0.77 | 0.72 |
| | Q | 0.82 | 0.77 | 0.73 |
| | M | 0.83 | 0.74 | 0.73 |
| [52] | Obs | 0.91 | 0.84 | 0.66 |
| | Q | 0.83 | 0.79 | 0.70 |
| | M | 0.94 | 0.82 | 0.66 |
| [42] | Obs | 0.97 | 0.93 | 0.88 |
| | Q | 0.95 | 0.91 | 0.93 |
| | M | 0.98 | 0.92 | 0.88 |
| [39] | Obs | 0.91 | 0.86 | 0.79 |
| | Q | 0.85 | 0.88 | 0.83 |
| | M | 0.94 | 0.84 | 0.79 |
| [41] | Obs | 0.94 | 0.89 | 0.88 |
| | Q | 0.93 | 0.87 | 0.90 |
| | M | 0.95 | 0.87 | 0.86 |
| Average | Obs | 0.88 | 0.78 | 0.69 |
| | Q | 0.83 | 0.77 | 0.73 |
| | M | 0.90 | 0.77 | 0.69 |
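
The disjunction effect can be checked directly on the observed rows. This is a minimal sketch that assumes the three value columns are the KD, KC and Ukn conditions in that order (following the Nomenclature) and that each value is a choice proportion. Under the law of total probability the proportion in the unknown condition is a weighted average of the two known-condition proportions, so it must lie between them; the helper name below is hypothetical.

```python
# Observed ("Obs") rows copied from the table above: (KD, KC, Ukn).
# The column order is an assumption stated in the lead-in.
OBSERVED = {
    "[2]":  (0.97, 0.84, 0.63),
    "[45]": (0.67, 0.32, 0.30),
    "[40]": (0.82, 0.77, 0.72),
    "[52]": (0.91, 0.84, 0.66),
    "[42]": (0.97, 0.93, 0.88),
    "[39]": (0.91, 0.86, 0.79),
    "[41]": (0.94, 0.89, 0.88),
}

def violates_total_probability(kd, kc, ukn):
    """True when the unknown-condition value falls outside [min(kd, kc), max(kd, kc)]."""
    return not (min(kd, kc) <= ukn <= max(kd, kc))

violations = {study: violates_total_probability(*row) for study, row in OBSERVED.items()}
print(all(violations.values()))   # True: every observed row falls outside the interval
```

In every observed row the unknown-condition value is below both known-condition values, which is the pattern the models in this paper are meant to reproduce.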

| Literature | Model | k | | RMSD | BIC |
|---|---|---|---|---|---|
| [2] | Q | 3 | 444 | 0.0150 | 1219.46 |
| | M | 2 | 444 | 0.0002 | 1112.57 |
| [45] | Q | 3 | 80 | 0.0031 | 317.83 |
| | M | 2 | 80 | 0.0021 | 310.79 |
| [40] | Q | 3 | 210 | <0.0001 | 692.86 |
| | M | 2 | 210 | 0.0007 | 687.90 |
| [52] | Q | 3 | 528 | 0.0074 | 1547.99 |
| | M | 2 | 528 | 0.0006 | 1473.06 |
| [42] | Q | 3 | 180 | 0.0018 | 298.92 |
| | M | 2 | 180 | 0.0001 | 286.16 |
| [39] | Q | 3 | 1500 | 0.0031 | 3756.77 |
| | M | 2 | 1500 | 0.0007 | 3705.17 |
| [41] | Q | 3 | 150 | 0.0008 | 308.37 |
| | M | 2 | 150 | 0.0001 | 295.52 |
| Average | Q | 3 | 441.72 | 0.0045 | 1163.17 |
| | M | 2 | 441.72 | 0.0005 | 1124.50 |
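
For readers who want to work with these two metrics, the sketch below gives their textbook forms and compares the BIC values listed in the table. It is an illustration under assumptions: `rmsd` uses the plain root of the mean squared difference and `bic` uses Schwarz's form, k ln(n) minus twice the log-likelihood; whether the paper normalizes RMSD in exactly this way is not visible in this outline. The BIC pairs are copied from the table as (Q, M).

```python
import math

def rmsd(observed, predicted):
    """Root mean squared deviation between two equal-length sequences."""
    if len(observed) != len(predicted):
        raise ValueError("sequences must have the same length")
    squared = [(o - p) ** 2 for o, p in zip(observed, predicted)]
    return math.sqrt(sum(squared) / len(squared))

def bic(log_likelihood, k, n):
    """Schwarz's criterion: k free parameters, n observations; lower is better."""
    return k * math.log(n) - 2.0 * log_likelihood

# BIC values copied from the table above: (quantum BAE, Markov BA).
BIC_TABLE = {
    "[2]":  (1219.46, 1112.57),
    "[45]": (317.83, 310.79),
    "[40]": (692.86, 687.90),
    "[52]": (1547.99, 1473.06),
    "[42]": (298.92, 286.16),
    "[39]": (3756.77, 3705.17),
    "[41]": (308.37, 295.52),
}

# Positive difference means the Markov BA model has the lower (better) BIC.
delta_bic = {study: q - m for study, (q, m) in BIC_TABLE.items()}
markov_preferred = all(d > 0 for d in delta_bic.values())
print(markov_preferred)   # True
```

On the tabulated values the Markov BA model has the lower BIC for each of the seven data sets.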

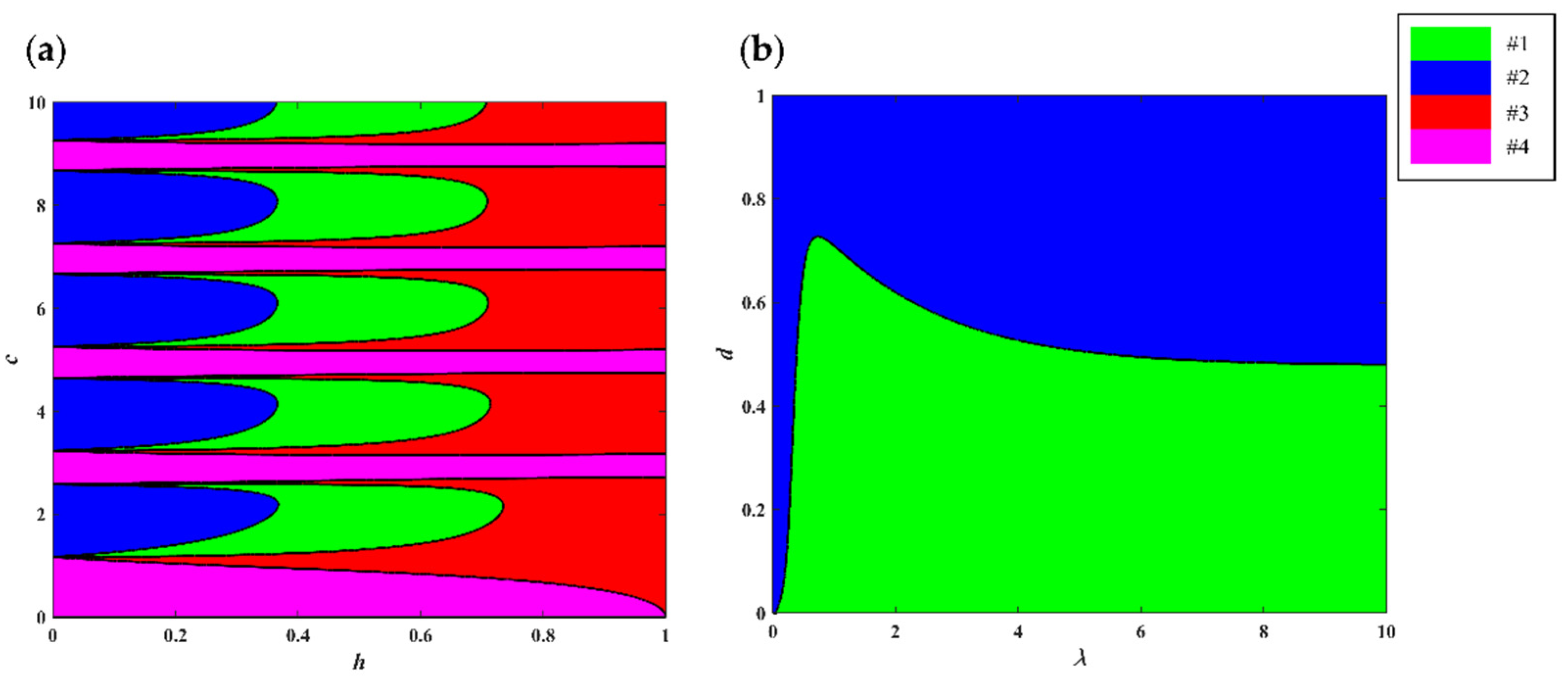

| Choice Pattern | Proportion of All Choice Patterns (Q) | Proportion of All Choice Patterns (M) |
|---|---|---|
| #1: | 0.33 | 0.53 |
| #2: | 0.15 | 0.47 |
| #3: | 0.24 | 0.00 |
| #4: | 0.28 | 0.00 |
| #5: | 0.00 | 0.00 |
| #6: | 0.00 | 0.00 |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Xin, X.; Sun, M.; Liu, B.; Li, Y.; Gao, X. A More Realistic Markov Process Model for Explaining the Disjunction Effect in One-Shot Prisoner’s Dilemma Game. Mathematics 2022, 10, 834. https://doi.org/10.3390/math10050834