1. Introduction and Motivation

Let $X$ be a random variable with cumulative distribution function $F_X(x)$. The value-at-risk (VaR) of $X$ at a probability level $q \in (0,1)$ is defined as

$$\mathrm{VaR}_q(X) = \inf\{x \in \mathbb{R} : F_X(x) \ge q\}.$$

VaR is one of the best-known and most frequently used measures of financial risk. Another important risk measure is the Tail Conditional Expectation (TCE), which is defined as

$$\mathrm{TCE}_q(X) = E\left[X \mid X > \mathrm{VaR}_q(X)\right].$$

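The TCE has been widely studied in the literature. In this paper, we introduce the $n$th Tail Conditional Moment (TCM) of a random variable $X$, defined as

$$\mathrm{TCM}_q(X^n) = E\left[\left(X - \mathrm{TCE}_q(X)\right)^n \mid X > \mathrm{VaR}_q(X)\right], \quad n = 1, 2, \ldots \tag{1}$$

We note that the proposed TCM takes the form $E[(X - \mathrm{TCE}_q(X))^n \mid X > \mathrm{VaR}_q(X)]$ instead of $E[X^n \mid X > \mathrm{VaR}_q(X)]$, because we think that these central moments provide a better interpretation of tail trajectories: we care more about the deviation $X - \mathrm{TCE}_q(X)$ than about $X$ itself. It is interesting to note that the TCM appears in the asymptotic expansion of the conditional characteristic function, whenever the moments involved are finite:

$$E\left[e^{it(X - \mathrm{TCE}_q(X))} \mid X > \mathrm{VaR}_q(X)\right] = 1 + \sum_{k=2}^{n} \frac{(it)^k}{k!}\,\mathrm{TCM}_q(X^k) + o(t^n), \quad t \to 0,$$

where the first-order term vanishes because $\mathrm{TCM}_q(X) = 0$. The tail variance

$$\mathrm{TV}_q(X) = \mathrm{TCM}_q(X^2) = E\left[\left(X - \mathrm{TCE}_q(X)\right)^2 \mid X > \mathrm{VaR}_q(X)\right]$$

is a special case of (1); it is a risk measure that examines the dispersion of the tail of a distribution for some quantile $q$. The tail variance risk measure (TV) was proposed by Furman and Landsman [1] and used as a measure for optimal portfolio selection in Landsman [2].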
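To make definition (1) concrete, the following sketch computes empirical versions of VaR, TCE, the $n$th TCM and TV from a sample. It is a minimal illustration, assuming the plug-in estimator that replaces $F_X$ by the empirical distribution function; the function names are hypothetical and not part of the paper.

```python
from typing import List, Sequence


def empirical_var(sample: Sequence[float], q: float) -> float:
    """VaR_q = inf{x : F_n(x) >= q} for the empirical CDF F_n of the sample."""
    xs = sorted(sample)
    n = len(xs)
    k = next(k for k in range(1, n + 1) if k / n >= q)  # smallest k with F_n(x_(k)) >= q
    return xs[k - 1]


def tail(sample: Sequence[float], q: float) -> List[float]:
    """Observations in the event {X > VaR_q(X)}."""
    v = empirical_var(sample, q)
    t = [x for x in sample if x > v]
    if not t:
        raise ValueError("no observations above VaR_q; choose a smaller q")
    return t


def tce(sample: Sequence[float], q: float) -> float:
    t = tail(sample, q)
    return sum(t) / len(t)


def tcm(sample: Sequence[float], q: float, n: int) -> float:
    """Empirical n-th tail conditional moment E[(X - TCE_q)^n | X > VaR_q], as in (1)."""
    t = tail(sample, q)
    m = sum(t) / len(t)
    return sum((x - m) ** n for x in t) / len(t)


def tv(sample: Sequence[float], q: float) -> float:
    return tcm(sample, q, 2)  # TV is the case n = 2


data = [float(i) for i in range(1, 11)]  # toy sample 1, 2, ..., 10
print(empirical_var(data, 0.8), tce(data, 0.8), tv(data, 0.8))  # 8.0 9.5 0.25
```

With $q = 0.8$ and the toy sample $1, \ldots, 10$, only 9 and 10 lie above the VaR of 8, so the TCE is 9.5, the TV is 0.25, and $\mathrm{TCM}_q(X) = 0$ by construction.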

Risk measures such as VaR, TCE and TV do not provide sufficient information about the skewness and kurtosis of the tail of a distribution. Therefore, we use the tail skewness and tail kurtosis at a given $q$-quantile, which were proposed and studied by Landsman et al. (2016b). The Tail Conditional Skewness (TCS) and the Tail Conditional Kurtosis (TCK) are defined, respectively, as

$$\mathrm{TCS}_q(X) = \frac{\mathrm{TCM}_q(X^3)}{\left(\mathrm{TV}_q(X)\right)^{3/2}}, \qquad \mathrm{TCK}_q(X) = \frac{\mathrm{TCM}_q(X^4)}{\left(\mathrm{TV}_q(X)\right)^{2}} - 3.$$

The TCS shows whether the tail of the distribution is left-skewed or right-skewed. If $\mathrm{TCS}_q(X) < 0$, the tail distribution is left-skewed, with a longer tail to the left of $\mathrm{TCE}_q(X)$; if $\mathrm{TCS}_q(X) > 0$, it is right-skewed, with a longer tail to the right of $\mathrm{TCE}_q(X)$. By comparing the TCK with that of the normal distribution, we can learn about the shape of the tail of the distribution.
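The sketch below computes empirical TCS and TCK from the tail conditional moments. It assumes the definitions $\mathrm{TCS}_q = \mathrm{TCM}_q(X^3)/\mathrm{TV}_q^{3/2}$ and $\mathrm{TCK}_q = \mathrm{TCM}_q(X^4)/\mathrm{TV}_q^{2} - 3$ (the excess form, measured relative to the normal kurtosis 3) and the empirical VaR $\inf\{x : F_n(x) \ge q\}$; the helper names and toy data are hypothetical.

```python
from typing import List, Sequence


def tail_sample(sample: Sequence[float], q: float) -> List[float]:
    """Observations strictly above the empirical VaR_q = inf{x : F_n(x) >= q}."""
    xs = sorted(sample)
    n = len(xs)
    k = next(k for k in range(1, n + 1) if k / n >= q)
    t = [x for x in xs if x > xs[k - 1]]
    if not t:
        raise ValueError("no observations above VaR_q")
    return t


def central_moment(t: Sequence[float], n: int) -> float:
    """n-th moment of the tail observations about their mean (the empirical TCE_q)."""
    m = sum(t) / len(t)
    return sum((x - m) ** n for x in t) / len(t)


def tcs(sample: Sequence[float], q: float) -> float:
    t = tail_sample(sample, q)
    return central_moment(t, 3) / central_moment(t, 2) ** 1.5


def tck(sample: Sequence[float], q: float) -> float:
    t = tail_sample(sample, q)
    return central_moment(t, 4) / central_moment(t, 2) ** 2 - 3.0  # excess form (assumed)


data = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0, 20.0]  # one large loss
print(tcs(data, 0.7), tck(data, 0.7))
```

Here the tail at $q = 0.7$ is $\{8, 9, 20\}$, whose single large value makes the TCS positive, i.e. right-skewed in the sense described above.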

It is well known that the TCE describes expected extreme losses, and there have recently been many studies on it. For example, Landsman and Valdez [3] introduced tail conditional expectations for elliptical distributions. Ignatieva and Landsman [4] discussed conditional tail risk measures for the skewed generalised hyperbolic family. Deng and Yao [5] extended the Stein-type inequality to the multivariate generalized hyperbolic distribution. Li et al. [6] derived the conditional tail expectation for the log-multivariate generalized hyperbolic distribution. In a more recent paper, Ignatieva and Landsman [7] introduced the location-scale mixture of elliptical distributions (called the “generalised hyper-elliptical distributions”) and considered tail conditional risk measures for these distributions; see also Zuo and Yin [8]. Kim [9] presented the conditional tail moments for an exponential family, and Landsman et al. [10] and Eini et al. [11] presented the conditional tail moments for elliptical distributions and generalized skew-elliptical distributions, respectively. In this paper, we mainly consider tail conditional moments for the location-scale mixture of elliptical distributions, which extends the results of Zuo and Li. Since a location-scale mixture of elliptical distributions can be built from any elliptical distribution combined with a Generalised Inverse Gaussian (GIG) mixing distribution, the family is very general, and we found it suitable for heavy-tailed distributions. The location-scale mixture of elliptical distributions is therefore flexible: different elliptical distributions and parameters can be chosen to fit the models.

The paper is organized as follows. In Section 2 we introduce the location-scale mixture of elliptical distributions. In Section 3 we obtain the expression of the $n$th TCM for the univariate case of the mixture of elliptical distributions. In Section 4 we give the expressions of TV, TCS and TCK for some important cases of mixtures of elliptical distributions. Section 5 offers a numerical analysis of the GH distribution and the mixture of Student’s $t$ distributions. Section 6 presents an illustrative example and discusses the TCE, TV, TCS and TCK of three stocks. Section 7 gives concluding remarks.

2. Location-Scale Mixture of Elliptical Distributions

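In this section, we introduce the mixture of elliptical distributions. First, let us introduce the family of elliptical distributions. The random vector $\mathbf{X} = (X_1, \ldots, X_n)^T$ is said to have an elliptical distribution with parameters $\boldsymbol{\mu}$ ($n \times 1$) and $\boldsymbol{\Sigma}$ ($n \times n$) if its characteristic function can be expressed as

$$E\left[\exp(i\mathbf{t}^T\mathbf{X})\right] = \exp(i\mathbf{t}^T\boldsymbol{\mu})\,\phi\left(\tfrac{1}{2}\mathbf{t}^T\boldsymbol{\Sigma}\mathbf{t}\right)$$

for some function $\phi$, called the characteristic generator. Then we denote $\mathbf{X} \sim E_n(\boldsymbol{\mu}, \boldsymbol{\Sigma}, \phi)$.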

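An elliptically distributed random vector $\mathbf{X}$ does not necessarily have a multivariate density function $f_{\mathbf{X}}$. If $\mathbf{X}$ has a density, it is of the form

$$f_{\mathbf{X}}(\mathbf{x}) = \frac{c_n}{\sqrt{|\boldsymbol{\Sigma}|}}\, g_n\left(\tfrac{1}{2}(\mathbf{x}-\boldsymbol{\mu})^T\boldsymbol{\Sigma}^{-1}(\mathbf{x}-\boldsymbol{\mu})\right),$$

where $\boldsymbol{\mu}$ is an $n \times 1$ location vector, $\boldsymbol{\Sigma}$ is an $n \times n$ scale matrix, and $g_n(u)$, $u \ge 0$, is the density generator of $\mathbf{X}$; in this case we also write $\mathbf{X} \sim E_n(\boldsymbol{\mu}, \boldsymbol{\Sigma}, g_n)$. The density generator satisfies the condition

$$\int_0^\infty u^{n/2-1} g_n(u)\, du < \infty,$$

and the normalizing constant $c_n$ is given by

$$c_n = \frac{\Gamma(n/2)}{(2\pi)^{n/2}}\left[\int_0^\infty u^{n/2-1} g_n(u)\, du\right]^{-1}.$$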

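If the mean vector and covariance matrix of $\mathbf{X}$ exist, they are $E(\mathbf{X}) = \boldsymbol{\mu}$ and $\mathrm{Cov}(\mathbf{X}) = -\phi'(0)\boldsymbol{\Sigma}$. A necessary condition for this covariance matrix to exist is $|\phi'(0)| < \infty$ (see Fang et al. [12]). Suppose $\mathbf{B}$ is a $k \times n$ matrix and $\mathbf{b}$ is a $k \times 1$ vector. Then

$$\mathbf{B}\mathbf{X} + \mathbf{b} \sim E_k(\mathbf{B}\boldsymbol{\mu} + \mathbf{b}, \mathbf{B}\boldsymbol{\Sigma}\mathbf{B}^T, \phi),$$

that is, affine transformations of $\mathbf{X}$ are again elliptical with the same characteristic generator $\phi$.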
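As a numerical sanity check of the normalizing constant $c_n$, the sketch below evaluates $\int_0^\infty u^{n/2-1}g_n(u)\,du$ by quadrature (after the substitution $u = v^2$, which removes the singularity at $u = 0$) and compares $c_n$ with the closed forms for the normal generator $g_n(u) = e^{-u}$, where $c_n = (2\pi)^{-n/2}$, and the Laplace generator $g_n(u) = e^{-\sqrt{2u}}$, where $c_n = \Gamma(n/2)/(2\pi^{n/2}\Gamma(n))$. The Simpson rule, the truncation point and the function names are choices made for this illustration only.

```python
import math
from typing import Callable


def simpson(f: Callable[[float], float], a: float, b: float, n: int = 20_000) -> float:
    """Composite Simpson rule on [a, b] with an even number n of subintervals."""
    if n % 2:
        n += 1
    h = (b - a) / n
    s = f(a) + f(b)
    for k in range(1, n):
        s += (4.0 if k % 2 else 2.0) * f(a + k * h)
    return s * h / 3.0


def normalizing_constant(g: Callable[[float], float], n: int, v_max: float = 40.0) -> float:
    """c_n = Gamma(n/2) / (2 pi)^(n/2) * [int_0^inf u^(n/2 - 1) g(u) du]^(-1).

    The substitution u = v^2 turns the integral into int_0^inf 2 v^(n-1) g(v^2) dv,
    which is truncated at v_max (assumes g decays fast enough).
    """
    integral = simpson(lambda v: 2.0 * v ** (n - 1) * g(v * v), 0.0, v_max)
    return math.gamma(n / 2) / (2.0 * math.pi) ** (n / 2) / integral


def g_normal(u: float) -> float:
    return math.exp(-u)


def g_laplace(u: float) -> float:
    return math.exp(-math.sqrt(2.0 * u))


for n in (1, 2, 3):
    exact_normal = (2.0 * math.pi) ** (-n / 2)
    exact_laplace = math.gamma(n / 2) / (2.0 * math.pi ** (n / 2) * math.gamma(n))
    print(n, normalizing_constant(g_normal, n), exact_normal,
          normalizing_constant(g_laplace, n), exact_laplace)
```

For $n = 1$ the Laplace constant reduces to $1/2$, i.e. the familiar density $\tfrac12 e^{-|x|}$.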

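We denote the cumulative generator

$$\overline{G}_1(u) = c_1\int_u^\infty g_1(v)\, dv,$$

and a sequence of cumulative generators

$$\overline{G}_{k+1}(u) = \int_u^\infty \overline{G}_k(v)\, dv, \quad k = 1, 2, \ldots$$

Meanwhile, assume that the variance of $Z \sim E_1(0, 1, g_1)$ exists, and let

$$c_1^* = \left[\int_{-\infty}^{\infty}\overline{G}_1\left(\tfrac{1}{2}z^2\right) dz\right]^{-1}.$$

Consequently, $f_{Z^*}(z) = c_1^*\,\overline{G}_1(\tfrac{1}{2}z^2)$ is the density of a random variable $Z^*$ defined on $\mathbb{R}$. Similarly, with $c_2^* = \left[\int_{-\infty}^{\infty}\overline{G}_2(\tfrac{1}{2}z^2)\, dz\right]^{-1}$ (provided this integral is finite), $f_{Z^{**}}(z) = c_2^*\,\overline{G}_2(\tfrac{1}{2}z^2)$ is the density of a random variable $Z^{**}$ defined on $\mathbb{R}$.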
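The constant $c_1^*$ can be checked numerically. The sketch below assumes $\overline{G}_1(u) = c_1\int_u^\infty g_1(v)\,dv$ and $c_1^* = [\int_{-\infty}^{\infty}\overline{G}_1(z^2/2)\,dz]^{-1}$; exchanging the order of integration then gives $c_1^* = 1/\mathrm{Var}(Z)$, which is one way to see why the variance of $Z$ must exist. It uses the substitution $v = w^2/2$, so that $\overline{G}_1(z^2/2) = c_1\int_z^\infty w\,g_1(w^2/2)\,dw$ for $z \ge 0$, and a backward cumulative trapezoid rule; the grid sizes and names are illustrative choices.

```python
import math
from typing import Callable


def c1_star(g: Callable[[float], float], c1: float,
            z_max: float = 50.0, n: int = 50_000) -> float:
    """c_1^* = 1 / int_R Gbar_1(z^2/2) dz, computed on a uniform grid over [0, z_max]."""
    h = z_max / n
    z = [k * h for k in range(n + 1)]
    integrand = [zk * g(zk * zk / 2.0) for zk in z]  # w * g_1(w^2 / 2)
    # tail[k] approximates int_{z_k}^{z_max} w g_1(w^2/2) dw (backward cumulative trapezoid)
    tail = [0.0] * (n + 1)
    for k in range(n - 1, -1, -1):
        tail[k] = tail[k + 1] + 0.5 * h * (integrand[k] + integrand[k + 1])
    g_bar = [c1 * t for t in tail]  # Gbar_1(z_k^2 / 2)
    half = sum(0.5 * h * (g_bar[k] + g_bar[k + 1]) for k in range(n))
    return 1.0 / (2.0 * half)  # Gbar_1(z^2/2) is even in z


# Normal generator: c_1 = 1/sqrt(2 pi), Var(Z) = 1, so c_1^* should be close to 1.
print(c1_star(lambda u: math.exp(-u), 1.0 / math.sqrt(2.0 * math.pi)))
# Laplace generator exp(-sqrt(2u)): c_1 = 1/2, Var(Z) = 2, so c_1^* should be close to 0.5.
print(c1_star(lambda u: math.exp(-math.sqrt(2.0 * u)), 0.5))
```

In the normal case $\overline{G}_1(u) = c_1 e^{-u}$, so $Z^*$ is again standard normal.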

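Next, we introduce the Location-Scale Mixture of Elliptical (LSME) distributions. A random vector $\mathbf{Y}$ is said to have an $n$-dimensional LSME distribution with location parameter $\boldsymbol{\mu}$ and positive definite scale matrix $\boldsymbol{\Sigma}$ if

$$\mathbf{Y} = \boldsymbol{\mu} + \Theta\boldsymbol{\beta} + \Theta^{1/2}\boldsymbol{\Sigma}^{1/2}\mathbf{X}, \tag{11}$$

where $\mathbf{X} \sim E_n(\mathbf{0}, \mathbf{I}_n, g_n)$ and $\boldsymbol{\beta} \in \mathbb{R}^n$. Assuming that $\mathbf{X}$ is independent of the non-negative scalar random variable $\Theta$, we have

$$\mathbf{Y} \mid \Theta = \theta \sim E_n(\boldsymbol{\mu} + \theta\boldsymbol{\beta}, \theta\boldsymbol{\Sigma}, g_n).$$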

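Suppose that $\Theta$ has a Generalised Inverse Gaussian distribution, $\Theta \sim \mathrm{GIG}(\lambda, \chi, \psi)$, with pdf

$$f_\Theta(\theta) = \frac{\chi^{-\lambda}\left(\sqrt{\chi\psi}\right)^{\lambda}}{2K_\lambda\left(\sqrt{\chi\psi}\right)}\,\theta^{\lambda-1}\exp\left\{-\frac{1}{2}\left(\chi\theta^{-1} + \psi\theta\right)\right\}, \quad \theta > 0,$$

where $K_\lambda$ denotes a modified Bessel function of the third kind with index $\lambda$:

$$K_\lambda(x) = \frac{1}{2}\int_0^\infty t^{\lambda-1}\exp\left\{-\frac{x}{2}\left(t + t^{-1}\right)\right\} dt, \quad x > 0.$$

Here the parameters satisfy $\chi > 0$, $\psi \ge 0$ if $\lambda < 0$; $\chi > 0$, $\psi > 0$ if $\lambda = 0$; $\chi \ge 0$, $\psi > 0$ if $\lambda > 0$.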
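The GIG density and the Bessel function $K_\lambda$ can be evaluated with the standard library alone. The sketch below rewrites the integral representation of $K_\lambda$ via $t = e^s$ as $K_\lambda(x) = \int_0^\infty e^{-x\cosh s}\cosh(\lambda s)\,ds$, checks it against the closed form $K_{1/2}(x) = \sqrt{\pi/(2x)}\,e^{-x}$, and verifies numerically that the GIG pdf integrates to one. It writes the pdf in the equivalent form $(\psi/\chi)^{\lambda/2}\theta^{\lambda-1}e^{-(\chi/\theta+\psi\theta)/2}/(2K_\lambda(\sqrt{\chi\psi}))$ and assumes $\chi, \psi > 0$ and moderate $|\lambda|$; the truncation limits and names are illustrative.

```python
import math
from typing import Callable


def simpson(f: Callable[[float], float], a: float, b: float, n: int = 6000) -> float:
    """Composite Simpson rule on [a, b] with an even number n of subintervals."""
    if n % 2:
        n += 1
    h = (b - a) / n
    s = f(a) + f(b)
    for k in range(1, n):
        s += (4.0 if k % 2 else 2.0) * f(a + k * h)
    return s * h / 3.0


def bessel_k(lam: float, x: float, s_max: float = 30.0) -> float:
    """K_lam(x) = int_0^inf exp(-x cosh s) cosh(lam s) ds, for x > 0 and moderate |lam|."""
    return simpson(lambda s: math.exp(-x * math.cosh(s)) * math.cosh(lam * s), 0.0, s_max)


def gig_pdf_factory(lam: float, chi: float, psi: float) -> Callable[[float], float]:
    """Return the GIG(lam, chi, psi) density theta -> f(theta), assuming chi, psi > 0."""
    norm = (psi / chi) ** (lam / 2.0) / (2.0 * bessel_k(lam, math.sqrt(chi * psi)))

    def pdf(theta: float) -> float:
        if theta <= 0.0:
            return 0.0
        return norm * theta ** (lam - 1.0) * math.exp(-0.5 * (chi / theta + psi * theta))

    return pdf


print(bessel_k(0.5, 1.0), math.sqrt(math.pi / 2.0) * math.exp(-1.0))
pdf = gig_pdf_factory(-0.5, 1.0, 1.0)
# integrate over theta = e^s, s in [-30, 30]; the result should be close to 1
print(simpson(lambda s: pdf(math.exp(s)) * math.exp(s), -30.0, 30.0))
```

The log-scale substitution $\theta = e^s$ keeps the quadrature accurate near $\theta = 0$, where the factor $e^{-\chi/(2\theta)}$ varies rapidly.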

When , the random vector has a new distribution, which is also a special case of LSME distributions. Here, is the Beta function.

We list some examples of the LSME family, including the Location-Scale Mixture of Normal (LSMN), Location-Scale Mixture of Student’s t (LSMSt), Location-Scale Mixture of Logistic (LSMLo) and Location-Scale Mixture of Laplace (LSMLa) distributions.

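Example 1. (Mixture of normal distribution). An $n$-dimensional normal random vector $\mathbf{X}$ with location parameter $\boldsymbol{\mu}$ and scale matrix $\boldsymbol{\Sigma}$ has density function

$$f_{\mathbf{X}}(\mathbf{x}) = \frac{c_n}{\sqrt{|\boldsymbol{\Sigma}|}}\exp\left\{-\frac{1}{2}(\mathbf{x}-\boldsymbol{\mu})^T\boldsymbol{\Sigma}^{-1}(\mathbf{x}-\boldsymbol{\mu})\right\},$$

where $c_n = (2\pi)^{-n/2}$, and is denoted by $\mathbf{X} \sim N_n(\boldsymbol{\mu}, \boldsymbol{\Sigma})$. Therefore, the location-scale mixture of normal random vector is defined as

$$\mathbf{Y} = \boldsymbol{\mu} + \Theta\boldsymbol{\beta} + \Theta^{1/2}\boldsymbol{\Sigma}^{1/2}\mathbf{X}, \tag{14}$$

where $\mathbf{X} \sim N_n(\mathbf{0}, \mathbf{I}_n)$, and $\boldsymbol{\mu}$, $\boldsymbol{\Sigma}$, $\Theta$ and $\boldsymbol{\beta}$ are the same as those in (11).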
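Representation (14) also suggests how to simulate a univariate location-scale mixture of normals: draw $\Theta$, then draw $Y$ given $\Theta = \theta$ from $N(\mu + \theta\beta, \theta\sigma^2)$. The sketch below assumes the boundary case $\chi = 0$, $\lambda > 0$ of the GIG, which is a Gamma distribution with shape $\lambda$ and rate $\psi/2$ and can therefore be sampled with Python's `random.gammavariate` (this gives a variance-gamma law, a limiting case of GH, chosen only for illustration). It compares the sample mean with $E[Y] = \mu + \beta E[\Theta] = \mu + 2\beta\lambda/\psi$; the parameter values are hypothetical.

```python
import math
import random
from typing import List


def sample_lsmn_gamma(n: int, mu: float, beta: float, sigma: float,
                      lam: float, psi: float, seed: int = 0) -> List[float]:
    """Draw n values of Y = mu + Theta*beta + sqrt(Theta)*sigma*Z.

    Assumption: Theta ~ GIG(lam, chi=0, psi), i.e. Gamma(shape=lam, rate=psi/2),
    and Z ~ N(0, 1) independent of Theta.
    """
    rng = random.Random(seed)
    out = []
    for _ in range(n):
        theta = rng.gammavariate(lam, 2.0 / psi)  # second argument is the scale 1/rate
        out.append(mu + theta * beta + math.sqrt(theta) * sigma * rng.gauss(0.0, 1.0))
    return out


mu, beta, sigma, lam, psi = 0.0, 0.5, 1.0, 2.0, 2.0
ys = sample_lsmn_gamma(100_000, mu, beta, sigma, lam, psi)
print(sum(ys) / len(ys), mu + beta * 2.0 * lam / psi)  # sample mean vs E[Y] = 1.0
```

With these values, $\mathrm{Var}(Y) = \sigma^2 E[\Theta] + \beta^2\mathrm{Var}(\Theta) = 2 + 0.25 \cdot 2 = 2.5$, so the sample mean should be within a few hundredths of 1.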

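Example 2. (Mixture of logistic distribution). The density function of an $n$-dimensional logistic random vector $\mathbf{X}$ with location parameter $\boldsymbol{\mu}$ and scale matrix $\boldsymbol{\Sigma}$ can be expressed as

$$f_{\mathbf{X}}(\mathbf{x}) = \frac{c_n}{\sqrt{|\boldsymbol{\Sigma}|}}\,\frac{\exp\left\{-\frac{1}{2}(\mathbf{x}-\boldsymbol{\mu})^T\boldsymbol{\Sigma}^{-1}(\mathbf{x}-\boldsymbol{\mu})\right\}}{\left[1 + \exp\left\{-\frac{1}{2}(\mathbf{x}-\boldsymbol{\mu})^T\boldsymbol{\Sigma}^{-1}(\mathbf{x}-\boldsymbol{\mu})\right\}\right]^2},$$

where

$$c_n = \frac{1}{(2\pi)^{n/2}\,\Psi_2^*\left(-1, \frac{n}{2}, 1\right)},$$

and is denoted by $\mathbf{X} \sim Lo_n(\boldsymbol{\mu}, \boldsymbol{\Sigma})$. The location-scale mixture of logistic random vector satisfies

$$\mathbf{Y} = \boldsymbol{\mu} + \Theta\boldsymbol{\beta} + \Theta^{1/2}\boldsymbol{\Sigma}^{1/2}\mathbf{X}, \tag{15}$$

where $\mathbf{X} \sim Lo_n(\mathbf{0}, \mathbf{I}_n)$, and $\boldsymbol{\mu}$, $\boldsymbol{\Sigma}$, $\Theta$ and $\boldsymbol{\beta}$ are the same as those in (11).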
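The logistic normalizing constant involves the generalized Hurwitz–Lerch zeta function $\Psi^*_\mu(z,s,a)$ of Remark 1. For $z = -1$, $\mu = 2$ and $s = n/2 \le 1$ the defining series does not converge (its terms are $(-1)^k(k+1)^{1-s}$), so the sketch below evaluates the integral representation instead, after substituting $t = v^2$. It checks $\Psi_2^*(-1, 2, 1) = \sum_{k\ge 0}(-1)^k/(k+1) = \ln 2$ and that, for $n = 1$, the logistic density with $c_1 = 1/(\sqrt{2\pi}\,\Psi_2^*(-1, 1/2, 1))$ integrates to one. The truncation limits and names are illustrative assumptions.

```python
import math
from typing import Callable


def simpson(f: Callable[[float], float], a: float, b: float, n: int = 20_000) -> float:
    """Composite Simpson rule on [a, b] with an even number n of subintervals."""
    if n % 2:
        n += 1
    h = (b - a) / n
    s = f(a) + f(b)
    for k in range(1, n):
        s += (4.0 if k % 2 else 2.0) * f(a + k * h)
    return s * h / 3.0


def hurwitz_lerch_star(z: float, s: float, a: float, mu: float, v_max: float = 12.0) -> float:
    """Psi*_mu(z, s, a) via its integral representation (real z < 1, s >= 1/2, a > 0).

    With t = v^2: (1/Gamma(s)) * int_0^inf 2 v^(2s-1) e^(-a v^2) / (1 - z e^(-v^2))^mu dv.
    """
    def f(v: float) -> float:
        return 2.0 * v ** (2.0 * s - 1.0) * math.exp(-a * v * v) / (1.0 - z * math.exp(-v * v)) ** mu

    return simpson(f, 0.0, v_max) / math.gamma(s)


c1 = 1.0 / (math.sqrt(2.0 * math.pi) * hurwitz_lerch_star(-1.0, 0.5, 1.0, 2.0))


def logistic_pdf_1d(x: float) -> float:
    """Univariate logistic density c_1 e^(-x^2/2) / (1 + e^(-x^2/2))^2."""
    e = math.exp(-0.5 * x * x)
    return c1 * e / (1.0 + e) ** 2


total = 2.0 * simpson(logistic_pdf_1d, 0.0, 40.0)  # the density is even in x
print(hurwitz_lerch_star(-1.0, 2.0, 1.0, 2.0), math.log(2.0))
print(total)
```

Note that the argument order of `hurwitz_lerch_star` is $(z, s, a, \mu)$, with the index $\mu$ last.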

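Remark 1. Here, $\Psi_\mu^*(z, s, a)$ is the generalized Hurwitz–Lerch zeta function defined by (cf. Lin et al. (2006))

$$\Psi_\mu^*(z, s, a) = \frac{1}{\Gamma(\mu)}\sum_{n=0}^{\infty}\frac{\Gamma(\mu + n)}{n!}\,\frac{z^n}{(n + a)^s},$$

which has the integral representation

$$\Psi_\mu^*(z, s, a) = \frac{1}{\Gamma(s)}\int_0^\infty \frac{t^{s-1}e^{-at}}{(1 - ze^{-t})^{\mu}}\, dt,$$

where $\mathrm{Re}(a) > 0$; $\mathrm{Re}(s) > 0$ when $|z| \le 1$ $(z \ne 1)$; $\mathrm{Re}(s) > 1$ when $z = 1$.

Example 3. (Mixture of Laplace distribution). The density of a Laplace random vector $\mathbf{X}$ with location parameter $\boldsymbol{\mu}$ and scale matrix $\boldsymbol{\Sigma}$ is given by

$$f_{\mathbf{X}}(\mathbf{x}) = \frac{c_n}{\sqrt{|\boldsymbol{\Sigma}|}}\exp\left\{-\left[(\mathbf{x}-\boldsymbol{\mu})^T\boldsymbol{\Sigma}^{-1}(\mathbf{x}-\boldsymbol{\mu})\right]^{1/2}\right\},$$

where $c_n = \dfrac{\Gamma(n/2)}{2\pi^{n/2}\Gamma(n)}$, and is denoted by $\mathbf{X} \sim La_n(\boldsymbol{\mu}, \boldsymbol{\Sigma})$. Hence, the location-scale mixture of Laplace random vector is defined as

$$\mathbf{Y} = \boldsymbol{\mu} + \Theta\boldsymbol{\beta} + \Theta^{1/2}\boldsymbol{\Sigma}^{1/2}\mathbf{X},$$

where $\mathbf{X} \sim La_n(\mathbf{0}, \mathbf{I}_n)$, and $\boldsymbol{\mu}$, $\boldsymbol{\Sigma}$, $\Theta$ and $\boldsymbol{\beta}$ are the same as those in (11).

3. Tail Conditional Moments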

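In this section, we present the $n$th TCM for the univariate case of the mixture of elliptical distributions. We assume that the conditional and mixture distributions are continuous.

Consider $Y = \mu + \Theta\beta + \Theta^{1/2}\sigma Z$, where $Z \sim E_1(0, 1, g_1)$ is independent of $\Theta$; then $Y \mid \Theta = \theta \sim E_1(\mu + \theta\beta, \theta\sigma^2, g_1)$. Before giving $\mathrm{TCM}_q(Y^n)$, we calculate the following conditional moments.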

Lemma 1. Let $Y$ be a univariate location-scale mixture of elliptical random variables defined as (11). Let , which implies

Proof. The $n$th conditional moments are

□

Next, we calculate , which plays an important role in TCM.

Lemma 2. Let $Y$ be a univariate location-scale mixture of elliptical random variables defined as (11). Then

Proof. The $n$th conditional moments are

where

□

Remark 2. In particular, when , the above equation degenerates into where is the normalizing constant of , and

Theorem 1. Let $Y$ be a univariate location-scale mixture of elliptical random variables defined as (11). Then where

Proof. Using the Binomial Theorem, we can get

where

and

The measures

are calculated in the spirit of the proof of Lemma 1. Taking the transformation

, we note that the tail function is

, which is, in fact, the

percentile. Furthermore, the transformation simplifies the integral in

, which is as follows:

where

and here,

Taking into account (21), we get

This ends the proof of Theorem 1. □
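The binomial step at the start of the proof turns a central tail moment into a combination of raw tail moments; one way to write it is $\mathrm{TCM}_q(Y^n) = \sum_{k=0}^{n}\binom{n}{k}(-\mathrm{TCE}_q(Y))^{n-k}E[Y^k \mid Y > \mathrm{VaR}_q(Y)]$. The sketch below checks this identity on a small empirical tail sample. It illustrates only the binomial step, not the closed-form moments of Lemmas 1 and 2, and the names and data are hypothetical.

```python
from math import comb
from typing import Sequence


def tcm_from_raw(tail: Sequence[float], n: int) -> float:
    """TCM via the binomial expansion of (Y - TCE)^n over raw tail moments."""
    m = len(tail)
    raw = [sum(y ** k for y in tail) / m for k in range(n + 1)]  # E[Y^k | tail], k = 0..n
    tce = raw[1]
    return sum(comb(n, k) * (-tce) ** (n - k) * raw[k] for k in range(n + 1))


def tcm_direct(tail: Sequence[float], n: int) -> float:
    """TCM computed directly as the mean of (y - TCE)^n over the tail."""
    m = len(tail)
    tce = sum(tail) / m
    return sum((y - tce) ** n for y in tail) / m


tail_obs = [2.1, 2.4, 2.9, 3.7, 5.2]  # hypothetical observations above VaR_q
for n in range(1, 5):
    print(n, tcm_from_raw(tail_obs, n), tcm_direct(tail_obs, n))
```

The two columns agree up to rounding; for large $n$ the raw-moment form can lose precision through cancellation, which is one reason to keep closed-form expressions such as those of Theorem 1.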

Because the GIG mixing distribution is involved in the mixture of elliptical distributions, the calculation of the tail conditional moments proceeds differently from the elliptical case. We also pay special attention to the normalizing constants corresponding to the different generating functions, which are used somewhat confusingly in Landsman (2016).

Remark 3. We can express in another way.

Corollary 1. The tail variance of the elliptical distribution can be derived by considering the cases and . This risk measure takes the form where

Proof. For convenience, we write , so . □

Corollary 2. The TCS of $Y$ takes the form where

Proof. Through (21), we can get

and we have

where

□

Corollary 3. The TCK of $Y$ takes the form where

Proof. Letting $i = 4$ in (21), we get

where

□

Corollary 4. The $n$th tail conditional moments of $X$ take the form

Proof. Consider an $n \times 1$ random vector $\mathbf{Y}$ with a location-scale mixture of elliptical distributions. Then, using Landsman and Valdez [3], the distribution of the return $X$ is as follows:

Using (27) together with (19), (22), (23) and (25), we obtain $\mathrm{TCM}_q(X^n)$, $\mathrm{TV}_q(X)$, $\mathrm{TCS}_q(X)$ and $\mathrm{TCK}_q(X)$, respectively,

where

and

□
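For the portfolio setting of Corollary 4, the reduction to a univariate LSME can be sketched directly from representation (11). The derivation below assumes that the return is the linear combination $X = \boldsymbol{\omega}^T\mathbf{Y}$ for a fixed weight vector $\boldsymbol{\omega} \in \mathbb{R}^n$ (the symbols $\boldsymbol{\omega}$, $\mathbf{X}_0$ for the elliptical vector in (11), and $\mu_\omega, \beta_\omega, \sigma_\omega$ are introduced here only for illustration). It uses the affine-transformation property of elliptical distributions from Section 2 and the independence of $\Theta$ and $\mathbf{X}_0$:

$$\begin{aligned}
X = \boldsymbol{\omega}^T\mathbf{Y}
  &= \boldsymbol{\omega}^T\boldsymbol{\mu} + \Theta\,\boldsymbol{\omega}^T\boldsymbol{\beta}
     + \Theta^{1/2}\,\boldsymbol{\omega}^T\boldsymbol{\Sigma}^{1/2}\mathbf{X}_0, \qquad \mathbf{X}_0 \sim E_n(\mathbf{0}, \mathbf{I}_n, g_n),\\
\boldsymbol{\omega}^T\boldsymbol{\Sigma}^{1/2}\mathbf{X}_0 &\sim E_1\!\left(0,\ \boldsymbol{\omega}^T\boldsymbol{\Sigma}\boldsymbol{\omega},\ g_1\right)
  \overset{d}{=} \sigma_\omega Z, \qquad \sigma_\omega^2 = \boldsymbol{\omega}^T\boldsymbol{\Sigma}\boldsymbol{\omega},\ Z \sim E_1(0, 1, g_1),\\
\Rightarrow\quad X &\overset{d}{=} \mu_\omega + \Theta\,\beta_\omega + \Theta^{1/2}\sigma_\omega Z,
  \qquad \mu_\omega = \boldsymbol{\omega}^T\boldsymbol{\mu},\ \beta_\omega = \boldsymbol{\omega}^T\boldsymbol{\beta}.
\end{aligned}$$

Under these assumptions, the univariate results of this section apply to $X$ with location $\mu_\omega$, mixing coefficient $\beta_\omega$ and scale $\sigma_\omega^2$.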

Remark 4. We now compare the form of the TCM across different distributions. The TCM for elliptical distributions (see Landsman et al. [10]) and the TCM for generalized skew-elliptical distributions (see Eini et al. [11]) are presented as follows, respectively. The TCM of the mixture of elliptical distributions has a structure similar to these, but the three expressions differ: because the expectation and the TCE of the mixture of elliptical distributions are calculated differently, the final form is different.

4. Some Special Cases

In this section, we discuss some measures related to several mixtures of elliptical distributions.

Example 4. Let $Y$ be a univariate location-scale mixture of normal random variables, defined as (14). In this case, we notice that , and . We have . Thus, we obtain the $\mathrm{TCM}_q(Y^n)$ for a location-scale mixture of normal distributions: where and . Then $\mathrm{TV}_q(Y)$, $\mathrm{TCS}_q(Y)$ and $\mathrm{TCK}_q(Y)$ are obtained by substituting the above formulas into (22), (23) and (25).

Example 5. Let $Y$ be a univariate location-scale mixture of Student’s $t$ random variables defined as (11). We know that the variance of $Z$ exists for and . Thus, the cumulative generators of $Y$ are as follows: and the normalizing constants are Thus, we obtain the $\mathrm{TCM}_q(Y^n)$ for the location-scale mixture of Student’s $t$ distributions: where and The measures are calculated in the spirit of Example 4. $\mathrm{TV}_q(Y)$, $\mathrm{TCS}_q(Y)$ and $\mathrm{TCK}_q(Y)$ are obtained by substituting the above formulas into (22), (23) and (25).

Example 6. Let $Y$ be a univariate location-scale mixture of logistic random variables defined as (15). We find that The cumulative generators of $Y$ are as follows: and the normalizing constants are Thus, we obtain the $\mathrm{TCM}_q(Y^n)$ for the location-scale mixture of logistic distributions: where and . The measures are calculated in the spirit of Example 4. $\mathrm{TV}_q(Y)$, $\mathrm{TCS}_q(Y)$ and $\mathrm{TCK}_q(Y)$ are obtained by substituting the above formulas into (22), (23) and (25).

Example 7. Let $Y$ be a univariate location-scale mixture of Laplace random variables, defined as in Example 3. We find that , the cumulative generators of $Y$ are as follows: and the normalizing constants Thus, we obtain the $\mathrm{TCM}_q(Y^n)$ for a location-scale mixture of Laplace distributions: where and The measures are calculated in the spirit of Example 4. $\mathrm{TV}_q(Y)$, $\mathrm{TCS}_q(Y)$ and $\mathrm{TCK}_q(Y)$ are obtained by substituting the above formulas into (22), (23) and (25).

5. Numerical Analysis

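In this section, two numerical examples are presented. We first consider the tail risk measures for a mixture of normal distributions and a mixture of Student’s $t$ distributions.

Example 8. When the elliptical component is standard normal and $\Theta \sim \mathrm{GIG}(\lambda, \chi, \psi)$, $Y$ has a generalized hyperbolic (GH) distribution. Using Example 4, we obtain its TCM, and $\mathrm{TV}_q(Y)$, $\mathrm{TCS}_q(Y)$ and $\mathrm{TCK}_q(Y)$ are obtained by substituting the above formulas into (22), (23) and (25).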
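Closed-form values such as those of Example 8 can be cross-checked by simulation. The sketch below draws from a univariate normal mixture with $\Theta \sim \mathrm{GIG}(\lambda, 0, \psi)$, i.e. the Gamma boundary case of the GIG (a variance-gamma law, which is a limiting case of GH rather than the GH parameter setting used in Table 1), and estimates TCE, TV, TCS and TCK from empirical tail moments at the levels $q = 0.75, 0.8, 0.9, 0.95, 0.98$ used later in this section. TCK is taken in the excess form $\mathrm{TCM}_q(Y^4)/\mathrm{TV}_q(Y)^2 - 3$; the parameter values and names are hypothetical, and Monte Carlo estimates of high-order tail moments converge slowly for large $q$.

```python
import math
import random
from typing import Dict, List


def simulate(n: int, mu: float, beta: float, sigma: float,
             lam: float, psi: float, seed: int = 1) -> List[float]:
    """Y = mu + Theta*beta + sqrt(Theta)*sigma*Z, Theta ~ Gamma(lam, rate psi/2), Z ~ N(0,1)."""
    rng = random.Random(seed)
    ys = []
    for _ in range(n):
        theta = rng.gammavariate(lam, 2.0 / psi)  # scale = 1 / rate
        ys.append(mu + theta * beta + math.sqrt(theta) * sigma * rng.gauss(0.0, 1.0))
    return ys


def tail_measures(sorted_ys: List[float], q: float) -> Dict[str, float]:
    """Empirical VaR, TCE, TV, TCS, TCK from observations above the empirical VaR_q."""
    n = len(sorted_ys)
    k = math.ceil(q * n - 1e-9)  # smallest k with k/n >= q, guarding against rounding
    var_q = sorted_ys[k - 1]
    tail = [y for y in sorted_ys[k:] if y > var_q]
    tce = sum(tail) / len(tail)
    m2, m3, m4 = (sum((y - tce) ** p for y in tail) / len(tail) for p in (2, 3, 4))
    return {"VaR": var_q, "TCE": tce, "TV": m2,
            "TCS": m3 / m2 ** 1.5, "TCK": m4 / m2 ** 2 - 3.0}


ys = sorted(simulate(200_000, mu=0.0, beta=0.2, sigma=1.0, lam=1.5, psi=2.0))
for q in (0.75, 0.8, 0.9, 0.95, 0.98):
    r = tail_measures(ys, q)
    print(q, {key: round(val, 4) for key, val in r.items()})
```

By construction the empirical TCE increases with $q$, since raising $q$ only removes the smallest tail observations; the other measures need not be monotone in general.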

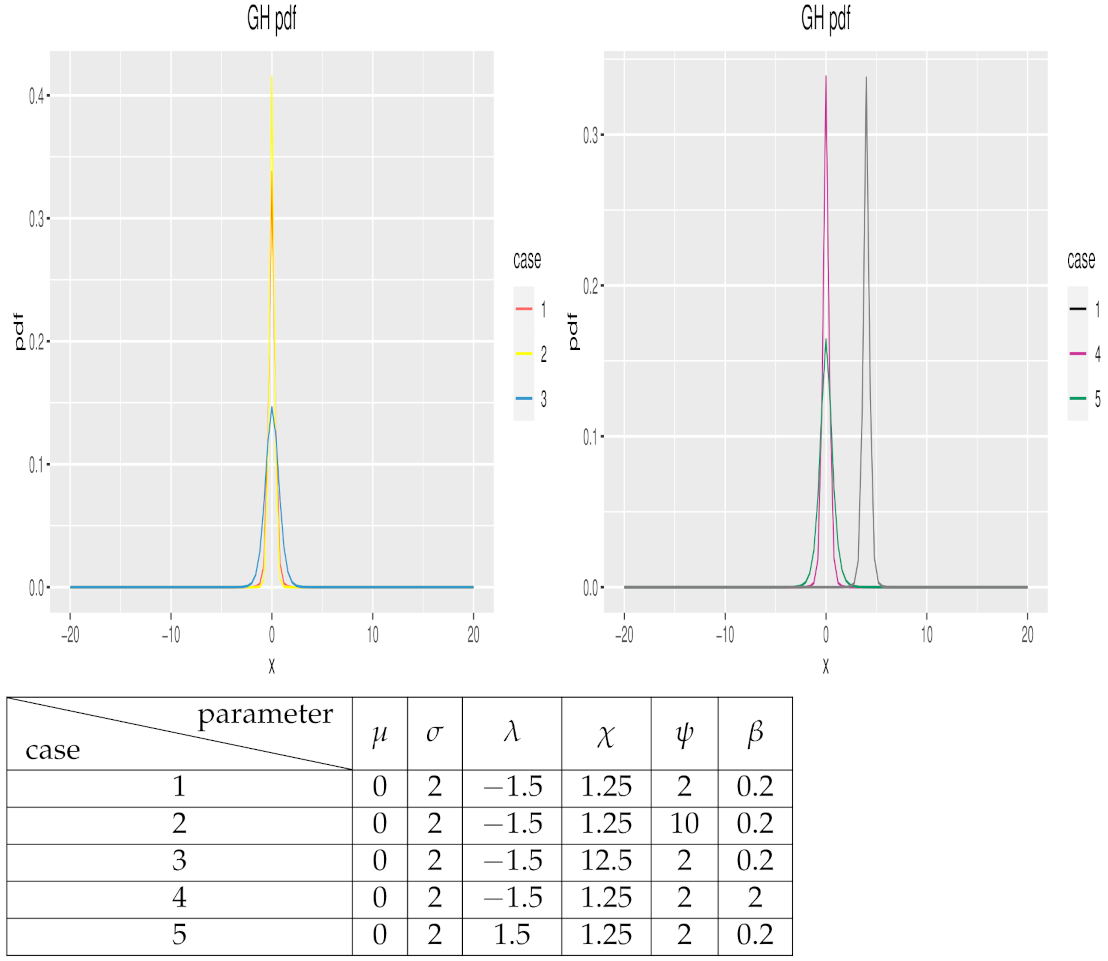

Figure 1 shows the GH distribution under different parameter values. By assigning different values to each parameter, we can see its influence and choose appropriate parameters in actual fitting to improve the fit.

Example 9. (Ignatieva and Landsman (2020)) Now, we consider the case where $X$ has a Student’s $t$ distribution and $\Theta \sim \mathrm{GIG}(\lambda, \chi, \psi)$, so that $Y$ follows the Student’s $t$–GIG mixture distribution. This case is the same as in Example 5, so $\mathrm{TV}_q(Y)$, $\mathrm{TCS}_q(Y)$ and $\mathrm{TCK}_q(Y)$ can be obtained from Example 5. Figure 2 shows the Student’s $t$–GIG distribution under different parameter values.

Next, we consider TCE, TV, TCS and TCK for the univariate GH distribution and the mixture of Student’s $t$ distributions with fixed parameters. For comparison, we also select the skew-normal distribution and the skew Student’s $t$-normal distribution, again with fixed parameters. We chose q = 0.75, 0.8, 0.9, 0.95, 0.98, and the results are given in Table 1.

The calculations show that the TCK of the Student’s $t$–GIG mixture distribution and of the skew Student’s $t$-normal distribution tend to infinity. Comparing the TCK of the GH and skew-normal distributions, we found that GH is heavy-tailed whereas the skew-normal distribution is light-tailed. Heavy-tailed distributions are common in financial data, such as returns on securities. Comparing the Student’s $t$–GIG distribution with the skew Student’s $t$-normal distribution, we found that the Student’s $t$–GIG distribution was right-skewed and the skew Student’s $t$-normal distribution was left-skewed. As expected, the values of the risk measures increase as the $q$-quantile increases. Moreover, the measures of the Student’s $t$–GIG distribution are larger than those of GH.

6. Illustrative Example

We discuss the TCE, TV, TCS and TCK of three stocks (Amazon, Google and Apple) over the period from January 2016 to January 2019, using the parameter estimates in Ignatieva and Landsman [4]. To fit GH distributions to the univariate data, we take the parameter estimates from the univariate fit of the GH family of distributions to the losses arising from Amazon, Google and Apple stocks. Fixed parameter values are used:

The results are shown in Table 2.

As we can see, the TCE, TV, TCS and TCK of Amazon, Google and Apple all increase with the $q$-quantile. Reporting the risk measures for different $q$-quantile values helps investors understand extreme losses.

7. Concluding Remarks

In this paper, we have considered the univariate location-scale mixture of elliptical distributions, which has received much attention in finance and insurance applications. This family includes not only the location-scale mixtures of normal (LSMN), Student’s t (LSMSt), logistic (LSMLo) and Laplace (LSMLa) distributions, but also the generalized hyperbolic distribution (GHD) and the slash distribution. We have given the general form of the TCM and the expressions of TV, TCS and TCK.