Rectifying Ill-Formed Interlingual Space: A Framework for Zero-Shot Translation on Modularized Multilingual NMT

Abstract

1. Introduction

- We explore the interlingual space of the modularized multilingual NMT (M2) model and show that it becomes ill-formed when the model is trained on English-centric data.

- We propose a framework to help the M2 model form a good interlingua for zero-shot translation. Under this framework, we devise an approach that combines multiway training with the denoising task and incorporates a novel neural interlingual module.

- We verify our method in two zero-shot experiments. The results show that it outperforms the 1-1 model on zero-shot translation by a large margin and, when combined with back-translation, surpasses the pivot-based method.
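
To make the denoising task in the second contribution concrete, the sketch below builds one denoising autoencoder training example: a sentence is corrupted and the model is asked to reconstruct the original with the encoder and decoder of the same language. The noise types (random token masking and dropping), the probabilities, the `<mask>` symbol, and the function names `add_noise` and `make_dae_example` are all assumptions made for illustration; the text above does not specify them.

```python
import random

MASK = "<mask>"  # hypothetical mask symbol, not taken from the paper


def add_noise(tokens, mask_prob=0.15, drop_prob=0.1, seed=None):
    """Corrupt a token list by randomly dropping or masking tokens.

    The noise scheme and default probabilities are illustrative assumptions.
    """
    rng = random.Random(seed)
    noisy = []
    for tok in tokens:
        r = rng.random()
        if r < drop_prob:
            continue  # drop this token entirely
        if r < drop_prob + mask_prob:
            noisy.append(MASK)  # hide the token behind the mask symbol
        else:
            noisy.append(tok)  # keep the token unchanged
    # Never return an empty source sequence.
    return noisy or [MASK]


def make_dae_example(sentence, lang, **noise_kwargs):
    """Build a same-language (lang -> lang) denoising example."""
    tokens = sentence.split()
    return {
        "src_lang": lang,  # selects the language-specific encoder
        "tgt_lang": lang,  # selects the decoder of the same language
        "source": add_noise(tokens, **noise_kwargs),
        "target": tokens,  # the clean sentence to reconstruct
    }


if __name__ == "__main__":
    example = make_dae_example("Die Regierungskonferenz ist wichtig", "de", seed=0)
    print(example["source"], "->", example["target"])
```

Setting both probabilities to zero reduces the example to plain reconstruction, in which the source and target are identical.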

2. Related Works

2.1. Multilingual Neural Machine Translation

2.2. Zero-Shot Neural Machine Translation

2.3. Leveraging Denoising Task in Multilingual NMT

2.4. Incorporating a Neural Interlingual Module into the M2 Model

3. Exploring the Interlingual Space of the M2 Model

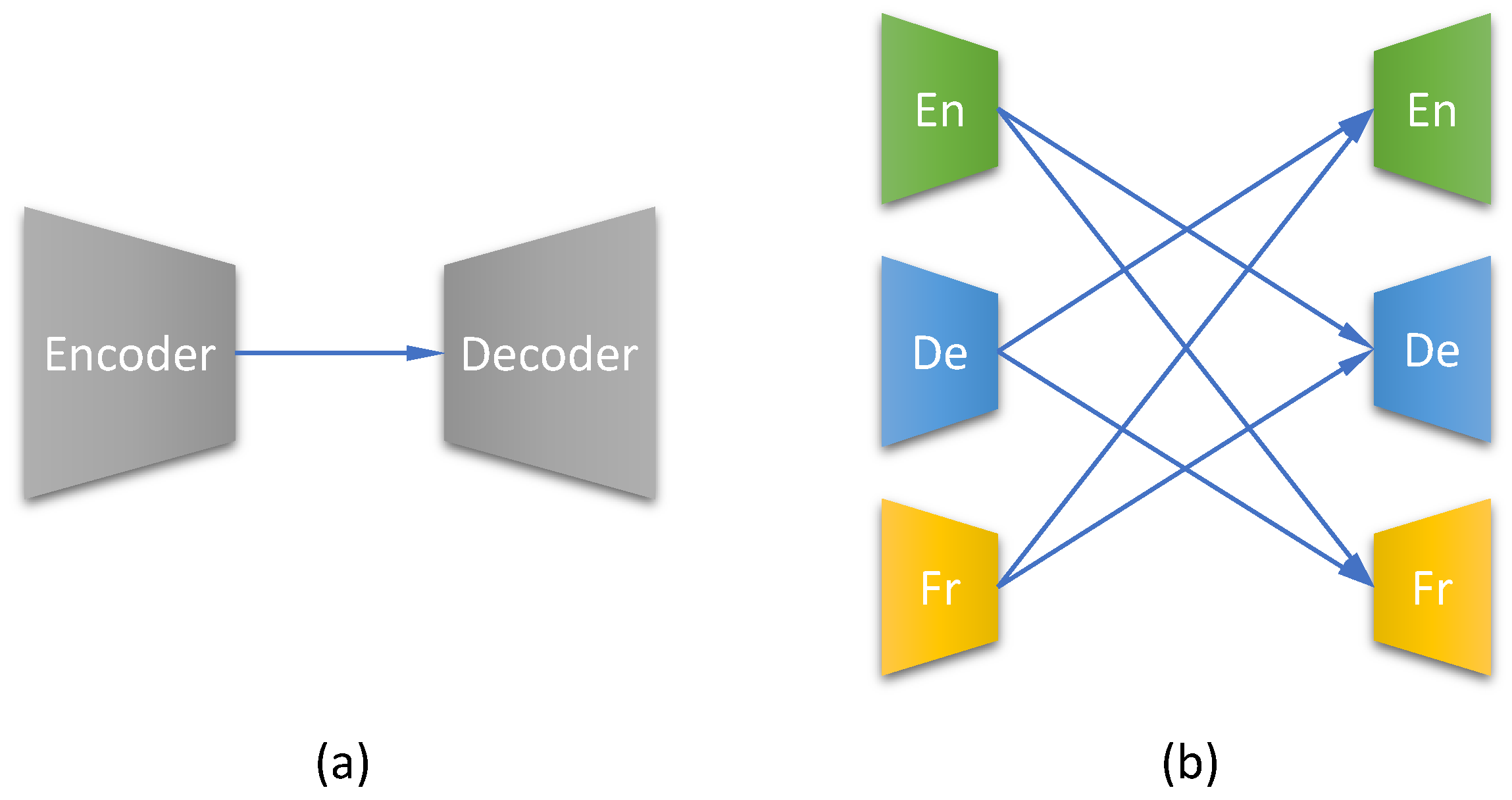

3.1. Background: Modularized Multilingual NMT

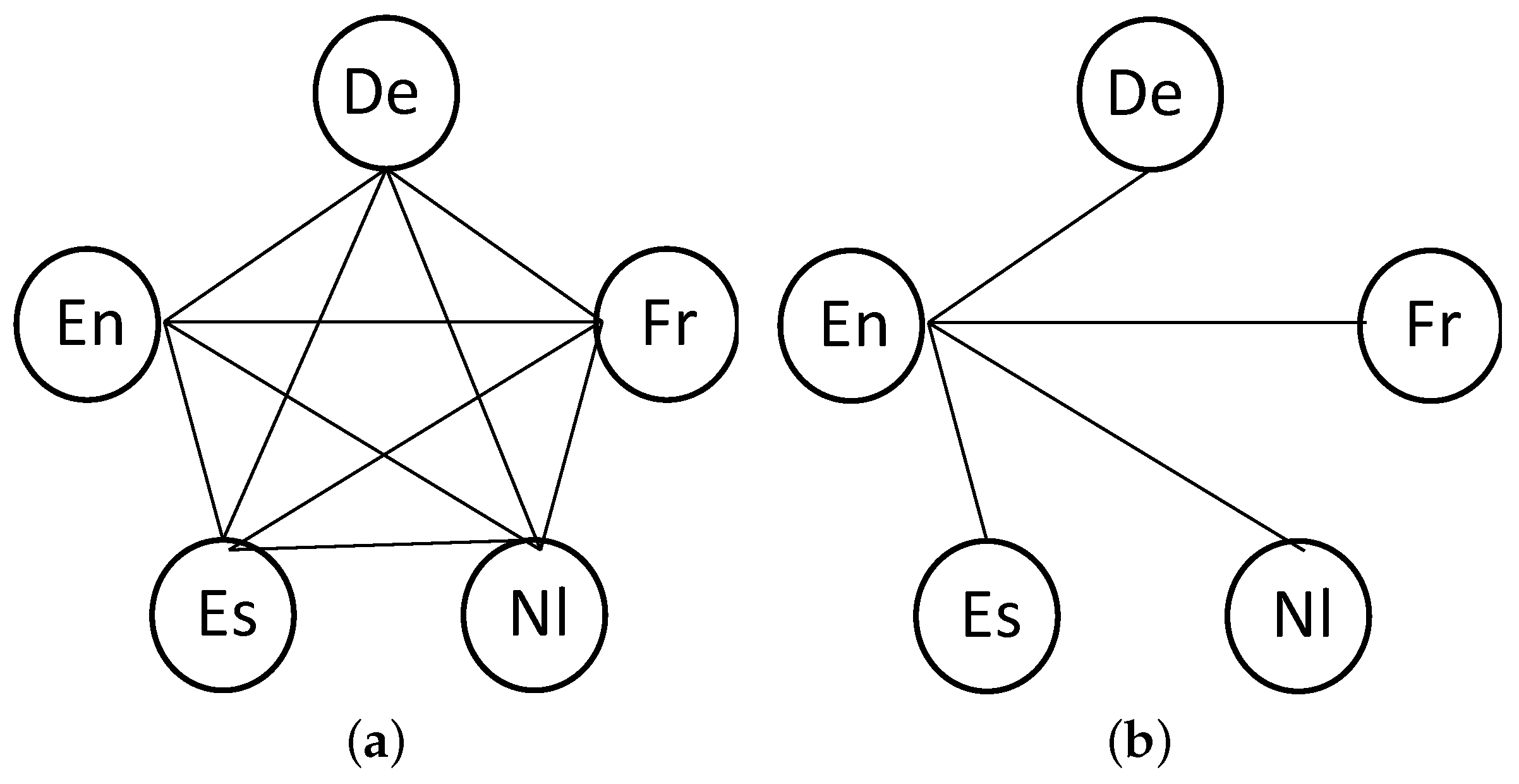

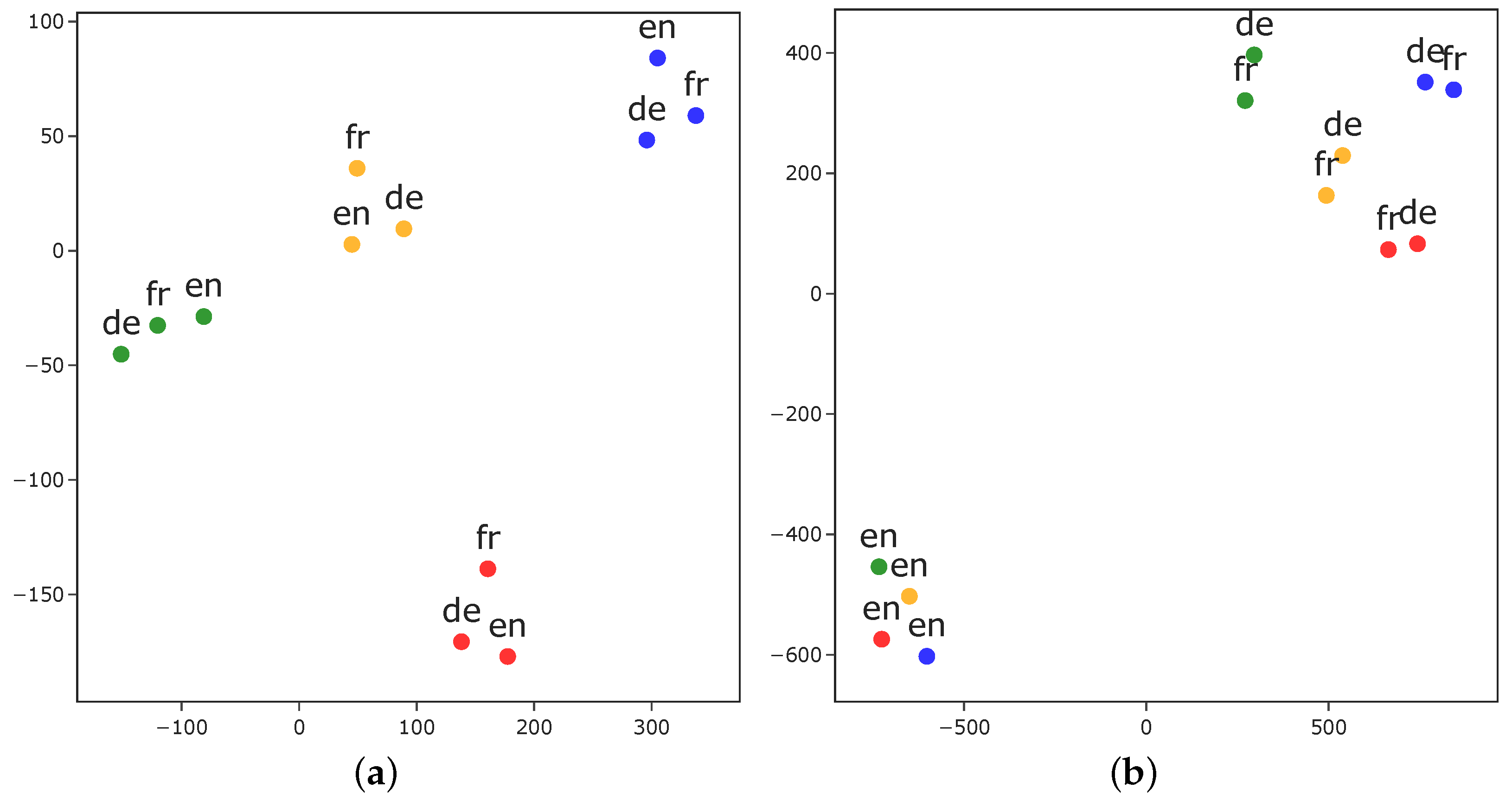

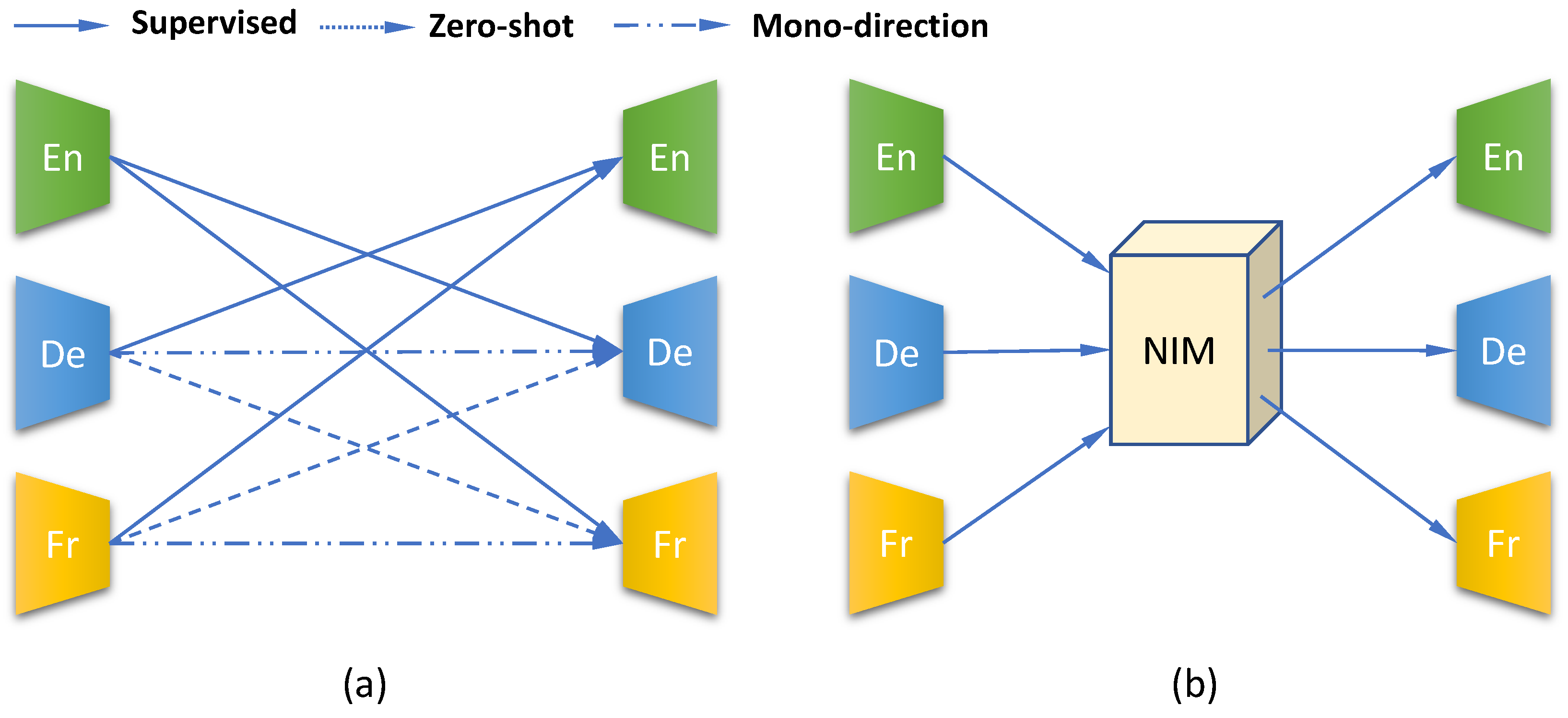

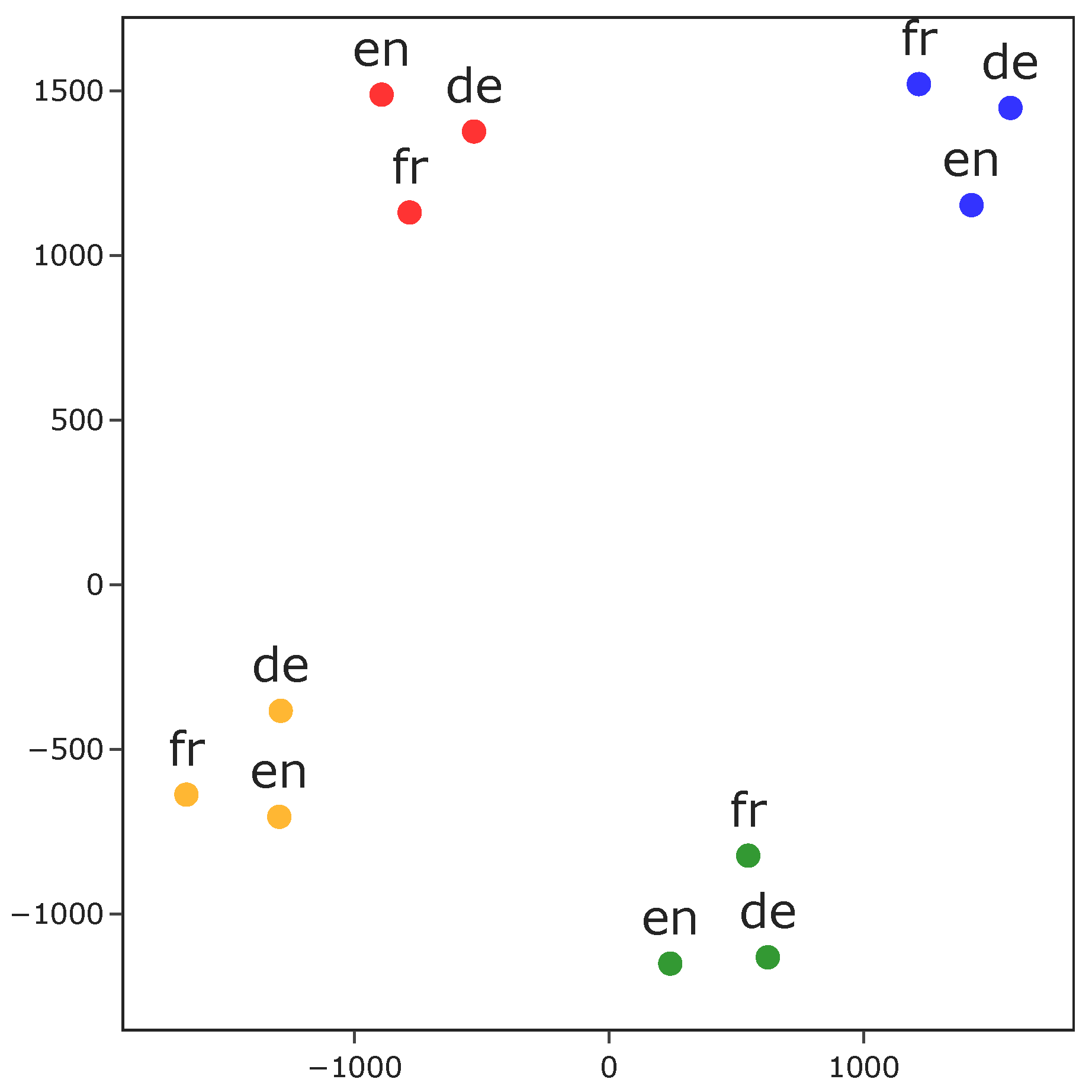

3.2. Comparison of Interlingual Space in M2M and JM2M

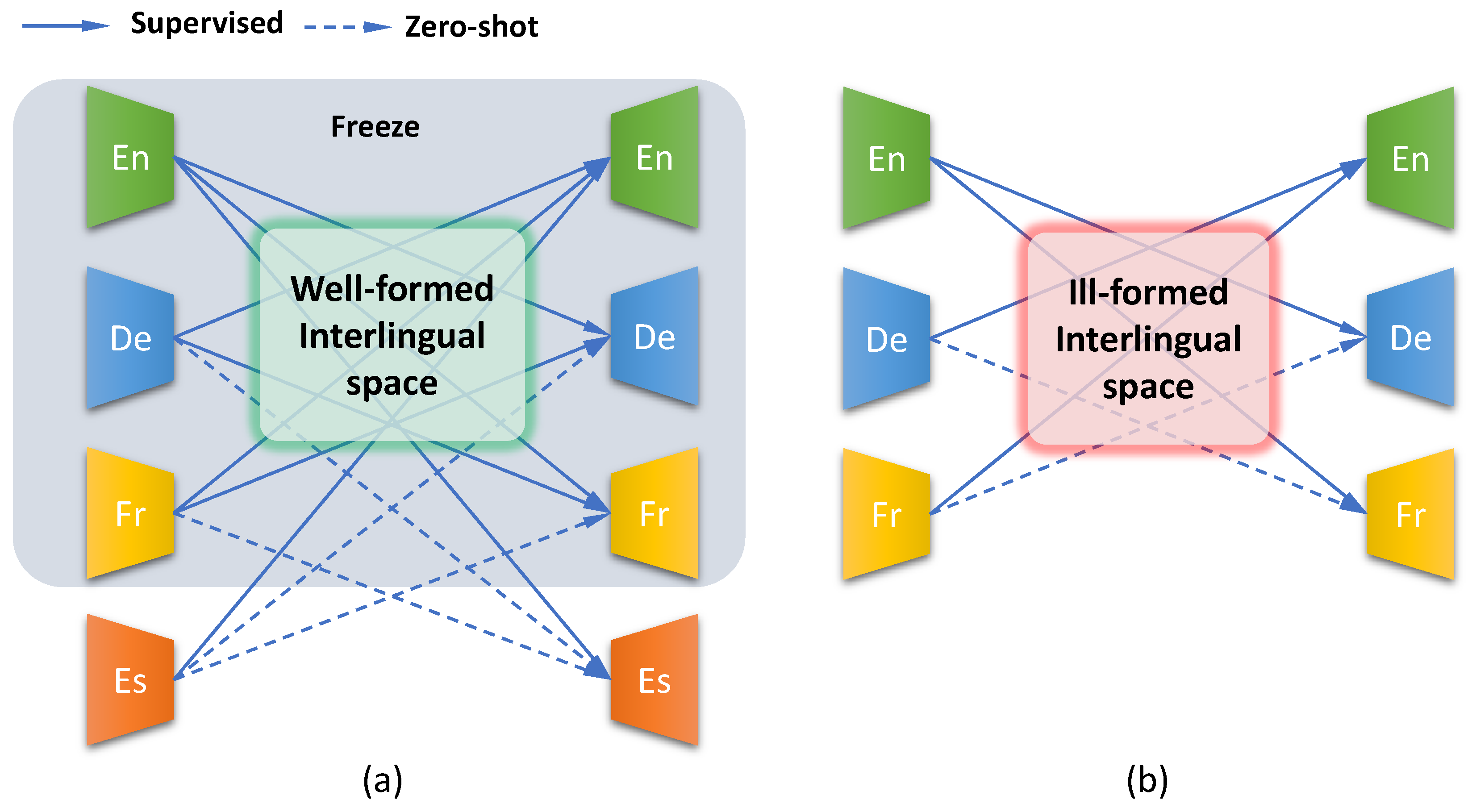

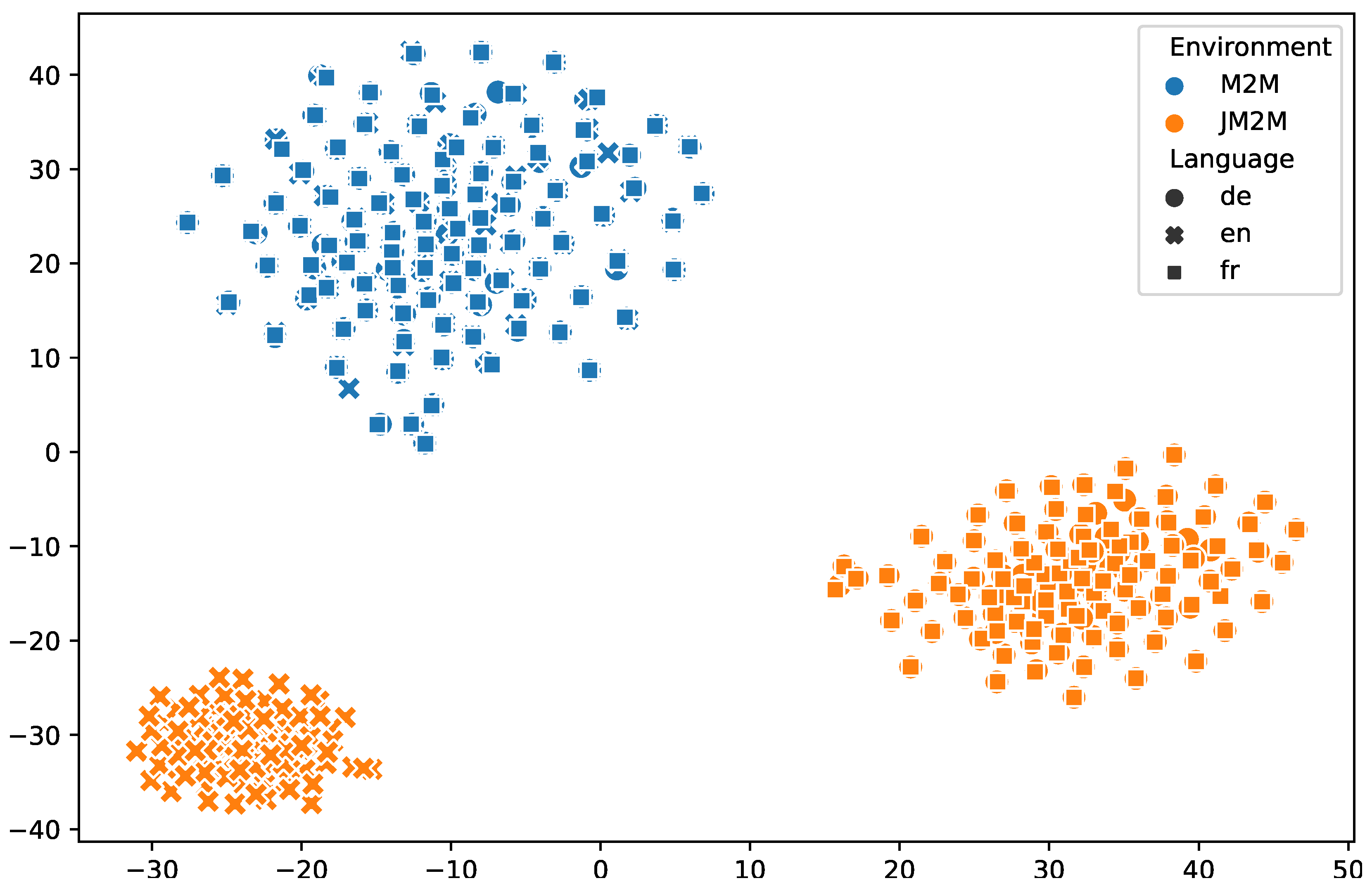

3.3. Interlingua Visualization

4. A Framework for Rectifying Ill-Formed Interlingua of the M2 Model Trained in the JM2M Environment

4.1. Adding a Mono-Direction Translation

4.2. Incorporating a Neural Interlingual Module

5. Approaches

5.1. Adding a Mono-Direction Translation

5.1.1. Reconstruction Task (REC)

5.1.2. Denoising Autoencoder Task (DAE)

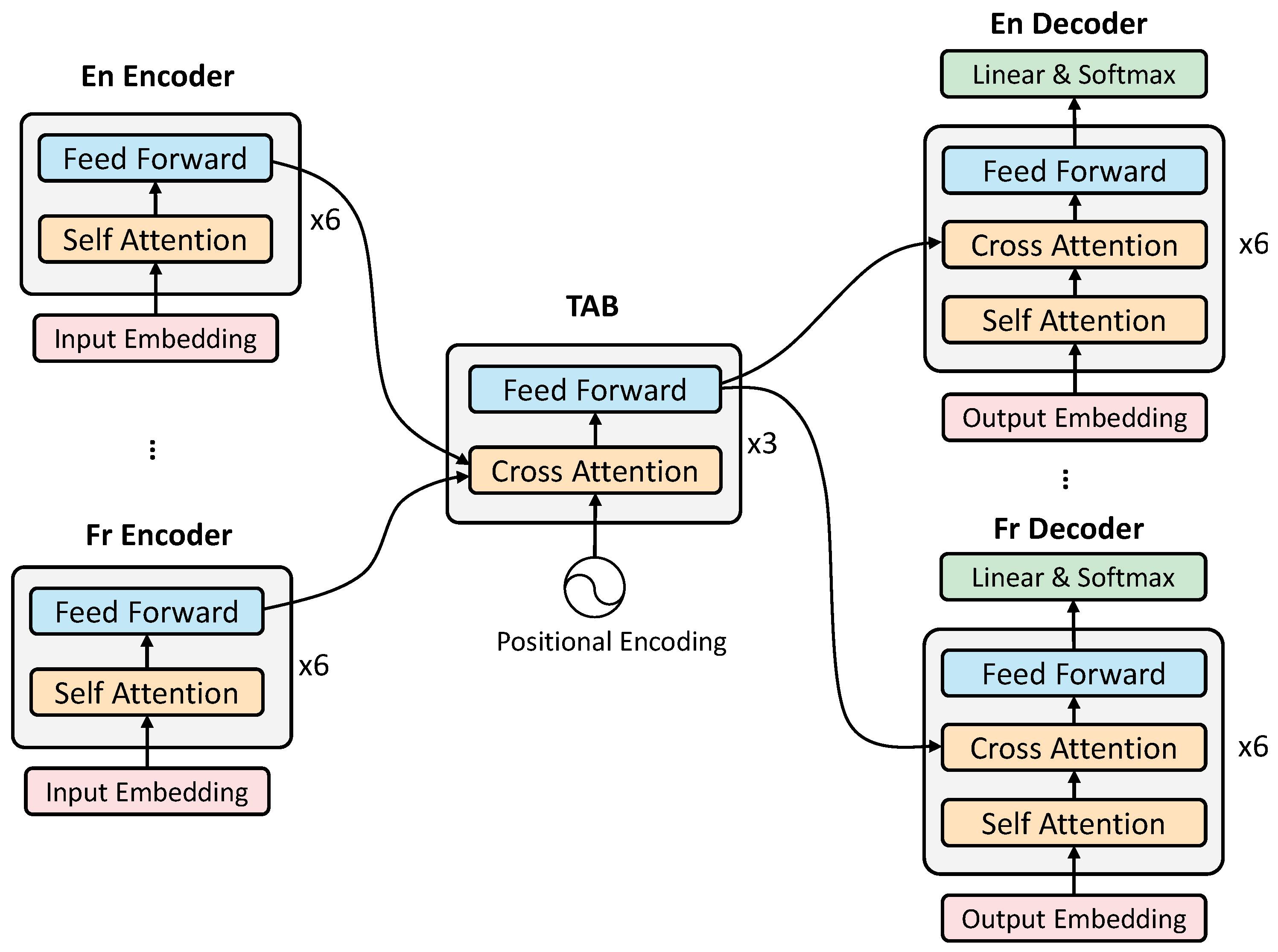

5.2. Incorporating a Neural Interlingual Module

5.2.1. Sharing Encoder Layers (SEL)

5.2.2. Transformer Attention Bridge (TAB)

6. Experiments

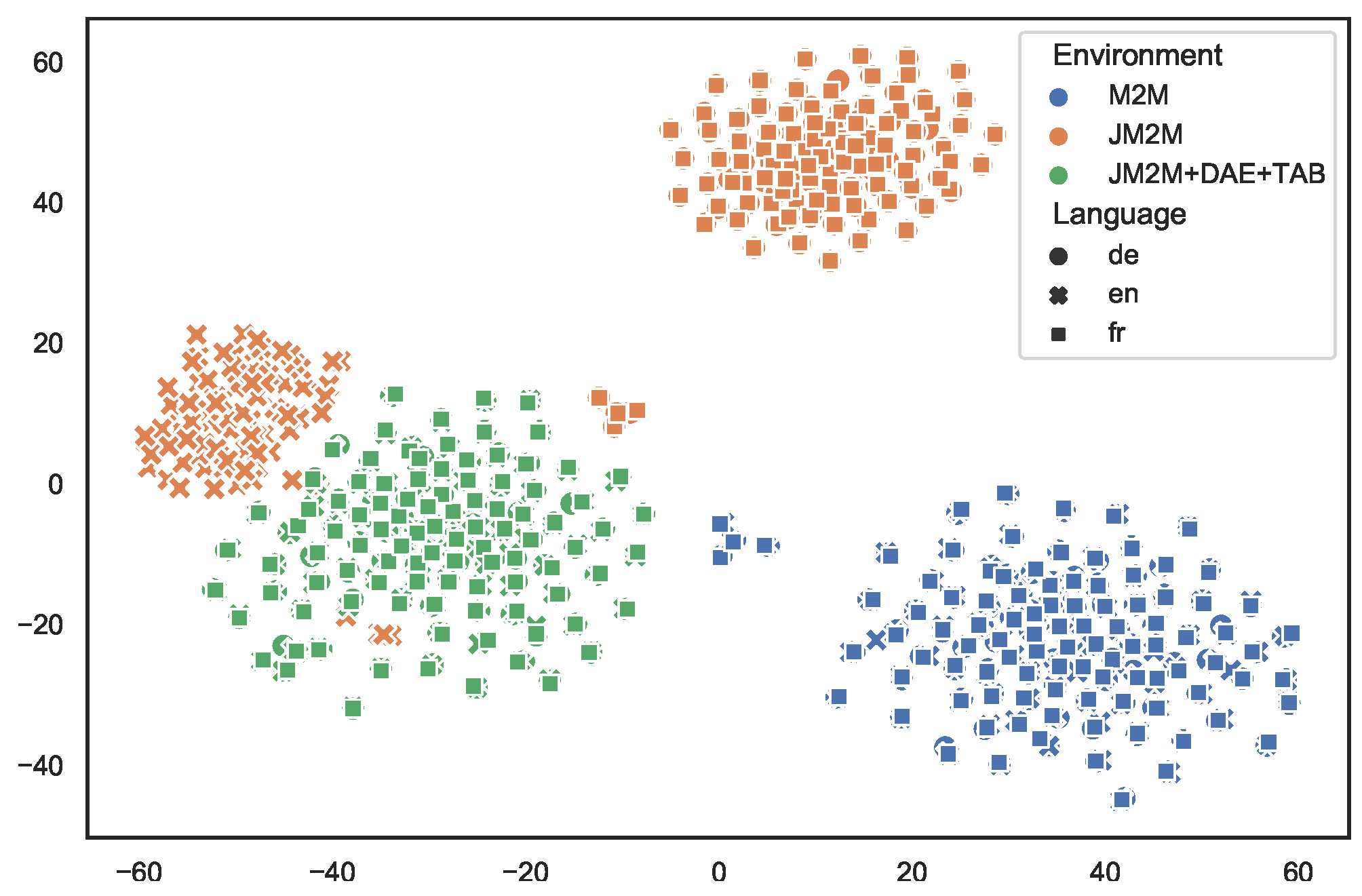

6.1. Interlingua Visualization

6.2. Zero-Shot Translation

6.2.1. Experimental Settings

Dataset

Model

Training

Baselines

6.2.2. Results and Analysis

6.3. Zero-Shot Learning of Adding a New Language Incrementally

6.3.1. Experimental Settings

Dataset

Model

Training

6.3.2. Results and Analysis

7. Discussion

7.1. Takeaways for Model Choice

7.2. Comparison with Similar Conclusions

7.2.1. Language Adapter

7.2.2. Positional Disentangled Encoder

7.2.3. Shared or Language-Specific Parameters

7.3. Future Research

- Methods that are orthogonal to ours, such as the positional disentangled encoder, could be used to further improve zero-shot translation quality for the M2 model.

- We can study parameter-sharing strategies for the M2 model to reduce the number of model parameters without sacrificing translation quality or model flexibility. Conditional computation could allow the model to learn the best sharing strategy automatically from the data.

- We need to explore the potential capacity bottleneck created by introducing the neural interlingual module (NIM) and find a solution.

- Using the NIM as shared parameters undermines the modular structure of the M2 model, which prevents the NIM from being learned incrementally when new languages are added. One possible research direction is to modularize the NIM while maintaining its cross-lingual transfer capability, so that part of the model parameters can be updated incrementally.

- Thanks to the modularity of the M2 model, we can explore the possibility of adding multiple modalities such as image or speech with modality-specific modules.

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Color | Lang. | Text |
|---|---|---|
| Red | En | I hope with all my heart, and I must say this quite emphatically, that an opportunity will arise when this document can be incorporated into the Treaties at some point in the future. |
| Red | De | Ich hoffe unbedingt—und das sage ich mit allem Nachdruck-, dass es sich durchaus als möglich erweisen wird, diese Charta einmal in die Verträge aufzunehmen. |
| Red | Fr | J’espère vraiment, et j’insiste très fort, que l’on verra se présenter une occasion réelle d’incorporer un jour ce document dans les Traités. |
| Green | En | Should this fail to materialise, we should not be surprised if public opinion proves sceptical about Europe, or even rejects it. |
| Green | De | Anderenfalls darf man sich über den Skeptizismus gegenüber Europa oder gar seine Ablehnung durch die Öffentlichkeit nicht wundern. |
| Green | Fr | Faute de quoi comment s’étonner du scepticisme, voire du rejet de l’Europe dans l’opinion publique. |
| Blue | En | The Intergovernmental Conference—to address a third subject—on the reform of the European institutions is also of decisive significance for us in Parliament. |
| Blue | De | Die Regierungskonferenz—um ein drittes Thema anzusprechen—zur Reform der europäischen Institutionen ist für uns als Parlament ebenfalls von entscheidender Bedeutung. |
| Blue | Fr | Pour nous, en tant que Parlement—et j’aborde là un troisième thème-, la Conférence intergouvernementale sur la réforme des institutions européennes est aussi éminemment importante. |
| Orange | En | At present I feel there is a danger that if the proposal by the Belgian Government on these sanction mechanisms were to be implemented, we would be hitting first and examining only afterwards. |
| Orange | De | Derzeit halte ich es für bedenklich, dass zuerst besiegelt und dann erst geprüft wird, wenn sich der Vorschlag der belgischen Regierung in Bezug auf die Sanktionsmechanismen durchsetzt. |
| Orange | Fr | En ce moment, si la proposition du gouvernement belge devait être adoptée pour ces mécanismes de sanction, on courrait selon moi le risque de sévir avant d’enquêter. |

| Model | En-De | En-Fr | De-Fr | Avg |
|---|---|---|---|---|
| M2M | 0.8441 | 0.8526 | 0.8421 | 0.8463 |
| JM2M | 0.0161 | 0.0034 | 0.8062 | 0.2752 |
| JM2M + DAE + TAB | 0.8424 | 0.8492 | 0.8526 | 0.8481 |

| Model | De-Fi | Fi-De | De-Fr | Fr-De | Fi-Fr | Fr-Fi | Z_AVG | P_AVG |
|---|---|---|---|---|---|---|---|---|
| PIV-S | 18.66 | 20.75 | 30.04 | 24.07 | 26.96 | 18.84 | 23.22 | – |
| PIV-M | 18.66 | 20.90 | 30.40 | 24.35 | 27.53 | 19.31 | 23.53 | – |
| 1-1 (JM2M) | 10.51 | 11.75 | 18.48 | 15.08 | 15.75 | 11.00 | 13.76 | 33.01 |
| M2 (JM2M) | 0.27 | 0.13 | 0.11 | 0.11 | 0.12 | 0.28 | 0.17 | 33.71 |
| M2 (JM2M + REC) | 8.99 | 8.56 | 15.11 | 13.44 | 12.32 | 9.64 | 11.34 | 32.02 |
| M2 (JM2M + DAE) | 10.77 | 12.83 | 21.49 | 15.39 | 18.57 | 11.44 | 15.08 | 33.88 |
| M2 (JM2M + DAE + SEL) | 16.37 | 17.96 | 27.58 | 20.70 | 24.35 | 16.21 | 20.53 | 33.93 |
| M2 (JM2M + DAE + TAB) | 17.54 | 19.46 | 28.76 | 22.77 | 25.30 | 18.05 | 21.98 | 33.42 |
| +Back-translation | 19.03 | 21.64 | 30.76 | 24.62 | 27.90 | 19.55 | 23.92 | 34.10 |
| M2 (JM2M + SEL) | 0.48 | 0.35 | 1.34 | 0.31 | 1.20 | 0.46 | 0.69 | 33.91 |
| M2 (JM2M + TAB) | 1.03 | 0.62 | 0.57 | 0.64 | 0.54 | 0.96 | 0.73 | 33.48 |
| M2 (M2M) | 20.99 | 23.18 | 32.93 | 26.61 | 29.70 | 21.19 | 25.77 | 34.57 |
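
As a consistency check on the zero-shot results, the sketch below recomputes the Z_AVG column as the arithmetic mean of the six listed directions. Reading Z_AVG as the unweighted mean over the zero-shot directions is an assumption, though the reported figures agree with it to within rounding. The scores are copied from the table; the names `ZERO_SHOT_BLEU`, `REPORTED_Z_AVG`, `mean`, and `check_averages` are introduced here only for illustration.

```python
# Scores per direction, copied from the table, in the order
# De-Fi, Fi-De, De-Fr, Fr-De, Fi-Fr, Fr-Fi.
ZERO_SHOT_BLEU = {
    "PIV-M": [18.66, 20.90, 30.40, 24.35, 27.53, 19.31],
    "1-1 (JM2M)": [10.51, 11.75, 18.48, 15.08, 15.75, 11.00],
    "M2 (JM2M + DAE + TAB)": [17.54, 19.46, 28.76, 22.77, 25.30, 18.05],
    "+Back-translation": [19.03, 21.64, 30.76, 24.62, 27.90, 19.55],
}

# Z_AVG values as reported in the table.
REPORTED_Z_AVG = {
    "PIV-M": 23.53,
    "1-1 (JM2M)": 13.76,
    "M2 (JM2M + DAE + TAB)": 21.98,
    "+Back-translation": 23.92,
}


def mean(scores):
    """Unweighted arithmetic mean of a list of scores."""
    return sum(scores) / len(scores)


def check_averages(tolerance=0.01):
    """Return, per model, whether the recomputed mean matches the reported Z_AVG."""
    return {
        name: abs(mean(scores) - REPORTED_Z_AVG[name]) < tolerance
        for name, scores in ZERO_SHOT_BLEU.items()
    }


if __name__ == "__main__":
    for name, scores in ZERO_SHOT_BLEU.items():
        print(f"{name}: recomputed {mean(scores):.2f}, reported {REPORTED_Z_AVG[name]:.2f}")
```

The same rows bear out the two comparisons stated in the contributions: the DAE + TAB model averages well above the 1-1 model, and adding back-translation moves the average above the pivot-based PIV-M row.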

| Training Stage | Pairs | INIT | INCR (Fr) | INCR (Fr + TAB) | JOINT |
|---|---|---|---|---|---|
| INIT | P-AVG | 34.33 | 34.33 | 33.24 | 34.77 |
| INIT | Z-AVG | 24.28 | 24.28 | 22.71 | 24.44 |
| INCR | En-Fr | – | 36.12 | 37.59 | 38.29 |
| INCR | Fr-En | – | 37.74 | 38.04 | 38.15 |
| INCR | P-AVG | – | 36.93 | 37.82 | 38.22 |
| INCR | De-Fr | – | 27.31 | 27.76 | 27.53 |
| INCR | Fr-De | – | 20.92 | 19.61 | 21.24 |
| INCR | Es-Fr | – | 33.97 | 34.99 | 33.99 |
| INCR | Fr-Es | – | 34.84 | 33.52 | 34.93 |
| INCR | Nl-Fr | – | 25.46 | 25.90 | 25.82 |
| INCR | Fr-Nl | – | 22.61 | 21.70 | 23.14 |
| INCR | Z-AVG | – | 27.52 | 27.25 | 27.78 |
| Trained parameters (M) | | 79.4 | 19.3 | 21.6 | 98.6 |
| Training time (h) | | 9.6 | 3.9 | 5.0 | 12.9 |
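
The last two rows of the table allow a rough cost comparison between adding French incrementally and retraining everything jointly. The sketch below expresses each setting's trained parameters and training time as a fraction of the JOINT column. Reading INCR as training only the new modules on top of the INIT model, and JOINT as training all languages together, is an assumption based on the column names; the names `COST` and `fraction_of_joint` are hypothetical.

```python
# Trained parameters (millions) and training time (hours), copied from the table.
COST = {
    "INIT": {"params_m": 79.4, "hours": 9.6},
    "INCR (Fr)": {"params_m": 19.3, "hours": 3.9},
    "INCR (Fr + TAB)": {"params_m": 21.6, "hours": 5.0},
    "JOINT": {"params_m": 98.6, "hours": 12.9},
}


def fraction_of_joint(setting, key):
    """Cost of one setting relative to joint training, for 'params_m' or 'hours'."""
    return COST[setting][key] / COST["JOINT"][key]


if __name__ == "__main__":
    for setting in ("INCR (Fr)", "INCR (Fr + TAB)"):
        params = fraction_of_joint(setting, "params_m")
        hours = fraction_of_joint(setting, "hours")
        print(f"{setting}: {params:.1%} of JOINT parameters, {hours:.1%} of JOINT time")
```

Under this reading, both incremental settings update roughly a fifth of the parameters trained in the joint run, while the zero-shot average in the table stays within about half a BLEU point of the JOINT column.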

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liao, J.; Shi, Y. Rectifying Ill-Formed Interlingual Space: A Framework for Zero-Shot Translation on Modularized Multilingual NMT. Mathematics 2022, 10, 4178. https://doi.org/10.3390/math10224178