Abstract

Spectrum-based fault localization (SBFL) is an automated fault localization technique that uses risk evaluation metrics to compute suspiciousness scores from program spectra. The choice of risk evaluation metric therefore determines the technique’s performance. However, existing experimental studies show that no single metric is optimal for all program structures and error types. It is possible to further improve SBFL’s performance by combining different metrics. Therefore, this paper explores how to combine risk evaluation metrics for precise fault localization. Based on extensive experiments using 92 faults from SIR and 357 faults from the Defects4J repositories, we identify which risk evaluation metrics to combine to maximize the efficiency and accuracy of fault localization. The experimental results show that combining risk evaluation metrics with high negative correlation values can improve fault localization effectiveness. In contrast, even though combinations of positively correlated effective risk evaluation metrics can outperform most negatively correlated non-effective ones, they still cannot improve fault localization effectiveness. Furthermore, low-correlated risk evaluation metrics should also be considered for fault localization. The study concludes that finding highly negatively correlated risk evaluation metrics is almost impossible, although combining such metrics would improve fault localization accuracy.

Keywords: spectrum-based fault localization; risk evaluation metrics; metric combination; software testing

MSC: 68N30

1. Introduction

Program fault localization [1,2,3,4] plays an indispensable role in software debugging. It helps developers efficiently locate the root cause of a software failure and greatly improves the development efficiency and quality of software products. There are currently many families of fault localization techniques, such as spectrum-based fault localization (SBFL), mutation-based fault localization, dynamic program slicing, information-retrieval-based fault localization, and mutating the results of conditional expressions [5]. SBFL uses only program spectra (test coverage information) to determine which program statements are most suspicious, that is, most likely to be associated with the fault, by computing a suspiciousness score for each executed program statement [6,7]. Because it relies only on coverage information, SBFL is lightweight, and it has become one of the most effective methods at present [8].

In SBFL, the risk evaluation metric, a formula that computes the suspiciousness score of each program statement from program spectra, is the key factor determining fault localization performance. Researchers have therefore designed many different risk evaluation metrics to maximize fault localization performance. In recent years, although a large number of metrics, such as Tarantula [1], Ample [9], MECO [10], D*2 [11], and GP [12,13], have been proposed, existing experimental studies [12,14,15,16] have shown that no single metric is optimal for all program structures and error types. Nevertheless, empirical evaluations have identified some risk evaluation metrics as more effective (referred to as maximal in this study) than others (referred to as non-maximal in this study) [14]. Risk evaluation metrics such as Tarantula [1], Ample [9], D*2 [11], GP [12,13], OP1 and OP2 [17], SBI [18], Jaccard [19], Ochiai1 and Ochiai2 [20], and Wong1–3 [21] are regarded as maximal because of their superior performance [14,22]. Other risk evaluation metrics, such as Anderberg, Sørensen-Dice, Dice, Goodman, Hamman, Simple Matching, Rogers-Tanimoto, Hamming, Euclid, Russel&Rao, Rogot1, Scott, M1, M2, Arithmetic-Mean, Fleiss, and Cohen, are regarded as non-maximal because of their lower effectiveness in locating faults [14,17,22].

Given this limitation, some recent works propose improving fault localization performance by combining risk evaluation metrics. Preliminary studies by Wang [23] and Jifeng [24] have tried to combine multiple risk evaluation metrics for ranking. The former proposed search-based algorithms that search for the optimal candidate combination among 22 risk evaluation metrics without considering their correlations, which are one of the most critical factors when combining two techniques. The latter proposed a learning-to-rank approach based on a machine learning model and randomly selected existing risk evaluation metrics without considering their suitability for combination. These initial attempts have yielded encouraging results. However, how to select and combine risk evaluation metrics to achieve the best performance remains a critical open problem.

Zou et al. [5], who studied how to combine techniques from different fault localization families to achieve efficient fault localization, found that (1) when two techniques from different families are weakly correlated, they may provide different information, and utilizing that information may enhance fault localization effectiveness; and (2) when two techniques are good at locating the same specific faults, they are positively correlated, and their combination might not improve the efficiency of SBFL. It nevertheless remains an open question whether different risk evaluation metrics within SBFL have a high or low correlation and whether combining them can boost fault localization.

Similarly, because each risk evaluation metric in the literature was proposed from a different intuition, it is challenging to assess the practicability of existing studies. Xiao-Yi Zhang and Mingye Jiang [25] therefore proposed SPectra Illustration for Comprehensive Analysis (SPICA), a method for reviewing and analyzing existing SBFL techniques by visualizing spectrum data. They found that the metric performance curves (MPCs) of some metrics behave similarly in the spectra space (SP). This suggests that it is good practice to consider the diversity of risk evaluation metrics by selecting metrics with different MPCs.

These related findings inspire us to explore how to choose risk evaluation metrics for combination so as to maximize performance, that is, whether an optimal strategy for selecting risk evaluation metrics for combination can be derived from their correlation. Accordingly, we conducted our experiment using the following rubric for combining existing risk evaluation metrics:

- Two positively correlated maximal risk evaluation metrics are already good at locating software faults. The effectiveness of their combination might outweigh their individual fault localization effectiveness.

- Two negatively correlated maximal risk evaluation metrics are good at locating different sorts of faults. Their combined performances might outweigh their individual fault localization effectiveness.

- Two positively correlated non-maximal risk evaluation metrics are not good at locating software faults, yet their combination might increase the overall fault localization effectiveness.

- Two negatively correlated non-maximal risk evaluation metrics are not good at locating different sorts of faults, yet combining them might increase overall fault localization effectiveness.

- Two positively or negatively correlated maximal and non-maximal risk evaluation metrics, where one is good, and the other is not good at locating software faults, might complement each other, and their combination might outweigh their individual fault localization effectiveness.

To evaluate this rubric, we consider a risk evaluation metric good, or effective, if it places the faulty statements of the program under test at the top of the ranking list, thereby helping developers locate faults without inspecting many non-faulty statements. This paper explores and evaluates all the combinations listed above. This empirical evaluation of risk evaluation metric combinations will support further research on improving spectrum-based fault localization.

It is important to note that this study focuses only on exploring an effective way of choosing existing risk evaluation metrics and combining them to improve the accuracy of fault localization.

This paper makes the following main contributions:

- An empirical study is performed to explore the performance and combination of different risk evaluation metrics based on whether two risk evaluation metrics are maximal or non-maximal, correlated positively or negatively, and the magnitude of the correlation. We then evaluate which combination is effective for fault localization.

- Experimental results demonstrate that negatively correlated risk evaluation metrics are the best candidates for combination, especially those with high negative correlation values, because their combinations can outperform both the existing risk evaluation metrics and the individual metrics that make up the combination.

- This is the first study to use the degree of correlation between techniques within the same fault localization family, spectrum-based fault localization, to suggest the ideal techniques to combine.

The rest of this paper is organized as follows. Section 2 provides background on spectrum-based fault localization. Section 3 details how we combine the risk evaluation metrics. Section 4 explains the experimental setup of our empirical study, and Section 5 presents the results. Section 6 discusses the threats to the validity of this empirical study, and Section 7 concludes the paper.

2. Spectrum-Based Fault Localization

The first step of the debugging process is to precisely locate the fault at any granularity level, e.g., statements, blocks, methods, or classes. Spectrum-based fault localization is an automated technique to pinpoint the location of a fault in code.

The primary assumption in spectrum-based fault localization is that program statements executed by more failing tests and by few or no passing tests are more likely to be faulty. SBFL therefore collects the coverage information of each program statement as a tuple of four values, (e_f, e_p, n_f, n_p), called the program spectrum, where e_f and e_p are the numbers of failing and passing tests that execute the statement, and n_f and n_p are the numbers of failing and passing tests that do not execute it. The risk evaluation metric then translates the program spectrum into a suspiciousness score for each program statement, indicating its likelihood of being faulty.
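As an illustration of the spectrum-to-score pipeline described above, the following minimal Python sketch builds the tuple (e_f, e_p, n_f, n_p) for each statement from a boolean coverage matrix and scores it with Tarantula and Ochiai. The coverage-matrix layout, the function names, and the choice of these two metrics are illustrative assumptions, not the tooling used in this study.

```python
import math
from typing import List, Tuple

# Program spectrum of one statement: (e_f, e_p, n_f, n_p).
Spectrum = Tuple[int, int, int, int]


def compute_spectra(coverage: List[List[bool]], failed: List[bool]) -> List[Spectrum]:
    """coverage[t][s] is True when test t executes statement s; failed[t] is True when test t fails."""
    total_failed = sum(failed)
    total_passed = len(failed) - total_failed
    spectra = []
    for s in range(len(coverage[0])):
        e_f = sum(1 for t, row in enumerate(coverage) if row[s] and failed[t])
        e_p = sum(1 for t, row in enumerate(coverage) if row[s] and not failed[t])
        spectra.append((e_f, e_p, total_failed - e_f, total_passed - e_p))
    return spectra


def tarantula(e_f: int, e_p: int, n_f: int, n_p: int) -> float:
    """Failing-coverage ratio divided by the sum of the failing and passing coverage ratios."""
    fail_ratio = e_f / (e_f + n_f) if e_f + n_f else 0.0
    pass_ratio = e_p / (e_p + n_p) if e_p + n_p else 0.0
    total = fail_ratio + pass_ratio
    return fail_ratio / total if total else 0.0


def ochiai(e_f: int, e_p: int, n_f: int, n_p: int) -> float:
    """e_f / sqrt((e_f + n_f) * (e_f + e_p)), returning 0 when the denominator vanishes."""
    denom = math.sqrt((e_f + n_f) * (e_f + e_p))
    return e_f / denom if denom else 0.0


if __name__ == "__main__":
    # Toy example: 3 tests x 3 statements; only the first test fails.
    coverage = [[True, True, False], [True, False, True], [True, True, True]]
    failed = [True, False, False]
    for spectrum in compute_spectra(coverage, failed):
        print(spectrum, round(tarantula(*spectrum), 3), round(ochiai(*spectrum), 3))
```

In the toy example, the second statement (executed by the failing test and by only one passing test) receives the highest score under both metrics.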

Various risk evaluation metrics have been proposed (e.g., Table 1) to assign the highest rank to the faulty program statement, as mentioned in the Introduction. The spectrum information of seeded faults differs from that of real faults, so many risk evaluation metrics perform better on one kind of fault than on the other.

Table 1.

The Maximal risk evaluation metrics.

Thus, combining two risk evaluation metrics that precisely localize faults in different programs could improve the localization of unknown faults, since each provides information on different aspects of the faults [5]. Therefore, our goal in this paper is to identify metrics that are suitable for combination for effective fault localization.

Many studies have theoretically evaluated the effectiveness of risk evaluation metrics as standalone methods, and some have categorized them by their effectiveness in fault localization. This study builds on these findings to suggest possible combinations of these risk evaluation metrics for effective fault localization.

Xie et al. [26] theoretically assessed the manually created risk evaluation metrics used in earlier studies to compute suspiciousness scores and showed that the two best SBFL families are ER1 (a, b) and ER5 (a, b, c). A follow-up study by Xie et al. [27] investigated the SBFL metrics generated by the automated genetic programming technique in Shin Yoo's study [12] and found that GP02, GP03, GP13, and GP19 are the best GP-generated metrics. Le et al. [28] presented SAVANT, which introduces Daikon [29] invariants into SBFL as an extra feature and uses a learning-to-rank model to combine SBFL approaches with invariant data. On real-world faults from the Defects4J dataset [30], SAVANT outperformed the four best SBFL approaches, including MULTRIC. Sohn and Yoo [31] proposed FLUCCS, which combines SBFL approaches with code change measures and uses genetic programming and linear rank support vector machines to learn how to rank. They also evaluated FLUCCS on Defects4J and found that it outperforms existing SBFL approaches. Kim et al. [32] presented PRINCE, a learning-to-rank fault localization technique that uses a genetic algorithm to combine dynamic features, such as spectrum-based and mutation-based fault localization, with static features, such as dependency information and the structural complexity of program entities. Evaluated on 459 artificial and real-world faults, PRINCE was more effective than state-of-the-art spectrum-based, mutation-based, and learning-to-rank techniques.

3. Combining the Risk Evaluation Metrics

Zou et al. demonstrated that when two techniques are effective on different kinds of faults, their combination is likely to outperform each standalone technique [5]. Inspired by this, we study how the performances of existing metrics are correlated and then combine them to determine which combinations are best for effective spectrum-based fault localization.

The combinations in this study use a direct combination method, in which the suspiciousness scores of two risk evaluation metrics are combined to enhance the efficiency of spectrum-based fault localization.

3.1. Selection of Suspiciousness Metrics for Combination

This study explores and assesses combinations of risk evaluation metrics based on how the two metrics are correlated and whether or not each metric is maximal. Following our rubric, when two risk evaluation metrics are combined, there are three possible cases with respect to maximality:

- (1) Both risk evaluation metrics are maximal.

- (2) Both risk evaluation metrics are non-maximal.

- (3) One risk evaluation metric is maximal, and the other is non-maximal.

To decide whether a risk evaluation metric is effective (maximal) or non-effective (non-maximal), we rely on the studies by Yoo et al. and Wu et al., who identified a set of risk evaluation metrics as maximal [14,22]. All other risk evaluation metrics are termed non-maximal in this study.

Similarly, when two risk evaluation metrics are combined, there are five possible cases depending on how strongly their performances in locating faults are correlated. Following [36], the correlation between two risk evaluation metrics may be (very) high, moderate, low, negligible, or neutral:

- (1) The two risk evaluation metrics have a high (H) correlation ().

- (2) The two risk evaluation metrics have a moderate (M) correlation ().

- (3) The two risk evaluation metrics have a low (L) correlation ().

- (4) The two risk evaluation metrics have a negligible (N) correlation ().

- (5) The two risk evaluation metrics have a neutral (U) correlation ().

We first run a pre-experiment to determine which risk evaluation metrics can be combined based on their performance correlation. To quantify the correlation between each pair of techniques, we compute the Pearson correlation coefficient r, which measures the linear correlation between two variables [37]. Developers typically examine only the first few program statements that a fault localization method suggests as faulty. This motivates using the wasted effort, that is, the number of statements a developer checks before locating the first fault, to assess the effectiveness of each risk evaluation metric. We therefore selected 44 existing risk evaluation metrics (see [17] for details) and computed their wasted effort scores in 30 iterations for 30 selected faulty programs from the Defects4J and SIR-repository datasets. We selected five faults from the SIR repository and five from each Defects4J project, i.e., Chart, Closure, Lang, Math, and Time, so the pre-experiment covers both real and seeded faults. Table 2 provides the correlation values (r) among the risk evaluation metrics, and Algorithm 1 describes the process of selecting the combined risk evaluation metrics studied.

Algorithm 1 Metrics Selection.
Require: risk evaluation metrics (I), faulty and non-faulty programs (F)
I ▹ list of metrics
F ▹ list of the 30 selected faulty and non-faulty programs
for each metric in I do
    for each program in F do
        compute wasted efforts for each statement
    end for
end for
for all pairs of metrics in I do
    compute the Pearson correlation (r)
end for
for all r do
    compare the correlation of (i), and group the metrics in pairs accordingly
    extract the possible combinations as described above
    group the possible combinations
end for
return grouped risk evaluation metrics
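The correlation step of Algorithm 1 can be sketched in Python as follows. This is a minimal sketch assuming that each metric's wasted-effort scores are stored in a list aligned on the same faults; pearson_r and pairwise_correlations are hypothetical helper names. In practice, scipy.stats.pearsonr returns the same coefficient together with a p-value.

```python
import math
from itertools import combinations
from typing import Dict, List, Tuple


def pearson_r(x: List[float], y: List[float]) -> float:
    """Pearson correlation coefficient between two equally long samples."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two samples of equal length >= 2")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    std_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    std_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    if std_x == 0 or std_y == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return cov / (std_x * std_y)


def pairwise_correlations(wasted_effort: Dict[str, List[float]]) -> Dict[Tuple[str, str], float]:
    """Map every unordered metric pair to the Pearson r of their wasted-effort scores.

    wasted_effort maps a metric name to its scores on the same faults, in the same order.
    """
    return {
        (a, b): pearson_r(wasted_effort[a], wasted_effort[b])
        for a, b in combinations(sorted(wasted_effort), 2)
    }
```

The resulting dictionary is the kind of input that the grouping step of Algorithm 1 would then split by sign and magnitude of r.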

Table 2.

The correlation results of the selected combined metrics: p = 0.005.

Note that this pre-experiment only determines which risk evaluation metrics are suitable for combination based on their correlations. A separate experiment is conducted on the combined metrics, and its results are given in Section 5.

Furthermore, before restricting the study to pairs of risk evaluation metrics, we performed a series of experiments combining three, four, and even five metrics. We consider only pairs because combinations of three or more metrics require a more in-depth analysis, which is beyond the scope of this study. For example, to combine three metrics, A, B, and C, we must consider whether A is correlated with B and with C, whether B is correlated with A and with C, and whether C is correlated with A and with B. Our future work will therefore empirically evaluate larger combinations and their degrees of correlation, such as when A is highly correlated with B but weakly correlated with C.

Because the full correlation table is large, this study shows only the correlation values of the risk evaluation metrics considered for combination.

3.2. Combination Method

To demonstrate how our combination method works, we use the spectra of an example program from Zheng et al. [38]. First, we randomly selected two risk evaluation metrics, Wong1 and Russel&Rao, without considering their correlation, and used them to compute suspiciousness scores. Second, we purposely selected two risk evaluation metrics, GP19 and Rogers-Tanimoto, after observing their correlation (−0.002), and used them to compute suspiciousness scores for the same spectra. See Section 3.1 and Algorithm 2 for details of how the correlations and combinations are computed.

Algorithm 2 Metrics Combination.
Require: paired risk evaluation metrics (I), faulty and non-faulty programs (F)
I ▹ list of paired metrics
F ▹ list of the faulty programs
for each metric pair (a, b) in I do
    for each program in F do
        compute suspiciousness scores for each statement using metric ‘a’
        compute suspiciousness scores for each statement using metric ‘b’
    end for
end for
for all computed suspiciousness scores do
    normalize using MinMaxScaler()
    normalized metric ‘a’
    normalized metric ‘b’
end for
for each metric pair (a, b) in I do
    for each program in F do
        combine the normalized scores of ‘a’ and ‘b’ ▹ Final Suspiciousness score
    end for
end for
return Final Rank

Notably, directly combining the raw suspiciousness scores computed by different risk evaluation metrics is not a fair practice for fault localization, because different risk evaluation metrics have different ranges [39]. Some metrics, such as Rogers-Tanimoto and Russel&Rao, have a range of [0, 1], whereas others, such as Wong1 and Wong2, have ranges that depend on the number of tests. We therefore normalized the suspiciousness scores of each risk evaluation metric shortlisted for combination. To achieve this, we used the MinMaxScaler of the Python scikit-learn [40] library, which rescales the suspiciousness scores to [0, 1]: the minimum suspiciousness score is mapped to 0, the maximum to 1, and all other scores are scaled linearly in between. Table 3 shows the initial suspiciousness scores and the normalized scores after applying MinMaxScaler to the example from Zheng et al. [38].
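A minimal Python sketch of the normalization and combination steps of Algorithm 2 follows. min_max mimics scikit-learn's MinMaxScaler with its default feature_range of (0, 1) on a single column, including mapping a constant column to zeros. The combination operator is not spelled out here, so summing the two normalized scores in combine is an assumption made for illustration, and both helper names are hypothetical.

```python
from typing import List


def min_max(scores: List[float]) -> List[float]:
    """Rescale scores to [0, 1], like MinMaxScaler with its default feature_range."""
    low, high = min(scores), max(scores)
    span = high - low
    if span == 0:
        # MinMaxScaler treats a zero range as 1, so a constant column maps to all zeros.
        return [0.0 for _ in scores]
    return [(s - low) / span for s in scores]


def combine(scores_a: List[float], scores_b: List[float]) -> List[float]:
    """Final suspiciousness per statement.

    ASSUMPTION: the two normalized scores are summed; the source does not state the operator.
    """
    if len(scores_a) != len(scores_b):
        raise ValueError("both metrics must score the same statements")
    return [a + b for a, b in zip(min_max(scores_a), min_max(scores_b))]
```

With scikit-learn and NumPy installed, `MinMaxScaler().fit_transform(np.asarray(scores).reshape(-1, 1))` produces the same normalized column as min_max. Normalizing first ensures that a metric with a wide raw range, such as Wong1, does not dominate the sum.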

Table 3.

Example of MinMaxScaler normalization using scikit-learn. (n) marks the normalized columns.

The columns marked (n) contain normalized suspiciousness scores, and the columns Purposely and Randomly contain the combined suspiciousness scores. In this example, the sixth statement is the faulty one, and neither GP19 nor Rogers-Tanimoto localizes the fault individually. However, the fault is localized successfully when their suspiciousness scores are normalized and combined.

Note that we first used each risk evaluation metric to compute the suspiciousness score of each statement. Only these suspiciousness scores are normalized; the spectra data are left unchanged.

Protocol: If a risk evaluation metric is highly correlated with more than one risk evaluation metric, we select only the pair with the highest correlation for that group. For example, if a metric A is correlated with both B and C, and its correlation with B is higher than its correlation with C, we select only the pair (A, B) for that group.
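The protocol above can be read as a greedy pairing within one correlation group. The sketch below assumes that "highest correlation" means the largest absolute value of r and that each metric may appear in at most one pair per group; both are interpretations, and select_pairs and the metric names A to E are hypothetical.

```python
from typing import Dict, Set, Tuple

Pair = Tuple[str, str]


def select_pairs(group: Dict[Pair, float]) -> Dict[Pair, float]:
    """Keep, for every metric, only its strongest pairing within one correlation group.

    group maps a metric pair to its Pearson r. Pairs are visited from the largest |r|
    to the smallest, and a pair is kept only if neither metric has been paired yet.
    """
    chosen: Dict[Pair, float] = {}
    used: Set[str] = set()
    for (a, b), r in sorted(group.items(), key=lambda item: abs(item[1]), reverse=True):
        if a in used or b in used:
            continue  # one of the metrics already has a stronger partner
        chosen[(a, b)] = r
        used.update((a, b))
    return chosen
```

For instance, with r(A, B) = −0.9, r(A, C) = −0.8, and r(D, E) = −0.75, select_pairs keeps (A, B) and (D, E) and drops (A, C).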

Note in Table 2 that no two maximal risk evaluation metrics have a high negative or neutral correlation. The strongest negative correlation () between two maximal risk evaluation metrics, found for the pairs (Ample, Wong1) and (Ample, Wong2), falls under negligible correlation according to [36]. This is because maximal risk evaluation metrics all locate software faults efficiently; hence, they are always correlated, even though the level of correlation varies and one metric may outperform the other. Therefore, in this study we use only the negatively correlated maximal risk evaluation metrics that are available.

Table 4 shows the groups of shortlisted risk evaluation metrics. Each combined risk evaluation metric is labelled by the types of its components and their correlation level. The first two letters indicate whether the two combined risk evaluation metrics are both maximal (), both non-maximal (), or one maximal and the other non-maximal (). The subscript letter indicates the level of correlation, i.e., high (H), moderate (M), low (L), or negligible (N). The superscript signs + and − indicate whether the correlation is positive or negative, respectively.

Table 4.

Details of selected combined risk evaluation metrics based on correlation.

For example, the first acronym means that the two combined risk evaluation metrics are both maximal and highly negatively correlated.

4. Experimental Design

This section provides the experimental details for assessing and evaluating the combinations of risk evaluation metrics for effective fault localization.

4.1. Fault Localization

We use spectrum-based fault localization as the fault localization process, performed at the statement granularity level. Spectrum-based fault localization can use any risk evaluation metric to estimate how likely each statement is to be faulty.

Thus, we evaluate the effectiveness of the combined risk evaluation metrics and compare their performances against 14 well-known maximal (see Table 1) and 16 non-maximal risk evaluation metrics (see [17]).

4.2. Dataset

For this experimental study, we use two datasets: the SIR repository [41] and Defects4J (version 1.2.0) [30]. Due to unavoidable circumstances, this study could not use the latest version of Defects4J, v2.0.0; however, version 1.2.0 still serves our purpose. Furthermore, the benchmarks used in this study may represent the majority of real faults in Defects4J. The SIR repository is a dataset of seeded faults in C programs that has been used in fault localization research [20,21,26,42]. In contrast, Defects4J is a dataset of 357 real faults from five large open-source Java projects that has recently been used in fault localization and repair research [43,44,45,46]. Details of the datasets can be found in our previous study [10].

To evaluate the effectiveness of the combined and existing risk evaluation metrics with respect to program size, we partition the projects of the SIR repository and Defects4J into three categories. This indicates which risk evaluation metrics are suitable for projects of different sizes.

There is no precisely defined threshold for determining whether a program is small, medium, or large. Zhang et al. consider the Flex, Grep, Gzip, and Sed programs in the SIR repository, which measure between 5.5 and 9.69 KLOC, to be real-life medium-sized programs [47], whereas others consider these programs large [2,48]. Keller et al. categorize Defects4J projects in the 28–96 KLOC range as medium-sized [49]. In contrast, de Souza et al. consider Flex, Grep, Gzip, and Sed small, assuming that programs with more than 10 KLOC are large, programs with 2 to 10 KLOC are medium-sized, and programs with fewer than 2 KLOC are small [50].

In this study, we categorize the datasets as follows:

- Small: projects with ≤10 KLOC, such as Flex, Sed, Grep, and Gzip, with an average of 3037 executed statements and 92 faults.

- Medium: projects with >10 and ≤50 KLOC, such as Lang and Time, with an average of 5725 executed statements and 92 faults.

- Large: projects with >50 KLOC, such as Math, Closure, and Chart, with an average of 14,333 executed statements and 265 faults.

4.3. Research Questions

This study answers the following research questions.

- RQ1. Which combined metrics perform best among the combinations? To answer this research question, we evaluate the performance of each group of risk evaluation metrics (see Table 4) on the datasets described in [10].

- RQ2. How do the best-performing combined risk evaluation metrics compare against standalone maximal and non-maximal risk evaluation metrics? To answer this question, we select the best-performing metrics among the combined (maximal and maximal, non-maximal and maximal, and non-maximal and non-maximal) risk evaluation metrics by comparing all combined risk evaluation metrics with each other. If a combined risk evaluation metric outperforms all others in two or more categories of the partitioned dataset, it is considered better than the others, and we select it to represent its group of combinations. This results in 6 combined risk evaluation metrics, which are compared with 14 maximal and 16 non-maximal risk evaluation metrics, for a total of 36 metrics.

- RQ3. Is there any statistically significant performance difference between the combined and the standalone maximal and non-maximal risk evaluation metrics? This research question statistically analyses the overall performance differences between the combined and existing risk evaluation metrics. We merge all the datasets for this experiment and run fifteen iterations in which each risk evaluation metric is used to compute the average wasted effort for each fault (see Section 4.4.3 for details). In each iteration, five faults are automatically excluded so that each risk evaluation metric obtains a different wasted effort value in each iteration. We then use the Wilcoxon signed-rank test to assess statistical differences and Cliff’s delta to measure effect sizes on the resulting scores. This examines the significance of the performance difference between the combined and existing risk evaluation metrics.

4.4. Evaluation Metrics

We use the following four evaluation metrics to assess the effectiveness of the risk evaluation metrics.

4.4.1. Exam Score

The Exam score is the percentage of statements a developer needs to go through until the faulty statement is found [16,44]. Thus, the metric with the lowest Exam score has the highest effectiveness in locating the faults. The Exam metric is defined in Equation (1).

$$\text{Exam} = \frac{\mathit{rank}_f}{N} \times 100\% \qquad (1)$$

where $\mathit{rank}_f$ is the rank of the first faulty statement, and $N$ is the total number of executable statements.
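The Exam computation can be sketched in Python as follows. The sketch assumes that the scores dictionary covers all N executable statements and resolves ties pessimistically, counting every statement tied with the faulty one as inspected; first_fault_rank and exam_score are hypothetical helper names.

```python
from typing import Dict, Set


def first_fault_rank(scores: Dict[str, float], faulty: Set[str]) -> int:
    """1-based rank of the best-ranked faulty statement.

    ASSUMPTION: ties are resolved pessimistically, i.e. every statement whose score is
    at least the faulty statement's score is counted as inspected.
    """
    fault_score = max(scores[s] for s in faulty)
    return sum(1 for v in scores.values() if v >= fault_score)


def exam_score(scores: Dict[str, float], faulty: Set[str]) -> float:
    """Exam = rank of the first faulty statement / N * 100, with N = len(scores)."""
    return 100.0 * first_fault_rank(scores, faulty) / len(scores)
```

A lower value is better: a fault found at rank 1 among 4 statements gives an Exam score of 25%.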

4.4.2. acc@n

The acc@n metric counts the number of faults successfully localized within the top n positions of the ranking list [28]. In our case, the . When a fault spans multiple statements, we consider it localized if any of the faulty statements is ranked among the top n positions. We also use the max tie-breaking strategy for this evaluation metric.
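A minimal Python sketch of acc@n follows, assuming that each fault is represented by the rank of its best-ranked faulty statement (so a multi-statement fault counts as localized if any of its statements is in the top n) and that these ranks were already computed with the max tie-breaking strategy. The function name is hypothetical.

```python
from typing import Iterable


def acc_at_n(first_fault_ranks: Iterable[int], n: int) -> int:
    """Number of faults whose best-ranked faulty statement appears within the top n positions."""
    return sum(1 for rank in first_fault_ranks if rank <= n)
```

For example, acc_at_n([1, 3, 5, 12], 5) returns 3, because only the fourth fault falls outside the top five.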

4.4.3. Average Wasted Effort (AWE)

Since spectrum-based fault localization produces a ranked list of statements intended to place the faulty statements at the top, the common assumption is that developers inspect the list from the top to identify the fault. The wasted effort metric measures the developer effort wasted in inspecting non-faulty statements ranked higher than the faulty ones. Besides non-faulty statements ranked higher than the faulty ones, some non-faulty statements are likely to share the same rank as the faulty ones, i.e., a tie. Sarhan et al. estimated that in 54–56% of the cases in Defects4J, the faulty methods share the same rank with at least one other method.

In the case of ties, there are two scenarios. In the best case, the faulty statement is ranked above all non-faulty statements in the tie. Hence, the best-case wasted effort is defined in Equation (2).

In the worst case, the faulty statement is ranked below all non-faulty statements in the tie. Hence, the worst-case wasted effort is defined in Equation (3).

Therefore, inspired by Keller et al., we define the wasted effort (WE) as an average case in Equation (4) [49].

Finally, we compute the Average Wasted Effort (AWE) as the mean of the wasted effort (WE) from Equation (4) over all rankings.
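The following Python sketch encodes the best, worst, and average cases described above under stated assumptions: the best case counts non-faulty statements with a strictly higher score than the best-ranked faulty statement, the worst case also counts those tied with it, and the average case is their midpoint. Keller et al.'s exact formulation may differ, for example in whether the faulty statement itself is counted; the helper names are hypothetical.

```python
from statistics import mean
from typing import Dict, List, Set, Tuple


def wasted_effort(scores: Dict[str, float], faulty: Set[str]) -> Tuple[int, int, float]:
    """Best-case, worst-case, and average-case wasted effort for one ranking.

    Only non-faulty statements are counted, relative to the best-ranked faulty statement.
    """
    fault_score = max(scores[s] for s in faulty)
    others = [v for s, v in scores.items() if s not in faulty]
    best = sum(1 for v in others if v > fault_score)    # faulty statement first within its tie
    worst = sum(1 for v in others if v >= fault_score)  # faulty statement last within its tie
    return best, worst, (best + worst) / 2


def average_wasted_effort(rankings: List[Tuple[Dict[str, float], Set[str]]]) -> float:
    """AWE: mean of the average-case wasted effort over all rankings."""
    return mean(wasted_effort(scores, faulty)[2] for scores, faulty in rankings)
```

Averaging the best and worst cases charges half of the tied non-faulty statements to the developer, which avoids rewarding or penalizing a metric for an arbitrary ordering within a tie.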

4.5. Tie Breaking

Ties always occur when ranking the statements of the program under test with SBFL formulas, so ties between two or more program statements with the same suspiciousness score must be broken. To achieve this, we use the rankdata function from the scipy.stats module, the module for statistical functions and probability distributions of the SciPy [51] library for Python. The function is called as scipy.stats.rankdata(a, method), where a is the list of suspiciousness scores and method is one of 'average', 'min', 'max', 'dense', or 'ordinal'. This study uses method='max', which assigns every member of a group of tied values the maximum ordinal rank of that group. When the returned values are sorted in descending order, the value assigned the maximum ordinal rank among the tied values is ranked first. Here, we assume that the sorting function breaks ties arbitrarily, as specified by Spencer Pearson et al. [44]. For example, applying rankdata to the Purposely column in Table 3 returns [9, 9, 10, 5, 11, 12, 2, 2, 3, 5, 9, 9], and sorting this list returns [12, 11, 10, 9, 9, 9, 9, 5, 5, 3, 2, 2]. Similarly, applying rankdata to the Randomly column in Table 3 returns [12, 12, 12, 6, 12, 12, 6, 6, 6, 6, 6, 12], and sorting it yields [12, 12, 12, 12, 12, 12, 6, 6, 6, 6, 6, 6]. Recall that the sixth value in the list belongs to the faulty statement. The sorting function therefore breaks ties arbitrarily when it orders the values from largest to smallest (descending order).
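The max tie-breaking rule can be illustrated without SciPy. rank_max below is a hypothetical pure-Python stand-in that assigns the same rank values as scipy.stats.rankdata(values, method='max') on one-dimensional input: the rank of a value is the number of values less than or equal to it. Applying it to a list of max ranks, such as the Purposely ranks quoted above, returns the list unchanged.

```python
from bisect import bisect_right
from typing import List, Sequence


def rank_max(values: Sequence[float]) -> List[int]:
    """1-based ranks in which all tied values receive the largest rank of their group."""
    ordered = sorted(values)
    # bisect_right counts how many values are <= v, which is exactly the 'max' rank of v.
    return [bisect_right(ordered, v) for v in values]
```

For example, rank_max([0.5, 0.5, 0.9, 0.1]) gives [3, 3, 4, 1]: the two tied scores share rank 3, and sorting the ranks in descending order places the highest-scoring statement first.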

4.6. Statistical Tests

In this study, we used the Wilcoxon signed-rank test to assess statistical significance and Cliff’s delta to measure effect size. With these tests, we determined whether the combined risk evaluation metrics examined fewer program statements than the maximal and non-maximal ones before locating the first fault in the studied programs.

4.6.1. Wilcoxon Signed-Rank Test

The Wilcoxon signed-rank test is a suitable alternative to parametric tests such as the paired t-test when a normal distribution of the population cannot be assumed, particularly for matched pairs [13,16]. Since our comparison is a matched-pair one, in which we assess whether one risk evaluation metric (or a combination of metrics) performs better than another, we chose the Wilcoxon signed-rank test at a significance level of 5% (α = 0.05).
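The mechanics of the test can be shown with a minimal pure-Python sketch: compute paired differences, drop zero differences, rank their magnitudes (averaging ranks over ties), and compare the smaller signed-rank sum T with its null distribution. As an assumption for brevity, the p-value uses the large-sample normal approximation without tie or continuity correction; scipy.stats.wilcoxon provides exact and corrected variants. The function name and the Average Wasted Effort values are hypothetical.

```python
import math

def wilcoxon_signed_rank(x, y):
    """Two-sided Wilcoxon signed-rank test for paired samples x and y.

    Returns (T, p) where T = min(W+, W-) and p uses the normal
    approximation without tie or continuity correction.
    """
    # Paired differences; zero differences are discarded (Wilcoxon's convention).
    diffs = [a - b for a, b in zip(x, y) if a != b]
    n = len(diffs)
    if n == 0:
        return 0.0, 1.0
    # Rank |differences| from 1..n, giving tied magnitudes their average rank.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        average_rank = (i + j + 2) / 2  # mean of 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1
    w_plus = sum((r for r, d in zip(ranks, diffs) if d > 0), 0.0)
    w_minus = sum((r for r, d in zip(ranks, diffs) if d < 0), 0.0)
    t = min(w_plus, w_minus)
    # Under H0, T has mean n(n+1)/4 and variance n(n+1)(2n+1)/24.
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (t - mean) / sd  # z <= 0 because T <= mean
    phi = 0.5 * (1 + math.erf(z / math.sqrt(2)))  # standard normal CDF at z
    return t, min(2 * phi, 1.0)

# Hypothetical Average Wasted Effort of a combined metric (A) and a standalone
# metric (B) over ten paired runs; A wastes less effort in every pair.
combined = [3.0, 5.5, 2.0, 7.0, 4.5, 6.0, 1.5, 8.0, 2.5, 3.5]
standalone = [a + k for k, a in enumerate(combined, start=1)]
t, p = wilcoxon_signed_rank(combined, standalone)
print(t, p < 0.05)  # 0.0 True
```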

4.6.2. Cliff’s Delta

Cliff’s delta is an effect-size measure that quantifies the magnitude of the difference between two groups, X and Y. It estimates the probability that an observation from one group is larger than an observation from the other group, minus the probability of the reverse. In the context of this study, it tells us whether one risk evaluation metric performs better than another and how practically meaningful that difference is. Cliff’s delta d is defined in Equation (5) [52].

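$$d = \frac{\#(x_i > y_j) - \#(x_i < y_j)}{mn} \qquad (5)$$

where # denotes cardinality (the number of times the condition holds), and m and n are the sizes of groups X and Y, respectively. Each observation x_i in X is compared against each observation y_j in Y to count the number of times x_i > y_j and x_i < y_j. Finally, the difference between the two counts is divided by the total number of comparisons, mn.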

The value of Cliff’s delta d always lies in the interval [−1, 1]. The extreme values −1 and 1 indicate that the two groups, X and Y, do not overlap at all (every observation in one group is larger than every observation in the other), while 0 indicates that the two groups overlap completely (the two groups are similar).
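Cliff’s delta, d = (#(x > y) − #(x < y)) / (m·n), can be computed by direct pairwise comparison, which is adequate for samples of the size used here (for example, 30 iterations per metric). The function name and the values below are hypothetical and only illustrate the sign convention.

```python
def cliffs_delta(xs, ys):
    """Cliff's delta d = (#(x > y) - #(x < y)) / (m * n), always in [-1, 1]."""
    if not xs or not ys:
        raise ValueError("both groups must be non-empty")
    greater = sum(1 for x in xs for y in ys if x > y)
    less = sum(1 for x in xs for y in ys if x < y)
    return (greater - less) / (len(xs) * len(ys))

# Hypothetical Average Wasted Effort values: A = combined metric, B = standalone metric.
awe_a = [1.0, 2.0, 3.0]
awe_b = [2.0, 3.0, 4.0]
print(round(cliffs_delta(awe_a, awe_b), 3))  # -0.556
```

A negative value here means that A’s wasted effort tends to be lower than B’s, i.e., A tends to perform better.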

We used this statistical tool to compare the Average Wasted Effort scores computed by the combined risk evaluation metrics with those of all the other metrics in this study.

We adopt the interpretations of Cliff’s delta d from Romano et al., which are approximated from Cohen’s d as follows [53].

- |d| < 0.147: there is no difference in the performance of two risk evaluation metrics, and they are essentially the same.

- 0.147 ≤ |d| < 0.33: there is a small difference in the performance of two risk evaluation metrics.

- 0.33 ≤ |d| < 0.474: there is a medium difference in the performance of two risk evaluation metrics.

- |d| ≥ 0.474: there is a large difference in the performance of two risk evaluation metrics.
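The magnitude labels can be applied mechanically. This sketch uses the commonly cited thresholds of Romano et al. (0.147, 0.33, and 0.474 on |d|); the function name is hypothetical.

```python
def interpret_cliffs_delta(d):
    """Label the magnitude of Cliff's delta with the Romano et al. thresholds."""
    magnitude = abs(d)
    if magnitude < 0.147:
        return "negligible"  # essentially the same performance
    if magnitude < 0.33:
        return "small"
    if magnitude < 0.474:
        return "medium"
    return "large"

print([interpret_cliffs_delta(d) for d in (-0.05, 0.2, -0.4, -5 / 9)])
# ['negligible', 'small', 'medium', 'large']
```

Only the absolute value determines the magnitude; the sign of d separately indicates which metric tends to waste less effort.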

When comparing two risk evaluation metrics, a negative d indicates that the values in the treatment group (the combined risk evaluation metrics in our case) tend to be smaller than those in the control group (the standalone risk evaluation metrics in our case).

5. Results

This section presents the results of the empirical evaluation of different risk evaluation metrics.

5.1. RQ1: Which Combined Metrics Perform the Best among the Combinations?

We evaluate the effectiveness of the combined risk evaluation metrics with respect to their correlation, as described in Section 3.1. We compare their performance using two evaluation metrics: the Exam score and the wasted effort (WE). The evaluations and comparisons are based on the SIR (92 faults) and Defects4J (357 faults) programs.
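To make the notion of performance correlation concrete, the following minimal sketch computes a Pearson correlation coefficient between two metrics’ per-fault scores (for example, their Exam scores on the same faults). Section 3.1 is not reproduced here, so the choice of Pearson’s coefficient, the function name, and the sample values are assumptions for illustration.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation between two equally long lists of per-fault scores."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equally long lists with at least two values")
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    # Note: a constant list (sx or sy == 0) makes the coefficient undefined.
    return cov / (sx * sy)

# Hypothetical Exam scores (%) of two metrics on the same four faults.
metric_a = [1.0, 2.0, 3.0, 4.0]
metric_b = [8.0, 6.0, 4.0, 2.0]
print(round(pearson(metric_a, metric_b), 3))  # -1.0
```

A value near −1 would mean that one metric tends to do well exactly where the other does poorly, which is the intuition behind pairing negatively correlated metrics.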

Table 5 shows the performance of positively and negatively correlated combined risk evaluation metrics in small, medium, and large programs. Because negatively correlated pairs of maximal risk evaluation metrics are rare, only the few available ones are reported. The first column in Table 5 shows whether the two combined risk evaluation metrics are positively or negatively correlated. The next column gives the acronyms, as explained in Section 3, and the subsequent columns show the performance of each combined risk evaluation metric in terms of Exam and wasted effort. The highlighted combined risk evaluation metrics are the best-performing ones, which are then compared with the existing risk evaluation metrics.

Table 5.

Performance comparison of positively and negatively correlated combined risk evaluation metrics. The highlighted metrics performed the best in each group and are shortlisted for further assessments.

A shortlisted combined risk evaluation metric is considered the best performing when it outperforms the rest in two or more subject program categories. We select one combined risk evaluation metric to represent each group of risk evaluation metrics. Recall that the groups are maximal and maximal (MM), non-maximal and maximal (NM), and non-maximal and non-maximal (NN).

- Negative Correlation

Among the combinations of two maximal risk evaluation metrics, the highly correlated combination outperformed the others. Among the combinations of two non-maximal risk evaluation metrics, the neutrally correlated combination outperformed the rest. The combinations of maximal and non-maximal risk evaluation metrics show that the lowly correlated combination is promising. Furthermore, in terms of Exam scores, outperformed all other combinations in small programs and outperformed all other combinations in medium and large programs. In terms of wasted effort, outperformed all other combinations in the small program and outperformed all other combinations in medium and large programs. In summary, on average, the best negatively correlated combined risk evaluation metric is the combination of lowly correlated maximal and non-maximal risk evaluation metrics.

- Positive Correlation

For positive correlations, in terms of Exam scores, the combination of two highly correlated maximal risk evaluation metrics outperformed all its counterparts in small programs, the lowly correlated combination of maximal and non-maximal risk evaluation metrics performed better in medium programs, and the lowly correlated combination of two non-maximal risk evaluation metrics showed more promise than the others in large programs. In terms of wasted effort, the two highly correlated maximal risk evaluation metrics are better in small programs, and the lowly correlated combined risk evaluation metric performs the best in medium and large programs. On average, the lowly correlated combined risk evaluation metric is the best among the positively correlated combinations.

- Answer to RQ1:

The overall performance shows that two positively or negatively correlated risk evaluation metrics can be combined for effective fault localization. Two findings hold for this research question: (1) combining two maximal risk evaluation metrics cannot optimize fault localization, because the combination cannot outperform its standalone constituents; (2) lowly correlated risk evaluation metrics, whether positively or negatively correlated, can help in fault localization, because their combination can outperform the individual metrics. Therefore, the answer to this question is that lowly correlated risk evaluation metrics are suitable for combination for effective fault localization.

5.2. RQ2: How Do the Best Performing Combined Risk Evaluation Metrics Compare against the Performance of Standalone Maximal and Non-Maximal Risk Evaluation Metrics?

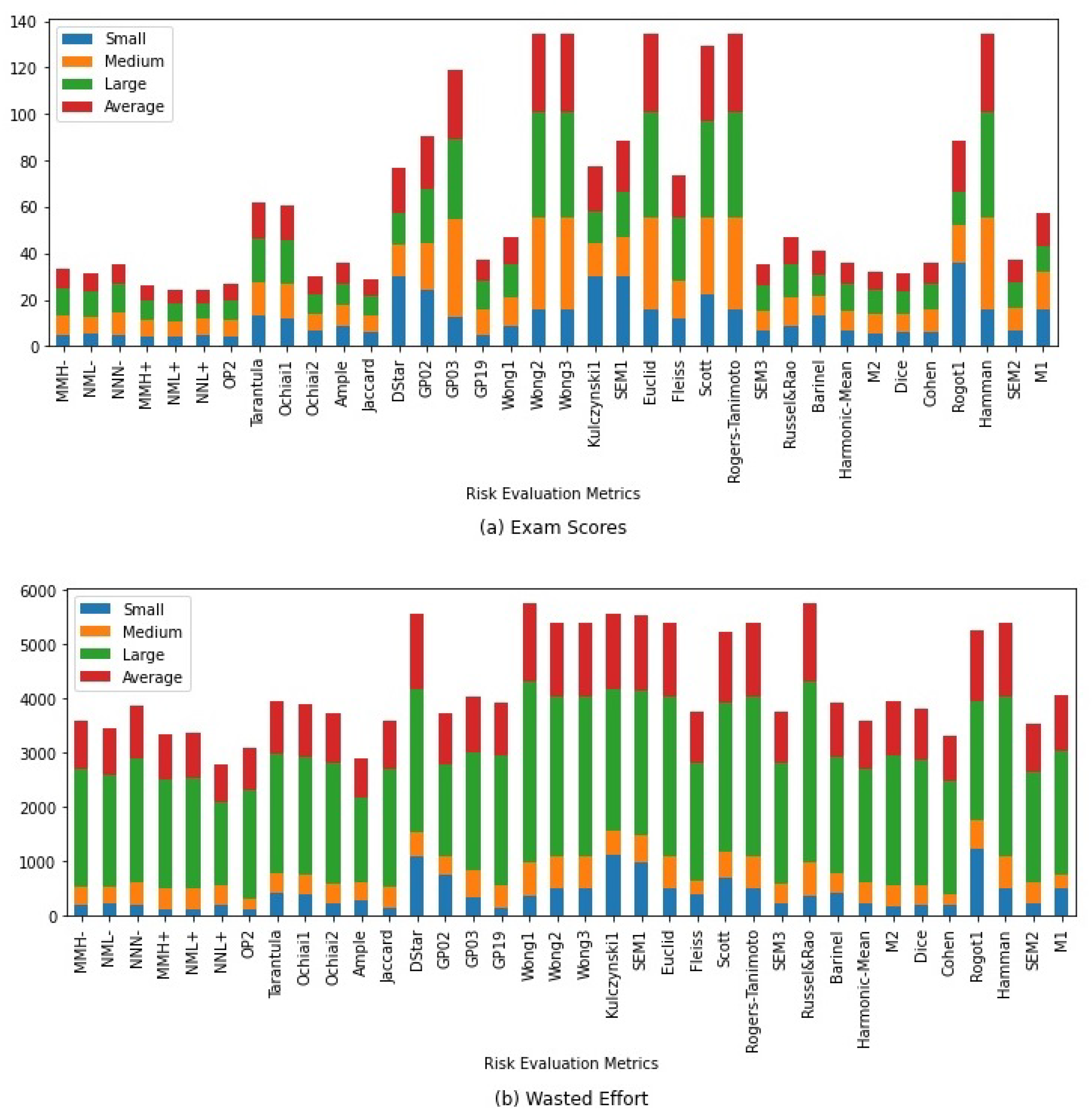

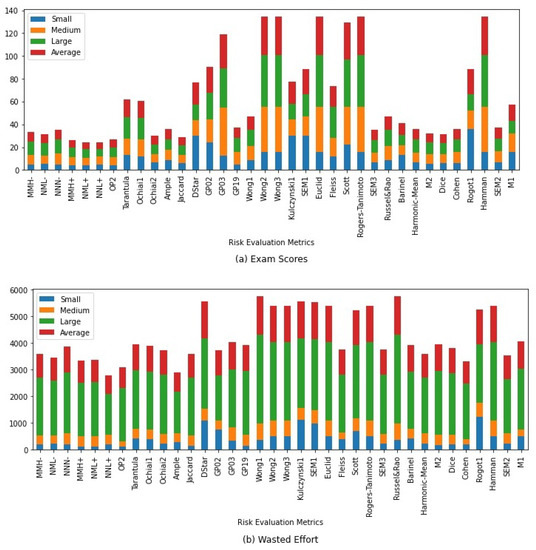

Table 6 shows the performance comparison of the best-performing combined metrics and the existing metrics. Similarly, Figure 1a visualizes the Exam scores, and Figure 1b visualizes the wasted effort of the compared risk evaluation metrics. Because of their outstanding performance against the other risk evaluation metrics, we selected six combinations (Tarantula and Wong2, Euclid and Tarantula, Euclid and SEM2, OP2 and Wong1, Jaccard and Harmonic-Mean, and Rogot1 and Barinnel) to represent the maximal and maximal, non-maximal and maximal, and non-maximal and non-maximal combined risk evaluation metrics.

Table 6.

Performance comparison of the combined risk evaluation metrics with the standalone risk evaluation metrics. The best metrics for each subject program are highlighted in grey colour for easy visualization.

Figure 1.

(a) Stacked bar chart of the Exam scores of the combined and existing risk evaluation metrics. (b) Wasted effort computed by the combined and existing risk evaluation metrics.

Therefore, we compare these six risk evaluation metrics with the other existing ones.

- Small Programs:

- The small program benchmarks were used to assess the performance of the combined and existing risk evaluation metrics. This analysis again confirmed that some existing risk evaluation metrics, such as OP2, are optimal in small programs: OP2 is more effective in the small programs than all the combined risk evaluation metrics, in terms of both Exam and wasted effort. Furthermore, the combined risk evaluation metric comprising OP2 and Wong1 performs like OP2 but better than Wong1. Similarly, apart from the positively correlated combination of two maximal risk evaluation metrics, all other combinations outperformed, in small programs, the individual risk evaluation metrics from which they were formed.

- Medium Programs:

- The combined risk evaluation metric comprising Jaccard and Harmonic-Mean outperformed all other combined and existing risk evaluation metrics in terms of Exam in the medium programs, and it also outperformed both of the standalone risk evaluation metrics used to form it. In terms of wasted effort, OP2 is more effective than all other risk evaluation metrics. Similarly, apart from the two highly correlated maximal risk evaluation metrics and the low negatively correlated non-maximal risk evaluation metrics, all other combinations outperformed the standalone risk evaluation metrics from which they were formed.

- Large Programs:

- The combined risk evaluation metric comprising two lowly correlated non-maximal risk evaluation metrics, Rogot1 and Barinnel, outperformed all other studied risk evaluation metrics in the large programs in terms of both Exam and wasted effort. All other combined risk evaluation metrics outperformed the individual existing risk evaluation metrics that make them up, except, in terms of Exam, the combination of two negligibly negatively correlated non-maximal risk evaluation metrics. Therefore, two lowly correlated non-maximal risk evaluation metrics are suitable for combination in large programs.

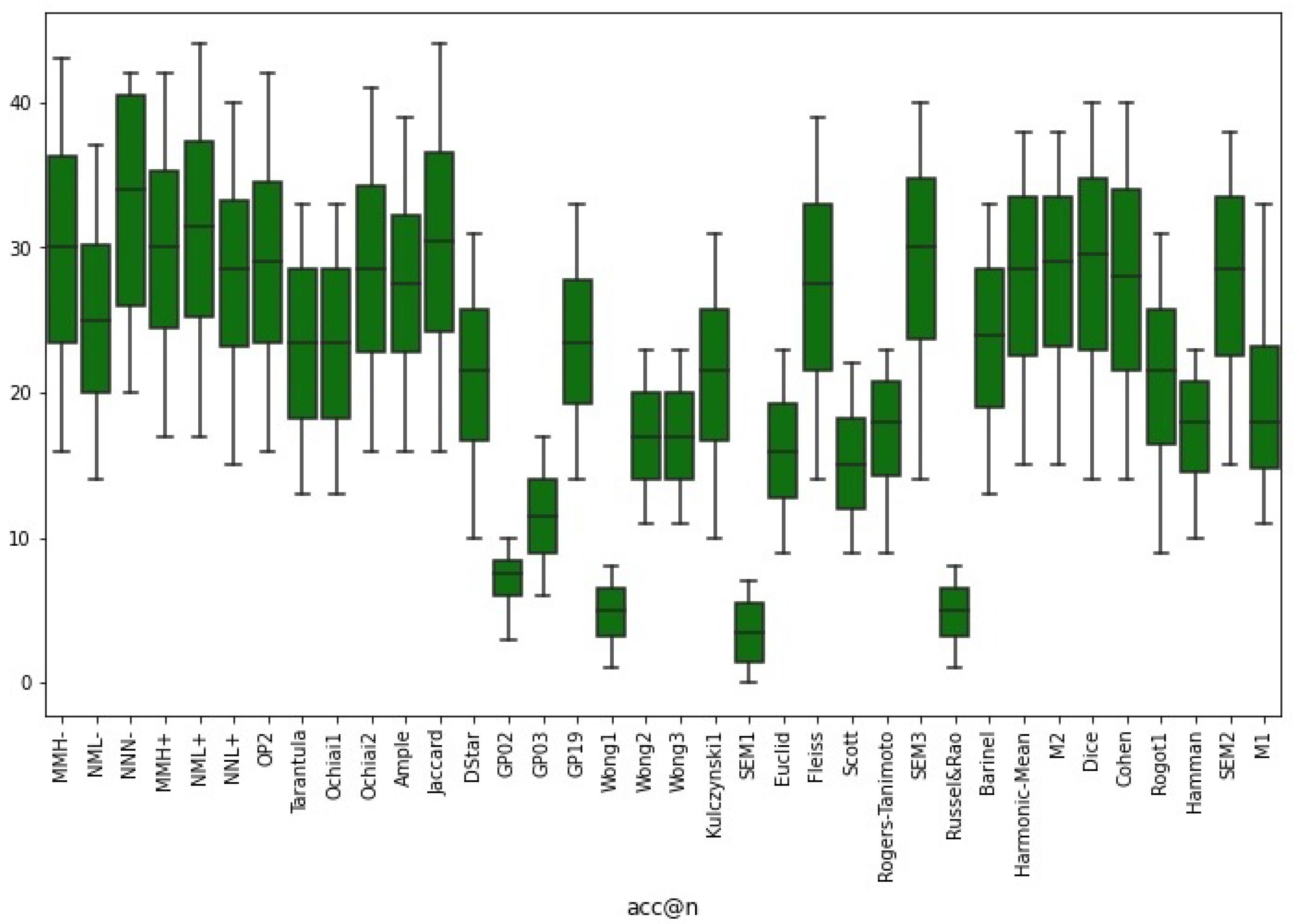

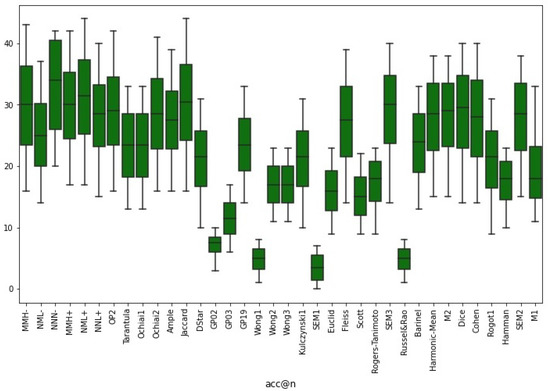

We further assessed the accuracy of all the risk evaluation metrics studied in this paper. We use acc@n to count the number of faults each metric localizes within the top n positions of the suspiciousness list. The more faults a metric locates, the better it is at fault localization.
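acc@n reduces to counting faults whose rank falls within the top n. This sketch assumes that each fault is represented by a single 1-based rank of its faulty statement after tie breaking; how multi-statement faults are reduced to one rank is not specified here and would be an additional assumption. The function names and ranks are hypothetical.

```python
def acc_at_n(fault_ranks, n):
    """Number of faults whose 1-based rank in the suspiciousness list is <= n."""
    return sum(1 for rank in fault_ranks if rank <= n)

def acc_at_n_percent(fault_ranks, n):
    """acc@n as a percentage of all faults."""
    return 100 * acc_at_n(fault_ranks, n) / len(fault_ranks)

# Hypothetical ranks of the faulty statement for six faults under one metric.
ranks = [1, 3, 12, 1, 7, 25]
print([acc_at_n(ranks, n) for n in (1, 5, 10)])  # [2, 3, 4]
```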

Table 7 lists, and Figure 2 visualizes, the percentage of faults that the combined and standalone risk evaluation metrics localized in the small, medium, and large program benchmarks at the statement granularity level.

Table 7.

Performance comparison of the combined risk evaluation metrics with the standalone risk evaluation metrics based on acc@n evaluation metric.

Figure 2.

Fault localization accuracy of the combined and existing risk evaluation metrics.

In the small program benchmarks, Ample and GP19 placed more faults at the top of the ranking list than all the other studied risk evaluation metrics, including the combined ones. Nevertheless, the majority of the combined risk evaluation metrics (all except the positively low correlated two non-maximal and the negatively low correlated maximal and non-maximal combinations) localized more faults at the top of the ranking list in the small programs than the remaining risk evaluation metrics. Furthermore, none of the individual risk evaluation metrics that make up the combined risk evaluation metrics outperformed any of the combined risk evaluation metrics.

In the medium programs, all the combined risk evaluation metrics localized more faults than the studied standalone risk evaluation metrics, except the low positively correlated two non-maximal risk evaluation metrics, which localized the same number of faults as some existing risk evaluation metrics.

In large programs, the combination of two negligibly negatively correlated non-maximal risk evaluation metrics localized the most faults at the top of the ranking list. Aside from this combination, all other combined risk evaluation metrics localized as many faults as, or more than, all the other studied risk evaluation metrics.

Overall, all the combined risk evaluation metrics localized more faults than all the existing risk evaluation metrics at Top-10, and none of the individual risk evaluation metrics that form a combination localized more faults than the combination itself.

- Answer to RQ2:

The results show that the combined risk evaluation metrics not only outperformed the individual risk evaluation metrics from which they were formed but also outperformed all the existing ones, except OP2 in some cases. On average, the low positively correlated combination of maximal and non-maximal risk evaluation metrics performed the best in terms of Exam, the low positively correlated combination of two non-maximal risk evaluation metrics performed the best in terms of wasted effort, and the negligibly negatively correlated combination of two non-maximal risk evaluation metrics performed the best in terms of acc@1.

5.3. RQ3: Is There Any Statistical Performance Difference between the Combined and Standalone Maximal and Non-Maximal Risk Evaluation Metrics?

After comparing the performance of our proposed method and the existing methods, we estimate the statistical reliability of their performances using the two statistical tools, the Wilcoxon signed-rank test and Cliff’s delta, that were previously used in [13,54,55,56].

We aim to confirm whether the performances of two compared risk evaluation metrics are similar or different. If their performances differ, we assume that one risk evaluation metric is better than the other. The Wilcoxon signed-rank test, which compares two paired samples (the wasted effort values in our case), is an appropriate tool for this purpose. In addition, Cliff’s delta is an effect-size measure that quantifies the difference between two groups of samples beyond the p-value. We use it to quantify the performance difference between the studied risk evaluation metrics; refer to RQ3 in Section 4.3 for how we generate the data for this purpose.

Table 8 contains the interpretations of the Wilcoxon statistical analysis. In the context of this study, for a given combined risk evaluation metric (A) and existing risk evaluation metric (B), the lists of measures are the Average Wasted Effort values for all the faulty program statements computed by A and B in 30 iterations. If the p-value is less than or equal to 0.05, we assume there is a significant difference between the two compared risk evaluation metrics; if it is greater than 0.05, we assume there is no significant difference in their performance. We have 6 combined, 14 maximal, and 16 non-maximal risk evaluation metrics, so there are 6 × (14 + 16) = 180 pairs of statistical comparisons in this study.

Table 8.

The statistical comparison of the combined and standalone risk evaluation metrics.

Table 8 also contains Cliff’s delta statistics, which measure the effect sizes. An advantage of Cliff’s delta is that if the Average Wasted Effort values of the combined risk evaluation metric (A) tend to be smaller than those of the existing risk evaluation metric (B), d is negative, which implies that A outperformed B. The symbol ‘<’ denotes that the p-value of the Wilcoxon signed-rank test is less than 0.05, ‘>’ that it is greater than 0.05, and ‘=’ that it is equal to 0.05.

In Table 8, each metric has two rows: the first row shows the Cliff’s delta (d) values, and the second row shows the Wilcoxon signed-rank test p-values. We deduce the following. There are statistically significant differences, with large effect sizes, between the combined risk evaluation metrics and the existing risk evaluation metrics. OP2’s advantage over all the combined risk evaluation metrics is statistically significant, with a large effect size in favour of OP2. Conversely, the combined risk evaluation metric, , outperformed OP2 with no visible effect size.

Therefore, the statistical analysis shows that the best-performing combined risk evaluation metric, the positive lowly correlated one, outperformed all the existing studied metrics not merely by chance: the differences are statistically significant, with large effect sizes.

- Answer to RQ3:

The statistical analysis shows significant differences between the performance of the best combined risk evaluation metrics and that of all other standalone risk evaluation metrics, and the effect sizes are large in many cases.

5.4. Discussion

Many techniques have been proposed in software testing, especially in SBFL, to improve the ranking of suspicious statements in software programs. Human-designed techniques and Genetic Programming techniques have been adopted in the literature to minimize the effort of locating faults in a program. We have shown that it is possible to combine two of these existing risk evaluation metrics to enhance fault localization. We combined different risk evaluation metrics to discover the types that can be combined directly, without machine learning or learning-to-rank algorithms. Most studies in the literature that attempted to combine metrics did so with the aid of genetic algorithms [23] or learning-to-rank algorithms [24,28,31], and many of them combined many formulas without considering their compatibility.

This study combined different metrics by computing their suspiciousness scores for each program statement and normalizing those scores to the range [0, 1]. The normalized scores are then combined into a single suspiciousness score, which serves as the final suspiciousness score. We compared the effectiveness of the combined risk evaluation metrics with that of the existing maximal and non-maximal risk evaluation metrics.
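The normalize-and-combine step can be sketched as follows. The description above does not specify the normalization method or how the two normalized scores are merged, so this sketch assumes min-max normalization and a plain sum (a mean would give the same ranking), and it maps a constant score list to all zeros. The function names and scores are hypothetical. The example shows how a statement ranked first by neither metric can rise to the top of the combined ranking.

```python
def min_max_normalize(scores):
    """Rescale suspiciousness scores to [0, 1]; a constant list maps to all zeros."""
    lo, hi = min(scores), max(scores)
    if hi == lo:
        return [0.0] * len(scores)
    return [(s - lo) / (hi - lo) for s in scores]

def combine_scores(scores_a, scores_b):
    """Hypothetical combination: sum of the two metrics' normalized scores."""
    norm_a = min_max_normalize(scores_a)
    norm_b = min_max_normalize(scores_b)
    return [a + b for a, b in zip(norm_a, norm_b)]

# Hypothetical raw scores of two metrics for three statements (different scales).
metric_a = [1.0, 4.0, 2.5]  # ranks statement 1 first
metric_b = [8.0, 0.0, 6.0]  # ranks statement 0 first
combined = combine_scores(metric_a, metric_b)
print(combined)  # [1.0, 1.0, 1.25]
ranking = sorted(range(len(combined)), key=lambda i: combined[i], reverse=True)
print(ranking)  # [2, 0, 1]
```

Normalizing first keeps a metric with a large numeric range from dominating the sum; any ties left in the combined scores (statements 0 and 1 here) still need the tie-breaking step described in Section 4.5.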

Table 5 shows the performance of negatively and positively correlated risk evaluation metrics. We observed that when two risk evaluation metrics are combined, the result either outperforms only one of them or performs like neither of them. This can be observed when combining highly positively correlated risk evaluation metrics, which is why we conducted a comprehensive study of different combinations. Surprisingly, among pairs of maximal risk evaluation metrics, only those that are highly or moderately negatively correlated are good for combination, unlike the positively and negatively lowly or neutrally correlated ones.

Furthermore, most risk evaluation metrics with higher positive correlations outperformed those with lower correlations, even though their combined power cannot surpass the individual metrics. This supports the study by Zou et al. [5], which states that two techniques are positively correlated if they are good at locating the same sort of faults. This study further shows that two highly and positively correlated techniques of the same family do not necessarily have the same localization ability, and that their combination will consistently outperform only one of the combined techniques. Such performance does not enhance fault localization, because the aim of combination is to improve on both constituent techniques.

In addition, some non-maximal risk evaluation metrics can also be combined with maximal ones, provided they are low negatively correlated. By contrast, if developers intend to combine two non-maximal risk evaluation metrics, they should consider pairs that are positively lowly or neutrally correlated.

Table 6 shows the performance comparisons of the best-performing combined risk evaluation metrics with the existing ones. A combined risk evaluation metric must outperform all other combined ones in two or more categorized datasets before we conclude that it is the best. We use one best risk evaluation metric to represent each group (maximal vs. maximal, non-maximal vs. maximal, and non-maximal vs. non-maximal) for each correlation direction. This produces six combined risk evaluation metrics: Tarantula and Wong2, Euclid and Tarantula, Euclid and SEM2, OP2 and Wong1, Jaccard and Harmonic-Mean, and Rogot1 and Barinnel. We then compare their performance with that of the existing risk evaluation metrics.

This study shows that only one existing risk evaluation metric, OP2, is more effective than the majority of the combined risk evaluation metrics, and that, overall, the combination of two positively correlated non-maximal risk evaluation metrics (Rogot1 and Barinnel) outperformed all the compared risk evaluation metrics.

Therefore, to combine two techniques of the same family for effective fault localization, we may use two non-maximal techniques with a low positive correlation. Even though it is very hard to obtain highly negatively correlated risk evaluation metrics, combining such metrics, where available, would improve the accuracy of fault localization.

Therefore, we use the rubric highlighted in Section 1 to summarize our findings in this study.

- Two positively correlated maximal metrics are already good at locating software faults. Their combination cannot outperform them individually and cannot enhance fault localization.

- Two negatively correlated maximal risk evaluation metrics are good at locating different sorts of faults. Their combination can outperform their individual fault localization effectiveness, provided their correlation is moderate or high.

- Two positively correlated non-maximal metrics are not good at locating software faults, yet their combination can increase the overall fault localization effectiveness, provided their correlation is low or neutral.

- Two negatively correlated non-maximal metrics are not good at locating software faults, and their combination cannot improve fault localization effectiveness.

- A negatively correlated pair of a maximal and a non-maximal metric, where one is good and the other is not good at locating software faults, can be complementary provided their correlation is low; their combination can then outperform their individual fault localization effectiveness.

6. Threats to Validity

Since empirical research faces many risks concerning the validity of its results, we describe the measures we took to alleviate them. We organize these threats to validity into three categories as follows.

- Construct validity: The threat to construct validity relates to the program granularity used for spectrum-based fault localization. In this study, we localize faults at the statement level. Since this is the finest granularity level and captures program behaviour in detail, this risk to construct validity is minimized. This choice is also in line with many previous studies, which have localized faults at the statement granularity.

- Internal validity: The threat to internal validity relates to the evaluation metrics used to compare different risk evaluation metrics, since one technique might be better than another only for a particular evaluation metric. Therefore, we use three evaluation metrics that capture different aspects of performance: the Exam score, Average Wasted Effort, and acc@n. Previous studies have also used these metrics [12,13,16,44]. Since the metrics capture different aspects and are established in previous studies, this threat is reasonably mitigated.

- External validity: The evaluation of risk evaluation metrics in this study depends on the dataset of subject programs used and may not generalize; indeed, the results and findings of many fault localization studies are not directly generalizable. A further threat to external validity is our method for determining which risk evaluation metrics are suitable for combination. We initially computed performance correlations between different risk evaluation metrics on 30 randomly selected faults from the SIR repository and Defects4J, and a different set of randomly selected faults, or a different dataset, would likely yield different correlation results. Nonetheless, this study suggests which metrics are best suited for combination for effective fault localization.

7. Conclusions

This paper explored and empirically evaluated different combinations of maximal and non-maximal risk evaluation metrics based on their correlations. The primary aim of this empirical study was to ascertain which combinations of risk evaluation metrics may improve the effectiveness of fault localization, more specifically, of spectrum-based fault localization.

The following findings are confirmed in this study.

- Finding 1:

- The strongest negative correlation obtained between two maximal risk evaluation metrics is −0.129. Their combination outperformed both constituent risk evaluation metrics but did not outperform the best existing risk evaluation metric in this study, OP2. This suggests that two maximal risk evaluation metrics with a strong negative correlation (−0.70 or stronger) would have a very high chance of yielding effective fault localization performance.

- Finding 2:

- In practice, combining maximal and non-maximal risk evaluation metrics with moderate or high correlation, whether positive or negative, can outperform only one of the two constituent risk evaluation metrics, not both. For effective fault localization, it is best to combine such metrics when their correlation is low.

- Finding 3:

- Combining two non-maximal risk evaluation metrics with high or moderate correlation, whether positive or negative, cannot outperform the individual risk evaluation metrics. By contrast, pairs of these risk evaluation metrics with low or negligible positive correlation can be considered for effective fault localization.

Author Contributions

Conceptualization, A.A. and T.S.; methodology, A.A. and T.S.; software, A.A.; data curation, A.A., T.S. and L.G.; original draft preparation, A.A.; writing-review and editing, A.A., L.G. and T.S.; visualization, A.A.; funding acquisition, T.S. and Z.D. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Zhejiang Provincial Natural Science Foundation of China under Grant No. LY22F020019, the Zhejiang Science and Technology Plan Project under Grant No. 2022C01045, and the Natural Science Foundation of China under Grants 62132014 and 61101111.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Jones, J.A.; Harrold, M.J.; Stasko, J. Visualization of test information to assist fault localization. In Proceedings of the 24th International Conference on Software Engineering, ICSE 2002, Orlando, FL, USA, 25 May 2002; pp. 467–477.

- Abreu, R.; Zoeteweij, P.; Golsteijn, R.; Van Gemund, A.J.C. A practical evaluation of spectrum-based fault localization. J. Syst. Softw. 2009, 82, 1780–1792.

- Wong, C.P.; Santiesteban, P.; Kästner, C.; Le Goues, C. VarFix: Balancing edit expressiveness and search effectiveness in automated program repair. In Proceedings of the 29th ACM Joint Meeting on European Software Engineering Conference and Symposium on the Foundations of Software Engineering, Athens, Greece, 23–28 August 2021; pp. 354–366.

- Ye, H.; Martinez, M.; Durieux, T.; Monperrus, M. A comprehensive study of automatic program repair on the QuixBugs benchmark. J. Syst. Softw. 2021, 171, 110825.

- Zou, D.; Liang, J.; Xiong, Y.; Ernst, M.D.; Zhang, L. An Empirical Study of Fault Localization Families and Their Combinations. IEEE Trans. Softw. Eng. 2019, 47, 332–347.

- Srivastava, S. A Study on Spectrum Based Fault Localization Techniques. J. Comput. Eng. Inf. Technol. 2021, 4, 2.

- Ghosh, D.; Singh, J. Spectrum-based multi-fault localization using Chaotic Genetic Algorithm. Inf. Softw. Technol. 2021, 133, 106512.

- Jiang, J.; Wang, R.; Xiong, Y.; Chen, X.; Zhang, L. Combining spectrum-based fault localization and statistical debugging: An empirical study. In Proceedings of the 2019 34th IEEE/ACM International Conference on Automated Software Engineering (ASE), San Diego, CA, USA, 11–15 November 2019; pp. 502–514.

- Dallmeier, V.; Lindig, C.; Zeller, A. Lightweight defect localization for Java. In European Conference on Object-Oriented Programming; Springer: Berlin/Heidelberg, Germany, 2005; pp. 528–550.

- Ajibode, A.; Shu, T.; Said, K.; Ding, Z. A Fault Localization Method Based on Metrics Combination. Mathematics 2022, 10, 2425.

- Wong, C.P.; Xiong, Y.; Zhang, H.; Hao, D.; Zhang, L.; Mei, H. Boosting bug-report-oriented fault localization with segmentation and stack-trace analysis. In Proceedings of the 2014 IEEE International Conference on Software Maintenance and Evolution, Victoria, BC, Canada, 29 September–3 October 2014; pp. 181–190.

- Yoo, S. Evolving human competitive spectra-based fault localisation techniques. In International Symposium on Search Based Software Engineering; Springer: Berlin/Heidelberg, Germany, 2012; pp. 244–258.

- Ajibode, A.A.; Shu, T.; Ding, Z. Evolving Suspiciousness Metrics From Hybrid Data Set for Boosting a Spectrum Based Fault Localization. IEEE Access 2020, 8, 198451–198467.

- Wu, T.; Dong, Y.; Lau, M.F.; Ng, S.; Chen, T.Y.; Jiang, M. Performance Analysis of Maximal Risk Evaluation Formulas for Spectrum-Based Fault Localization. Appl. Sci. 2020, 10, 398.

- Heiden, S.; Grunske, L.; Kehrer, T.; Keller, F.; Van Hoorn, A.; Filieri, A.; Lo, D. An evaluation of pure spectrum-based fault localization techniques for large-scale software systems. Softw. Pract. Exp. 2019, 49, 1197–1224.

- Wong, W.E.; Debroy, V.; Gao, R.; Li, Y. The DStar method for effective software fault localization. IEEE Trans. Reliab. 2013, 63, 290–308.

- Naish, L.; Lee, H.J.; Ramamohanarao, K. A model for spectra-based software diagnosis. ACM Trans. Softw. Eng. Methodol. (TOSEM) 2011, 20, 1–32.

- Liblit, B.; Naik, M.; Zheng, A.X.; Aiken, A.; Jordan, M.I. Scalable statistical bug isolation. ACM SIGPLAN Not. 2005, 40, 15–26.

- Chen, M.Y.; Kiciman, E.; Fratkin, E.; Fox, A.; Brewer, E. Pinpoint: Problem determination in large, dynamic internet services. In Proceedings of the International Conference on Dependable Systems and Networks, Washington, DC, USA, 23–26 June 2002; pp. 595–604.

- Abreu, R.; Zoeteweij, P.; Van Gemund, A.J.C. An evaluation of similarity coefficients for software fault localization. In Proceedings of the 2006 12th Pacific Rim International Symposium on Dependable Computing (PRDC’06), Riverside, CA, USA, 18–20 December 2006; pp. 39–46.

- Wong, W.E.; Qi, Y.; Zhao, L.; Cai, K.Y. Effective fault localization using code coverage. In Proceedings of the 31st Annual International Computer Software and Applications Conference (COMPSAC 2007), Beijing, China, 24–27 July 2007; Volume 1, pp. 449–456.

- Yoo, S.; Xie, X.; Kuo, F.C.; Chen, T.Y.; Harman, M. Human competitiveness of genetic programming in spectrum-based fault localisation: Theoretical and empirical analysis. ACM Trans. Softw. Eng. Methodol. (TOSEM) 2017, 26, 1–30.

- Wang, S.; Lo, D.; Jiang, L.; Lau, H.C. Search-based fault localization. In Proceedings of the 2011 26th IEEE/ACM International Conference on Automated Software Engineering (ASE 2011), Lawrence, KS, USA, 6–10 November 2011; pp. 556–559.

- Xuan, J.; Monperrus, M. Learning to Combine Multiple Ranking Metrics for Fault Localization. In Proceedings of the 2014 IEEE International Conference on Software Maintenance and Evolution, Victoria, BC, Canada, 6 December 2014; pp. 191–200.

- Zhang, X.Y.; Jiang, M. SPICA: A Methodology for Reviewing and Analysing Fault Localisation Techniques. In Proceedings of the 2021 IEEE International Conference on Software Maintenance and Evolution (ICSME), Luxembourg, 27 September–1 October 2021; pp. 366–377.

- Xie, X.; Chen, T.Y.; Kuo, F.C.; Xu, B. A theoretical analysis of the risk evaluation formulas for spectrum-based fault localization. ACM Trans. Softw. Eng. Methodol. (TOSEM) 2013, 22, 1–40.

- Xie, X.; Kuo, F.C.; Chen, T.Y.; Yoo, S.; Harman, M. Provably optimal and human-competitive results in sbse for spectrum based fault localisation. In International Symposium on Search Based Software Engineering; Springer: Berlin/Heidelberg, Germany, 2013; pp. 224–238.

- Le, T.D.B.; Lo, D.; Le Goues, C.; Grunske, L. A learning-to-rank based fault localization approach using likely invariants. In Proceedings of the 25th International Symposium on Software Testing and Analysis, Saarbrücken, Germany, 18–20 July 2016; pp. 177–188.

- Ernst, M.D.; Cockrell, J.; Griswold, W.G.; Notkin, D. Dynamically discovering likely program invariants to support program evolution. IEEE Trans. Softw. Eng. 2001, 27, 99–123.

- Just, R.; Jalali, D.; Ernst, M.D. Defects4J: A database of existing faults to enable controlled testing studies for Java programs. In Proceedings of the 2014 International Symposium on Software Testing and Analysis, San Jose, CA, USA, 21–25 July 2014; pp. 437–440.

- Sohn, J.; Yoo, S. Fluccs: Using code and change metrics to improve fault localization. In Proceedings of the 26th ACM SIGSOFT International Symposium on Software Testing and Analysis, Santa Barbara, CA, USA, 10–14 July 2017; ACM: New York, NY, USA, 2017; pp. 273–283.

- Kim, Y.; Mun, S.; Yoo, S.; Kim, M. Precise learn-to-rank fault localization using dynamic and static features of target programs. ACM Trans. Softw. Eng. Methodol. (TOSEM) 2019, 28, 1–34.

- Jones, J.A.; Harrold, M.J. Empirical Evaluation of the Tarantula Automatic Fault-Localization Technique. In Proceedings of the 20th IEEE/ACM International Conference on Automated Software Engineering (ASE ’05), Long Beach, CA, USA, 7–11 November 2005; Association for Computing Machinery: New York, NY, USA, 2005; pp. 273–282.

- Ochiai, A. Zoogeographic studies on the soleoid fishes found in Japan and its neighbouring regions. Bull. Jpn. Soc. Sci. Fish. 1957, 22, 526–530.

- Choi, S.S.; Cha, S.H.; Tappert, C.C. A survey of binary similarity and distance measures. J. Syst. Cybern. Informatics 2010, 8, 43–48.

- Van de Vijver, F.J.; Leung, K. Methods and Data Analysis for Cross-Cultural Research; Cambridge University Press: Cambridge, UK, 2021; Volume 116.

- Golagha, M.; Pretschner, A.; Briand, L.C. Can we predict the quality of spectrum-based fault localization? In Proceedings of the 2020 IEEE 13th International Conference on Software Testing, Validation and Verification (ICST), Porto, Portugal, 24–28 October 2020; pp. 4–15.

- Zheng, W.; Hu, D.; Wang, J. Fault localization analysis based on deep neural network. Math. Probl. Eng. 2016, 2016.

- Lo, D.; Jiang, L.; Budi, A. Comprehensive evaluation of association measures for fault localization. In Proceedings of the 2010 IEEE International Conference on Software Maintenance, Timisoara, Romania, 12–18 September 2010; pp. 1–10.

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

- Rothermel, G.; Elbaum, S.; Kinneer, A.; Do, H. Software-artifact infrastructure repository. 2006. Available online: http://sir.unl.edu/portal (accessed on 10 December 2020).

- Zhang, X.; Gupta, N.; Gupta, R. Locating faults through automated predicate switching. In Proceedings of the 28th International Conference on Software Engineering, Shanghai, China, 20–28 May 2006; pp. 272–281.

- Laghari, G.; Murgia, A.; Demeyer, S. Fine-Tuning Spectrum Based Fault Localisation with Frequent Method Item Sets. In Proceedings of the 31st IEEE/ACM International Conference on Automated Software Engineering (ASE 2016), Singapore, 3–7 September 2016; Association for Computing Machinery: New York, NY, USA, 2016; pp. 274–285.

- Pearson, S.; Campos, J.; Just, R.; Fraser, G.; Abreu, R.; Ernst, M.D.; Pang, D.; Keller, B. Evaluating and improving fault localization. In Proceedings of the 2017 IEEE/ACM 39th International Conference on Software Engineering (ICSE), Buenos Aires, Argentina, 20–28 May 2017; pp. 609–620.

- Just, R.; Parnin, C.; Drosos, I.; Ernst, M.D. Comparing developer-provided to user-provided tests for fault localization and automated program repair. In Proceedings of the 2018 International Symposium on Software Testing and Analysis (ISSTA 2018), Amsterdam, The Netherlands, 16–21 July 2018; pp. 287–297.

- Chen, Z.; Kommrusch, S.J.; Tufano, M.; Pouchet, L.; Poshyvanyk, D.; Monperrus, M. SEQUENCER: Sequence-to-Sequence Learning for End-to-End Program Repair. IEEE Trans. Softw. Eng. 2019, 47, 1943–1959.

- Zhang, Z.; Chan, W.K.; Tse, T.H.; Jiang, B.; Wang, X. Capturing propagation of infected program states. In Proceedings of the 7th Joint Meeting of the European Software Engineering Conference and the ACM SIGSOFT Symposium on the Foundations of Software Engineering, Amsterdam, The Netherlands, 24–28 August 2009; pp. 43–52.

- Debroy, V.; Wong, W.E.; Xu, X.; Choi, B. A grouping-based strategy to improve the effectiveness of fault localization techniques. In Proceedings of the 2010 10th International Conference on Quality Software, Zhangjiajie, China, 14–15 July 2010; pp. 13–22.

- Keller, F.; Grunske, L.; Heiden, S.; Filieri, A.; van Hoorn, A.; Lo, D. A critical evaluation of spectrum-based fault localization techniques on a large-scale software system. In Proceedings of the 2017 IEEE International Conference on Software Quality, Reliability and Security (QRS), Prague, Czech Republic, 25–29 July 2017; pp. 114–125.

- de Souza, H.A.; Chaim, M.L.; Kon, F. Spectrum-based software fault localization: A survey of techniques, advances, and challenges. arXiv 2016, arXiv:1607.04347.

- Virtanen, P.; Gommers, R.; Oliphant, T.E.; Haberland, M.; Reddy, T.; Cournapeau, D.; Burovski, E.; Peterson, P.; Weckesser, W.; Bright, J.; et al. SciPy 1.0: Fundamental Algorithms for Scientific Computing in Python. Nat. Methods 2020, 17, 261–272.

- Cliff, N. Dominance statistics: Ordinal analyses to answer ordinal questions. Psychol. Bull. 1993, 114, 494.

- Romano, J.; Kromrey, J.D.; Coraggio, J.; Skowronek, J.; Devine, L. Exploring methods for evaluating group differences on the NSSE and other surveys: Are the t-test and Cohen’s d indices the most appropriate choices? In Proceedings of the Annual Meeting of the Southern Association for Institutional Research, Arlington, USA, 14–17 October 2006; pp. 1–51.

- Zhang, L.; Yan, L.; Zhang, Z.; Zhang, J.; Chan, W.; Zheng, Z. A theoretical analysis on cloning the failed test cases to improve spectrum-based fault localization. J. Syst. Softw. 2017, 129, 35–57.

- Ribeiro, H.L.; de Araujo, P.R.; Chaim, M.L.; de Souza, H.A.; Kon, F. Evaluating data-flow coverage in spectrum-based fault localization. In Proceedings of the 2019 ACM/IEEE International Symposium on Empirical Software Engineering and Measurement (ESEM), Porto de Galinhas, Brazil, 19–20 September 2019; pp. 1–11.

- Vancsics, B.; Szatmári, A.; Beszédes, Á. Relationship between the effectiveness of spectrum-based fault localization and bug-fix types in javascript programs. In Proceedings of the 2020 IEEE 27th International Conference on Software Analysis, Evolution and Reengineering (SANER), London, ON, Canada, 18–21 February 2020; pp. 308–319.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).