Optimization Methods for Redundancy Allocation in Hybrid Structure Large Binary Systems

Abstract

1. Introduction

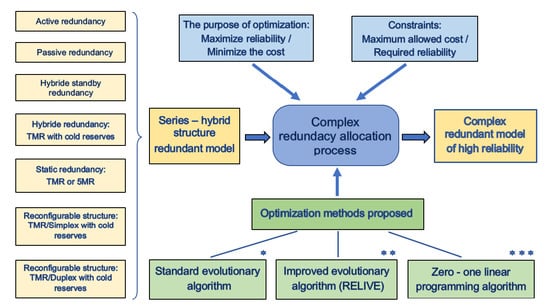

- The formalization of two redundancy allocation problems (RAPs) for binary systems with hybrid structure, covering no fewer than eight types of redundancy, where the reliability modeling of redundant and reconfigurable structures is based on Markov chains;

- The design and implementation of two evolutionary algorithms and the formulation of a zero-one integer program for solving these complex optimization problems;

- An extensive performance evaluation of the three proposed techniques on thousands of problems, demonstrating the effectiveness of the zero-one integer programming approach for large systems with tens or even hundreds of subsystems.

2. Problem Description

- Minimizing the cost of the redundant system for which a required reliability must be achieved;

- Maximizing the reliability of the system within a maximum allowed cost.
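For the series-redundant model (a series connection of subsystems, each with its own redundant structure, failing independently), the two problems can be written compactly as below. Apart from R*, which the result tables use for the required reliability, the symbols are chosen here for illustration: n is the number of subsystems, n_i the number of components of subsystem i, R_i(n_i) and C_i(n_i) that subsystem's reliability and cost, and C* the maximum allowed cost. Treating the system cost as the sum of the subsystem costs is an assumption of this sketch.

$$
\begin{aligned}
\text{(P1)}\quad & \min_{n_1,\dots,n_n}\ \sum_{i=1}^{n} C_i(n_i) \quad \text{subject to} \quad \prod_{i=1}^{n} R_i(n_i) \ge R^{*}, \\
\text{(P2)}\quad & \max_{n_1,\dots,n_n}\ \prod_{i=1}^{n} R_i(n_i) \quad \text{subject to} \quad \sum_{i=1}^{n} C_i(n_i) \le C^{*}.
\end{aligned}
$$

The product form follows from the series structure: the system works only while every subsystem works.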

3. Types of Redundancy

- active redundancy (A);

- passive (cold standby) redundancy (B);

- hybrid standby redundancy with a hot (C) or a warm (D) reserve and possibly other cold ones;

- hybrid redundancy consisting of a TMR structure with control facilities and possibly cold reserves (E);

- static redundancy: TMR or 5MR (F);

- reconfigurable TMR/Simplex type structure with possible other cold-maintained spare components (G);

- reconfigurable TMR/Duplex type structure with possible other cold-maintained spare components (H).
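For the two simplest types, textbook closed forms show how the number of components drives subsystem reliability. The sketch below assumes identical components with exponentially distributed lifetimes, a component reliability r = exp(-λt) for the mission time t, perfect switching, and cold spares that cannot fail while unpowered. The function names are illustrative, and the paper's own Markov-chain models, especially for the hybrid and reconfigurable types, are not reproduced here.

```python
import math


def active_reliability(r: float, n: int) -> float:
    """Type A (active redundancy): n identical units in parallel;
    the subsystem works while at least one unit works."""
    return 1.0 - (1.0 - r) ** n


def cold_standby_reliability(r: float, n: int) -> float:
    """Type B (cold standby): one operating unit plus n - 1 cold spares.

    Assumes perfect switching and spares that do not fail while cold.
    With r = exp(-lam * t), failures of the operating position form a
    Poisson process, so the subsystem survives if fewer than n occur.
    """
    lam_t = -math.log(r)  # lambda * t recovered from the unit reliability
    return r * sum(lam_t ** k / math.factorial(k) for k in range(n))


if __name__ == "__main__":
    r = 0.9
    for n in (1, 2, 3):
        print(n, round(active_reliability(r, n), 6),
              round(cold_standby_reliability(r, n), 6))
```

Under these idealized assumptions cold standby beats active redundancy for any n > 1, because cold spares do not age before they are switched in; imperfect switching would narrow that gap.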

3.1. Active Redundancy (A)

3.2. Passive Redundancy (B)

3.3. Hybrid Standby Redundancy with a Hot (C) or a Warm (D) Spare and Possibly Other Cold Ones

3.3.1. Case 1:

3.3.2. Case 2:

3.3.3. Case 3:

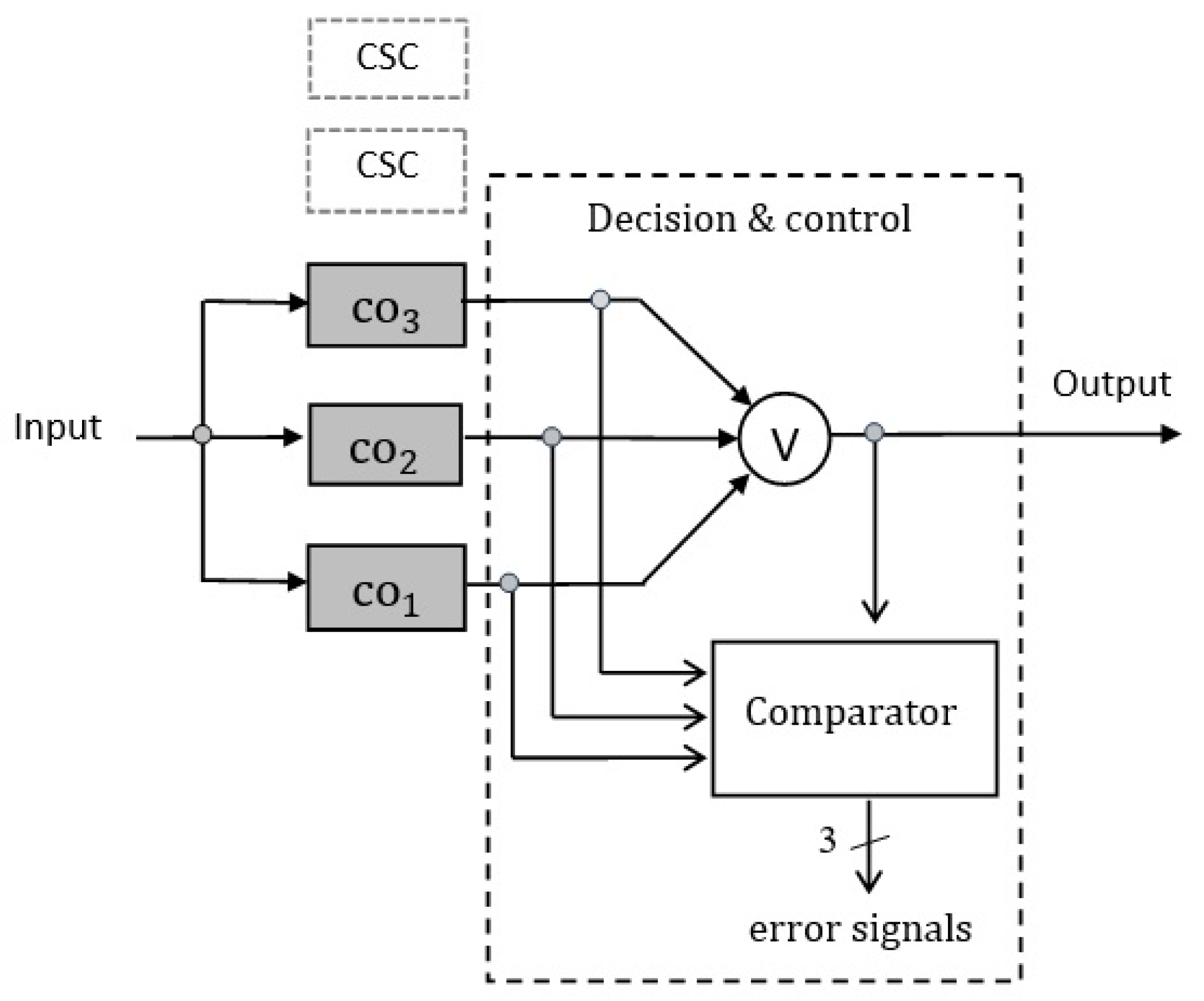

3.4. TMR Structure with Control Facilities and Cold Spare Components (E)

3.4.1. Case 1: TMR Structure without Standby Redundancy

3.4.2. Case 2: TMR Structure and One Cold Spare Component

3.4.3. Case 3: TMR Structure and Two Cold Spare Components

3.5. Static Redundancy: TMR or 5MR (F)

3.5.1. Case 1: TMR Structure

3.5.2. Case 2: 5MR Structure

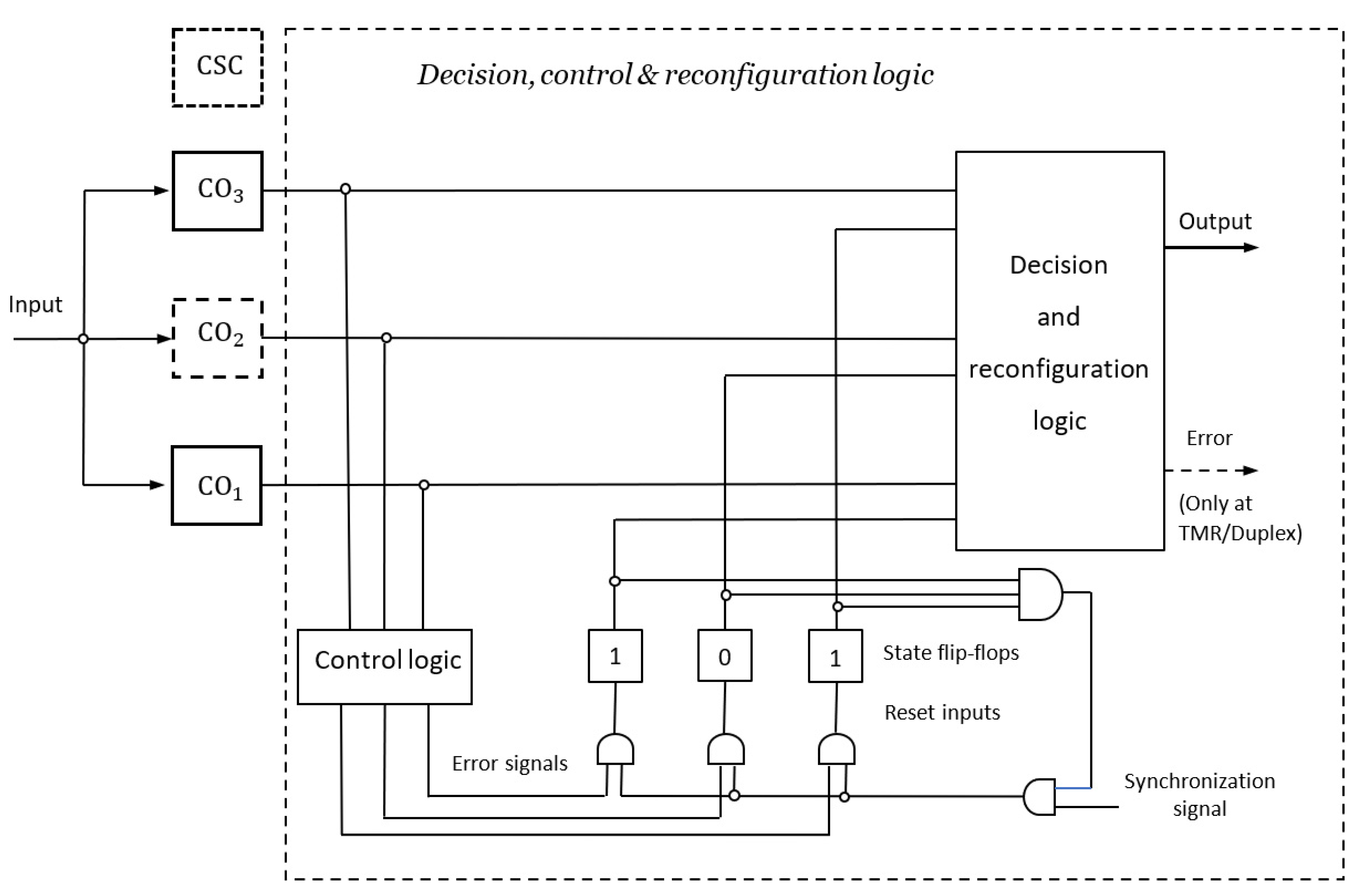

3.6. TMR/Simplex and Cold Standby Redundancy (G)

3.6.1. Case 1: TMR/Simplex without Standby Redundancy

3.6.2. Case 2: TMR/Simplex and One Cold Reserve

3.6.3. Case 3: TMR/Simplex and Two Cold Reserves

3.7. TMR/Duplex and Cold Standby Redundancy (H)

4. Related Work

5. The Optimization Algorithms

5.1. Classic Evolutionary Algorithm

- tournament selection with two individuals;

- elitism is used, i.e., the best individual is directly copied into the next generation;

- arithmetic crossover, where a child chromosome is a linear combination of the parent chromosomes, with a probability of 0.9;

- mutation by gene resetting, where the value of a randomly selected gene is set to a random number from a uniform distribution defined on its domain of definition, with a probability of 0.2;

- stopping criterion with a fixed number of generations; depending on the experiment, 1000 or 10,000 generations are used.
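The operators listed above fit in a compact loop. In this sketch the chromosome is a list of real genes, each rounded to an integer number of components when evaluated. The toy problem (five active-redundancy subsystems with made-up reliabilities and costs and a cost cap of 100), the penalty for infeasible individuals, and the population size of 50 are assumptions for illustration only; the paper handles infeasibility with the chromosome repairing procedure of Section 6.1.3 instead.

```python
import math
import random

# Hypothetical toy instance: five subsystems, active redundancy only.
REL = [0.90, 0.95, 0.92, 0.97, 0.93]  # component reliabilities (made up)
COST = [5, 8, 4, 9, 6]                # component costs (made up)
C_MAX = 100                           # maximum allowed cost (made up)


def toy_fitness(chrom):
    n = [int(round(g)) for g in chrom]  # genotype -> phenotype: integer counts
    cost = sum(ni * ci for ni, ci in zip(n, COST))
    if cost > C_MAX:
        return -float(cost)  # infeasible: negative, so worse than any feasible value
    return math.prod(1.0 - (1.0 - r) ** ni for r, ni in zip(REL, n))


def tournament(pop, fits, rng):
    """Tournament selection with two individuals."""
    i, j = rng.randrange(len(pop)), rng.randrange(len(pop))
    return pop[i] if fits[i] >= fits[j] else pop[j]


def arithmetic_crossover(a, b, rng):
    """The child is a linear (convex) combination of the two parents."""
    w = rng.random()
    return [w * x + (1.0 - w) * y for x, y in zip(a, b)]


def reset_mutation(chrom, lo, hi, rng):
    """Gene resetting: one random gene gets a uniform value from [lo, hi]."""
    child = list(chrom)
    child[rng.randrange(len(child))] = rng.uniform(lo, hi)
    return child


def classic_ea(fitness, n_genes, lo, hi, pop_size=50, generations=1000,
               p_cross=0.9, p_mut=0.2, seed=0):
    rng = random.Random(seed)
    pop = [[rng.uniform(lo, hi) for _ in range(n_genes)] for _ in range(pop_size)]
    for _ in range(generations):  # stopping criterion: fixed number of generations
        fits = [fitness(c) for c in pop]
        best = max(range(pop_size), key=fits.__getitem__)
        nxt = [list(pop[best])]  # elitism: the best individual is copied unchanged
        while len(nxt) < pop_size:
            a, b = tournament(pop, fits, rng), tournament(pop, fits, rng)
            child = arithmetic_crossover(a, b, rng) if rng.random() < p_cross else list(a)
            if rng.random() < p_mut:
                child = reset_mutation(child, lo, hi, rng)
            nxt.append(child)
        pop = nxt
    fits = [fitness(c) for c in pop]
    best = max(range(pop_size), key=fits.__getitem__)
    return pop[best], fits[best]


if __name__ == "__main__":
    chrom, fit = classic_ea(toy_fitness, n_genes=5, lo=1, hi=5, generations=200)
    print([int(round(g)) for g in chrom], round(fit, 6))
```

Because crossover is a convex combination and resetting draws from [lo, hi], every gene stays inside its domain, so no extra clipping is needed in this sketch.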

5.2. RELIVE

- the initial size of the population is 50;

- the fraction of newly generated chromosomes in a generation is 0.25;

- the life span of an individual is 4;

- the number of neighbors generated for hill climbing is 20;

- the number of hill climbing steps is 20;

- the probability of overall mutation is 0.2, divided into:

  - Gaussian mutation, with a probability of 0.05, where the value of a randomly selected gene is set to a normal random number with a mean equal to the original gene value and a standard deviation of 2;

  - resetting mutation, with a probability of 0.05, where the value of a randomly selected gene is set to a random number drawn from a uniform distribution over its domain;

  - pairwise mutation, with a probability of 0.1, where two genes exchange a unit.
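The three mutation operators and their split of the 0.2 overall probability can be sketched as follows. Several details are assumptions rather than statements of the paper: a single uniform draw selects at most one operator per chromosome; "two genes exchange a unit" is read as one randomly chosen gene giving one unit to another (skipped if that would leave the gene domain); and the Gaussian result is clipped to the domain. The rest of RELIVE (life span, hill climbing with 20 neighbors over 20 steps) is not shown.

```python
import random


def gaussian_mutation(chrom, lo, hi, rng, sigma=2.0):
    """Replace one random gene by a draw from N(gene, sigma^2), clipped to [lo, hi]."""
    child = list(chrom)
    k = rng.randrange(len(child))
    child[k] = min(hi, max(lo, rng.gauss(child[k], sigma)))
    return child


def resetting_mutation(chrom, lo, hi, rng):
    """Replace one random gene by a uniform value from [lo, hi]."""
    child = list(chrom)
    child[rng.randrange(len(child))] = rng.uniform(lo, hi)
    return child


def pairwise_mutation(chrom, lo, hi, rng):
    """Two random genes exchange a unit: one loses 1, the other gains 1."""
    child = list(chrom)
    i, j = rng.sample(range(len(child)), 2)
    if child[i] - 1 >= lo and child[j] + 1 <= hi:
        child[i] -= 1
        child[j] += 1
    return child


def relive_mutate(chrom, lo, hi, rng, p_gauss=0.05, p_reset=0.05, p_pair=0.1):
    """Apply at most one operator; the overall mutation probability is their sum (0.2)."""
    u = rng.random()
    if u < p_gauss:
        return gaussian_mutation(chrom, lo, hi, rng)
    if u < p_gauss + p_reset:
        return resetting_mutation(chrom, lo, hi, rng)
    if u < p_gauss + p_reset + p_pair:
        return pairwise_mutation(chrom, lo, hi, rng)
    return list(chrom)


if __name__ == "__main__":
    rng = random.Random(42)
    c = [3.0, 3.0, 3.0, 3.0]
    changed = sum(relive_mutate(c, 1, 5, rng) != c for _ in range(20000))
    print(changed / 20000)  # fraction of mutated chromosomes, should be near 0.2
```

Pairwise mutation keeps the total number of components constant, which makes it a natural local move once a solution already sits close to the cost limit.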

5.3. Linear Programming
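A common way to turn redundancy allocation into a zero-one integer program, shown here as an illustration rather than as the exact model of this section, is to precompute R_i(k) and C_i(k), the reliability and cost of subsystem i built with k components, for every admissible k in a set K_i, and to introduce a binary variable x_{ik} that equals 1 exactly when subsystem i receives k components. Taking logarithms turns the series-system product into a sum, so the cost-constrained reliability maximization over n subsystems becomes linear (C* denotes the maximum allowed cost):

$$
\begin{aligned}
\max_{x}\quad & \sum_{i=1}^{n}\sum_{k\in K_i} x_{ik}\,\ln R_i(k) \\
\text{subject to}\quad & \sum_{i=1}^{n}\sum_{k\in K_i} x_{ik}\,C_i(k) \le C^{*}, \\
& \sum_{k\in K_i} x_{ik} = 1, \qquad i = 1,\dots,n, \\
& x_{ik}\in\{0,1\}, \qquad i = 1,\dots,n,\ k\in K_i.
\end{aligned}
$$

Because ln is strictly increasing, an optimal x also maximizes the product of the R_i. The minimum-cost variant swaps the roles: minimize the cost sum subject to the log-reliability sum being at least ln R*. Since R_i(k) enters only as a precomputed constant, any reliability model, including a Markov-chain model of a hybrid structure, can be used without making the program nonlinear.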

6. Designing the Objective Functions

6.1. Evolutionary Algorithms

6.1.1. Problem Definition

6.1.2. Genotype-Phenotype Mapping

6.1.3. Chromosome Repairing Procedure

- The selection of the subsystem with the highest cost. Because of the genotype-phenotype distinction, this could sometimes lead to infinite loops (e.g., the repairing procedure decrements a value, and the corresponding adjustment rule increments it);

- The selection of the subsystem with the highest reliability. This is even slower because it requires recomputing the system reliability after decrementing the number of components of each subsystem i, for i from 1 to n;

- The selection of the subsystem with the lowest reliability. This method is slower and its results are not much better;

- A more elaborate method, where the number of components is increased layer by layer, with subsystems taken in a random order. When one layer of increments is completed, the next one begins. This method was the slowest, about an order of magnitude slower than random selection.
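Random selection, the strategy the alternatives above are compared against, can be sketched as a loop that removes one component at a time from randomly chosen subsystems until the cost limit is met. The linear cost model (subsystem cost = number of components × unit cost) and the per-subsystem lower bounds `n_min` are assumptions for illustration; the interaction with the genotype-phenotype adjustment rules is not modeled.

```python
import random


def repair(n, unit_cost, c_max, n_min, rng=None):
    """Decrement randomly chosen subsystems until the total cost is at most c_max.

    n: number of components per subsystem (phenotype)
    unit_cost: cost of one component in each subsystem (linear cost model assumed)
    n_min: smallest admissible number of components for each subsystem
    """
    rng = rng or random.Random()
    n = list(n)
    cost = sum(ni * ci for ni, ci in zip(n, unit_cost))
    while cost > c_max:
        candidates = [i for i, (ni, lo) in enumerate(zip(n, n_min)) if ni > lo]
        if not candidates:
            raise ValueError("even the minimal configuration exceeds c_max")
        i = rng.choice(candidates)  # random selection of the subsystem to shrink
        n[i] -= 1
        cost -= unit_cost[i]
    return n, cost


if __name__ == "__main__":
    fixed, cost = repair([4, 4, 4], [10, 20, 30], c_max=150, n_min=[1, 1, 1],
                         rng=random.Random(1))
    print(fixed, cost)
```

Each iteration costs only a list scan and one subtraction, with no reliability evaluation, which is consistent with random selection being the fastest of the strategies described above.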

6.2. Linear Programming

7. Lower Bound Solution

8. Experimental Results

- active redundancy (A), passive (cold standby) redundancy (B), and hybrid standby redundancy with a hot reserve and possibly other cold ones (C): no additional parameters;

- hybrid standby redundancy with a warm reserve and possibly other cold ones (D): parameter α (the coefficient of reduction of the failure rate for a warm-maintained reserve compared to the failure rate of the component in operation);

- hybrid redundancy consisting of a TMR structure with control facilities and possibly cold reserves (E): parameter β (the reduction factor used to express the failure rate of the decision and control logic of a TMR structure based on the failure rate of the basic components);

- static redundancy, TMR or 5MR (F): parameters β (as above) and γ (the reduction factor used to express the failure rate of the decision and control logic of a 5MR structure based on the failure rate of the basic components);

- reconfigurable TMR/Simplex type structure with possible other cold-maintained spare components (G) and reconfigurable TMR/Duplex type structure with possible other cold-maintained spare components (H): parameter δ (the reduction factor used to express the failure rate of the decision, control and reconfiguration logic of a TMR/Simplex or a TMR/Duplex structure based on the failure rate of the basic components).
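To make the roles of α, β and γ concrete, the sketch below evaluates the simplest configurations of two parameterized types under textbook assumptions that may differ from the paper's Markov-chain models: exponential lifetimes with r = exp(-λt); for type D, one operating unit and one warm spare whose failure rate is αλ, with perfect switching; for type F, majority voting whose decision logic has failure rate λ/β (TMR) or λ/γ (5MR), so its reliability is r^(1/β) or r^(1/γ). Reading β and γ as divisors of λ is our interpretation of "reduction factor", and the function names are hypothetical.

```python
import math


def warm_standby_2(r: float, alpha: float) -> float:
    """Type D: one operating unit plus one warm spare (failure rate alpha * lambda).

    Standard result for perfect switching, with r = exp(-lambda * t):
    R = r * (1 + (1 - r**alpha) / alpha).
    alpha = 1 gives hot (active) standby; alpha -> 0 approaches cold standby.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must lie in (0, 1]")
    # -expm1(alpha * ln r) equals 1 - r**alpha, computed accurately for small alpha
    return r * (1.0 - math.expm1(alpha * math.log(r)) / alpha)


def static_reliability(r: float, n: int, beta: float, gamma: float) -> float:
    """Type F: TMR (n = 3) or 5MR (n = 5) with an imperfect voter.

    The voter failure rate is taken as lambda / beta (TMR) or lambda / gamma (5MR),
    so the voter reliability is r ** (1 / beta) or r ** (1 / gamma).
    """
    if n == 3:
        majority = 3 * r**2 - 2 * r**3                # at least 2 of 3 units work
        voter = r ** (1.0 / beta)
    elif n == 5:
        majority = 10 * r**3 - 15 * r**4 + 6 * r**5   # at least 3 of 5 units work
        voter = r ** (1.0 / gamma)
    else:
        raise ValueError("static redundancy is modeled here only for n = 3 or 5")
    return voter * majority


if __name__ == "__main__":
    # Subsystem 1 of the first 50-subsystem problem listed in the tables: (1: D, 0.989, 39; α = 0.55)
    print(round(warm_standby_2(0.989, 0.55), 6))
    # Subsystem 18 of the same problem: (18: F, 0.956, 26; β = 80, γ = 40)
    print(round(static_reliability(0.956, 3, 80, 40), 6),
          round(static_reliability(0.956, 5, 80, 40), 6))
```

The limits are a useful sanity check: with α = 1 the warm-standby formula reduces to the active-redundancy value 2r − r², and as α approaches 0 it approaches the cold-standby value r(1 − ln r).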

- Consider the problem for which the best solutions generated by the three optimization algorithms are shown in Table 6. All three solutions require 3719 cost units, but the solution given by SEA achieves a lower reliability (0.973398) than the one given by RELIVE and LP (0.977724);

- Consider the problem for which the best solutions generated by the three optimization algorithms are shown in Table 12. The solutions given by RELIVE and LP both require 7737 cost units, but the solution generated by LP achieves a higher reliability (0.947769) than the one given by RELIVE (0.927214);

- Consider the problem for which the best solutions generated by the three optimization algorithms are shown in Table 15. Here the solution given by SEA has both the highest cost and the lowest reliability compared with the solutions given by RELIVE and LP.
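Because all of these reliabilities are close to 1, differences are easier to read through the unreliability 1 − R. The short computation below uses only the values reported in the first two comparisons; the ratio states how many times larger the failure probability of the weaker solution is at the same cost. The helper name is illustrative.

```python
def unreliability_ratio(r_worse: float, r_better: float) -> float:
    """Ratio of the failure probabilities (1 - R) of two solutions."""
    return (1.0 - r_worse) / (1.0 - r_better)


# (weaker, stronger) reliabilities at equal cost, as reported above
cases = {
    "Table 6, 3719 cost units, SEA vs. RELIVE/LP": (0.973398, 0.977724),
    "Table 12, 7737 cost units, RELIVE vs. LP": (0.927214, 0.947769),
}

for label, (r_w, r_b) in cases.items():
    print(f"{label}: {unreliability_ratio(r_w, r_b):.3f}")
```

This prints ratios of 1.194 and 1.394: at the same cost, SEA's Table 6 solution is about 19% more likely to fail than the RELIVE/LP solution, and RELIVE's Table 12 solution about 39% more likely than LP's.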

9. Discussion

- For the results presented in Figure 8, Figure 9, Figure 10 and Figure 11, each algorithm was run once on each of 2000 problems, i.e., 1000 problems with n = 50 and another 1000 problems with n = 100. Given this large number of problems, the results support a statistically sound assessment of the algorithms' performance; these figures summarize the analysis in terms of mean and standard deviation;

10. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Nomenclature

| Reliability | The probability that a component or a system works successfully within a given period of time |

| Binary system | A system in which each component can be either operational or failed |

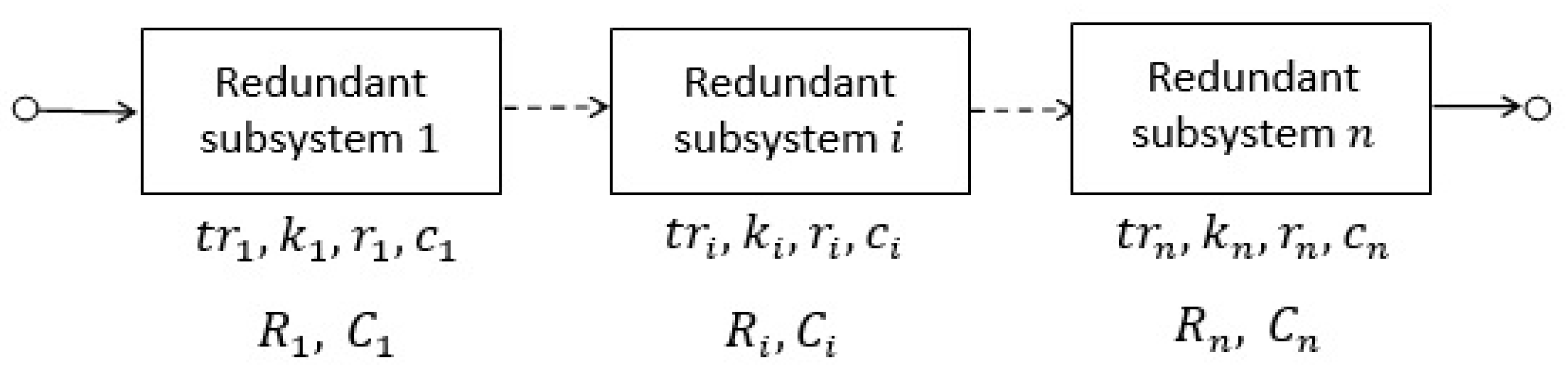

| Series-redundant model | A reliability model that reflects a redundant system composed of subsystems consisting of basic components or redundant structures, and possibly other spare components |

| Notations | |

| The number of components in the non-redundant system or the number of subsystems in the redundant system, as appropriate | |

| A certain period of time for which reliability is assessed | |

| The reliability of a component of type for a given period of time | |

| The cost of a component of type | |

| The failure rate for a component of type | |

| The number of components that make up the redundant subsystem | |

| The reliability of subsystem (subsystem with redundant structure) | |

| The cost of subsystem | |

| The type of redundancy for subsystem | |

| The coefficient of reduction of the failure rate for a warm-maintained reserve compared to the failure rate of the component in operation | |

| The reduction factor used to express the failure rate of the decision and control logic of a TMR structure based on the failure rate of the basic components | |

| The reduction factor used to express the failure rate of the decision and control logic of a 5MR structure based on the failure rate of the basic components | |

| The reduction factor used to express the failure rate of the decision, control and reconfiguration logic of a TMR/Simplex or a TMR/Duplex structure based on the failure rate of the basic components | |

| The reliability of the non-redundant system (system with series reliability model) | |

| The cost of the non-redundant system | |

| The reliability of the redundant system (system with series-redundant reliability model) | |

| The reliability of the redundant system (system with series-redundant reliability model) | |

| The cost of the redundant system | |

| The required level of reliability of the system | |

| The maximum allowable cost of the system | |

| A component in operation (active component) | |

| A warm-maintained spare component | |

| A cold-maintained spare component | |

| Note: For the subsystem-level notations above, the subsystem index is omitted when the subsystem is not indicated. | |

| Assumptions | |

| |

| |

| |


| Redundancy Type | Adjustment Rule |

|---|---|

| No adjustment | |

| A, B, C, D | E, F, G, H | |

|---|---|---|

| A, B, C, D | E, F, G, H | |

|---|---|---|

| (1: D, 0.989, 39; α = 0.55), (2: C, 0.958, 25), (3: C, 0.905, 41), (4: E, 0.952, 46; β = 50), (5: C, 0.975, 44), (6: A, 0.984, 14), (7: D, 0.939, 43; α = 0.86), (8: A, 0.944, 13), (9: G, 0.987, 48; δ = 74), (10: A, 0.914, 9), (11: H, 0.955, 32; δ = 65), (12: A, 0.986, 41), (13: D, 0.957, 16; α = 0.84), (14: D, 0.920, 1; α = 0.31), (15: C, 0.913, 27), (16: A, 0.985, 8), (17: A, 0.902, 9), (18: F, 0.956, 26; β = 80, γ = 40), (19: B, 0.910, 32), (20: F, 0.986, 42; β = 95, γ = 48), (21: F, 0.968, 47; β = 80, γ = 40), (22: D, 0.965, 47; α = 0.24), (23: H, 0.981, 31; δ = 72), (24: H, 0.982, 31; δ = 53), (25: F, 0.953, 45; β = 77, γ = 39), (26: B, 0.959, 18), (27: H, 0.962, 13; δ = 49), (28: E, 0.974, 46; β = 98), (29: C, 0.915, 26), (30: D, 0.983, 18; α = 0.74), (31: H, 0.975, 8; δ = 47), (32: A, 0.988, 12), (33: A, 0.971, 21), (34: C, 0.909, 17), (35: C, 0.953, 7), (36: C, 0.926, 7), (37: D, 0.989, 8; α = 0.74), (38: C, 0.906, 43), (39: H, 0.971, 11; δ = 66), (40: C, 0.944, 16), (41: E, 0.989, 21; β = 79), (42: A, 0.907, 36), (43: B, 0.942, 5), (44: C, 0.975, 18), (45: F, 0.961, 42; β = 95, γ = 48), (46: G, 0.979, 8; δ = 60), (47: E, 0.970, 38; β = 82), (48: H, 0.952, 23; δ = 48), (49: G, 0.958, 15; δ = 68), (50: C, 0.975, 7) |

| , |

| (1: D, 0.925, 39; α = 0.42), (2: B, 0.985, 31), (3: E, 0.968, 29; β = 67), (4: A, 0.969, 35), (5: B, 0.904, 36), (6: A, 0.909, 18), (7: F, 0.973, 6; β = 92, γ = 46), (8: C, 0.976, 10), (9: C, 0.947, 19), (10: C, 0.940, 33), (11: A, 0.931, 22), (12: G, 0.970, 35; δ = 62), (13: H, 0.966, 14; δ = 69), (14: B, 0.989, 31), (15: A, 0.945, 41), (16: C, 0.974, 17), (17: B, 0.980, 47), (18: H, 0.972, 4; δ = 79), (19: C, 0.917, 44), (20: B, 0.902, 32), (21: B, 0.981, 1), (22: C, 0.983, 34), (23: F, 0.983, 12; β = 92, γ = 46), (24: G, 0.960, 12; δ = 54), (25: D, 0.936, 28; α = 0.41), (26: G, 0.965, 11; δ = 56), (27: F, 0.976, 7; β = 53, γ = 26), (28: B, 0.978, 10), (29: H, 0.972, 21; δ = 55), (30: C, 0.980, 2), (31: G, 0.975, 46; δ = 41), (32: B, 0.901, 46), (33: H, 0.972, 26; δ = 56), (34: C, 0.928, 7), (35: A, 0.909, 5), (36: A, 0.977, 49), (37: D, 0.973, 22; α = 0.72), (38: C, 0.918, 42), (39: A, 0.930, 29), (40: B, 0.986, 37), (41: G, 0.968, 37; δ = 60), (42: G, 0.977, 31; δ = 41), (43: F, 0.981, 41; β = 84, γ = 42), (44: G, 0.975, 33; δ = 40), (45: B, 0.975, 25), (46: E, 0.965, 37; β = 81), (47: B, 0.941, 20), (48: F, 0.979, 8; β = 86, γ = 43), (49: F, 0.964, 40; β = 58, γ = 29), (50: E, 0.967, 25; β = 64) |

| , |

| Algorithm | Number of components per subsystem | Cost | Reliability | |

|---|---|---|---|---|

| SEA | 2, 2, 3, 4, 2, 2, 3, 4, 3, 5, 4, 2, 3, 3, 3, 3, 4, 3, 3, 3, 3, 2, 3, 3, 3, 2, 5, 4, 3, 2, 4, 3, 2, 3, 3, 4, 4, 3, 4, 3, 3, 3, 3, 3, 3, 4, 3, 4, 4, 2 | 3719 | 0.973398 | 33.714 |

| RELIVE | 2, 3, 3, 4, 2, 2, 3, 3, 3, 4, 4, 2, 3, 4, 3, 2, 4, 3, 3, 3, 3, 2, 3, 3, 3, 3, 4, 4, 3, 2, 4, 2, 2, 3, 3, 4, 2, 3, 4, 3, 3, 3, 3, 2, 3, 4, 4, 4, 4, 3 | 3719 | 0.977724 | 40.260 |

| LP | 2, 3, 3, 4, 2, 2, 3, 3, 3, 4, 4, 2, 3, 4, 3, 2, 4, 3, 3, 3, 3, 2, 3, 3, 3, 3, 4, 4, 3, 2, 4, 2, 2, 3, 3, 4, 2, 3, 4, 3, 3, 3, 3, 2, 3, 4, 4, 4, 4, 3 | 3719 | 0.977724 | 40.260 |

| Algorithm | Number of components per subsystem | Cost | Reliability | |

|---|---|---|---|---|

| SEA | 3, 2, 4, 2, 3, 3, 3, 2, 3, 3, 4, 4, 5, 2, 3, 3, 2, 5, 3, 3, 8, 2, 3, 4, 3, 4, 3, 2, 4, 5, 3, 3, 4, 5, 4, 2, 4, 3, 3, 2, 3, 3, 3, 3, 2, 4, 3, 3, 3, 4 | 3856 | 0.978930 | 41.911 |

| RELIVE | 3, 2, 4, 3, 3, 4, 3, 3, 3, 3, 3, 4, 4, 2, 3, 3, 2, 5, 3, 3, 4, 2, 3, 4, 3, 4, 3, 2, 4, 3, 3, 3, 4, 4, 4, 2, 2, 3, 3, 2, 4, 3, 3, 3, 2, 4, 3, 3, 3, 4 | 3861 | 0.981474 | 47.666 |

| LP | 3, 2, 4, 3, 3, 4, 3, 3, 3, 3, 3, 4, 4, 2, 3, 3, 2, 5, 3, 3, 4, 2, 3, 4, 3, 4, 3, 2, 4, 3, 3, 3, 4, 4, 4, 2, 2, 3, 3, 2, 4, 3, 3, 3, 2, 4, 3, 3, 3, 4 | 3861 | 0.981474 | 47.666 |

| Algorithm | Number of components per subsystem | Cost | Reliability | |

|---|---|---|---|---|

| SEA R* = 0.973398 | 2, 3, 3, 4, 2, 2, 3, 3, 3, 3, 4, 2, 3, 4, 3, 3, 4, 3, 3, 3, 3, 2, 3, 3, 3, 3, 5, 3, 3, 2, 4, 2, 2, 3, 3, 3, 2, 3, 4, 3, 3, 3, 3, 3, 3, 4, 3, 4, 4, 3 | 3658 | 0.973465 | 33.798 |

| RELIVE R* = 0.977724 | 2, 3, 3, 4, 2, 2, 3, 3, 3, 4, 4, 2, 3, 4, 3, 2, 4, 3, 3, 3, 3, 2, 3, 3, 3, 3, 4, 4, 3, 2, 4, 2, 2, 3, 3, 4, 2, 3, 4, 3, 3, 3, 3, 2, 3, 4, 4, 4, 4, 3 | 3719 | 0.977724 | 40.260 |

| LP R* = 0.977724 | 2, 3, 3, 4, 2, 2, 3, 3, 3, 4, 4, 2, 3, 4, 3, 2, 4, 3, 3, 3, 3, 2, 3, 3, 3, 3, 4, 4, 3, 2, 4, 2, 2, 3, 3, 4, 2, 3, 4, 3, 3, 3, 3, 2, 3, 4, 4, 4, 4, 3 | 3719 | 0.977724 | 40.260 |

| Algorithm | Number of components per subsystem | Cost | Reliability | |

|---|---|---|---|---|

| SEA R* = 0.978930 | 3, 2, 4, 3, 3, 4, 3, 2, 3, 3, 3, 3, 4, 2, 3, 2, 2, 5, 3, 3, 2, 2, 3, 4, 3, 4, 3, 2, 4, 4, 3, 3, 4, 3, 5, 2, 3, 3, 3, 2, 4, 3, 3, 3, 2, 4, 3, 3, 3, 4 | 3819 | 0.979250 | 42.556 |

| RELIVE R* = 0.981474 | 3, 2, 4, 3, 3, 4, 3, 3, 3, 3, 3, 4, 4, 2, 3, 3, 2, 5, 3, 3, 4, 2, 3, 4, 3, 4, 3, 2, 4, 3, 3, 3, 4, 4, 4, 2, 2, 3, 3, 2, 4, 3, 3, 3, 2, 4, 3, 3, 3, 4 | 3861 | 0.981474 | 47.666 |

| LP R* = 0.981474 | 3, 2, 4, 3, 3, 4, 3, 3, 3, 3, 3, 4, 4, 2, 3, 3, 2, 5, 3, 3, 4, 2, 3, 4, 3, 4, 3, 2, 4, 3, 3, 3, 4, 4, 4, 2, 2, 3, 3, 2, 4, 3, 3, 3, 2, 4, 3, 3, 3, 4 | 3861 | 0.981474 | 47.666 |

| (1: F, 0.987, 9; β = 75, γ = 38), (2: H, 0.970, 16; δ = 40), (3: F, 0.959, 20; β = 82, γ = 41), (4: C, 0.984, 40), (5: B, 0.919, 23), (6: D, 0.953, 9; α = 0.34), (7: G, 0.985, 8; δ = 44), (8: A, 0.966, 23), (9: H, 0.978, 38; δ = 48), (10: B, 0.908, 5), (11: C, 0.919, 23), (12: A, 0.946, 18), (13: A, 0.969, 42), (14: F, 0.970, 15; β = 94, γ = 47), (15: B, 0.921, 17), (16: B, 0.913, 32), (17: D, 0.905, 15; α = 0.41), (18: H, 0.958, 14; δ = 58), (19: B, 0.963, 12), (20: B, 0.930, 29), (21: A, 0.954, 18), (22: C, 0.989, 27), (23: A, 0.990, 7), (24: C, 0.983, 23), (25: D, 0.928, 10; α = 0.22), (26: E, 0.958, 13; β = 93), (27: A, 0.962, 25), (28: F, 0.967, 20; β = 53, γ = 27), (29: G, 0.970, 36; δ = 67), (30: B, 0.972, 20), (31: C, 0.943, 23), (32: G, 0.982, 43; δ = 58), (33: H, 0.978, 45; δ = 64), (34: B, 0.952, 20), (35: A, 0.944, 7), (36: C, 0.969, 19), (37: F, 0.953, 43; β = 57, γ = 29), (38: G, 0.953, 18; δ = 47), (39: H, 0.987, 25; δ = 54), (40: A, 0.940, 25), (41: B, 0.962, 43), (42: H, 0.958, 31; δ = 77), (43: A, 0.947, 26), (44: E, 0.984, 48; β = 57), (45: E, 0.969, 6; β = 87), (46: A, 0.900, 46), (47: C, 0.945, 47), (48: G, 0.967, 8; δ = 52), (49: F, 0.961, 27; β = 64, γ = 32), (50: E, 0.971, 44; β = 82), (51: B, 0.912, 47), (52: F, 0.968, 34; β = 52, γ = 26), (53: G, 0.978, 19; δ = 51), (54: E, 0.966, 32; β = 69), (55: B, 0.946, 35), (56: C, 0.983, 32), (57: H, 0.970, 10; δ = 50), (58: D, 0.926, 46; α = 0.61), (59: H, 0.975, 30; δ = 77), (60: D, 0.902, 10; α = 0.99), (61: D, 0.982, 33; α = 0.30), (62: A, 0.940, 38), (63: C, 0.922, 37), (64: F, 0.986, 19; β = 78, γ = 39), (65: G, 0.975, 32; δ = 59), (66: D, 0.938, 30; α = 0.22), (67: B, 0.974, 22), (68: H, 0.958, 22; δ = 70), (69: E, 0.951, 9; β = 75), (70: G, 0.969, 48; δ = 77), (71: D, 0.905, 38; α = 0.21), (72: E, 0.989, 47; β = 64), (73: H, 0.962, 38; δ = 63), (74: B, 0.923, 37), (75: H, 0.976, 36; δ = 53), (76: A, 0.937, 36), (77: B, 0.942, 2), (78: C, 0.913, 8), (79: E, 0.968, 18; β = 69), (80: C, 0.928, 14), (81: B, 0.962, 16), (82: C, 0.924, 17), (83: A, 0.913, 42), (84: A, 0.987, 41), (85: A, 0.960, 22), (86: D, 0.902, 39; α = 0.72), (87: H, 0.953, 24; δ = 54), (88: B, 0.925, 13), (89: H, 0.953, 35; δ = 65), (90: E, 0.972, 24; β = 86), (91: D, 0.924, 9; α = 0.48), (92: B, 0.971, 46), (93: H, 0.969, 37; δ = 66), (94: D, 0.980, 15; α = 0.11), (95: E, 0.972, 41; β = 80), (96: B, 0.922, 6), (97: E, 0.988, 44; β = 54), (98: C, 0.955, 7), (99: F, 0.960, 16; β = 90, γ = 45), (100: A, 0.904, 25) |

| (1: D, 0.974, 45; α = 0.98), (2: B, 0.902, 13), (3: C, 0.955, 24), (4: D, 0.958, 21; α = 0.91), (5: E, 0.954, 39; β = 82), (6: A, 0.923, 46), (7: D, 0.952, 8; α = 0.23), (8: B, 0.900, 33), (9: A, 0.926, 19), (10: D, 0.933, 3; α = 0.55), (11: D, 0.973, 4; α = 0.13), (12: E, 0.976, 2; β = 100), (13: D, 0.912, 12; α = 0.43), (14: G, 0.963, 19; δ = 45), (15: B, 0.975, 27), (16: D, 0.985, 11; α = 0.23), (17: C, 0.984, 34), (18: B, 0.940, 47), (19: F, 0.981, 35; β = 79, γ = 40), (20: F, 0.961, 20; β = 79, γ = 39), (21: D, 0.929, 17; α = 0.36), (22: H, 0.989, 7; δ = 63), (23: E, 0.977, 1; β = 57), (24: A, 0.943, 44), (25: F, 0.965, 40; β = 97, γ = 48), (26: E, 0.982, 34; β = 97), (27: F, 0.974, 49; β = 79, γ = 39), (28: H, 0.969, 12; δ = 42), (29: D, 0.949, 45; α = 0.44), (30: G, 0.977, 11; δ = 56), (31: D, 0.915, 2; α = 0.48), (32: C, 0.975, 10), (33: A, 0.904, 10), (34: A, 0.928, 16), (35: H, 0.976, 49; δ = 65), (36: E, 0.958, 25; β = 55), (37: D, 0.962, 47; α = 0.15), (38: B, 0.909, 1), (39: H, 0.960, 37; δ = 44), (40: B, 0.923, 49), (41: C, 0.907, 32), (42: E, 0.985, 49; β = 63), (43: B, 0.918, 4), (44: F, 0.964, 38; β = 90, γ = 45), (45: A, 0.952, 36), (46: B, 0.945, 41), (47: C, 0.906, 16), (48: D, 0.915, 24; α = 0.70), (49: B, 0.905, 21), (50: A, 0.902, 20), (51: C, 0.969, 15), (52: H, 0.964, 24; δ = 51), (53: D, 0.916, 44; α = 0.68), (54: E, 0.973, 37; β = 53), (55: C, 0.945, 13), (56: D, 0.976, 38; α = 0.23), (57: D, 0.931, 13; α = 0.09), (58: B, 0.912, 30), (59: F, 0.960, 31; β = 71, γ = 35), (60: A, 0.925, 5), (61: B, 0.958, 46), (62: E, 0.954, 46; β = 57), (63: F, 0.968, 38; β = 85, γ = 43), (64: B, 0.955, 8), (65: H, 0.958, 1; δ = 59), (66: B, 0.988, 44), (67: D, 0.954, 42; α = 0.19), (68: C, 0.974, 46), (69: G, 0.977, 19; δ = 47), (70: D, 0.958, 3; α = 0.04), (71: A, 0.922, 13), (72: A, 0.975, 33), (73: C, 0.918, 10), (74: D, 0.946, 36; α = 0.42), (75: C, 0.918, 38), (76: H, 0.968, 18; δ = 70), (77: F, 0.981, 3; β = 93, γ = 46), (78: H, 0.963, 12; δ = 78), (79: A, 0.981, 8), (80: D, 0.980, 48; α = 0.97), (81: B, 0.967, 19), (82: C, 0.939, 26), (83: F, 0.967, 40; β = 55, γ = 27), (84: C, 0.947, 25), (85: D, 0.982, 46; α = 0.07), (86: E, 0.982, 28; β = 84), (87: G, 0.976, 15; δ = 66), (88: D, 0.941, 22; α = 0.44), (89: F, 0.983, 3; β = 97, γ = 49), (90: C, 0.972, 12), (91: A, 0.976, 13), (92: B, 0.950, 18), (93: D, 0.976, 20; α = 0.07), (94: G, 0.989, 32; δ = 42), (95: H, 0.974, 3; δ = 66), (96: E, 0.989, 36; β = 93), (97: G, 0.967, 11; δ = 45), (98: H, 0.974, 46; δ = 68), (99: G, 0.956, 38; δ = 74), (100: G, 0.974, 42; δ = 73) |

| Algorithm | Number of components per subsystem | Cost | Reliability | |

|---|---|---|---|---|

| SEA | 5, 3, 3, 2, 4, 3, 5, 3, 3, 7, 4, 4, 2, 3, 3, 3, 4, 3, 3, 3, 2, 4, 7, 2, 3, 4, 3, 3, 3, 5, 2, 3, 4, 3, 2, 3, 3, 5, 3, 2, 2, 3, 2, 3, 3, 3, 2, 4, 3, 3, 3, 3, 3, 4, 2, 2, 4, 3, 4, 4, 3, 2, 4, 3, 3, 2, 2, 4, 5, 3, 3, 3, 3, 3, 3, 3, 5, 3, 3, 5, 2, 3, 3, 2, 2, 3, 4, 2, 3, 3, 4, 2, 3, 3, 3, 4, 3, 5, 3, 3 | 7722 | 0.894261 | 9.375 |

| RELIVE | 3, 4, 3, 2, 3, 3, 4, 2, 3, 4, 3, 3, 3, 3, 3, 3, 4, 4, 3, 3, 3, 2, 2, 2, 3, 5, 2, 3, 3, 2, 3, 3, 4, 3, 2, 3, 3, 3, 3, 3, 2, 4, 3, 3, 5, 3, 2, 4, 3, 3, 3, 3, 4, 3, 3, 2, 4, 2, 3, 4, 2, 3, 2, 3, 3, 2, 2, 4, 5, 4, 3, 3, 4, 2, 4, 3, 8, 4, 4, 4, 2, 3, 3, 2, 3, 3, 4, 3, 4, 4, 3, 2, 4, 2, 4, 3, 3, 3, 3, 4 | 7737 | 0.927214 | 13.619 |

| LP | 3, 4, 3, 2, 3, 3, 4, 2, 3, 3, 3, 3, 2, 3, 3, 3, 3, 4, 2, 3, 3, 2, 2, 2, 3, 4, 3, 3, 3, 2, 3, 3, 3, 3, 3, 2, 3, 4, 3, 3, 2, 4, 3, 3, 4, 3, 3, 4, 3, 4, 3, 3, 3, 4, 2, 2, 4, 3, 4, 4, 2, 3, 3, 3, 3, 3, 2, 4, 4, 3, 3, 3, 4, 3, 3, 3, 3, 4, 4, 3, 2, 3, 3, 2, 3, 3, 4, 3, 4, 4, 3, 2, 4, 2, 4, 3, 3, 3, 3, 3 | 7737 | 0.947769 | 18.979 |

| Algorithm | Number of components per subsystem | Cost | Reliability | |

|---|---|---|---|---|

| SEA | 2, 3, 3, 3, 4, 3, 4, 4, 3, 3, 4, 4, 4, 3, 3, 3, 2, 2, 3, 3, 4, 3, 4, 2, 3, 4, 3, 4, 2, 4, 4, 4, 4, 5, 3, 4, 2, 5, 4, 3, 3, 3, 8, 3, 3, 2, 3, 3, 4, 4, 3, 3, 3, 3, 3, 2, 4, 2, 3, 3, 2, 3, 3, 2, 5, 2, 2, 2, 5, 3, 3, 4, 5, 2, 3, 5, 3, 5, 3, 2, 3, 5, 3, 3, 3, 3, 3, 2, 3, 2, 3, 3, 2, 4, 5, 3, 5, 4, 3, 3 | 7499 | 0.930610 | 14.281 |

| RELIVE | 3, 3, 2, 3, 4, 3, 3, 3, 4, 4, 2, 5, 4, 4, 2, 2, 2, 2, 3, 3, 3, 4, 3, 2, 3, 3, 3, 5, 3, 4, 4, 3, 5, 4, 3, 4, 2, 6, 4, 3, 4, 3, 4, 3, 3, 2, 4, 3, 3, 4, 3, 4, 3, 3, 3, 2, 3, 3, 3, 4, 3, 4, 3, 2, 5, 2, 3, 2, 4, 4, 4, 2, 4, 3, 3, 4, 5, 5, 3, 2, 2, 3, 3, 3, 2, 4, 4, 3, 3, 3, 3, 3, 2, 3, 5, 3, 4, 3, 4, 3 | 7518 | 0.952116 | 20.695 |

| LP | 2, 3, 3, 3, 4, 3, 3, 3, 3, 4, 3, 5, 3, 4, 2, 2, 2, 3, 3, 3, 3, 4, 5, 3, 3, 3, 3, 4, 3, 4, 4, 3, 4, 3, 4, 4, 2, 4, 4, 3, 3, 3, 3, 3, 3, 3, 4, 3, 3, 4, 3, 4, 3, 4, 3, 2, 3, 3, 3, 4, 2, 4, 3, 3, 5, 2, 2, 2, 4, 3, 4, 2, 3, 3, 3, 4, 3, 4, 3, 2, 2, 3, 3, 3, 2, 3, 4, 3, 3, 3, 3, 3, 2, 3, 4, 3, 4, 4, 4, 3 | 7518 | 0.962884 | 26.699 |

| Algorithm | Number of components per subsystem | Cost | Reliability | |

|---|---|---|---|---|

| SEA R* = 0.894261 | 3, 5, 3, 2, 2, 2, 3, 3, 4, 2, 2, 3, 2, 5, 3, 3, 4, 4, 2, 2, 3, 2, 3, 2, 4, 5, 4, 5, 3, 2, 2, 3, 3, 2, 7, 2, 3, 5, 3, 4, 2, 5, 3, 3, 4, 3, 2, 5, 3, 3, 3, 3, 3, 3, 2, 2, 3, 2, 3, 3, 3, 4, 3, 3, 3, 4, 2, 3, 5, 3, 3, 3, 4, 2, 3, 2, 8, 3, 4, 5, 3, 3, 3, 2, 2, 3, 5, 4, 4, 3, 4, 2, 3, 3, 3, 6, 3, 5, 3, 3 | 7735 | 0.896609 | 9.588 |

| RELIVE R* = 0.927214 | 3, 4, 3, 2, 3, 4, 3, 2, 3, 3, 3, 3, 2, 3, 3, 3, 4, 4, 3, 3, 3, 2, 2, 2, 3, 5, 3, 3, 3, 2, 3, 3, 3, 3, 3, 2, 3, 4, 3, 3, 2, 4, 3, 3, 5, 3, 2, 5, 3, 5, 2, 3, 3, 4, 2, 2, 5, 3, 4, 4, 2, 2, 3, 3, 3, 2, 2, 4, 4, 3, 3, 3, 4, 2, 3, 2, 3, 5, 5, 3, 3, 3, 3, 2, 3, 3, 4, 3, 4, 5, 4, 2, 3, 2, 3, 3, 3, 5, 3, 3 | 7622 | 0.927251 | 13.626 |

| LP R* = 0.947769 | 3, 4, 3, 2, 3, 3, 4, 2, 3, 3, 3, 3, 2, 3, 3, 3, 3, 4, 2, 3, 3, 2, 2, 2, 3, 4, 3, 3, 3, 2, 3, 3, 3, 3, 3, 2, 3, 4, 3, 3, 2, 4, 3, 3, 4, 3, 3, 4, 3, 4, 3, 3, 3, 4, 2, 2, 4, 3, 4, 4, 2, 3, 3, 3, 3, 3, 2, 4, 4, 3, 3, 3, 4, 3, 3, 3, 3, 4, 4, 3, 2, 3, 3, 2, 3, 3, 4, 3, 4, 4, 3, 2, 4, 2, 4, 3, 3, 3, 3, 3 | 7737 | 0.947769 | 18.979 |

| Algorithm | Number of components per subsystem | Cost | Reliability | |

|---|---|---|---|---|

| SEA R* = 0.930610 | 2, 3, 5, 2, 4, 3, 4, 4, 2, 4, 2, 5, 3, 4, 4, 3, 3, 3, 5, 3, 3, 5, 4, 3, 3, 3, 3, 3, 3, 5, 4, 5, 6, 5, 5, 5, 2, 8, 3, 3, 3, 3, 8, 3, 3, 2, 5, 4, 4, 4, 5, 4, 3, 3, 4, 3, 2, 4, 3, 4, 2, 3, 3, 5, 5, 2, 2, 3, 5, 3, 4, 2, 4, 2, 3, 4, 5, 5, 3, 4, 2, 5, 3, 4, 2, 5, 3, 2, 5, 4, 2, 5, 2, 4, 3, 3, 5, 4, 3, 3 | 8213 | 0.932688 | 14.722 |

| RELIVE R* = 0.952116 | 2, 3, 3, 3, 4, 3, 4, 3, 3, 4, 4, 4, 3, 4, 2, 2, 2, 2, 3, 3, 3, 5, 5, 3, 3, 3, 3, 5, 3, 5, 4, 3, 4, 4, 3, 4, 2, 3, 4, 2, 3, 3, 3, 3, 3, 2, 3, 3, 4, 3, 3, 4, 3, 4, 3, 2, 3, 3, 3, 3, 2, 4, 3, 3, 4, 2, 2, 2, 4, 3, 4, 2, 3, 3, 3, 5, 5, 4, 3, 2, 3, 3, 3, 3, 2, 3, 5, 3, 5, 3, 3, 3, 2, 3, 4, 3, 4, 3, 4, 3 | 7384 | 0.952135 | 20.703 |

| LP R* = 0.962884 | 2, 3, 3, 3, 4, 3, 3, 3, 3, 4, 3, 5, 3, 4, 2, 2, 2, 3, 3, 3, 3, 4, 5, 3, 3, 3, 3, 4, 3, 4, 4, 3, 4, 3, 4, 4, 2, 4, 4, 3, 3, 3, 4, 3, 3, 3, 4, 3, 3, 4, 3, 4, 3, 4, 3, 2, 3, 3, 3, 4, 2, 4, 3, 3, 5, 2, 2, 2, 4, 3, 3, 2, 4, 3, 3, 4, 3, 4, 3, 2, 2, 3, 3, 3, 2, 3, 4, 3, 3, 3, 3, 3, 2, 3, 4, 3, 4, 4, 4, 3 | 7518 | 0.962884 | 26.699 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cașcaval, P.; Leon, F. Optimization Methods for Redundancy Allocation in Hybrid Structure Large Binary Systems. Mathematics 2022, 10, 3698. https://doi.org/10.3390/math10193698
