Abstract

Split variational inclusions form a large class of problems that includes several pre-existing split-type problems: split feasibility, split zeroes problems, split variational inequalities and so on. This makes them not only a rich direction of theoretical study but also one with important and varied practical applications: large-dimensional linear systems, optimization, signal reconstruction, boundary value problems and others. In this paper, we complement the existing algorithmic tools with a new procedure based on a three-step iterative process. The resulting approximating sequence is proved to converge weakly to a solution. The behavior of the new algorithm is illustrated on mixed optimization–feasibility and mixed linear–feasibility systems. Standard polynomiographic techniques are applied for a comparative visual analysis of the convergence behavior.

Keywords:

split monotone variational inclusions; split variational inequality; split feasibility; maximal monotone operators; mixed optimization–feasibility systems

MSC:

47H10; 54H25; 37C25; 47H04

1. Introduction

In general, the setting for split problems consists of two Hilbert spaces and a linear operator between them. The problem is to find points of the domain that satisfy some given conditions and whose images under the linear operator satisfy analogous conditions in the codomain. Depending on the restrictions imposed, as well as on the other mappings involved, several types of split problems have been defined and analyzed so far. Most often, these problems are related by well-defined inclusion relationships. However, the most important feature common to all split problems is their range of applications, which makes them more than a purely theoretical exercise.

For instance, the split monotone variational inclusion problem (SMVI) [1] is suited to settings that involve both multivalued mappings and single-valued self-mappings of the Hilbert spaces. Through particular choices of these mappings, several other split problems arise from this general framework:

- When the multivalued mappings are the normal cones corresponding to some given closed and convex subsets of the two Hilbert spaces, one finds the split variational inequality problem (SVI) [2]. Furthermore, the split feasibility problem (SFP) [3] (please also see [4,5,6,7,8,9,10,11,12,13]) is obtained by special assignments for the self-mappings involved in the variational inequalities. A panoramic picture of the various iterative algorithms developed so far to solve the SFP was provided by Hamdi et al. in [14].

- Because the split equality problem (SEP) (see [15,16]) can equivalently be converted to a split feasibility problem in some product space, it follows that the SEP also provides a subclass of the SMVI.

- When the multivalued mappings in a split monotone variational inclusion problem are null, one reaches the split zeroes problem (SZP) [2] and, in particular, the split common fixed-point problem (SCFPP) (an interesting survey of iterative algorithms suited to this type of problem is provided in [14]).

- By excluding the self-mappings and keeping only the multivalued mappings, we find the split common null-point problem (SCNPP) [17]. Some possible iterative algorithms to solve this problem are listed in Xiong et al. [18]. In particular, when the multivalued mappings are precisely the subdifferential operators of two lower semi-continuous convex functions, we reach the split minimization problem (SMP).

- When the two Hilbert spaces coincide, the two self-mappings coincide, the two multivalued mappings coincide and the linear operator is the identity map, the (simple, single-space) inclusion problem is recovered. A thorough survey of it is given by Luo in [19], together with an inertial self-adaptive splitting algorithm, as an alternative to the classical forward–backward splitting method. If, furthermore, the self-mapping is null, then Rockafellar’s variational inclusion problem [20] is obtained, with the convex minimization problem as a particular case. In this case, the forward–backward splitting procedure reduces to the proximal point algorithm [21]. An interesting approach to implicit variational inclusion problems was developed by Agarwal and Verma in [22].

All the problems listed above show the significant generality of the SMVI problem, which strongly motivates the search for new solution methods. We aim to introduce a new algorithm for the SMVI problem. The inspiration comes from the algorithm recently proposed by Feng et al. in [7] for the split feasibility problem. Feng et al. combined the CQ algorithm with the iteration procedure of Thakur et al. [23] and obtained the so-called SFP-TTP projective algorithm. Similar ideas were developed in [24,25] using other three-step iteration procedures, resulting in the so-called partially projective algorithms. These benefit from an interesting upgrade: one of the projections is computed only once per iteration. In the following, we adapt these ideas to the SMVI problem. To this end, we start by properly defining the framework and by recalling some of the original procedures.

2. Preliminaries

In this section, we recall the formal statements of all the split-type problems listed above, starting with the most general one, the split monotone variational inclusion problem.

Let $H_1$ and $H_2$ be two real Hilbert spaces and let $B_1\colon H_1 \to 2^{H_1}$ and $B_2\colon H_2 \to 2^{H_2}$ be two multivalued mappings on $H_1$ and $H_2$, respectively. Consider also a bounded linear operator $A\colon H_1 \to H_2$, as well as two given single-valued operators $f\colon H_1 \to H_1$ and $g\colon H_2 \to H_2$. The split monotone variational inclusion problem (SMVI) was introduced by Moudafi in [1] as a generalization of the split variational inequality problem (SVI) defined and analyzed by Censor et al. in [2]. The SMVI problem reads as follows:

$$\text{find } x^* \in H_1 \text{ such that } 0 \in f(x^*) + B_1(x^*)$$

and, simultaneously,

$$y^* = Ax^* \in H_2 \text{ solves } 0 \in g(y^*) + B_2(y^*).$$

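The SVI problem is recovered by considering C and Q, two closed and convex subsets of $H_1$ and $H_2$, respectively, and by taking the multivalued mappings as the corresponding normal cones: $B_1 = N_C$ and $B_2 = N_Q$ (recall that $N_C(x) = \{u \in H_1 : \langle u, z - x\rangle \le 0 \text{ for all } z \in C\}$ if $x \in C$, and $N_C(x) = \emptyset$ otherwise). Consequently, the split variational inequality problem reads as follows:

$$\text{find } x^* \in C \text{ such that } \langle f(x^*), x - x^*\rangle \ge 0 \text{ for all } x \in C$$

and, simultaneously,

$$y^* = Ax^* \in Q \text{ such that } \langle g(y^*), y - y^*\rangle \ge 0 \text{ for all } y \in Q.$$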

Furthermore, by letting $f = g = 0$, one finds the split feasibility problem (SFP) introduced by Censor and Elfving in [3]:

$$\text{find } x^* \in C \text{ such that } Ax^* \in Q.$$

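By letting $B_1$ and $B_2$ be the zero operators, one reaches the split zeroes problem (SZP), which was also introduced in [2]:

$$\text{find } x^* \in H_1 \text{ such that } f(x^*) = 0$$

and, simultaneously,

$$y^* = Ax^* \in H_2 \text{ such that } g(y^*) = 0.$$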

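In particular, if $f = I - V_1$ and $g = I - V_2$ for two self-mappings $V_1\colon H_1 \to H_1$ and $V_2\colon H_2 \to H_2$, we recover the split common fixed-point problem (SCFPP):

$$\text{find } x^* \in \mathrm{Fix}(V_1)$$

and, simultaneously,

$$Ax^* \in \mathrm{Fix}(V_2).$$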

Not least, by taking f and g from the SMVI problem as zero maps, one finds an important particular case, also studied by Byrne et al. in [17] as the split common null-point problem (SCNPP) for two set-valued mappings:

$$\text{find } x^* \in H_1 \text{ such that } 0 \in B_1(x^*)$$

and, simultaneously,

$$y^* = Ax^* \in H_2 \text{ such that } 0 \in B_2(y^*).$$

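Finally, from the optimality conditions for convex minimization, it is well known that if $\varphi\colon C \to \mathbb{R}$ is a lower semi-continuous convex function defined on a closed convex subset C of some Hilbert space H, then $x^* \in C$ minimizes $\varphi$ if and only if $0 \in \partial\varphi(x^*)$, where $\partial\varphi$ stands for the subdifferential operator (which is well known to be maximal monotone). That is why, given two closed and convex subsets $C \subseteq H_1$ and $Q \subseteq H_2$ and two lower semi-continuous convex functions $\varphi_1\colon C \to \mathbb{R}$ and $\varphi_2\colon Q \to \mathbb{R}$, by setting $B_1 = \partial\varphi_1$ and $B_2 = \partial\varphi_2$ (with $f = g = 0$), one reaches the split minimization problem (SMP):

$$\text{find } x^* \in C \text{ such that } x^* \in \arg\min_{x \in C}\varphi_1(x)$$

and, simultaneously,

$$y^* = Ax^* \in Q \text{ such that } y^* \in \arg\min_{y \in Q}\varphi_2(y).$$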

In the following, we recall some standard definitions which appear naturally in connection with split-type problems and are commonly encountered in the literature.

The usual assumptions about the split monotone variational inclusion problem refer, first of all, to its consistency, meaning that the solution set

$$\Omega = \{x^* \in H_1 : 0 \in f(x^*) + B_1(x^*) \text{ and } 0 \in g(Ax^*) + B_2(Ax^*)\}$$

is nonempty. Secondly, $B_1$ and $B_2$ are usually assumed to be maximal monotone operators, while f and g are assumed to be inverse strongly monotone. In this paper, we adopt these standard hypotheses as well.

Definition 1

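([5] and the references therein). Let H be a Hilbert space and $T\colon H \to H$ be a (possibly nonlinear) self-mapping of H. Then:

- T is said to be nonexpansive if $\|Tx - Ty\| \le \|x - y\|$ for all $x, y \in H$.

- T is said to be an averaged operator if $T = (1 - \alpha)I + \alpha N$, where $\alpha \in (0, 1)$, I is the identity map and $N\colon H \to H$ is a nonexpansive mapping.

- T is called monotone if $\langle Tx - Ty, x - y\rangle \ge 0$ for all $x, y \in H$.

- Assume $\nu > 0$. Then, T is called ν-inverse strongly monotone (ν-ism) if $\langle Tx - Ty, x - y\rangle \ge \nu\|Tx - Ty\|^2$ for all $x, y \in H$.

- Any 1-ism T is also known as being firmly nonexpansive, that is, $\langle Tx - Ty, x - y\rangle \ge \|Tx - Ty\|^2$ for all $x, y \in H$.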
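As a small numerical illustration of the ν-ism condition (our own, not taken from the paper), the sketch below, assuming NumPy, checks it for a linear map $f(x) = Kx$ with K symmetric positive semidefinite and nonzero; for such maps $\nu = 1/\lambda_{\max}(K)$ is a valid constant. The helper names are hypothetical, and random sampling can only refute the inequality, never prove it.

```python
import numpy as np


def ism_constant_psd(K):
    """For f(x) = K @ x with K symmetric positive semidefinite and nonzero,
    return nu = 1 / lambda_max(K), a valid inverse strong monotonicity constant."""
    return 1.0 / np.linalg.eigvalsh(K).max()


def looks_ism(f, nu, dim, trials=1000, seed=0, rtol=1e-9):
    """Sample random pairs (x, y) and test <f(x)-f(y), x-y> >= nu*||f(x)-f(y)||^2.
    Returns False as soon as a violating pair is found."""
    rng = np.random.default_rng(seed)
    for _ in range(trials):
        x, y = rng.standard_normal(dim), rng.standard_normal(dim)
        d = f(x) - f(y)
        lhs, rhs = np.dot(d, x - y), nu * np.dot(d, d)
        if lhs < rhs - rtol * (1.0 + abs(rhs)):
            return False
    return True


M = np.array([[1.0, 2.0], [3.0, 4.0]])
K = M.T @ M                                   # gradient of 0.5*||M x||^2 is K @ x
nu = ism_constant_psd(K)
print(looks_ism(lambda v: K @ v, nu, 2))      # expected: True
print(looks_ism(lambda v: K @ v, 2 * nu, 2))  # expected: False (2*nu is too large)
```

Gradients of smooth convex functions, such as the least-squares gradient used later in the paper, are the typical source of inverse strongly monotone single-valued operators.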

Several properties are worth mentioning next.

Proposition 1

([5] and the references therein). The following statements hold true on Hilbert spaces.

- (i)

- Each firmly nonexpansive mapping is averaged and each averaged operator is nonexpansive.

- (ii)

- T is a firmly nonexpansive mapping if and only if its complement $I - T$ is firmly nonexpansive.

- (iii)

- The composition of a finite number of averaged operators is averaged.

- (iv)

- An operator N is nonexpansive if and only if its complement $I - N$ is a $\frac{1}{2}$-ism.

- (v)

- An operator T is averaged if and only if its complement $I - T$ is a ν-ism, for some $\nu > \frac{1}{2}$. Moreover, if $T = (1 - \alpha)I + \alpha N$, then $I - T$ is a $\frac{1}{2\alpha}$-ism.

- (vi)

- If T is a ν-ism and $\gamma > 0$, then $\gamma T$ is a $\frac{\nu}{\gamma}$-ism.

Definition 2.

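Let H be a real Hilbert space. Let $B\colon H \to 2^H$ and $\lambda > 0$.

- B is called a monotone mapping if $\langle u - v, x - y\rangle \ge 0$ for all $x, y \in H$, $u \in B(x)$ and $v \in B(y)$.

- B is called a maximal monotone mapping if B is monotone and its graph is not properly contained in the graph of any other monotone operator.

- The resolvent of B with parameter λ is denoted and defined by $J_\lambda^B = (I + \lambda B)^{-1}$, where I is the identity operator (recall that the scalar multiplication and the addition of multivalued operators are defined by $(\lambda B)(x) = \{\lambda u : u \in B(x)\}$ and $(B + D)(x) = \{u + v : u \in B(x),\ v \in D(x)\}$, while the inverse of B is the operator defined by $B^{-1}(y) = \{x \in H : y \in B(x)\}$.)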

The most important properties of maximal monotone operators from the perspective of variational inclusions are given below.

Proposition 2.

If B is a maximal monotone operator, then

- (i)

- $J_\lambda^B$ is a firmly nonexpansive (single-valued) operator (see [26,27]). Furthermore, according to Proposition 1 (i), it is also averaged and, ultimately, nonexpansive.

- (ii)

- (an immediate consequence of the definition).

- (iii)

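- Let $f\colon H \to H$ be an α-inverse strongly monotone operator. Then, $J_\lambda^B(I - \lambda f)$ is averaged for each $\lambda \in (0, 2\alpha)$ (see [1,17]). Again, Proposition 1 (i) ensures the nonexpansiveness of $J_\lambda^B(I - \lambda f)$.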
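Two resolvents have well-known closed forms and can serve as sanity checks for Proposition 2 (i): for the normal cone $N_C$ of a box C, $J_\lambda^{N_C}$ is the projection onto C (componentwise clipping) for every $\lambda > 0$, and for $B = \partial\|\cdot\|_1$, $J_\lambda^B$ is componentwise soft-thresholding at level λ. The sketch below, an illustration of our own with hypothetical helper names and assuming NumPy, implements both and tests the firm nonexpansiveness inequality on random pairs.

```python
import numpy as np


def resolvent_normal_cone_box(x, lo, hi):
    """J_lambda^{N_C} for the box C = [lo, hi]^n: the projection P_C (any lambda > 0)."""
    return np.clip(x, lo, hi)


def resolvent_l1(x, lam):
    """J_lambda^B for B = subdifferential of ||.||_1: soft-thresholding at level lam."""
    return np.sign(x) * np.maximum(np.abs(x) - lam, 0.0)


def looks_firmly_nonexpansive(J, dim, trials=1000, seed=1, atol=1e-9):
    """Sample pairs and test <Jx - Jy, x - y> >= ||Jx - Jy||^2 (can only refute)."""
    rng = np.random.default_rng(seed)
    for _ in range(trials):
        x, y = 3 * rng.standard_normal(dim), 3 * rng.standard_normal(dim)
        d = J(x) - J(y)
        if np.dot(d, x - y) < np.dot(d, d) - atol:
            return False
    return True


print(resolvent_l1(np.array([2.0, -0.3, -1.5]), 1.0))
print(looks_firmly_nonexpansive(lambda v: resolvent_l1(v, 0.5), 4))
print(looks_firmly_nonexpansive(lambda v: resolvent_normal_cone_box(v, -1.0, 1.0), 4))
```

Note that the zero of $\partial\|\cdot\|_1$, the origin, is indeed fixed by soft-thresholding, in line with the link between zeros of B and fixed points of its resolvent.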

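Moudafi presented in [1] an algorithm (which we shall call the SMVI-Standard Algorithm) that converges weakly to a solution of the SMVI under certain conditions. It relies on the following iteration procedure: for $\lambda > 0$ and an arbitrary initial point $x_0 \in H_1$, the sequence $\{x_k\}$ is generated by

$$x_{k+1} = U\big(x_k + \gamma A^*(T - I)Ax_k\big), \quad k \ge 0,$$

where $\gamma \in (0, 1/L)$, $A^*$ being the adjoint operator of A, while L is the spectral radius, i.e., the largest eigenvalue, of the selfadjoint operator $A^*A$; moreover, $U = J_\lambda^{B_1}(I - \lambda f)$ and $T = J_\lambda^{B_2}(I - \lambda g)$.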

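In particular, for the split common null-point problem for two set-valued mappings, this procedure becomes: for $\lambda > 0$ and an arbitrary initial point $x_0 \in H_1$, the sequence $\{x_k\}$ is generated by

$$x_{k+1} = J_\lambda^{B_1}\big(x_k + \gamma A^*(J_\lambda^{B_2} - I)Ax_k\big), \quad k \ge 0.$$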

We shall further refer to it as SCNPP-Standard Algorithm.
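A minimal finite-dimensional sketch of the SMVI-Standard iteration $x_{k+1} = U(x_k + \gamma A^*(T - I)Ax_k)$, assuming NumPy, real matrices (so that $A^* = A^T$) and that U and T are supplied as callables; the function and parameter names are our own. Passing $U = J_\lambda^{B_1}$ and $T = J_\lambda^{B_2}$ runs the SCNPP-Standard Algorithm. The usage example is a hypothetical split feasibility instance ($B_1 = N_C$, $B_2 = N_Q$, $f = g = 0$), so that U and T are projections.

```python
import numpy as np


def smvi_standard(x0, A, U, T, gamma=None, tol=1e-8, max_iter=10_000):
    """Sketch of x_{k+1} = U(x_k + gamma * A^T (T(A x_k) - A x_k)) for real matrices.

    U and T are callables standing for J_lam^{B1}(I - lam f) and J_lam^{B2}(I - lam g);
    the adjoint A^* is A.T and L is the largest eigenvalue of A^T A.
    Stops when two consecutive iterates are closer than tol; returns (x, iterations).
    """
    L = np.linalg.eigvalsh(A.T @ A).max()
    if gamma is None:
        gamma = 0.5 / L                       # default choice inside (0, 1/L)
    if not 0.0 < gamma < 1.0 / L:
        raise ValueError("gamma must lie in (0, 1/L)")
    x = np.asarray(x0, dtype=float)
    for k in range(1, max_iter + 1):
        Ax = A @ x
        x_new = U(x + gamma * (A.T @ (T(Ax) - Ax)))
        if np.linalg.norm(x_new - x) < tol:
            return x_new, k
        x = x_new
    return x, max_iter


# Hypothetical SFP instance: f = g = 0, B1 = N_C, B2 = N_Q, so U = P_C and T = P_Q.
A = np.array([[1.0, 0.0], [0.0, 2.0]])
center, radius = np.array([1.0, 1.0]), 0.5


def U(x):                                     # P_C with C = [0, 1]^2
    return np.clip(x, 0.0, 1.0)


def T(y):                                     # P_Q with Q = closed ball B(center, radius)
    d = y - center
    n = np.linalg.norm(d)
    return y if n <= radius else center + radius * d / n


x, k = smvi_standard(np.zeros(2), A, U, T)
```

Computing L with `eigvalsh` is only practical for small dense matrices; for large problems an upper bound on $\|A\|^2$ can replace it, since any γ below the reciprocal of that bound also lies in $(0, 1/L)$.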

The main result in [1] is stated below.

Theorem 1

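([1]). Consider a bounded linear operator $A\colon H_1 \to H_2$, $H_1$ and $H_2$ being two Hilbert spaces. Let $f\colon H_1 \to H_1$ and $g\colon H_2 \to H_2$ be $\alpha_1$- and $\alpha_2$-inverse strongly monotone operators on $H_1$ and $H_2$, respectively, $B_1$ and $B_2$ two maximal monotone operators, and set $\alpha = \min\{\alpha_1, \alpha_2\}$. Consider the operators $U = J_\lambda^{B_1}(I - \lambda f)$ and $T = J_\lambda^{B_2}(I - \lambda g)$, with $\lambda \in (0, 2\alpha)$. Then, any sequence $\{x_k\}$ generated by the SMVI-Standard Algorithm converges weakly to some $x^* \in \Omega$ (a solution for the SMVI), provided that $\Omega \neq \emptyset$ and $\gamma \in (0, 1/L)$.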

In addition, for the particular case of the split common null-point problem (i.e., $f = g = 0$), Byrne et al. [17] provided a similar result under the more relaxed condition $\gamma \in (0, 2/L)$. A strong convergence result was also stated and proved in [17].

Theorem 2

([17]). Let $H_1$ and $H_2$ be two real Hilbert spaces. Let two set-valued, odd and maximal monotone operators $B_1\colon H_1 \to 2^{H_1}$ and $B_2\colon H_2 \to 2^{H_2}$, and a bounded linear operator $A\colon H_1 \to H_2$ be given. If $\gamma \in (0, 2/L)$, then any sequence generated by the SCNPP-Standard Algorithm converges strongly to a solution $x^*$ of the SCNPP.

The following lemmas will provide important tools for the next section.

Lemma 1

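([28]). If, in a Hilbert space H, the sequence $\{x_n\}$ converges weakly to a point x, then, for any $y \in H$ with $y \neq x$, the following inequality holds true:

$$\liminf_{n\to\infty}\|x_n - x\| < \liminf_{n\to\infty}\|x_n - y\|.$$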

Lemma 2

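([28]). In a Hilbert space H, for every nonexpansive mapping $T\colon C \to H$ defined on a closed convex subset $C \subseteq H$, the mapping $I - T$ is demiclosed at 0 (if $x_n \rightharpoonup x$ and $\lim_{n\to\infty}\|x_n - Tx_n\| = 0$, then $x = Tx$).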

Lemma 3

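([29], Lemma 1.3). Suppose that X is a uniformly convex Banach space and $0 < a \le t_n \le b < 1$ for all $n \in \mathbb{N}$ (i.e., $\{t_n\}$ is bounded away from 0 and 1). Let $\{x_n\}$ and $\{y_n\}$ be two sequences of X such that $\limsup_{n\to\infty}\|x_n\| \le r$, $\limsup_{n\to\infty}\|y_n\| \le r$ and $\lim_{n\to\infty}\|t_nx_n + (1 - t_n)y_n\| = r$ hold true for some $r \ge 0$. Then, $\lim_{n\to\infty}\|x_n - y_n\| = 0$.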

3. New Three-Step Algorithm for Split Variational Inclusions

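Further on, we work under the following hypotheses: $B_1$ and $B_2$ are maximal monotone set-valued mappings, and f and g are $\alpha_1$- and $\alpha_2$-inverse strongly monotone operators, respectively. Set $\alpha = \min\{\alpha_1, \alpha_2\}$ and denote $U = J_\lambda^{B_1}(I - \lambda f)$ and $T = J_\lambda^{B_2}(I - \lambda g)$, with $\lambda \in (0, 2\alpha)$. According to Proposition 2 (iii), both T and U are averaged, which means that they are also nonexpansive.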

Feng et al. introduced in [7] a three-step algorithm to solve the split feasibility problem. Their starting point was the TTP three-step iterative procedure introduced in [23] to solve the fixed-point problem for a nonexpansive mapping T. For an arbitrary initial point, the sequence is generated through the TTP procedure by the iteration scheme

where the iteration coefficients are taken from three real sequences in (0,1).
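Purely as an illustration, the sketch below assumes that the TTP procedure has the three-step form $z_n = (1 - c_n)x_n + c_nTx_n$, $y_n = (1 - b_n)z_n + b_nTz_n$, $x_{n+1} = (1 - a_n)Tz_n + a_nTy_n$, with constant coefficients; these formulas and the names a, b, c are our assumption, to be checked against [23] before relying on them. It uses NumPy and a rotation by 90 degrees as T, a nonexpansive map whose Picard iterates cycle forever, while the averaged three-step scheme converges to its unique fixed point 0.

```python
import numpy as np


def ttp_type(T, x0, a, b, c, tol=1e-10, max_iter=10_000):
    """Three-step iteration for a nonexpansive map T (assumed TTP-type form):
        z      = (1 - c) x + c T(x)
        y      = (1 - b) z + b T(z)
        x_next = (1 - a) T(z) + a T(y)
    with constant coefficients a, b, c in (0, 1). Returns (x, iterations)."""
    x = np.asarray(x0, dtype=float)
    for k in range(1, max_iter + 1):
        z = (1 - c) * x + c * T(x)
        Tz = T(z)                              # T(z) is reused in two places
        y = (1 - b) * z + b * Tz
        x_new = (1 - a) * Tz + a * T(y)
        if np.linalg.norm(x_new - x) < tol:
            return x_new, k
        x = x_new
    return x, max_iter


R = np.array([[0.0, -1.0], [1.0, 0.0]])       # rotation by 90 degrees, Fix(R) = {0}
x, k = ttp_type(lambda v: R @ v, [1.0, 0.0], 0.5, 0.5, 0.5)
print(x, k)                                    # x is numerically the fixed point 0
```

For this particular T, each step multiplies the norm by $|(1 - ab) + ab\,i|\,|(1 - c) + c\,i| \approx 0.559$ when $a = b = c = 0.5$, which is why convergence is geometric here.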

Turning back to Moudafi’s SMVI-Standard Algorithm, we may rewrite it as follows:

$$x_{k+1} = U(Sx_k), \quad k \ge 0,$$

where

$$S = I + \gamma A^*(T - I)A. \qquad (1)$$

We notice that the resulting procedure has the pattern of a Krasnosel’skii iterative process (for $A = I$, one has $S = (1 - \gamma)I + \gamma T$). This suggests replacing it by a TTP-type procedure. Moreover, we use the mapping U only partially, namely once per iteration step, as in [24,25]. Our procedure is defined as follows: for an arbitrary initial point $x_0 \in H_1$, the sequence $\{x_n\}$ is generated by

where the iteration coefficients are taken from three real sequences in (0,1). We shall refer to this iteration procedure as the SMVI-TTP Algorithm.

We start with some fundamental lemmas.

Lemma 4.

If S is defined by relation (1), then $\mathrm{Fix}(U) \cap \mathrm{Fix}(S) = \Omega$, where $\mathrm{Fix}(U)$ and $\mathrm{Fix}(S)$ denote the sets of fixed points of the operators U and S, respectively.

Proof.

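According to Proposition 2 (ii), $\Omega = \{x \in \mathrm{Fix}(U) : Ax \in \mathrm{Fix}(T)\}$. Moreover, because $Sx = x + \gamma A^*(TAx - Ax)$, if $Ax$ is a fixed point of T, it follows that x is a fixed point of S. Therefore, $\Omega \subseteq \mathrm{Fix}(U) \cap \mathrm{Fix}(S)$. Let us prove next the converse inclusion.

Let $x \in \mathrm{Fix}(U) \cap \mathrm{Fix}(S)$. It follows that $A^*(TAx - Ax) = 0$. Let $p \in \Omega$. We wish to prove that $Ax \in \mathrm{Fix}(T)$. Assume the contrary, i.e., $TAx \neq Ax$. Using the facts that $TAp = Ap$ and $A^*(TAx - Ax) = 0$, we find

$$\|TAx - TAp\|^2 = \|TAx - Ax\|^2 + \|Ax - Ap\|^2 + 2\langle A^*(TAx - Ax), x - p\rangle = \|TAx - Ax\|^2 + \|Ax - Ap\|^2.$$

Because $\|TAx - Ax\| > 0$, it follows that

$$\|TAx - TAp\| > \|Ax - Ap\|,$$

which contradicts the fact that T is nonexpansive (see Proposition 2 (iii)).

In conclusion, $\mathrm{Fix}(U) \cap \mathrm{Fix}(S) = \Omega$, and the proof is complete. □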

Lemma 5.

The mapping S defined by relation (1) is nonexpansive.

Proof.

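According to Proposition 2 (iii), T is averaged. It follows from Proposition 1 (v) that $I - T$ is a ν-ism, for some $\nu > \frac{1}{2}$. Therefore, for all $x, y \in H_1$ (recall that $\|A^*\|^2 = \|A^*A\| = L$),

$$\langle A^*(I - T)Ax - A^*(I - T)Ay, x - y\rangle = \langle (I - T)Ax - (I - T)Ay, Ax - Ay\rangle \ge \nu\|(I - T)Ax - (I - T)Ay\|^2 \ge \frac{\nu}{L}\|A^*(I - T)Ax - A^*(I - T)Ay\|^2,$$

hence $A^*(I - T)A$ is a $\frac{\nu}{L}$-ism. Moreover, from Proposition 1 (vi), it follows that $\gamma A^*(I - T)A$ is a $\frac{\nu}{\gamma L}$-ism, and $\frac{\nu}{\gamma L} > \frac{1}{2}$ because $\nu > \frac{1}{2}$ and $\gamma L < 1$. Applying Proposition 1 (v) again, we obtain that $S = I - \gamma A^*(I - T)A$ is averaged and also nonexpansive. □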
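Lemma 5 can also be probed numerically. The sketch below is our own illustration with hypothetical data, assuming NumPy: it builds S from relation (1) with T the projection onto a ball (firmly nonexpansive, hence averaged) and a step γ in (0, 1/L), and records the largest observed ratio $\|Sx - Sy\|/\|x - y\|$ on random pairs; a value above 1 would contradict nonexpansiveness. For contrast, it also takes T = 0 (the projection onto Q = {0}) with γ = 3/L, outside the admissible range, where $S = I - \gamma A^TA$ is visibly expansive.

```python
import numpy as np


def make_S(A, T, gamma):
    """S = I + gamma * A^T (T - I) A, as in relation (1), for a real matrix A."""
    def S(x):
        Ax = A @ x
        return x + gamma * (A.T @ (T(Ax) - Ax))
    return S


def project_ball(y, center, radius):
    """Projection onto the closed ball B(center, radius)."""
    d = y - center
    n = np.linalg.norm(d)
    return y if n <= radius else center + radius * d / n


def max_ratio(F, dim, trials=2000, seed=2):
    """Largest observed ||F(x) - F(y)|| / ||x - y|| over random pairs."""
    rng = np.random.default_rng(seed)
    worst = 0.0
    for _ in range(trials):
        x, y = 5 * rng.standard_normal(dim), 5 * rng.standard_normal(dim)
        worst = max(worst, np.linalg.norm(F(x) - F(y)) / np.linalg.norm(x - y))
    return worst


A = np.array([[1.0, 2.0], [0.0, 1.0], [1.0, -1.0]])     # hypothetical operator R^2 -> R^3
L = np.linalg.eigvalsh(A.T @ A).max()
T_ball = lambda y: project_ball(y, np.zeros(3), 1.0)
print(max_ratio(make_S(A, T_ball, 0.9 / L), 2))                     # stays <= 1
print(max_ratio(make_S(A, lambda y: np.zeros_like(y), 3.0 / L), 2))  # exceeds 1
```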

Lemma 6.

Let $\{x_n\}$ be the sequence generated by the SMVI-TTP Algorithm (2). Then, $\lim_{n\to\infty}\|x_n - p\|$ exists for any $p \in \Omega$.

Proof.

Let $p \in \Omega$; according to Lemma 4, $p \in \mathrm{Fix}(U) \cap \mathrm{Fix}(S)$. Because S is nonexpansive (Lemma 5), it is also quasi-nonexpansive, i.e., $\|Sx - p\| \le \|x - p\|$ for each $x \in H_1$. Thus, the iteration procedure (2) leads to

The same reasoning applies to , and one obtains

Now, using inequality (3), one finds

In addition, using the nonexpansiveness of U (see Proposition 2 (iii)) and the fact that p is a fixed point of U, we find that

and together with (3) and (5), these lead to

We conclude from (7) that $\{\|x_n - p\|\}$ is nonincreasing and bounded below for all $p \in \Omega$. Hence, $\lim_{n\to\infty}\|x_n - p\|$ exists. □

Lemma 7.

Let $\{x_n\}$ be the sequence generated by the SMVI-TTP Algorithm (2), with bounded away from 0 and 1. Then:

- (i)

- $\lim_{n\to\infty}\|x_n - Sx_n\| = 0$;

- (ii)

- $\lim_{n\to\infty}\|x_n - Ux_n\| = 0$.

Proof.

(i) Let $p \in \Omega$. By Lemma 6, it follows that $\lim_{n\to\infty}\|x_n - p\|$ exists. Let us denote

From (3), it is known that . Taking lim sup on both sides of the inequality, one obtains

Again, because S is quasi-nonexpansive, one has

Relation (12) can be rewritten as

(ii) For proving the second part of the Lemma, we start with some additional limits. First of all, using the definition of from equation (2), one finds

so

Furthermore, again using the nonexpansiveness of S, as well as relation (13), we obtain

which yields

Similarly, by considering the definition of , we obtain

which together with the limit (13) finally leads to

hence

Next, we use the results included in relations (14) and (16) and the nonexpansiveness of U to evaluate . We have

and therefore

Next, we base our proof on the following identity, which relates an operator F to its complement $G = I - F$ (see [5]):

$$\|x - y\|^2 - \|Fx - Fy\|^2 = 2\langle Gx - Gy, x - y\rangle - \|Gx - Gy\|^2, \quad x, y \in H_1.$$

Let us also recall that U is an averaged mapping (see Proposition 2 (iii)); so, according to Proposition 1 (v), its complement $I - U$ is a ν-ism, for some $\nu > \frac{1}{2}$. From Definition 1, we may conclude that

Based on equation (18), we may conclude that $\lim_{n\to\infty}\|x_n - Ux_n\| = 0$. □
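The identity used in the proof of Lemma 7, $\|x - y\|^2 - \|Fx - Fy\|^2 = 2\langle Gx - Gy, x - y\rangle - \|Gx - Gy\|^2$ with $G = I - F$, holds for any operator F. One way to see how it yields the final step: if $I - U$ is a ν-ism with $\nu > 1/2$ and $p \in \mathrm{Fix}(U)$, taking $F = U$ and $y = p$ gives $\|x - p\|^2 - \|Ux - p\|^2 \ge (2\nu - 1)\|x - Ux\|^2$. The sketch below is our own check, assuming NumPy: it verifies the identity for an arbitrary nonlinear F and the resulting inequality for U a projection onto the unit ball, where $I - U$ is firmly nonexpansive, i.e., ν = 1.

```python
import numpy as np


def identity_gap(F, x, y):
    """LHS - RHS of ||x-y||^2 - ||Fx-Fy||^2 = 2<Gx-Gy, x-y> - ||Gx-Gy||^2, G = I - F."""
    dF = F(x) - F(y)
    dG = (x - y) - dF                          # Gx - Gy with G = I - F
    lhs = np.dot(x - y, x - y) - np.dot(dF, dF)
    rhs = 2 * np.dot(dG, x - y) - np.dot(dG, dG)
    return lhs - rhs


def proj_unit_ball(v):
    n = np.linalg.norm(v)
    return v if n <= 1.0 else v / n


rng = np.random.default_rng(3)
x, y = rng.standard_normal(4), rng.standard_normal(4)
print(identity_gap(np.tanh, x, y))             # zero up to rounding

# Consequence with U = P_Q (nu = 1) and p a fixed point of U:
p = np.array([0.2, -0.1, 0.0, 0.3])            # ||p|| < 1, so U(p) = p
z = 3 * rng.standard_normal(4)
Uz = proj_unit_ball(z)
print(np.dot(z - p, z - p) - np.dot(Uz - p, Uz - p) >= np.dot(z - Uz, z - Uz))
```

In the proof, the left-hand side of this inequality tends to 0 along the iterates, which is what forces $\|x_n - Ux_n\| \to 0$.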

Theorem 3.

Let $\{x_n\}$ be the sequence generated by the SMVI-TTP Algorithm (2), with bounded away from 0 and 1. Then, $\{x_n\}$ converges weakly to a point of Ω.

Proof.

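One immediate consequence of Lemma 6 is that $\{x_n\}$ is bounded. Consequently, there exists at least one weakly convergent subsequence. Let

$$\omega_w(x_n) = \{x \in H_1 : x_{n_k} \rightharpoonup x \text{ for some subsequence } \{x_{n_k}\} \text{ of } \{x_n\}\}$$

denote the weakly subsequential limit set of the sequence $\{x_n\}$. Then, $\omega_w(x_n)$ is a nonempty subset. We prove next that it contains exactly one weak limit point. To start, let us assume the contrary: let $p, q \in \omega_w(x_n)$ with $p \neq q$, and let $x_{n_k} \rightharpoonup p$ and $x_{m_j} \rightharpoonup q$. By Lemma 7, we have $\lim_{k\to\infty}\|x_{n_k} - Sx_{n_k}\| = 0$ and $\lim_{k\to\infty}\|x_{n_k} - Ux_{n_k}\| = 0$, where S and U are nonexpansive mappings (see Lemma 5 and Proposition 2 (iii)). Applying Lemma 2, we find that $p \in \mathrm{Fix}(S)$ and $p \in \mathrm{Fix}(U)$; hence, by Lemma 4, $p \in \Omega$. Similar arguments provide $q \in \Omega$. In general, $\omega_w(x_n) \subseteq \Omega$.

From Lemma 6, the sequences $\{\|x_n - p\|\}$ and $\{\|x_n - q\|\}$ are convergent. These properties, together with Lemma 1, generate the following inequalities

$$\lim_{n\to\infty}\|x_n - p\| = \lim_{k\to\infty}\|x_{n_k} - p\| < \lim_{k\to\infty}\|x_{n_k} - q\| = \lim_{n\to\infty}\|x_n - q\| = \lim_{j\to\infty}\|x_{m_j} - q\| < \lim_{j\to\infty}\|x_{m_j} - p\| = \lim_{n\to\infty}\|x_n - p\|.$$

This provides the expected contradiction. Hence, $\omega_w(x_n)$ is a singleton. Let $\omega_w(x_n) = \{p\}$. We just need to prove that $x_n \rightharpoonup p$. Assume the contrary. Then, for a certain point $y \in H_1$, there exists $\varepsilon > 0$ such that, for all $k \in \mathbb{N}$, one could find an index $n_k > n_{k-1}$ satisfying $|\langle x_{n_k} - p, y\rangle| \ge \varepsilon$. The resulting subsequence $\{x_{n_k}\}$ is itself bounded (because $\{x_n\}$ is bounded); hence, it contains a weakly convergent subsequence $\{x_{n_{k_j}}\}$. However, this new subsequence is also a weakly convergent subsequence of $\{x_n\}$; hence, its weak limit must be p. Taking $j \to \infty$ in the inequality

$$|\langle x_{n_{k_j}} - p, y\rangle| \ge \varepsilon,$$

one finds $0 \ge \varepsilon$. Absurd! Hence, $x_n \rightharpoonup p$. □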

4. Mixed Optimization–Feasibility Systems with Simulation

Suppose that, among the minimizers of a given function (assuming there is more than one), we wish to select those having a particular feature. This leads to an optimization–feasibility system. For instance, consider a system of the following type:

$$\text{find } x^* \in H_1 \text{ such that } x^* \in \arg\min_{x \in H_1}\varphi(x) \text{ and } Ax^* \in Q,$$

where $H_1$ and $H_2$ denote Hilbert spaces, Q is a nonempty, closed and convex subset of $H_2$, $A\colon H_1 \to H_2$ is a bounded linear operator and $\varphi\colon H_1 \to \mathbb{R} \cup \{+\infty\}$ is a proper lower semi-continuous and convex function on $H_1$.

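This problem can be regarded as a split variational inclusion by taking $f = 0$ and $g = 0$, $B_1 = \partial\varphi$ and $B_2 = N_Q$. Note that f and g are ν-inverse strongly monotone for each $\nu > 0$. Because φ is proper, lower semi-continuous and convex, $\partial\varphi$ is a maximal monotone operator. Moreover, $N_Q$ is maximal monotone since Q is nonempty, closed and convex. Furthermore, for an arbitrarily selected parameter $\lambda > 0$,

$$J_\lambda^{\partial\varphi}(x) = \operatorname{prox}_{\lambda\varphi}(x) = \arg\min_{u \in H_1}\Big\{\varphi(u) + \frac{1}{2\lambda}\|u - x\|^2\Big\},$$

that is, the proximal mapping defined by Moreau in [30], and

$$J_\lambda^{N_Q} = P_Q,$$

making

$$U = \operatorname{prox}_{\lambda\varphi}, \qquad T = P_Q.$$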

Adapting the SMVI-TTP Algorithm and the SMVI-Standard Algorithm for this particular setting, we obtain some proximal procedures to solve the optimization–feasibility system:

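- the Prox-TTP Algorithm (21): for an arbitrary initial point $x_0 \in H_1$, the sequence $\{x_n\}$ is generated by the SMVI-TTP scheme (2) with $U = \operatorname{prox}_{\lambda\varphi}$ and $T = P_Q$, where the iteration coefficients are taken from three real sequences in (0,1);

- the Prox-Standard Algorithm (22): for an arbitrary initial point $x_0 \in H_1$, the sequence $\{x_k\}$ is generated by $x_{k+1} = \operatorname{prox}_{\lambda\varphi}\big(x_k + \gamma A^*(P_Q - I)Ax_k\big)$, $k \ge 0$, where $\gamma \in (0, 1/L)$ and $\lambda > 0$.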

Based on Theorems 1 and 3, any sequence generated by these procedures converges weakly to a solution of the mixed system, provided that the system is consistent and the parameters satisfy the conditions of those theorems.

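In particular, we can apply the same algorithms to solve a mixed linear–feasibility system of the following type:

$$Mx = b, \qquad Ax \in Q,$$

where M is a real $m \times n$ matrix, $b \in \mathbb{R}^m$, A is a real $k \times n$ matrix and Q is a closed and convex subset of $\mathbb{R}^k$. Using the least-squares function (whose minimizers are exactly the solutions of $Mx = b$ when this equation is consistent), we can rephrase the problem as an optimization–feasibility system:

$$x^* \in \arg\min_{x \in \mathbb{R}^n}\tfrac{1}{2}\|Mx - b\|^2, \qquad Ax^* \in Q.$$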

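In this particular case, we have $\varphi(x) = \frac{1}{2}\|Mx - b\|^2$ and $\partial\varphi(x) = \nabla\varphi(x) = M^T(Mx - b)$, so

$$\operatorname{prox}_{\lambda\varphi}(x) = (I + \lambda M^TM)^{-1}(x + \lambda M^Tb). \qquad (23)$$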
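Formula (23) amounts to one linear solve per evaluation. A minimal sketch, assuming NumPy and dense matrices (the function name and the data are hypothetical): it returns $u = (I + \lambda M^TM)^{-1}(x + \lambda M^Tb)$ and checks it against the optimality condition $u - x + \lambda M^T(Mu - b) = 0$ of the strongly convex problem defining $\operatorname{prox}_{\lambda\varphi}(x)$.

```python
import numpy as np


def prox_least_squares(x, M, b, lam):
    """prox_{lam*phi}(x) for phi(u) = 0.5*||M u - b||^2, via Formula (23):
    solve (I + lam M^T M) u = x + lam M^T b instead of forming the inverse."""
    n = M.shape[1]
    return np.linalg.solve(np.eye(n) + lam * (M.T @ M), x + lam * (M.T @ b))


M = np.array([[1.0, 1.0]])             # hypothetical row matrix
b = np.array([1.0])
x = np.array([2.0, 1.0])
u = prox_least_squares(x, M, b, lam=0.7)
residual = u - x + 0.7 * M.T @ (M @ u - b)   # optimality condition, should vanish
```

Solving the system rather than inverting $I + \lambda M^TM$ is the usual, numerically safer choice; for a fixed λ, the matrix can also be factorized once and reused at every iteration.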

Example 1.

Consider the mixed system

where $\|\cdot\|$ denotes the Euclidean ($\ell_2$) norm. We notice that this is a linear–feasibility-type system, with M the corresponding row matrix and Q the $\ell_2$-unit ball. Moreover,

$$P_Q(y) = \begin{cases} y, & \|y\| \le 1,\\ y/\|y\|, & \|y\| > 1,\end{cases}$$

while $\operatorname{prox}_{\lambda\varphi}$ will be computed using Formula (23), for each particular value assigned to the parameter λ.

To find a solution, we repeatedly apply the Prox-TTP Algorithm (21) until the distance between two consecutive estimates falls below a prescribed tolerance, say , and count the number of iterations performed, . For this, we need to assign values to the parameters involved in the procedure. For instance, by choosing and the initial estimate , as well as the iteration step coefficients , and , the algorithm reaches the approximate solution , after iterations.
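The stopping rule and iteration count described above can be sketched as follows. Since the Prox-TTP update depends on scheme (2), this sketch (our own, assuming NumPy, with hypothetical data and names) runs the Prox-Standard Algorithm (22) instead, on the hypothetical system $x_1 + x_2 = 1$, $\|x\| \le 1$ in $\mathbb{R}^2$ (so $M = [1\ 1]$, $b = 1$, $A = I$ and Q the unit ball); these are not the data of Example 1. Replacing `prox_standard_step` by a three-step update would give a TTP-type variant.

```python
import numpy as np

# Hypothetical data (not those of Example 1): x1 + x2 = 1 and ||x|| <= 1 in R^2.
M = np.array([[1.0, 1.0]])
b = np.array([1.0])
A = np.eye(2)                     # so L = 1 and gamma must lie in (0, 1)


def prox_ls(x, lam):
    """Formula (23) for phi(x) = 0.5*||M x - b||^2."""
    return np.linalg.solve(np.eye(2) + lam * (M.T @ M), x + lam * (M.T @ b))


def proj_unit_ball(y):
    """P_Q for Q the closed Euclidean unit ball."""
    n = np.linalg.norm(y)
    return y if n <= 1.0 else y / n


def prox_standard_step(x, lam=1.0, gamma=0.5):
    """One step x -> prox_{lam phi}(x + gamma A^T (P_Q(Ax) - Ax))."""
    Ax = A @ x
    return prox_ls(x + gamma * (A.T @ (proj_unit_ball(Ax) - Ax)), lam)


def run_until_tolerance(x0, tol=1e-10, max_iter=200):
    """Iterate until two consecutive estimates are closer than tol.
    Returns (estimate, iterations performed, whether the tolerance was met)."""
    x = np.asarray(x0, dtype=float)
    for k in range(1, max_iter + 1):
        x_new = prox_standard_step(x)
        if np.linalg.norm(x_new - x) < tol:
            return x_new, k, True
        x = x_new
    return x, max_iter, False


x_a, k_a, ok_a = run_until_tolerance([2.0, 2.0])   # ends near (0.5, 0.5)
x_b, k_b, ok_b = run_until_tolerance([0.5, 0.5])   # already a solution: one step
```

As in Table 1, different starting points can end at different solutions, since the solution set of this hypothetical system is a whole segment.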

It would be interesting to see what happens if the parametric features of the algorithm or the initial estimation are changed. The resulting data, for different parametric assignments, are included in Table 1.

Table 1.

The resulting solutions for different parametric assignments.

By comparing the first three rows, we notice that the approximate solution obtained from a fixed initial estimate does not seem to be affected by the choice of the iteration step coefficients. Therefore, to simplify the procedure, we could use constant coefficients. Comparing rows 3, 4 and 5, we may conclude that the larger , the smaller , the number of iterations required. Moreover, rows 5, 6 and 7 show that the problem has multiple solutions. As expected, not all the solutions of the equation also satisfy the feasibility condition, so not all of them solve the system. The initial estimate is itself a solution for the system (as indicated in row 7), while is not (see row 6).

Another interesting issue is the efficiency of the newly introduced algorithm compared to other procedures. We apply the Prox-TTP Algorithm (21), as well as the Prox-Standard Algorithm (22), and compare the results. To obtain a global picture of the convergence behavior of these two algorithms, we use a special technique called polynomiography. Instead of analyzing the approximate solution and the number of iterations required when starting from a single initial estimate, we choose an entire region of $\mathbb{R}^2$ and take each point of the region as a starting point. Then, for each such initial approximation, we count the number of iterations required for its orbit to reach a solution of the system. Depending on this number, we assign a color to that starting point. The color–number correspondence is usually set through a colorbar that accompanies the picture. The result is a colored image in which the color of a pixel encodes the number of iterations needed to obtain a solution when the algorithm starts from that point. Since a point that requires a single iteration is (approximately) a fixed point of the iteration map, the color corresponding to one iteration marks the solution set itself.

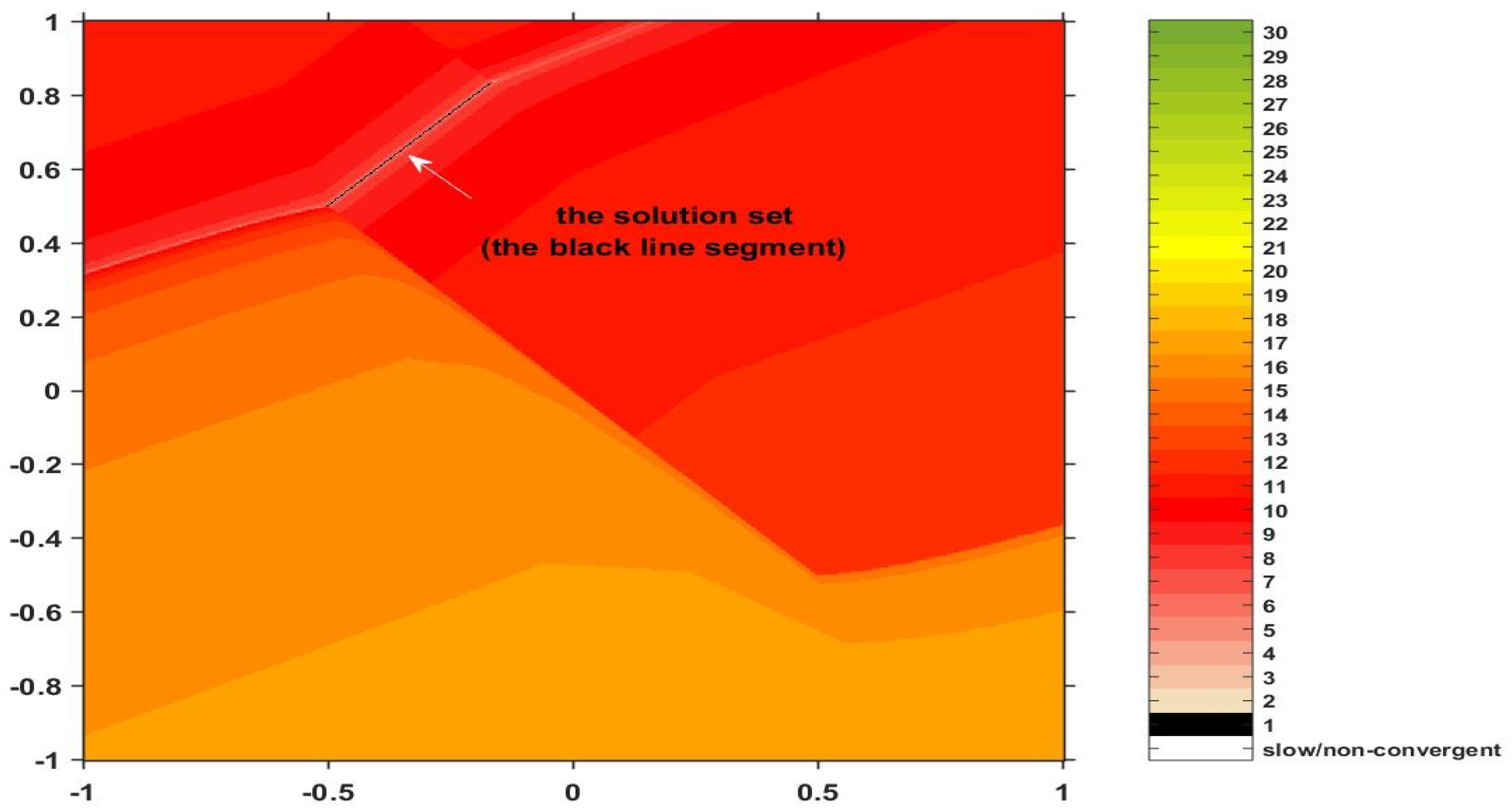

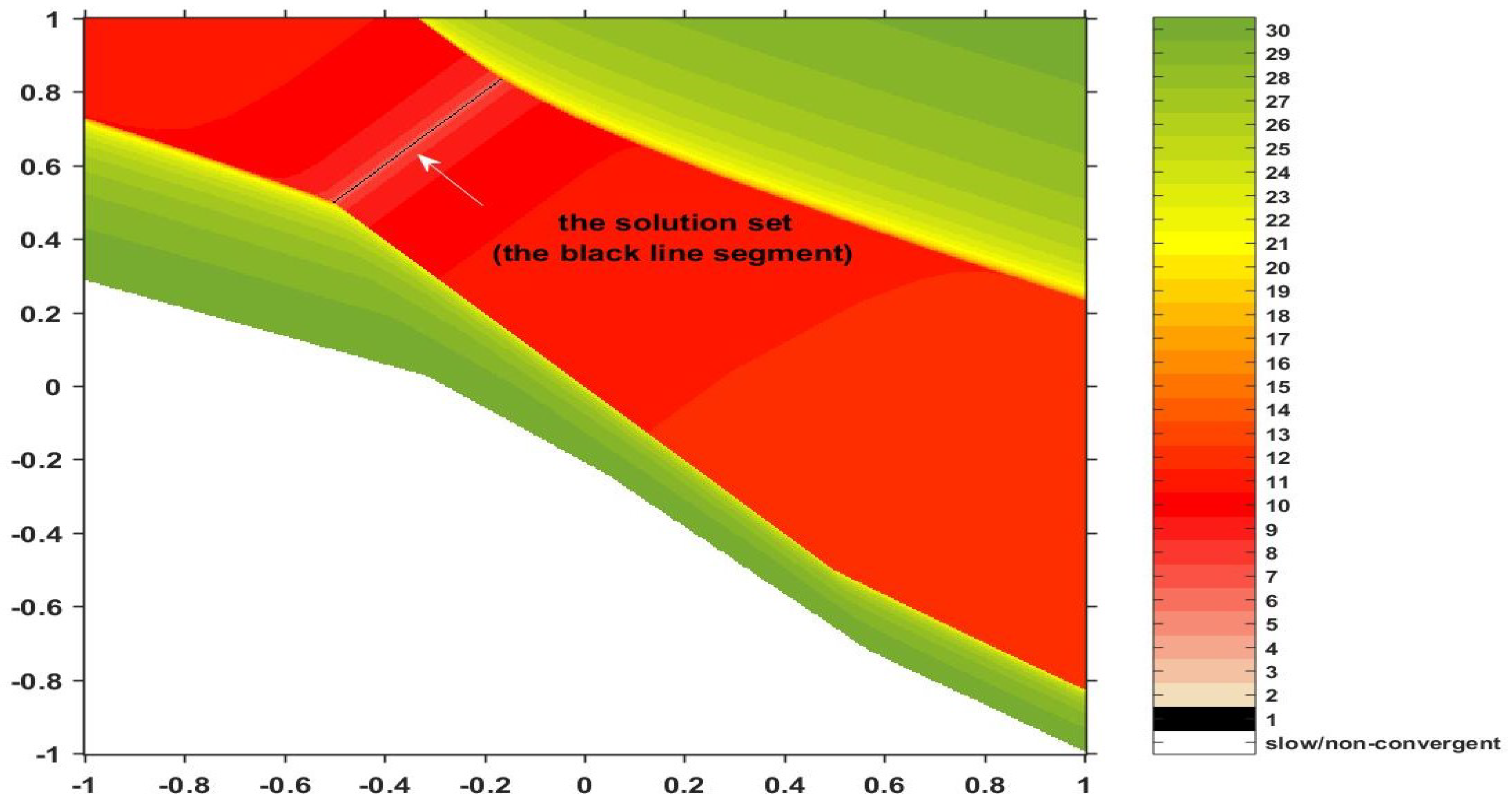

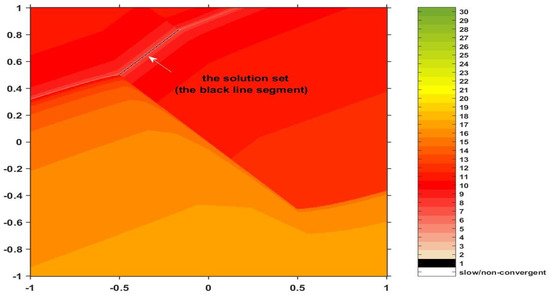

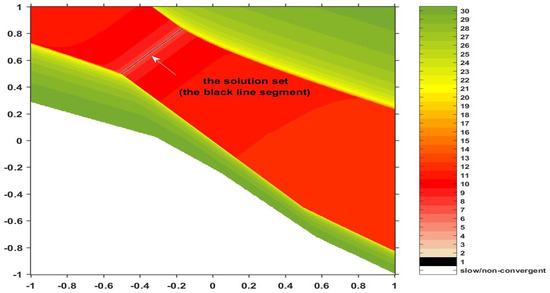

In our example, we choose the region enclosed in a square set to and we set the inputs for the algorithm as follows: the admissible error controlling the exit criterion is ; the iteration step coefficients are set to be constant for the Prox-TTP procedure and for the Prox-Standard procedure; and the resolvent parameter is chosen . In addition to the error-based exit criterion, we impose an extra stopping condition: if the prescribed accuracy is not reached after 30 iterative steps, the algorithm stops. This avoids infinite loops or very slow runs for initial points that generate slowly convergent iteration sequences. These points are colored white, as an extension of the colorbar. We also assign the color black to points for which only one iteration is required (the approximate solutions). The resulting polynomiographs are shown in Figure 1 and Figure 2.
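The iteration-count map behind a polynomiograph can be sketched as follows, assuming NumPy and reusing the hypothetical instance $x_1 + x_2 = 1$, $\|x\| \le 1$ (not the data of Example 1). Following the conventions above, a count of 1 marks (approximate) solutions, drawn in black, and starting points that miss the tolerance within 30 steps receive the code 0, drawn in white. The names `iteration_counts` and `prox_standard_step` are ours; any one-step map, such as a TTP-type update, can be passed instead.

```python
import numpy as np

M = np.array([[1.0, 1.0]])   # hypothetical system: x1 + x2 = 1 and ||x|| <= 1
b = np.array([1.0])


def prox_standard_step(x, lam=1.0, gamma=0.5):
    """One Prox-Standard step with A = I and Q the closed unit ball (L = 1)."""
    n = np.linalg.norm(x)
    px = x if n <= 1.0 else x / n                        # P_Q(x)
    v = x + gamma * (px - x)
    return np.linalg.solve(np.eye(2) + lam * (M.T @ M), v + lam * (M.T @ b))


def iteration_counts(step, xs, ys, tol=1e-6, max_iter=30):
    """counts[i, j] = iterations needed from (xs[j], ys[i]) until two consecutive
    iterates are closer than tol; 0 if not reached within max_iter (white)."""
    counts = np.zeros((len(ys), len(xs)), dtype=int)
    for i, y0 in enumerate(ys):
        for j, x0 in enumerate(xs):
            x = np.array([x0, y0], dtype=float)
            for k in range(1, max_iter + 1):
                x_new = step(x)
                if np.linalg.norm(x_new - x) < tol:
                    counts[i, j] = k
                    break
                x = x_new
    return counts


grid = np.linspace(-2.0, 2.0, 41)
counts = iteration_counts(prox_standard_step, grid, grid)
```

The array can then be rendered, for example, with Matplotlib's `imshow(counts, origin="lower", extent=(-2, 2, -2, 2))` and a colormap in which 1 is black and 0 is white; finer grids give smoother pictures at a proportionally higher cost.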

Figure 1.

Convergence behavior via the Prox-TTP Algorithm.

Figure 2.

Convergence behavior via the Prox-Standard Algorithm.

Analyzing the two images, the most important conclusion concerns the system solutions. As expected, both algorithms provide the same image of the solution set (the segment marked by the dark line). Moreover, the TTP procedure appears to be more efficient, since its polynomiograph mostly uses colors from the bottom of the palette, while the standard procedure also uses colors from the top, as well as white (indicating slow convergence). However, we must not forget that these images result from a particular selection of inputs. It is not excluded that, with a different choice of inputs, the standard procedure could become more efficient.

5. Conclusions

The standard algorithm that Moudafi proposed for the split variational inclusion problem involves two control parameters, λ and γ. This means that one could obtain more efficient procedures simply by adjusting the values of these parameters. It is natural to expect that performance could be controlled even better if more parameters are involved. The three-step iteration procedure analyzed here includes four control inputs, among them the iteration coefficients. Using polynomiography, we showed that, for a particular selection of these parameters, the overall convergence trend of the approximating sequences improves. More importantly, two new types of systems have been addressed as examples of SMVI problems; they combine an optimization problem and a linear subsystem, respectively, with a feasibility condition. In a particular experiment, we observed that the standard Moudafi procedure and the newly introduced algorithm provide similar images of the solution set.

Author Contributions

Conceptualization, A.B. and M.P.; software, A.B.; validation, A.B.; formal analysis, A.B. and M.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Moudafi, A. Split monotone variational inclusions. J. Optim. Theory Appl. 2011, 150, 275–283.

- Censor, Y.; Gibali, A.; Reich, S. Algorithms for the split variational inequality problem. Numer. Algorithms 2012, 59, 301–323.

- Censor, Y.; Elfving, T. A multiprojection algorithm using Bregman projections in a product space. Numer. Algorithms 1994, 8, 221–239.

- Byrne, C. Iterative oblique projection onto convex sets and the split feasibility problem. Inverse Probl. 2002, 18, 441–453.

- Byrne, C. A unified treatment of some iterative algorithms in signal processing and image reconstruction. Inverse Probl. 2004, 20, 103–120.

- Dang, Y.Z.; Gao, Y. The strong convergence of a three-step algorithm for the split feasibility problem. Optim. Lett. 2013, 7, 1325–1339.

- Feng, M.; Shi, L.; Chen, R. A new three-step iterative algorithm for solving the split feasibility problem. Univ. Politeh. Buch. Ser. A 2019, 81, 93–102.

- Sahu, D.R.; Pitea, A.; Verma, M. A new iteration technique for nonlinear operators as concerns convex programming and feasibility problems. Numer. Algorithms 2020, 83, 421–449.

- Vuong, P.T.; Strodiot, J.J.; Nguyen, V.H. A gradient projection method for solving split equality and split feasibility problems in Hilbert spaces. Optimization 2014, 64, 2321–2341.

- Wang, F.; Xu, H.K. Approximating curve and strong convergence of the CQ algorithm for the split feasibility problem. J. Inequal. Appl. 2010, 2010, 102085.

- Xu, H.K. A variable Krasnosel’skii-Mann algorithm and the multiple-set split feasibility problem. Inverse Probl. 2006, 22, 2021–2034.

- Yao, Y.; Postolache, M.; Liou, Y.C. Strong convergence of a self-adaptive method for the split feasibility problem. Fixed Point Theory Appl. 2013, 2013, 201.

- Yao, Y.; Postolache, M.; Zhu, Z. Gradient methods with selection technique for the multiple-sets split feasibility problem. Optimization 2020, 69, 269–281.

- Hamdi, A.; Liou, Y.C.; Yao, Y.; Luo, C. The common solutions of the split feasibility problems and fixed point problems. J. Inequal. Appl. 2015, 2015, 385.

- Tian, D.; Jiang, L.; Shi, L. Gradient methods with selection technique for the multiple-sets split equality problem. Mathematics 2019, 7, 928.

- Xu, H.K.; Cegielski, A. The Landweber operator approach to the split equality problem. SIAM J. Optim. 2021, 31, 626–652.

- Byrne, C.; Censor, Y.; Gibali, A.; Reich, S. Weak and strong convergence of algorithms for the split common null point problem. J. Nonlinear Convex Anal. 2012, 13, 759–775.

- Xiong, J.F.; Ma, Z.L.; Zhang, L.S. Convergence theorems for the split variational inclusion problem in Hilbert spaces. J. Nonlinear Funct. Anal. 2021, 40, 1–12.

- Luo, Y. An inertial splitting algorithm for solving inclusion problems and its applications to compressed sensing. J. Appl. Numer. Optim. 2020, 2, 279–295.

- Rockafellar, R.T. Monotone operators and the proximal point algorithm. SIAM J. Control Optim. 1976, 14, 877–898.

- Martinet, B. Régularisation d’inéquations variationnelles par approximations successives. Rev. Fr. Autom. Inform. Rech. Opér. 1970, 4, 154–158.

- Agarwal, R.P.; Verma, R.U. General implicit variational inclusion problems based on A–Maximal (m)-relaxed monotonicity (AMRM) framework. Appl. Math. Comput. 2009, 215, 367–379.

- Thakur, B.S.; Thakur, D.; Postolache, M. A new iteration scheme for approximating fixed points of nonexpansive mapping. Filomat 2016, 30, 2711–2720.

- Bejenaru, A.; Ciobanescu, C. New partially projective algorithm for split feasibility problems with applications to BVP. J. Nonlinear Convex Anal. 2022, 23, 485–500.

- Usurelu, G.I. Split feasibility handled by a single-projection three-step iteration with comparative analysis. J. Nonlinear Convex Anal. 2021, 22, 543–557.

- Bauschke, H.; Combettes, P.L. Convex Analysis and Monotone Operator Theory in Hilbert Spaces, 2nd ed.; Springer: New York, NY, USA, 2017.

- Minty, G.J. Monotone (nonlinear) operators in Hilbert space. Duke Math. J. 1962, 29, 341–346.

- Opial, Z. Weak convergence of the sequence of successive approximations for nonexpansive mappings. Bull. Am. Math. Soc. 1967, 73, 591–597.

- Schu, J. Weak and strong convergence of fixed points of asymptotically nonexpansive mappings. Bull. Austral. Math. Soc. 1991, 43, 153–159.

- Moreau, J.J. Proximité et dualité dans un espace hilbertien. Bull. Soc. Math. Fr. 1965, 93, 273–299.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).