1. Introduction

Outstanding achievements and world records in athletics events such as the 100 m sprint always make headlines and arouse widespread admiration. Similarly, considerable media attention and public concern are attached to record figures (often bad) relating to the economy, the weather or healthcare systems. Crucial social questions arise when we are faced with a steady flow of records, which are presented as ominous signs of dramatic underlying phenomena. It is therefore unsurprising that the term “record” has become such a constant in our modern everyday life and in a wide range of specialist domains. The probabilistic theory and statistical analysis of record-breaking data can be helpful in assessing the seriousness of these issues.

The mathematical theory of records is well developed, especially for data generated by independent and identically distributed (i.i.d.) random variables (r.v.) with a continuous underlying distribution function. As is well known, in this setting, one can only expect about log n record values among n observations, which means that records are rare. The reader interested in the theory of records can consult the monographs [1, 2, 3]. For statistical inference from record data, see [4].
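The scarcity of records can be illustrated numerically. The following is a minimal sketch, not part of the original development: it relies on the classical fact that the expected number of records among n i.i.d. continuous observations is the harmonic number 1 + 1/2 + ... + 1/n, which grows like log n. The function names are illustrative choices.

```python
import math
import random


def count_records(xs):
    """Count strict upper records: observations larger than all previous ones."""
    count = 0
    current_max = -math.inf
    for x in xs:
        if x > current_max:
            count += 1
            current_max = x
    return count


def expected_records(n):
    """Harmonic number H_n, the expected number of records among n
    i.i.d. observations with a continuous distribution."""
    return sum(1.0 / k for k in range(1, n + 1))


if __name__ == "__main__":
    rng = random.Random(0)
    n, trials = 2_000, 200
    mean = sum(
        count_records([rng.random() for _ in range(n)]) for _ in range(trials)
    ) / trials
    print(f"simulated mean number of records: {mean:.2f}")
    print(f"H_n = {expected_records(n):.2f}, log n = {math.log(n):.2f}")
```

With n = 2000, only about eight observations are expected to be records, which is why larger samples of "almost records" are attractive for inference.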

Concepts of “quasi-records” emerged as natural extensions of records and have proven to be worthwhile from a mathematical as well as an applied perspective. The general idea of values close to records was translated into a variety of definitions that were theoretically analysed and applied in widely different contexts. Near-records were introduced in [5] for applications in finance, and their properties were analysed in [6, 7]. In addition, the related concept of the δ-record was introduced in [8] and later studied in [9, 10, 11, 12]. These objects have practical applications in the case of negative δ, since δ-records are then more numerous than records. So, by considering samples of δ-records for statistical inference, we address the problem of the scarcity of records while keeping the extremal nature of the data. Indeed, it has been shown in [9] and in references therein that inferences based on δ-records outperform those based on records only.

The main objects of interest in this work are near-records. An observation is a near-record if it is not a record but is at a distance of less than a units from the last record; that is, it falls short of being a record by less than a units. While the number of records in an i.i.d. sequence of continuous r.v. grows with the logarithm of the number of observations, the number of near-records grows at speeds depending on the distribution of the observations. In fact, for heavy-tailed distributions, there are fewer near-records than records (in extreme cases, only a finite number of near-records can be observed along the whole sequence), while for light-tailed distributions, near-records outnumber records; see [13] for details.

Another interesting aspect of near-records is related to their values. It is well known that record values of an i.i.d. sequence behave as the so-called Shorrock process [14], which is a mixture of a non-homogeneous Poisson process and a Bernoulli process. In the particular case of non-negative integer-valued r.v., k is a record value with probability $P(X_1 = k \mid X_1 \ge k)$, the hazard rate at k, and the events {k is a record value} are independent.

In this paper, we focus on the process of near-record values for i.i.d. sequences of r.v., taking non-negative integer values. The case of continuous r.v., analysed in [13], showed that near-record values follow a Poisson cluster point process, where records are the centres of the clusters and near-records are the points in each cluster. The main characteristics of this process, including its asymptotic behaviour, were derived from the properties of the Poisson cluster process, which has been thoroughly studied in the literature. In the discrete setting of the present paper, we prove that near-record values also behave as a cluster process, with centres following a Bernoulli process. We fully characterise the process by giving an expression for its probability generating functional. In particular, we find the exact distribution of the number of times that k is a near-record, which turns out to be a mixture of a point mass at 0 and a geometric distribution. We also characterise the distribution of the total number of near-records for heavy-tailed distributions. Moreover, we study the limiting behaviour of the number of near-records with values less than n, as n goes to infinity, by giving laws of large numbers and central limit theorems. Rather than relying on properties of cluster processes, as done in [13], here, we use a more direct approach that consists of approximating the sequences under study by a sum of independent r.v. We give several examples of applications of our results to particular families, ranging from heavy-tailed distributions, with a finite number of near-records, to light-tailed ones, such as the Poisson distribution.

The paper is organised as follows: notation and first definitions are presented in Section 2. The process of near-record values is studied in Section 3, while in Section 4, we consider the eventual finiteness of the total number of near-records, followed by asymptotic results in Section 5. Finally, illustrative examples are shown in Section 6 and Appendix A is devoted to technical results.

2. Notation and Preliminary Definitions

The sets of real and positive real numbers are denoted by and , respectively. The sets of positive and non-negative integers are denoted by and , respectively. Sequences in are indexed by and are written in lower-case letters, between parentheses, such as , etc. All r.v. are assumed to be defined on a common probability space . The indicator r.v. of an event , taking the value 1 on B and 0 otherwise, is denoted by . The indicator function of , equal to 1 on A and 0 on , is denoted by .

When referring to a geometric r.v. or distribution throughout the paper, we assume 0 as the starting value. The probability generating function (p.g.f.) of an r.v. X, taking values in , is defined as , for all , such that the series is absolutely convergent.

Sequences of r.v. are also indexed by and are written in upper-case letters, such as , etc. The convergence of deterministic sequences to a limit L is denoted by or , and it is implicitly understood as , unless otherwise stated. The notation stands for . The same notation applies to random sequences, where the mode of convergence (almost sure or in distribution ) is indicated over the arrow. The -algebra of Borel subsets of is denoted by .

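Definition 1. Let $(X_n)$ be a sequence of r.v. and let a be a positive parameter. Then, for $n \ge 1$, $X_n$ is a record if $X_n > M_{n-1}$, and $X_n$ is a near-record if

$$M_{n-1} - a < X_n \le M_{n-1},$$

where $M_n = \max\{X_1, \ldots, X_n\}$, with $M_0 = -\infty$, by convention.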

From the above definitions, it is clear that a near-record is not a record, but it can take the value of the current record. Other random sequences of interest related to records are record times

, defined as

for

, with

, and record values

, given by

, for

. Additionally, we consider the set of record values as a point process on

, which can be described by the random counting measure

, defined by

We also define —the indicator of the event for which a record takes the value n.

Observe that record times are the jump times of the sequence of partial maxima and that record values are the (strictly increasing) subsequence of partial maxima , sampled at those jump times. However, without further probabilistic assumptions on , it may happen that , from some value of n on, which is equivalent to the existence of a final record. Furthermore, we have to ensure that the counting measure is boundedly finite in the sense of being finite on bounded sets.

Similarly, the sequence

of near-record times is defined by

for

, with

, and near-record values

are given by

, for

. We define the counting measure of near-record values by

and define the related r.v.

and

, for

.

As for records, assumptions are needed in order to ensure that near-record times and values are well defined. Additionally, in order to characterise as a cluster point process, we consider a classification of near-records in terms of their proximity to records.

Definition 2. (a) For , the n-th near-record value is said to be associated to the m-th record value if .

(b) For , the point process of near-record values associated to is defined by the random counting measure

We state here the probabilistic assumptions regarding , which hold throughout the paper. We assume that is a sequence of i.i.d. r.v., taking non-negative integer values, with . For convenience, we define and for .

In addition, let

be the hazard or failure rates, and

Note that , .

In order to ensure that no final record exists and thus that all record times are well defined, we assume that . This, in particular, implies . In addition, to avoid unnecessary complications, we assume .

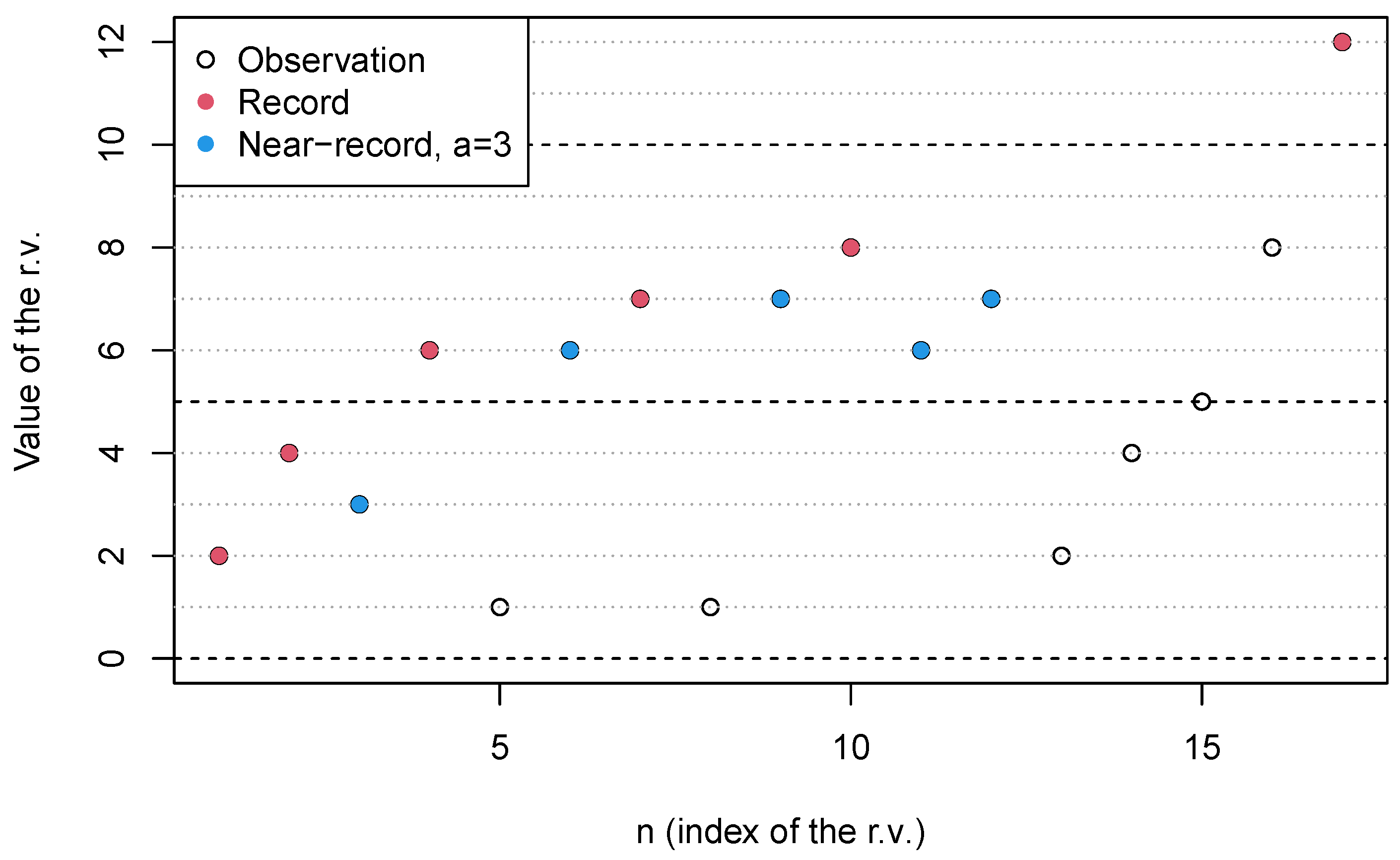

Example 1 (Records and near-records). Let us consider a near-record parameter and the following sequence of 17 observations: . See Figure 1.

The sequence of partial maxima is , , , , .

For the record value sequence, we have , , , , , .

According to Definition 2, there are no near-records associated to , there is one near-record (with value 3) associated to , one near-record (with value 6) associated to , one near-record (with value 7) associated to and two near-records (with values 6 and 7) associated to . Note also that, as and , there will be no near-records with value smaller than 10 after observation 17. Thus, , the number of near-records with value in the interval , is equal to 5.

Figure 1. Representation of the sequence given in Example 1. Red dots represent record observations, while blue dots are near-record observations with parameter .
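The bookkeeping behind Example 1 can be sketched in a few lines of code. This is only an illustration under stated assumptions: the sequence `xs` and the parameter `a = 2` below are hypothetical (they are not the data of Example 1), and a near-record is attached to the most recent record, following the verbal description of Definition 2.

```python
import math


def classify(xs, a):
    """Label each observation as 'record', 'near-record' or None.

    An observation is a record if it exceeds the running maximum, and a
    near-record if it is not a record but lies less than `a` units below
    the running maximum (it may equal the current record).
    """
    labels = []
    current_max = -math.inf
    for x in xs:
        if x > current_max:
            labels.append("record")
            current_max = x
        elif x > current_max - a:
            labels.append("near-record")
        else:
            labels.append(None)
    return labels


def clusters(xs, a):
    """Group near-record values under the record they follow.

    Returns one (record_value, [near_record_values]) pair per record.
    """
    groups = []
    for x, label in zip(xs, classify(xs, a)):
        if label == "record":
            groups.append((x, []))
        elif label == "near-record":
            groups[-1][1].append(x)
    return groups


if __name__ == "__main__":
    xs = [2, 1, 4, 3, 0, 4, 7, 6, 5, 9]   # hypothetical observations
    print(clusters(xs, a=2))
    # [(2, [1]), (4, [3, 4]), (7, [6]), (9, [])]
```

In this hypothetical run, the second 4 is a near-record although it equals the current record, and the last record has an empty cluster.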


3. The Point Process of Near-Record Values

We recall that a point process N on can be seen as a random measure and has a probability generating functional (p.g.fl.) defined by , under appropriate conventions regarding the logarithm of 0, where is a measurable function equal to 1 outside some bounded subset of (such functions are referred to as “suitable”). Alternative formulas for the p.g.fl., in the form of a product–integral or a product, are given by

In this section, we show that the near-record process is a discrete cluster process. Indeed, since , process can be seen as a superposition of a denumerable family of point processes which, by Proposition 1 (c) below, are conditionally independent. Moreover, since the r.v. take values in , we find that, for every bounded A, , where is an upper bound of A, the process is boundedly finite.

We characterise by means of its p.g.fl. and compute its first moments and other quantities of interest. To that end, we first present some useful results about records and near-records.

Lemma 1. (a) The point process ξ of record values has its atoms in , and the r.v. are independent Bernoulli, with

(b) For any suitable function h,

Proof. For a proof of (a), see, for instance, Theorem 16.1 in [3]. To prove (b), from (a) and the second formula in (6), we obtain, noting that outside a bounded set, and using the convention ,

□
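Part (a) of Lemma 1 can be checked by simulation. The sketch below is an illustration under assumptions that are not taken from the text: observations are geometric on {0, 1, ...} with P(X = k) = p(1 - p)^k, for which the hazard rate P(X = k)/P(X ≥ k) equals p for every k, so each value k should be a record value with frequency close to p. Function names and the truncation level `kmax` are illustrative.

```python
import math
import random


def geometric(rng, p):
    """Draw from the geometric law on {0, 1, ...}: P(X = k) = p (1 - p)^k."""
    u = 1.0 - rng.random()                  # uniform on (0, 1]
    return int(math.log(u) / math.log(1.0 - p))


def record_values_up_to(rng, p, kmax):
    """Simulate until the running maximum exceeds kmax and
    return the set of record values that are <= kmax."""
    records = set()
    current_max = -1
    while current_max <= kmax:
        x = geometric(rng, p)
        if x > current_max:
            current_max = x
            if x <= kmax:
                records.add(x)
    return records


def record_frequencies(p, kmax, trials, seed=0):
    """Empirical frequency with which each k in 0..kmax is a record value."""
    rng = random.Random(seed)
    hits = [0] * (kmax + 1)
    for _ in range(trials):
        for k in record_values_up_to(rng, p, kmax):
            hits[k] += 1
    return [h / trials for h in hits]


if __name__ == "__main__":
    print(record_frequencies(p=0.5, kmax=4, trials=5000))
```

Each printed frequency should be close to p = 0.5, up to Monte Carlo error, in line with the Bernoulli description of record values.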

Proposition 1. (a) Let be the number of near-records associated to record , according to Definition 2. Then,

That is, is geometrically distributed, conditionally on .

(b) Let be the near-record times associated to . Then, conditionally on , the near-record values are i.i.d. with

where . Moreover, conditionally on , the r.v. , for , are multinomially distributed, with parameters .

(c) The σ-algebras are independent, conditionally on .

Proof. (a) Note that the r.v. are independent and identically distributed as . Define the subsequence , with and . Then, conditionally on , the sequence is also i.i.d., but their common (conditional) distribution is . Lastly, is the number of terms up to (but not including) the first . Hence, conditionally on , is geometrically distributed, as stated.

(b) The near-record values are precisely the before the next record. So, conditionally on , they are i.i.d. with probabilities . In addition, from the arguments above, it is clear that the are (conditionally) multinomial.

(c) Note that, since the are i.i.d., the σ-algebras are independent, conditionally on . Then, since the r.v. , , are -measurable, the result follows.

□

We compute below the p.g.fl. of the point process , which is obtained from (6) by taking the conditional expectation. That is,

For measurable, and , let

Additionally, let .

Proposition 2. For a suitable function h,

Proof. Suppose , for some . From (10) and (b) of Proposition 1, we get

where the third and fourth equalities, as shown above, follow from the expressions of the p.g.f. of the multinomial and geometric distributions, respectively. □

Definition 3 (Definition 6.3.I in [15]). A (boundedly finite) point process N is a cluster point process on , with the centre process on and component processes the family of point processes if, for every bounded ,

Definition 4. For , let be the point process with p.g.fl. given by

where h is a suitable function and is defined in (11).

Theorem 1. (a) The point process η of near-records is a cluster process on , with the centre process ξ and independent component processes .

(b) For a suitable function h,

In particular, taking and bounded, we obtain the p.g.f. of , given by

(c) For every bounded ,

,

,

, for .

Proof. So, according to Definition 3, is a cluster point process, as asserted. Independence of component processes follows from (c) in Proposition 1, because is -measurable, for any .

(b) For h, a suitable function, let be defined as , which is also a suitable function. From 6.3.6 in [15], we have . Therefore, by Lemma 1 (b) and Proposition 2,

For we replace h by in (14) and get (15), noting that .

(c1) Observe that and recall that is binomial, conditional on , with parameters , and that is geometric, conditional on , with parameter .

Moreover, is binomial, conditional on , with parameters , where , hence

So, noticing that ,

which is finite since only for a finite set of i values.

(c2) From the computations above, it is clear that

Hence, the variance of the conditional expectation is

We compute next the expectation of the conditional variance, namely . Observe that, because of the conditional independence of the , we have

Therefore,

and so, . Collecting terms from the expressions above and using the formula , we obtain

(c3) The covariance , when , follows immediately from the formula for the variance, noting that . □

Corollary 1. For , the r.v. (the number of near-records taking the value N) is distributed as a mixture of a point mass at 0, with probability , and a geometric distribution, with a success probability equal to . That is,

where and . Moreover,

Proof. After simple computations, we obtain the p.g.f.,

which yields the probability mass function (p.m.f.) (20). Formulas in (21) follow from Theorem 1 (c), observing that , for . □

Remark 1. Note that , which implies , if A and B are at least a units apart; that is, if In fact, the r.v. and are independent if , due to the independence of the r.v. . In other words, the r.v. are -dependent.

4. Finiteness of the Number of Near-Records

Theorem 2. If , then a.s.; that is, the number of near-records in the whole sequence is finite a.s. Moreover, has finite expectation with .

Proof. From Proposition 1, we have

Taking the expectation above, we obtain

where the final term in the display above follows from the Cauchy–Schwarz inequality. Therefore, by the Borel–Cantelli lemma, , which yields the result.

In order to compute the p.g.f. of , we observe that , and so, by the monotone convergence theorem, , for . Furthermore, from (15), we have

The interchange of the limit and product above is justified by the monotone convergence theorem, after taking logarithms, since the sequence inside the product decreases with n.

Finally, (23) is obtained, for example, from the derivative of at or as the limit of . Finiteness follows from the bound , used in (25), which implies . Indeed, for sufficiently large i, we have and

The conclusion is obtained after arguing as in (25). □
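As stated in the concluding section, the finiteness condition is expressed through the convergence of the series of squared hazard rates. The following sketch shows how that series can be inspected numerically. It assumes the usual discrete hazard rate P(X = k)/P(X ≥ k); the two p.m.f.s are illustrative choices (the heavy-tailed one is a hypothetical example, not quoted from the text), and exact rational arithmetic is used to avoid rounding issues.

```python
from fractions import Fraction


def hazard_rates(pmf, n):
    """Return r_0, ..., r_{n-1}, with r_k = P(X = k) / P(X >= k)."""
    rates = []
    tail = Fraction(1)                  # P(X >= 0)
    for k in range(n):
        p_k = pmf(k)
        rates.append(p_k / tail)
        tail -= p_k                     # now P(X >= k + 1)
    return rates


def sum_of_squares(pmf, n):
    """Partial sum of the squared hazard rates r_0^2 + ... + r_{n-1}^2."""
    return sum(r * r for r in hazard_rates(pmf, n))


def heavy_pmf(k):
    """Hypothetical heavy-tailed p.m.f.: P(X = k) = 1 / ((k + 1)(k + 2))."""
    return Fraction(1, (k + 1) * (k + 2))


def geometric_pmf(k, p=Fraction(1, 2)):
    """Geometric p.m.f. on {0, 1, ...}: P(X = k) = p (1 - p)^k."""
    return p * (1 - p) ** k


if __name__ == "__main__":
    for n in (10, 100, 200):
        print(n, float(sum_of_squares(heavy_pmf, n)),
              float(sum_of_squares(geometric_pmf, n)))
```

For the heavy-tailed choice, the hazard rates are 1/(k + 2) and the partial sums stay bounded, while for the geometric law the hazard rates are constant and the partial sums grow linearly, so only the first case falls under the finiteness condition.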

5. Asymptotic Behaviour

We now focus on the asymptotic behaviour of . From Theorem 2, we know that if , then is finite a.s. In this section, we obtain laws of large numbers and a central limit theorem for under the assumption .

Lemma 2. are independent with p.g.f.

Proof. For simplicity, we prove pairwise independence only; the argument extends to the general case, although the details are somewhat laborious. We compute the joint p.g.f. of as follows: for ,

From the formula above, we get , as in (28). In addition, , which implies the independence of because the interval of convergence of the p.g.f. of can be extended from to . □

Remark 2. Note that in (27) is the number of near-records associated to i if i is a record value and is equal to 0 otherwise. Indeed, (28) shows that is distributed as a mixture of a point mass at 0 and a geometric random variable of parameter , with respective weights . Our interest in the variable arises from the following inequalities, which are easily verified:

The strategy of the proof is to establish the desired asymptotic results for the sum of s, which are then transferred to . For that purpose, we assume some minimal conditions on the hazard rates , besides .

The following proposition gathers some useful facts about the variable .

Proposition 3. (a) , .

(b) If then .

(c) If either

(i) and or

(ii) and

hold, then

(c1) ,

(c2)

Proof. (a) The (factorial) moments of are computed by differentiating the p.g.f. in (28).

For the variance, we obtain from (a) and the divergence of expectations that

(c) Suppose that (i) holds. Then, , which implies that expectations and variances are bounded above. Hence, from (b), the limits in (c1) hold.

Suppose now that (ii) holds, then , and also,

hence

The same argument applies to prove that and so, claim (c1) follows from Lemma A1. Finally, convergence in (c2) is obtained from (c1) and Markov’s inequality, noting that, from (a), we have . □

Theorem 3. If either

(i) and or

(ii) and , for some ,

Proof. By (29) and (c1) in Proposition 3, the result follows if we show that

By the strong law of large numbers for sequences of independent r.v., (32) follows if we prove that

Suppose first that (i) holds. Note that and also that . Hence, there exists a positive constant such that , for . So,

where convergence in the right-hand side of (34) follows from the Abel–Dini theorem (Theorem A1).

On the other hand, if condition (ii) holds, then it is easy to see that and . In addition, and . Therefore, (33) is equivalent to

which follows from Proposition A1, thus proving the stated result. □

Theorem 4. If either

(i) and or

(ii) and ,

then

where stands for the normal distribution with expectation μ and variance .

Proof. First, we prove asymptotic normality for and then transfer the result to . To that end, we show that the following Lyapunov condition holds:

where . Indeed, from the elementary inequality , for , we get

Note first that . Moreover, if (i) holds, then , and from (37) above, we obtain , where is a generic constant. So, the sequence in (36) is bounded above by , which tends to 0, because of Proposition 3 (b).

If (ii) holds, then , which implies and , for sufficiently large , where are generic constants. Then, the Lyapunov condition in (36) holds if

From the Cauchy–Schwarz inequality, we have , and so,

Convergence to 0 in (38) is obtained from Lemma A2, since .

From the Lyapunov central limit theorem, we conclude that

which, by (29) and Proposition 3 (c2), implies that

Now, taking expectations in (29), we have . In addition, from (a) in Proposition 3, we have , and so

by (b) and (c1) in Proposition 3. To conclude the proof, we must show that

From Theorem 1 (c), we have , and noting that

we obtain

In addition, from Proposition 3 (a), we get

and, from (42), we have

and

From the inequalities above, (41) is a direct consequence of (c1) in Proposition 3. □

6. Examples

In this section, we present some examples of application of our results to particular distributions. For each distribution, we consider the r.v. analysed in Corollary 1. In particular, we give formulas for , and the correlation . We also study the asymptotic behaviour of .

The distributions that we consider in this section are very different in terms of their right tails. Example 2 is devoted to a heavy-tailed distribution, similar to the Zeta distribution (see Example 3.1 in [16]), which is the discrete counterpart of the Pareto distribution. Example 3 deals with the geometric distribution, which has an exponential-like tail, while Example 4 is about the Poisson distribution, which is light-tailed.

Example 2 (Heavy-tailed distribution). Let , hence and . Then, from (21) we have

Note that , as , for every . The p.m.f. of can be obtained from (20) by noting that and .

Regarding the asymptotic behaviour of , we observe that and so, from Theorem 2, a.s. We now compute the main characteristics of this r.v. For the expectation, note that , for , and , for . So, from (23), we obtain

It is interesting to see that the expected total number of near-records is equal to the near-record parameter a. For the variance, we use (19) to obtain

which, after some algebra (see Appendix B), yields

In addition, the p.g.f. of is easily computed from (24) as

The p.m.f. of can be obtained from (44). For instance, taking , we get
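The observation that the expected total number of near-records equals a can be checked with exact arithmetic. The sketch below rests on assumptions that are not read off the text, since the defining formula of the example did not survive: it takes the hypothetical p.m.f. P(X = k) = 1/((k + 1)(k + 2)), an integer parameter a, and the cluster description (value i is a record with probability equal to its hazard rate, and a record at i is followed by a geometric number of near-records with mean P(i - a < X ≤ i)/P(X > i)).

```python
from fractions import Fraction


def tail(k):
    """P(X >= k) for the assumed p.m.f. P(X = k) = 1 / ((k + 1)(k + 2))."""
    return Fraction(1) if k <= 0 else Fraction(1, k + 1)


def expected_cluster_size(i, a):
    """Mean number of near-records attached to a record with value i.

    Until the next record, each observation above i - a is either a new
    record (X > i) or a near-record (i - a < X <= i), so the count of
    near-records is geometric with mean P(i - a < X <= i) / P(X > i).
    """
    near = tail(i - a + 1) - tail(i + 1)
    return near / tail(i + 1)


def expected_near_records(a, kmax):
    """Partial sum, over record values i <= kmax, of
    P(i is a record value) * (mean cluster size at i)."""
    total = Fraction(0)
    for i in range(kmax + 1):
        hazard = (tail(i) - tail(i + 1)) / tail(i)
        total += hazard * expected_cluster_size(i, a)
    return total


if __name__ == "__main__":
    for kmax in (10, 100, 200):
        print(kmax, float(expected_near_records(3, kmax)))
```

Under these assumptions the partial sums increase towards a = 3, and the gap after truncating at kmax is a sum of a consecutive reciprocals, so it shrinks roughly like a/kmax.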

Example 3 (Geometric distribution). The geometric distribution has , , , for , with . From (21), it is easy to see that

Observe that none of the quantities above depends on N. The p.m.f. of is given by (20), with , .

Since , hypothesis of Theorems 3 and 4 holds, and so we have a strong law of large numbers and a central limit theorem for . For Theorem 3 note that

and therefore,

For the central limit theorem, as shown in the proof of Theorem 4, we can replace by in the denominator of (35). Since , for , from Proposition 3 (a), we get

Example 4 (Poisson distribution). The Poisson distribution has for . Although there is no manageable form of and , the following bounds, taken from [17], are useful:

Explicit expressions for the quantities in (21) can be written out, but they shed little light on their dependence on and N. Instead, we analyse their asymptotic behaviour for large N. By (46), , so . Therefore, and as . This immediately yields the asymptotic behaviour of the formulas in (21): and . Hence, as in Example 2, the correlation coefficient between and converges to 0 as .

For the asymptotic behaviour of , note that (46) guarantees that and, moreover, and , for all large enough values of n and some positive constants C and D. Hence, condition in Theorem 3 holds, with , and so does condition in Theorem 4. In order to apply Theorem 3 note that, since , we have

and

For the central limit theorem, as in Example 3, the scaling sequence can be taken as . Since , we have , so

In addition, from the proof of Theorem 4, the centring sequence in the central limit theorem can be chosen as , which in turn can be replaced by . Indeed, for ,

Thus, by (46), , for a given constant , which implies

We can further replace by since, by (46), it can be shown that for all and some constant . Therefore, we conclude

where .

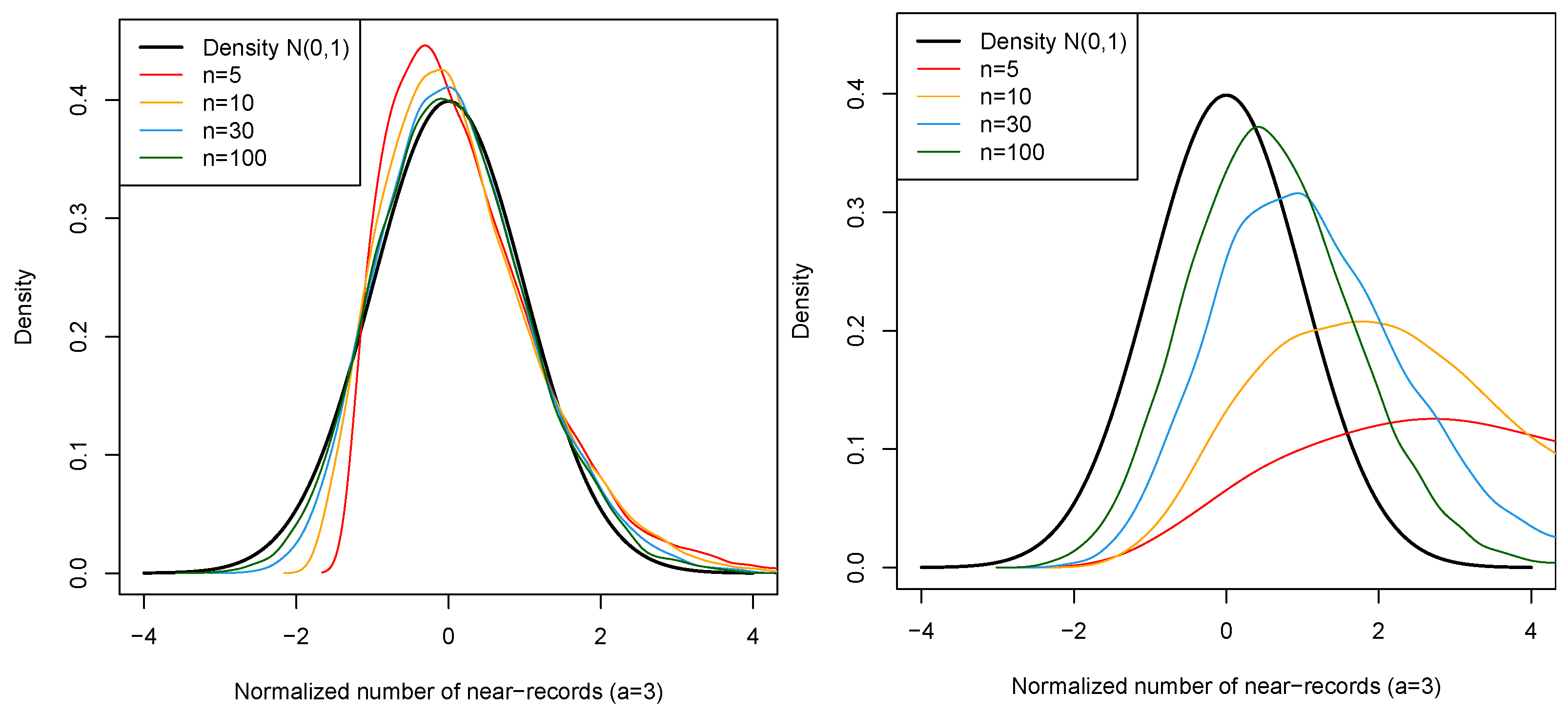

Remark 3. In Examples 3 and 4 above, we observe that the normalising sequences in the law of large numbers and central limit theorem depend on the right-tail behaviour of the parent distribution of the observations. This is also the case for the speed of convergence of to the normal distribution in the central limit theorem, as shown in Figure 2. Convergence is very fast for the geometric distribution, while it is much slower in the Poisson distribution (the distribution of in the geometric distribution is closer to the normal than the distribution of in the Poisson).

7. Conclusions and Future Work

In this paper, we have studied the point process of near-record values from a sequence of independent and identically distributed discrete random variables. Near-records arise as a natural complement of records, with applications in statistical inference.

We have shown that this process is a Bernoulli cluster process and obtained its probability generating functional, as well as formulas for the expectation, variance, covariance and probability generating functions for related counting processes.

We have given a condition for the finiteness of the total number of near-records along the whole sequence of observations. This condition is provided in terms of convergence of the squared hazard rates series. In addition, the explicit expression of its probability generating function is obtained.

In the case where the total number of near-records is not finite, strong laws of large numbers and central limit theorems for the number of near-record values in growing intervals are derived under mild regularity conditions. Finally, we have presented examples of the application of our results to particular families, which show that the asymptotics of near-record values depend critically on the right-tail behaviour of the parent distribution.

Some interesting questions remain open, such as a more detailed analysis of the sequence , including the law of the iterated logarithm and large deviations, or departures from the i.i.d. hypothesis (e.g., linear trend model). They will be addressed in future work.