1. Introduction

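Consider the linear regression model $y = x^\top \beta + \varepsilon$, where $y \in \mathbb{R}$ is the response variable, $x \in \mathbb{R}^p$ is the predictor variable, $\beta \in \mathbb{R}^p$ is the unknown regression parameter and $\varepsilon$ is the random error satisfying $E(\varepsilon) = 0$ and $\mathrm{Var}(\varepsilon) = \sigma^2$. Given an i.i.d. random sample $(x_i, y_i)$, $i = 1, \dots, n$, the model can be written in matrix form as $Y = X\beta + \varepsilon$, where $Y = (y_1, \dots, y_n)^\top$, $X = (x_1, \dots, x_n)^\top$ and $\varepsilon = (\varepsilon_1, \dots, \varepsilon_n)^\top$. In this paper, we are mainly interested in the high-dimensional sparse model, where $p \gg n$.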

Regularized methods for simultaneous model selection and parameter estimation have been intensively studied in the literature, e.g., the lasso [1], smoothly clipped absolute deviation (SCAD) [2], adaptive lasso [3], bridge [4], adaptive elastic net [5] and minimax concave penalty (MCP) [6], as well as the Dantzig selector [7]. In addition, screening rules for dimension reduction have been proposed, e.g., the sure independence screening method and the iterative sure independence screening method [8], lasso-based screening rules [9,10,11], etc.

However, most of these works focus on selection and estimation with respect to the regression parameters, and few studies deal with the estimation of the error variance, although it is a fundamental and crucial problem in statistical inference and regression analysis. In conventional linear models, the common residual-based estimator plays an important role in statistical inference and model checking. In high-dimensional models, however, variance estimation becomes difficult, mainly for two reasons. First, the traditional residual-based methods may perform poorly or even fail; for example, the ordinary least squares method does not work when the number of covariates is greater than the sample size. Second, it is difficult to select the true model accurately, since in practice the selected model often contains spurious variables that are correlated with the residuals, resulting in significant underestimation of the error variance (e.g., [12,13]).

Next, we provide some examples, where model error variance is involved and plays an important role.

Example 1 (Model selection). Penalization is a common approach to model selection and parameter estimation in high-dimensional linear models. The efficiency and accuracy of such methods depend on certain tuning parameters that are chosen using criteria such as Mallows's $C_p$, Akaike's information criterion (AIC) and the Bayesian information criterion (BIC). For example, the AIC and BIC for the lasso [14] are given by

$$\mathrm{AIC}(\lambda) = \frac{\|Y - X\hat\beta_\lambda\|_2^2}{n\sigma^2} + \frac{2}{n}\,\mathrm{df}(\lambda)$$

and

$$\mathrm{BIC}(\lambda) = \frac{\|Y - X\hat\beta_\lambda\|_2^2}{n\sigma^2} + \frac{\log n}{n}\,\mathrm{df}(\lambda),$$

respectively, where $\hat\beta_\lambda$ is the lasso estimator with tuning parameter $\lambda$ and the degrees of freedom $\mathrm{df}(\lambda)$ is equal to the number of non-zero elements in $\hat\beta_\lambda$. It is easy to see that these criteria rely on the error variance.

Example 2 (Confidence intervals). For a least-squares-based penalized estimator $\hat\beta$, let $\hat S$ be its index set corresponding to non-vanishing parameters. If $\hat\beta$ has the oracle property, then for each $i \in \hat S$, the confidence interval for $\beta_i$ is given by

$$\hat\beta_i \pm z_{1-\alpha/2}\,\sigma\sqrt{c_{ii}},$$

where $z_{1-\alpha/2}$ is the $(1-\alpha/2)$-th quantile of the standard normal distribution and $c_{ii}$ is the $i$-th diagonal element of the matrix $(X_{\hat S}^\top X_{\hat S})^{-1}$. It is clear that the above intervals depend on the variance parameter.

Example 3 (Penalized second-order least squares estimation). The second-order least squares method in [15] extends the ordinary least squares method by simultaneously minimizing the first two order distances $y_i - x_i^\top\beta$ and $y_i^2 - (x_i^\top\beta)^2 - \sigma^2$, and yields joint estimators for the regression and variance parameters. Under general conditions, the second-order least squares estimator has been shown to be asymptotically more efficient than the ordinary least squares estimator if the model error has a nonzero third moment, and the two are equivalent otherwise. The regularized version of this method can be used in high-dimensional models.

1.1. Literature Review

Variance estimation in high-dimensional models has attracted increasing attention in recent years. Here, we briefly review some important advances in this area. First, if the true parameter vector $\beta^*$ were known, then the ideal variance estimator, called the oracle estimator, would be $\hat\sigma_O^2 = \|Y - X\beta^*\|_2^2/n$. Correspondingly, the estimator $\hat\sigma_N^2 = \|Y - X\hat\beta\|_2^2/n$, based on some estimator $\hat\beta$ for $\beta^*$, is called a naive estimator. Since the naive estimator is downward biased, a modified unbiased estimator is given by $\hat\sigma^2 = \|Y - X\hat\beta\|_2^2/(n - \hat s)$, where $\hat s$ is the number of nonzero elements in $\hat\beta$. Unfortunately, when p is much larger than n, even a small change in $\hat s$ will cause a huge fluctuation in $\hat\sigma^2$ if $\hat s$ is close to $n$.
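The three residual-based estimators just described can be illustrated with a short sketch. It assumes the usual forms (residual sum of squares divided by n, or by n minus the number of nonzero coefficients); the function names are hypothetical and are not taken from the paper's MATLAB programs.

```python
import numpy as np


def oracle_variance(X, y, beta_true):
    """Oracle estimator: mean squared residual at the true coefficients."""
    resid = y - X @ beta_true
    return float(resid @ resid) / len(y)


def naive_variance(X, y, beta_hat):
    """Naive estimator: mean squared residual at an estimate of beta."""
    resid = y - X @ beta_hat
    return float(resid @ resid) / len(y)


def df_corrected_variance(X, y, beta_hat):
    """Modified estimator: RSS divided by n minus the number of nonzeros."""
    n = len(y)
    s_hat = int(np.count_nonzero(beta_hat))
    if s_hat >= n:
        raise ValueError("n - s_hat must be positive")
    resid = y - X @ beta_hat
    return float(resid @ resid) / (n - s_hat)
```

The denominator n - s_hat makes the instability visible: when s_hat is close to n, changing s_hat by one changes the estimate substantially.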

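To overcome this problem, Ref. [16] estimated the mean and variance parameters jointly, by maximizing a reparameterized likelihood with $\ell_1$ penalty:

$$(\hat\phi, \hat\rho) = \arg\min_{\phi,\,\rho > 0} \Big\{ -\log\rho + \frac{\|\rho Y - X\phi\|_2^2}{2n} + \lambda\|\phi\|_1 \Big\},$$

where $\rho = 1/\sigma$, $\phi = \beta/\sigma$ and $\lambda > 0$. Moreover, they proposed a generalized EM algorithm for the numerical optimization.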

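A refitted cross-validation (RCV) method for deriving a variance estimator was proposed in [12], and its asymptotic properties were studied. The main idea of RCV is to attenuate the influence of irrelevant variables with high spurious correlations via a data-splitting technique. Ref. [12] also discussed the asymptotic properties of the lasso-based estimator $\hat\sigma_{L}^2 = \|Y - X\hat\beta_{L}\|_2^2/(n - \hat s_{L})$ and the SCAD-based estimator $\hat\sigma_{SCAD}^2 = \|Y - X\hat\beta_{SCAD}\|_2^2/(n - \hat s_{SCAD})$, where $\hat\beta_{L}$ and $\hat\beta_{SCAD}$ are the least squares estimators with $\ell_1$ penalty [1] and SCAD penalty [2], respectively; $\hat s_{L} = \|\hat\beta_{L}\|_0$ and $\hat s_{SCAD} = \|\hat\beta_{SCAD}\|_0$.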
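The data-splitting idea behind RCV can be sketched as follows. This is a minimal illustration and not the procedure of [12] in full: the selector `_screen` (keep the k columns most correlated with the response) is a hypothetical stand-in for whatever selection method is used in practice, and all function names are assumptions.

```python
import numpy as np


def _screen(X, y, k):
    """Hypothetical selector: indices of the k columns most correlated with y."""
    score = np.abs(X.T @ (y - y.mean()))
    return np.argsort(score)[-k:]


def _refit_variance(X, y, idx):
    """Refit OLS on the selected columns and return RSS / (n - |idx|)."""
    Xs = X[:, idx]
    coef, *_ = np.linalg.lstsq(Xs, y, rcond=None)
    resid = y - Xs @ coef
    return float(resid @ resid) / (len(y) - len(idx))


def rcv_variance(X, y, k, seed=0):
    """Select on one half, refit on the other half, then swap and average."""
    rng = np.random.default_rng(seed)
    perm = rng.permutation(len(y))
    half1, half2 = perm[: len(y) // 2], perm[len(y) // 2:]
    idx1 = _screen(X[half1], y[half1], k)
    idx2 = _screen(X[half2], y[half2], k)
    s1 = _refit_variance(X[half2], y[half2], idx1)
    s2 = _refit_variance(X[half1], y[half1], idx2)
    return 0.5 * (s1 + s2)
```

Because the variables are selected on one half and the residuals are computed on the other, spuriously selected variables are not fitted to the same noise that selected them.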

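Further, a scaled lasso was proposed in [17] for the simultaneous estimation of the regression and variance parameters. Their model can be written as

$$(\hat\beta, \hat\sigma) = \arg\min_{\beta,\,\sigma > 0} \Big\{ \frac{\|Y - X\beta\|_2^2}{2n\sigma} + \frac{\sigma}{2} + \lambda_0\|\beta\|_1 \Big\}.$$

Under some regularity conditions, Ref. [17] proved oracle inequalities for prediction and for their estimators.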

A moment estimator for the error variance, based on the covariance matrix $\Sigma$ of the predictor variables, was studied in [18], where three cases were considered: $\Sigma = I$, estimable $\Sigma$ and non-estimable $\Sigma$. A maximum likelihood method for normally distributed noise was developed in [19].

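Moreover, Ref. [13] considered another reparameterized likelihood with lasso penalty,

$$\min_{\phi > 0,\,\theta} \big\{ L(\phi, \theta) + \lambda\|\theta\|_1 \big\}, \qquad L(\phi, \theta) = -\frac{1}{2}\log\phi + \frac{\phi\|Y\|_2^2}{2n} - \frac{Y^\top X\theta}{n} + \frac{\|X\theta\|_2^2}{2n\phi}, \tag{1}$$

where $\phi = 1/\sigma^2$, $\theta = \beta/\sigma^2$. In particular, they proposed two estimators: the natural lasso with penalty $\lambda\|\theta\|_1$ and the organic lasso with penalty $\lambda\phi^{-1}\|\theta\|_1^2$.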

Finally, Ref. [

20] proposed a ridge-based method to estimate the error variance, under certain conditions, which is defined as follows:

where

,

and

is the tuning parameter. This method performs well in low-dimensional cases, with weak signals, and it is suitable for sparse as well as non-sparse models.

1.2. Notation and Outline

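Throughout the paper, let $S = \{j : \beta_j^* \neq 0\}$ be the index set and $s = |S|$ be the number of the nonzero elements of $\beta^*$, respectively. Given a design matrix $X$ and a subset $A$ of $\{1, \dots, p\}$, $X_i$ denotes the $i$-th column vector of $X$, and $X_A$ denotes the sub-matrix consisting of the columns with indices in $A$. For vectors $a = (a_1, \dots, a_p)^\top$, $b = (b_1, \dots, b_p)^\top$, $a \circ b = (a_1 b_1, \dots, a_p b_p)^\top$ denotes the Hadamard product. Moreover, let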

,

,

,

, where

The rest of this paper is organized as follows: Section 2 defines and describes the proposed natural adaptive lasso, and Section 3 gives its asymptotic properties. Section 4 deals with the numerical optimization of the proposed estimators. Monte Carlo simulation studies of finite-sample properties are provided in Section 5. The conclusions and discussion are given in Section 6, while the mathematical proofs are given in Section 7.

2. Natural Adaptive Lasso (NAL)

Some researchers, e.g., Refs. [13,16], used a reparameterized likelihood to jointly estimate the mean and variance parameters in high-dimensional linear models. In particular, the method of [13] performs well, and the associated numerical computation can be converted into simple optimization procedures. However, the natural lasso in [13] tends to overestimate the error variance, due to the over-selection of covariates. This motivates us to consider the more general adaptive lasso penalty, to further improve the properties of the estimators. Consider the following adaptively weighted $\ell_1$-penalized likelihood

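where $L(\phi, \theta)$ is the reparameterized likelihood as in (1), $\lambda$ is the tuning parameter and $w = (w_1, \dots, w_p)^\top$ is the adaptive weight vector. Given a solution $(\hat\phi, \hat\theta)$ of problem (2), the natural adaptive lasso estimators (NALE) for $\beta$ and $\sigma^2$ are given by

$$\hat\beta = \hat\theta/\hat\phi, \qquad \hat\sigma^2 = 1/\hat\phi. \tag{3}$$

It is easy to see that, when $w = (1, \dots, 1)^\top$, the NALE reduces to the natural lasso estimator (NLE) of [13].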

Note that the quality of the NALE depends on the weight vector $w$. It follows from Proposition 1 in Section 3 that the weight $w$ in problem (2) plays the same role as in the adaptive lasso estimation of the regression coefficients only, which solves the following convex optimization problem:

$$\min_{\beta} \Big\{ \frac{1}{n}\|Y - X\beta\|_2^2 + 2\lambda\|w \circ \beta\|_1 \Big\}, \tag{4}$$

where the weight $w$ depends on the initial estimator $\tilde\beta$. As indicated by [3], any root-n consistent estimator can be used as the initial estimator for $\beta$. For example, the least squares estimator $\hat\beta^{OLS}$ can be used, and the weight vector is calculated as $w_j = |\hat\beta_j^{OLS}|^{-\gamma}$ with $\gamma > 0$, $j = 1, \dots, p$. Ref. [4] discusses the selection of the initial estimators in linear models, with

for some

. They show that their marginal regression estimator can be used in the adaptive lasso, to yield the desirable selection and estimation properties. In addition, the weight

in adaptive elastic-net [

5], for moderate dimensional models (

), can be constructed as

,

, where

is the elastic-net estimator. In this paper, we use the following two-step procedure to calculate the weight vector.

Step 1: Solve the lasso problem to obtain the NLE , which is used as the initial estimator .

Step 2: Set with , where and is a folded-concave penalty function (such as SCAD, MCP or bridge).
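Step 2 can be sketched in code for the SCAD case. The sketch assumes that the weights are the SCAD derivative evaluated at the initial estimate and divided by the tuning parameter, so that a zero initial coefficient receives weight 1 and a large one receives weight 0; this scaling, the default a = 3.7 and the function names are assumptions made for illustration. The initial estimate from Step 1 is passed in as an array.

```python
import numpy as np


def scad_derivative(t, lam, a=3.7):
    """Derivative of the SCAD penalty at |t|, for lam > 0 and a > 2."""
    t = np.abs(np.asarray(t, dtype=float))
    # Constant slope lam on [0, lam], then a linear decay to 0 at a * lam.
    linear_part = (t <= lam).astype(float)
    tapered_part = np.maximum(a * lam - t, 0.0) / ((a - 1.0) * lam) * (t > lam)
    return lam * (linear_part + tapered_part)


def adaptive_weights(beta_init, lam, a=3.7):
    """Assumed Step 2: w_j = p'_lam(|beta_init_j|) / lam, which lies in [0, 1]."""
    return scad_derivative(beta_init, lam, a) / lam
```

For example, `adaptive_weights(np.array([0.0, 5.0]), lam=0.5)` assigns full weight to the zero coefficient and no penalty to the large one, which matches the behavior described in Remark 1.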

Remark 1. From [7,21,22], under some regularity conditions, the lasso is consistent with a near-oracle rate and has the sure-screening property, i.e., with probability tending to one, the selected model contains all the truly nonzero coefficients. Further, based on the order of the bias of the lasso, under suitable conditions on the minimum signal strength (see the first part of Condition 4 in Section 7) and on the choice of the tuning parameter, the weights corresponding to the nonzero coefficients will be close, or even equal, to zero when n is sufficiently large, if a folded-concave penalty such as SCAD is used. These properties play an important role in some of the conclusions that follow.

3. Asymptotic Properties

In this section, we first establish the relationship between the NALE and the adaptive lasso, and then analyze the asymptotic properties of the NALE for $\sigma^2$.

Proposition 1. The NALE $(\hat\beta, \hat\sigma^2)$ defined in (3), where $(\hat\phi, \hat\theta)$ is a solution of (2), satisfies the following properties:

- (i) $\hat\beta$ is a solution of the adaptive lasso (4);
- (ii) $\hat\sigma^2$ is the optimal value of the objective function of the adaptive lasso (4). Furthermore, we have

$$\hat\sigma^2 = \frac{1}{n}\|Y - X\hat\beta\|_2^2 + 2\lambda\|w \circ \hat\beta\|_1. \tag{5}$$

The results of Proposition 1 are instrumental in deriving the other theoretical results in this paper. Moreover, they also provide a method for calculating the NALE for $\beta$ and $\sigma^2$. It is well known that the adaptive lasso (4) is a convex optimization problem, and many existing optimization tools can be used to solve it.
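The reduction to the adaptive lasso can be checked by a short calculation. The sketch below assumes the parameterization of the natural lasso in [13], namely $\phi = 1/\sigma^2$ and $\theta = \beta/\sigma^2$, the corresponding form of the reparameterized likelihood, and a penalty $\lambda\|w \circ \theta\|_1$ in problem (2); it is an illustration under these assumptions rather than the proof given in Section 7.

```latex
% Assumed parameterization: \phi = 1/\sigma^2, \theta = \beta/\sigma^2 = \phi\beta.
\begin{align*}
L(\phi,\theta) &= -\tfrac{1}{2}\log\phi + \frac{\phi\,\|Y\|_2^2}{2n}
                  - \frac{Y^\top X\theta}{n} + \frac{\|X\theta\|_2^2}{2n\phi}, \\
L(\phi,\phi\beta) + \lambda\|w\circ(\phi\beta)\|_1
  &= -\tfrac{1}{2}\log\phi + \frac{\phi}{2}
     \Big\{ \frac{1}{n}\|Y - X\beta\|_2^2 + 2\lambda\|w\circ\beta\|_1 \Big\}.
\end{align*}
% The expression in braces does not involve \phi, so for any \phi > 0 the
% minimizing \beta is a solution of the weighted lasso; call its minimum V.
% Minimizing -\tfrac{1}{2}\log\phi + \phi V / 2 over \phi > 0 gives
% \hat\phi = 1/V, hence \hat\sigma^2 = 1/\hat\phi = V.
```

Under these assumptions the variance estimate is exactly the optimal value of the weighted lasso objective, so any solver for that convex problem yields both estimators at once.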

Note that, since

and

will be close or even equal to zero, for suitably chosen

, the NALE for

will be close to the naive estimator, if

. As mentioned before, the naive estimator for $\sigma^2$, based on the adaptive lasso estimator $\hat\beta$, may work well when the non-zero variables are selected accurately. However, when more irrelevant variables are selected, the naive estimator for $\sigma^2$ tends to underestimate the true error variance, while the value of the penalty term will not be close to 0 in finite samples. In this case, the penalty term mitigates the difference between the naive estimator and the true variance. Although the form of the natural lasso estimator of [13] is similar to (5), their method often tends to over-select predictors, due to the use of a lasso penalty. In addition, the value of the penalty term in [13] remains large because it is not controlled by the weight vector. These facts explain why the natural lasso estimator for $\sigma^2$ tends to be larger than the true error variance in the simulation studies in [13].

Next, we establish a key inequality for the NALE for .

Lemma 1. If , then The above inequality is deterministic, in that it does not rely on any statistical assumptions for

and

. Unlike Lemma 1 in [

13], the proof of this result uses the fact that any vector

provides an upper bound on

and the convexity of the loss function. In addition, if

and

, Lemma 1 reduces to Lemma 1 in [

13]. If

is close or equal to zero, and

, then the bound on the right-hand side of the inequality in Lemma 1 is lower than that for the natural lasso and organic lasso in [

13].

3.1. Adaptive Lasso

It follows from Lemma 1 that the error bound of the NALE for $\sigma^2$ is controlled by the convergence rate of the adaptive-lasso estimator $\hat\beta$. Therefore, it is necessary to establish the asymptotic properties of $\hat\beta$. The results in this subsection are similar to those in [23]. All regularity conditions and proofs are given in Section 7.

Theorem 1. Suppose Conditions 1–3 hold. Assume thatand , where is some positive constant and . Then, with probability at least , there exists unique minimizer of problem (4), such that and , wherewith some constant , and are defined in the regularity conditions. It follows from inequality (

18) that the extra term

in

is due to the bias of the initial estimator

. When

tends to zero, the order of the extra term is

. Thus, under some general conditions, the convergence rate of

is

. Usually, the order of

L is

.

We, now, present the asymptotic normality of the adaptive-lasso estimator .

Theorem 2. Assume that conditions of Theorem 1 hold. Let for any satisfying . Then, under Conditions 1–4, with probability at least , the minimizer in Theorem 1 satisfieswhere . The result of Theorem 2 is consistent with the asymptotic normality, for the bridge estimator of

in [

4]. The only difference is in the form of the penalty function.

Next, we consider the convergence performance of the specific adaptive-lasso estimator

, with a weight vector decided by the SCAD penalty [

23], which is defined by

where

is a given constant and

. Usually, the order of

is

. By definition, it holds

, and Condition 4 is satisfied when

. Thus, we have the following result.

Corollary 1. Assume that the conditions of Theorem 1 hold. Then, under Conditions 1–4, with probability at least , there exists unique minimizer of problem (4), such that Furthermore, satisfieswhere for any satisfying . The rate of convergence of the estimators in Theorem 1 and Corollary 1 is controlled by the distribution of random error and predictor matrix. Moreover, these results can be generalized for other situations, where random error follows sub-Gaussian or sub-exponential distributions.

3.2. Error Bounds of NALE

In this subsection, we establish the error bound for the NALE of

. It follows from (

14) that, under the conditions of Theorem 1,

holds, with probability

. Since

, we have

. Thus, in order to establish the asymptotic properties of NALE for

, we still need to determine the order of

. By Condition 2 and Theorem 1, we have

Thus, we have the following result on the error bound of the NALE for .

Theorem 3. Under the conditions of Theorem 1, the NALE for has the following error bound, with probability at least :where . The proof of the above result follows, straightforwardly, from Lemma 1 and Theorem 1, so it is omitted. Since , is close or equal to zero, and the order of for the adaptive lasso is , we have . It follows that when , the error bound of NALE for is smaller than that of the NLE, OLE and SLE, when n is sufficiently large. In the following, we analyze the mean squared error bound for the NALE of .

Theorem 4. Under the conditions of Theorem 1, for any and , the NALE for $\sigma^2$ satisfies

Note that the above mean squared error bound of the NALE for $\sigma^2$ is lower than that for the NLE, OLE and SLE estimators. Finally, we consider the case using the SCAD penalty. Then, by Theorem 3 and the fact that , under the condition on minimum signal strength, we have the following result.

Corollary 2. Under the conditions of Corollary 1, the NALE for using the SCAD has the following error bound, with probability at least : Further, by Theorem 4 and Corollary 2, we have the mean squared error bound of the NALE for using the SCAD.

Corollary 3. Under the conditions of Corollary 1, for any , the NALE for using SCAD with satisfies the following relative mean squared error bound:

4. Numerical Optimization

In this section, we study the optimization method for the NALE. Proposition 1 provides an easy way to calculate the NALE for $\sigma^2$, by using existing optimization tools to solve the adaptive lasso (4). Given the tuning parameter $\lambda$, we consider the proximal gradient algorithm (PGA) for this problem, which has the following steps:

Initialization: take initial value , .

Iterative step: .

In the above framework,

is taken to be the Lipschitz constant of

,

, such that for any

,

,

Usually,

. In addition, by the definition of proximal mapping,

Finally, the PGA is terminated, when either the sequence

meets the criterion

or the maximum number of iterations is reached.
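The steps above can be sketched in Python (the paper's own programs are in Matlab). The sketch assumes that the adaptive lasso objective is scaled as (1/n)||y - Xb||^2 + 2*lam*sum_j w_j|b_j|, that the step size is the reciprocal of the Lipschitz constant 2*||X||_2^2/n of the gradient of the quadratic term, and that iteration stops when successive iterates are close; the function names, the zero starting value and the tolerance are hypothetical choices.

```python
import numpy as np


def soft_threshold(z, tau):
    """Componentwise soft-thresholding, the proximal map of a weighted l1 norm."""
    return np.sign(z) * np.maximum(np.abs(z) - tau, 0.0)


def nale_proximal_gradient(X, y, lam, w, max_iter=5000, tol=1e-10):
    """Minimize (1/n)||y - X b||^2 + 2*lam*sum_j w_j*|b_j| by proximal gradient.

    Returns (beta_hat, sigma2_hat), where sigma2_hat is the objective value
    at beta_hat, i.e. the variance estimate under the assumed scaling.
    """
    n, p = X.shape
    # Lipschitz constant of the gradient of f(b) = (1/n)||y - X b||^2.
    L = 2.0 * np.linalg.norm(X, 2) ** 2 / n
    beta = np.zeros(p)
    for _ in range(max_iter):
        grad = -2.0 * X.T @ (y - X @ beta) / n
        beta_new = soft_threshold(beta - grad / L, 2.0 * lam * w / L)
        converged = np.linalg.norm(beta_new - beta) <= tol * max(1.0, np.linalg.norm(beta))
        beta = beta_new
        if converged:
            break
    resid = y - X @ beta
    sigma2_hat = resid @ resid / n + 2.0 * lam * np.sum(w * np.abs(beta))
    return beta, float(sigma2_hat)
```

Setting all weights to one gives a natural-lasso-type estimate under the same scaling, so the same routine could supply both the initial estimator and the final fit.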

5. Numerical Simulations

In this section, we carry out Monte Carlo simulations to study the finite-sample performance of the NALE, with the weight calculated using the SCAD penalty. Further, we compare the NALE with the square-root/scaled lasso (SLE) [17], the natural lasso (NLE) [13], the organic lasso (OLE) [13] and the ridge-based estimator (RBE) [20]. We also include the oracle estimator (OE) $\hat\sigma_O^2 = \|Y - X\beta^*\|_2^2/n$ as a benchmark in the comparisons. All numerical computations were done using MATLAB. The programs are available upon request from the first author of this paper, or in the Supplementary Materials.

5.1. Simulation Settings

Following [

23], throughout the simulations we use the sample size

and parameter dimension

. Further, each row of the design matrix

is generated from the multivariate normal distribution

, with

and

. The sparsity of

is set to be the largest integer less than or equal to

, and the locations of the nonzero elements in

are determined randomly. We consider various parameter values,

,

and

, and use the following true regression parameter vectors

We have also considered other variance settings, such as ; however, the simulation results are similar to those of the above settings and are therefore not included. To assess the performance of the estimators, we calculate the average mean squared error (MSE) and the average relative error (RE) , based on 100 Monte Carlo runs.

5.2. Selection of Tuning Parameters

Usually, five-fold cross-validation can be used to select the tuning parameters for each estimator, but this is fairly expensive. In order to reduce the computational cost, we use a fixed choice of tuning parameters for all estimators except the NLE and NALE, as described below.

For the SLE, we consider three penalty levels

,

, which is similar to Example 1 in [

17]. Then, the best estimator is selected as the final SLE estimator. Indeed, Ref. [

17] found that

works very well for SLE. By the simulation results of [

13], the OLE with

and

performed very well, where

. From [

20,

24], the tuning parameter used in RBE is calculated by setting

with

.

5.3. Simulation Results

In each simulation, 100 runs are carried out to calculate the average of the performance measures. The results are presented in Table 1, Table 2, Table 3 and Table 4. These results show that, overall, both the MSE and RE of the NALE are very close to those of the OE, and are remarkably better than those of the other estimators in most cases. However, in a few cases, such as

and

with both

and

, the NALE has a slightly larger MSE than the NLE, although it has a smaller RE than the latter. As expected, the NLE often overestimates the true value, due to the bias and over-selection of the lasso. Moreover, in the cases where the NLE has a relatively large MSE, the NALE tends to have a large MSE as well, indicating that poor performance of the NLE affects the performance of the NALE, since the NLE is used as the initial estimator. Finally, Ref. [20] reported that the RBE performs well in cases with relatively small p and weak signals; however, it performs poorly, and is even ineffective, in the settings of our simulations.

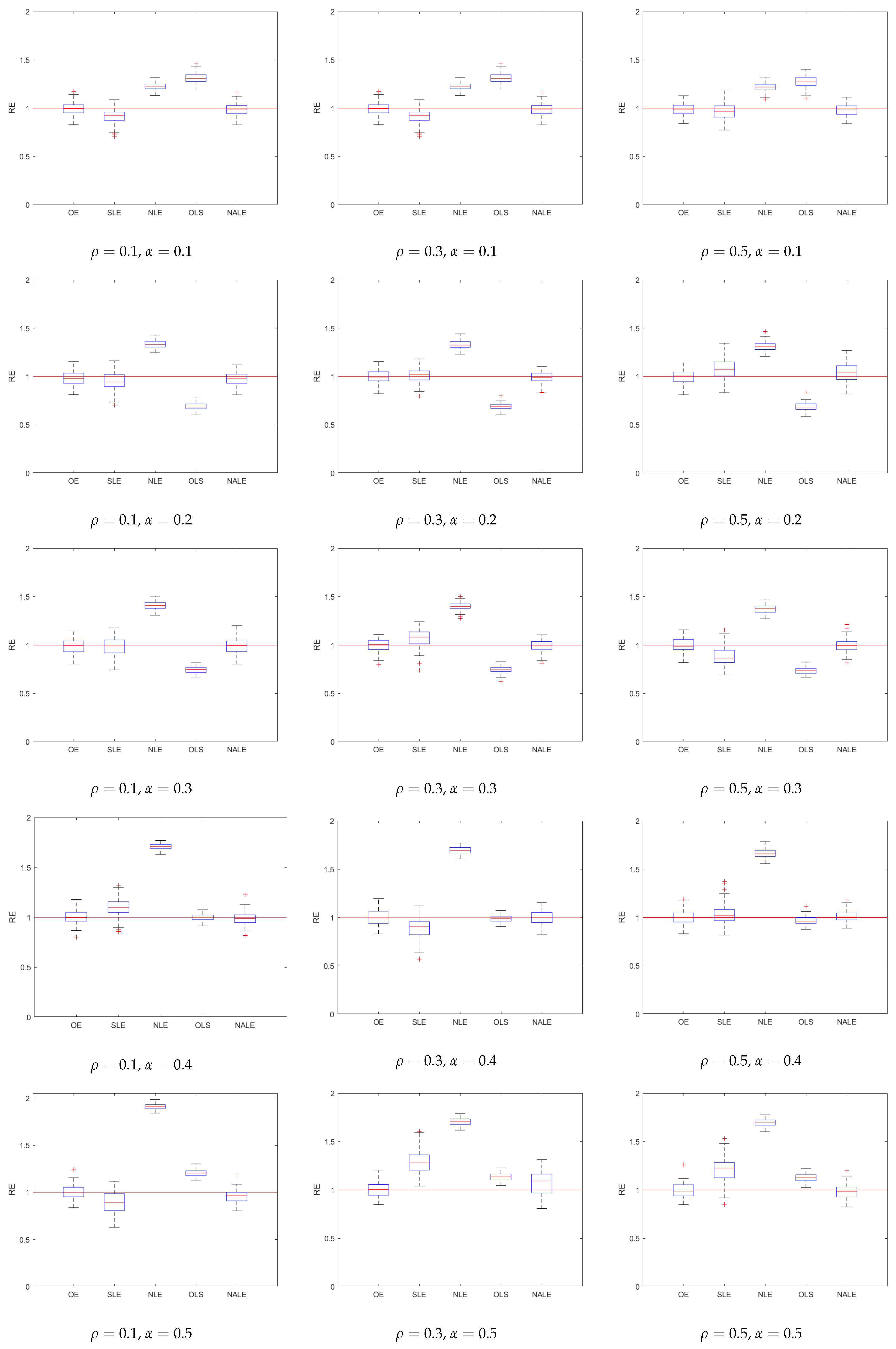

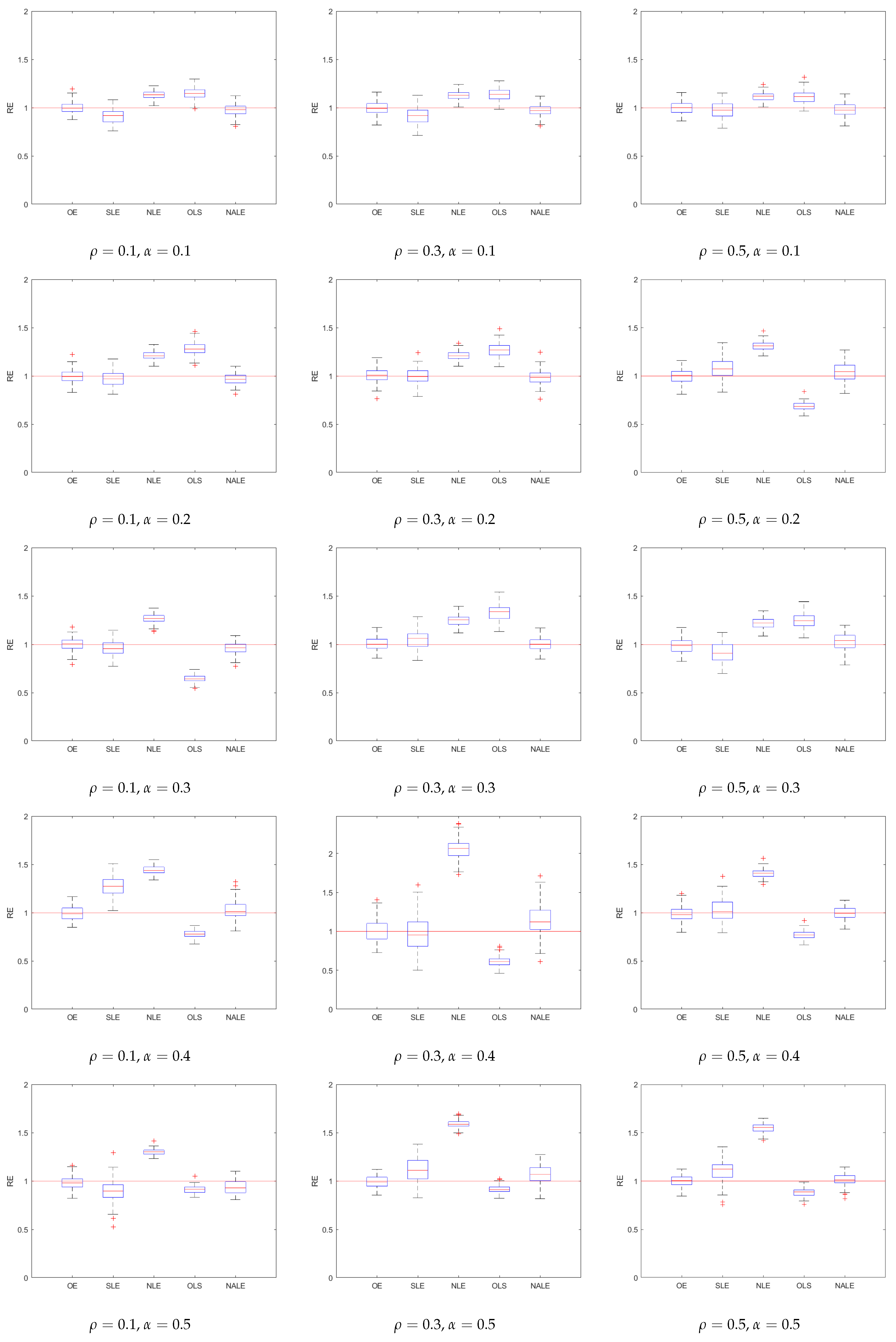

We further summarize the performance of the various methods using boxplots in Figure 1 and Figure 2. As one can easily see, the NALE is accurate and stable in all cases, while the OLE is less accurate, although it is still fairly stable. Further, the NALE performs well in extremely sparse scenarios. Another interesting point is that the NALE inherits the variable selection and parameter estimation properties of the adaptive lasso. Although we focus on variance estimation in this work, the method performs well in estimating the regression coefficients as well.

7. Regularity Conditions and Proofs

This section provides theoretical proofs. We first state the following regularity conditions.

Condition 1. With probability approaching one, the initial estimator satisfies .

Condition 2. is non-increasing in and is Lipschitz with constant , that is, for any , . Moreover, for sufficiently large n, where is defined in Condition 1.

Condition 3. There exist positive constants , such that and where , , is defined in Theorem 1.

Condition 4. The true coefficients satisfy . Moreover, it holds for any and .

As we pointed out in Remark 1, the lasso estimator

satisfies Condition 1. Condition 2 affects the bound between

and

and is used in the proof of Theorem 1. Further, it determines the bound between

and

. The first part of Condition 3 is a very common regularity condition in high-dimensional regression (see [4,12,23]). The remaining part is similar to Condition 3 in [23], and is used in the proofs of Theorems 1 and 2. Condition 4 is needed in the analysis of Corollary 1.

Proof of Proposition 1. - (i)

Since

is a solution of (

2),

is a solution of the problem

Hence, by optimality of the above problem, we have

where

. It follows that

Since

, we have

, which, further, implies that

is a solution of the adaptive lasso (

4).

- (ii)

Since

is a solution of (

2), by optimality of problem (

2), we have

where

. Therefore, we have

Since

, we have

. Further,

Combining (

7) and (

8), we obtain

Further, by the first term in (

7),

Combining the above equality and the second term in (

7), we have

which implies that

is the optimal value of the adaptive lasso (

4). □

Proof of Lemma 1. From Proposition 1, we have

Since the loss function in the adaptive lasso is convex, we have

Combining inequalities (

9) and (

10), we obtain

which completes the proof. □

Proof of Theorem 1. Since problem (4) is a convex optimization problem, by Theorem 1 of [25], it suffices to show that, with probability tending to 1, there exists a minimizer $\hat\beta$ of problem (4) that satisfies

where

.

Let

and

. Since

and

, it follows from Corollary 4.3 in [

26] that, for any

,

Now we show that there exists a minimizer $\hat\beta$ of problem (4) that satisfies conditions (11)–(13).

Equation (

11): Consider the minimizer of problem (

4) in the subspace

. Let

, where

with

,

, and

is some large enough constant. Note that

where

,

. For

, by (

14), we have

where the last inequality holds, due to

. For

, we have

By the two-step procedure for the weight vector and Condition 2, it holds that

Hence, combining (

15)–(

18) yields

Taking a large enough

C, we have obtained, with probability tending to one,

It follows, immediately, that, with probability approaching one, there exists a minimizer

of problem (

4), subject to subspace

, such that

, with some constant

. Therefore, equality (

11) holds, by the optimality theory.

Inequality (

12): It remains to be proven that with asymptotic probability 1, (

12) holds. Then, by optimality theory,

is the unique global minimizer of problem (

4).

By triangle inequality, we have

Further, by Condition 1, we have

with probability approaching one, where

. Moreover, by the definition of the folded-concave penalty function,

Therefore, by Condition 2 and inequality (

20), we conclude that

Thus, for the first term on the right-hand side of inequality (19), by (14) and the condition that

, with probability approaching one,

As for the second term on the right-hand side of inequality (19), by Condition 3, inequality (21) and inequality (14), with probability approaching one, we have

Combining (19), (22) and (23), we obtain inequality (12).

Inequality (13): It follows from Condition 3 that inequality (13) holds with probability approaching one. This completes the proof of Theorem 1. □

Proof of Theorem 2. By equality (

11),

Since

, we have

By the first part of Condition 4 and the bound of

in Theorem 1, we have

. Then,

. In addition, by the second part of Condition 4,

where

and

lie on the line segment

. It follows that

. Further, since

we have, for the second term on the left-hand side of (24),

Finally, the result follows by verifying the conditions of the Lindeberg–Feller central limit theorem, in the same way as in the proof of Theorem 2 in [4]. □

Proof of Theorem 4. For any

, take

and denote

. Then, by Theorems 1 and 3, we have

Further, since

, we have

By the proof of Theorem 12 in [

13], we have

Combining (

25) and (

26), we obtain

The proof is complete. □