Academic Vocabulary and Reading Fluency: Unlikely Bedfellows in the Quest for Textual Meaning

Abstract

1. Introduction

2. Background

2.1. Theoretical Framework

2.2. Vocabulary Learning

2.3. Decoding Processes and Vocabulary

2.4. Academic Vocabulary

2.5. Study Rationale

- RQ1: What is the attainment level of students on measures of academic vocabulary, reading fluency, and comprehension?

- RQ2: Do students in different grades differ on the measured variables?

- RQ3: To what extent is silent reading comprehension predicted by academic vocabulary and reading fluency indicators?

- RQ4: Does reading fluency mediate the relationship between academic vocabulary and reading comprehension?
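RQ4 is the kind of question usually framed as a simple mediation model. As a sketch only, assuming the standard three-regression formulation, with $X$ as academic vocabulary, $M$ as reading fluency, and $Y$ as silent reading comprehension (the intercepts $i_k$ and error terms $e_k$ are notation introduced here for illustration), the paths can be written as:

$$
\begin{aligned}
M &= i_1 + aX + e_1 \\
Y &= i_2 + cX + e_2 \\
Y &= i_3 + c'X + bM + e_3
\end{aligned}
$$

Under this formulation the indirect effect is the product $ab$, and when all three equations are fitted by ordinary least squares on the same sample it equals $c - c'$.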

3. Methods

3.1. Participants

3.2. Assessments

3.3. Test of Academic Word Knowledge (TAWK)

3.4. Reading Accumaticity Indicators

3.5. Reading Prosody

3.6. Scholastic Reading Inventory

4. Results

5. Discussion

Author Contributions

Funding

Conflicts of Interest

| Variable | Sixth-Grade (n = 83) | Seventh-Grade (n = 55) | All Students (n = 138) |

|---|---|---|---|

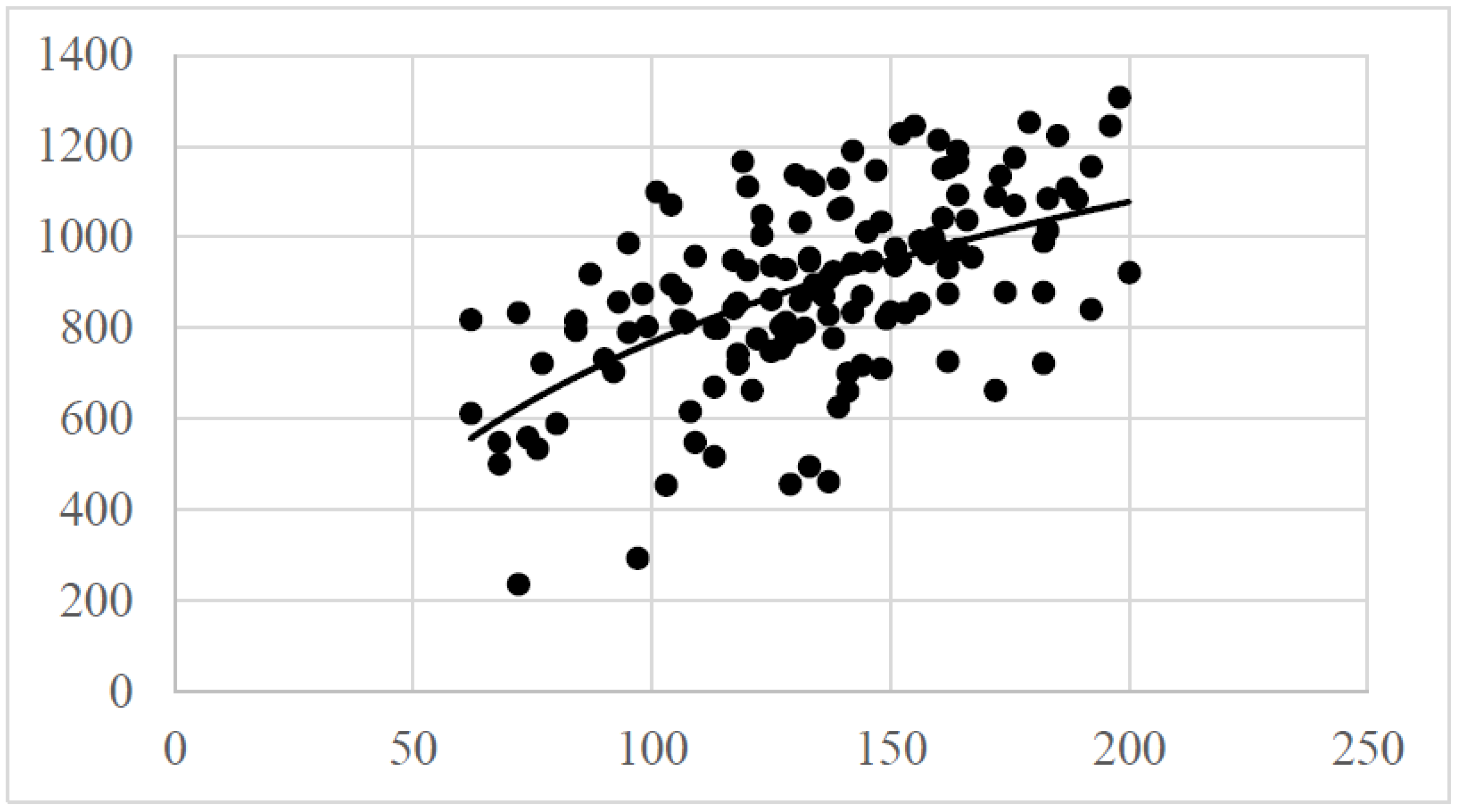

| Comprehension | 851.52(206.57) | 942.49(196.14) | 887.78(206.64) |

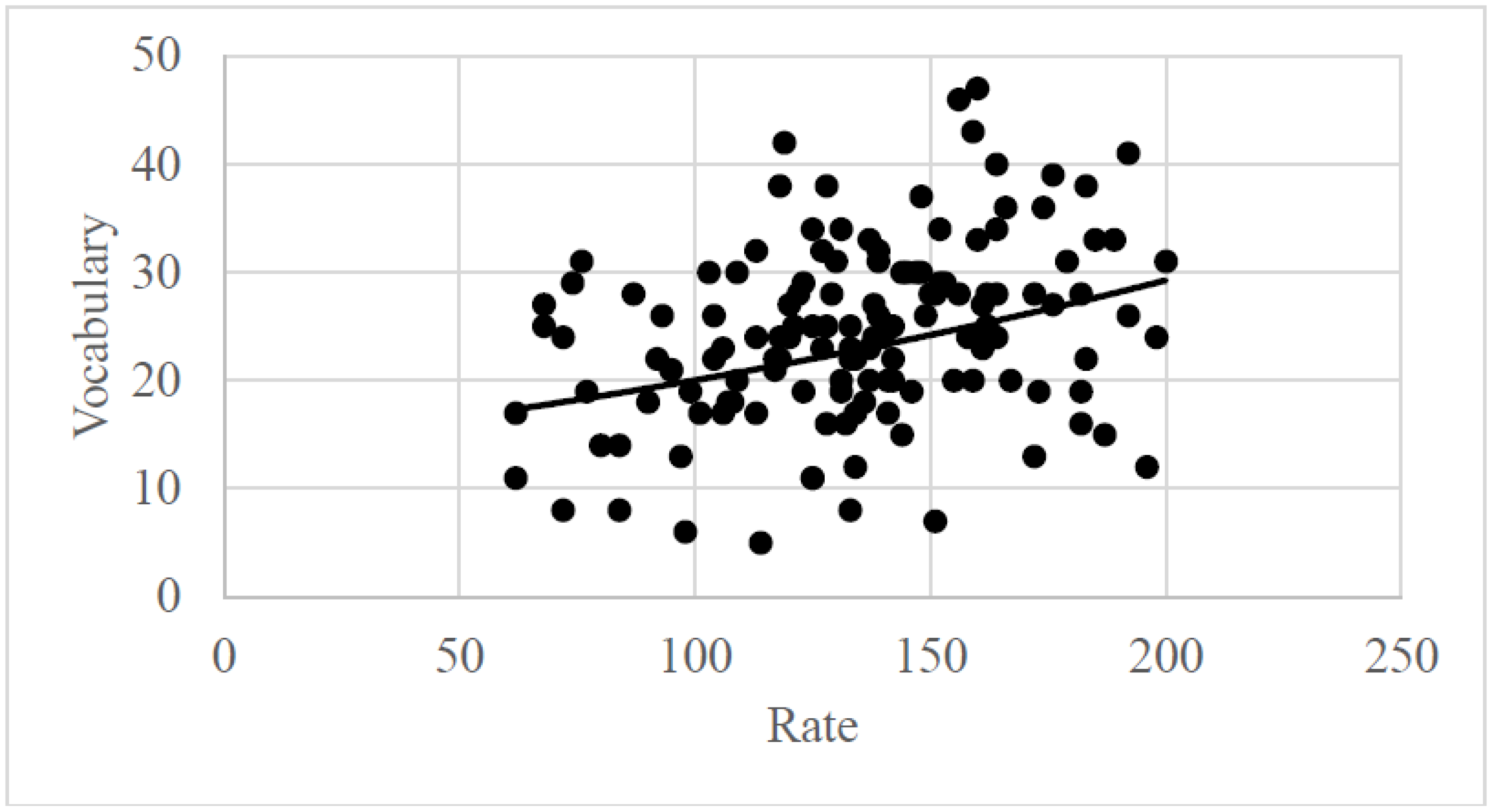

| Academic Vocabulary | 23.30(7.99) | 26.16(8.42) | 24.44(8.25) |

| Reading Rate | 136.93(33.48) | 131.36(29.97) | 134.71(32.13) |

| Word Identification Accuracy | 134.43(33.99) | 126.89(34.46) | 130.33(38.26) |

| Prosody | 10.64(2.18) | 10.05(1.67) | 10.41(2.01) |
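The all-students column in the table above should be the sample-size-weighted average of the two grade columns. The following Python sketch recomputes it from the reported group means and sizes; the function and variable names are hypothetical and introduced only for this illustration.

```python
# Group sizes and means as reported in the table above.
N_SIXTH, N_SEVENTH = 83, 55

# variable: (sixth-grade mean, seventh-grade mean, reported all-students mean)
reported = {
    "Comprehension": (851.52, 942.49, 887.78),
    "Academic Vocabulary": (23.30, 26.16, 24.44),
    "Reading Rate": (136.93, 131.36, 134.71),
    "Word Identification Accuracy": (134.43, 126.89, 130.33),
    "Prosody": (10.64, 10.05, 10.41),
}


def pooled_mean(m1, n1, m2, n2):
    """Sample-size-weighted mean of two group means."""
    return (m1 * n1 + m2 * n2) / (n1 + n2)


def check_pooled_means(table, n1, n2):
    """Return {variable: (pooled mean, reported mean, absolute gap)}."""
    out = {}
    for name, (m1, m2, rep) in table.items():
        pooled = pooled_mean(m1, n1, m2, n2)
        out[name] = (pooled, rep, abs(pooled - rep))
    return out


results = check_pooled_means(reported, N_SIXTH, N_SEVENTH)
for name, (pooled, rep, gap) in results.items():
    print(f"{name:30s} pooled={pooled:8.2f} reported={rep:8.2f} gap={gap:.2f}")
```

With these inputs, four of the five pooled means agree with the reported all-students column to within rounding. Word Identification Accuracy differs by roughly one point, which may reflect rounding, missing cases, or an extraction error in the table rather than anything in the analysis itself.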

| Variable | Comprehension | Academic Vocabulary | Reading Rate | Word Accuracy | Prosody |
|---|---|---|---|---|---|
| Comprehension | 1 | | | | |
| Academic Vocabulary | 0.485 | 1 | | | |
| Reading Rate | 0.566 | 0.337 | 1 | | |
| Word Accuracy | 0.544 | 0.321 | 0.958 | 1 | |
| Prosody | 0.489 | 0.380 | 0.755 | 0.738 | 1 |
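For a regression with two predictors, the standardized coefficients and R² can be recovered directly from the pairwise correlations. The sketch below applies the textbook two-predictor formulas to the correlations among comprehension, academic vocabulary, and reading rate in the table above. It is an illustration with hypothetical names, not the authors' analysis code.

```python
# Pearson correlations taken from the table above.
R_COMP_VOCAB = 0.485   # comprehension with academic vocabulary
R_COMP_RATE = 0.566    # comprehension with reading rate
R_VOCAB_RATE = 0.337   # academic vocabulary with reading rate


def standardized_betas(r_y1, r_y2, r_12):
    """Standardized coefficients and R^2 for y regressed on two predictors.

    r_y1, r_y2: correlations of the outcome with predictors 1 and 2.
    r_12: correlation between the two predictors.
    """
    denom = 1.0 - r_12 ** 2
    beta1 = (r_y1 - r_y2 * r_12) / denom
    beta2 = (r_y2 - r_y1 * r_12) / denom
    r_squared = beta1 * r_y1 + beta2 * r_y2
    return beta1, beta2, r_squared


beta_vocab, beta_rate, r2 = standardized_betas(
    R_COMP_VOCAB, R_COMP_RATE, R_VOCAB_RATE
)
print(f"beta (vocabulary) = {beta_vocab:.3f}")
print(f"beta (rate)       = {beta_rate:.3f}")
print(f"R^2               = {r2:.3f}")
```

Run on these correlations, the sketch gives approximately 0.332 for vocabulary, 0.454 for rate, and an R² of 0.418, in line with the all-students values reported in the regression and mediation tables.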

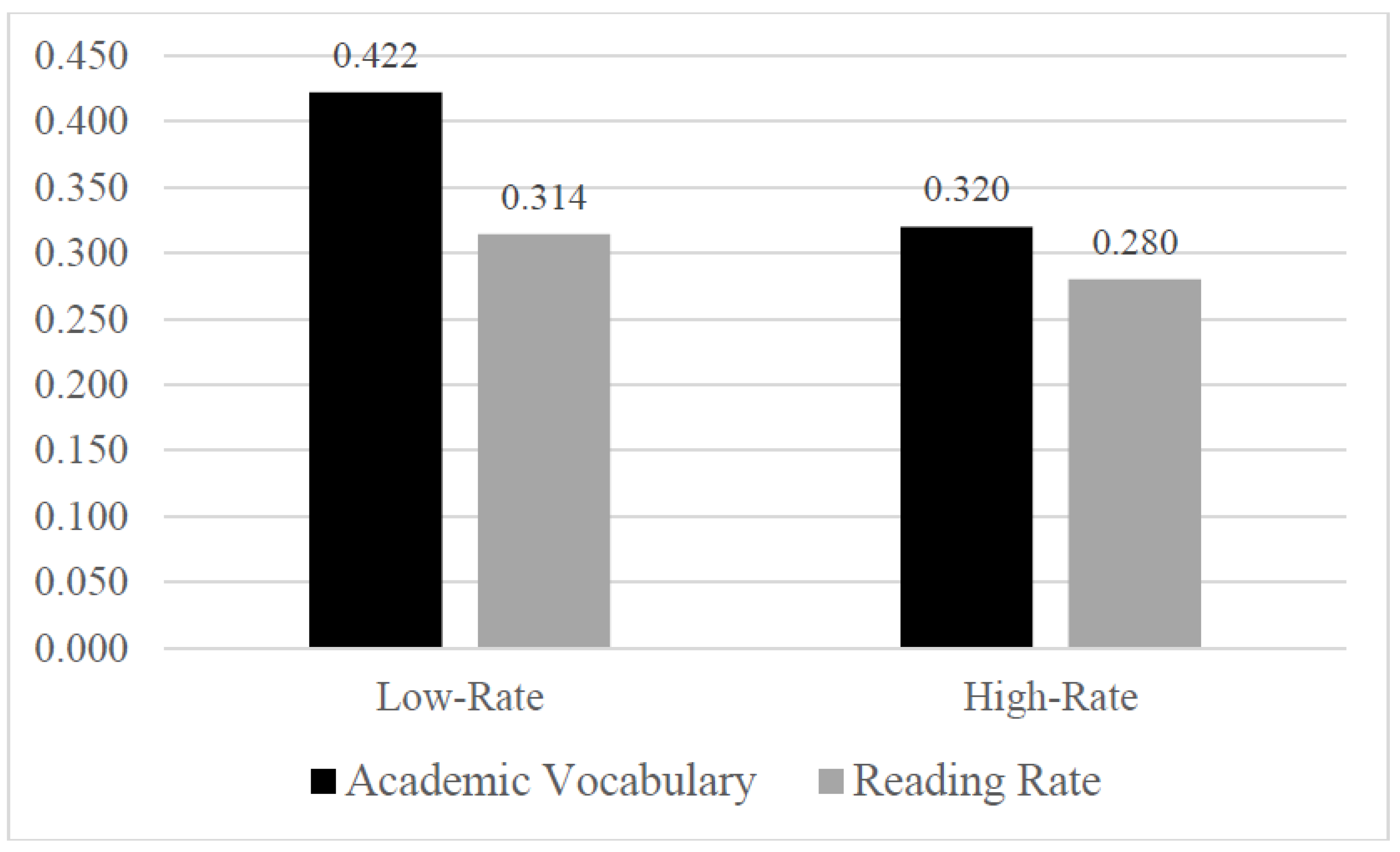

| All Students (n = 138) | Low-Rate Students (n = 58; Rate < 127) | High-Rate Students (n = 80; Rate ≥ 127) | |||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Variable | Std. Error | B | SEβ | β | R2 | ∆R2 | t | Std. Error | B | SEβ | β | R2 | ∆R2 | t | Std. Error | B | SEβ | β | R2 | ∆R2 | t |

| Constant | 55.72 | 591.14 | 48.42 | 12.21 *** | 79.19 | 517.08 | 68.61 | 7.39 *** | 61.78 | 729.73 | 60.81 | 11.52 *** | |||||||||

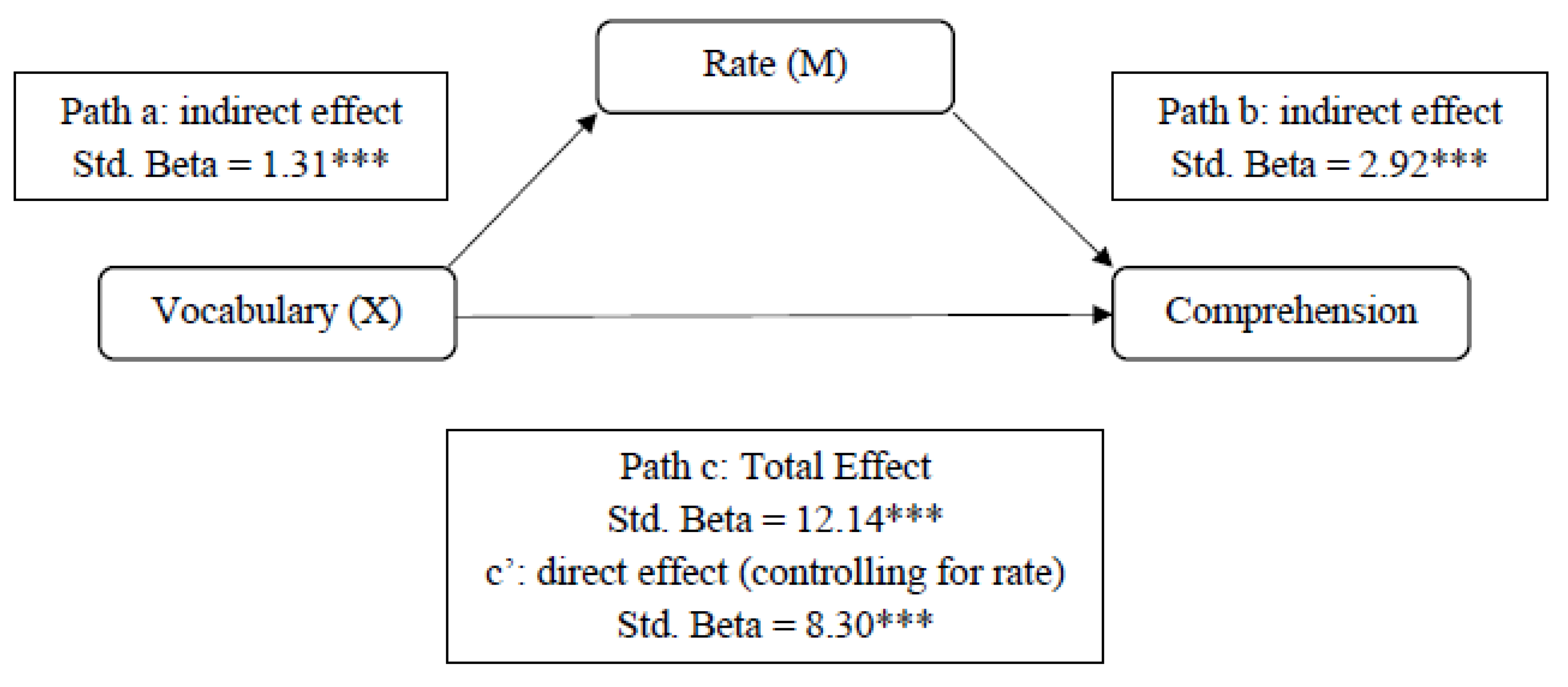

| TAWK | 2.16 | 12.14 | 1.88 | 0.485 | 0.229 | 6.46 *** | 3.37 | 11.78 | 2.90 | 0.477 | 0.214 | 4.07 *** | 2.26 | 9.09 | 2.23 | 0.418 | 0.165 | 4.06 *** | |||

| Constant | 67.40 | 291.30 | 62.58 | 4.66 *** | 128.49 | 221.23 | 124.45 | 1.78 | 150.79 | 376.26 | 145.85 | 2.58 * | |||||||||

| TAWK | 1.91 | 8.30 | 1.75 | 0.332 | 0.229 | 4.76 *** | 3.18 | 10.41 | 2.78 | 0.422 | 0.214 | 3.75 *** | 2.35 | 6.96 | 2.30 | 0.320 | 0.165 | 3.03 ** | |||

| Rate | 0.47 | 2.92 | 0.45 | 0.454 | 0.409 | 0.18 | 6.51 *** | 0.33 | 3.12 | 1.12 | 0.314 | 0.299 | 0.085 | 2.79 *** | 1.01 | 2.61 | 0.99 | 0.280 | 0.224 | 0.059 | 2.65 * |

| Model/Path | Estimate | SE | 95% CI (Lower) | 95% CI (Upper) |
|---|---|---|---|---|
| Vocabulary → Reading Rate (a) | 1.31 *** | 0.314 | 0.691 | 1.934 |
| R² (M.X) | 0.114 | | | |
| Reading Rate → Comprehension (b) | 2.92 *** | 0.449 | 2.034 | 3.809 |
| R² (Y.M) | | | | |
| Vocabulary → Comprehension (c) | 12.14 *** | 1.878 | 8.423 | 15.850 |
| R² (Y.X) | 0.235 | | | |
| Vocabulary → Comprehension (c′) | 8.30 *** | 1.746 | 4.849 | 11.756 |
| R² (Y.M.X) | 0.418 | | | |
| Indirect Effect of Rate in Y.M.X | 3.84 *** | 1.139 | 1.839 | 6.278 |
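The path estimates in the table above can be cross-checked with simple arithmetic. In a simple mediation model fitted by least squares, the indirect effect equals both the product of paths a and b and the difference between the total effect c and the direct effect c′. The sketch below uses only the rounded estimates reported above. The helper name and the proportion-mediated figure are additions for illustration and do not come from the source.

```python
# Unstandardized path estimates as reported in the table above.
A_PATH = 1.31      # vocabulary -> reading rate
B_PATH = 2.92      # reading rate -> comprehension, controlling for vocabulary
C_TOTAL = 12.14    # vocabulary -> comprehension, total effect
C_DIRECT = 8.30    # vocabulary -> comprehension, controlling for rate


def mediation_summary(a, b, c, c_prime):
    """Return the indirect effect computed two ways and the share of c it explains."""
    by_difference = c - c_prime
    by_product = a * b
    proportion_mediated = by_difference / c
    return by_difference, by_product, proportion_mediated


diff, prod, share = mediation_summary(A_PATH, B_PATH, C_TOTAL, C_DIRECT)
print(f"indirect effect (c - c') = {diff:.2f}")
print(f"indirect effect (a * b)  = {prod:.2f}")
print(f"proportion mediated      = {share:.3f}")
```

The difference c − c′ reproduces the reported indirect effect of 3.84, and the product a × b comes out slightly lower (about 3.83) because a and b are rounded to two decimals in the table. On these figures, roughly a third of the total effect of vocabulary on comprehension runs through reading rate.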

| Rate Cut-Point | Low-Rate Group (b) | High-Rate Group (b) | ∆Rate |
|---|---|---|---|
| 114 | 0.287 ns | 0.418 *** | ns |
| 117 | 0.293 ns | 0.412 *** | ns |
| 118 | 0.336 * | 0.414 *** | 0.78 |
| 119 | 0.329 * | 0.390 *** | 0.61 |
| 120 | 0.374 ** | 0.417 *** | 0.43 |
| 121 | 0.398 ** | 0.428 *** | 0.30 |
| 122 | 0.398 ** | 0.428 *** | 0.30 |
| 123 | 0.392 ** | 0.420 *** | 0.28 |
| 125 | 0.437 ** | 0.445 ** | 0.08 |
| 127 | 0.429 ** | 0.427 *** | 0.02 |
| 128 | 0.416 ** | 0.409 *** | 0.07 |
| 129 | 0.417 ** | 0.395 *** | 0.22 |
| 130 | 0.363 ** | 0.369 ** | 0.06 |
| 131 | 0.388 ** | 0.392 *** | 0.04 |
| 133 | 0.387 ** | 0.377 ** | 0.10 |
| 134 | 0.364 ** | 0.356 ** | 0.08 |
| 136 | 0.395 ** | 0.368 ** | 0.27 |
| 137 | 0.398 ** | 0.363 ** | 0.35 |

| Group | Comprehension | Academic Vocabulary | Reading Rate |
|---|---|---|---|
| Low-Rate (n = 58, 42%) | 780.02 (196.32) | 22.33 (7.96) | 104.62 (19.75) |
| High-Rate (n = 80, 58%) | 965.90 (177.59) | 25.98 (8.17) | 156.53 (19.04) |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Paige, D.D.; Smith, G.S. Academic Vocabulary and Reading Fluency: Unlikely Bedfellows in the Quest for Textual Meaning. Educ. Sci. 2018, 8, 165. https://doi.org/10.3390/educsci8040165