The Relationship between Administrative Intensity and Student Retention and Success: A Three-Year Study

Abstract

1. Introduction

2. Literature Review

2.1. Higher Education Administration

2.2. Complexity Theory

3. Materials and Methods

3.1. Functional and Natural Classification of the Expense–NACUBO FARM

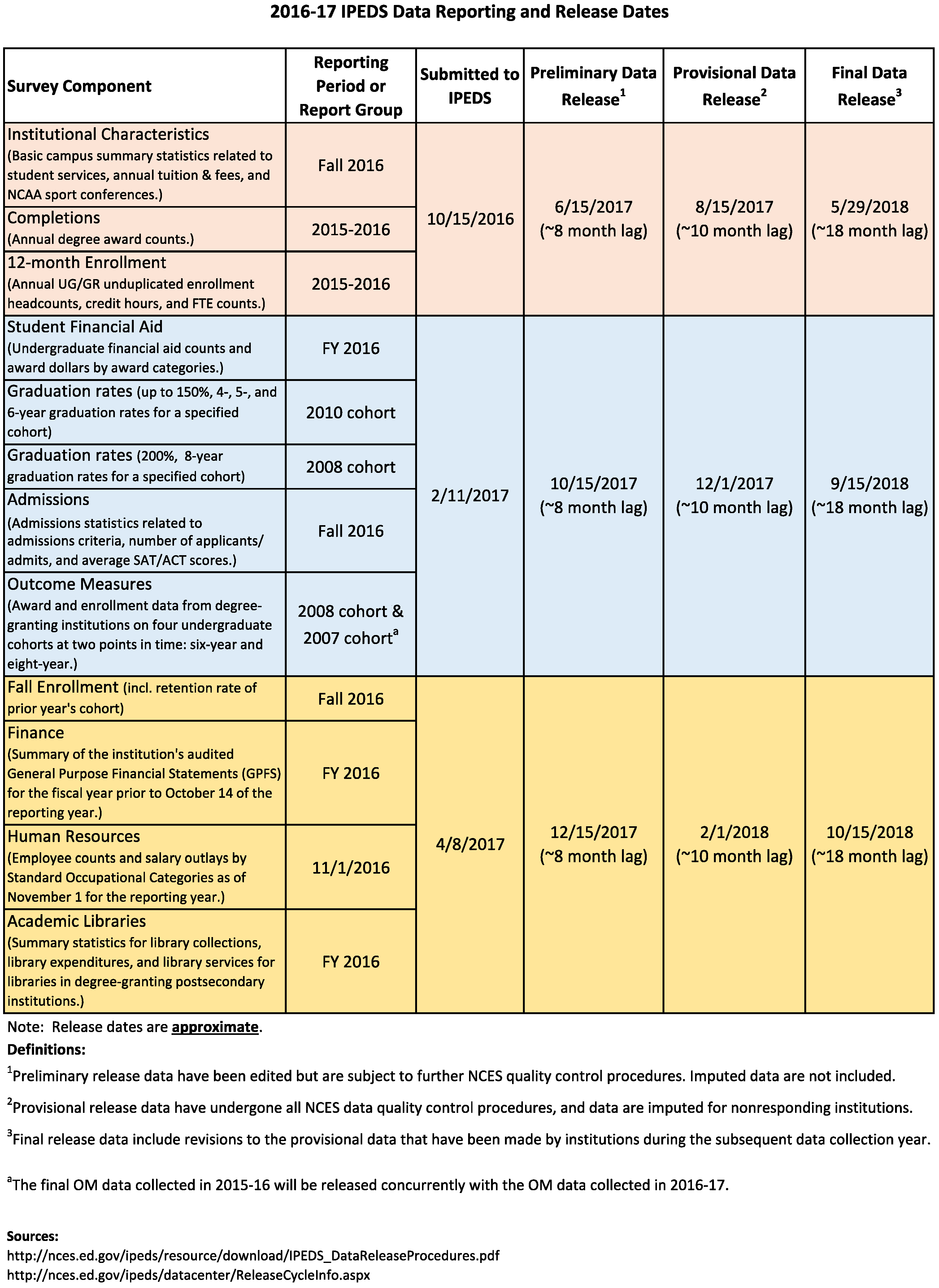

3.2. IPEDS

3.3. Respondents and Variables Used

3.4. Secondary Data Analysis Techniques

3.5. Statistical Analyses

3.5.1. Distributional Normality

3.5.2. Bivariate Correlation Analysis

3.5.3. Canonical Correlation Analysis

4. Results

5. Discussion

5.1. Average Faculty Pay with Benefits (FACCOST/F)

5.2. Average Administrative Staff Pay with Benefits (ADMCOST/A)

5.3. Number of Undergraduate and Graduate Student Headcount

5.4. Academic Organizational Complexity Index

5.5. Medical School Exists

5.6. Doctoral Institutions

5.7. Research Expenditures

5.8. Complexity

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Variable | Definition/Calculation | Mean | Min | Max | Std. Deviation |
|---|---|---|---|---|---|
| ADMCOST | Salaries and Benefits for Academic Support and Institutional Support ($) | 14,036,123 | 0 | 801,099,000 | 41,269,634 |
| | | 14,717,889 | 0 | 851,853,000 | 43,703,407 |
| | | 15,404,125 | 0 | 890,335,000 | 45,429,712 |
| FACCOST | Salaries and Benefits for Instruction, Research and Public Service ($) | 40,436,681 | 0 | 1,650,127,000 | 134,862,339 |
| | | 41,898,823 | 0 | 1,759,393,528 | 43,074,849 |
| | | 50,827,954 | 0 | 2,632,559,000 | 45,436,924 |
| Q1 | Undergraduate Headcount Enrollment | 3877 | 1 | 69,380 | 6642 |
| | | 3804 | 2 | 66,298 | 6547 |
| | | 3847 | 1 | 155,872 | 7263 |
| Q2 | Graduate Headcount Enrollment | 1440 | 1 | 41,513 | 2764 |
| | | 1406 | 1 | 42,811 | 2749 |
| | | 1417 | 1 | 43,228 | 2907 |
| F | Total All Faculty Instructional, Research, and Public Service Full-time | 209 | 1 | 5947 | 430 |
| | | 212 | 1 | 5971 | 441 |
| | | 217 | 1 | 6068 | 452 |
| A | Administrative and Professional Staff Full-time | 349 | 0 | 21,258 | 1169 |
| | | 381 | 0 | 13,981 | 1002 |
| | | 309 | 0 | 13,896 | 986 |
| Q | All Students (Q1 + Q2) | 4178 | 0 | 77,734 | 7928 |
| | | 4209 | 0 | 77,338 | 7912 |
| | | 4308 | 0 | 195,059 | 8777 |
| TOC | Type of Institutional Control | 0.76 | 0 = “Public” | 1 = “Private” | 0.43 |
| | | 0.76 | | | 0.43 |
| | | 0.76 | | | 0.43 |
| S | Enrollment Selectivity | | 0 = “Not Selective” | 1 = “Selective” | |
| M | Medical School Exists | 0.05 | 0 = “No” | 1 = “Yes” | 0.22 |
| | | 0.06 | | | 0.23 |
| | | 0.05 | | | 0.22 |
| A/F | Adm/Faculty Intensity | 4.24 | 0 | 1191 | 24.53 |
| | | 3.47 | 0 | 189 | 6.84 |
| | | 3.75 | 0 | 290 | 10.84 |
| PF | Average Faculty Pay with Benefits (FACCOST/F) ($) | 129,191 | 0 | 14,101,292 | 329,733 |
| | | 126,227 | 0 | 6,507,428 | 191,464 |
| | | 176,859 | 0 | 7,673,085 | 246,935 |
| PA | Average Administrative Staff Pay with Benefits (ADMCOST/A) ($) | 18,693 | 0 | 3,570,789 | 88,109 |
| | | 95,110 | 0 | 27,240,083 | 715,111 |
| | | 18,419 | 0 | 301,328 | 18,722 |
| DEG1 | Bachelor Institutions | 0.24 | 0 = “No” | 1 = “Yes” | 0.42 |
| | | 0.24 | | | 0.42 |
| | | 0.24 | | | 0.42 |
| DEG2 | Master’s Institutions | 0.21 | 0 = “No” | 1 = “Yes” | 0.41 |
| | | 0.21 | | | 0.41 |
| | | 0.21 | | | 0.41 |
| DEG3 | Doctoral Institutions | 0.12 | 0 = “No” | 1 = “Yes” | 0.32 |
| | | 0.12 | | | 0.32 |
| | | 0.12 | | | 0.32 |
| Q2/Q1 | Academic Organizational Complexity Index (Graduate Headcount Enrollment/Undergraduate Headcount Enrollment) | 0.78 | 0 | 395.67 | 10.47 |
| | | 0.92 | 0 | 510.00 | 12.62 |
| | | 1.04 | 0 | 797.00 | 18.06 |
| DVRES | Research Expenditures | 0.15 | 0 = “No Expenses” | 1 = “Yes Expenses” | 0.36 |
| | | 0.16 | | | 0.36 |
| | | 0.15 | | | 0.36 |
| PUBSOR | Public Sources of Funds per $ Revenue | 0.11 | 0 | 1.35 | 0.22 |
| | | 0.11 | 0 | 1.02 | 0.22 |
| | | 0.11 | 0 | 0.99 | 0.22 |
| PRISOR | Private Sources of Funds per $ Revenue | 0.11 | −0.35 | 1.00 | 0.22 |
| | | 0.11 | −0.08 | 1.00 | 0.22 |
| | | 0.11 | 0.00 | 1.00 | 0.22 |
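
The derived variables in the table above (Q, A/F, PF, PA, and Q2/Q1) follow from the raw counts and costs by simple arithmetic. The following is a minimal Python sketch, assuming that A/F is the ratio of A to F as its label suggests. The `Institution` container, the function names, the sample figures, and the choice to return 0 when a denominator is 0 are illustrative assumptions, not the authors' procedure.

```python
from dataclasses import dataclass


@dataclass
class Institution:
    """Raw inputs named after the table's variables (sample values are hypothetical)."""
    admcost: float  # ADMCOST: administrative salaries and benefits ($)
    faccost: float  # FACCOST: faculty salaries and benefits ($)
    q1: int         # Q1: undergraduate headcount enrollment
    q2: int         # Q2: graduate headcount enrollment
    f: int          # F: full-time faculty
    a: int          # A: full-time administrative and professional staff


def safe_ratio(numerator: float, denominator: float) -> float:
    """Divide, returning 0.0 for a zero denominator (an assumption of this sketch)."""
    return numerator / denominator if denominator else 0.0


def derived_variables(inst: Institution) -> dict:
    """Compute the derived variables defined in the descriptive table."""
    return {
        "Q": inst.q1 + inst.q2,                   # all students (Q1 + Q2)
        "A/F": safe_ratio(inst.a, inst.f),        # administrative intensity
        "PF": safe_ratio(inst.faccost, inst.f),   # average faculty pay (FACCOST/F)
        "PA": safe_ratio(inst.admcost, inst.a),   # average admin pay (ADMCOST/A)
        "Q2/Q1": safe_ratio(inst.q2, inst.q1),    # academic complexity index
    }


# Hypothetical institution, not taken from the IPEDS data.
example = Institution(admcost=14_000_000, faccost=40_000_000,
                      q1=4000, q2=1000, f=200, a=350)
result = derived_variables(example)
print(result)
```

Guarding against zero denominators matters here because the table reports minimum values of 0 for A and for several cost variables.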

| Variable | Definition/Calculation | N | Mean | Min | Max | Std. Deviation |
|---|---|---|---|---|---|---|
| lnA/F | Natural logarithmic transformation of the sum of lnPF, lnPA, lnQ, Q2/Q1, M, Deg2, Deg3, DVRes | 2827 | 2.82 | 0 | 6.03 | 0.70 |
| | | 2899 | 3.00 | 0.83 | 6.30 | 0.72 |
| | | 2845 | 2.83 | 0 | 6.03 | 0.70 |
| lnPF | Natural logarithmic transformation of PF | 2991 | 7.78 | 0 | 16.46 | 5.63 |
| | | 2992 | 7.92 | 0 | 15.69 | 5.59 |
| | | 2977 | 7.88 | 0 | 16.46 | 5.60 |
| lnPA | Natural logarithmic transformation of PA | 2991 | 3.90 | 0 | 15.09 | 4.85 |
| | | 2992 | 7.69 | 0 | 16.44 | 4.98 |
| | | 2977 | 3.93 | 0 | 15.09 | 4.86 |
| lnQ | Natural logarithmic transformation of Q | 2991 | 6.77 | 0 | 11.22 | 2.36 |
| | | 2992 | 6.92 | 0 | 11.26 | 2.13 |
| | | 2977 | 6.83 | 0 | 11.22 | 2.27 |
| Q2/Q1 | Academic Organizational Complexity Index | 2991 | 0.66 | 0 | 395.67 | 9.88 |
| | | 2992 | 0.81 | 0 | 510.00 | 12.05 |
| | | 2977 | 0.67 | 0 | 395.67 | 9.90 |
| M | Medical School Exists | 2991 | 0.05 | 0 = “No” | 1 = “Yes” | 0.22 |
| | | 2992 | 0.06 | | | 0.23 |
| | | 2977 | 0.06 | | | 0.23 |
| DEG1 | Bachelor Institutions | 2991 | Reference category | | | |
| | | 2992 | | | | |
| | | 2977 | | | | |
| DEG2 | Master’s Institutions | 2991 | 0.21 | 0 = “No” | 1 = “Yes” | 0.41 |
| | | 2992 | 0.22 | | | 0.41 |
| | | 2977 | 0.21 | | | 0.41 |
| DEG3 | Doctoral Institutions | 2991 | 0.12 | 0 = “No” | 1 = “Yes” | 0.32 |
| | | 2992 | 0.12 | | | 0.33 |
| | | 2977 | 0.12 | | | 0.33 |
| DVRES | Research Expenditures | 2991 | 0.15 | 0 = “No Expenses” | 1 = “Yes Expenses” | 0.36 |
| | | 2992 | 0.16 | | | 0.37 |
| | | 2977 | 0.15 | | | 0.36 |
| FTRetension | Full Time Retention (%) | 2007 | 70.90 | 8.00 | 100 | 16.41 |
| | | 2020 | 72.22 | 8.00 | 100 | 15.69 |
| | | 2012 | 73.17 | 9.00 | 100 | 15.64 |
| 4yrgrad | 4-year graduation rate (%) | 1625 | 36.65 | 1.00 | 100 | 21.78 |
| | | 1677 | 36.51 | 1.00 | 100 | 21.74 |
| | | 1685 | 36.90 | 1.00 | 100 | 21.75 |
| 6yrgrad | 6-year graduation rate (%) | 1715 | 50.40 | 1.00 | 100 | 20.34 |
| | | 1777 | 50.01 | 1.00 | 100 | 20.64 |
| | | 1799 | 49.94 | 1.00 | 100 | 20.59 |
| 8yrgrad | 8-year graduation rate (%) | 1734 | 52.34 | 2.00 | 100 | 20.15 |
| | | 1790 | 51.94 | 2.00 | 100 | 20.40 |
| | | 1805 | 51.54 | 1.00 | 100 | 20.41 |

| | Variable | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1. | lnA/F | -- | | | | | | | | | | | | |
| | | -- | | | | | | | | | | | | |
| | | -- | | | | | | | | | | | | |
| 2. | lnPF | 0.916 ** | -- | | | | | | | | | | | |
| | | 0.939 ** | -- | | | | | | | | | | | |
| | | 0.912 ** | -- | | | | | | | | | | | |
| 3. | lnPA | 0.696 ** | 0.519 ** | -- | | | | | | | | | | |
| | | 0.929 ** | 0.876 ** | -- | | | | | | | | | | |
| | | 0.691 ** | 0.510 ** | -- | | | | | | | | | | |
| 4. | lnQ | 0.614 ** | 0.582 ** | 0.400 ** | -- | | | | | | | | | |
| | | 0.512 ** | 0.561 ** | 0.405 ** | -- | | | | | | | | | |
| | | 0.617 ** | 0.570 ** | 0.390 ** | -- | | | | | | | | | |
| 5. | Q2/Q1 | 0.155 ** | 0.004 | −0.017 | 0.006 | -- | | | | | | | | |
| | | 0.160 ** | 0.022 | 0.018 | 0.005 | -- | | | | | | | | |
| | | 0.154 ** | 0.002 | −0.018 | 0.004 | -- | | | | | | | | |
| 6. | M | 0.217 ** | 0.189 ** | 0.152 ** | 0.237 ** | 0.023 | -- | | | | | | | |
| | | 0.207 ** | 0.188 ** | 0.181 ** | 0.255 ** | 0.062 ** | -- | | | | | | | |
| | | 0.203 ** | 0.185 ** | 0.151 ** | 0.236 ** | 0.021 | -- | | | | | | | |
| 7. | DEG2 | 0.339 ** | 0.304 ** | 0.247 ** | 0.363 ** | −0.011 | −0.090 ** | -- | | | | | | |
| | | 0.295 ** | 0.285 ** | 0.240 ** | 0.362 ** | −0.013 | −0.089 ** | -- | | | | | | |
| | | 0.334 ** | 0.295 ** | 0.240 ** | 0.361 ** | −0.012 | −0.087 ** | -- | | | | | | |
| 8. | DEG3 | 0.286 ** | 0.239 ** | 0.219 ** | 0.345 ** | 0.049 ** | 0.571 ** | −0.191 ** | -- | | | | | |
| | | 0.256 ** | 0.237 ** | 0.216 ** | 0.360 ** | 0.038 * | 0.568 ** | −0.194 ** | -- | | | | | |
| | | 0.285 ** | 0.235 ** | 0.214 ** | 0.351 ** | 0.049 ** | 0.567 ** | −0.193 ** | -- | | | | | |
| 9. | DVRES | 0.271 ** | 0.283 ** | 0.142 ** | 0.157 ** | 0.048 ** | 0.158 ** | 0.030 | 0.157 ** | -- | | | | |
| | | 0.285 ** | 0.272 ** | 0.293 ** | 0.144 ** | 0.035 * | 0.171 ** | 0.026 | 0.162 ** | -- | | | | |
| | | 0.268 ** | 0.277 ** | 0.139 ** | 0.149 ** | 0.047 ** | 0.158 ** | 0.023 | 0.157 ** | -- | | | | |
| 10. | FTRetension | 0.355 ** | 0.366 ** | 0.235 ** | 0.277 ** | 0.043 | 0.203 ** | 0.057 * | 0.253 ** | 0.337 ** | -- | | | |
| | | 0.316 ** | 0.287 ** | 0.339 ** | 0.251 ** | 0.051 * | 0.203 ** | 0.026 | 0.246 ** | 0.335 ** | -- | | | |
| | | 0.259 ** | 0.259 ** | 0.222 ** | 0.228 ** | 0.069 ** | 0.201 ** | 0.034 | 0.238 ** | 0.303 ** | -- | | | |
| 11. | 4yrgrad | 0.128 ** | 0.165 ** | 0.026 | −0.014 | 0.082 ** | 0.116 ** | −0.150 ** | 0.090 ** | 0.485 ** | 0.709 ** | -- | | |
| | | 0.182 ** | 0.156 ** | 0.210 ** | 0 | 0.078 ** | 0.119 ** | −0.144 ** | 0.101 ** | 0.486 ** | 0.695 ** | -- | | |
| | | 0.135 ** | 0.171 ** | 0.034 | −0.006 | 0.148 ** | 0.131 ** | −0.145 ** | 0.103 ** | 0.475 ** | 0.686 ** | -- | | |
| 12. | 6yrgrad | 0.328 ** | 0.316 ** | 0.183 ** | 0.260 ** | 0.097 ** | 0.216 ** | −0.035 | 0.242 ** | 0.444 ** | 0.783 ** | 0.918 ** | -- | |
| | | 0.347 ** | 0.320 ** | 0.345 ** | 0.293 ** | 0.086 ** | 0.220 ** | −0.036 | 0.253 ** | 0.455 ** | 0.782 ** | 0.916 ** | -- | |
| | | 0.363 ** | 0.367 ** | 0.211 ** | 0.316 ** | 0.076 ** | 0.236 ** | −0.018 | 0.258 ** | 0.442 ** | 0.745 ** | 0.916 ** | -- | |
| 13. | 8yrgrad | 0.330 ** | 0.321 ** | 0.186 ** | 0.267 ** | 0.105 ** | 0.220 ** | −0.026 | 0.253 ** | 0.421 ** | 0.775 ** | 0.896 ** | 0.992 ** | -- |
| | | 0.367 ** | 0.332 ** | 0.366 ** | 0.313 ** | 0.109 ** | 0.224 ** | −0.024 | 0.266 ** | 0.440 ** | 0.785 ** | 0.890 ** | 0.992 ** | -- |
| | | 0.387 ** | 0.385 ** | 0.235 ** | 0.345 ** | 0.074 ** | 0.244 ** | −0.009 | 0.277 ** | 0.438 ** | 0.754 ** | 0.891 ** | 0.993 ** | -- |

| Function | Correlation | Eigenvalue | Wilks' Λ | F | Num df | Denom df | Sig. |
|---|---|---|---|---|---|---|---|
| 2012(1) | 0.670 | 0.813 | 0.370 | 55.74 | 32 | 5761.97 | 0.000 |
| 2012(2) | 0.558 | 0.451 | 0.671 | 31.92 | 21 | 4488.64 | 0.000 |
| 2012(3) | 0.142 | 0.021 | 0.973 | 3.58 | 12 | 3128.00 | 0.000 |
| 2012(4) | 0.083 | 0.007 | 0.993 | 2.19 | 5 | 1565.00 | 0.053 |
| 2013(1) | 0.664 | 0.787 | 0.375 | 55.91 | 32 | 5865.23 | 0.000 |
| 2013(2) | 0.555 | 0.445 | 0.669 | 32.64 | 21 | 4569.04 | 0.000 |
| 2013(3) | 0.162 | 0.027 | 0.967 | 4.43 | 12 | 3184.00 | 0.000 |
| 2013(4) | 0.080 | 0.006 | 0.994 | 2.05 | 5 | 1593.00 | 0.069 |
| 2014(1) | 0.672 | 0.823 | 0.380 | 55.54 | 32 | 5916.86 | 0.000 |
| 2014(2) | 0.532 | 0.396 | 0.692 | 30.01 | 21 | 4609.24 | 0.000 |
| 2014(3) | 0.144 | 0.021 | 0.966 | 4.67 | 12 | 3212.00 | 0.000 |
| 2014(4) | 0.116 | 0.014 | 0.986 | 4.42 | 5 | 1607.00 | 0.001 |
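
The eigenvalue and Wilks columns in the table above can be approximately recovered from the canonical correlations alone. The sketch below uses the standard relations: the eigenvalue is Rc² / (1 − Rc²), and the Wilks statistic for the test beginning at function k is the product of (1 − Rc²) over functions k through the last. The function names are hypothetical, and because the inputs are the rounded correlations from the 2012 rows, the results should match the table only to about two or three decimals.

```python
import math


def eigenvalue(rc: float) -> float:
    """Eigenvalue implied by a canonical correlation: Rc^2 / (1 - Rc^2)."""
    return rc ** 2 / (1 - rc ** 2)


def wilks_lambdas(correlations: list) -> list:
    """Wilks' lambda for the sequential tests of functions k..last, for each k.

    Each value is the product of (1 - Rc^2) over the remaining functions.
    """
    return [
        math.prod(1 - rc ** 2 for rc in correlations[k:])
        for k in range(len(correlations))
    ]


# Canonical correlations copied from the 2012 rows of the table (rounded values).
rc_2012 = [0.670, 0.558, 0.142, 0.083]

eigenvalues_2012 = [eigenvalue(rc) for rc in rc_2012]
lambdas_2012 = wilks_lambdas(rc_2012)

for k, (ev, lam) in enumerate(zip(eigenvalues_2012, lambdas_2012), start=1):
    print(f"2012({k}): eigenvalue={ev:.3f}, Wilks lambda={lam:.3f}")
```

Any small differences from the tabulated values come from rounding in the reported correlations.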

| Variable | Function 1 Coefficient | Function 1 $r_s$ | Function 1 $r_s^2$ (%) | Function 2 Coefficient | Function 2 $r_s$ | Function 2 $r_s^2$ (%) | $h^2$ (%) |
|---|---|---|---|---|---|---|---|
| lnPF | −0.246 | −0.537 | 28.84 | −0.273 | −0.354 | 12.53 | 41.37 |
| | 0.006 | −0.544 | 29.59 | 0.481 | −0.285 | 8.12 | 37.72 |
| | −0.202 | −0.514 | 26.42 | −0.205 | −0.292 | 8.53 | 34.95 |
| lnPA | −0.062 | −0.496 | 24.60 | 0.014 | −0.052 | 0.27 | 24.87 |
| | −0.298 | −0.536 | 28.73 | −0.723 | −0.361 | 13.03 | 41.76 |
| | −0.110 | −0.536 | 28.73 | −0.021 | −0.056 | 0.31 | 29.04 |
| lnQ | −0.716 | −0.953 | 90.82 | 0.078 | 0.020 | 0.04 | 90.86 |
| | −0.730 | −0.937 | 87.80 | 0.113 | 0.080 | 0.64 | 88.44 |
| | −0.730 | −0.954 | 91.01 | 0.155 | 0.045 | 0.20 | 91.21 |
| Q2/Q1 | 0.195 | −0.221 | 4.88 | −0.275 | −0.355 | 12.60 | 17.49 |
| | 0.213 | −0.156 | 2.43 | −0.283 | −0.322 | 10.37 | 12.80 |
| | 0.200 | −0.206 | 4.24 | −0.246 | −0.343 | 11.76 | 16.01 |
| M | −0.042 | −0.425 | 18.06 | 0.093 | −0.200 | 4.00 | 22.06 |
| | −0.045 | −0.437 | 19.10 | −0.087 | −0.185 | 3.42 | 22.52 |
| | −0.037 | −0.438 | 19.18 | −0.139 | −0.235 | 5.52 | 24.71 |
| DEG2 | −0.157 | −0.264 | 6.97 | 0.356 | 0.270 | 7.29 | 14.26 |
| | −0.123 | −0.219 | 4.80 | 0.347 | 0.294 | 8.64 | 13.44 |
| | −0.108 | −0.235 | 5.52 | 0.315 | 0.292 | 8.53 | 14.05 |
| DEG3 | −0.228 | −0.608 | 36.97 | 0.184 | −0.163 | 2.66 | 39.62 |
| | −0.233 | −0.619 | 38.32 | 0.171 | −0.145 | 2.10 | 40.42 |
| | −0.224 | −0.617 | 38.07 | 0.121 | −0.185 | 3.42 | 41.49 |
| DVRES | 0.011 | −0.052 | 0.27 | −0.796 | −0.905 | 81.90 | 82.17 |
| | −0.017 | −0.104 | 1.08 | −0.761 | −0.897 | 80.46 | 81.54 |
| | −0.020 | −0.084 | 0.71 | −0.816 | −0.913 | 83.36 | 84.06 |
| $R_c^2$ | 63.00 | | | 32.90 | | | |
| | 62.50 | | | 33.10 | | | |
| | 62.00 | | | 30.80 | | | |
| FTRetension | −0.488 | −0.574 | 32.95 | −0.352 | −0.777 | 60.37 | 93.32 |
| | −0.462 | −0.622 | 38.69 | −0.245 | −0.725 | 52.56 | 91.25 |
| | −0.360 | −0.569 | 32.38 | −0.192 | −0.730 | 53.29 | 85.67 |
| 4yrgrad | 1.683 | 0.019 | 0.04 | −10.261 | −0.978 | 95.65 | 95.68 |
| | 1.654 | −0.027 | 0.07 | −10.276 | −0.987 | 97.42 | 97.49 |
| | 1.440 | 0.007 | 0 | −10.170 | −0.993 | 98.60 | 98.61 |
| 6yrgrad | 1.323 | −0.342 | 11.70 | 0.870 | −0.877 | 76.91 | 88.61 |
| | 0.790 | −0.393 | 15.44 | 0.622 | −0.876 | 76.74 | 92.18 |
| | 2.546 | −0.351 | 12.32 | 0.538 | −0.898 | 80.64 | 92.96 |
| 8yrgrad | −2.822 | −0.404 | 16.32 | −0.298 | −0.860 | 73.96 | 90.28 |
| | −2.332 | −0.458 | 20.98 | −0.126 | −0.853 | 72.76 | 93.74 |
| | −3.946 | −0.425 | 18.06 | −0.207 | −0.875 | 76.56 | 94.63 |
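
In the table above, each squared structure coefficient is the structure coefficient (rs) squared and expressed as a percentage, and the communality (h²) is the sum of those percentages across Functions 1 and 2. The following small Python sketch shows that arithmetic using two rows copied from the table. The function names are hypothetical, and the results match the tabulated figures only up to rounding.

```python
def squared_structure_pct(rs: float) -> float:
    """Squared structure coefficient as a percentage: 100 * rs^2."""
    return 100 * rs ** 2


def communality_pct(structure_coefficients: list) -> float:
    """Communality (h^2, in %) as the sum of squared structure coefficients."""
    return sum(squared_structure_pct(rs) for rs in structure_coefficients)


# Structure coefficients (Function 1, Function 2) from the first lnPF and DVRES rows.
lnpf_rs = [-0.537, -0.354]
dvres_rs = [-0.052, -0.905]

lnpf_h2 = communality_pct(lnpf_rs)
dvres_h2 = communality_pct(dvres_rs)

print(f"lnPF:  h2 = {lnpf_h2:.2f}%")
print(f"DVRES: h2 = {dvres_h2:.2f}%")
```

A variable with a high communality, such as DVRES, is well represented by the two retained functions taken together, even when it contributes almost nothing to one of them.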

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Romine, K.D.; Baker, R.M.; Romine, K.A. The Relationship between Administrative Intensity and Student Retention and Success: A Three-Year Study. Educ. Sci. 2018, 8, 159. https://doi.org/10.3390/educsci8040159
