Abstract

Numerous studies have explored the integration of technology-enhanced feedback systems in education. However, there is still a need for further investigation into their specific impact on teacher satisfaction, which is essential for effective feedback delivery to students. This study addresses this gap by analyzing teachers’ satisfaction with the “Compliments and Comments Tool”, a technology-enhanced system developed to provide written feedback to students. Using a mixed-methods approach, this study examined teachers’ perceptions of the tool’s usability in the Slovenian education context, involving a diverse group of 3412 primary and secondary school teachers. Data were collected through surveys employing the System Usability Scale (SUS) and the Technology Acceptance Model (TAM) for quantitative analysis, complemented by qualitative insights. The results showed high teacher satisfaction; teachers valued the tool for facilitating feedback and supporting a positive learning environment. These findings suggest that the “Compliments and Comments Tool” is a valuable addition to educational technology, promoting effective teaching and enhancing student engagement. This study emphasizes the critical role of user-centered design and system usability in educational technology, particularly in fostering effective feedback and promoting student self-regulation.

1. Introduction

In recent years, the integration of technology-enhanced feedback systems into education has demonstrated significant potential for improving student learning outcomes [1], with findings suggesting that such technology use positively influences learners’ motivation and achievement [2]. These systems, grounded in constructivist and cognitive learning theories, emphasize the importance of timely and personalized feedback in fostering self-regulation and metacognition among students [3]. The development of students’ self-regulated learning skills is critically dependent on receiving appropriate feedback during the learning process [4,5,6], making effective feedback essential for guiding students toward improved learning practices and self-regulation [3,4]. This feedback helps students understand their current performance levels, identify areas for improvement, and recognize their achievements, thereby fostering a growth mindset that views challenges as opportunities rather than setbacks [4]. This process is crucial for helping students make informed decisions about their learning strategies, promoting self-assessment and reflection, and enhancing critical thinking and problem-solving skills [7]—skills essential for lifelong learning and adaptability.

High-information feedback, which includes not only corrective information but also insights into self-regulation aspects, such as monitoring attention, emotions, or motivation during the learning process, has been found to be particularly effective [8]. A recent meta-analysis of 435 studies highlighted that this type of feedback achieved a substantial effect size of d = 0.99, underscoring its significant impact [8]. This comprehensive feedback approach enables students to gain a deeper understanding of their learning processes, fostering a more engaged and proactive approach to education. Thus, emphasizing the quality and type of feedback is critical in educational settings as it significantly influences student growth and learning outcomes.

Providing comprehensive individual feedback to all students remains a time-consuming task for teachers [9]. Advancements in technology have facilitated this process by enabling teachers to regularly document and share feedback digitally [10,11], either after lessons or even through automated [12] and intelligent [13] systems. This digital approach not only streamlines the feedback process [10] but also enhances parental engagement, which is strongly associated with improved academic success for students [14,15,16]. However, there is a notable gap in the research, particularly regarding teachers’ views on the usability of technology-enhanced feedback systems, despite teachers playing a crucial role in the effective implementation of these systems.

Most studies on technology-supported feedback systems have focused on feedback related to specific tasks, emphasizing learning in terms of knowledge acquisition. Therefore, there is a research gap concerning the usability of feedback systems aimed at the holistic growth and self-regulation of individuals. Furthermore, recent studies have increasingly focused on automating feedback using analytics [17,18] or intelligent systems [13]. Nonetheless, there is a lack of research considering the critical role of teachers’ perceptions in the adoption and success of these technologies.

This study aimed to fill this gap by investigating the usability and effectiveness of the “Compliments and Comments Tool”, which was developed with this purpose in mind and features categories specifically designed to support holistic growth and self-regulation. For this tool, implemented within the Slovenian education system, we assessed usability and impact by examining teachers’ satisfaction with it, their perceptions of its usefulness, and the frequency and appropriateness of its use in providing feedback. By addressing these aspects, this study contributes to the ongoing discourse on educational technology, emphasizing the importance of user-centered design and system usability in fostering an environment conducive to effective feedback and self-regulation among students. This focus on teachers’ perspectives and the practical use of the system aims to provide valuable insights into the design and implementation of feedback tools that support both teacher satisfaction and students’ personal and academic growth.

2. Literature Review

2.1. Technology-Enhanced Feedback and Its Impact on Students’ Self-Regulation

The rapid development and ubiquity of technological paradigms have led to an increased focus on integrating digital methods to provide feedback in educational settings [19]. Empirical research has highlighted the pedagogical effectiveness of digital text feedback, noting its superiority over traditional handwritten feedback [20]. Digital platforms enable timely and efficient delivery of feedback beyond classroom constraints, allowing it to be tailored to learners’ specific needs and preferences [18,21]. Mobile technologies have also been shown to facilitate collaborative learning environments, enhancing student interaction and engagement through shared digital platforms [22]. Because digital feedback can be systematically stored, monitored, and analyzed, student knowledge, learning strategies, skills, and behaviors can be tracked continuously over extended periods. Additionally, technological tools such as progress graphs and frequency analysis make data visualization accessible to students, teachers, and parents, thereby promoting a comprehensive understanding of student progress [23].

Recent research has emphasized the importance of feedback literacy for both teachers [24,25] and students [26,27]. For teachers, effective feedback literacy involves crafting and delivering feedback that is constructive, clear, and timely, using digital tools to enhance engagement and learning outcomes [24]. Students, on the other hand, must develop the ability to interpret and use feedback to improve their learning processes [27]. This dual literacy is essential to ensure that feedback is not only received but also understood and acted upon, leading to improved educational outcomes. However, challenges such as ensuring equitable access [28,29] and developing digital literacy skills among teachers [30,31] and students [32] must be addressed.

Research based on dual-coding theory suggests that while students have an intrinsic preference for auditory (oral) feedback, the cognitive processing required for written feedback, whether analog or digital, significantly enhances comprehension and retention [33]. The impact of written feedback is further amplified when combined with Socratic questioning and collaborative discourse, which fosters self-efficacy, self-regulation, and active learning [34]. The principle of immediacy in sharing feedback, rooted in cognitive load theory, is paramount [5,35]. However, critiques have pointed to the potential pitfalls of written feedback and emphasized the need for sustained dialogic engagement to alleviate cognitive overload and promote schema acquisition [36]. Nonetheless, researchers have found that students frequently ask questions to self-reflect on their tasks while reading written feedback, indicating self-regulatory behavior [5,33].

In classroom settings, most feedback is typically directed to the entire class and, as such, often fails to effectively engage individual students [37]. Personalized feedback, which addresses each student’s specific needs and developmental stage [38,39,40,41], particularly their zone of proximal development [42,43], is more effective in fostering learning and improvement. Perspectives on the primarily feedforward nature of feedback indicate its positive reception by students, as it significantly contributes to the self-regulation of learning [44,45]. Despite the recognized benefits, delivering individualized and valuable feedback remains a significant challenge for educators because of increasing student-to-teacher ratios and diverse academic needs. Constraints such as time and resources further intensify these challenges, making it difficult to consistently provide comprehensive and high-quality feedback [46,47].

Utilizing technology-enhanced feedback systems can significantly reduce teachers’ workload by efficiently managing comprehensive student data and implementing a structured feedback categorization system. These systems allow for the simultaneous distribution of feedback to multiple students, making it easier to provide timely and personalized responses. Additionally, the ability to track and analyze feedback over extended periods offers educators a more detailed understanding of classroom dynamics and individual student progress. This comprehensive analysis provides deeper insights into how students are advancing, both academically and in terms of self-regulation.

Research has indicated that feedback from technologically enhanced learning platforms is a critical factor in reducing dropout rates, increasing course completion rates, and improving overall learning outcomes [48]. Central to our approach is the principle of tailoring feedback to meet individual learning needs, which includes a focus on learners’ self-regulation. By providing targeted feedback, educators can more effectively support students in developing self-regulatory skills, thereby enhancing their overall learning experiences. This personalized approach ensures that feedback is not only relevant and actionable but also instrumental in fostering a more engaged and proactive approach to learning.

2.2. Overview of Technology-Enhanced Feedback Tools in Education

Feedback tools are essential in education, shaping student engagement, teacher–student interactions, and overall learning outcomes. Technology-enhanced feedback (TEF) systems have been increasingly implemented to improve feedback quality and effectiveness for students [49]. These systems use various technological tools to provide timely, personalized, and interactive feedback, which is crucial for student learning and development. The role of feedback in enhancing educational experiences and professionalizing teaching practices has been well documented. However, traditional feedback systems often fail to meet modern educational needs, prompting a shift towards technology-driven approaches [46]. Technological advancements have led to the development of diverse feedback tools, from traditional face-to-face interactions to advanced online platforms. These tools deliver immediate, specific, and actionable feedback that significantly contributes to the educational process [4,46]. The integration of technology into education has given rise to several feedback systems that utilize digital platforms to enhance communication between teachers, students, and parents [50]. The global adoption of these systems varies and is influenced by local educational policies, cultural contexts, and technological infrastructure.

TEF systems employ various tools and techniques. Classroom response systems (CRSs) are used in science instruction for technology-enhanced formative assessment (TEFA), applying principles such as question-driven instruction and dialogic discourse [51]. Video feedback screencasts are used to provide individualized feedback that students perceive favorably and that gives them better insight into the assessment process [52]. Additionally, video-enhanced mobile observation (VEO) apps are used in teacher education for peer feedback, supporting self-reflection and inquiry-based learning [53]. Despite their potential benefits, TEF systems face challenges related to ease of use, perceived usefulness, and broader socioeconomic and cultural factors that shape educational practices. The effective use of these technologies assumes a high level of digital proficiency among educators, which varies with factors such as age, gender, education, and educational institution [54]. This digital divide is a significant barrier to the widespread and effective implementation of TEF tools, limiting their accessibility and impact [55,56]. While video feedback has shown positive outcomes, offering a personalized touch and clarifying complex content [57], it requires robust technology and digital skills among educators.

Integrating technology into parent–teacher communication systems has been shown to significantly enhance parental engagement, a critical predictor of student success [58]. Enhanced engagement results from improved trust and communication, facilitated by feedback systems that use technology to connect parents and educators more effectively [59]. The triangular relationship between students, parents, and teachers ensures consistent messages and support across home and school settings, which is crucial for academic and personal development [60,61].

The rapid development of educational technology continues to transform teaching and learning practices. Recent advances in AI-driven feedback systems support student self-regulation and improve educational outcomes [62,63]. Platforms like “Tutoria” illustrate a shift towards personalized, AI-enhanced feedback systems that cater to the specific needs of both students and teachers [64]. However, concerns regarding the reliability, authenticity, ethical implications, and emotional intelligence of AI-generated feedback remain prevalent in the academic literature [65,66]. National policies and legal frameworks also play a crucial role in this domain, often acting as barriers to the adoption of AI systems in the educational sector in various countries. These innovations highlight the evolving nature of educational technology and present new opportunities for enhancing feedback processes in educational systems worldwide, but continued research is essential to understand their benefits and limitations across diverse educational systems and to integrate them fully into educational practice.

2.3. Comparative Insights from Finnish and Slovenian Technology-Enhanced Feedback Tools in Education

A notable example of a technology-enhanced feedback system integrated as a core educational component is found in the Finnish education system. Finnish schools utilize online platforms that enable teachers to provide structured feedback on student learning and behavior [67]. This system supports daily feedback practices and offers instant feedback during lessons, thereby maintaining continuity and immediacy. These practices have been documented for their high efficiency and positive impact on both teacher performance and student learning outcomes [68,69]. The use of predefined feedback options in Finland streamlines the feedback process, ensuring consistency and reducing subjective bias, although it may increase teacher workload and potentially diminish student responsibility for self-directed learning [70].

The “Compliments and Comments Tool”, whose usability has been evaluated in this research, was developed in Slovenia with insights from the Finnish system, particularly focusing on the benefits of structured feedback and integration features. However, it incorporates an even more detailed structure tailored to the local context, as research shows that teachers experience high levels of work stress due to parental interference in their work [71]. This tool was designed to enhance direct communication between teachers and parents, potentially boosting parental engagement without disrupting daily educational routines. The categories within the tool are aligned with the development of self-regulation skills and competencies required for the 21st century [72]. By refining and adapting these elements from the Finnish system, the Slovenian tool aims to improve feedback delivery efficiency and effectiveness, addressing local educational needs while leveraging global best practices. The enhancement of the Slovenian system allows for the holistic monitoring of students’ growth and development, focusing on self-regulation skills.

In the context of Slovenian primary and secondary schools, both teachers and students generally lack advanced digital competencies [73], and there are no established systems for comprehensive student monitoring. Consequently, it is crucial that technological developments are tailored to the existing skills and knowledge levels of both students and teachers. The “Compliments and Comments Tool” has been developed to include text-based feedback options, with the potential for audio and video feedback to be incorporated in the future once the necessary resources and skills are available among users and schools. This tool also provides analytics for both teachers and school administrators, offering insights at the class or school level. However, new AI technologies have not been integrated, as their inclusion requires changes in established legal frameworks.

2.4. Feedback System Usability and User-Centred Design

Usability, defined as “the extent to which a product can be used by specified users to achieve specified goals with effectiveness, efficiency, and satisfaction in a specified context of use” [74], is crucial in technology adoption. User acceptance is influenced by internal beliefs, attitudes, system design, and user involvement [75,76]. Perceived usefulness and ease of use are fundamental to positive attitudes and behavioral intention to use technology [77,78].

Various measures have been employed to assess usability, including the System Usability Scale (SUS) [79], the Software Usability Measurement Inventory (SUMI) [80], the User Experience Questionnaire (UEQ) [81], and the Technology Acceptance Model (TAM) [75,82]. Brooke created the SUS to assess perceived usability [79]. The SUS is a free psychometric tool that has gained worldwide acceptance due to its high validity and reliability and is, according to Tullis and Albert, the most widely adopted tool for usability evaluations [83].

Blažica and Lewis presented a Slovenian translation of the SUS (SUS-SI), and their results indicated that the SUS-SI has properties similar to those of the English version and can therefore be used with confidence in user research [82]. Lewis points out that usability can only be defined with reference to specific contexts, not as an absolute concept [84]. The SUS is technology-agnostic [85,86,87] and can therefore be used to evaluate various technological products, including school information systems and learning management systems.
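For reference, the standard scoring rule of Brooke's original 10-item SUS can be sketched as follows. This is a minimal sketch of the published scoring rule only; because this study used an adapted version of the SUS, its item count and wording may differ, and the function names `sus_score` and `mean_sus` are illustrative.

```python
def sus_score(responses):
    """Score one respondent's 10 SUS answers (each 1-5) on the 0-100 scale.

    Standard SUS scoring: odd-numbered items are positively worded and
    contribute (answer - 1); even-numbered items are negatively worded and
    contribute (5 - answer). The 0-40 raw sum is multiplied by 2.5.
    """
    if len(responses) != 10:
        raise ValueError("SUS expects exactly 10 item responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")
    raw = 0
    for item_number, answer in enumerate(responses, start=1):
        # Item 1 is odd (positive wording), item 2 is even (negative wording), etc.
        raw += (answer - 1) if item_number % 2 == 1 else (5 - answer)
    return raw * 2.5


def mean_sus(all_responses):
    """Average SUS score across all respondents."""
    scores = [sus_score(r) for r in all_responses]
    return sum(scores) / len(scores)


# A respondent who strongly agrees with every positive item and strongly
# disagrees with every negative item obtains the maximum score.
print(sus_score([5, 1, 5, 1, 5, 1, 5, 1, 5, 1]))  # 100.0
print(sus_score([3] * 10))  # 50.0
```

Note that a SUS score is not a percentage: a neutral answer on every item yields 50, which is why SUS results are usually interpreted against published benchmarks rather than read directly.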

Another model widely adopted in educational technology research is the Technology Acceptance Model (TAM) [88]. TAM is a holistic approach that combines several aspects of the technology adoption process by considering the characteristics of the user, system, environment, tasks, and context [89]. In TAM, external variables (e.g., technology characteristics) influence both perceived ease of use and perceived usefulness. Perceived ease of use plays a crucial role because it influences the intention to use a technology both directly and indirectly, affecting the likelihood of its use [77]. Perceived usefulness is defined as “the degree to which a person believes that using a certain system enhances his or her productivity” [90]. It is significantly influenced by perceived ease of use: the easier a technology is to use, the more useful it tends to be perceived. Higher acceptance of a product increases the likelihood that it will be used continuously in the future [91].

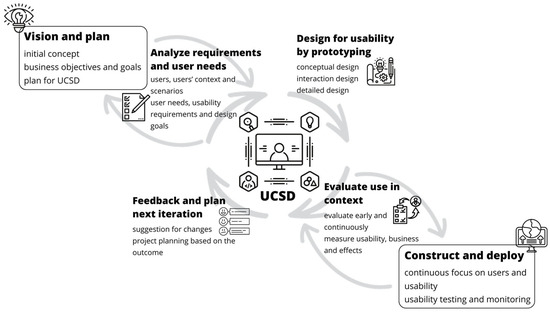

User-centered system design (UCSD) emphasizes usability throughout the system lifecycle, involving activities such as vision, planning, requirements analysis, prototyping, deployment, and evaluation (Figure 1) [92].

Figure 1.

User-centered system design (UCSD) phases.

Continuous iterations and user evaluations are key to enhancing utility and usability [93]. Involving users in the design process increases the likelihood of adoption [76]. Therefore, we included teachers as end users when developing the “Compliments and Comments Tool”.

2.5. Research Questions

Feedback has an impact when given in a timely manner and worded in a way that the learner understands. Parents, especially those of younger students, also play an important role in understanding and responding to the teacher’s feedback.

The usability and user-centered design questionnaire used in our case study to analyze user satisfaction with the functionality of the existing “Compliments and Comments Tool” covers three main areas:

- Efficiency, as reflected in the user’s ability to perform the task and the quality of the task itself or its outcome;

- Ease of use, reflected in the amount of resources used to perform the task;

- Ease of learning and satisfaction, reflected in the speed and ease of learning to work with the tool and the user’s perceived satisfaction in using the tool.

This research aims to examine teachers’ opinions on the importance and frequency of feedback and assess their satisfaction with the usability of the written feedback tool. The following research questions (RQs) and hypotheses (Hs) were proposed:

RQ1. What are the teachers’ opinions on the importance and frequency of feedback?

Constructivist and cognitive learning theories emphasize the role of frequent, personalized feedback in student learning. The objective of RQ1 was to understand how teachers perceive the significance and optimal frequency of feedback, which influences their daily interactions and feedback approaches with students and parents.

H1.

There are no gender or age group differences in teachers’ opinions on the importance and frequency of feedback given to students and sent to parents.

This hypothesis aimed to investigate the potential impact of demographic variables on teachers’ perceptions of the significance and regularity of feedback.

RQ2. How satisfied are teachers with the usability of written feedback tools?

The objective of RQ2 was to assess teachers’ satisfaction with the feedback tool, identify areas for enhancement, and understand its effectiveness within the educational environment.

H2.

Younger teachers tend to have more experience using technology, while older teachers tend to have more experience in giving feedback. Therefore, teachers’ satisfaction with the usability of the tool may differ across age groups.

The objective of H2 was to examine how a demographic factor, specifically age, influences teachers’ satisfaction with the usability of the feedback tool, and in particular the extent to which differences in technological familiarity and proficiency between younger and older teachers affect their assessment of the tool’s efficacy and user-friendliness.

H3.

The perceived usefulness of the “Compliments and Comments Tool” for promoting self-regulation and learning strategies will differ between primary and secondary school teachers, with variations in evaluations depending on the educational level at which the teacher works.

This hypothesis explores whether there are differences in teachers’ assessments of the effectiveness of the feedback tool based on the educational level they teach (primary versus secondary). It posits that the tool’s impact on self-regulation and learning strategies may be perceived differently because of distinct developmental and educational needs at these levels.

3. Materials and Methods

3.1. Research Design

This study employed a mixed-methods approach, combining quantitative and qualitative methods, to analyze teachers’ opinions on the importance and frequency of feedback and to offer comprehensive insight into teachers’ perceived usability of the TEF system “Compliments and Comments Tool”. The tool was developed to help teachers provide feedback easily and efficiently and is integrated within “e-Asistent”, the school management information system used in Slovenian schools. The research aimed to gather teachers’ perceptions of the tool, focusing on its impact on promoting self-regulation and learning strategies among students. Two separate datasets were collected using questionnaires distributed through the “e-Asistent” system.

3.2. Participants and Procedure

The participants comprised two distinct groups of teachers. The first dataset was obtained from 106 teachers at a small number of schools involved in the research and development process of “e-Asistent”. These schools were purposively selected from various regions to capture a diverse range of educational settings and perspectives. This sample included 78 women and 15 men, with the majority (61 teachers) aged between 41 and 60 years, followed by 30 teachers aged between 25 and 40 years, and one teacher over 61 years.

The second dataset included responses from 3412 primary and secondary school teachers, representing approximately 13% of all teachers in Slovenia. This broader survey was open to teachers at all schools using the “e-Asistent” system. The respondents included 2396 women, 573 men, and 443 who did not identify their gender; 1063 were secondary school teachers. In the Slovenian educational system, children aged 6–15 years are enrolled in primary school, while teens aged 15–19 attend secondary school. Regarding age distribution, 268 teachers were aged between 25 and 30 years, 899 between 31 and 40 years, 1003 between 41 and 50 years, and 806 were 51 years or older, with 436 participants not disclosing their age.
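As a quick arithmetic check, the gender and age counts reported for the second dataset each sum to the sample size of 3412 and can be converted to percentage shares. The sketch below uses only the counts stated above; the helper name `shares` is illustrative.

```python
TOTAL = 3412  # size of the second dataset

# Counts as reported for the second dataset.
gender = {"women": 2396, "men": 573, "not disclosed": 443}
age = {"25-30": 268, "31-40": 899, "41-50": 1003, "51 and older": 806, "not disclosed": 436}


def shares(counts, total=TOTAL):
    """Return each category's share of the total as a percentage rounded to one decimal."""
    if sum(counts.values()) != total:
        raise ValueError("category counts do not sum to the reported total")
    return {k: round(100 * v / total, 1) for k, v in counts.items()}


print(shares(gender))  # {'women': 70.2, 'men': 16.8, 'not disclosed': 13.0}
print(shares(age))
```

Roughly seven in ten respondents were women, and about one in eight did not disclose gender or age, which is worth keeping in mind when interpreting the group comparisons.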

3.3. Measures

To evaluate teachers’ opinions on the importance and frequency of feedback, and thus to answer RQ1 and test H1, we used a questionnaire consisting of eight questions: five closed and three open-ended.

To evaluate the usability of the “Compliments and Comments Tool” and answer RQ2, H2, and H3, this study used adapted versions of the System Usability Scale (SUS) [79] and the Technology Acceptance Model (TAM) [75,82], aligned with the ISO 9241-11 standard [74]. These instruments were adapted to the educational context and focused specifically on teachers’ use of the tool. The measures assessed the ease of use, perceived usefulness, and efficiency of the feedback tool, along with the importance of the various skills whose development the tool supports. The demographic questions covered gender, age group, and the school level at which the teacher teaches. The questionnaire consisted of nine questions: five closed questions and four questions answered on a 5-point Likert scale.

The questionnaires were developed through a multi-stage process. Initially, a pool of items was generated based on a review of the relevant literature and existing scales on teacher feedback, student monitoring, and usability. We then assembled a diverse team of experts to develop questionnaires that would allow a comprehensive and precise evaluation of the “Compliments and Comments Tool”. The team comprised academics and practitioners from relevant fields, including educational psychology, computer science, digital pedagogy, and educational technology research. An educational psychologist with over 30 years of experience guided the psychological and pedagogical validity of the questions. A professor with a Ph.D. in computer science and a specialization in digital pedagogy ensured that the technical content of the questionnaire reflected current technological developments. A Ph.D. candidate researching educational technology contributed expertise in digital learning environments to align the instrument with current educational technology practices. We also collaborated closely with a team from the “e-Asistent” company, which has been developing educational information systems for over 25 years. Their insights were crucial in refining the questionnaire items on the “Compliments and Comments Tool” so that they reflected its real-world use, and their understanding of the user experience and system functionality helped tailor the questions to probe the efficacy and reception of the tool in educational settings. This collaborative effort ensured that the questions were relevant and grounded in both theoretical and practical insights.

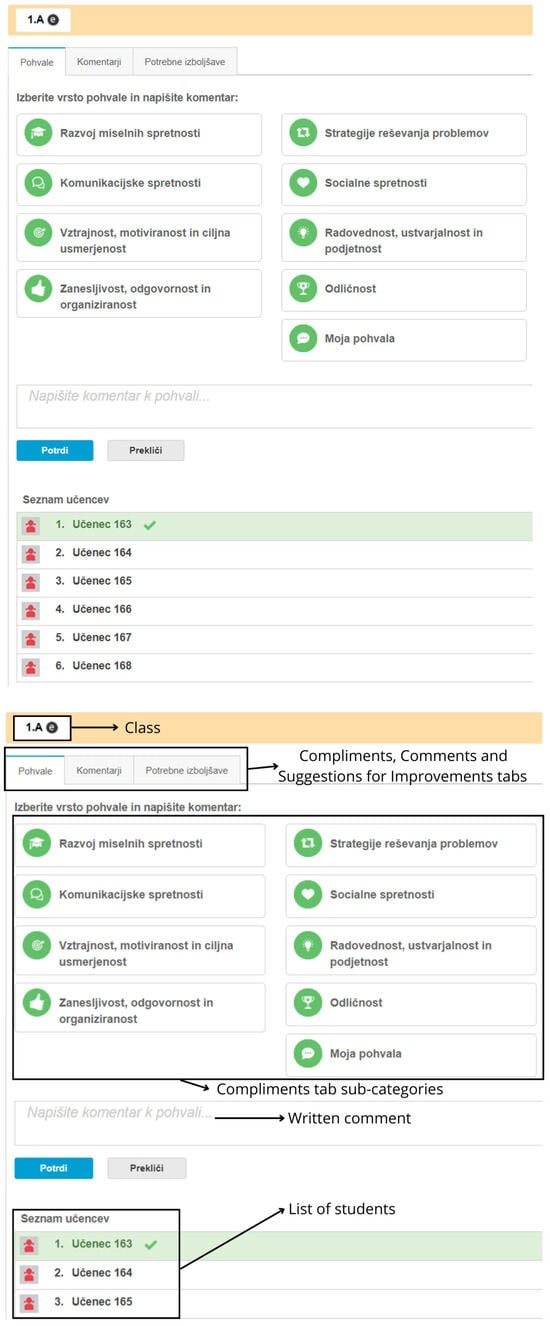

“Compliments and Comments Tool” Description

The “Compliments and Comments Tool” is a component of the “e-Asistent” information system designed for comprehensive student monitoring and feedback. The tool includes a Compliments tab, which is divided into nine sub-categories (Figure 2), listed here with their English translations:

- Razvoj miselnih spretnosti (Development of thinking skills);

- Komunikacijske spretnosti (Communication skills);

- Vztrajnost, motiviranost in ciljna usmerjenost (Perseverance, motivation, and goal orientation);

- Zanesljivost, odgovornost in organiziranost (Reliability, responsibility, and organization);

- Strategije reševanja problemov (Problem-solving strategies);

- Socialne spretnosti (Social skills);

- Radovednost, ustvarjalnost in podjetnost (Curiosity, creativity, and entrepreneurship);

- Odličnost (Excellence);

- Moja pohvala (My compliment).

Below the sub-categories, there is a text box where teachers can write specific comments related to the selected category. The list of students is shown at the bottom, allowing teachers to select a student and provide individualized feedback.

Figure 2.

Compliments tab of the tool.

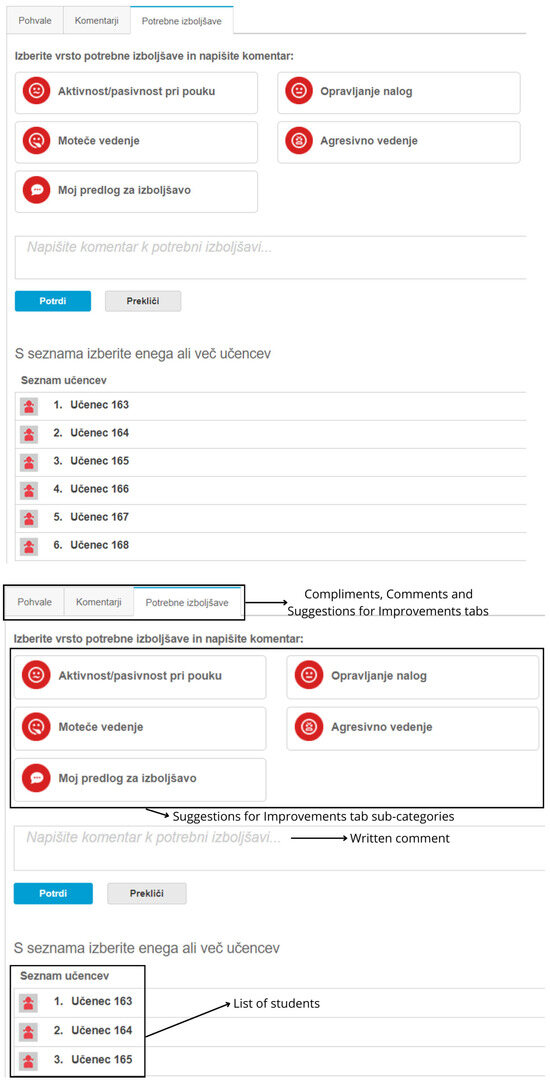

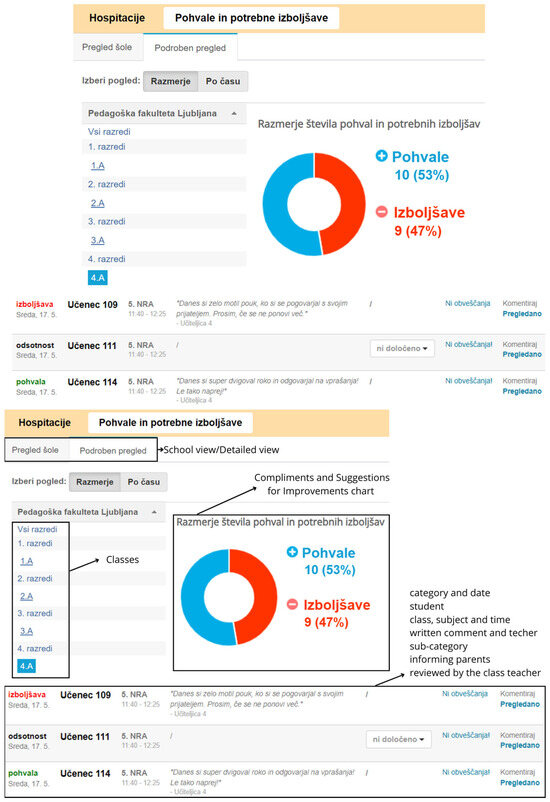

The Suggestions for Improvements tab focuses on formative feedback and includes five sub-categories (Figure 3), listed here with their English translations:

- Aktivnost/pasivnost pri pouku (Activity/passivity in class);

- Moteče vedenje (Disruptive behavior);

- Moj predlog za izboljšavo (My suggestion for improvement);

- Opravljanje nalog (Task completion);

- Agresivno vedenje (Aggressive behavior).

Below the sub-categories, the user interface follows the same structure as the Compliments tab.

The tool also offers analytics capabilities that allow teachers and school administrators to monitor overall classroom and school behavior (Figure 4 and Figure 5). Figure 4 shows the ratio of compliments to needed improvements as a pie chart, with a navigation pane on the left listing different classes and grades. At the bottom, a section provides specific examples of feedback given to individual students, including the feedback type, student ID, date, time, and teacher comment, for example, “Danes si zelo motil pouk, ko si se pogovarjal s svojim prijateljem. Prosim, če se ne ponovi več” (“Today you were very disruptive in class while talking to your friend. Please do not let it happen again”) and “Danes si super dvigoval roko in odgovarjal na vprašanja! Le tako naprej!” (“Today you raised your hand and answered questions very well! Keep it up!”). Figure 5 shows the corresponding school-level view: the school’s ratio of compliments to needed improvements as a pie chart, a trend, and, at the bottom, the latest compliments and needed improvements, structured in the same way as at the class level.

Figure 3.

Suggestions for Improvements tab of the tool.

Figure 4.

Overall view of the class (examples from the fictitious testing environment).

Figure 5.

Overall view of the school (examples from the fictitious testing environment).
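The analytics views in Figures 4 and 5 summarize individual feedback records as a ratio of compliments to needed improvements, per class or for the whole school. The Python sketch below illustrates one way such a summary could be computed. It is hypothetical: the record fields mirror the columns visible in the class view (feedback type, student ID, date and time, teacher comment), but `FeedbackType`, `FeedbackRecord`, `compliment_ratio`, the `class_name` and `category` fields, and the sample records are illustrative assumptions, not the e-Asistent data model or API.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime
from enum import Enum


class FeedbackType(Enum):
    # Hypothetical encoding of the tool's two tabs
    COMPLIMENT = "compliment"
    IMPROVEMENT = "improvement"


@dataclass(frozen=True)
class FeedbackRecord:
    # Fields mirror the columns shown in the class view; names and types
    # are illustrative assumptions, not the real e-Asistent schema.
    feedback_type: FeedbackType
    student_id: str
    class_name: str
    timestamp: datetime
    category: str
    comment: str


def compliment_ratio(records, class_name=None):
    """Share of compliments vs. needed improvements.

    With class_name, only that class is counted (class view);
    without it, all records are counted (school view).
    """
    counts = Counter(
        r.feedback_type
        for r in records
        if class_name is None or r.class_name == class_name
    )
    total = sum(counts.values())
    if total == 0:
        return {t: 0.0 for t in FeedbackType}
    return {t: counts[t] / total for t in FeedbackType}


# Hypothetical sample records using the two example comments from Figure 4
sample = [
    FeedbackRecord(
        FeedbackType.IMPROVEMENT, "S01", "7a", datetime(2024, 3, 4, 10, 15),
        "Disruptive behavior",
        "Today you were very disruptive in class while talking to your friend. "
        "Please do not let it happen again",
    ),
    FeedbackRecord(
        FeedbackType.COMPLIMENT, "S02", "7a", datetime(2024, 3, 4, 11, 5),
        "My compliment",
        "Today you raised your hand and answered questions very well! Keep it up!",
    ),
]
print(compliment_ratio(sample, "7a"))
```

Filtering by `class_name` corresponds to the class view; passing no class gives the school-level ratio.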

3.4. Data Analyses

The data analysis aimed to address the research questions (RQ1 and RQ2) and hypotheses (H1, H2, H3) posed in this study, focusing on teachers’ opinions on the importance and frequency of feedback, as well as the usability of the “Compliments and Comments Tool”.

A mixed-methods approach was used to answer RQ1 and test H1. The quantitative analysis involved calculating means, frequencies, and percentages to summarize the participants’ responses. Because the Shapiro–Wilk test indicated that the data were not normally distributed, non-parametric statistical methods were used. Specifically, the Mann–Whitney U test (equivalently, the Wilcoxon rank-sum test) was used to compare responses between genders, and the Kruskal–Wallis H test was used to compare responses between age groups. Effect sizes (Cohen’s d) were calculated to assess the magnitude of the differences. The qualitative data were analyzed using thematic analysis: open-ended responses were processed and categorized into themes in Microsoft Excel to provide a detailed understanding of teachers’ perceptions of feedback practices.
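The gender comparison can be illustrated with a short, dependency-free Python sketch. The Mann–Whitney U test and the Wilcoxon rank-sum test are the same procedure expressed through different statistics (U = W − n₁(n₁ + 1)/2, where W is the rank sum of the first group), so they yield the same p-value. The sketch assumes a two-sided test using the tie-corrected normal approximation without continuity correction, which is reasonable for large samples such as this one. It is not the software used in the study, and the function names are illustrative. Cohen’s d is computed from raw scores with a pooled standard deviation.

```python
import math
from collections import Counter
from statistics import mean, stdev


def average_ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def mann_whitney_u(x, y):
    """Two-sided Mann–Whitney U test (normal approximation, tie-corrected).

    Returns (U for the first group, two-sided p-value). The Wilcoxon
    rank-sum statistic W is the rank sum of x, so U = W - n1(n1 + 1)/2.
    """
    n1, n2 = len(x), len(y)
    pooled = list(x) + list(y)
    n = n1 + n2
    w = sum(average_ranks(pooled)[:n1])  # Wilcoxon rank-sum statistic
    u = w - n1 * (n1 + 1) / 2
    # Variance of U, reduced for ties (very common with Likert-type answers)
    ties = sum(t ** 3 - t for t in Counter(pooled).values())
    var_u = n1 * n2 / 12 * ((n + 1) - ties / (n * (n - 1)))
    z = (u - n1 * n2 / 2) / math.sqrt(var_u)
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return u, p


def cohens_d(x, y):
    """Cohen's d using a pooled (n - 1) standard deviation."""
    n1, n2 = len(x), len(y)
    pooled_var = ((n1 - 1) * stdev(x) ** 2 + (n2 - 1) * stdev(y) ** 2) / (n1 + n2 - 2)
    return (mean(x) - mean(y)) / math.sqrt(pooled_var)
```

Passing the female and male teachers’ answers to one question as `x` and `y` gives U, its p-value, and d for that question.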

For RQ2 and the associated hypotheses (H2 and H3), descriptive and inferential statistical techniques were applied. Descriptive statistics, including means, frequencies, and percentages, were used to summarize responses regarding the usability of the tool. The distribution characteristics of the data were examined using skewness and kurtosis values to understand overall response tendencies. The Mann–Whitney U and Kruskal–Wallis H tests were applied to explore variations in perceptions of the tool across different demographic groups, including age and school type (primary vs. secondary). Item difficulty and discrimination analyses were conducted to evaluate the tool’s effectiveness. Item difficulty was assessed by calculating the proportion of respondents who rated items positively (scores of 4 or 5). Item discrimination was measured by correlating individual item scores with total scores to determine the degree to which items differentiated between satisfied and less-satisfied users.
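The two item statistics described above can be sketched directly. Item difficulty is the share of ratings of 4 or 5, and item discrimination is the Pearson correlation between an item and the total score. The text does not say whether the item was excluded from the total, so the sketch defaults to the uncorrected item-total correlation and offers the corrected variant as an option; that choice, and the function names, are assumptions.

```python
import math
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def item_difficulty(scores, positive=(4, 5)):
    """Proportion of respondents giving a positive rating (4 or 5)."""
    return sum(s in positive for s in scores) / len(scores)


def item_discrimination(responses, item, corrected=False):
    """Correlation between one item and the total score.

    responses: list of respondents, each a list of item scores.
    corrected=True (an assumed option) removes the item from the total.
    """
    item_scores = [row[item] for row in responses]
    totals = [sum(row) - (row[item] if corrected else 0) for row in responses]
    return pearson_r(item_scores, totals)
```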

Factor analysis was conducted to test the construct validity of the usability questionnaire. The Kaiser–Meyer–Olkin (KMO) measure of sampling adequacy was 0.943, indicating that the data were well suited for factor analysis. Bartlett’s Test of Sphericity was significant (χ2 = 87,571.99, df = 703, p < 0.001), confirming that the correlation matrix differed from an identity matrix and was therefore suitable for this analysis. Principal component analysis revealed seven factors with eigenvalues greater than 1, explaining 70.75% of the total variance, with the first factor accounting for 21.18% of the variance. The subsequent factors explained 16.70% and 2.85% of the variance. The communalities were all above 0.5, confirming that each item shared a substantial proportion of its variance with the other items. Factor analysis revealed a coherent structure with seven factors representing different dimensions of teachers’ experiences and perceptions of using the technology-enhanced feedback tool. These factors include ease of use, importance of skills development, usefulness and efficiency, behavioral observations, school type, age, and education, as well as teaching experience and dealing with student behavior, which supports the validity of the questionnaire.
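Bartlett’s test compares the item correlation matrix R with an identity matrix using χ² = −(n − 1 − (2p + 5)/6) ln|R|, with df = p(p − 1)/2 for p variables and n respondents. Inverting the df formula shows that the reported df = 703 corresponds to p = 38 variables entering the analysis; this is an arithmetic consequence of the formula, not a figure stated in the text. The Python sketch below computes the statistic from a correlation matrix. It is an illustration, not the software used in the study, and it works with the log-determinant because |R| for dozens of correlated items can underflow.

```python
import math


def log_det_pd(matrix):
    """Log-determinant of a positive-definite matrix via Gaussian elimination."""
    a = [row[:] for row in matrix]
    n = len(a)
    log_det = 0.0
    for col in range(n):
        # Partial pivoting for stability; row swaps only flip the sign of the
        # determinant, which is positive for a positive-definite matrix anyway.
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        log_det += math.log(abs(a[col][col]))
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
    return log_det


def bartlett_sphericity(corr, n_obs):
    """Bartlett's test of sphericity: returns (chi-square, df)."""
    p = len(corr)
    chi2 = -(n_obs - 1 - (2 * p + 5) / 6) * log_det_pd(corr)
    return chi2, p * (p - 1) // 2


def items_from_df(df):
    """Invert df = p(p - 1)/2 to recover the number of variables p."""
    p = (1 + math.isqrt(1 + 8 * df)) // 2
    if p * (p - 1) // 2 != df:
        raise ValueError("df is not of the form p(p - 1)/2")
    return p


print(items_from_df(703))  # -> 38
```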

The internal consistency and reliability of the questionnaire items were confirmed using Cronbach’s alpha, which indicated high internal consistency (α = 0.93 for the first questionnaire and α = 0.96 for the Likert-type scales measuring the tool’s usefulness in the second questionnaire).
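For reference, Cronbach’s alpha for k items is α = k/(k − 1) × (1 − Σσᵢ²/σ²_total), where σᵢ² are the item variances and σ²_total is the variance of respondents’ total scores. A minimal Python sketch, assuming a complete respondents-by-items matrix with no missing answers (the function name is illustrative):

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a respondents-by-items score matrix.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    The same variance divisor is used throughout, so it cancels in the ratio.
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    item_vars = [variance([row[j] for row in responses]) for j in range(k)]
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)
```

Values close to 1, such as the 0.93 and 0.96 reported above, mean that the items’ variances are largely shared rather than item-specific.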

To ensure instrument validity, the questions and scales were carefully aligned with the research objectives. Triangulation was employed by collecting data from a diverse range of schools, considering factors such as the school level and location. Objectivity was maintained by providing standardized instructions and ensuring consistent conditions for completing the questionnaires.

Sensitivity in the data was addressed using 5-point Likert-type scales in the usability questionnaire, allowing for nuanced responses. Appropriate response specifications for the closed questions in both questionnaires ensured the collection of precise and relevant data.

4. Results

4.1. Teachers’ Opinions on the Importance and Frequency of Feedback

This section addresses Research Question 1 (RQ1) and Hypothesis 1 (H1), focusing on teachers’ views of the importance and frequency of providing feedback to parents and students. Data were collected using five closed and three open-ended questions.

- Importance of sharing feedback with parents

The vast majority (93.6%) of teachers agreed that providing feedback to parents is crucial for fostering a stimulating learning environment.

Open-ended responses, categorized into three themes, revealed that 59.0% of teachers believed regular feedback was essential for informing parents about their child’s progress and behavior, facilitating cooperation among teachers, parents, and students. Additionally, 20.5% stressed the importance of timely parental intervention, while 20.5% felt that direct communication or student responsibility for relaying feedback was preferable (Table 1).

Table 1.

Content response categories of open-ended teacher responses on why it is important to provide feedback to parents.

- Frequency of feedback

Regarding feedback frequency, 76.6% of the teachers favored weekly over daily updates, reasoning that weekly feedback allows timely intervention without overwhelming parents.

The qualitative responses highlighted that 39.4% viewed weekly feedback as sufficient for tracking student behavior and academic progress. In contrast, 22.7% supported daily feedback for immediacy, while 21.2% argued that students were responsible for communicating with their parents (Table 2).

Table 2.

Content response categories of open-ended teacher responses on weekly versus daily feedback.

- Preferred timing for recording feedback

The most frequently preferred times for providing feedback were during or immediately after lessons (30.4%) and at the end of the workday (28.3%). Together, these options were chosen by 58.7% of teachers, suggesting a preference for prompt feedback (Table 3).

Table 3.

Teachers’ opinions on when it would be easiest for them to write feedback.

- Frequency of recording feedback

In terms of recording feedback, 64.8% of the teachers advocated for daily or continuous documentation, underscoring the importance of detailed records (Table 4).

Table 4.

Teachers’ views on how often feedback should be recorded.

4.2. Differences between Genders and Age Groups in Teachers’ Opinions about the Importance and Frequency of Providing Feedback

In H1, we hypothesized that there would be no differences between genders and age groups in teachers’ opinions about the importance and frequency of providing feedback to students and sending it to parents.

The Shapiro–Wilk test revealed that none of the question responses followed a normal distribution (p < 0.05), justifying the use of non-parametric tests for subsequent analyses.

The Mann–Whitney U test (equivalent to the Wilcoxon rank-sum test) was used to compare responses between male and female teachers. No significant differences were found for any question (p > 0.05) except the question on weekly versus daily feedback, where the difference was nominally significant (p = 0.041) but did not remain significant after correction for multiple comparisons. Effect sizes (Cohen’s d) were negligible for all questions except the question on the most appropriate time to write feedback (Cohen’s d = −0.44). However, this difference was not statistically significant, and an effect of this size is small to moderate, suggesting minimal practical significance.
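The section does not state which multiple-comparison correction was used. As one illustration, the Holm–Bonferroni procedure below compares the smallest p-value with α/m, the next with α/(m − 1), and so on, stopping at the first non-rejection. Whenever m ≥ 2, the first threshold is at most 0.025, so a smallest p-value of 0.041 is not rejected. Treating the five closed questions as the family of tests is an assumption, and the other p-values in the example are placeholders, not study results.

```python
def holm_reject(p_values, alpha=0.05):
    """Holm–Bonferroni step-down procedure; returns a reject flag per test."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    reject = [False] * m
    for rank, i in enumerate(order):
        if p_values[i] <= alpha / (m - rank):
            reject[i] = True
        else:
            break  # once one test is retained, all larger p-values are too
    return reject


# Placeholder family of five tests (assumed); only 0.041 comes from the text
print(holm_reject([0.041, 0.32, 0.47, 0.58, 0.91]))
```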

The Kruskal–Wallis H test was used to examine the differences in responses across various age groups. The analysis revealed no statistically significant differences for any question (p > 0.05), indicating that age did not significantly influence teachers’ opinions regarding the importance and frequency of feedback.

The data suggest that teachers, regardless of gender or age, generally agree on the importance of feedback and hold similar opinions regarding its frequency. Therefore, the findings support H1, indicating that demographic variables such as gender and age do not significantly affect teachers’ views of feedback practices. The results are, however, based on teachers’ self-reported opinions and may be subject to individual bias or contextual factors. Nevertheless, they provide insights into teachers’ perspectives on feedback and can inform educational practices and policies related to parent–teacher communication and student self-regulation.

4.3. Usability of the Technology-Enhanced Feedback Provision Tool

The usability of the “Compliments and Comments Tool” was evaluated on the basis of three key aspects: effectiveness, ease of use, and ease of learning and satisfaction. The following results address Research Question 2 (RQ2), which explores the extent of teachers’ satisfaction with the tool’s functionality.

4.3.1. Teachers’ Opinions of the Effectiveness of the Compliments and Comments Tool

Teachers evaluated the effectiveness of the tool based on six statements using a 5-point Likert scale. The responses are summarized in Table 5.

Table 5.

Teachers’ opinions on the effectiveness of the “Compliments and Comments Tool”.

Frequency analysis showed that most teachers rated the effectiveness of the tool positively, with average scores ranging from 3.3 to 3.9 out of 5. The distributions were left-skewed (skewness values ranging from −0.3 to −0.8), indicating a tendency towards higher ratings. Kurtosis values close to zero indicate distributions that were neither sharply peaked nor unusually flat, with responses spread across the scale and modes typically at 3 or 4. Overall, this points to a generally favorable reception of the tool among teachers, despite some ambivalence regarding its efficiency and time-saving capabilities.
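To make the distribution terms concrete: skewness is the standardized third moment (negative when the tail extends toward low ratings), and kurtosis is usually reported as excess kurtosis, the standardized fourth moment minus 3, so a normal distribution scores about 0 on both. The Python sketch computes the moment-based estimates and the bias-adjusted versions reported by, for example, Excel’s SKEW and KURT functions. The text does not say which variant the study used, so treat this as an illustration.

```python
import math
from statistics import fmean


def _central_moments(xs):
    """Sample size and 2nd, 3rd, 4th central moments (divisor n)."""
    m = fmean(xs)
    n = len(xs)
    m2 = sum((x - m) ** 2 for x in xs) / n
    m3 = sum((x - m) ** 3 for x in xs) / n
    m4 = sum((x - m) ** 4 for x in xs) / n
    return n, m2, m3, m4


def skewness(xs, adjusted=True):
    """g1 = m3 / m2^1.5; adjusted G1 needs n > 2."""
    n, m2, m3, _ = _central_moments(xs)
    g1 = m3 / m2 ** 1.5
    if not adjusted:
        return g1
    return math.sqrt(n * (n - 1)) / (n - 2) * g1


def excess_kurtosis(xs, adjusted=True):
    """g2 = m4 / m2^2 - 3; adjusted G2 needs n > 3."""
    n, m2, _, m4 = _central_moments(xs)
    g2 = m4 / m2 ** 2 - 3
    if not adjusted:
        return g2
    return (n - 1) / ((n - 2) * (n - 3)) * ((n + 1) * g2 + 6)
```

A cluster of 5s with a few low ratings, as in `[1, 4, 5, 5, 5]`, yields negative skewness, the left skew described above.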

Item difficulty and discrimination analyses were conducted to further evaluate the effectiveness of the tool. Item difficulty analysis revealed that the proportion of respondents rating the items as positive (4 or 5) ranged from 61.28% (“Allows giving feedback to students and parents”) to 74.85% (“Saves time”). This indicates a generally high level of satisfaction with the tool’s effectiveness.

Item discrimination, measured by the correlation between individual item scores and the total score, demonstrated a strong differentiation between satisfied and less satisfied users. Discrimination indices ranged from 0.743 (“Saves time”) to 0.879 (“Helps me to be more efficient”), with the highest values indicating that these items effectively distinguished between respondents with differing overall levels of satisfaction.

These analyses confirm a broadly positive perception of the tool’s effectiveness among teachers, highlighting strengths such as perceived usefulness and efficiency. However, they also suggest potential areas for improvement, particularly the tool’s time-saving capabilities: “Saves time” had the lowest discrimination index (0.743), and the frequency analysis showed some ambivalence about efficiency and time saving.

4.3.2. Teachers’ Opinion on the Ease of Use of the Compliments and Comments Tool

The usability of the “Compliments and Comments Tool” was further evaluated based on ease of use, which is critical given the limited classroom time during which teachers must provide feedback. Teachers’ responses were collected on a 5-point scale, assessing various ease-of-use aspects, including simplicity, user-friendliness, and the need for instructions (Table 6).

Table 6.

Teachers’ opinions on the ease of use of the “Compliments and Comments Tool”.

Users adopt a tool more quickly and easily when it is easy to use and can be applied immediately, without written instructions or a lengthy learning curve. User acceptance is also influenced by the functionality and design of the user interface, which should be clear and understandable so that users can perform tasks quickly and efficiently. The interface should also minimize errors caused by misunderstanding or confusion. In software design, an application or user interface with these qualities is described as “user-friendly” [94]. It is also crucial that users can easily correct their own mistakes.

Frequency analysis revealed that most teachers rated the ease of use of the “Compliments and Comments Tool” positively, with average scores ranging from 3.7 to 4.1 out of 5, although some teachers noted a need for more flexibility in feedback options. The distributions were left-skewed (skewness values ranging from −0.6 to −1.1), indicating a tendency towards higher ratings. Kurtosis values close to zero or negative suggest a diverse range of responses, with modes typically at 4 or 5, underscoring a generally favorable reception of the tool’s ease of use among teachers (Table 6).

To further assess the ease of use of the tool, item difficulty and discrimination analyses were performed. Item difficulty analysis showed that the proportion of respondents who rated the items as positive (4 or 5) ranged from 61.3% (“It allows user flexibility”) to 74.9% (“I do not need written instructions to use it”). This indicates a generally high level of satisfaction with the ease of use of the tool. Item discrimination, measured by the correlation between individual item scores and the total score, demonstrated a strong differentiation between more and less satisfied users. Discrimination indices ranged from 0.752 (“I do not need written instructions to use it”) to 0.877 (“It is user-friendly”), with the highest values indicating that these items effectively distinguished between respondents with differing overall perceptions of ease of use.

These analyses confirm a broadly positive perception of the tool’s ease of use among teachers, highlighting strengths such as its perceived ease of use and minimal need for instruction. However, the analysis also suggested areas for improvement, particularly in aspects related to user flexibility and the ability to correct errors, as indicated by the relatively lower item difficulty and discrimination scores in these areas.

4.3.3. Teachers’ Opinion on Ease of Learning to Use and Satisfaction with the Compliments and Comments Tool

For new technologies to be successfully adopted in educational settings, they must be easy to learn and satisfy user expectations. The ease of learning a new tool is critical as it influences whether teachers will continue using it and recommend it to others. The “Compliments and Comments Tool” was evaluated based on these criteria using a 5-point Likert scale (Table 7).

Table 7.

Teachers’ opinions on the ease of learning to use and satisfaction with the “Compliments and Comments Tool”.

Frequency analysis revealed that most teachers rated the ease of learning and satisfaction with the “Compliments and Comments Tool” positively, with average scores ranging from 3.8 to 4.2 out of 5. The data exhibited a left-skewed distribution, as evidenced by the negative skewness values, suggesting a tendency towards higher ratings. The kurtosis values, varying across items (from −0.081 to 1.616), indicated distributions with sharper peaks for some items (e.g., “I quickly learned to use the tool” and “I can remember how to use the tool”) and more normal distributions for others (e.g., satisfaction and recommendation likelihood). This indicates a generally favorable perception among teachers regarding the tool’s ease of learning and overall satisfaction.

Item difficulty and discrimination analyses were conducted to further assess the tool’s ease of learning and satisfaction. Item difficulty analysis showed that the proportion of respondents rating the items as positive (4 or 5) ranged from 64.5% (“I would recommend the tool to a colleague teacher”) to 80.4% (“I can remember how to use the tool”). This finding suggests a high level of satisfaction with these aspects of the tool. Item discrimination, measured by the correlation between individual item scores and the total score, demonstrated a strong differentiation between more and less satisfied users. Discrimination indices ranged from 0.645 (“I would recommend the tool to a colleague teacher”) to 0.840 (“I am satisfied with the tool”), indicating that these items effectively distinguished between respondents with varying levels of satisfaction.

These analyses confirm a broadly positive perception of the tool’s ease of learning and user satisfaction among teachers, highlighting strengths such as the short learning curve (“I quickly learned to use the tool”) and the ease of remembering how to use the tool. However, the analysis also suggests areas for improvement, particularly overall satisfaction and the likelihood of recommending the tool; the recommendation item had both the lowest item difficulty (64.5%) and the lowest discrimination index (0.645).

Overall, the analyses of the usability of the tool across these three dimensions (effectiveness, ease of use, and ease of learning and satisfaction) confirm a generally positive perception of the “Compliments and Comments Tool” among teachers. The tool is well received for its usability and functionality, although efficiency, user flexibility, and overall user satisfaction present opportunities for further refinement.

4.4. Statistically Significant Differences in Opinions on the Usefulness of the Tool by Participants’ Age Group and Type of School (Primary/Secondary)

We hypothesized that there would be differences in system usability satisfaction among teachers based on their age group (H2) and type of school (primary or secondary) (H3).

4.4.1. Statistically Significant Differences between the Age Groups

Hypothesis H2 sought to explore the impact of different age groups on teachers’ evaluations of the usability of a feedback tool in promoting self-regulation and learning strategies. A Kruskal–Wallis H test was conducted to determine whether there were differences in perceptions of the “Compliments and Comments Tool” between different age groups (20–30 years, 31–40 years, 41–50 years, and 51 years and older) across various variables.
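Because the age-group results are reported as mean ranks, it may help to see how they arise: all responses are pooled and ranked (tied answers receive the average of their positions), mean ranks are computed per group, and H measures how far the group rank sums depart from what identical distributions would produce. With 5-point items most responses are tied, so the tie correction matters. A higher mean rank means that a group’s answers sit higher in the pooled ranking, that is, stronger agreement, assuming higher scale values denote agreement. A minimal Python sketch with illustrative function names:

```python
from collections import Counter


def average_ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def kruskal_wallis(groups):
    """Tie-corrected Kruskal–Wallis H and the mean rank of each group."""
    pooled = [v for g in groups for v in g]
    n = len(pooled)
    ranks = average_ranks(pooled)
    mean_ranks, weighted_sq, start = [], 0.0, 0
    for g in groups:
        r_sum = sum(ranks[start:start + len(g)])
        start += len(g)
        mean_ranks.append(r_sum / len(g))
        weighted_sq += r_sum ** 2 / len(g)
    h = 12 / (n * (n + 1)) * weighted_sq - 3 * (n + 1)
    # Tie correction: divide by 1 - sum(t^3 - t) / (n^3 - n)
    ties = sum(t ** 3 - t for t in Counter(pooled).values())
    return h / (1 - ties / (n ** 3 - n)), mean_ranks
```

Calling `kruskal_wallis` with one list of scores per age group returns H and the four mean ranks, the quantities reported in Tables 8 to 10.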

- Differences between the age groups and opinions on the effectiveness of the tool

Statistically significant differences between age groups were found for several aspects of the tool’s effectiveness.

Regarding the general perception of the usefulness of the tool and its capability to facilitate feedback to students and parents, there were no statistically significant differences among the age groups (p > 0.05). This indicates that teachers across all age groups generally perceived the tool as equally useful and effective in providing feedback. However, significant differences were observed in the following areas:

- Effectiveness in enhancing efficiency: The test showed a statistically significant difference, with a Kruskal–Wallis H value of 8.373 and a p-value of 0.039 (Table 8). This suggests that age groups perceive the tool’s efficiency benefits differently. Mean ranks increased with age: teachers aged 20–30 years had the lowest mean rank (1404.02), followed by those aged 31–40 years (1445.25), 41–50 years (1506.57), and 51 years and older (1536.82).

Table 8. Kruskal–Wallis H test results of differences between age groups and opinions on the effectiveness of the tool.

- Providing classroom overview: There was a statistically significant difference in perceptions of the tool providing better classroom oversight, with a Kruskal–Wallis H value of 8.045 and a p-value of 0.045 (Table 8). This indicates that the perceived value of the tool’s insights into classroom activities differs across age groups. Mean ranks again increased with age, from teachers aged 20–30 years (1377.37) to those aged 31–40 years (1461.60), 41–50 years (1516.85), and 51 years and older (1520.18), although the two oldest groups differed only slightly.

- Time saving: The tool’s ability to save teachers’ time showed a significant difference among age groups, with a Kruskal–Wallis H value of 13.817 and a p-value of 0.003 (Table 8). This implies that some age groups perceive the tool as more effective in saving time than others do. Teachers aged 31–40 years (mean rank 1439.28), 41–50 years (mean rank 1523.73), and 51 years and older (mean rank 1540.52) reported higher mean ranks than those aged 20–30 years (mean rank 1365.31).

- Meeting expectations: The perception of the tool enabling teachers to accomplish their expected tasks also showed a statistically significant difference, with a Kruskal–Wallis H value of 13.520 and a p-value of 0.004 (Table 8). This finding suggests variability in how different age groups perceive the tool’s ability to meet their expectations. Teachers aged 31–40 years (mean rank 1473.14), 41–50 years (mean rank 1502.99), and 51 years and older (mean rank 1540.30) reported higher mean ranks than those aged 20–30 years (mean rank 1330.02).
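With four age groups, H is referred to a chi-square distribution with k − 1 = 3 degrees of freedom. For df = 3 the upper-tail probability has a closed form, P(X > x) = erfc(√(x/2)) + √(2x/π)·e^(−x/2), so the reported H and p pairs can be cross-checked without a statistics library. The Python sketch assumes the usual asymptotic chi-square approximation; under it, the recomputed p-values match those reported above to three decimal places.

```python
import math


def chi2_sf_df3(x):
    """Upper-tail probability of a chi-square variable with 3 df.

    Closed form: P(X > x) = erfc(sqrt(x/2)) + sqrt(2x/pi) * exp(-x/2).
    """
    return math.erfc(math.sqrt(x / 2)) + math.sqrt(2 * x / math.pi) * math.exp(-x / 2)


# (H, reported p) for the effectiveness items in Table 8, as given in the text
reported = {
    "Enhancing efficiency": (8.373, 0.039),
    "Classroom overview": (8.045, 0.045),
    "Time saving": (13.817, 0.003),
    "Meeting expectations": (13.520, 0.004),
}

for item, (h, p_reported) in reported.items():
    print(f"{item}: H = {h}, recomputed p = {chi2_sf_df3(h):.3f}, reported p = {p_reported}")
```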

The results revealed that age group was significantly associated with perceptions of the feedback tool in terms of enhancing efficiency, providing classroom oversight, saving time, and meeting expectations. For all four aspects, the youngest teachers (20–30 years) had the lowest mean ranks. For enhancing efficiency and providing classroom oversight, mean ranks rose steadily with age, with the highest values among teachers aged 51 years and older. Teachers aged 31–40 years, 41–50 years, and 51 years and older also perceived the tool as more effective in saving time and meeting their expectations than teachers aged 20–30 years did.

These findings highlight the importance of considering age-related differences when implementing and training teachers in the use of feedback tools. Tailored support and training programs that address the specific needs of younger teachers (20–30 years old) may help enhance their perceptions and utilization of the tool, ultimately leading to more effective and efficient feedback processes across all age groups.

- Differences between the age groups and opinions on the ease of use of the tool

Statistically significant differences between age groups were also found for one aspect of the tool’s ease of use.

The Kruskal–Wallis H test results indicate that perceptions of the tool’s ease of use, user-friendliness, required steps for entry, flexibility, consistency, and ease of correcting errors did not differ significantly among age groups. However, there was a statistically significant difference among age groups in the perception that the tool can be used without written instructions, with a Kruskal–Wallis H value of 21.413 and a p-value < 0.001 (Table 9). Younger teachers aged 20–30 years (mean rank 1557.85) and those aged 31–40 years (mean rank 1552.24) found the tool easier to use without written instructions than older age groups did. This suggests that older teachers, particularly those with less experience of digital technology, may need written guidance on the technical use of the tool.

Table 9.

Kruskal–Wallis H test results of differences between age groups and opinions on the ease of use of the tool.

These findings highlight the importance of addressing the specific needs of different age groups, particularly in providing adequate instruction and support to ensure that all teachers can use the tool effectively.

- Differences between the age groups and opinions on the ease of learning and satisfaction with the tool

Statistically significant differences were also found between the age groups of the participants and their opinions on the ease of learning and satisfaction with the tool.

The Kruskal–Wallis H test results indicated significant differences in perceptions of the tool’s ease of use and user-friendliness among different age groups of teachers. However, there were no significant differences in perceptions of the number of steps required to make an entry or the flexibility provided by the tool.

There was a statistically significant difference among age groups in perceptions of the tool being easy to use, with a Kruskal–Wallis H value of 35.702 and a p-value < 0.001. Younger teachers aged 20–30 years and 31–40 years (mean ranks 1559.09 and 1518.89, respectively) found the tool easier to use than teachers aged 51 years and older, suggesting that younger teachers adapt to the tool more readily than their older counterparts. There was also a statistically significant difference among age groups in perceptions of the tool being user-friendly, with a Kruskal–Wallis H value of 27.529 and a p-value < 0.001. Teachers aged 20–30 years and 31–40 years perceived the tool to be more user-friendly than those aged 51 years and older (Table 10).

Table 10.

Kruskal–Wallis H test results of differences between age groups and opinions on the ease of learning and satisfaction with the tool.

4.4.2. Statistically Significant Differences between Educational Levels

Hypothesis H3 sought to explore the impact of educational level (primary versus secondary school) on teachers’ evaluations of the usability of a feedback tool in promoting self-regulation and learning strategies. The aim was to analyze variations in the usability of the feedback tool between primary and secondary education levels, acknowledging potential differences in the emphasis placed on self-regulatory skills and learning strategies at these levels.

The Mann–Whitney U test was conducted to compare the perceptions of primary and secondary school teachers regarding the usability of the tool. The results showed no statistically significant differences between the two groups for any of the measured variables (p > 0.05). Teachers from both the primary and secondary schools provided similar evaluations of the tool’s effectiveness, ease of use, and overall satisfaction. This implies that the tool is perceived similarly by teachers across different school types, which could mean that training and support efforts do not need to be differentiated based on school type (primary vs. secondary). We thus reject hypothesis H3.

Taken together, the results in Section 4.4 show that differences in perceptions of the tool were associated with age rather than with school type. Tailored training and support for older teachers, such as written guidance, can help bridge the gap in ease of use and satisfaction, while younger teachers may benefit from support that highlights the tool’s efficiency and time-saving potential.

5. Discussion

The integration of technology-enhanced feedback (TEF) systems into education has shown promising potential for improving teaching effectiveness and student outcomes. This study investigated teachers’ views on the importance and frequency of feedback and the usability of the “Compliments and Comments Tool”, focusing on teachers’ satisfaction and their perceptions of its impact on student self-regulation. This tool was designed to facilitate written feedback for students in the Slovenian education context. Our findings provide valuable insights into the significance of feedback in the educational process and underscore the necessity of user-centered design in educational technology.

5.1. Importance and Frequency of the Feedback Provided to Students

The surveyed teachers overwhelmingly recognized the critical role of feedback in fostering a stimulating learning environment and supporting student academic success [4,95,96]. Continuous feedback significantly enhances student performance, motivation, and self-regulation [2,24,70,97]. In digital learning environments, timely and positive feedback is particularly influential as it helps students adjust their learning strategies and enhance their self-efficacy [98]. Gambari et al. [2] found that consistent feedback correlates with improved academic performance and engagement, underscoring the need for effective feedback mechanisms in educational technology.

Regular feedback also keeps parents informed about their child’s progress and behavior, enabling timely intervention and problem-solving. This finding aligns with research indicating that effective communication among teachers, students, and parents is essential for academic and social success [99]. Face-to-face communication often faces practical and logistical constraints, making digital feedback systems a valuable alternative [100]. These systems allow teachers to efficiently provide detailed, personalized feedback [101,102]. Kounin’s research on classroom management shows that immediate, clear communication about student behavior can prevent disruptive behavior and foster a positive learning environment [103].

The surveyed teachers preferred weekly over daily feedback, balancing the need for timely updates against the risk of overwhelming parents. Weekly feedback is generally sufficient for monitoring student behavior and academic progress, whereas daily feedback is reserved for immediate issues [104,105,106,107,108]. Older teachers particularly favor less frequent notifications, relying more on students’ self-reporting and being more comfortable with fewer digital interactions [109]. This preference aligns with studies suggesting that experienced teachers prefer less frequent but more substantial feedback sessions, fostering greater student independence and self-regulation [110,111].

Despite these benefits, there are still areas for improvement. Some teachers struggle to balance the timeliness and quality of feedback, which points to a need for more efficient delivery that does not sacrifice depth or personalization. Additionally, predefined feedback categories may limit flexibility and prevent teachers from effectively addressing unique or emergent issues.

Research has consistently shown that regular and continuous feedback supports reflective thinking, encouraging learners to integrate new or deepened theoretical knowledge, apply it in practice, and plan productively for future learning [109,110]. Immediate feedback benefits inexperienced learners by offering prompt guidance, whereas delayed feedback allows for deeper reflection and understanding [41,111,112]. This dual approach promotes metacognitive skills, enabling students to critically evaluate their learning processes and outcomes [7,35]. In digital environments, the immediacy and accessibility of feedback can significantly enhance learning experiences. TEF systems, such as the “Compliments and Comments Tool”, streamline the feedback process and ensure that feedback is detailed and specific, which is crucial for effective student learning [10,12]. These systems also strengthen parental engagement, which is strongly associated with improved academic outcomes, by providing a transparent view of student progress and areas needing improvement [14,15,16].

In conclusion, teachers highly value feedback due to its substantial impact on students’ academic success and personal development. Feedback has become a focus of teaching research and practice, as suggested by a recent study by Wisniewski et al. [8]. Continuous and regular feedback supports reflective thinking, learning integration, and productive planning. While face-to-face communication is crucial, online feedback platforms offer convenience, instant communication, and opportunities for teachers, students, and parents to remain informed and involved. Effective feedback and communication are vital for classroom management and addressing student behavior. The balance between immediate and delayed feedback should depend on the context and students’ needs. Teachers’ investment in providing feedback underscores its significance, and systemic solutions can help optimize the process while considering teachers’ workloads and stakeholders’ needs.

5.2. Usability of the Feedback Provision Compliments and Comments Tool

The perceived usefulness of a system reflects a user’s belief that using the technology will help them improve their work performance [113]. The usability of the “Compliments and Comments Tool” is therefore a critical factor in its effectiveness and in its overall acceptance by teachers. Our study revealed that the tool is generally well received, with teachers appreciating its ease of use and the structured approach it provides for delivering feedback. The tool’s user interface was rated positively: a large share of teachers agreed that it helps them perform their tasks more efficiently, gives them a better overview of classroom activities, and enables them to provide feedback to their students and parents. By the criteria of established usability instruments [79,82], the tool can thus be considered usable, as well as user-friendly [94].

However, some areas require improvement to further enhance the tool’s usability. Teachers pointed out that, although the tool is easy to use, it lacks flexibility. The predefined categories are useful, but they do not always allow the customization needed to address unique classroom situations or individual student needs effectively. This rigidity can limit the tool’s effectiveness in providing personalized feedback, which is a critical component in fostering student self-regulation and learning [3,38,101]. Additionally, some teachers indicated that the tool would benefit from a more intuitive interface and from better integration with other educational technologies to further streamline the feedback process [114,115,116].

User satisfaction with the tool’s effectiveness was high, with many teachers acknowledging its role in enhancing their efficiency, providing a comprehensive view of classroom dynamics, and enabling them to provide feedback to their students and parents. These aspects are crucial for fostering an environment in which students receive immediate feedback, which is essential for their growth and development and aligns with constructivist and cognitive learning theories that emphasize timely and personalized feedback as critical to student learning [4,5]. However, responses on the tool’s ability to save time were mixed. While some teachers found it time-saving, others felt that the process of entering feedback could be streamlined further. This suggests that usability, as captured by the SUS and TAM instruments [80,81,82], could be improved by reducing the number of steps required to make an entry and by increasing user flexibility.

The tool’s ease of learning was also rated positively, with most teachers agreeing that they could quickly learn to use it and remember how to use it without extensive written instructions [79,88]. This suggests that the tool is accessible to a broad range of users, including those who may not be technologically proficient. However, teachers’ responses also indicated a need for ongoing professional development to help teachers fully utilize the tool’s capabilities and to ensure that it meets their needs effectively [91].

In conclusion, while the “Compliments and Comments Tool” is generally effective and user-friendly, there is room for improvement. Enhancements that allow for greater customization and flexibility as well as more intuitive design elements could significantly increase its usability and effectiveness. Future iterations of the tool should focus on these areas to better support teachers in delivering high-quality personalized feedback that fosters student self-regulation and academic success.

5.3. Interpretation of the Statistically Significant Differences

The analysis of statistically significant differences in teachers’ perceptions of the “Compliments and Comments Tool” reveals critical insights into how various demographic groups interact with the tool. Age significantly influenced teachers’ satisfaction with the tool’s usability and effectiveness. By contrast, there were no significant differences in the tool’s perceived effectiveness and ease of use between primary and secondary school teachers.

Teachers aged 31–40 and 41–50 generally found the tool more effective in enhancing efficiency, providing classroom oversight, and saving time than their younger and older counterparts. This could be due to these age groups’ balanced experience with both traditional and digital feedback methods, which enables them to leverage the tool’s functionalities effectively [98,117]. Conversely, teachers aged 51 years and older reported lower satisfaction with the tool’s ease of use, indicating a greater need for written instructions and support. This suggests that, while the tool is generally accessible, older teachers might require additional training and resources to use it effectively [117,118]. Interestingly, younger teachers (20–30 years) found the tool easy to use without written instructions but were less satisfied with its overall effectiveness. This discrepancy might reflect a gap between younger teachers’ digital proficiency and their pedagogical experience, highlighting the need for professional development that addresses both technological and instructional competencies [119,120,121].

On the other hand, we found no significant differences in the perceived effectiveness and ease of use of the tool between primary and secondary school teachers. This suggests that the tool’s design is broadly applicable across different educational levels, indicating its versatility. However, the lack of differentiation might also imply that the tool does not fully address the distinct needs of primary and secondary education settings, which could be an area for future improvement.

In conclusion, the statistically significant differences in perceptions of the “Compliments and Comments Tool” underscore the importance of tailored support and training for different user groups. Ensuring that the tool meets the diverse needs of all teachers, regardless of their age or educational level, is crucial for its widespread adoption and effectiveness. Future research should focus on developing more nuanced training programs and tool enhancements that consider these demographic differences, ultimately leading to a more inclusive and effective TEF system.

6. Conclusions

This study provides valuable insights into the usability and effectiveness of the “Compliments and Comments Tool” for supporting student monitoring and feedback practices. Teachers generally received the tool well, appreciating its user-friendly interface and its potential to enhance feedback to students and parents. The tool facilitates student monitoring and can promote self-regulation through timely and personalized feedback, which prior research links to higher motivation and better academic performance [122,123,124]. Educators should use tools that support students in setting learning and behavioral goals, self-monitoring, and self-reflection. Research has shown that self-regulated learning and behavior intervention programs significantly impact students’ academic performance, self-regulated strategies, and motivational beliefs [125,126,127].

Although the tool’s usability is satisfactory, there is room for improvement through content updates and customization to better meet diverse needs. Teachers’ competencies in using technology significantly impact their attitudes towards it [128,129,130,131], with positive attitudes enhancing adoption [132,133] and negative attitudes such as fear and risk aversion hindering it [134,135]. Statistically significant differences in perceived usability among different age groups suggest the need for tailored support and training. Younger teachers found the tool easy to use without written instructions but were less satisfied with its overall effectiveness. Older teachers reported lower satisfaction with the tool’s ease of use and required more written instructions and support.

The positive reception of this tool has several practical implications. It enhances teacher efficiency by streamlining the feedback process, facilitates better communication between teachers and parents, promotes self-regulation, and aligns with best practices in educational psychology. Schools should integrate such tools to support holistic student development. To maximize the potential of the tool, ongoing training and professional development for teachers should be prioritized, focusing on effective feedback and technology utilization. Policymakers should support the integration of technology-enhanced feedback systems by providing the necessary resources and infrastructure to ensure equitable access across all schools.

This study has several limitations. First, the tool itself requires further refinement of its customization options and flexibility to meet diverse needs. Second, while validity assessments support the use of the questionnaire in measuring teachers’ satisfaction, further research should examine other aspects of validity and the long-term impact of the tool on student outcomes. A further limitation concerns the questionnaire used to measure usability. Further studies are also needed to investigate the relationships between participants’ personality traits and usability outcomes, as well as the roles of their attitudes toward technology and of cultural and economic factors.

This study establishes a foundation and suggests potential directions for future research. Future studies should explore customizing the tool to meet diverse needs, conduct longitudinal studies to assess its long-term impacts, and compare its effectiveness with that of other feedback systems. Integrating artificial intelligence to provide more personalized feedback and to reduce teachers’ workloads is a promising area for future development. The “Compliments and Comments Tool” represents a significant advancement in educational technology, offering teachers a user-friendly and effective means of providing feedback. With its emphasis on user-centered design and system usability, the tool has the potential to significantly enhance educational practices and outcomes. Ongoing research and development are essential to refine the tool and ensure that it meets the evolving needs of educators and students.

Funding

This research received no external funding.

Institutional Review Board Statement

Ethical review and approval were waived for this study. All data were collected in anonymized form, and participants took part voluntarily.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Research data are available from the corresponding author upon reasonable request.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Jeno, L.M.; Grytnes, J.-A.; Vandvik, V. The Effect of a Mobile-Application Tool on Biology Students’ Motivation and Achievement in Species Identification: A Self-Determination Theory Perspective. Comput. Educ. 2017, 107, 1–12.

- Gambari, I.A.; Gbodi, B.E.; Olakanmi, E.U.; Abalaka, E.N. Promoting Intrinsic and Extrinsic Motivation among Chemistry Students Using Computer-Assisted Instruction. Contemp. Educ. Technol. 2016, 7, 25–46.

- Butler, D.L.; Winne, P.H. Feedback and self-regulated learning: A theoretical synthesis. Rev. Educ. Res. 1995, 65, 245–281.

- Hattie, J.; Timperley, H. The power of feedback. Rev. Educ. Res. 2007, 77, 81–112.

- Nicol, D.; Macfarlane-Dick, D. Formative assessment and self-regulated learning: A model and seven principles of good feedback practice. Stud. High. Educ. 2006, 31, 199–218.

- Butler, A.C.; Woodward, N.R. Toward Consilience in the Use of Task-Level Feedback to Promote Learning. Psychol. Learn. Motiv. 2018, 69, 1–38.

- Truax, M.L. The Impact of Teacher Language and Growth Mindset Feedback on Writing Motivation. Lit. Res. Instr. 2017, 57, 135–157.

- Wisniewski, B.; Zierer, K.; Hattie, J. The Power of Feedback Revisited: A Meta-Analysis of Educational Feedback Research. Front. Psychol. 2020, 10, 487662.

- Gibbs, J.C.; Taylor, J.D. Comparing Student Self-Assessment to Individualized Instructor Feedback. Act. Learn. High. Educ. 2016, 17, 111–123.

- Willis, J.; Gibson, A.; Kelly, N.; Spina, N.; Azordegan, J.; Crosswell, L. Towards Faster Feedback in Higher Education through Digitally Mediated Dialogic Loops. Australas. J. Educ. Technol. 2021, 37, 22–37.

- Gibson, L.; Musti-Rao, S. Using Technology to Enhance Feedback to Student Teachers. Interv. Sch. Clin. 2015, 51, 307–311.