Closing the Gap? The Ability of Adaptive Learning Courseware to Close Outcome Gaps in Principles of Microeconomics

Abstract

1. Introduction

Adaptive Learning Courseware and Its Impact on Outcomes

2. Materials and Methods

2.1. Research Questions and Hypotheses

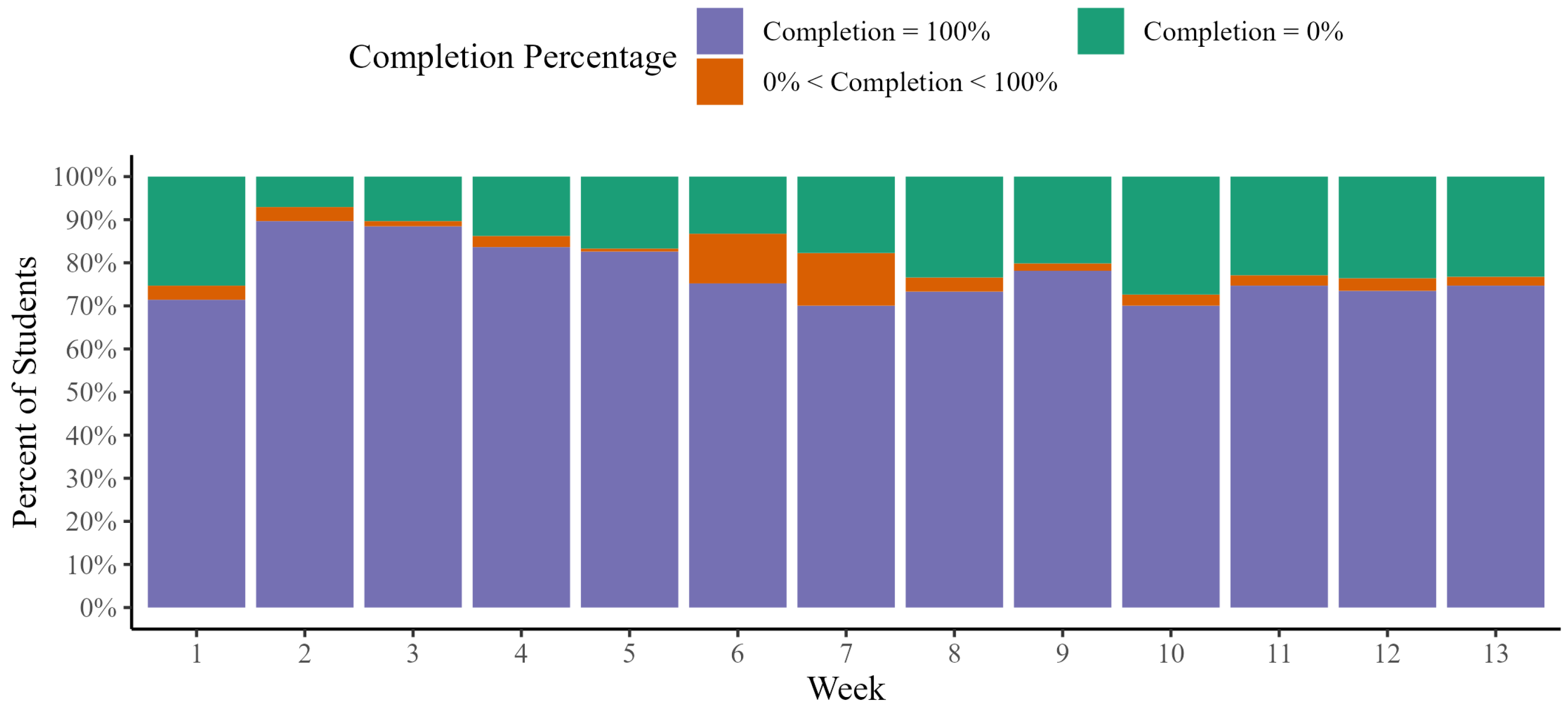

2.2. Implementation of Adaptive Learning Courseware into Principles of Microeconomics

2.3. Data Collected for Analysis

2.4. Research Methods

2.4.1. Descriptive Statistics

2.4.2. Regression Analysis (Fixed Effects Panel Models)

3. Results

- Adaptive learning assignment completion is positively correlated with the likelihood of answering exam questions correctly (the coefficient on the completer indicator in Equation (2)), suggesting that completing the adaptive assignments improves student outcomes.

- Adaptive learning assignment completion has a disproportionately positive association with exam question correctness for key student demographics (the coefficients on the completer-by-demographic interaction terms in Equation (2)), suggesting that completing the adaptive assignments can help close outcome gaps.

- Adaptive learning effects for the previous two hypotheses are strongest for foundational (easy) exam questions (with both sets of coefficients being more positive for easy questions than for moderate and difficult questions), reflecting the adaptive assignments' emphasis on the lower levels of Bloom's taxonomy.
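The hypotheses above describe a regression in which a completer indicator and its interactions with demographic indicators predict whether an exam question is answered correctly. The following is a minimal sketch of that idea, not the authors' estimation code. It assumes a linear probability model with student fixed effects only, a single demographic interaction (minority), and fully synthetic data. The function names, the effect sizes `b_a` and `b_am`, and the simulation settings are all hypothetical.

```python
import random
from collections import defaultdict


def demean_by_group(values, groups):
    """Subtract each group's mean from its members (the 'within' transformation)."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for v, g in zip(values, groups):
        totals[g] += v
        counts[g] += 1
    return [v - totals[g] / counts[g] for v, g in zip(values, groups)]


def fit_within(y, a, m, student):
    """Estimate y = alpha_student + b_a*A + b_am*(A*M) + e by OLS on demeaned data."""
    am = [ai * mi for ai, mi in zip(a, m)]
    yd = demean_by_group(y, student)
    x1 = demean_by_group(a, student)
    x2 = demean_by_group(am, student)
    # Normal equations for two regressors, solved in closed form.
    s11 = sum(u * u for u in x1)
    s22 = sum(u * u for u in x2)
    s12 = sum(u * v for u, v in zip(x1, x2))
    s1y = sum(u * v for u, v in zip(x1, yd))
    s2y = sum(u * v for u, v in zip(x2, yd))
    det = s11 * s22 - s12 * s12
    b_a = (s22 * s1y - s12 * s2y) / det
    b_am = (s11 * s2y - s12 * s1y) / det
    return b_a, b_am


def simulate(n_students=300, n_questions=40, b_a=0.03, b_am=0.05, seed=0):
    """Synthetic question-level data; every number here is an assumption."""
    rng = random.Random(seed)
    y, a, m, student = [], [], [], []
    for s in range(n_students):
        minority = 1 if s % 4 == 0 else 0
        ability = rng.uniform(0.5, 0.8)  # student-specific baseline
        for _ in range(n_questions):
            completed = 1 if rng.random() < 0.8 else 0
            p = ability + b_a * completed + b_am * completed * minority
            y.append(1 if rng.random() < p else 0)
            a.append(completed)
            m.append(minority)
            student.append(s)
    return y, a, m, student


if __name__ == "__main__":
    y, a, m, student = simulate()
    b_a, b_am = fit_within(y, a, m, student)
    print(f"completer effect: {b_a:.3f}, extra effect for minority students: {b_am:.3f}")
```

Demeaning within student absorbs anything that is constant for a student, which is why the minority indicator has no coefficient of its own in this sketch; only its interaction with completion is identified. The week, course section, and unit fixed effects listed in the regression tables are omitted here for brevity.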

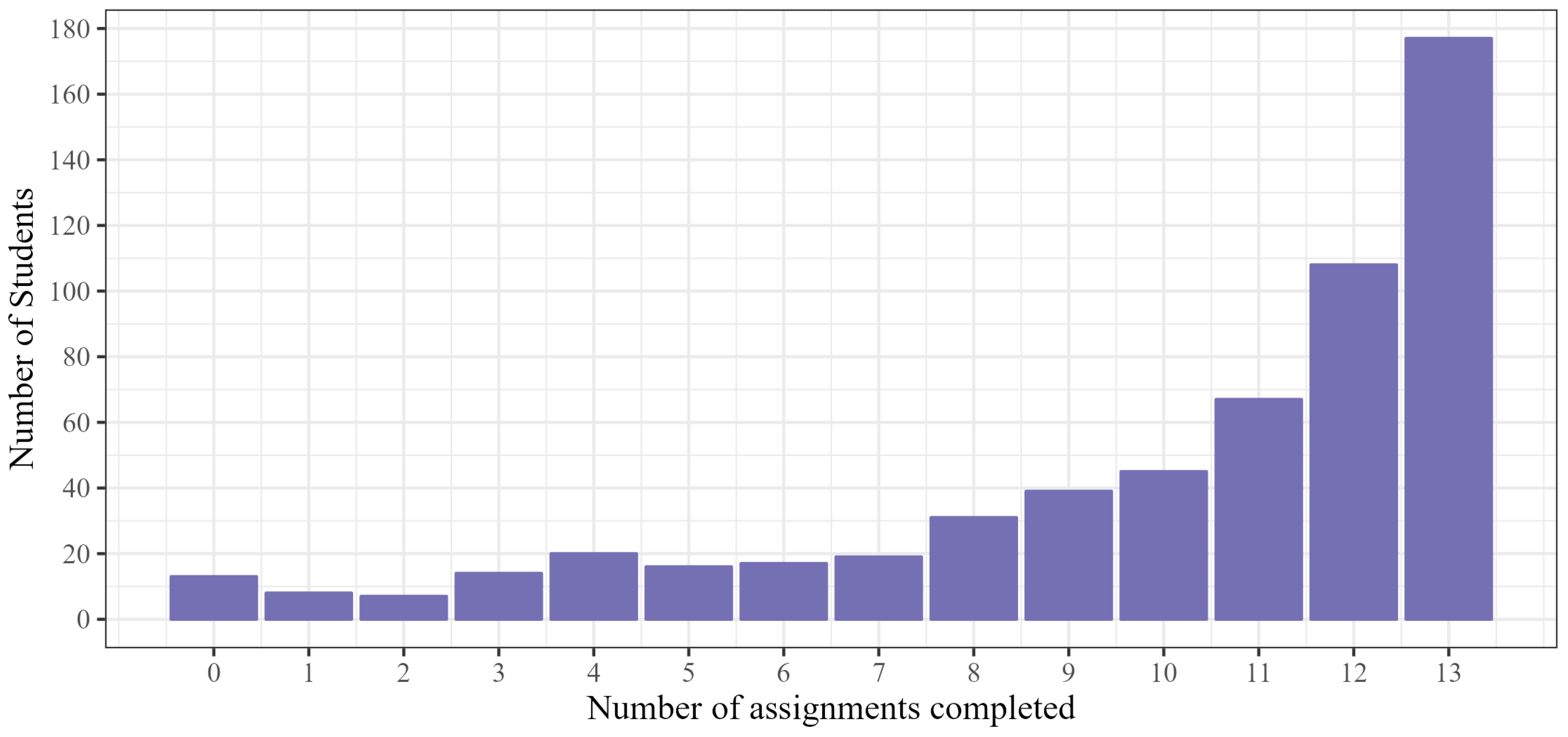

3.1. Descriptive Statistics

3.2. Regression Analysis Results

3.2.1. Stratified Regression Results

3.2.2. Robustness Checks

3.2.3. Results by Exam Question Difficulty

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| A (in tables) | Adaptive Learning Assignment Completers |

| ALEKS | Assessment and LEarning in Knowledge Spaces |

| ALMAP | Adaptive Learning Market Acceleration Program |

| DFW | D, F (grades earned), or Withdrawing students |

| F (in tables) | First-gen Student Indicator |

| L (in tables) | Low-income Student Indicator |

| M (in tables) | Minority Student Indicator |

| MDPI | Multidisciplinary Digital Publishing Institute |

| OLS | Ordinary Least Squares |

Appendix A. Fixed Effects Regression Results for Female and Male Students

| Dependent Variable: Exam Question Correct (1 = Yes, 0 = No) | | | | |
|---|---|---|---|---|
| | Low-Income Only | First-Gen Only | Minority Only | All Interactions |
| Completer Indicator (A) | 0.032 ** | 0.041 *** | 0.025 * | 0.036 ** |
| | (0.013) | (0.013) | (0.014) | (0.017) |
| A:Low-Income Indicator (L) | 0.026 | | | −0.001 |
| | (0.031) | | | (0.072) |
| A:First-Gen Indicator (F) | | −0.014 | | −0.047 * |
| | | (0.027) | | (0.028) |
| A:Minority Indicator (M) | | | 0.036 | 0.029 |
| | | | (0.025) | (0.030) |
| A:L:F | | | | −0.010 |
| | | | | (0.137) |
| A:L:M | | | | −0.023 |
| | | | | (0.084) |
| A:F:M | | | | 0.003 |
| | | | | (0.069) |
| A:L:F:M | | | | 0.122 |
| | | | | (0.161) |
| Fixed Effects: | | | | |
| Student | Yes | Yes | Yes | Yes |
| Week | Yes | Yes | Yes | Yes |
| Course Section | Yes | Yes | Yes | Yes |
| Unit (Exam) | Yes | Yes | Yes | Yes |
| Observations | 19,898 | 19,898 | 19,898 | 19,898 |
| R² | 0.102 | 0.102 | 0.102 | 0.102 |

| Dependent Variable: Exam Question Correct (1 = Yes, 0 = No) | | | | |
|---|---|---|---|---|
| | Low-Income Only | First-Gen Only | Minority Only | All Interactions |
| Completer Indicator (A) | 0.042 *** | 0.032 *** | 0.013 | 0.030 ** |
| | (0.011) | (0.012) | (0.012) | (0.014) |
| A:Low-Income Indicator (L) | −0.047 * | | | −0.083 ** |
| | (0.028) | | | (0.037) |
| A:First-Gen Indicator (F) | | −0.005 | | 0.005 |
| | | (0.025) | | (0.028) |
| A:Minority Indicator (M) | | | 0.069 *** | 0.042 |
| | | | (0.023) | (0.027) |
| A:L:F | | | | −0.021 |
| | | | | (0.052) |
| A:L:M | | | | 0.072 |
| | | | | (0.058) |
| A:F:M | | | | 0.009 |
| | | | | (0.042) |
| A:L:F:M | | | | 0.271 ** |
| | | | | (0.124) |
| Fixed Effects: | | | | |
| Student | Yes | Yes | Yes | Yes |
| Week | Yes | Yes | Yes | Yes |
| Course Section | Yes | Yes | Yes | Yes |
| Unit (Exam) | Yes | Yes | Yes | Yes |
| Observations | 21,002 | 21,002 | 21,002 | 21,002 |
| R² | 0.100 | 0.100 | 0.100 | 0.101 |

Appendix B. Fixed Effects Regression Results for Honors and Non-Honors Students

| Dependent Variable: Exam Question Correct (1 = Yes, 0 = No) | | | | |
|---|---|---|---|---|
| | Low-Income Only | First-Gen Only | Minority Only | All Interactions |
| Completer Indicator (A) | 0.046 | 0.058 | 0.060 | 0.072 |
| | (0.035) | (0.040) | (0.056) | (0.067) |
| A:Low-Income Indicator (L) | 0.116 ** | | | 0.137 *** |
| | (0.041) | | | (0.032) |
| A:First-Gen Indicator (F) | | −0.044 | | −0.060 |
| | | (0.061) | | (0.078) |
| A:Minority Indicator (M) | | | −0.015 | −0.046 |
| | | | (0.058) | (0.062) |
| Fixed Effects: | | | | |
| Student | Yes | Yes | Yes | Yes |
| Week | Yes | Yes | Yes | Yes |
| Course Section | Yes | Yes | Yes | Yes |
| Unit (Exam) | Yes | Yes | Yes | Yes |
| Observations | 1324 | 1324 | 1324 | 1324 |
| R² | 0.100 | 0.099 | 0.099 | 0.100 |

| Dependent Variable: Exam Question Correct (1 = Yes, 0 = No) | | | | |
|---|---|---|---|---|
| | Low-Income Only | First-Gen Only | Minority Only | All Interactions |
| Completer Indicator (A) | 0.038 *** | 0.035 *** | 0.017 * | 0.031 *** |
| | (0.009) | (0.009) | (0.009) | (0.011) |
| A:L | −0.019 | | | −0.055 |
| | (0.022) | | | (0.035) |
| A:F | | −0.007 | | −0.014 |
| | | (0.019) | | (0.021) |
| A:M | | | 0.057 *** | 0.045 ** |
| | | | (0.017) | (0.022) |
| A:L:F | | | | −0.025 |
| | | | | (0.050) |
| A:L:M | | | | 0.025 |
| | | | | (0.048) |
| A:F:M | | | | −0.011 |
| | | | | (0.046) |
| A:L:F:M | | | | 0.152 * |
| | | | | (0.089) |
| Fixed Effects: | | | | |
| Student | Yes | Yes | Yes | Yes |
| Week | Yes | Yes | Yes | Yes |
| Course Section | Yes | Yes | Yes | Yes |
| Unit (Exam) | Yes | Yes | Yes | Yes |
| Observations | 39,576 | 39,576 | 39,576 | 39,576 |
| R² | 0.100 | 0.100 | 0.101 | 0.101 |

Appendix C. Fixed Effects Regression Results for Non-Participant Students

| Dependent Variable: Exam Question Correct (1 = Yes, 0 = No) | | | | |
|---|---|---|---|---|
| | Low-Income Only | First-Gen Only | Minority Only | All Interactions |
| Completer Indicator (A) | 0.008 | 0.014 | 0.007 | −0.007 |
| | (0.018) | (0.018) | (0.018) | (0.022) |
| A:L | 0.059 | | | 0.061 * |
| | (0.037) | | | (0.032) |
| A:F | | 0.035 | | 0.072 * |
| | | (0.036) | | (0.038) |
| A:M | | | 0.052 | 0.066 |
| | | | (0.032) | (0.051) |
| A:L:F | | | | −0.098 |
| | | | | (0.070) |
| A:L:M | | | | −0.045 |
| | | | | (0.064) |
| A:F:M | | | | −0.146 * |
| | | | | (0.074) |
| A:L:F:M | | | | 0.157 |
| | | | | (0.144) |
| Fixed Effects: | | | | |
| Student | Yes | Yes | Yes | Yes |
| Week | Yes | Yes | Yes | Yes |
| Course Section | Yes | Yes | Yes | Yes |
| Unit (Exam) | Yes | Yes | Yes | Yes |
| Observations | 5829 | 5829 | 5829 | 5829 |
| R² | 0.108 | 0.108 | 0.108 | 0.109 |
| Within R² | 0.002 | 0.002 | 0.002 | 0.002 |

Appendix D. Fixed Effects Regression Results for “Sometimes-Completer” Students

| Dependent Variable: Exam Question Correct (1 = Yes, 0 = No) | | | | |
|---|---|---|---|---|
| | Low-Income Only | First-Gen Only | Minority Only | All Interactions |
| Completer Indicator (A) | 0.040 *** | 0.038 *** | 0.020 ** | 0.034 *** |
| | (0.009) | (0.009) | (0.009) | (0.011) |
| A:Low-Income Indicator (L) | −0.015 | | | −0.052 |
| | (0.022) | | | (0.035) |
| A:First-Gen Indicator (F) | | −0.007 | | −0.015 |
| | | (0.019) | | (0.020) |
| A:Minority Indicator (M) | | | 0.058 *** | 0.035 * |
| | | | (0.017) | (0.020) |
| A:L:F | | | | −0.031 |
| | | | | (0.049) |
| A:L:M | | | | 0.044 |
| | | | | (0.047) |
| A:F:M | | | | 0.014 |
| | | | | (0.045) |
| A:L:F:M | | | | 0.121 |
| | | | | (0.087) |
| Fixed Effects: | | | | |
| Student | Yes | Yes | Yes | Yes |
| Week | Yes | Yes | Yes | Yes |
| Course Section | Yes | Yes | Yes | Yes |
| Unit (Exam) | Yes | Yes | Yes | Yes |
| Observations | 27,522 | 27,522 | 27,522 | 27,522 |
| R² | 0.104 | 0.104 | 0.105 | 0.105 |


| Difficulty Ranking | Difficulty Description | Bloom’s Taxonomy | Exam Question Keywords |

|---|---|---|---|

| 1 | Easy | Remember Knowledge | Define Identify Choose |

| 2 | Moderate | Understand Comprehension | Explain Interpret Show |

| 3 | Difficult | Apply Application | Calculate Implement Solve |

| | All Students | | | | | Frequent + Always Completers (F/A) | | | Infrequent + Never Completers (I/N) | | | (F/A) − (I/N) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Variable | n | Mean | SD | Min. | Max. | n | Mean | SD | n | Mean | SD | t-Test (p-Value) |
| Female | 581 | 0.470 | 0.500 | 0.000 | 1.000 | 490 | 0.500 | 0.500 | 91 | 0.310 | 0.460 | 0.0004 |
| Census GPA | 581 | 2.950 | 0.890 | 0.000 | 4.000 | 490 | 2.930 | 0.910 | 91 | 3.060 | 0.740 | 0.1476 |
| Resident | 581 | 0.750 | 0.440 | 0.000 | 1.000 | 490 | 0.750 | 0.430 | 91 | 0.730 | 0.450 | 0.6427 |
| Honors Participation | 581 | 0.040 | 0.190 | 0.000 | 1.000 | 490 | 0.030 | 0.180 | 91 | 0.040 | 0.210 | 0.6897 |
| Low-income (L) | 581 | 0.180 | 0.390 | 0.000 | 1.000 | 490 | 0.180 | 0.380 | 91 | 0.200 | 0.400 | 0.6564 |
| First-gen (F) | 581 | 0.250 | 0.440 | 0.000 | 1.000 | 490 | 0.250 | 0.430 | 91 | 0.300 | 0.460 | 0.3400 |
| Minority (M) | 581 | 0.260 | 0.440 | 0.000 | 1.000 | 490 | 0.250 | 0.440 | 91 | 0.270 | 0.450 | 0.6717 |
| Participation | 580 | 0.850 | 0.360 | 0.000 | 1.000 | 490 | 0.900 | 0.300 | 91 | 0.580 | 0.500 | 0.0000 |
| Major Requirement | 468 | 0.720 | 0.260 | 0.000 | 1.000 | 392 | 0.720 | 0.450 | 76 | 0.700 | 0.460 | 0.7036 |
| Adaptive Assignment | 581 | 0.790 | 0.260 | 0.000 | 1.000 | 490 | 0.880 | 0.140 | 91 | 0.280 | 0.170 | 0.0000 |
| Exam Questions Correct | 579 | 0.720 | 0.110 | 0.380 | 0.980 | 490 | 0.720 | 0.110 | 89 | 0.690 | 0.110 | 0.0039 |
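The last column of the descriptive statistics table reports t-test p-values for the difference between the two completer groups. As a rough illustration of how such a value follows from the group means, standard deviations, and sample sizes, the sketch below computes an unequal-variance (Welch) t statistic for the Female row. Whether the reported values come from a Welch or a pooled test is not stated in the table, so that choice, the normal approximation used for the p-value, and the function name `welch_from_summary` are assumptions.

```python
import math


def welch_from_summary(mean1, sd1, n1, mean2, sd2, n2):
    """Welch t statistic and a two-sided p-value from group summary statistics."""
    se = math.sqrt(sd1 ** 2 / n1 + sd2 ** 2 / n2)
    t = (mean1 - mean2) / se
    # Normal approximation to the t distribution; reasonable for samples this large.
    p = math.erfc(abs(t) / math.sqrt(2))
    return t, p


# Female row: frequent/always completers vs. infrequent/never completers.
t, p = welch_from_summary(0.500, 0.500, 490, 0.310, 0.460, 91)
print(f"t = {t:.2f}, p = {p:.4f}")
```

Because the inputs are rounded to two or three decimals, the result should land close to, but not necessarily exactly on, the reported p-value of 0.0004.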

| | Non-Completer (NC) | | | | Completer (C) | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|
| Group | n | Mean | SD | Diff (BL) | n | Mean | SD | Diff (BL) | Diff (C-NC) | Comp (%) |
| Baseline | 4354 | 0.69 | 0.46 | | 20,751 | 0.74 | 0.44 | | 0.05 | 83 |
| L only | 621 | 0.71 | 0.45 | 0.02 | 2144 | 0.72 | 0.45 | −0.02 | 0.01 | 78 |
| F only | 1484 | 0.69 | 0.46 | −0.01 | 6712 | 0.70 | 0.46 | −0.04 | 0.01 | 82 |
| M only | 1416 | 0.65 | 0.48 | −0.04 | 4974 | 0.75 | 0.44 | 0.01 | 0.10 | 78 |
| L + F | 385 | 0.69 | 0.46 | −0.02 | 1210 | 0.65 | 0.48 | −0.09 | −0.04 | 76 |
| L + M | 460 | 0.72 | 0.45 | 0.03 | 3230 | 0.76 | 0.43 | 0.02 | 0.04 | 88 |
| F + M | 353 | 0.62 | 0.49 | −0.07 | 1487 | 0.66 | 0.47 | −0.08 | 0.04 | 81 |
| L + F + M | 286 | 0.57 | 0.50 | −0.12 | 818 | 0.69 | 0.46 | −0.05 | 0.12 | 74 |
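The two right-hand columns of the table above can be recomputed from the group sizes and means. The sketch below assumes that Comp (%) is the completer share of question-level observations, n_C / (n_NC + n_C), and that Diff (C-NC) is the difference in mean correctness. These definitions are inferred from the reported numbers rather than stated in the table, and the function name `summarize` is hypothetical.

```python
def summarize(n_nc, mean_nc, n_c, mean_c):
    """Return (Diff (C-NC), Comp (%)) under the assumed definitions."""
    diff = round(mean_c - mean_nc, 2)
    comp = round(100 * n_c / (n_nc + n_c))
    return diff, comp


# (n_NC, mean_NC, n_C, mean_C) copied from the table rows.
rows = {
    "Baseline": (4354, 0.69, 20751, 0.74),
    "M only": (1416, 0.65, 4974, 0.75),
    "L + F": (385, 0.69, 1210, 0.65),
    "L + F + M": (286, 0.57, 818, 0.69),
}

for group, values in rows.items():
    diff, comp = summarize(*values)
    print(f"{group}: Diff (C-NC) = {diff:+.2f}, Comp = {comp}%")
```

Under these assumed definitions, the recomputed values agree with the table for the rows shown.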

| | Non-Completer (NC) | | | Completer (C) | | | |
|---|---|---|---|---|---|---|---|
| Difficulty | n | Mean | SD | n | Mean | SD | Diff (C-NC) |
| All students | | | | | | | |
| Easy | 3639 | 0.72 | 0.45 | 15,721 | 0.78 | 0.42 | 0.06 |
| Moderate | 3049 | 0.68 | 0.47 | 13,972 | 0.72 | 0.45 | 0.04 |
| Difficult | 2671 | 0.62 | 0.49 | 11,650 | 0.67 | 0.47 | 0.05 |
| Baseline | | | | | | | |
| Easy | 1684 | 0.73 | 0.44 | 7882 | 0.79 | 0.41 | 0.06 |
| Moderate | 1482 | 0.69 | 0.46 | 7007 | 0.73 | 0.44 | 0.04 |
| Difficult | 1241 | 0.63 | 0.48 | 5862 | 0.68 | 0.47 | 0.05 |
| L only | | | | | | | |
| Easy | 245 | 0.74 | 0.44 | 809 | 0.78 | 0.42 | 0.04 |
| Moderate | 202 | 0.72 | 0.45 | 726 | 0.71 | 0.45 | −0.01 |
| Difficult | 174 | 0.66 | 0.47 | 608 | 0.64 | 0.48 | −0.02 |
| F only | | | | | | | |
| Easy | 579 | 0.73 | 0.44 | 2555 | 0.74 | 0.44 | 0.01 |
| Moderate | 485 | 0.68 | 0.47 | 2265 | 0.71 | 0.45 | 0.03 |
| Difficult | 420 | 0.64 | 0.48 | 1892 | 0.64 | 0.48 | 0.00 |
| M only | | | | | | | |
| Easy | 537 | 0.68 | 0.47 | 1905 | 0.80 | 0.40 | 0.12 |
| Moderate | 468 | 0.65 | 0.48 | 1685 | 0.72 | 0.45 | 0.07 |
| Difficult | 411 | 0.60 | 0.49 | 1384 | 0.69 | 0.46 | 0.09 |
| L + F + M | | | | | | | |
| Easy | 109 | 0.67 | 0.47 | 315 | 0.73 | 0.44 | 0.06 |
| Moderate | 88 | 0.51 | 0.50 | 278 | 0.68 | 0.47 | 0.17 |
| Difficult | 89 | 0.51 | 0.50 | 225 | 0.63 | 0.48 | 0.12 |

| Dependent Variable: Exam Question Correct (1 = Yes, 0 = No) | ||||

|---|---|---|---|---|

| | Low-Income Only | First-Gen Only | Minority Only | All Interactions |

| Completer Indicator (A) | 0.038 *** | 0.036 *** | 0.018 * | 0.033 ** |

| (0.008) | (0.009) | (0.009) | (0.011) | |

| Low-Income Indicator (L) | −1.754 | −1.510 | ||

| (50,650.2) | (40,453.8) | |||

| First-Gen Indicator (F) | −0.530 | −0.554 | ||

| (22,125.3) | (21,763.2) | |||

| Minority Indicator (M) | −0.581 | |||

| (27,499.7) | ||||

| A:L | −0.018 | −0.056 | ||

| (0.021) | (0.034) | |||

| A:F | −0.008 | −0.015 | ||

| (0.019) | (0.020) | |||

| A:M | 0.055 *** | 0.039 | ||

| (0.017) | (0.020) | |||

| A:L:F | −0.024 | |||

| (0.050) | ||||

| A:L:M | 0.034 | |||

| (0.047) | ||||

| A:F:M | −0.006 | |||

| (0.045) | ||||

| A:L:F:M | 0.144 | |||

| (0.088) | ||||

| Fixed Effects: | ||||

| Student | Yes | Yes | Yes | Yes |

| Week | Yes | Yes | Yes | Yes |

| Course Section | Yes | Yes | Yes | Yes |

| Unit (Exam) | Yes | Yes | Yes | Yes |

| Observations | 40,900 | 40,900 | 40,900 | 40,900 |

| R2 | 0.100 | 0.100 | 0.101 | 0.101 |
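In a fully interacted specification, the estimated completer effect for a given student profile is the sum of every completer term whose indicators all apply to that student. The sketch below works through that arithmetic with the point estimates from the table above. It assumes standard factorial interaction coding and assumes that the last value in each flattened row belongs to the All Interactions model. The helper `completer_effect` is hypothetical, and the sums are point estimates only; they carry no information about statistical significance.

```python
# Point estimates read from the "All Interactions" column of the table above.
COEF = {
    "A": 0.033,
    "A:L": -0.056,
    "A:F": -0.015,
    "A:M": 0.039,
    "A:L:F": -0.024,
    "A:L:M": 0.034,
    "A:F:M": -0.006,
    "A:L:F:M": 0.144,
}


def completer_effect(profile):
    """Sum the completer terms that apply to a profile such as {"L", "M"}."""
    total = 0.0
    for term, value in COEF.items():
        indicators = term.split(":")[1:]  # drop the leading "A"
        if all(flag in profile for flag in indicators):
            total += value
    return total


for label, profile in [("Baseline", set()), ("M only", {"M"}), ("L + F + M", {"L", "F", "M"})]:
    print(f"{label}: {completer_effect(profile):+.3f}")
```

For example, the implied effect for a student who is only in the minority group is the sum of the A and A:M coefficients, while a low-income, first-generation, minority student picks up all eight terms.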

| Dependent Variable: Exam Question Correct (1 = Yes, 0 = No) | | | | |
|---|---|---|---|---|
| | Major Requirement, Minority Only | Major Requirement, All Interactions | Not a Requirement, Minority Only | Not a Requirement, All Interactions |
| Completer Indicator (A) | 0.024 ** | 0.043 *** | 0.004 | 0.008 |
| | (0.011) | (0.013) | (0.016) | (0.020) |
| A:Low-Income Indicator (L) | | −0.080 * | | 0.031 |
| | | (0.041) | | (0.024) |
| A:First-Gen Indicator (F) | | −0.019 | | −0.006 |
| | | (0.025) | | (0.036) |
| A:Minority Indicator (M) | 0.041 ** | 0.027 | 0.084 *** | 0.065 |
| | (0.019) | (0.024) | (0.032) | (0.039) |
| A:L:F | | 0.006 | | −0.167 * |
| | | (0.057) | | (0.094) |
| A:L:M | | 0.052 | | −0.058 |
| | | (0.058) | | (0.056) |
| A:F:M | | 0.009 | | −0.035 |
| | | (0.057) | | (0.061) |
| A:L:F:M | | 0.040 | | 0.456 *** |
| | | (0.098) | | (0.137) |
| Fixed Effects: | | | | |
| Student | Yes | Yes | Yes | Yes |
| Week | Yes | Yes | Yes | Yes |
| Course Section | Yes | Yes | Yes | Yes |
| Unit (Exam) | Yes | Yes | Yes | Yes |
| Observations | 40,900 | 40,900 | 40,900 | 40,900 |
| R² | 0.100 | 0.100 | 0.101 | 0.101 |

| Dependent Variable: Exam Question Correct (1 = Yes, 0 = No) | | | | |
|---|---|---|---|---|
| | Low-Income Only | First-Gen Only | Minority Only | All Interactions |
| Completer Indicator (A) | 0.043 *** | 0.040 *** | 0.019 * | 0.044 *** |
| | (0.009) | (0.010) | (0.011) | (0.012) |
| A:Low-Income Indicator (L) | −0.039 | | | −0.073 ** |
| | (0.025) | | | (0.037) |
| A:First-Gen Indicator (F) | | −0.019 | | −0.039 * |
| | | (0.021) | | (0.022) |
| A:Minority Indicator (M) | | | 0.053 *** | 0.024 |
| | | | (0.020) | (0.022) |
| A:L:F | | | | −0.004 |
| | | | | (0.053) |
| A:L:M | | | | 0.030 |
| | | | | (0.055) |
| A:F:M | | | | 0.030 |
| | | | | (0.053) |
| A:L:F:M | | | | 0.175 * |
| | | | | (0.102) |
| Fixed Effects: | | | | |
| Student | Yes | Yes | Yes | Yes |
| Week | Yes | Yes | Yes | Yes |
| Course Section | Yes | Yes | Yes | Yes |
| Unit (Exam) | Yes | Yes | Yes | Yes |
| Observations | 35,071 | 35,071 | 35,071 | 35,071 |
| R² | 0.098 | 0.098 | 0.098 | 0.099 |

| Dependent Variable: Exam Question Correct (1 = Yes, 0 = No) | | | |
|---|---|---|---|
| | Easy Questions | Moderate Questions | Difficult Questions |
| Completer Indicator (A) | 0.034 ** | 0.032 * | 0.026 |
| | (0.016) | (0.016) | (0.023) |
| A:Low-Income Indicator (L) | −0.033 | −0.011 | −0.132 ** |
| | (0.059) | (0.044) | (0.063) |
| A:First-Gen Indicator (F) | −0.037 | 0.030 | −0.035 |
| | (0.033) | (0.046) | (0.042) |
| A:Minority Indicator (M) | 0.075 *** | −0.033 | 0.084 ** |
| | (0.026) | (0.034) | (0.037) |
| A:L:F | −0.050 | −0.087 | 0.076 |
| | (0.080) | (0.087) | (0.085) |
| A:L:M | −0.028 | 0.028 | 0.108 |
| | (0.073) | (0.066) | (0.085) |
| A:F:M | −0.046 | 0.041 | −0.011 |
| | (0.054) | (0.080) | (0.086) |
| A:L:F:M | 0.196 | 0.175 | 0.046 |
| | (0.132) | (0.127) | (0.139) |
| Fixed Effects: | | | |
| Student | Yes | Yes | Yes |
| Week | Yes | Yes | Yes |
| Course Section | Yes | Yes | Yes |
| Unit (Exam) | Yes | Yes | Yes |
| Observations | 15,671 | 13,716 | 11,512 |
| R² | 0.125 | 0.144 | 0.133 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Gebhardt, K.; Blake, C.D. Closing the Gap? The Ability of Adaptive Learning Courseware to Close Outcome Gaps in Principles of Microeconomics. Educ. Sci. 2024, 14, 1025. https://doi.org/10.3390/educsci14091025
