Abstract

To address future challenges in Science, Technology, Engineering, and Mathematics (STEM), students need to develop 21st-century skills. These skills are mediated by their beliefs about the nature of scientific knowledge and practices, or epistemological beliefs. One approach shown to support students’ development of these beliefs and skills is problem-based instruction (PBI), which encourages collaborative self-directed learning while working on open-ended problems. We used a mixed-methods approach to examine how implementing PBI in a physics course taught at a Dutch university affected students’ beliefs about physics and learning physics. Analysis of the responses to the course surveys (41–74% response rates) from the first implementation indicated that students appreciated the opportunities PBI offered for social interaction with peers and for use of scientific equipment, but had difficulty connecting to the Internet under the COVID-19 restrictions. The Colorado Learning Attitudes about Science Survey (CLASS), a validated survey on epistemological beliefs about physics and learning physics, was completed by a second cohort of students in a subsequent implementation of PBI for the same course; analysis of the students’ pre- and post-responses (28% response rate) showed a slight shift towards more expert-like perspectives despite challenges (e.g., access to lab). Findings from this study may inform teachers with an interest in supporting the development of students’ epistemological beliefs about STEM and the implementation of PBI in undergraduate STEM courses.

1. Introduction

Undergraduate education in Science, Technology, Engineering, and Mathematics (STEM) plays an important role in contributing to the common good, especially in the face of today’s economic, environmental, and societal challenges. The goals of higher education have been shifting away from pure knowledge acquisition to also include scientific skills and practices that can help students contribute to the future workforce and global economy [1,2,3]. University instructors can support their students in meeting those challenges by helping them develop 21st-century skills and practices (e.g., critical thinking, problem solving, and collaboration) [1] in STEM fields, such as physics [2,3]. The development of these skills and practices is mediated by students’ epistemologies [4], which address the nature of knowledge (e.g., structure and source) and knowing (e.g., control of learning and rate of knowledge acquisition). For instance, Kardash and Scholes [5] found that students who expressed complex epistemological beliefs (e.g., knowledge is tentative and derived by reason) also expressed more sophisticated critical thinking and problem-solving skills than students who expressed naïve epistemological beliefs (e.g., knowledge is absolute and comes from “expert” sources); their study showed that students’ epistemological beliefs affected their use of these two 21st-century skills.

Because epistemological beliefs influence students’ learning of scientific knowledge and 21st-century skills, it is important to consider how learning environments can be designed to promote more sophisticated beliefs about scientific knowledge in students. While a few studies describe the impact of learning environments on epistemological beliefs [6,7], further research is needed to examine how those relationships connect to students’ development of 21st-century skills in STEM higher education. One teaching practice widely used to support the development of critical thinking and problem solving is problem-based instruction (PBI) [8]. In PBI, instructors design and facilitate learning experiences that motivate and support students’ independent learning by presenting real-world issues for them to solve, while students collaborate with their peers to clarify unclear terms, define and analyze the problem, formulate goals, seek information from appropriate resources, and synthesize and apply the new information [9,10,11].

The aim of this study is to determine how undergraduate students’ beliefs about physics and learning physics change in a Waves and Optics course taught with PBI. To address this aim, we use design-based implementation research (DBIR) [12]. DBIR is a process where practitioners and researchers iteratively and collaboratively develop, test, and refine a context-specific intervention [13,14]. In this study, the intervention is the implementation of PBI in a Waves and Optics course over two subsequent years. Our research questions are as follows: (1) How did students respond to PBI during the first year of implementation? (2) How did students’ beliefs about physics and learning physics change during the second year of implementation? In the following sections, we explain our methodological choices and their appropriateness for informing curricular revisions. Using the data collected from surveys, we analyze the students’ responses to the implemented changes and changes in their beliefs about physics and learning physics. We then discuss the benefits and challenges of this design change and how the final implementation of PBI influenced students’ beliefs about physics and learning physics, in addition to the possibilities of implementing PBI across bachelor-level STEM courses.

2. Materials and Methods

In this study, we used a parallel mixed-method research approach to characterize students’ responses to the PBI approach implemented in a Waves and Optics course. Data were collected over a two-year period using surveys, which were used to document students’ engagement with the content, projects, and course interactions.

2.1. Participants and Research Context

The participants were two cohorts of mostly second-year undergraduate students enrolled in the 10-week Waves and Optics course offered at the University of Groningen, The Netherlands. Waves and Optics is a standard second-year course in physics that follows from ideas introduced during the first-year Electricity and Magnetism course. It is appropriate for this study because topics in Waves and Optics are often readily apparent in physical phenomena, which makes them more conducive to designing PBI prompts. Additionally, second-year students would already have had practical experiences from their lab courses in the first year. Both cohorts were similar with respect to their chosen field of study (50% physics, 25% applied physics, and 25% astronomy), nationality (60% Dutch, 25% other European Union, and 15% rest of the world), and gender (27% female and 63% male), with 197 students in the first cohort (8% dropout) and 132 students in the second cohort (7% dropout). Prior to Waves and Optics, students had not engaged with PBI in their other university courses.

Prior to PBI implementation, Waves and Optics consisted of three components: lecture, tutorial, and lab. Lectures and tutorials were each two hours in duration and met twice per week while lab sessions were three hours, and students met once every three weeks. Students were assigned pre-lecture reading assignments that the instructor used to introduce and explain topics during lectures. During tutorials, teaching assistants (TAs) helped students make progress on the assigned textbook exercises. During labs, students worked in pairs to document and analyze findings from carrying out lab procedures; this work culminated in a written lab report. Course grades were determined by scores on reading assignments (10%), lab reports (30%), midterm exam (20%), and final exam (40%).

2.2. Design, Data Sources, and Procedures

We used design-based implementation research (DBIR) as our methodological framework because it offers insights into the complexities associated with enacting curricular adaptations [10]. The guiding principles of DBIR include (1) jointly defined problems between practitioners and researchers, (2) commitment to iterative and collaborative design, (3) development of knowledge and theory through disciplined inquiry, and (4) development of the capacity to sustain change within systems [15,16,17]. The third author was the instructor for the course, the second author was a TA for the course, and the first author was an education researcher who was present during the weekly planning meetings but not involved with the instruction. In collaboration with other staff (e.g., TAs, lab instructors, and faculty), the authors discussed the design of the course, along with possible challenges in implementing PBI and possible responses to those challenges (first DBIR principle).

The learning objectives were revised to align with our purpose for implementing PBI, which included (1) understanding the core disciplinary ideas in waves and optics, (2) using mathematical tools to represent the relationships between physical variables, (3) designing and carrying out experimental investigations for specific core ideas, (4) researching relevant resources to learn more about the core ideas and communicate findings, and (5) working constructively to engage in problem-solving skills (Appendix B). Of these objectives, the last two explicitly addressed collaboration with peers. The final exam used in the previous year to assess students, prior to PBI implementation, was replaced with group research projects. Course grades were determined by their scores on group research reports (60%), contribution to the group process (20%), and individually completed homework sets (20%).
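As a minimal sketch of how the stated weights combine into a course grade, the following assumes that each component is scored on the Dutch ten-point scale and that the final grade is a simple weighted sum. The function name, the component keys, and the example scores are hypothetical and are not taken from the course records.

```python
# Weights stated in the text for the PBI implementation.
PBI_WEIGHTS = {
    "group_reports": 0.60,
    "group_contribution": 0.20,
    "homework": 0.20,
}


def course_grade(scores, weights=PBI_WEIGHTS):
    """Weighted sum of component scores (each assumed to be on a 1-10 scale)."""
    if set(scores) != set(weights):
        raise ValueError("scores must cover exactly the weighted components")
    return sum(weights[name] * scores[name] for name in weights)


# Hypothetical student, for illustration only.
example = {"group_reports": 8.0, "group_contribution": 9.0, "homework": 7.0}
grade = course_grade(example)
```

Because group work accounts for 80% of the grade in this scheme, the sketch also makes visible how strongly the final grade depends on the group components compared with the individually completed homework.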

The implementation of PBI primarily affected the tutorial and lab components of the course, with fewer changes to the lecture and overall course grades. The format and content of the lectures were the same as the previous year, except that students did not need to complete reading assignments prior to lectures and the lectures were held online due to COVID-19 restrictions. Students were also introduced to problem-based learning during the first lecture, which they were to apply during tutorials instead of completing textbook exercises. During tutorials, students worked in groups of four to investigate sets of related topics, as shown in Table 1.

Table 1.

Tutorial sections with associated topics.

Each topic (e.g., sun spectrum) was summarized in a document created by the TAs and instructor with the intent of guiding students in developing their own questions and experimental designs. The documents included a brief introduction of the topic, suggested readings, and prompts to help students start thinking about their questions to investigate (see Appendix A for a sample topic).

Prior to the start of the course, each group of four students (self-selected) enrolled in a section based on the topics of most interest to them and section availability. During tutorials, group members were instructed to collaborate to identify a research question and to design an experiment to address it. The experiment was carried out during lab, with only two students per group present at a time due to COVID-19 restrictions. After data collection was completed, group members analyzed the data and produced a report documenting their investigation, findings, analysis, and conclusion.

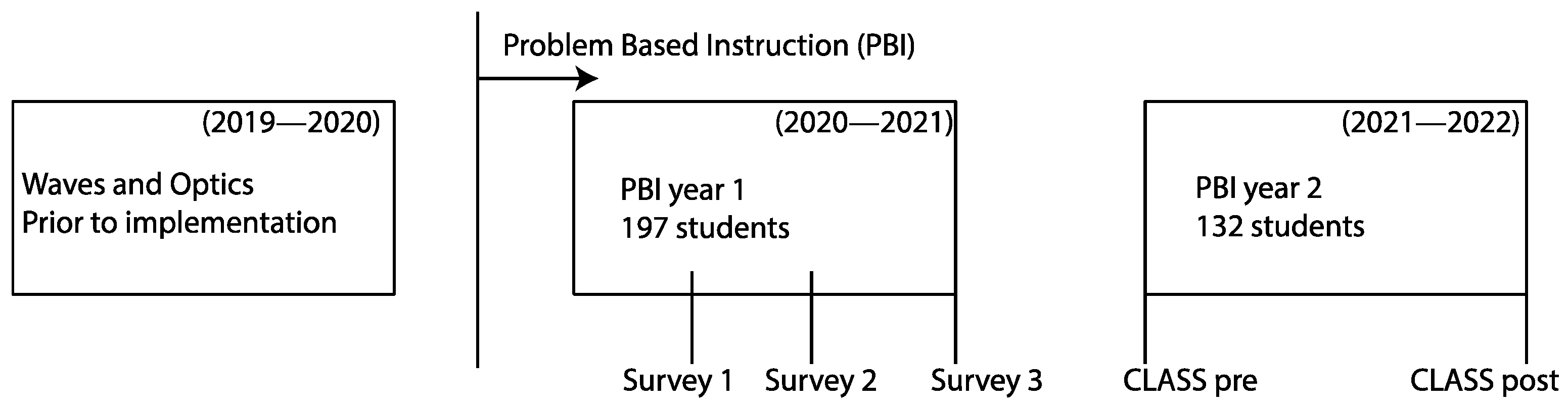

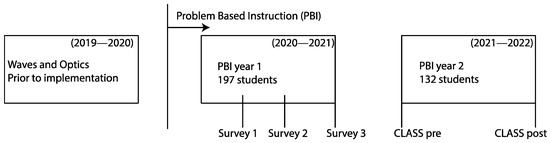

We collected qualitative and quantitative data on students’ experiences with the implementation of PBI through course surveys, course grades on group research reports, contributions to the group, and the Colorado Learning Attitudes about Science Survey (CLASS) [18]. The administration of these surveys (data collection strategies) and execution of this research received ethical approval from the university. All surveys were administered online through the anonymous course feedback feature in the university learning management system, and student participation was voluntary. The course surveys were completed by the first cohort of students and used to inform course revisions, while the CLASS was completed by the second cohort of students and used to measure changes in students’ attitudes towards physics and learning physics. A timeline of the administered surveys is shown in Figure 1.

Figure 1.

Data collection timeframe of the study.

2.2.1. Course Surveys

Three course surveys (Appendix C) were administered at the beginning, middle, and end of the course, addressing students’ experiences related to the implementation of PBI. These surveys were developed by the authors in collaboration with course staff. The first survey had nine open-ended prompts addressing their learning experiences in the course. The second survey had 24 prompts, with 10 open-ended prompts addressing their learning experiences in the course, 7 Likert-scale items comparing their PBI experiences to learning experiences in other physics courses (previous physics courses were mostly traditional teacher-centered instruction), and 7 Likert-scale items addressing aspects of PBI implementation. The third survey had 16 prompts, with the same 14 Likert-scale items from the second survey, in addition to 2 open-ended prompts about their overall experiences with the course.

Each survey was administered the day after a lab report was due, and students had about two weeks to respond to maximize the response rate. Their responses to the first two surveys were used to inform the instructor’s and TAs’ subsequent implementations of PBI during the course, while their responses from the third survey were used to inform PBI implementation for the second cohort (second DBIR principle).

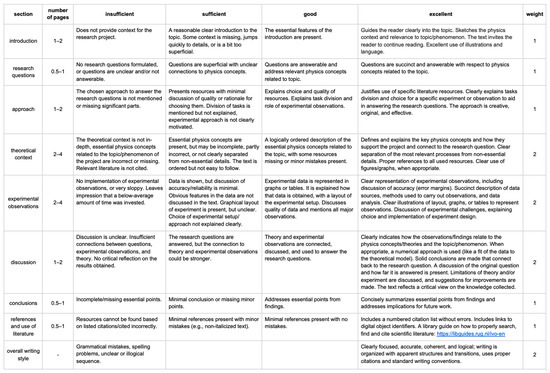

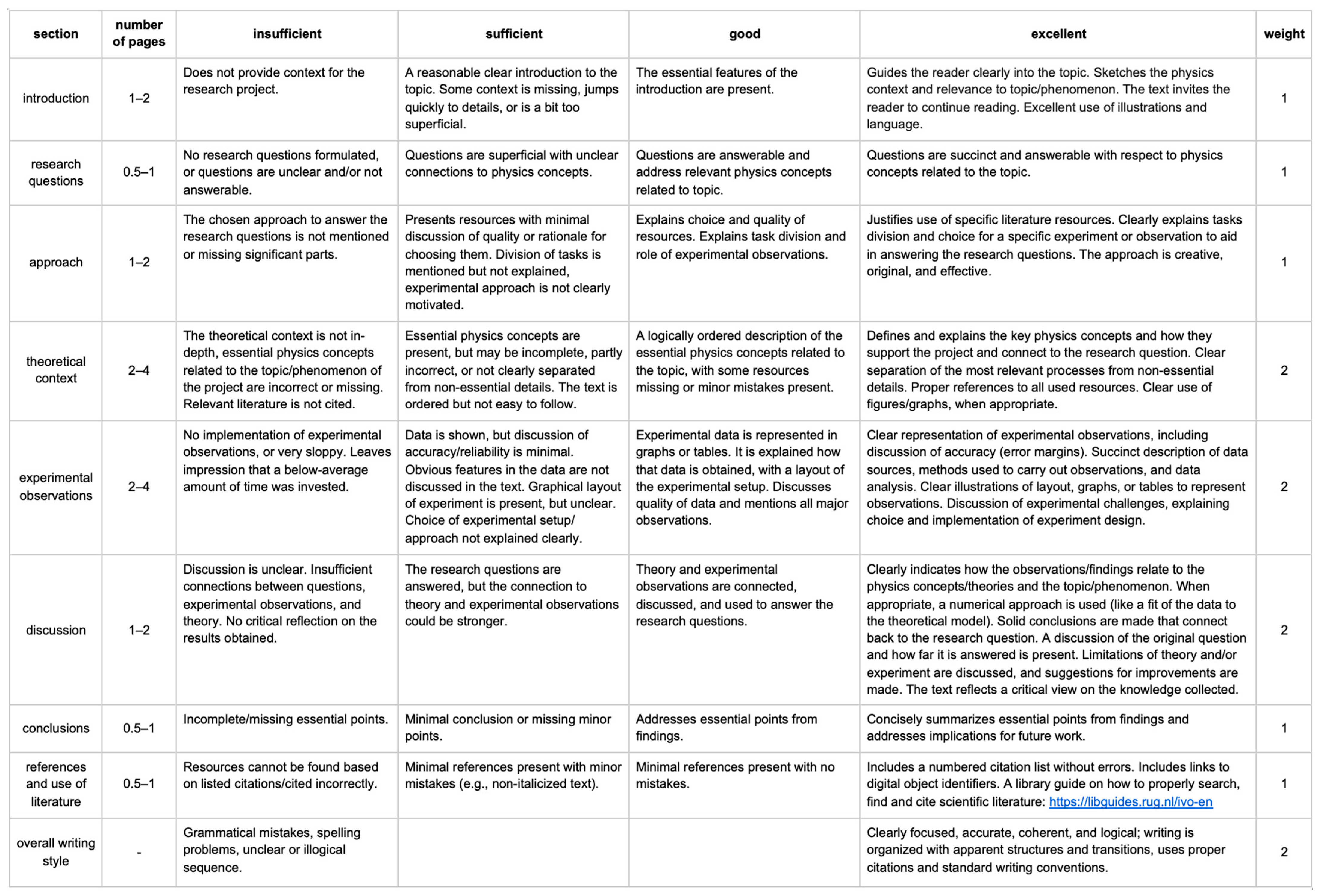

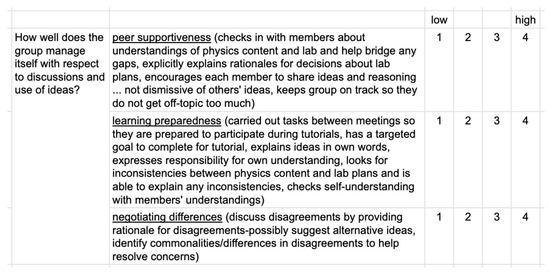

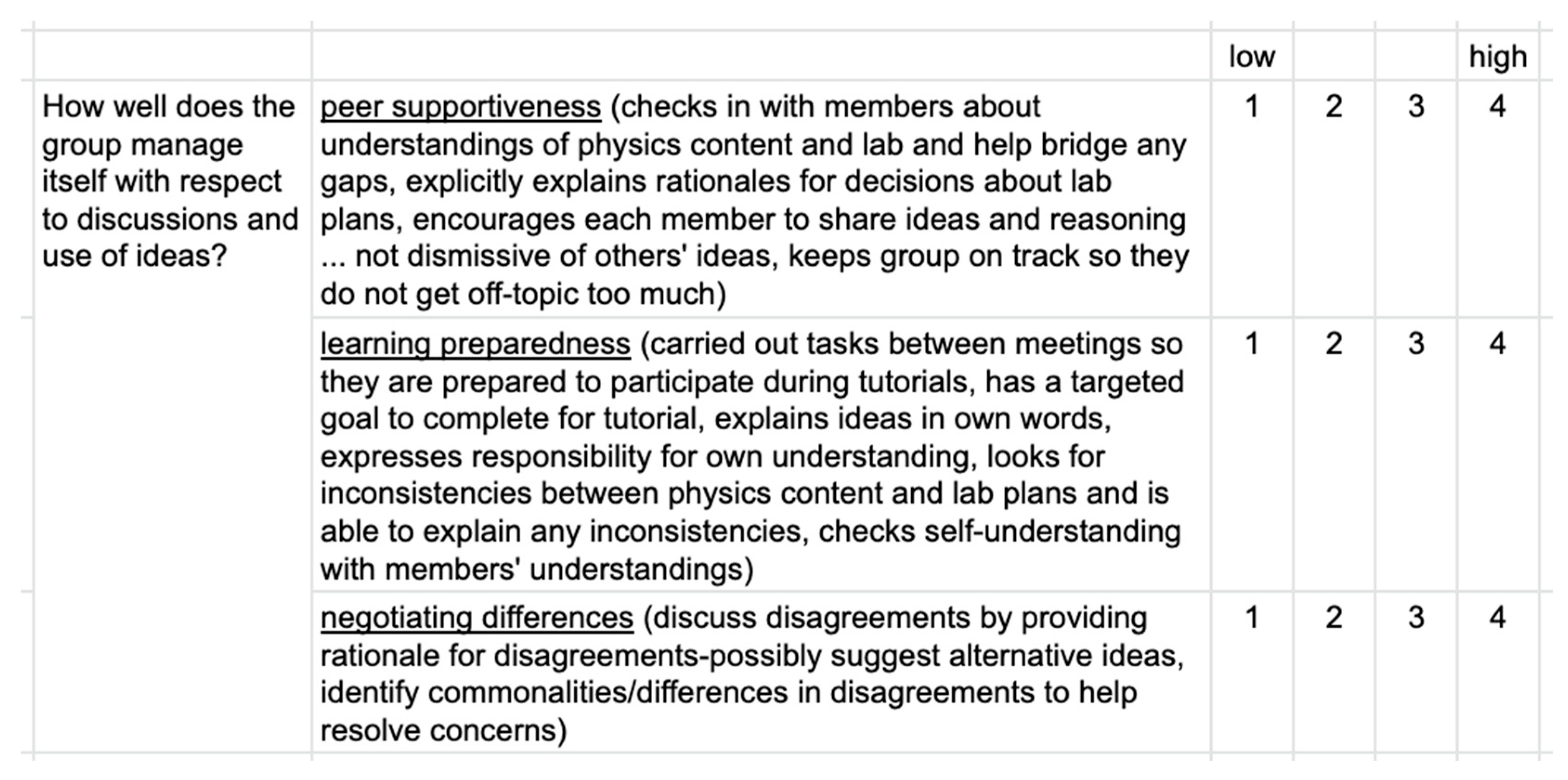

2.2.2. Course Grades for Group Reports and Contributions to the Group

The teaching assistants used separate rubrics to evaluate the group research reports and individual contributions to the group for both PBI implementations. In the rubric for the group research report (Appendix D), the TAs rated the reports with respect to the nine criteria common to communicating scientific findings: introduction, research questions, approach, theoretical context, experimental observations, discussion, conclusions, references, and overall writing style. Each criterion was weighted and scored on a ten-point scale corresponding to four levels (insufficient, sufficient, good, and excellent). In the rubric for the contributions to the group (Appendix E), the students rated each other with respect to three explicitly defined criteria: peer supportiveness, learning preparedness, and negotiation of differences. Each criterion was equally weighted and scored on a four-point Likert scale, with 1 = low and 4 = high. The TAs used the students’ self-reported scores to inform their grading of the contributions of individual students to the group.

2.2.3. CLASS

The CLASS (for physics) is a survey (42 statements rated on a 5-point Likert scale, with 1 = strongly disagree and 5 = strongly agree) addressing students’ epistemological beliefs about physics and physics learning with respect to 8 categories [18]. This survey was validated by Adams et al. [18] using interviews, reliability studies, and extensive statistical analyses of responses from over five thousand students; validation is important because it addresses the dependability of the prompts, which can be influenced by multiple difficult-to-control factors. They designed this survey such that expert-like responses could either be in strong agreement or disagreement with the written statements. Their analysis produced eight statistically robust categories: real-world connection (4 statements), personal interest (6 statements), general problem solving (8 statements), problem-solving confidence (4 statements), problem-solving sophistication (6 statements), sense making/effort (7 statements), conceptual connections (6 statements), and applied conceptual understanding (7 statements). Some statements were not associated with an expert consensus, so they were not used to determine (un)favorable shifts.
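The favorable/unfavorable coding described above can be sketched as follows. This is an illustration under stated assumptions rather than the scoring procedure used in this study: it assumes that responses 4 and 5 are collapsed into agreement, 1 and 2 into disagreement, and 3 is treated as neutral, and that the expert direction for each statement is supplied by the caller. The function name is hypothetical.

```python
def score_response(response, expert_direction):
    """Classify one 1-5 Likert response against the expert consensus.

    expert_direction is "agree" or "disagree". Statements without an expert
    consensus are passed as None and are left unscored (returns None).
    """
    if expert_direction is None:
        return None
    if expert_direction not in ("agree", "disagree"):
        raise ValueError("expert_direction must be 'agree', 'disagree', or None")
    if response not in (1, 2, 3, 4, 5):
        raise ValueError("response must be an integer from 1 to 5")
    if response == 3:
        return "neutral"
    # Assumption: 4-5 count as agreement and 1-2 as disagreement.
    student_direction = "agree" if response > 3 else "disagree"
    return "favorable" if student_direction == expert_direction else "unfavorable"
```

For a statement on which experts disagree, `score_response(1, "disagree")` is favorable and `score_response(5, "disagree")` is unfavorable, which mirrors the point that an expert-like response can lie at either end of the scale.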

2.3. Data Analysis

For the course surveys, separate data analysis was conducted based on item type. For open-ended prompts, two researchers independently used inductive coding to analyze the students’ responses. We focused on prompts addressing the most memorable and most challenging experiences for each course component. After the first round of inductive coding for the first prompt (the most memorable experience from lectures), peer debriefing was used to help establish internal validity. This process was repeated with the remaining prompts. Each time a new code was created, all the previously coded responses were re-coded with the new code. Once all responses were analyzed, and differences in coding were reconciled with 100% agreement, we used conventional content analysis [19] to categorize the codes and identify relationships between categories based on concurrences, antecedents, and/or consequences. For the Likert-scale items, we summarized the responses to prompts about the implementation of PBI. Unanswered prompts or vague responses (e.g., responses with multiple interpretations) were not included in the analysis.

For the course grades corresponding to the group research reports and the individual contributions to the group, we determined the average scores for each by cohort. In the Dutch grading system, 5.5 out of 10 is the minimum passing grade.

For the CLASS, we used a unique identifier (i.e., a string of numbers and letters) associated with each student to match responses from those who completed both the pre-test and post-test. Their pre-test and post-test responses were plotted on a two-dimensional scale to show (any) shifts, with plots created from aggregated responses with respect to categories and statements. These shifts were compared with expert physicist responses and used to investigate how changes in students’ beliefs related to their PBI experiences (third DBIR principle) and to reflect on future directions for the course with respect to the goals of (applied) physics and astronomy bachelors’ programs (fourth DBIR principle).
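The matching step can be illustrated with a short sketch. It assumes that each administration of the survey is stored as a mapping from a student's identifier to that student's list of responses; the identifiers and responses below are invented for illustration and the function name is hypothetical.

```python
def match_pre_post(pre, post):
    """Return {student_id: (pre_responses, post_responses)} for IDs found in both."""
    shared_ids = sorted(set(pre) & set(post))
    return {sid: (pre[sid], post[sid]) for sid in shared_ids}


# Hypothetical identifiers and responses, for illustration only.
pre = {"a1b2": [4, 2], "c3d4": [3, 5], "e5f6": [2, 2]}
post = {"a1b2": [5, 1], "e5f6": [2, 3], "g7h8": [4, 4]}
matched = match_pre_post(pre, post)
```

Students who completed only one of the two administrations (here `c3d4` and `g7h8`) are dropped, so every shift is computed within the same individual.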

3. Results from the First Implementation

After submitting their report of the group work, students received a link to the course survey; response rates were 74% (n = 145) for Survey 1, 63% (n = 125) for Survey 2, and 41% (n = 81) for Survey 3. The responses to open-ended prompts about the most memorable and challenging experiences for each course component were categorized into three non-mutually exclusive groups: cognition (C), social interaction (SI), and learning environment (LE). Cognition responses addressed students’ conceptual understanding and application of specific topics. Social interaction responses addressed students’ interactions with the instructor, TAs, group members, lab staff, and people in general. Learning environment responses addressed students’ reactions to the course design (e.g., online format and assessment modes), multimedia resources (i.e., physical demonstrations, YouTube videos, simulations, and apps), and use of specialized lab equipment (e.g., interferometer). A few responses for their most memorable experience were the same as their responses for the most challenging experience. Table 2 shows sample student responses for each category.
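The reported response rates are consistent with dividing the number of respondents by the first-cohort enrollment of 197 students stated in Section 2.1. That this was the denominator used is an inference from the numbers, and the sketch below simply checks the arithmetic; the function and variable names are hypothetical.

```python
ENROLLED_FIRST_COHORT = 197  # first-cohort size stated in Section 2.1

# Respondent counts reported in the text.
respondents = {"Survey 1": 145, "Survey 2": 125, "Survey 3": 81}


def response_rate(n_respondents, n_enrolled):
    """Response rate as a percentage, rounded to the nearest whole number."""
    return round(100 * n_respondents / n_enrolled)


rates = {
    name: response_rate(n, ENROLLED_FIRST_COHORT)
    for name, n in respondents.items()
}
```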

Table 2.

Categories and sample student responses.

Table 3 shows the distribution of responses by category (C, SI, and LE) for the first two surveys. The changes in students’ responses between the first and second surveys, by category, did not differ by more than 3% except for the most memorable aspect of tutorials with respect to cognition (−8%) and social interaction (+17%) and for the most challenging aspect of lectures with respect to cognition (−15%) and learning environment (+14%). We look at these changes in further detail in the discussion section.

Table 3.

Categorized responses to open-ended prompts for Survey 1 → Survey 2.

Responses to the Likert-scale items on the second and third surveys were similar, in that students said they valued understanding physics concepts, acquiring mathematical proficiency to solve problems, learning to design experiments, and productively collaborating with peers. Students also indicated that, compared with other physics courses, the course helped to improve their conceptual understanding of physics and, to a lesser extent, their mathematical skills. Although most of the responses indicated that students’ engagement and time spent on this course were higher than in their other physics courses, and that they would like more courses to make use of the problem-based instruction approach, these findings cannot be conclusively attributed to the effects of PBI implementation due to the lack of a control group.

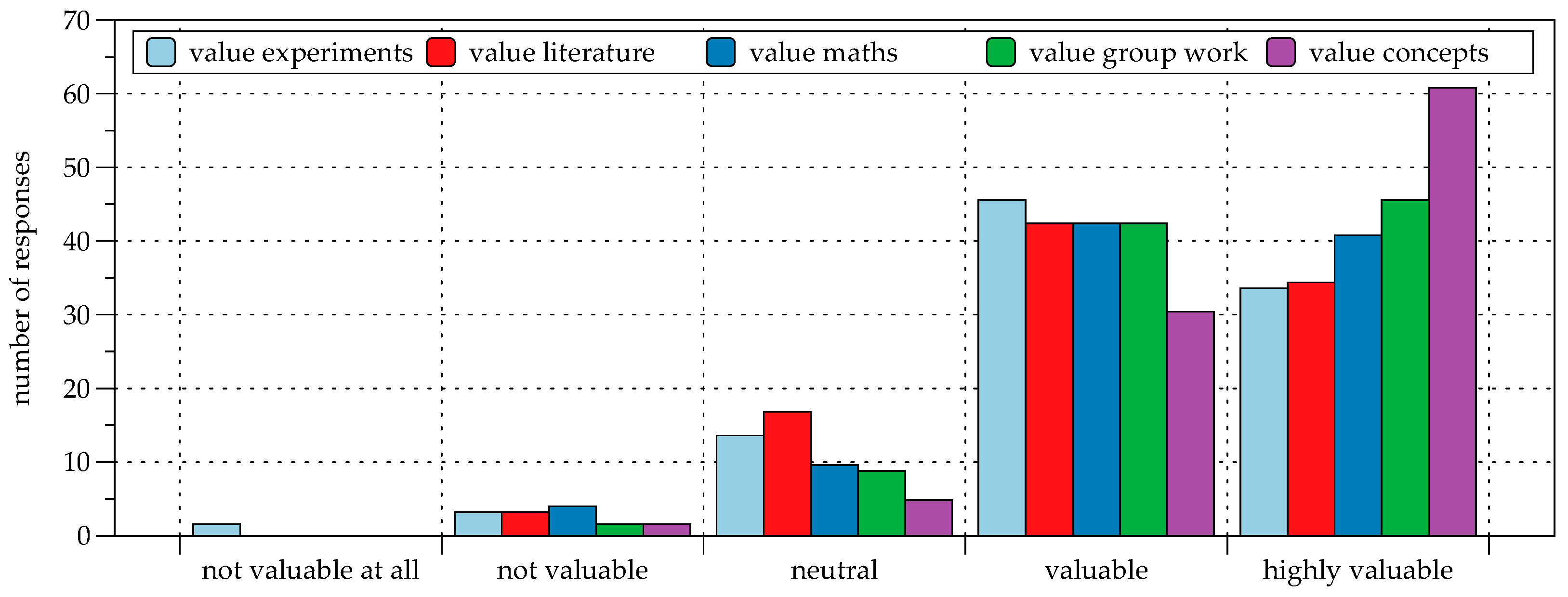

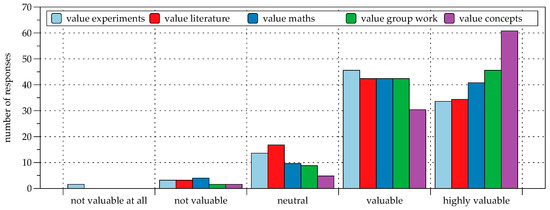

A summary of students’ responses to the third survey with respect to their perceived value of aspects of the group report closely related to PBI (experimental work, literature review, mathematical skills, group work, and conceptual understanding) is shown in Figure 2. Overall, most students indicated that every aspect was valuable or highly valuable.

Figure 2.

Results from the third survey with respect to how much students personally valued their development in the areas of conducting experiments, reviewing the literature, applying mathematical problem solving, completing group work, and understanding concepts.

With respect to students’ grades, their mean scores on the three group research reports were similar (7.9, 8.3, and 8.2) and ranged between 5.5 and 9.5. For their overall contribution to the group by the end of the course, the mean score was 9.7 and ranged between 5 and 10. Almost all the students passed the course.

Given the overall positive response from the surveys, we continued the PBI implementation with a few changes, which are described in the next section.

4. Results from the Second Implementation

Using students’ responses to the course surveys from the first implementation, we made two adjustments to the course in the following year. First, we reduced the number of topics for each tutorial section from three to two topics (see Table 4) so students would have more time to develop their projects, and the deadlines for these projects would not coincide with deadlines for their other courses. The topics that produced the most diverse questions/project from the first iteration, based on feedback from TAs and students, were retained for the second implementation. Second, we reduced the maximum number of student groups per TA from seven to five to allow more time for each group to interact with their TAs. These changes were implemented to provide students additional time to design and carry out their investigations.

Table 4.

Tutorial sections with associated topics.

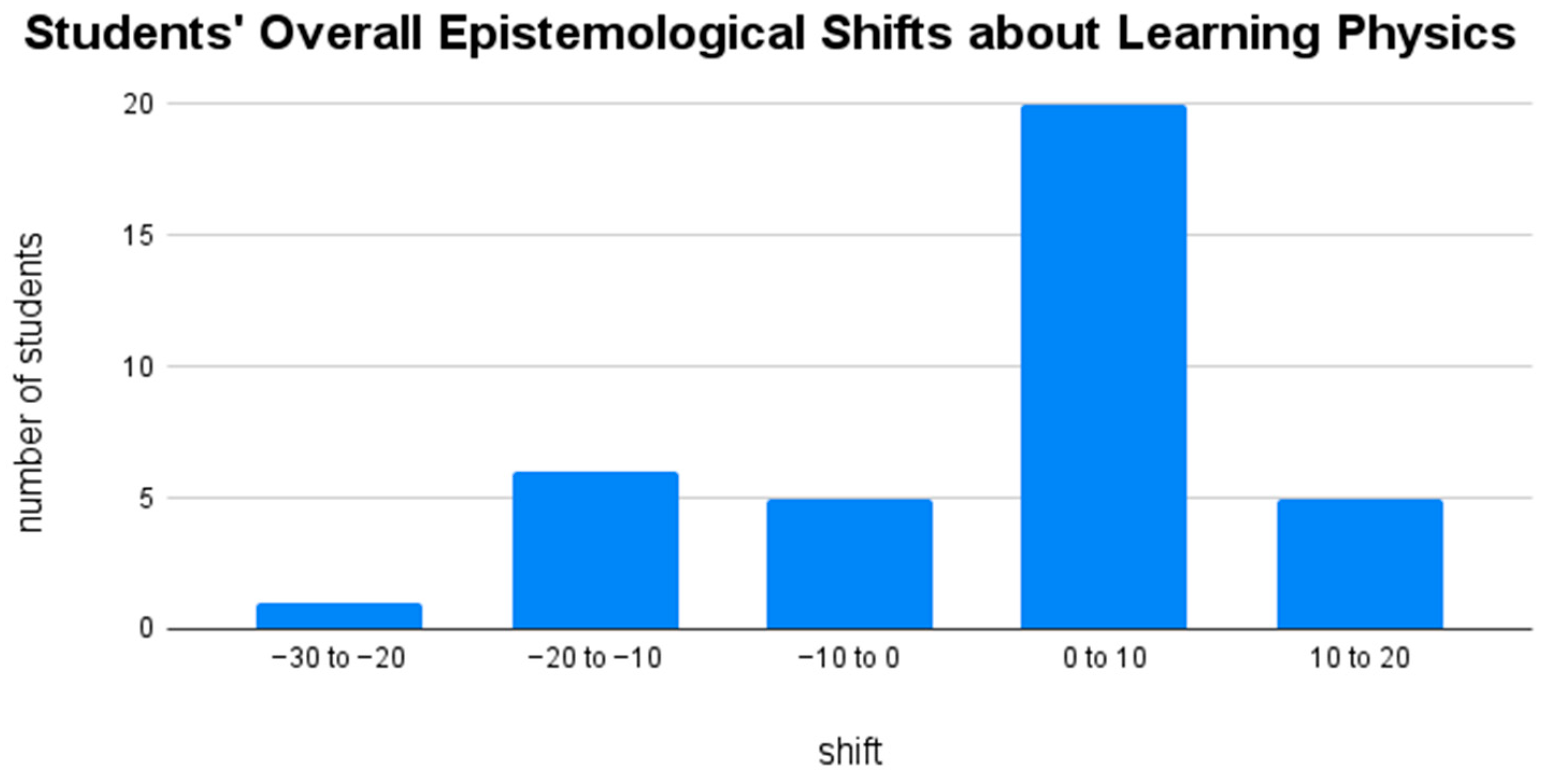

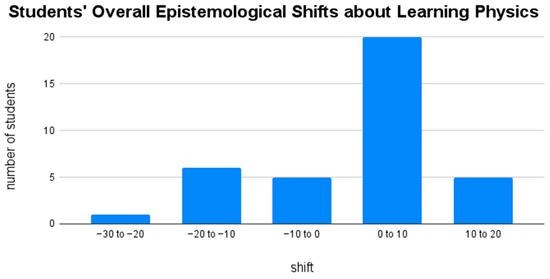

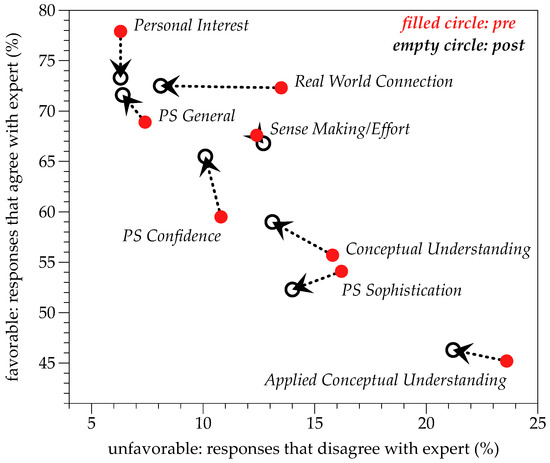

Of the 132 students in this cohort, 28% (n = 37) completed both the pre-test and post-test for the CLASS. Figure 3 shows that most of the students’ responses to the CLASS statements showed a slight shift towards overall expert-like epistemological beliefs in physics and learning physics (i.e., favorable responses).

Figure 3.

Average shifts in students’ overall epistemologies, based on the CLASS.

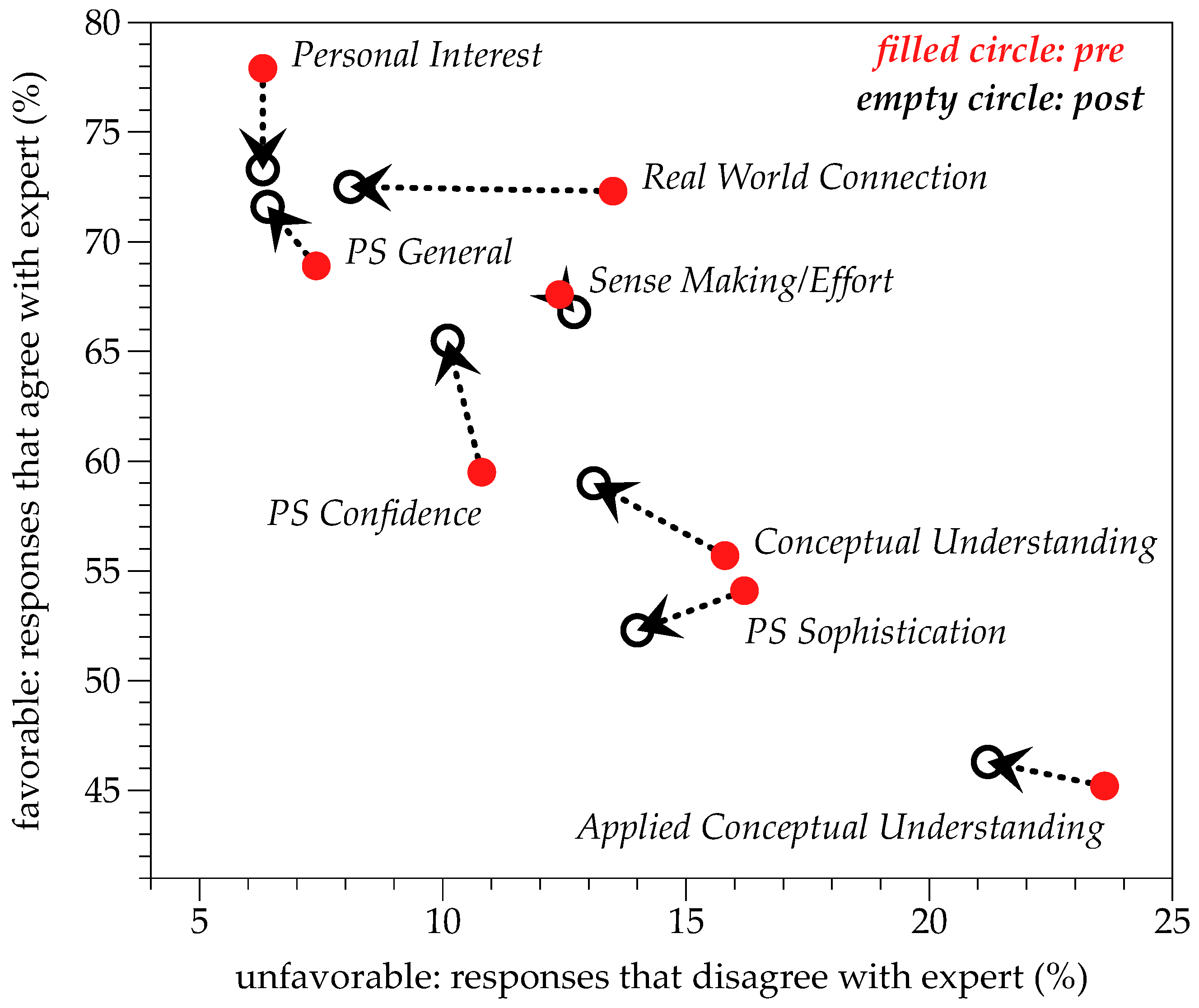

When these shifts are examined by CLASS category, the only (slight) overall negative shift towards novice-like epistemological beliefs (i.e., unfavorable responses) occurred in the category of sense making/effort. An overview of these shifts, by category, is shown in Figure 4 with respect to the aggregated favorable and unfavorable responses. For instance, the aggregate responses on the pre-survey for the problem-solving confidence (PS Confidence) category were 59% favorable and 11% unfavorable; on the post-survey, the aggregate responses shifted to 66% favorable and 10% unfavorable. Neutral responses, which are neither favorable nor unfavorable, account for the remainder, which is why the favorable and unfavorable percentages sum to less than 100%.
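The neutral share for the PS Confidence example follows by subtraction from the reported favorable and unfavorable percentages. The short sketch below works through that arithmetic; the function and variable names are hypothetical.

```python
def neutral_share(favorable_pct, unfavorable_pct):
    """Percentage of responses that are neither favorable nor unfavorable."""
    total = favorable_pct + unfavorable_pct
    if not 0 <= total <= 100:
        raise ValueError("favorable and unfavorable must sum to between 0 and 100")
    return 100 - total


# (favorable %, unfavorable %) for PS Confidence, as reported in the text.
ps_confidence = {"pre": (59, 11), "post": (66, 10)}

neutral = {phase: neutral_share(*pcts) for phase, pcts in ps_confidence.items()}
favorable_shift = ps_confidence["post"][0] - ps_confidence["pre"][0]
unfavorable_shift = ps_confidence["post"][1] - ps_confidence["pre"][1]
```

Under this reading, the neutral share for PS Confidence falls from 30% to 24%, so most of the 7-point gain in favorable responses comes from previously neutral responses rather than from unfavorable ones.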

Figure 4.

Average shifts in students’ epistemologies by category, from pre-survey (red-filled circles) to post-survey (black empty circles) responses on the CLASS.

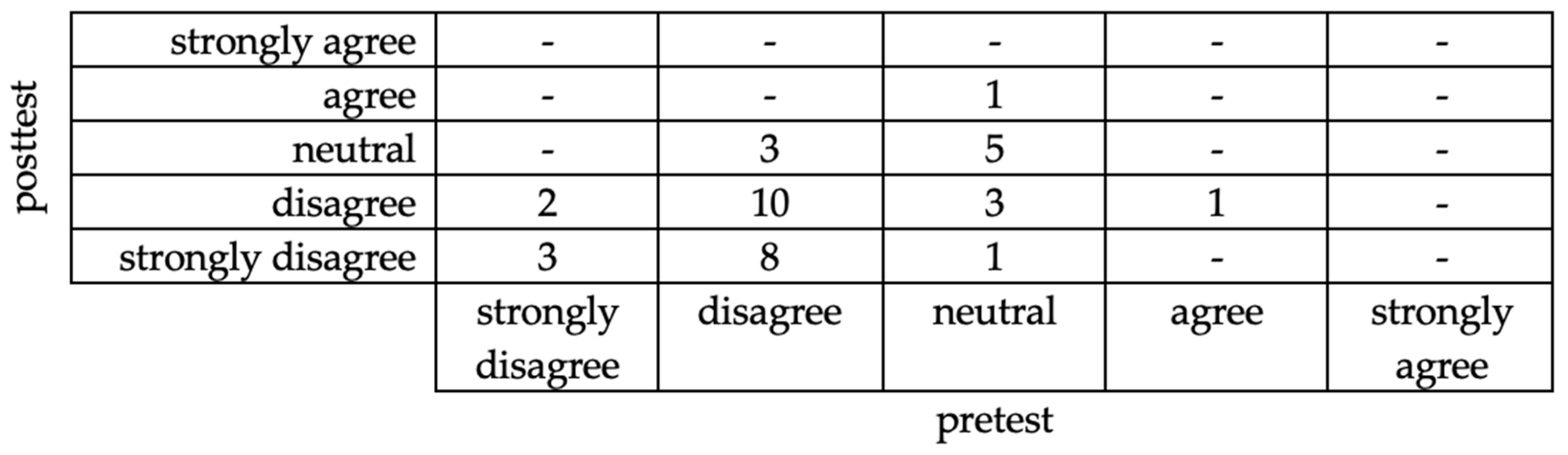

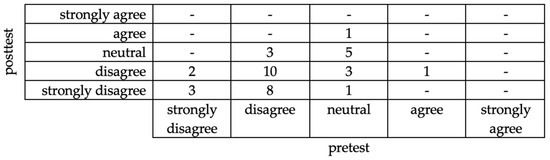

As described earlier, each CLASS category comprised multiple statements, with some statements present in more than one category and a few statements that were not present in any category (but still contributing to the overall measure of students’ epistemologies). Figure 5 shows the distribution plot for Statement 6, which addresses conceptual connections and applied conceptual understanding, with the expert response as strongly disagree. As shown in Figure 5, 18 students showed no change from pre-test to post-test, 13 students showed favorable shifts (more expert-like), and 6 students showed unfavorable shifts (more naïve). Only a few statements produced overall unfavorable shifts in most of the students who responded. Given the relatively low number of responses, the relatively small changes in the epistemological beliefs are inconclusive.
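One way to tally per-student shifts for a single statement is sketched below. It assumes that a shift is any movement along the 5-point scale between pre-test and post-test, counted as favorable when it moves towards the expert end; the study may have defined shifts differently. The (pre, post) pairs are invented for illustration and the function names are hypothetical.

```python
from collections import Counter


def classify_shift(pre, post, expert_direction):
    """Label one student's pre-to-post change relative to the expert response."""
    if expert_direction not in ("agree", "disagree"):
        raise ValueError("expert_direction must be 'agree' or 'disagree'")
    delta = post - pre
    if expert_direction == "disagree":
        # Moving down the scale is expert-like when experts disagree.
        delta = -delta
    if delta > 0:
        return "favorable"
    if delta < 0:
        return "unfavorable"
    return "no change"


def tally_shifts(pairs, expert_direction):
    """Count favorable, unfavorable, and unchanged students for one statement."""
    return Counter(classify_shift(pre, post, expert_direction) for pre, post in pairs)


# Hypothetical (pre, post) responses to a statement whose expert response is
# disagreement, as is the case for Statement 6.
pairs = [(2, 1), (3, 3), (1, 2), (4, 2)]
counts = tally_shifts(pairs, "disagree")
```

Applied to the 37 matched responses for Statement 6, a tally of this kind would yield the three counts reported above, which sum to the number of matched students.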

Figure 5.

Distribution plot of pre-test and post-test responses for Statement 6: “Knowledge in physics consists of many disconnected topics.”

The students’ mean scores on the two group research reports were similar (7.8 and 8.0) and ranged between 5 and 10. For their contributions to the group, the mean score was 8.0 and ranged between 4 and 10; the scoring process was adjusted from the previous year to avoid a ceiling effect on their grades.

Overall, our results from implementing PBI in the Waves and Optics course showed that the participating students’ beliefs about physics and learning physics shifted slightly towards more expert-like perspectives, contrasting with studies that found students’ beliefs about physics and physics learning in courses using problem-based learning shifted towards more novice-like perspectives [20,21].

5. Discussion

The main contribution of our work is the documentation of PBI implementation in a physics course in a Dutch higher education context. Our findings have implications for European universities, providing a potential model of how PBI may be implemented in a (large-scale and online) physics course. The intersection of PBI, higher education, physics education, and epistemology is a unique combination of fields that are synergistic with each other in supporting a common goal—providing students with opportunities to develop sophisticated beliefs about physics and learning physics that can contribute to their development of 21st-century skills.

Our research questions focused on students’ (1) responses to PBI during the first year of implementation, and (2) changes in beliefs about physics and learning physics during the second year of implementation. Our findings were tempered by limitations to the study, which included a low response rate to the surveys, lack of a control group, and the imposed COVID-19 restrictions. Because students were not required to complete the surveys, the low response rate introduces a self-selection bias, and the respondents may not be representative of the students in the entire course; our findings likely over-represent students with high self-motivation and course performance. The lack of a control group meant that other factors, such as a preference for online learning or an inspiring instructor, could have contributed to our observations and findings. Lastly, the COVID-19 restrictions limited the number of students to two per group (out of a group of four) in the lab at any time, affecting contributions to the group work and dynamics.

From the course surveys administered during the first year of PBI implementation, students expressed challenges using the online learning environment (i.e., technological issues) and appreciation for the opportunities to interact with other people in person after only being able to interact with them virtually during the pandemic (social interaction), in addition to the opportunity to work with the various scientific instruments (learning environment). While Figure 2 shows that students valued conceptual understanding, those who responded to the course surveys indicated they found conceptual understanding difficult to achieve. These findings suggest that from an instructional perspective, TAs needed to take on the role of a coach or mentor rather than that of an authoritative source of knowledge. While the role of a coach or mentor is challenging for some TAs to fulfill, especially those with a deep familiarity with the topics, it is important to support the groups in independently developing their own experimental designs.

From the CLASS administered during the second year of PBI implementation, respondents showed a slight positive shift towards more expert-like beliefs about physics and learning physics. Figure 4 indicates that students’ responses regarding their beliefs about learning physics, with respect to problem-solving confidence, real-world connection, and conceptual understanding, showed the greatest shifts towards more expert-like beliefs by the end of the course. While these shifts represent a tentative signal of the positive impacts of implementing PBI in the Waves and Optics course, increasing the student response rate in future iterations of this course could help improve that signal. The students who responded to the surveys about the implementations of PBI in the Waves and Optics course expressed favorable experiences and displayed slightly favorable shifts in their beliefs about physics and learning physics, despite the challenges due to COVID-19 restrictions. However, these findings reflect a self-selection bias towards high-performing students, which suggests that one shortcoming of PBI is that it may not be as beneficial for low-performing students. These students may find PBI challenging, especially since PBI requires students to view knowledge as complex, uncertain, and evolving, rather than simple, certain, and fixed. Low-performing students may also exhibit lower motivation and lack the prior physics knowledge needed to successfully engage with PBI, making it difficult to collaborate with their group members. One aspect of students’ responses from the course surveys that did not seem to be highlighted in the literature was the impact of negative experiences when collaborating with others; future work is needed to investigate how negative collaborations influence students’ beliefs about physics and learning physics.

Our findings have practical implications for adapting courses to support all students’ development of sophisticated epistemological beliefs. One of the main challenges associated with implementing PBI was maintaining coherence between course components. For instance, the individual homework assignments were aligned with the lectures during the first year, while the tutorials were relatively disconnected from the lectures (since students were asked to develop their own ideas about each tutorial topic to investigate); this misalignment was problematic because students indicated a lack of conceptual understanding with the topics addressed during tutorials. In the second year, the individual homework assignments were revised to align with the tutorial topics, which resulted in a notable decrease in student attendance during lectures. One possible explanation for this decrease may be that students were not assessed on lecture materials, which addressed the entire Waves and Optics curriculum instead of focusing on select topics in the course. To address this concern, future implementation could involve graded reading quizzes.

Author Contributions

Conceptualization, M.L., C.J.K.L. and S.H.; methodology, M.L.; software, C.J.K.L.; validation, M.L. and C.J.K.L.; formal analysis, M.L., C.J.K.L. and S.H.; investigation, C.J.K.L. and S.H.; resources, not applicable; data curation, M.L. and C.J.K.L.; writing—original draft preparation, M.L., C.J.K.L. and S.H.; writing—review and editing, M.L., C.J.K.L. and S.H.; visualization, C.J.K.L. and S.H.; supervision, M.L. and S.H.; project administration, M.L.; funding acquisition, S.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

This study was conducted in accordance with the Declaration of Helsinki and approved by the Institutional Review Board (or Ethics Committee) of the Faculty of Science and Engineering of the University of Groningen (Protocol Code 71241445 on 25 January 2022).

Informed Consent Statement

Informed consent was waived for all subjects involved in the study, as survey responses were anonymous.

Data Availability Statement

Data from the course survey and the CLASS are available from the authors upon request.

Acknowledgments

The authors would like to thank the Waves and Optics students and teaching assistants for their participation in this project, and Maxim Pchenitchnikov and Aleksandra Biegun for their support in the design, development, and implementation of the course. C.J.K.L. gratefully acknowledges support from the International Max Planck Research School for Astronomy and Cosmic Physics at the University of Heidelberg in the form of an IMPRS PhD fellowship.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Figure A1.

Sample document shared with student groups during tutorials for the topic focusing on “colors of insects and birds” developed by one of the teaching assistants.

Appendix B

Table A1.

Learning objectives for Waves and Optics (PBI implementation).

| Learning Objectives |

|---|

|

|

|

|

|

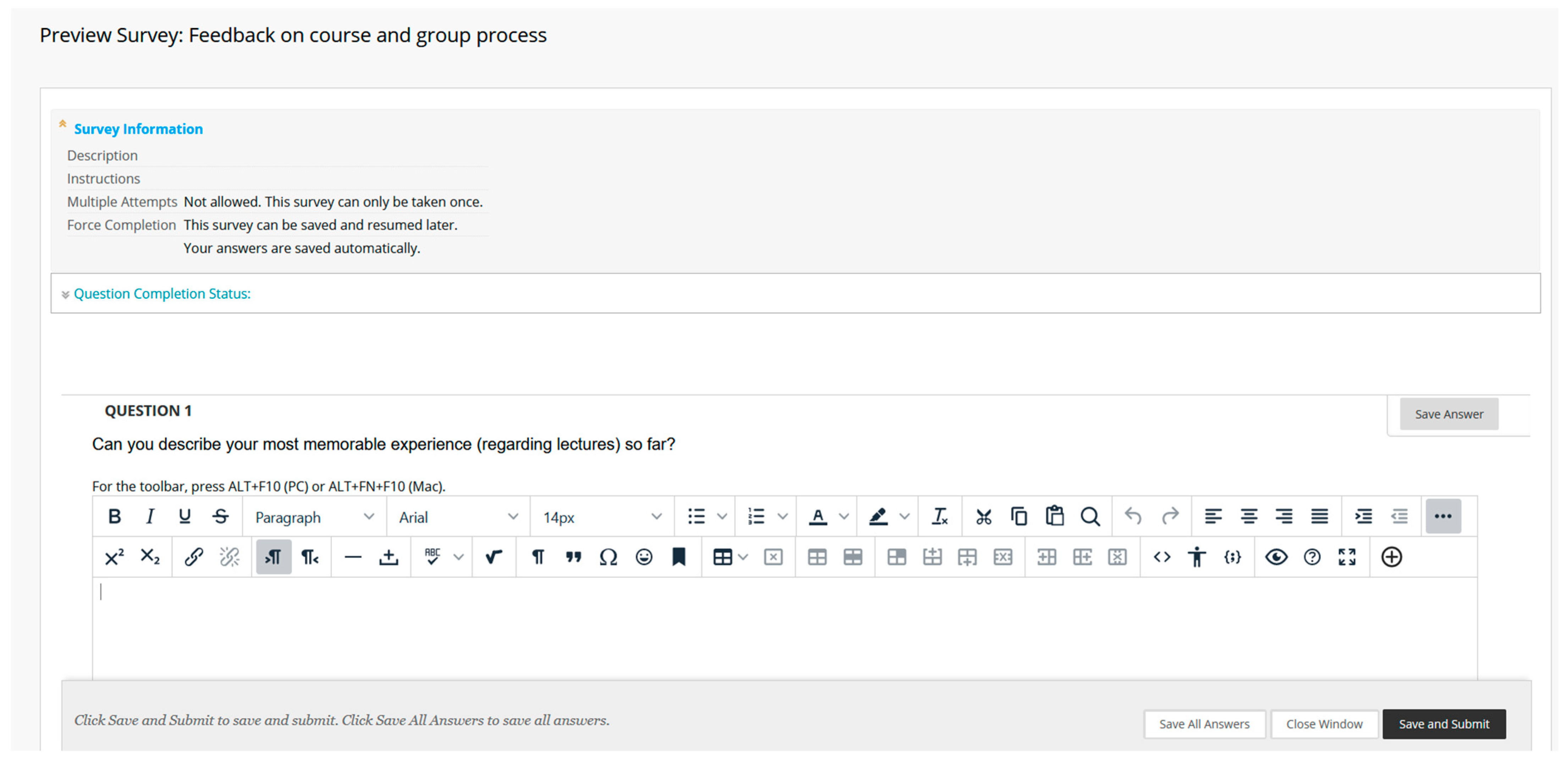

Appendix C

Appendix C.1. Open-Ended Prompts (Course Survey 1, Course Survey 2)

- Can you describe your most memorable experience (regarding lectures) so far?

- Can you describe your most challenging experience (regarding lectures) so far? How did you address it?

- Can you describe your most memorable experience (regarding tutorials) so far?

- Can you describe your most challenging experience (regarding tutorials) so far? How did you address it?

- Can you describe your most memorable experience (regarding experiments) so far?

- Can you describe your most challenging experience (regarding experiments) so far? How did you address it?

- Do you have any other comments/questions regarding the lectures, tutorials, and experiments? There is a separate question concerning group work, and you can also use the feedback box for anonymous feedback.

- Is there anything else you would like your TA to know regarding the group process? If you are in a group of 5, please give the feedback on the 5th team member here.

Appendix C.2. Likert-Scale Items (Course Survey 2, Course Survey 3)

- In this question we ask you to rate your team members on three categories of group work: peer supportiveness, learning preparedness and negotiating differences. The answers are confidential and will only be seen by your TA. They are used as input for the discussion of the group performance. Only at the end of the course you get an individual grade on your contribution to the group process. You will however get feedback following each report.

A team member with high peer supportiveness checks in with members about understandings of physics content and lab and help bridge any gaps, explicitly explains rationales for decisions about lab plans, encourages each member to share ideas and reasoning … not dismissive of others’ ideas, keeps group on track so they do not get off-topic too much.

A team member with high learning preparedness carried out tasks between meetings so they are prepared to participate during tutorials, has a targeted goal to complete for tutorial, explains ideas in own words, expresses responsibility for own understanding, looks for inconsistencies between physics content and lab plans and is able to explain any inconsistencies, checks self-understanding with members’ understandings.

A team member with good negotiation of differences will discuss disagreements by providing rationale for disagreements-possibly suggest alternative ideas, identify commonalities/differences in disagreements to help resolve concerns.

Please rate your team members with a score of 1,2,3, or 4, with 1 meaning a low score on that skill, and 4 a high score on that skill.

I would rate my team member [member1] (name) on peer supportiveness [ps1] (rate 1–4), learning preparedness [lp1] (rate 1–4), negotiating differences [nd1] (rate 1–4). I would rate my team member [member2] (name) on peer supportiveness [ps2] (rate 1–4), learning preparedness [lp2] (rate 1–4), negotiating differences [nd2] (rate 1–4). I would rate my team member [member3] (name) on peer supportiveness [ps3] (rate 1–4), learning preparedness [lp3] (rate 1–4), negotiating differences [nd3] (rate 1–4).

- Compared to other physics courses, how well do you think you are learning the intuition on physics concepts?

- Compared to other physics courses, how well do you think you are learning the technical (mathematical) skills required to solve problems?

- Compared to other physics courses, how well do you think you are learning to design, perform and interpret meaningful experiments?

- Compared to other physics courses, how well do you think you are learning to find, use and share literature resources for learning?

- Compared to other physics courses, how well do you think you are learning to work constructively with a group of peers?

- How valuable is it to you to understand the physics concepts?

- How valuable is it to you to be proficient with the technical (mathematical) skills required to solve problems?

- How valuable is it to you to be able to design, perform and interpret meaningful experiments?

- How valuable is it to you to be able to find, use, and share literature resources for learning?

- How valuable is it to you to work constructively with a group of peers?

- Compared to other courses you’ve taken, how would you rate your engagement with this course?

- Compared to other courses you’ve taken, how would you rate the amount of time you spent on this course?

- Based on your experience so far, would you like more of your courses to be based around problem-based learning?

- Has the teaching assistant for the tutorials been able to spend sufficient time with you and your team?

- Do you have any feedback on the online teaching implementation of the course? Think for example about the effectiveness of the lectures, tutorials, the problem-based learning, and the group work. Both positive and negative feedback is useful.

Appendix D

Figure A2.

Rubric used to assess students’ performance on the group reports.

Appendix E

Figure A3.

Rubric used to assess student contribution to the group process.

References

- National Research Council. Education for Life and Work: Developing Transferable Knowledge and Skills in the 21st Century; Pellegrino, J.W., Hilton, M.L., Eds.; The National Academies Press: Washington, DC, USA, 2012; ISBN 978-0-309-25649-0.

- Bao, L.; Koenig, K. Physics education research for 21st century learning. Discip. Interdiscip. Sci. Educ. Res. 2019, 1, 2.

- McNeil, L.; Heron, P. Preparing physics students for 21st-century careers. Phys. Today 2017, 70, 38–43.

- Hofer, B.K.; Pintrich, P.R. The development of epistemological theories: Beliefs about knowledge and knowing and their relation to learning. Rev. Educ. Res. 1997, 67, 88–140.

- Kardash, C.M.; Scholes, R.J. Effects of preexisting beliefs, epistemological beliefs, and need for cognition on interpretation of controversial issues. J. Educ. Psychol. 1996, 88, 260–271.

- Baxter Magolda, M.B. Students’ epistemologies and academic experiences: Implications for pedagogy. Rev. High. Educ. 1992, 15, 265–287.

- Tolhurst, D. The influence of learning environments on students’ epistemological beliefs and learning outcomes. Teach. High. Educ. 2007, 12, 219–233.

- Kek, M.Y.C.A.; Huijser, H. The power of problem-based learning in developing critical thinking skills: Preparing students for tomorrow’s digital futures in today’s classrooms. High. Educ. Res. Dev. 2011, 30, 329–341.

- Savery, J.R.; Duffy, T.M. Problem-based learning: An instructional model and its constructivist framework. Educ. Technol. 1995, 35, 31–38.

- Fishman, B.J.; Penuel, W.R.; Allen, A.-R.; Cheng, B.H.; Sabelli, N. Design-based implementation research: An emerging model for transforming the relationship of research and practice. Des. Based Implement. Res. Theor. Methods Ex. 2013, 112, 136–156.

- Moust, J.; Bouhuijs, P.; Schmidt, H. Introduction to Problem-Based Learning: A Guide for Students, 4th ed.; Routledge: London, UK, 2021; ISBN 978-1-00-319418-7.

- Penuel, W.R.; Fishman, B.J.; Cheng, B.H.; Sabelli, N. Organizing research and development at the intersection of learning, implementation, and design. Educ. Res. 2011, 40, 331–337.

- Brown, A.L. Design experiments: Theoretical and methodological challenges in creating complex interventions in classroom settings. J. Learn. Sci. 1992, 2, 141–178.

- Anderson, T.; Shattuck, J. Design-based research: A decade of progress in education research? Educ. Res. 2012, 41, 16–25.

- Debarger, A.H.; Choppin, J.; Beauvineau, Y.; Moorthy, S. Designing for productive adaptations of curriculum interventions. Teach. Coll. Rec. 2013, 115, 298–319.

- Cobb, P.; Confrey, J.; di Sessa, A.; Lehrer, R.; Schauble, L. Design experiments in educational research. Educ. Res. 2003, 32, 9–13.

- Collins, A.; Joseph, D.; Bielaczyc, K. Design research: Theoretical and methodological issues. J. Learn. Sci. 2004, 13, 15–42.

- Adams, W.K.; Perkins, K.K.; Podolefsky, N.S.; Dubson, M.; Finkelstein, N.D.; Wieman, C.E. New instrument for measuring student beliefs about physics and learning physics: The Colorado Learning Attitudes about Science Survey. Phys. Rev. Spec. Top. Phys. Educ. Res. 2006, 2, 010101.

- Miles, M.B.; Huberman, A.M. Qualitative Data Analysis: An Expanded Sourcebook; SAGE: Thousand Oaks, CA, USA, 1994; ISBN 978-0-8039-5540-0.

- Redish, E.F.; Saul, J.M.; Steinberg, R.N. Student expectations in introductory physics. Am. J. Phys. 1998, 66, 212–224.

- Sahin, M. Effects of problem-based learning on university students’ epistemological beliefs about physics and physics learning and conceptual understanding of Newtonian mechanics. J. Sci. Educ. Technol. 2010, 19, 266–275.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).