Feedback is considered one of the most effective strategies for improving student learning [1]. It can be defined as ‘information provided by an agent (e.g., teacher, peer, book, parent, self, experience) regarding aspects of one’s performance or understanding’ ([1], p. 81). More recently, feedback has also been conceptualized as ‘the new knowledge that students generate when they compare their current knowledge and competence against some reference information’ ([2], p. 757). The second definition elaborates on the first: feedback is not only about the information provided, but also about the comparison students make between their own work and other information (such as the teacher’s comments). Whether feedback has positive effects, however, depends greatly on how it is provided and perceived [1,3,4,5]. How feedback is perceived can differ within and between individuals and is shaped in part by the cultural context [1].

One aim of international large-scale assessments, such as the OECD’s Programme for International Student Assessment (PISA), is to compare classroom practices in educational systems across the world in order to provide policy recommendations based on the strengths and weaknesses of diverse educational systems. Given the critical importance of feedback for student learning, feedback has become a focus in PISA [6]. For such policy recommendations to be meaningful, PISA results must be comparable across countries. This study therefore evaluated the cross-country comparability of feedback in science classes as measured in PISA 2015.

1.1. Feedback across Culturally Different Countries

Differences between the cultures of countries can be characterized along several continua, but they are most often described using the individualism–collectivism continuum proposed by Hofstede in his seminal 1986 work [7]. There is evidence that this continuum, although it does not capture the full complexity of cultural differences, can serve as an indicator of certain teacher and student behaviours in the classroom [8,9]. It is important to note that the descriptions below are ideal-typical; many countries fall somewhere between these extremes. Generally, Asian and South American countries tend to be more oriented towards collectivism, whereas northwest European countries and the United States tend to be more oriented towards individualism [10].

Collectivism is characterized by a stronger we-consciousness: people are born into extended families or clans that protect them in exchange for loyalty. Teachers in countries at the collectivistic end of the spectrum tend to take a more teacher-centred approach, in which the teacher transfers knowledge to passive students [11,12]. The teacher is more likely to guide the student through each step of the process [13]. This is often accompanied by a stricter hierarchy between teachers and students, in which students respond only when the teacher calls on them personally. Students are typically not expected to ask for feedback [14]. As a result, students with more collectivism-oriented identities may be more accustomed to taking a passive role in the classroom than students with more individualism-oriented identities.

However, active student involvement is critical for feedback to be effective [15]. Specific cultural values, such as the power distance between teacher and student, can hinder the use of feedback in classrooms in the more collectivism-oriented countries [16,17]. This is in line with the observation that teachers in the more collectivism-oriented countries aim to give implicit, group-focused feedback [18]. Students in these countries also typically prefer this style of feedback, as it does not evaluate them in front of their peers and thus preserves group harmony [19].

Individualism, on the other hand, is characterized by a stronger I-consciousness: everyone is expected to take care of themselves and their immediate family only, and others are regarded as individuals [10]. Teachers in countries at the more individualism-oriented end of the spectrum tend to take a more student-centred approach in their classrooms, encouraging students to become independent thinkers and to take the initiative [11,12,13]. In the more individualism-oriented countries, two-way communication is more commonly encouraged as a classroom norm, and teachers often expect students to seek feedback and ask questions [10,18]. Students are thus expected to speak up when they have questions or when the teacher asks a question [10]. Teachers also have a degree of autonomy in their lessons and can therefore give more attention to individual differences [13]. Students in the more individualism-oriented countries typically prefer individual feedback [19].

As mentioned, in practice no country has all of the traits of either orientation. For example, in some Western countries, such as the Netherlands, teaching is not (yet) fully student-centred, and teachers find it difficult to give students more ownership of their own learning process [20]. Conversely, some students in the more collectivism-oriented countries of China, Singapore, and Chinese Taipei actually prefer more individual feedback [13]. In addition, a shift from teacher-centred to more student-centred teaching can be seen in educational contexts worldwide, albeit slowly. There is gradually increasing recognition that the student has a significant role to play in feedback processes, while adherence to the transmission model, in which the teacher tells students what to do, is slowly fading [21].

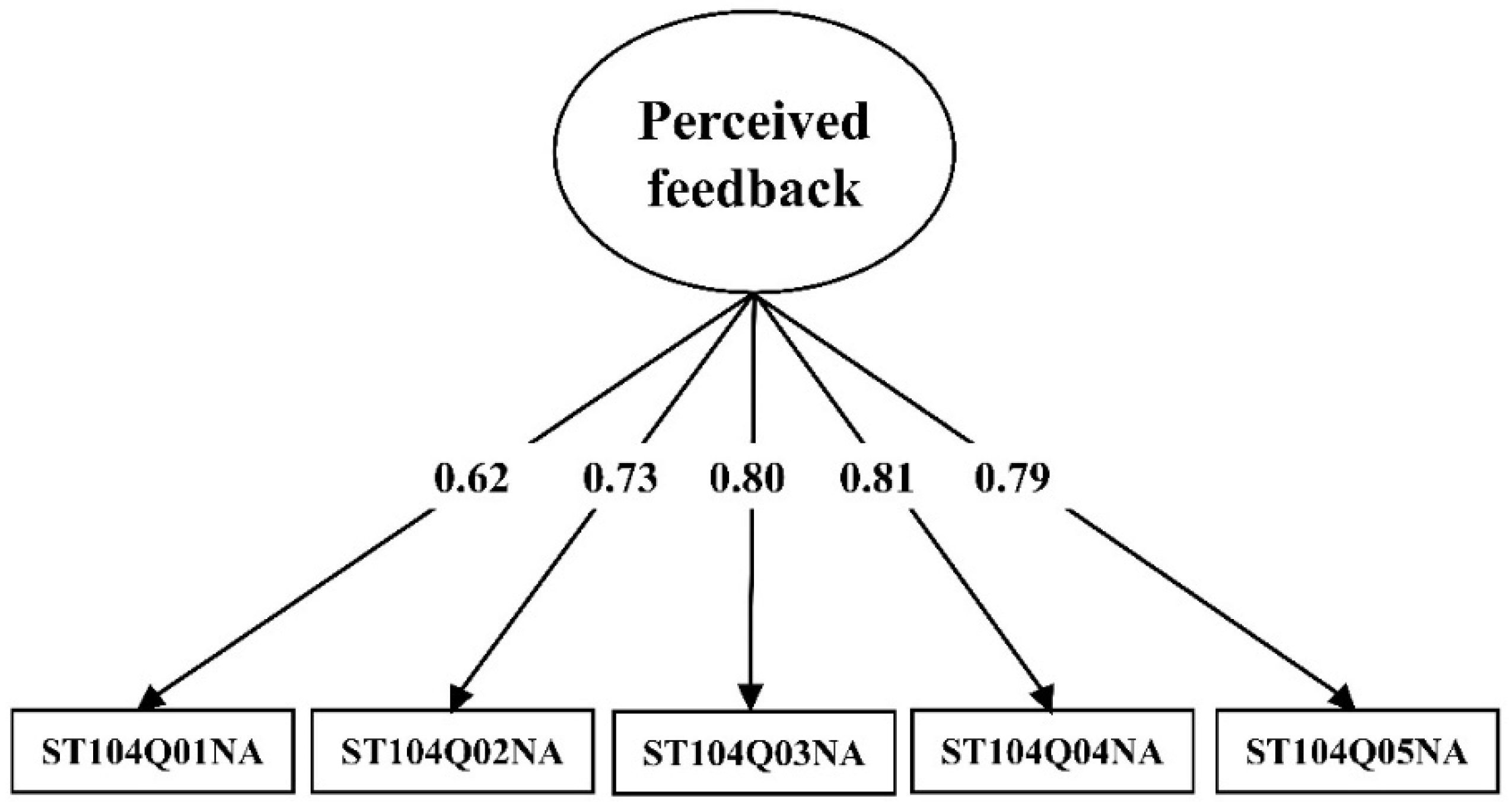

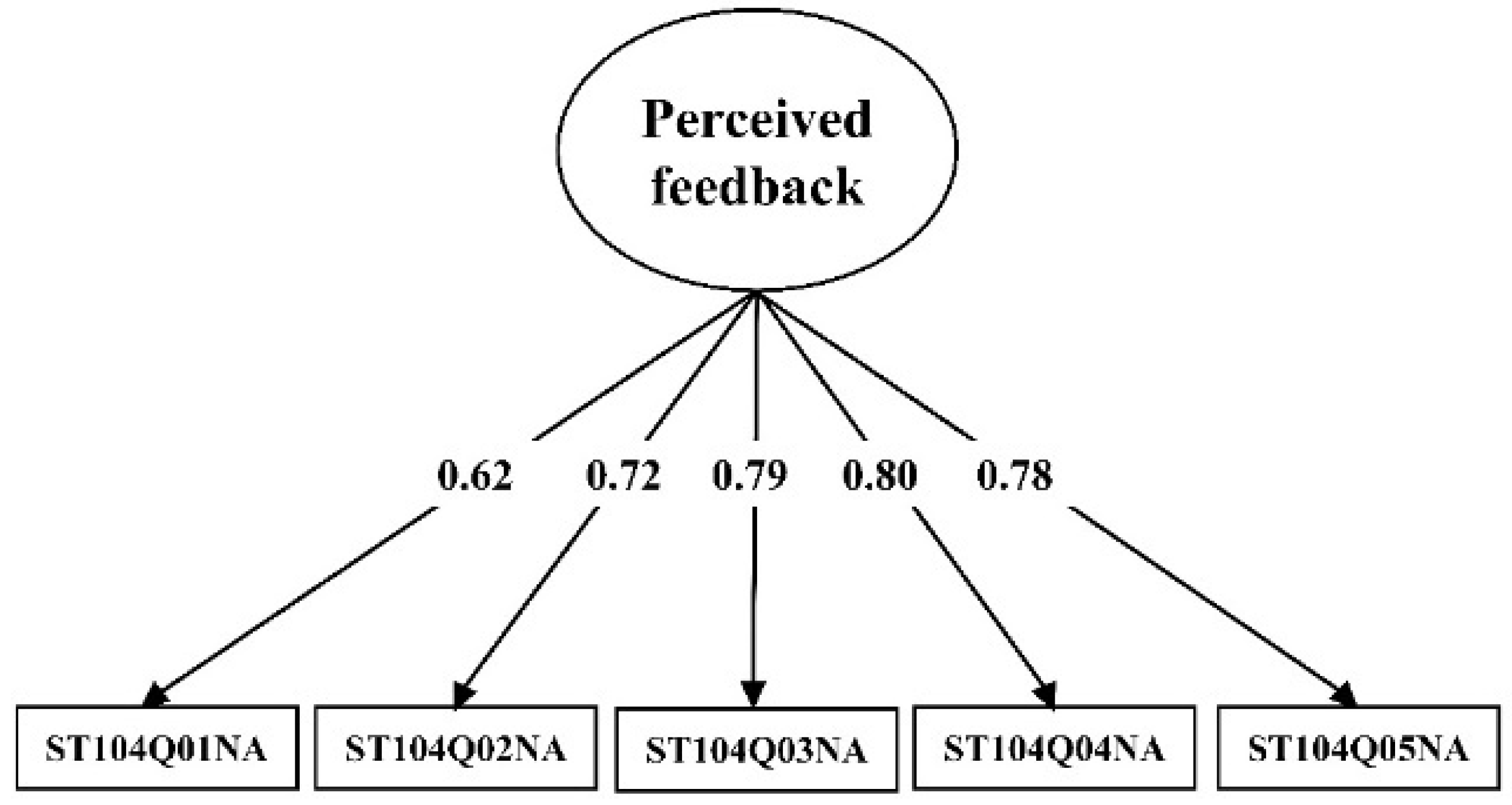

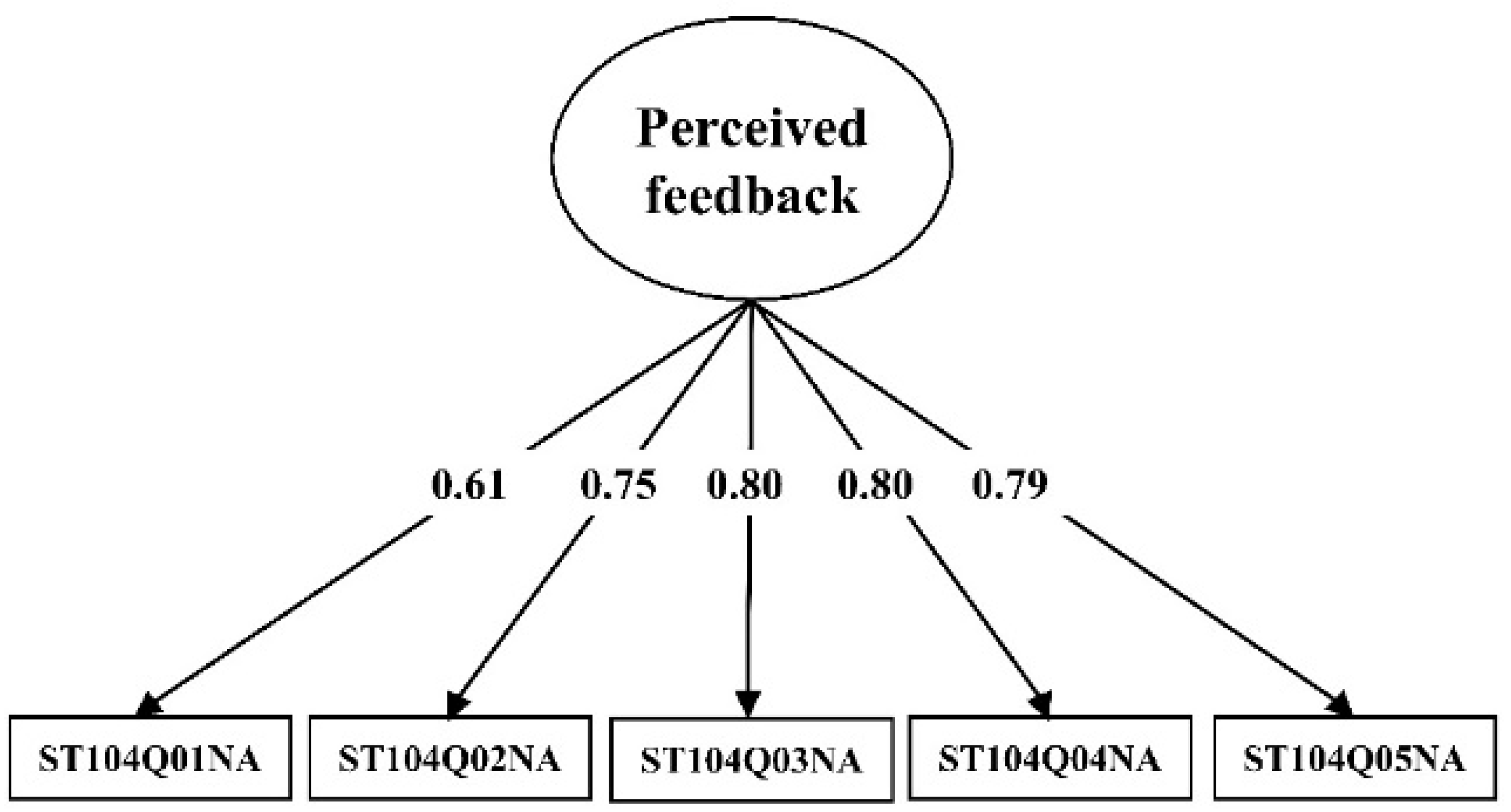

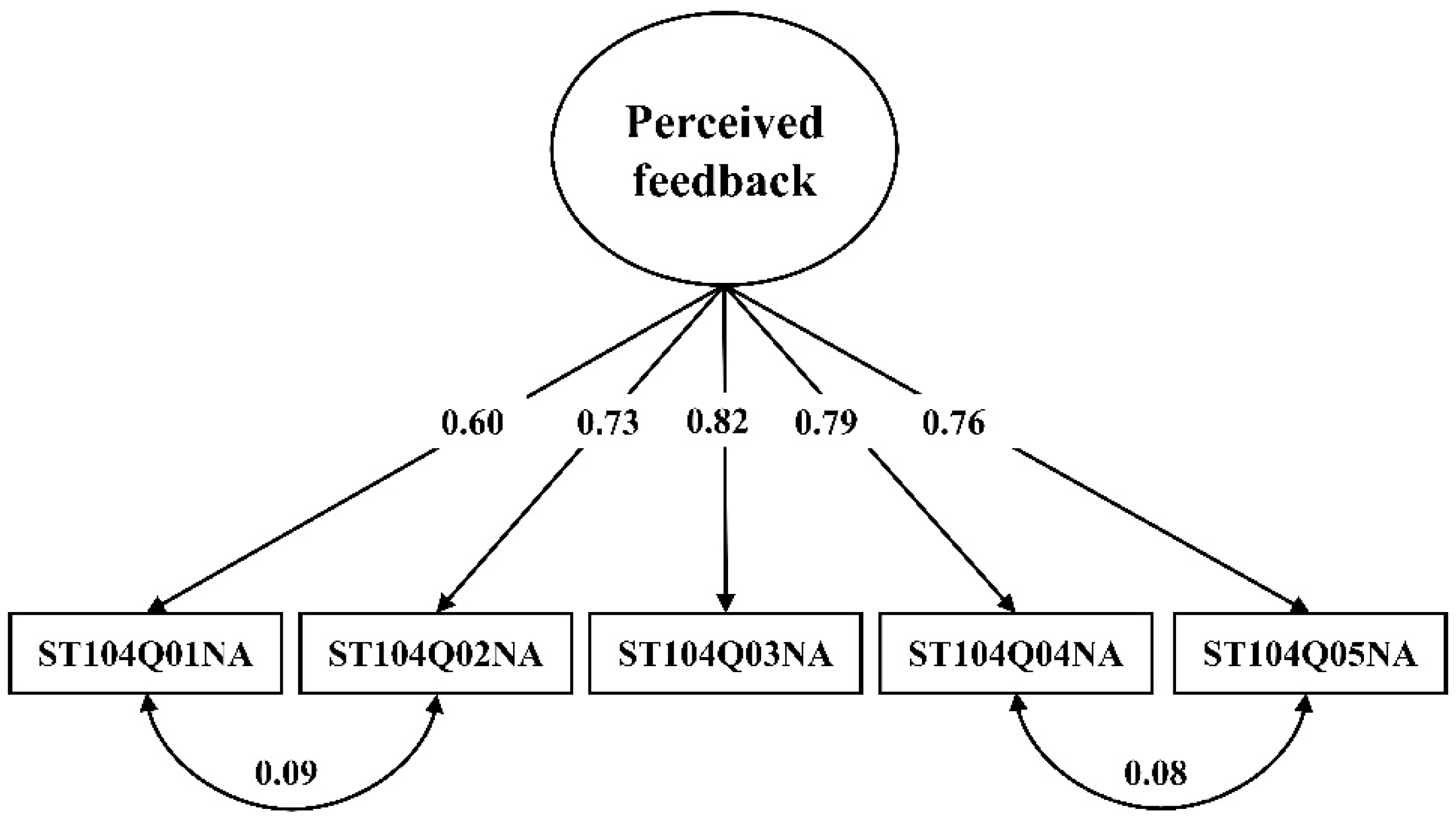

1.2. Feedback in PISA 2015

The data used in this study are from the PISA 2015 survey. PISA aims to map student achievement and educational background factors internationally, by country, giving greater insight into the effectiveness of classroom practices in educational systems worldwide. Every 3 years, PISA assesses the performance of 15-year-old students in several domains, most importantly science, reading and mathematics [6]. In addition, PISA administers background questionnaires to students, parents and teachers to gather information on the classroom practices and strategies used and on the (educational) background of students. Feedback was included in PISA 2015 to investigate its use in science classes as a strategy to improve student learning outcomes across countries worldwide [22]. However, constructs such as feedback must have the same meaning across countries for results to be compared meaningfully, and it is likely that the cultural context of a country affects the meaning of social constructs. The feedback scale implemented in PISA 2015 appears to be oriented towards the more individualism-oriented classroom: (1) all items focus on the relationship between the teacher and the individual student, and (2) all feedback is directed at the student in the first person singular (‘I’). This formulation seems better suited to the more individualism-oriented countries, where a more student-centred approach is typical [13], than to the more collectivism-oriented countries, in which implicit, group-related feedback is more often the norm [19]. Moreover, PISA items are usually developed in Western, more individualism-oriented countries, which may have given these countries greater influence over item content.
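Formally, this kind of comparability is commonly examined with a multi-group common-factor model. The sketch below uses the standard linear formulation as an illustrative assumption; the PISA feedback items are ordinal, so an analysis may instead use a categorical model with thresholds. Here $x_{ijg}$ is the response of student $i$ in country $g$ to item $j$, $\eta_{ig}$ is the latent feedback variable with country mean $\kappa_g$, $\tau_{jg}$ and $\lambda_{jg}$ are item intercepts and loadings, and $\varepsilon_{ijg}$ is a residual.

```latex
% Linear multi-group factor model (illustrative assumption)
x_{ijg} = \tau_{jg} + \lambda_{jg}\,\eta_{ig} + \varepsilon_{ijg},
\qquad
\mathbb{E}[x_{jg}] = \tau_{jg} + \lambda_{jg}\,\kappa_g

% Increasingly strict levels of measurement invariance across countries g
\begin{aligned}
\text{configural:} &\quad \text{same pattern of zero and non-zero } \lambda_{jg} \text{ in every } g\\
\text{metric:}     &\quad \lambda_{jg} = \lambda_j \ \text{for all } g\\
\text{scalar:}     &\quad \lambda_{jg} = \lambda_j \ \text{and}\ \tau_{jg} = \tau_j \ \text{for all } g
\end{aligned}

% Under scalar invariance, observed item-mean differences reflect latent ones only
\mathbb{E}[x_{jg}] - \mathbb{E}[x_{jh}] = \lambda_j\,(\kappa_g - \kappa_h)
```

Metric invariance is generally regarded as the minimum needed to compare associations across countries, such as the relationship between feedback and science achievement, whereas comparing feedback means additionally requires scalar invariance.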

Feedback can only be compared meaningfully across countries if the latent variable, in this case feedback, has the same meaning in these diverse countries. In other words, meaningful cross-country comparisons require the latent variable to meet the assumption of measurement invariance. In this study, we assessed whether this assumption holds for the feedback construct across culturally diverse countries, as we expected that feedback might be conceptually different across these countries. For example, we expected that the PISA 2015 feedback construct, which focuses entirely on teacher–student feedback, might be a better indicator of feedback in individualism-oriented countries than in collectivism-oriented countries. We therefore investigated two aspects related to the validity of the PISA 2015 scale for measuring feedback. The main research question was:

Research Question 1 (RQ1). To what extent is feedback comparable across culturally diverse nations?

The two sub-questions were:

Research Question 1a (RQ1a). To what extent are the feedback scores in PISA 2015 subject to measurement non-invariance across the more collectivism- and more individualism-oriented countries?

Research Question 1b (RQ1b). What is the impact of cultural diversity on the observed relationship between feedback and science achievement scores?
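A small numerical sketch illustrates why the non-invariance targeted by RQ1a matters. Everything in it is hypothetical: the five items, their loadings and intercepts, and the class name `GroupModel` are invented for illustration and are not PISA 2015 estimates. Two groups share the same latent feedback mean, but two item intercepts are lower in group B (scalar non-invariance). Applying the expected-value relation $\mathbb{E}[x_j] = \tau_j + \lambda_j\kappa$ shows that the observed scale means still differ.

```python
from dataclasses import dataclass


@dataclass
class GroupModel:
    """Hypothetical one-factor model for a single group (country)."""
    intercepts: list[float]  # tau_j, one per item
    loadings: list[float]    # lambda_j, one per item
    latent_mean: float       # kappa, mean of the latent feedback variable

    def expected_item_means(self) -> list[float]:
        # E[x_j] = tau_j + lambda_j * kappa (residuals have mean zero)
        return [t + l * self.latent_mean
                for t, l in zip(self.intercepts, self.loadings)]

    def expected_scale_mean(self) -> float:
        # Observed scale score taken as the unweighted mean of the items
        means = self.expected_item_means()
        return sum(means) / len(means)


# Identical loadings (metric invariance holds) and equal latent means ...
loadings = [0.8, 0.7, 0.9, 0.6, 0.75]
group_a = GroupModel(intercepts=[2.0, 2.0, 2.0, 2.0, 2.0],
                     loadings=loadings, latent_mean=0.0)
# ... but items 4 and 5 have lower intercepts in group B (scalar non-invariance)
group_b = GroupModel(intercepts=[2.0, 2.0, 2.0, 1.5, 1.5],
                     loadings=loadings, latent_mean=0.0)

gap = group_a.expected_scale_mean() - group_b.expected_scale_mean()
print(f"Observed scale-mean gap with equal latent means: {gap:.2f}")
```

Because the latent means are identical by construction, the entire 0.20-point gap is an artefact of the unequal intercepts; comparing observed scale scores directly would misread it as a difference in feedback.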