Abstract

With the advent of COVID-19, universities around the world have been forced to move to a fully online mode of delivery because of lockdown policies. This led to a flurry of studies into issues such as internet access, student attitudes to online learning and mental health during lockdown. However, researchers need a validated survey for assessing the classroom emotional climate and student attitudes towards learning in universities that can be used for online, face-to-face or blended delivery. Such a survey could be used to illuminate students’ perceptions of the experiences that make up learning at university level, in terms of such factors as care from teachers, collaboration and motivation. In this article, we report the validation of a University Classroom Emotional Climate (UCEC) questionnaire and an Attitudes to Learning scale, as well as their use in comparing the classroom emotional climate and attitudes during COVID-19 lockdown (fully online delivery) with post-lockdown (mixed-mode delivery). Female students experienced the post-lockdown condition significantly more positively than the lockdown condition for all scales except Care, whereas the only significant differences for males between the lockdown and post-lockdown conditions were in their choice to engage with learning (Control) and the degree of Challenge that they found with the learning materials.

1. Introduction

When governments closed educational institutions in 2020 to restrict the spread of COVID-19, online learning became the new normal around the world [1], with 90% of the world’s students being impacted [2]. In 10 case studies, Reimers, Amaechi, Banerji and Wang [3] document the remarkable collapse of opportunities to learn, and Reimers [4] provides a comparison of the short-term impact of the pandemic in 13 countries. Accompanying educational institutions’ rapid move to fully online delivery because of the pandemic, many researchers investigated the readiness of institutions for this transition and students’ experience of online learning at the university level. In Western Australia, at the beginning of Semester 1, 2020, the government put restrictions in place to limit the spread of the virus, such as social distancing, and asked that people work from home if possible. Even after these rules were eased, universities which had quickly shifted to fully online delivery continued to deliver all teaching in this format for the rest of Semester 1. By Semester 2, with few COVID-19 cases in Western Australia, some face-to-face teaching was reinstated.

Research into the effects on university students in many countries has identified dissatisfaction with the quality of online instruction during COVID-19 lockdown, including Jordan with 585 respondents [5] and Pakistan with 87 respondents [6]. A large study of 1241 Indian university students showed that academic performance declined during lockdown and that 60% of those surveyed could not focus on their studies [7]. However, a study involving 4800 grade 3 and 4 students in 113 government schools in New South Wales, Australia, revealed no significant differences in achievement growth in mathematics and reading between 2019 and 2020 [2].

When 108 Hungarian university students were asked about their access to online learning and their attitudes to learning during lockdown, they were somewhat positive and willing to engage with learning via this medium [8]. A study of gender and ethnic differences in motivation and sense of belonging (8 items) amongst 283 students studying chemistry online during COVID-19 lockdown at US universities [9] revealed lower motivation and sense of belonging among females compared with males.

Other studies with 253, 1111 and 219 students, respectively, focused on the mental health and resilience of university students during lockdown [10,11,12]. A study in China focused on 1040 university students’ growth mindset and engagement with online learning during the pandemic using a modified Dweck Mindset survey [13].

An observational study of blended synchronous learning environments among 24 students who were able to access lectures either in a face-to-face format or through video-conferencing (e.g., using Zoom) was carried out prior to COVID-19 lockdown. Whereas students liked the flexibility of being able to access lectures remotely, they were much less likely to participate actively in classes [14]. Similarly, when 31 students moved to fully online delivery during lockdown, they found synchronous video-conferencing much less engaging than face-to-face classes, they participated less, and they felt less motivated to engage with the teacher or peers [15].

All of the studies reviewed above used questionnaires that were brief (11–19 questions) and focused on students’ attitudes or their access to the internet, as well as failing to provide any evidence of questionnaire validity. Importantly, none of these studies adopted learning environment criteria in investigating the impact of COVID-related lockdowns.

A recent study, however, traced changes in the learning environment perceptions of 230 American preservice teachers before and after pandemic-related course disruption [16]. Five scales from the widely used What Is Happening In this Class? (WIHIC) questionnaire were administered before and after the switch to remote learning. There were statistically-significant declines that were relatively small (0.20–0.28 standard deviations) for the four scales of Teacher Support, Involvement, Task Orientation and Equity, but larger declines (0.56 standard deviations) for Student Cohesiveness.

With the shift towards blended and fully online learning modes that has been occurring at universities over the past decade, and which has been accelerated by the COVID-19 pandemic, there is an even greater need to develop, validate and use questionnaires to assess the classroom emotional climate and student attitudes in face-to-face, online and blended learning environments. Our University Classroom Emotional Climate and Attitudes scales meet this need for validated scales to assess aspects of students’ experiences such as the care and support that they receive from teachers/tutors, their willingness to engage and take control of their learning, the level of challenge that the material presents, their collaboration with peers, their motivation to learn, the consolidation and feedback that they receive about their work, and their overall attitudes to learning.

1.1. Research Question

Our study addressed the following research question:

What differences are there between students’ learning experiences under COVID-19 lockdown conditions (fully online) and their learning experiences after lockdown was lifted (mixed mode) at a university in Western Australia? In order to compare these experiences, we developed and validated a classroom emotional climate survey appropriate for learning at university under a variety of conditions (face-to-face, online, blended).

1.2. Understanding Classroom Emotional Climate

This study is part of the long tradition of learning environments research which began with the work of Anderson and Walberg [17] and Moos [18] and was expanded by Fraser [16,19,20,21]. This body of research has resulted in the availability of a large variety of economical, well-validated and widely applicable assessment instruments for obtaining students’ views of what is happening in their classrooms. The quantitative and qualitative data obtained from these instruments provide reliable descriptions of the learning environment that capture students’ experiences within the classroom and the teaching that occurs on a daily basis. By comparison, observational data obtained by external researchers provide a snapshot over a short time period that could miss data or ignore data considered to be unimportant. Students’ perceptions of their learning environment have been shown in many studies over the past decades to be significant predictors of their affective and cognitive outcomes [19,20,21]. Students’ social and emotional interactions with teachers and peers also influence their level of engagement and, hence, their learning outcomes [22]. A positive and supportive emotional climate is one in which the teacher shows care and support for students, listens to their concerns and points of view, ensures a respectful class culture in which students are motivated to be responsible for their learning and encouraged to collaborate with others, and given helpful feedback to consolidate their learning [23].

Observational methods have been developed for understanding the classroom emotional climate in primary and secondary schools, such as the Classroom Assessment Scoring System [24]. Questionnaires, which provide an economical approach to learning about the classroom emotional climate by probing student perceptions, have also been developed for the primary/secondary school contexts, such as the Tripod 7Cs [25] and the Classroom Emotional Climate (CEC) questionnaire for STEM classes [26]. Although there have been questionnaires developed to assess cognitive aspects of teaching and learning at the higher-education level, such as the Experiences of Teaching and Learning Questionnaire (ETLQ) which measures students’ perceptions of their willingness to engage with learning and the teacher’s enthusiasm and support [27,28], there remains a need for a university-level questionnaire that assesses social and emotional interactions that students experience.

1.3. Classroom Emotional Climate (CEC) Survey

This study expands the use of the Classroom Emotional Climate (CEC) survey which was developed and validated for assessing emotional climate and attitudes in integrated secondary-school STEM classes. The CEC (41 items) and an Attitudes scale (10 items) demonstrated excellent validity in terms of Principal Component Analysis (PCA), Confirmatory Factor Analysis (CFA) and Rasch analysis [26]. PCA supported a seven-scale structure for the CEC survey and a single-dimension structure for the Attitudes survey, explaining a total of 74.7% of variance. Additionally, CFA indicated that the data obtained had satisfactory fit with a theoretical seven-scale model (χ²/df = 2.9; RMSEA = 0.07; SRMR = 0.05; CFI = 0.94). Likewise, Rasch analysis revealed satisfactory fit statistics and unidimensionality for items describing each latent variable [26].

Also, differential item functioning of CEC items for males and females suggested that items were understood in the same way irrespective of gender [29]. Use of MANOVA revealed that females in coeducational government schools had significantly more-negative views (0.25–0.50 standard deviations) than males for clarity, motivation, consolidation and attitudes [29]. In further analyses, no significant gender differences in emotional climate and attitudes were found in coeducational nongovernment schools [30].

Based on the validity and reliability of the CEC and Attitudes scales for understanding student perceptions in secondary-school classes, we decided to adapt items from these surveys to ensure their suitability for assessing emotional climate and attitudes within universities.

2. Methods

2.1. Modifying Questionnaire

Prior to attempting to answer the main research question, an appropriate questionnaire was needed to measure the emotional climate experienced by university students. Earlier studies of classroom emotional climate showed that major influences on students’ attitudes towards learning were their perceptions of the care that teachers displayed towards them as learners, the degree to which the teacher controlled the classroom to ensure a conducive learning environment, the clarity of instruction provided, the degree to which students felt challenged to produce high-quality work and think deeply, how motivating the content and delivery were for engagement with learning, whether the teacher provided activities which consolidated prior learning, and the opportunities presented for productive collaboration with peers [23,25,26]. The University Classroom Emotional Climate Survey (UCEC) was adapted from our CEC survey [26], which had previously been validated for use with integrated STEM classes in high schools. We thought it appropriate to modify the seven scales of the CEC to suit the university environment because each of these scales arguably could describe the experience of university students. One scale that the CEC shares with similar classroom emotional climate surveys, namely Control, was possibly inappropriate in its original form because university lecturers do not usually directly control the behaviour of students. However, university students are expected to control their own behaviour and are responsible for the degree to which they participate in lectures and other activities provided by the lecturers. Modification and validation of the UCEC questionnaire followed the two phases described below.

In the first phase, two experts in learning environments research re-worded CEC items to improve their suitability for the university context. The seven scales of Care, Control, Clarity, Challenge, Motivation, Consolidation and Collaboration each had six items, as did an Attitudes to Learning scale modified from our previous secondary-level questionnaire (Table 1 and Table A1). For instance, in the scale of Consolidation, the original CEC item “My teacher takes time to summarise what I learn each day” was changed to “The lecturers took time to summarise what had been learned so far for me”. In the scale of Challenge, the item “My teacher helps me find challenging STEM projects” was changed to “The lecturers provided challenging tasks and assignments for me”. Whereas the CEC included items which asked students about the degree to which teachers ensured a productive learning environment (e.g., “My teacher makes sure that I stay busy and don’t waste time”), items under the scale of Control in the UCEC focused on the students’ participation in tutorials and the degree to which they focused their attention on lectures.

Table 1.

UCEC scale descriptions and sample items.

2.2. Participants and Data Collection

In the second phase, after obtaining Human Research Ethics Committee approval for the study, an information letter was sent to all students of the university towards the end of Semester 2 to request completion of the survey online through the Qualtrics platform. Our purposes during this phase were to collect data to validate the UCEC and to answer the research question involving comparing experiences in fully online and mixed-mode delivery. For each item, students were asked to consider their experiences during the COVID-19 lockdown, when they had been forced to work from home (April–June, Semester 1, 2020) and their experience with learning at the university after the lockdown was lifted and they returned to blended (online/in-person) learning (July–November, Semester 2, 2020). A total of 194 complete responses were obtained (128 females; 69 males; 3 other). Students from all of the university’s faculties responded, and they were studying a wide variety of undergraduate and postgraduate courses. Ages of respondents were 16–20 years (n = 67), 21–30 years (n = 93), 31–40 years (n = 25) and 41+ years (n = 9). The majority of respondents were completing an undergraduate degree (n = 154), were studying internally (n = 180) rather than externally, and were domestic rather than international students (n = 147).

2.3. Validation of Questionnaire

The data were analysed in order to validate the UCEC in three stages: Exploratory Factor Analysis using Principal Component Analysis (PCA) with direct oblimin rotation and Kaiser normalisation (because correlations between scales were anticipated) [31]; Confirmatory Factor Analysis (CFA); and reliability/validity measures.

Prior to conducting PCA, a Kaiser–Meyer–Olkin (KMO) test was carried out to check that sampling was adequate. A KMO value of between 0.8 and 1.0 indicates adequate sampling [32]. Bartlett’s test for sphericity was also carried out to determine whether it was appropriate to reduce the number of items based on a statistically significant difference between the correlation and identity matrices [33].
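As an illustration of these two checks, the following is a minimal pure-Python sketch based on the standard textbook definitions of the KMO statistic and Bartlett's test statistic. It is not the routine used in the study, and all function names and the simulated responses are assumptions made for the example.

```python
import math
import random

def correlation_matrix(rows):
    """Pearson correlation matrix for a list of equal-length response rows."""
    n, p = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(p)]
    norms = [math.sqrt(sum((r[j] - means[j]) ** 2 for r in rows)) for j in range(p)]
    return [[sum((r[i] - means[i]) * (r[j] - means[j]) for r in rows)
             / (norms[i] * norms[j]) for j in range(p)] for i in range(p)]

def inverse_and_logdet(matrix):
    """Gauss-Jordan inverse with partial pivoting; also returns ln|det|."""
    p = len(matrix)
    aug = [list(row) + [float(i == j) for j in range(p)]
           for i, row in enumerate(matrix)]
    logdet = 0.0
    for col in range(p):
        pivot_row = max(range(col, p), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot_row] = aug[pivot_row], aug[col]
        pivot = aug[col][col]
        logdet += math.log(abs(pivot))
        aug[col] = [v / pivot for v in aug[col]]
        for r in range(p):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [row[p:] for row in aug], logdet

def kmo(corr):
    """Overall KMO: squared correlations relative to squared partial correlations."""
    inv, _ = inverse_and_logdet(corr)
    p = len(corr)
    r2 = q2 = 0.0
    for i in range(p):
        for j in range(p):
            if i != j:
                partial = -inv[i][j] / math.sqrt(inv[i][i] * inv[j][j])
                r2 += corr[i][j] ** 2
                q2 += partial ** 2
    return r2 / (r2 + q2)

def bartlett_sphericity(corr, n):
    """Bartlett's chi-square statistic and degrees of freedom for n respondents."""
    p = len(corr)
    _, logdet = inverse_and_logdet(corr)
    chi2 = -(n - 1 - (2 * p + 5) / 6) * logdet
    return chi2, p * (p - 1) // 2

# Simulated (hypothetical) data: 60 respondents, 4 items sharing one factor.
rng = random.Random(0)
rows = []
for _ in range(60):
    factor = rng.gauss(0, 1)
    rows.append([factor + rng.gauss(0, 1) for _ in range(4)])
corr = correlation_matrix(rows)
kmo_value = kmo(corr)
chi2, df = bartlett_sphericity(corr, n=len(rows))
print(kmo_value, chi2, df)
```

The p-value for Bartlett's test would come from a chi-square distribution with `df` degrees of freedom, which is left out here to keep the sketch free of external libraries.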

Scales with eigenvalues greater than 1.0 in the PCA were accepted as contributing significantly to the structure of the questionnaire [31]. Items that loaded 0.4 or higher on the expected scale and less than 0.4 on the other scales were retained.
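The item-retention rule can be sketched in a few lines of Python. The loadings below are hypothetical values invented for the illustration, the function name is an assumption, and the use of absolute loadings is also an assumption because the text does not say how negative loadings were treated.

```python
def retained_items(loadings, expected_scale, cutoff=0.4):
    """Keep items loading >= cutoff on their own scale and < cutoff elsewhere.

    loadings: {item: {scale: loading}}; expected_scale: {item: scale}.
    Absolute loadings are compared (an assumption for this sketch).
    """
    kept = []
    for item, by_scale in loadings.items():
        own_scale = expected_scale[item]
        own = abs(by_scale[own_scale])
        cross = [abs(v) for scale, v in by_scale.items() if scale != own_scale]
        if own >= cutoff and all(v < cutoff for v in cross):
            kept.append(item)
    return kept

# Hypothetical pattern-matrix loadings for three items on two scales.
example_loadings = {
    "item_a": {"Care": 0.72, "Control": 0.10},  # clean loading: retained
    "item_b": {"Care": 0.31, "Control": 0.05},  # low own-scale loading: dropped
    "item_c": {"Care": 0.45, "Control": 0.55},  # cross-loading: dropped
}
example_scales = {"item_a": "Care", "item_b": "Care", "item_c": "Control"}
kept_items = retained_items(example_loadings, example_scales)
```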

After removing items with low or mixed factor loadings in the PCA, the final measurement model was evaluated using CFA (LISREL 10.20) [34] and goodness-of-fit indices were determined by comparing the data with the theoretical model. Because the number of cases was relatively small, it was not possible to generate an asymptotic covariance matrix and hence some standard errors and χ² values could be unreliable [34]. χ²/df rather than χ² was used to gauge fit [35], with a value of <3.0 indicating satisfactory model fit. Cut-off values recommended by Alhija [36] and Hair et al. [37] for other indices were root mean square error of approximation (RMSEA) of <0.08; standardised root mean square residual (SRMR) of <0.06; a comparative fit index (CFI) of >0.90; and a Tucker–Lewis Index (TLI) of >0.90 [38].
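A short Python sketch of how a set of fit indices can be screened against these cut-offs follows. The dictionary keys and function name are illustrative assumptions, the TLI cut-off is taken to be the conventional 0.90, and the example values are those reported for the UCEC model in the Discussion.

```python
# Cut-offs from the text; "<" means the index must fall below the value,
# ">" means it must exceed it. TLI > 0.90 is the conventional threshold
# assumed for this sketch.
CUTOFFS = {
    "chi2_df": ("<", 3.0),
    "RMSEA": ("<", 0.08),
    "SRMR": ("<", 0.06),
    "CFI": (">", 0.90),
    "TLI": (">", 0.90),
}

def check_fit(indices):
    """Return {index name: True/False} for each index that has a cut-off."""
    result = {}
    for name, value in indices.items():
        direction, cutoff = CUTOFFS[name]
        result[name] = value < cutoff if direction == "<" else value > cutoff
    return result

# Values reported in the Discussion for the eight-factor measurement model.
reported = {"chi2_df": 1.80, "RMSEA": 0.06, "SRMR": 0.05, "CFI": 0.91, "TLI": 0.91}
fit_summary = check_fit(reported)
```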

Following PCA and CFA, checks of composite reliability, discriminant validity and predictive validity were carried out using SPSS™.

2.4. Comparing Student Experiences during and after Lockdown

Repeated-measures multivariate analysis of variance (MANOVA) was carried out to compare students’ responses to each scale during and after lockdown. The initial MANOVA was used to reduce Type I errors that could be associated with comparing situations (during/after lockdown) in terms of separate univariate ANOVAs for each dimension [31]. ANOVA results were then used to determine if students’ experiences after and during lockdown were significantly different in terms of each UCEC and Attitudes scale. Each ANOVA yielded the partial η² statistic as an effect size, which indicates the proportion of variance associated with differences in experience on each scale. Additionally, Cohen’s [38] d effect size for differences in experience after and during lockdown for each scale was calculated by dividing the difference between means in the two lockdown situations (during and after) by the pooled standard deviation. An effect size of d < 0.2 is considered small, of 0.2 < d < 0.8 medium and of d > 0.8 large [39].
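The effect-size calculation can be illustrated with a brief Python sketch. The text does not state which pooled standard deviation was used, so the sketch assumes the equal-n form (the square root of the mean of the two variances). The means and standard deviations in the example are hypothetical, and the handling of values falling exactly on 0.2 or 0.8 is also an assumption.

```python
import math

def cohens_d(mean_during, sd_during, mean_after, sd_after):
    """Difference in means (after minus during) over the pooled SD.

    Pooled SD is assumed to be sqrt((sd_during^2 + sd_after^2) / 2),
    which suits two conditions measured on the same number of students.
    """
    pooled_sd = math.sqrt((sd_during ** 2 + sd_after ** 2) / 2)
    return (mean_after - mean_during) / pooled_sd

def describe_effect(d):
    """Label |d| using the bands quoted in the text."""
    size = abs(d)
    if size < 0.2:
        return "small"
    if size <= 0.8:  # boundary handling is an assumption
        return "medium"
    return "large"

# Hypothetical scale means and SDs on the 1-5 response scale.
d_example = cohens_d(mean_during=3.0, sd_during=1.0, mean_after=3.5, sd_after=1.0)
label_example = describe_effect(d_example)
```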

3. Results

3.1. Results for Instrument Validation

Validation was carried out on student responses to our classroom emotional climate and attitudes questionnaire after COVID-19 lockdown for our sample of 194 university students.

3.1.1. Normality Assumptions

Prior to carrying out other statistical analyses, normality assumptions were checked in terms of the skewness and kurtosis for each construct (Table A2). Data met the criteria for a multivariate normal distribution [40], with all absolute skewness values being less than 3 and absolute kurtosis values being less than 10.
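A minimal Python sketch of this screening step is given below. It uses simple moment-based estimators of skewness and excess kurtosis, which is an assumption: statistical packages such as SPSS apply small-sample corrections, so their values differ slightly. The function names and example scores are hypothetical.

```python
def skewness_and_kurtosis(values):
    """Moment-based skewness and excess kurtosis (no small-sample correction)."""
    n = len(values)
    mean = sum(values) / n
    m2 = sum((v - mean) ** 2 for v in values) / n
    m3 = sum((v - mean) ** 3 for v in values) / n
    m4 = sum((v - mean) ** 4 for v in values) / n
    skew = m3 / m2 ** 1.5
    excess_kurtosis = m4 / m2 ** 2 - 3.0
    return skew, excess_kurtosis

def within_normality_bounds(values, max_skew=3.0, max_kurtosis=10.0):
    """Apply the |skewness| < 3 and |kurtosis| < 10 criteria from the text."""
    skew, kurt = skewness_and_kurtosis(values)
    return abs(skew) < max_skew and abs(kurt) < max_kurtosis

# Hypothetical scale scores for five respondents.
scores = [1, 2, 3, 4, 5]
skew_example, kurt_example = skewness_and_kurtosis(scores)
ok_example = within_normality_bounds(scores)
```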

3.1.2. Principal Component Analysis

Prior to carrying out exploratory factor analysis using Principal Component Analysis (PCA) of the 48-item UCEC and Attitudes to Learning questionnaire, sample adequacy was assessed with the Kaiser–Meyer–Olkin (KMO) test, which yielded a value of 0.93 and indicated that sampling was more than adequate (>0.80) [32]. Bartlett’s test for sphericity for the UCEC and Attitudes questionnaire was statistically significant (χ²(861) = 7579.45, p < 0.001) and indicated that a reduction in the number of items was appropriate [33]. We removed three items (two from Control and one from Motivation) which had low factor loadings on their own scale or additional factor loadings on other dimensions. The final factor loadings and communalities for the 45 UCEC and Attitudes items are presented in Table 2, along with eigenvalues and percentages of variance for each scale. Together, the eight scales explained 45.33% of the variance in university student responses. Sample items for each of the eight scales are shown in Table 1, with the full UCEC and Attitudes to Learning questionnaires available in Appendix A.

Table 2.

Exploratory factor analysis (PCA) results for 38 CEC and Attitudes to Learning items.

3.1.3. Confirmatory Factor Analysis

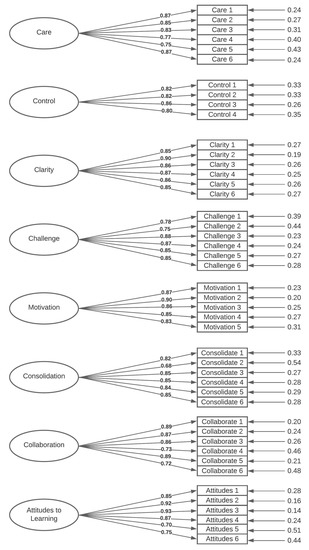

CFA (confirmatory factor analysis) was carried out using LISREL 10.20 [34] to determine goodness-of-fit indices for a comparison of data with the theoretical measurement model presented in Figure 1. The fit indices obtained are reported in Table 3 and indicate good fit between the data and the theoretical measurement model.

Figure 1.

UCEC and Attitudes to Learning Measurement model (CFA).

Table 3.

Model fit indices, obtained values and cut-off guidelines for CFA for UCEC and Attitudes scales.

3.1.4. Reliability, Discriminant Validity and Predictive Validity

Composite reliability for all eight scales was satisfactory, with values above the cut-off guideline of 0.70 [41] (Table 4). Discriminant validity was satisfactory as indicated by Average Variance Extracted (AVE) values of <0.85 [42] (Table 4). The AVE measures the amount of variance within each scale compared with the variance attributable to measurement error. Each UCEC scale had a significant correlation (r = 0.48 to 0.79) with the outcome variable of Attitudes to Learning. Multiple regression analysis indicated that students’ perceptions of Control, Motivation and Collaboration had significant independent associations with students’ Attitudes (Table 4).
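For reference, composite reliability and AVE are commonly computed from standardised factor loadings, as in the Python sketch below. Whether these exact formulas were applied in the study is an assumption, and the loadings and function names are hypothetical.

```python
def composite_reliability(loadings):
    """(sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances).

    Assumes standardised loadings, so each error variance is 1 - loading^2.
    """
    squared_sum = sum(loadings) ** 2
    error_variance = sum(1 - value ** 2 for value in loadings)
    return squared_sum / (squared_sum + error_variance)

def average_variance_extracted(loadings):
    """Mean of the squared standardised loadings for one scale."""
    return sum(value ** 2 for value in loadings) / len(loadings)

# Hypothetical standardised loadings for a three-item scale.
example = [0.7, 0.8, 0.9]
cr_example = composite_reliability(example)
ave_example = average_variance_extracted(example)
```

With these definitions, a scale whose items all load strongly yields both a high composite reliability and a high AVE, because both quantities rise as error variance falls.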

Table 4.

UCEC and Attitudes to learning composite reliability, discriminant validity (AVE) and predictive validity (simple correlation and multiple regression results for associations with Attitudes).

Table 5.

Repeated-measures MANOVA comparison of UCEC and Attitudes during and after lockdown (N = 194).

3.2. Results for Comparison between Student Responses to UCEC and Attitudes Questionnaire during and after COVID-19 Lockdown

When repeated-measures MANOVA was carried out to compare students’ experiences during COVID-19 lockdown and after lockdown, significant multivariate tests of between-subject effects were found: Pillai’s Trace (intercept) = 0.98, F (8,186) = 915.09, p < 0.001, partial η² = 0.98; Pillai’s Trace (Time) = 0.23, F (8,186) = 6.99, p < 0.001, partial η² = 0.23. This suggests a significant multivariate effect for time (during lockdown versus after lockdown) on the dependent variables when taking into account correlations between variables. There were significant differences between during and after lockdown in university students’ experiences for all UCEC and Attitudes scales except the degree of Care shown by teachers (Table 5).
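The reported partial η² values can be recovered from the F statistics and their degrees of freedom using the standard relation partial η² = (F × df_effect) / (F × df_effect + df_error). The Python sketch below applies this relation to the two multivariate tests; the function name is an illustrative assumption.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its df."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)

# F(8, 186) values reported for the intercept and for Time.
eta_intercept = partial_eta_squared(915.09, 8, 186)
eta_time = partial_eta_squared(6.99, 8, 186)
```

Rounded to two decimal places, both results agree with the reported values of 0.98 and 0.23, which also equal the corresponding Pillai's Trace values.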

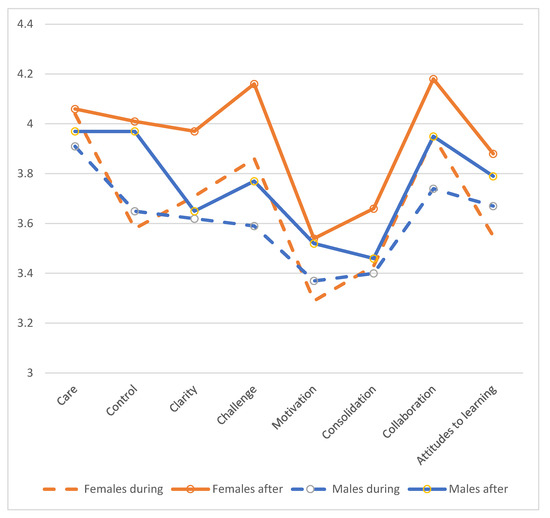

When considering the experience of learning during and after the COVID-19 lockdown by gender (male and female), females had significantly different perceptions of university classroom emotional climate on all scales except Care (Table 6). On the other hand, the only scales for which males had significantly different experiences when comparing lockdown and post-lockdown were Control and Challenge. The Control scale measures the degree to which students feel that they pay attention to the teaching and participate in tutorials. The Challenge scale measures the degree to which students perceive that the work that they are given challenges them to think deeply and correct mistakes. Both males and females felt that, after lockdown when the mode of delivery was a combination of online and face-to-face, they were more engaged and participated more fully and that the material that they were given was more challenging and required them to correct mistakes. Although female students were more positive than males for all scales after lockdown, there was no significant interaction between time (during/after lockdown) and gender in the repeated-measures ANOVAs. Perceptions of males and females during and after lockdown for each scale are depicted in Figure 2.

Table 6.

Repeated-measures MANOVA comparisons of UCEC and Attitudes during and after lockdown by gender (Females, n = 128; Males, n = 69; Other, n = 3 (removed)).

Figure 2.

UCEC and Attitudes to Learning responses by gender and time (during lockdown/after lockdown).

4. Discussion

At a time when modes of delivery of university education are changing rapidly, it is essential to understand students’ perceptions of emotional and social aspects of their learning experience. Our development of a university-level classroom emotional climate survey helps to meet this need. The UCEC survey was developed based upon a classroom emotional climate survey (CEC) for secondary schools that was comprehensively validated previously [26]. Firstly, experts in learning environments modified items from the CEC, which had been developed to assess students’ perceptions of the integrated STEM classroom emotional climate, as well as recommending the addition of seven items to address aspects of the university learning experience excluded in the original CEC survey. The initial version of the UCEC was sent to university students at a large university in Western Australia and was completed by 194 students.

Exploratory factor analysis (PCA with direct oblimin rotation and Kaiser normalisation) resulted in an 8-scale solution with 45 items assessing Care, Control, Clarity, Challenge, Motivation, Collaboration, Consolidation and Attitudes to Learning. Factor loadings for each item were greater than 0.4 on their own scale and less than 0.4 on all other scales. Eigenvalues for each scale were greater than 1, and the total proportion of variance explained by the scales was 45.33%. Confirmatory Factor Analysis (CFA) of the 45 items demonstrated satisfactory fit between the data and a theoretical measurement model with 8 latent variables. Values obtained for fit indices (χ²/df = 1.80, RMSEA = 0.06, SRMR = 0.05, CFI = 0.91, TLI = 0.91) satisfied criteria recommended in the literature.

Composite reliability, discriminant validity and predictive validity measures also supported the validity of the UCEC survey. The composite reliability for every scale was above 0.77, indicating satisfactory cohesion of items within those scales. Discriminant validity measures (AVE) ranged from 0.36 to 0.71. Significant bivariate associations were found between each UCEC scale and the outcome of Attitudes to Learning. Three scales were significant predictors of Attitudes to Learning in a multiple regression analysis (Control, Motivation and Collaboration), suggesting that scales of the UCEC can be appropriately used to predict outcomes such as attitudes.

When repeated-measures MANOVA was used to compare students’ perceptions of the learning environment during and after COVID-19 lockdown, when the mode of learning had changed from fully online to blended learning (online with some face-to-face interactions), the UCEC satisfactorily discriminated between student perceptions under different modes of delivery. Except for the Care scale, students were significantly more positive about their experiences after lockdown had lifted and they had returned to a blended mode of learning. Although there was no significant interaction found between gender and time (mode) of delivery in a repeated-measures MANOVA, females perceived significant differences in their experiences during lockdown compared with after lockdown for more scales than did males. Males only had significantly different perceptions of their experience of Control and Challenge, while females had significantly different perceptions for all scales except Care. This is consistent with evidence that women who are required to work from home could have disproportionately more caring tasks competing for their attention within the home environment compared with males [43].

This study was limited in its scope by the relatively small sample size that we were able to obtain. A larger sample would have enabled further statistical analyses, such as a determination of any differences in experience between students in different faculties or degree courses. We used a version of the common pretest–posttest design for which it was impractical to take measurements at two points in time. Instead, we collected data at one point in time and asked students to recall what it was like earlier. A limitation with this approach is that students could have inaccurate recollections of their feelings and experiences during lockdown, several months after the event. The small sample size also limited the accuracy of the CFA, because the impossibility of producing an asymptotic covariance matrix could lead to unreliable values for standard errors and χ². Likewise, it was not possible to split the data into two separate groups in order to carry out PCA and CFA separately, as is sometimes recommended. These results, therefore, should be considered as preliminary until it is possible to confirm them with a larger data set.

Nevertheless, the evidence presented here still suggests that the UCEC is a valuable tool for illuminating university students’ experiences under different learning conditions. Further research into differences in experience depending on the degree course chosen also could provide useful data for addressing concerns that have been voiced by student bodies [44,45].

5. Conclusions

The study described in this article has made two important contributions to this special journal issue devoted to the field of learning environments. First, it provides a rare example of the use of learning environment criteria to investigate the disruptive impact of the COVID-19 pandemic when learning changed to online. For a sample of 194 university students, scores on classroom emotional climate and attitude scales were significantly lower during lockdown than after lockdown (although effect sizes typically were relatively small).

The second noteworthy contribution is that we have made available to other researchers an economical, valid and widely applicable instrument for evaluating university students’ perceptions of their classroom emotional climates. Now that this questionnaire, which was originally developed and validated for assessing classroom emotional climate at the secondary-school level, has been adapted and cross-validated at the tertiary level, there is potential for educational researchers and practitioners to use it for numerous worthwhile purposes. For example, lecturers could use the UCEC to evaluate their teaching and curricula [46] or to guide improvements in the climates of their classrooms [47]. Researchers could explore gender differences in perceptions of classroom emotional climate [29,48], associations between student outcomes and classroom climate [49] or typologies of classroom emotional climate [50].

Author Contributions

Conceptualization, R.B.K., F.I.M. and B.J.F.; methodology, F.I.M., R.B.K. and B.J.F.; validation, F.I.M.; formal analysis, F.I.M.; writing—original draft preparation, F.I.M.; writing—review and editing, B.J.F.; funding acquisition, R.B.K. and B.J.F. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki, and approved by the Human Research Ethics Committee of CURTIN UNIVERSITY (HRE2018-0084 03/09/2020).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are not available due to the institution where data were gathered not giving permission for public availability.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

University Classroom Emotional Climate and Attitudes to Learning Questionnaires

If you almost always agreed with the statement click on 5. If you almost never agreed with the statement click on 1. You also can choose the numbers 2, 3 and 4 which are in between.

For each statement, two responses are required: one for during lockdown and one for after lockdown.

While you respond to the survey consider your overall experience of learning activities at University.

Table A1.

UCEC questionnaire.

| | | During the Lockdown Period (April–June) | | | | | July–Present | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|
| | CARE at University | Almost Never | | | | Almost Always | Almost Never | | | | Almost Always |
| 1 | I liked the way I was treated when I needed help. | 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 |
| 2 | I was treated nicely when I asked questions. | 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 |
| 3 | I was made to feel that people cared about me. | 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 |
| 4 | I was encouraged to do my best. | 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 |
| 5 | I was given adequate time to complete tasks. | 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 |
| 6 | I was treated with respect. | 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 |
| | CONTROL During Teaching/Learning Sessions: | Almost Never | | | | Almost Always | Almost Never | | | | Almost Always |
| 7 | I listened carefully during lectures/tutorials. | 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 |
| 8 | I participated in tutorials by responding to questions. | 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 |
| 10 | I participated in the ways my lecturers wanted. | 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 |
| 12 | I stayed focused during the teaching/learning sessions. | 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 |
| | CLARITY During Teaching/Learning Sessions: | Almost Never | | | | Almost Always | Almost Never | | | | Almost Always |
| 13 | The lecturers broke up the work into easy steps for me. | 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 |
| 14 | The lecturers explained difficult things to me clearly. | 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 |
| 15 | I understood what I was supposed to be learning. | 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 |
| 16 | I was able to get help when I had difficulty understanding. | 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 |
| 17 | The lecturers ensured that I understood assignments and other tasks. | 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 |
| 18 | The lecturers used a variety of teaching methods to make things clear to me. | 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 |
| | CHALLENGE During Teaching/Learning Sessions: | Almost Never | | | | Almost Always | Almost Never | | | | Almost Always |
| 19 | The lecturers asked questions that made me think hard. | 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 |
| 20 | The lecturers provided challenging tasks and assignments to me. | 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 |
| 21 | I was encouraged to put in my full effort. | 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 |
| 22 | The lecturers encouraged me to keep going when the work was hard. | 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 |
| 23 | The lecturers wanted me to use my thinking skills, not just memorise things. | 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 |
| 24 | I was encouraged to correct my mistakes. | 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 |
| | MOTIVATION During Teaching/Learning Sessions: | Almost Never | | | | Almost Always | Almost Never | | | | Almost Always |
| 26 | The questions in my classes made me want to find out the answers. | 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 |
| 27 | My assignments made me want to learn. | 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 |
| 28 | My assignments/projects were interesting. | 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 |
| 29 | My assignments/projects were enjoyable. | 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 |
| 30 | The lectures motivated me to find out more about the topic. | 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 |
| | CONSOLIDATION During Teaching/Learning Sessions: | Almost Never | | | | Almost Always | Almost Never | | | | Almost Always |
| 31 | The lecturers took time to summarise what had been learned so far for me. | 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 |
| 32 | The lecturers asked me questions whether I volunteered answers or not. | 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 |
| 33 | I got helpful comments to let me know what I did wrong on assignments. | 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 |
| 34 | The lecturers provided feedback to me about how to improve on tasks/assignments. | 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 |
| 35 | The lecturers reminded me of what we learned earlier. | 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 |
| 36 | The lecturers pointed me in the right direction to get further help. | 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 |
| | COLLABORATION | Almost Never | | | | Almost Always | Almost Never | | | | Almost Always |
| 37 | I cooperated with other students in a group when asked to. | 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 |
| 38 | When I worked in a group, I worked as a team. | 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 |
| 39 | I discussed ideas with other students when completing tasks. | 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 |
| 40 | I learned from other students in my classes. | 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 |
| 41 | I worked well with other group members when completing group tasks. | 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 |
| 42 | I helped other students who were having trouble with tasks. | 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 |
| | ATTITUDE TO LEARNING | Almost Never | | | | Almost Always | Almost Never | | | | Almost Always |
| 43 | I looked forward to participating in learning at University. | 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 |
| 44 | Learning tasks at University were interesting. | 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 |
| 45 | I enjoyed lessons that were part of my course. | 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 |
| 46 | Lessons made me interested in learning more about these fields. | 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 |
| 47 | Topics I studied were relevant to my career goals. | 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 |
| 48 | I felt confident that I would succeed in these courses. | 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 |
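
For readers who wish to score the questionnaire, the following is a minimal sketch rather than the authors' procedure. It assumes that each scale score is the mean of that scale's item responses, computed separately for each period, which is consistent with the "mean values" reported in Table A2 but is not spelled out in this appendix. The item-to-scale mapping is copied from Table A1; the function name `scale_means` and the example responses are hypothetical.

```python
from statistics import mean

# Item numbers per scale, copied from Table A1
# (items 9, 11 and 25 do not appear in the table).
SCALES = {
    "Care": [1, 2, 3, 4, 5, 6],
    "Control": [7, 8, 10, 12],
    "Clarity": [13, 14, 15, 16, 17, 18],
    "Challenge": [19, 20, 21, 22, 23, 24],
    "Motivation": [26, 27, 28, 29, 30],
    "Consolidation": [31, 32, 33, 34, 35, 36],
    "Collaboration": [37, 38, 39, 40, 41, 42],
    "Attitude to Learning": [43, 44, 45, 46, 47, 48],
}


def scale_means(responses):
    """Return the mean item rating for each scale.

    `responses` maps item number -> rating (1 to 5) for one student
    and one period (during lockdown or after lockdown).
    Assumption: a scale score is the plain mean of its items.
    """
    scores = {}
    for scale, items in SCALES.items():
        ratings = [responses[i] for i in items]
        if any(not 1 <= r <= 5 for r in ratings):
            raise ValueError(f"{scale}: ratings must lie between 1 and 5")
        scores[scale] = mean(ratings)
    return scores


# Hypothetical responses for one student (not data from the study).
all_items = [i for items in SCALES.values() for i in items]
during = {i: 3 for i in all_items}
after = {i: 4 for i in all_items}
after[8] = 2  # one lower rating on a Control item

during_scores = scale_means(during)
after_scores = scale_means(after)
```

Because each statement is answered twice, the same function is simply called once per period, giving a pair of scores per scale for each student.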

Table A2.

Measures of absolute skewness and kurtosis of mean values for UCEC and Attitudes scales (N = 194).

| Scale | Skewness | Kurtosis |
|---|---|---|
| Care | −0.98 | −0.97 |
| Control | −1.00 | 0.54 |
| Clarity | −0.84 | 0.53 |
| Challenge | −1.08 | 0.99 |
| Motivation | −0.53 | −0.41 |
| Consolidation | −0.31 | −0.52 |
| Collaboration | −1.31 | 1.60 |
| Attitude to Learning | −0.66 | −0.20 |

References

1. UNESCO. COVID-19 Educational Disruption and Response. 2020. Available online: https://en.unesco.org/covid19/educationresponse (accessed on 22 October 2021).
2. Gore, J.; Fray, L.; Miller, A.; Harris, J.; Taggart, W. The impact of COVID-19 on student learning in New South Wales primary schools: An empirical study. Aust. Educ. Res. 2021, 48, 605–637.
3. Reimers, F.M.; Amaechi, U.; Banerji, A.; Wang, M. (Eds.) An Educational Calamity: Learning and Teaching during the COVID-19 Pandemic; OECD: Paris, France, 2021.
4. Reimers, F.M. (Ed.) Primary and Secondary Education during COVID-19: Disruptions to Educational Opportunity during a Pandemic; Springer Nature: Basingstoke, UK, 2022.
5. Almomani, E.Y.; Qablan, A.M.; Atrooz, F.Y.; Almomany, A.M.; Hajjo, R.M.; Almomani, H.Y. The influence of Coronavirus diseases 2019 (COVID-19) pandemic and the quarantine practices on university students’ beliefs about the online learning experience in Jordan. Front. Public Health 2021, 8, 595874.
6. Hussain, T.; Rafique, S.; Basit, A. Online learning at university level amid COVID-19 outbreak: A survey of UMT students. Glob. Educ. Stud. Rev. 2020, V, 1–16.
7. John, R.R.; John, R.P. Impact of lockdown on the attitude of university students in South India: A cross-sectional observational study. J. Maxillofac. Oral Surg. 2021, 1–8.
8. Ismaili, Y. Evaluation of students’ attitude toward distance learning during the pandemic (COVID-19): A case study of ELTE university. On the Horizon 2021, 9.
9. Cox, C.T.; Stepovich, N.; Bennion, A.; Fauconier, J.; Izquierdo, N. Motivation and sense of belonging in the large enrollment introductory general and organic chemistry remote courses. Educ. Sci. 2021, 11, 549.
10. Sarmiento, A.S.; Ponce, R.S.; Bertolin, A.G. Resilience and COVID-19: An analysis in university students during confinement. Educ. Sci. 2021, 11, 533.
11. Wieczorek, T.; Kołodziejczyk, A.; Ciułkowicz, M.; Maciaszek, J.; Misiak, B.; Rymaszewska, J.; Szcześniak, D. Class of 2020 in Poland: Students’ mental health during the COVID-19 outbreak in an academic setting. Int. J. Environ. Res. Public Health 2021, 18, 2884.
12. Yassin, A.A.; Razak, N.A.; Saeed, M.A.; Al-Maliki, M.A.A.; Al-Habies, F.A. Psychological impact of the COVID-19 pandemic on local and international students in Malaysian universities. Asian Educ. Dev. Stud. 2021, 10, 574–586.
13. Zhao, H.; Xiong, J.; Zhang, Z.; Qi, C. Growth mindset and college students’ learning engagement during the COVID-19 pandemic: A serial mediation model. Front. Psychol. 2021, 12, 621094.
14. Wang, Q.; Huang, C.; Quek, C.L. Students’ perspectives on the design and implementation of a blended synchronous learning environment. Australas. J. Educ. Technol. 2018, 34.
15. Serhan, D. Transitioning from face-to-face to remote learning: Students’ attitudes and perceptions of using Zoom during COVID-19 pandemic. Int. J. Technol. Educ. Sci. 2020, 4, 335–342.
16. Long, C.S.; Sinclair, B.B.; Fraser, B.J.; Larson, T.R.; Harrell, P.E. Preservice teachers’ perceptions of learning environments before and after pandemic-related course disruption. Learn. Environ. Res. 2021.
17. Anderson, G.L.; Walberg, H.J. Classroom climate and group learning. Int. J. Educ. Sci. 1968, 2, 175–180.
18. Moos, R.H. The Social Climate Scales: An Overview; Consulting Psychologists Press: Palo Alto, CA, USA, 1974.
19. Fraser, B.J. Classroom learning environments: Retrospect, context and prospect. In Second International Handbook of Science Education; Fraser, B.J., Tobin, K.G., McRobbie, C.J., Eds.; Springer: Dordrecht, The Netherlands, 2012; Volume 2, pp. 1191–1239.
20. Fraser, B.J. Classroom learning environments: Historical and contemporary perspectives. In Handbook of Research on Science Education; Lederman, N.G., Abell, S.K., Eds.; Routledge: New York, NY, USA, 2014; Volume II, pp. 104–117.
21. Fraser, B.J. Milestones in the evolution of the learning environments field over the past three decades. In Thirty Years of Learning Environments; Zandvliet, D.B., Fraser, B.J., Eds.; Brill Sense: Leiden, The Netherlands, 2019; pp. 1–19.
22. Allen, J.; Gregory, A.; Mikami, A.; Lun, J.; Hamre, B.K.; Pianta, R.C. Observations of effective teacher–student interactions in secondary school classrooms: Predicting student achievement with the Classroom Assessment Scoring System—Secondary. School Psychol. Rev. 2013, 42, 76–98.
23. Hamre, B.K.; Pianta, R.C. Learning opportunities in preschool and early elementary classrooms. In School Readiness and the Transition to Kindergarten in the Era of Accountability; Pianta, R.C., Cox, M.J., Snow, K.L., Eds.; Brookes: Baltimore, MD, USA, 2007; pp. 49–83.
24. Pianta, R.C.; La Paro, K.M.; Hamre, B.K. Classroom Assessment Scoring System™: Manual K–3; Brookes: Baltimore, MD, USA, 2008.
25. Ferguson, R.F. Student Perceptions of the Met Project. Bill and Melinda Gates Foundation. 2010. Available online: https://k12education.gatesfoundation.org/resource/met-project-student-perceptions (accessed on 23 December 2021).
26. Fraser, B.J.; McLure, F.; Koul, R. Assessing classroom emotional climate in STEM classrooms: Developing and validating a questionnaire. Learn. Environ. Res. 2021, 24, 1–21.
27. Entwistle, N.; McCune, V.; Hounsell, J. Investigating ways of enhancing university teaching-learning environments: Measuring students’ approaches to studying and perceptions of teaching. In Powerful Learning Environments: Unravelling Basic Components and Dimensions; De Corte, E., Verschaffel, L., Entwistle, N., van Merrienboer, J., Eds.; Elsevier: Amsterdam, The Netherlands, 2003; pp. 89–108.
28. Parpala, A.; Lindblom-Ylänne, S.; Komulainen, E.; Entwistle, N. Assessing students’ experiences of teaching–learning environments and approaches to learning: Validation of a questionnaire in different countries and varying contexts. Learn. Environ. Res. 2013, 16, 201–215.
29. Koul, R.B.; McLure, F.I.; Fraser, B.J. Gender differences in classroom emotional climate and attitudes among students undertaking integrated STEM projects: A Rasch analysis. Res. Sci. Technol. Educ. 2021.
30. McLure, F.I.; Koul, R.B.; Fraser, B.J. Gender differences among students undertaking iSTEM projects in multidisciplinary vs unidisciplinary STEM classrooms in government vs nongovernment schools: Classroom emotional climate and attitudes. Learn. Environ. Res. 2021.
31. Stevens, J.P. Applied Multivariate Statistics for the Social Sciences, 5th ed.; Routledge: New York, NY, USA, 2009.
32. Cerny, C.A.; Kaiser, H.F. A study of a measure of sampling adequacy for factor-analytic correlation matrices. Multivar. Behav. Res. 1977, 12, 43–47.
33. Bartlett, M.S. Tests of significance in factor analysis. Br. J. Psychol. 1950, 3, 77–85.
34. Jöreskog, K.G.; Sörbom, D. LISREL 10: User’s Reference Guide; Scientific Software Inc.: Chicago, IL, USA, 2018.
35. Byrne, B. Structural Equation Modeling with LISREL, PRELIS and SIMPLIS: Basic Concepts, Application and Programming; Erlbaum: Mahwah, NJ, USA, 1998.
36. Alhija, F.A.N. Factor analysis: An overview and some contemporary advances. In International Encyclopedia of Education, 3rd ed.; Peterson, P.L., Baker, E., McGaw, B., Eds.; Elsevier: London, UK, 2010; pp. 162–170.
37. Hair, J.F.; Black, W.C.; Babin, B.J.; Anderson, R.E. Multivariate Data Analysis: A Global Perspective, 7th ed.; Prentice-Hall: Upper Saddle River, NJ, USA, 2010.
38. Brown, T.A. Confirmatory Factor Analysis for Applied Research, 2nd ed.; Guilford Press: New York, NY, USA, 2014.
39. Cohen, J. Statistical Power Analysis for the Behavioral Sciences, 2nd ed.; Lawrence Erlbaum Associates: Hillsdale, NJ, USA, 1988.
40. Kline, R.B. Principles and Practice of Structural Equation Modeling, 3rd ed.; Guilford Press: New York, NY, USA, 2011.
41. Revelle, W.; Zinbarg, R.E. Coefficients alpha, beta, omega, and the glb: Comments on Sijtsma. Psychometrika 2008, 74, 145–154.
42. Fornell, C.; Larcker, D.F. Evaluating structural equation models with unobservable variables and measurement error. J. Mark. Res. 1981, 18, 39–50.
43. Craig, L.; Churchill, B. Dual-earner parent couples’ work and care during COVID-19. Gend. Work Organ. 2021, 28, 66–79.
44. Bali, S.; Liu, M.C. Students’ perceptions toward online learning and face-to-face learning courses. J. Phys.: Conf. Ser. 2018, 1108, 012094.
45. Cole, M.T.; Shelley, D.J.; Swartz, L.B. Online instruction, e-learning, and student satisfaction: A three year study. The IRRODL 2014, 15.
46. Afari, E.; Aldridge, J.M.; Fraser, B.J.; Khine, M.S. Students’ perceptions of the learning environment and attitudes in game-based mathematics classrooms. Learn. Environ. Res. 2013, 16, 131–150.
47. Fraser, B.J. Using learning environment perceptions to improve classroom and school climates. In School Climate: Measuring, Improving and Sustaining Healthy Learning Environments; Freiberg, H.J., Ed.; Falmer Press: London, UK, 1999; pp. 65–83.
48. Parker, L.H.; Rennie, L.J.; Fraser, B.J. (Eds.) Gender, Science and Mathematics: Shortening the Shadow; Kluwer: London, UK, 1995.
49. Taylor, B.A.; Fraser, B.J. Relationships between learning environment and mathematics anxiety. Learn. Environ. Res. 2013, 16, 297–313.
50. Dorman, J.P.; Aldridge, J.M.; Fraser, B.J. Using students’ assessment of classroom environment to develop a typology of secondary school classrooms. Int. Educ. J. 2006, 7, 906–915.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).