Usefulness of Digital Serious Games in Engineering for Diverse Undergraduate Students

Abstract

1. Introduction and Project Motivation

2. Theoretical Framework and Models

2.1. Technology Acceptance Model (TAM): A Model to Explore Serious Game Usefulness

2.2. Motivational Learning—Expectancy Value Theory

2.3. Game-Based Learning

3. Research Design and Research Questions

- How is usefulness described by engineering student users of the game?

- How do perceptions of game elements vary, if at all, as a function of prior gaming experience?

4. Methods

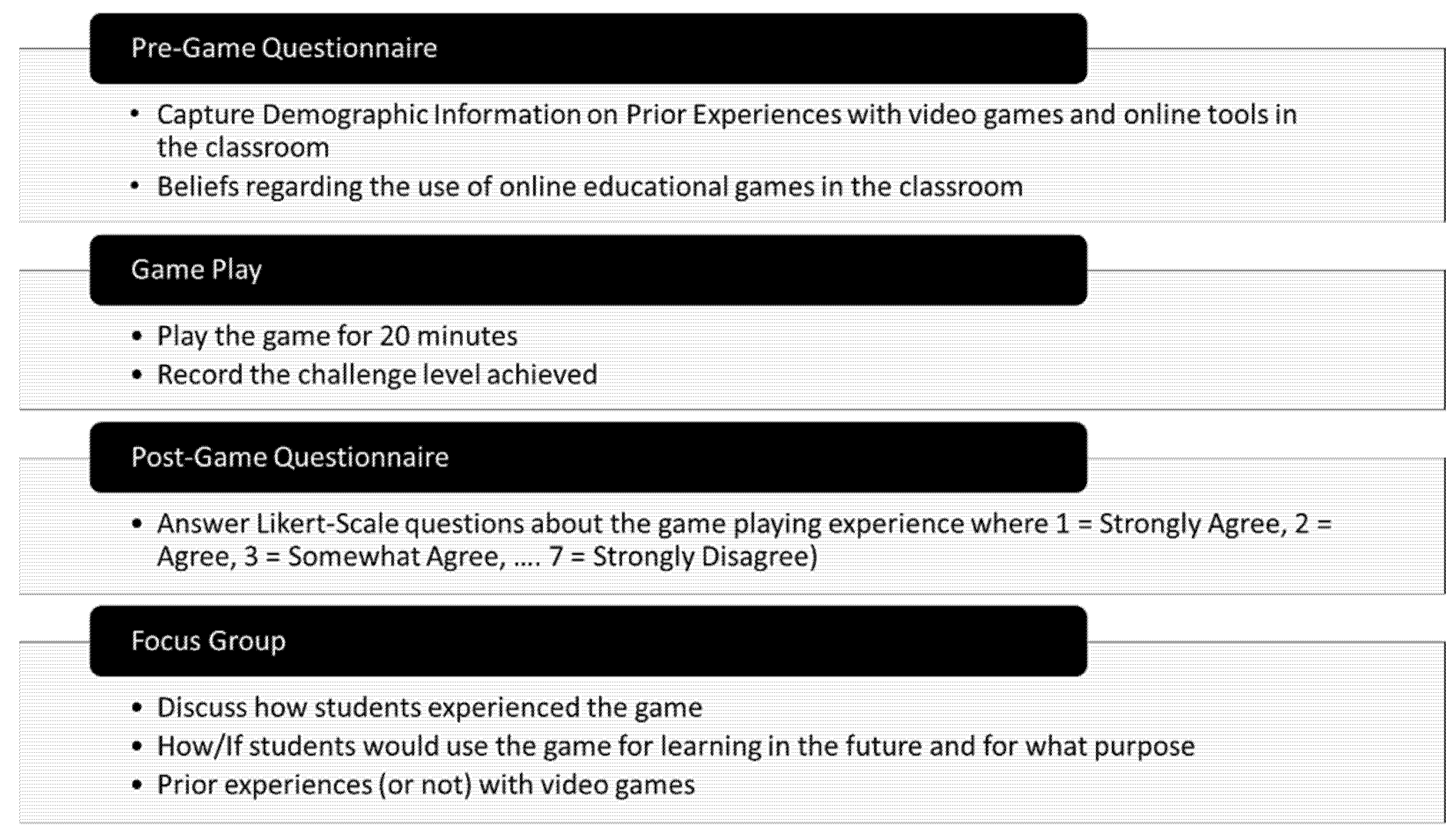

4.1. Data Collection Protocol

4.2. Student Population Demographics

4.3. Online Engineering Game Description

4.4. Statistical Analysis for Quantitative Data

4.5. First Cycle Coding for Qualitative Data—Elemental Coding Approach (Textbox and Focus Group Responses)

4.5.1. Elemental—Structural Coding for Qualitative Data (Textbox Responses)

4.5.2. Elemental—In Vivo Coding for Qualitative Data (Focus Group Responses)

5. Results and Discussion

5.1. Descriptive Statistics for the Entire Population of Students

5.2. Analysis of Variance (ANOVA)
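As a concrete illustration of what an ANOVA compares, the Python sketch below computes a one-way ANOVA F statistic for agreement ratings split by prior gaming experience, the grouping factor named in the second research question. It is an illustration under stated assumptions, not the authors' analysis code. The function name `one_way_anova`, the 1 to 5 rating scale, and the sample ratings are all hypothetical. A full analysis would also report a p-value from the F distribution, for example with SciPy's `scipy.stats.f_oneway`.

```python
from statistics import fmean


def one_way_anova(groups):
    """Return (F, df_between, df_within) for a one-way ANOVA.

    groups: a list of lists of numeric scores, one inner list per group
    (hypothetical example: ratings from gamers vs. non-gamers).
    """
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    if k < 2 or any(len(g) == 0 for g in groups) or n_total <= k:
        raise ValueError("need >= 2 non-empty groups and more scores than groups")

    grand_mean = fmean(x for g in groups for x in g)
    group_means = [fmean(g) for g in groups]

    # Between-group sum of squares: how far each group mean sits from the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Within-group sum of squares: spread of scores around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    if ss_within == 0:
        raise ValueError("no within-group variation; F is undefined")

    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical 1-5 ratings for "The game is easy to play."
gamers = [4, 5, 4, 5, 3]
non_gamers = [3, 2, 3, 4, 3]
f_stat, df_b, df_w = one_way_anova([gamers, non_gamers])
print(f"F({df_b}, {df_w}) = {f_stat:.2f}")  # F(1, 8) = 6.00
```

With only two groups, F equals the square of the pooled two-sample t statistic, so ANOVA adds the most when a grouping factor has three or more levels.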

5.3. Multivariate Analysis of Variance (MANOVA)
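MANOVA extends the same comparison to several outcome measures at once. The sketch below is again a hypothetical illustration rather than the study's code. It computes Wilks' lambda for two dependent variables as det(E)/det(T), where E is the pooled within-group sums-of-squares-and-cross-products (SSCP) matrix and T = E + H is the total SSCP matrix. The choice of outcomes (for example, ease-of-use and usefulness ratings), the helper names, and the sample data are assumptions. Values near 1 indicate little separation between group mean vectors, and values near 0 indicate strong separation. Converting lambda to an approximate F statistic and p-value is omitted.

```python
from statistics import fmean


def sscp(pairs, center):
    """2x2 sums-of-squares-and-cross-products matrix of (y1, y2) pairs about center."""
    c1, c2 = center
    s11 = sum((a - c1) ** 2 for a, _ in pairs)
    s22 = sum((b - c2) ** 2 for _, b in pairs)
    s12 = sum((a - c1) * (b - c2) for a, b in pairs)
    return [[s11, s12], [s12, s22]]


def det2(m):
    """Determinant of a 2x2 matrix."""
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]


def mean_vector(pairs):
    """Mean of each of the two dependent variables."""
    return (fmean(a for a, _ in pairs), fmean(b for _, b in pairs))


def wilks_lambda(groups):
    """Wilks' lambda = det(E) / det(T) for two dependent variables.

    groups: list of groups, each a list of (y1, y2) tuples.
    """
    all_pairs = [p for g in groups for p in g]
    # E: within-group SSCP, each group centered on its own mean vector.
    e = [[0.0, 0.0], [0.0, 0.0]]
    for g in groups:
        s = sscp(g, mean_vector(g))
        for i in range(2):
            for j in range(2):
                e[i][j] += s[i][j]
    # T: total SSCP about the grand mean vector (T = E + H).
    t = sscp(all_pairs, mean_vector(all_pairs))
    det_t = det2(t)
    if det_t == 0:
        raise ValueError("total SSCP matrix is singular")
    return det2(e) / det_t


# Hypothetical (ease-of-use, usefulness) ratings for two groups.
gamers = [(4, 5), (5, 4), (4, 4), (5, 5)]
non_gamers = [(2, 3), (3, 2), (2, 2), (3, 3)]
print(round(wilks_lambda([gamers, non_gamers]), 3))  # 0.111
```

One common rationale for a single MANOVA over separate ANOVAs is that it tests correlated outcomes jointly instead of inflating the family-wise Type I error rate across many univariate tests.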

5.4. Elemental—Structural and In Vivo Coding of Text Box Responses

5.4.1. Question 12: I Would Improve the Game by: __________

5.4.2. Question 13: This Game Is a Good Learning Tool Because: __________

5.5. Focus Group Responses

5.5.1. Theme 1: Serious Engineering Games Should Strengthen Knowledge of Core Course Content

5.5.2. Theme 2: Serious Engineering Games That Do Not Pair with Math and Science Fundamentals Are for Novice Learners

5.5.3. Theme 3: Homework and Supplemental Materials Should Reflect Real-World Problems

5.5.4. Theme 4: Software Presented a New Way of Learning the Material

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Favis, E. With Coronavirus Closing Schools, Here’s How Video Games Are Helping Teachers. The Washington Post. 15 April 2020. Available online: https://www.washingtonpost.com/video-games/2020/04/15/teachers-video-games-coronavirus-education-remote-learning/ (accessed on 4 December 2021).

- Gamage, K.A.A.; Wijesuriya, D.I.; Ekanayake, S.Y.; Rennie, A.E.W.; Lambert, C.G.; Gunawardhana, N. Online Delivery of Teaching and Laboratory Practices: Continuity of University Programmes during COVID-19 Pandemic. Educ. Sci. 2020, 10, 291.

- Al Mulhem, A.; Almaiah, M.A. A Conceptual Model to Investigate the Role of Mobile Game Applications in Education during the COVID-19 Pandemic. Electronics 2021, 10, 2106.

- Fontana, M.T. Gamification of ChemDraw during the COVID-19 Pandemic: Investigating How a Serious, Educational-Game Tournament (Molecule Madness) Impacts Student Wellness and Organic Chemistry Skills while Distance Learning. J. Chem. Educ. 2020, 97, 3358–3368.

- Krouska, A.; Troussas, C.; Sgouropoulou, C. Mobile game-based learning as a solution in COVID-19 era: Modeling the pedagogical affordance and student interactions. Educ. Inf. Technol. 2021, 1–13.

- Yang, X.; Zhang, M.; Kong, L.; Wang, Q.; Hong, J.-C. The Effects of Scientific Self-efficacy and Cognitive Anxiety on Science Engagement with the “Question-Observation-Doing-Explanation” Model during School Disruption in COVID-19 Pandemic. J. Sci. Educ. Technol. 2020, 30, 380–393.

- Bertram, L. Digital Learning Games for Mathematics and Computer Science Education: The Need for Preregistered RCTs, Standardized Methodology, and Advanced Technology. Front. Psychol. 2020, 11, 2127.

- Hosseini, H.; Hartt, M.; Mostafapour, M. Learning Is Child’s Play: Game-Based Learning in Computer Science Education. ACM Trans. Comput. Educ. 2019, 19, 1–18.

- Alkis, N.; Ozkan, S. Work in Progress—A Modified Technology Acceptance Model for E-Assessment: Intentions of Engineering Students to Use Web-Based Assessment Tools. In Proceedings of the 2010 IEEE Frontiers in Education Conference, Arlington, VA, USA, 27–30 October 2010.

- Adams, D.M.; Pilegard, C.; Mayer, R.E. Evaluating the Cognitive Consequences of Playing Portal for a Short Duration. J. Educ. Comput. Res. 2015, 54, 173–195.

- Barreto, F.; Benitti, V.; Sommariva, L. Evaluation of a Game Used to Teach Usability to Undergraduate Students in Computer Science. J. Usability Stud. 2015, 11, 21–39.

- Sivak, S.; Sivak, M.; Isaacs, J.; Laird, J.; McDonald, A. Managing the Tradeoffs in the Digital Transformation of an Educational Board Game to a Computer-based Simulation. In Proceedings of the Sandbox Symposium 2007: ACM SIGGRAPH Video Game Symposium, San Diego, CA, USA, 4–5 August 2007; pp. 97–102.

- Lee, Y.-H.; Dunbar, N.; Kornelson, K.; Wilson, S.; Ralston, R.; Savic, M.; Stewart, S.; Lennox, E.; Thompson, W.; Elizondo, J. Digital Game based Learning for Undergraduate Calculus Education: Immersion, Calculation, and Conceptual Understanding. Int. J. Gaming Comput.-Mediat. Simul. 2016, 8, 13–27.

- Bian, T.; Zhao, K.; Meng, Q.; Jiao, H.; Tang, Y.; Luo, J. Preparation and properties of calcium phosphate cement/small intestinal submucosa composite scaffold mimicking bone components and Haversian microstructure. Mater. Lett. 2018, 212, 73–77.

- Abt, C.C. Serious Games; University Press of America: Lanham, MD, USA, 1987.

- Djaouti, D.; Alvarez, J.; Jessel, J.-P.; Rampnoux, O. Origins of Serious Games. In Serious Games and Edutainment Applications; Ma, M., Oikonomou, A., Jain, L.C., Eds.; Springer: London, UK, 2011.

- Gampell, A.; Gaillard, J.C.; Parsons, M.; Le Dé, L. ‘Serious’ Disaster Video Games: An Innovative Approach to Teaching and Learning about Disasters and Disaster Risk Reduction. J. Geogr. 2020, 119, 159–170.

- Gampell, A.V.; Gaillard, J.C.; Parsons, M.; Le Dé, L. Exploring the use of the Quake Safe House video game to foster disaster and disaster risk reduction awareness in museum visitors. Int. J. Disaster Risk Reduct. 2020, 49, 101670.

- Hof, B. The turtle and the mouse: How constructivist learning theory shaped artificial intelligence and educational technology in the 1960s. Hist. Educ. 2020, 50, 93–111.

- Shaffer, D.W.; Squire, K.R.; Halverson, R.; Gee, J.P. Video Games and the Future of Learning. Phi Delta Kappan 2005, 87, 105–111.

- Plass, J.L.; Perlin, K.; Nordlinger, J. The games for learning institute: Research on design patterns for effective educational games. In Proceedings of the Game Developers Conference, San Francisco, CA, USA, 9 March 2010.

- Chang, Y.; Aziz, E.-S.; Zhang, Z.; Zhang, M.; Esche, S.K. Evaluation of a video game adaptation for mechanical engineering educational laboratories. In Proceedings of the 2016 IEEE Frontiers in Education Conference, Erie, PA, USA, 12–15 October 2016.

- Coller, B.; Scott, M. Effectiveness of using a video game to teach a course in mechanical engineering. Comput. Educ. 2009, 53, 900–912.

- Coller, B.D. A Video Game for Teaching Dynamic Systems & Control to Mechanical Engineering Undergraduates. In Proceedings of the 2010 American Control Conference, Baltimore, MD, USA, 30 June–2 July 2010; pp. 390–395.

- Jiau, H.C.; Chen, J.C.; Ssu, K.-F. Enhancing Self-Motivation in Learning Programming Using Game-Based Simulation and Metrics. IEEE Trans. Educ. 2009, 52, 555–562.

- Jong, B.-S.; Lai, C.-H.; Hsia, Y.-T.; Lin, T.-W.; Lu, C.-Y. Using Game-Based Cooperative Learning to Improve Learning Motivation: A Study of Online Game Use in an Operating Systems Course. IEEE Trans. Educ. 2012, 56, 183–190.

- Coller, B.D.; Shernoff, D.J. Video Game-Based Education in Mechanical Engineering: A Look at Student Engagement. Int. J. Eng. Educ. 2009, 25, 308–317.

- Landers, R.N.; Callan, R.C. Casual Social Games as Serious Games: The Psychology of Gamification in Undergraduate Education and Employee Training. In Serious Games and Edutainment Applications; Ma, M., Oikonomou, A., Jain, L.C., Eds.; Springer: London, UK, 2011; pp. 399–423.

- Orvis, K.A.; Horn, D.B.; Belanich, J. The roles of task difficulty and prior videogame experience on performance and motivation in instructional videogames. Comput. Hum. Behav. 2008, 24, 2415–2433.

- Davis, F.D. Perceived Usefulness, Perceived Ease of Use, And User Acceptance of Information Technology. MIS Q. 1989, 13, 319–340.

- Davis, F.D.; Bagozzi, R.P.; Warshaw, P.R. User Acceptance of Computer Technology: A Comparison of Two Theoretical Models. Manag. Sci. 1989, 35, 982–1003.

- Davis, F.D. User acceptance of information technology: System characteristics, user perceptions and behavioral impacts. Int. J. Man-Mach. Stud. 1993, 38, 475–487.

- Venkatesh, V.; Davis, F.D. A Theoretical Extension of the Technology Acceptance Model: Four Longitudinal Field Studies. Manag. Sci. 2000, 46, 186–204.

- Aydin, G. Effect of Demographics on Use Intention of Gamified Systems. Int. J. Technol. Hum. Interact. 2018, 14, 1–21.

- Mi, Q.; Keung, J.; Mei, X.; Xiao, Y.; Chan, W.K. A Gamification Technique for Motivating Students to Learn Code Readability in Software Engineering. In Proceedings of the 2018 International Symposium on Educational Technology, Osaka, Japan, 31 July–2 August 2018; pp. 250–254.

- Rönnby, S.; Lundberg, O.; Fagher, K.; Jacobsson, J.; Tillander, B.; Gauffin, H.; Hansson, P.-O.; Dahlström, O.; Timpka, T.; Bolling, C.; et al. mHealth Self-Report Monitoring in Competitive Middle- and Long-Distance Runners: Qualitative Study of Long-Term Use Intentions Using the Technology Acceptance Model. JMIR mHealth uHealth 2018, 6, e10270.

- Estriegana, R.; Medina-Merodio, J.-A.; Barchino, R. Student acceptance of virtual laboratory and practical work: An extension of the technology acceptance model. Comput. Educ. 2019, 135, 1–14.

- Eccles, J. Achievement and Achievement Motives; W. H. Freeman: San Francisco, CA, USA, 1983.

- Wigfield, A. Expectancy-value theory of achievement motivation: A developmental perspective. Educ. Psychol. Rev. 1994, 6, 49–78.

- Winston, C. The utility of expectancy/value and disidentification models for understanding ethnic group differences in academic performance and self-esteem. Z. Fur Padag. Psychol. 1997, 11, 177–186.

- Eccles, J.; Wigfield, A.; Harold, R.D.; Blumenfeld, P. Age and Gender Differences in Children’s Self and Task Perceptions During Elementary-School. Child Dev. 1993, 64, 830–847.

- Piaget, J. Play, Dreams and Imitation in Childhood; Norton: New York, NY, USA, 1962.

- Shaffer, D.W. Epistemic frames for epistemic games. Comput. Educ. 2006, 46, 223–234.

- Plass, J.L.; Moreno, R.; Brünken, R. Cognitive Load Theory; Cambridge University Press: Cambridge, UK, 2010.

- Plass, J.L.; Homer, B.D.; Kinzer, C.K. Foundations of Game-Based Learning. Educ. Psychol. 2015, 50, 258–283.

- Handriyantini, E.; Subari, D. Development of a Casual Game for Mobile Learning With the Kiili Experiential Gaming Model. In Proceedings of the 11th European Conference on Games Based Learning, Graz, Austria, 5–6 October 2017; pp. 213–218.

- Cheng, K.W. Casual Gaming; Vrije Universiteit: Amsterdam, The Netherlands, 2011.

- Elias, H. First Person Shooter—The Subjective Cyberspace; LabCom: Covilha, Portugal, 2009.

- Wardrip-Fruin, N.; Harrigan, P. Second Person: Role-Playing and Story in Games and Playable Media; MIT Press: Cambridge, MA, USA, 2007.

- Kolb, D.A. Experiential Learning: Experience as the Source of Learning and Development; Prentice-Hall: Englewood Cliffs, NJ, USA, 1984.

- Dunwell, I.; Petridis, P.; Hendrix, M.; Arnab, S.; Al-Smadi, M.; Guetl, C. Guiding Intuitive Learning in Serious Games: An Achievement-Based Approach to Externalized Feedback and Assessment. In Proceedings of the 2012 Sixth International Conference on Complex, Intelligent, and Software Intensive Systems, Palermo, Italy, 4–6 July 2012; pp. 911–916.

- Dondlinger, M. Educational Video Game Design: A Review of the Literature. J. Appl. Educ. Technol. 2007, 4, 21–31.

- ISO 9241-210:2019(en). Ergonomics of Human-System Interaction—Part 210: Human-Centred Design for Interactive Systems; International Organization for Standardization: Geneva, Switzerland, 2019; Available online: https://www.iso.org/standard/77520.html (accessed on 1 December 2021).

- Hornbæk, K.; Hertzum, M. Technology Acceptance and User Experience. ACM Trans. Comput.-Hum. Interact. 2017, 24, 1–30.

- Aranyi, G.; van Schaik, P. Modeling user experience with news websites. J. Assoc. Inf. Sci. Technol. 2015, 66, 2471–2493.

- Venkatesh, V. Determinants of Perceived Ease of Use: Integrating Control, Intrinsic Motivation, and Emotion into the Technology Acceptance Model. Inf. Syst. Res. 2000, 11, 342–365.

- McFarland, D.J.; Hamilton, D. Adding contextual specificity to the technology acceptance model. Comput. Hum. Behav. 2006, 22, 427–447.

- Gomez-Jauregui, V.; Manchado, C.; Otero, C. Gamification in a Graphical Engineering course—Learning by playing. In Proceedings of the International Joint Conference on Mechanics, Design Engineering and Advanced Manufacturing (JCM), Catania, Italy, 14–16 September 2016.

- Ismail, N.; Ayub, A.F.M.; Yunus, A.S.M.; Ab Jalil, H. Utilising CIDOS LMS in Technical Higher Education: The Influence of Compatibility Roles on Consistency of Use. In Proceedings of the 2nd International Research Conference on Business and Economics (IRCBE), Semarang, Indonesia, 3–4 August 2016.

- Janssen, D.; Schilberg, D.; Richert, A.; Jeschke, S. Pump it up!—An Online Game in the Lecture “Computer Science in Mechanical Engineering”. In Proceedings of the 8th European Conference on Games Based Learning (ECGBL), Berlin, Germany, 9–10 October 2014.

- Tummel, C.; Richert, A.; Schilberg, D.; Jeschke, S. Pump It up!—Conception of a Serious Game Applying in Computer Science. In Proceedings of the 2nd International Conference on Learning and Collaboration Technologies/17th International Conference on Human-Computer Interaction, Los Angeles, CA, USA, 2–7 August 2015.

- Lenz, L.; Stehling, V.; Richert, A.; Isenhardt, I.; Jeschke, S. Of Abstraction and Imagination: An Inventory-Taking on Gamification in Higher Education. In Proceedings of the 11th European Conference on Game-Based Learning (ECGBL), Graz, Austria, 5–6 October 2017.

- Martinetti, A.; Puig, J.E.P.; Alink, C.O.; Thalen, J.; Van Dongen, L.A. Gamification in teaching Maintenance Engineering: A Dutch experience in the rolling stock management learning. In Proceedings of the 3rd International Conference on Higher Education Advances (HEAd), Valencia, Spain, 21–23 June 2017.

- Menandro, F.C.M.; Arnab, S. Game-Based Mechanical Engineering Teaching and Learning—A Review. Smart Sustain. Manuf. Syst. 2020, 5, 45–59.

- Shernoff, D.; Ryu, J.-C.; Ruzek, E.; Coller, B.; Prantil, V. The Transportability of a Game-Based Learning Approach to Undergraduate Mechanical Engineering Education: Effects on Student Conceptual Understanding, Engagement, and Experience. Sustainability 2020, 12, 6986.

- Alanne, K. An overview of game-based learning in building services engineering education. Eur. J. Eng. Educ. 2015, 41, 204–219.

- Dinis, F.M.; Guimaraes, A.S.; Carvalho, B.R.; Martins, J.P.P. Development of Virtual Reality Game-Based interfaces for Civil Engineering Education. In Proceedings of the 8th IEEE Global Engineering Education Conference (EDUCON), Athens, Greece, 25–28 April 2017.

- Ebner, M.; Holzinger, A. Successful implementation of user-centered game based learning in higher education: An example from civil engineering. Comput. Educ. 2007, 49, 873–890.

- Herrera, R.F.; Sanz, M.A.; Montalbán-Domingo, L.; García-Segura, T.; Pellicer, E. Impact of Game-Based Learning on Understanding Lean Construction Principles. Sustainability 2019, 11, 5294.

- Taillandier, F.; Micolier, A.; Sauce, G.; Chaplain, M. DOMEGO: A Board Game for Learning How to Manage a Construction Project. Int. J. Game-Based Learn. 2021, 11, 20–37.

- Tsai, M.-H.; Wen, M.-C.; Chang, Y.-L.; Kang, S.-C. Game-based education for disaster prevention. AI Soc. 2014, 30, 463–475.

- Zechner, J.; Ebner, M. Playing a Game in Civil Engineering: The Internal Force Master for Structural Analysis. In Proceedings of the 14th International Conference on Interactive Collaborative Learning (ICL)/11th International Conference on Virtual-University (VU), Piestany, Slovakia, 21–23 September 2011.

- Carranza, D.B.; Negron, A.P.P.; Contreras, M. Teaching Approach for the Development of Virtual Reality Videogames. In Proceedings of the International Conference on Software Process Improvement (CIMPS), Leon, Mexico, 23–25 October 2019; pp. 276–288.

- Carranza, D.B.; Negrón, A.P.P.; Contreras, M. Videogame development training approach: A Virtual Reality and open-source perspective. JUCS-J. Univers. Comput. Sci. 2021, 27, 152–169.

- Cengiz, M.; Birant, K.U.; Yildirim, P.; Birant, D. Development of an Interactive Game-Based Learning Environment to Teach Data Mining. Int. J. Eng. Educ. 2017, 33, 1598–1617.

- Sarkar, M.S.; William, J.H. Digital Democracy: Creating an Online Democracy Education Simulation in a Software Engineering Class. In Proceedings of the 48th ACM Annual Southeast Regional Conference (SE), New York, NY, USA, 15–17 April 2010; pp. 283–286.

- Kodappully, M.; Srinivasan, B.; Srinivasan, R. Cognitive Engineering for Process Safety: Effective Training for Process Operators Using Eye Gaze Patterns. In Proceedings of the 26th European Symposium on Computer Aided Process Engineering (ESCAPE), Portoroz, Slovenia, 12–15 June 2016; pp. 2043–2048.

- Llanos, J.; Fernández-Marchante, C.M.; García-Vargas, J.M.; Lacasa, E.; de la Osa, A.R.; Sanchez-Silva, M.L.; De Lucas-Consuegra, A.; Garcia, M.T.; Borreguero, A.M. Game-Based Learning and Just-in-Time Teaching to Address Misconceptions and Improve Safety and Learning in Laboratory Activities. J. Chem. Educ. 2021, 98, 3118–3130.

- Tarres, J.A.; Oliver, H.; Delgado-Aguilar, M.; Perez, I.; Alcala, M. Apps as games to help students in their learning process. In Proceedings of the 9th International Conference on Education and New Learning Technologies (EDULEARN), Barcelona, Spain, 3–5 July 2017; pp. 203–209.

- Wun, K.P.; Harun, J. The Effects of Scenario-Epistemic Game Approach on Professional Skills and Knowledge among Chemical Engineering Students. In Proceedings of the 6th IEEE Conference on Engineering Education (ICEED), Kuala Lumpur, Malaysia, 9–10 December 2014; pp. 90–94.

- Cohen, M.A.; Niemeyer, G.O.; Callaway, D.S. Griddle: Video Gaming for Power System Education. IEEE Trans. Power Syst. 2016, 32, 3069–3077.

- Bilge, P.; Severengiz, M. Analysis of industrial engineering qualification for the job market. In Proceedings of the 16th Global Conference on Sustainable Manufacturing (GCSM), Lexington, KY, USA, 2–4 October 2018; pp. 725–731.

- Despeisse, M. Games and simulations in industrial engineering education: A review of the cognitive and affective learning outcomes. In Proceedings of the Winter Simulation Conference (WSC), Gothenburg, Sweden, 9–12 December 2018; pp. 4046–4057.

- Galleguillos, L.; Santelices, I.; Bustos, R. Designing a board game for industrial engineering students: A collaborative work experience of freshmen. In Proceedings of the 13th International Technology, Education and Development Conference (INTED), Valencia, Spain, 11–13 March 2019; pp. 138–144.

- Surinova, Y.; Jakabova, M.; Kosnacova, P.; Jurik, L.; Kasnikova, K. Game-based learning of workplace standardizations basics. In Proceedings of the 8th International Technology, Education and Development Conference (INTED), Valencia, Spain, 10–12 March 2014; pp. 3941–3948.

- Pierce, T.; Madani, K. Online gaming for sustainable common pool resource management and tragedy of the commons prevention. In Proceedings of the IEEE International Conference on Systems, Man, and Cybernetics (SMC), Manchester, UK, 13–16 October 2013; pp. 1765–1770.

- Sobke, H.; Hauge, J.B.; Stefan, I.A.; Stefan, A. Using a Location-Based AR Game in Environmental Engineering. In Proceedings of the 1st IFIP TC 14 Joint International Conference on 18th IFIP International Conference on Entertainment Computing (IFIP-ICEC)/5th International Joint Conference on Serious Games (JCSG), Arequipa, Peru, 11–15 November 2019; pp. 466–469.

- Frøland, T.H.; Heldal, I.; Sjøholt, G.; Ersvær, E. Games on Mobiles via Web or Virtual Reality Technologies: How to Support Learning for Biomedical Laboratory Science Education. Information 2020, 11, 195.

- Butler, W.M. An Experiment in Live Simulation-Based Learning in Aircraft Design and its Impact on Student Preparedness for Engineering Practice. In Proceedings of the ASEE Annual Conference, Atlanta, GA, USA, 23–26 June 2013.

- Roy, J. Engineering by the Numbers: ASEE Retention and Time-to-Graduation Benchmarks for Undergraduate Schools, Departments and Programs; American Society for Engineering Education: Washington, DC, USA, 2019.

- Buffum, P.S.; Frankosky, M.; Boyer, K.E.; Wiebe, E.; Mott, B.; Lester, J. Leveraging Collaboration to Improve Gender Equity in a Game-based Learning Environment for Middle School Computer Science. In Proceedings of the Research on Equity and Sustained Participation in Engineering Computing and Technology, Charlotte, NC, USA, 13–14 August 2015.

- Coller, B.D. Work in progress—A video game for teaching dynamics. In Proceedings of the 2011 Frontiers in Education Conference (FIE), Rapid City, SD, USA, 12–15 October 2011.

- Buffum, P.S.; Frankosky, M.; Boyer, K.E.; Wiebe, E.; Mott, B.W.; Lester, J.C. Collaboration and Gender Equity in Game-Based Learning for Middle School Computer Science. Comput. Sci. Eng. 2016, 18, 18–28.

- Cheng, Y.M.; Chen, P.F. Building an online game-based learning system for elementary school. In Proceedings of the 4th International Conference on Intelligent Information Hiding and Multimedia Signal Processing, Harbin, China, 15–17 August 2008.

- Lester, J.C.; Spires, H.; Nietfeld, J.L.; Minogue, J.; Mott, B.W.; Lobene, E.V. Designing game-based learning environments for elementary science education: A narrative-centered learning perspective. Inf. Sci. 2014, 264, 4–18.

- Shabihi, N.; Taghiyareh, F.; Faridi, M.H. The Relationship Between Gender and Game Dynamics in e-Learning Environment: An Empirical Investigation. In Proceedings of the 12th European Conference on Games Based Learning (ECGBL), Lille, France, 4–5 October 2018; pp. 574–582.

- Pezzullo, L.G.; Wiggins, J.B.; Frankosky, M.H.; Min, W.; Boyer, K.E.; Mott, B.W.; Wiebe, E.N.; Lester, J.C. “Thanks Alisha, Keep in Touch”: Gender Effects and Engagement with Virtual Learning Companions. In Proceedings of the 18th International Conference on Artificial Intelligence in Education (AIED), Wuhan, China, 28 June–1 July 2017.

- Robertson, J. Making games in the classroom: Benefits and gender concerns. Comput. Educ. 2012, 59, 385–398.

- Alserri, S.A.; Zin, N.A.M.; Wook, T. Gender-based Engagement Model for Designing Serious Games. In Proceedings of the 6th International Conference on Electrical Engineering and Informatics (ICEEI)—Sustainable Society Through Digital Innovation, Langkawi, Malaysia, 25–27 November 2017.

- Creswell, J.W.; Plano Clark, V.L. Designing and Conducting Mixed Methods Research; Sage Publications Inc.: Thousand Oaks, CA, USA, 2018; p. 492.

- The Carnegie Classification of Institutions of Higher Education. 2018 Update—Facts & Figures; Center for Postsecondary Research, Ed.; Indiana University, School of Education: Bloomington, IN, USA, 2019.

- Cook-Chennault, K.; Villanueva, I. Preliminary Findings: RIEF—Understanding Pedagogically Motivating Factors for Underrepresented and Nontraditional Students in Online Engineering Learning Modules. In Proceedings of the 2019 ASEE Annual Conference & Exposition, Tampa, FL, USA, 16–19 June 2019.

- Cook-Chennault, K.; Villanueva, I. An initial exploration of the perspectives and experiences of diverse learners’ acceptance of online educational engineering games as learning tools in the classroom. In Proceedings of the 2019 IEEE Frontiers in Education Conference, Cincinnati Marriott at RiverCenter, Cincinnati, OH, USA, 16–19 October 2019.

- Cook-Chennault, K.; Villanueva, I. Exploring perspectives and experiences of diverse learners’ acceptance of online educational engineering games as learning tools in the classroom. In Proceedings of the 2020 IEEE Frontiers in Education Conference (FIE), Uppsala, Sweden, 21–24 October 2020.

- Shojaee, A.; Kim, H.W.; Cook-Chennault, K.; Villanueva Alarcon, I. What you see is what you get?—Relating eye tracking metrics to students’ attention to game elements. In Proceedings of the IEEE Frontiers in Education (FIE)—Education for a Sustainable Future, Lincoln, NE, USA, 2021.

- Gauthier, A.; Jenkinson, J. Game Design for Transforming and Assessing Undergraduates’ Understanding of Molecular Emergence (Pilot). In Proceedings of the 9th European Conference on Games-Based Learning (ECGBL), Nord Trondelag Univ Coll, Steinkjer, Norway, 8–9 October 2015.

- Johnson, E.; Giroux, A.; Merritt, D.; Vitanova, G.; Sousa, S. Assessing the Impact of Game Modalities in Second Language Acquisition: ELLE the EndLess LEarner. JUCS-J. Univers. Comput. Sci. 2020, 26, 880–903.

- Palaigeorgiou, G.; Politou, F.; Tsirika, F.; Kotabasis, G. FingerDetectives: Affordable Augmented Interactive Miniatures for Embodied Vocabulary Acquisition in Second Language Learning. In Proceedings of the 11th European Conference on Game-Based Learning (ECGBL), Graz, Austria, 5–6 October 2017.

- Potter, H.; Schots, M.; Duboc, L.; Werneck, V. InspectorX: A Game for Software Inspection Training and Learning. In Proceedings of the IEEE 27th Conference on Software Engineering Education and Training (CSEE&T), Klagenfurt, Austria, 23–25 April 2014.

- Owen, H.E.; Licorish, S.A. Game-based student response system: The effectiveness of Kahoot! on junior and senior information science students’ learning. J. Inf. Technol. Educ. Res. 2020, 19, 511–553.

- Lucas, M.J.V.; Daviu, E.A.; Martinez, D.D.; Garcia, J.C.R.; Chust, A.P.; Domenech, C.G.; Cerdan, A.P. Engaging students in out-of-class activities through game-based learning and gamification. In Proceedings of the 9th Annual International Conference of Education, Research and Innovation (iCERi), Seville, Spain, 14–16 November 2016.

- Davis, F.D.; Venkatesh, V. A critical assessment of potential measurement biases in the technology acceptance model: Three experiments. Int. J. Hum.-Comput. Stud. 1996, 45, 19–45.

- Arnold, S.R.; Kruatong, T.; Dahsah, C.; Suwanjinda, D. The classroom-friendly ABO blood types kit: Blood agglutination simulation. J. Biol. Educ. 2012, 46, 45–51.

- Saldana, J. The Coding Manual for Qualitative Researchers; Sage Publications Inc.: Thousand Oaks, CA, USA, 2015.

- Krueger, R.A.; Casey, M.A. Focus Groups: A Practical Guide for Applied Research, 5th ed.; SAGE: Thousand Oaks, CA, USA, 2015.

- Corbin, J.; Strauss, A. Basics of Qualitative Research—Techniques and Procedures for Developing Grounded Theory, 4th ed.; Sage Publications, Inc.: Thousand Oaks, CA, USA, 2014.

- Charmaz, K. Constructing Grounded Theory, 2nd ed.; Introducing Qualitative Methods Series; Seaman, J., Ed.; Sage: Thousand Oaks, CA, USA, 2014.

- Grafsgaard, J.F.; Wiggins, J.B.; Boyer, K.E.; Wiebe, E.N.; Lester, J.C. Automatically Recognizing Facial Indicators of Frustration: A Learning-centric Analysis. In Proceedings of the 2013 Humaine Association Conference on Affective Computing and Intelligent Interaction, Geneva, Switzerland, 2–5 September 2013.

- Craig, S.; Graesser, A.; Sullins, J.; Gholson, B. Affect and learning: An exploratory look into the role of affect in learning with AutoTutor. J. Educ. Media 2004, 29, 241–250.

- Abramson, J.; Dawson, M.; Stevens, J. An Examination of the Prior Use of E-Learning Within an Extended Technology Acceptance Model and the Factors That Influence the Behavioral Intention of Users to Use M-Learning. SAGE Open 2015, 5.

- Graesser, A.C. Emotions are the experiential glue of learning environments in the 21st century. Learn. Instr. 2019, 70, 101212.

| Discipline | Topic(s) Discussed | Population Studied (Men, Women, Race/Ethnicity) | Authors |
|---|---|---|---|
| Computer science, electrical engineering, liberal arts and social sciences, material science engineering, mathematics, mechanical engineering, physics, chemistry (review article)—undergraduate course | Gamification approach, educational objectives, application to curriculum, and cognitive learning outcomes | Not applicable (review article) | Menandro, F.; Arnab, S. [64] |
| Mechanical engineering (subject: dynamics; game: Spumone)—undergraduate course | Students’ conceptual understanding (Dynamics Concept Inventory), emotional engagement, and experience with the video game | N = 243; predominantly male and ethnically diverse [65] (Location: USA) | Shernoff, D., Ryu, J.-C., et al. [65] |
| Mechanical engineering (course subject: graphical engineering)—undergraduate course | Game enjoyment, usefulness, and motivation. Multiple games: Championship of Limits and Fits, Tournament of Video Search of Fabrication Processes, Forum of Doubts, Proposals of Trivial Questions, Contest of 3D Modeling | N = 51; 11 women (22%); 30 gamers (59%) (Location: Spain) | Gomez-Jauregui, V., et al. [58] |
| All disciplines—undergraduate and graduate students: 30% architecture, 26% computer science, 22% engineering sciences, 10% social sciences, 10% economics | Sought to understand whether students expect gamification to be useful | N = 83; 31 women (37%); ages 18–55 years (Location: Germany) | Lenz, L., et al. [62] |
| Mechanical engineering—graduate course | Examined student engagement and active cooperation within a group, and gave students real-time feedback | N = 36 (Location: Netherlands) | Martinetti, A., et al. [63] |
| Mechanical engineering (game: Pump It Up) | Design of the game and how students use it to write computer code that programs robots to manufacture pump adapter pipes | No participants (game development) | Janssen, D., et al. [61] |
| Mechanical engineering—dynamic systems and control (game: NIU-Torcs) | Student engagement and perceived effectiveness of a race car game | N = 51; N = 38 (Location: USA) | Coller, B., et al. [23,27] |
| Civil engineering—graduate engineering course (game: Internal Force Master) | Examined whether an online learning tool could make complex material more approachable | N = 121 (Location: Austria) | Ebner, M. and Holzinger, A. [68] |
| Civil engineering—virtual reality game for civil engineering education (participants of various ages and academic backgrounds) | Examined participant acceptance of a virtual reality game in construction engineering | N = 72 (Location: Portugal) | Dinis, F. M., et al. [67] |

| Pre-Game Questions | Learning Model/Theory Reference |
|---|---|
| Q1.pre. Do you play video games on your phone? | Expectancy Value Theory [29,38] |
| Q2.pre. Are you currently taking a Dynamics or Statics class? | |
| Q3.pre. Have you played the learning game before? | |
| Q4.pre. Do you play video games on your computer? | |
| Post-Game Questions | Learning Model/Theory Reference |
| Q1.post. The Challenge Level I ended the game at was (1–24 Challenge Levels): | |
| Q2.post. The game is easy to play. {ease of use} | Technology Acceptance Model [30,32,112] |
| Q3.post. The ways to advance to higher levels in the game are easy to understand. {ease of use} | |
| Q4.post. I understood the engineering topics each level of the game was teaching me. {ease of use} | |
| Q5.post. This game helped me to understand engineering truss structures. {useful: help to do my job better} | |
| Q6.post. Playing the game increased my confidence in my engineering skills. | Added to assess usefulness. |
| Q7.post. The engineering concepts presented in the game were intuitive to me. | |
| Q8.post. Did you enjoy playing the game? | Expectancy Value Theory [29,38] |
| Q9.post. I got frustrated playing this game. | Game-Based Learning [42,52] and Expectancy Value Theory [29,38] |
| Q10.post. The hints motivated me to want to advance to higher challenge levels. | |
| Q11.post. The learning lessons or goals of each challenge are defined in enough detail to play the game. | |
| Q12.post. I would improve this game by (complete the sentence). Select a choice. | |
| Q13.post. This game is a good learning tool because (select appropriate responses). | Added to assess usefulness. |
| Focus Group Questions | |
| F1. Would you use this game to prepare for a job interview? Explain your answer. | Added to assess usefulness. |
| F2. Would you use this game to prepare for an exam for a class? | |

| Self-Identification | AA/Black (%) | Caucasian (%) | LatinX (%) | Asian (%) | Mixed-Race (%) | Other (%) | Total (%) |
|---|---|---|---|---|---|---|---|
| Male | 3 (2%) | 38 (19%) | 7 (4%) | 47 (23%) | 9 (5%) | -- | 104 (51%) |
| Female | 10 (5%) | 26 (13%) | 10 (5%) | -- | 2 (1%) | 1 (1%) | 93 (46%) |
| Non-binary | -- | 1 (0%) | -- | -- | -- | -- | 3 (2%) |
| Other | -- | -- | -- | -- | -- | 1 (1%) | 1 (1%) |
| Total | 13 (7%) | 65 (32%) | 17 (8%) | 47 (46%) | 11 (6%) | 2 (1%) | 201 (100%) |

| Pre-Game Question | Yes Count | Yes (%) | No Count | No (%) |
|---|---|---|---|---|
| Q1.pre. Do you play video games on your phone? | 141 | 70.1% | 60 | 29.9% |
| Q2.pre. Are you currently taking a Dynamics or Statics class? | 144 | 71.6% | 57 | 28.4% |
| Q3.pre. Have you played the learning game before? | 27 | 13.4% | 174 | 86.6% |
| Q4.pre. Do you play video games on your computer? | 126 | 62.7% | 75 | 37.3% |

| Question (Number of Responses) | Mean ± SD |
|---|---|
| Q1. The Challenge Level I ended the game at was: (N = 174) | 7.36 ± 3.01 |
| Q2. The game is easy to play. | 3.06 ± 1.43 |
| Q3. The ways to advance to higher levels in the game are easy to understand. | 2.98 ± 1.52 |
| Q4. I understood the engineering topics each level of the game was teaching me. | 3.08 ± 1.52 |
| Q5. This game helped me to understand engineering truss structures. | 2.77 ± 1.22 |
| Q6. Playing the game increased my confidence in my engineering skills. | 3.62 ± 1.47 |
| Q7. The engineering concepts presented in the game were intuitive to me. | 2.61 ± 1.13 |
| Q8. Did you enjoy playing the engineering serious game? | 2.49 ± 1.40 |
| Q9. The hints (in the game) motivated me to want to advance to higher challenge levels. | 3.80 ± 1.64 |
| Q10. The learning lessons or goals of each challenge are defined in enough detail to play the game. | 3.90 ± 1.64 |
| Q11. I got frustrated playing this game. | 3.47 ± 1.65 |

| Choice | Count (%) |
|---|---|
| Adding a story line. | 46/201 (22.9%) |
| Adding avatars/characters. | 35/201 (17.4%) |
| Making the images look more like real life. | 40/201 (19.9%) |
| Adding opportunities to compete against other players while playing. | 91/201 (45.3%) |
| The game is fine the way that it is. | 15/201 (7.5%) |
| Adding more explanation to the challenges. | 157/201 (78.1%) |
| Changing the rewards from nuts to something else. | 37/201 (18.4%) |
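The Q12 percentages above add up to well over 100%, which suggests that respondents could pick more than one option even though the item reads "Select a choice." A minimal sketch, assuming every share uses all 201 respondents as its denominator (as the "/201" entries indicate), recomputes the percentages and the average number of options selected per respondent. The variable names are illustrative only.

```python
# Q12 counts copied from the table above; each share uses all respondents as the denominator.
RESPONDENTS = 201
q12_counts = {
    "Adding a story line.": 46,
    "Adding avatars/characters.": 35,
    "Making the images look more like real life.": 40,
    "Adding opportunities to compete against other players while playing.": 91,
    "The game is fine the way that it is.": 15,
    "Adding more explanation to the challenges.": 157,
    "Changing the rewards from nuts to something else.": 37,
}

# Percentage of respondents who selected each option, rounded to one decimal as in the table.
q12_percent = {choice: round(100 * count / RESPONDENTS, 1) for choice, count in q12_counts.items()}

total_selections = sum(q12_counts.values())
print(f"Sum of percentages: {sum(q12_percent.values()):.1f}%")
print(f"Mean options selected per respondent: {total_selections / RESPONDENTS:.2f}")
for choice, pct in sorted(q12_percent.items(), key=lambda item: item[1], reverse=True):
    print(f"{pct:5.1f}%  {choice}")
```

A sum above 100% is only possible for a multi-select item, so the percentages should be read as "share of students who chose this option," not as a distribution over mutually exclusive answers.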

| Choice | Count (%) |
|---|---|
| The game is easy to figure out. | 88/201 (43.8%) |
| I can apply what I learned to my classes. | 109/201 (54.2%) |
| The game taught me about stability of truss structures. | 130/201 (64.7%) |
| It is a fun alternative to traditional learning. | 154/201 (76.6%) |

| Post-Game Question | Gender | N | Mean | Std. Dev. | F | Sig. |
|---|---|---|---|---|---|---|
| Q1. The Challenge Level I ended the game at was: | Male | 87 | 8.47 | 2.90 | 9.12 | <0.001 |
| | Female | 83 | 6.30 | 2.72 | | |
| | Non-binary | 3 | 5.33 | 2.52 | | |
| | Other | 1 | 5.00 | -- | | |
| | Total | 174 | 7.36 | 3.01 | | |
| Q10. The learning lessons or goals of each challenge are defined in enough detail to play the game. | Male | 104 | 3.37 | 1.46 | 8.88 | <0.001 |
| | Female | 93 | 4.44 | 1.66 | | |
| | Non-binary | 3 | 5.33 | 0.58 | | |
| | Other | 1 | 5.00 | -- | | |
| | Total | 201 | 3.90 | 1.64 | | |
| Q11. I got frustrated playing this game. | Male | 104 | 3.84 | 1.63 | 4.04 | 0.008 |
| | Female | 93 | 3.06 | 1.58 | | |
| | Non-binary | 3 | 3.67 | 2.08 | | |
| | Other | 1 | 2.00 | -- | | |
| | Total | 201 | 3.47 | 1.65 | | |
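The F values in the table above come from a one-way ANOVA with gender as the factor. As a rough consistency check, the statistic can be recomputed from the reported group sizes, means, and standard deviations alone. The sketch below is an illustration under that assumption, not the authors' analysis script: `GroupSummary` and `anova_from_summary` are hypothetical names, the single "Other" respondent (SD reported as "--") contributes nothing to the within-group sum of squares, and because the inputs are rounded to two decimals the result only approximates the reported F = 8.88 for Q10.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass
class GroupSummary:
    label: str
    n: int
    mean: float
    sd: Optional[float]  # None when the table reports "--" (a single respondent)


def anova_from_summary(groups: Sequence[GroupSummary]) -> Tuple[float, int, int]:
    """One-way ANOVA F statistic computed from per-group n, mean, and SD."""
    n_total = sum(g.n for g in groups)
    grand_mean = sum(g.n * g.mean for g in groups) / n_total
    # Between-groups SS: n-weighted squared deviations of group means from the grand mean.
    ss_between = sum(g.n * (g.mean - grand_mean) ** 2 for g in groups)
    # Within-groups SS: (n - 1) * SD^2 per group; a one-person group contributes 0.
    ss_within = sum((g.n - 1) * (g.sd or 0.0) ** 2 for g in groups)
    df_between = len(groups) - 1
    df_within = n_total - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Q10 by gender, copied from the table above.
q10_by_gender = [
    GroupSummary("Male", 104, 3.37, 1.46),
    GroupSummary("Female", 93, 4.44, 1.66),
    GroupSummary("Non-binary", 3, 5.33, 0.58),
    GroupSummary("Other", 1, 5.00, None),
]

f_stat, df_between, df_within = anova_from_summary(q10_by_gender)
print(f"Q10: F({df_between}, {df_within}) = {f_stat:.2f}")
```

Working from summaries rather than raw responses is convenient for checking published tables, but it cannot reproduce the exact F because the SDs and means are already rounded.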

| Post-Game Question | Do You Play Video Games on Your Phone? | Mean | Std. Dev. | N | F | Sig. |
|---|---|---|---|---|---|---|
| Q5. This game helped me to understand engineering truss structures. | Yes | 2.58 | 1.13 | 141 | 11.44 | 0.001 |
| | No | 3.20 | 1.31 | 60 | | |
| | Total | 2.77 | 1.22 | 201 | | |
| Q6. Playing the game increased my confidence in my engineering skills. | Yes | 3.46 | 1.44 | 141 | 5.42 | 0.021 |
| | No | 3.98 | 1.49 | 60 | | |
| | Total | 3.62 | 1.47 | 201 | | |
| Q8. Did you enjoy playing the serious game? | Yes | 2.26 | 1.20 | 141 | 12.94 | <0.001 |
| | No | 3.02 | 1.68 | 60 | | |
| | Total | 2.49 | 1.40 | 201 | | |
| Q9. The hints motivated me to want to advance to higher challenge levels. | Yes | 3.59 | 1.65 | 141 | 7.80 | 0.006 |
| | No | 4.28 | 1.53 | 60 | | |
| | Total | 3.80 | 1.64 | 201 | | |
| Q10. The learning lessons or goals of each challenge are defined in enough detail to play the game. | Yes | 3.71 | 1.63 | 141 | 6.58 | 0.011 |
| | No | 4.35 | 1.60 | 60 | | |
| | Total | 3.90 | 1.64 | 201 | | |
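With only two groups (phone gamers vs. non-gamers), the one-way ANOVA F equals the square of the pooled-variance Student's t statistic, so either test gives the same p-value. A minimal sketch, assuming the rounded Q5 summaries from the table above and using a hypothetical helper `pooled_t_and_f`, shows the equivalence; the recomputed F is close to, but not exactly, the reported 11.44 because of rounding in the inputs.

```python
import math
from typing import Tuple


def pooled_t_and_f(n1: int, m1: float, s1: float,
                   n2: int, m2: float, s2: float) -> Tuple[float, float, int]:
    """Pooled-variance Student's t and the equivalent two-group one-way ANOVA F."""
    df_within = n1 + n2 - 2
    # The pooled variance is also the ANOVA within-groups mean square.
    pooled_var = ((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / df_within
    t_stat = (m1 - m2) / math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    grand_mean = (n1 * m1 + n2 * m2) / (n1 + n2)
    ss_between = n1 * (m1 - grand_mean) ** 2 + n2 * (m2 - grand_mean) ** 2
    f_stat = ss_between / pooled_var  # df_between = 1, so MS_between equals SS_between
    return t_stat, f_stat, df_within


# Q5 split by phone gaming: Yes (n = 141) vs. No (n = 60).
t_stat, f_stat, df_within = pooled_t_and_f(141, 2.58, 1.13, 60, 3.20, 1.31)
print(f"t({df_within}) = {t_stat:.2f}, t^2 = {t_stat ** 2:.2f}, F(1, {df_within}) = {f_stat:.2f}")
```

The negative t reflects that phone gamers (Yes) gave lower Q5 ratings than non-gamers; squaring removes the sign, which is why F alone does not say which group scored higher.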

| Post-Game Question | Do You Play Video Games on Your Computer? | N | Mean | Std. Dev. | F | Sig. |
|---|---|---|---|---|---|---|
| Q2. This game is easy to play. | Yes | 126 | 2.86 | 1.337 | 7.353 | 0.007 |
| | No | 75 | 3.41 | 1.517 | | |
| | Total | 201 | 3.06 | 1.429 | | |
| Q1. The Challenge Level I ended the game at was: | Yes | 106 | 7.96 | 3.014 | 11.460 | 0.001 |
| | No | 68 | 6.43 | 2.766 | | |
| | Total | 174 | 7.36 | 3.007 | | |
| Q4. I understood the engineering topics each level of the game was teaching me. | Yes | 126 | 2.90 | 1.425 | 4.973 | 0.027 |
| | No | 75 | 3.39 | 1.635 | | |
| | Total | 201 | 3.08 | 1.521 | | |
| Q5. This game helped me to understand engineering truss structures. | Yes | 126 | 2.60 | 1.125 | 6.220 | 0.013 |
| | No | 75 | 3.04 | 1.320 | | |
| | Total | 201 | 2.77 | 1.217 | | |
| Q6. Playing the game increased my confidence in my engineering skills. | Yes | 126 | 3.37 | 1.457 | 10.342 | 0.002 |
| | No | 75 | 4.04 | 1.409 | | |
| | Total | 201 | 3.62 | 1.472 | | |
| Q9. The hints motivated me to want to advance to higher challenge levels. | Yes | 126 | 3.62 | 1.649 | 3.986 | 0.047 |
| | No | 75 | 4.09 | 1.595 | | |
| | Total | 201 | 3.80 | 1.641 | | |
| Q10. The learning lessons or goals of each challenge are defined in enough detail to play the game. | Yes | 126 | 3.60 | 1.655 | 12.313 | 0.001 |
| | No | 75 | 4.41 | 1.499 | | |
| | Total | 201 | 3.90 | 1.643 | | |
| Q11. I got frustrated playing this game. | Yes | 126 | 3.69 | 1.671 | 6.327 | 0.013 |
| | No | 75 | 3.09 | 1.552 | | |
| | Total | 201 | 3.47 | 1.649 | | |
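The tables report F and significance but no effect size. For a one-way ANOVA, eta squared (the share of total variance explained by the grouping) follows from F and its degrees of freedom alone: since F = (SS_between / df_between) / (SS_within / df_within), it follows that eta squared = F·df_between / (F·df_between + df_within). The sketch below applies that identity to the computer-gaming comparisons in the table above, assuming two groups (df_between = 1) and df_within = N − 2. The helper `eta_squared_from_f` is hypothetical, eta squared is not reported by the authors, and the values inherit the rounding of the published F.

```python
def eta_squared_from_f(f_stat: float, df_between: int, df_within: int) -> float:
    """Eta squared (SS_between / SS_total) recovered from a one-way ANOVA F statistic.

    From F = (SS_b / df_b) / (SS_w / df_w) it follows that SS_b / SS_w = F * df_b / df_w.
    """
    ratio = f_stat * df_between
    return ratio / (ratio + df_within)


# (F, total N) for each Yes-vs-No comparison by computer gaming, copied from the table above.
computer_gaming_f = {
    "Q1": (11.460, 174),
    "Q2": (7.353, 201),
    "Q4": (4.973, 201),
    "Q5": (6.220, 201),
    "Q6": (10.342, 201),
    "Q9": (3.986, 201),
    "Q10": (12.313, 201),
    "Q11": (6.327, 201),
}

for question, (f_stat, n_total) in computer_gaming_f.items():
    eta_sq = eta_squared_from_f(f_stat, df_between=1, df_within=n_total - 2)
    print(f"{question}: F(1, {n_total - 2}) = {f_stat:.3f}, eta^2 = {eta_sq:.3f}")
```

Reporting an effect size next to p helps separate statistical significance from practical magnitude; here every recovered value is small (well under 0.1).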

| Post-Game Question | Do You Play Video Games on Your Phone? | Gender | Mean | Std. Dev. | N | F | Sig. |
|---|---|---|---|---|---|---|---|
| Q1. The Challenge Level I ended the game at was (1–24 Challenge Levels): | Yes | Male | 8.54 | 2.750 | 65 | 5.022 | <0.001 |
| | | Female | 6.55 | 2.812 | 53 | | |
| | | Non-binary | 4.00 | 1.414 | 2 | | |
| | | Other | 5.00 | -- | 1 | | |
| | | Total | 7.56 | 2.952 | 121 | | |
| | No | Male | 8.27 | 3.383 | 22 | | |
| | | Female | 5.87 | 2.543 | 30 | | |
| | | Non-binary | 8.00 | -- | 1 | | |
| | | Total | 6.91 | 3.109 | 53 | | |
| | Total | Male | 8.47 | 2.905 | 87 | | |
| | | Female | 6.30 | 2.722 | 83 | | |
| | | Non-binary | 5.33 | 2.517 | 3 | | |
| | | Other | 5.00 | -- | 1 | | |
| | | Total | 7.36 | 3.007 | 174 | | |
| Q2. This game is easy to play. | Yes | Male | 2.68 | 1.312 | 65 | 2.043 | 0.063 |
| | | Female | 3.32 | 1.478 | 53 | | |
| | | Non-binary | 3.50 | 0.707 | 2 | | |
| | | Other | 3.00 | -- | 1 | | |
| | | Total | 2.98 | 1.405 | 121 | | |
| | No | Male | 2.77 | 1.152 | 22 | | |
| | | Female | 3.43 | 1.524 | 30 | | |
| | | Non-binary | 1.00 | -- | 1 | | |
| | | Total | 3.11 | 1.423 | 53 | | |
| | Total | Male | 2.70 | 1.268 | 87 | | |
| | | Female | 3.36 | 1.486 | 83 | | |
| | | Non-binary | 2.67 | 1.528 | 3 | | |
| | | Other | 3.00 | -- | 1 | | |
| | | Total | 3.02 | 1.408 | 174 | | |
| Q5. This game helped me to understand engineering truss structures. | Yes | Male | 2.60 | 1.235 | 65 | 2.432 | 0.028 |
| | | Female | 2.55 | 1.011 | 53 | | |
| | | Non-binary | 2.00 | 0.000 | 2 | | |
| | | Other | 5.00 | -- | 1 | | |
| | | Total | 2.59 | 1.145 | 121 | | |
| | No | Male | 3.36 | 1.293 | 22 | | |
| | | Female | 3.07 | 1.388 | 30 | | |
| | | Non-binary | 3.00 | -- | 1 | | |
| | | Total | 3.19 | 1.331 | 53 | | |
| | Total | Male | 2.79 | 1.286 | 87 | | |
| | | Female | 2.73 | 1.180 | 83 | | |
| | | Non-binary | 2.33 | 0.577 | 3 | | |
| | | Other | 5.00 | -- | 1 | | |
| | | Total | 2.77 | 1.233 | 174 | | |
| Q9. The hints motivated me to want to advance to higher challenge levels. | Yes | Male | 3.54 | 1.768 | 65 | 2.392 | 0.030 |
| | | Female | 3.45 | 1.539 | 53 | | |
| | | Non-binary | 4.00 | 0.000 | 2 | | |
| | | Other | 7.00 | -- | 1 | | |
| | | Total | 3.54 | 1.674 | 121 | | |
| | No | Male | 4.41 | 1.709 | 22 | | |
| | | Female | 4.37 | 1.542 | 30 | | |
| | | Non-binary | 4.00 | -- | 1 | | |
| | | Total | 4.38 | 1.584 | 53 | | |
| | Total | Male | 3.76 | 1.785 | 87 | | |
| | | Female | 3.78 | 1.593 | 83 | | |
| | | Non-binary | 4.00 | 0.000 | 3 | | |
| | | Other | 7.00 | -- | 1 | | |
| | | Total | 3.79 | 1.687 | 174 | | |
| Q10. The learning lessons or goals of each challenge are defined in enough detail to play the game. | Yes | Male | 3.26 | 1.482 | 65 | 5.392 | <0.001 |
| | | Female | 4.23 | 1.739 | 53 | | |
| | | Non-binary | 5.50 | 0.707 | 2 | | |
| | | Other | 5.00 | -- | 1 | | |
| | | Total | 3.74 | 1.667 | 121 | | |
| | No | Male | 3.41 | 1.333 | 22 | | |
| | | Female | 4.93 | 1.461 | 30 | | |
| | | Non-binary | 5.00 | -- | 1 | | |
| | | Total | 4.30 | 1.576 | 53 | | |
| | Total | Male | 3.30 | 1.440 | 87 | | |
| | | Female | 4.48 | 1.670 | 83 | | |
| | | Non-binary | 5.33 | 0.577 | 3 | | |
| | | Other | 5.00 | -- | 1 | | |
| | | Total | 3.91 | 1.656 | 174 | | |
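The F values in the two-factor table above appear consistent with the corrected-model F of a full-factorial model, which, for the non-empty gender-by-phone-gaming cells, is the same as a one-way ANOVA that treats each cell as its own group. The sketch below assumes that interpretation (the helper `cells_f` is hypothetical, not the authors' procedure) and recomputes the Q1 value from the rounded cell summaries. Single-member cells, whose SD is reported as "--", are given `None` and add nothing to the error sum of squares.

```python
from typing import Optional, Sequence, Tuple

Cell = Tuple[int, float, Optional[float]]  # (n, mean, SD); SD is None for single-member cells


def cells_f(cells: Sequence[Cell]) -> Tuple[float, int, int]:
    """Corrected-model F treating every non-empty factor-combination cell as its own group."""
    n_total = sum(n for n, _, _ in cells)
    grand_mean = sum(n * m for n, m, _ in cells) / n_total
    # Model SS: n-weighted squared deviations of cell means from the grand mean.
    ss_model = sum(n * (m - grand_mean) ** 2 for n, m, _ in cells)
    # Error SS: pooled within-cell variation.
    ss_error = sum((n - 1) * (sd or 0.0) ** 2 for n, _, sd in cells)
    df_model = len(cells) - 1
    df_error = n_total - len(cells)
    return (ss_model / df_model) / (ss_error / df_error), df_model, df_error


# Q1 cells from the table above: phone gamers first, then non-phone gamers.
q1_cells = [
    (65, 8.54, 2.750), (53, 6.55, 2.812), (2, 4.00, 1.414), (1, 5.00, None),  # Yes: M, F, NB, Other
    (22, 8.27, 3.383), (30, 5.87, 2.543), (1, 8.00, None),                    # No: M, F, NB
]

f_stat, df_model, df_error = cells_f(q1_cells)
print(f"Q1: F({df_model}, {df_error}) = {f_stat:.3f}")
```

A corrected-model F tests whether any cell means differ; it does not by itself separate the gender effect, the phone-gaming effect, and their interaction.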

| Post-Game Question | Gender | Do You Play Video Games on Your Computer? | Mean | Std. Dev. | N | F | Sig. |
|---|---|---|---|---|---|---|---|
| Q1. The Challenge Level I ended the game at was: | Male | Yes | 8.70 | 2.86 | 66 | 6.587 | <0.001 |
| | | No | 7.76 | 3.02 | 21 | | |
| | | Total | 8.47 | 2.90 | 87 | | |
| | Female | Yes | 6.92 | 2.96 | 36 | | |
| | | No | 5.83 | 2.45 | 47 | | |
| | | Total | 6.30 | 2.72 | 83 | | |
| | Non-binary | Yes | 5.33 | 2.52 | 3 | | |
| | | Total | 5.33 | 2.52 | 3 | | |
| | Other | Yes | 5.00 | -- | 1 | | |
| | | Total | 5.00 | -- | 1 | | |
| | Total | Yes | 7.96 | 3.01 | 106 | | |
| | | No | 6.43 | 2.77 | 68 | | |
| | | Total | 7.36 | 3.01 | 174 | | |
| Q2. This game is easy to play. | Male | Yes | 2.68 | 1.31 | 66 | 3.601 | 0.004 |
| | | No | 2.76 | 1.14 | 21 | | |
| | | Total | 2.70 | 1.27 | 87 | | |
| | Female | Yes | 2.89 | 1.26 | 36 | | |
| | | No | 3.72 | 1.56 | 47 | | |
| | | Total | 3.36 | 1.49 | 83 | | |
| | Non-binary | Yes | 2.67 | 1.53 | 3 | | |
| | | Total | 2.67 | 1.53 | 3 | | |
| | Other | Yes | 3.00 | -- | 1 | | |
| | | Total | 3.00 | -- | 1 | | |
| | Total | Yes | 2.75 | 1.29 | 106 | | |
| | | No | 3.43 | 1.50 | 68 | | |
| | | Total | 3.02 | 1.41 | 174 | | |
| Q5. This game helped me to understand engineering truss structures. | Male | Yes | 2.73 | 1.22 | 66 | 2.144 | 0.063 |
| | | No | 3.00 | 1.48 | 21 | | |
| | | Total | 2.79 | 1.29 | 87 | | |
| | Female | Yes | 2.36 | 0.96 | 36 | | |
| | | No | 3.02 | 1.26 | 47 | | |
| | | Total | 2.73 | 1.18 | 83 | | |
| | Non-binary | Yes | 2.33 | 0.58 | 3 | | |
| | | Total | 2.33 | 0.58 | 3 | | |
| | Other | Yes | 5.00 | -- | 1 | | |
| | | Total | 5.00 | -- | 1 | | |
| | Total | Yes | 2.61 | 1.15 | 106 | | |
| | | No | 3.01 | 1.32 | 68 | | |
| | | Total | 2.77 | 1.23 | 174 | | |
| Q6. Playing the game increased my confidence in my engineering skills. | Male | Yes | 3.48 | 1.50 | 66 | 2.118 | 0.066 |
| | | No | 3.76 | 1.26 | 21 | | |
| | | Total | 3.55 | 1.44 | 87 | | |
| | Female | Yes | 3.06 | 1.31 | 36 | | |
| | | No | 4.04 | 1.49 | 47 | | |
| | | Total | 3.61 | 1.49 | 83 | | |
| | Non-binary | Yes | 3.33 | 1.15 | 3 | | |
| | | Total | 3.33 | 1.15 | 3 | | |
| | Other | Yes | 4.00 | -- | 1 | | |
| | | Total | 4.00 | -- | 1 | | |
| | Total | Yes | 3.34 | 1.43 | 106 | | |
| | | No | 3.96 | 1.42 | 68 | | |
| | | Total | 3.58 | 1.45 | 174 | | |
| Q8. Did you enjoy playing the Civil-Build game? | Male | Yes | 2.36 | 1.20 | 66 | 2.376 | 0.041 |
| | | No | 2.38 | 0.86 | 21 | | |
| | | Total | 2.37 | 1.12 | 87 | | |
| | Female | Yes | 2.28 | 1.11 | 36 | | |
| | | No | 2.96 | 1.61 | 47 | | |
| | | Total | 2.66 | 1.45 | 83 | | |
| | Non-binary | Yes | 2.33 | 1.15 | 3 | | |
| | | Total | 2.33 | 1.15 | 3 | | |
| | Other | Yes | 5.00 | -- | 1 | | |
| | | Total | 5.00 | -- | 1 | | |
| | Total | Yes | 2.36 | 1.18 | 106 | | |
| | | No | 2.78 | 1.44 | 68 | | |
| | | Total | 2.52 | 1.30 | 174 | | |
| Q10. The learning lessons or goals of each challenge are defined in enough detail to play the game. | Male | Yes | 3.30 | 1.51 | 66 | 6.986 | <0.001 |
| | | No | 3.29 | 1.23 | 21 | | |
| | | Total | 3.30 | 1.44 | 87 | | |
| | Female | Yes | 4.00 | 1.90 | 36 | | |
| | | No | 4.85 | 1.38 | 47 | | |
| | | Total | 4.48 | 1.67 | 83 | | |
| | Non-binary | Yes | 5.33 | 0.58 | 3 | | |
| | | Total | 5.33 | 0.58 | 3 | | |
| | Other | Yes | 5.00 | -- | 1 | | |
| | | Total | 5.00 | -- | 1 | | |
| | Total | Yes | 3.61 | 1.68 | 106 | | |
| | | No | 4.37 | 1.52 | 68 | | |
| | | Total | 3.91 | 1.66 | 174 | | |
| Q11. I got frustrated playing this game. | Male | Yes | 3.94 | 1.67 | 66 | 3.696 | 0.003 |
| | | No | 3.90 | 1.55 | 21 | | |
| | | Total | 3.93 | 1.63 | 87 | | |
| | Female | Yes | 3.17 | 1.61 | 36 | | |
| | | No | 2.77 | 1.48 | 47 | | |
| | | Total | 2.94 | 1.54 | 83 | | |
| | Non-binary | Yes | 3.67 | 2.08 | 3 | | |
| | | Total | 3.67 | 2.08 | 3 | | |
| | Other | Yes | 2.00 | -- | 1 | | |
| | | Total | 2.00 | -- | 1 | | |
| | Total | Yes | 3.65 | 1.68 | 106 | | |
| | | No | 3.12 | 1.58 | 68 | | |
| | | Total | 3.44 | 1.66 | 174 | | |
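Each "Total" row in these tables can be rebuilt from the rows above it: the combined mean is the N-weighted mean, and the combined variance adds the within-group and between-group sums of squares before dividing by N − 1. A minimal sketch with a hypothetical helper `combine_groups`, assuming the rounded Q1 values for male and female students in the computer-gaming table above; differences in the last reported digit are expected from rounding.

```python
import math
from typing import Optional, Sequence, Tuple

Summary = Tuple[int, float, Optional[float]]  # (n, mean, sample SD); SD is None when n == 1


def combine_groups(groups: Sequence[Summary]) -> Tuple[int, float, float]:
    """Merge per-group (n, mean, SD) summaries into the combined n, mean, and sample SD."""
    n_total = sum(n for n, _, _ in groups)
    mean = sum(n * m for n, m, _ in groups) / n_total
    # Total sum of squares = within-group part + between-group part.
    ss_total = sum((n - 1) * (sd or 0.0) ** 2 + n * (m - mean) ** 2 for n, m, sd in groups)
    return n_total, mean, math.sqrt(ss_total / (n_total - 1))


# Q1 (challenge level reached), split by computer gaming: (n, mean, SD) for Yes and No.
male_q1 = combine_groups([(66, 8.70, 2.86), (21, 7.76, 3.02)])
female_q1 = combine_groups([(36, 6.92, 2.96), (47, 5.83, 2.45)])

for label, (n, mean, sd) in (("Male", male_q1), ("Female", female_q1)):
    print(f"{label}: N = {n}, mean = {mean:.2f}, SD = {sd:.2f}")
```

Averaging the two subgroup SDs directly would understate the spread, because it ignores the between-group term that comes from the subgroup means differing.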

| Example Quotes to Question 12 from Women |
|---|
| Having an introductory tutorial that gives general explanations as to how to play the game and what each icon represents. An introduction would allow the gamer to have a better understanding about the game’s goal and rules. Many games that I have seen have this sort of interactive tutorial. Additionally, an explanation of how your structure is scored would also be helpful to allow the gamer to know what actions are “worth more points” and would therefore maximize the gamer’s score (while I was playing, I never got a reward greater than one nut, and I was not sure how to improve that). |
| Providing more incentive to get 3 bolts. A more guided tutorial or explanation would’ve been good as well. They just toss you into the game. |
| giving hints to explain how to get higher scores |
| I think having a brief demo at the beginning would be helpful. As I said earlier, I did not grow up playing video games so I don’t always intuitively know how the controls work. |
| Example Quotes to Question 12 from Men |
| I feel like playing 3D games when I was younger helped me a lot in visualizing 3D objects in Engineering, so perhaps making the game 3D. Also, the placement of the structure on the grid was very awkward and made it frustrating to build symmetrical structures. I may be wrong about this, but structures that earned more than one bolt seemed very impractical to build in real life. While saving on material and money is important, not every type of beam (length, thickness, etc.) is always available. |
| Make the game more calculation based. But then that would make the game boring. But I guess that what it takes to make a game that is informative and useful. |
| Making it impossible to complete levels with nonsense structures. Also, adding maybe a tutorial that explains how some engineering concepts (moments, tensile/compressive strength, buckling) are relevant to the game, or making the challenges in a way such that they teach these concepts, one at a time. |
| Having a small tutorial section on how to use all the tools and improving the method of placing objects. |
| Fully explain about the game before so people know not to spend most of their time trying to get more than one nut. |
| Example Quotes to Question 12 from Non-binary Students |
| explain the objective of the game within the game itself |
| Option to show the forces in the beams numerically |

| Example Quotes to Question 13 from Women |
|---|
| “I liked how the simulation could be slowed down to really give the gamer an opportunity to see where the weaknesses in their structure lie. This allowed me to correct the issues and construct a better truss.” |
| “I liked the fact that some of the bars were highlighted blue or red, which I assume meant that they were on tension or compression, and that should be included in the instructions.” |
| Example Quotes to Question 13 from Men |
| “With some more explanation in the game about the mathematical statics counterparts to the game experience, the game would provide knowledge which can be applied to classes.” |
| “I didn’t like it. At all. There is nothing about this game that was fun nor educational, any “engineering” aspects were not explained, yet a 5-year-old could probably figure it out by trial and error.” (Textbox Q13, men, Pos. 4) |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Cook-Chennault, K.; Villanueva Alarcón, I.; Jacob, G. Usefulness of Digital Serious Games in Engineering for Diverse Undergraduate Students. Educ. Sci. 2022, 12, 27. https://doi.org/10.3390/educsci12010027