The AI-Atlas: Didactics for Teaching AI and Machine Learning On-Site, Online, and Hybrid

Abstract

1. Analysis and Exploration

1.1. The Problem of Teaching Artificial Intelligence as a Foundational Subject

1.2. Existing AI Curricula and Their Relation to Our Didactic Concept

2. Design and Construction

2.1. The Atlas Metaphor

2.2. The Core Mentality: AI Professionals Are Explorers

2.3. Didactic Principles

2.3.1. Principle of “Doing It Yourself”

2.3.2. Principle of “Intrinsic Motivation”

2.3.3. Principle of “EEE” (Good Explanation, Enthusiasm, and Empathy)

2.4. The Aspects of Establishing AI Foundations

2.4.1. Canonization

2.4.2. Deconstruction

2.4.3. Cross-Linkage

2.5. The AI-Atlas in Practice: Suggested Didactic Settings to Combine It All

2.5.1. Basic Didactic Setting

2.5.2. Fostering Reflection

2.5.3. Encouraging Self-Responsibility and Motivation

2.5.4. Promoting Cooperative Competence Development

2.5.5. Activation of Students

2.5.6. Enabling Social Learning

2.5.7. Providing Open Educational Resources (OER) and Blended Learning

2.5.8. Creating Practical Career Relevance

3. Implementation and Analysis

3.1. Participants and Data Basis for Analysis

3.2. Going Online by Necessity

3.3. Qualitative Assessment

3.3.1. Dimensions “Canonization and Deconstruction”

“Sustainable technologies are taught; in the process you are brought down to earth.” (AI)

“[The] module gives a good overview of the overall topic.” (ML)

“I welcome that […] “where’s the intelligence?” is answered [in] each lecture.” (AI)

3.3.2. Dimensions “Motivation and Social Learning”

“The professors […] enthusiastically explained it very precisely. I also had the feeling that the fun of the topic seemed very important to them. It was also important for them that everyone understood.” (ML)

“The two lecturers are very motivated and they pass on their enthusiasm and experience in the respective field. I find the exercises and tools we use (Jupyter notebooks, scikit-learn, Orange) very useful and they complement the lessons well. I also appreciate that discussions among each other and in plenary are stimulated.” (ML)

“You can feel that the lecturer is convinced of the subject. He also often brings good examples to help the students on their way.” (AI)

“Very good commitment, super presentation style. Enthusiasm for the subject is obvious and motivates me a lot.” (AI)

3.3.3. Dimension “Activation”

“The labs support the learning process very much; similarly helpful are the exercises throughout the lectures.” (AI)

“Very handy are the labs where one implements hands-on what should be learned.” (AI)

“Good lecture-style presentation, active presence of the lecturers during the labs that motivates students to listen even on Friday afternoons.” (AI)

“Good, guided (tutorial) exercises that were very helpful, especially for less advanced students.” (ML)

“The lectures are very interactive.” (ML)

3.3.4. Dimension “Open Educational Resources”

“The videos on YouTube are ideal for repeating.” (ML)

“The recording of the lectures is very helpful. It gives the students the possibility to review parts of the lecture for exam preparation or if you haven’t understood everything during the lecture.” (ML)

3.3.5. Criticism: Dimensions “Self-Responsibility and Activation”

“More exercises during the lecture or in the lab sessions. The topics are not always easy, and small exercises help to learn them correctly.” (AI)

“[The] labs are unfortunately a bit too time-consuming.” (AI)

“The theory necessary for the labs was partly a bit postponed, … makes the beginning a bit difficult.” (AI)

“Maybe the lab sessions should not be so specific, but should cover a wider range of knowledge and not go into so much detail.” (AI)

“The way the lecturers address the subjects, in my opinion, is very theoretical. There are a lot of mathematical demonstrations that I consider to be out of the scope, and this time should be dedicated to make more examples of the subject. […] Also the course demands way more time than the one available by a full-time student.” (ML)

“Unfortunately, the material covered is just too much. […] you don’t really see the learning objectives for the exam. It’s good to also cover topics superficially and if you need them you can look them up more precisely. But in the lecture, it is not very clear which topics are such.” (ML)

“More exercises, clear knowledge points, mock exam.” (ML)

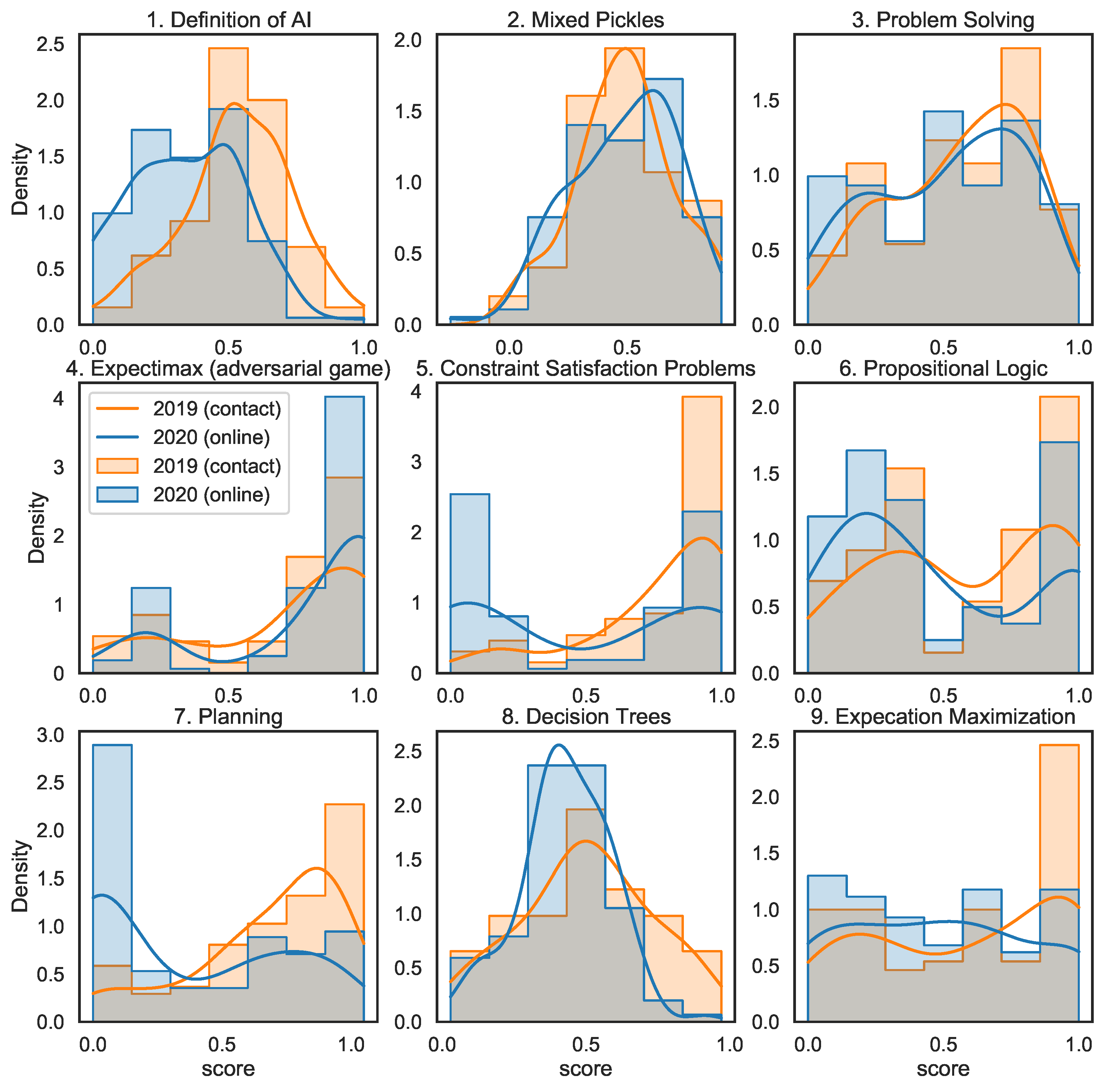

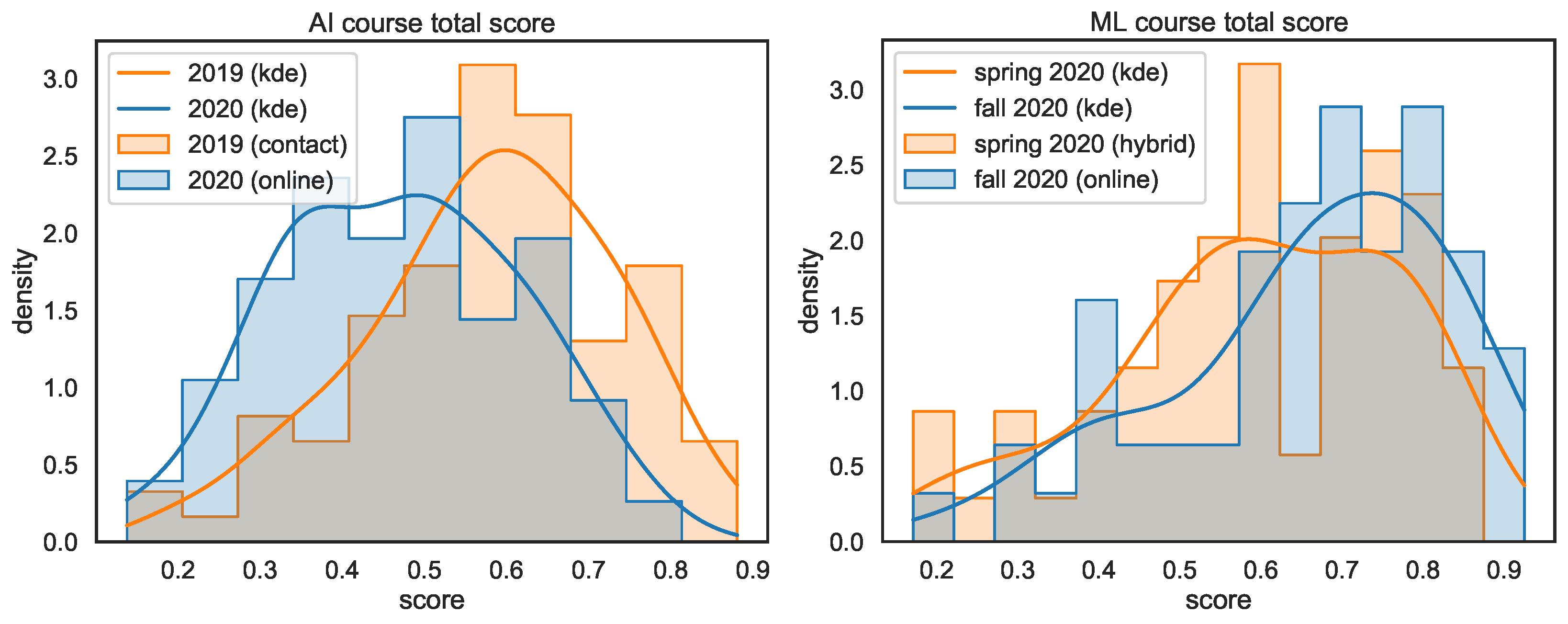

3.4. Quantitative Assessment

3.4.1. AI Course Fall Terms 2019 vs. 2020

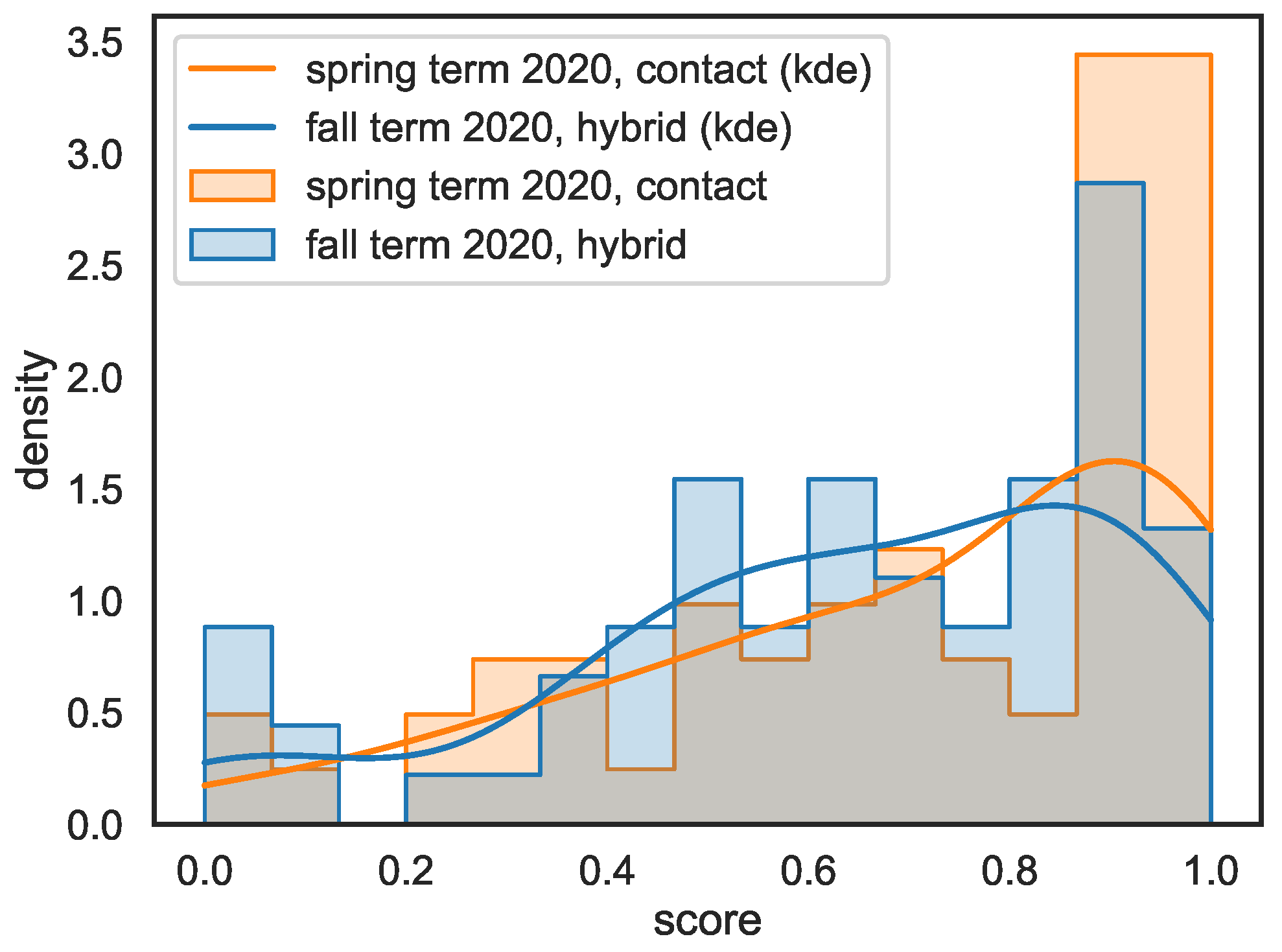

3.4.2. ML Course Spring Term 2020 vs. Fall Term 2020

4. Evaluation and Reflection

4.1. Tracing Weaker Quantitative Results in Online Teaching Mode

4.2. Tracing Worst Quantitative Results in Hybrid Teaching Mode

4.3. Moving towards a Didactic Concept for Flexible Teaching and Learning

4.3.1. Regarding “Reflection” and “Motivation”

4.3.2. Regarding “Cooperation” and “Social Learning”

4.3.3. Regarding “Activation of Students”

4.3.4. Regarding “Blended Learning”

4.4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Outline of the AI and ML Modules

Appendix A.1. The AI Course

| Profile | Fall 2019 (Absolute) | Fall 2019 (%) | Fall 2020 (Absolute) | Fall 2020 (%) |
|---|---|---|---|---|
| Full time | 38 | 39.6% | 55 | 48.7% |
| Part time | 58 | 60.4% | 58 | 51.3% |
| Total | 96 | 100% | 113 | 100% |
| Male | 86 | 89.6% | 104 | 92.0% |
| Female | 10 | 10.4% | 9 | 8.0% |

| Topic (Duration) | Key Question | Methods (Excerpt) | Practice |

|---|---|---|---|

| 1. Introduction to AI (2 weeks) | What is (artificial) intelligence? | The concept of a rational agent | AI for sci-fi readers: formulating one’s own opinion as a reply to a futuristic essay [6] |

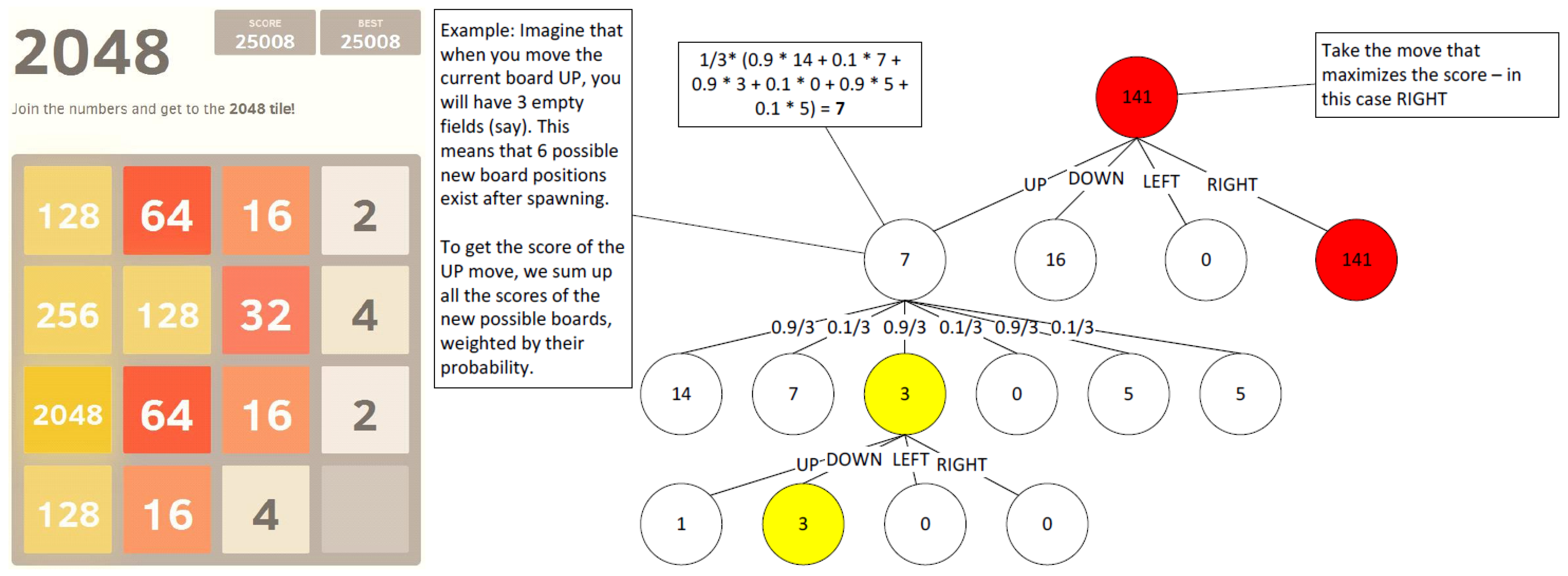

| 2. Search (3 weeks) | How to find suitable sequences of actions to reach a complex goal? | Uninformed and heuristic search, (Expecti-)Minimax, constraint satisfaction problem solvers | AI for the game “2048”: controlling a number puzzle game (cf. Appendix A) |

| 3. Knowledge Representation & Planning (3 weeks) | How to represent the world in a way that facilitates reasoning? | Propositional and first order logic, knowledge engineering and reasoning, Datalog for big data, PDDL | AI for a dragnet investigation: finding potential fraudsters using inference over communication meta data |

| 4. Supervised ML (3 weeks) | What is learning in machines? How to learn from examples? | From linear regression to decision trees and state of the art ensembles | AI for bargain hunters: data mining a dataset of used cars |

| 5. Selected chapters (2 weeks) | What is the current hype about? How does AI effect society? How could society react? | Primer on deep neural networks and generative adversarial training for image generation | Sci-fi revisited: formulating a reply to the blog post from the first week |
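Topic 2 pairs search methods with an agent that plays the puzzle game “2048”. The sketch below is a minimal depth-limited expectimax for 2048 and rests on a few assumptions. It uses the standard 2048 rules: a move slides all tiles, each tile merges at most once per move, and a 2 then appears in a random empty cell with probability 0.9, otherwise a 4. Its `heuristic` function is hypothetical and simply counts empty cells. The sketch shows how the MIN layer of minimax becomes a CHANCE layer that averages over random outcomes. It is not the lab's reference solution, and all function names are illustrative.

```python
from typing import List, Optional, Tuple

Board = Tuple[Tuple[int, ...], ...]  # 4x4 grid; 0 marks an empty cell
DIRECTIONS = ("left", "right", "up", "down")


def merge_row_left(row: Tuple[int, ...]) -> Tuple[int, ...]:
    """Slide a row to the left; each tile merges at most once per move."""
    tiles = [v for v in row if v != 0]
    merged: List[int] = []
    i = 0
    while i < len(tiles):
        if i + 1 < len(tiles) and tiles[i] == tiles[i + 1]:
            merged.append(2 * tiles[i])
            i += 2
        else:
            merged.append(tiles[i])
            i += 1
    return tuple(merged + [0] * (len(row) - len(merged)))


def transpose(b: Board) -> Board:
    return tuple(zip(*b))


def move(b: Board, direction: str) -> Board:
    """Return the board after sliding all tiles in one direction."""
    if direction == "left":
        return tuple(merge_row_left(r) for r in b)
    if direction == "right":
        return tuple(merge_row_left(r[::-1])[::-1] for r in b)
    if direction == "up":
        return transpose(move(transpose(b), "left"))
    if direction == "down":
        return transpose(move(transpose(b), "right"))
    raise ValueError(f"unknown direction: {direction}")


def empty_cells(b: Board) -> List[Tuple[int, int]]:
    return [(r, c) for r, row in enumerate(b) for c, v in enumerate(row) if v == 0]


def place(b: Board, r: int, c: int, value: int) -> Board:
    rows = [list(row) for row in b]
    rows[r][c] = value
    return tuple(tuple(row) for row in rows)


def heuristic(b: Board) -> float:
    # Hypothetical evaluation: more empty cells means more room to maneuver.
    return float(len(empty_cells(b)))


def expectimax(b: Board, depth: int, player_turn: bool) -> float:
    if depth == 0:
        return heuristic(b)
    if player_turn:
        # MAX node: the player chooses the best move that changes the board.
        children = [move(b, d) for d in DIRECTIONS]
        values = [expectimax(nb, depth - 1, False) for nb in children if nb != b]
        return max(values) if values else heuristic(b)  # no legal move: game over
    # CHANCE node (replaces minimax's MIN node): average over random spawns.
    cells = empty_cells(b)
    if not cells:
        return heuristic(b)
    total = 0.0
    for r, c in cells:
        for value, prob in ((2, 0.9), (4, 0.1)):
            total += prob * expectimax(place(b, r, c, value), depth - 1, True)
    return total / len(cells)  # each empty cell is equally likely


def best_move(b: Board, depth: int = 3) -> Optional[str]:
    """Pick the legal move with the highest expected value, or None if stuck."""
    best, best_value = None, float("-inf")
    for d in DIRECTIONS:
        nb = move(b, d)
        if nb == b:
            continue  # illegal: the move changes nothing
        value = expectimax(nb, depth - 1, False)
        if value > best_value:
            best, best_value = d, value
    return best


board: Board = ((2, 2, 0, 0), (0, 0, 0, 0), (0, 0, 0, 0), (0, 0, 0, 0))
print(move(board, "left")[0])  # (4, 0, 0, 0)
print(best_move(board))
```

Each chance layer multiplies the branching factor by twice the number of empty cells, so deeper searches quickly become expensive. Practical agents usually add pruning or caching, which this sketch omits for clarity.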

Appendix A.2. The ML Course

| Profile | Spring 2019 (Absolute) | Spring 2019 (%) | Spring 2020 (Absolute) | Spring 2020 (%) | Fall 2020 (Absolute) | Fall 2020 (%) |
|---|---|---|---|---|---|---|
| Business Engineering | 2 | 3.1% | 6 | 7.6% | 8 | 10.1% |
| Data Science | | | | | 31 | 39.2% |
| Computer Science | 43 | 67.2% | 48 | 60.8% | 9 | 11.4% |
| Electrical Engineering | 3 | 4.7% | 2 | 2.5% | 10 | 12.7% |
| Environmental Science | | | | | 3 | 3.8% |
| Industrial Technologies | 16 | 25.0% | 22 | 27.8% | 9 | 11.4% |
| Mechatronics | | | | | 2 | 2.5% |
| Medical Engineering | | | | | 3 | 3.8% |
| Aviation | | | | | 3 | 3.8% |
| Geomatics | | | 1 | 1.3% | 1 | 1.3% |
| Total | 64 | 100% | 79 | 100% | 79 | 100% |
| Male | 58 | 90.6% | 74 | 93.7% | 68 | 86.1% |
| Female | 6 | 9.4% | 5 | 6.3% | 11 | 13.9% |

| Topic (Duration) | Key Concept | Cross-Cutting Concerns | Methods (Excerpt) |
|---|---|---|---|
| 1. Introduction (2 weeks) | Convergence for participants with different backgrounds | Hypothesis space search, inductive bias, computational learning theory, ML as representation-optimization-evaluation | No free lunch theorem, VC dimension; ML from scratch: implementing linear regression with gradient descent purely from formulae |
| 2. Supervised learning (7 weeks) | Learning from labeled data | Feature engineering, making the best of limited data, ensemble learning, debugging ML systems, bias-variance trade-off | Cross-validation, learning curve & ceiling analysis, SVMs, bagging, boosting, probabilistic graphical models |
| 3. Unsupervised learning (3 weeks) | Learning without labels | Probability and Bayesian learning | Dimensionality reduction, anomaly detection, k-means and expectation maximization |
| 4. Special chapters (2 weeks) | Reinforcement learning | Exploration-exploitation trade-off | AlphaZero |
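The introduction to the ML course asks students to implement linear regression with gradient descent “purely from formulae”. The following is a minimal NumPy sketch of that exercise. It assumes the usual mean squared error cost $J(\theta) = \frac{1}{2m}\lVert X\theta - y\rVert^2$ with gradient $\nabla J = \frac{1}{m}X^\top(X\theta - y)$. The learning rate, the iteration count and the synthetic data are illustrative choices, not taken from the course material.

```python
import numpy as np


def gradient_descent(X: np.ndarray, y: np.ndarray, lr: float = 0.1, n_iter: int = 1000) -> np.ndarray:
    """Fit theta for y ~ X_b @ theta by batch gradient descent on the MSE cost.

    X has shape (m, n); a column of ones is prepended for the intercept.
    Cost:     J(theta) = 1/(2m) * ||X_b @ theta - y||^2
    Gradient: dJ/dtheta = 1/m * X_b.T @ (X_b @ theta - y)
    """
    m = X.shape[0]
    X_b = np.hstack([np.ones((m, 1)), X])  # add intercept column
    theta = np.zeros(X_b.shape[1])
    for _ in range(n_iter):
        residuals = X_b @ theta - y        # prediction errors
        grad = X_b.T @ residuals / m       # gradient of the cost
        theta -= lr * grad                 # step against the gradient
    return theta


# Synthetic data (not from the course): y = 3 + 2x plus small Gaussian noise.
rng = np.random.default_rng(0)
X = rng.uniform(0, 1, size=(200, 1))
y = 3 + 2 * X[:, 0] + rng.normal(0, 0.05, size=200)

theta = gradient_descent(X, y, lr=0.5, n_iter=5000)
print(theta)  # approximately [3, 2]

# Cross-check against the closed-form least-squares solution.
X_b = np.hstack([np.ones((200, 1)), X])
theta_ls, *_ = np.linalg.lstsq(X_b, y, rcond=None)
print(np.allclose(theta, theta_ls, atol=1e-6))
```

Comparing the result with `np.linalg.lstsq` is one way to let students verify their own derivation. Once the gradient is correct, both solutions should agree to numerical precision.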

Appendix B. Content Example: AI Model Assignment

Appendix B.1. Summary, Topics, and Audience

Appendix B.2. Strengths, Weaknesses, and Difficulty

Appendix B.3. Dependencies and Variants

References

1. Skog, D.A.; Wimelius, H.; Sandberg, J. Digital disruption. Bus. Inf. Syst. Eng. 2018, 60, 431–437.

2. Aoun, J.E. Robot-Proof: Higher Education in the Age of Artificial Intelligence; MIT Press: Cambridge, MA, USA, 2017.

3. Stadelmann, T. Wie maschinelles Lernen den Markt verändert. In Digitalisierung: Datenhype mit Werteverlust? Ethische Perspektiven für Eine Schlüsseltechnologie; SCM Hänssler: Holzgerlingen, Germany, 2019; pp. 67–79.

4. Perrault, R.; Shoham, Y.; Brynjolfsson, E.; Clark, J.; Etchemendy, J.; Grosz, B.; Lyons, T.; Manyika, J.; Mishra, S.; Niebles, J.C. The AI Index 2019 Annual Report; AI Index Steering Committee, Human-Centered AI Institute, Stanford University: Stanford, CA, USA, 2019.

5. Stadelmann, T.; Braschler, M.; Stockinger, K. Introduction to applied data science. In Applied Data Science; Springer: Cham, Switzerland, 2019; pp. 3–16.

6. Urban, T. The AI Revolution: The Road to Superintelligence. 2015. Available online: https://waitbutwhy.com/2015/01/artificial-intelligence-revolution-1.html (accessed on 23 June 2021).

7. Luger, G.F. Artificial Intelligence: Structures and Strategies for Complex Problem Solving, 6th ed.; Pearson Education: Boston, MA, USA, 2009.

8. Wikipedia Contributors. Informatik—Wikipedia, The Free Encyclopedia. 2021. Available online: https://de.wikipedia.org/wiki/Informatik#Disziplinen_der_Informatik (accessed on 27 March 2021).

9. Dessimoz, J.D.; Köhler, J.; Stadelmann, T. AI in Switzerland. AI Mag. 2015, 36, 102–105.

10. Stadelmann, T.; Stockinger, K.; Bürki, G.H.; Braschler, M. Data scientists. In Applied Data Science; Springer: Cham, Switzerland, 2019; pp. 31–45.

11. Parsons, S.; Sklar, E. Teaching AI using LEGO mindstorms. In Proceedings of the AAAI Spring Symposium, Palo Alto, CA, USA, 22–24 March 2004.

12. Goel, A.K.; Joyner, D.A. Using AI to teach AI: Lessons from an online AI class. AI Mag. 2017, 38, 48–59.

13. Huang, R.; Spector, J.M.; Yang, J. Design-based research. In Educational Technology; Springer: Singapore, 2019; pp. 179–188.

14. Williams, R.; Park, H.W.; Oh, L.; Breazeal, C. Popbots: Designing an artificial intelligence curriculum for early childhood education. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 9729–9736.

15. Stadelmann, T. ATLAS—Analoge Karten für die Digitale Welt der Künstlichen Intelligenz. 2019. Available online: https://stdm.github.io/ATLAS/ (accessed on 3 June 2021).

16. Stadelmann, T.; Würsch, C. Maps for an Uncertain Future: Teaching AI and Machine Learning Using the ATLAS Concept; Technical Report; ZHAW Zürcher Hochschule für Angewandte Wissenschaften: Winterthur, Switzerland, 2020.

17. Siegel, E.V. Why do fools fall into infinite loops: Singing to your computer science class. ACM SIGCSE Bull. 1999, 31, 167–170.

18. Eaton, E. Teaching integrated AI through interdisciplinary project-driven courses. AI Mag. 2017, 38, 13–21.

19. Schreiber, B.; Dougherty, J.P. Embedding Algorithm Pseudocode in Lyrics to Facilitate Recall and Promote Learning. J. Comput. Sci. Coll. 2017, 32, 20–27.

20. Chiu, T.K.; Chai, C.S. Sustainable Curriculum Planning for Artificial Intelligence Education: A Self-Determination Theory Perspective. Sustainability 2020, 12, 5568.

21. Touretzky, D.; Gardner-McCune, C.; Martin, F.; Seehorn, D. Envisioning AI for K-12: What should every child know about AI? In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 9795–9799.

22. Li, Y.; Wang, X.; Xin, D. An Inquiry into AI University Curriculum and Market Demand: Facts, Fits, and Future Trends. In Proceedings of the Computers and People Research Conference, Nashville, TN, USA, 20–22 June 2019; pp. 139–142.

23. Norvig, P. 1525 Schools Worldwide That Have Adopted AIMA. 2021. Available online: http://aima.cs.berkeley.edu/adoptions.html (accessed on 27 March 2021).

24. Mercator, G. Atlas sive Cosmographicae Meditationes de Fabrica Mundi et Fabricati Figura; Rumold Mercator: Duisburg, Germany, 1595.

25. Schneider, U.; Brakensiek, S. (Eds.) Gerhard Mercator: Wissenschaft und Wissenstransfer; WBG: Darmstadt, Germany, 2015.

26. Ford, K.M.; Hayes, P.J.; Glymour, C.; Allen, J. Cognitive orthoses: Toward human-centered AI. AI Mag. 2015, 36, 5–8.

27. Nilsson, N.J. The Quest for Artificial Intelligence; Cambridge University Press: Cambridge, UK, 2009.

28. Morrell, M.; Capparell, S. Shackleton’s Way: Leadership Lessons from the Great Antarctic explorer; Penguin Press: New York, NY, USA, 2001.

29. Gerstenmaier, J.; Mandl, H. Wissenserwerb unter konstruktivistischer Perspektive. Z. für Pädagogik 1995, 41, 867–888.

30. Ryan, R.; Deci, E. Self-determination theory and the facilitation of intrinsic motivation, social development, and well-being. Am. Psychol. 2000, 55, 68–78.

31. Winteler, A. Lehrende an Hochschulen. In Lehrbuch Pädagogische Psychologie; Beltz Psychologie Verlags Union: Weinheim, Germany, 2006; pp. 334–347.

32. Helmke, A.; Schrader, F.W. Hochschuldidaktik. In Handwörterbuch Pädagogische Psychologie; Beltz Psychologische Verlags Union: Weinheim, Germany, 2010; pp. 273–279.

33. Russell, S.; Norvig, P. Artificial Intelligence: A Modern Approach, 3rd ed.; Pearson Education, Inc.: New York, NJ, USA, 2010.

34. Mitchell, T.M. Machine Learning; McGraw-Hill: New York, NY, USA, 1997.

35. Murphy, K.P. Machine Learning: A Probabilistic Perspective; MIT Press: Cambridge, MA, USA, 2012.

36. Domingos, P. A few useful things to know about machine learning. Commun. ACM 2012, 55, 78–87.

37. Bloom, B.S.; Engelhart, M.D.; Furst, E.J.; Hill, W.H.; Krathwohl, D.R. Taxonomy of Educational Objectives: The Classification of Educational Goals; Longmans, Green and Co. Ltd.: London, UK, 1956; Volume 1.

38. Wolpert, D.H.; Macready, W.G. No free lunch theorems for optimization. IEEE Trans. Evol. Comput. 1997, 1, 67–82.

39. Pressman, I.; Singmaster, D. “The jealous husbands” and “the missionaries and cannibals”. Math. Gaz. 1989, 73, 73–81.

40. Brockman, G.; Cheung, V.; Pettersson, L.; Schneider, J.; Schulman, J.; Tang, J.; Zaremba, W. OpenAI Gym. arXiv 2016, arXiv:1606.01540.

41. Tuomi, I. Open educational resources and the transformation of education. Eur. J. Educ. 2013, 48, 58–78.

42. Braschler, M.; Stadelmann, T.; Stockinger, K. Applied Data Science; Springer: Cham, Switzerland, 2019.

43. Brodie, M.L. On developing data science. In Applied Data Science; Springer: Cham, Switzerland, 2019; pp. 131–160.

44. Downing, K.F.; Holtz, J.K. A Didactic Model for the Development of Effective Online Science Courses. In Online Science Learning: Best Practices and Technologies; IGI Global: Hershey, PA, USA, 2008; pp. 291–337.

45. Hoffmann, R.L.; Dudjak, L.A. From onsite to online: Lessons learned from faculty pioneers. J. Prof. Nurs. 2012, 28, 255–258.

46. Torres, A.; Domańska-Glonek, E.; Dzikowski, W.; Korulczyk, J.; Torres, K. Transition to online is possible: Solution for simulation-based teaching during the COVID-19 pandemic. Med. Educ. 2020, 54, 858–859.

47. Cieliebak, M.; Frei, A.K. Influence of flipped classroom on technical skills and non-technical competences of IT students. In Proceedings of the 2016 IEEE Global Engineering Education Conference (EDUCON), Abu Dhabi, United Arab Emirates, 10–13 April 2016; pp. 1012–1016.

48. Haslum, P.; Lipovetzky, N.; Magazzeni, D.; Muise, C. An introduction to the planning domain definition language. Synth. Lect. Artif. Intell. Mach. Learn. 2019, 13, 1–187.

49. Dougiamas, M.; Taylor, P. Moodle: Using learning communities to create an open source course management system. In Proceedings of the World Conference on Educational Multimedia, Hypermedia and Telecommunications (EDMEDIA), Honolulu, HI, USA, 23–28 June 2003; pp. 171–178.

50. Kluyver, T.; Ragan-Kelley, B.; Pérez, F.; Granger, B.E.; Bussonnier, M.; Frederic, J.; Kelley, K.; Hamrick, J.B.; Grout, J.; Corlay, S.; et al. Jupyter Notebooks—A Publishing Format for Reproducible Computational Workflows. In Positioning and Power in Academic Publishing: Players, Agents and Agendas; IOS Press: Amsterdam, The Netherlands, 2016; pp. 87–90.

51. Bloom, B.S. Taxonomy of Educational Objectives; Pearson Education; Allyn and Bacon: Boston, MA, USA, 1984.

52. Bityukov, S.; Maksimushkina, A.; Smirnova, V. Comparison of histograms in physical research. Nucl. Energy Technol. 2016, 2, 108–113.

53. Vallely, K.; Gibson, P. Engaging students on their devices with Mentimeter. Compass J. Learn. Teach. 2018, 11.

54. Adams, A.L. Online Teaching Resources. Public Serv. Q. 2020, 16, 172–178.

55. Gruber, A. Employing innovative technologies to foster foreign language speaking practice. Acad. Lett. 2021, 2.

56. Ng, A. Machine Learning—Coursera (Stanford University). 2021. Available online: https://www.coursera.org/learn/machine-learning (accessed on 28 March 2021).

57. Wikipedia Contributors. 2048 (Video Game)—Wikipedia, the Free Encyclopedia. 2021. Available online: https://en.wikipedia.org/wiki/2048_(video_game) (accessed on 27 March 2021).

58. Sutton, R. The Bitter lesson—Incomplete Ideas Blog. 2019. Available online: http://www.incompleteideas.net/IncIdeas/BitterLesson.html (accessed on 3 June 2021).

| Topic | Semester | 1 (Strongly Disagree) | 2 (Disagree to Some Extent) | 3 (Agree to Some Extent) | 4 (Strongly Agree) | Score |
|---|---|---|---|---|---|---|
| Motivation | Spring 2019 | 1.7% | 0.0% | 18.8% | 79.5% | 3.8 |
| | Fall 2020 | 0.0% | 5.6% | 18.5% | 75.9% | 3.7 |
| Competence | Spring 2019 | 1.7% | 0.0% | 15.0% | 83.3% | 3.8 |
| | Fall 2020 | 0.0% | 1.9% | 13.0% | 85.1% | 3.8 |
| Teaching skills | Spring 2019 | 1.7% | 15.0% | 45.0% | 38.3% | 3.2 |
| | Fall 2020 | 3.7% | 14.8% | 38.9% | 42.6% | 3.2 |
| Clear structure | Spring 2019 | 3.4% | 13.4% | 48.3% | 34.9% | 3.1 |
| | Fall 2020 | 5.6% | 14.8% | 44.4% | 35.2% | 3.1 |

| Topic | Semester | 1 (Strongly Disagree) | 2 (Disagree to Some Extent) | 3 (Agree to Some Extent) | 4 (Strongly Agree) | Score |
|---|---|---|---|---|---|---|
| Activation | Fall 2019 | 0.0% | 20.8% | 29.2% | 50.0% | 3.3 |
| | Fall 2020 | – | – | – | – | – |
| Practical relevance | Fall 2019 | 0.0% | 16.7% | 25.0% | 58.3% | 3.4 |
| | Fall 2020 | – | – | – | – | – |

| Topic | Semester | 1 (Strongly Disagree) | 2 (Disagree to Some Extent) | 3 (Agree to Some Extent) | 4 (Strongly Agree) | Score |
|---|---|---|---|---|---|---|
| Labs | Spring 2019 | 7.1% | 21.4% | 50.0% | 21.5% | 2.9 |
| | Fall 2020 | 0.0% | 14.8% | 37.0% | 48.2% | 3.3 |
| Material | Spring 2019 | 0.0% | 21.4% | 50.0% | 28.6% | 3.1 |
| | Fall 2020 | 0.0% | 4.2% | 41.7% | 54.1% | 3.5 |
| Organization | Spring 2019 | 3.3% | 16.7% | 60.0% | 20.0% | 3.0 |
| | Fall 2020 | 0.0% | 25.9% | 40.7% | 33.4% | 3.1 |
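The rating tables do not define how the Score column is computed. One reading that reproduces every reported score after rounding to one decimal is the response-weighted mean on the four-point scale, Score = (1·p1 + 2·p2 + 3·p3 + 4·p4) / (p1 + p2 + p3 + p4), where pk is the percentage of responses at level k. The minimal sketch below checks this assumed formula against the values copied from the tables. The function name `likert_score` is hypothetical, and the Fall 2020 rows marked “–” are skipped because they contain no data.

```python
from typing import Sequence


def likert_score(shares_percent: Sequence[float]) -> float:
    """Assumed score: mean rating on a 1-4 scale, weighted by response shares (%)."""
    if len(shares_percent) != 4:
        raise ValueError("expected shares for levels 1..4")
    total = sum(shares_percent)  # normalize, since rounded shares may not sum to exactly 100
    return sum(level * share for level, share in zip(range(1, 5), shares_percent)) / total


# (topic, semester, shares for levels 1..4 in %, reported score), copied from the tables
rows = [
    ("Motivation", "Spring 2019", (1.7, 0.0, 18.8, 79.5), 3.8),
    ("Motivation", "Fall 2020", (0.0, 5.6, 18.5, 75.9), 3.7),
    ("Competence", "Spring 2019", (1.7, 0.0, 15.0, 83.3), 3.8),
    ("Competence", "Fall 2020", (0.0, 1.9, 13.0, 85.1), 3.8),
    ("Teaching skills", "Spring 2019", (1.7, 15.0, 45.0, 38.3), 3.2),
    ("Teaching skills", "Fall 2020", (3.7, 14.8, 38.9, 42.6), 3.2),
    ("Clear structure", "Spring 2019", (3.4, 13.4, 48.3, 34.9), 3.1),
    ("Clear structure", "Fall 2020", (5.6, 14.8, 44.4, 35.2), 3.1),
    ("Activation", "Fall 2019", (0.0, 20.8, 29.2, 50.0), 3.3),
    ("Practical relevance", "Fall 2019", (0.0, 16.7, 25.0, 58.3), 3.4),
    ("Labs", "Spring 2019", (7.1, 21.4, 50.0, 21.5), 2.9),
    ("Labs", "Fall 2020", (0.0, 14.8, 37.0, 48.2), 3.3),
    ("Material", "Spring 2019", (0.0, 21.4, 50.0, 28.6), 3.1),
    ("Material", "Fall 2020", (0.0, 4.2, 41.7, 54.1), 3.5),
    ("Organization", "Spring 2019", (3.3, 16.7, 60.0, 20.0), 3.0),
    ("Organization", "Fall 2020", (0.0, 25.9, 40.7, 33.4), 3.1),
]

for topic, semester, shares, reported in rows:
    score = likert_score(shares)
    print(f"{topic:<20} {semester:<12} {score:.3f} (reported {reported})")
```

Under this reading, the unrounded scores also show how close some comparisons are. For example, Teaching skills moves only from about 3.199 to 3.204 between the two terms.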


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Stadelmann, T.; Keuzenkamp, J.; Grabner, H.; Würsch, C. The AI-Atlas: Didactics for Teaching AI and Machine Learning On-Site, Online, and Hybrid. Educ. Sci. 2021, 11, 318. https://doi.org/10.3390/educsci11070318