Patterns of Scientific Reasoning Skills among Pre-Service Science Teachers: A Latent Class Analysis

Abstract

1. Introduction

2. Materials and Methods

2.1. Sample

2.2. Data Collection

2.3. Data Analysis: Latent Class Analysis

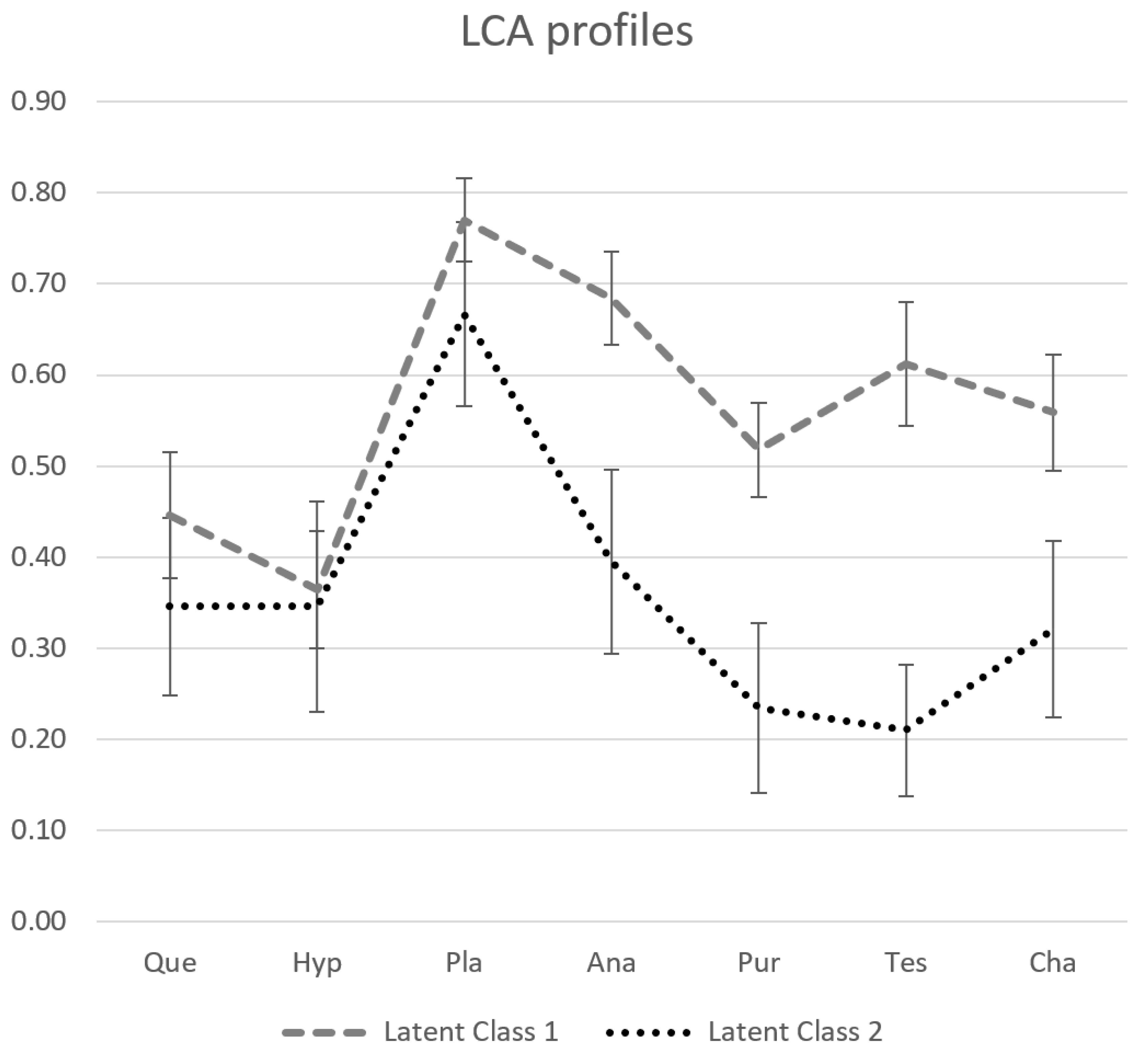

3. Results

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Sub-Competencies | Skills | Necessary Knowledge (PSTs Have to Know That ...) |
|---|---|---|
| Conducting scientific investigations | formulating questions | ... scientific questions are related to phenomena, empirically testable, intersubjectively comprehensible, unambiguous, basically answerable and are internally and externally consistent. |
| | generating hypotheses | ... hypotheses are empirically testable, intersubjectively comprehensible, clear, logically consistent and compatible with an underlying theory. |
| | planning investigations | ... causal relationships between independent and dependent variables based on a previous hypothesis can be examined, whereby the independent variable is manipulated during experiments and control variables are considered. ... correlative relationships between independent and dependent variables based on a previous hypothesis can be examined with scientific observations. |
| | analyzing data and drawing conclusions | ... data analysis allows an evidence-based interpretation and evaluation of the research question and hypothesis. |
| Using scientific models | judging the purpose of models | ... models can be used for hypotheses generation. |
| | testing models | ... models can be evaluated by testing model-based hypotheses. |
| | changing models | ... models are changed if model-based hypotheses are falsified. |

| LCA Model | BIC | ssaBIC | Extreme Values | Probability of Assignment |
|---|---|---|---|---|
| 2 latent classes | 2685 | 2549 | 0 | 0.93 to 0.98 |
| 3 latent classes | 2722 | 2517 | 9 | 0.92 to 0.98 |
| 4 latent classes | 2779 | 2504 | 11 | 0.91 to 0.97 |
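
The model comparison above can be read mechanically: for each fit index, the model with the lowest value is preferred. The following minimal sketch copies the values from the table and reports which model each criterion favors. The dictionary layout and the helper name `best_by` are illustrative assumptions, not part of the original analysis, and the sketch does not reproduce the authors' decision procedure.

```python
# Fit indices copied from the LCA model-comparison table.
# The dictionary keys are hypothetical names chosen for this sketch.
fit = {
    2: {"bic": 2685, "ssabic": 2549, "extreme_values": 0},
    3: {"bic": 2722, "ssabic": 2517, "extreme_values": 9},
    4: {"bic": 2779, "ssabic": 2504, "extreme_values": 11},
}


def best_by(index):
    """Return the number of latent classes with the lowest value of `index`."""
    return min(fit, key=lambda n_classes: fit[n_classes][index])


for index in ("bic", "ssabic", "extreme_values"):
    print(f"lowest {index}: {best_by(index)} latent classes")
```

With these values the two information criteria disagree: BIC is lowest for the two-class model, whereas ssaBIC is lowest for the four-class model. The two-class model is also the only one with no extreme values, and the group comparison table that follows reports exactly two latent classes.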

| Variable | LC Assignment | N | M | SD | t-Test |
|---|---|---|---|---|---|
| Age | 1 | 74 | 26.54 | 5.35 | t(99) = 0.591; p = 0.556 |
| | 2 | 27 | 27.30 | 6.55 | |
| Biology | 1 | 74 | 0.65 | 0.48 | t(99) = 2.918; p = 0.004 |
| | 2 | 27 | 0.33 | 0.48 | |
| Chemistry | 1 | 74 | 0.15 | 0.36 | t(37.07) = 1.821; p = 0.077 * |
| | 2 | 27 | 0.33 | 0.48 | |
| Physics | 1 | 74 | 0.14 | 0.34 | t(99) = 0.316; p = 0.753 |
| | 2 | 27 | 0.11 | 0.32 | |
| Previous degrees | 1 | 66 | 1.24 | 0.63 | t(78.93) = 2.072; p = 0.042 * |
| | 2 | 25 | 1.08 | 0.40 | |
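
The t statistics in the table can be approximately recomputed from the reported N, M and SD. The sketch below is an illustration only: the function names `pooled_t` and `welch_t` are hypothetical, and reading the starred rows with fractional degrees of freedom as Welch (unequal-variance) tests is an assumption, since the table footnote is not available here. Because the tabled means and standard deviations are rounded, the results match the reported values only to about one or two decimal places.

```python
import math


def pooled_t(m1, s1, n1, m2, s2, n2):
    """Student's t (equal variances assumed) from summary statistics.

    Returns the absolute t value and its degrees of freedom.
    """
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return abs(m1 - m2) / se, df


def welch_t(m1, s1, n1, m2, s2, n2):
    """Welch's t (unequal variances) with Welch-Satterthwaite df."""
    v1, v2 = s1**2 / n1, s2**2 / n2
    t = abs(m1 - m2) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1**2 / (n1 - 1) + v2**2 / (n2 - 1))
    return t, df


# Age row: latent class 1 vs. latent class 2 (values from the table).
t_age, df_age = pooled_t(26.54, 5.35, 74, 27.30, 6.55, 27)

# Chemistry row: assumed here to be a Welch test because of the fractional df.
t_chem, df_chem = welch_t(0.15, 0.36, 74, 0.33, 0.48, 27)

print(f"Age:       t({df_age}) = {t_age:.3f}")
print(f"Chemistry: t({df_chem:.2f}) = {t_chem:.3f}")
```

For the Age row this gives a t of about 0.59 with 99 degrees of freedom, in line with the table. For the Chemistry row the Welch degrees of freedom come out near 37, which is consistent with the reported t(37.07).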

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Khan, S.; Krell, M. Patterns of Scientific Reasoning Skills among Pre-Service Science Teachers: A Latent Class Analysis. Educ. Sci. 2021, 11, 647. https://doi.org/10.3390/educsci11100647
