The Effective Use of Information Technology and Interactive Activities to Improve Learner Engagement

Abstract

1. Introduction

- review a teaching practice to identify issues related to delivery and quality of teaching and learning associated with student engagement in computer science;

- take actions to address the issues identified as a result of the above;

- evaluate the influence of actions taken.

2. Background

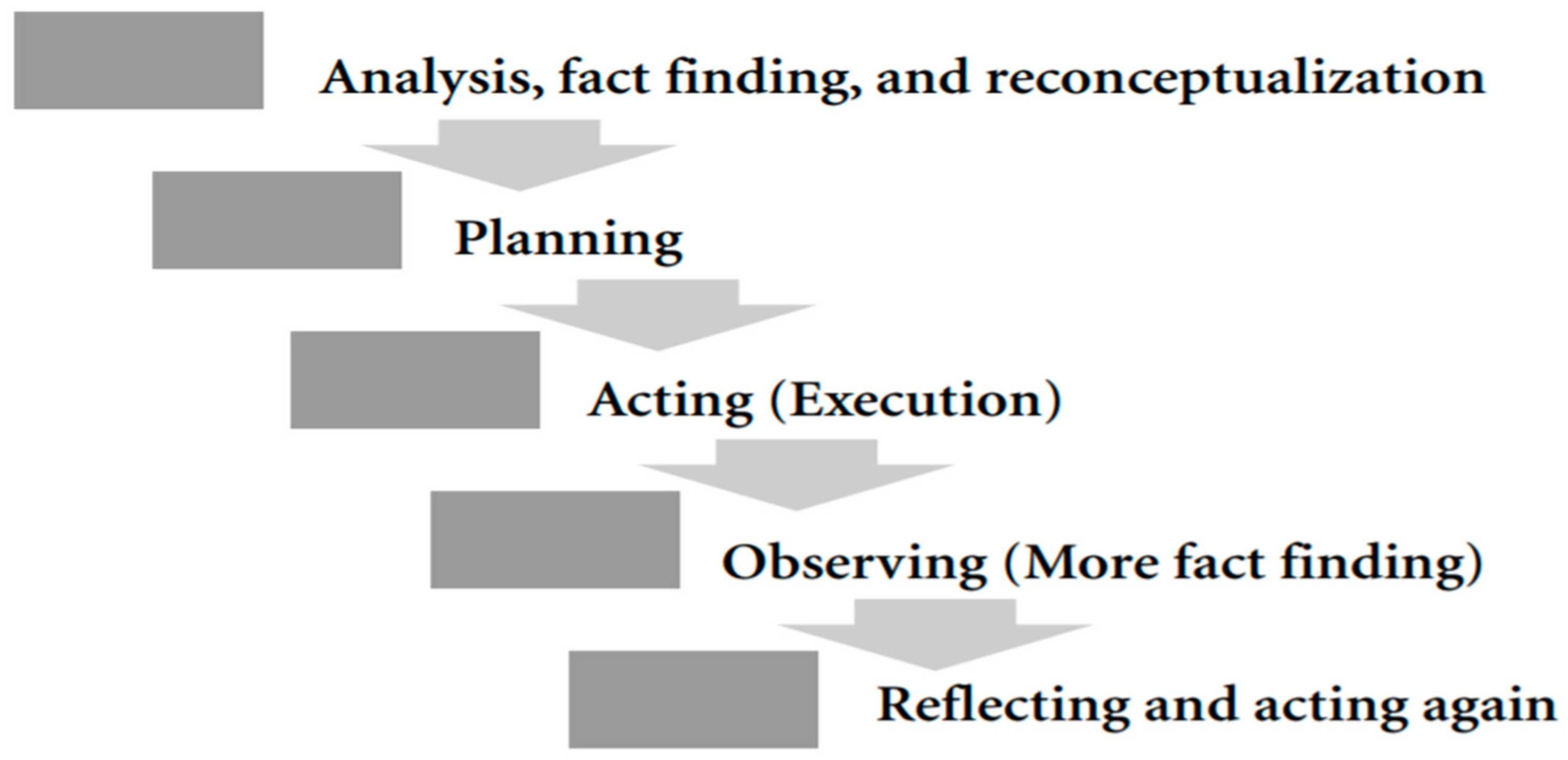

3. Research Methodology

3.1. Survey Method

3.2. Measurement Instrument

3.3. Research Approach

- Participant Recruitment: The study was conducted in a face-to-face, 12-week module using actual events, teaching, and learning activities. Table 2 shows the participants’ characteristics, summarised from classroom attendance. Student participation was voluntary, and feedback was kept anonymous. Participants’ identities were not recorded as part of the data collection. A total of 24 participants responded to the questionnaire and provided feedback at different stages of the study.

- Initial Survey (Pre-test): An initial fact-finding survey was conducted after week 2 using the SEEQ questionnaire; it is discussed in Section 4.1.

- Introduction of Interactive Activities (Intervention): Based on the initial findings, we implemented different approaches, including Slido, Plickers, group tasks, and card sorting activities, to improve learning; these are explained in Section 4.2.

- Repeat Survey (Post-test): A repeat survey was conducted 8 weeks after the initial survey with the same participants to evaluate the impact of the interventions. Participants also responded to an additional questionnaire on the specific use of the activities.

4. Results and Implementation

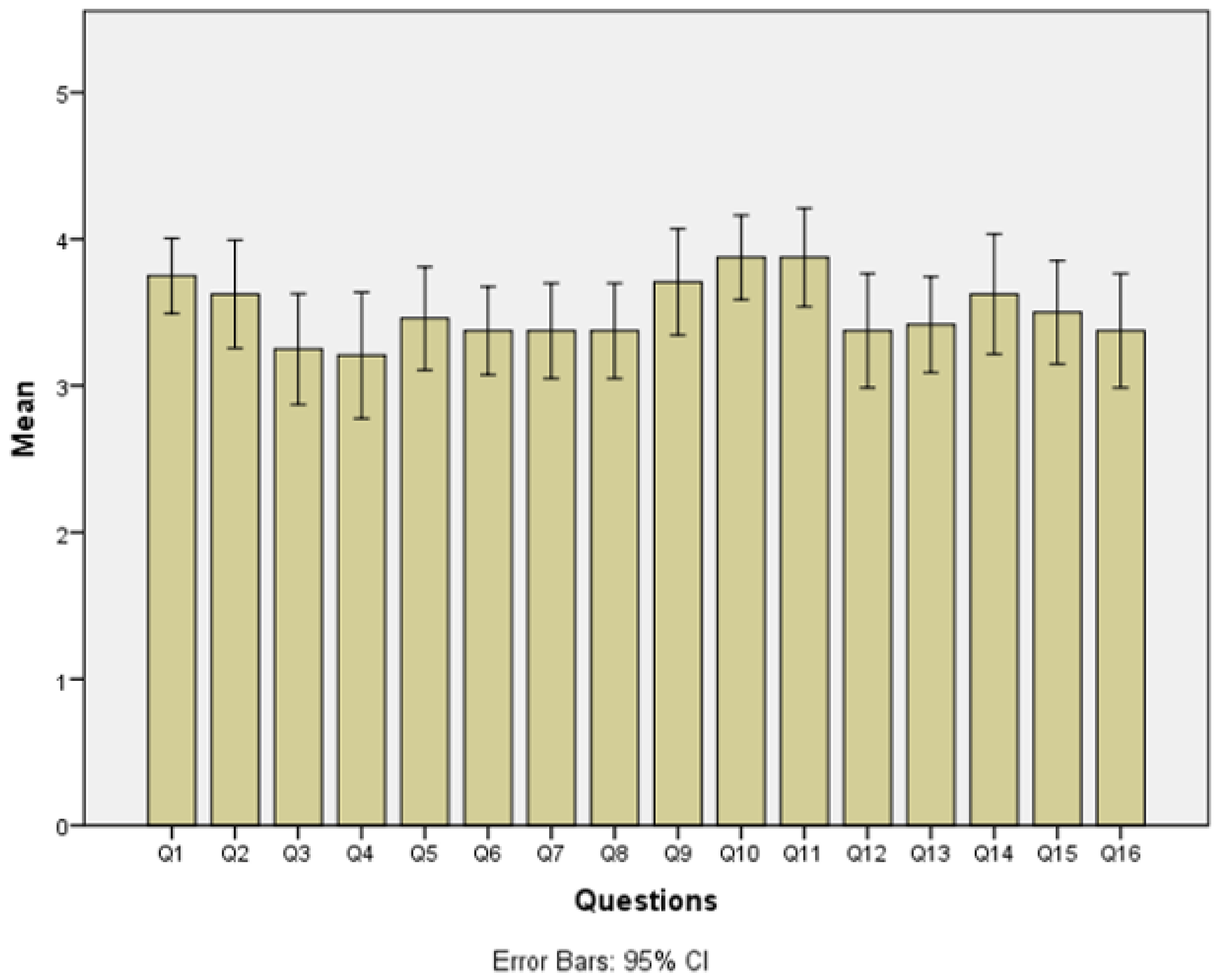

4.1. Initial Survey

4.2. Introduction of Interactive Activities

- Segmentation: As recommended by Gibbs and Jenkins [79,80], each teaching session was broken down into small segments in a PowerPoint presentation, as described below. The course content and presentation were shared with students on the Moodle Learning Management System (LMS) before the teaching session.

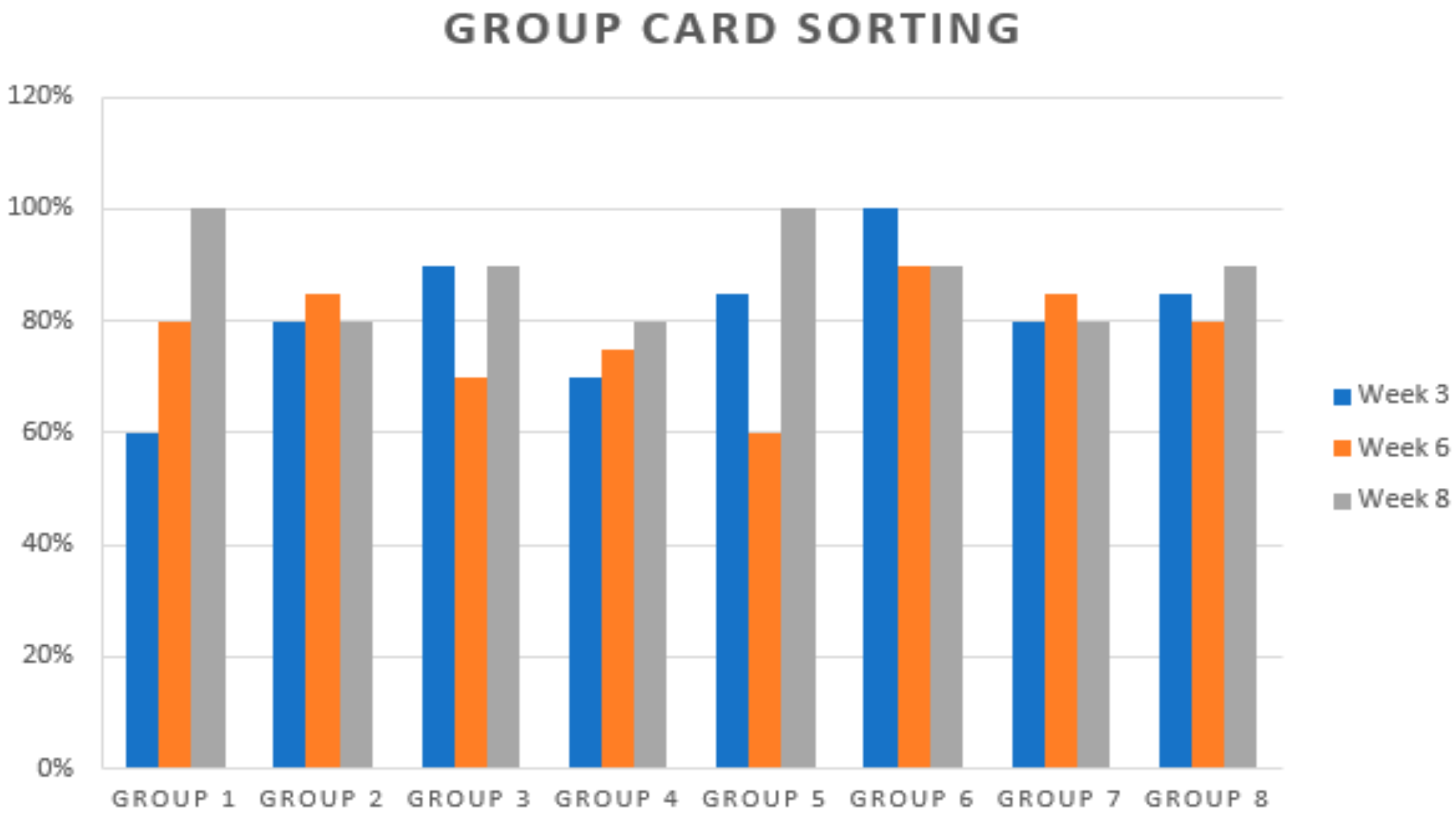

- Card Sorting Activity: To involve students in the learning process, questions and answers were printed on laminated paper cards for a card sorting and mapping activity. After the relevant material had been taught for 15–20 min, students were invited to sort or map answers to questions on the printed cards in groups of three; e.g., titles for primary key, foreign key, and super key were mixed with their definitions on cards, and students were asked to pair them up [81]. Some of these definitions related to previous teaching sessions, to help students recall earlier material. This was followed by further teaching material on a PowerPoint presentation in the next segment.

- Group Task: After teaching the next segment for 15–20 min, students were invited to perform a 15 min task on paper in groups. For example, in one session, students were asked to normalize data for a “student application form” to 1st, 2nd, and 3rd normal forms in groups. This was followed by further teaching material on a PowerPoint presentation in the next segment.

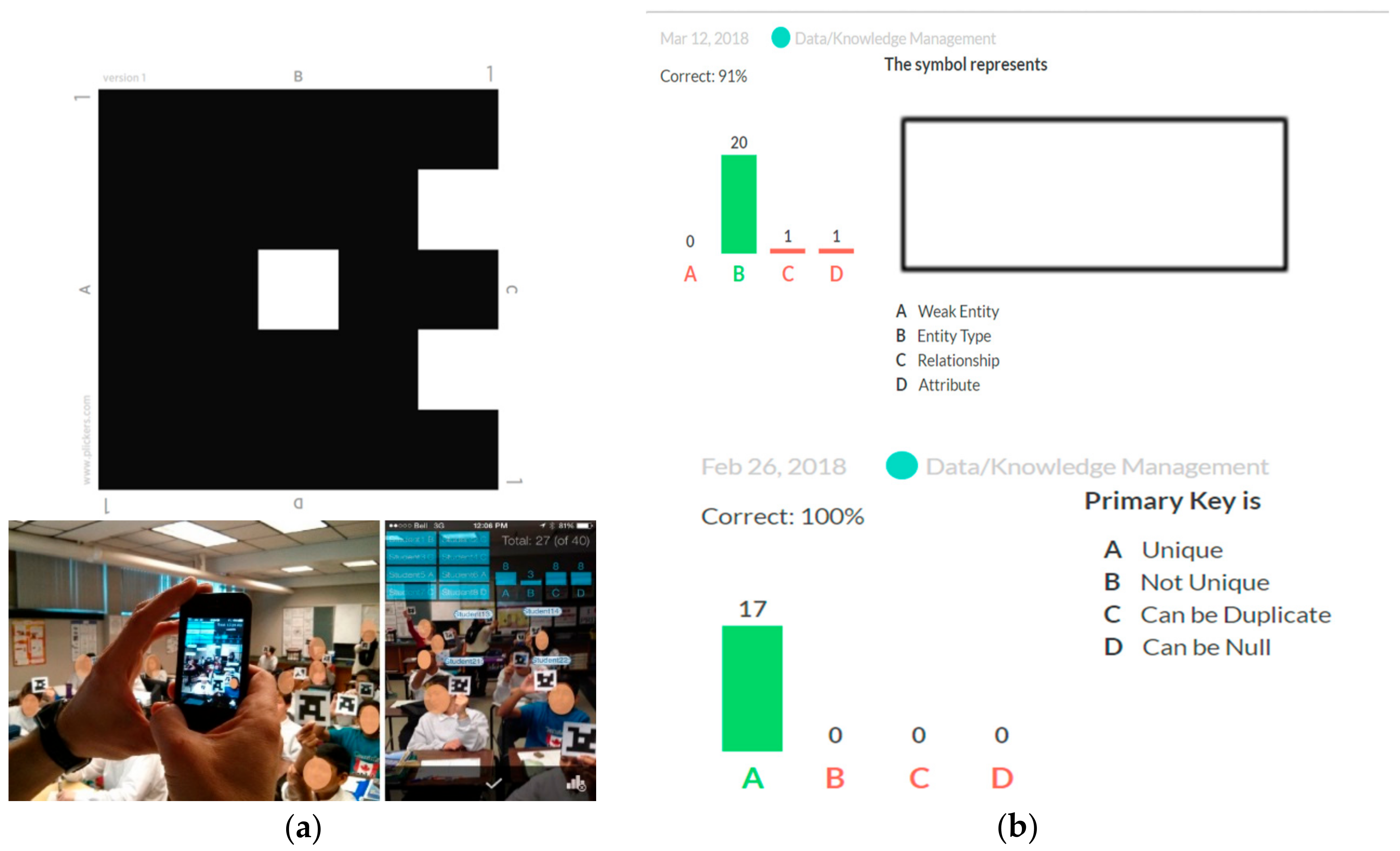
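The normalization exercise in the group task can be illustrated with a minimal Python sketch. The form fields, the values, and the helper `project` are all hypothetical assumptions made for illustration; they are not taken from the “student application form” used in the module.

```python
# Hypothetical flat "student application form" rows; all field names and
# values are illustrative assumptions, not data from the study.
flat_rows = [
    {"student_id": 1, "student_name": "A. Khan", "course_code": "CS101",
     "course_title": "Databases", "dept_id": "D1", "dept_name": "Computing"},
    {"student_id": 1, "student_name": "A. Khan", "course_code": "CS102",
     "course_title": "Networks", "dept_id": "D1", "dept_name": "Computing"},
    {"student_id": 2, "student_name": "B. Lee", "course_code": "CS101",
     "course_title": "Databases", "dept_id": "D1", "dept_name": "Computing"},
]


def project(rows, key, columns):
    """Return one row per distinct key value, keeping only `columns`."""
    table = {}
    for row in rows:
        table[tuple(row[k] for k in key)] = {c: row[c] for c in columns}
    return list(table.values())


# 1NF: every row holds atomic values; the composite key is
# (student_id, course_code).
# 2NF: attributes that depend on only part of the key move to their own tables.
students = project(flat_rows, ["student_id"], ["student_id", "student_name"])
courses = project(flat_rows, ["course_code"],
                  ["course_code", "course_title", "dept_id"])
applications = project(flat_rows, ["student_id", "course_code"],
                       ["student_id", "course_code"])
# 3NF: dept_name depends on dept_id rather than on course_code, so it is
# split out to remove the transitive dependency.
departments = project(flat_rows, ["dept_id"], ["dept_id", "dept_name"])
```

Each resulting table stores a fact once, which is the property students were asked to reach on paper.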

- Interactive Activity (Plickers): After teaching the next segment for 15–20 min, students were invited to participate in a multiple-choice question (MCQ) quiz using “Plickers” [82], an audience response system. Using this method:

- The lecturer (author) prepared questions before the lecture and uploaded them to the Plickers website.

- To collect answers, mobile-readable cards (see Figure 3a,b) were printed and shared with students.

- Students were invited to answer MCQs shared from the Plickers library. These were displayed on the multimedia projector.

- Students answered the questions by showing the printed card in a specific position. Each position represents a different answer choice, i.e., a, b, c, or d.

- The lecturer (author) scanned the responses from the display cards using the Plickers mobile app, and the results were instantly displayed on the multimedia projector. Each Plickers card is unique and represents an individual student.
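The response-collection steps above can be sketched in a few lines of Python. This is only an illustration of the idea that each card identifies one student and its position encodes the answer; the orientation names, the `ORIENTATION_TO_CHOICE` mapping, and the function `tally_responses` are assumptions and do not reproduce how the Plickers app actually decodes cards.

```python
from collections import Counter

# Hypothetical mapping from card position to answer choice; the real
# decoding is done by the Plickers mobile app and is not reproduced here.
ORIENTATION_TO_CHOICE = {"up": "a", "right": "b", "down": "c", "left": "d"}


def tally_responses(scans):
    """Count answers from (card_id, orientation) scans, one vote per card."""
    latest = {}
    for card_id, orientation in scans:
        # A card scanned more than once keeps only its most recent position.
        latest[card_id] = ORIENTATION_TO_CHOICE[orientation]
    return Counter(latest.values())


# Illustrative scans: card 2 is scanned twice, so only "down" is counted.
scans = [(1, "up"), (2, "right"), (3, "up"), (2, "down")]
result = tally_responses(scans)
```

Keying the tally on the card identifier is what allows one vote per student while the class only sees the aggregated result on the projector.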

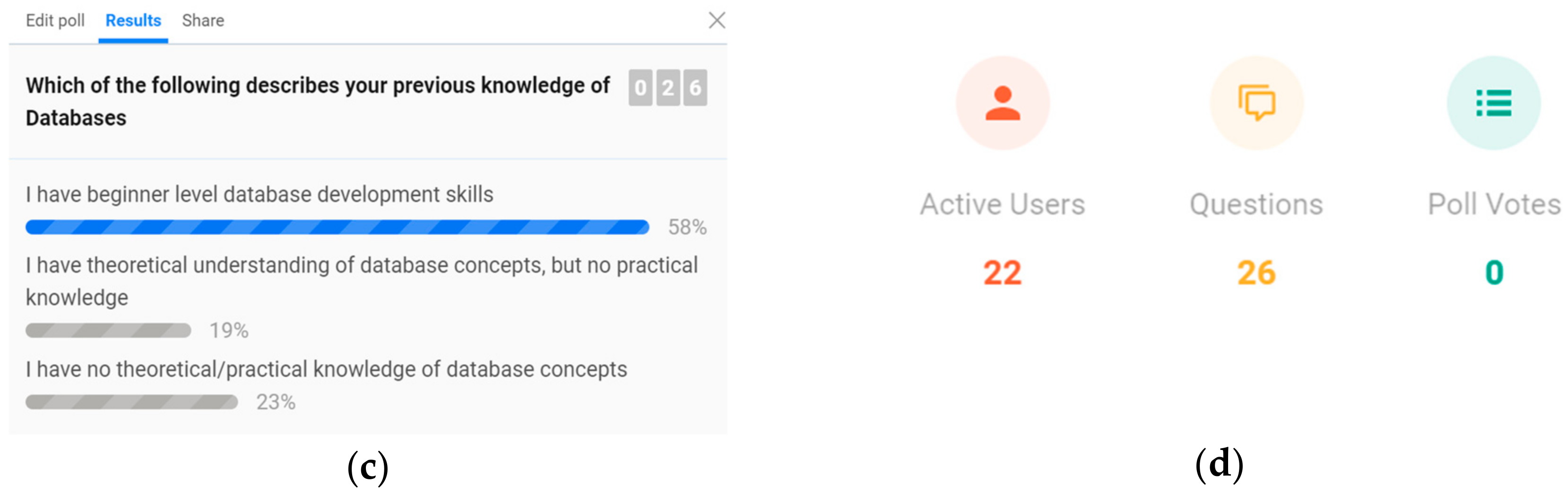

- Interactive Activity (Slido): At the end of the lecture, students were invited to answer MCQs on Slido using their mobile phones (see Figure 3c,d). Slido is a real-time interactive online application for polls, MCQs, and questions [83]. Using this method:

- Students answered MCQs on their phones, and the results were displayed instantly on the projector from the Slido website.

- Students were invited to ask questions regarding the lecture in the Slido “Questions & Answers” section.

- Students were able to rate questions posted by other students, which were displayed on the projector.

- Students could ask questions anonymously, to encourage individuals who would otherwise hesitate to ask questions face-to-face.

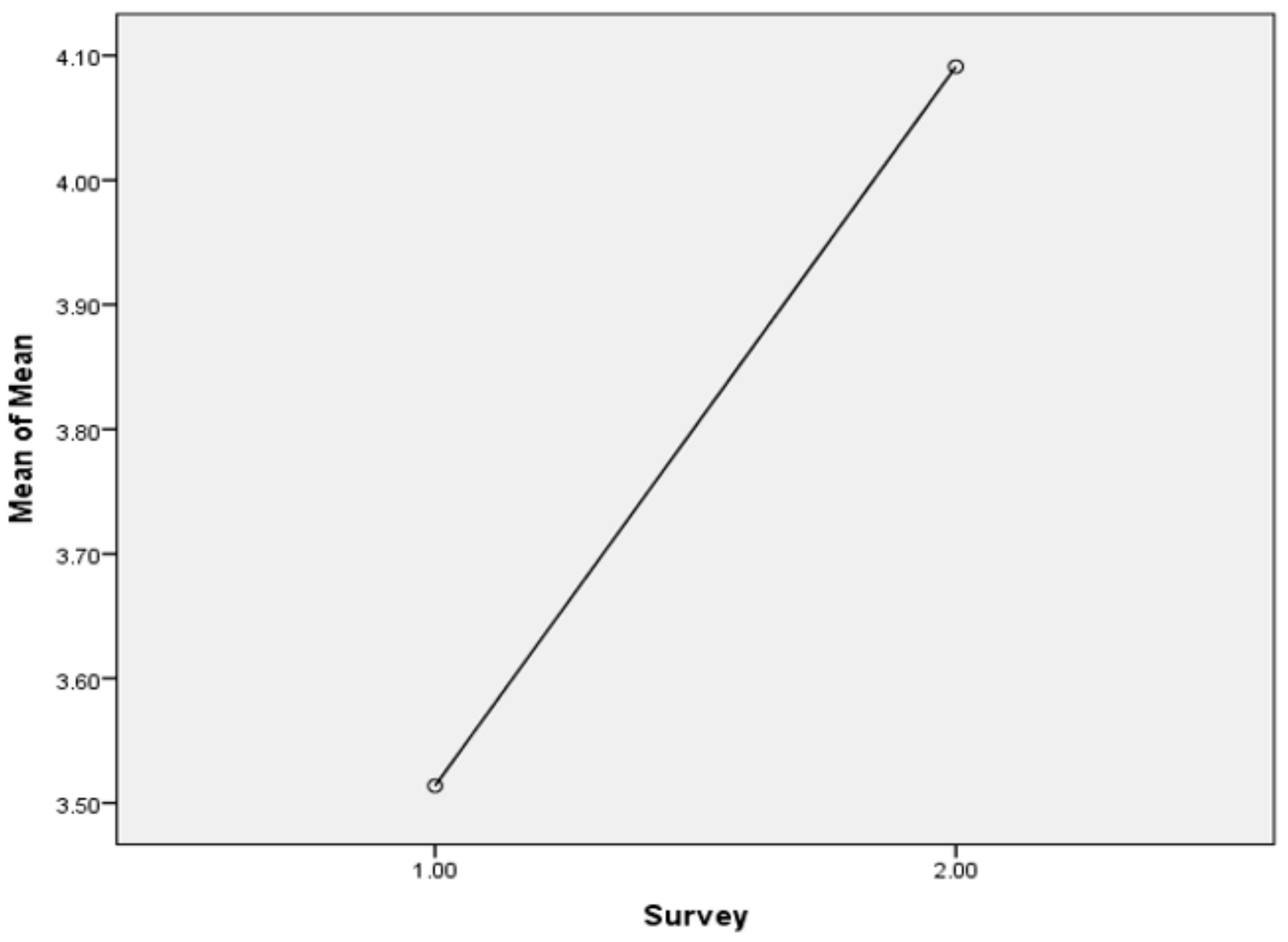

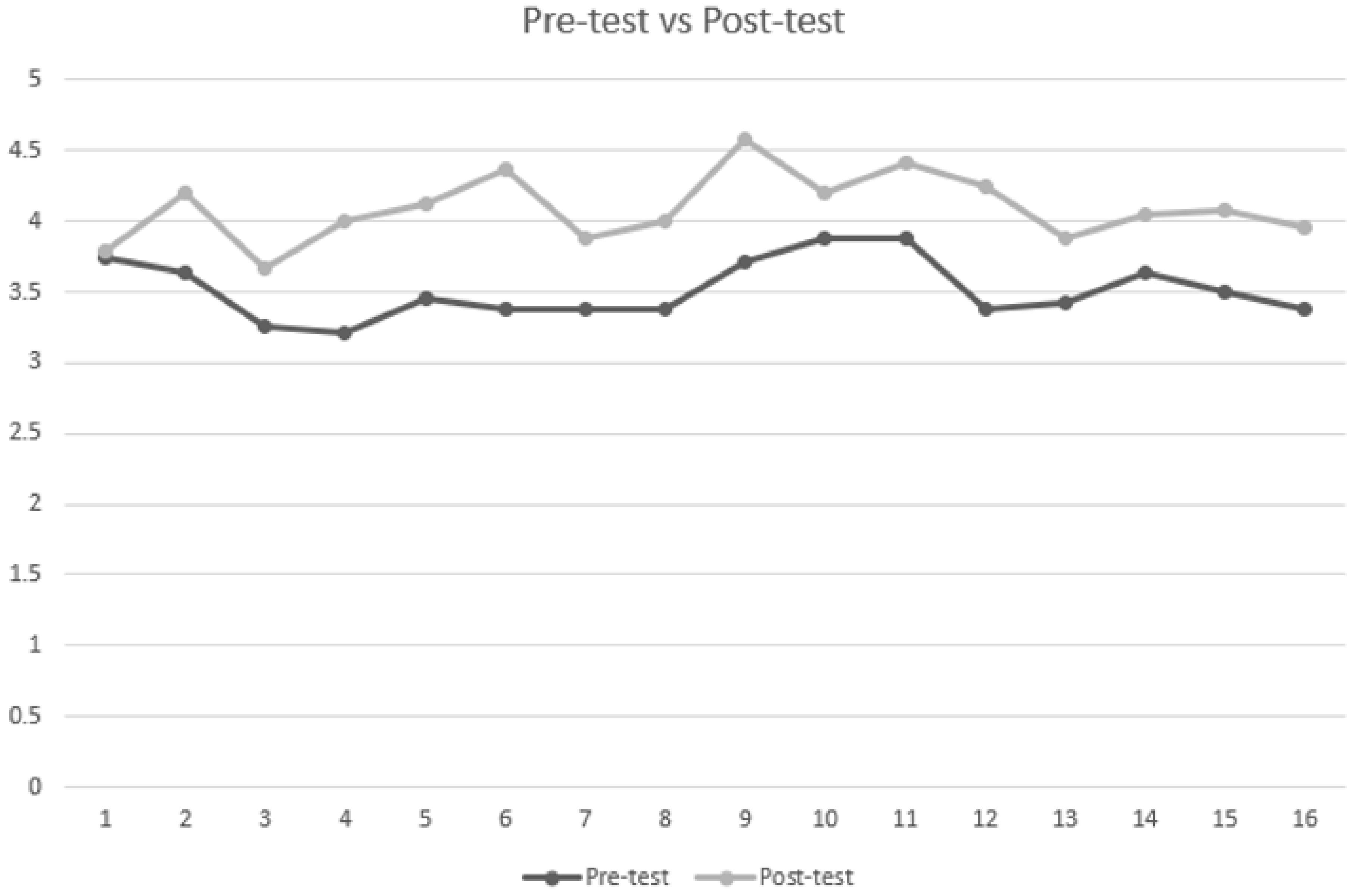

4.3. Repeat Survey

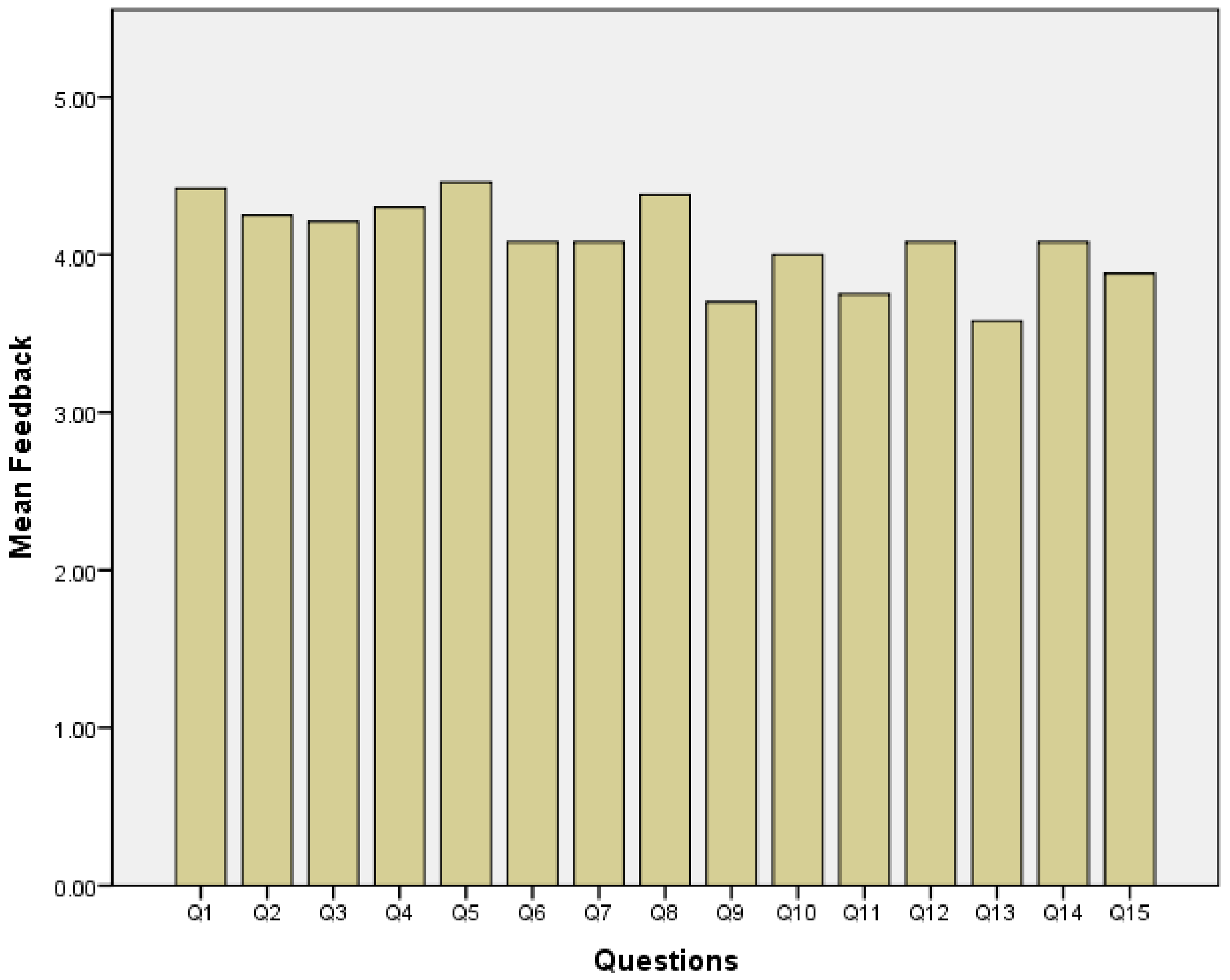

4.4. Learners’ Feedback on Interactive Activities

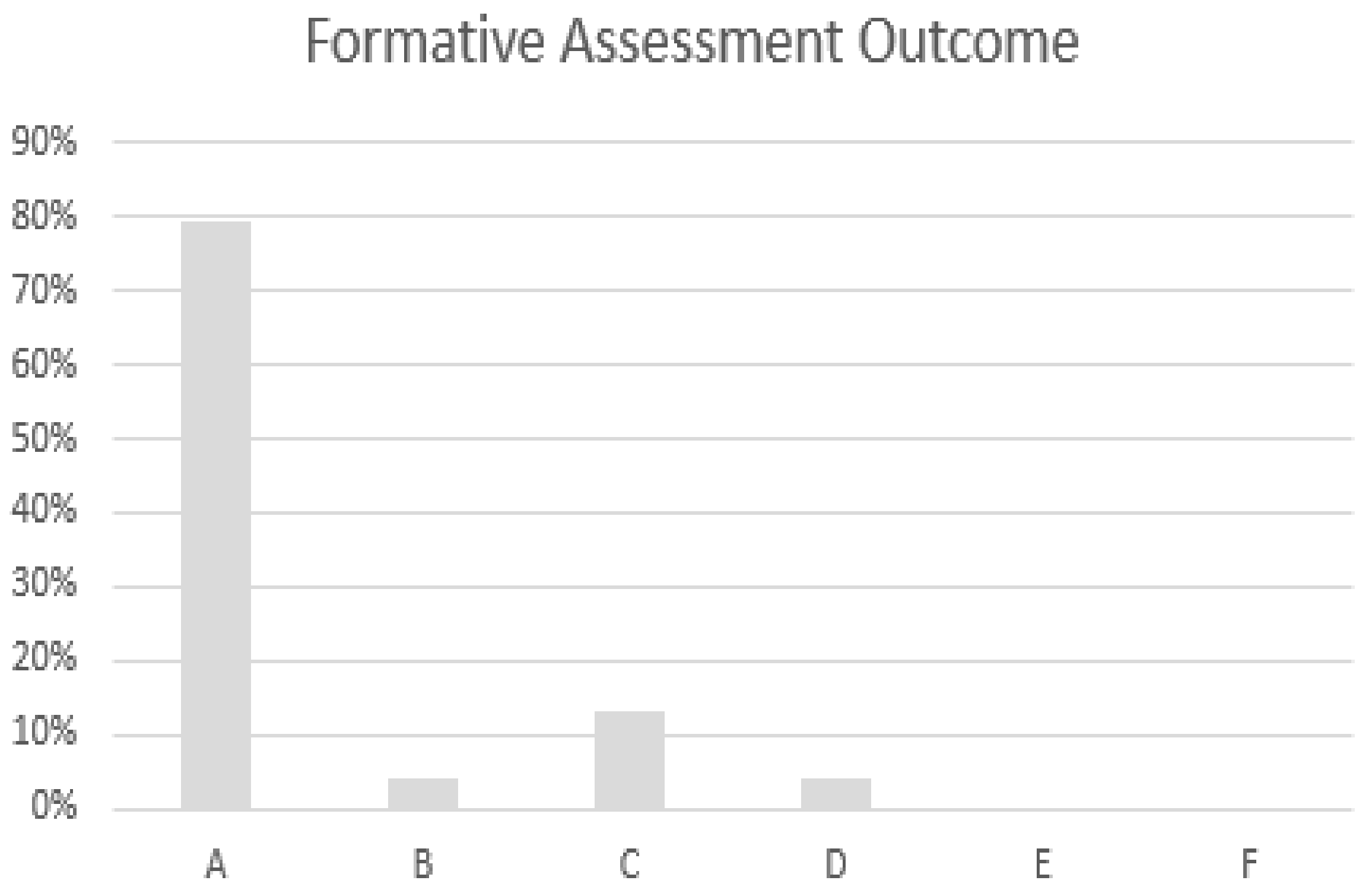

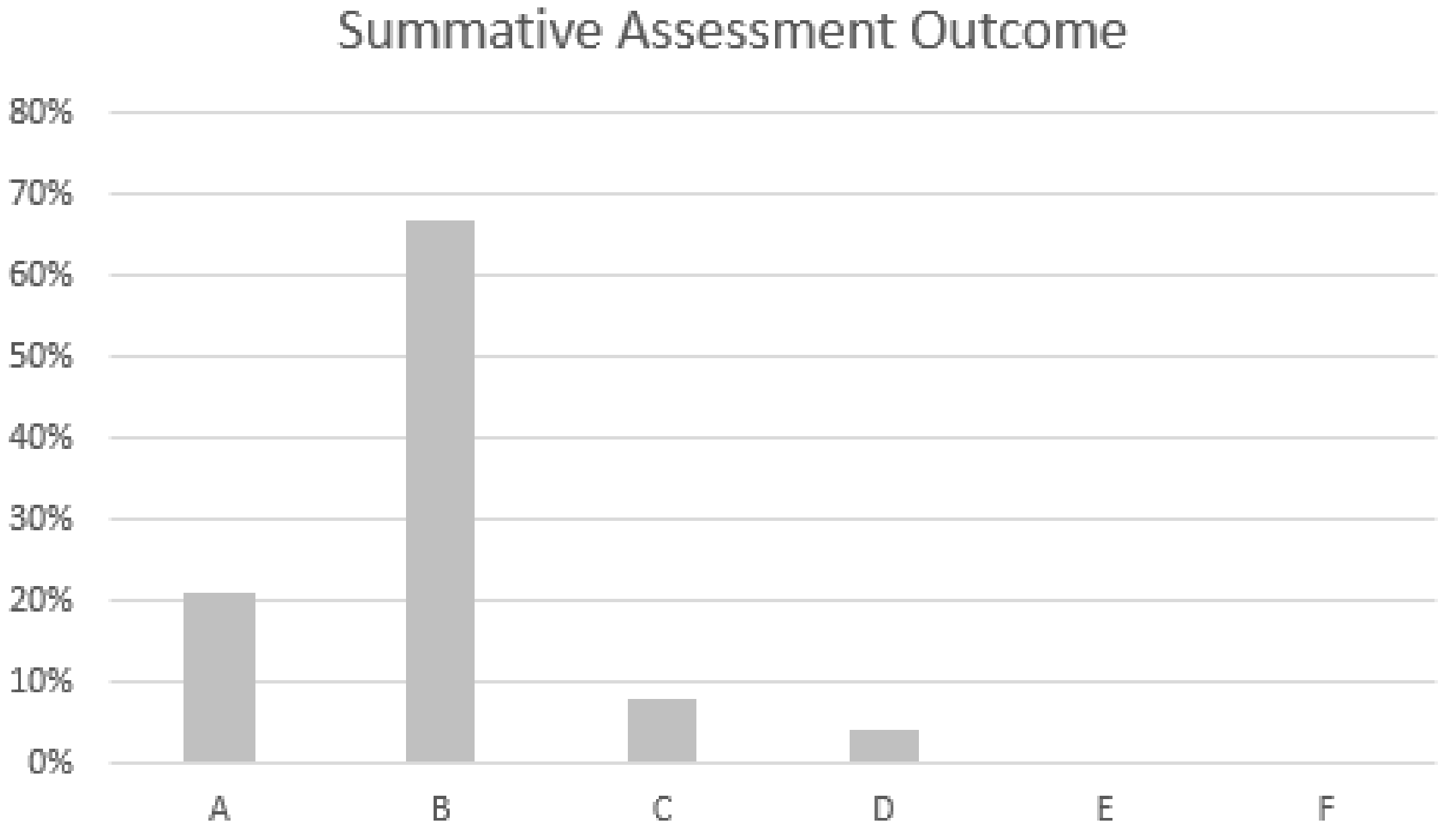

4.5. Learners’ Outcomes and Feedback

“I like it and I wish all lecturers do the same!”

“Love the questions at the end of the lectures.”

“Seminars are really productive and have made the course very interesting. I have enjoyed the seminars very much so far and will continue to learn more outside of class because of it.”

4.6. Teacher Observations

- Response to Teacher Enquiries: Before the intervention in week 3, student–teacher interaction was teacher driven, and some students appeared reluctant to participate and respond to the teacher’s enquiries. The problem-based group tasks and card sorting activities [81] were followed by a notable increase in students’ responses to these enquiries. This fostered learning, increased trust in the student–teacher relationship, and promoted collaborative learning.

- Student Enquiries: Students made no enquiries in the first two weeks. However, the group-based activities encouraged constructive discussions and enquiries, which helped in knowledge construction and inclusive learning. At times, the problem-based group exercises generated positive debates among peers and between students and the teacher. The level of interest increased in the last 4 weeks, and some students stayed on after the lecture to make further enquiries.

- Peer Chat and Mobile Phone Distractions: It was observed that a small number of students (4–5) were constantly involved in distracting peer chat and occasionally engaged in activities on their phones in the first 3 weeks. Students were randomly seated during the problem-based group tasks and card sorting exercise, which helped to divert the discussion in a positive direction. Furthermore, students used their phones for live participation in Slido quizzes, which minimized digital distraction. The use of Plickers display cards to answer the questions increased the element of excitement and participation.

- Late Joining: It was observed that 2–3 students joined late in the first two weeks. The use of participatory activities encouraged students to be punctual, which improved class discipline and minimized distraction.

5. Discussion

6. Study Limitations

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

Availability of Data and Material

References

- Halverson, L.R.; Graham, C.R. Learner engagement in blended learning environments: A conceptual framework. Online Learn. 2019, 23, 145–178.

- Paulsen, J.; McCormick, A.C. Reassessing disparities in online learner student engagement in higher education. Educ. Res. 2020, 49, 20–29.

- Kuh, G.D. The national survey of student engagement: Conceptual and empirical foundations. New Dir. Inst. Res. 2009, 2009, 5–20.

- Mallin, I. Lecture and active learning as a dialectical tension. Commun. Educ. 2017, 66, 242–243.

- Deng, R.; Benckendorff, P.; Gannaway, D. Learner engagement in MOOCs: Scale development and validation. Br. J. Educ. Technol. 2020, 51, 245–262.

- Delialioğlu, Ö. Student engagement in blended learning environments with lecture-based and problem-based instructional approaches. J. Educ. Technol. Soc. 2012, 15, 310–322.

- Law, K.M.; Lee, V.C.; Yu, Y.-T. Learning motivation in e-learning facilitated computer programming courses. Comput. Educ. 2010, 55, 218–228.

- Jenkins, T. The motivation of students of programming. ACM SIGCSE Bull. 2001, 33.

- Harrison, C.V. Concept-based curriculum: Design and implementation strategies. Int. J. Nurs. Educ. Scholarsh. 2020, 1.

- Pountney, R. The Curriculum Design Coherence. In A Research Approach to Curriculum Development; British Educational Research Association: London, UK, 2020; p. 37.

- Blenkin, G.M.; Kelly, A.V. The Primary Curriculum in Action: A Process Approach to Educational Practice; Harpercollins College Division: New York, NY, USA, 1983.

- Smith, M.K. Curriculum theory and practice. The Encyclopedia of Informal Education. 2000. Available online: www.infed.org/biblio/b-curricu.htm (accessed on 2 November 2020).

- Tyler, R.W. Basic Principles of Curriculum and Instruction; University of Chicago Press: Chicago, IL, USA, 1949.

- Stenhouse, L. An Introduction to Curriculum Research and Development; Pearson Education: London, UK, 1975.

- Jonassen, D.H. Thinking technology: Context is everything. Educ. Technol. 1991, 31, 35–37.

- Ramsden, P. Learning to Teach in Higher Education; Routledge: London, UK, 2003.

- Camilleri, M.A.; Camilleri, A. Student centred learning through serious games. In Student-Centred Learning through Serious Games, Proceedings of the 13th Annual International Technology, Education and Development Conference, Valencia, Spain, 11–13 March 2019; International Academy of Technology, Education and Development (IATED): Valencia, Spain, 2019.

- Newmann, F.M. Student Engagement and Achievement in American Secondary Schools; ERIC: New York, NY, USA, 1992.

- Stodolsky, S.S. The Subject Matters: Classroom Activity in Math and Social Studies; University of Chicago Press: Chicago, IL, USA, 1988.

- Coleman, T.E.; Money, A.G. Student-centred digital game-based learning: A conceptual framework and survey of the state of the art. High. Educ. 2020, 79, 415–457.

- Lee, E.; Hannafin, M.J. A design framework for enhancing engagement in student-centered learning: Own it, learn it, and share it. Educ. Technol. Res. Dev. 2016, 64, 707–734.

- Demuyakor, J. Coronavirus (COVID-19) and online learning in higher institutions of education: A survey of the perceptions of Ghanaian international students in China. Online J. Commun. Media Technol. 2020, 10, e202018.

- Dhawan, S. Online learning: A panacea in the time of COVID-19 crisis. J. Educ. Technol. Syst. 2020, 49, 5–22.

- Carroll, M.; Lindsey, S.; Chaparro, M.; Winslow, B. An applied model of learner engagement and strategies for increasing learner engagement in the modern educational environment. Interact. Learn. Environ. 2019, 1–15.

- Bode, M.; Drane, D.; Kolikant, Y.B.-D.; Schuller, M. A clicker approach to teaching calculus. Not. AMS 2009, 56, 253–256.

- Trees, A.R.; Jackson, M.H. The learning environment in clicker classrooms: Student processes of learning and involvement in large university-level courses using student response systems. Learn. Media Technol. 2007, 32, 21–40.

- Kennedy, G.E.; Cutts, Q.I. The association between students’ use of an electronic voting system and their learning outcomes. J. Comput. Assist. Learn. 2005, 21, 260–268.

- Lasry, N. Clickers or flashcards: Is there really a difference? Phys. Teach. 2008, 46, 242–244.

- Greer, L.; Heaney, P.J. Real-time analysis of student comprehension: An assessment of electronic student response technology in an introductory earth science course. J. Geosci. Educ. 2004, 52, 345–351.

- Zhu, E.; Bierwert, C.; Bayer, K. Qwizdom student survey December 06. Raw Data 2006, unpublished.

- Hall, R.H.; Collier, H.L.; Thomas, M.L.; Hilgers, M.G. A student response system for increasing engagement, motivation, and learning in high enrollment lectures. In Proceedings of the Americas Conference on Information Systems, Omaha, NE, USA, 11–14 August 2005; p. 255.

- Silliman, S.E.; Abbott, K.; Clark, G.; McWilliams, L. Observations on benefits/limitations of an audience response system. Age 2004, 9, 1.

- Koenig, K. Building acceptance for pedagogical reform through wide-scale implementation of clickers. J. Coll. Sci. Teach. 2010, 39, 46.

- Mason, R.B. Student engagement with, and participation in, an e-forum. J. Int. Forum Educ. Technol. Soc. 2011, 258–268.

- Walsh, J.P.; Chih-Yuan Sun, J.; Riconscente, M. Online teaching tool simplifies faculty use of multimedia and improves student interest and knowledge in science. CBE Life Sci. Educ. 2011, 10, 298–308.

- Sun, J.C.Y.; Rueda, R. Situational interest, computer self-efficacy and self-regulation: Their impact on student engagement in distance education. Br. J. Educ. Technol. 2012, 43, 191–204.

- Middlebrook, G.; Sun, J.C.-Y. Showcase hybridity: A role for blogfolios. In Perspectives on Writing; The Wac Clearinghouse, University of California: Santa Barbara, CA, USA, 2013; pp. 123–133.

- Tømte, C.E.; Fossland, T.; Aamodt, P.O.; Degn, L. Digitalisation in higher education: Mapping institutional approaches for teaching and learning. Qual. High. Educ. 2019, 25, 98–114.

- Valk, J.-H.; Rashid, A.T.; Elder, L. Using mobile phones to improve educational outcomes: An analysis of evidence from Asia. Int. Rev. Res. Open Distrib. Learn. 2010, 11, 117–140.

- Şad, S.N.; Göktaş, Ö. Preservice teachers’ perceptions about using mobile phones and laptops in education as mobile learning tools. Br. J. Educ. Technol. 2014, 45, 606–618.

- Ally, M. Mobile Learning: Transforming the Delivery of Education and Training; Athabasca University Press: Edmonton, AB, Canada, 2009.

- McCoy, B. Digital Distractions in the Classroom: Student Classroom Use of Digital Devices for Non-Class Related Purposes; University of Nebraska: Lincoln, NE, USA, 2013.

- Fried, C.B. In-class laptop use and its effects on student learning. Comput. Educ. 2008, 50, 906–914.

- Burns, S.M.; Lohenry, K. Cellular phone use in class: Implications for teaching and learning a pilot study. Coll. Stud. J. 2010, 44, 805–811.

- Flanigan, A.E.; Babchuk, W.A. Digital distraction in the classroom: Exploring instructor perceptions and reactions. Teach. High. Educ. 2020, 1–19.

- Leidner, D.E.; Jarvenpaa, S.L. The use of information technology to enhance management school education: A theoretical view. MIS Q. 1995, 19, 265–291.

- Moallem, M. Applying constructivist and objectivist learning theories in the design of a web-based course: Implications for practice. Educ. Technol. Soc. 2001, 4, 113–125.

- Zendler, A. Teaching Methods for Computer Science Education in the Context of Significant Learning Theories. Int. J. Inf. Educ. Technol. 2019, 9.

- Ben-Ari, M. Constructivism in computer science education. J. Comput. Math. Sci. Teach. 2001, 20, 45–73.

- Barrows, H.S. How to Design a Problem-Based Curriculum for the Preclinical Years; Springer Pub. Co.: Berlin/Heidelberg, Germany, 1985; Volume 8.

- Boud, D. Problem-based learning in perspective. In Problem-Based Learning in Education for the Professions; Higher Education Research and Development Society of Australia: Sydney, Australia, 1985; Volume 13.

- Pluta, W.J.; Richards, B.F.; Mutnick, A. PBL and beyond: Trends in collaborative learning. Teach. Learn. Med. 2013, 25, S9–S16.

- Bruce, S. Social Learning and Collaborative Learning: Enhancing Learner’s Prime Skills; International Specialised Skills Institute: Carlton, Australia, 2019; p. 64.

- Bruffee, K.A. Collaborative Learning: Higher Education, Interdependence, and the Authority of Knowledge. In ERIC; Johns Hopkins University Press: Baltimore, MD, USA, 1999.

- Slavin, R.E. Cooperative Learning: Theory, Research, and Practice; Educational Leadership; Johns Hopkins University Press: Baltimore, MD, USA, 1990.

- Pace, C.R. Measuring the quality of student effort. Curr. Issues High. Educ. 1980, 2, 10–16.

- Jelfs, A.; Nathan, R.; Barrett, C. Scaffolding students: Suggestions on how to equip students with the necessary study skills for studying in a blended learning environment. J. Educ. Media 2004, 29, 85–96.

- Ginns, P.; Ellis, R. Quality in blended learning: Exploring the relationships between on-line and face-to-face teaching and learning. Internet High. Educ. 2007, 10, 53–64.

- McCutcheon, G.; Jung, B. Alternative perspectives on action research. Theory Pract. 1990, 29, 144–151.

- Schön, D.A. Knowing-in-action: The new scholarship requires a new epistemology. Chang. Mag. High. Learn. 1995, 27, 27–34.

- McNiff, J. You and Your Action Research Project; Routledge: Milton, UK, 2016.

- Wlodkowski, R.J.; Ginsberg, M.B. Diversity & Motivation; Jossey-Bass Social and Behavioral Science Series; Jossey-Bass: San Francisco, CA, USA, 1995.

- Tyler, R.W. Basic Principles of Curriculum and Instruction; University of Chicago Press: Chicago, IL, USA, 2013.

- Prideaux, D. Curriculum design. BMJ 2003, 326, 268–270.

- Greenwood, C.R.; Horton, B.T.; Utley, C.A. Academic Engagement: Current Perspectives in Research and Practice. Sch. Psychol. Rev. 2002, 31, 328–349.

- Lee, J.; Song, H.-D.; Hong, A.J. Exploring factors, and indicators for measuring students’ sustainable engagement in e-learning. Sustainability 2019, 11, 985.

- Zuber-Skerritt, O. A model for designing action learning and action research programs. Learn. Organ. 2002, 9, 143–149.

- Gable, G.G. Integrating case study and survey research methods: An example in information systems. Eur. J. Inf. Syst. 1994, 3, 112–126.

- Dillman, D.A.; Bowker, D.K. The web questionnaire challenge to survey methodologists. Online Soc. Sci. 2001, 53–71.

- Coffey, M.; Gibbs, G. The evaluation of the student evaluation of educational quality questionnaire (SEEQ) in UK higher education. Assess. Eval. High. Educ. 2001, 26, 89–93.

- Marsh, H.W.; Hocevar, D. Students’ evaluations of teaching effectiveness: The stability of mean ratings of the same teachers over a 13-year period. Teach. Teach. Educ. 1991, 7, 303–314.

- Marsh, H.W.; Roche, L.A. Making students’ evaluations of teaching effectiveness effective: The critical issues of validity, bias, and utility. Am. Psychol. 1997, 52, 1187.

- Farzaneh, N.; Nejadansari, D. Students’ attitude towards using cooperative learning for teaching reading comprehension. Theory Pract. Lang. Stud. 2014, 4, 287.

- Lewin, K. Action research and minority problems. J. Soc. Issues 1946, 2, 34–46.

- Lewin, K. Frontiers in group dynamics II. Channels of group life; social planning and action research. Hum. Relat. 1947, 1, 143–153.

- Dickens, L.; Watkins, K. Action research: Rethinking Lewin. Manag. Learn. 1999, 30, 127–140.

- Barrows, H.S. A taxonomy of problem-based learning methods. Med. Educ. 1986, 20, 481–486.

- Biggs, J. Enhancing teaching through constructive alignment. High. Educ. 1996, 32, 347–364.

- Gibbs, G.; Jenkins, A. Break up your lectures: Or Christaller sliced up. J. Geogr. High. Educ. 1984, 8, 27–39.

- Mayer, R.E.; Moreno, R. Nine ways to reduce cognitive load in multimedia learning. Educ. Psychol. 2003, 38, 43–52.

- Ullah, A. GitHub Repository for Card Sorting—Data and Knowledge Management. Available online: https://github.com/abrarullah007/cardsorting.git (accessed on 2 November 2020).

- Thomas, J.; López-Fernández, V.; Llamas-Salguero, F.; Martín-Lobo, P.; Pradas, S. Participation and knowledge through Plickers in high school students and its relationship to creativity. In Proceedings of the UNESCO-UNIR ICT & Education Latam Congress, Bogota, Colombia, 22–24 June 2016.

- Graham, K. TechMatters: Further Beyond Basic Presentations: Using Sli.do to Engage and Interact With Your Audience. LOEX Q. 2015, 42, 4.

- Bolkan, S. Intellectually stimulating students’ intrinsic motivation: The mediating influence of affective learning and student engagement. Commun. Rep. 2015, 28, 80–91.

- Oh, J.; Kim, S.J.; Kim, S.; Vasuki, R. Evaluation of the effects of flipped learning of a nursing informatics course. J. Nurs. Educ. 2017, 56, 477–483.

- Dochy, F.; Segers, M.; Van den Bossche, P.; Gijbels, D. Effects of problem-based learning: A meta-analysis. Learn. Instr. 2003, 13, 533–568.

- Kirschner, F.; Kester, L.; Corbalan, G. Cognitive load theory and multimedia learning, task characteristics, and learning engagement: The current state of the art. Comput. Hum. Behav. 2010.

- Wood, T.A.; Brown, K.; Grayson, J.M. Faculty and student perceptions of Plickers. In Proceedings of the American Society for Engineering Education, San Juan, PR, USA, 2017; Volume 5.

- Ha, J. Using Mobile-Based Slido for Effective Management of a University English Reading Class. Multimed. Assist. Lang. Learn. 2018, 21, 37–56.

- Stowell, J.R.; Nelson, J.M. Benefits of electronic audience response systems on student participation, learning, and emotion. Teach. Psychol. 2007, 34, 253–258.

- Sun, J.C.-Y. Influence of polling technologies on student engagement: An analysis of student motivation, academic performance, and brainwave data. Comput. Educ. 2014, 72, 80–89.

- McBurnett, B. Incorporating Paper Clicker (Plicker) Questions in General Chemistry Courses To Enhance Active Learning and Limit Distractions. In Technology Integration in Chemistry Education and Research (TICER); ACS Publications: Washington, DC, USA, 2019; pp. 177–182.

- Kelly, P.A.; Haidet, P.; Schneider, V.; Searle, N.; Seidel, C.L.; Richards, B.F. A comparison of in-class learner engagement across lecture, problem-based learning, and team learning using the STROBE classroom observation tool. Teach. Learn. Med. 2005, 17, 112–118.

- Uchidiuno, J.; Yarzebinski, E.; Keebler, E.; Koedinger, K.; Ogan, A. Learning from African classroom pedagogy to increase student engagement in education technologies. In Proceedings of the 2nd ACM SIGCAS Conference on Computing and Sustainable Societies, Accra, Ghana, 3–5 July 2019.

- Tlhoaele, M.; Hofman, A.; Winnips, K.; Beetsma, Y. The impact of interactive engagement methods on students’ academic achievement. High. Educ. Res. Dev. 2014, 33, 1020–1034.

- Mustami’ah, D.; Widanti, N.S.; Rahmania, A.M. Student Engagement, Learning Motivation And Academic Achievement Through Private Junior High School Students In Bulak District, Surabaya. Int. J. Innov. Res. Adv. Stud. IJIRAS 2020, 7, 130–139.

- Shibley Hyde, J.; Kling, K.C. Women, motivation, and achievement. Psychol. Women Q. 2001, 25, 364–378.

- Garrison, D.R.; Anderson, T.; Archer, W. Critical inquiry in a text-based environment: Computer conferencing in higher education. Internet High. Educ. 1999, 2, 87–105.

- Garrison, D.R.; Vaughan, N.D. Blended Learning in Higher Education: Framework, Principles, and Guidelines; John Wiley & Sons: Hoboken, NJ, USA, 2008.

| SEEQ Questionnaire |
|---|
| Learning |
| 1. I have found the course intellectually challenging and stimulating. |
| 2. I have learned something which I consider valuable. |
| 3. My interest in the subject has increased as a consequence of this course. |
| 4. I have learned and understood the subject materials of this course. |
| Organization |
| 5. Instructor’s explanations were clear. |
| 6. Course materials were well prepared and carefully explained. |
| 7. Proposed objectives agreed with those actually taught, so I knew where course was going. |
| 8. Instructor gave lectures that facilitated taking notes. |
| Group Interaction |
| 9. Students were encouraged to participate in class discussions. |
| 10. Students were invited to share their ideas and knowledge. |
| 11. Students were encouraged to ask questions and were given meaningful answers. |
| 12. Students were encouraged to express their own ideas and/or question the instructor. |
| Breadth |
| 13. Instructor contrasted the implications of various theories. |
| 14. Instructor presented the background or origin of ideas/concepts developed in class. |
| 15. Instructor presented points of view other than his/her own when appropriate. |
| 16. Instructor adequately discussed current developments in the field. |
| Comments/Feedback |
| 17. Please provide any additional comments or feedback. |

| Characteristic | Value |
|---|---|
| Programme | BSc (Computer Science) |
| Module | Data and Knowledge Management |
| Average Age | 20–22 |
| Nationalities | UK (21), China (1), Middle East (2) |
| Gender | Female: 7, Male: 17 |
| Total participants | 24 |

| SEEQ Questionnaire | Pre (Mean) | Post (Mean) |
|---|---|---|
| Learning | | |
| 1. I have found the course intellectually challenging and stimulating. | 3.7 | 3.7 |
| 2. I have learned something which I consider valuable. | 3.6 | 4.2 |
| 3. My interest in the subject has increased as a consequence of this course. | 3.2 | 4.2 |
| 4. I have learned and understood the subject materials of this course. | 3.2 | 4.0 |
| Organization | | |
| 5. Instructor’s explanations were clear. | 3.4 | 4.1 |
| 6. Course materials were well prepared and carefully explained. | 3.3 | 4.3 |
| 7. Proposed objectives agreed with those actually taught so I knew where course was going. | 3.3 | 3.8 |
| 8. Instructor gave lectures that facilitated taking notes. | 3.3 | 4.0 |
| Group Interaction | | |
| 9. Students were encouraged to participate in class discussions. | 3.7 | 4.5 |
| 10. Students were invited to share their ideas and knowledge. | 3.8 | 4.2 |
| 11. Students were encouraged to ask questions and were given meaningful answers. | 3.8 | 4.4 |
| 12. Students were encouraged to express their own ideas and/or question the instructor. | 3.3 | 4.2 |
| Breadth | | |
| 13. Instructor contrasted the implications of various theories. | 3.4 | 3.8 |
| 14. Instructor presented the background or origin of ideas/concepts developed in class. | 3.6 | 4.0 |
| 15. Instructor presented points of view other than his/her own when appropriate. | 3.5 | 4.0 |
| 16. Instructor adequately discussed current developments in the field. | 3.3 | 3.9 |
| Comments/Feedback | | |
| 17. Please provide any additional comments or feedback. | x | x |
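One way to read the pre/post means in the table above is to average them per SEEQ category and compare. The sketch below does this with the item means copied from the table; averaging item means per category is an illustrative choice made here, not an analysis reported by the authors, and the names `seeq` and `category_change` are hypothetical.

```python
from statistics import mean

# (pre, post) mean responses for items 1-16, copied from the table above.
seeq = {
    "Learning": [(3.7, 3.7), (3.6, 4.2), (3.2, 4.2), (3.2, 4.0)],
    "Organization": [(3.4, 4.1), (3.3, 4.3), (3.3, 3.8), (3.3, 4.0)],
    "Group Interaction": [(3.7, 4.5), (3.8, 4.2), (3.8, 4.4), (3.3, 4.2)],
    "Breadth": [(3.4, 3.8), (3.6, 4.0), (3.5, 4.0), (3.3, 3.9)],
}


def category_change(items):
    """Return (pre average, post average, post minus pre) for one category."""
    pre = mean(p for p, _ in items)
    post = mean(q for _, q in items)
    return pre, post, post - pre


summary = {name: category_change(items) for name, items in seeq.items()}

for name, (pre, post, change) in summary.items():
    print(f"{name}: pre={pre:.2f}, post={post:.2f}, change={change:+.2f}")
```

Because only item means are available here, this comparison is descriptive; it says nothing about statistical significance.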

| No. | Questions | Mean | SD |
|---|---|---|---|
| Q.1 | I enjoyed working together in groups. | 4.42 | 0.82 |
| Q.2 | The group/collaborative approach method made understanding of “Database” concepts easy for me. | 4.25 | 0.83 |
| Q.3 | I felt responsibility to contribute to my group. | 4.21 | 0.82 |
| Q.4 | I get along with other group members. | 4.30 | 0.8 |
| Q.5 | The use of technology, e.g., Slido and Plickers, enhanced my learning experience in the class. | 4.46 | 0.64 |
| Q.6 | The card sorting format is the best way for me to learn the material. | 4.08 | 0.7 |
| Q.7 | The group-based problem-solving format is the best way for me to learn the material. | 4.08 | 0.81 |
| Q.8 | I enjoyed working with my class mates on group tasks. | 4.38 | 0.7 |
| Q.9 | The group-based problem-solving format was disruptive (r). | 3.70 | 1.3 |
| Q.10 | The group-based problem-solving approach was NOT effective (r). | 4.00 | 1.1 |
| Q.11 | The group task method helped me to develop enjoyment in coming to the class. | 3.75 | 0.78 |
| Q.12 | I prefer that my teacher use more group activities. | 4.08 | 0.86 |
| Q.13 | My work is better organized when I am in a group. | 3.58 | 0.91 |
| Q.14 | Group activities make the learning experience easier. | 4.08 | 0.64 |
| Q.15 | Cooperative learning can improve my attitude towards work. | 3.88 | 0.73 |
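Items Q.9 and Q.10 are marked “(r)”, which is read here as reverse-scored. The sketch below shows how such an item could be handled before computing a mean and standard deviation. It assumes a 5-point Likert scale and uses made-up responses; neither the scale length nor the use of the sample standard deviation is confirmed by the table.

```python
from statistics import mean, stdev

SCALE_MAX = 5  # assumed 5-point Likert scale


def reverse_score(response, scale_max=SCALE_MAX):
    """Flip a response so that a higher value is always more favourable."""
    return scale_max + 1 - response


# Hypothetical raw responses to a negatively worded item such as Q.9;
# these are illustrative values, not data from the study.
raw = [1, 2, 2, 1, 4, 3]
scored = [reverse_score(r) for r in raw]

item_mean = mean(scored)
item_sd = stdev(scored)  # sample standard deviation (an assumption)
```

After reverse scoring, a high mean on a negatively worded item indicates disagreement with the negative statement, so it can be read in the same direction as the other items.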

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ullah, A.; Anwar, S. The Effective Use of Information Technology and Interactive Activities to Improve Learner Engagement. Educ. Sci. 2020, 10, 349. https://doi.org/10.3390/educsci10120349
