1. Introduction

As Germany is one of the world’s main exporters, its trade policy has received much attention in recent years. Professional forecasts play a crucial role in this context, as economic agents rely on such forecasts when shaping economic expectations (Carroll 2003). In order to make accurate policy and investment decisions, it is, therefore, necessary to correctly predict trade developments, as these dynamics greatly influence output growth and price levels through inflationary pressures from import prices (D’Agostino et al. 2017). Additionally, optimal trade forecasts are an essential part of GDP forecasts, as macroeconomic forecasters tend to implement a so-called disaggregated approach when forming such forecasts. In that case, economic research institutes individually predict all components of GDP and combine these predictions into a forecast of total output (see, among others, Angelini et al. 2010; Heinisch and Scheufele 2018, for a comparison of direct and disaggregated forecasting approaches). Research on predictions of single GDP components mostly focuses on forecasts of private consumption (see, for instance, Vosen and Schmidt 2011). In the case of trade developments, a study by Ito (1990) reports behavioral biases of importers and exporters when forming expectations about exchange rate changes, hinting that macroeconomic trade forecasts might not be optimal either.

Even though trade developments play a prominent role in the formation of macroeconomic forecasts, the evaluation of trade forecasts has not been extensively studied. Research on trade forecasts focuses on their formation. Here, studies either implement structural models of economic environments and simulate trade dynamics (Hervé et al. 2011; Riad et al. 2012) or aim at optimizing the forecasting performance of time series models (Frale et al. 2010; Jakaitiene and Dées 2012; Keck et al. 2009; Yu et al. 2008). Studying German trade is especially interesting because of the country’s dependence on exports and its large trade surplus, which has been at the center of public attention in recent years. However, studies analyzing German trade forecasts are scarce. The existing studies concentrate on forecasting a single trade aggregate, i.e., German exports (Elstner et al. 2013; Grossmann and Scheufele 2019; Jannsen and Richter 2012) or imports (Grimme et al. 2019; Hetemäki and Mikkola 2005). The evaluation of trade forecasts has not received much attention, even though suboptimal trade forecasts can be costly if, for instance, a protectionist trade policy is pursued because of them.

To fill this gap, I build on research by Elliott et al. (2005, 2008), who study optimal forecasts under asymmetric loss. The seminal work in this field by Granger (1969), Varian (1974), and Zellner (1986) as well as early applications (Christoffersen and Diebold 1996, 1997) have since been taken up in several fields such as financial forecasting (Aretz et al. 2011; Fritsche et al. 2015), fiscal forecasting (Artis and Marcellino 2001; Elliott et al. 2005), central banking (e.g., Capistrán 2008; Pierdzioch et al. 2016a), as well as GDP and inflation forecasting (Christodoulakis and Mamatzakis 2008; Pierdzioch et al. 2016b; Sun et al. 2018). The basic assumption in this field of research is an asymmetric loss function. In other words, researchers assume that a forecaster’s loss function is not symmetric, which allows for the possibility that forecasters prefer, e.g., underestimating economic growth over overestimating it. A possible scenario could be that forecasters fear unfavorable media coverage in the case of an unforeseen recession, whereas an upward correction of the forecast in economically good times might not have such ramifications. In the case of trade forecasts, especially for Germany, a motivation for forecasters to prefer underestimating export growth over overestimating it might be the recently increasing protectionist tendencies in international trade (see, among others, Durusoy et al. 2015; Park 2018; Pelagidis 2018). An inflated export growth forecast for Germany could fuel protectionist sentiment among some of its trading partners.

Patton and Timmermann (2007), for instance, find for GDP forecasts that, in times of low economic growth, overpredictions of output growth are costlier to the Federal Reserve than underpredictions. The authors introduce the concept of a flexible loss function. The advantage of this concept is that no restrictions need to be imposed on the forecaster’s loss function; however, as a trade-off, restrictions need to be imposed on the data-generating process (for details on the concept of flexible loss and its implementation, see Section 2). When analyzing the optimality of forecasts, it can be crucial to consider a flexible loss function, as results can be misleading if a researcher assumes a certain type of loss function which, in fact, is misspecified (Pierdzioch and Rülke 2013). I, therefore, extend the Behrens et al. (2018c) approach to testing forecast optimality by estimating random regression forests with a quadratic, i.e., symmetric, loss function as well as random classification forests with a flexible loss function to analyze whether or not German trade forecasts are best described by a symmetric loss function. Tree-based models are powerful nonparametric modeling instruments, for which no model specifications regarding the linkage of predictor and response variables need to be imposed a priori and that can deal with datasets with a small number of observations relative to the number of predictor variables. I study export and import growth forecasts for Germany from 1970 to 2017 formed by four major German economic research institutes, a collaboration of German economic research institutes, and an international forecaster.

2. The Model

In their study of the Federal Reserve’s economic growth forecasts, Patton and Timmermann (2007) introduce a method to analyze the optimality of a forecast when the type of a forecaster’s loss function is unknown. This concept of a flexible loss function requires a trade-off regarding the underlying data generating process. That is, if (a) the loss function depends only on the forecast error and the data generating process only has dynamics in the conditional mean, or (b) the loss function is homogeneous in the forecast error and the data generating process has dynamics in the conditional mean and variance, a forecast is considered optimal if the sequence, $\mathbb{1}(e_{t+1} \le 0)$, is independent of the forecaster’s information set at the time of forecast formation (see proposition 3, Patton and Timmermann 2007, pp. 1175f). Here, $\mathbb{1}(\cdot)$ is an indicator function and $e_{t+1}$ denotes a forecast error. Given the data at hand (see Section 3 for details), there are no issues regarding dynamics of higher-order moments, as the analyzed forecasts are only available midyear and at the turn of a given year.

Patton and Timmermann (2007) further show that forecast optimality can, hence, be tested by estimating a logit or probit model of the form:

$$\mathbb{1}(e_{t+1} \le 0) = X_t \beta + \epsilon_{t+1}, \tag{1}$$

where $X_t$ is a matrix containing a forecaster’s information set in period $t$, $\beta$ denotes a vector of coefficients, and $\epsilon_{t+1}$ is an error term. A forecast is considered optimal if the null hypothesis, which states that the set of predictors does not have predictive value for the sign of the forecast error, i.e., $\beta = 0$, cannot be rejected.
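To make the logic of this test concrete, the following is a minimal sketch in Python. It is not the implementation used in this paper: it assumes a single predictor plus an intercept, codes the sign as 1 when the forecast error is non-positive (an assumed convention), fits the logit by Newton-Raphson, and uses a likelihood-ratio statistic as a stand-in for whichever test a researcher prefers. The function names `fit_logit` and `sign_test_lr` are hypothetical.

```python
import math

def fit_logit(x, y, n_iter=50):
    """Fit P(y=1) = 1 / (1 + exp(-(b0 + b1*x))) by Newton-Raphson.

    Returns (b0, b1, log-likelihood). Assumes the data are not perfectly separated.
    """
    b0 = b1 = 0.0
    for _ in range(n_iter):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for xi, yi in zip(x, y):
            p = 1.0 / (1.0 + math.exp(-(b0 + b1 * xi)))
            w = p * (1.0 - p)
            g0 += yi - p              # score w.r.t. intercept
            g1 += (yi - p) * xi       # score w.r.t. slope
            h00 += w                  # entries of the information matrix
            h01 += w * xi
            h11 += w * xi * xi
        det = h00 * h11 - h01 * h01
        if abs(det) < 1e-12:
            break
        b0 += (h11 * g0 - h01 * g1) / det
        b1 += (h00 * g1 - h01 * g0) / det
    loglik = 0.0
    for xi, yi in zip(x, y):
        p = 1.0 / (1.0 + math.exp(-(b0 + b1 * xi)))
        loglik += math.log(p) if yi == 1 else math.log(1.0 - p)
    return b0, b1, loglik

def sign_test_lr(errors, predictor):
    """Likelihood-ratio statistic for H0: the predictor does not explain the error sign."""
    y = [1 if e <= 0 else 0 for e in errors]   # assumed sign coding
    n, n1 = len(y), sum(y)
    if n1 == 0 or n1 == n:
        raise ValueError("all forecast errors have the same sign")
    ll_null = n1 * math.log(n1 / n) + (n - n1) * math.log((n - n1) / n)
    _, _, ll_full = fit_logit(predictor, y)
    return 2.0 * (ll_full - ll_null)

# Hypothetical toy data: one predictor observed before each forecast is made.
predictor = [1, 2, 3, 4, 5, 6, 7, 8]
errors_related = [0.5, 0.8, 0.3, -0.2, 0.4, -0.6, -0.1, -0.9]
errors_unrelated = [0.5, -0.2, -0.3, 0.4, 0.1, -0.6, -0.7, 0.2]

CHI2_1DF_5PCT = 3.841  # 5% critical value of a chi-square with one degree of freedom
print(sign_test_lr(errors_related, predictor) > CHI2_1DF_5PCT)
print(sign_test_lr(errors_unrelated, predictor) > CHI2_1DF_5PCT)
```

A statistic above the critical value indicates that the predictor helps to explain the sign of the forecast error, i.e., evidence against forecast optimality. With many predictors and few observations, this parametric route quickly runs out of degrees of freedom.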

However, such a linear approach to testing forecast optimality runs into problems if, for instance, the number of observed forecast errors is relatively small, such that a large set of predictors causes a problem regarding the degrees of freedom of the model. In this case, one could reduce the number of predictors contained in $X_t$. However, choosing the omitted predictors is arbitrary at least to some degree. Another problem arises when modeling the link between the sign of the forecast error and the predictor variables. A priori, the researcher needs to define the nature of said link, i.e., whether it is, for instance, linear, quadratic, or cubic. Furthermore, the predictor variables can be interdependent, such that this relationship also needs to enter the predefined estimation equation. When using a linear approach to testing forecast optimality, there are thus several sources of possible model misspecification, which in turn lead to biased results. I follow Behrens et al. (2018c), who propose a nonparametric tree-based model, implementing the Patton and Timmermann (2007) test of forecast optimality, to overcome these drawbacks. Tree-based models were first introduced by Breiman et al. (1984) and have the advantage of, loosely speaking, letting the data decide on an appropriate form of the estimated model. No predefined linkages between predictor variables and the response variable or among the predictor variables need to be imposed by the researcher.

Behrens et al. (2018c) implement Equation (1) by means of random classification forests, which pool a large number of classification trees, such that forecast optimality under flexible loss can be studied by the following model (for an introduction to random forests, see Breiman 2001):

$$\mathbb{1}(e_{t+1} \le 0) = \frac{1}{K} \sum_{k=1}^{K} T_k(X_t) + \epsilon_{t+1}, \tag{2}$$

where $T_k$ is a single classification tree and $K$ is the number of trees. I extend their model by reestimating Equation (2) by means of random regression forests, $e_{t+1} = \frac{1}{K} \sum_{k=1}^{K} T_k(X_t) + \epsilon_{t+1}$, in order to analyze forecast optimality of German export and import growth forecasts under quadratic and flexible loss.

Generally speaking, regression trees analyze the effects of predictors on a continuous response variable and classification trees do so on a discrete response variable. Both types of trees follow the same process, as they aim at structuring the feature space, i.e., the space set up by the analyzed $n$ predictors, $x_1, \dots, x_n$, into several non-overlapping regions, $R_1, \dots, R_J$, in which a simple model is fit (for a comprehensive introduction to tree-based methods, see James et al. 2015, whose notation I roughly follow, dropping the time index, $t$, for simplification). These rectangles, or partitions, are generated by recursive, binary splits at the so-called interior nodes of each tree.

Figure 1 depicts this tree-building process. An exemplary tree is depicted in the left panel and the correspondingly divided feature space is shown on the right. The dataset is split into two subsequent regions at each node. The first split occurs at the root of the tree and observations with partitioning predictor $z_1$ less than or equal to cutpoint $c_1$ are sent down the left branch of the tree, where they reach a terminal node and are assigned to region $R_1$. For observations with partitioning predictor $z_1$ greater than cutpoint $c_1$, another split occurs at the following node. Here, observations which satisfy $z_1 \le c_2$ are sent down this node’s left branch and observations with partitioning predictor $z_1 > c_2$ are sent down the right branch. At each following node, another split occurs with regard to partitioning predictor $z_2$, such that, at the first subsequent node, observations with $z_1 \le c_2$ and $z_2 \le c_3$ are assigned to region $R_2$ and those observations with $z_1 \le c_2$ and $z_2 > c_3$ are assigned to region $R_3$. At the second subsequent node, i.e., for $z_1 > c_2$, observations which satisfy $z_2 \le c_4$ are assigned to region $R_4$, whereas observations with partitioning predictor $z_2$ greater than cutpoint $c_4$ are assigned to region $R_5$. The right panel of Figure 1 depicts how a single tree divides the resulting two-dimensional feature space into the respective regions. The same logic of recursive binary splitting applies analogously to a multi-dimensional feature space consisting of various predictor variables. Once the whole feature space is divided into regions, the same prediction is made for all observations that fall into one region. In the case of a regression tree, the prediction is the mean response of all observations, i.e., forecast errors, in said region. For a classification tree, the response is the sign of the forecast error, which is determined by a majority vote of all observations in the respective region.
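The two prediction rules for a single region can be sketched as follows. This is an illustrative Python fragment with hypothetical function names, and the handling of ties in the majority vote (the first sign encountered wins) is an assumption of the sketch.

```python
from collections import Counter

def regression_prediction(errors_in_region):
    """Regression tree: predict the mean forecast error of the region."""
    return sum(errors_in_region) / len(errors_in_region)

def classification_prediction(errors_in_region):
    """Classification tree: predict the majority sign of the forecast errors."""
    signs = ["positive" if e > 0 else "non-positive" for e in errors_in_region]
    # most_common breaks ties by order of first appearance (sketch assumption)
    return Counter(signs).most_common(1)[0][0]

# Hypothetical forecast errors that ended up in the same terminal region
region = [0.4, -0.1, 0.3]
print(regression_prediction(region))
print(classification_prediction(region))
```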

Regression and classification trees differ in the way they search for optimal splits. For both types of trees, a partitioning predictor and a cutpoint are chosen at each node in order to minimize a so-called node impurity measure. For regression trees, the residual sum of squares, $RSS$, measures node impurity under quadratic loss. For every pair of predictor variables, $z$, and cutpoints, $c$, the following equation is minimized:

$$RSS(z, c) = \sum_{s:\, z_s \le c} \left(e_s - \bar{e}_{L}\right)^2 + \sum_{s:\, z_s > c} \left(e_s - \bar{e}_{R}\right)^2, \tag{3}$$

where $s$ indexes the observations at a given node, $\bar{e}_{L}$ denotes the mean forecast error response for observations falling into the left subsequent region of that node, and $\bar{e}_{R}$ denotes the mean forecast error response for observations falling into the right subsequent region of said node. In other words, for every possible split, the emerging regions are chosen such that they minimize the RSS of the observations they contain vis-à-vis the mean response of that region.
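The search for the best cutpoint of a single predictor under quadratic loss can be sketched in a few lines of Python. The function names are hypothetical, and the sketch searches over the observed values of one predictor only; a full tree would repeat this search over all candidate predictors at every node.

```python
def rss(values):
    """Residual sum of squares around the mean of a region (0 for an empty region)."""
    if not values:
        return 0.0
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values)

def best_split(z, e):
    """Return (cutpoint, total RSS) minimising left RSS + right RSS for predictor z."""
    best = None
    # The largest observed value is skipped: it would leave the right region empty.
    for c in sorted(set(z))[:-1]:
        left = [ei for zi, ei in zip(z, e) if zi <= c]
        right = [ei for zi, ei in zip(z, e) if zi > c]
        total = rss(left) + rss(right)
        if best is None or total < best[1]:
            best = (c, total)
    return best

# Hypothetical data: forecast errors jump from negative to positive after z = 3
z = [1, 2, 3, 4, 5, 6]
e = [-1.0, -1.2, -0.8, 1.0, 1.1, 0.9]
print(best_split(z, e))
```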

Analogous to the linear logit/probit approach of Patton and Timmermann (2007) to testing forecast optimality, classification trees are a nonparametric way to implement a flexible loss function. Here, node impurity is measured by means of a Gini index, $G$:

$$G_m = \sum_{k \in \{+,-\}} \hat{p}_{mk} \left(1 - \hat{p}_{mk}\right), \tag{4}$$

where $\hat{p}_{mk}$ is the share of positive/negative ($k \in \{+,-\}$) forecast errors in the subsequent left/right region, $m$, of a given node. A small Gini index indicates node purity, i.e., that a region mostly contains forecast errors with the same sign.
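A minimal Python sketch of this impurity measure for the two sign classes is given below. Weighting the two child regions by their share of observations when scoring a split is the usual CART convention and an assumption here, as are the function names.

```python
def gini(errors):
    """Gini index of a region over the two classes 'positive' and 'non-positive'."""
    n = len(errors)
    if n == 0:
        return 0.0
    p_pos = sum(1 for e in errors if e > 0) / n
    p_neg = 1.0 - p_pos
    return p_pos * (1.0 - p_pos) + p_neg * (1.0 - p_neg)

def split_gini(left, right):
    """Size-weighted Gini index of the two regions created by a split (assumed weighting)."""
    n = len(left) + len(right)
    return len(left) / n * gini(left) + len(right) / n * gini(right)

print(gini([0.3, 0.1, -0.2, -0.4]))       # evenly mixed signs: maximal impurity
print(split_gini([0.3, 0.1], [-0.2, -0.4]))  # each region holds one sign only
```

A split that separates positive from negative forecast errors perfectly drives the index to zero, whereas an evenly mixed region yields the maximum value of 0.5 in the two-class case.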

The advantage of this recursive binary splitting process is that no restrictions regarding the linkages of the response variable and the predictor variables or among the predictor variables need to be imposed beforehand. These possibly complex linkages can easily be modeled by regression and classification trees. However, the prediction of a single tree suffers from high variance. Therefore, it is reasonable to combine a large number of trees into a so-called random forest (for an introduction, see Breiman 2001) in order to model the response variable. To this end, bootstrap resampling is used to estimate a random regression or classification tree on every bootstrapped dataset (for an introduction to bootstrap resampling, see James et al. 2015). Random trees have the distinct feature that they only use a random subset of the set of predictors, $x_1, \dots, x_n$. This decorrelates the predictions of the single classification or regression trees and reduces computation time. For every bootstrapped dataset, roughly two thirds of the data are used to model a classification or regression tree and, for the remaining third of the data, out of bag predictions are made (for an introduction to out of bag error estimation using tree-based models, see Hastie et al. 2009). By means of these out of bag predictions, the predictive performance of a tree can be measured. In the case of a classification problem, predictive performance is measured by the out of bag misclassification rate, whereas, for regression trees, the share of the explained out of bag variance of the forecast errors, which is a pseudo $R^2$-statistic, serves as a performance metric.
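The split into in-bag and out of bag observations follows directly from drawing with replacement. The Python sketch below (hypothetical function name, arbitrary seed) illustrates why roughly one third of the observations is left out of each bootstrap sample: the probability that an observation is never drawn is $(1 - 1/N)^N$, which approaches $1/e \approx 0.368$ as $N$ grows.

```python
import random

def bootstrap_split(n, rng):
    """Draw n indices with replacement; return (in-bag indices, out-of-bag indices)."""
    in_bag = [rng.randrange(n) for _ in range(n)]
    drawn = set(in_bag)
    out_of_bag = [i for i in range(n) if i not in drawn]
    return in_bag, out_of_bag

rng = random.Random(0)  # fixed seed only to make the illustration reproducible
in_bag, out_of_bag = bootstrap_split(1000, rng)
print(len(out_of_bag) / 1000)  # share of observations never drawn
```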

Following Behrens et al. (2018c) and Murphy et al. (2010), I implement permutation tests to measure the statistical significance of the predictive power of a random classification or regression forest. By repeatedly drawing samples without replacement of the forecast errors and estimating random forests on every purely random sample, a benchmark model, which should have no predictive value for the (signs of the) forecast errors, is established. The implied pseudo $R^2$-statistics and misclassification rates vary by chance. Hence, under the null hypothesis of forecast optimality, the respective metrics obtained by means of the original model should not be significantly better than the metrics obtained by permuting the data. In other words, if trade forecasts are optimal, the pseudo $R^2$-statistic computed for the original random forest must not exceed, for instance, the 95th percentile of the permuted sampling distribution for random regression forests. Likewise, for random classification forests, the misclassification error rate of the original model must not be smaller than the 5th percentile of the permuted sampling distribution. In summary, the permutation test is carried out along the following steps:

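1. Fit a random classification/regression forest on the original data by means of bootstrap resampling and compute the respective out of bag performance metric (misclassification error rate/pseudo $R^2$-statistic), $\theta^{orig}$.

2. Fit a random classification/regression forest on the permuted, i.e., randomized, data by drawing a sample without replacement and compute the respective out of bag performance metric, $\theta^{perm}_b$.

3. Repeat Step 2 1000 times and average over the respective performance metrics.

4. Compute p-value = $\frac{1}{1000} \sum_{b=1}^{1000} \mathbb{1}(\cdot)$, where $\mathbb{1}(\cdot)$ is an indicator function that equals one if the permuted model performs at least as well as the original model, i.e., if $\theta^{perm}_b \le \theta^{orig}$ for the misclassification error rate or $\theta^{perm}_b \ge \theta^{orig}$ for the pseudo $R^2$-statistic.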

The underlying concept is similar to the one of a standard F-test. If the set of predictors has joint predictive power with respect to the sign of the forecast error (classification problem under flexible loss) or the forecast error (regression problem under quadratic loss), the null hypothesis of forecast optimality can be rejected.
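The permutation logic can be sketched independently of the model that produces the performance metric. In the Python fragment below, `metric_fn` is a hypothetical placeholder for "fit a forest and return its out of bag metric"; to keep the example self-contained, a squared correlation stands in for the pseudo $R^2$-statistic. Neither function reproduces the rfUtilities routines used for the results in this paper.

```python
import random

def permutation_p_value(metric_fn, X, y, n_perm=1000, larger_is_better=True, seed=0):
    """Share of permuted datasets on which the model performs at least as well
    as on the original data."""
    rng = random.Random(seed)
    original = metric_fn(X, y)
    count = 0
    for _ in range(n_perm):
        y_perm = rng.sample(y, len(y))   # permutation: sampling without replacement
        permuted = metric_fn(X, y_perm)
        if larger_is_better:
            count += 1 if permuted >= original else 0   # e.g., pseudo R^2
        else:
            count += 1 if permuted <= original else 0   # e.g., misclassification rate
    return count / n_perm

def squared_corr(x, y):
    """Stand-in metric: squared correlation between one predictor and the response."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return 0.0 if sxx == 0 or syy == 0 else sxy * sxy / (sxx * syy)

# Hypothetical data in which the predictor clearly explains the forecast errors
x = list(range(20))
y = [0.5 * v + 0.1 * (-1) ** v for v in x]
print(permutation_p_value(squared_corr, x, y, n_perm=200, seed=1))
```

A small p-value means that the original model beats almost all models fitted to randomized data, so that forecast optimality is rejected.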

3. The Data

I collected data on annual export and import growth forecasts for Germany from 1970 to 2017 by six economic research institutes. Among the forecasters are four German economic research institutes (alphabetical order): Deutsches Institut für Wirtschaftsforschung Berlin (DIW), Hamburgisches Weltwirtschaftsarchiv/-institut (HWWI), ifo Institut für Wirtschaftsforschung Munich (ifo), Institut für Weltwirtschaft Kiel (IfW); a collaboration of German economic research institutes: Gemeinschaftsdiagnose (GD); and the Organisation for Economic Co-operation and Development (OECD). The latter is included as an international forecaster to see if German forecast institutes have a possible advantage because of geographical proximity (see, e.g., Bae et al. 2008; Berger et al. 2009; Malloy 2005). Due to data availability, which varies across forecasters and decades, I focus on annual forecasts with a forecast horizon of one year and half-a-year, which are published at the turn of the year and mid-year, respectively. In order to minimize data revision effects in computing forecast errors, I use initial release data from the German statistical office to measure realized values of export and import growth (see Statistisches Bundesamt 2018; data taken from “Wirtschaft und Statistik” publications). These realizations for the previous year are published by the German statistical office within the first months of a given year. Forecast errors are computed by subtracting these realized values for export or import growth from the forecast for the respective year (see also Behrens et al. 2018a):

$$e_{i,t,h,j} = f_{i,t,h,j} - y_{t,j}, \tag{5}$$

where $f_{i,t,h,j}$ denotes the annual forecast formed by forecaster $i$ in year $t$ (1970–2017), $h$ is the forecast horizon (half-a-year, one year), $j$ denotes the forecasted trade aggregate (export growth, import growth), and $y_{t,j}$ denotes the realization of the respective trade aggregate. Shortly after German reunification (i.e., between 1992 and 1993), the economic research institutes started to form forecasts for reunified Germany instead of only West Germany. I, therefore, adapt the corresponding time series for realized export or import growth for each economic research institute.

Table 1 summarizes the descriptive statistics for the forecast errors of all forecasters in the sample. Most observations ($N = 48$) are available for the longer-term export and import growth forecasts of DIW and GD, whereas HWWI contributes the fewest observations ($N = 31$) for their shorter-term forecasts. For the majority of forecasters, the mean forecast errors are close to zero. It is, however, striking that the reported mean forecast errors are generally larger than the ones usually obtained in the standard analyses of German inflation and economic growth forecasts (see, among others, Behrens et al. 2018c; Döpke et al. 2017). The relatively high mean trade forecast errors are in line with recent research (Behrens 2019) and can be explained by the fact that exports and imports are among the most volatile GDP components (Döhrn and Schmidt 2011). The standard deviations are, as one could have anticipated, generally larger for the longer-term forecasts. It is noteworthy that GD’s standard deviation is the highest for all but one forecasting scenario, in which it is second highest. This might be explained by the fact that GD is the only collaboration of forecasters in the sample and hence possible controversies among the participating forecasters regarding economic theory or estimation techniques might arise. However, it also needs to be taken into account that the GD forecasts are published on average two months before the other forecasts, resulting in a longer forecast horizon. In addition, I report coefficients of first-order autocorrelation, which hint at weak efficiency of the trade forecasts, as the coefficients are relatively small (see Section 4 for an in-depth analysis). Finally, the share of negative forecast errors is shown as an indicator of the symmetry of the forecasters’ loss functions. In case of symmetric loss, this share should equal 0.5. For most forecasters, the share of negative forecast errors is close to the 0.5-benchmark.
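The descriptive measures discussed above are simple to compute. The Python sketch below uses hypothetical function names and made-up numbers, not the data of this study, to show the forecast error definition (forecast minus realization), the share of negative forecast errors, and a first-order autocorrelation coefficient.

```python
def forecast_errors(forecasts, realizations):
    """Forecast error = forecast minus realized value."""
    return [f - y for f, y in zip(forecasts, realizations)]

def share_negative(errors):
    """Share of negative forecast errors; close to 0.5 under symmetric loss."""
    return sum(1 for e in errors if e < 0) / len(errors)

def first_order_autocorrelation(errors):
    """Sample autocorrelation between each error and its predecessor."""
    n = len(errors)
    mean = sum(errors) / n
    num = sum((errors[t] - mean) * (errors[t - 1] - mean) for t in range(1, n))
    den = sum((e - mean) ** 2 for e in errors)
    return num / den

# Made-up growth forecasts and realizations (in percent), for illustration only
forecasts = [3.0, 5.0, 2.0, 4.0]
realizations = [4.0, 4.0, 3.0, 3.0]
errors = forecast_errors(forecasts, realizations)
print(errors, share_negative(errors), first_order_autocorrelation(errors))
```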

The predictor variables I use to model a forecaster’s information set at the time of forecast formation are shown in Table 2. Recent studies on trade forecasts (D’Agostino et al. 2017) and their evaluation (Behrens 2019) show that typical trade variables as well as other macroeconomic variables are essential to accurately and efficiently predict future trade developments. My set of predictors, therefore, comprises, in addition to trade variables, several macroeconomic variables.

As trade variables, I include (following Behrens 2019; D’Agostino et al. 2017) export and import prices for Germany as well as German export and import volumes lagged one year and the German real effective exchange rate as a measure of international price competitiveness (Grimme et al. 2019; Lehmann 2015). I also include lags for the last four periods of the real effective exchange rate for Germany to account for a possible J-curve effect (see, e.g., Hacker and Hatemi-J 2004).

As other macroeconomic variables, I include (following Behrens et al. 2018b, 2018c, 2019; Döpke et al. 2017, who study German GDP and inflation forecasts) German industrial orders, German consumer and producer price indices, industrial production for Germany, the United States, France, the United Kingdom, Italy, and the Netherlands as leading indicators for Germany’s main trading partners (Guichard and Rusticelli 2011), as well as the oil price and German business tendency surveys for manufacturing (current and future tendency; on macroeconomic survey data as predictors, see Frale et al. 2010; Lehmann 2015). Finally, I include the OECD leading indicator for Germany as a composite indicator.

Following Behrens et al. (2018a), predictor variables are lagged by one period to account for a publication lag of the forecasts. That is, I assume that a forecast which was published, for instance, in July is formed by means of information available in June. I furthermore account for different publication lags of the predictors based on research by Drechsel and Scheufele (2012). As real-time data are not available at a monthly frequency for the examined time period, I minimize data revision effects of the affected variables by means of a backward-looking moving average of order 12 for the CPI, PPI, the real effective exchange rate, industrial production, orders, and trade variables (following Behrens et al. 2018b; Pesaran and Timmermann 1995), in order to proxy the information set which was available to a forecaster when a given forecast was formed.
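A backward-looking moving average of order 12 can be sketched as follows in Python. Whether the window includes the current month, and how the first eleven observations are treated, are assumptions of this sketch (the window ends at the current observation and incomplete windows are returned as `None`); the function name is hypothetical.

```python
def backward_moving_average(series, order=12):
    """Average of the current and the previous order-1 observations.

    Positions without a full window are returned as None (sketch assumption).
    """
    smoothed = []
    for t in range(len(series)):
        if t + 1 < order:
            smoothed.append(None)
        else:
            window = series[t - order + 1 : t + 1]
            smoothed.append(sum(window) / order)
    return smoothed

# Hypothetical monthly index values over two years
monthly = [float(v) for v in range(1, 25)]
smoothed = backward_moving_average(monthly)
print(smoothed[11], smoothed[23])
```

Because only past and current values enter each window, the smoothed series does not use information that was unavailable to the forecaster at the time.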

4. Empirical Results

The following empirical results were obtained by means of the R programming environment for statistical computing (R Core Team 2019) as well as the add-on packages “randomForest” (Liaw and Wiener 2002) for estimating random forests and “rfUtilities” (Murphy et al. 2010; Evans and Murphy 2017) for computing permutation tests. Regarding the hyperparameters of the random forests, I follow the common approach in the literature in setting the maximum number of terminal nodes of a given tree to five and setting the number of predictors used for growing a random forest to the square root of the total number of predictors (see, e.g., Behrens 2019; Hastie et al. 2009). For robustness checks, I report results for random forests consisting of 250, 500, and 750 trees. Numbers in bold indicate a significantly (at the 5%-level) smaller out of bag misclassification error (classification problem) or a significantly larger pseudo $R^2$ (regression problem) of the original model compared to the model estimated by means of the permuted data. In other words, cases in which I reject forecast optimality are printed in bold.

The results for the basic specification of the model are reported in Table 3. The null hypothesis of forecast optimality cannot be rejected for DIW for all but one specification (longer-term import growth forecasts under quadratic loss, 750 trees). For HWWI and ifo, the assessment depends on the assumed loss function. Assuming a quadratic loss function for the forecasters results in the rejection of forecast optimality for both longer-term export and import growth forecasts, whereas, under flexible loss, forecast optimality cannot be rejected for these forecasts. Here, when evaluating the forecasting performance, the underlying loss function plays a crucial role. For IfW, I reject forecast optimality for their shorter-term export forecasts (500 and 750 trees) for both types of loss function. Forecast optimality for IfW’s longer-term export forecasts and all import forecasts cannot be rejected. The same applies to all trade forecasts of GD under quadratic as well as flexible loss. For OECD, the results are mixed. Under quadratic loss, I reject forecast optimality only for OECD’s longer-term import growth forecasts, whereas, under flexible loss, forecast optimality is rejected only for two cases (250, 750 trees) of their longer-term export growth forecasts. Overall, forecast optimality is rejected in more cases if the underlying loss function is assumed to be quadratic. Allowing for a flexible loss function results in a more favorable assessment of forecast optimality with respect to the forecasters. Additionally, for the forecasters’ shorter-term forecasts, optimality cannot be rejected even under quadratic loss, except for IfW’s export growth forecasts.

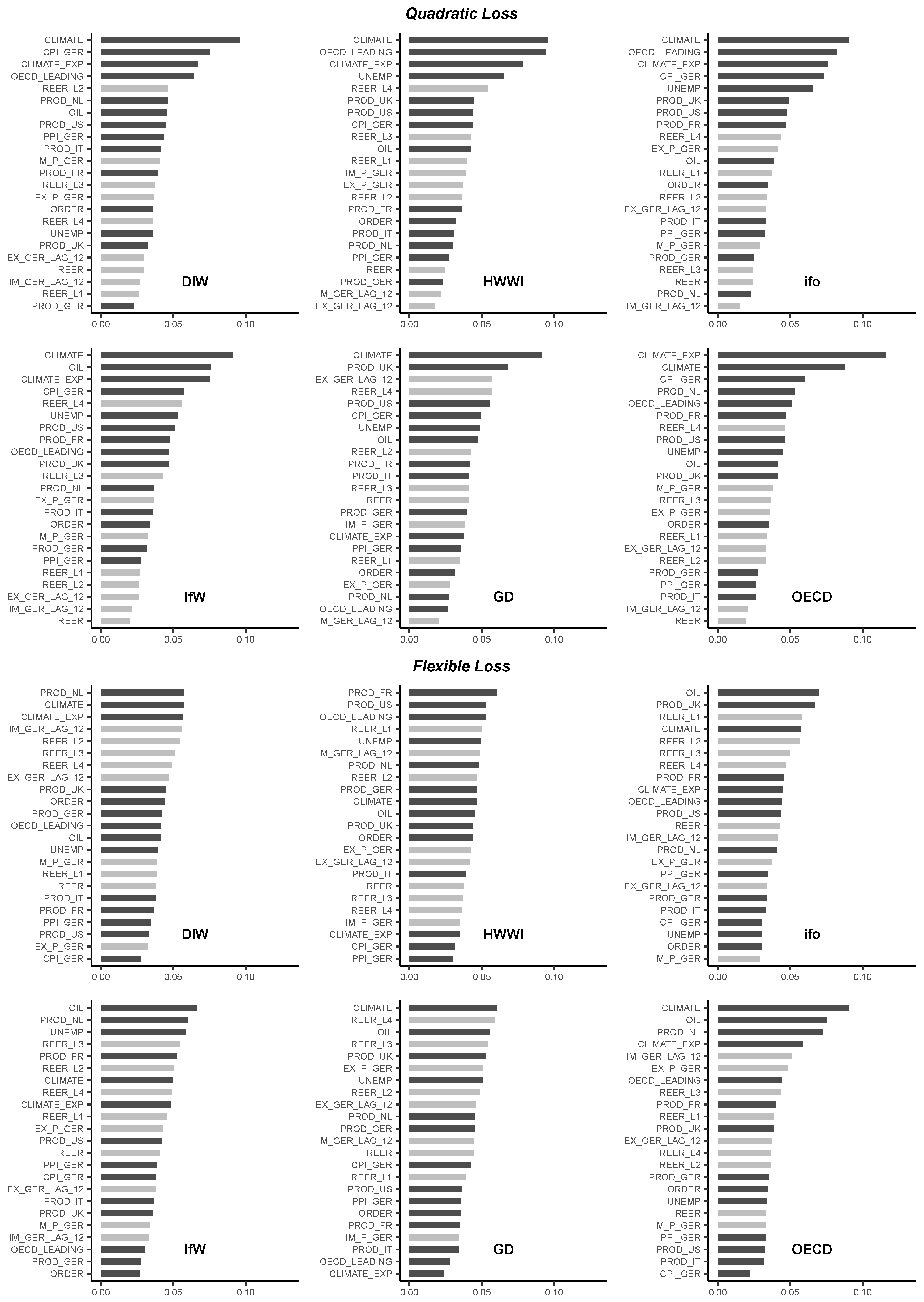

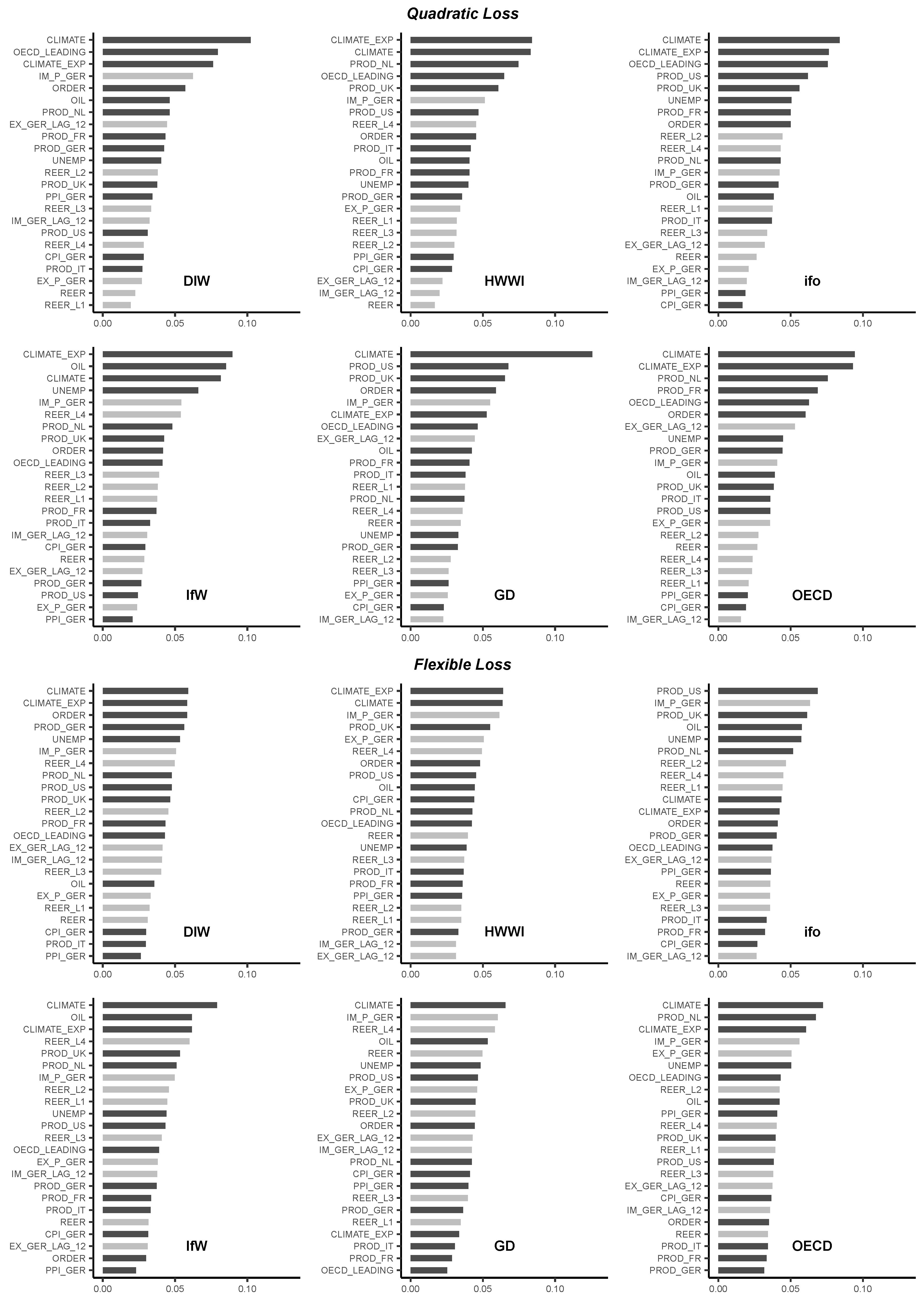

It is also of interest which predictors have explanatory power for the (sign of the) forecast error. I, therefore, report variable importance plots for the export growth forecast evaluation in Figure 2 and for the import growth forecast evaluation in Figure 3. Variable importance is computed by counting the times a predictor is chosen as splitting variable in the construction of a regression/classification tree. These counts are summed up over all trees and the share of each predictor as a splitting variable is computed by dividing by the number of trees in a forest, here 750. Hence, a large share indicates that this predictor has explanatory power for the (sign of the) forecast error. It is, therefore, not optimally incorporated into the respective forecast. On the other hand, if the share of a predictor variable is small, the predictor does not have explanatory power for the (sign of the) forecast error. It is, therefore, either optimally incorporated in the respective forecast or it does not have any explanatory power for the trade forecast. It is striking that, under quadratic loss, macroeconomic predictors other than typical trade aggregates (depicted in dark grey) are among the top splitting variables. In particular, macroeconomic survey indicators, such as the ifo business climate survey, are top splitting variables. Both findings are in line with recent research (Behrens 2019; D’Agostino et al. 2017). For most scenarios, lags of the German real effective exchange rate are among the top trade predictors (depicted in light grey), possibly hinting at a J-curve effect, which is not optimally incorporated in the analyzed trade forecasts. Another interesting observation is that, under flexible loss, the shares of the predictor variables as splitting variables are more evenly distributed. This is possibly due to the fact that the node impurity measure for regression trees (Equation (3)) punishes outliers more severely. Regression trees, therefore, split the feature space more often than classification trees, in order to minimize the effects of outliers on the RSS. Thus, top predictors are chosen as splitting variables multiple times when growing a regression tree and their overall share as splitting variables increases.
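The split-count importance measure described above can be sketched in Python as follows. The representation of a forest as a list of trees, each given by the names of its splitting variables, is a hypothetical simplification, and the predictor names are made up for illustration.

```python
from collections import Counter

def split_shares(forest):
    """Count how often each predictor is used as a splitting variable across all
    trees and divide by the number of trees in the forest."""
    counts = Counter(var for tree in forest for var in tree)
    n_trees = len(forest)
    return {var: count / n_trees for var, count in counts.items()}

# Hypothetical forest of four small trees
forest = [
    ["reer_lag1", "oil_price"],
    ["reer_lag1"],
    ["ifo_climate", "reer_lag1"],
    ["oil_price"],
]
print(split_shares(forest))
```

Since a predictor can be chosen several times within one tree, a share computed this way can exceed one, which is consistent with top predictors being selected repeatedly in regression trees.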

As further robustness checks, I report results estimated by means of random forest models with different hyperparameters in Table 4, Table 5 and Table 6. First, the maximum number of terminal nodes per tree is set to ten (Table 4). Second, I halve the number of predictors that are randomly chosen at each node in building a tree and report the results in Table 5. Finally, Table 6 shows results for a scenario in which the minimum number of observations at each terminal node is set to five. There are minor changes in the statistical significance of the permutation tests for most institutes, as can be expected when changing the model’s hyperparameters. For HWWI and ifo, I still observe that forecast optimality is rejected under quadratic loss for their longer-term export and import growth forecasts, whereas, when assuming flexible loss, I cannot reject forecast optimality in these cases. There are two exceptions for HWWI’s forecasts in scenario two (Table 5). For their longer-term import forecast computed with 500 trees and a quadratic loss function, the permutation test yields insignificant results, whereas the p-value for their shorter-term export forecast computed by means of 500 trees under flexible loss becomes significant compared to the basic specification. In scenario one (Table 4), there remains no evidence against the optimality of DIW’s longer-term import forecasts. For GD, the p-value for one of their shorter-term import forecasts under flexible loss (250 trees) becomes significant. The same is true for GD in scenario two (Table 5), in which the p-value for one of their longer-term import growth forecasts under quadratic loss (750 trees) also becomes significant. Overall, however, the main findings from the basic specification do not change qualitatively in any of the robustness scenarios. An underlying flexible loss function continues to result in more favorable assessments of the trade forecasts with respect to forecast optimality.

Recently, the effects of uncertainty, and of trade policy uncertainty in particular, on the economy and on international trade have been analyzed by several studies (see, among others, Baker et al. 2016; Fernández-Villaverde et al. 2015; Hassan et al. 2017). Caldara et al. (2019) have developed a trade policy uncertainty index, which measures monthly media attention to news related to trade policy uncertainty. Electronic archives of seven leading U.S. newspapers are analyzed by means of automated text-search in order to construct the index (the list of newspapers comprises Boston Globe, Chicago Tribune, Guardian, Los Angeles Times, New York Times, Wall Street Journal, and Washington Post; for the data and further details on the construction of the index, see Caldara et al. 2019). As U.S. trade policy uncertainty likely affects international as well as German trade, I add the trade policy uncertainty measure by Caldara et al. (2019) as well as a tariff volatility measure by the same authors to the list of predictors to proxy uncertainty regarding international trade and report the results in Table 7 (quarterly tariff volatility is estimated by a stochastic volatility model by Caldara et al. 2019). The results mostly do not change qualitatively. An exception is IfW, for which shorter-term export growth forecasts under flexible loss are no longer significant. For ifo, the shorter-term import growth forecast under flexible loss computed by means of 250 trees is now significant compared to the basic specification. For the most part, the trade uncertainty measures do not seem to add further explanatory power for the forecast errors.

Next, I check if the forecasters form weakly efficient forecasts, where weak efficiency holds if a forecaster’s current forecast error cannot be explained by its preceding forecast error (see, among others,

Behrens et al. 2018c;

Öller and Barot 2000;

Timmermann 2007). In other words, adding the lagged forecast error to the list of predictors should not lead to more efficient forecasts under the assumption of weak forecast efficiency. The results of the permutation tests reported in

Table 8, hence, should not be more significant than in the basic specification. Here, I observe only minor changes for single specifications of DIW, HWWI, and OECD, suggesting that, for all forecasters, the lagged forecast errors do not seem to have further predictive value, such that the forecasts are in line with the concept of weak efficiency.
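To make the weak-efficiency check concrete, the following minimal Python sketch shows how a lagged forecast error could be appended to the list of predictors. The function name `add_lagged_error`, the dictionary layout, and the sign convention (realization minus forecast) are illustrative assumptions and are not taken from the original analysis. The sketch also assumes that the rows are sorted by year without gaps; the first observation is dropped because it has no preceding forecast error.

```python
def add_lagged_error(rows):
    """Append the previous period's forecast error to each row's predictors.

    rows: list of dicts sorted by year, each with the hypothetical keys
    'year', 'forecast', 'realization', and 'predictors' (list of floats).
    """
    augmented = []
    previous_error = None
    for row in rows:
        # Assumed sign convention: error = realization - forecast.
        error = row["realization"] - row["forecast"]
        if previous_error is not None:
            augmented.append({
                "year": row["year"],
                "error": error,
                # The lagged error becomes one additional predictor.
                "predictors": list(row["predictors"]) + [previous_error],
            })
        previous_error = error
    return augmented
```

Under weak efficiency, the augmented predictor list should not explain the current forecast error any better than the original list.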

Because Germany is one of the world’s largest exporters and is embedded in interwoven transnational production chains, its exports and imports are interdependent. I, therefore, add each forecaster’s import growth forecast to the list of predictors for their export growth forecast and vice versa in order to study whether the respective trade forecast has predictive value for the other trade forecast, and report the results in

Table 9. Again, the results do not change qualitatively with respect to the basic specification. There are, however, three exceptions, where insignificant

p-values in the basic specification become significant when adding the respective other trade forecast to the forecasters’ information set, namely IfW’s and GD’s import forecasts as well as OECD’s export forecasts all under flexible loss.

Based on work by

Andrade and Le Bihan (

2013), who find evidence of rational inattention among professional forecasters, I extend the forecasters’ information set by adding the lagged realizations of the predictor variables (see also (

Behrens et al. 2018b), for an application to German GDP growth and inflation forecasts). The results presented in

Table 10 show only minor changes in the magnitude of the permutation tests’

p-values. For two forecasters, results of one permutation test scenario become significant compared to the basic specification, namely DIW’s longer-term export forecasts (750 trees) and OECD’s shorter-term import forecasts (250 trees) both under quadratic loss. Forecast optimality continues to be rejected in more cases if the underlying loss function is assumed to be quadratic.

Recent research in the forecasting area has focused on possible effects of the 2007/2008 financial crisis on macroeconomic forecasting (see, for instance,

Drechsel and Scheufele 2012;

Frenkel et al. 2011;

Müller et al. 2019).

Table 11 reports results for a scenario in which forecasts from the years 2007 and 2008 are deleted from the sample (I follow

Behrens et al. 2018c, in this approach). In doing so, I check whether severe forecast errors made in these years affect the results of the entire sample. However, there are no qualitative changes in the results of the permutation tests. Only for OECD, one specification (250 trees) of their longer-term import growth forecasts under flexible loss, for which I cannot reject forecast optimality in the basic specification, now becomes significant.

In the same vein, I delete forecasts for the years 1992 and 1993 to check for possible biases due to large forecast errors around the time of German reunification (see also

Behrens 2019; the German statistical office switched its national accounts statistics from West Germany to reunified Germany in 1992). The results documented in

Table 12 are especially interesting for ifo, as forecast optimality for both longer-term trade forecasts under quadratic loss can no longer be rejected. This result illustrates the high volatility of results obtained under the assumption of a quadratic loss function.
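The two sample-exclusion scenarios (financial crisis and reunification) amount to filtering observations by year before the tests are rerun. A minimal sketch in Python follows; the helper `drop_years`, the constants, and the `year` key are hypothetical names, and whether `year` refers to the target year or the publication year of a forecast depends on the data definition, which is not restated here.

```python
# Years excluded in the two robustness scenarios described above.
CRISIS_YEARS = (2007, 2008)
REUNIFICATION_YEARS = (1992, 1993)


def drop_years(rows, excluded_years):
    """Return the rows whose 'year' entry is not in excluded_years.

    rows: list of dicts, each with a hypothetical 'year' key.
    The input list is left unchanged.
    """
    excluded = set(excluded_years)
    return [row for row in rows if row["year"] not in excluded]
```

Calling `drop_years(rows, CRISIS_YEARS)` or `drop_years(rows, REUNIFICATION_YEARS)` would then yield the reduced sample on which the permutation tests are recomputed.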

Finally, I report results for a scenario in which I pool the data across forecast institutes in order to enlarge the sample size. However, a disadvantage of this approach is that it is no longer possible to study the heterogeneity of the forecast institutes.

Table 13 shows results for a pooled version of the basic specification computed by means of random forests consisting of 500 trees. In this scenario I add dummy variables for each institute to the list of predictors. In case of a classification problem, I report results for an alternative dependent variable,

, since it is possible that some forecast errors are exactly equal to zero, as the institutes’ forecasts and the realizations of export and import growth are published as rounded two-digit numbers. I strongly reject forecast optimality for both trade aggregates, forecast horizons, types of loss function, and dependent variables.
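The pooling step with institute dummies can be sketched as follows. This is a minimal illustration in Python, not the original implementation: the function name `pool_with_dummies` and the input layout (a dictionary mapping institute names to lists of `(error, predictors)` pairs) are assumptions. One dummy per institute is appended, which is unproblematic for tree-based methods because they do not suffer from the collinearity that a full set of dummies would cause in a linear regression.

```python
def pool_with_dummies(samples_by_institute):
    """Stack all institutes' observations and append institute dummies.

    samples_by_institute: dict mapping an institute name to a list of
    (forecast_error, predictors) pairs, where predictors is a list of floats.
    Returns the sorted institute names and the pooled list of
    (forecast_error, predictors + dummies) pairs.
    """
    institutes = sorted(samples_by_institute)
    pooled = []
    for name in institutes:
        # One-hot encoding: 1.0 in the position of this institute, else 0.0.
        dummies = [1.0 if name == other else 0.0 for other in institutes]
        for error, predictors in samples_by_institute[name]:
            pooled.append((error, list(predictors) + dummies))
    return institutes, pooled
```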

5. Conclusions

I build on research by

Behrens (

2019) on the nonparametric evaluation of German trade forecasts. I extend the

Behrens et al. (

2018c) nonparametric adaptation of the

Patton and Timmermann (

2007) approach to testing forecast optimality when the forecaster’s loss function is unknown. By means of random forests, I evaluate the optimality of German export growth and import growth forecasts, both under flexible and quadratic loss. To this end, I study annual trade forecasts from 1970 to 2017 published by four major German economic research institutes, one collaboration of German economic research institutes, and one international forecaster. A forecast is considered optimal if a set of predictors, which models the forecaster’s information set at the time of forecast formation, has no explanatory power for the corresponding forecast error in the case of a regression problem under quadratic loss. In the case of a classification problem under flexible loss, the set of predictors should have no explanatory power for the sign of the forecast error if the forecast is optimal.
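The logic of the two optimality criteria can be illustrated with a small Python sketch. It is a simplified stand-in and not the procedure used in this paper: instead of a random forest, the fit statistic is the squared correlation between a single predictor and the dependent variable, and the permutation scheme (shuffling the dependent variable) is a generic one. All function names, the sign convention (realization minus forecast), and the coding of a zero forecast error as 0 are assumptions made for illustration.

```python
import random


def quadratic_loss_target(realization, forecast):
    # Regression problem: the forecast error itself (assumed sign convention).
    return realization - forecast


def flexible_loss_target(realization, forecast):
    # Classification problem: only the sign of the forecast error is used.
    # Coding a zero error as 0 is an assumption of this sketch.
    return 1 if realization - forecast > 0 else 0


def squared_correlation(x, y):
    # Stand-in fit statistic; a random forest's fit would take its place.
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0  # a constant series carries no explanatory power
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    return sxy * sxy / (sxx * syy)


def permutation_p_value(predictor, target, statistic=squared_correlation,
                        n_permutations=999, seed=0):
    """Share of permutations whose fit is at least as good as the observed fit."""
    rng = random.Random(seed)
    observed = statistic(predictor, target)
    shuffled = list(target)
    at_least_as_large = 0
    for _ in range(n_permutations):
        # Shuffling breaks any link between predictor and target.
        rng.shuffle(shuffled)
        if statistic(predictor, shuffled) >= observed:
            at_least_as_large += 1
    # Add-one correction so that the p-value is never exactly zero.
    return (at_least_as_large + 1) / (n_permutations + 1)
```

A small p-value indicates that the predictor explains the forecast error (or its sign) better than would be expected by chance, which counts as evidence against forecast optimality.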

For all but one forecaster, I cannot reject the optimality of export or import growth forecasts under either quadratic or flexible loss at a forecast horizon of half a year. For longer-term forecasts, at a forecast horizon of one year, the results are more heterogeneous. For two forecasters, optimality of their longer-term trade forecasts is rejected under quadratic loss, whereas under flexible loss, forecast optimality cannot be rejected for these forecasters. Hence, the loss function plays a crucial role in the evaluation of these forecasters. Overall, an underlying flexible loss function results in a more favorable assessment of the trade forecasts with respect to forecast optimality. This analysis lends support to the study of

Behrens (

2019), who finds that macroeconomic predictors, other than typical trade aggregates, are not optimally incorporated in multivariate trade forecasts under quadratic loss. Variable importance plots show that, under quadratic loss, top predictors for the (sign of the) forecast error are not typical trade aggregates; however, under flexible loss, this finding cannot be confirmed. For these plots, the shares of predictors as splitting variables are more evenly distributed. Possibly, this is because, under quadratic loss, outliers are punished more severely, which in turn causes regression trees to split the feature space more often. Thus, top predictors are chosen more often as splitting variables than under flexible loss.

It needs to be mentioned that the results computed under flexible loss only hold if the assumptions underlying the

Patton and Timmermann (

2007) test for forecast optimality are fulfilled. It is, for instance, possible that the forecasters’ loss functions depend on variables other than the forecast error or that the loss functions are not homogeneous in the forecast error. It is, therefore, interesting for future research to see whether these results hold for a multivariate analysis of both export and import growth forecasts under flexible loss. A multivariate analysis combining export and import growth forecasts would also be interesting when analyzing forecasts of a country’s trade balance, since this aggregate is often the subject of political debates. Furthermore, the nonparametric application of the

Patton and Timmermann (

2007) test of forecast optimality, brought forward by

Behrens et al. (

2018c), could be applied to other macroeconomic forecasts and/or countries.