Abstract

A sandstorm image is formed by a process similar to that of a hazy image, but the two differ in color channel balance. In general, a hazy image has balanced color channels and no color cast, whereas a sand dust image has a yellowish or reddish color cast because sand particles degrade the color channels. If a sand dust image is enhanced without color channel compensation, the enhanced image acquires a new color cast. A color channel compensation step is therefore needed to enhance a sandstorm image naturally. To balance the degraded color channels, this paper proposes a color balance method that uses the eigenvalue of each color channel. The eigenvalue reflects the image’s features, and degraded and undegraded images have different eigenvalues in each color channel, so the eigenvalues can be used to balance a degraded image naturally. Because the color-balanced image has the same features as a hazy image, it is then enhanced with a dehazing method based on the dark channel prior (DCP). Because the ordinary DCP method has weak points, this work proposes a compensated dark channel prior, named the adaptive DCP (ADCP) method. The proposed method is objectively and subjectively superior to existing methods when applied to various images.

1. Introduction

A sandstorm image has different features from a hazy image, although both are obtained by a similar process. In general, a hazy image appears dimmed and darkened by haze particles, but it has no color cast because its color channels are not degraded. A sandstorm image is also captured through the atmosphere, but it has a yellowish or reddish color cast because sand particles degrade the color channels. Because sand dust images and hazy images are formed in a similar way, dehazing methods are generally used to enhance sand dust images. However, because a sandstorm image has a color cast, the enhanced image will have color distortion if no color channel compensation step is employed. Therefore, a color balancing step is needed to enhance a sandstorm image naturally. Because hazy images and color-balanced sandstorm images have similar features, a dehazing algorithm is then needed to improve the color-balanced sandstorm image.

There have been many studies on enhancing hazy images. He et al. [1] improved hazy images using the dark channel prior (DCP) method, which estimates the dark region of each color channel, and used it to estimate the image’s backscatter light and transmission map. The DCP method [1] is frequently used in dehazing. However, the DCP is estimated over a block of fixed size, so the enhanced image has a halo effect, such as a square block artifact. To compensate for this effect, He et al. [1] used guided image filtering [2]. Meng et al. enhanced images using boundary constraints on the transmission map [3]. Meng et al.’s method is similar to the DCP method [1] in how it estimates the transmission map, but its transmission map has less of a halo effect than that of the DCP method, especially in the sky region [3]. Zhu et al. dehazed images using the color attenuation prior (CAP); their method constructs a model of the scene depth of the hazy image and estimates the transmission map [4]. Zhu et al.’s method is good at dehazing, but it does not consider the scattering coefficient of the image [4]. Dhara et al. improved hazy images using adaptive air light refinement and nonlinear color balancing [5].

A dehazing algorithm can enhance a hazy image when there is no color distortion. However, most sand dust images have yellowish or reddish color distortion. When applied to such images, existing dehazing algorithms introduce new color distortion because they lack a color compensation step. Therefore, a color compensation procedure must be considered to enhance distorted images effectively. Gao et al. enhanced distorted sand dust images using color correction and a transmission map [6]. To compensate for the color distortion, Gao et al. compared the mean of each color channel with the mean of the red channel, and, to estimate the transmission map, they used the reverse blue channel prior (RBCP) method [6]. Gao et al.’s color correction method is good at improving a degraded sand dust image, but it is not sufficient for severely distorted sand dust images because of the rare color component. Cheng et al. proposed a sand dust enhancement algorithm using blue channel compensation and a guided image filter [2,7]. This method corrects the color distortion using blue channel compensation and white balancing [7,8]. Shi et al. improved sand dust images using the mean shift of the color components and normalized gamma correction [9]. This method is efficient at enhancing distorted sand dust images, but the mean shift can introduce new color distortion. Shi et al. also suggested a sand dust image improvement method using normalized gamma correction and the mean shift of the color ingredients [10]; its weak point is likewise that the mean shift can cause color distortion. Naseeba et al. proposed a dehazing algorithm that combines three modules: a depth estimation module (DEM), a color analysis module (CAM), and a visibility restoration module (VRM) [11]. Naseeba et al.’s DEM used a median filter to refine the transmission map, thus reducing the halo effect, and their CAM used the gray world assumption [11,12]. Al-Ameen proposed a sand dust image enhancement method using tuned fuzzy intensification [13]. This method corrects the color using three thresholds for the color channels [13], which is efficient for color channel correction. However, a constant value is used during the tuning procedure, which causes a halo effect similar to color distortion, so this method is not suitable for a wide variety of images.

Recently, machine learning-based dehazing algorithms have been studied. Ren et al. enhanced images using multiscale convolutional neural networks (CNNs) [14]. This method uses two networks, one for prediction and one for refinement [14]. Wang et al. enhanced images using the atmospheric illumination prior [15]. This method estimates the atmospheric illumination of the hazy image from the luminance channel and enhances the image using a multiscale CNN [15].

Many dehazing methods have been studied, and each is good at improving dust images without color distortion. However, if a certain color channel is degraded, as in a sand dust image, a new distortion can occur even though the image is enhanced. A color correction procedure is therefore needed to enhance a distorted sand dust image. Consequently, to enhance the sand dust image naturally without a halo effect, this paper proposes two steps. The first is a color correction step using a measure that reflects the characteristics of the image, and the second is adaptive transmission map estimation. The images corrected by the proposed method have features similar to those of hazy images, so an image-adaptive dehazing algorithm is applied to enhance them. In the comparisons, the proposed method is subjectively and objectively superior to existing methods.

2. Proposed Method

2.1. Color Balance

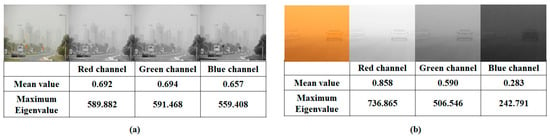

Sandstorm images have features similar to those of hazy images, but they have a yellowish or reddish color cast. Because the color channels of a sandstorm image are degraded and imbalanced, a color balancing process is needed to enhance the image naturally. If the order of the color correction step and the dehazing step is switched, the enhanced image appears dimmed by the color balancing and the hazy components are also increased. Therefore, the color correction step is performed as preprocessing before the dehazing procedure. If the color channels are balanced, the image appears natural, without a color cast. The eigenvalue is used in various image processing areas [16,17]. Tripathi et al. [17] analyzed images using the eigenvalue because it contains image features. Therefore, this paper balances the degraded color channel using the eigenvalue. The eigenvalue reflects features of the image, such as the contrast. If the contrast of the image is low, the mean value of the image is also low, and the eigenvalue changes with the contrast in the same way. Figure 1 shows the image features and the eigenvalue for different image contrasts. As shown in Figure 1, if the image’s mean value is high, the contrast is high and the eigenvalue is also high; otherwise, the eigenvalue is low. Sand dust images can be divided into two categories: those with undegraded color channels and those with degraded color channels. As shown in Figure 1a, the undegraded sand dust image has balanced color channels, and the eigenvalues of the channels are uniform. However, as shown in Figure 1b, the color-degraded sand dust image has low contrast in the blue channel, and the eigenvalue of this channel is lower than that of the other color channels.

Figure 1.

Eigenvalues in different circumstances: (a) the eigenvalues of the undegraded sand dust image; (b) the eigenvalues of the degraded sand dust image.

In other words, the eigenvalue is able to indicate the image’s features, and the color channels need to be balanced to enhance the degraded sand dust image. This paper therefore uses the eigenvalue of the image to balance the color channels. The eigenvalue of the image is obtained as follows:

$$A\mathbf{x} = \lambda \mathbf{x} \tag{1}$$

where $\mathbf{x}$ is a nonzero vector (the eigenvector), $A$ is the image matrix, and $\lambda$ is the eigenvalue. Equation (1) can also be written as follows:

$$(A - \lambda I)\mathbf{x} = 0 \tag{2}$$

where $I$ is the unit matrix. Because $\mathbf{x}$ is a nonzero vector, the image’s eigenvalues are obtained from the determinant. For the eigenvalue decomposition, the image is fit to a square matrix whose size is the larger of its number of rows and columns. The image’s eigenvalue is obtained as follows:

$$\det(A - \lambda I) = 0 \tag{3}$$
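As an illustration of the eigenvalue computation described here, the following Python sketch computes the maximum eigenvalue of each color channel with NumPy. Zero-padding each channel to a square matrix is an assumption about how the image is fit to the larger of its two dimensions, and the helper names (`to_square`, `max_eigenvalue`, `channel_max_eigenvalues`) are hypothetical rather than taken from the proposed method.

```python
import numpy as np


def to_square(channel: np.ndarray) -> np.ndarray:
    """Zero-pad a 2-D channel to n x n with n = max(rows, cols).

    Assumption: the text only says the image is fit to the larger
    dimension; zero-padding is one possible way to do that.
    """
    rows, cols = channel.shape
    n = max(rows, cols)
    square = np.zeros((n, n), dtype=np.float64)
    square[:rows, :cols] = channel
    return square


def max_eigenvalue(channel: np.ndarray) -> float:
    """Largest eigenvalue magnitude of the squared-up channel."""
    eigenvalues = np.linalg.eigvals(to_square(channel))
    # eigvals may return complex values for a non-symmetric matrix,
    # so the magnitude is used to pick the dominant eigenvalue.
    return float(np.max(np.abs(eigenvalues)))


def channel_max_eigenvalues(image: np.ndarray) -> np.ndarray:
    """Maximum eigenvalue of each channel of an H x W x C image."""
    return np.array(
        [max_eigenvalue(image[..., c]) for c in range(image.shape[-1])]
    )
```

Scaling a matrix by a positive factor scales its eigenvalues by the same factor, so in this sketch a darker copy of a channel always has a proportionally smaller maximum eigenvalue, which is consistent with the darkest channel having the lowest eigenvalue.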

The variation of the eigenvalue with respect to the image is shown in Figure 1. If the image’s mean value is low, the image is dark and its eigenvalue is also low; if the image is bright, its mean value and eigenvalue are high. The eigenvalue therefore reflects the features of the image. The color channels of a sandstorm image are imbalanced: the red channel is the brightest and the blue channel is the darkest. If the color channels are balanced, the image has a natural color without a color cast. As shown in Figure 1, the brightest image has the highest eigenvalue and the darkest image has the lowest eigenvalue. Using this relationship between the eigenvalue and the image’s contrast, this paper proposes a color balance method for degraded sand dust images that uses each channel’s eigenvalue. As mentioned above, because the red channel is the brightest of the color channels, its eigenvalue is the highest, and because the blue channel is the darkest, its eigenvalue is the lowest. To apply the channels’ eigenvalues consistently, a normalization step is needed. The normalized eigenvalue of the image is obtained as follows:

where the terms are the normalized eigenvalue, the maximum eigenvalue in each color channel, and the largest of the color channels’ maximum eigenvalues. If the eigenvalue is high, then the difference is greater in the degraded color channels. Thus, this paper normalizes the eigenvalue using the maximum eigenvalues of the color channels. To compensate for the degraded color channel, the normalized eigenvalue is applied as follows:

where the terms are the balanced image, the input image, and the location of the pixel. Using Equation (5), the undegraded color channel is maintained and not enhanced, while only the degraded color channels are enhanced. The more degraded a channel is, the more it is enhanced.
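The balancing behavior described above can be sketched in Python as follows. The exact forms of Equations (4) and (5) are not reproduced in this text, so the sketch rests on two loudly stated assumptions: the normalized eigenvalue is taken as each channel’s maximum eigenvalue divided by the largest of the three, and each channel is divided by its normalized eigenvalue. The function names and the zero-padding rule are also hypothetical. Under these assumptions the strongest channel is left unchanged and weaker channels are amplified in proportion to their degradation, which matches the behavior stated in the text.

```python
import numpy as np


def max_eigenvalue(channel: np.ndarray) -> float:
    """Largest eigenvalue magnitude of a channel zero-padded to a square."""
    rows, cols = channel.shape
    n = max(rows, cols)
    square = np.zeros((n, n), dtype=np.float64)
    square[:rows, :cols] = channel
    return float(np.max(np.abs(np.linalg.eigvals(square))))


def balance_colors(image: np.ndarray, eps: float = 1e-12) -> np.ndarray:
    """Balance an H x W x 3 image in [0, 1] using per-channel eigenvalues.

    Assumed rule (not confirmed by the source equations):
        normalized_c = lambda_c / max(lambda_r, lambda_g, lambda_b)
        balanced_c   = image_c / normalized_c
    """
    image = image.astype(np.float64)
    lam = np.array([max_eigenvalue(image[..., c]) for c in range(3)])
    # Normalized eigenvalue: 1 for the strongest channel, below 1 otherwise.
    normalized = lam / max(lam.max(), eps)
    # Gain is 1 for the undegraded channel and grows as a channel weakens.
    gain = 1.0 / np.maximum(normalized, eps)
    return np.clip(image * gain, 0.0, 1.0)
```

When no pixel saturates at the clipping bound, the balanced channels in this sketch have equal maximum eigenvalues, because scaling a matrix scales its eigenvalues by the same factor.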

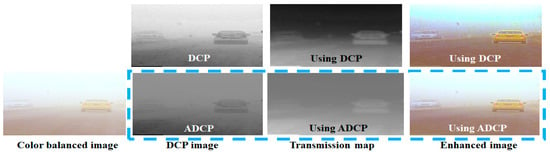

Figure 2 shows images that were color-balanced using the proposed method. As shown in Figure 2, the proposed color balancing method improves the degraded color channels and yields images without a color cast. The tables below the images indicate the maximum eigenvalue of each color channel. As shown in the tables, the eigenvalues of the degraded channels in the input images are lower than those of the less degraded or undegraded channels, whereas the eigenvalues of the color channels in the balanced images are similar to each other. Therefore, making the channels’ eigenvalues uniform is a reasonable method for balancing the color channels.

Figure 2.

The input images and the color-balanced images obtained using the proposed method. The tables below the images indicate the maximum eigenvalue of each color channel.

2.2. Estimation of the Adaptive Dark Channel Prior

The color-balanced image obtained using the proposed method is similar to a hazy image because a sand dust image is formed by the same process as a hazy image. Thus, a dehazing step is needed to enhance the balanced image. The DCP method [1] is frequently used to enhance hazy images. The DCP assumes that, in a clean image, at least one of the three color channels is close to zero, and it is described as follows [1]:

where the terms are the DCP image, the patch (kernel size 15 × 15), the color channel index, and the backscatter light of the balanced image. The DCP method estimates the image’s darkest region, which is used to estimate the atmospheric light [1]. Additionally, the propagation path of the light is described by the transmission map, which is obtained as the reverse of the DCP [1]:

where the terms are the transmission map and a constant value that applies the “aerial perspective” [1,18,19] to the image. The DCP [1] estimates the image’s dark region, but it can generate a halo effect in the enhanced image. If the image has a sky region, the DCP is estimated to be bright there, because the sky is bright in most areas, and dark elsewhere. Because the transmission map and the DCP are in a trade-off relationship, a bright DCP yields a dark transmission map, which causes a halo effect in the enhanced image. This occurs because the transmission map is applied in reverse in the dehazing procedure.
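The trade-off between the DCP and the transmission map can be made concrete with a short Python sketch of the ordinary DCP of He et al. [1], not of the proposed ADCP. It assumes an H × W × 3 image scaled to [0, 1], an odd patch size, and a backscatter light that is passed in rather than estimated. The default `omega=0.95` is the value commonly used with He et al.’s method and is an assumption here, since the constant used in this paper is not given in the text; the function names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def dark_channel(image: np.ndarray, atmosphere: np.ndarray,
                 patch: int = 15) -> np.ndarray:
    """Dark channel of an H x W x 3 image, normalized by the backscatter light.

    `patch` is assumed to be odd so the output keeps the input size.
    """
    # Divide each channel by its backscatter light (broadcast over pixels).
    normalized = image / np.maximum(atmosphere, 1e-6)
    # Minimum over the three color channels at every pixel.
    per_pixel_min = normalized.min(axis=2)
    # Minimum over the local patch, with edge replication at the borders.
    pad = patch // 2
    padded = np.pad(per_pixel_min, pad, mode="edge")
    windows = sliding_window_view(padded, (patch, patch))
    return windows.min(axis=(2, 3))


def transmission_map(image: np.ndarray, atmosphere: np.ndarray,
                     omega: float = 0.95, patch: int = 15) -> np.ndarray:
    """Transmission as the reverse of the dark channel: t = 1 - omega * dark."""
    return 1.0 - omega * dark_channel(image, atmosphere, patch)
```

In this sketch a uniformly bright region, such as sky, produces a dark channel near one and therefore a transmission near `1 - omega`, which illustrates why a bright DCP gives a dark transmission map.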

Therefore, this paper proposes the adaptive DCP (ADCP) method to compensate for the DCP, and it is described as follows:

where is the adaptive DCP, if the image has a sky region, and the intensity of is higher than that of 1 − because of the sky region being bright. Thus, if the result of the max operation between and 1 − is applied in the estimation procedure of DCP, the bright sky region is able to be compensated in the estimated DCP image. Additionally, the controls the intensity of . If the is close to zero, is bright. Therefore, is able to control the intensity of . If the has a sky region, then it is bright, and the mean value is also high. Therefore, when using the mean value of as a measure, the image adaptive DCP and transmission map are obtained, and these are able to estimate the haze region suitably, despite the fact that the image has a sky region. The is obtained as follows:

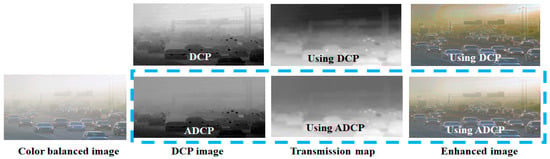

From Equations (8) and (9), although the image has a sky region and the is bright, due to the α also being high, the is dark, and it is thus able to estimate the image’s dark region without any hindrance. In Figure 3 and Figure 4, the differences between the existing DCP and the proposed ADCP methods when using them to estimate the transmission map and to enhance hazy images are presented (blue dotted square indicates the proposed method). As shown in Figure 3 and Figure 4, the DCP images estimated by the proposed ADCP method are darker than those estimated by the existing DCP method, despite the images having a sky region.

Figure 3.

The comparison between the existing DCP [1] and proposed ADCP method, and their transmission maps and enhanced images (blue dotted square indicates the proposed method).

Figure 4.

The comparison between the existing DCP [1] method and the proposed ADCP method, and their transmission maps and enhanced images (blue dotted square indicates the proposed method).

As a result, the image enhanced using the proposed ADCP method has no halo effect, in contrast to the DCP method. In particular, the transmission map estimated using the existing DCP method [1] has a halo effect in the sky region, but the transmission map estimated using the proposed ADCP does not. Additionally, to refine the transmission map, the guided image filter [2] is applied with the kernel size set to 8 and eps set to 0.42.
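For readers unfamiliar with the refinement step, the following Python sketch implements the basic single-channel guided image filter [2] with NumPy only. It is a minimal illustration, not the implementation used in this work: the function names are hypothetical, the borders are handled by edge replication, and treating the stated kernel size of 8 as the window radius is an assumption. In practice the guide would be a grayscale version of the image and the source would be the transmission map.

```python
import numpy as np


def box_mean(x: np.ndarray, radius: int) -> np.ndarray:
    """Mean over a (2 * radius + 1)^2 window with edge replication."""
    k = 2 * radius + 1
    padded = np.pad(x, radius, mode="edge")
    # Integral image with a leading row and column of zeros.
    integral = np.pad(padded, ((1, 0), (1, 0)), mode="constant")
    integral = integral.cumsum(axis=0).cumsum(axis=1)
    total = (integral[k:, k:] - integral[:-k, k:]
             - integral[k:, :-k] + integral[:-k, :-k])
    return total / (k * k)


def guided_filter(guide: np.ndarray, src: np.ndarray,
                  radius: int = 8, eps: float = 0.42) -> np.ndarray:
    """Edge-preserving smoothing of `src` steered by `guide` (both 2-D)."""
    mean_i = box_mean(guide, radius)
    mean_p = box_mean(src, radius)
    cov_ip = box_mean(guide * src, radius) - mean_i * mean_p
    var_i = box_mean(guide * guide, radius) - mean_i * mean_i
    # Local linear model: src is approximated by a * guide + b in each window.
    a = cov_ip / (var_i + eps)
    b = mean_p - a * mean_i
    # Average the coefficients of all windows covering each pixel.
    return box_mean(a, radius) * guide + box_mean(b, radius)
```

A larger `eps` pushes the coefficient `a` toward zero, so the output approaches a plain local mean of the source; a small `eps` lets the output follow the edges of the guide.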

2.3. Enhancement of the Sandstorm Image

The degraded sand dust image is compensated by the proposed balancing method. Because the balanced image has features similar to those of hazy images, a dehazing algorithm is applied to enhance it. The dehazing model [20,21,22,23] is described as follows:

where the terms are the enhanced image, the color-balanced image, the transmission map t estimated using the ADCP method, a constant set to 0.1, and the backscatter light of the color-balanced image, which is obtained by He et al.’s [1] method. Additionally, to refine the enhanced image, this paper uses the guided image filter [2]:

where is the guided filtered enhanced image; is the kernel size, set to 2; is set to ; is set to 5; is the refined and enhanced image; and is the guided image function.
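The scene recovery step of the dehazing model in this section can be sketched in Python as follows, using the standard inversion of the haze model from He et al. [1]. Interpreting the constant set to 0.1 as a lower bound on the transmission is an assumption, as are the function and parameter names; the transmission map and backscatter light are taken as inputs rather than estimated here.

```python
import numpy as np


def recover_scene(balanced: np.ndarray, transmission: np.ndarray,
                  atmosphere: np.ndarray, t0: float = 0.1) -> np.ndarray:
    """Invert the haze model I = J * t + A * (1 - t) for J.

    balanced:     H x W x 3 color-balanced image in [0, 1]
    transmission: H x W transmission map
    atmosphere:   length-3 backscatter light, one value per channel
    t0:           assumed lower bound on t, which avoids dividing by
                  values near zero and amplifying noise
    """
    t = np.maximum(transmission, t0)[..., np.newaxis]
    restored = (balanced - atmosphere) / t + atmosphere
    return np.clip(restored, 0.0, 1.0)
```

As a usage check, synthesizing a hazy image from a known scene with `scene * t + atmosphere * (1 - t)` and passing it through `recover_scene` returns the original scene wherever the transmission is above `t0`.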

3. Experimental Results and Discussion

The distorted sand dust image is balanced using the proposed method, and, because the balanced image has features similar to those of a hazy image, the dehazing algorithm is applied using the proposed ADCP method. This section evaluates the suitability of the proposed method for sand dust image enhancement by comparing it with state-of-the-art methods, both subjectively and objectively. To compare the methods in various circumstances, the detection in adverse weather nature (DAWN) dataset [24] is used. The DAWN dataset [24] contains 323 sand dust images.

3.1. Subjective Comparison

The proposed method and the existing methods were compared in two steps: first, a comparison of the color correction, and second, a comparison of the enhanced images. For the color correction comparison, three existing color correction methods are used. Shi et al. [9] corrected the distorted color using the mean shift of the color components, while Shi et al. [10] used the mean shift of the color ingredients and gamma correction to balance the color. Additionally, Al-Ameen [13] rectified the color using three thresholds.

Figure 5 and Figure 6 show the color-corrected images produced by each method for sand dust images in various circumstances. In Figure 5, Shi et al.’s method [9] leaves a bluish color distortion in the corrected image. Shi et al.’s method [10] also produces a bluish color degradation, but it is weaker than that of the method presented in [9]. Al-Ameen’s method [13] leaves a yellowish or reddish color degradation, despite having a color correction operation. The proposed method corrects the image appropriately, so that it is neither yellowish nor reddish.

Figure 5.

The comparison of existing color correction methods and the proposed method.

Figure 6.

The comparison of existing color correction methods and the proposed method.

Figure 6 shows variously distorted images, such as reddish, greenish, and dusty images, together with their corrected versions. Shi et al.’s method [9] corrects the degraded sand dust image but introduces an artificial bluish color shift, because it uses the mean shift of the color components. Shi et al.’s method [10] also shows a bluish color shift in the corrected image due to the mean shift. Al-Ameen’s method [13] enhances only the lightly distorted images, and an artificial yellowish or reddish color shift can be observed in some enhanced images. As shown in Figure 6, the color correction of lightly degraded sand dust images is an easy process. The proposed method enhances both lightly and severely degraded sand dust images. The image in the tenth row has a reddish color shift and low contrast. The existing methods correct this image, but the result is darker. In contrast, the proposed method enhances the degraded image, and the contrast of the enhanced image is higher than that obtained with the other methods.

As shown in Figure 5 and Figure 6, the existing methods have limitations in enhancing degraded sand dust images in various circumstances, and their corrected images have a reddish, yellowish, or bluish color shift. However, the proposed method is able to enhance the various sand dust images without an artificial color shift.

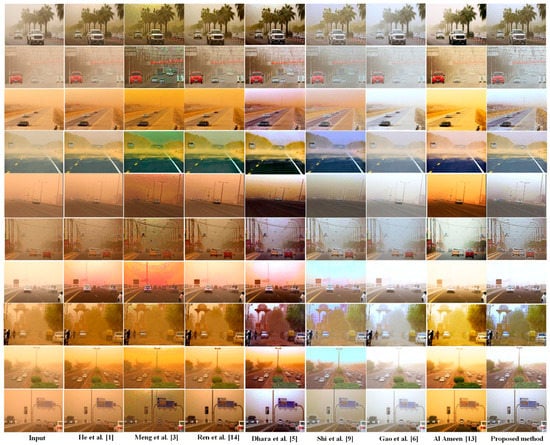

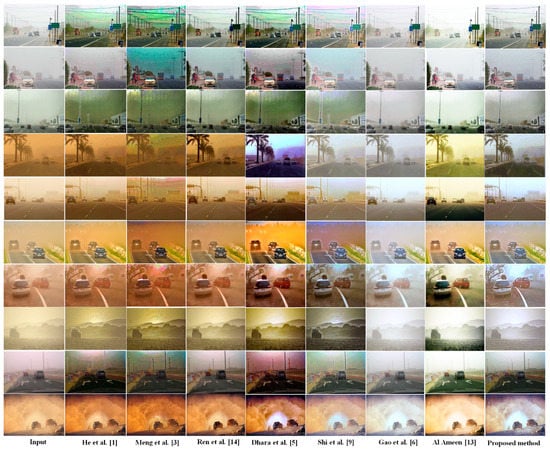

The images enhanced by state-of-the-art dehazing methods and the proposed method are compared. Because a sand dust image resembles a dusty or hazy image, state-of-the-art dehazing methods are also included in the comparison. He et al. enhanced hazy images using DCP [1], Meng et al. improved hazy images using compensated DCP [3], Ren et al. improved images using MSCNN [14], Dhara et al. improved hazy images using adaptive air light refinement and nonlinear color balancing [5], Shi et al. enhanced sand dust images using the mean shift of the color ingredients [9], Gao et al. enhanced sand dust images using RBCP [6], and Al-Ameen improved sand dust images using three thresholds [13].

Figure 7 and Figure 8 show the sand dust images enhanced using the various methods. In Figure 7, He et al.’s method [1] is not able to enhance the sand dust image, and color distortion remains because this method has no color correction step. Meng et al.’s method [3] also does not enhance the degraded sand dust image because it has no color balance step. Ren et al.’s method [14] produces color distortion because, like other dehazing methods, it has no color correction procedure. Dhara et al.’s method [5] improves lightly distorted sand dust images, but it produces a yellowish color shift in some enhanced images. Shi et al.’s method [9] enhances the sand dust image, but the enhanced image shows a bluish color distortion caused by the mean shift used for color correction. Gao et al.’s method [6] is able to enhance sand dust images without color distortion, but the hazy effect remains in the enhanced images. Al-Ameen’s method [13] causes color degradation, although it has a color balance procedure, because it uses a constant measure instead of an image-adaptive one. However, it corrects the color channels exceptionally well on lightly distorted sand dust images. The proposed method enhances the sand dust image well, without color degradation.

Figure 7.

The comparison of existing dehazing methods and the proposed method.

Figure 8.

The comparison of existing dehazing methods and the proposed method.

Figure 8 shows the degraded sand dust images enhanced using the existing dehazing methods. Figure 8 also includes dust images without a color shift, to compare the dehazing performance of the existing methods and the proposed method. He et al.’s method [1] is frequently used for dehazing. However, a color shift occurs because of the weak point of the DCP, even when the image is not color-degraded. Meng et al.’s method [3] also produces a color shift in the sky region. Ren et al.’s method [14] causes less color shift in the sky region than the methods of He et al. [1] and Meng et al. [3]. Dhara et al.’s method [5] causes a greenish color shift in the sky region, and it also causes bluish, reddish, and greenish color distortion. Shi et al.’s method [9] produces a color shift in the sky region. Gao et al.’s method [6] enhances the degraded sand dust images without a color shift, but the enhanced images appear dimmed because of the unsuitable dehazing procedure. Al-Ameen’s method [13] improves lightly degraded sand dust images and dust images without a color cast. The proposed method enhances the degraded sand dust images in various circumstances without an artificial color shift, even when the images have a sky region.

3.2. Objective Comparison

The sand dust images enhanced by the existing methods and the proposed method are compared subjectively in Figure 7 and Figure 8. To compare these images objectively, the natural image quality evaluator (NIQE) [25], the underwater image quality measure (UIQM) [26], and the novel blind image quality assessment (NBIQA) [27] measure are used. The NIQE measure [25] scores how natural the enhanced image is; a lower score indicates a better-enhanced image. The UIQM [26] expresses the improved image’s contrast, colorfulness, and sharpness as a combined score; a higher score indicates a more naturally enhanced image. The NBIQA [27] indicates the improved image’s naturalness using features of the image; a higher score indicates a better-enhanced image.

Table 1 and Table 2 show the NIQE [25] scores for the images in Figure 7 and Figure 8. Table 1 gives the NIQE scores of the images in Figure 7. The dehazing methods obtain high NIQE scores because they have no color correction step. Gao et al.’s method [6] has a color correction step, but its NIQE score is high in some images because its dehazing procedure is insufficient. Al-Ameen’s method [13] has a high NIQE score because its color balance does not work adaptively in some images. Although Meng et al.’s method [3] has no color balancing step, its dehazing procedure operates adaptively, so its NIQE score is lower than those of Gao et al. [6] and Al-Ameen [13] in some images. Shi et al.’s method [9] enhances the images better than Gao et al.’s method [6] in some cases, but its NIQE score is higher than that of Al-Ameen’s method [13] in some images. The NIQE score of Al-Ameen’s method [13] is better than that of the existing dehazing methods in some images because this method can correct the color in lightly degraded images. Dhara et al.’s method [5] has lower NIQE scores than the existing dehazing methods in some images. The proposed method performs both the color correction step and the dehazing procedure effectively, and as a result, its NIQE score is the lowest among the compared methods.

Table 1.

Comparison of the NIQE [25] score of the images in Figure 7 (If the score is low, the image is enhanced well).

Table 2.

Comparison of the NIQE [25] scores of the images in Figure 8 (If the score is low, the image is enhanced well).

Table 2 shows the NIQE scores of the images in Figure 8. The dehazing methods have high NIQE scores, even though the images have no color distortion. The proposed method has a lower NIQE score than the other methods because it enhances both the distorted and undistorted images well.

As shown in Table 1 and Table 2, to enhance the sand dust images naturally, both a color correction step and a dehazing procedure need to be applied adaptively to the image.

Table 3 and Table 4 show the UIQM scores of the images in Figure 7 and Figure 8. If the image is enhanced appropriately, the UIQM score is high. Table 3 gives the UIQM scores of the images in Figure 7. The UIQM scores of the dehazing methods are low because these methods have no correction procedure and the color degradation remains. Gao et al.’s method [6] has a color correction step, but its UIQM score is lower than that of He et al.’s method [1]. Meng et al.’s method [3] has no color balancing step, but its UIQM scores are higher than those of Gao et al.’s method [6] and Al-Ameen’s method [13] in some images, even though those methods have a color correction procedure. Although Ren et al.’s method [14] produces a color shift because it has no color correction step, its UIQM score is higher than that of Gao et al.’s method [6] in some images, owing to its suitable dehazing step. Shi et al.’s method [9] has a higher UIQM score than Gao et al.’s method [6], although a color shift occurs in some images. Dhara et al.’s method [5] has higher UIQM scores than other dehazing methods in some images, although some artificial color shift occurs. The proposed method has higher UIQM scores than the other methods.

Table 3.

Comparison of the UIQM [26] scores of the images in Figure 7 (If the score is high, the image is enhanced well).

Table 4.

Comparison of the UIQM [26] scores of the images in Figure 8 (If the score is high, the image is enhanced well).

Table 4 shows the UIQM scores for the images in Figure 8. The existing dehazing methods have low UIQM scores, and the proposed method has a higher UIQM score than the other methods.

As shown in Table 3 and Table 4, to enhance sand dust images, both a color correction procedure and an image adaptive dehazing step are needed.

Table 5 and Table 6 give the NBIQA [27] scores for the images in Figure 7 and Figure 8. If the quality of the enhanced image is good, the NBIQA score is high. Table 5 shows the NBIQA scores for the images in Figure 7. He et al.’s method [1] has a low NBIQA score. Ren et al.’s method [14] also has a low NBIQA score because it has no color correction step, although its dehazing procedure is suitable. The NBIQA score of Meng et al.’s method [3] is lower than that of other methods in some images because it has no color compensation step. Dhara et al.’s method [5] obtains a lower NBIQA score than Shi et al.’s method [9] in some images. Shi et al.’s method [9] has a higher NBIQA score than the dehazing methods in some images. The NBIQA score of Gao et al.’s method [6] is higher than that of the dehazing methods, although the enhanced image looks dimmed. Al-Ameen’s method [13] has a low NBIQA score, despite having a color correction step, because its color correction is not adaptive. The NBIQA score of the proposed method is higher than that of the other methods.

Table 5.

Comparison of the NBIQA [27] scores for the images in Figure 7 (If the score is high, the image is enhanced well).

Table 6.

Comparison of the NBIQA [27] scores for the images in Figure 8 (If the score is high, the image is enhanced well).

Table 6 shows the NBIQA scores of the images in Figure 8. Although their enhanced images have a color shift, the existing dehazing methods have higher NBIQA scores than other methods in some images. The proposed method has a higher NBIQA score than the other methods.

As shown by the NIQE [25], UIQM [26], and NBIQA [27] scores in Tables 1 to 6, an image-adaptive color correction step and an image-adaptive dehazing procedure are needed to enhance sand dust images naturally.

Table 7 and Table 8 show the average NIQE [25], UIQM [26], and NBIQA [27] scores of the results of the proposed method and the existing methods on the images in Figure 7 and Figure 8 and on the DAWN dataset, which includes 323 images [24]. Table 7 gives the average scores on each measure for the images shown in Figure 7 and Figure 8. Al-Ameen’s method [13] has a higher UIQM score than other methods, and the dehazing methods have low scores. The proposed method has a high UIQM score. Shi et al.’s method [9] is worse than Al-Ameen’s method [13] on some scores, and Dhara et al.’s method [5] is better than Shi et al.’s method [9] on some scores. The NIQE scores of the dehazing methods are lower than those of the existing sand dust image enhancement methods in most cases, although the dehazing methods have no color correction step. Because the existing sand dust image enhancement methods do not improve the image suitably, they have higher NIQE scores than the dehazing methods. The NBIQA scores of the dehazing methods are lower than those of the sand dust image enhancement methods. The proposed method has higher UIQM and NBIQA scores and a lower NIQE score than the other methods.

Table 7.

Comparison of the average NIQE [25], UIQM [26], and NBIQA [27] scores for the images in Figure 7 and Figure 8 (for NIQE [25], a lower score indicates better enhancement; for UIQM [26] and NBIQA [27], a higher score indicates better enhancement).

Table 8.

Comparison of the average NIQE [25], UIQM [26], and NBIQA [27] scores on the results of the DAWN dataset [24] (for NIQE [25], a lower score indicates better enhancement; for UIQM [26] and NBIQA [27], a higher score indicates better enhancement).

Table 8 shows the average scores of the enhanced images in the DAWN dataset [24]. As Table 8 shows, the dehazing methods are limited in how well they enhance degraded sand dust images. Dhara et al.’s method [5] performs better than the other comparison methods on some scores, although its enhanced images have artificial color distortion. The proposed method outperforms the other methods on these scores.
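The averaging and ranking that underlie Table 7 and Table 8 can be sketched as follows. This is a minimal illustration, not the evaluation code used in this work: the function names, the method labels, and all numeric values are hypothetical placeholders. Only the direction of each measure (NIQE lower is better; UIQM and NBIQA higher is better) is taken from the table captions.

```python
from statistics import mean

# Direction of each no-reference measure, as stated in the table captions:
# NIQE is better when lower; UIQM and NBIQA are better when higher.
LOWER_IS_BETTER = {"NIQE": True, "UIQM": False, "NBIQA": False}


def average_scores(per_image_scores):
    """Average each measure over images.

    Input:  {method: {measure: [score per image]}}
    Output: {method: {measure: mean score}}
    """
    return {
        method: {measure: mean(values) for measure, values in measures.items()}
        for method, measures in per_image_scores.items()
    }


def best_method(averages, measure):
    """Return the method with the best average for `measure`, honoring its direction."""
    pick = min if LOWER_IS_BETTER[measure] else max
    return pick(averages, key=lambda method: averages[method][measure])


# Placeholder numbers for illustration only; they are NOT values from Table 7 or Table 8.
example_scores = {
    "method_a": {"NIQE": [4.0, 5.0], "UIQM": [1.0, 1.5], "NBIQA": [40.0, 50.0]},
    "method_b": {"NIQE": [3.0, 3.5], "UIQM": [0.5, 1.0], "NBIQA": [55.0, 60.0]},
}

averages = average_scores(example_scores)
for measure in LOWER_IS_BETTER:
    print(measure, "best:", best_method(averages, measure))
```

Keeping the direction of each measure in one lookup table avoids the common mistake of ranking NIQE with the same rule as UIQM and NBIQA.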

Table 9 compares the computation times of the existing color correction methods and the proposed method. The test system has an Intel Core i7-8700 CPU @ 3.20 GHz and 32 GB of RAM. As shown in Table 9, the Al-Ameen method [13] is faster than the other methods.

Table 9.

Comparison of the computation time of the existing color correction methods and the proposed method.

Table 10 compares the processing times of the existing dehazing methods combined with a color correction procedure and the proposed color correction and dehazing method. As shown in Table 10, the Al-Ameen method [13] is the fastest because it has no dehazing stage such as DCP [1].

Table 10.

Comparison of the computation time between the dehazing methods with a color correction procedure and the proposed color correction and dehazing method.

As shown in Table 9 and Table 10, the Al-Ameen method [13] is faster than the proposed method, and it is the fastest overall because it has no dehazing step. However, images enhanced using the Al-Ameen method [13] show considerable degradation. The proposed method is faster than the methods of Shi et al. [9] and Shi et al. [10].
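A timing comparison of this kind can be reproduced with a small harness such as the one below. It is a sketch under stated assumptions: `time_method`, `color_correct_only`, and `color_correct_then_dehaze` are hypothetical stand-ins, not the methods compared in Table 9 and Table 10, and the synthetic image is a placeholder. The sketch only illustrates why a pipeline without a dehazing stage tends to run faster: it performs fewer passes over the image.

```python
import time


def time_method(enhance, image, repeats=5):
    """Return the best-of-`repeats` wall-clock time in seconds for enhance(image)."""
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        enhance(image)
        best = min(best, time.perf_counter() - start)
    return best


def color_correct_only(image):
    """Hypothetical stand-in for a color-correction-only method (one pass over the pixels)."""
    return [[min(255, int(p * 1.1)) for p in row] for row in image]


def color_correct_then_dehaze(image):
    """Hypothetical stand-in for color correction followed by a dehazing stage (extra pass)."""
    corrected = color_correct_only(image)
    return [[max(0, p - 10) for p in row] for row in corrected]


# Synthetic 64 x 64 single-channel placeholder image, not data from the experiments.
image = [[(x + y) % 256 for x in range(64)] for y in range(64)]

timings = {
    "color_correct_only": time_method(color_correct_only, image),
    "color_correct_then_dehaze": time_method(color_correct_then_dehaze, image),
}
for name, seconds in timings.items():
    print(f"{name}: {seconds * 1e3:.3f} ms")
```

Taking the best of several repeats reduces the influence of background load on the measured time; absolute values depend on the hardware and are not comparable with those in the tables.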

As observed in the experimental results, to enhance the sand dust image naturally, both color correction and a dehazing procedure are needed.

4. Conclusions

An image distorted by sand particles has a yellowish or reddish color cast due to the imbalance of the color channels. To correct this imbalance, this paper used the normalized eigenvalue. Each color channel of the degraded image has different features: the red channel is bright and its eigenvalue is high, whereas the blue channel is dim and its eigenvalue is low. Using these properties, this paper proposed normalizing the eigenvalue of each color channel by the maximum eigenvalue and applying the normalized eigenvalue to color balancing. Additionally, because the balanced image has features similar to those of a hazy image, it is enhanced by dehazing with the proposed ADCP method, which compensates for the weak points of the existing DCP. The enhanced image has good quality, both subjectively and objectively, in comparison with state-of-the-art methods. As observed in the sand dust image enhancement step, enhancing the sand dust image naturally requires color correction and adaptive transmission map estimation. The proposed method can contribute to various degraded image enhancement areas.

Future research needs to explore more suitable color correction methods and adaptive transmission map estimation for degraded sand dust image enhancement. In severely distorted sand dust images in particular, blue channel components are scarce, and because of that, the corrected image has an artificial color shift. Therefore, to enhance sand dust images with scarce blue components, work on adaptive color compensation is needed. Additionally, the transmission map needs to be estimated adaptively, based on a distinguishing feature of the image such as the ratio of the sky region.
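The idea of balancing color channels with a normalized eigenvalue can be illustrated with the sketch below. It is not the formulation of this paper: as an assumption, each channel is treated as a matrix, its dominant eigenvalue is taken from the Gram matrix of the channel (computed by power iteration, so that the square root is the largest singular value), and the gain of each channel is the reciprocal of its normalized value. The function names and the pixel values are hypothetical.

```python
import math


def largest_singular_value(channel, iterations=50):
    """Square root of the dominant eigenvalue of C^T C, found by power iteration."""
    rows, cols = len(channel), len(channel[0])
    v = [1.0 / math.sqrt(cols)] * cols  # unit-length start vector
    eigenvalue = 0.0
    for _ in range(iterations):
        cv = [sum(row[j] * v[j] for j in range(cols)) for row in channel]           # C v
        w = [sum(channel[i][j] * cv[i] for i in range(rows)) for j in range(cols)]  # C^T (C v)
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:
            return 0.0  # all-zero channel
        v = [x / norm for x in w]
        eigenvalue = norm  # ||(C^T C) v|| approaches the dominant eigenvalue
    return math.sqrt(eigenvalue)


def balance_channels(channels):
    """Scale each channel by the reciprocal of its value normalized by the maximum (assumed rule)."""
    sigmas = {name: largest_singular_value(c) for name, c in channels.items()}
    top = max(sigmas.values())
    balanced = {}
    for name, channel in channels.items():
        normalized = sigmas[name] / top if top > 0 else 1.0  # in (0, 1]; 1 for the brightest channel
        gain = 1.0 / normalized if normalized > 0 else 1.0   # leave an all-zero channel unchanged
        balanced[name] = [[min(255.0, p * gain) for p in row] for row in channel]
    return balanced


# Tiny reddish example with placeholder values (not data from the paper):
# bright red channel, dim blue channel.
channels = {
    "red": [[200.0, 200.0], [200.0, 200.0]],
    "green": [[100.0, 100.0], [100.0, 100.0]],
    "blue": [[50.0, 50.0], [50.0, 50.0]],
}
balanced = balance_channels(channels)
print({name: channel[0][0] for name, channel in balanced.items()})
```

In this example the red channel has the largest value and is left unchanged, while the dimmer green and blue channels are amplified toward it. When the blue channel is nearly empty the gain becomes very large, which is consistent with the artificial color shift noted above for severely distorted images.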

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The author declares no conflict of interest.

References

- He, K.; Sun, J.; Tang, X. Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 33, 2341–2353.

- He, K.; Sun, J.; Tang, X. Guided image filtering. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 35, 1397–1409.

- Meng, G.; Wang, Y.; Duan, J.; Xiang, S.; Pan, C. Efficient image dehazing with boundary constraint and contextual regularization. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, NSW, Australia, 1–8 December 2013.

- Zhu, Q.; Mai, J.; Shao, L. A fast single image haze removal algorithm using color attenuation prior. IEEE Trans. Image Process. 2015, 24, 3522–3533.

- Dhara, S.K.; Roy, M.; Sen, D.; Biswas, P.K. Color cast dependent image dehazing via adaptive airlight refinement and non-linear color balancing. IEEE Trans. Circuits Syst. Video Technol. 2020, 31, 2076–2081.

- Gao, G.; Lai, H.; Jia, Z.; Liu, Y.; Wang, Y. Sand-dust image restoration based on reversing the blue channel prior. IEEE Photonics J. 2020, 12, 1–16.

- Cheng, Y.; Jia, Z.; Lai, H.; Yang, J.; Kasabov, N.K. A fast sand-dust image enhancement algorithm by blue channel compensation and guided image filtering. IEEE Access 2020, 8, 196690–196699.

- Huo, J.Y.; Chang, Y.L.; Wang, J.; Wei, X.X. Robust automatic white balance algorithm using gray color points in images. IEEE Trans. Consum. Electron. 2006, 52, 541–546.

- Shi, Z.; Feng, Y.; Zhao, M.; Zhang, E.; He, L. Let you see in sand dust weather: A method based on halo-reduced dark channel prior dehazing for sand-dust image enhancement. IEEE Access 2019, 7, 116722–116733.

- Shi, Z.; Feng, Y.; Zhao, M.; Zhang, E.; He, L. Normalised gamma transformation-based contrast-limited adaptive histogram equalisation with color correction for sand–dust image enhancement. IET Image Process. 2019, 14, 747–756.

- Naseeba, T.; Harish Binu, K.P. Visibility Restoration of Single Hazy Images Captured in Real-World Weather Conditions. Int. Res. J. Eng. Technol. 2016, 3, 135–139.

- Kwok, N.M.; Wang, D.; Jia, X.; Chen, S.Y.; Fang, G.; Ha, Q.P. Gray world based color correction and intensity preservation for image enhancement. In Proceedings of the 2011 4th International Congress on Image and Signal Processing, Shanghai, China, 15–17 October 2011; IEEE: Piscataway, NJ, USA, 2011; Volume 2, pp. 994–998.

- Al-Ameen, Z. Visibility enhancement for images captured in dusty weather via tuned tri-threshold fuzzy intensification operators. Int. J. Intell. Syst. Appl. 2016, 8, 10.

- Ren, W.; Liu, S.; Zhang, H.; Pan, J.; Cao, X.; Yang, M.H. Single image dehazing via multi-scale convolutional neural networks. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2016.

- Wang, A.; Wang, W.; Liu, J.; Gu, N. AIPNet: Image-to-image single image dehazing with atmospheric illumination prior. IEEE Trans. Image Process. 2018, 28, 381–393.

- Chang, C.I.; Du, Q. Interference and noise-adjusted principal components analysis. IEEE Trans. Geosci. Remote Sens. 1999, 37, 2387–2396.

- Tripathi, P.; Garg, R.D. Comparative analysis of singular value decomposition and eigen value decomposition based principal component analysis for earth and lunar hyperspectral image. In Proceedings of the 2021 11th Workshop on Hyperspectral Imaging and Signal Processing: Evolution in Remote Sensing (WHISPERS), Amsterdam, The Netherlands, 24–26 March 2021; IEEE: Piscataway, NJ, USA, 2021.

- Goldstein, E.B. Sensation and Perception; Wadsworth Pub. Co.: Wadsworth, OH, USA, 1980.

- Preetham, A.J.; Shirley, P.; Smits, B. A practical analytic model for daylight. In Proceedings of the 26th Annual Conference on Computer Graphics and Interactive Techniques, Los Angeles, CA, USA, 8–13 August 1999.

- Tan, R.T. Visibility in bad weather from a single image. In Proceedings of the 2008 IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008; IEEE: Piscataway, NJ, USA, 2008.

- Fattal, R. Single image dehazing. ACM Trans. Graph. (TOG) 2008, 27, 1–9.

- Narasimhan, S.G.; Nayar, S.K. Chromatic framework for vision in bad weather. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Hilton Head, SC, USA, 15 June 2000; CVPR 2000 (Cat. No. PR00662). IEEE: Piscataway, NJ, USA, 2000; Volume 1, pp. 598–605.

- Narasimhan, S.G.; Nayar, S.K. Vision and the atmosphere. Int. J. Comput. Vis. 2002, 48, 233–254.

- Kenk, M.A.; Mahmoud, H. DAWN: Vehicle Detection in Adverse Weather Nature Dataset. arXiv 2020, arXiv:2008.05402.

- Mittal, A.; Soundararajan, R.; Bovik, A.C. Making a “completely blind” image quality analyzer. IEEE Signal Process. Lett. 2012, 20, 209–212.

- Panetta, K.; Gao, C.; Agaian, S. Human-visual-system-inspired underwater image quality measures. IEEE J. Ocean. Eng. 2015, 41, 541–551.

- Ou, F.Z.; Wang, Y.G.; Zhu, G. A novel blind image quality assessment method based on refined natural scene statistics. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; IEEE: Piscataway, NJ, USA, 2019.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).