Abstract

The proliferation of Internet of Things devices and services and their integration into everyday environments have led to the emergence of intelligent offices, classrooms, conference rooms, and meeting rooms that adhere to the paradigm of Ambient Intelligence. The activities typically performed in such environments (e.g., presentations and lectures) can be enhanced by large Interactive Boards that, among other things, provide access to digital content, promote collaboration, facilitate the exchange of ideas, and increase audience engagement. However, board contents tend to be numerous and diverse in type (e.g., textual data, pictorial data, multimedia, figures, and charts), which inevitably makes manipulating them on a large display tiring and cumbersome, especially when the interaction lasts for a considerable amount of time (e.g., during a class hour). Acknowledging both the shortcomings and the potential of Interactive Boards in intelligent conference rooms, meeting rooms, and classrooms, this work introduces a sophisticated framework named CognitOS Board, which takes advantage of (i) the intelligent facilities offered by the environment and (ii) the amenities offered by wall-to-wall displays, in order to enhance presentation-related activities. In this article, we describe the design process of CognitOS Board, elaborate on the available functionality, and discuss the results of a user-based evaluation study.

1. Introduction

Today, we are witnessing a new facet of Information and Communication Technologies (ICT), spearheaded by the ever-growing Internet of Things (IoT) [1] and the emerging Ambient Intelligence (AmI) paradigm [2]. Such technologies have the potential to be seamlessly integrated into the environment, deliver novel interaction techniques [3], offer an enhanced user experience (UX) [4], and improve quality of life by appropriately responding to user needs [5]. Over the past few years, various intelligent environments (simulated or real-life) have started appearing, targeting domains such as domestic life, healthcare, education, and the workplace.

At the same time, large-scale displays (also known as Interactive Boards or Interactive Walls) have permeated various spaces including public installations [6], educational settings [7], and high-end visualization laboratories [8]. Such digital spaces provide users with a large interactive surface, and permit collocated or remote collaboration [9]. Moreover, the affordances offered by large Interactive Boards, such as visibility, stability, direct manipulation, multimodality, and reusability, can support cumulative, collaborative, and recursive learning activities [10]. In particular, they can spark the interest of the audience and act as a conversation initiator around digital content about a topic of interest, created by both presenters and attendees, during joint events (e.g., a presentation, a brainstorming session, a focus group, a lecture) [11].

Hence, as a public display, a large board and its interactive tools open up new opportunities for publicly expressing and evaluating ideas by actively collaborating over a single display. Additionally, displaying appropriate multimedia content inherently supports the explanation, and therefore the comprehension, of challenging concepts. To this end, the workplace and education domains can potentially benefit the most from such installations, since meetings and lectures usually feature digital content (e.g., presentation slides) that must be displayed on an appropriate medium for everyone to see, involve the presentation of projects, aim to initiate discussions, and in most cases require the collaboration of colleagues/students [12].

Inside a meeting room or a classroom, large Interactive Boards already host many applications that enhance productivity [13] and serve a specific purpose for their users (e.g., writing notes on a specific topic). In such settings, users are expected to either (i) work up close to the display (e.g., performing tasks that involve detailed information) or (ii) perform tasks from a distance (e.g., sorting photos and pages or presenting large drawings to a group) [14]. However, the bigger the display area, the more difficult it is for users to access objects scattered around it, especially as they tend to stay relatively close to it, while keeping track of every application launched on the board becomes a tedious task. Besides presenters, the physical dimensions of the display also affect the audience’s perception: unlike movie theaters, where a single item (i.e., the movie) occupies the large wall, Interactive Boards usually present multiple windows simultaneously. Therefore, such windows inevitably compete for the attendees’ attention, regardless of the presenter’s intention to shift their focus towards a specific application [15].

Acknowledging both the shortcomings and the potential of Interactive Boards in existing intelligent conference rooms, classrooms [16], and meeting rooms [17], this work introduces an innovative framework, named CognitOS Board, which takes advantage of (i) the intelligent facilities offered by the environment [18,19] (e.g., user localization service) and (ii) the amenities offered by wall-to-wall displays, in order to enhance presentation-related activities. The framework fuses principles from the domains of Human–Computer Interaction, Ambient Intelligence, and Software Engineering (e.g., Microservices Architecture, SaaS, and multimodal and natural interaction) towards providing a sophisticated, unified working environment for large Interactive Boards. It offers a natural and effortless interaction paradigm that allows users to orchestrate their presentation assets optimally (e.g., use different workspaces for different types of applications) and present rich material to the audience. Additionally, it aims to support preparation before a meeting or a class by equipping presenters with user-friendly tools that allow them to initialize the contents of the board with the appropriate material in advance.
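To make the notion of multiple workspaces more concrete, the following is a minimal Python sketch of a board manager. It assumes that each board simply holds a list of window identifiers and that a "swipe up" cycles to the next board, wrapping around so that the same gesture eventually returns to the primary board. The class and method names (`Board`, `BoardManager`, `move_window`, `swipe_up`) are hypothetical and do not describe the actual CognitOS Board implementation.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Board:
    """A workspace holding the identifiers of the windows placed on it."""
    name: str
    windows: List[str] = field(default_factory=list)


class BoardManager:
    """Hypothetical model: several boards, exactly one projected at a time."""

    def __init__(self) -> None:
        self.boards: List[Board] = [Board("primary")]
        self.active = 0  # index of the board currently shown on the wall

    def create_board(self, name: str) -> Board:
        board = Board(name)
        self.boards.append(board)
        return board

    def move_window(self, window_id: str, target: str) -> None:
        """Detach a window from whichever board holds it and add it to `target`."""
        destination = self._find(target)
        for board in self.boards:
            if window_id in board.windows:
                board.windows.remove(window_id)
        destination.windows.append(window_id)

    def swipe_up(self) -> Board:
        """Reveal the next board, wrapping around to the first one."""
        self.active = (self.active + 1) % len(self.boards)
        return self.boards[self.active]

    def _find(self, name: str) -> Board:
        for board in self.boards:
            if board.name == name:
                return board
        raise KeyError(name)


# Usage: keep the slides on the primary board and give the video its own board.
manager = BoardManager()
manager.boards[0].windows.extend(["slides", "video"])
manager.create_board("media")
manager.move_window("video", "media")
print(manager.swipe_up().name)  # media
print(manager.swipe_up().name)  # primary
```

Wrapping around is only one possible design; a real system might instead stop at the last board or use distinct gestures for each direction.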

Aiming to promote natural interaction, CognitOS Board relies on multimodal and well-established interaction metaphors (e.g., writing on a digital board is similar to writing on a plain whiteboard). To this end, interaction is supported through: (i) touch on the wall surface with a finger or a special marker, (ii) a remote presenter, (iii) voice commands, (iv) sophisticated techniques that take advantage of the environment’s Ambient Intelligence facilities (e.g., through user position tracking, an application window can follow the educator as they move around, or the system can identify the user’s height and adjust the position of certain elements to bring them within reach), and (v) the controller application for handheld devices (e.g., tablets and smartphones). Finally, CognitOS Board exposes its main functionality as a service to third-party applications through a comprehensive RESTful API that permits external components to explicitly control its behavior or enhance its functionality on demand.
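As an illustration of how a third-party component might drive the board over HTTP, the sketch below builds (without sending) JSON requests using only the Python standard library. The base URL, the `/windows` routes, and the payload fields are hypothetical placeholders; the actual endpoints of the CognitOS Board API are not specified in this article.

```python
import json
from typing import Optional
from urllib.request import Request

# Placeholder address: the real host, port, and routes of the API are not given here.
BASE_URL = "http://localhost:8080/api"


def build_command(method: str, path: str, payload: Optional[dict] = None) -> Request:
    """Build, but do not send, an HTTP request for a hypothetical board endpoint."""
    data = json.dumps(payload).encode("utf-8") if payload is not None else None
    headers = {"Content-Type": "application/json"} if data is not None else {}
    return Request(BASE_URL + path, data=data, headers=headers, method=method)


def open_application(app: str, x: float, y: float) -> Request:
    """Ask the board to launch `app` with its window at (x, y) metres (hypothetical route)."""
    return build_command("POST", "/windows", {"app": app, "x": x, "y": y})


def close_window(window_id: str) -> Request:
    """Ask the board to close a window (hypothetical route)."""
    return build_command("DELETE", "/windows/" + window_id)


req = open_application("whiteboard", 1.5, 1.2)
print(req.get_method(), req.full_url)  # POST http://localhost:8080/api/windows
```

Sending a request would then amount to passing it to `urllib.request.urlopen`, assuming a server actually listens at the placeholder address.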

This article presents CognitOS Board, describes the design process that was followed, elaborates on the available functionality, and discusses the results of a user-based evaluation study in the context of an Intelligent (University) Classroom.

2. Related Work

This section reviews existing frameworks targeting Interactive Boards and presents the features that those frameworks support.

2.1. Hardware-Specific Solutions

EPSON has created an Interactive Projector to “transform teaching” [20]. This projector can display content on scalable screens up to 100” in Full HD. The classroom can become a dynamic learning environment by supporting collaboration and virtual learning, while being able to project and share content between up to four different devices simultaneously. On the display, educators can highlight and annotate content to create an enhanced learning experience.

ViewBoard [21] is an Interactive Whiteboard application available for large displays, offering tools to enhance the learning process and engage students. These tools include general-purpose utilities, such as a highlighter, an annotator, and a virtual pen, as well as course-specific tools (e.g., rulers, a protractor, and a caliper for mathematics courses). Educators can also drop files into the application from the web or from any other device using cloud storage systems. ViewBoard comes with two independent pens used for writing, annotating, and highlighting. The two pens can be used at the same time, thus enabling collaboration between students. ViewBoard also features two sophisticated mechanisms: the first recognizes handwriting and converts it into computer-generated text, while the second recognizes free-hand drawings and searches for similar content in Google Images. Finally, educators can record their lectures and save the content to any cloud service, as well as distribute it to students’ devices.

EZWrite [22] is an annotation application, installed exclusively on BenQ’s Interactive Flat Panel (IFP), which transforms the panel into an Interactive Whiteboard. Using the two accompanying pens, students can collaborate with each other or with the educator, making the lecture a more enjoyable experience. Additionally, a range of functions is available through a floating menu, including recording, screen capture, pen, eraser, and whiteboard. EZWrite also features an Intelligent Handwriting Recognition mechanism, which can transform handwritten letters, numbers, and shapes into legible material. Moreover, the BenQ IFP comes with a video recording feature that allows educators to record lectures for class preparation or review purposes.

Furthermore, the content created during a lecture (e.g., annotation, notes, etc.) can be saved either as .pdf or .png files, and the educators can share them via emails, QR codes, USB drives or store them in the internal memory of the IFP. EZWrite offers a toolbox of useful utilities, which include a calculator and a geometry assistant (both of which identify free-hand writing and drawing and transform it into legible content), a timer and a collection of game-based features. These features include a collaboration space for up to three teams, a random selection mechanism for identifying which student should answer at any point, a buzzer which hosts game show style quizzes and a score board to keep track of the teams’ progress.

MagicIWB [23] is an all-in-one solution for an Interactive Whiteboard without the need for an external PC. MagicIWB offers tools to write, draw, highlight and annotate content, which can be saved internally and shared across multiple devices for the audience to view. MagicIWB supports multi-touch interaction from up to ten different users, thus making collaboration and active learning a fun process.

Oktopus [24] is interactive software designed to be used together with AVer CP Series IFPs (Interactive Flat Panels) [25]. It can simultaneously show content on students’ devices to increase their involvement in learning, and it also permits users to annotate over any application, take notes, answer questions, and share educational material.

2.2. Hardware-Independent Solutions

The work in [26] introduces a Classroom Window Manager mechanism capable of deciding how to adjust application placement on various classroom artifacts, i.e., AmIDesk, SmartDesk, AmIBoard, and SmartBoard. This mechanism provides a set of rules for designing applications so as to enable window placement on the various devices. In more detail, the SmartBoard and AmIBoard window managers permit designers to create applications that follow specific layouts (four in particular), so as to ensure the reachability of interactive components. A set of tools is available, including an Application Switcher (similar to the Windows task switcher) and an Application Launcher responsible for displaying shortcuts to course-specific applications. Finally, given that board artifacts may have more screen real estate available than students’ personal devices, designers can exploit the “previews” feature to display snapshots of other information available through the same application.

The work in [27] introduces Intellichalk, a multi-display digital blackboard system. The system uses four digital displays placed side by side, creating an “infinity” canvas on which users can write and/or place images. A tablet and a stylus are used as input devices, offering a set of different pen colors to write and annotate content. Images can be selected from the internal storage of the tablet, an external storage device, or the web. In addition, the system offers a collection of plugins, such as a gallery of graphic elements and handwriting recognition. The writing area on the input device extends vertically, to match human writing habits; however, the content is translated horizontally on the displays, where the rightmost one serves as the focus area. The other three displays act as “history” areas, hosting older content as the educator produces further content. The system has been evaluated long-term in three different courses (two mathematics courses and a computer science course), and both educators’ and students’ perceptions were recorded. The results of the evaluation indicated a positive attitude towards using the system in everyday lectures, since it was easier to follow the educator’s thoughts than with slide presentations.

Smart Learning Suite [28] can transform static content into interactive experiences, providing tools for lesson creation, student assessment, collaborative workspaces, game-based learning, and subject-specific features. The Suite includes desktop software compatible with Windows and macOS. In addition to the desktop application, the Suite provides an online environment where educators can add interactive game-based features to a variety of file types (e.g., PowerPoint and PDF) and send the lessons to students. Students can then either work individually or collaborate online to solve the exercises.

MimioStudio [29] is educational software developed to enable educators to create interactive courses and keep track of student progress. MimioStudio is the software behind the MimioClassroom suite, which offers a collection of tools, such as a document camera and a pen tablet. MimioStudio can also be used with any interactive large display, aiming to replace the traditional classroom whiteboard with an interactive one. Through this software, educators can create a variety of small-scale assessments for students, such as short-answer and short-essay questions. The students’ answers are stored, graded, and made available for viewing by the educator in the featured MimioStudio Gradebook. Finally, MimioStudio enables collaboration by connecting up to three mobile devices (with the MimioStudio mobile app installed) and sharing students’ work in front of the classroom.

ActivInspire [30] is collaborative lesson delivery software for interactive displays. It offers a dashboard where educators can create engaging lessons with compelling content using the application’s extensive suite of lesson creation tools. Lessons can be enhanced with external content, such as PowerPoint slides and PDF documents, and can embed multimedia files imported from existing resources. ActivInspire also supports multi-touch interaction to encourage collaboration and enhance student engagement with features such as interactive math tools. Educators can use ActivInspire to create interactive activities by selecting from the available customizable templates for Matching, Flashcards, Crosswords, and Memory games. These activities can be tailored to fit the needs of each course.

Canvus [31] collaboration software from MultiTaction is a giant workspace that scales easily to fit any display (e.g., laptops, tablets, smartphones, large displays, and wall displays). Canvus enables multiple users to share and manipulate data simultaneously with minimal latency. Users can participate in meetings or brainstorming sessions either remotely or on-site. Users can place various assets, as well as handwritten or Post-it® notes, on the workspace using a virtual keyboard or an IR pen with handwriting recognition, or drag and drop any other content onto the canvas. Additionally, each canvas workspace can be split into multiple workspaces, essentially by creating duplicates of the original workspace, which enables smaller teams to work independently on Canvus. Each workspace can be displayed on different devices. Finally, Canvus can be integrated with any videoconferencing platform, such as Zoom or Webex, to engage agile team members through videoconferencing.

Lü Interactive Playground [7] features one or two projectors and 3D cameras, in order to create engaging activities that make children move, play, and learn. The 3D camera system turns the giant wall projections into touchscreens that can detect multiple objects. For example, Figure 1 presents a math game that requires students to use balls in order to hit the targets on the wall that correspond to the correct answer.

Figure 1.

Interactive playground [7].

The work in [32] presents the Virtual MultiBoard (VMB), a combination of a traditional blackboard and slide presentations. VMB uses a large display as the main device, an interactive display as the input/annotation device, and a smaller device (e.g., a laptop) for the presenter’s view. The large display presents three PowerPoint slides (or similar media, such as PDF files) in a row, from left to right. The leftmost slide is the current one, where the educator’s focus resides at each moment; thus, it is larger than the other two. The other two slides form the “history”, i.e., the two slides preceding the current one, enabling the audience to keep track of the presenter, including those who need more time to take notes. The second screen, the interactive display, is used by the presenter to annotate and highlight the slides. These annotations are also displayed in real time on the main display and are pinned to the slides. Every audience member with a laptop and network access can submit questions to the presenter. The number of submitted questions (not the questions themselves) is displayed on the main screen to confirm to the audience that their questions have been submitted. On their personal device, the third screen, the presenter can view those questions and decide to display them publicly to initiate discussion.

OneScreen Annotate [33] is Interactive Whiteboard software featuring a variety of tools to annotate content and enhance brainstorming sessions, or in general any activity where multiple people collaborate. Annotate can support up to 50 remote users in a shared whiteboard space. It offers unlimited content annotation capabilities using intuitive tools, such as pens, erasers, search engine assignment, and customizable toolbars. Additionally, the workspace size is unlimited, which facilitates collaboration between remote users, on-site users, or a combination of both. Through drag-and-drop functionality, users can import multimedia files from the web or a cloud storage system. Moreover, work sessions can be saved, recorded, and reloaded on demand. When recording a work session, the system captures image, audio, and annotations simultaneously. Finally, the system is equipped with text, shape, and handwriting recognition, which transforms even vague shapes into computer-generated graphics.

Annotate [34] is a cloud-based web app that transforms handheld or mobile devices into a mobile Interactive Whiteboard by mirroring the device’s screen on a projector. Presenters interact with their device as they would with an Interactive Whiteboard. The Annotate client offers a variety of drawing tools and supports multi-touch gestures to facilitate presentation-related activities and offer a dynamic experience.

2.3. Common Features of Interactive Boards

The literature review revealed several features that are supported by frameworks targeting Interactive Boards (Table 1).

Table 1.

Identified features of Interactive Boards.

Table 2 presents the features that each of the examined frameworks supports. As shown, most systems focus mainly on direct on-screen interaction (i.e., annotation and multi-touch) and basic content sharing; therefore, key requirements that could benefit the overall user experience, usability, and usefulness (i.e., remote manipulation and support for multiple simultaneous windows and desktops) remain unmet. To address these limitations, CognitOS Board equips presenters with appropriate tools (as described in requirements A–J) that enable them to interact with the board in a natural and intuitive manner. Aiming to promote interaction and minimize the effort presenters must make to orchestrate the windows on such a large display, CognitOS Board also introduces a number of innovative features (i.e., Summon, Follow-Me, and Board Manager) that none of the existing systems include. Furthermore, CognitOS Board features sophisticated mechanisms that permit intercommunication with Intelligent Environments (IEs); for example, the IE can inform the system about the user’s location in front of the board or the lighting conditions of the room. These characteristics aim to improve the overall User Experience (UX) and offer opportunities that isolated systems cannot provide.
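The intercommunication with the IE can be pictured as event routing: the environment publishes typed events, and the board updates its state accordingly. The sketch below assumes a hypothetical event format (a `type` string plus a `payload` dictionary) and a hypothetical lighting rule (switching to a dark theme below 50 lux); neither is taken from the CognitOS Board implementation.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class BoardContext:
    presenter_x: float = 0.0  # presenter position along the board, in metres
    theme: str = "light"      # hypothetical UI theme


def on_user_location(ctx: BoardContext, payload: dict) -> None:
    ctx.presenter_x = float(payload["x"])


def on_lighting(ctx: BoardContext, payload: dict) -> None:
    # Hypothetical rule: in a dim room, switch to a dark theme to reduce glare.
    ctx.theme = "dark" if payload["lux"] < 50 else "light"


HANDLERS: Dict[str, Callable[[BoardContext, dict], None]] = {
    "user_location": on_user_location,
    "lighting": on_lighting,
}


def dispatch(ctx: BoardContext, event: dict) -> bool:
    """Route an IE event to its handler; return False if the type is unknown."""
    handler = HANDLERS.get(event.get("type", ""))
    if handler is None:
        return False
    handler(ctx, event.get("payload", {}))
    return True


ctx = BoardContext()
dispatch(ctx, {"type": "user_location", "payload": {"x": 3.2}})
dispatch(ctx, {"type": "lighting", "payload": {"lux": 20}})
print(ctx)  # BoardContext(presenter_x=3.2, theme='dark')
```

A table of handlers keeps new context sources (e.g., further sensors) additive: supporting one means registering one more function rather than editing a chain of conditionals.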

Table 2.

Comparison matrix of Interactive Board software.

3. Design Process of the CognitOS Board

3.1. Methodology

The process followed while designing the system was the Design Thinking methodology [35]. In a series of meetings with several potential end-users (male and female users aged between 20 and 45 with experience in giving presentations), scenarios and personas were created for the “Empathize” and “Define” steps of Design Thinking. Next, for the “Ideate” step, multiple brainstorming sessions were organized, producing dozens of ideas, which were then filtered through interviews with domain experts (i.e., software engineers and technical installation experts). This process identified and excluded the (currently) unfeasible ideas; for example, some ideas required the presenter to annotate the contents of the board from a distance, which is not optimally supported by the available hardware. Experienced interaction designers also reviewed the ideas and offered valuable insights and comments, as well as their preferences regarding which ideas had the most potential in terms of innovation, research interest, and likelihood of being accepted by end-users (e.g., ideas that sounded attractive/cool/fun).

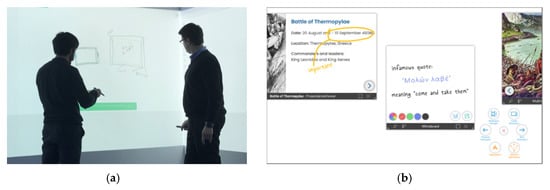

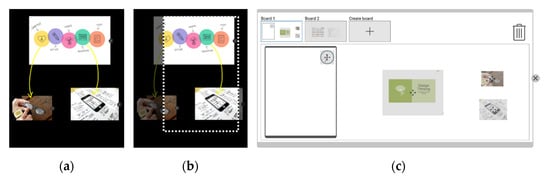

By properly shaping those ideas, a set of preliminary system requirements was extracted, from which the final requirements of the CognitOS Board were elaborated (Section 3.3). In the Prototype phase, the design team followed an iterative design process in which low-fidelity (Figure 2a) and high-fidelity interactive prototypes (Figure 2b) were created for the most promising ideas that emerged from the ideation phase. Moreover, before proceeding with user testing, a cognitive walkthrough evaluation of the CognitOS Board was conducted with five (5) User Experience (UX) experts. The goal was to assess the overall concept and its potential, identify any unsupported features, and uncover potential usability errors by noting the experts’ comments and general opinion. Finally, after improving the system based on the findings of the cognitive walkthrough, a user-based evaluation was organized in order to observe real users interacting with the system and gain valuable insights.

Figure 2.

(a) Design process—low-fidelity prototypes and (b) high-fidelity prototypes of the CognitOS Board.

3.2. Motivating Scenarios

Scenario building is a widely used requirements elicitation method [36] that can systematically contribute to the process of developing requirements. Scenarios are characterizations of users and their tasks in a specified context, which offer concrete representations of a user working with a computer system in order to achieve a particular goal. Their primary objective in the early phases of design is to generate end-user requirements and usability aims.

The following sections present two envisioned scenarios where a university professor and two managers of the Mobile-x company use the CognitOS Board during their presentation. In both cases, the CognitOS Board is projected on the front (main) wall of a room and on one of its side walls.

3.2.1. Teaching a University Class

Raymond teaches Human Computer Interaction at the Computer Science Department of a large university. The department features an Intelligent Classroom that has been assigned to Raymond’s course.

For today’s lecture, Raymond wants to introduce the Design Thinking process. He has retrieved a couple of images and an interesting video that he wishes to share with his students. So, in order to save time, he uses the PC in his university office to prepare the contents of the board before the lecture. To do so, he launches the “CognitOS Board” desktop application and simply drags the multimedia and presentation files onto the empty board. In order to present the video in full screen without rearranging the remaining items on the board, he decides to create a new board and moves the respective file there. At 14:50, he leaves his office and heads towards the “Intelligent Amphitheater” to start his lecture.

He enters the room and greets a group of students who have already settled in. As he waits for the remaining students to join, Raymond launches the application that presents the course’s schedule in order to remind the students that they have an upcoming deadline.

Since this application presents secondary information, he uses the Board Manager tool in order to send it to the side wall of the classroom.

At this point all students have already arrived in the amphitheater, so Raymond greets them, reminds them of their assignment deadline and starts lecturing based on the PowerPoint presentation that he has prepared.

As he reaches slide number five, which displays a representation of the Design Thinking process, he captures an instance of the diagram and moves it to the right side of the board. He then pins it at this location and moves the two pictures that he had previously loaded next to it. The first picture relates to the Empathize step of the process (depicted in the diagram previously captured from the presentation file), so he uses the annotation functionality to draw a connection between the two images.

He verbally explains the importance of that step and then “swipes up” to reveal the video player he has already prepared, which plays a film showcasing an experiment carried out in a few restaurants in São Paulo, where customers were surprised with uncomfortable objects.

As soon as the video ends, he uses the same gesture to return to the primary board containing the presentation. He then continues explaining the steps of the Design Thinking process. For the Prototype step, he uses the annotation functionality to draw a connection between the step and the second image that is already on the board. To showcase the process of prototyping, he creates a new empty board via the Board Manager tool, launches the Whiteboard application, and sketches a login box. Next, he returns to the primary board, captures the three images along with the annotations, and sends the file to the students. Finally, Raymond moves towards the side wall and reveals a hidden workspace containing the exercise submission application.

3.2.2. Meeting Room Presentation

The company Mobile-x, located in a big city, wants to introduce an updated version of its mobile device. Today, the company’s executives have organized a meeting with the sales and marketing managers regarding the sales and advertising campaign for the product.

For today’s meeting both Niki, the sales manager, and Ryan, the marketing manager, have prepared the content of their presentations using the “CognitOS Board” desktop application.

The meeting is about to start, so Niki makes an “S” gesture on the board’s surface to launch the prepared content. The main wall displays a presentation file containing information about the past sales of the previous mobile model, while the side wall displays relevant graphs and plots, as well as the key points to be discussed in the meeting. Niki starts the presentation and, as she reaches the slide that contains a detailed table of last year’s monthly sales, she uses the annotation tool to highlight the months with the lowest revenue.

Then, she summons the plot application next to the presentation file. As a result, the window displaying the plot of monthly advertising expenses relocates from the side wall and appears at the location Niki indicated. At this point, she explains to the meeting participants that when the monthly expenditure on advertising is low, revenue shrinks. To refer to this information later, she captures the contents of both the presentation file and the plot window.

Niki continues with the presentation of the company’s sales data; on a subsequent slide, she lists statistics related to the sales per store in the city area. To illustrate these findings, she has already launched the map application on a second board and has added information correlating these statistics with the location of each store. In order to reveal the second board, she uses the paging component on the top area of the board. After completing this demonstration, she returns to the primary board containing the presentation file.

In the second part of the meeting, Ryan proceeds with the presentation of the product’s advertising campaign. The board initializes with the content that Ryan has prepared, and since he is left-handed, the window menu appears on the left side of the window frame. He carries on presenting general information about the campaign; he explains the positive impact of advertising the product on monthly sales, and then “swipes up” to reveal the second board containing the three proposed videos and the candidate posters for the campaign. Concluding his presentation, he asks the participants to vote for one of the videos and one of the posters. They have five minutes to vote, so Ryan launches the countdown application. As soon as the voting is completed, Ryan moves to the side wall and reveals the results.

The meeting is now completed, so Niki makes a “C” gesture to “switch off” the board and to set its status to “idle”.

3.3. High-Level Requirements

This section presents the high-level requirements that CognitOS Board and the related front-end tools (i.e., desktop, tablet, mobile applications) need to satisfy (Table 3). Such requirements have been collected through an extensive literature review and an iterative elicitation process based on multiple collection methods, including brainstorming, focus groups, observation and scenario building.

Table 3.

High-level requirements of the CognitOS Board.

4. The Technological Infrastructure of CognitOS Board

4.1. Board Installation

CognitOS Board is deployed within the Whiteroom of ICS-FORTH, an interactive mixed-reality environment located in an Ambient Intelligence Research Facility. The room is a 5 m × 5 m simulation space with six EPSON EB-696Ui projectors, along with their accompanying touch sensors, mounted on the ceiling. The touch sensors transform the walls into touch-enabled surfaces, whereas the integrated speakers enhance the space with audio capabilities that can create a multi-dimensional sound experience. Moreover, three Microsoft Kinect 2.0 sensors are mounted at the vertices of a triangle inscribed in the room, enabling high-quality video and depth data capture of the entire space. CognitOS Board uses three of the projectors to create a large, L-shaped interactive display projected on the front and side walls (5 m and 2.5 m long, respectively); on both walls, the lowest projection point is 0.9 m above the floor and the highest is at 2.3 m, to ensure reachability. In terms of computational resources, a single Alienware PC equipped with an NVIDIA NVS 810 graphics card drives video output to all three projectors simultaneously.
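The installation geometry above can be expressed in a few lines. The following sketch uses the dimensions stated in the text (a 5 m front wall, a 2.5 m side wall, and a projected area between 0.9 m and 2.3 m above the floor) and assumes that the L-shaped board is addressed as one unfolded horizontal strip, with the front wall first. The `REACH_FACTOR` used to place elements within a user’s reach is an assumed value for illustration, not a figure reported for the system.

```python
from typing import Tuple

FRONT_WIDTH = 5.0        # metres of projection on the front wall (from the text)
SIDE_WIDTH = 2.5         # metres of projection on the side wall (from the text)
MIN_Y, MAX_Y = 0.9, 2.3  # lowest/highest projected points above the floor (from the text)
REACH_FACTOR = 0.8       # assumption: comfortable reach as a fraction of user height


def to_wall(s: float) -> Tuple[str, float]:
    """Map a position along the unfolded L-shaped board to (wall, local x).

    Assumes the front wall spans 0-5 m and the side wall continues at 5-7.5 m.
    """
    if not 0.0 <= s <= FRONT_WIDTH + SIDE_WIDTH:
        raise ValueError(f"{s} m lies outside the board")
    if s < FRONT_WIDTH:
        return "front", s
    return "side", s - FRONT_WIDTH


def reachable_height(user_height: float) -> float:
    """Height (m) at which to place an element, clamped to the projected area."""
    return min(max(REACH_FACTOR * user_height, MIN_Y), MAX_Y)


print(to_wall(6.0))           # ('side', 1.0)
print(reachable_height(1.0))  # 0.9 (0.8 m would fall below the projection)
```

Clamping guarantees that height-adapted elements never leave the projected band, whatever height the tracker reports.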

Software-wise, the Whiteroom is accompanied by a mobile application—developed in-house—to assist with the control and management of the technological infrastructure (e.g., power on or off a projector and cycle through input sources). Moreover, every deployed application is registered as a service that can be remotely activated or deactivated on demand through the app. With respect to touch-based interaction, the LASIMUP approach [37] is used to allow touch interaction along all six (three in the case of CognitOS Board) projected views. Finally, motion tracking software [38] running on top of the ROS framework [39] broadcasts real-time motion capture data such as user detection, localization and tracking, to enable applications to make context-sensitive decisions.

4.2. Implementation Details

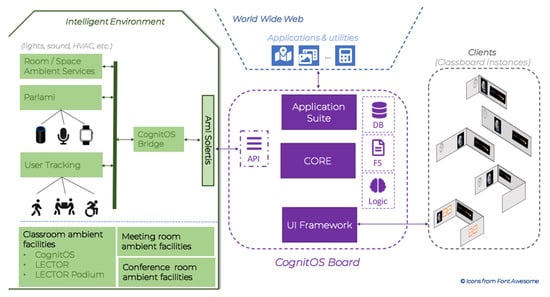

From an engineering perspective, the CognitOS Board framework follows a microservices architecture [40] and is implemented as a suite of independent, lightweight, self-contained (micro) services, which are conceptually clustered in the following categories [41]: (i) the core, (ii) the UI Framework, and (iii) the Application Suite. The core component is at the center of the architecture (Figure 3), since it is responsible for orchestrating the other components and services. In particular, the decision-making microservice schedules all the outgoing requests related to the Application Suite, the user interface (UI), and the intelligent environment, and handles (through the CognitOS Board’s RPC microservice) the incoming requests and events that aim to update the state of any of the board’s instances. The UI Framework handles the interaction of users with the system and manages the placement of windows in each individual client by applying the appropriate principles (e.g., minimize overlaps and open new windows nearby). Finally, the Application Suite instantiates and manages the state of the external and built-in applications that are hosted in every CognitOS Board instance, and provides a set of commonly used utilities in the form of microservices (e.g., File Storage and State Storage).
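To illustrate the two placement principles named above (minimize overlaps, open new windows nearby), the following is a minimal sketch of one possible heuristic, not the UI Framework's actual algorithm. It assumes the L-shaped display can be treated as a flat 750 cm × 140 cm strip (5 m + 2.5 m of wall, 0.9 m to 2.3 m high) and scans a coarse grid of candidate positions; `Rect` and `place_window` are hypothetical names.

```python
from dataclasses import dataclass
from math import hypot


@dataclass(frozen=True)
class Rect:
    x: int  # left edge, cm from the start of the (flattened) board
    y: int  # top edge, cm from the top of the projection band
    w: int
    h: int

    def overlap(self, other: "Rect") -> int:
        """Area shared by two axis-aligned rectangles (0 if disjoint)."""
        dx = min(self.x + self.w, other.x + other.w) - max(self.x, other.x)
        dy = min(self.y + self.h, other.y + other.h) - max(self.y, other.y)
        return dx * dy if dx > 0 and dy > 0 else 0


def place_window(w, h, anchor, existing, board_w=750, board_h=140, step=10):
    """Scan a grid of candidate positions and return the one with the least
    total overlap, breaking ties by the distance from the window centre to
    `anchor` (e.g. the point where the user asked for the window)."""
    best, best_key = None, None
    for x in range(0, board_w - w + 1, step):
        for y in range(0, board_h - h + 1, step):
            cand = Rect(x, y, w, h)
            overlap = sum(cand.overlap(r) for r in existing)
            dist = hypot(x + w / 2 - anchor[0], y + h / 2 - anchor[1])
            key = (overlap, dist)  # lexicographic: overlap first, then nearness
            if best_key is None or key < best_key:
                best, best_key = cand, key
    return best


# One full-height window already occupies the left 2 m of the board.
existing = [Rect(0, 0, 200, 140)]
print(place_window(200, 140, anchor=(100, 70), existing=existing))
# Rect(x=200, y=0, w=200, h=140)
```

Ranking overlap before distance means a new window never covers an existing one when free space exists; a real implementation would likely also weigh user preferences and past knowledge, as described for the board in Section 5.1.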

Figure 3.

Overview of the CognitOS Board architecture at a software level.

CognitOS Board constitutes a Software-as-a-Service (SaaS) framework by design. It exposes its entire functionality through the AmI-Solertis framework [19], so that the ambient facilities (i.e., services and agents) can propagate information about the overall context of use (e.g., the environment’s state and user actions) and implicitly or explicitly control its behavior or enhance its functionality on demand (e.g., integrate voice interaction [42] and enable location-based actions [43]). For example, if deployed inside an Intelligent Classroom [16], the CognitOS Board architecture enables the delivery of context-sensitive interventions [44] that aim to relax stressed students or increase the attention level of distracted students through sophisticated educational applications [45,46], while it also permits the implementation of external end-user applications that facilitate its control (e.g., enable remote management [47]).
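The event-driven coupling described above, where ambient services publish context and the board reacts, can be sketched with a plain in-process publish/subscribe bus. This is an illustrative stand-in only: it does not model the AmI-Solertis API, and the event name `student.stressed`, the payload fields, and the `relaxation-app` identifier are hypothetical.

```python
from collections import defaultdict
from typing import Callable, Dict, List


class ContextBus:
    """In-process stand-in for the ambient facilities' messaging layer."""

    def __init__(self) -> None:
        self._handlers: Dict[str, List[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Callable[[dict], None]) -> None:
        self._handlers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict) -> int:
        """Deliver `payload` to every subscriber and return how many received it."""
        handlers = self._handlers.get(event_type, [])
        for handler in handlers:
            handler(payload)
        return len(handlers)


class BoardService:
    """Hypothetical board-side consumer that reacts to classroom context."""

    def __init__(self, bus: ContextBus) -> None:
        self.launched: List[tuple] = []
        bus.subscribe("student.stressed", self.on_stress)

    def on_stress(self, payload: dict) -> None:
        # Illustrative intervention: open a (hypothetical) relaxation app.
        self.launched.append(("relaxation-app", payload["student_id"]))


bus = ContextBus()
board = BoardService(bus)
bus.publish("student.stressed", {"student_id": "s7"})
print(board.launched)  # [('relaxation-app', 's7')]
```

Because the board only subscribes to event types, new ambient services can be added without changing the board itself, which is the property the SaaS design aims for.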

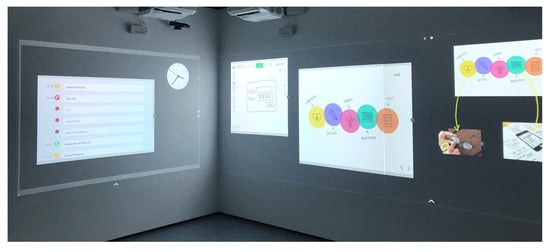

5. The CognitOS Board Framework

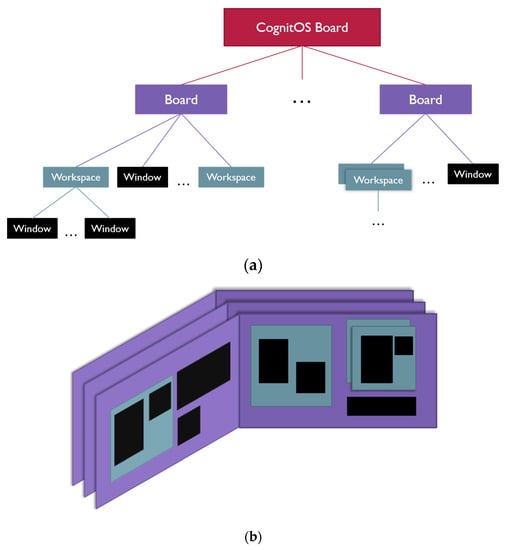

The CognitOS Board (Figure 4) provides a sophisticated window manager—appropriate for large displays—able to support the effortless manipulation of applications during presentations, while equipping presenters with useful tools to enhance interaction with the audience. Before proceeding with the description of the offered functionality, the terminology used in this work is introduced, namely, (A) wall, (B) board, (C) workspace, (D) window, and (E) application (Figure 5a).

Figure 4.

A snapshot of CognitOS Board.

Figure 5.

(a) Representation of the CognitOS Board components’ hierarchy. (b) Visualization of a sample system instantiation.

Wall. The system can be expanded on one or more physical walls. There is no limitation regarding the positioning of the walls inside a room; for example, the board might take up two adjacent or two facing walls.

Board. The board spans across the available walls and is able to host workspaces and windows. Presenters can create alternative boards, although only one board is visible at a time. That way, when users alternate amongst them (similarly to the multiple desktops of Windows 10), the audience is presented with entirely new content.

Workspace. A workspace is an area of a board that can host windows. Workspaces allow presenters to organize windows into groups and manipulate them concurrently (e.g., move them together). A workspace can have alternative views, only one of which is visible at a time.

Window. A window is the graphical control element that hosts applications. It can be moved, resized and pinned, while it offers various extra features that will be described later.

Applications. In order to support users during a presentation, an application library is provided, consisting of both built-in and external applications.

To summarize, the system is able to transform the walls of a room into an Interactive Board, which can host windows and workspaces (containers of windows). Presenters can create alternative boards, which they can reveal/hide on demand, while this functionality is also available for the workspaces, offering a highly modular display area. The benefits of multiple boards and windows are explained in the following sections.
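The containment hierarchy summarized above (walls host a board; a board hosts loose windows and workspaces; workspaces group windows; windows host applications) can be captured in a few data classes. The sketch below is an assumed model for illustration, not the framework's internal schema; all class and field names are hypothetical, and alternative workspace views are omitted for brevity.

```python
from dataclasses import dataclass, field
from typing import Iterator, List


@dataclass
class Application:
    name: str
    builtin: bool = True  # built-in vs external application


@dataclass
class Window:
    app: Application
    x: int  # cm along the board
    y: int
    w: int
    h: int
    pinned: bool = False


@dataclass
class Workspace:
    windows: List[Window] = field(default_factory=list)  # moved as a group


@dataclass
class Board:
    windows: List[Window] = field(default_factory=list)  # ungrouped windows
    workspaces: List[Workspace] = field(default_factory=list)

    def all_windows(self) -> Iterator[Window]:
        yield from self.windows
        for ws in self.workspaces:
            yield from ws.windows


@dataclass
class Wall:
    name: str
    width_cm: int


@dataclass
class BoardSystem:
    walls: List[Wall]  # the board spans every wall in this list
    boards: List[Board] = field(default_factory=list)
    active: int = 0  # only one board is visible at a time

    def total_width_cm(self) -> int:
        return sum(wall.width_cm for wall in self.walls)

    def visible_windows(self) -> List[Window]:
        return list(self.boards[self.active].all_windows())


slides = Window(Application("Presentation Viewer"), 0, 0, 300, 140)
video = Window(Application("Video Player"), 320, 0, 200, 100)
system = BoardSystem(
    walls=[Wall("front", 500), Wall("side", 250)],
    boards=[Board(windows=[slides], workspaces=[Workspace([video])])],
)
print(system.total_width_cm(), len(system.visible_windows()))  # 750 2
```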

As far as the interaction paradigm is concerned, CognitOS Board enhances well-established interaction metaphors (e.g., writing on the digital board is similar to writing on a plain whiteboard) and supports multimodal interaction through the following: (i) touch on the wall surface with the finger or a special marker, (ii) a remote presenter, (iii) voice commands, (iv) sophisticated techniques that take advantage of the IE’s facilities (e.g., through user position tracking an application window can tail the user while moving around), and (v) the controller application for handheld devices (tablet).

During the design process, the team came across common challenges that often arise when designing for large public displays, which are described in detail in [48]. In summary, the main challenge was to alleviate the reachability constraints stemming from the need to interact with objects scattered across a wall-sized display, while also ensuring that interactivity is not affected by the users’ physical characteristics (e.g., tall vs. short users, and wheelchair users). Additionally, given the large size of the projected area, extra focus was given to developing a mechanism able to draw the attention of the audience to the active application.

5.1. The Board

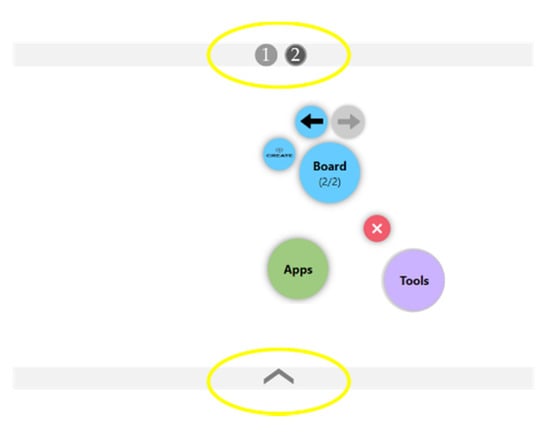

The presenter can create a new board on-the-fly or open a previously defined one. The ability to define a board a priori helps users prepare their material offline and saves the limited presentation time that would otherwise be spent launching and positioning the desired applications. CognitOS Board permits users to create multiple boards for a better organization of application windows. For example, a presenter might choose to use a side wall to display various utilities relevant to the presentation (e.g., a map) and the primary wall to show the presentation file. Presenters can switch between active boards in a linear manner (i.e., from the first to the second, etc.) or select a specific one. Additionally, two or more boards can be merged on demand by moving all their applications to a single board. At the bottom of a board there is a dedicated touch-enabled area, where the user can perform a swipe-up gesture to reveal the next board (Figure 6). At the top of the board, an interactive control (in the form of numeric pagination) indicates the number of existing boards. This control shows which board is active (e.g., the second of three existing boards is visible), and users can load a specific board by simply clicking on the corresponding number of the paging component.
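The board operations described above (linear switching, direct selection through the pagination control, and merging) can be sketched as follows. The names are hypothetical, each board is reduced to a list of window identifiers, and two behaviors are assumptions rather than documented facts: "next" wraps around from the last board to the first, and the merged board becomes the active one.

```python
from typing import Iterable, List


class BoardNavigator:
    """Sketch of board switching; each board is a list of window ids."""

    def __init__(self, boards: Iterable[Iterable[str]]) -> None:
        self.boards: List[List[str]] = [list(b) for b in boards]
        self.active = 0

    def next(self) -> int:
        # Swipe-up gesture: reveal the following board (wrap-around assumed).
        self.active = (self.active + 1) % len(self.boards)
        return self.active

    def goto(self, page: int) -> None:
        """Tap on the pagination control; `page` is 1-based, as displayed."""
        if not 1 <= page <= len(self.boards):
            raise IndexError(f"no board {page}")
        self.active = page - 1

    def merge(self, target: int, sources: Iterable[int]) -> None:
        """Move every window of the `sources` boards onto `target` (0-based)."""
        drop = set(sources) - {target}
        for s in sorted(drop):
            self.boards[target].extend(self.boards[s])
        kept = [i for i in range(len(self.boards)) if i not in drop]
        self.boards = [self.boards[i] for i in kept]
        self.active = kept.index(target)  # the merged board becomes active

    def indicator(self) -> str:
        return f"{self.active + 1}/{len(self.boards)}"


nav = BoardNavigator([["slides"], ["video-1", "poster-1"], ["map"]])
nav.next()
print(nav.indicator())  # 2/3
nav.merge(0, [2])
print(nav.boards, nav.indicator())  # [['slides', 'map'], ['video-1', 'poster-1']] 1/2
```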

Figure 6.

Navigating between boards.

Each board can host multiple windows, and, when required (e.g., when there is not enough space), an underlying intelligent mechanism can automatically rearrange their positions or toggle their visibility based on numerous parameters (e.g., available space, user preferences, and past knowledge).

5.2. The Workspace

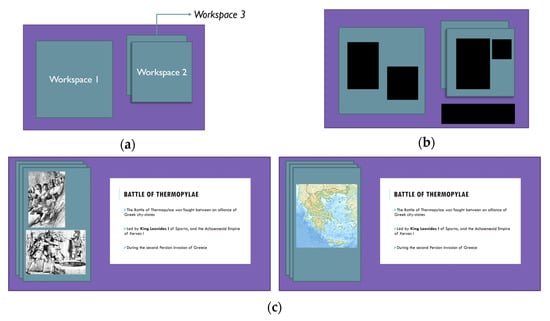

The user can group two or more application windows and organize them in a workspace (Figure 7a,b). As depicted in Figure 8a, the workspace component is framed; this frame is used to move and resize the workspace. The presenter can create several workspaces on a board, provided that they fit in the available space. For example, a board can contain two large workspaces that together cover the entire board, or several smaller workspaces. Additionally, users can create up to four alternative views for each workspace. In more detail, behind each visible workspace, other workspaces of exactly the same dimensions can be created, containing different application windows. The presenter can alternate amongst these workspaces and reveal new content at a specific location on the board. For example, consider an educator giving a lecture on the Battle of Thermopylae; the educator keeps the PowerPoint file visible on the right side of the board and alternates between two parallel workspaces, placed on the left side of the board, which contain the Multimedia application window and the Map application window, respectively (Figure 7c). Navigation amongst the alternative workspaces is achieved through a pagination component located on the workspace frame (Figure 8a).
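A workspace with alternative views can be modeled as one shared frame plus a short list of window groups, of which only one is shown. The sketch below is a hypothetical model (class and method names are not from the framework); it enforces the four-view limit stated above and assumes window positions are stored relative to the frame, so moving the frame moves every view.

```python
from typing import List, Sequence, Tuple


class Workspace:
    MAX_VIEWS = 4  # the text allows up to four alternative views

    def __init__(self, frame: Tuple[int, int, int, int], first_view: Sequence[str]) -> None:
        self.frame = frame  # (x, y, w, h) shared by all views
        self.views: List[List[str]] = [list(first_view)]
        self.current = 0

    def add_view(self, windows: Sequence[str]) -> int:
        if len(self.views) >= self.MAX_VIEWS:
            raise ValueError("a workspace supports at most four views")
        self.views.append(list(windows))
        return len(self.views)  # 1-based page number of the new view

    def show(self, page: int) -> List[str]:
        """Select a view from the frame's pagination control (1-based)."""
        if not 1 <= page <= len(self.views):
            raise IndexError(f"no view {page}")
        self.current = page - 1
        return self.views[self.current]

    def move(self, dx: int, dy: int) -> None:
        # Window positions are assumed relative to the frame, so moving
        # the frame relocates every view and its windows together.
        x, y, w, h = self.frame
        self.frame = (x + dx, y + dy, w, h)


# Thermopylae lecture: two parallel views on the left part of the board.
left = Workspace((0, 0, 350, 140), ["Multimedia"])
left.add_view(["Map"])
print(left.show(2))  # ['Map']
```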

Figure 7.

(a) Several workspaces can be created on the board, and behind each visible workspace, other workspaces of exactly the same dimensions can be created. (b) Applications can be grouped and organized in a workspace. (c) Representation of alternative workspaces.

Figure 8.

(a) An example of a workspace. (b) Reachable interactive components.

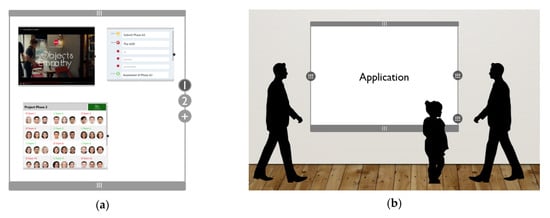

5.3. The Window

Windows can host either built-in or external applications, which are described in Section 5.6. The CognitOS Board windows have a thin frame that is used to move and resize them; by default, the frame has a subtle greyish tint (Figure 8b), but its color can change in order to draw the attention of the audience to a specific application. Furthermore, the window frame contains a menu button, which opens a context-sensitive menu (Section 5.4). The position of the button depends on the location of the user who interacts with it; for example, if the user approaches the right side of the window, the button appears on the right of the frame and is vertically aligned at an appropriate position (depending on the height of the user) to ensure reachability. Additionally, users can manually perform a variety of actions on the application windows, including closing, maximizing, resizing, pinning, and grouping. The pin functionality refers to the ability to fix the position of an application window within a specific board.
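The adaptive placement of the menu button can be expressed as a small function of the window geometry and the tracked user. The sketch below is one plausible rule, not the system's implementation: the side follows the half of the window the user stands in front of, and the height is a fraction of the user's body height clamped to the window frame. The `reach_ratio` of 0.75 is an illustrative assumption, and the handedness fallback, used when no user is tracked yet, mirrors the left-handed presenter in the meeting scenario.

```python
from typing import Optional, Tuple


def menu_button_position(
    win_left: float, win_width: float, win_bottom: float, win_top: float,
    user_x: Optional[float], user_height: float,
    left_handed: bool = False, reach_ratio: float = 0.75,
) -> Tuple[str, float]:
    """Return (side, height) for the window-frame menu button.

    Distances are in metres: x along the wall, heights above the floor.
    `reach_ratio` (fraction of body height treated as comfortable reach)
    is an illustrative assumption, not a value from the paper.
    """
    if user_x is None:
        # Nobody tracked near the window yet: fall back to the presenter's hand.
        side = "left" if left_handed else "right"
    else:
        side = "left" if user_x < win_left + win_width / 2 else "right"
    # Clamp the preferred height to the vertical extent of the frame.
    height = min(max(user_height * reach_ratio, win_bottom), win_top)
    return side, round(height, 2)


print(menu_button_position(1.0, 2.0, 0.9, 2.3, user_x=2.8, user_height=1.80))
# ('right', 1.35)
```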

5.4. Context-Sensitive Menu

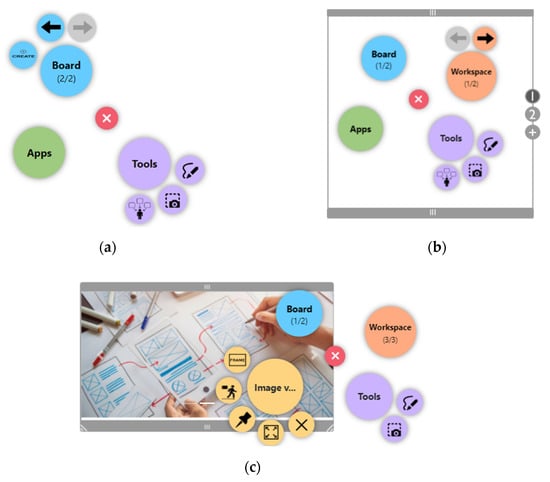

The CognitOS Board offers a context-sensitive menu that follows a modular design. The contained functionality is organized under five categories:

- Board: includes functions that apply to boards (e.g., next and previous).

- Workspace: includes functions that apply to workspaces (e.g., next and previous).

- Application window: includes functions that apply to windows (e.g., pin and hide frame) and some application specific functions (e.g., play video).

- Tools: includes global utilities (e.g., annotate and capture).

- Applications: includes shortcuts to relevant or recently used applications.

Each of the above categories is visualized as a circle colored with a unique hue and surrounded by its available functions, which are depicted as smaller circles of the same color (Figure 9). The larger circles, representing the categories, are organized in a circular layout, and each one is either expanded (to reveal its functions) or collapsed, depending on the component over which the menu is launched (i.e., board, workspace, or window). For example, if the user opens the menu over an empty area of the board, the menu is adjusted to reveal the board-related functions (e.g., create a new board), while the Tools and Applications categories are also expanded, granting access to global utilities and application shortcuts as well. Table 4 lists the various context-sensitive options that the menu can display.
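The expand/collapse rule can be written as a lookup from the component under the menu to the set of expanded categories. Only the board case is spelled out in the text; the sketch below assumes, by analogy, that the workspace and window menus expand their own category together with Tools and Applications. Table 4 remains the authoritative description, and the function and constant names are hypothetical.

```python
from typing import Dict

CATEGORIES = ("Board", "Workspace", "Application window", "Tools", "Applications")

# Category whose functions apply to the component under the menu.
TARGET_CATEGORY = {
    "board": "Board",
    "workspace": "Workspace",
    "window": "Application window",
}


def menu_state(target: str) -> Dict[str, bool]:
    """Map each category to True (expanded) or False (collapsed)."""
    expanded = {TARGET_CATEGORY[target], "Tools", "Applications"}
    return {category: category in expanded for category in CATEGORIES}


print([c for c, open_ in menu_state("board").items() if open_])
# ['Board', 'Tools', 'Applications']
```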

Figure 9.

(a) Board-specific menu. (b) Workspace-specific menu. (c) Window-specific menu.

Table 4.

Context-sensitive menu states.

5.5. CognitOS Board Tools

Apart from hosting applications (e.g., Whiteboard, Presentation Viewer, Video Player, Calculator, etc.)—organized into boards and workspaces—the system offers a set of tools aiming to enhance presentations. By using these tools, presenters can easily manipulate (e.g., resize, close, and hide) boards, workspaces, and windows; create new boards and workspaces; launch new applications; and set their positions.

In more detail, the available tools are as follows:

Follow-Me. The users can select a specific window to follow their moves (Figure 10b). When the Follow-Me functionality is enabled, the window is transferred into a new layer on top of the other windows of the board. At the same time, the system monitors the movement of the user inside the physical environment (via the position tracking service) and relocates the window accordingly. That way, the user has constant access to the window and can interact with its contents immediately. As soon as the user disables the Follow-Me functionality, the window returns to its initial position.
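The Follow-Me behavior amounts to saving the window's state, lifting it to the top layer, re-centering it on each position update, and restoring the saved state on disable. The sketch below assumes the position tracking service delivers the user's horizontal position in centimeters along a flattened board; the class name and dictionary keys are hypothetical.

```python
from typing import Optional, Tuple


class FollowMe:
    """Tracks one window; positions are in cm along a flat board of `board_width`."""

    def __init__(self, window: dict, board_width: int) -> None:
        self.window = window  # keys: x, y, w, layer
        self.board_width = board_width
        self._saved: Optional[Tuple[float, float, int]] = None

    def enable(self, top_layer: int) -> None:
        # Remember where the window was, then lift it above the others.
        self._saved = (self.window["x"], self.window["y"], self.window["layer"])
        self.window["layer"] = top_layer

    def on_user_moved(self, user_x: float) -> None:
        """Callback for position-tracking updates (user x in cm)."""
        if self._saved is None:
            return  # Follow-Me is off: ignore tracking data
        x = user_x - self.window["w"] / 2  # centre the window on the user
        self.window["x"] = min(max(x, 0), self.board_width - self.window["w"])

    def disable(self) -> None:
        # Restore the original position and stacking order.
        if self._saved is not None:
            self.window["x"], self.window["y"], self.window["layer"] = self._saved
            self._saved = None


win = {"x": 100, "y": 20, "w": 120, "layer": 2}
follow = FollowMe(win, board_width=750)
follow.enable(top_layer=10)
follow.on_user_moved(400)
print(win["x"], win["layer"])  # 340.0 10
follow.disable()
print(win)  # {'x': 100, 'y': 20, 'w': 120, 'layer': 2}
```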

Figure 10.

(a) Summon tool. (b) Follow-Me tool.

Summon. Users can “summon” a selected window near their position. In more detail, the Summon tool displays a list of all applications that are currently running on the active board (Figure 10a), from which users can select an application and “summon” it near them. That way, users minimize their movements, since they do not have to relocate near the desired application, while reachability constraints are also alleviated (e.g., height and movement constraints).
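A Summon step can be sketched as two functions: one that lists the applications running on the active board, and one that repositions the chosen window next to the user. The placement rule here (a 0.3 m gap to the user's right, or to the left if the window would run off the board) is a hypothetical example, positions are horizontal only and in meters, and vertical placement is omitted.

```python
from typing import List


def running_apps(board: List[dict]) -> List[str]:
    """Names shown in the Summon list: every window on the active board."""
    return sorted(w["app"] for w in board)


def summon(board: List[dict], app: str, user_x: float,
           board_width: float, gap: float = 0.3) -> dict:
    """Move `app`'s window next to the user (x positions in metres).

    Hypothetical rule: place the window `gap` metres to the user's right;
    if it would run off the board, place it to the user's left instead.
    """
    win = next(w for w in board if w["app"] == app)
    x = user_x + gap
    if x + win["w"] > board_width:
        x = user_x - gap - win["w"]
    win["x"] = round(max(0.0, x), 2)
    return win


board = [{"app": "Map", "x": 6.0, "w": 1.2}, {"app": "Clock", "x": 0.2, "w": 0.5}]
print(running_apps(board))  # ['Clock', 'Map']
print(summon(board, "Map", user_x=1.0, board_width=7.5))
# {'app': 'Map', 'x': 1.3, 'w': 1.2}
```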

Annotate. The user can activate or deactivate the annotation functionality on demand. Upon activation, a new transparent layer is enabled on top of the active board items (i.e., windows or workspaces), and permits presenters to handwrite notes and highlight content (Figure 11a). Annotation is not application specific, meaning that the user can write on top of two or more windows/workspaces in a single run. This utility is accompanied by a small toolbox that offers access to useful functionality, i.e., save, undo/redo, clear and annotation color selection.
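Because the annotation layer sits above all board items and records strokes in board coordinates, a single stroke can span several windows. The toolbox's undo/redo maps naturally onto the classic two-stack pattern sketched below. This is an illustrative model with hypothetical names; in this sketch, clearing the layer is not undoable, which is an arbitrary choice.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


class AnnotationLayer:
    """Transparent layer over the whole board; strokes use board coordinates,
    so a single stroke may cross several windows or workspaces."""

    def __init__(self) -> None:
        self.active = False
        self.color = "red"
        self.strokes: List[Tuple[str, Tuple[Point, ...]]] = []
        self._redo: List[Tuple[str, Tuple[Point, ...]]] = []

    def toggle(self) -> None:
        self.active = not self.active

    def draw(self, points: Sequence[Point]) -> None:
        if not self.active:
            return  # touches go to the underlying windows instead
        self.strokes.append((self.color, tuple(points)))
        self._redo.clear()  # a new stroke invalidates the redo history

    def undo(self) -> None:
        if self.strokes:
            self._redo.append(self.strokes.pop())

    def redo(self) -> None:
        if self._redo:
            self.strokes.append(self._redo.pop())

    def clear(self) -> None:
        # In this sketch, clearing is not undoable.
        self.strokes.clear()
        self._redo.clear()


layer = AnnotationLayer()
layer.toggle()
layer.draw([(10, 10), (400, 30)])  # crosses from one window into another
layer.color = "blue"
layer.draw([(50, 60), (90, 60)])
layer.undo()
print(len(layer.strokes))  # 1
layer.redo()
print(layer.strokes[-1][0])  # blue
```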

Figure 11.

(a) Annotation functionality. (b) Capture functionality. (c) Board manager.

Capture. It permits presenters to capture an image of an entire window, workspace, or board, or manually select a specific area of the board (containing windows and workspaces). Regarding the latter, when the presenter desires to manually capture an area, a new layer over the entire board is created, permitting the user to draw a diagonal line defining the rectangle whose contents are going to be captured (Figure 11b). As soon as the capture is completed, the image viewer application is launched displaying the captured image, enabling the presenter to further interact with it (e.g., share it with the audience).
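The manual capture mode needs two geometric steps: normalizing the drawn diagonal into a rectangle (the user may drag in any direction) and finding which windows or workspaces fall inside it. The sketch below covers only that geometry, with hypothetical function names; grabbing the actual pixels and opening the image viewer are outside its scope.

```python
from typing import Dict, List, Tuple

Rect = Tuple[float, float, float, float]  # x, y, width, height


def rect_from_diagonal(p: Tuple[float, float], q: Tuple[float, float]) -> Rect:
    """The user may drag the diagonal in any direction; normalise it."""
    (x1, y1), (x2, y2) = p, q
    return min(x1, x2), min(y1, y2), abs(x2 - x1), abs(y2 - y1)


def items_in_capture(area: Rect, items: Dict[str, Rect]) -> List[str]:
    """Names of windows/workspaces whose rectangles intersect the capture area."""
    ax, ay, aw, ah = area
    hits = []
    for name, (x, y, w, h) in items.items():
        if x < ax + aw and ax < x + w and y < ay + ah and ay < y + h:
            hits.append(name)
    return sorted(hits)


area = rect_from_diagonal((300, 120), (100, 20))
print(area)  # (100, 20, 200, 100)
print(items_in_capture(area, {"slides": (0, 0, 150, 140), "map": (400, 0, 200, 140)}))
# ['slides']
```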

Board manager. It provides a smaller view of the entire board (Figure 11c) and permits users to manipulate the state of the board with minimum effort, spending less time and making fewer body movements. When the user interacts with the board manager (e.g., rearranges or resizes windows, or groups windows into workspaces), the changes that he/she makes are automatically reflected on the entire board.
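Reflecting board-manager edits on the full board is essentially a coordinate scaling between the miniature and the real display. The sketch below assumes a hypothetical 300 × 56 pixel miniature and the 750 cm × 140 cm flattened board; the function names are illustrative.

```python
from typing import Tuple

Rect = Tuple[float, float, float, float]


def mini_to_board(r: Rect, mini: Tuple[float, float], board: Tuple[float, float]) -> Rect:
    """Scale a rectangle edited in the miniature view to board coordinates."""
    sx, sy = board[0] / mini[0], board[1] / mini[1]
    x, y, w, h = r
    return x * sx, y * sy, w * sx, h * sy


def board_to_mini(r: Rect, mini: Tuple[float, float], board: Tuple[float, float]) -> Rect:
    # The inverse mapping is the same scaling with the roles swapped.
    return mini_to_board(r, board, mini)


MINI = (300, 56)    # miniature size in pixels (hypothetical)
BOARD = (750, 140)  # board size in cm (5 m + 2.5 m wide, 1.4 m tall)
print(mini_to_board((40, 8, 80, 20), MINI, BOARD))  # (100.0, 20.0, 200.0, 50.0)
```

Keeping a single scaling function for both directions ensures that a window dragged in the miniature lands exactly where the miniature showed it.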

5.6. Applications Suite

In order to support users during presentations, the CognitOS Board provides a library of various applications and practical utilities. More specifically, the applications suite is divided into two categories: (i) built-in and (ii) external applications and utilities. Built-in applications were designed and developed for the CognitOS Board, while external applications were imported by following certain specifications (Table 5):

Table 5.

Specifications that external applications should satisfy to be successfully integrated.

- The Presentation viewer enables users to present, highlight, and annotate slides.

- The Image Viewer displays image content. Users can create collections of photos and share them with the audience.

- The Video Player, as implied by its name, displays video files.

- The Whiteboard is an external application that permits users to sketch and handwrite notes on the board (as one would do on a common whiteboard).

- The Calendar application presents the daily schedule of the user, as well as reminders for future events.

- The Calculator is an external application permitting the execution of advanced mathematical calculations.

- The Clock application supports alarms, timers, and a stopwatch. It can be customized to use different styles (e.g., analog or digital) and colors.

- The Geometry assistant is an external application able to identify handwritten geometry shapes and transform them into high-level computer-generated shapes.

- The Graph assistant is an external application offering similar functionality to the Geometry assistant, identifying handwritten graphs.

- The Map is an external application featuring an interactive world map.

- The Notes application can be pinned on top of other applications and permits users to create handwritten messages that are always visible.

- The Submission Viewer application is an educational application targeting Intelligent Classrooms, which provides an overview of the submitted exercises.

- The Exercise viewer is an educational application that targets Intelligent Classrooms and permits the online representation of various types of exercises (e.g., multiple-choice and fill in the blank).

- The Periodic table is an educational application targeting Intelligent Classrooms. It contains interactive chemical elements, which can be highlighted and grouped in order to create chemical compounds.

5.7. Interaction Paradigm

As a result of extensive research on interaction techniques, CognitOS Board was designed featuring multimodal interaction methods, including multi-touch, touch gestures, mouse gestures, mid-air gestures, voice commands, and user position tracking. The interaction with the various areas of the system (i.e., boards, workspaces, and windows) can be divided into two categories: on-surface interaction and above-surface (or air-based) interaction.

5.7.1. On-Surface Interaction

Interaction on the surface refers to touch interactions directly on the reachable parts of the display using fingers, hands, and tangible objects, in order to select, grab, throw, scale, and move objects, etc. CognitOS Board supports on-surface interaction both through the interactive marker and through the user’s fingers/hands. In more detail, the system can identify fine movements and distinguish the number of fingers and hands used at each moment, thus enabling the creation of an extensive library of gestures. Various on-surface gestures are employed to speed up interaction with windows and workspaces (e.g., pan left to move a window in that direction), while more advanced gestures (e.g., circles, numbers, and letters) can be used as time-saving shortcuts. Such shortcuts can trigger predefined system actions like opening a menu, alternating between workspaces, summoning applications, etc.
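Mapping recognized gestures to system actions can be kept declarative, so that new shortcuts are added as table entries. The sketch below is illustrative only: gesture recognition itself is assumed to happen upstream, the one-finger/two-finger pan convention and the "circle" binding are hypothetical, and the "C" binding mirrors the meeting scenario in which a "C" gesture switches the board off.

```python
from typing import Dict

# Shape shortcuts. "C" mirrors the meeting scenario; "circle" is hypothetical.
SHAPE_SHORTCUTS: Dict[str, str] = {
    "C": "switch_off_board",
    "circle": "open_menu",
}


def interpret(gesture: Dict) -> str:
    """Translate a recognised on-surface gesture into a system action name."""
    kind = gesture.get("kind")
    if kind == "pan":
        # Assumed convention: one finger moves the touched window,
        # two or more fingers move the enclosing workspace.
        target = "window" if gesture["fingers"] == 1 else "workspace"
        return f"move_{target}_{gesture['direction']}"
    if kind == "shape":
        return SHAPE_SHORTCUTS.get(gesture["shape"], "ignore")
    return "ignore"  # unknown gestures are dropped rather than guessed


print(interpret({"kind": "pan", "fingers": 1, "direction": "left"}))  # move_window_left
print(interpret({"kind": "shape", "shape": "C"}))  # switch_off_board
```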

5.7.2. Above-Surface Interaction

While interacting with large displays, artifacts may be out of reach and selecting items may require excessive movement [14]; moreover, when touching the screen, users can only see the area directly in front of them. In order to overcome these difficulties, the system detects gestures and directions indicated by the movement of users (e.g., hands, arms, and body) to support interaction from a distance (e.g., change workspace and summon an application). Hence, CognitOS Board features a set of mid-air gestures that correspond to simple or complex actions (e.g., swipe left to go to the next slide and clap twice to clear the entire workspace). Furthermore, implicit interaction is achieved by tracking the position of users in front of the board and reacting accordingly (e.g., rearranging windows and delivering personalized content). Finally, a small set of voice commands is available to presenters; for example, the utterances “next workspace” and “previous workspace” permit users to switch between active workspaces in a linear manner.
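Once speech has been transcribed by a recognizer (assumed to exist upstream and not modeled here), handling the two documented voice commands reduces to normalizing the text and stepping through the workspaces. The sketch assumes wrap-around at either end and uses a hypothetical function name.

```python
def handle_utterance(text: str, active: int, count: int) -> int:
    """Return the new active workspace index (0-based) for a recognised
    command, or the unchanged index otherwise. Wrap-around is assumed."""
    command = " ".join(text.lower().split())  # normalise case and spacing
    if command == "next workspace":
        return (active + 1) % count
    if command == "previous workspace":
        return (active - 1) % count
    return active  # not a workspace command: leave the board unchanged


print(handle_utterance("Next  workspace", active=0, count=3))  # 1
print(handle_utterance("previous workspace", active=0, count=3))  # 2
```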

5.7.3. Remote Interaction from Desktop and Mobile Platforms

Given the size of the projected area, it would be cumbersome for presenters to keep track of every application launched on the board and its status. Additionally, keeping in mind that in some cases presenters sit at a desk with their personal device in front of them, expecting them to stand up and move in front of the board to perform actions that could be easily done from their workstation (e.g., launching a multimedia viewer) seems unrealistic and inefficient. To this end, a smartphone, a tablet and a desktop application were developed enabling presenters to get an overview of the current board status, manage the board workspaces and manipulate application windows.

The desktop application allows presenters to configure the state of the board a priori. Using this application, the user can prepare the layout and the content of the board before the presentation. In more detail, presenters can create new boards and organize them. On each of the created boards, they can launch applications and place them in specific positions, while at the same time they can resize, pin, and maximize them and initialize their content (e.g., open the presentation viewer and load a specific presentation file). Furthermore, the users can group the application windows into workspaces, so as to manipulate them concurrently. Finally, it is important to mention that the application includes an assistant that provides helpful tips during the aforementioned process.
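A layout prepared a priori has to be stored and later loaded by the board. The sketch below shows one hypothetical JSON representation together with a basic check that every item fits on the 750 cm × 140 cm flattened board and that no workspace exceeds four views. The schema, field names, and `campaign.pptx` file are illustrative assumptions, not the desktop application's actual format.

```python
import json
from typing import List

BOARD_W, BOARD_H = 750, 140  # cm: 5 m + 2.5 m of wall, 0.9-2.3 m high
MAX_VIEWS = 4

layout = {
    "boards": [
        {
            "windows": [
                {"app": "Presentation Viewer", "x": 0, "y": 0, "w": 400, "h": 140,
                 "pinned": True, "content": "campaign.pptx"},
            ],
            "workspaces": [
                {"x": 420, "y": 0, "w": 300, "h": 140,
                 "views": [["Video Player"], ["Image Viewer"]]},
            ],
        },
    ]
}


def validate(doc: dict) -> List[str]:
    """Return human-readable problems; an empty list means the layout loads."""
    problems = []
    for b, board in enumerate(doc["boards"], start=1):
        for item in board.get("windows", []) + board.get("workspaces", []):
            if (item["x"] < 0 or item["y"] < 0
                    or item["x"] + item["w"] > BOARD_W
                    or item["y"] + item["h"] > BOARD_H):
                problems.append(f"board {b}: item at x={item['x']} exceeds the board")
        for ws in board.get("workspaces", []):
            if len(ws["views"]) > MAX_VIEWS:
                problems.append(f"board {b}: workspace has more than {MAX_VIEWS} views")
    return problems


saved = json.dumps(layout, indent=2)  # what the desktop app might store
print(validate(json.loads(saved)))  # []
```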

The tablet application helps the user to have an overview of the board’s state during the presentation and permits the remote manipulation of its contents (e.g., create new boards, launch new applications, rearrange or resize workspaces or windows, group windows into workspaces, pin windows in specific positions, etc.). Finally, the smartphone application can be used as a mini remote controller to interact with the board from a distance and perform a subset of the available actions over the board or specific applications (e.g., move to the next board, close application, etc.).

6. User-Based Evaluation

User-based evaluation is the most fundamental usability evaluation method and is irreplaceable [49]. This method helps the design team understand the problems of an interface, a system, an application, etc., by observing people using it. When representative participants attempt realistic activities, researchers gain qualitative insights into what is causing users trouble, which helps them determine how to improve the design.

The user-based evaluation of the CognitOS Board was conducted with the participation of 10 users, who were asked to play the role of a university professor. The study did not entail the participation of an audience; however, the users were asked to complete a series of tasks as if students were attending their class. The goal of the study was to identify any unsupported features, uncover potential usability issues, and assess the users’ overall experience before proceeding with an evaluation involving the active participation of an audience. In particular, the following research questions were investigated:

- Research Question 1: Is the CognitOS Board usable?

- Research Question 2: Is the integrated functionality useful?

- Research Question 3: Do the proposed modalities and paradigms enable intuitive interaction?

6.1. Participants

The intended users of the system are both adults (e.g., presenters and educators) and children (e.g., students); however, this study focused on getting insights by observing presenters/educators interacting with the system. To this end, a total of ten (10) adults participated in the study; all of them had experience either in giving presentations (e.g., presentations in conferences or corporate meetings) or teaching students. This number of participants is appropriate for preliminary evaluations, as it can identify important usability problems before proceeding to large scale experiments [49].

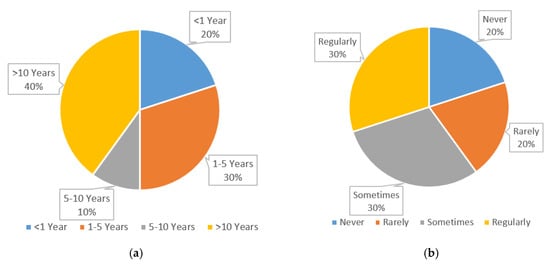

The selected users had diverse characteristics (e.g., age, gender, and teaching/presentation experience). In particular, of the 10 users, 60% were female and 40% were male. Moreover, participants were selected to cover as wide a range of ages as possible; three of the users were in their twenties, four were between 30 and 40 years old, and three were older than 40. Additionally, their presentation or teaching experience varied, so as to record the opinions and comments of different types of users (e.g., users with more than 10 years of teaching experience versus users with less than a year) (Figure 12a).

Figure 12.

(a) Participants’ experience with lectures/presentations. (b) Use of a board during lectures.

None of the participants had experience with Interactive Boards. However, the majority of them (90%) use projectors to display their presentations, while 80% of them also use the traditional whiteboard (Figure 12b) to write important information or make notes, etc. In the future, we intend to perform studies that include users who have experience with commercial boards, so as to record their comments and opinion, as well.

6.2. Data Collection

In order to explore the aforementioned research questions, a pre-evaluation questionnaire, a custom observation grid and two post-evaluation questionnaires were used. During the evaluation period, only the necessary data was collected and processed through a “pseudonymization” process, while participants’ identities will remain confidential and will not be revealed. In the context of the evaluation process, both the European Union (EU) regulation on General Data Protection (GDPR; 679/2016) and the Greek Applicable Law 4624/2019 have been properly taken into account and approval has been granted by the FORTH Ethics Committee. In addition, participants were informed about the nature of the evaluation and all aspects of participation, and a consent form was signed.

6.2.1. Pre-Evaluation Questionnaire

A pre-evaluation questionnaire has been used in order to collect participants’ demographics and other user-related information:

- The age and gender of the participants;

- The teaching/presentation experience of the participants, both in terms of duration (i.e., less than a year, 1–5 years, 5–10 years, and more than 10 years) and frequency (i.e., weekly, monthly, once every six months, and once a year);

- Frequency and reason of use of the traditional board;

- Experience with Interactive Whiteboards;

- Experience with projected presentations and experience with presentation gadgets (e.g., presenters).

6.2.2. Observation Grid

A custom observation grid was used to collect qualitative and quantitative information:

- The overall duration of the running test;

- The comments of the users during the test;

- The number and type of errors made during each task;

- The number of hints/helps given during each task;

- The time it took users to complete each task.

6.2.3. Post-Evaluation Questionnaires

Two (2) questionnaires were used, aiming to assess the usability of the system as well as overall user satisfaction. The first one was the System Usability Scale (SUS) [50], a subjective satisfaction questionnaire that includes 10 questions, each with five response options ranging from strongly agree to strongly disagree. The SUS questionnaire was translated into Greek, according to [51], so as to be more comprehensible to the participants. The second one was a custom questionnaire including 10 questions about the users’ overall impression of the CognitOS Board:

- How did you find the idea of an Intelligent Board that aims to enhance presentations?

- Do you believe that a board of such dimensions (wall-to-wall) would be useful for teaching or giving presentations?

- Would you like a similar board for your presentations? How often would you use it?

- How did you find the interaction with the board?

- What do you think of the Follow-Me functionality?

- What do you think of the Summon functionality?

- What was the most difficult thing that you had to do during the execution of the scenario?

- What did you like the most?

- Is there something that you did not like, and that you would prefer to change?

- Is there any functionality that you would expect to find, but it was not integrated in the system?
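For reference, the standard SUS scoring procedure converts the ten responses of one participant into a 0–100 score: with responses coded from 1 (strongly disagree) to 5 (strongly agree), each odd-numbered item contributes (response − 1), each even-numbered item contributes (5 − response), and the sum is multiplied by 2.5. The sketch below implements that procedure; the example response sheet is made up and is not data from this study.

```python
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Score one completed SUS questionnaire (items in their standard order).

    Responses are coded 1 (strongly disagree) to 5 (strongly agree).
    """
    if len(responses) != 10 or any(r not in range(1, 6) for r in responses):
        raise ValueError("SUS expects ten responses coded 1-5")
    total = 0
    for item, r in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (r - 1) if item % 2 == 1 else (5 - r)
    return total * 2.5  # rescale the 0-40 raw sum to 0-100


# A made-up response sheet, not data from this study.
print(sus_score([4, 2, 4, 2, 5, 1, 4, 2, 4, 2]))  # 80.0
```

A study-level SUS figure is then usually reported as the mean of the per-participant scores.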

6.3. Procedure

The study included four stages: (i) preparation, (ii) introduction, (iii) running the test, and (iv) debriefing. In order to make participants feel comfortable and ensure that the evaluation progresses as planned, a facilitator was responsible for orchestrating the entire process and assisting the users when required, while an observer was responsible for recording information in a custom observation grid. Additionally, a member from the experimental team was available in order to manage any technical difficulties.

6.3.1. Preparation

During the preparation stage, all the appropriate actions were completed before the arrival of the participants, in order to make sure that the evaluation would be conducted smoothly. In particular, the projectors were turned on, the system was initialized, and the room A/C was set to a comfortable temperature. Moreover, the material required for the study (i.e., pre- and post-evaluation questionnaires, observation grid, and scenario) was prepared and organized in a way that both the facilitator and the observer would be able to manipulate it easily.

6.3.2. Introduction

During the introduction stage, the facilitator welcomed the participants, gave a brief explanation of the purpose of the experiment, and highlighted the importance of their participation. Then, the concept of the CognitOS Board and its main features (i.e., boards, workspaces, and windows) was described. A demonstration of the interaction paradigm followed, including three simple tasks performed by the facilitator (i.e., launch menu, launch application, and summon application to side wall).

After that, the facilitator clarified that the users’ personal data would be anonymized, as described in the Informed Consent Form, which they were asked to sign prior to their participation in the experiment. Finally, the facilitator told them that they could stop the experiment whenever they wanted and encouraged them to follow the Think-Aloud protocol [52]. According to this protocol, the participants were asked to verbalize their thoughts as they moved through the User Interface so as to provide valuable feedback regarding the difficulties they faced during the evaluation as well as their overall impression of the system.

6.3.3. Running the Test

After the introductory phase, the participants were asked to follow a scenario including ten (10) tasks (based on the scenario described in Section 3.2.1), which were projected on a side wall. The users could either read the tasks by themselves or ask the facilitator to narrate them. During that process, the facilitator refrained from interacting with the participants and did not express any personal opinion regarding the experiment. The only exception to this rule was when a participant was having difficulty completing a task; at that point, the facilitator intervened to provide a helpful tip. While the observer kept notes on the custom observation grid, a member of the experimental team remained available (outside the room) to manage any technical difficulties.

6.3.4. Debriefing

After the evaluation was completed, the users were asked to fill in the SUS questionnaire by themselves. The facilitator then debriefed them using a set of 10 questions (Section 6.2.3), in order to investigate their overall impression and record any thoughts or suggestions they wished to share. Finally, the facilitator and the observer thanked the users for their participation and offered them some treats as a token of the entire team’s gratitude.

6.4. Results

This section presents the results of the user-based evaluation, organized according to the research questions stated previously.

- Research Question 1: Is the CognitOS Board usable?

The evaluation results indicated that, in general, the users did not encounter major problems and there were no failures. In more detail, users made 13 distinct errors (Table 6), amounting to 29 erroneous interactions in total. These errors stemmed from minor usability issues, which can be easily eliminated in the next version of the CognitOS Board to help users avoid such missteps.

Table 6.

The errors made during the evaluation.

As depicted in the following chart (Figure 13), 30% of the users made only one error, 50% made two to four errors, and the remaining 20% made five or six errors. The number of errors per user is relatively small, considering that the participants had to complete a fairly demanding scenario (consisting of 10 tasks) and that it was their first time interacting with the system. The analysis of the evaluation findings revealed that none of the users repeated the same error, which suggests that they became familiar with the system during their interaction with it. In fact, some users pointed out this aspect themselves: “I did this task before, now I know how to do it! It is easy”, and “Previously I was slightly confused, because it was the first time, but now I know what to do! It is clear!” Finally, as depicted in Figure 13, the users in general received fewer hints than the number of errors they made, while the observation notes reveal that when an error occurred, the majority of the users were able to recover from it easily and complete the required task in a timely manner.

Figure 13.

Errors and hints distribution per user.

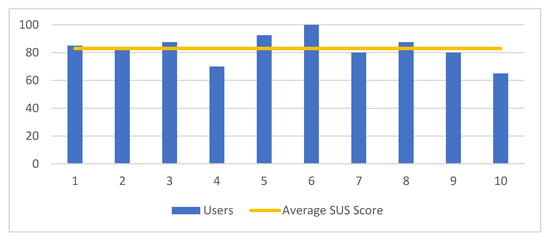

Furthermore, the SUS questionnaire was used to measure perceived usability. In general, participants’ responses were positive, as visualized in Figure 14. The overall score was 83, which is higher than the average SUS score (68). Moreover, according to the curved grading scale for SUS [53], the CognitOS Board received an overall grade of A. Further investigation of the individual SUS scores reveals that 50% of the users gave it an A+ (scores ranging from 85 to 100), 30% gave it an A or A- (scores ranging from 80 to 82.5), while the remaining 20% gave it a C (scores ranging from 65 to 70).

Figure 14.

Overall SUS score per user.
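To make the reported scores easier to interpret, the sketch below shows standard SUS scoring (odd-numbered items contribute the response minus 1, even-numbered items contribute 5 minus the response, and the 0 to 40 raw sum is multiplied by 2.5) together with a letter-grade lookup. The grade bands are an assumption: they are the Sauro-Lewis curved grading scale as commonly reported and should be checked against the scale cited as [53]. The function names `sus_score` and `curved_grade` are illustrative only.

```python
"""Minimal sketch of standard SUS scoring plus a curved letter-grade lookup.

Assumption: CURVED_GRADES reproduces the Sauro-Lewis curved grading scale as
commonly reported; verify the bands against the scale cited in the text.
"""

# (lower bound, grade) pairs, ordered from highest to lowest.
CURVED_GRADES = [
    (84.1, "A+"), (80.8, "A"), (78.9, "A-"),
    (77.2, "B+"), (74.1, "B"), (72.6, "B-"),
    (71.1, "C+"), (65.0, "C"), (62.7, "C-"),
    (51.7, "D"), (0.0, "F"),
]


def sus_score(responses):
    """Convert ten 1-5 Likert responses (item 1 first) into a 0-100 SUS score."""
    if len(responses) != 10:
        raise ValueError("SUS has exactly 10 items")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("responses must be integers from 1 to 5")
    total = 0
    for item, r in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (r - 1) if item % 2 == 1 else (5 - r)
    return total * 2.5  # rescale the 0-40 raw sum to 0-100


def curved_grade(score):
    """Map a SUS score (individual or averaged) to its curved letter grade."""
    for lower, grade in CURVED_GRADES:
        if score >= lower:
            return grade
    raise ValueError("score must be non-negative")


if __name__ == "__main__":
    print(sus_score([5, 1, 5, 2, 4, 1, 5, 1, 4, 2]))  # 90.0
    print(curved_grade(83))  # A
    print(curved_grade(68))  # C
```

Under these bands, the overall score of 83 falls in the A band, while the benchmark average of 68 sits in the C band, which is consistent with the grades reported above.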

- Research Question 2: Is the Integrated Functionality useful?

To understand whether the integrated functionality is useful, we studied the comments made by the users during the evaluation, as well as their responses to the custom post-evaluation questionnaire. This analysis revealed that all users found the system extremely useful and responded that they would definitely use it if it were available. For example, one participant said that “The system is excellent, I would like to use it during my lectures”, a second one stated that “The idea is brilliant, it would be very beneficial for educational purposes”, while another one expressed that “It is a very useful system, it provides many facilities that can enhance presentations”.

Furthermore, the user responses to questions 5, 6, and 8 of the post-evaluation questionnaire (Section 6.2.3) revealed useful insights regarding the Follow-Me, Summon, Capture, and Annotate functionalities. In more detail, all users were particularly fond of the Summon functionality, recognizing that the ability to summon an application located far away is of utmost importance. Indicatively, users’ comments include: “It is the best and most useful feature of the system”, “Ohhh! That functionality is very good”, and “It requires less effort than dragging windows around the board”.

The Follow-Me functionality impressed fewer participants. Specifically, 60% of them found this feature necessary and useful, 20% thought that it would be more essential for larger boards, while the remaining 20% found it fun but questioned its usefulness. Some participants made very positive comments, such as: “It is very useful, I like that the window is moving based on my location, so I could interact with the window faster than usual”, and “It is great, very useful, you could make the window you want to emphasize follow you”. Based on these findings, we expect that a large-scale experiment in a room with a larger board would better reveal the full potential of this functionality.

Finally, the integration of the Annotate and Capture functionalities received positive feedback, such as “I enjoy the annotation functionality, I could use it all the time to emphasize or highlight content” and “The combination of Capture and Annotation functionalities is extremely useful, one can easily combine digital content with handwritten annotation and share it with the audience”.

- Research Question 3: Do the proposed modalities and paradigms enable intuitive interaction?

During the evaluation, it was observed that the users were confused about when to press the pen’s physical button while interacting with the system. In general, when the pressure-sensitive tip touches the surface of the wall, the pen automatically acts as a mouse cursor, while pressing its physical button activates the right-click functionality. The latter is required in only two cases: (i) launching the menu and (ii) performing a gesture.

Although the facilitator had explained the interaction paradigm during the introduction, two types of errors emerged: (i) users did not press the pen button when required, and (ii) users pressed the button every time. However, it was observed that the more they interacted with the system, the more accustomed they became to the interaction paradigm.

Another issue revealed was that the users were not sure where to perform a gesture. In particular, 4 users tried to make the gesture over an application instead of over an empty space of the board. However, this can be learnt with some support for novice users (tutorials and on-the-fly tips), in the same way that Windows users have learned that right-clicking on an empty desktop area reveals a different menu than right-clicking on an application icon.
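The pen rules described above (the tip alone acts as a cursor, the physical button is needed only to open the menu and to perform gestures, and gestures belong on empty board space) can be summarized as a small dispatch function. This is a hypothetical sketch, not the CognitOS Board implementation: the names `PenEvent`, `PenAction`, and `classify_pen_input`, the stroke-length threshold used to tell a menu press from a gesture, and the handling of button presses over an application window are all assumptions made for illustration.

```python
from dataclasses import dataclass
from enum import Enum, auto


class PenAction(Enum):
    POINTER = auto()        # tip only: ordinary cursor / primary click
    OPEN_MENU = auto()      # button held + short press on empty board space
    GESTURE = auto()        # button held + drawn stroke on empty board space
    APP_SECONDARY = auto()  # button held over a window (assumed: forwarded to the app)


@dataclass
class PenEvent:
    button_pressed: bool     # is the pen's physical button held?
    over_application: bool   # does the contact point lie inside an application window?
    stroke_length_px: float  # distance travelled while the tip touches the wall


# Hypothetical threshold separating a short press (menu) from a drawn gesture.
GESTURE_MIN_STROKE_PX = 40.0


def classify_pen_input(event: PenEvent) -> PenAction:
    """Map one pen contact to an action under the rules stated in the text."""
    if not event.button_pressed:
        # The tip alone always behaves as a mouse cursor.
        return PenAction.POINTER
    if event.over_application:
        # Gestures are recognized only on empty board space; here the
        # secondary click is assumed to go to the application instead.
        return PenAction.APP_SECONDARY
    if event.stroke_length_px >= GESTURE_MIN_STROKE_PX:
        return PenAction.GESTURE
    return PenAction.OPEN_MENU


if __name__ == "__main__":
    print(classify_pen_input(PenEvent(True, False, 120.0)))  # PenAction.GESTURE
    print(classify_pen_input(PenEvent(True, True, 120.0)))   # PenAction.APP_SECONDARY
```

Under this sketch, the observed errors map onto distinct branches: a gesture drawn without the button falls through to `POINTER`, holding the button for every action turns ordinary taps into secondary actions, and a gesture drawn over a window yields `APP_SECONDARY` rather than `GESTURE`.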

Another interaction-related issue that this study aimed to investigate was whether the users would understand how to navigate among the multiple boards. Interestingly, at their first attempt, 40% of the users used the swipe-up area at the bottom of the board to reveal the next one, 20% used the menu, and the remaining 40% used the pagination component located at the top of the board. The tallest users immediately used the pagination component, while those who could not reach it sought an alternative. In any case, all users were able to navigate among the available boards easily.

Finally, another important aspect that we wanted to examine was how the users would try to move applications between walls. The findings reveal that 70% of the users instinctively used the Summon functionality (which had already been demonstrated by the facilitator during the introductory phase), 20% tried dragging and dropping the window onto the side wall, while one user used the Board Manager. Additionally, one user suggested that the Follow-Me functionality could also accomplish this task. Notably, the system supported all the approaches that the users chose to follow.

7. Conclusions and Future Work

This work presented a sophisticated framework, named CognitOS Board, which takes advantage of (i) the intelligent facilities offered by IEs (e.g., intelligent conference rooms, classrooms, and meeting rooms) and (ii) the amenities offered by the wall-to-wall board, in order to enhance presentation-related activities and the User Experience.

Presenters are equipped with a unified working environment, appropriate for large Interactive Boards, that offers a natural and effortless interaction paradigm, allowing the effective orchestration of presentation assets (e.g., use different workspaces to place different types of applications). Additionally, users can initialize the contents of the board with the appropriate material before the actual presentation via a desktop application.

CognitOS Board enhances well-established interaction metaphors (e.g., writing on the digital board is similar to writing on a plain whiteboard) and supports multimodal interaction through the following: (i) touch on the wall surface with a finger or a special marker, (ii) a remote presenter, (iii) voice commands, (iv) sophisticated techniques that take advantage of the IE’s facilities (e.g., through user position tracking, an application window can follow the user while moving around), and (v) the controller application for handheld devices (i.e., tablets).

As indicated by the evaluation study, in general the users did not encounter major problems and there were no failures, while according to the curved grading scale for SUS, CognitOS Board received an overall “A” score. In particular, the system proved to be very usable and useful, and the majority of users stated that they would definitely use it if it were available. The Annotate and Capture functionalities received positive feedback, since on the one hand they inherently enhance presentations by giving presenters the ability to emphasize, highlight, and share content, while on the other hand their integration in the CognitOS Board appeared to be beneficial. Additionally, in terms of interaction, the Follow-Me and Summon functionalities, along with the Board Manager tool, seem to provide a satisfactory solution to the perplexing problem of moving applications over a large board area effortlessly.

The minor issues revealed during the evaluation are going to be resolved in the next version of the system, and several suggestions made by the users will be taken into consideration to improve the overall User Experience. To this end, an iterative process will be followed, including brainstorming and focus group sessions with the design team to introduce potential solutions for each identified issue; the participation of UX experts to evaluate the improvements, before an additional user-based evaluation is organized, is also imperative.

Finally, one major future goal is to conduct a large-scale experiment inside a room with a bigger board spanning multiple walls. We wish to observe presenters interacting with the system under real circumstances, while an audience is also present and actively participates in the study according to a rich scenario. Such an experiment could uncover shortcomings that were not identified during the laboratory study and could also reveal the full potential of the CognitOS Board. In that direction, we are currently installing the CognitOS Board inside the meeting room of our facility, in order to make it available to all laboratory members who wish to use it during their meetings. That way, users will be able to use the CognitOS Board frequently, at will, and in the manner they prefer (as opposed to the way dictated by an evaluation scenario), which will enable the design team to collect valuable feedback either by observing meetings or by interviewing the meeting participants.

Potential future improvements, apart from those arising from the evaluation findings, include the following: (i) extensive interoperation with the various services of the Intelligent Environment, so as to provide a better user experience (both to presenters and audience); (ii) the improvement of the item placement algorithm, to further assist presenters in organizing their teaching assets; (iii) the exploitation of recurring user interaction patterns (e.g., how the user usually sets up the board and organizes the available workspaces), so as to provide tips and suggestions (e.g., suggest appropriate layouts to set up the board, place launched applications at specific positions on the board, etc.); (iv) the enhancement of supported gestures and shortcuts; (v) the development of a monitoring mechanism to guarantee a proper Quality of Service (QoS) from the components that comprise the system and the external applications; and, finally, (vi) the introduction of more applications and tools to support lecturing/presentations.

Author Contributions